Simple Summary

Neuronavigation and microscope-based augmented reality are widely used in neurosurgery to support intraoperative orientation, preserve neurological function, and maximize the extent of resection. However, in the sitting position, navigation may not be accurate enough to fully exploit its potential due to brain deformations caused by gravity and brain shift. To ensure accurate navigation and augmented reality support, it is necessary to verify and update navigation regularly. Intraoperative ultrasound is an easy-to-use tool that can be used to verify accuracy and to generate real-time image data to update navigation without significantly interrupting the surgical workflow. This can be achieved by outlining the lesion within the data set or by rigidly co-registering preoperative and intraoperative data to update and enable navigation and augmented reality support. In this study, image-based co-registration improved the navigation accuracy, making intraoperative ultrasound useful for enabling navigation and augmented reality support during posterior fossa surgery in the sitting position.

Abstract

Despite their broad use in cranial and spinal surgery, navigation support and microscope-based augmented reality (AR) have not yet found their way into posterior fossa surgery in the sitting position. While this position offers surgical benefits, navigation accuracy, and therefore the use of navigation itself, seems limited. Intraoperative ultrasound (iUS) can be applied at any time during surgery, delivering real-time images that can be used for accuracy verification and navigation updates. Within this study, its applicability in the sitting position was assessed. Data from 15 patients with lesions within the posterior fossa who underwent magnetic resonance imaging (MRI)-based navigation-supported surgery in the sitting position were retrospectively analyzed using the standard reference array and new rigid image-based MRI-iUS co-registration. The navigation accuracy was evaluated based on the spatial overlap of the outlined lesions and the distance between the corresponding landmarks in both data sets. Image-based co-registration significantly improved (p < 0.001) the spatial overlap of the outlined lesion (0.42 ± 0.30 vs. 0.65 ± 0.23) and significantly reduced (p < 0.001) the distance between the corresponding landmarks (8.69 ± 6.23 mm vs. 3.19 ± 2.73 mm), allowing for sufficient use of navigation and AR support. Navigated iUS can therefore serve as an easy-to-use tool to enable navigation support for posterior fossa surgery in the sitting position.

1. Introduction

Since their introduction in the 1990s, image-guided surgery and neuronavigation have become indispensable tools for many cranial and spinal surgical procedures [1,2]. Having proven its clinical usefulness and benefits in identifying deep-seated lesions, precisely defining resection margins, and preserving functional risk structures, imaging-based neuronavigation has broadly become a routine, intrinsic part of surgical procedures [3,4]. Microscope-based augmented reality (AR) further complements navigation-assisted intraoperative guidance: it allows for real-time AR visualization of outlined lesions and risk structures, thus supporting the surgeon’s mental transfer of relevant information between the image space and the surgical field, reducing the need for attention shifts, and increasing surgeon comfort [5,6,7,8,9]. Despite these well-known benefits, neuronavigation and, thereby, microscope-based AR have not found their way into surgery within the posterior fossa on a routine basis.

Lesions within the cerebellum and brainstem account for approximately 5% of all brain tumors in adults and about 50% in children, mostly requiring resection or at least a biopsy for diagnostics and tailored treatment [10]. It is imperative to choose the best surgical approach with an optimal surgical trajectory to target the lesion while limiting tissue dissection to a minimum [11]. The usefulness of neuronavigation assistance, especially in the sitting position for posterior fossa surgery, has been doubted due to concerns that accuracy is impaired by gravitational effects and brain shift caused by cerebrospinal fluid (CSF) loss [11]. At the same time, the gravitational drainage of CSF and venous blood, together with the reduction in cerebellar swelling and the reduced surgical time and blood loss, are undisputed advantages of the sitting position for posterior fossa surgery over surgery in, e.g., the prone position [12,13]. As recently demonstrated, surgical positioning differing from patient positioning during preoperative magnetic resonance imaging (MRI) data acquisition also affects the clinical accuracy from the beginning of the surgery, leading to further inaccuracies in the prone and sitting position when accessing the posterior fossa [10,12,14].

Even though the overall navigation accuracy can be improved in different ways, for example by optimizing the initial patient-to-image registration through reducing skin shift effects and properly placing artificial registration landmarks, accuracy is known to decrease steadily during surgery and, in combination with the non-linear effects of brain shift, could, in the worst case, lead to an unacceptable mismatch of image and patient data [3,4,15]. While the positional shift of the patient’s head in relation to the reference array alone can be compensated for by rigid re-registration techniques using intraoperatively acquired landmarks, intracranial positional and intraparenchymal shifting can be addressed by intraoperative imaging techniques such as intraoperative MRI (iMRI) or intraoperative ultrasound (iUS), if applicable, or partially also by AR-based navigation updates [15]. Navigation updates using AR can compensate for in-plane inaccuracies but cannot account for inaccuracies along the focal axis of the operating microscope. The usage of iMRI is generally limited by its availability, high costs, structural requirements, the relevant interruption of the surgical procedure and time consumption, and constraints in patient positioning that in some cases preclude its application [16,17]. In contrast, iUS can be performed at any time during surgery, also repeatedly, with no significant interruption of the surgical procedure, and is widely available, cost-effective, and straightforward to use [18]. Despite its presumed lower imaging quality compared to classical MRI techniques, its user dependency, and a lack of training and experience in interpreting iUS data, iUS has gained more traction with its integration into neuronavigation systems and is now part of the surgical routine in many setups [19,20,21,22,23,24,25].
Navigated iUS allows for a regular exploration of the navigation accuracy, brain shift, and extent of resection depending on the echogenicity of the lesion. It also offers an opportunity to update navigation using intraoperatively acquired 3D iUS data. However, its potential might not yet be fully exploited.

Based on the experience of our study group with iUS in cerebral metastasis [25], glioma [20,21], and spine surgery [26], navigated iUS is now fully integrated into the workflow of most neurosurgical procedures. Typically, navigated iUS imaging is first performed, and an iUS data set acquired, before the dural opening. If needed, and at the surgeon’s discretion, iUS can be applied at any time during the surgical procedure to verify the navigation accuracy, to update navigation, or, depending on the lesion, to determine the extent of resection and identify remaining tumor, leading to continued resection.

Considering the benefits of neuronavigation and the limitations of navigation accuracy concerning positional effects, overall accuracy, and brain shift, the application of navigated iUS and the acquisition of iUS data sets to evaluate navigation accuracy and compensate for inaccuracies seem to be an ideal and easy-to-use means of enabling neuronavigation even in posterior fossa surgery. This study therefore aims to assess the applicability and usefulness of iUS in neuronavigation-supported posterior fossa surgery to compensate for navigation inaccuracies.

2. Materials and Methods

2.1. Study Cohort

Data from 15 patients (male/female: 6/9, mean age: 60.27 ± 9.33 years) who consecutively underwent neuronavigation-supported microsurgical resection of suspected cerebellar lesions or lesions of the brainstem or within the fourth ventricle in a sitting approach after exclusion of patent foramen ovale (PFO) were retrospectively analyzed within this study. All surgeries were performed by a single surgeon (C.N.) with over 25 years of experience in intraoperative imaging to reduce effects of user dependency during intraoperative US usage. Patients with lesions close to bony structures, such as cerebellopontine angle tumors, were excluded in this proof-of-concept study as bone-related artifacts and the induced signal loss led to an incomplete depiction of the lesion in the iUS data, inhibiting a lesion-based analysis (spatial overlap of outlined lesions in MRI and iUS data). Ethics approval for prospectively archiving and collecting routine clinical and technical data during neurosurgical treatment of patients was obtained in accordance with the Declaration of Helsinki and was approved by the ethics committee at the University of Marburg (No. 99/18); analysis of these data within this study was additionally approved by the ethics committee (RS 23/10). All included patients provided written informed consent before participation.

2.2. Technical Equipment

Surgical planning and, later on, retrospective analysis of all included cases was performed on a dedicated planning server (Origin Server, Brainlab, Munich, Germany) equipped with various software modules (Brainlab Elements, Brainlab, Munich, Germany) including, among other things, software tools for image fusion, tumor segmentation, and definition of landmarks.

For navigation-assisted surgery, both available neurosurgical operating rooms (ORs) are equipped with a Curve Navigation System (Brainlab, Munich, Germany), enabling patient registration, navigation and microscope-based AR support as well as two ultrasound systems (Flex Focus 800, BK5000, BK Medical, Herlev, Denmark), that are digitally fully integrated into the navigation systems (Ultrasound Navigation, Brainlab, Munich, Germany), allowing for a real-time overlay of iUS data onto navigation data sets as well as the intraoperative acquisition of iUS data sets that can be utilized for further navigational use.

Both ultrasound systems are equipped with comparable cranial transducers (Flex Focus 800: transducer 8862, BK5000: transducer N13C5, BK Medical, Herlev, Denmark), which are sterilizable and therefore can be used without an additional sterile cover that might impact image quality. In addition, using a sterile transducer rather than a non-sterile transducer covered with a sterile drape lowers the risk of loss of sterility during preparation and handling. The high-resolution N13C5 cranial transducer has a convex 29 mm × 10 mm contact surface and a scanning frequency of 5 to 13 MHz; the 8862 cranial transducer has the same spatial configuration but a scanning frequency of 3.8 to 10 MHz. Both transducers can be equipped with a sterile adapter and dedicated reference array with three reflective markers for navigated iUS usage after initial integration and technical calibration before the first overall use.

During navigated use, the probe is tracked in the patient coordinate system, allowing for an automatic real-time overlay of iUS data onto preoperative image data. For the acquisition of a 3D iUS data set, the transducer is constantly swept across the accessible field, and single 2D iUS images (with their corresponding position in the patient coordinate system) are acquired, which are then automatically stacked and post-processed by the navigation system (Ultrasound Navigation, Brainlab, Munich, Germany), resulting in a 3D iUS data set that can be further used during surgery.
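The placement of a tracked 2D slice in the patient coordinate system can be illustrated with a minimal sketch. It assumes that the tracked probe pose and the probe calibration have already been combined into one 4 × 4 homogeneous matrix; the names `pixel_to_patient`, `spacing_mm`, and `image_to_patient` are hypothetical and do not reflect the interface of the navigation system used here.

```python
from typing import List, Tuple

Matrix4 = List[List[float]]  # 4x4 homogeneous transform, row-major (assumed layout)


def pixel_to_patient(
    col: float,
    row: float,
    spacing_mm: Tuple[float, float],
    image_to_patient: Matrix4,
) -> Tuple[float, float, float]:
    """Map pixel (col, row) of one tracked 2D slice to patient space in mm."""
    # Position of the pixel within the image plane (z = 0), in millimetres.
    p = (col * spacing_mm[0], row * spacing_mm[1], 0.0, 1.0)
    # Apply the first three rows of the homogeneous transform.
    x, y, z = (
        sum(image_to_patient[r][c] * p[c] for c in range(4)) for r in range(3)
    )
    return (x, y, z)


# Hypothetical pose: no rotation, slice origin shifted by (10, 20, 30) mm.
pose = [
    [1.0, 0.0, 0.0, 10.0],
    [0.0, 1.0, 0.0, 20.0],
    [0.0, 0.0, 1.0, 30.0],
    [0.0, 0.0, 0.0, 1.0],
]
print(pixel_to_patient(4, 2, (0.5, 0.5), pose))  # (12.0, 21.0, 30.0)
```

Repeating this mapping for every pixel of every acquired slice yields a point set in patient space that can then be resampled onto a regular grid to form the 3D data set.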

2.3. Preoperative Planning

For surgical planning, MRI data acquired within a couple of days before surgery or resulting from routine diagnostics were used in all cases, typically including a contrast-enhanced T1-weighted 3D data set. In some cases, T2-weighted MRI data and/or time-of-flight MRI angiography (ToF) data were also included. On the day before surgery, at least seven self-adhesive skin markers were attached to the patient’s head, and preoperative computed tomography (CT) imaging was performed to allow for a fiducial-based intraoperative patient registration procedure.

After rigid image registration of all included data sets using the Image Fusion Element (Brainlab, Munich, Germany), the lesion as the target structure, as well as the transverse sinuses and risk structures, were outlined manually using the Smart Brush Element (Brainlab, Munich, Germany). In addition, the brainstem was automatically delineated using the Anatomical Mapping Element (Brainlab, Munich, Germany), especially in cases of close spatial relation to the lesion, and partially reshaped to better fit the individual patient image data. Depending on the case, additional structures such as vessels in close vicinity to the lesion were outlined manually.

2.4. Patient Positioning and Registration Procedure

All patients underwent navigation-assisted surgery in a semi-sitting position. To this end, after induction of anesthesia, implementation of venous air embolism (VAE) monitoring (esophageal ultrasound probe), and insertion of a catheter into the right atrium of the heart to aspirate air bubbles in case of embolism, the sitting position was achieved stepwise by gradually bending and tilting the operating table until an angle of 90 degrees or less between the trunk and lower limbs, with suitable knee flexion, was obtained. The ankle joints were leveled in line with the atrium to achieve venous counterpressure. The patient’s head was fixed in a metallic 3-pin head clamp (DORO® QR3 Cranial Stabilization System, Black Forest Medical Group, Freiburg im Breisgau, Germany) attached to the operating table.

For navigation purposes, a patient reference array with four reflective markers was attached on the right side of the head clamp close to the surgical field to increase navigation accuracy with direct line-of-sight of the navigation system’s stereo camera (Curve Navigation, Brainlab, Munich, Germany). Fiducial-based patient registration was performed by matching the attached self-adhesive skin markers with the virtual corresponding markers in the preoperatively acquired data set to enable neuronavigation-supported surgery, and registration quality was verified. Afterwards, the non-sterile reference array was removed, followed by skin disinfection and sterile draping, and a sterile reference array was attached. Pointer-based navigation, as well as microscope-based AR navigation support, was enabled in this way.

2.5. Intraoperative Navigated Ultrasound

Depending on the availability of a sterile transducer, either the BK5000 (preferred) or the Flex Focus 800 ultrasound system (BK Medical, Herlev, Denmark) was used with the corresponding cranial transducer, N13C5 (BK5000) or 8862 (Flex Focus 800), equipped with a reference array. The penetration depth was standardized to 65 mm (N13C5) or 62 mm (8862). To ensure high technical accuracy of the navigated transducer, which contributes to the overall accuracy, its calibration quality was visually inspected using a dedicated iUS phantom with integrated wires: the concordance between the calculated wire crossings and those visualized in the ultrasound image was verified.

In supratentorial applications for iUS usage, the patient’s head is typically positioned in a way that at least a thin coupling fluid depot could be built up. In the sitting position, no stable saline depot can be built up. Therefore, continuous saline application close to the transducer is necessary while gently moving the probe across the dura to limit artifacts and generate interpretable iUS data sets.

Intraoperative navigated ultrasound was performed in all cases before dural opening. The surgical field was first explored with the navigated ultrasound probe to evaluate the overall applicability in each case, which might be hampered by dural artifacts (especially in repeated surgery), non-echogenic lesions, or lesions close to bony structures. Then, at least one 3D iUS data set was acquired with a high sampling rate and a mean slice thickness of 0.5 mm. To this end, the navigated probe was constantly swept across the accessible dural area in the cranio-caudal direction to acquire an almost axial data set. Depending on accessibility and line-of-sight limitations, a second, nearly sagittal data set was acquired in the left–right direction in some cases.

Real-time navigated iUS overlaid onto the preoperative MRI data, as well as the acquired 3D iUS data set (reference array-based co-registration), were then visually inspected intraoperatively with regard to navigation accuracy. If the surgeon rated the navigation accuracy as sufficient, surgery was continued using MRI and iUS data in parallel. If the navigation accuracy was rated as insufficient, the lesion was manually outlined within the newly acquired 3D iUS data set, MRI data were discarded, and iUS-based navigation and microscope-based AR support, including the iUS-based tumor outlines, were used for the remainder of the surgery.

2.6. Additional Postprocessing Using Rigid Image-Based Co-Registration

Retrospectively, rigid image-based co-registration of iUS and MRI data was additionally performed using the SNAP Element (Brainlab, Munich, Germany), which was released during the course of this study. This co-registration relies on previously published work by Wein et al. [27,28] and applies the Linear Correlation of Linear Combination (LC2) similarity metric, allowing for rigid image-based MRI-iUS co-registration in a matter of seconds. SNAP is now fully integrated into the navigation system, in addition to the standard reference array-based registration of the iUS data set implemented in the clinical standard navigation workflow.

To evaluate the potential of rigid image-based co-registration relative to reference array-based co-registration, which could also enable the integration of all preoperatively generated information into the intraoperative data set, all iUS data sets were additionally rigidly co-registered with the preoperative MRI data sets using the approach implemented via SNAP. To this end, the MRI-iUS image pair was selected within the Image Fusion Element (Brainlab, Munich, Germany), initially still aligned according to the reference array-based co-registration, and rigid image-based co-registration was then initiated without any further manual refinement.

2.7. Quantification of Navigation Accuracy

Navigation accuracy was estimated using different approaches.

First, intraoperatively, the visual matching of automatically overlaid preoperative MRI-based tumor outlines or outlines of other segmented structures on navigated iUS data was evaluated. If the surgeon decided on a “sufficient match”, MRI-based navigation was used as initially intended. In the case of a severe mismatch, iUS-based navigation was used further during surgery with intraoperative tumor segmentation based on the navigated 3D iUS data set. Second, postoperatively, navigation accuracy was analyzed in all cases using tumor outlines based on preoperative MRI data as well as tumor outlines based on intraoperatively acquired 3D iUS data sets. Manual segmentation was performed by two experts (M.B., A.G.), and the intraclass correlation coefficient (ICC) was calculated to ensure reliability of the segmentation process. Third, depending on the available data sets, postoperatively, up to ten uniquely identifiable landmarks (LMs) were manually defined within the preoperative MRI and the intraoperative 3D iUS data sets (M.B.). All landmarks were chosen based on clear visibility in both modalities, encompassing different tissue classes (e.g., gyri, sulci, vessels, tentorium) and various distances to the lesion, depending on the acquired iUS volume. All landmarks were technically verified to ensure correct labeling of the base data set and were reviewed by a second expert (A.G.) to ensure high quality.

Navigation accuracy was then assessed following a lesion-based analysis utilizing the Dice coefficient as a measure of spatial overlap and a landmark-based analysis using the overall Euclidean distance between corresponding landmarks for the standard reference array-based registration and the rigid image-based co-registration.

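The Dice coefficient (DSC) [29] is a widely used parameter in medical imaging studies to quantify the degree of spatial overlap between two outlined objects, in this case, derived from MRI and iUS data, with poor agreement (DSC < 0.2), fair agreement (0.2 ≤ DSC < 0.4), moderate agreement (0.4 ≤ DSC < 0.6), good agreement (0.6 ≤ DSC < 0.8), and excellent agreement (0.8 ≤ DSC ≤ 1.0) [29]. With $V_{\mathrm{MRI}}$ and $V_{\mathrm{iUS}}$ denoting the lesion volumes outlined in the MRI and iUS data, it is calculated as follows:

$$\mathrm{DSC} = \frac{2\,\left|V_{\mathrm{MRI}} \cap V_{\mathrm{iUS}}\right|}{\left|V_{\mathrm{MRI}}\right| + \left|V_{\mathrm{iUS}}\right|}$$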
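As a minimal sketch of this measure, assuming each segmentation is available as a set of voxel indices on a common grid, the overlap and its agreement category could be computed as below. The function names and the convention of returning 1.0 for two empty masks are assumptions made for illustration; the thresholds are those listed above.

```python
from typing import Set, Tuple

Voxel = Tuple[int, int, int]  # voxel index on a shared grid (assumed representation)


def dice_coefficient(mri: Set[Voxel], ius: Set[Voxel]) -> float:
    """Dice coefficient: twice the shared voxels over the summed mask sizes."""
    if not mri and not ius:
        return 1.0  # assumed convention for two empty masks
    return 2.0 * len(mri & ius) / (len(mri) + len(ius))


def agreement(dsc: float) -> str:
    """Agreement category for a Dice coefficient in [0, 1]."""
    if dsc < 0.2:
        return "poor"
    if dsc < 0.4:
        return "fair"
    if dsc < 0.6:
        return "moderate"
    if dsc < 0.8:
        return "good"
    return "excellent"


# Toy example: two 3-voxel masks sharing 2 voxels.
mri_mask = {(0, 0, 0), (1, 0, 0), (2, 0, 0)}
ius_mask = {(1, 0, 0), (2, 0, 0), (3, 0, 0)}
dsc = dice_coefficient(mri_mask, ius_mask)
print(round(dsc, 3), agreement(dsc))  # 0.667 good
```

Because the measure is normalized by the summed mask sizes, a fixed spatial offset lowers the coefficient much more for a small lesion than for a large one.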

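The mean Euclidean distance (ED) between corresponding landmarks per case was calculated for the reference array-based as well as for the rigid image-based co-registration as a measure of navigation accuracy. With $n$ corresponding landmarks at positions $(x_i, y_i, z_i)$ in the MRI and iUS data, it is calculated as follows:

$$\mathrm{ED} = \frac{1}{n}\sum_{i=1}^{n}\sqrt{\left(x_i^{\mathrm{MRI}}-x_i^{\mathrm{iUS}}\right)^2+\left(y_i^{\mathrm{MRI}}-y_i^{\mathrm{iUS}}\right)^2+\left(z_i^{\mathrm{MRI}}-z_i^{\mathrm{iUS}}\right)^2}$$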
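A minimal sketch of the landmark-based measure is given below, assuming both landmark lists are expressed in millimetres in the same coordinate system and are ordered so that entries at the same index correspond. The function name and the coordinates are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]  # landmark position in mm


def mean_euclidean_distance(mri: Sequence[Point], ius: Sequence[Point]) -> float:
    """Mean distance between index-matched landmark pairs."""
    if len(mri) != len(ius) or not mri:
        raise ValueError("need two non-empty landmark lists of equal length")
    return sum(math.dist(p, q) for p, q in zip(mri, ius)) / len(mri)


# Toy example: one pair is 5 mm apart, the other coincides.
mri_lms = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]
ius_lms = [(3.0, 4.0, 0.0), (1.0, 1.0, 1.0)]
print(mean_euclidean_distance(mri_lms, ius_lms))  # 2.5
```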

2.8. Statistical Analysis

Statistical analysis was performed using the open-source software jamovi from the jamovi project (Version 2.3.21, computer software, retrieved from https://www.jamovi.org, accessed on 20 March 2024) [30]. The Shapiro–Wilk test was used to test for normal distribution of differences between groups as a prerequisite for the paired t-test. If no normal distribution was given, the Wilcoxon signed-rank test was applied as a non-parametric test. A two-way random ICC model was used to calculate the absolute agreement of manual segmentations. The significance level was set to p < 0.05.
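For orientation, the test statistic of the paired t-test can be sketched as below. This is not the jamovi implementation: the normality check and the p-value lookup are left to a statistics package, and the function name and input values are hypothetical.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t_statistic(
    first: Sequence[float], second: Sequence[float]
) -> Tuple[float, int]:
    """Return (t, degrees of freedom) for paired samples, using second - first."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two paired samples with at least two pairs")
    diffs = [b - a for a, b in zip(first, second)]
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    if sd == 0.0:
        raise ValueError("all paired differences are identical")
    n = len(diffs)
    return statistics.mean(diffs) / (sd / math.sqrt(n)), n - 1


# Hypothetical paired values, for illustration only.
t, df = paired_t_statistic([1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 4.0, 6.0])
print(round(t, 3), df)  # 5.196 3
```

The statistic depends only on the per-pair differences, which is why the normality check described above is applied to the differences rather than to each group.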

3. Results

3.1. Patient Characteristics

In total, 15 patients (male/female: 6/9, mean age: 60.27 ± 9.33 years) were included in this study. Twelve patients underwent surgery for one, two patients for two, and one patient for four cerebellar lesions in the semi-sitting position. Neuropathological diagnosis revealed metastasis (n = 8, including three patients with multiple lesions), glioma (n = 2), meningioma (n = 1), subependymoma (n = 1), cavernoma (n = 1), hematoma (n = 1), and arteriovenous malformation (n = 1). A suboccipital approach (midline/midline-right: 13/2) was chosen in all cases, osteoclastic in 14 cases (with/without C1 laminectomy: 12/2) and osteoplastic in one case. In three cases (20.00%), air bubbles were transiently detected intraoperatively by transesophageal echocardiography (TEE) and were promptly and successfully treated. In the remaining twelve cases (80.00%), no VAE-related events or intraoperative complications were recorded; for further details, see Table 1.

Table 1.

Patient characteristics.

3.2. Tumor Characteristics and Navigation Accuracy

In total, 20 lesions in 15 patients were analyzed. Table 2 summarizes the tumor volumes segmented within the preoperative MRI and intraoperative US data sets. Manual segmentation by both experts led to an ICC of 0.994 (MRI) and 0.968 (iUS), showing excellent agreement. The manual segmentation of the tumor outlines based on preoperative MRI data revealed a mean tumor volume of 9.88 ± 10.61 cm³ (min: 0.08 cm³, max: 30.50 cm³), and based on the iUS data, the mean tumor volume was 9.15 ± 9.68 cm³ (min: 0.08 cm³, max: 29.50 cm³). The statistical analysis using the Wilcoxon signed-rank test (no normal distribution given according to Shapiro–Wilk test, p < 0.001) revealed no significant differences in tumor volumes gained by MRI-based and iUS-based tumor segmentation (p = 0.355).

Table 2.

Tumor object and iUS characteristics.

Intraoperatively, relying on reference array-based registration, the navigation accuracy was rated as “sufficient” in seven cases (see Figure 1) and as “insufficient” in the remaining eight cases. In the latter cases, the lesion was outlined manually within the acquired iUS data set. Surgery was then continued with iUS-based navigation and microscope-based AR utilizing the new outlines (see Figure 2).

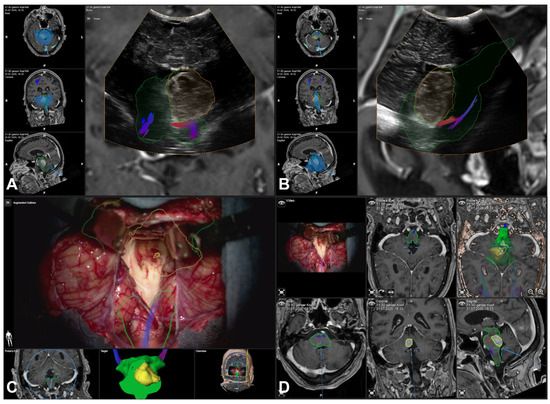

Figure 1.

Navigated intraoperative ultrasound (transducer 8862) revealed a “sufficient” navigation accuracy, as shown by the outlined contours of the MRI-based information (yellow/orange) overlaid on the iUS data set in estimated axial (A) and sagittal (B) slicing, also including outlines of the brainstem (green) as well as fiber tractography of the corticospinal tract. Showing no need for a navigation update, MRI-based preoperative information (tumor, brainstem, and corticospinal tract) was used throughout the surgery for microscope-based AR (C) and overall navigation support (D).

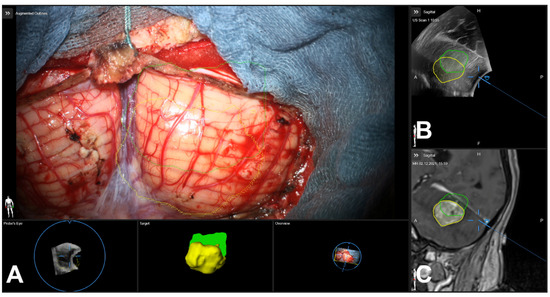

Figure 2.

Navigated intraoperative ultrasound (transducer N13C5) revealed an “insufficient” navigation accuracy, as shown by the outlined contours of MRI-based information (yellow) and iUS-based segmentation (green) of the lesion within the microscope-based AR view (A), the iUS (B), and the MRI data set (C). Following this rating, during surgery, only the iUS data and iUS-based segmentation were used for navigational purposes.

3.3. Lesion-Based Analysis

Regarding reference array-based registration, the mean Dice coefficient comparing the spatial overlap between the MRI- and iUS-based tumor segmentation was 0.42 ± 0.30, ranging from 0.00 to 0.87. The spatial overlap was rated as “poor” for five, “fair” for five, “moderate” for three, “good” for five, and “excellent” for two lesions. For the rigid image-based registration of the preoperative MRI and intraoperatively acquired US data based on tumor outlines, a mean Dice coefficient of 0.65 ± 0.23 was calculated, ranging from 0.00 to 0.88. The spatial overlap was rated as “poor” for one, “fair” for one, “moderate” for four, “good” for eight, and “excellent” for six lesions.

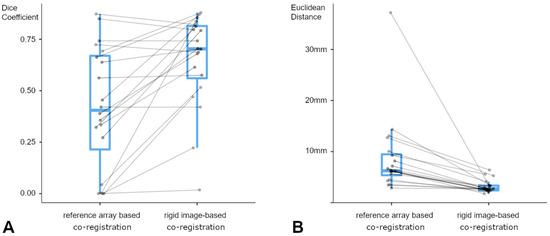

Comparing the spatial overlap achieved by standard reference array-based registration and rigid image-based registration of preoperative MRI and intraoperative US, a significant increase (paired t-test, p < 0.001) in the spatial overlap was seen in favor of rigid image-based registration; see Figure 3A.

Figure 3.

Plotted Dice coefficients (A) for reference array-based co-registration (left) and rigid image-based co-registration (right) show a significant improvement of spatial overlap between MRI- and iUS-based segmentation (paired t-test, p < 0.001). Despite its size dependency, in one case of a small lesion and low-volume iUS data set, spatial overlap did not improve significantly. Plotted mean Euclidean distances (B) for reference array-based co-registration (left) and rigid image-based co-registration (right) display a significant decrease (Wilcoxon signed-rank test, p < 0.001), even in a case with an initial mean offset of 37.60 mm.

3.4. Landmark-Based Analysis

For standard reference array-based registration, the mean Euclidean distance as a measure for the registration accuracy between corresponding landmarks in the preoperative MRI data and intraoperative US data was 8.69 ± 6.23 mm (median 6.24 mm), ranging from 2.85 mm to 37.2 mm. For rigid image-based registration, the resulting mean Euclidean distance was 3.19 ± 2.73 mm (median 2.73 mm) with a minimum distance of 1.77 mm and a maximum distance of 6.40 mm.

Comparing the mean Euclidean distance per lesion between the reference array- and image-based MRI-iUS registration as a measure of the registration quality, the image-based registration showed significantly lower distances (Wilcoxon signed-rank test, p < 0.001) between the corresponding landmarks and, therefore, a higher registration accuracy between the MRI and iUS data; see Figure 3B.

3.5. Illustrative Cases

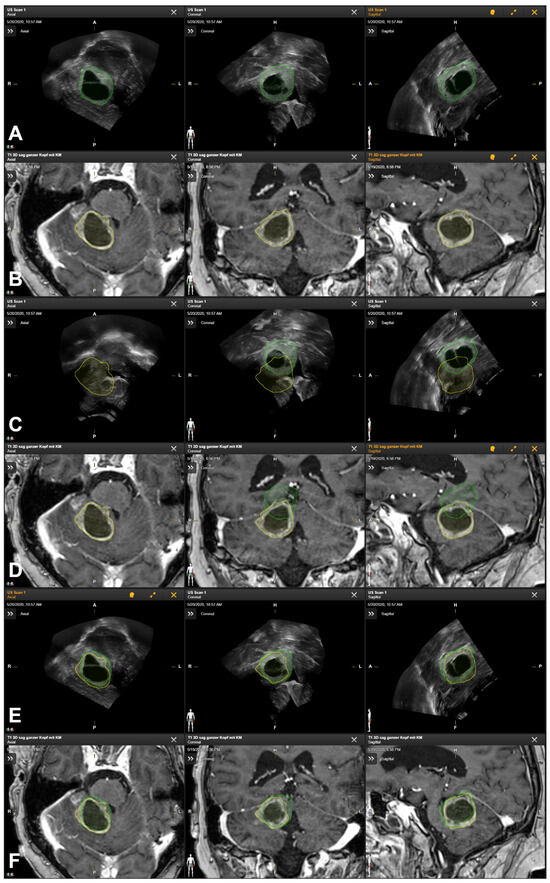

Patient No. 1 is a 42-year-old male patient with a cerebellar anaplastic astrocytic tumor. A suboccipital osteoclastic trepanation with C1 laminectomy was performed. Patient registration was achieved using a landmark-based registration with median initial accuracy. According to the intraoperatively acquired ultrasound data (178 slices, an average slice thickness of 0.5 mm), the lesion was clearly depictable and completely included in the data set. In parallel, a clinically significant mismatch between the preoperative MRI data and the MRI-based tumor segmentation (see Figure 4A) and the anatomical landmarks and tumor outlines within the iUS data set (see Figure 4B) was seen (see Figure 4C,D). Intraoperatively, the lesion was outlined manually within the iUS data set, and standalone iUS-based navigation support was used throughout the surgery with the outlined tumor also provided within the microscope-based AR navigation.

Figure 4.

Manual segmentation of tumor outlines based on preoperative MRI data (segmentation in yellow, (A)) and navigated intraoperative US data (segmentation in green, (B)) showing the spatial mismatch of preoperative MRI-based tumor outlines and intraoperative iUS data leading to an iUS-based navigation update by manually outlining the tumor within the intraoperatively acquired US data set further used for navigation purposes in case of reference array-based registration (C,D) as well as good match between both data sets after rigid image-based registration of MRI and iUS data (E,F).

Retrospective rigid image-based MRI-iUS co-registration (see Figure 4E,F) considerably improved the co-registration, increasing the spatial overlap of the outlined lesion from 0.27 to 0.79. Assessed on ten uniquely identifiable landmarks, the mean Euclidean distance between the corresponding landmarks was 14.37 ± 1.97 mm (range: 10.76 mm to 17.31 mm) for the reference array-based registration, which improved to 3.35 ± 1.43 mm (range: 1.05 mm to 5.56 mm) for the image-based MRI-iUS registration.

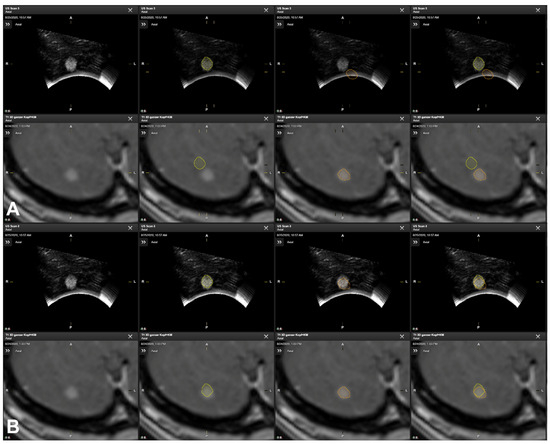

Patient No. 3 is a 62-year-old female patient with two colon carcinoma metastases in the cerebellum. The tumor volume was 8.54 cm³ and 0.09 cm³, respectively. A suboccipital osteoclastic trepanation with C1 laminectomy was performed. Patient registration was achieved using landmark-based registration with a median initial accuracy. In the first intraoperatively acquired ultrasound data set (125 slices, an average slice thickness of 0.5 mm), the larger lesion was clearly depictable and completely included, showing a “good” spatial overlap of the lesion outlines (DSC 0.74) with a moderate mean Euclidean distance of 4.5 mm. Further intraoperatively acquired ultrasound data (36 slices, an average slice thickness of 0.5 mm) covering the smaller lesion showed a clear discrepancy between the MRI and iUS data with no initial spatial overlap (DSC 0.00) of the outlined lesions and a mean Euclidean distance of 6.33 mm, which could be improved to a spatial overlap of 0.47 and a mean Euclidean distance of 1.77 mm, respectively, allowing for the intraoperative localization and identification of the lesion (see Figure 5). Intraoperatively, the smaller lesion was outlined manually in the iUS data set, and standalone iUS-based navigation support was used to continue navigation-supported surgery for this case.

Figure 5.

Manual segmentation of tumor outlines based on preoperative MRI data (orange) and navigated iUS data (yellow) showing the spatial mismatch seen in reference array-based co-registration, indicating the need for a navigation update (A), whereas navigation accuracy was increased after rigid image-based co-registration (B), showing a suitable spatial overlap of MRI- and iUS-based tumor outlines.

4. Discussion

Since its introduction in the 1990s, neuronavigation, first used as pointer-based navigation and later also as microscope-based AR navigation, has proven its clinical benefit and has become an indispensable, routine tool for a wide variety of cranial and spinal neurosurgical procedures [1,2,3,4,31]. Neuronavigation is known to support intraoperative surgical orientation, the precise planning of surgical trajectories, and the identification of the spatial relationship between the lesion and functional risk structures (e.g., eloquent areas, fiber tracts, vascular structures). It also contributes to pre- and intraoperative surgical decision making and to extending the radicality of resection while simultaneously increasing patient safety [4,32,33,34,35,36].

However, the benefits and surgical advantages of neuronavigation, further extended by microscope-based AR, which eases the mental transfer of image data onto the surgical field, as well as its application safety fundamentally depend on the highly precise and accurate mapping of preoperatively acquired data onto the intraoperative surgical situs, i.e., on linking image space and physical space, at the beginning and throughout the entire course of surgery. Achieving this remains a multifactorial and essential challenge [1,2,4,15,32,35,37,38,39,40].

Besides non-user-dependent factors such as the intrinsic accuracy of the neuronavigation system itself, several factors can be addressed to increase the overall navigation accuracy. The overall navigation accuracy can be divided into the imaging, technical, and registration accuracy, the latter being the most relevant contributing factor before intracranial manipulation when mapping the image and patient space. The intraoperative accuracy is mainly impaired by brain deformations during surgery due to the loss of CSF, increased swelling, the insertion of brain retractors, and the effects of gravity [4,15,16,33,34]. Even before brain shift takes effect, the navigation accuracy is known to decrease throughout surgery due to an altered spatial relationship between the patient’s head and the reference array [3,41,42]. This can be compensated for up to a certain point, e.g., by re-registration utilizing acquired landmarks [42] or in-plane adaptations of AR when using microscope-based AR navigation [15]. Non-linear deformations remain a fundamental challenge. Whereas a high clinical accuracy is expected throughout surgery, the clinical accuracy steadily decreases and can, in the worst case, lead to a total loss of navigation capabilities [3,4,43].

Being aware of this, neuronavigation support is commonly implemented in supratentorial neurosurgical procedures. However, it has not yet found its way into posterior fossa surgery in the sitting position due to severe concerns about impaired accuracy [11]. The prone and sitting positions are both commonly used for posterior fossa surgery [44], each providing advantages and challenges for neurosurgeons and neuroanesthesiologists; which approach to choose is still a controversial issue and requires balancing the risks related to positioning against the surgical and anesthesiological advantages [12,13,44]. The prone position is widely used for posterior approaches and has been shown to decrease the VAE risk compared to the sitting position. Nevertheless, VAE is still reported to occur in 10% to 17% of all craniotomy cases in the prone position and is not exclusively seen in the sitting approach [12,44]. The prone position is also challenging from an anesthesiological perspective in terms of ensuring adequate oxygenation and ventilation, maintaining hemodynamics, and securing intravenous lines and the tracheal tube, especially the access to the patient’s airways. The sitting position offers a broad range of surgical advantages, such as the gravitational drainage of venous blood and CSF from the surgical site, which improves surgical orientation and allows for a cleaner dissection while reducing the need for bipolar coagulation, facilitated cerebellar retraction and access to midline structures and deep areas, a significant reduction in cerebellar swelling, cerebral venous decompression, and reduced blood loss [12,13]. Anesthesiological benefits include ventilation with lower airway pressure, less impairment of diaphragmatic motion, improved access to the tracheal tube, and easier access to the patient in case of emergency [12,13,44]. However, there are also notable risks associated with this positioning technique.
The two major reported complications are intraoperative hypotension and VAE with possibly paradoxical air embolism, which might be devastating in the presence of a PFO, typically considered a contraindication [12,13,44,45]. The reported VAE incidence varies widely, with an overall incidence of 39% and a range from 1% to 76% [45], markedly depending on the monitoring method and settings used. TEE seems to be the most sensitive method, providing the early detection of even small VAEs of little clinical significance [12,45,46], as also seen in this study, with an incidence of 20%. In all cases, only transient VAE-related events were recorded, which were promptly and successfully treated.

The very advantages of the sitting position, e.g., the gravitational drainage of CSF, lead to non-linear deformations of the brain tissue in relation to the preoperative imaging data typically used for neuronavigation support and thus raise doubts about the applicability of neuronavigation assistance in certain cases [11]. In general, as previously shown, an intraoperative patient position that differs from the position during preoperative MRI acquisition, as is the case not only in sitting but also in prone positioning, leads to inaccuracies in mapping the image and patient space as early as during patient registration, which relevantly affects the overall navigation accuracy and the mapping of intracranial structures [10,12,14]. Various approaches can be considered for patient registration, such as paired-point registration using anatomical, artificial, or bony landmarks, surface matching techniques, or automatic registration [3]. Manual registration is highly user-dependent, and registration errors arise due to skin distortions caused by gravity, shifting, reduction in muscle tonus, intubation, nasogastric tube placement, skin movement due to fixation within the head clamp, and skin shifting when using the navigation pointer; they are also affected by varying patient positioning [14]. Where intraoperative imaging such as CT or MRI is available, automatic patient registration is a valuable and highly accurate registration method that is user-independent and independent of the effects of patient positioning during preoperative image acquisition, leading to mean target registration errors of less than 1 mm [1,47,48,49]. However, it cannot be used in specific surgical positions such as the sitting position for accessing the posterior fossa [14].
Besides patient registration, a patient position that differs between the preoperative MRI used for surgical planning and the intraoperative setup also affects intracranial structures. Compared to the cerebrum, the posterior fossa includes a larger amount of CSF and larger subarachnoidal spaces, allowing for increased movement of the brain depending on the patient’s position due to the effects of gravity; based on MRI studies, positional variations of up to 11.46 mm have been reported [10,50,51,52,53,54]. This supports previous findings of higher navigation inaccuracies in the prone and sitting positions when targeting the posterior fossa, with a partial loss of navigational support, compared to standard supratentorial approaches [10,55,56].

However, discrepancies between the image and patient space throughout surgery are daily challenges in the application of neuronavigation, and there are several opportunities to compensate for them [15,16,31,34,39,57,58]. While rigid positional shifts, e.g., due to a change in the relation between the reference array and the patient’s head, can be accounted for by re-registration, inaccuracies caused by intraparenchymal non-linear shifting due to patient positioning and surgical manipulation (e.g., loss of CSF, swelling, retractors, gravity) over the course of surgery can be overcome using intraoperative imaging techniques or partially by AR [15]. The use of iMRI is generally limited by its availability, high cost, and time consumption, and intraoperative patient positioning, such as the sitting position, further limits its applicability [16,17]. First introduced in the 1980s, iUS gained traction due to its capability for real-time imaging and repeatability without significant interruption of the surgical workflow. It is cost-efficient, safe, and straightforward to use [18,59]. Over the years, iUS technology has undergone tremendous advancements and nowadays offers an improved spatial and excellent temporal resolution, overcoming the initial limitations caused by a comparably low resolution, high user dependency, and lack of training and experience [59]. Although iUS enables oblique imaging, anatomical orientation within the limited field of view of iUS data, which results from the spatial restrictions of the craniotomy, is challenging, and users might be encouraged to acquire iUS approximately aligned with the conventional anatomical planes (axial, coronal, sagittal) to allow for a more intuitive understanding [59].
With its integration into neuronavigation systems, tracking the transducer similarly to other navigation tools allows for the regular assessment of the navigation accuracy, brain shift, and extent of resection, depending on the echogenicity of the lesion. With advancements in 3D iUS imaging (3D transducer or stacking of 2D images), it even offers the opportunity to update navigation using the intraoperatively acquired 3D iUS data, which accurately represent the current intraoperative configuration of the brain following patient positioning, brain shift, and so on. With its navigated use, iUS has gained further traction and has become an essential part of surgical routines in many setups [19,20,21,22,23,24,25]. Most sites follow a specific standardized protocol, which supports a steep learning curve and allows for the transfer of this technique from simple to complex cases through growing technical and clinical experience, including experience in the interpretation of iUS data and the visual matching of multimodal data for navigational purposes. iUS has shown a good correlation with iMRI in determining the extent of resection and identifying tumor remnants in metastasis and glioma surgery and thus, especially in combination with navigation, facilitates tumor removal and extended resection, improving the overall patient survival and quality of life [60,61].

In this proof-of-concept study, the capability of navigated iUS to identify navigation inaccuracies and to compensate for those inaccuracies was evaluated. In doing so, iUS was conducted before the dural opening as part of our institutional routine but can also be repeated at any time after the dural opening and over the course of resection depending on the surgeon’s decision to verify and update navigation, to identify remaining tumor, or to determine the extent of resection intraoperatively. The initial patient registration was, in all cases, performed by landmark-based registration with medium to high accuracy, depending on the quality of the preoperative imaging, skin shift, application of adhesive skin fiducials, and user-dependent registration. Within the clinical workflow observed in this study, the registration of iUS and preoperative MRI images was based on the spatial information of the precalibrated iUS probe within the patient coordinate system (reference array-based registration) and thus provided an estimate of the concordance between the pre- and intraoperative data [25,62,63,64,65,66] and allowed for an estimate of the navigation accuracy encompassing the positional and intraparenchymal shift. Consequently, if a “severe” mismatch was seen, disabling the use of navigation support, navigation support including microscope-based AR was enabled again by outlining the lesion within the iUS data set and continuing surgery based on iUS alone, thereby losing MRI-based pre-segmented information about related structures. Keeping this information while using the “up-to-date” spatial information provided by the intraoperative ultrasound requires image-based co-registration approaches and the matching of pre- and intraoperative data to enable multimodal navigation support throughout the surgery. 
Various rigid image-based MRI–iUS co-registration methods are available in research setups [67,68], based on varying approaches such as feature extraction and descriptors or the use of hyperechogenic structures and joint probabilities. Another approach implemented rigid co-registration for CT and iUS data [27] and was later adapted to MRI and iUS data using the Linear Correlation of Linear Combination (LC2) similarity metric, allowing for rigid image co-registration within a few seconds [28]; it has been successfully technically evaluated within the CuRIOUS2018 Challenge [69]. Based on this approach, rigid image-based MRI-iUS co-registration has been implemented (Brainlab, Munich, Germany). A prior release was applied in a retrospective case series including patients with intracranial lesions in a setup comparable to that used in this study [70], and the method has since been released as a fully integrated part of the navigation system, easing the intraoperative workflow and usage. The results of the CuRIOUS2018 Challenge and the prerelease evaluation of a prototype showed a significant decrease in the navigation inaccuracy by iUS-based navigation updates relying on rigid image-based co-registration. In contrast to the previous reports, in this study both a lesion-based similarity metric (Dice coefficient) and a landmark-based metric (Euclidean distance) were assessed for the analysis of the navigation accuracy, for the reference array-based approach as well as for the rigid image-based MRI-iUS co-registration.

The tumor volumes outlined in the preoperative MRI and iUS data did not differ significantly, suggesting that both modalities can be considered comparable in terms of tumor delineation, which is a prerequisite for the lesion-based analysis of navigation accuracy and is in line with previous studies showing the comparability of MRI and iUS in tumor delineation [21,25,71]. Therefore, the spatial overlap of the segmented tumor volumes was assessed using the Dice coefficient, ranging from 0.00 (no overlap) to 1.00 (perfect match), as a measure of the lesion-based co-registration quality. The reference array-based registration revealed a Dice coefficient of 0.42 ± 0.30, ranging from 0.00 to 0.87, whereas rigid image-based co-registration yielded a significantly improved Dice coefficient of 0.65 ± 0.23, ranging from 0.00 to 0.88. The Dice coefficient seen in reference array-based co-registration was lower than that in previous studies including supratentorial lesions only [21,25], underpinning the potentially decreased navigation accuracy expected in the sitting position when assessing the posterior fossa. Although small lesions have a higher likelihood of low spatial overlap due to the Dice coefficient’s dependency on object size [72,73], the significant increase in the spatial overlap achieved by rigid image-based co-registration now offers the opportunity to perform iUS-based navigation updates even in cases of severe mismatch. This was seen in one case with an increase in the spatial overlap from 0.00 to 0.84 (tumor volume 30.50 cm³), in which the mismatch was caused not only by brain shift but also by a positional shift, most plausibly due to the handling of the reference array [3,15].
In the case of a smaller lesion, the acquired iUS data set used for co-registration might also only cover a small range (as partially seen in Table 2), limiting the anatomical information in the iUS data set besides the lesion itself that is employed within the rigid co-registration approach, and therefore potentially leading to a limited improvement concerning the spatial overlap. However, in the case of a small lesion with an iUS data set covering a larger portion of the brain, a higher improvement was seen.
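
The Dice coefficient and its dependency on object size can be illustrated with a minimal sketch. It assumes that each segmented lesion is represented as a set of integer voxel coordinates; the spherical toy lesions, the function names, and the convention of returning 1.0 for two empty segmentations are illustrative assumptions and do not reproduce the segmentation software used in this study.

```python
from itertools import product


def dice_coefficient(a, b):
    """Dice similarity coefficient 2 * |A & B| / (|A| + |B|) of two voxel sets."""
    if not a and not b:
        # Convention chosen for this sketch: two empty segmentations match.
        return 1.0
    return 2.0 * len(a & b) / (len(a) + len(b))


def sphere_voxels(center, radius):
    """Set of integer voxel coordinates inside a sphere (toy lesion model)."""
    cx, cy, cz = center
    r = int(radius)
    return {
        (x, y, z)
        for x, y, z in product(
            range(cx - r, cx + r + 1),
            range(cy - r, cy + r + 1),
            range(cz - r, cz + r + 1),
        )
        if (x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2 <= radius ** 2
    }


if __name__ == "__main__":
    shift = 5  # identical displacement (in voxels) applied to both toy lesions
    for radius in (2, 10):
        reference = sphere_voxels((0, 0, 0), radius)
        displaced = sphere_voxels((shift, 0, 0), radius)
        dsc = dice_coefficient(reference, displaced)
        print(f"radius {radius:2d} voxels: DSC = {dsc:.2f}")
```

With the same displacement, the small toy lesion no longer overlaps its reference at all, whereas the large one retains a substantial overlap, which is why a low Dice coefficient for a small lesion does not necessarily indicate a larger registration error.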

Besides the spatial overlap of lesions seen in the MRI and iUS data, the quality of co-registration was also assessed in a landmark-based manner, comparing the distances between the corresponding landmarks in both modalities, as previously performed in a recent pilot study with supratentorial lesions [70]. In this study on infratentorial lesions surgically treated in the sitting position, the initial reference array-based registration led to a mean Euclidean distance of 8.69 ± 6.23 mm (ranging from 2.85 mm to 37.2 mm), which was significantly improved by rigid image-based co-registration to a mean Euclidean distance of 3.19 ± 2.73 mm (ranging from 1.77 mm to 6.40 mm). In the one case with an initial maximum Euclidean distance of 37.2 mm between the corresponding landmarks, not only the intraparenchymal shift but also the positional shift of rigid structures (e.g., due to the handling of reference arrays) contributed to the overall high navigation inaccuracy. Owing to the configuration of the posterior fossa, the mean Euclidean distance between the corresponding landmarks in reference array-based co-registration was, as expected, higher than in previous studies with supratentorial lesions, even though studies on navigation within the posterior fossa with an explicit analysis of navigation accuracy are rare [11]. The main effects of brain shift are expected after dural opening. Still, larger discrepancies between MRI and iUS data have already been reported for supratentorial lesions, with mean distances of 3 mm to 4 mm even before dural opening.
Higher inaccuracies might be caused by mechanical, operational, and technical effects (e.g., skin shift, shift of markers, forces during craniotomy, interchange of non-sterile/sterile reference array, draping) [2,3,15,52], by the manual patient registration procedure, which is also reported to have a limited accuracy with a target registration error of about 1.8 to 5.0 mm [3,49,74], and by intraparenchymal shift due to patient positioning differing from the position during preoperative imaging, especially within the posterior fossa [10,50,51,52,53,54]. After rigid image-based co-registration, the mean distance significantly decreased to within the range of the target registration error of the manual registration procedure itself; however, it is not yet close to zero. On the one hand, this is partially caused by uncertainty in identifying the same anatomical structures in both modalities and by the choice of anatomical structures (hard/soft tissue landmarks), which are shifted differently by gravitational effects [11]. On the other hand, brain shift caused by gravitational effects even before dural opening, deformations caused by the convex iUS probe when it is gently pushed onto the dura while sweeping across the accessible area of the craniotomy for improved image quality and coupling, and, in the later course of surgery, brain shift due to CSF loss result in non-linear deformations of the brain tissue that cannot be fully compensated for using only rigid co-registration approaches. Hence, there is a need for non-linear co-registration methods combining preoperative MRI and intraoperative US to overcome these limitations [59,75,76,77,78,79], as existing methods provide promising results but remain computationally time-consuming, limiting their intraoperative applicability. In addition, the image quality seems to be a relevant factor contributing to the overall co-registration quality.
The iUS volume needs to cover a broad area of brain tissue containing sufficient structural information for co-registration, which might be limited by small craniotomies and requires small-footprint, high-resolution probes [59]. The typical acquisition of 3D iUS data sets using 2D probes is prone to acquisition errors and distortions while manually sweeping the probe across the situs, which might be overcome by the introduction of matrix phased array probes that immediately generate a 3D volume without any sweep, albeit with a lower resolution [59,80].
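
Why a rigid update cannot bring the landmark distance down to zero in the presence of non-linear deformation can be made concrete with a toy example. The sketch below uses a deliberately simplified, translation-only correction (aligning the landmark centroids); it is not the LC2-based image registration evaluated in this study, and all function names and coordinates are hypothetical.

```python
import math


def centroid(points):
    """Arithmetic mean of a list of 3D points."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))


def centroid_translation(source, target):
    """Translation that moves the centroid of `source` onto that of `target`."""
    return tuple(t - s for s, t in zip(centroid(source), centroid(target)))


def translate(points, offset):
    """Apply the same offset to every point."""
    return [tuple(c + d for c, d in zip(p, offset)) for p in points]


def mean_distance(a, b):
    """Mean Euclidean distance between corresponding points."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


def residual_after_update(mri, ius):
    """Mean landmark distance before and after the translation-only update."""
    updated = translate(mri, centroid_translation(mri, ius))
    return mean_distance(mri, ius), mean_distance(updated, ius)


if __name__ == "__main__":
    # Hypothetical landmark coordinates (mm), not patient data.
    mri = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0), (0.0, 0.0, 10.0)]
    uniform = translate(mri, (5.0, 0.0, 0.0))
    # Same 5 mm shift plus landmark-specific displacements of two landmarks.
    deformed = [(5.0, 0.0, 0.0), (15.0, 0.0, 0.0), (5.0, 12.0, 0.0), (5.0, 0.0, 8.0)]
    for label, ius in (("uniform shift", uniform), ("shift + local deformation", deformed)):
        before, after = residual_after_update(mri, ius)
        print(f"{label}: {before:.2f} mm -> {after:.2f} mm")
```

A displacement shared by all landmarks is removed completely, whereas the landmark-specific component leaves a residual distance. A full rigid registration would additionally estimate a rotation, but it is subject to the same limitation.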

Although neuronavigation support, including microscope-based AR and intraoperative imaging, is routinely implemented in neurosurgical procedures, doubts about its applicability in the sitting position when targeting the posterior fossa limit its use, even though it is imperative to choose the best surgical approach and optimal trajectory while limiting tissue dissection to a minimum [11]. This is also the case for supratentorial lesions, especially small lesions or lesions that are difficult to identify in situ. The use of navigated iUS offers a straightforward opportunity to verify the navigation accuracy and thus to confirm its applicability, or to update navigation either by using the iUS data set itself as the basis for navigation support or by adapting the preoperative planning data to the iUS data set, thereby overcoming the limitations of accuracy and fully benefitting from intraoperative navigation support for maximum safe tumor resection. Specifically, in cases where lesions might not be clearly identifiable in iUS data or where risk structures lie in close vicinity to the lesion, the co-registration of preoperative and intraoperative data offers a unique chance for multimodal navigation support throughout surgery, as is also routinely used in other cranial approaches. Navigation support might also assist in the education and training of residents and less experienced surgeons, supporting intraoperative orientation and allowing for the mental mapping of the surgical trajectory, the surgical field, intraoperative landmarks, and multimodal image data while harnessing the surgical advantages of the sitting position.

The limitations of this study encompass its retrospective character and the small sample size due to the stringent inclusion and exclusion criteria (e.g., single surgeon, lesion fully visible, no cerebellopontine angle tumors), which aimed to reduce the variability in this proof-of-concept study. Having proven the applicability in this small and “optimal” cohort, the approach needs to be transferred to other cases with non-optimal image data with respect to lesion coverage, iUS volume, and image quality, and to different users, to underpin its general usability. Because of the retrospective character of this study and because the rigid image-based co-registration approach was released only recently and was therefore not used intraoperatively here, an immediate intraoperative clinical comparison of both methods could not yet be performed, and further studies are needed to evaluate its intraoperative clinical benefits (as subjectively perceived by the surgeon). Prospective studies should also include iUS data acquisition before and after dural opening and during surgery to analyze and support the applicability of iUS-based navigation updates in the later course of surgery.

The loss of navigation accuracy and brain shift are common challenges in neurosurgical applications, and iUS offers an opportunity to analyze these. The biomechanical properties of the brain tissue, as well as brain shift dynamics depending on different surgical positions, could also be further investigated, allowing for a deeper understanding of the navigation applicability and intraoperative needs for navigation updates.

5. Conclusions

Navigated intraoperative ultrasound can serve as an ideal and easy-to-use tool to enable navigation and microscope-based AR support in posterior fossa surgery in the sitting position, a position that offers various surgical benefits but is also associated with a higher risk of navigation inaccuracies. Navigated iUS provides real-time information about the navigation accuracy and an opportunity to update navigation support in response to intraoperative positional and intraparenchymal alterations and non-linear deformations of the brain. In this way, it supports intraoperative orientation and allows for the mental mapping of the surgical trajectory, surgical field, landmarks, and multimodal imaging data, not only for experienced surgeons but also for residents and less experienced surgeons.

Author Contributions

Conceptualization, M.H.A.B.; methodology, M.H.A.B.; software, M.H.A.B.; validation, M.H.A.B. and A.G.; formal analysis, M.H.A.B. and A.G.; investigation, M.H.A.B. and C.N.; resources, M.H.A.B. and C.N.; data curation, M.H.A.B., A.G., M.G., M.P., B.S. and C.N.; writing—original draft preparation, M.H.A.B.; writing—review and editing, M.H.A.B., A.G., M.G., M.P., B.S. and C.N.; visualization, M.H.A.B.; supervision, M.H.A.B. and C.N.; project administration, M.H.A.B. All authors have read and agreed to the published version of the manuscript.

Funding

Open access funding provided by the Open Access Publishing Fund of Philipps-Universität Marburg.

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki, and approved by the local ethics committee of the University of Marburg (No. 99/18 and RS 23/10).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data in this study are available on request from the corresponding author. The data are not publicly available due to privacy restrictions.

Acknowledgments

We would like to thank J.W. Bartsch for proofreading the manuscript.

Conflicts of Interest

M.B. and C.N. are scientific consultants for Brainlab. A.G., M.G., M.P. and B.S. declare no conflicts of interest.

References

- Carl, B.; Bopp, M.; Sass, B.; Pojskic, M.; Gjorgjevski, M.; Voellger, B.; Nimsky, C. Reliable navigation registration in cranial and spine surgery based on intraoperative computed tomography. Neurosurg. Focus 2019, 47, E11. [Google Scholar] [CrossRef] [PubMed]

- Watanabe, Y.; Fujii, M.; Hayashi, Y.; Kimura, M.; Murai, Y.; Hata, M.; Sugiura, A.; Tsuzaka, M.; Wakabayashi, T. Evaluation of errors influencing accuracy in image-guided neurosurgery. Radiol. Phys. Technol. 2009, 2, 120–125. [Google Scholar] [CrossRef] [PubMed]

- Stieglitz, L.H.; Fichtner, J.; Andres, R.; Schucht, P.; Krahenbuhl, A.K.; Raabe, A.; Beck, J. The silent loss of neuronavigation accuracy: A systematic retrospective analysis of factors influencing the mismatch of frameless stereotactic systems in cranial neurosurgery. Neurosurgery 2013, 72, 796–807. [Google Scholar] [CrossRef] [PubMed]

- Kantelhardt, S.R.; Gutenberg, A.; Neulen, A.; Keric, N.; Renovanz, M.; Giese, A. Video-Assisted Navigation for Adjustment of Image-Guidance Accuracy to Slight Brain Shift. Oper. Neurosurg. 2015, 11, 504–511. [Google Scholar] [CrossRef] [PubMed]

- Kelly, P.J.; Alker, G.J., Jr.; Goerss, S. Computer-assisted stereotactic microsurgery for the treatment of intracranial neoplasms. Neurosurgery 1982, 10, 324–331. [Google Scholar] [CrossRef] [PubMed]

- Roberts, D.W.; Strohbehn, J.W.; Hatch, J.F.; Murray, W.; Kettenberger, H. A frameless stereotaxic integration of computerized tomographic imaging and the operating microscope. J. Neurosurg. 1986, 65, 545–549. [Google Scholar] [CrossRef]

- Cannizzaro, D.; Zaed, I.; Safa, A.; Jelmoni, A.J.M.; Composto, A.; Bisoglio, A.; Schmeizer, K.; Becker, A.C.; Pizzi, A.; Cardia, A.; et al. Augmented Reality in Neurosurgery, State of Art and Future Projections. A Systematic Review. Front. Surg. 2022, 9, 864792. [Google Scholar] [CrossRef] [PubMed]

- Meola, A.; Cutolo, F.; Carbone, M.; Cagnazzo, F.; Ferrari, M.; Ferrari, V. Augmented reality in neurosurgery: A systematic review. Neurosurg. Rev. 2017, 40, 537–548. [Google Scholar] [CrossRef] [PubMed]

- Leger, E.; Drouin, S.; Collins, D.L.; Popa, T.; Kersten-Oertel, M. Quantifying attention shifts in augmented reality image-guided neurosurgery. Healthc. Technol. Lett. 2017, 4, 188–192. [Google Scholar] [CrossRef]

- Dho, Y.S.; Kim, Y.J.; Kim, K.G.; Hwang, S.H.; Kim, K.H.; Kim, J.W.; Kim, Y.H.; Choi, S.H.; Park, C.K. Positional effect of preoperative neuronavigational magnetic resonance image on accuracy of posterior fossa lesion localization. J. Neurosurg. 2019, 133, 546–555. [Google Scholar] [CrossRef]

- Hermann, E.J.; Petrakakis, I.; Polemikos, M.; Raab, P.; Cinibulak, Z.; Nakamura, M.; Krauss, J.K. Electromagnetic navigation-guided surgery in the semi-sitting position for posterior fossa tumours: A safety and feasibility study. Acta Neurochir. 2015, 157, 1229–1237. [Google Scholar] [CrossRef] [PubMed]

- Ganslandt, O.; Merkel, A.; Schmitt, H.; Tzabazis, A.; Buchfelder, M.; Eyupoglu, I.; Muenster, T. The sitting position in neurosurgery: Indications, complications and results. a single institution experience of 600 cases. Acta Neurochir. 2013, 155, 1887–1893. [Google Scholar] [CrossRef] [PubMed]

- Saladino, A.; Lamperti, M.; Mangraviti, A.; Legnani, F.G.; Prada, F.U.; Casali, C.; Caputi, L.; Borrelli, P.; DiMeco, F. The semisitting position: Analysis of the risks and surgical outcomes in a contemporary series of 425 adult patients undergoing cranial surgery. J. Neurosurg. 2017, 127, 867–876. [Google Scholar] [CrossRef] [PubMed]

- Furuse, M.; Ikeda, N.; Kawabata, S.; Park, Y.; Takeuchi, K.; Fukumura, M.; Tsuji, Y.; Kimura, S.; Kanemitsu, T.; Yagi, R.; et al. Influence of surgical position and registration methods on clinical accuracy of navigation systems in brain tumor surgery. Sci. Rep. 2023, 13, 2644. [Google Scholar] [CrossRef] [PubMed]

- Bopp, M.H.A.; Corr, F.; Sass, B.; Pojskic, M.; Kemmling, A.; Nimsky, C. Augmented Reality to Compensate for Navigation Inaccuracies. Sensors 2022, 22, 9591. [Google Scholar] [CrossRef] [PubMed]

- Nimsky, C.; Ganslandt, O.; Cerny, S.; Hastreiter, P.; Greiner, G.; Fahlbusch, R. Quantification of, visualization of, and compensation for brain shift using intraoperative magnetic resonance imaging. Neurosurgery 2000, 47, 1070–1079; discussion 1079–1080. [Google Scholar] [CrossRef]

- Reinertsen, I.; Lindseth, F.; Askeland, C.; Iversen, D.H.; Unsgard, G. Intra-operative correction of brain-shift. Acta Neurochir. 2014, 156, 1301–1310. [Google Scholar] [CrossRef] [PubMed]

- Sastry, R.; Bi, W.L.; Pieper, S.; Frisken, S.; Kapur, T.; Wells, W., 3rd; Golby, A.J. Applications of Ultrasound in the Resection of Brain Tumors. J. Neuroimaging 2017, 27, 5–15. [Google Scholar] [CrossRef]

- Gronningsaeter, A.; Kleven, A.; Ommedal, S.; Aarseth, T.E.; Lie, T.; Lindseth, F.; Lango, T.; Unsgard, G. SonoWand, an ultrasound-based neuronavigation system. Neurosurgery 2000, 47, 1373–1379; discussion 1379–1380. [Google Scholar] [CrossRef]

- Bopp, M.H.A.; Emde, J.; Carl, B.; Nimsky, C.; Sass, B. Diffusion Kurtosis Imaging Fiber Tractography of Major White Matter Tracts in Neurosurgery. Brain Sci. 2021, 11, 381. [Google Scholar] [CrossRef]

- Sass, B.; Zivkovic, D.; Pojskic, M.; Nimsky, C.; Bopp, M.H.A. Navigated Intraoperative 3D Ultrasound in Glioblastoma Surgery: Analysis of Imaging Features and Impact on Extent of Resection. Front. Neurosci. 2022, 16, 883584. [Google Scholar] [CrossRef] [PubMed]

- Unsgaard, G.; Ommedal, S.; Muller, T.; Gronningsaeter, A.; Nagelhus Hernes, T.A. Neuronavigation by intraoperative three-dimensional ultrasound: Initial experience during brain tumor resection. Neurosurgery 2002, 50, 804–812; discussion 812. [Google Scholar] [CrossRef] [PubMed]

- Unsgaard, G.; Rygh, O.M.; Selbekk, T.; Muller, T.B.; Kolstad, F.; Lindseth, F.; Hernes, T.A. Intra-operative 3D ultrasound in neurosurgery. Acta Neurochir. 2006, 148, 235–253; discussion 253.

- Ohue, S.; Kumon, Y.; Nagato, S.; Kohno, S.; Harada, H.; Nakagawa, K.; Kikuchi, K.; Miki, H.; Ohnishi, T. Evaluation of intraoperative brain shift using an ultrasound-linked navigation system for brain tumor surgery. Neurol. Med. Chir. 2010, 50, 291–300.

- Saß, B.; Carl, B.; Pojskic, M.; Nimsky, C.; Bopp, M. Navigated 3D Ultrasound in Brain Metastasis Surgery: Analyzing the Differences in Object Appearances in Ultrasound and Magnetic Resonance Imaging. Appl. Sci. 2020, 10, 7798.

- Sass, B.; Bopp, M.; Nimsky, C.; Carl, B. Navigated 3-Dimensional Intraoperative Ultrasound for Spine Surgery. World Neurosurg. 2019, 131, e155–e169.

- Wein, W.; Brunke, S.; Khamene, A.; Callstrom, M.R.; Navab, N. Automatic CT-ultrasound registration for diagnostic imaging and image-guided intervention. Med. Image Anal. 2008, 12, 577–585.

- Wein, W.; Ladikos, A.; Fuerst, B.; Shah, A.; Sharma, K.; Navab, N. Global registration of ultrasound to MRI using the LC2 metric for enabling neurosurgical guidance. Med. Image Comput. Comput. Assist. Interv. 2013, 16, 34–41.

- Dice, L.R. Measures of the Amount of Ecologic Association Between Species. Ecology 1945, 26, 297–302.

- The Jamovi Project. Jamovi, 2.3; 2023. Available online: https://www.jamovi.org (accessed on 20 March 2024).

- Letteboer, M.M.; Willems, P.W.; Viergever, M.A.; Niessen, W.J. Brain shift estimation in image-guided neurosurgery using 3-D ultrasound. IEEE Trans. Biomed. Eng. 2005, 52, 268–276.

- Steinmeier, R.; Rachinger, J.; Kaus, M.; Ganslandt, O.; Huk, W.; Fahlbusch, R. Factors influencing the application accuracy of neuronavigation systems. Stereotact. Funct. Neurosurg. 2000, 75, 188–202.

- Hastreiter, P.; Rezk-Salama, C.; Soza, G.; Bauer, M.; Greiner, G.; Fahlbusch, R.; Ganslandt, O.; Nimsky, C. Strategies for brain shift evaluation. Med. Image Anal. 2004, 8, 447–464.

- Nimsky, C.; Ganslandt, O.; Hastreiter, P.; Fahlbusch, R. Intraoperative compensation for brain shift. Surg. Neurol. 2001, 56, 357–364; discussion 364–365.

- Poggi, S.; Pallotta, S.; Russo, S.; Gallina, P.; Torresin, A.; Bucciolini, M. Neuronavigation accuracy dependence on CT and MR imaging parameters: A phantom-based study. Phys. Med. Biol. 2003, 48, 2199–2216.

- Wolfsberger, S.; Rossler, K.; Regatschnig, R.; Ungersbock, K. Anatomical landmarks for image registration in frameless stereotactic neuronavigation. Neurosurg. Rev. 2002, 25, 68–72.

- Jonker, B.P. Image fusion pitfalls for cranial radiosurgery. Surg. Neurol. Int. 2013, 4, S123–S128.

- Carl, B.; Bopp, M.; Benescu, A.; Sass, B.; Nimsky, C. Indocyanine Green Angiography Visualized by Augmented Reality in Aneurysm Surgery. World Neurosurg. 2020, 142, e307–e315.

- Nabavi, A.; Black, P.M.; Gering, D.T.; Westin, C.F.; Mehta, V.; Pergolizzi, R.S., Jr.; Ferrant, M.; Warfield, S.K.; Hata, N.; Schwartz, R.B.; et al. Serial intraoperative magnetic resonance imaging of brain shift. Neurosurgery 2001, 48, 787–797; discussion 797–798.

- Gerard, I.J.; Kersten-Oertel, M.; Petrecca, K.; Sirhan, D.; Hall, J.A.; Collins, D.L. Brain shift in neuronavigation of brain tumors: A review. Med. Image Anal. 2017, 35, 403–420.

- Stieglitz, L.H. One of nature’s basic rules: The simpler the better - why this is also valid for neuronavigation. J. Neurosci. Rural Pract. 2014, 5, 115.

- Stieglitz, L.H.; Raabe, A.; Beck, J. Simple Accuracy Enhancing Techniques in Neuronavigation. World Neurosurg. 2015, 84, 580–584.

- Golfinos, J.G.; Fitzpatrick, B.C.; Smith, L.R.; Spetzler, R.F. Clinical use of a frameless stereotactic arm: Results of 325 cases. J. Neurosurg. 1995, 83, 197–205.

- Rozet, I.; Vavilala, M.S. Risks and benefits of patient positioning during neurosurgical care. Anesthesiol. Clin. 2007, 25, 631–653.

- Fathi, A.R.; Eshtehardi, P.; Meier, B. Patent foramen ovale and neurosurgery in sitting position: A systematic review. Br. J. Anaesth. 2009, 102, 588–596.

- Domaingue, C.M. Anaesthesia for neurosurgery in the sitting position: A practical approach. Anaesth. Intensive Care 2005, 33, 323–331.

- Rachinger, J.; von Keller, B.; Ganslandt, O.; Fahlbusch, R.; Nimsky, C. Application accuracy of automatic registration in frameless stereotaxy. Stereotact. Funct. Neurosurg. 2006, 84, 109–117.

- Nimsky, C.; Fujita, A.; Ganslandt, O.; von Keller, B.; Kohmura, E.; Fahlbusch, R. Frameless stereotactic surgery using intraoperative high-field magnetic resonance imaging. Neurol. Med. Chir. 2004, 44, 522–533; discussion 534.

- Pfisterer, W.K.; Papadopoulos, S.; Drumm, D.A.; Smith, K.; Preul, M.C. Fiducial versus nonfiducial neuronavigation registration assessment and considerations of accuracy. Neurosurgery 2008, 62, 201–207; discussion 207–208.

- Rohlfing, T.; Maurer, C.R., Jr.; Dean, D.; Maciunas, R.J. Effect of changing patient position from supine to prone on the accuracy of a Brown-Roberts-Wells stereotactic head frame system. Neurosurgery 2003, 52, 610–618; discussion 617–618.

- Roberts, D.R.; Zhu, X.; Tabesh, A.; Duffy, E.W.; Ramsey, D.A.; Brown, T.R. Structural Brain Changes following Long-Term 6 degrees Head-Down Tilt Bed Rest as an Analog for Spaceflight. AJNR Am. J. Neuroradiol. 2015, 36, 2048–2054.

- Ryan, M.J.; Erickson, R.K.; Levin, D.N.; Pelizzari, C.A.; Macdonald, R.L.; Dohrmann, G.J. Frameless stereotaxy with real-time tracking of patient head movement and retrospective patient-image registration. J. Neurosurg. 1996, 85, 287–292.

- Schnaudigel, S.; Preul, C.; Ugur, T.; Mentzel, H.J.; Witte, O.W.; Tittgemeyer, M.; Hagemann, G. Positional brain deformation visualized with magnetic resonance morphometry. Neurosurgery 2010, 66, 376–384; discussion 384.

- Monea, A.G.; Verpoest, I.; Vander Sloten, J.; Van der Perre, G.; Goffin, J.; Depreitere, B. Assessment of relative brain-skull motion in quasistatic circumstances by magnetic resonance imaging. J. Neurotrauma 2012, 29, 2305–2317.

- Ogiwara, T.; Goto, T.; Aoyama, T.; Nagm, A.; Yamamoto, Y.; Hongo, K. Bony surface registration of navigation system in the lateral or prone position: Technical note. Acta Neurochir. 2015, 157, 2017–2022.

- Watanabe, E.; Mayanagi, Y.; Kosugi, Y.; Manaka, S.; Takakura, K. Open surgery assisted by the neuronavigator, a stereotactic, articulated, sensitive arm. Neurosurgery 1991, 28, 792–799; discussion 799–800.

- Negwer, C.; Hiepe, P.; Meyer, B.; Krieg, S.M. Elastic Fusion Enables Fusion of Intraoperative Magnetic Resonance Imaging Data with Preoperative Neuronavigation Data. World Neurosurg. 2020, 142, e223–e228.

- Riva, M.; Hiepe, P.; Frommert, M.; Divenuto, I.; Gay, L.G.; Sciortino, T.; Nibali, M.C.; Rossi, M.; Pessina, F.; Bello, L. Intraoperative Computed Tomography and Finite Element Modelling for Multimodal Image Fusion in Brain Surgery. Oper. Neurosurg. 2020, 18, 531–541.

- Dixon, L.; Lim, A.; Grech-Sollars, M.; Nandi, D.; Camp, S. Intraoperative ultrasound in brain tumor surgery: A review and implementation guide. Neurosurg. Rev. 2022, 45, 2503–2515.

- Pino, M.A.; Imperato, A.; Musca, I.; Maugeri, R.; Giammalva, G.R.; Costantino, G.; Graziano, F.; Meli, F.; Francaviglia, N.; Iacopino, D.G.; et al. New Hope in Brain Glioma Surgery: The Role of Intraoperative Ultrasound. A Review. Brain Sci. 2018, 8, 202.

- Eljamel, M.S.; Mahboob, S.O. The effectiveness and cost-effectiveness of intraoperative imaging in high-grade glioma resection; a comparative review of intraoperative ALA, fluorescein, ultrasound and MRI. Photodiagnosis Photodyn. Ther. 2016, 16, 35–43.

- Prada, F.; Del Bene, M.; Mattei, L.; Lodigiani, L.; DeBeni, S.; Kolev, V.; Vetrano, I.; Solbiati, L.; Sakas, G.; DiMeco, F. Preoperative magnetic resonance and intraoperative ultrasound fusion imaging for real-time neuronavigation in brain tumor surgery. Ultraschall Med. 2015, 36, 174–186.

- Lunn, K.E.; Paulsen, K.D.; Roberts, D.W.; Kennedy, F.E.; Hartov, A.; West, J.D. Displacement estimation with co-registered ultrasound for image guided neurosurgery: A quantitative in vivo porcine study. IEEE Trans. Med. Imaging 2003, 22, 1358–1368.

- Coburger, J.; Konig, R.W.; Scheuerle, A.; Engelke, J.; Hlavac, M.; Thal, D.R.; Wirtz, C.R. Navigated high frequency ultrasound: Description of technique and clinical comparison with conventional intracranial ultrasound. World Neurosurg. 2014, 82, 366–375.

- Aleo, D.; Elshaer, Z.; Pfnur, A.; Schuler, P.J.; Fontanella, M.M.; Wirtz, C.R.; Pala, A.; Coburger, J. Evaluation of a Navigated 3D Ultrasound Integration for Brain Tumor Surgery: First Results of an Ongoing Prospective Study. Curr. Oncol. 2022, 29, 6594–6609.

- Shetty, P.; Yeole, U.; Singh, V.; Moiyadi, A. Navigated ultrasound-based image guidance during resection of gliomas: Practical utility in intraoperative decision-making and outcomes. Neurosurg. Focus 2021, 50, E14.

- Schneider, R.J.; Perrin, D.P.; Vasilyev, N.V.; Marx, G.R.; Del Nido, P.J.; Howe, R.D. Real-time image-based rigid registration of three-dimensional ultrasound. Med. Image Anal. 2012, 16, 402–414.

- Coupe, P.; Hellier, P.; Morandi, X.; Barillot, C. 3D Rigid Registration of Intraoperative Ultrasound and Preoperative MR Brain Images Based on Hyperechogenic Structures. Int. J. Biomed. Imaging 2012, 2012, 531319.

- Xiao, Y.; Rivaz, H.; Chabanas, M.; Fortin, M.; Machado, I.; Ou, Y.; Heinrich, M.P.; Schnabel, J.A.; Zhong, X.; Maier, A.; et al. Evaluation of MRI to Ultrasound Registration Methods for Brain Shift Correction: The CuRIOUS2018 Challenge. IEEE Trans. Med. Imaging 2020, 39, 777–786.

- Mazzucchi, E.; Hiepe, P.; Langhof, M.; La Rocca, G.; Pignotti, F.; Rinaldi, P.; Sabatino, G. Automatic rigid image Fusion of preoperative MR and intraoperative US acquired after craniotomy. Cancer Imaging 2023, 23, 37.

- Bastos, D.C.A.; Juvekar, P.; Tie, Y.; Jowkar, N.; Pieper, S.; Wells, W.M.; Bi, W.L.; Golby, A.; Frisken, S.; Kapur, T. Challenges and Opportunities of Intraoperative 3D Ultrasound With Neuronavigation in Relation to Intraoperative MRI. Front. Oncol. 2021, 11, 656519.

- Chapman, J.W.; Lam, D.; Cai, B.; Hugo, G.D. Robustness and reproducibility of an artificial intelligence-assisted online segmentation and adaptive planning process for online adaptive radiation therapy. J. Appl. Clin. Med. Phys. 2022, 23, e13702.

- Sherer, M.V.; Lin, D.; Elguindi, S.; Duke, S.; Tan, L.T.; Cacicedo, J.; Dahele, M.; Gillespie, E.F. Metrics to evaluate the performance of auto-segmentation for radiation treatment planning: A critical review. Radiother. Oncol. 2021, 160, 185–191.

- Kozak, J.; Nesper, M.; Fischer, M.; Lutze, T.; Goggelmann, A.; Hassfeld, S.; Wetter, T. Semiautomated registration using new markers for assessing the accuracy of a navigation system. Comput. Aided Surg. 2002, 7, 11–24.

- Farnia, P.; Ahmadian, A.; Shabanian, T.; Serej, N.D.; Alirezaie, J. A hybrid method for non-rigid registration of intra-operative ultrasound images with pre-operative MR images. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2014, 2014, 5562–5565.

- Farnia, P.; Ahmadian, A.; Shabanian, T.; Serej, N.D.; Alirezaie, J. Brain-shift compensation by non-rigid registration of intra-operative ultrasound images with preoperative MR images based on residual complexity. Int. J. Comput. Assist. Radiol. Surg. 2015, 10, 555–562.

- Machado, I.; Toews, M.; Luo, J.; Unadkat, P.; Essayed, W.; George, E.; Teodoro, P.; Carvalho, H.; Martins, J.; Golland, P.; et al. Non-rigid registration of 3D ultrasound for neurosurgery using automatic feature detection and matching. Int. J. Comput. Assist. Radiol. Surg. 2018, 13, 1525–1538.

- D’Agostino, E.; Maes, F.; Vandermeulen, D.; Suetens, P. A viscous fluid model for multimodal non-rigid image registration using mutual information. Med. Image Anal. 2003, 7, 565–575.

- Ferrant, M.; Nabavi, A.; Macq, B.; Jolesz, F.A.; Kikinis, R.; Warfield, S.K. Registration of 3-D intraoperative MR images of the brain using a finite-element biomechanical model. IEEE Trans. Med. Imaging 2001, 20, 1384–1397.

- Yeole, U.; Singh, V.; Mishra, A.; Shaikh, S.; Shetty, P.; Moiyadi, A. Navigated intraoperative ultrasonography for brain tumors: A pictorial essay on the technique, its utility, and its benefits in neuro-oncology. Ultrasonography 2020, 39, 394–406.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).