Temporal Machine Learning Analysis of Prior Mammograms for Breast Cancer Risk Prediction


Simple Summary

Abstract

1. Introduction

2. Materials and Methods

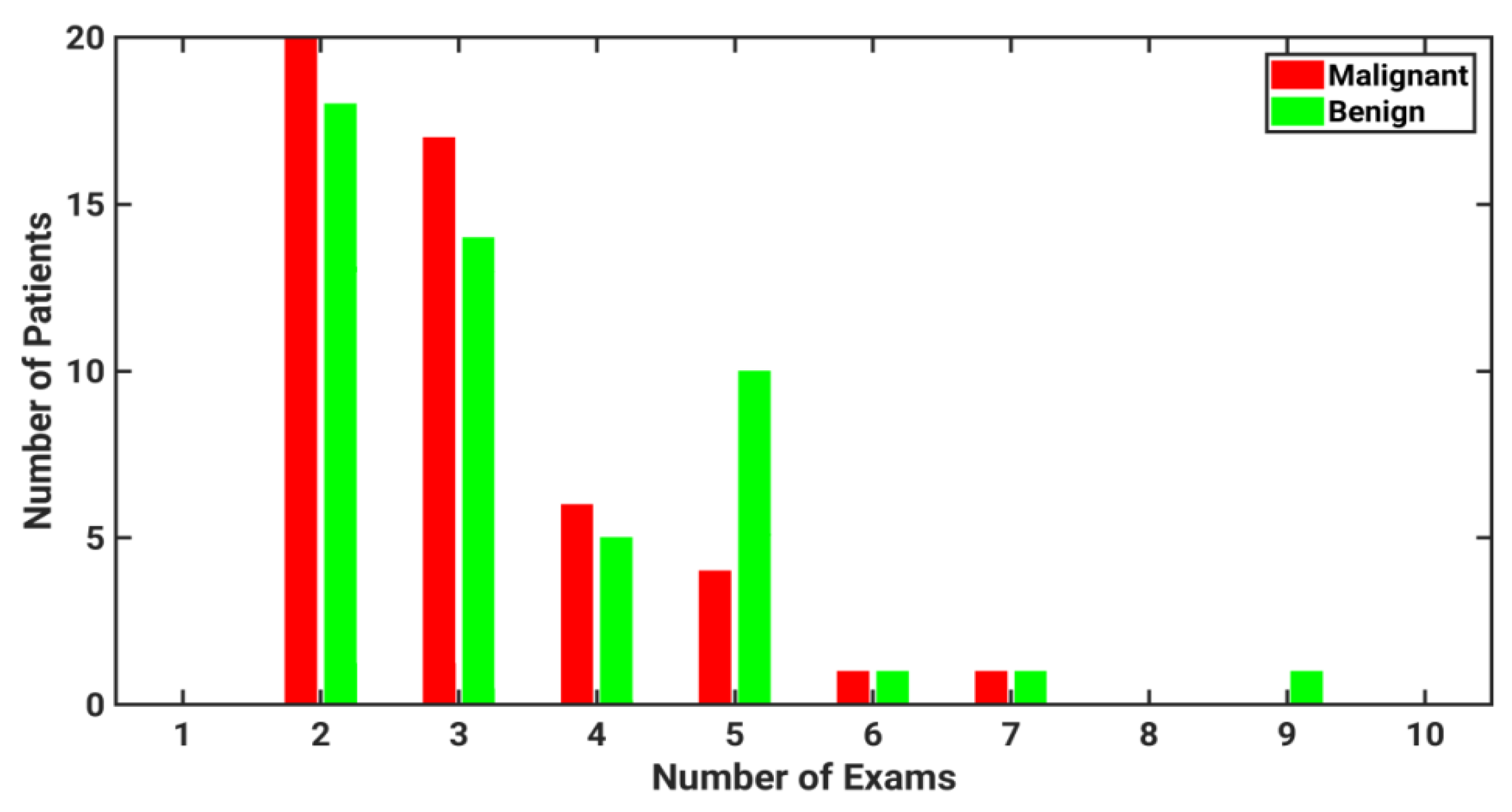

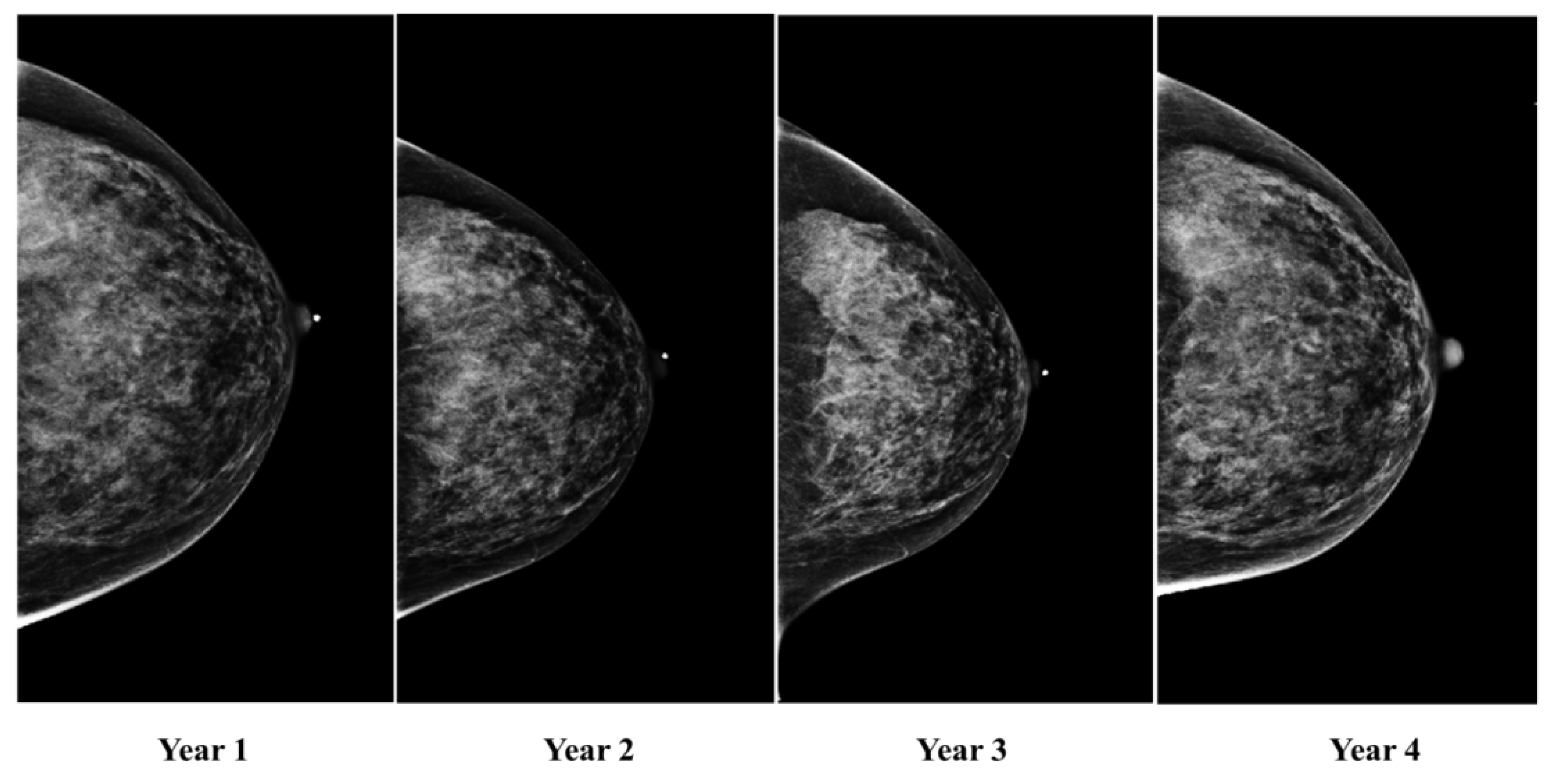

2.1. Image Acquisition and Database Description

2.2. Radiomic Feature Extraction

2.3. Deep Feature Extraction

2.4. Long Short-Term Memory Network

2.5. Classification and Evaluation

2.6. Temporal Sequence Classification with LSTM Network

2.7. Single Time-Point Classification with Support Vector Machine

2.8. Statistical Evaluation

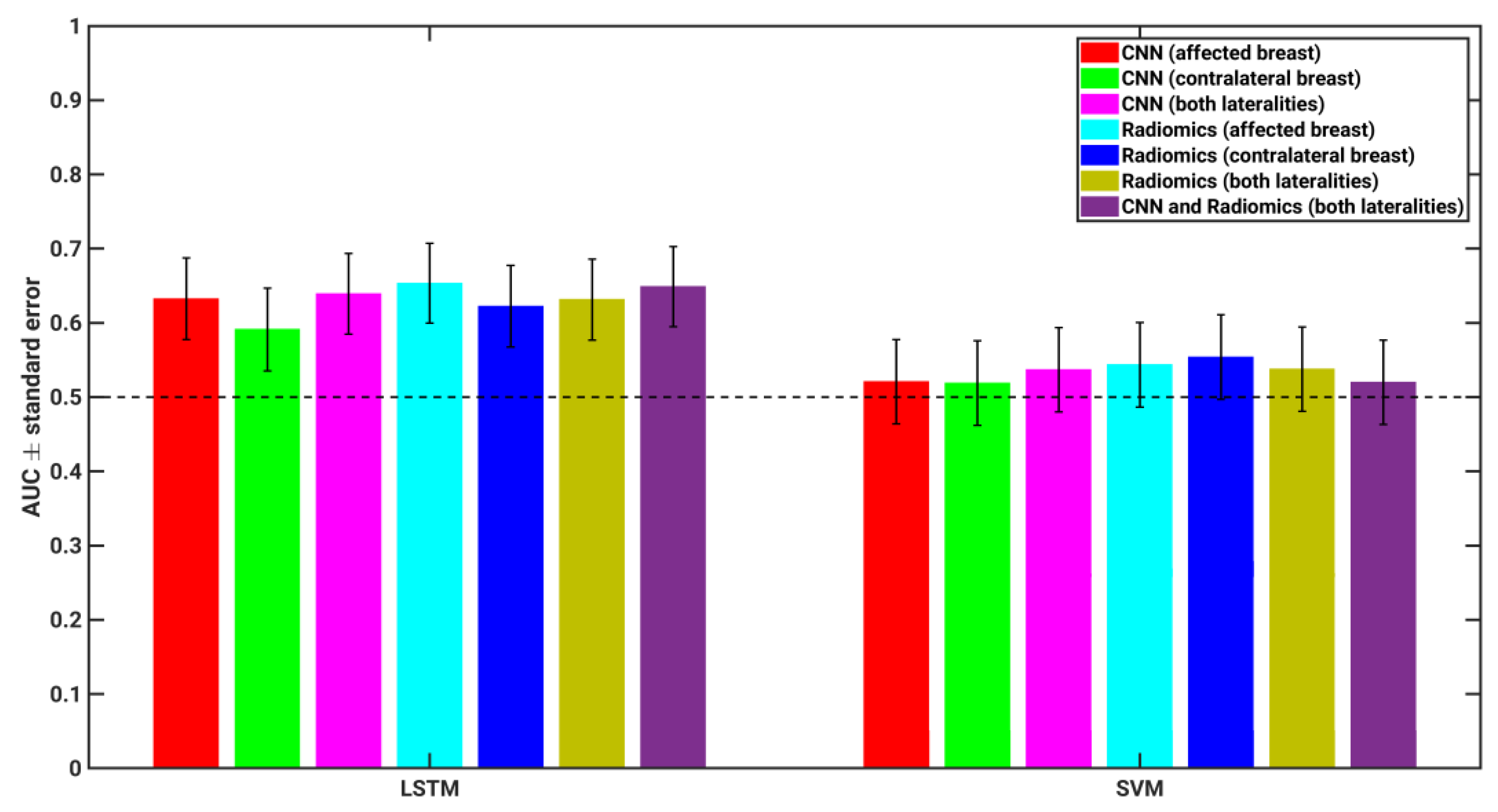

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Feature Category | Number of Features |
|---|---|
| Box-counting fractal dimension | 6 |
| Edge gradient | 4 |
| Histogram | 10 |
| Fourier | 2 |
| Neighborhood Gray-Tone Difference Matrix (NGTDM) | 5 |
| Minkowski fractal dimension | 1 |
| Power-law beta | 8 |
| Gray-Level Co-occurrence Matrix (GLCM) | 14 |
| Total | 50 |
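The 14 GLCM features match the number of texture measures that Haralick et al. (1973) defined from a gray-level co-occurrence matrix. As a minimal sketch, assuming NumPy, an image already cropped to the region of interest, a single horizontal pixel offset, and symmetric pair counting, the matrix and three of those measures (angular second moment, contrast, and inverse difference moment) can be computed as follows. The helper names `quantize`, `glcm`, and `haralick_subset`, the offset, and the number of gray levels are hypothetical choices for illustration, not the study's settings.

```python
import numpy as np


def quantize(image: np.ndarray, levels: int) -> np.ndarray:
    """Linearly map intensities onto integer gray levels 0..levels-1 (hypothetical helper)."""
    img = np.asarray(image, dtype=np.float64)
    lo, hi = img.min(), img.max()
    if hi == lo:  # flat region: every pixel falls in level 0
        return np.zeros(img.shape, dtype=np.intp)
    q = np.floor((img - lo) / (hi - lo) * levels).astype(np.intp)
    return np.clip(q, 0, levels - 1)  # the maximum value would otherwise map to `levels`


def glcm(q: np.ndarray, levels: int, dx: int = 1, dy: int = 0) -> np.ndarray:
    """Symmetric, normalized co-occurrence matrix for one non-negative offset (dy, dx)."""
    q = np.asarray(q, dtype=np.intp)
    rows, cols = q.shape
    ref = q[: rows - dy, : cols - dx]  # reference pixels
    nbr = q[dy:, dx:]                  # their neighbours at the chosen offset
    counts = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1.0)  # accumulate repeated index pairs
    counts = counts + counts.T  # count each pair in both directions (symmetric GLCM)
    return counts / counts.sum()


def haralick_subset(p: np.ndarray) -> dict:
    """Three of Haralick's texture measures computed from a normalized GLCM `p`."""
    i, j = np.indices(p.shape)
    return {
        "angular_second_moment": float(np.sum(p ** 2)),
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "inverse_difference_moment": float(np.sum(p / (1.0 + (i - j) ** 2))),
    }


if __name__ == "__main__":
    # Small already-quantized test image with 4 gray levels.
    example = np.array([[0, 0, 1, 1],
                        [0, 0, 1, 1],
                        [0, 2, 2, 2],
                        [2, 2, 3, 3]])
    print(haralick_subset(glcm(example, levels=4)))
```

For the 4 × 4 example image, the horizontal-offset matrix gives a contrast of 14/24 ≈ 0.583. Averaging each measure over several offsets and directions is a common variation; the sketch keeps a single offset for brevity.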

| Feature Type | LSTM Classifier AUC (p-Value) * [95% CI of AUC] | SVM Classifier AUC (p-Value) * [95% CI of AUC] |
|---|---|---|
| CNN (affected breast) | AUC = 0.63 (p = 0.0231) [0.5010, 0.7175] | AUC = 0.52 (p = 0.7103) [0.3962, 0.6193] |
| CNN (contralateral breast) | AUC = 0.59 (p = 0.1024) [0.4791, 0.6982] | AUC = 0.52 (p = 0.7389) [0.4083, 0.6320] |
| CNN (both lateralities) | AUC = 0.64 (p = 0.0104) [0.5184, 0.7336] | AUC = 0.54 (p = 0.5140) [0.4138, 0.6372] |
| Radiomics (affected breast) | AUC = 0.65 (p = 0.0042) [0.5346, 0.7456] | AUC = 0.54 (p = 0.4425) [0.4510, 0.6723] |
| Radiomics (contralateral breast) | AUC = 0.62 (p = 0.0259) [0.4998, 0.7161] | AUC = 0.55 (p = 0.3434) [0.4439, 0.6672] |
| Radiomics (both lateralities) | AUC = 0.63 (p = 0.0159) [0.5122, 0.7263] | AUC = 0.54 (p = 0.5035) [0.4216, 0.6454] |
| CNN + Radiomics (both lateralities) | AUC = 0.65 (p = 0.0059) [0.5109, 0.7282] | AUC = 0.52 (p = 0.7226) [0.4190, 0.6422] |
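Each AUC in the table summarizes how well a classifier's output separates the two groups of cases, with 0.5 corresponding to chance-level separation. A minimal nonparametric sketch of an empirical AUC (the Mann-Whitney form) with a percentile-bootstrap 95% confidence interval is shown below, assuming NumPy and a 0/1 label array in which 1 marks the positive class. The function names, the stratified bootstrap, and its settings are hypothetical choices for illustration; the intervals and p-values in the table may have been computed with a different method, such as parametric ROC curve fitting.

```python
import numpy as np


def empirical_auc(scores, labels) -> float:
    """Nonparametric AUC: P(positive score > negative score), with ties counted as 1/2."""
    s = np.asarray(scores, dtype=np.float64)
    y = np.asarray(labels).astype(bool)
    pos, neg = s[y], s[~y]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("need at least one positive and one negative case")
    diff = pos[:, None] - neg[None, :]  # compare every positive-negative pair
    return float(np.mean(diff > 0) + 0.5 * np.mean(diff == 0))


def bootstrap_auc_ci(scores, labels, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the AUC, resampling positives and negatives separately."""
    s = np.asarray(scores, dtype=np.float64)
    y = np.asarray(labels).astype(bool)
    pos_idx, neg_idx = np.flatnonzero(y), np.flatnonzero(~y)
    rng = np.random.default_rng(seed)
    aucs = np.empty(n_boot)
    for b in range(n_boot):
        # Stratified resampling: each replicate keeps the original class sizes.
        idx = np.concatenate([
            rng.choice(pos_idx, size=pos_idx.size, replace=True),
            rng.choice(neg_idx, size=neg_idx.size, replace=True),
        ])
        aucs[b] = empirical_auc(s[idx], y[idx])
    lo, hi = np.quantile(aucs, [alpha / 2, 1 - alpha / 2])
    return float(lo), float(hi)


if __name__ == "__main__":
    scores = [0.1, 0.4, 0.35, 0.8, 0.7, 0.2]  # illustrative classifier outputs
    labels = [0, 0, 1, 1, 1, 0]
    print(empirical_auc(scores, labels), bootstrap_auc_ci(scores, labels))
```

Resampling the two classes separately keeps the class ratio fixed in every replicate, so no replicate is left without positive or negative cases.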

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, H.; Robinson, K.; Lan, L.; Baughan, N.; Chan, C.-W.; Embury, M.; Whitman, G.J.; El-Zein, R.; Bedrosian, I.; Giger, M.L. Temporal Machine Learning Analysis of Prior Mammograms for Breast Cancer Risk Prediction. Cancers 2023, 15, 2141. https://doi.org/10.3390/cancers15072141
