Inter- and Intra-Observer Agreement of PD-L1 SP142 Scoring in Breast Carcinoma—A Large Multi-Institutional International Study

Abstract

Simple Summary

1. Introduction

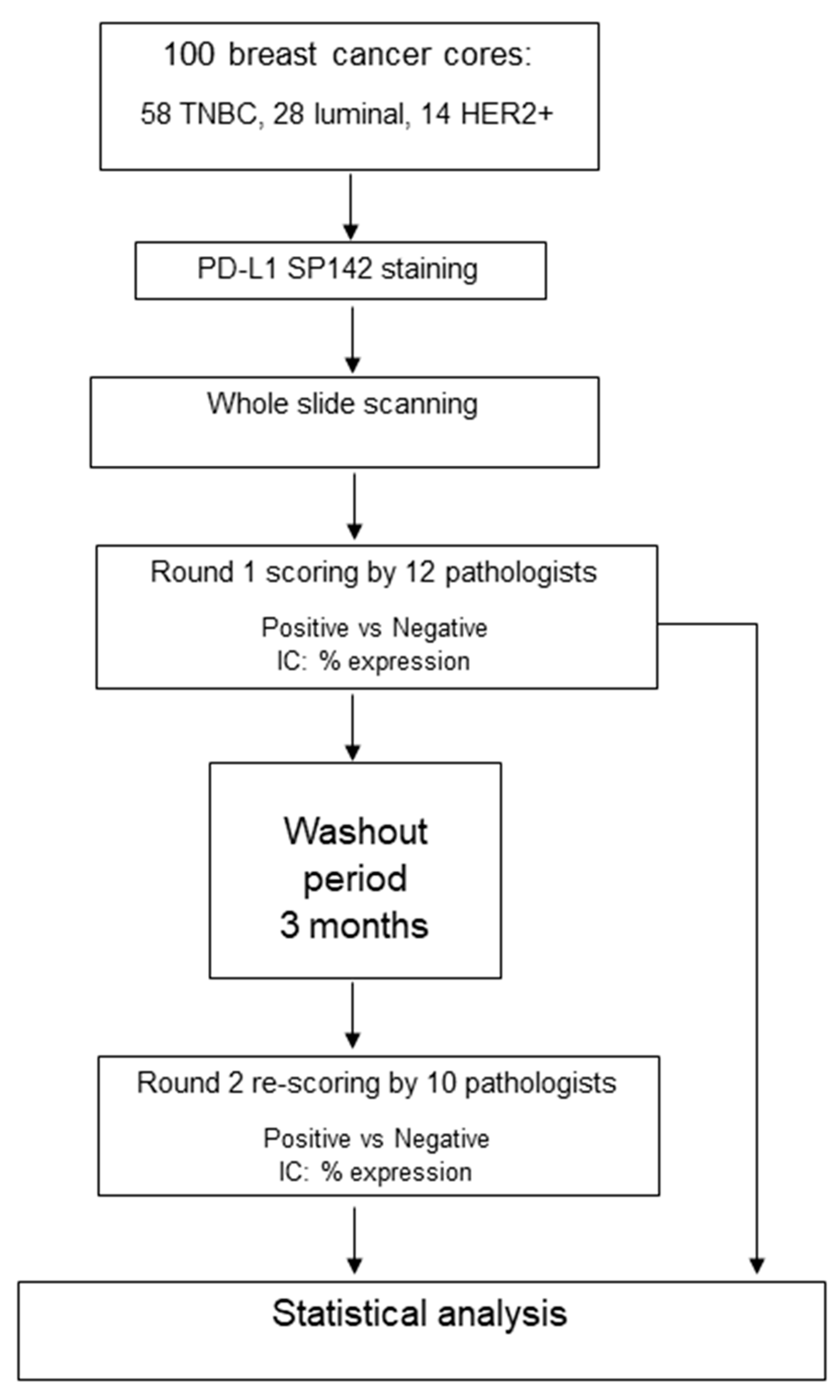

2. Materials and Methods

Statistical Analysis

3. Results

3.1. Cohort Characteristics

3.2. Inter-Observer Agreement and Pathologist Experience

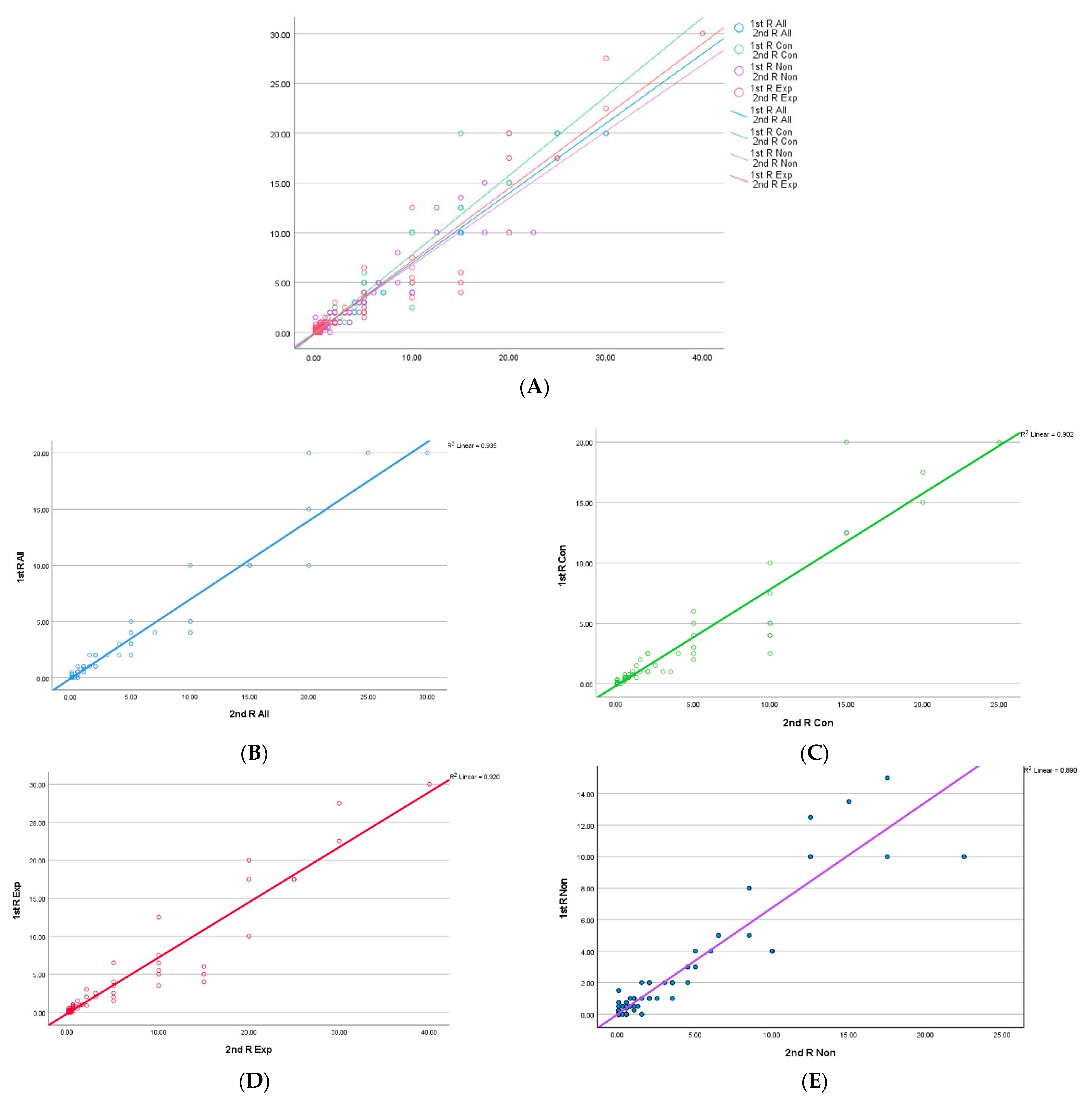

3.3. Concordance of PD-L1 Percentage Expression

3.4. Reasons for Discordance

3.5. Inter- and Intra-Observer Agreement

3.6. Intraclass Correlation Coefficient (ICC)

3.7. Intra-Observer Agreement and Scoring Reliability in Relation to Pathologists’ Experience

4. Discussion

| Reference | Number of Cases (Type) | Clone(s) | SP142 Scoring Method | Scorers | Inter-Observer Agreement | Intra-Observer Agreement |
|---|---|---|---|---|---|---|
| Downes et al. 2020 [19] | 30 surgical excisions TMAs | 22C3, SP142, E1L3N | IC ≥ 1% | 3 pathologists | Kappa for IC ≥ 1%: 0.668 | Tested after a 1-month washout period. Kappa = 0.798 |
| Noske et al. [13] | 30 (resections) | SP263, SP142, 22C3, 28–8 | IC ≥ 1% | 7 trained + one Ventana SP142 expert for SP142 only | ICC for SP142: 0.805 (0.710–0.887) | Not tested |
| Dennis et al. (abstract) [14] | 28 test sets through the Roche International Training Programme | SP142 | IC ≥ 1% | 432 trained pathologists from multiple institutions in several countries | OPA was 98.2%, with PPA of 99.4% and NPA of 96.6% | Not tested |
| Hoda et al. [23] | 75 (cores and excision), primary and metastases | SP142 | IC ≥ 1% | 8 experienced (single institution) | Kappa 0.727 | Not tested |
| Reisenbichler et al. 2021 [21] | 68 cases for SP142 and 67 cases for SP263 | SP142, SP263 | IC ≥ 1% & % expression for cases scored as positive only | 19 randomly selected pathologists from 14 US institutions; breast pathologists, with few non-breast pathologists. Experience in reporting PD-L1 not stated | Complete agreement for SP142 categorisation into positive vs. negative in 38%. Agreement decreased with the increasing number of scorers, reaching a low plateau of 0.41 at eight scorers or more | Not tested |
| Pang et al. [26] | 60 TNBC TMAs | VENTANA SP142, DAKO 22C3 | IC ≥ 1% | 10 pathologists, including 5 who were PD-L1-naïve and 5 who had passed a proficiency test | 93.3% for experts; 81.5% for non-experts | Tested after a 1 h training video and an overnight washout period. OPA increased from 81.5% to 85.7% for non-experts after video training. OPA was 96.3% for experts |
| Van Bockstal et al. 2021 [15] | 49 metastatic TNBC (biopsies and resections) | VENTANA SP142 | IC ≥ 1% | 10 pathologists; all passed a proficiency test | Substantial variability at the individual patient level. In 20% of cases, chance of allocation to treatment was random, with a 50–50 split among pathologists in designating as PD-L1-positive or -negative | Not tested |
| Ahn et al. 2021 [27] | 30 surgical excisions | SP142, SP263, 22C3 and E1L3N | ICs and TCs were scored both as continuous scores (0–100%) and in five categories (<1%, 1–4%, 5–9%, 10–49% and ≥50%) | 10 pathologists with no special training, of whom 6 underwent Ventana Roche training | 80.7% inter-observer agreement at a 1% cut-off value | Proportion of cases with identical scoring at a 1% IC cut-off value increased from 40% to 70.0% after training |
| Abreu et al. 2022 (Conference abstract) [28] | 168 in tissue microarrays | 22C3 and SP142 | Not stated | 4 pathologists, including 2 breast pathologists and 2 surgical pathologists with no specific PD-L1 training | Overall concordance for SP142 was 64.8%; overall κ = 0.331, with κ = 0.420 for breast pathologists and κ = 0.285 for general pathologists | Not tested |
| Chen et al. 2022 [22] | 426 primary and metastatic surgical excisions | SP142 | IC ≥ 1% | Two experienced pathologists | 78.2% concordance; κ = 0.567 | Not tested |
| Current study | 100 (cores), primary breast cancer | SP142 | IC ≥ 1% & % expression for all cases; two rounds of scoring separated by a 3-month washout period | 12 experienced breast pathologists from 8 institutions in the UK, Ireland and Belgium. All passed a proficiency test | Absolute agreement was reached in 52% and 60% of cases in the first and second rounds, respectively. Overall agreement was substantial, with Kappa values of 0.654 and 0.655 for the first and second rounds, respectively. Higher concordance among experts, particularly in TNBC and challenging cases | Tested after a 3-month washout period. Almost perfect agreement regardless of pathologists’ PD-L1 experience |

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Shaaban, A.M.; Shaw, E.C. Bench to bedside: Research influencing clinical practice in breast cancer. Diagn. Histopathol. 2022, 28, 473–479.

- Vennapusa, B.; Baker, B.; Kowanetz, M.; Boone, J.; Menzl, I.; Bruey, J.-M.; Fine, G.; Mariathasan, S.; McCaffery, I.; Mocci, S.; et al. Development of a PD-L1 Complementary Diagnostic Immunohistochemistry Assay (SP142) for Atezolizumab. Appl. Immunohistochem. Mol. Morphol. 2019, 27, 92–100.

- Melaiu, O.; Lucarini, V.; Cifaldi, L.; Fruci, D. Influence of the Tumor Microenvironment on NK Cell Function in Solid Tumors. Front. Immunol. 2020, 10, 3038.

- Simiczyjew, A.; Dratkiewicz, E.; Mazurkiewicz, J.; Ziętek, M.; Matkowski, R.; Nowak, D. The Influence of Tumor Microenvironment on Immune Escape of Melanoma. Int. J. Mol. Sci. 2020, 21, 8359.

- Badr, N.M.; McMurray, J.L.; Danial, I.; Hayward, S.; Asaad, N.Y.; El-Wahed, M.M.A.; Abdou, A.G.; El-Dien, M.M.S.; Sharma, N.; Horimoto, Y.; et al. Characterization of the Immune Microenvironment in Inflammatory Breast Cancer Using Multiplex Immunofluorescence. Pathobiology 2022, 90, 31–43.

- Conte, P.F.; Dieci, M.V.; Bisagni, G.; De Laurentiis, M.; Tondini, C.A.; Schmid, P.; De Salvo, G.L.; Moratello, G.; Guarneri, V. Phase III randomized study of adjuvant treatment with the ANTI-PD-L1 antibody avelumab for high-risk triple negative breast cancer patients: The A-BRAVE trial. Am. Soc. Clin. Oncol. 2020, 38, TPS598.

- Schmid, P.; Cortes, J.; Dent, R. Pembrolizumab in Early Triple-Negative Breast Cancer. Reply. N. Engl. J. Med. 2022, 386, 1771–1772.

- Emens, L.A.; Adams, S.; Barrios, C.H.; Diéras, V.; Iwata, H.; Loi, S.; Rugo, H.S.; Schneeweiss, A.; Winer, E.P.; Patel, S.; et al. First-line atezolizumab plus nab-paclitaxel for unresectable, locally advanced, or metastatic triple-negative breast cancer: IMpassion130 final overall survival analysis. Ann. Oncol. 2021, 32, 983–993.

- Bartsch, R. ESMO 2020: Highlights in breast cancer. Memo-Mag. Eur. Med. Oncol. 2021, 14, 184–187.

- Büttner, R.; Gosney, J.R.; Skov, B.G.; Adam, J.; Motoi, N.; Bloom, K.J.; Dietel, M.; Longshore, J.W.; López-Ríos, F.; Penault-Llorca, F.; et al. Programmed Death-Ligand 1 Immunohistochemistry Testing: A Review of Analytical Assays and Clinical Implementation in Non–Small-Cell Lung Cancer. J. Clin. Oncol. 2017, 35, 3867–3876.

- Guo, H.; Ding, Q.; Gong, Y.; Gilcrease, M.Z.; Zhao, M.; Zhao, J.; Sui, D.; Wu, Y.; Chen, H.; Liu, H.; et al. Comparison of three scoring methods using the FDA-approved 22C3 immunohistochemistry assay to evaluate PD-L1 expression in breast cancer and their association with clinicopathologic factors. Breast Cancer Res. 2020, 22, 69.

- Huang, X.; Ding, Q.; Guo, H.; Gong, Y.; Zhao, J.; Zhao, M.; Sui, D.; Wu, Y.; Chen, H.; Liu, H.; et al. Comparison of three FDA-approved diagnostic immunohistochemistry assays of PD-L1 in triple-negative breast carcinoma. Hum. Pathol. 2021, 108, 42–50.

- Noske, A.; Wagner, D.-C.; Schwamborn, K.; Foersch, S.; Steiger, K.; Kiechle, M.; Oettler, D.; Karapetyan, S.; Hapfelmeier, A.; Roth, W.; et al. Interassay and interobserver comparability study of four programmed death-ligand 1 (PD-L1) immunohistochemistry assays in triple-negative breast cancer. Breast 2021, 60, 238–244.

- Dennis, E.; Kockx, M.; Harlow, G.; Cai, Z.; Bloom, K.; ElGabry, E. Abstract PD5-02: Effective and globally reproducible digital pathologist training program on PD-L1 immunohistochemistry scoring on immune cells as a predictive biomarker for cancer immunotherapy in triple negative breast cancer. Cancer Res. 2020, 80 (Suppl. 4), PD5-02.

- Van Bockstal, M.R.; Cooks, M.; Nederlof, I.; Brinkhuis, M.; Dutman, A.; Koopmans, M.; Kooreman, L.; van der Vegt, B.; Verhoog, L.; Vreuls, C.; et al. Interobserver Agreement of PD-L1/SP142 Immunohistochemistry and Tumor-Infiltrating Lymphocytes (TILs) in Distant Metastases of Triple-Negative Breast Cancer: A Proof-of-Concept Study. A Report on Behalf of the International Immuno-Oncology Biomarker Working Group. Cancers 2021, 13, 4910.

- Padmanabhan, R.; Kheraldine, H.S.; Meskin, N.; Vranic, S.; Al Moustafa, A.-E. Crosstalk between HER2 and PD-1/PD-L1 in breast cancer: From clinical applications to mathematical models. Cancers 2020, 12, 636.

- Kurozumi, S.; Inoue, K.; Matsumoto, H.; Fujii, T.; Horiguchi, J.; Oyama, T.; Kurosumi, M.; Shirabe, K. Clinicopathological values of PD-L1 expression in HER2-positive breast cancer. Sci. Rep. 2019, 9, 16662.

- Marletta, S.; Fusco, N.; Munari, E.; Luchini, C.; Cimadamore, A.; Brunelli, M.; Querzoli, G.; Martini, M.; Vigliar, E.; Colombari, R.; et al. Atlas of PD-L1 for Pathologists: Indications, Scores, Diagnostic Platforms and Reporting Systems. J. Pers. Med. 2022, 12, 1073.

- Downes, M.R.; Slodkowska, E.; Katabi, N.; Jungbluth, A.; Xu, B. Inter- and intraobserver agreement of programmed death ligand 1 scoring in head and neck squamous cell carcinoma, urothelial carcinoma and breast carcinoma. Histopathology 2020, 76, 191–200.

- Koo, T.K.; Li, M.Y. A Guideline of Selecting and Reporting Intraclass Correlation Coefficients for Reliability Research. J. Chiropr. Med. 2016, 15, 155–163.

- Reisenbichler, E.S.; Han, G.; Bellizzi, A.; Bossuyt, V.; Brock, J.; Cole, K.; Fadare, O.; Hameed, O.; Hanley, K.; Harrison, B.T.; et al. Prospective multi-institutional evaluation of pathologist assessment of PD-L1 assays for patient selection in triple negative breast cancer. Mod. Pathol. 2020, 33, 1746–1752.

- Chen, C.; Ma, X.; Li, Y.; Ma, J.; Yang, W.; Shui, R. Concordance of PD-L1 expression in triple-negative breast cancers in Chinese patients: A retrospective and pathologist-based study. Pathol. Res. Pract. 2022, 238, 154137.

- Hoda, R.S.; Brogi, E.; D’Alfonso, T.M.; Grabenstetter, A.; Giri, D.; Hanna, M.G.; Kuba, M.G.; Murray, D.M.P.; Vallejo, C.E.; Zhang, H.; et al. Interobserver Variation of PD-L1 SP142 Immunohistochemistry Interpretation in Breast Carcinoma: A Study of 79 Cases Using Whole Slide Imaging. Arch. Pathol. Lab. Med. 2021, 145, 1132–1137.

- Rakha, E.A.; Pinder, S.E.; Bartlett, J.M.S.; Ibrahim, M.; Starczynski, J.; Carder, P.J.; Provenzano, E.; Hanby, A.; Hales, S.; Lee, A.H.S.; et al. Updated UK Recommendations for HER2 assessment in breast cancer. J. Clin. Pathol. 2014, 68, 93–99.

- Peg, V.; López-García, M.; Comerma, L.; Peiró, G.; García-Caballero, T.; López, Á.C.; Suárez-Gauthier, A.; Ruiz, I.; Rojo, F. PD-L1 testing based on the SP142 antibody in metastatic triple-negative breast cancer: Summary of an expert round-table discussion. Future Oncol. 2021, 17, 1209–1218.

- Pang, J.-M.B.; Castles, B.; Byrne, D.J.; Button, P.; Hendry, S.; Lakhani, S.R.; Sivasubramaniam, V.; Cooper, W.A.; Armes, J.; Millar, E.K.; et al. SP142 PD-L1 Scoring Shows High Interobserver and Intraobserver Agreement in Triple-negative Breast Carcinoma But Overall Low Percentage Agreement With Other PD-L1 Clones SP263 and 22C3. Am. J. Surg. Pathol. 2021, 45, 1108–1117.

- Ahn, S.; Woo, J.W.; Kim, H.; Cho, E.Y.; Kim, A.; Kim, J.Y.; Kim, C.; Lee, H.J.; Lee, J.S.; Bae, Y.K.; et al. Programmed Death Ligand 1 Immunohistochemistry in Triple-Negative Breast Cancer: Evaluation of Inter-Pathologist Concordance and Inter-Assay Variability. J. Breast Cancer 2021, 24, 266–279.

- Abreu, R.; Peixoto; Corassa, M.; Nunes, W.; Neotti, T.; Rodrigues, T.; Toledo, C.; Domingos, T.; Carraro, D.; Gobbi, H.; et al. Determination of Inter-Observer Agreement in the Immunohistochemical Interpretation of PD-L1 Clones 22C3 and SP142 in Triple-Negative Breast Cancer (TNBC). In Virchows Archiv; Springer: New York, NY, USA, 2022.

- Girolami, I.; Pantanowitz, L.; Barberis, M.; Paolino, G.; Brunelli, M.; Vigliar, E.; Munari, E.; Satturwar, S.; Troncone, G.; Eccher, A. Challenges facing pathologists evaluating PD-L1 in head & neck squamous cell carcinoma. J. Oral Pathol. Med. 2021, 50, 864–873.

- de Ruiter, E.J.; Mulder, F.; Koomen, B.; Speel, E.-J.; van den Hout, M.; de Roest, R.; Bloemena, E.; Devriese, L.; Willems, S. Comparison of three PD-L1 immunohistochemical assays in head and neck squamous cell carcinoma (HNSCC). Mod. Pathol. 2021, 34, 1125–1132.

- Cerbelli, B.; Girolami, I.; Eccher, A.; Costarelli, L.; Taccogna, S.; Scialpi, R.; Benevolo, M.; Lucante, T.; Alò, P.L.; Stella, F.; et al. Evaluating programmed death-ligand 1 (PD-L1) in head and neck squamous cell carcinoma: Concordance between the 22C3 PharmDx assay and the SP263 assay on whole sections from a multicentre study. Histopathology 2021, 80, 397–406.

- Vidula, N.; Yau, C.; Rugo, H.S. Programmed cell death 1 (PD-1) receptor and programmed death ligand 1 (PD-L1) gene expression in primary breast cancer. Breast Cancer Res. Treat. 2021, 187, 387–395.

- Badve, S.S.; Penault-Llorca, F.; Reis-Filho, J.S.; Deurloo, R.; Siziopikou, K.P.; D’Arrigo, C.; Viale, G. Determining PD-L1 Status in Patients With Triple-Negative Breast Cancer: Lessons Learned From IMpassion130. Gynecol. Oncol. 2021, 114, 664–675.

- Inge, L.; Dennis, E. Development and applications of computer image analysis algorithms for scoring of PD-L1 immunohistochemistry. Immunooncol. Technol. 2020, 6, 2–8.

| Subtype | No. | First Round: Positive (%) | First Round: Negative (%) | Second Round: Positive (%) | Second Round: Negative (%) |
|---|---|---|---|---|---|
| TNBC | 58 | 32 (55%) | 26 (45%) | 32 (55%) | 26 (45%) |
| Median (range) | | 4 (0.75–30) | 0 (0–1) | 5 (0.5–30) | 0 (0–1) |
| Luminal | 28 | 4 (14%) | 24 (86%) | 4 (14%) | 24 (86%) |
| Median (range) | | 2 (1–4) | 0 (0–0.75) | 3.5 (1.5–5) | 0 (0–0.5) |
| HER2-positive | 14 | 2 (14%) | 12 (86%) | 1 (7%) | 13 (93%) |
| Median (range) | | 5.5 (1–10) | 0 (0–0.5) | 10 | 0 (0–0.5) |
| Total | 100 | 38 (38%) | 62 (62%) | 36 (36%) | 64 (64%) |
| Median (range) | | 2 (0.75–30) | 0 (0–1) | 5 (0.5–30) | 0 (0–1) |

| Round | Score | P1 | P2 | P3 | P4 c | P5 c | P6 c | P7 e | P8 e | P9 e | P10 e | P11 e | P12 e |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| First Round | Neg | 62 | 64 | 61 | 58 | 63 | 68 | 75 | 51 | 64 | 67 | 63 | 63 |
| | Pos | 38 | 35 | 31 | 41 | 37 | 32 | 25 | 49 | 36 | 33 | 37 | 34 |
| | Total | 100 | 99 | 92 | 99 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 97 |
| | Kappa | 0.654 | | | | | | | | | | | |
| | AA | 52/100 cases; 36 scored negative and 16 scored positive | | | | | | | | | | | |
| Second Round | Neg | 60 | 64 | | 64 | 46 | 62 | | 52 | 72 | 69 | 69 | 66 |
| | Pos | 40 | 35 | | 35 | 54 | 37 | | 48 | 28 | 31 | 31 | 30 |
| | Total | 100 | 99 | | 99 | 100 | 100 | | 100 | 100 | 100 | 100 | 97 |
| | Kappa | 0.655 | | | | | | | | | | | |
| | AA | 60/100 cases; 40 scored negative and 20 scored positive | | | | | | | | | | | |

| Round | PD-L1 Status | Consensus, Majority: 100% (AA) | Consensus, Majority: 67–99% | Consensus, Challenging/Low Agreement: <67% to >50% | No Agreement: ≤50% |
|---|---|---|---|---|---|
| First | Negative | 36 | 24 | 2 | 0 |
| | Positive | 16 | 18 | 4 | 0 |
| | Total | 52 | 42 | 6 | 0 |
| | Subtotal (majority / low / none) | 94 | | 6 | 0 |
| | Subtotal (consensus / none) | 100 | | | 0 |
| Second | Negative | 40 | 20 | 2 | 2 |
| | Positive | 20 | 12 | 4 | 0 |
| | Total | 60 | 32 | 6 | 2 |
| | Subtotal (majority / low / none) | 92 | | 6 | 2 |
| | Subtotal (consensus / none) | 98 | | | 2 |
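The consensus bands used in the table above can be written as a small classification rule. The following is a minimal sketch rather than the authors' procedure: the function name `agreement_level` is hypothetical, and it assumes that the 67% boundary stands for two-thirds of raters, so that splits such as 8/12 or 4/6 count as majority agreement.

```python
from fractions import Fraction


def agreement_level(n_positive: int, n_negative: int) -> str:
    """Classify one case by the share of raters backing the more common call.

    Assumption: the 67% cut-off is treated as exactly two-thirds.
    """
    total = n_positive + n_negative
    if total == 0:
        raise ValueError("at least one score is required")
    # Exact fractions avoid rounding problems at the 2/3 and 1/2 boundaries.
    share = Fraction(max(n_positive, n_negative), total)
    if share == 1:
        return "absolute agreement"         # 100% (AA)
    if share >= Fraction(2, 3):
        return "majority"                   # 67-99%
    if share > Fraction(1, 2):
        return "challenging/low agreement"  # >50% to <67%
    return "no agreement"                   # <=50%, i.e. an even split


if __name__ == "__main__":
    for pos, neg in [(0, 12), (8, 4), (7, 5), (6, 6)]:
        print(f"{pos} positive / {neg} negative -> {agreement_level(pos, neg)}")
```

Using exact fractions keeps a 6/6 split in the "no agreement" band and an 8/12 split in the majority band without depending on floating-point rounding.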

| Raters | Fleiss Kappa First Round: Overall (TNBC) | Fleiss Kappa First Round: NEG | Fleiss Kappa First Round: POS | Fleiss Kappa Second Round: Overall (TNBC) | Fleiss Kappa Second Round: NEG | Fleiss Kappa Second Round: POS |
|---|---|---|---|---|---|---|
| All | 0.654 (0.61) | 0.660 | 0.678 | 0.655 (0.602) | 0.656 | 0.669 |
| Consultants | 0.663 (0.616) | 0.664 | 0.673 | 0.633 (0.568) | 0.636 | 0.650 |
| Experienced | 0.659 (0.642) | 0.661 | 0.672 | 0.674 (0.600) | 0.677 | 0.695 |
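For readers who want to reproduce a statistic of this kind, Fleiss' kappa can be computed from a per-case table of category counts. The sketch below is illustrative only: the function name and the toy data are assumptions, and it uses the standard formulation in which every case is scored by the same number of raters. Cases with missing scores would need to be excluded or handled separately, and how that was done in this study is not stated here.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa for a table where counts[i][j] is the number of raters
    who assigned case i to category j (e.g. j = 0 negative, j = 1 positive).
    """
    n_items = len(counts)
    if n_items == 0:
        raise ValueError("at least one case is required")
    n_raters = sum(counts[0])
    if n_raters < 2:
        raise ValueError("at least two raters per case are required")
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("every case must be scored by the same number of raters")
    n_cats = len(counts[0])

    # Overall proportion of all assignments falling in each category.
    p_cat = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(n_cats)
    ]
    # Per-case agreement: share of rater pairs that agree on that case.
    p_item = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_observed = sum(p_item) / n_items
    p_expected = sum(p * p for p in p_cat)
    if p_expected == 1:
        raise ValueError("kappa is undefined when only one category is used")
    return (p_observed - p_expected) / (1 - p_expected)


if __name__ == "__main__":
    # Toy example: 4 cases, 3 raters, columns = [negative, positive].
    toy = [[3, 0], [0, 3], [2, 1], [1, 2]]
    print(round(fleiss_kappa(toy), 3))
```

The per-category columns in the table (NEG, POS) correspond to category-specific kappas, which this sketch does not compute; it returns only the overall value.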

| Type | First Round: ALL (12) | PD-L1 Status | M | First Round: NON (6) | PD-L1 Status | M | First Round: EXP (6) | PD-L1 Status | M | Second Round: ALL (10) | PD-L1 Status | M | Second Round: NON (5) | PD-L1 Status | M | Second Round: EXP (5) | PD-L1 Status | M |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| TNBC | 6/11 (55%) | − | 0.5 | 3/6 (50%) | | 0.75 | 3/5 (60%) | − | 0.5 | 6/9 (67%) | − | 0.5 | 4/5 (80%) | − | 0 | 2/4 (50%) | | 1 |
| HER2 | 7/12 (58%) | − | 1 | 4/6 (67%) | − | 1 | 3/6 (50%) | | 0.75 | 7/10 (70%) | − | 0.5 | 3/5 (60%) | + | 1 | 5/5 (100%) | − | 0.5 |
| TNBC | 7/12 (58%) | − | 0.5 | 4/6 (67%) | − | 0.25 | 3/6 (50%) | | 0.75 | 6/10 (60%) | + | 1 | 4/5 (80%) | + | 1 | 3/5 (60%) | − | 0.5 |
| TNBC | 7/12 (58%) | − | 1 | 3/6 (50%) | | 1 | 4/6 (67%) | − | 1 | 6/10 (60%) | − | 1 | 3/5 (60%) | + | 1 | 4/5 (80%) | − | 0.75 |
| TNBC | 7/12 (58%) | + | 1 | 4/6 (67%) | + | 1 | 3/6 (50%) | | 0.75 | 5/9 (56%) | + | 1 | 2/4 (50%) | | 2 | 3/5 (60%) | + | 0.75 |
| TNBC | 7/12 (58%) | − | 1 | 4/6 (67%) | + | 1 | 5/6 (83%) | − | 0.5 | 5/10 (50%) | | 1 | 4/5 (80%) | + | 1.5 | 4/5 (80%) | − | 0.75 |
| TNBC | 9/12 (75%) | + | 1 | 4/6 (67%) | + | 1 | 5/6 (83%) | + | 1 | 6/10 (60%) | + | 2 | 4/5 (80%) | + | 2.5 | 3/5 (60%) | − | 0.5 |
| TNBC | 8/12 (67%) | + | 1 | 3/6 (50%) | | 0.5 | 5/6 (83%) | + | 1 | 6/10 (60%) | − | 1 | 3/5 (60%) | − | 0.5 | 4/5 (80%) | + | 1.5 |
| TNBC | 11/12 (92%) | − | 0.5 | 6/6 (100%) | − | 0.5 | 5/6 (83%) | − | 0.5 | 6/10 (60%) | − | 0.5 | 3/5 (60%) | + | 1 | 4/5 (80%) | − | 0.5 |
| Luminal | 8/12 (67%) | + | 1 | 4/6 (67%) | + | 1 | 4/6 (67%) | + | 1 | 5/10 (50%) | | 1.5 | 4/5 (80%) | + | 3.5 | 4/5 (80%) | − | 0.5 |

| Agreement | First Round: ALL | First Round: NON | First Round: EXP | Second Round: ALL | Second Round: NON | Second Round: EXP |
|---|---|---|---|---|---|---|
| No | 0 | 3/10; 30% | 3/10; 30% | 2/10; 20% | 1/10; 10% | 1/10; 10% |
| Low | 6/10; 60% | 0 | 1/10; 10% | 6/10; 60% | 4/10; 40% | 3/10; 30% |
| High | 4/10; 40% | 7/10; 70% | 6/10; 60% | 2/10; 20% | 5/10; 50% | 6/10; 60% |

| | Consensus 1 | P1 | P2 | P3 | P4 | P5 | P6 | P7 | P8 | P9 | P10 | P11 | P12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Consensus 2 | 0.912 | 0.851 | 0.780 | | 0.823 | 0.629 | 0.715 | | 0.737 | 0.747 | 0.819 | 0.865 | 0.884 |
| P1 | 0.892 | 0.832 | 0.766 | | 0.679 | 0.724 | 0.787 | | 0.758 | 0.649 | 0.719 | 0.762 | 0.733 |
| P2 | 0.765 | 0.747 | 0.722 | | 0.735 | 0.551 | 0.695 | | 0.575 | 0.562 | 0.729 | 0.774 | 0.745 |
| P3 | 0.786 | 0.768 | 0.607 | | | | | | | | | | |
| P4 | 0.823 | 0.762 | 0.654 | 0.641 | 0.956 | 0.587 | 0.669 | | 0.631 | 0.606 | 0.637 | 0.728 | 0.699 |
| P5 | 0.740 | 0.723 | 0.640 | 0.696 | 0.607 | 0.667 | 0.682 | | 0.801 | 0.498 | 0.554 | 0.515 | 0.539 |
| P6 | 0.798 | 0.737 | 0.741 | 0.632 | 0.617 | 0.713 | 0.732 | | 0.632 | 0.635 | 0.688 | 0.643 | 0.656 |
| P7 | 0.718 | 0.659 | 0.579 | 0.717 | 0.669 | 0.540 | 0.634 | | | | | | |
| P8 | 0.678 | 0.658 | 0.533 | 0.543 | 0.613 | 0.678 | 0.577 | 0.475 | 0.940 | 0.552 | 0.574 | 0.614 | 0.661 |
| P9 | 0.891 | 0.871 | 0.745 | 0.764 | 0.715 | 0.720 | 0.733 | 0.744 | 0.658 | 0.772 | 0.784 | 0.640 | 0.826 |
| P10 | 0.822 | 0.760 | 0.634 | 0.831 | 0.687 | 0.649 | 0.704 | 0.711 | 0.597 | 0.801 | 0.862 | 0.762 | 0.880 |
| P11 | 0.870 | 0.808 | 0.724 | 0.649 | 0.738 | 0.743 | 0.801 | 0.586 | 0.638 | 0.763 | 0.737 | 0.778 | 0.784 |
| P12 | 0.843 | 0.735 | 0.646 | 0.758 | 0.708 | 0.643 | 0.700 | 0.687 | 0.563 | 0.732 | 0.749 | 0.732 | 0.906 |

| | ALL-1 | EXP-1 | NON-1 | ALL-2 | EXP-2 | NON-2 |
|---|---|---|---|---|---|---|
| ALL-1 | | 0.907 | 0.931 | 0.906 | 0.768 | 0.932 |
| EXP-1 | 0.915 | | 0.772 | 0.974 | 0.913 | 0.919 |
| NON-1 | 0.933 | 0.788 | | 0.781 | 0.619 | 0.876 |
| ALL-2 | 0.919 | 0.974 | 0.804 | | 0.911 | 0.946 |
| EXP-2 | 0.798 | 0.923 | 0.655 | 0.919 | | 0.792 |
| NON-2 | 0.936 | 0.920 | 0.891 | 0.949 | 0.808 | |

| Rater | Position | Experience as a Breast Reporting Pathologist (years) | Experience in SP142 PD-L1 Reporting (years) | Previous Training in SP142 PD-L1 Reporting (Provider) | Intra-Observer Agreement (Cohen’s Kappa/Level of Agreement) | Intra-Observer Reliability (ICC/Level of Reliability) |
|---|---|---|---|---|---|---|
| P1 | Trainee Pathologist | 12 | 0 | Roche | 0.832/Almost perfect | 0.826/Good |
| P2 | Trainee Pathologist | 12 | 0 | Roche | 0.722/Substantial | 0.525/Moderate |
| P3 | Consultant Scientist | N/A | 0 | Roche | N/A/N/A | N/A/N/A |
| P4 | Consultant Pathologist | 20 | 0 | N/S | 0.956/Almost perfect | 0.852/Good |
| P5 | Consultant Pathologist | 21 | 0 | Roche | 0.667/Substantial | N/A/N/A |
| P6 | Consultant Pathologist | 25 | 0 | None | 0.732/Substantial | 0.770/Good |
| P7 | Consultant Pathologist | 25 | 3 | Roche | N/A/N/A | N/A/N/A |
| P8 | Consultant Pathologist | 29 | 1 | Roche | 0.940/Almost perfect | 0.935/Excellent |
| P9 | Consultant Pathologist | 10 | 2 | Roche | 0.772/Substantial | 0.933/Excellent |
| P10 | Consultant Pathologist | 25 | 2 | Roche | 0.862/Almost perfect | 0.920/Excellent |
| P11 | Consultant Pathologist | 30 | 3 | Local | 0.778/Substantial | 0.756/Good |
| P12 | Consultant Pathologist | 22 | 2 | Roche | 0.906/Almost perfect | 0.929/Excellent |
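The intra-observer columns above pair each coefficient with a verbal label. The sketch below shows one way such values and labels could be derived; it is not the authors' code, and all function names are hypothetical. The kappa bands are an assumption (the commonly used Landis and Koch scale, which is consistent with the labels in the table), and the ICC bands follow the Koo and Li guideline cited in the reference list.

```python
from collections import Counter


def cohen_kappa(first, second):
    """Cohen's kappa between two equally long sequences of category labels,
    e.g. one rater's positive/negative calls in round 1 and round 2."""
    if len(first) != len(second) or not first:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(first)
    observed = sum(a == b for a, b in zip(first, second)) / n
    c1, c2 = Counter(first), Counter(second)
    # Chance agreement from the marginal category frequencies of each round.
    expected = sum(c1[k] * c2[k] for k in c1) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when only one category is used")
    return (observed - expected) / (1 - expected)


def kappa_label(kappa):
    """Verbal label for kappa (assumed Landis and Koch bands)."""
    if kappa > 0.80:
        return "Almost perfect"
    if kappa > 0.60:
        return "Substantial"
    if kappa > 0.40:
        return "Moderate"
    if kappa > 0.20:
        return "Fair"
    if kappa >= 0:
        return "Slight"
    return "Poor"


def icc_label(icc):
    """Verbal label for ICC (Koo and Li bands: 0.5, 0.75, 0.9)."""
    if icc > 0.90:
        return "Excellent"
    if icc >= 0.75:
        return "Good"
    if icc >= 0.50:
        return "Moderate"
    return "Poor"


if __name__ == "__main__":
    round1 = ["pos", "pos", "neg", "neg"]
    round2 = ["pos", "neg", "neg", "neg"]
    k = cohen_kappa(round1, round2)
    print(k, kappa_label(k))
    print(icc_label(0.826), icc_label(0.935))
```

How values falling exactly on a band boundary are labelled is a convention; the choice made here is only one reasonable option.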

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zaakouk, M.; Van Bockstal, M.; Galant, C.; Callagy, G.; Provenzano, E.; Hunt, R.; D’Arrigo, C.; Badr, N.M.; O’Sullivan, B.; Starczynski, J.; et al. Inter- and Intra-Observer Agreement of PD-L1 SP142 Scoring in Breast Carcinoma—A Large Multi-Institutional International Study. Cancers 2023, 15, 1511. https://doi.org/10.3390/cancers15051511

APA Style: Zaakouk, M., Van Bockstal, M., Galant, C., Callagy, G., Provenzano, E., Hunt, R., D’Arrigo, C., Badr, N. M., O’Sullivan, B., Starczynski, J., Tanchel, B., Mir, Y., Lewis, P., & Shaaban, A. M. (2023). Inter- and Intra-Observer Agreement of PD-L1 SP142 Scoring in Breast Carcinoma—A Large Multi-Institutional International Study. Cancers, 15(5), 1511. https://doi.org/10.3390/cancers15051511