Communicator-Driven Data Preprocessing Improves Deep Transfer Learning of Histopathological Prediction of Pancreatic Ductal Adenocarcinoma

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

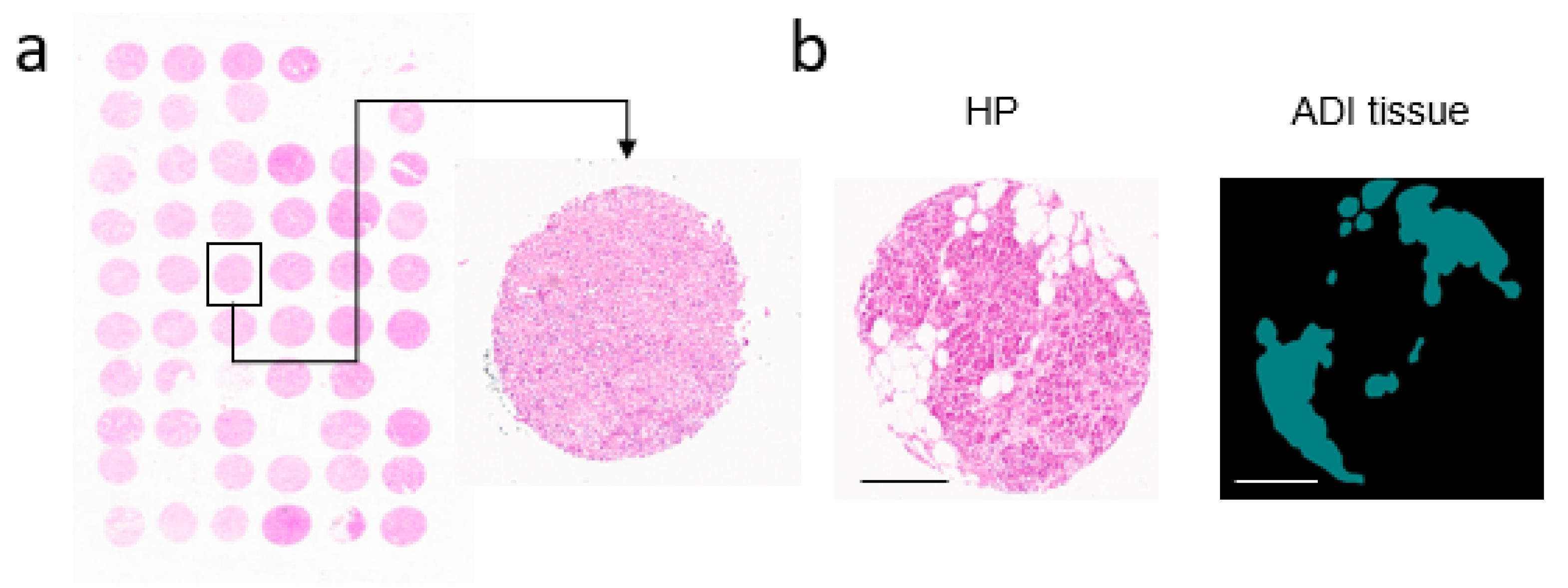

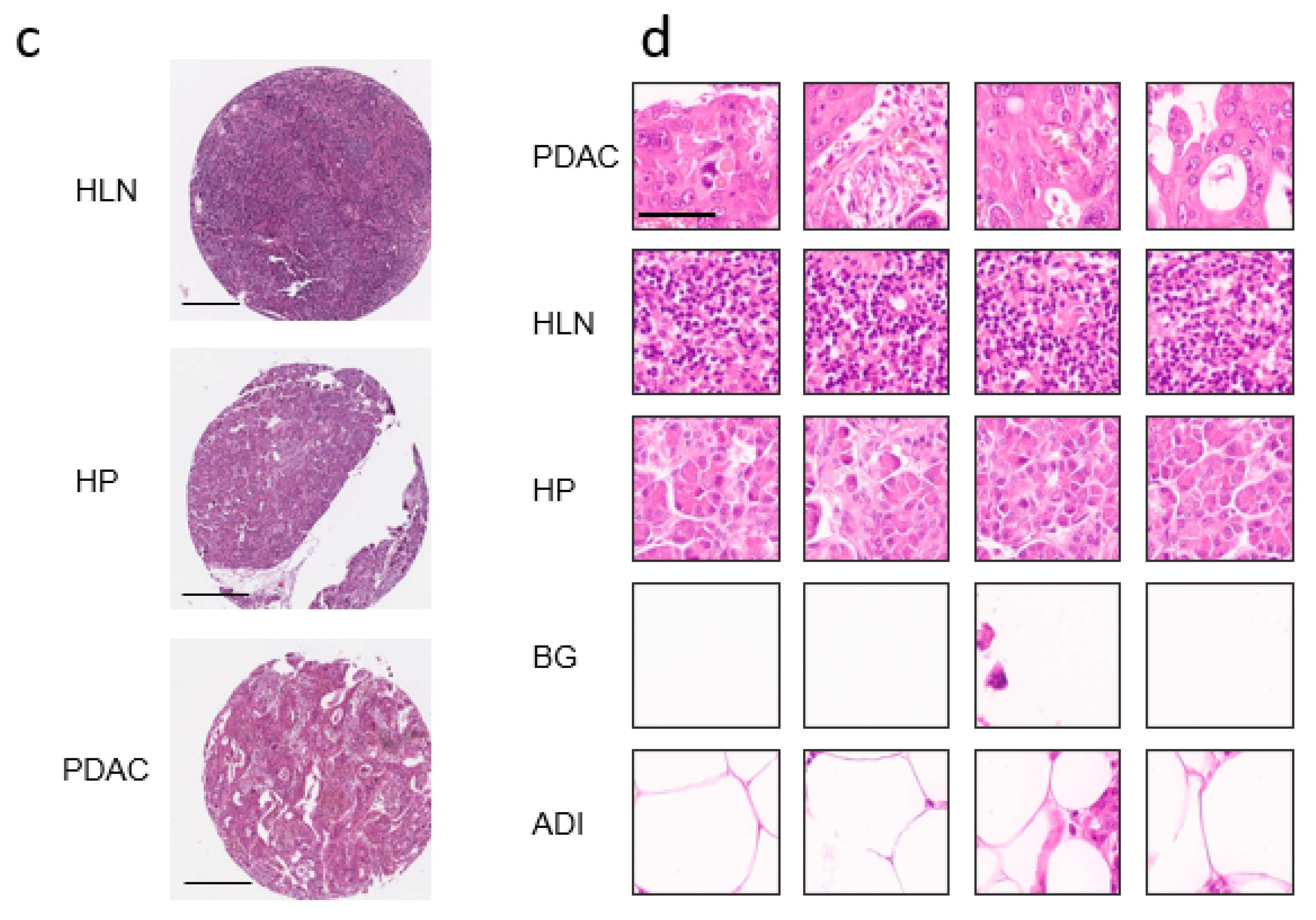

2.1. Digitalization of H&E Tissue Slides

2.2. Deep Transfer Learning

2.3. Software & Hardware

3. Results

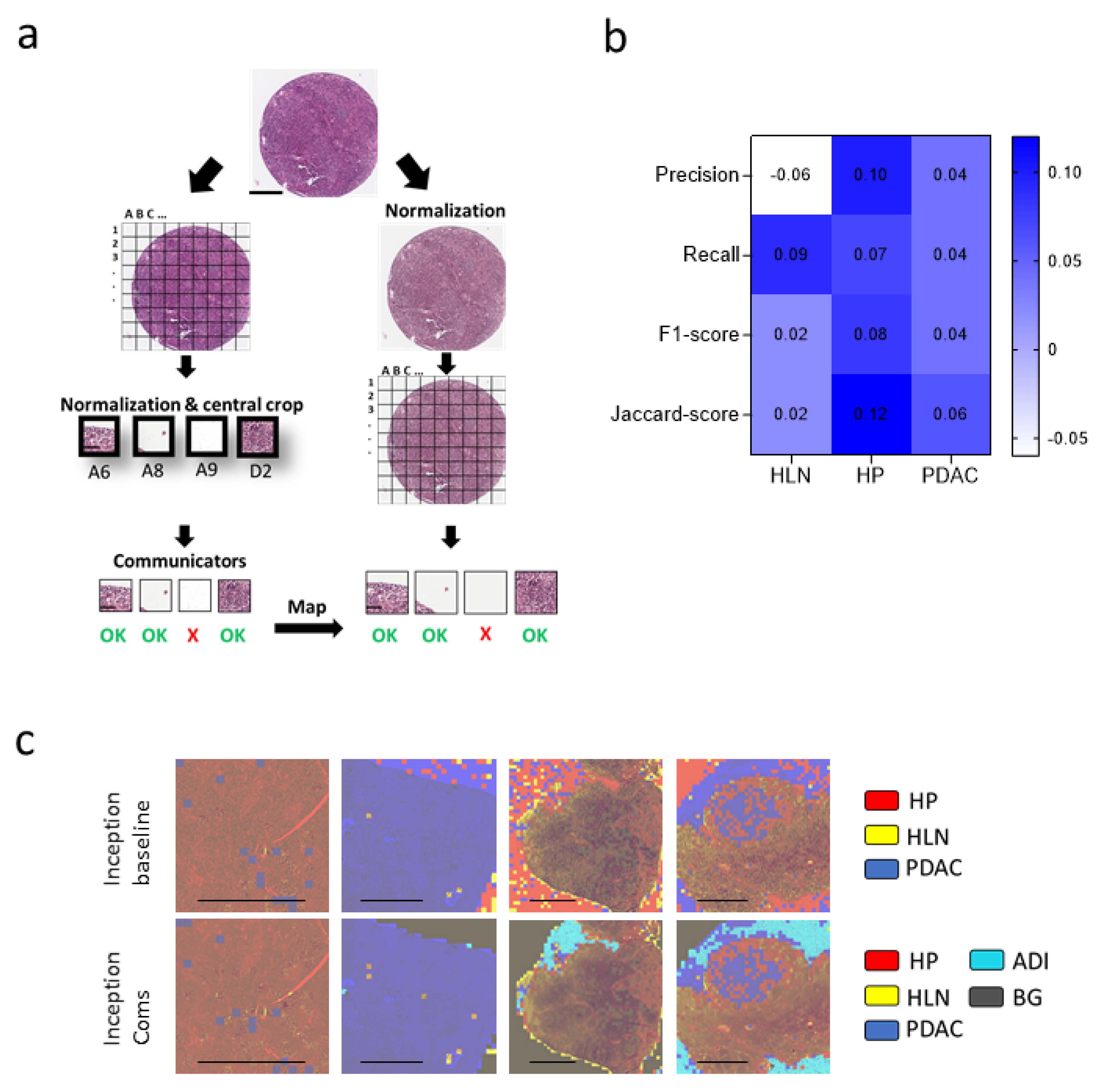

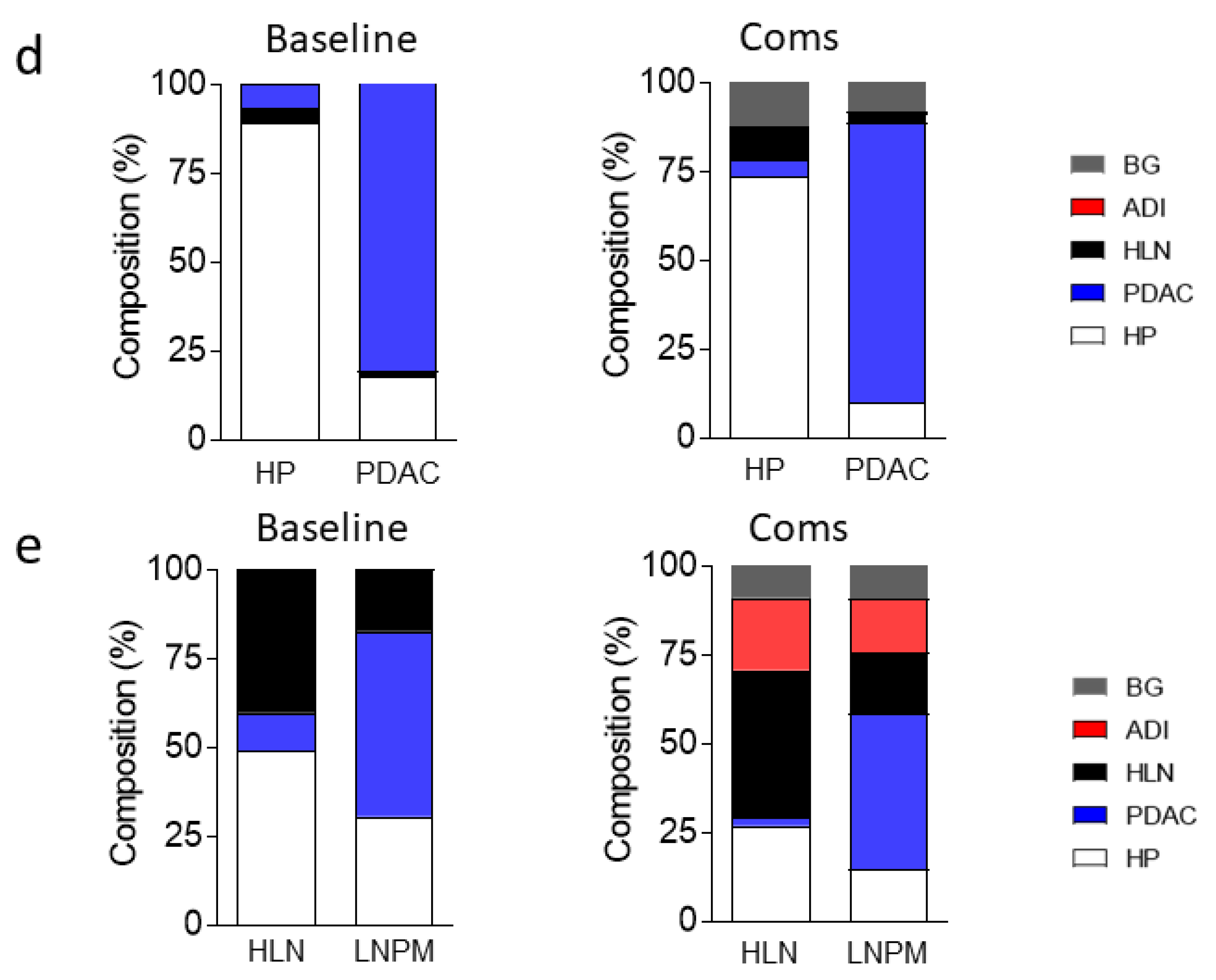

3.1. Communicating Neural Networks Enrich New Datasets for Parenchymal Tissue

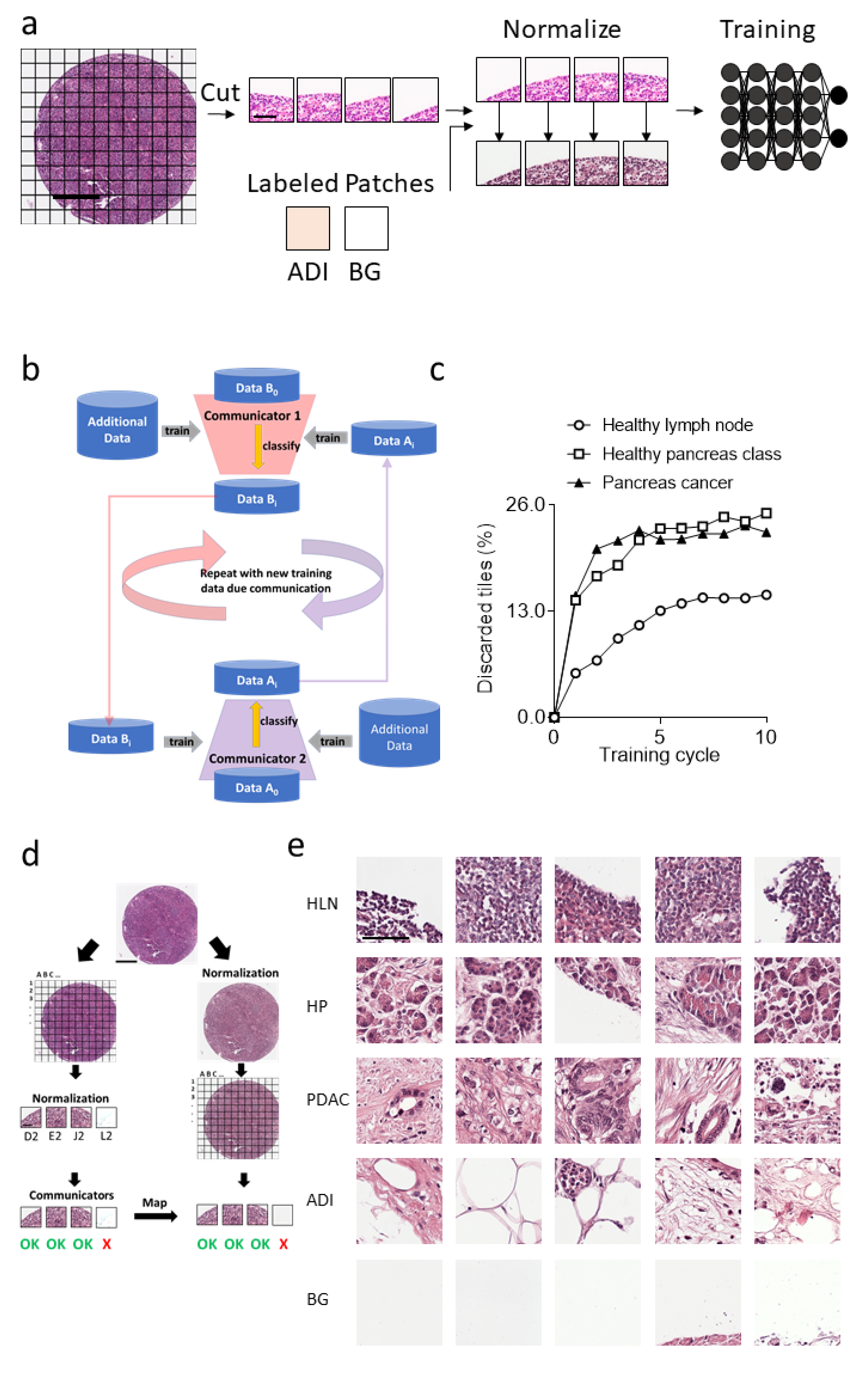

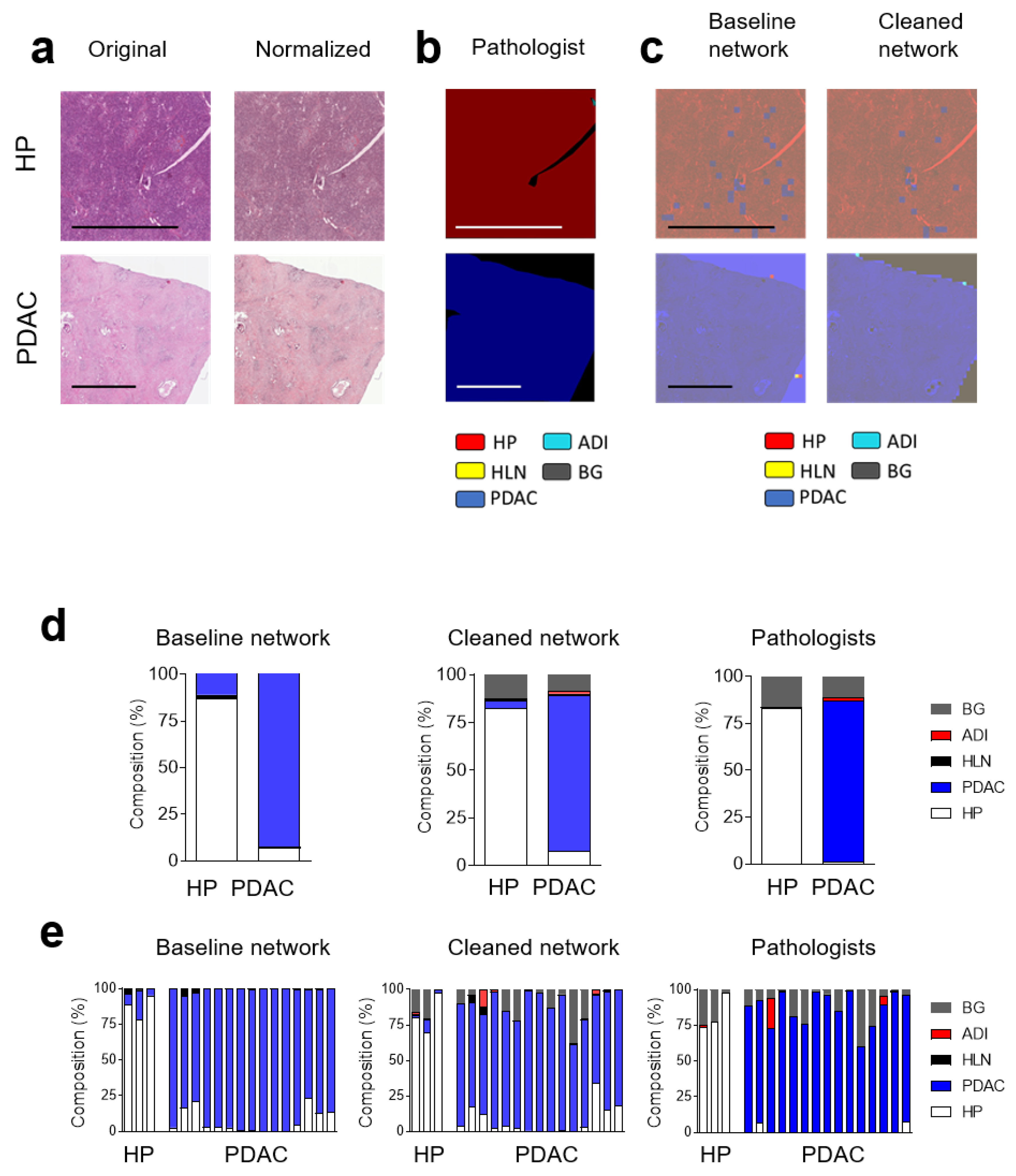

3.2. Dataset Clean-Up Improves Performance during Image Recognition

3.3. Convolutional Neural Network (CNN) Classification of Histological Images of Primary Tumors and Lymph Node Metastases Can Be Improved through Hyperparameter Tuning during Training and Classification

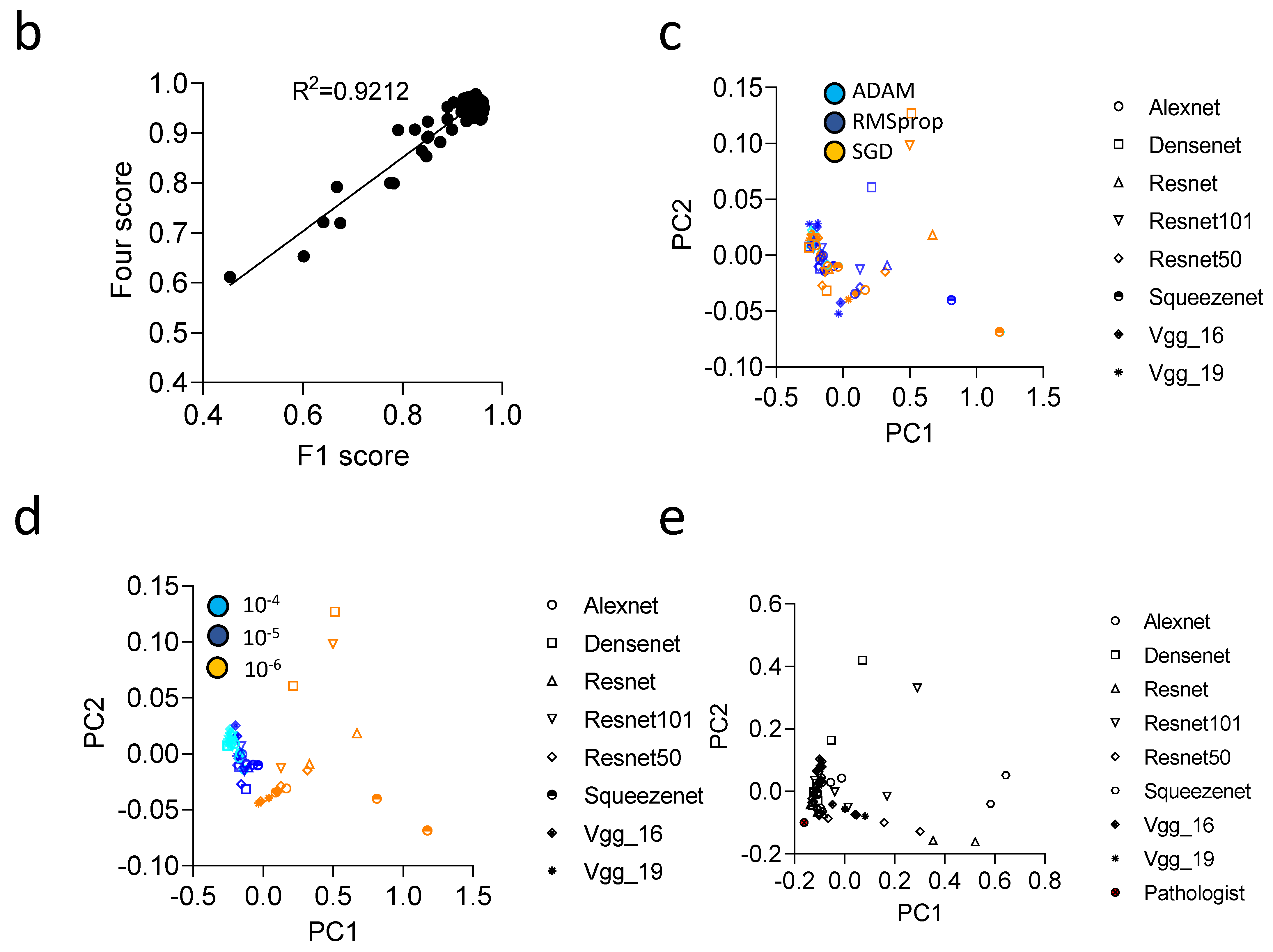

3.4. Communicator-Based Preprocessing Can Be Transferred to Other Input Sizes

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Class | Precision | Recall | F1-Score | Jaccard Score | Support |
|---|---|---|---|---|---|
| HLN | 0.89 | 0.91 | 0.90 | 0.82 | 874 |
| HP | 0.90 | 0.82 | 0.86 | 0.75 | 1690 |
| PDAC | 0.90 | 0.94 | 0.92 | 0.85 | 2454 |
| Accuracy | | | 0.90 | | 5018 |
| Macro avg | 0.90 | 0.89 | 0.89 | 0.81 | 5018 |
| Weighted avg | 0.90 | 0.90 | 0.90 | 0.81 | 5018 |
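
The summary rows follow the usual classification-report convention: the macro average is the unweighted mean of the per-class values, while the weighted average weights each class by its support. The Python sketch below recomputes both rows from the rounded per-class values of the three-class table. Because those inputs are already rounded to two decimals, the results are only expected to match the reported rows to within about 0.01. The dictionary layout and the `summarize` helper are illustrative assumptions, not the authors' code.

```python
# Per-class metrics copied from the three-class table above.
# Values are already rounded to two decimals, so recomputed averages
# may differ from the reported summary rows in the last digit.
per_class = {
    # class: (precision, recall, f1, jaccard, support)
    "HLN": (0.89, 0.91, 0.90, 0.82, 874),
    "HP": (0.90, 0.82, 0.86, 0.75, 1690),
    "PDAC": (0.90, 0.94, 0.92, 0.85, 2454),
}


def summarize(table):
    """Return (macro, weighted, total_support) for precision, recall, F1, Jaccard."""
    rows = list(table.values())
    total = sum(r[4] for r in rows)
    # Macro average: plain mean over classes, ignoring class size.
    macro = [sum(r[i] for r in rows) / len(rows) for i in range(4)]
    # Weighted average: each class contributes in proportion to its support.
    weighted = [sum(r[i] * r[4] for r in rows) / total for i in range(4)]
    return macro, weighted, total


macro, weighted, total = summarize(per_class)
print("support:", total)
print("macro avg:   ", [round(v, 2) for v in macro])
print("weighted avg:", [round(v, 2) for v in weighted])
```

One consequence worth noting: support-weighted recall always equals overall accuracy, because it reduces to the total number of true positives divided by the total number of samples. This is why the weighted-average recall and the accuracy row coincide in both classification tables.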

| Class | Precision | Recall | F1-Score | Jaccard Score | Support |
|---|---|---|---|---|---|
| ADI | 0.78 | 0.55 | 0.64 | 0.47 | 51 |
| BG | 0.95 | 0.96 | 0.96 | 0.92 | 908 |
| HLN | 0.92 | 0.94 | 0.93 | 0.87 | 758 |
| HP | 0.97 | 0.91 | 0.94 | 0.88 | 1277 |
| PDAC | 0.93 | 0.96 | 0.94 | 0.89 | 1910 |
| Accuracy | | | 0.94 | | 4904 |
| Macro avg | 0.91 | 0.86 | 0.88 | 0.81 | 4904 |
| Weighted avg | 0.94 | 0.94 | 0.94 | 0.89 | 4904 |
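
At the per-class level, the Jaccard score adds no information beyond F1. With F1 = 2TP / (2TP + FP + FN) and J = TP / (TP + FP + FN), a line of algebra gives J = F1 / (2 - F1). The Python sketch below first checks this identity exactly on a hypothetical set of confusion counts. It then checks the identity against the rounded per-class values of the five-class table, where agreement is only expected to within rounding. The counts and helper names are assumptions for illustration.

```python
def metrics_from_counts(tp, fp, fn):
    """Per-class F1 and Jaccard index computed directly from confusion counts."""
    f1 = 2 * tp / (2 * tp + fp + fn)
    jaccard = tp / (tp + fp + fn)
    return f1, jaccard


def jaccard_from_f1(f1):
    """Jaccard index implied by an F1 score via J = F1 / (2 - F1)."""
    return f1 / (2.0 - f1)


# Hypothetical counts for a single class (not taken from the study).
f1, jac = metrics_from_counts(tp=90, fp=5, fn=10)
print(f"F1 = {f1:.4f}, J = {jac:.4f}, J from F1 = {jaccard_from_f1(f1):.4f}")

# Per-class (F1, Jaccard) pairs copied from the five-class table above.
reported = {
    "ADI": (0.64, 0.47),
    "BG": (0.96, 0.92),
    "HLN": (0.93, 0.87),
    "HP": (0.94, 0.88),
    "PDAC": (0.94, 0.89),
}
for name, (f1_rep, jac_rep) in reported.items():
    # Rounded inputs mean the implied value can differ slightly from the table.
    implied = jaccard_from_f1(f1_rep)
    print(f"{name}: reported J = {jac_rep:.2f}, implied J = {implied:.3f}")
```

The largest gap is for HP: the identity implies 0.887, but the table reports 0.88. A true F1 slightly below the rounded 0.94 would account for this difference.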

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kronberg, R.M.; Haeberle, L.; Pfaus, M.; Xu, H.C.; Krings, K.S.; Schlensog, M.; Rau, T.; Pandyra, A.A.; Lang, K.S.; Esposito, I.; et al. Communicator-Driven Data Preprocessing Improves Deep Transfer Learning of Histopathological Prediction of Pancreatic Ductal Adenocarcinoma. Cancers 2022, 14, 1964. https://doi.org/10.3390/cancers14081964