Radiomic and Volumetric Measurements as Clinical Trial Endpoints—A Comprehensive Review

Simple Summary

Abstract

1. Introduction

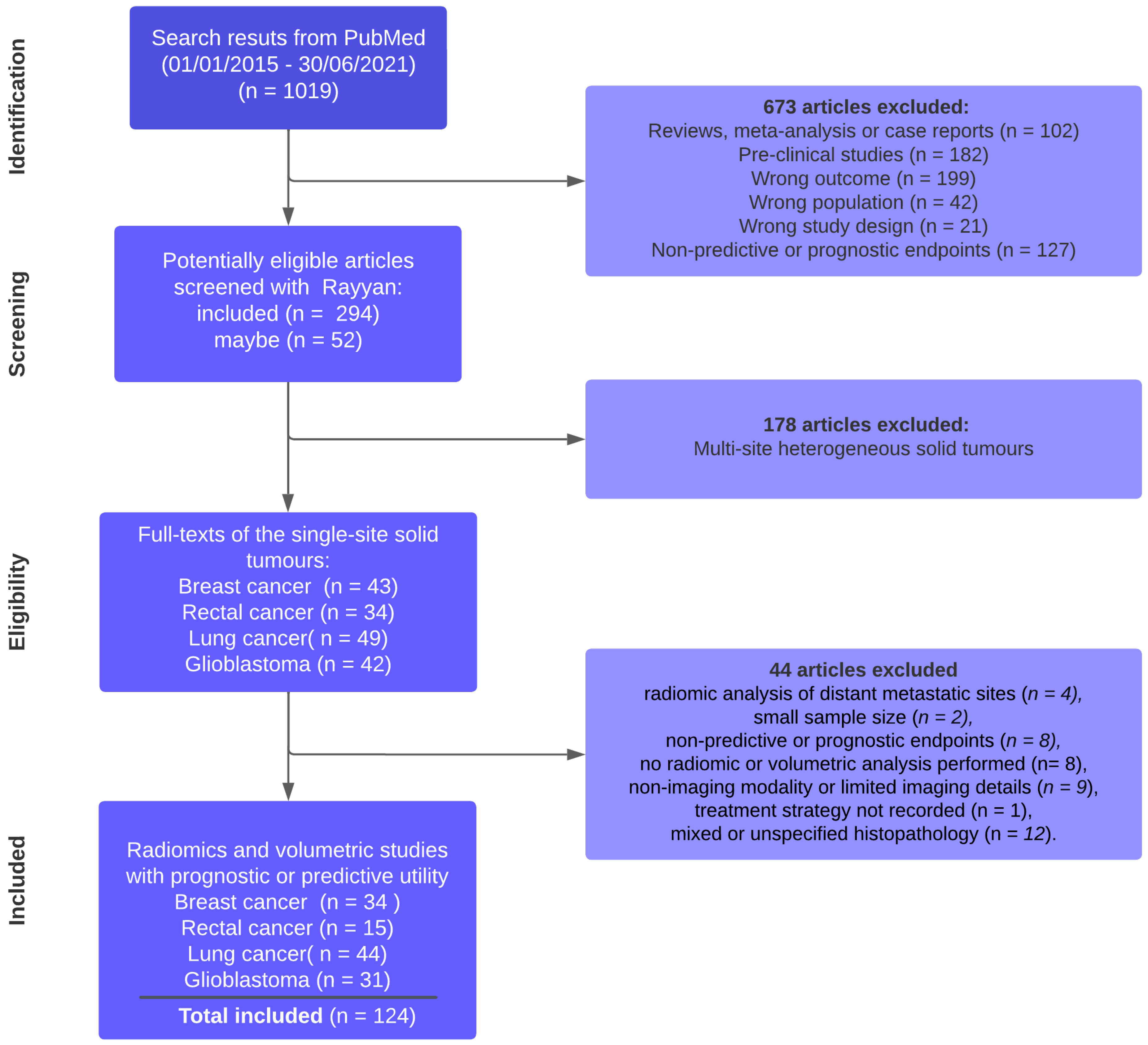

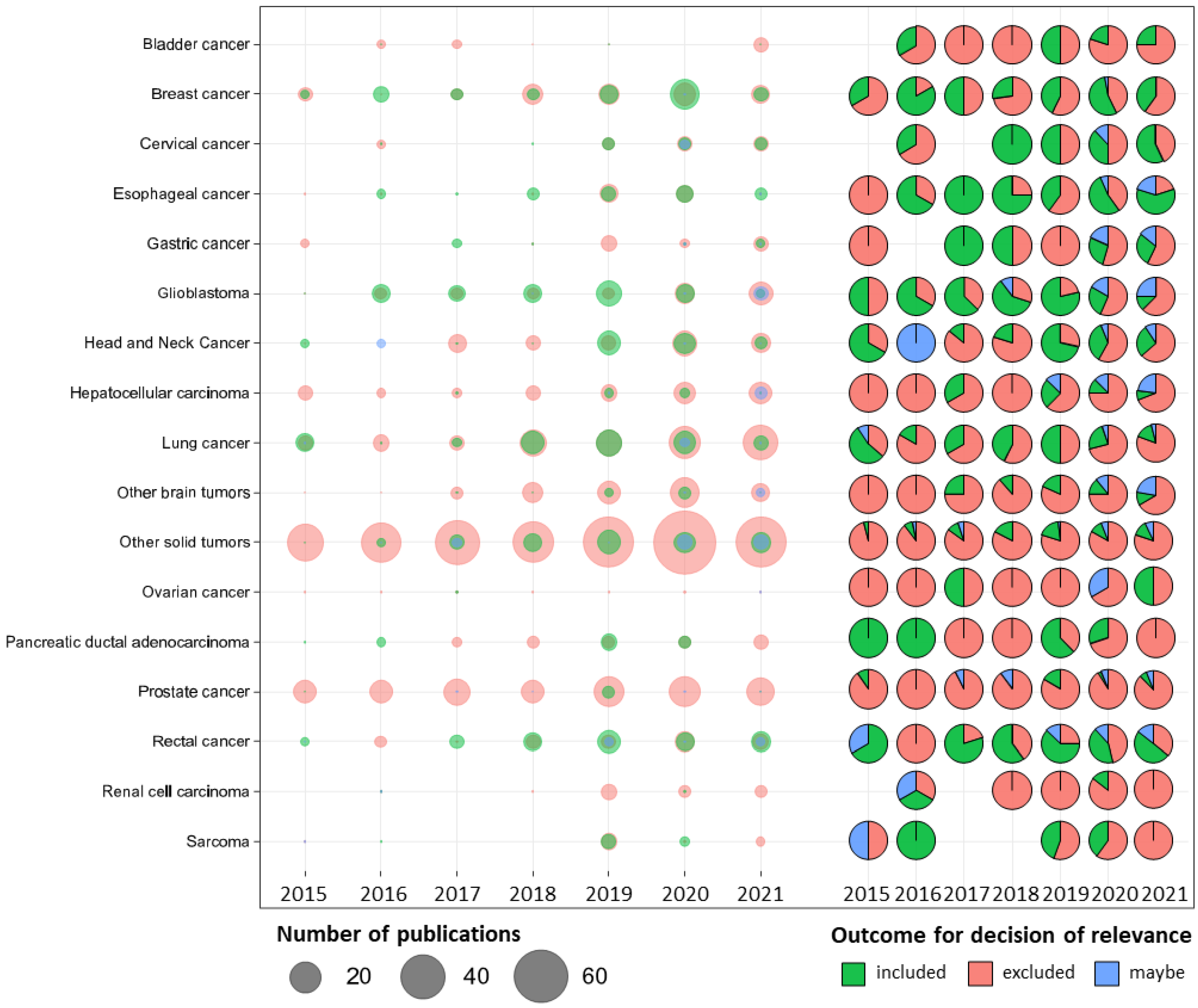

2. Article Search Strategy and Study Selection

Data Extraction and Relevance Rating

3. Results

3.1. Overview of Included Studies

3.1.1. Breast Cancer (BC)

3.1.2. Rectal Cancer (RC)

3.1.3. Lung Cancer (LC)

3.1.4. Glioblastoma Multiforme (GBM)

3.2. Study Design

3.2.1. Breast Cancer

3.2.2. Rectal Cancer

3.2.3. Lung Cancer

3.2.4. Glioblastoma Multiforme

3.3. Type of Imaging Modality and Strategy of Segmentation

3.3.1. Breast Cancer

3.3.2. Rectal Cancer

3.3.3. Lung Cancer

3.3.4. Glioblastoma Multiforme

3.4. Type of Algorithms and Primary Endpoints

3.4.1. Breast Cancer

3.4.2. Rectal Cancer

3.4.3. Lung Cancer

3.4.4. Glioblastoma Multiforme

3.5. Integration of Radiomics with Genomics and Multi-Omics

3.5.1. Breast Cancer

3.5.2. Rectal Cancer

3.5.3. Lung Cancer

3.5.4. Glioblastoma Multiforme

3.6. Validation and Data Sharing Strategy

3.6.1. Breast Cancer

3.6.2. Rectal Cancer

3.6.3. Lung Cancer

3.6.4. Glioblastoma Multiforme

4. Discussion

4.1. Strategy of Segmentation

4.2. Validation Strategy

4.3. Data Sharing

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

- Clinical Trial Endpoints for the Approval of Cancer Drugs and Biologics. FDA. Available online: https://www.fda.gov/regulatory-information/search-fda-guidance-documents/clinical-trial-endpoints-approval-cancer-drugs-and-biologics (accessed on 12 October 2022).

- Table of Surrogate Endpoints That Were the Basis of Drug Approval or Licensure. FDA. Available online: https://www.fda.gov/drugs/development-resources/table-surrogate-endpoints-were-basis-drug-approval-or-licensure (accessed on 25 April 2022).

- Eisenhauer, E.A.; Therasse, P.; Bogaerts, J.; Schwartz, L.H.; Sargent, D.; Ford, R.; Dancey, J.; Arbuck, S.; Gwyther, S.; Mooney, M.; et al. New Response Evaluation Criteria in Solid Tumours: Revised RECIST Guideline (Version 1.1). Eur. J. Cancer 2009, 45, 228–247. [Google Scholar] [CrossRef]

- Bera, K.; Braman, N.; Gupta, A.; Velcheti, V.; Madabhushi, A. Predicting Cancer Outcomes with Radiomics and Artificial Intelligence in Radiology. Nat. Rev. Clin. Oncol. 2022, 19, 132–146. [Google Scholar] [CrossRef] [PubMed]

- Bettinelli, A.; Marturano, F.; Avanzo, M.; Loi, E.; Menghi, E.; Mezzenga, E.; Pirrone, G.; Sarnelli, A.; Strigari, L.; Strolin, S.; et al. A Novel Benchmarking Approach to Assess the Agreement among Radiomic Tools. Radiology 2022, 303. [Google Scholar] [CrossRef]

- Zimmermann, M.; Kuhl, C.; Engelke, H.; Bettermann, G.; Keil, S. Volumetric Measurements of Target Lesions: Does It Improve Inter-Reader Variability for Oncological Response Assessment According to RECIST 1.1 Guidelines Compared to Standard Unidimensional Measurements? Pol. J. Radiol. 2021, 86, e594–e600. [Google Scholar] [CrossRef]

- Nishino, M. Tumor Response Assessment for Precision Cancer Therapy: Response Evaluation Criteria in Solid Tumors and Beyond. Am. Soc. Clin. Oncol. Educ. Book 2018, 38, 1019–1029. [Google Scholar] [CrossRef]

- Hylton, N.M.; Blume, J.D.; Bernreuter, W.K.; Pisano, E.D.; Rosen, M.A.; Morris, E.A.; Weatherall, P.T.; Lehman, C.D.; Newstead, G.M.; Polin, S.; et al. Locally Advanced Breast Cancer: MR Imaging for Prediction of Response to Neoadjuvant Chemotherapy--Results from ACRIN 6657/I-SPY TRIAL. Radiology 2012, 263, 663–672. [Google Scholar] [CrossRef]

- Xiao, J.; Tan, Y.; Li, W.; Gong, J.; Zhou, Z.; Huang, Y.; Zheng, J.; Deng, Y.; Wang, L.; Peng, J.; et al. Tumor Volume Reduction Rate Is Superior to RECIST for Predicting the Pathological Response of Rectal Cancer Treated with Neoadjuvant Chemoradiation: Results from a Prospective Study. Oncol. Lett. 2015, 9, 2680–2686. [Google Scholar] [CrossRef]

- O’Connor, J.P.B.; Aboagye, E.O.; Adams, J.E.; Aerts, H.J.W.L.; Barrington, S.F.; Beer, A.J.; Boellaard, R.; Bohndiek, S.E.; Brady, M.; Brown, G.; et al. Imaging Biomarker Roadmap for Cancer Studies. Nat. Rev. Clin. Oncol. 2016, 14, 169–186. [Google Scholar] [CrossRef]

- Lambin, P.; Leijenaar, R.T.H.; Deist, T.M.; Peerlings, J.; De Jong, E.E.C.; Van Timmeren, J.; Sanduleanu, S.; Larue, R.T.H.M.; Even, A.J.G.; Jochems, A.; et al. Radiomics: The Bridge between Medical Imaging and Personalized Medicine. Nat. Rev. Clin. Oncol. 2017, 14, 749–762. [Google Scholar] [CrossRef] [PubMed]

- Moons, K.G.M.; Altman, D.G.; Reitsma, J.B.; Ioannidis, J.P.A.; Macaskill, P.; Steyerberg, E.W.; Vickers, A.J.; Ransohoff, D.F.; Collins, G.S. Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis (TRIPOD): Explanation and Elaboration. Ann. Intern. Med. 2015, 162, W1–W73. [Google Scholar] [CrossRef]

- Zwanenburg, A.; Vallières, M.; Abdalah, M.A.; Aerts, H.J.W.L.; Andrearczyk, V.; Apte, A.; Ashrafinia, S.; Bakas, S.; Beukinga, R.J.; Boellaard, R.; et al. The Image Biomarker Standardization Initiative: Standardized Quantitative Radiomics for High-Throughput Image-Based Phenotyping. Radiology 2020, 295, 328–338. [Google Scholar] [CrossRef]

- Rayyan—Intelligent Systematic Review. Available online: https://www.rayyan.ai/ (accessed on 25 April 2022).

- Zhuang, X.; Chen, C.; Liu, Z.; Zhang, L.; Zhou, X.; Cheng, M.; Ji, F.; Zhu, T.; Lei, C.; Zhang, J.; et al. Multiparametric MRI-Based Radiomics Analysis for the Prediction of Breast Tumor Regression Patterns after Neoadjuvant Chemotherapy. Transl. Oncol. 2020, 13, 100831. [Google Scholar] [CrossRef]

- Zhou, J.; Lu, J.; Gao, C.; Zeng, J.; Zhou, C.; Lai, X.; Cai, W.; Xu, M. Predicting the Response to Neoadjuvant Chemotherapy for Breast Cancer: Wavelet Transforming Radiomics in MRI. BMC Cancer 2020, 20, 100. [Google Scholar] [CrossRef]

- Li, X.; Abramson, R.G.; Arlinghaus, L.R.; Kang, H.; Chakravarthy, A.B.; Abramson, V.G.; Farley, J.; Mayer, I.A.; Kelley, M.C.; Meszoely, I.M.; et al. Multiparametric Magnetic Resonance Imaging for Predicting Pathological Response after the First Cycle of Neoadjuvant Chemotherapy in Breast Cancer. Investig. Radiol. 2015, 50, 195–204. [Google Scholar] [CrossRef]

- Li, W.; Newitt, D.C.; Wilmes, L.J.; Jones, E.F.; Arasu, V.; Gibbs, J.; La Yun, B.; Li, E.; Partridge, S.C.; Kornak, J.; et al. Additive Value of Diffusion-Weighted MRI in the I-SPY 2 TRIAL. J. Magn. Reson. Imaging 2019, 50, 1742–1753. [Google Scholar] [CrossRef]

- Li, P.; Wang, X.; Xu, C.; Liu, C.; Zheng, C.; Fulham, M.J.; Feng, D.; Wang, L.; Song, S.; Huang, G. (18)F-FDG PET/CT Radiomic Predictors of Pathologic Complete Response (PCR) to Neoadjuvant Chemotherapy in Breast Cancer Patients. Eur. J. Nucl. Med. Mol. Imaging 2020, 47, 1116–1126. [Google Scholar] [CrossRef] [PubMed]

- Kim, Y.; Kim, S.H.; Lee, H.W.; Song, B.J.; Kang, B.J.; Lee, A.; Nam, Y. Intravoxel Incoherent Motion Diffusion-Weighted MRI for Predicting Response to Neoadjuvant Chemotherapy in Breast Cancer. Magn. Reson. Imaging 2018, 48, 27–33. [Google Scholar] [CrossRef]

- Kim, S.; Kim, M.J.; Kim, E.-K.; Yoon, J.H.; Park, V.Y. MRI Radiomic Features: Association with Disease-Free Survival in Patients with Triple-Negative Breast Cancer. Sci. Rep. 2020, 10, 3750. [Google Scholar] [CrossRef] [PubMed]

- Jiang, M.; Li, C.-L.; Luo, X.-M.; Chuan, Z.-R.; Lv, W.-Z.; Li, X.; Cui, X.-W.; Dietrich, C.F. Ultrasound-Based Deep Learning Radiomics in the Assessment of Pathological Complete Response to Neoadjuvant Chemotherapy in Locally Advanced Breast Cancer. Eur. J. Cancer 2021, 147, 95–105. [Google Scholar] [CrossRef]

- Jarrett, A.M.; Hormuth, D.A., 2nd; Wu, C.; Kazerouni, A.S.; Ekrut, D.A.; Virostko, J.; Sorace, A.G.; DiCarlo, J.C.; Kowalski, J.; Patt, D.; et al. Evaluating Patient-Specific Neoadjuvant Regimens for Breast Cancer via a Mathematical Model Constrained by Quantitative Magnetic Resonance Imaging Data. Neoplasia 2020, 22, 820–830. [Google Scholar] [CrossRef]

- Jahani, N.; Cohen, E.; Hsieh, M.K.; Weinstein, S.P.; Pantalone, L.; Hylton, N.; Newitt, D.; Davatzikos, C.; Kontos, D. Prediction of Treatment Response to Neoadjuvant Chemotherapy for Breast Cancer via Early Changes in Tumor Heterogeneity Captured by DCE-MRI Registration. Sci. Rep. 2019, 9. [Google Scholar] [CrossRef]

- Hylton, N.M.; Gatsonis, C.A.; Rosen, M.A.; Lehman, C.D.; Newitt, D.C.; Partridge, S.C.; Bernreuter, W.K.; Pisano, E.D.; Morris, E.A.; Weatherall, P.T.; et al. Neoadjuvant Chemotherapy for Breast Cancer: Functional Tumor Volume by MR Imaging Predicts Recurrence-Free Survival-Results from the ACRIN 6657/CALGB 150007 I-SPY 1 TRIAL. Radiology 2016, 279, 44–55. [Google Scholar] [CrossRef] [PubMed]

- Huang, X.; Mai, J.; Huang, Y.; He, L.; Chen, X.; Wu, X.; Li, Y.; Yang, X.; Dong, M.; Huang, J.; et al. Radiomic Nomogram for Pretreatment Prediction of Pathologic Complete Response to Neoadjuvant Therapy in Breast Cancer: Predictive Value of Staging Contrast-Enhanced CT. Clin. Breast Cancer 2021, 21, e388–e401. [Google Scholar] [CrossRef]

- Yamamoto, S.; Han, W.; Kim, Y.; Du, L.; Jamshidi, N.; Huang, D.; Kim, J.H.H.; Kuo, M.D.D. Breast Cancer: Radiogenomic Biomarker Reveals Associations among Dynamic Contrast-Enhanced MR Imaging, Long Noncoding RNA, and Metastasis. Radiology 2015, 275, 384–392. [Google Scholar] [CrossRef] [PubMed]

- Ha, R.; Chin, C.; Karcich, J.; Liu, M.Z.; Chang, P.; Mutasa, S.; Pascual Van Sant, E.; Wynn, R.T.; Connolly, E.; Jambawalikar, S. Prior to Initiation of Chemotherapy, Can We Predict Breast Tumor Response? Deep Learning Convolutional Neural Networks Approach Using a Breast MRI Tumor Dataset. J. Digit. Imaging 2019, 32, 693–701. [Google Scholar] [CrossRef]

- Ha, R.; Chang, P.; Karcich, J.; Mutasa, S.; Van Sant, E.P.; Connolly, E.; Chin, C.; Taback, B.; Liu, M.Z.; Jambawalikar, S. Predicting Post Neoadjuvant Axillary Response Using a Novel Convolutional Neural Network Algorithm. Ann. Surg. Oncol. 2018, 25, 3037–3043. [Google Scholar] [CrossRef] [PubMed]

- Groheux, D.; Martineau, A.; Teixeira, L.; Espié, M.; de Cremoux, P.; Bertheau, P.; Merlet, P.; Lemarignier, C. (18)FDG-PET/CT for Predicting the Outcome in ER+/HER2- Breast Cancer Patients: Comparison of Clinicopathological Parameters and PET Image-Derived Indices Including Tumor Texture Analysis. Breast Cancer Res. 2017, 19, 3. [Google Scholar] [CrossRef]

- Fan, M.; Chen, H.; You, C.; Liu, L.; Gu, Y.; Peng, W.; Gao, X.; Li, L. Radiomics of Tumor Heterogeneity in Longitudinal Dynamic Contrast-Enhanced Magnetic Resonance Imaging for Predicting Response to Neoadjuvant Chemotherapy in Breast Cancer. Front. Mol. Biosci. 2021, 8, 622219. [Google Scholar] [CrossRef]

- Drukker, K.; Li, H.; Antropova, N.; Edwards, A.; Papaioannou, J.; Giger, M.L. Most-Enhancing Tumor Volume by MRI Radiomics Predicts Recurrence-Free Survival “Early on” in Neoadjuvant Treatment of Breast Cancer. Cancer Imaging 2018, 18. [Google Scholar] [CrossRef]

- Dogan, B.E.; Yuan, Q.; Bassett, R.; Guvenc, I.; Jackson, E.F.; Cristofanilli, M.; Whitman, G.J. Comparing the Performances of Magnetic Resonance Imaging Size vs Pharmacokinetic Parameters to Predict Response to Neoadjuvant Chemotherapy and Survival in Patients With Breast Cancer. Curr. Probl. Diagn. Radiol. 2019, 48, 235–240. [Google Scholar] [CrossRef] [PubMed]

- Dasgupta, A.; Brade, S.; Sannachi, L.; Quiaoit, K.; Fatima, K.; DiCenzo, D.; Osapoetra, L.O.O.; Saifuddin, M.; Trudeau, M.; Gandhi, S.; et al. Quantitative Ultrasound Radiomics Using Texture Derivatives in Prediction of Treatment Response to Neo-Adjuvant Chemotherapy for Locally Advanced Breast Cancer. Oncotarget 2020, 11, 3782–3792. [Google Scholar] [CrossRef] [PubMed]

- Choi, J.H.; Kim, H.A.; Kim, W.; Lim, I.; Lee, I.; Byun, B.H.; Noh, W.C.; Seong, M.; Lee, S.; Kim, B.I.; et al. Early Prediction of Neoadjuvant Chemotherapy Response for Advanced Breast Cancer Using PET/MRI Image Deep Learning. Sci. Rep. 2020, 10, 21149. [Google Scholar] [CrossRef]

- Cattell, R.F.F.; Kang, J.J.J.; Ren, T.; Huang, P.B.B.; Muttreja, A.; Dacosta, S.; Li, H.; Baer, L.; Clouston, S.; Palermo, R.; et al. MRI Volume Changes of Axillary Lymph Nodes as Predictor of Pathologic Complete Responses to Neoadjuvant Chemotherapy in Breast Cancer. Clin. Breast Cancer 2020, 20, 68–79.e1. [Google Scholar] [CrossRef] [PubMed]

- Cain, E.H.; Saha, A.; Harowicz, M.R.; Marks, J.R.; Marcom, P.K.; Mazurowski, M.A. Multivariate Machine Learning Models for Prediction of Pathologic Response to Neoadjuvant Therapy in Breast Cancer Using MRI Features: A Study Using an Independent Validation Set. Breast Cancer Res. Treat. 2019, 173, 455–463. [Google Scholar] [CrossRef]

- Xiong, Q.; Zhou, X.; Liu, Z.; Lei, C.; Yang, C.; Yang, M.; Zhang, L.; Zhu, T.; Zhuang, X.; Liang, C.; et al. Multiparametric MRI-Based Radiomics Analysis for Prediction of Breast Cancers Insensitive to Neoadjuvant Chemotherapy. Clin. Transl. Oncol. 2020, 22, 50–59. [Google Scholar] [CrossRef] [PubMed]

- Braman, N.M.; Etesami, M.; Prasanna, P.; Dubchuk, C.; Gilmore, H.; Tiwari, P.; Plecha, D.; Madabhushi, A. Intratumoral and Peritumoral Radiomics for the Pretreatment Prediction of Pathological Complete Response to Neoadjuvant Chemotherapy Based on Breast DCE-MRI. Breast Cancer Res. 2017, 19, 57. [Google Scholar] [CrossRef]

- Bitencourt, A.G.V.; Gibbs, P.; Rossi Saccarelli, C.; Daimiel, I.; Lo Gullo, R.; Fox, M.J.; Thakur, S.; Pinker, K.; Morris, E.A.; Morrow, M.; et al. MRI-Based Machine Learning Radiomics Can Predict HER2 Expression Level and Pathologic Response after Neoadjuvant Therapy in HER2 Overexpressing Breast Cancer. EBioMedicine 2020, 61, 103042. [Google Scholar] [CrossRef]

- Bian, T.; Wu, Z.; Lin, Q.; Wang, H.; Ge, Y.; Duan, S.; Fu, G.; Cui, C.; Su, X. Radiomic Signatures Derived from Multiparametric MRI for the Pretreatment Prediction of Response to Neoadjuvant Chemotherapy in Breast Cancer. Br. J. Radiol. 2020, 93, 20200287. [Google Scholar] [CrossRef] [PubMed]

- Altoe, M.L.; Kalinsky, K.; Marone, A.; Kim, H.K.; Guo, H.; Hibshoosh, H.; Tejada, M.; Crew, K.D.; Accordino, M.K.; Trivedi, M.S.; et al. Changes in Diffuse Optical Tomography Images During Early Stages of Neoadjuvant Chemotherapy Correlate with Tumor Response in Different Breast Cancer Subtypes. Clin. Cancer Res 2021, 27, 1949–1957. [Google Scholar] [CrossRef] [PubMed]

- Tahmassebi, A.; Wengert, G.J.; Helbich, T.H.; Bago-Horvath, Z.; Alaei, S.; Bartsch, R.; Dubsky, P.; Baltzer, P.; Clauser, P.; Kapetas, P.; et al. Impact of Machine Learning With Multiparametric Magnetic Resonance Imaging of the Breast for Early Prediction of Response to Neoadjuvant Chemotherapy and Survival Outcomes in Breast Cancer Patients. Investig. Radiol. 2019, 54, 110–117. [Google Scholar] [CrossRef] [PubMed]

- Taghipour, M.; Wray, R.; Sheikhbahaei, S.; Wright, J.L.; Subramaniam, R.M. FDG Avidity and Tumor Burden: Survival Outcomes for Patients With Recurrent Breast Cancer. AJR. Am. J. Roentgenol. 2016, 206, 846–855. [Google Scholar] [CrossRef]

- Shia, W.-C.; Huang, Y.-L.; Wu, H.-K.; Chen, D.-R. Using Flow Characteristics in Three-Dimensional Power Doppler Ultrasound Imaging to Predict Complete Responses in Patients Undergoing Neoadjuvant Chemotherapy. J. Ultrasound Med. 2017, 36, 887–900. [Google Scholar] [CrossRef]

- O’Flynn, E.A.M.; Collins, D.; D’Arcy, J.; Schmidt, M.; de Souza, N.M. Multi-Parametric MRI in the Early Prediction of Response to Neo-Adjuvant Chemotherapy in Breast Cancer: Value of Non-Modelled Parameters. Eur. J. Radiol. 2016, 85, 837–842. [Google Scholar] [CrossRef]

- Lo, W.-C.; Li, W.; Jones, E.F.; Newitt, D.C.; Kornak, J.; Wilmes, L.J.; Esserman, L.J.; Hylton, N.M. Effect of Imaging Parameter Thresholds on MRI Prediction of Neoadjuvant Chemotherapy Response in Breast Cancer Subtypes. PLoS ONE 2016, 11, e0142047. [Google Scholar] [CrossRef]

- Liu, Z.; Li, Z.; Qu, J.; Zhang, R.; Zhou, X.; Li, L.; Sun, K.; Tang, Z.; Jiang, H.; Li, H.; et al. Radiomics of Multiparametric MRI for Pretreatment Prediction of Pathologic Complete Response to Neoadjuvant Chemotherapy in Breast Cancer: A Multicenter Study. Clin. Cancer Res. 2019, 25, 3538–3547. [Google Scholar] [CrossRef]

- van Griethuysen, J.J.M.; Lambregts, D.M.J.; Trebeschi, S.; Lahaye, M.J.; Bakers, F.C.H.; Vliegen, R.F.A.; Beets, G.L.; Aerts, H.J.W.L.; Beets-Tan, R.G.H. Radiomics Performs Comparable to Morphologic Assessment by Expert Radiologists for Prediction of Response to Neoadjuvant Chemoradiotherapy on Baseline Staging MRI in Rectal Cancer. Abdom. Radiol. 2020, 45, 632–643. [Google Scholar] [CrossRef]

- Schurink, N.W.; Min, L.A.; Berbee, M.; van Elmpt, W.; van Griethuysen, J.J.M.; Bakers, F.C.H.; Roberti, S.; van Kranen, S.R.; Lahaye, M.J.; Maas, M.; et al. Value of Combined Multiparametric MRI and FDG-PET/CT to Identify Well-Responding Rectal Cancer Patients before the Start of Neoadjuvant Chemoradiation. Eur. Radiol. 2020, 30, 2945–2954. [Google Scholar] [CrossRef]

- Liang, C.-Y.; Chen, M.-D.; Zhao, X.-X.; Yan, C.-G.; Mei, Y.-J.; Xu, Y.-K. Multiple Mathematical Models of Diffusion-Weighted Magnetic Resonance Imaging Combined with Prognostic Factors for Assessing the Response to Neoadjuvant Chemotherapy and Radiation Therapy in Locally Advanced Rectal Cancer. Eur. J. Radiol. 2019, 110, 249–255. [Google Scholar] [CrossRef]

- Delli Pizzi, A.; Chiarelli, A.M.; Chiacchiaretta, P.; d’Annibale, M.; Croce, P.; Rosa, C.; Mastrodicasa, D.; Trebeschi, S.; Lambregts, D.M.J.; Caposiena, D.; et al. MRI-Based Clinical-Radiomics Model Predicts Tumor Response before Treatment in Locally Advanced Rectal Cancer. Sci. Rep. 2021, 11, 5379. [Google Scholar] [CrossRef]

- Bulens, P.; Couwenberg, A.; Haustermans, K.; Debucquoy, A.; Vandecaveye, V.; Philippens, M.; Zhou, M.; Gevaert, O.; Intven, M. Development and Validation of an MRI-Based Model to Predict Response to Chemoradiotherapy for Rectal Cancer. Radiother. Oncol 2018, 126, 437–442. [Google Scholar] [CrossRef]

- Zhuang, Z.; Liu, Z.; Li, J.; Wang, X.; Xie, P.; Xiong, F.; Hu, J.; Meng, X.; Huang, M.; Deng, Y.; et al. Radiomic Signature of the FOWARC Trial Predicts Pathological Response to Neoadjuvant Treatment in Rectal Cancer. J. Transl. Med. 2021, 19, 256. [Google Scholar] [CrossRef]

- Shaish, H.; Aukerman, A.; Vanguri, R.; Spinelli, A.; Armenta, P.; Jambawalikar, S.; Makkar, J.; Bentley-Hibbert, S.; Del Portillo, A.; Kiran, R.; et al. Radiomics of MRI for Pretreatment Prediction of Pathologic Complete Response, Tumor Regression Grade, and Neoadjuvant Rectal Score in Patients with Locally Advanced Rectal Cancer Undergoing Neoadjuvant Chemoradiation: An International Multicenter Study. Eur. Radiol. 2020, 30, 6263–6273. [Google Scholar] [CrossRef]

- Liu, Y.; Zhang, F.-J.; Zhao, X.-X.; Yang, Y.; Liang, C.-Y.; Feng, L.-L.; Wan, X.-B.; Ding, Y.; Zhang, Y.-W. Development of a Joint Prediction Model Based on Both the Radiomics and Clinical Factors for Predicting the Tumor Response to Neoadjuvant Chemoradiotherapy in Patients with Locally Advanced Rectal Cancer. Cancer Manag. Res. 2021, 13, 3235–3246. [Google Scholar] [CrossRef]

- Bibault, J.; Giraud, P.; Housset, M.; Durdux, C.; Taieb, J.; Berger, A.; Coriat, R.; Chaussade, S.; Dousset, B.; Nordlinger, B.; et al. Deep Learning and Radiomics Predict Complete Response after Neo-Adjuvant Chemoradiation for Locally Advanced Rectal Cancer. Sci. Rep. 2018, 8, 12611. [Google Scholar] [CrossRef]

- Meng, Y.; Zhang, Y.; Dong, D.; Li, C.; Liang, X.; Zhang, C.; Wan, L.; Zhao, X.; Xu, K.; Zhou, C.; et al. Novel Radiomic Signature as a Prognostic Biomarker for Locally Advanced Rectal Cancer. J. Magn. Reson. Imaging 2018, 48, 605–614. [Google Scholar] [CrossRef]

- Schurink, N.W.; van Kranen, S.R.; Berbee, M.; van Elmpt, W.; Bakers, F.C.H.; Roberti, S.; van Griethuysen, J.J.M.; Min, L.A.; Lahaye, M.J.; Maas, M.; et al. Studying Local Tumour Heterogeneity on MRI and FDG-PET/CT to Predict Response to Neoadjuvant Chemoradiotherapy in Rectal Cancer. Eur. Radiol. 2021, 31, 7031–7038. [Google Scholar] [CrossRef]

- Shi, L.; Zhang, Y.; Nie, K.; Sun, X.; Niu, T.; Yue, N.; Kwong, T.; Chang, P.; Chow, D.; Chen, J.-H.; et al. Machine Learning for Prediction of Chemoradiation Therapy Response in Rectal Cancer Using Pre-Treatment and Mid-Radiation Multi-Parametric MRI. Magn. Reson. Imaging 2019, 61, 33–40. [Google Scholar] [CrossRef]

- Wan, L.; Zhang, C.; Zhao, Q.; Meng, Y.; Zou, S.; Yang, Y.; Liu, Y.; Jiang, J.; Ye, F.; Ouyang, H.; et al. Developing a Prediction Model Based on MRI for Pathological Complete Response after Neoadjuvant Chemoradiotherapy in Locally Advanced Rectal Cancer. Abdom. Radiol. 2019, 44, 2978–2987. [Google Scholar] [CrossRef]

- Liu, Z.; Zhang, X.-Y.; Shi, Y.-J.; Wang, L.; Zhu, H.-T.; Tang, Z.; Wang, S.; Li, X.-T.; Tian, J.; Sun, Y.-S. Radiomics Analysis for Evaluation of Pathological Complete Response to Neoadjuvant Chemoradiotherapy in Locally Advanced Rectal Cancer. Clin. Cancer Res. 2017, 23, 7253–7262. [Google Scholar] [CrossRef]

- Kassam, Z.; Burgers, K.; Walsh, J.C.; Lee, T.Y.; Leong, H.S.; Fisher, B. A Prospective Feasibility Study Evaluating the Role of Multimodality Imaging and Liquid Biopsy for Response Assessment in Locally Advanced Rectal Carcinoma. Abdom. Radiol. 2019, 44, 3641–3651. [Google Scholar] [CrossRef]

- Zheng, Y.; Huang, Y.; Bi, G.; Chen, Z.; Lu, T.; Xu, S.; Zhan, C.; Wang, Q. Enlarged Mediastinal Lymph Nodes in Computed Tomography Are a Valuable Prognostic Factor in Non-Small Cell Lung Cancer Patients with Pathologically Negative Lymph Nodes. Cancer Manag. Res. 2020, 12, 10875–10886. [Google Scholar] [CrossRef]

- Zhang, N.; Liang, R.; Gensheimer, M.F.; Guo, M.; Zhu, H.; Yu, J.; Diehn, M.; Loo, B.W.J.; Li, R.; Wu, J. Early Response Evaluation Using Primary Tumor and Nodal Imaging Features to Predict Progression-Free Survival of Locally Advanced Non-Small Cell Lung Cancer. Theranostics 2020, 10, 11707–11718. [Google Scholar] [CrossRef]

- van Timmeren, J.E.E.; Leijenaar, R.T.H.H.T.H.H.; van Elmpt, W.; Reymen, B.; Oberije, C.; Monshouwer, R.; Bussink, J.; Brink, C.; Hansen, O.; Lambin, P. Survival Prediction of Non-Small Cell Lung Cancer Patients Using Radiomics Analyses of Cone-Beam CT Images. Radiother. Oncol. 2017, 123, 363–369. [Google Scholar] [CrossRef]

- Tunali, I.; Gray, J.E.; Qi, J.; Abdalah, M.; Jeong, D.K.; Guvenis, A.; Gillies, R.J.; Schabath, M.B. Novel Clinical and Radiomic Predictors of Rapid Disease Progression Phenotypes among Lung Cancer Patients Treated with Immunotherapy: An Early Report. Lung Cancer 2019, 129, 75–79. [Google Scholar] [CrossRef]

- Trebeschi, S.; Drago, S.G.G.; Birkbak, N.J.J.; Kurilova, I.; Cǎlin, A.M.M.; Delli Pizzi, A.; Lalezari, F.; Lambregts, D.M.J.; Rohaan, M.W.W.; Parmar, C.; et al. Predicting Response to Cancer Immunotherapy Using Noninvasive Radiomic Biomarkers. Ann. Oncol. 2019, 30, 998–1004. [Google Scholar] [CrossRef]

- Sun, W.; Jiang, M.; Dang, J.; Chang, P.; Yin, F.-F. Effect of Machine Learning Methods on Predicting NSCLC Overall Survival Time Based on Radiomics Analysis. Radiat. Oncol. 2018, 13, 197. [Google Scholar] [CrossRef]

- Steiger, S.; Arvanitakis, M.; Sick, B.; Weder, W.; Hillinger, S.; Burger, I.A. Analysis of Prognostic Values of Various PET Metrics in Preoperative (18)F-FDG PET for Early-Stage Bronchial Carcinoma for Progression-Free and Overall Survival: Significantly Increased Glycolysis Is a Predictive Factor. J. Nucl. Med. 2017, 58, 1925–1930. [Google Scholar] [CrossRef]

- Soufi, M.; Arimura, H.; Nagami, N. Identification of Optimal Mother Wavelets in Survival Prediction of Lung Cancer Patients Using Wavelet Decomposition-Based Radiomic Features. Med. Phys. 2018, 45, 5116–5128. [Google Scholar] [CrossRef]

- Sharma, A.; Mohan, A.; Bhalla, A.S.; Sharma, M.C.; Vishnubhatla, S.; Das, C.J.; Pandey, A.K.; Sekhar Bal, C.; Patel, C.D.; Sharma, P.; et al. Role of Various Metabolic Parameters Derived From Baseline 18F-FDG PET/CT as Prognostic Markers in Non-Small Cell Lung Cancer Patients Undergoing Platinum-Based Chemotherapy. Clin. Nucl. Med. 2018, 43, e8–e17. [Google Scholar] [CrossRef]

- Seban, R.-D.; Mezquita, L.; Berenbaum, A.; Dercle, L.; Botticella, A.; Le Pechoux, C.; Caramella, C.; Deutsch, E.; Grimaldi, S.; Adam, J.; et al. Baseline Metabolic Tumor Burden on FDG PET/CT Scans Predicts Outcome in Advanced NSCLC Patients Treated with Immune Checkpoint Inhibitors. Eur. J. Nucl. Med. Mol. Imaging 2020, 47, 1147–1157. [Google Scholar] [CrossRef]

- Seban, R.-D.; Assie, J.-B.; Giroux-Leprieur, E.; Massiani, M.-A.; Soussan, M.; Bonardel, G.; Chouaid, C.; Playe, M.; Goldfarb, L.; Duchemann, B.; et al. FDG-PET Biomarkers Associated with Long-Term Benefit from First-Line Immunotherapy in Patients with Advanced Non-Small Cell Lung Cancer. Ann. Nucl. Med. 2020, 34, 968–974. [Google Scholar] [CrossRef]

- Pellegrino, S.; Fonti, R.; Mazziotti, E.; Piccin, L.; Mozzillo, E.; Damiano, V.; Matano, E.; De Placido, S.; Del Vecchio, S. Total Metabolic Tumor Volume by 18F-FDG PET/CT for the Prediction of Outcome in Patients with Non-Small Cell Lung Cancer. Ann. Nucl. Med. 2019, 33, 937–944. [Google Scholar] [CrossRef]

- Yu, W.; Tang, C.; Hobbs, B.P.; Li, X.; Koay, E.J.; Wistuba, I.I.; Sepesi, B.; Behrens, C.; Rodriguez Canales, J.; Parra Cuentas, E.R.; et al. Development and Validation of a Predictive Radiomics Model for Clinical Outcomes in Stage I Non-Small Cell Lung Cancer. Int. J. Radiat. Oncol. Biol. Phys. 2018, 102, 1090–1097. [Google Scholar] [CrossRef]

- Park, S.; Ha, S.; Lee, S.-H.H.S.H.; Paeng, J.C.C.; Keam, B.; Kim, T.M.M.; Kim, D.-W.W.D.W.; Heo, D.S.S. Intratumoral Heterogeneity Characterized by Pretreatment PET in Non-Small Cell Lung Cancer Patients Predicts Progression-Free Survival on EGFR Tyrosine Kinase Inhibitor. PLoS ONE 2018, 13, e0189766. [Google Scholar] [CrossRef]

- Oberije, C.; De Ruysscher, D.; Houben, R.; van de Heuvel, M.; Uyterlinde, W.; Deasy, J.O.O.; Belderbos, J.; Dingemans, A.-M.C.M.C.A.M.C.; Rimner, A.; Din, S.; et al. A Validated Prediction Model for Overall Survival From Stage III Non-Small Cell Lung Cancer: Toward Survival Prediction for Individual Patients. Int. J. Radiat. Oncol. Biol. Phys. 2015, 92, 935–944. [Google Scholar] [CrossRef]

- Lou, B.; Doken, S.; Zhuang, T.; Wingerter, D.; Gidwani, M.; Mistry, N.; Ladic, L.; Kamen, A.; Abazeed, M.E.E.E. An Image-Based Deep Learning Framework for Individualizing Radiotherapy Dose. Lancet Digit. Health 2019, 1, e136–e147. [Google Scholar] [CrossRef]

- Li, H.; Galperin-Aizenberg, M.; Pryma, D.; Simone, C.B., 2nd; Fan, Y. Unsupervised Machine Learning of Radiomic Features for Predicting Treatment Response and Overall Survival of Early Stage Non-Small Cell Lung Cancer Patients Treated with Stereotactic Body Radiation Therapy. Radiother. Oncol. 2018, 129, 218–226. [Google Scholar] [CrossRef]

- Li, H.; Zhang, R.; Wang, S.; Fang, M.; Zhu, Y.; Hu, Z.; Dong, D.; Shi, J.; Tian, J. CT-Based Radiomic Signature as a Prognostic Factor in Stage IV ALK-Positive Non-Small-Cell Lung Cancer Treated With TKI Crizotinib: A Proof-of-Concept Study. Front. Oncol. 2020, 10, 57. [Google Scholar] [CrossRef]

- Lee, J.H.H.; Lee, H.Y.Y.; Ahn, M.-J.J.M.J.; Park, K.; Ahn, J.S.S.; Sun, J.-M.M.J.M.; Lee, K.S.S. Volume-Based Growth Tumor Kinetics as a Prognostic Biomarker for Patients with EGFR Mutant Lung Adenocarcinoma Undergoing EGFR Tyrosine Kinase Inhibitor Therapy: A Case Control Study. Cancer Imaging 2016, 16, 5. [Google Scholar] [CrossRef]

- Kirienko, M.; Cozzi, L.; Antunovic, L.; Lozza, L.; Fogliata, A.; Voulaz, E.; Rossi, A.; Chiti, A.; Sollini, M. Prediction of Disease-Free Survival by the PET/CT Radiomic Signature in Non-Small Cell Lung Cancer Patients Undergoing Surgery. Eur. J. Nucl. Med. Mol. Imaging 2018, 45, 207–217. [Google Scholar] [CrossRef]

- Kim, D.-H.; Jung, J.-H.; Son, S.H.; Kim, C.-Y.; Hong, C.M.; Oh, J.-R.; Jeong, S.Y.; Lee, S.-W.; Lee, J.; Ahn, B.-C. Prognostic Significance of Intratumoral Metabolic Heterogeneity on 18F-FDG PET/CT in Pathological N0 Non-Small Cell Lung Cancer. Clin. Nucl. Med. 2015, 40, 708–714. [Google Scholar] [CrossRef] [PubMed]

- Khorrami, M.; Prasanna, P.; Gupta, A.; Patil, P.; Velu, P.D.; Thawani, R.; Corredor, G.; Alilou, M.; Bera, K.; Fu, P.; et al. Changes in CT Radiomic Features Associated with Lymphocyte Distribution Predict Overall Survival and Response to Immunotherapy in Non-Small Cell Lung Cancer. Cancer Immunol. Res. 2020, 8, 108–119. [Google Scholar] [CrossRef] [PubMed]

- Khorrami, M.; Khunger, M.; Zagouras, A.; Patil, P.; Thawani, R.; Bera, K.; Rajiah, P.; Fu, P.; Velcheti, V.; Madabhushi, A. Combination of Peri- and Intratumoral Radiomic Features on Baseline CT Scans Predicts Response to Chemotherapy in Lung Adenocarcinoma. Radiol. Artif. Intell. 2019, 1, 180012. [Google Scholar] [CrossRef] [PubMed]

- Yousefi, B.; LaRiviere, M.J.; Cohen, E.A.; Buckingham, T.H.; Yee, S.S.; Black, T.A.; Chien, A.L.; Noël, P.; Hwang, W.-T.; Katz, S.I.; et al. Combining Radiomic Phenotypes of Non-Small Cell Lung Cancer with Liquid Biopsy Data May Improve Prediction of Response to EGFR Inhibitors. Sci. Rep. 2021, 11, 9984. [Google Scholar] [CrossRef]

- Khorrami, M.; Jain, P.; Bera, K.; Alilou, M.; Thawani, R.; Patil, P.; Ahmad, U.; Murthy, S.; Stephans, K.; Fu, P.; et al. Predicting Pathologic Response to Neoadjuvant Chemoradiation in Resectable Stage III Non-Small Cell Lung Cancer Patients Using Computed Tomography Radiomic Features. Lung Cancer 2019, 135, 1–9. [Google Scholar] [CrossRef]

- Kamiya, S.; Iwano, S.; Umakoshi, H.; Ito, R.; Shimamoto, H.; Nakamura, S.; Naganawa, S. Computer-Aided Volumetry of Part-Solid Lung Cancers by Using CT: Solid Component Size Predicts Prognosis. Radiology 2018, 287, 1030–1040. [Google Scholar] [CrossRef]

- Kakino, R.; Nakamura, M.; Mitsuyoshi, T.; Shintani, T.; Kokubo, M.; Negoro, Y.; Fushiki, M.; Ogura, M.; Itasaka, S.; Yamauchi, C.; et al. Application and Limitation of Radiomics Approach to Prognostic Prediction for Lung Stereotactic Body Radiotherapy Using Breath-Hold CT Images with Random Survival Forest: A Multi-Institutional Study. Med. Phys. 2020, 47, 4634–4643. [Google Scholar] [CrossRef]

- Jiao, Z.; Li, H.; Xiao, Y.; Aggarwal, C.; Galperin-Aizenberg, M.; Pryma, D.; Simone, C.B. 2nd B. 2nd B. 2nd B. 2nd; Feigenberg, S.J.J.J.J.; Kao, G.D.D.D.D.; Fan, Y. Integration of Risk Survival Measures Estimated From Pre- and Posttreatment Computed Tomography Scans Improves Stratification of Patients With Early-Stage Non-Small Cell Lung Cancer Treated With Stereotactic Body Radiation Therapy. Int. J. Radiat. Oncol. Biol. Phys. 2021, 109, 1647–1656. [Google Scholar] [CrossRef]

- Hyun, S.H.; Ahn, H.K.; Ahn, M.-J.J.; Ahn, Y.C.; Kim, J.; Shim, Y.M.; Choi, J.Y. Volume-Based Assessment With 18F-FDG PET/CT Improves Outcome Prediction for Patients With Stage IIIA-N2 Non-Small Cell Lung Cancer. AJR Am. J. Roentgenol. 2015, 205, 623–628. [Google Scholar] [CrossRef]

- Du, Q.; Baine, M.; Bavitz, K.; McAllister, J.; Liang, X.; Yu, H.; Ryckman, J.; Yu, L.; Jiang, H.; Zhou, S.; et al. Radiomic Feature Stability across 4D Respiratory Phases and Its Impact on Lung Tumor Prognosis Prediction. PLoS ONE 2019, 14, e0216480. [Google Scholar] [CrossRef]

- Domachevsky, L.; Groshar, D.; Galili, R.; Saute, M.; Bernstine, H. Survival Prognostic Value of Morphological and Metabolic Variables in Patients with Stage I and II Non-Small Cell Lung Cancer. Eur. Radiol. 2015, 25, 3361–3367. [Google Scholar] [CrossRef] [PubMed]

- Cui, S.; Ten Haken, R.K.K.K.K.; El Naqa, I. Integrating Multiomics Information in Deep Learning Architectures for Joint Actuarial Outcome Prediction in Non-Small Cell Lung Cancer Patients After Radiation Therapy. Int. J. Radiat. Oncol. Biol. Phys. 2021, 110, 893–904. [Google Scholar] [CrossRef] [PubMed]

- Choe, J.; Lee, S.M.; Do, K.-H.; Lee, J.B.; Lee, S.M.; Lee, J.-G.; Seo, J.B. Prognostic Value of Radiomic Analysis of Iodine Overlay Maps from Dual-Energy Computed Tomography in Patients with Resectable Lung Cancer. Eur. Radiol. 2019, 29, 915–923. [Google Scholar] [CrossRef]

- Buizza, G.; Toma-Dasu, I.; Lazzeroni, M.; Paganelli, C.; Riboldi, M.; Chang, Y.; Smedby, Ö.; Wang, C. Early Tumor Response Prediction for Lung Cancer Patients Using Novel Longitudinal Pattern Features from Sequential PET/CT Image Scans. Phys. Med. 2018, 54, 21–29. [Google Scholar] [CrossRef]

- Yossi, S.; Krhili, S.; Muratet, J.-P.; Septans, A.-L.; Campion, L.; Denis, F. Early Assessment of Metabolic Response by 18F-FDG PET during Concomitant Radiochemotherapy of Non-Small Cell Lung Carcinoma Is Associated with Survival: A Retrospective Single-Center Study. Clin. Nucl. Med. 2015, 40, e215–e221. [Google Scholar] [CrossRef] [PubMed]

- Blanc-Durand, P.; Campedel, L.; Mule, S.; Jegou, S.; Luciani, A.; Pigneur, F.; Itti, E. Prognostic Value of Anthropometric Measures Extracted from Whole-Body CT Using Deep Learning in Patients with Non-Small-Cell Lung Cancer. Eur. Radiol. 2020, 30, 3528–3537. [Google Scholar] [CrossRef] [PubMed]

- Bak, S.H.; Park, H.; Sohn, I.; Lee, S.H.; Ahn, M.-J.; Lee, H.Y. Prognostic Impact of Longitudinal Monitoring of Radiomic Features in Patients with Advanced Non-Small Cell Lung Cancer. Sci. Rep. 2019, 9, 8730. [Google Scholar] [CrossRef] [PubMed]

- Astaraki, M.; Wang, C.; Buizza, G.; Toma-Dasu, I.; Lazzeroni, M.; Smedby, Ö. Early Survival Prediction in Non-Small Cell Lung Cancer from PET/CT Images Using an Intra-Tumor Partitioning Method. Phys. Med. 2019, 60, 58–65. [Google Scholar] [CrossRef]

- Ahn, H.K.; Lee, H.; Kim, S.G.; Hyun, S.H. Pre-Treatment (18)F-FDG PET-Based Radiomics Predict Survival in Resected Non-Small Cell Lung Cancer. Clin. Radiol. 2019, 74, 467–473. [Google Scholar] [CrossRef]

- Yang, D.M.; Palma, D.A.; Kwan, K.; Louie, A.V.; Malthaner, R.; Fortin, D.; Rodrigues, G.B.; Yaremko, B.P.; Laba, J.; Gaede, S.; et al. Predicting Pathological Complete Response (PCR) after Stereotactic Ablative Radiation Therapy (SABR) of Lung Cancer Using Quantitative Dynamic [(18)F]FDG PET and CT Perfusion: A Prospective Exploratory Clinical Study. Radiat. Oncol. 2021, 16, 11. [Google Scholar] [CrossRef] [PubMed]

- Yan, M.; Wang, W. Radiomic Analysis of CT Predicts Tumor Response in Human Lung Cancer with Radiotherapy. J. Digit. Imaging 2020, 33, 1401–1403. [Google Scholar] [CrossRef]

- Wu, J.; Lian, C.; Ruan, S.; Mazur, T.R.; Mutic, S.; Anastasio, M.A.; Grigsby, P.W.; Vera, P.; Li, H. Treatment Outcome Prediction for Cancer Patients Based on Radiomics and Belief Function Theory. IEEE Trans. Radiat. Plasma Med. Sci. 2019, 3, 216–224. [Google Scholar] [CrossRef] [PubMed]

- Wang, X.-Y.; Zhao, Y.-F.; Liu, Y.; Yang, Y.-K.; Wu, N. Prognostic Value of Metabolic Variables of [18F]FDG PET/CT in Surgically Resected Stage I Lung Adenocarcinoma. Medicine 2017, 96, e7941. [Google Scholar] [CrossRef] [PubMed]

- Wang, L.; Dong, T.; Xin, B.; Xu, C.; Guo, M.; Zhang, H.; Feng, D.; Wang, X.; Yu, J. Integrative Nomogram of CT Imaging, Clinical, and Hematological Features for Survival Prediction of Patients with Locally Advanced Non-Small Cell Lung Cancer. Eur. Radiol. 2019, 29, 2958–2967. [Google Scholar] [CrossRef]

- Kickingereder, P.; Burth, S.; Wick, A.; Götz, M.; Eidel, O.; Schlemmer, H.P.; Maier-Hein, K.H.; Wick, W.; Bendszus, M.; Radbruch, A.; et al. Radiomic Profiling of Glioblastoma: Identifying an Imaging Predictor of Patient Survival with Improved Performance over Established Clinical and Radiologic Risk Models. Radiology 2016, 280, 880–889. [Google Scholar] [CrossRef]

- Chaddad, A.; Desrosiers, C.; Hassan, L.; Tanougast, C. A Quantitative Study of Shape Descriptors from Glioblastoma Multiforme Phenotypes for Predicting Survival Outcome. Br. J. Radiol. 2016, 89, 20160575. [Google Scholar] [CrossRef] [PubMed]

- Shboul, Z.A.; Alam, M.; Vidyaratne, L.; Pei, L.; Elbakary, M.I.; Iftekharuddin, K.M. Feature-Guided Deep Radiomics for Glioblastoma Patient Survival Prediction. Front. Neurosci. 2019, 13, 966. [Google Scholar] [CrossRef]

- Chaddad, A.; Daniel, P.; Sabri, S.; Desrosiers, C.; Abdulkarim, B. Integration of Radiomic and Multi-Omic Analyses Predicts Survival of Newly Diagnosed IDH1 Wild-Type Glioblastoma. Cancers 2019, 11, 1148. [Google Scholar] [CrossRef] [PubMed]

- Vils, A.; Bogowicz, M.; Tanadini-Lang, S.; Vuong, D.; Saltybaeva, N.; Kraft, J.; Wirsching, H.G.; Gramatzki, D.; Wick, W.; Rushing, E.; et al. Radiomic Analysis to Predict Outcome in Recurrent Glioblastoma Based on Multi-Center MR Imaging From the Prospective DIRECTOR Trial. Front. Oncol. 2021, 11. [Google Scholar] [CrossRef] [PubMed]

- Ingrisch, M.; Schneider, M.J.; Nörenberg, D.; De Figueiredo, G.N.; Maier-Hein, K.; Suchorska, B.; Schüller, U.; Albert, N.; Brückmann, H.; Reiser, M.; et al. Radiomic Analysis Reveals Prognostic Information in T1-Weighted Baseline Magnetic Resonance Imaging in Patients With Glioblastoma. Investig. Radiol. 2017, 52, 360–366. [Google Scholar] [CrossRef]

- Sanghani, P.; Ang, B.T.; King, N.K.K.; Ren, H. Regression Based Overall Survival Prediction of Glioblastoma Multiforme Patients Using a Single Discovery Cohort of Multi-Institutional Multi-Channel MR Images. Med. Biol. Eng. Comput. 2019, 57, 1683–1691. [Google Scholar] [CrossRef] [PubMed]

- Kickingereder, P.; Götz, M.; Muschelli, J.; Wick, A.; Neuberger, U.; Shinohara, R.T.; Sill, M.; Nowosielski, M.; Schlemmer, H.-P.; Radbruch, A.; et al. Large-Scale Radiomic Profiling of Recurrent Glioblastoma Identifies an Imaging Predictor for Stratifying Anti-Angiogenic Treatment Response. Clin. Cancer Res. 2016, 22, 5765–5771. [Google Scholar] [CrossRef] [PubMed]

- Fathi Kazerooni, A.; Akbari, H.; Shukla, G.; Badve, C.; Rudie, J.D.; Sako, C.; Rathore, S.; Bakas, S.; Pati, S.; Singh, A.; et al. Cancer Imaging Phenomics via CaPTk: Multi-Institutional Prediction of Progression-Free Survival and Pattern of Recurrence in Glioblastoma. JCO Clin. Cancer Inform. 2020, 4, 234–244. [Google Scholar] [CrossRef] [PubMed]

- Bakas, S.; Shukla, G.; Akbari, H.; Erus, G.; Sotiras, A.; Rathore, S.; Sako, C.; Ha, S.M.; Rozycki, M.; Singh, A.; et al. Integrative Radiomic Analysis for Pre-Surgical Prognostic Stratification of Glioblastoma Patients: From Advanced to Basic MRI Protocols. Proc. SPIE Int. Soc. Opt. Eng. 2020, 11315, 112. [Google Scholar] [CrossRef]

- Ferguson, S.D.; Hodges, T.R.; Majd, N.K.; Alfaro-Munoz, K.; Al-Holou, W.N.; Suki, D.; de Groot, J.F.; Fuller, G.N.; Xue, L.; Li, M.; et al. A Validated Integrated Clinical and Molecular Glioblastoma Long-Term Survival-Predictive Nomogram. Neuro-Oncol. Adv. 2021, 3, vdaa146. [Google Scholar] [CrossRef] [PubMed]

- Pérez-Beteta, J.; Molina-García, D.; Martínez-González, A.; Henares-Molina, A.; Amo-Salas, M.; Luque, B.; Arregui, E.; Calvo, M.; Borrás, J.M.; Martino, J.; et al. Morphological MRI-Based Features Provide Pretreatment Survival Prediction in Glioblastoma. Eur. Radiol. 2019, 29, 1968–1977. [Google Scholar] [CrossRef]

- Li, Q.; Bai, H.; Chen, Y.; Sun, Q.; Liu, L.; Zhou, S.; Wang, G.; Liang, C.; Li, Z.C. A Fully-Automatic Multiparametric Radiomics Model: Towards Reproducible and Prognostic Imaging Signature for Prediction of Overall Survival in Glioblastoma Multiforme. Sci. Rep. 2017, 7, 14331. [Google Scholar] [CrossRef] [PubMed]

- Kim, N.; Chang, J.S.; Wee, C.W.; Kim, I.A.; Chang, J.H.; Lee, H.S.; Kim, S.H.; Kang, S.-G.; Kim, E.H.; Yoon, H.I.; et al. Validation and Optimization of a Web-Based Nomogram for Predicting Survival of Patients with Newly Diagnosed Glioblastoma. Strahlenther. Und Onkol. 2020, 196, 58–69. [Google Scholar] [CrossRef]

- Patel, K.S.; Everson, R.G.; Yao, J.; Raymond, C.; Goldman, J.; Schlossman, J.; Tsung, J.; Tan, C.; Pope, W.B.; Ji, M.S.; et al. Diffusion Magnetic Resonance Imaging Phenotypes Predict Overall Survival Benefit From Bevacizumab or Surgery in Recurrent Glioblastoma With Large Tumor Burden. Neurosurgery 2020, 87, 931–938. [Google Scholar] [CrossRef] [PubMed]

- Chaddad, A.; Daniel, P.; Desrosiers, C.; Toews, M.; Abdulkarim, B. Novel Radiomic Features Based on Joint Intensity Matrices for Predicting Glioblastoma Patient Survival Time. IEEE J. Biomed. Health Inform. 2019, 23, 795–804. [Google Scholar] [CrossRef] [PubMed]

- Rathore, S.; Akbari, H.; Doshi, J.; Shukla, G.; Rozycki, M.; Bilello, M.; Lustig, R.; Davatzikos, C. Radiomic Signature of Infiltration in Peritumoral Edema Predicts Subsequent Recurrence in Glioblastoma: Implications for Personalized Radiotherapy Planning. J. Med. Imaging 2018, 5, 21219. [Google Scholar] [CrossRef] [PubMed]

- Chakhoyan, A.; Woodworth, D.C.; Harris, R.J.; Lai, A.; Nghiemphu, P.L.; Liau, L.M.; Pope, W.B.; Cloughesy, T.F.; Ellingson, B.M. Mono-Exponential, Diffusion Kurtosis and Stretched Exponential Diffusion MR Imaging Response to Chemoradiation in Newly Diagnosed Glioblastoma. J. Neurooncol. 2018, 139, 651–659. [Google Scholar] [CrossRef] [PubMed]

- Pérez-Beteta, J.; Martínez-González, A.; Molina, D.; Amo-Salas, M.; Luque, B.; Arregui, E.; Calvo, M.; Borrás, J.M.; López, C.; Claramonte, M.; et al. Glioblastoma: Does the Pre-Treatment Geometry Matter? A Postcontrast T1 MRI-Based Study. Eur. Radiol. 2017, 27, 1096–1104. [Google Scholar] [CrossRef] [PubMed]

- Sanghani, P.; Ang, B.T.; King, N.K.K.; Ren, H. Overall Survival Prediction in Glioblastoma Multiforme Patients from Volumetric, Shape and Texture Features Using Machine Learning. Surg. Oncol. 2018, 27, 709–714. [Google Scholar] [CrossRef] [PubMed]

- Chang, K.; Zhang, B.; Guo, X.; Zong, M.; Rahman, R.; Sanchez, D.; Winder, N.; Reardon, D.A.; Zhao, B.; Wen, P.Y.; et al. Multimodal Imaging Patterns Predict Survival in Recurrent Glioblastoma Patients Treated with Bevacizumab. Neuro. Oncol. 2016, 18, 1680–1687. [Google Scholar] [CrossRef] [PubMed]

- Chaddad, A.; Sabri, S.; Niazi, T.; Abdulkarim, B. Prediction of Survival with Multi-Scale Radiomic Analysis in Glioblastoma Patients. Med. Biol. Eng. Comput. 2018, 56, 2287–2300. [Google Scholar] [CrossRef]

- Tan, Y.; Mu, W.; Wang, X.-C.; Yang, G.-Q.; Gillies, R.J.; Zhang, H. Improving Survival Prediction of High-Grade Glioma via Machine Learning Techniques Based on MRI Radiomic, Genetic and Clinical Risk Factors. Eur. J. Radiol. 2019, 120, 108609. [Google Scholar] [CrossRef]

- Kickingereder, P.; Neuberger, U.; Bonekamp, D.; Piechotta, P.L.; Götz, M.; Wick, A.; Sill, M.; Kratz, A.; Shinohara, R.T.; Jones, D.T.W.; et al. Radiomic Subtyping Improves Disease Stratification beyond Key Molecular, Clinical, and Standard Imaging Characteristics in Patients with Glioblastoma. Neuro. Oncol. 2018, 20, 848–857. [Google Scholar] [CrossRef] [PubMed]

- Pérez-Beteta, J.; Molina-García, D.; Ortiz-Alhambra, J.A.; Fernández-Romero, A.; Luque, B.; Arregui, E.; Calvo, M.; Borrás, J.M.; Meléndez, B.; Rodríguez de Lope, Á.; et al. Tumor Surface Regularity at MR Imaging Predicts Survival and Response to Surgery in Patients with Glioblastoma. Radiology 2018, 288, 218–225. [Google Scholar] [CrossRef] [PubMed]

- Choi, Y.; Ahn, K.-J.; Nam, Y.; Jang, J.; Shin, N.-Y.; Choi, H.S.; Jung, S.-L.; Kim, B.-S. Analysis of Heterogeneity of Peritumoral T2 Hyperintensity in Patients with Pretreatment Glioblastoma: Prognostic Value of MRI-Based Radiomics. Eur. J. Radiol. 2019, 120, 108642. [Google Scholar] [CrossRef] [PubMed]

- Wijethilake, N.; Islam, M.; Ren, H. Radiogenomics Model for Overall Survival Prediction of Glioblastoma. Med. Biol. Eng. Comput. 2020, 58, 1767–1777. [Google Scholar] [CrossRef]

- Carles, M.; Popp, I.; Starke, M.M.M.; Mix, M.; Urbach, H.; Schimek-Jasch, T.; Eckert, F.; Niyazi, M.; Baltas, D.; Grosu, A.L. FET-PET Radiomics in Recurrent Glioblastoma: Prognostic Value for Outcome after Re-Irradiation? Radiat. Oncol. 2021, 16, 46. [Google Scholar] [CrossRef] [PubMed]

- Beig, N.; Bera, K.; Prasanna, P.; Antunes, J.; Correa, R.; Singh, S.; Saeed Bamashmos, A.; Ismail, M.; Braman, N.; Verma, R.; et al. Radiogenomic-Based Survival Risk Stratification of Tumor Habitat on Gd-T1w MRI Is Associated with Biological Processes in Glioblastoma. Clin. Cancer Res. 2020, 26, 1866–1876. [Google Scholar] [CrossRef] [PubMed]

- Choi, Y.; Nam, Y.; Jang, J.; Shin, N.-Y.; Lee, Y.S.; Ahn, K.-J.; Kim, B.-S.; Park, J.-S.; Jeon, S.-S.; Hong, Y.G. Radiomics May Increase the Prognostic Value for Survival in Glioblastoma Patients When Combined with Conventional Clinical and Genetic Prognostic Models. Eur. Radiol. 2021, 31, 2084–2093. [Google Scholar] [CrossRef]

- Lundemann, M.; Munck Af Rosenschöld, P.; Muhic, A.; Larsen, V.A.; Poulsen, H.S.; Engelholm, S.A.; Andersen, F.L.; Kjær, A.; Larsson, H.B.W.; Law, I.; et al. Feasibility of Multi-Parametric PET and MRI for Prediction of Tumour Recurrence in Patients with Glioblastoma. Eur. J. Nucl. Med. Mol. Imaging 2019, 46, 603–613. [Google Scholar] [CrossRef]

- Horvat, N.; Rocha, C.C.T.; Oliveira, B.C.; Petkovska, I.; Gollub, M.J. MRI of Rectal Cancer: Tumor Staging, Imaging Techniques, and Management. Radiographics 2019, 39, 367–387. [Google Scholar] [CrossRef]

- Weller, M.; van den Bent, M.; Preusser, M.; Le Rhun, E.; Tonn, J.C.; Minniti, G.; Bendszus, M.; Balana, C.; Chinot, O.; Dirven, L.; et al. EANO Guidelines on the Diagnosis and Treatment of Diffuse Gliomas of Adulthood. Nat. Rev. Clin. Oncol. 2021, 18, 170–186. [Google Scholar] [CrossRef] [PubMed]

- Medical Device Development Tools (MDDT). FDA. Available online: https://www.fda.gov/medical-devices/science-and-research-medical-devices/medical-device-development-tools-mddt (accessed on 26 April 2022).

- Medical Devices. European Medicines Agency. Available online: https://www.ema.europa.eu/en/human-regulatory/overview/medical-devices (accessed on 26 April 2022).

- van Timmeren, J.E.; Cester, D.; Tanadini-Lang, S.; Alkadhi, H.; Baessler, B. Radiomics in Medical Imaging—“How-to” Guide and Critical Reflection. Insights Imaging 2020, 11, 1–16. [Google Scholar] [CrossRef] [PubMed]

- Işin, A.; Direkoǧlu, C.; Şah, M. Review of MRI-Based Brain Tumor Image Segmentation Using Deep Learning Methods. Procedia Comput. Sci. 2016, 102, 317–324. [Google Scholar] [CrossRef]

- Gatta, R.; Depeursinge, A.; Ratib, O.; Michielin, O.; Leimgruber, A. Integrating Radiomics into Holomics for Personalised Oncology: From Algorithms to Bedside. Eur. Radiol. Exp. 2020, 4. [Google Scholar] [CrossRef]

- Corrias, G.; Micheletti, G.; Barberini, L.; Suri, J.S.; Saba, L. Texture Analysis Imaging “What a Clinical Radiologist Needs to Know”. Eur. J. Radiol. 2022, 146. [Google Scholar] [CrossRef]

- Bhinder, B.; Gilvary, C.; Madhukar, N.S.; Elemento, O. Artificial Intelligence in Cancer Research and Precision Medicine. Cancer Discov. 2021, 11, 900. [Google Scholar] [CrossRef]

- Collins, G.S.; Reitsma, J.B.; Altman, D.G.; Moons, K.G.M. Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis (TRIPOD): The TRIPOD Statement. BMC Med. 2015, 13, 1–10. [Google Scholar] [CrossRef]

- Papanikolaou, N.; Matos, C.; Koh, D.M. How to Develop a Meaningful Radiomic Signature for Clinical Use in Oncologic Patients. Cancer Imaging 2020, 20, 1–10. [Google Scholar] [CrossRef]

- Litière, S.; Isaac, G.; De Vries, E.G.E.; Bogaerts, J.; Chen, A.; Dancey, J.; Ford, R.; Gwyther, S.; Hoekstra, O.; Huang, E.; et al. RECIST 1.1 for Response Evaluation Apply Not Only to Chemotherapy-Treated Patients But Also to Targeted Cancer Agents: A Pooled Database Analysis. J. Clin. Oncol. 2019, 37, 1102–1110. [Google Scholar] [CrossRef]

- Clark, K.; Vendt, B.; Smith, K.; Freymann, J.; Kirby, J.; Koppel, P.; Moore, S.; Phillips, S.; Maffitt, D.; Pringle, M.; et al. The Cancer Imaging Archive (TCIA): Maintaining and Operating a Public Information Repository. J. Digit. Imaging 2013, 26, 1045–1057. [Google Scholar] [CrossRef]

- Dercle, L.; Connors, D.E.; Tang, Y.; Adam, S.J.; Gönen, M.; Hilden, P.; Karovic, S.; Maitland, M.; Moskowitz, C.S.; Kelloff, G.; et al. Vol-PACT: A Foundation for the NIH Public-Private Partnership That Supports Sharing of Clinical Trial Data for the Development of Improved Imaging Biomarkers in Oncology. JCO Clin. Cancer Inform. 2018, 2, 1–12. [Google Scholar] [CrossRef]

- Dercle, L.; Zhao, B.; Gönen, M.; Moskowitz, C.S.; Firas, A.; Beylergil, V.; Connors, D.E.; Yang, H.; Lu, L.; Fojo, T.; et al. Early Readout on Overall Survival of Patients With Melanoma Treated With Immunotherapy Using a Novel Imaging Analysis. JAMA Oncol. 2022, 8. [Google Scholar] [CrossRef]

- Lu, L.; Dercle, L.; Zhao, B.; Schwartz, L.H. Deep Learning for the Prediction of Early On-Treatment Response in Metastatic Colorectal Cancer from Serial Medical Imaging. Nat. Commun. 2021, 12. [Google Scholar] [CrossRef]

- Raymond Geis, J.; Brady, A.P.; Wu, C.C.; Spencer, J.; Ranschaert, E.; Jaremko, J.L.; Langer, S.G.; Kitts, A.B.; Birch, J.; Shields, W.F.; et al. Ethics of Artificial Intelligence in Radiology: Summary of the Joint European and North American Multisociety Statement. Radiology 2019, 293, 436–440. [Google Scholar] [CrossRef]

- Hedlund, J.; Eklund, A.; Lundström, C. Key Insights in the AIDA Community Policy on Sharing of Clinical Imaging Data for Research in Sweden. Sci. Data 2020, 7, 1–6. [Google Scholar] [CrossRef] [PubMed]

- Data Sharing Guidelines. Cancer Research UK. Available online: https://www.cancerresearchuk.org/funding-for-researchers/applying-for-funding/policies-that-affect-your-grant/submission-of-a-data-sharing-and-preservation-strategy/data-sharing-guidelines (accessed on 6 September 2022).

| Tumor Type | Total Number of Papers | Proportion with Elements of Prospective Study Design | Proportion with Multicenter Data | Imaging Modality: MRI | Imaging Modality: PET/CT | Imaging Modality: CT | Imaging Modality: Other |
|---|---|---|---|---|---|---|---|
| Breast cancer | 34 | 8 | 8 | 25 | 4 | 2 | 3 * |
| Rectal cancer | 15 | 3 | 6 | 10 | 0 | 1 | 4 ** |
| Lung cancer | 44 | 5 | 11 | 0 | 15 | 29 | 1 *** |
| Glioblastoma | 31 | 6 | 7 | 29 | 0 | 0 | 2 **** |

| Tumor Type and Primary Endpoint | Non-FDA Surrogate Endpoints | Pathology-Based Surrogate Endpoints (+/− RECIST Endpoints) | RECIST-Based Surrogate Endpoints | Survival Endpoints (+/− RECIST Endpoints) | Total |
|---|---|---|---|---|---|
| Breast cancer | 1 | 28 | 4 | 1 | 34 |
| Disease-free survival [21,27,32,47] | 0 | 0 | 4 | 0 | 4 |
| Overall survival [44] | 0 | 0 | 0 | 1 | 1 |
| Pathologic complete response [15,16,17,18,19,20,22,26,28,29,31,33,35,36,37,38,39,40,41,45,46,48] | 0 | 22 | 0 | 0 | 22 |
| Pathologic complete response + Disease-free survival [24,25,30,34,42,43] | 0 | 5 | 0 | 0 | 5 |
| Pathologic complete response + Durable objective overall response rate [34] | 0 | 1 | 0 | 0 | 1 |
| Predictive therapy response [23] | 1 | 0 | 0 | 0 | 1 |
| Rectal cancer | 14 | 0 | 1 | 0 | 15 |
| Disease-free survival [58] | 0 | 0 | 1 | 0 | 1 |
| Pathologic complete response [49,50,51,52,53,54,55,56,57,59,60,61,62,63] | 14 | 0 | 0 | 0 | 14 |
| Lung cancer | 1 | 0 | 17 | 26 | 44 |
| Disease-free survival [83,84,89,102,105] | 0 | 0 | 5 | 0 | 5 |
| Durable objective overall response rate [90,104] | 0 | 0 | 2 | 0 | 2 |
| Overall survival [64,66,69,71,72,76,78,82,85,91,93,94,97,98,100,101,107] | 0 | 0 | 0 | 17 | 17 |
| Overall survival + Progression-free survival [70,73,75,80,86,87] | 0 | 0 | 0 | 6 | 6 |
| Overall survival + Disease-free survival [88,92,96] | 0 | 0 | 0 | 3 | 3 |
| Pathologic complete response [103] | 1 | 0 | 0 | 0 | 1 |
| Progression-free survival [65,67,68,77,79,81,99,106] | 0 | 0 | 8 | 0 | 8 |
| Progression-free survival + Long-term benefit [74] | 0 | 0 | 1 | 0 | 1 |
| Progression-free survival + Radiation pneumonitis [95] | 0 | 0 | 1 | 0 | 1 |
| Glioblastoma | 3 | 0 | 2 | 26 | 31 |
| Associations with biological processes [136] | 1 | 0 | 0 | 0 | 1 |
| Overall survival [109,110,111,113,114,117,118,119,120,121,122,123,125,127,130,132,133,134] | 0 | 0 | 0 | 18 | 18 |
| Overall survival + Progression-free survival [108,115,126,128,129,136,137] | 0 | 0 | 0 | 8 | 8 |
| Progression-free survival [112] | 0 | 0 | 1 | 0 | 1 |
| Progression-free survival + pMGMT status [116] | 0 | 0 | 1 | 0 | 1 |
| Recurrence site [124,138] | 2 | 0 | 0 | 0 | 2 |
| Grand Total | 19 | 28 | 24 | 53 | 124 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Funingana, I.-G.; Piyatissa, P.; Reinius, M.; McCague, C.; Basu, B.; Sala, E. Radiomic and Volumetric Measurements as Clinical Trial Endpoints—A Comprehensive Review. Cancers 2022, 14, 5076. https://doi.org/10.3390/cancers14205076
