Transfer Learning for Adenocarcinoma Classifications in the Transurethral Resection of Prostate Whole-Slide Images

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Clinical Cases and Pathological Records

2.2. Dataset

2.3. Deep Learning Models

2.4. Software and Statistical Analysis

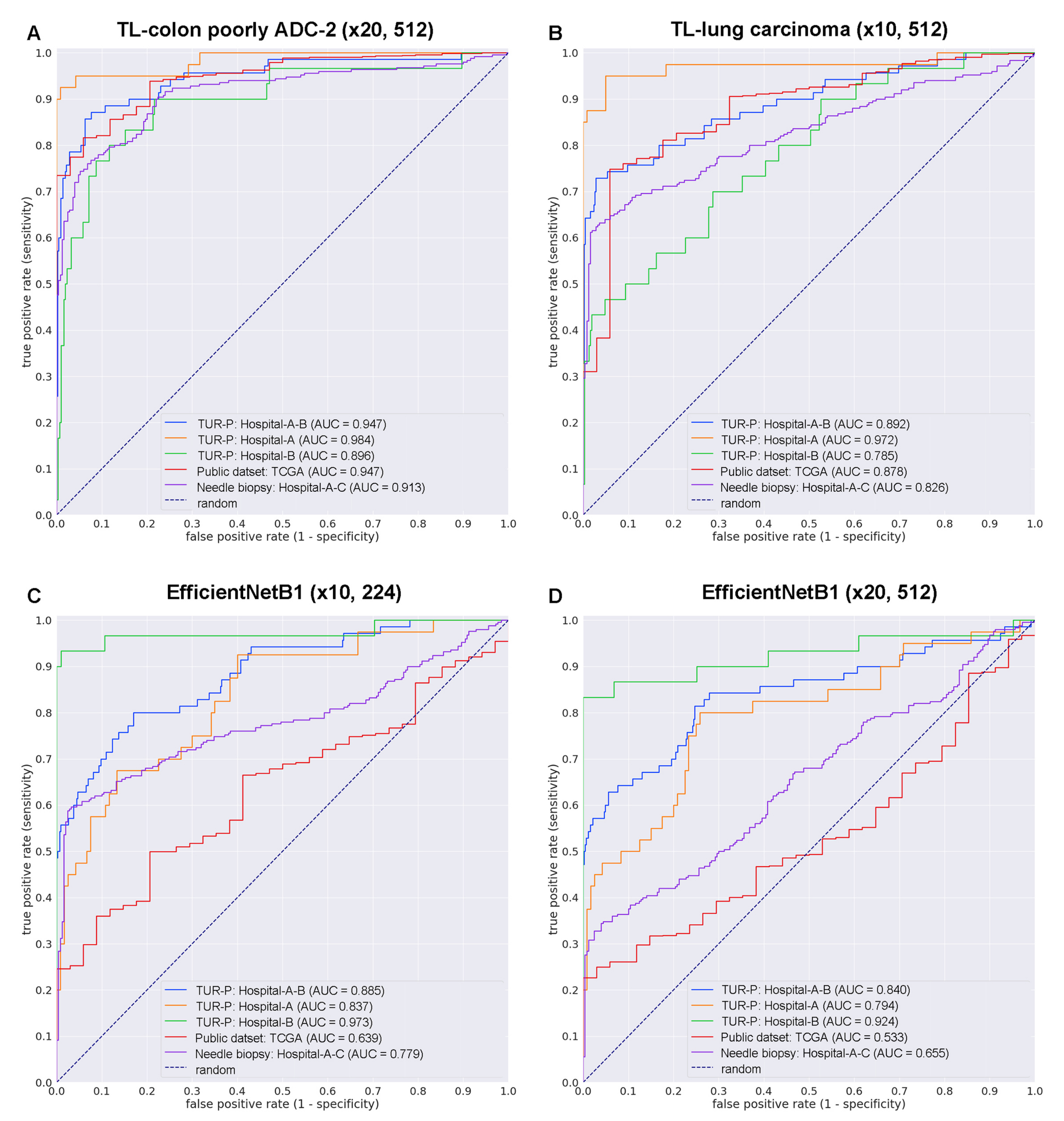

3. Results

3.1. Insufficient AUC Performance of WSI Prostate Adenocarcinoma Evaluation on TUR-P WSIs Using Existing Series of Adenocarcinoma Classification Models

3.2. High AUC Performance of TUR-P WSI Evaluation of Prostate Adenocarcinoma Histopathology Images

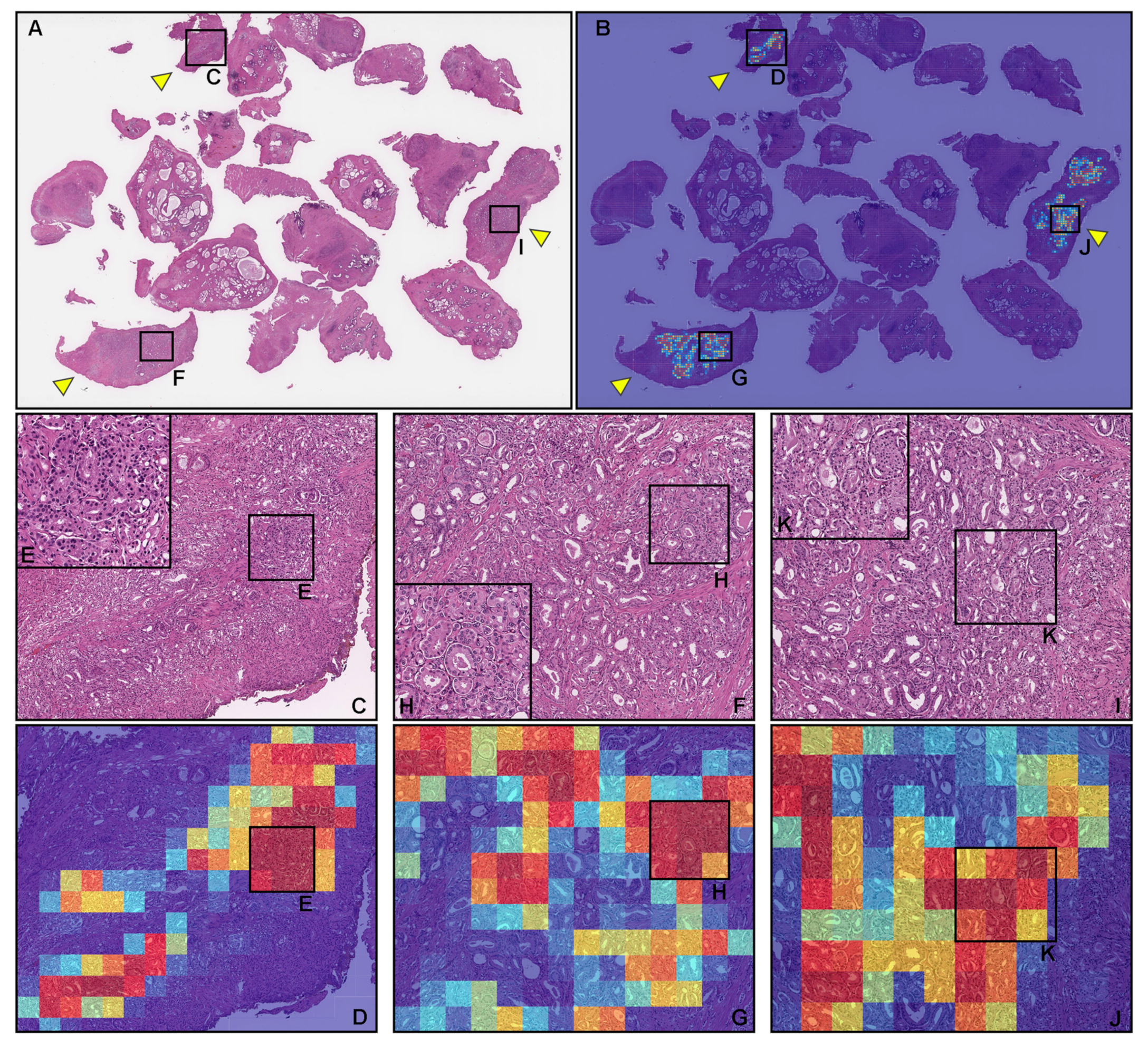

3.3. True Positive Prostate Adenocarcinoma Prediction of TUR-P WSIs

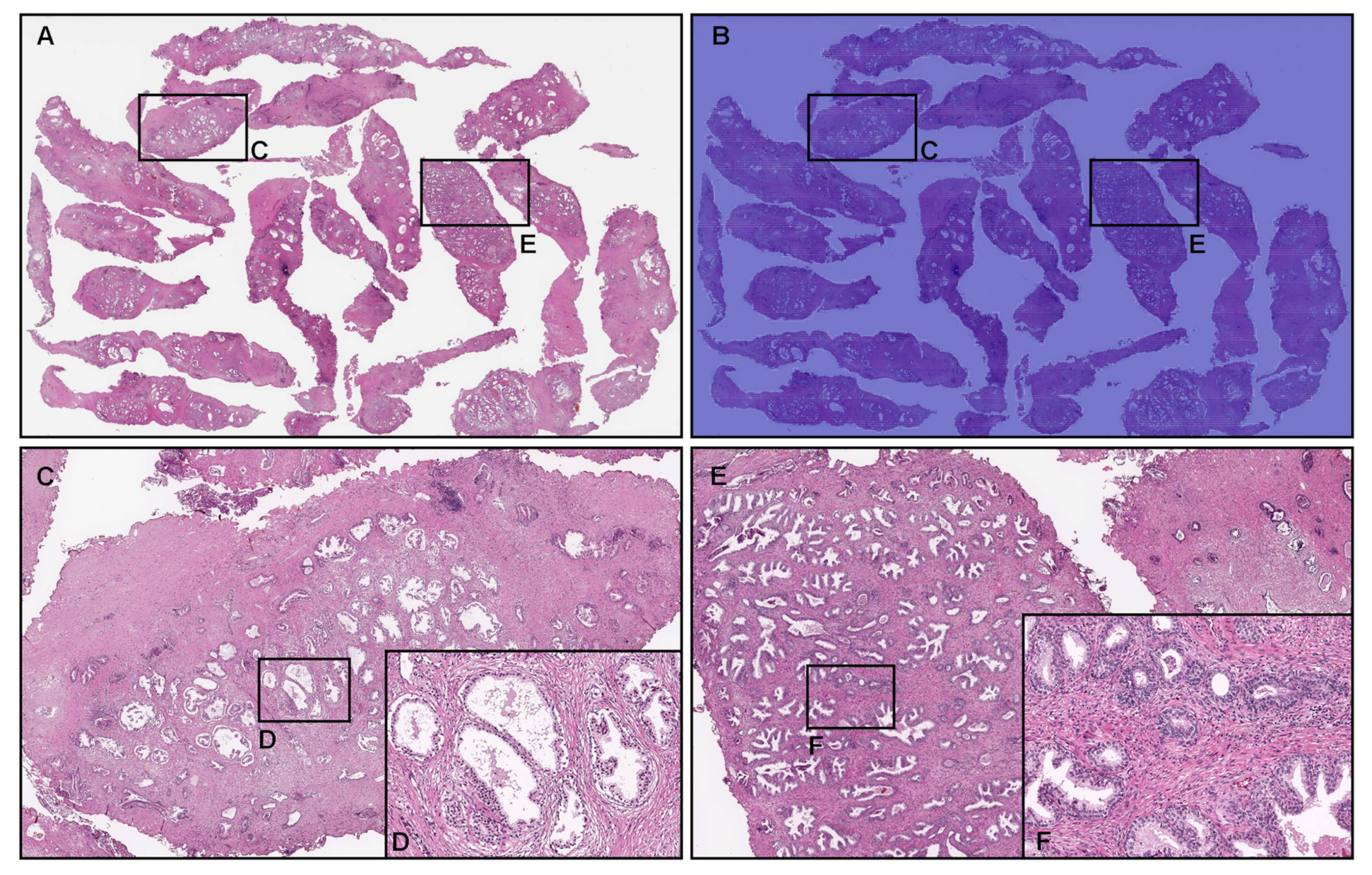

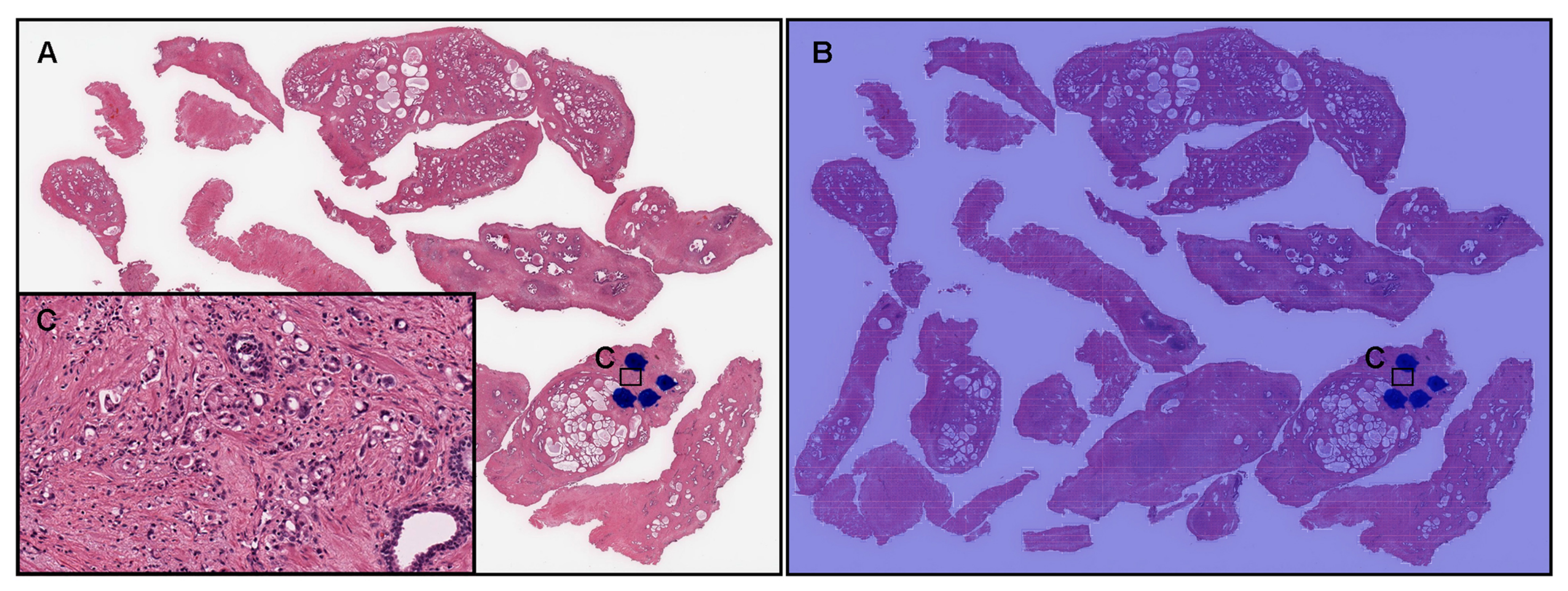

3.4. True Negative Prostate Adenocarcinoma Prediction of TUR-P WSIs

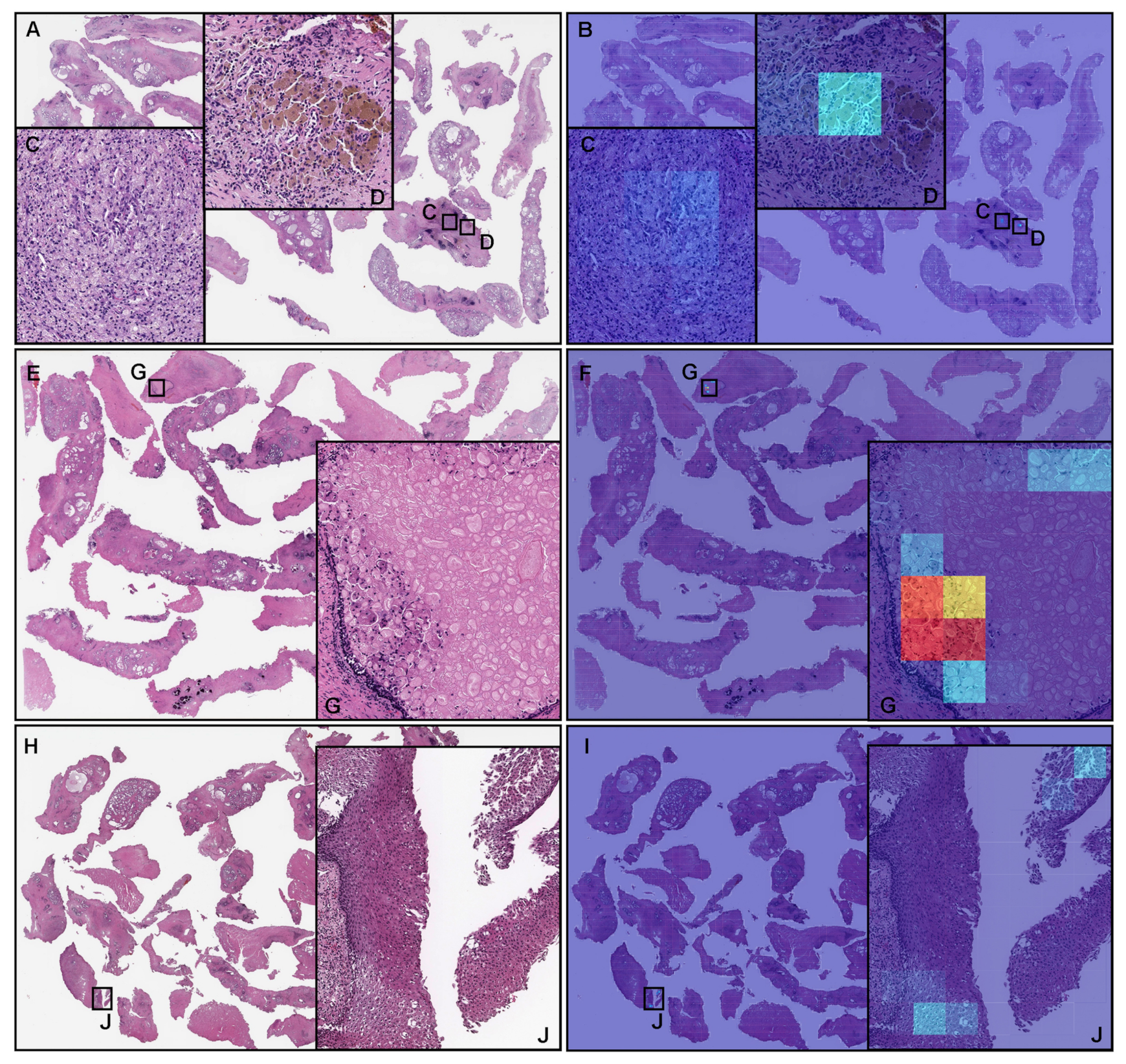

3.5. False Positive Prostate Adenocarcinoma Prediction of TUR-P WSIs

3.6. False Negative Prostate Adenocarcinoma Prediction of TUR-P WSIs

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Set | Source | Adenocarcinoma | Benign | Total |
|---|---|---|---|---|
| Training set | Hospital-A | 59 | 222 | 281 |
| Training set | Hospital-B | 20 | 719 | 739 |
| Validation set | Hospital-A | 10 | 10 | 20 |
| Validation set | Hospital-B | 10 | 10 | 20 |
| Total | | 99 | 961 | 1060 |

| Test set | Source | Adenocarcinoma | Benign | Total |
|---|---|---|---|---|
| TUR-P | Hospital-A–B | 70 | 430 | 500 |
| TUR-P | Hospital-A | 40 | 120 | 160 |
| TUR-P | Hospital-B | 30 | 310 | 340 |
| Public dataset | TCGA | 733 | 34 | 767 |
| Needle biopsy | Hospital-A–C | 250 | 250 | 500 |

| Existing models | ROC-AUC | Log loss |
|---|---|---|
| Breast IDC (×10, 512) | 0.737 [0.664–0.807] | 1.428 [1.340–1.530] |
| Breast IDC, DCIS (×10, 224) | 0.635 [0.565–0.720] | 3.783 [3.624–3.929] |
| Colon ADC, AD (×10, 512) | 0.608 [0.546–0.679] | 3.812 [3.595–4.028] |
| Colon poorly ADC-1 (×20, 512) | 0.780 [0.713–0.840] | 0.863 [0.811–0.913] |
| Colon poorly ADC-2 (×20, 512) | 0.771 [0.681–0.837] | 0.859 [0.890–0.914] |
| Stomach ADC, AD (×10, 512) | 0.762 [0.689–0.833] | 3.133 [2.948–3.268] |
| Stomach poorly ADC (×20, 224) | 0.617 [0.529–0.698] | 1.588 [1.504–1.657] |
| Stomach SRCC (×10, 224) | 0.670 [0.600–0.734] | 0.549 [0.499–0.606] |
| Pancreas EUS-FNA ADC (×10, 224) | 0.808 [0.746–0.888] | 1.080 [1.031–1.142] |
| Lung Carcinoma (×10, 512) | 0.737 [0.662–0.801] | 0.357 [0.298–0.423] |
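
Each ROC-AUC and log-loss value in these tables is followed by a bracketed range. The text here does not state how the ranges were obtained; assuming they are 95% percentile-bootstrap intervals, the calculation might look like the minimal sketch below. The function names, the resampling settings, and the toy labels and scores are all hypothetical and are not taken from the study.

```python
import math
import random


def roc_auc(labels, scores):
    """ROC-AUC as the probability that a positive outscores a negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def log_loss(labels, scores, eps=1e-15):
    """Mean binary cross-entropy; scores are clipped away from 0 and 1."""
    total = 0.0
    for y, s in zip(labels, scores):
        s = min(max(s, eps), 1 - eps)
        total -= y * math.log(s) + (1 - y) * math.log(1 - s)
    return total / len(labels)


def bootstrap_ci(metric, labels, scores, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for metric(labels, scores) (assumed procedure)."""
    if len(set(labels)) < 2:
        raise ValueError("both classes are required")
    rng = random.Random(seed)
    n = len(labels)
    stats = []
    while len(stats) < n_boot:
        # Resample cases with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [labels[i] for i in idx]
        if len(set(ys)) < 2:  # skip resamples that lost one of the classes
            continue
        stats.append(metric(ys, [scores[i] for i in idx]))
    stats.sort()
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# Toy example: 1 = adenocarcinoma, 0 = benign; scores are made-up WSI probabilities.
labels = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.35, 0.4, 0.3, 0.2, 0.1, 0.05]
print("ROC-AUC:", roc_auc(labels, scores), bootstrap_ci(roc_auc, labels, scores))
print("Log loss:", log_loss(labels, scores), bootstrap_ci(log_loss, labels, scores))
```

The pairwise AUC above is quadratic in the number of slides, which is acceptable for test sets of a few hundred WSIs; a rank-based formulation would be preferable for much larger sets.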

| Model | Test set | Source | ROC-AUC | Log loss |
|---|---|---|---|---|
| TL-colon poorly ADC-2 (×20, 512) | TUR-P | Hospital-A–B | 0.947 [0.910–0.976] | 0.191 [0.146–0.242] |
| TL-colon poorly ADC-2 (×20, 512) | TUR-P | Hospital-A | 0.984 [0.956–1.000] | 0.127 [0.076–0.205] |
| TL-colon poorly ADC-2 (×20, 512) | TUR-P | Hospital-B | 0.896 [0.822–0.956] | 0.221 [0.160–0.299] |
| TL-colon poorly ADC-2 (×20, 512) | Public dataset | TCGA | 0.947 [0.922–0.972] | 0.335 [0.288–0.390] |
| TL-colon poorly ADC-2 (×20, 512) | Needle biopsy | Hospital-A–C | 0.913 [0.887–0.939] | 0.587 [0.480–0.700] |
| TL-lung carcinoma (×10, 512) | TUR-P | Hospital-A–B | 0.892 [0.860–0.948] | 0.328 [0.282–0.364] |
| TL-lung carcinoma (×10, 512) | TUR-P | Hospital-A | 0.972 [0.917–0.998] | 0.277 [0.217–0.364] |
| TL-lung carcinoma (×10, 512) | TUR-P | Hospital-B | 0.785 [0.688–0.870] | 0.351 [0.301–0.403] |
| TL-lung carcinoma (×10, 512) | Public dataset | TCGA | 0.878 [0.822–0.929] | 0.258 [0.213–0.299] |
| TL-lung carcinoma (×10, 512) | Needle biopsy | Hospital-A–C | 0.826 [0.786–0.860] | 0.808 [0.702–0.931] |
| EfficientNetB1 (×10, 224) | TUR-P | Hospital-A–B | 0.885 [0.829–0.927] | 0.239 [0.181–0.298] |
| EfficientNetB1 (×10, 224) | TUR-P | Hospital-A | 0.837 [0.752–0.909] | 0.479 [0.318–0.619] |
| EfficientNetB1 (×10, 224) | TUR-P | Hospital-B | 0.973 [0.916–1.000] | 0.126 [0.092–0.168] |
| EfficientNetB1 (×10, 224) | Public dataset | TCGA | 0.639 [0.563–0.716] | 3.800 [3.613–3.977] |
| EfficientNetB1 (×10, 224) | Needle biopsy | Hospital-A–C | 0.779 [0.736–0.822] | 0.659 [0.552–0.769] |
| EfficientNetB1 (×20, 512) | TUR-P | Hospital-A–B | 0.840 [0.767–0.897] | 0.315 [0.269–0.377] |
| EfficientNetB1 (×20, 512) | TUR-P | Hospital-A | 0.794 [0.681–0.872] | 0.451 [0.323–0.601] |
| EfficientNetB1 (×20, 512) | TUR-P | Hospital-B | 0.924 [0.856–0.998] | 0.251 [0.203–0.290] |
| EfficientNetB1 (×20, 512) | Public dataset | TCGA | 0.533 [0.464–0.616] | 2.611 [2.485–2.721] |
| EfficientNetB1 (×20, 512) | Needle biopsy | Hospital-A–C | 0.655 [0.609–0.702] | 1.785 [1.551–1.956] |

| Test set | Source | Accuracy | Sensitivity | Specificity | NPV | PPV |
|---|---|---|---|---|---|---|
| TUR-P | Hospital-A–B | 0.916 [0.892–0.938] | 0.871 [0.794–0.951] | 0.923 [0.897–0.945] | 0.978 [0.963–0.993] | 0.649 [0.550–0.730] |
| TUR-P | Hospital-A | 0.969 [0.938–0.994] | 0.900 [0.800–0.976] | 0.992 [0.974–1.000] | 0.968 [0.928–0.992] | 0.973 [0.909–1.000] |
| TUR-P | Hospital-B | 0.874 [0.841–0.909] | 0.767 [0.613–0.906] | 0.884 [0.848–0.922] | 0.975 [0.955–0.990] | 0.390 [0.275–0.537] |
| Public dataset | TCGA | 0.821 [0.793–0.849] | 0.816 [0.786–0.843] | 0.941 [0.852–1.000] | 0.192 [0.132–0.258] | 0.997 [0.992–1.000] |
| Needle biopsy | Hospital-A–C | 0.844 [0.812–0.874] | 0.764 [0.710–0.813] | 0.924 [0.886–0.956] | 0.797 [0.750–0.840] | 0.910 [0.865–0.946] |
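
The five metrics reported above all derive from a single confusion matrix, so they can be cross-checked against the test-set sizes. As a worked example, the combined TUR-P Hospital-A–B set contains 70 adenocarcinoma and 430 benign WSIs. The counts used below (61 true positives, 9 false negatives, 397 true negatives, 33 false positives) are not reported in the source; they are an assumption inferred from the rounded rates, chosen because they reproduce the reported point estimates. The function name is hypothetical.

```python
def diagnostic_metrics(tp, fn, tn, fp):
    """Return accuracy, sensitivity, specificity, NPV and PPV from confusion-matrix counts."""
    total = tp + fn + tn + fp
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),   # fraction of adenocarcinoma WSIs detected
        "specificity": tn / (tn + fp),   # fraction of benign WSIs correctly cleared
        "npv": tn / (tn + fn),           # reliability of a negative prediction
        "ppv": tp / (tp + fp),           # reliability of a positive prediction
    }


# Assumed counts for TUR-P Hospital-A–B (70 adenocarcinoma, 430 benign WSIs);
# inferred from the rounded rates in the table, not reported by the authors.
metrics = diagnostic_metrics(tp=61, fn=9, tn=397, fp=33)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Under these assumed counts, the gap between the high NPV and the modest PPV follows from class imbalance: benign WSIs outnumber adenocarcinoma WSIs by roughly six to one, so a small false-positive rate still yields a sizeable share of the positive calls.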

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tsuneki, M.; Abe, M.; Kanavati, F. Transfer Learning for Adenocarcinoma Classifications in the Transurethral Resection of Prostate Whole-Slide Images. Cancers 2022, 14, 4744. https://doi.org/10.3390/cancers14194744
