Simple Summary

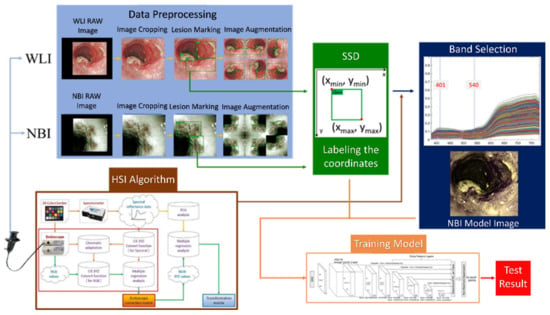

Early esophageal cancer detection is crucial for patient survival; however, even skilled endoscopists find it challenging to identify cancer in its early stages. This research presents a novel approach that integrates hyperspectral imaging (HSI) with band selection and a deep learning diagnostic model, a single-shot multi-box detector, to categorize and identify esophageal cancer. Based on the pathological characteristics of esophageal cancer, the images were categorized into three stages: normal, dysplasia, and squamous cell carcinoma. The mean average precision (mAP) reached 80% for white-light images (WLIs), 85% for narrow-band endoscopic images (NBIs), and 84% for HSI images. These findings show that HSI contains a greater number of spectral characteristics than white-light imaging, which increases accuracy by roughly 5% and brings it in line with NBI predictions.

Abstract

In this study, hyperspectral imaging (HSI) technology with band selection was coupled with color reproduction to simulate narrow-band endoscopic images (NBIs) from white-light images (WLIs). As a result, the blood vessel features in the endoscopic images became more noticeable, and the prediction performance improved. In addition, a single-shot multi-box detector model for predicting the stage and location of esophageal cancer was developed to evaluate the results. A total of 1780 esophageal cancer images, including 845 WLIs and 935 NBIs, were used in this study. The images were divided into three stages based on the pathological features of esophageal cancer: normal, dysplasia, and squamous cell carcinoma. The results showed that the mean average precision (mAP) reached 80% in WLIs, 85% in NBIs, and 84% in HSI images. These results showed that HSI has more spectral features than white-light imagery, improves accuracy by about 5%, and matches the results of NBI predictions.

1. Introduction

Noncommunicable diseases account for 70% of the world’s total deaths [1,2,3]. Among them, malignant tumors, also known as cancers, are the second leading cause of death in the world according to statistics from 2020 [4,5,6]. Esophageal cancer has the sixth highest fatality rate [7,8,9]. Nearly 90% of esophageal carcinogenesis occurs in the mucosal layer, which is composed of multiple layers of squamous epithelial cells; esophageal carcinoma can be divided into two types based on the location of the cancerous cells [10]. The first type is squamous cell carcinoma (SCC), and the other is adenocarcinoma (AC) [11,12]. AC is more common in America and Europe, whereas SCC is more common in Asia, including Japan and Taiwan [13,14]. Esophageal cancer is difficult to identify in its early stages given the lack of evident symptoms [15,16]. By the time esophageal cancer is detected, it has often already reached the second or third stage.

Although the incidence of esophageal cancer is lower than that of other cancer types, the average survival rate is less than 10%, making it a highly fatal disease [17]. If esophageal cancer is detected in its early stages, the five-year survival rate is 90%; if it is detected in the later stages, the five-year survival rate drops below 20% [18,19]. Therefore, detecting esophageal cancer in its early stage is vital to increasing the five-year survival rate. One method of detecting esophageal cancer is through a biosensor; however, most biosensors cannot detect cancer at the early stages [20,21,22,23].

In recent years, artificial intelligence (AI) has been extensively used in medical imaging to detect cancer lesions, and most of the methods perform well [24,25,26,27,28,29]. Horie et al. used convolutional neural networks (CNNs) to detect esophageal cancer, and the sensitivity was as high as 98% [30]. However, the dataset used in that study was considerably small. In 2020, de Groof et al. compared the results of a model for detecting neoplasia in patients with Barrett’s esophagus with the diagnoses made by physicians, and they confirmed that the deep learning model outperformed the physicians [31]. At present, studies on predicting the spectrum of esophageal cancer using hyperspectral imaging (HSI) combined with AI are limited. Most of them use spectrometers for actual measurements. Maktabi et al. collected images of cancer lesions during surgery and measured the spectrum [32]. However, the patient selection in that study was biased toward patients who were already sick.

HSI has previously been used in numerous classification fields, such as agriculture [33], astronomy [34], military [35], biosensors [36], air pollution detection [37,38], remote sensing [39], dental imaging [40], environment monitoring [41], satellite photography [42], cancer detection [43], forestry monitoring [44], food security [45], natural resource surveying [46], vegetation observation [47], and geological mapping [48]. The advantage of HSI lies in its excellent spectral resolution: with a minimum spectral resolution of less than 10 nm, it can be used to obtain more information than RGB images [49]. In addition, the spectral features of different substances are unique, which can provide better identification capability, making the model more accurate for identifying features [50,51]. However, such a large amount of spectral information greatly increases the dimensionality of the data. If the amount of data cannot meet the high-dimensional requirements, the accuracy will decrease. Therefore, dimensionality reduction is very important: spectral redundancy must be removed while retaining important characteristics. The advantage of band selection is that a specific band or subset of bands can be directly selected, thereby preserving most of the spectral information [52].

In this study, HSI (Smart Spectrum Cloud, Hitspectra Intelligent Technology Co., Ltd., Kaohsiung City, Taiwan) was combined with band selection to convert esophageal cancer images into spectral images with reduced dimensions, and color reproduction was used to convert white-light images (WLIs) into HSI images that simulate narrow-band endoscopic images (NBIs). Finally, deep learning was used to classify the images into three categories: normal, dysplasia, and SCC.

2. Materials and Methods

In this study, a total of 1780 esophageal cancer images, including 845 WLIs and 935 NBIs, were used. These images were divided into three categories: normal, dysplasia, and SCC. The WLI category comprised 470 normal, 156 dysplasia, and 219 SCC images, and the NBI category consisted of 425 normal, 290 dysplasia, and 220 SCC images. Images that were blurry, full of mucus, or contained enough bubbles to hinder the identification of lesions were excluded.

First, the endoscopic images were cropped and enlarged to remove the patient information and unnecessary noise from the images. Then, doctors at Kaohsiung Medical University annotated the processed images into the three categories and exported the annotations in the label format required by the model. The dataset was augmented by rotating the images at different angles and cropping them. The spectral information from the WLIs was obtained in the visible-light band (380–780 nm, 401 bands) using the HSI conversion process (Figure 1). A set of 24 color patches was used as the benchmark target. After the spectral information and images of the 24 color patches were obtained with a spectrometer and an endoscope camera, respectively, the two datasets were converted into numerous color spaces to obtain their conversion matrix, and the RGB images were then converted into spectral information.

Figure 1.

Flow chart of spectrum conversion construction using standard 24 color blocks (X-Rite Classic, 24 Color Checkers) as the common target object for spectrum conversion of the endoscope and spectrometer, converting the endoscopic images into 401 bands of the visible-light spectrum information (See Supplementary Figure S4 for the HSI algorithm).

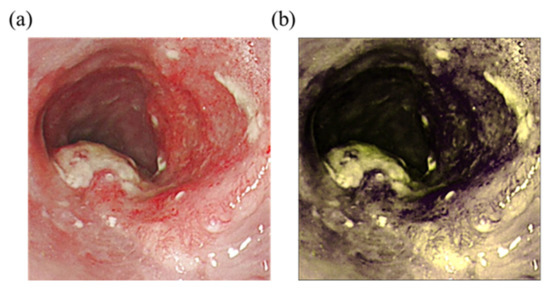
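The idea of fitting a conversion matrix on the 24 color patches can be illustrated with a simplified sketch. It assumes a plain linear least-squares fit from camera RGB values (plus a constant term) to 401-band spectra; the algorithm actually used in this study works through intermediate color spaces and may differ in detail. The patch readings below are synthetic, and the names `add_bias`, `fit_conversion_matrix`, and `rgb_to_spectrum` are hypothetical.

```python
import numpy as np

N_PATCHES = 24                        # color patches on the checker
WAVELENGTHS = np.arange(380, 781)     # 380-780 nm in 1 nm steps -> 401 bands


def add_bias(rgb):
    """Append a constant column so the fit can include an offset term."""
    rgb = np.atleast_2d(rgb)
    return np.hstack([rgb, np.ones((rgb.shape[0], 1))])


def fit_conversion_matrix(patch_rgb, patch_spectra):
    """Least-squares matrix M such that add_bias(patch_rgb) @ M ~ patch_spectra."""
    M, *_ = np.linalg.lstsq(add_bias(patch_rgb), patch_spectra, rcond=None)
    return M                          # shape (4, 401)


def rgb_to_spectrum(rgb, M):
    """Estimate a 401-band spectrum for each RGB pixel (one pixel per row)."""
    return add_bias(rgb) @ M


# Synthetic stand-ins for the endoscope (RGB) and spectrometer (spectra) readings.
rng = np.random.default_rng(0)
patch_rgb = rng.random((N_PATCHES, 3))
true_M = rng.random((4, WAVELENGTHS.size))
patch_spectra = add_bias(patch_rgb) @ true_M

M = fit_conversion_matrix(patch_rgb, patch_spectra)
pixel_spectrum = rgb_to_spectrum([0.6, 0.3, 0.2], M)
print(pixel_spectrum.shape)           # (1, 401)
```

Because the synthetic spectra are generated from a known linear map, the fit recovers that map almost exactly; with real patch measurements the fit would only be approximate.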

Two wavelengths, namely 415 and 540 nm, were selected from the spectral information converted from the image. These wavelengths were selected because light of different wavelengths penetrates tissue to different depths: the longer the wavelength, the deeper the penetration [53]. The light is absorbed differently by heme in blood vessels depending on their depth [54]. As a result, the microvessels in the superficial mucosal tissue appear brown, and blood vessels in the submucosal tissue appear green, creating a strong sense of depth that helps in identifying lesions of the mucosal tissue. Figure 2 compares a WLI with the corresponding HSI image. The red blood vessels in the lesion in Figure 2a were converted to a purplish-red color in Figure 2b, which improved the contrast with the background and allows doctors to observe and diagnose cancer in its early stages more easily.
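As a minimal sketch of the band selection step, the snippet below keeps only the 415 and 540 nm bands of a spectral image with 401 bands (380–780 nm). It shows the dimensionality reduction only; the color reproduction that renders the NBI-like image is not shown. The cube is random placeholder data, and `select_bands` is a hypothetical helper name.

```python
import numpy as np

WAVELENGTHS = np.arange(380, 781)     # nm, 401 bands in 1 nm steps
SELECTED_NM = (415, 540)              # wavelengths named in the text


def select_bands(cube, wavelengths, selected_nm):
    """Keep only the bands of `cube` (H x W x bands) at the requested wavelengths."""
    idx = [int(np.flatnonzero(wavelengths == nm)[0]) for nm in selected_nm]
    return cube[:, :, idx], idx


# Hypothetical 4 x 5 pixel spectral image standing in for a converted WLI.
rng = np.random.default_rng(1)
cube = rng.random((4, 5, WAVELENGTHS.size))

reduced, band_idx = select_bands(cube, WAVELENGTHS, SELECTED_NM)
print(band_idx)         # [35, 160]
print(reduced.shape)    # (4, 5, 2)
```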

Figure 2.

Comparison of WLIs and HSI images. (a) Original WLI; (b) HSI image of the NBI.

The single-shot multi-box detector (SSD) is a single-shot, multi-category object detector built on CNNs [55] (see Supplementary Section S1 for the detailed explanation of SSD). The SSD used in this study had a detection architecture based on the VGG-16 network. VGG-16 is a deep learning architecture with 16 weight layers, consisting of 13 convolutional layers and 3 fully connected layers (see Supplementary Section S2 for the detailed explanation of the model used in this study) [56]. The dataset was divided into three categories, namely WLI, NBI, and HSI endoscopic images, and each was split into a training set and a test set. The training set contained 601 WLI and 711 NBI endoscopic images, and the test set comprised 244 WLIs and 224 NBIs. Because the HSI images were converted from the WLIs, the HSI split was the same as the WLI split. Finally, the three training sets were used to train the SSD model, and the results were evaluated with the corresponding test sets.
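The layer count quoted for VGG-16 can be checked against the standard configuration from Simonyan and Zisserman. The sketch below only counts layers; it does not build or train a network, and the constant and function names are illustrative.

```python
# VGG-16 (configuration D in Simonyan and Zisserman): integers are the output
# channels of 3x3 convolutional layers, "M" marks a 2x2 max-pooling layer.
VGG16_CONV_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
                  512, 512, 512, "M", 512, 512, 512, "M"]
VGG16_FC_CFG = [4096, 4096, 1000]     # fully connected layers (ImageNet head)


def count_weight_layers(conv_cfg, fc_cfg):
    """Count layers with learnable weights; pooling layers are not counted."""
    n_conv = sum(1 for item in conv_cfg if item != "M")
    return n_conv, len(fc_cfg)


n_conv, n_fc = count_weight_layers(VGG16_CONV_CFG, VGG16_FC_CFG)
print(n_conv, n_fc, n_conv + n_fc)    # 13 3 16
```

In the original SSD design, the fully connected layers of VGG-16 are replaced by convolutional layers and extra feature layers are appended; the exact variant used here is described in the Supplementary Materials.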

The results of the prediction models were presented in two ways. First, the predicted boxes were visualized together with the ground truth boxes and the prediction confidence, and the degree of overlap between the predicted and annotated positions was compared. Next, the prediction performance of the models was analyzed with several evaluation indicators: sensitivity, precision, F1-score, kappa value, and average precision (AP). The sensitivity indicates how well the model can detect esophageal cancer. The precision (reported as accuracy in the Results) indicates the proportion of the model’s esophageal cancer diagnoses that correspond to actual cancer. The F1-score is the harmonic mean of precision and sensitivity, and it can be used as a rough indicator of the model performance. The kappa value evaluates the consistency between the predictions and the pathological analysis results and therefore the feasibility of the prediction tool. Its value lies between −1 and 1, and a threshold of 0.6 is often used. AP is a commonly used evaluation index for object detection that combines sensitivity and precision to quantify the overall performance of a prediction model.
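The indicators described above can be sketched in a few lines. The snippet assumes that box overlap is measured by intersection over union (IoU), which is the usual choice for object detection but is not named in the text, and that kappa is Cohen's kappa computed from a confusion matrix. The counts are hypothetical and are not data from this study; the code also assumes non-degenerate inputs (no zero denominators).

```python
def box_iou(box_a, box_b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return inter / (area_a + area_b - inter)


def class_metrics(confusion, k):
    """Precision, sensitivity, and F1 for class k (rows: truth, columns: prediction)."""
    tp = confusion[k][k]
    fn = sum(confusion[k]) - tp
    fp = sum(row[k] for row in confusion) - tp
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return precision, sensitivity, f1


def cohen_kappa(confusion):
    """Agreement between prediction and truth, corrected for chance agreement."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    observed = sum(confusion[k][k] for k in range(n)) / total
    expected = sum(
        sum(confusion[k]) * sum(row[k] for row in confusion) for k in range(n)
    ) / total ** 2
    return (observed - expected) / (1 - expected)


# Hypothetical counts in the order normal, dysplasia, SCC.
confusion = [
    [8, 1, 1],
    [1, 8, 1],
    [1, 1, 8],
]
print(round(cohen_kappa(confusion), 3))                  # 0.7
print(round(box_iou((0, 0, 2, 2), (1, 1, 3, 3)), 3))     # 0.143
```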

3. Results

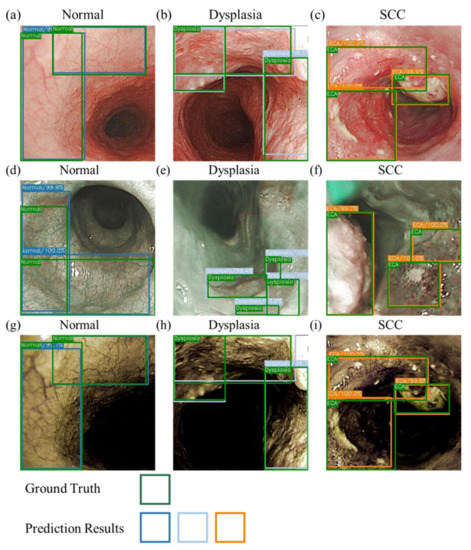

Figure 3 shows examples of the predictions from the three models developed. Table 1 shows the results of the prediction models based on the evaluation criteria. The accuracy, sensitivity, and AP values are listed in the order of normal, dysplasia, and SCC. The accuracies were 84.5%, 84.9%, and 87% for the WLI prediction model; 87.6%, 84%, and 87.4% for the NBI prediction model; and 90.9%, 89.7%, and 89.8% for the HSI prediction model. The sensitivity values were 69.3%, 81.6%, and 81% for the WLI prediction model; 79.2%, 69.7%, and 80.8% for the NBI prediction model; and 71.2%, 92.1%, and 85.6% for the HSI prediction model. The AP values were 75.3%, 81.2%, and 85% for the WLI prediction model; 84.5%, 84.2%, and 86.7% for the NBI prediction model; and 78.9%, 83.6%, and 88.5% for the HSI prediction model. The kappa values for WLI, NBI, and HSI were 0.60, 0.653, and 0.665, respectively.
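The mAP figures quoted in the Abstract follow from the per-category AP values above, assuming that mAP is the unweighted mean over the three categories. A short check, with illustrative names:

```python
# Per-category AP values (%) quoted in the text, in the order normal, dysplasia, SCC.
AP_PERCENT = {
    "WLI": (75.3, 81.2, 85.0),
    "NBI": (84.5, 84.2, 86.7),
    "HSI": (78.9, 83.6, 88.5),
}


def mean_average_precision(ap_values):
    """mAP as the unweighted mean of the per-category AP values."""
    return sum(ap_values) / len(ap_values)


for model, aps in AP_PERCENT.items():
    print(model, round(mean_average_precision(aps), 1))
# WLI 80.5
# NBI 85.1
# HSI 83.7
```

These values are consistent with the approximately 80%, 85%, and 84% reported for WLIs, NBIs, and HSI images.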

Figure 3.

Examples of the prediction results for WLIs, NBIs, and HSI images. (a–c) are the WLI prediction results. (d–f) are the NBI prediction results. (g–i) are the HSI prediction results. (a,d,g) show the normal stage, and the green and blue boxes represent the ground truth and predicted boxes, respectively. (b,e,h) show the dysplasia stage, and the green and gray boxes represent the ground truth and predicted boxes, respectively. (c,f,i) show the SCC stage, and the green and orange boxes represent the ground truth and predicted boxes, respectively.

Table 1.

Predicted performance results of the three developed models.

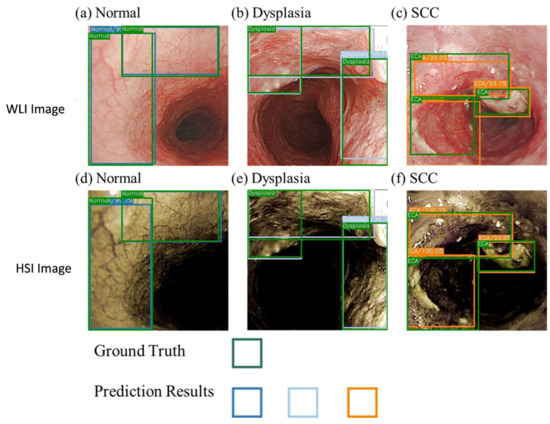

The NBI model performed well in the SCC category and predicted the normal category better than the other two models. This finding may be due to the higher consistency of the blood vessel features in normal NBI images, which is conducive to predicting the bounding boxes. In addition, the dataset had a profound influence on the prediction results. Compared with the WLI model, the accuracy and sensitivity of the HSI model increased greatly. Figure 4 presents a comparison of the prediction results of the WLI and HSI models. The highlighted blood vessel features of the HSI images brought the predicted boxes closer to the ground truth boxes, thereby increasing the confidence level. After the WLIs were converted to HSI images, the indicators in the dysplasia category increased significantly, and the gap with the other evaluation indicators widened, improving the performance of the dysplasia category relative to that of the SCC category.

Figure 4.

Comparison of the WLI and the HSI models.

4. Discussion

Of the three categories in the HSI model, dysplasia and SCC exhibited good performance, whereas the normal category did not. Although the HSI images highlighted the blood vessels in the WLIs, the original WLIs lacked several blood vessel features relative to the NBIs, resulting in incomplete blood vessel features after conversion into spectral images. Thus, NBI performed better in the normal category. In terms of mAP, the HSI method did not obtain the highest result. However, its mAP was lower only because of the normal category, where the NBI method exhibited the highest performance. If only the other two categories, namely dysplasia and SCC, were considered, HSI would have the higher mAP (86.05% versus 85.45% for NBI, based on the AP values in the Results). The HSI method also presented the highest kappa value. All kappa values reached or exceeded the threshold of 0.6, which indicates that the model in this study is feasible for application in esophageal cancer detection. In addition, the performance of WLI was relatively better than that of NBI in the dysplasia category. Logically, the results should be the opposite given the difference between the images of the two datasets. Therefore, the dataset still needs to be improved.

This research showed that the HSI images converted by hyperspectral technology and band selection had an upward trend for all indicators relative to the WLIs, which means that the prediction results improved significantly after the blood vessel features in the WLIs were strengthened. Moreover, the comparison of the WLI and NBI performances indicates that highlighting vascular features can improve the prediction performance. AI-related research using HSI for early esophageal cancer is limited, and most studies use hyperspectral imagers to obtain the actual spectrum and apply different dimensionality reduction methods to the spectral data before training. However, the most suitable dimensionality reduction method for detecting esophageal cancer with HSI has not been found. In addition, even though HSI improved the accuracy of the model significantly, the accuracy can be further improved by enlarging the dataset. In the future, the same model needs to be trained on marginally different samples of the training data to reduce variance without any noticeable effect on bias. Furthermore, the machine learning model best suited to HSI needs to be established by comparing the performance metrics of different machine learning models. Related research on the spectrum of esophageal cancer has not yet yielded clear results, such as whether special features can be used for the imaging of the gastrointestinal tract. In addition, the new method proposed in this study can provide NBI functionality for newer endoscopes, such as capsule endoscopes.
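Training the same model on marginally different samples of the training data, as suggested above, resembles bootstrap aggregating (bagging). The sketch below illustrates that idea under simplifying assumptions: the "model" is a toy predictor that returns the mean of its training targets, standing in for a detector, and the data and function names are hypothetical.

```python
import random
from statistics import mean


def bootstrap_sample(data, rng):
    """Draw len(data) items with replacement, giving a slightly different set."""
    return [rng.choice(data) for _ in range(len(data))]


def fit_mean_model(samples):
    """Toy stand-in for a trained model: it always predicts the sample mean."""
    sample_mean = mean(samples)
    return lambda: sample_mean


def bagged_prediction(data, n_models=25, seed=0):
    """Train one toy model per bootstrap sample and average their predictions."""
    rng = random.Random(seed)
    models = [fit_mean_model(bootstrap_sample(data, rng)) for _ in range(n_models)]
    return mean(model() for model in models)


# Hypothetical training targets (not data from this study).
targets = [0.70, 0.75, 0.80, 0.85, 0.90]
prediction = bagged_prediction(targets)
print(min(targets) <= prediction <= max(targets))    # True
```

Averaging over resampled training sets smooths out the dependence of any single model on its particular sample, which is the variance reduction referred to in the text.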

5. Conclusions

This study provides a new method for converting WLIs into simulated NBIs. Through the combination of HSI and band selection, the characteristics of blood vessels in WLIs can be enhanced, which improves the diagnostic performance for early cancer. For early esophageal cancer, the simulated HSI images increase the accuracy by up to 2% when compared with the traditional WLI. The improvement represents about 2–4% in specificity, whereas the sensitivity improves by 5–10%. This method can therefore reduce the number of instruments needed to create NBIs of the esophagus, thereby making it easier to enter the body and reducing patient discomfort. If the technology of this study can be further developed and combined with new endoscopes, it can provide patients with a more comfortable inspection experience, improve the inspection rate, and possibly enable early detection, thus achieving the purpose of early treatment and reducing mortality.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/cancers14174292/s1: Figure S1. SSD architecture. Figure S2. VGG16 architecture diagram. The red box is the VGG16 part; the yellow box is the pyramid structure. Figure S3. SSD feature map scaling (a) shows the image with a real frame; (b) and (c) are two feature maps of different sizes. Finally, two kinds of results are outputted. Figure S4. Default box matching diagram. Figure S5. The HSI algorithm. Table S1. Number of imaging data of esophageal cancer. Table S2. Classification of imaging data of esophageal cancer. Refs. [57,58] are cited in the Supplementary Materials.

Author Contributions

Conceptualization, T.-J.T., Y.-M.T., Y.-K.W., C.-W.H. and H.-C.W.; data curation, T.-H.C., Y.-S.C., A.M., Y.-M.T. and H.-C.W.; formal analysis, T.-H.C., Y.-K.W., Y.-M.T., I.-C.W., C.-W.H. and Y.-S.C.; funding acquisition, T.-J.T., Y.-S.C. and H.-C.W.; investigation, C.-W.H., Y.-M.T., Y.-S.C., A.M. and H.-C.W.; methodology, T.-J.T., Y.-K.W., Y.-S.C., I.-C.W., A.M., Y.-K.W. and H.-C.W.; project administration, Y.-K.W., C.-W.H., C.-W.H., Y.-S.C. and H.-C.W.; resources, H.-C.W. and T.-H.C.; software, Y.-K.W., T.-H.C., Y.-S.C., I.-C.W. and A.M.; supervision, H.-C.W.; validation, T.-J.T., Y.-S.C., I.-C.W., T.-H.C. and H.-C.W.; writing—original draft, A.M.; writing—review and editing, H.-C.W. and A.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Science and Technology Council, The Republic of China under the grants NSTC 111-2221-E-194-007. This work was financially/partially supported by the Advanced Institute of Manufacturing with High-tech Innovations (AIM-HI) and the Center for Innovative Research on Aging Society (CIRAS) from The Featured Areas Research Center Program within the framework of the Higher Education Sprout Project by the Ministry of Education (MOE), the Ditmanson Medical Foundation Chia-Yi Christian Hospital, and the National Chung Cheng University Joint Research Program (CYCH-CCU-2022-11) in Taiwan.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Institutional Review Board of Kaohsiung Medical University Hospital (KMUH) (KMUHIRB-E(II)-20190376, KMUHIRB-E(I)-20210066).

Informed Consent Statement

Written informed consent was waived in this study because of the retrospective, anonymized nature of the study design.

Data Availability Statement

The data presented in this study are available in this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Allen, L.N.; Wigley, S.; Holmer, H. Implementation of non-communicable disease policies from 2015 to 2020: A geopolitical analysis of 194 countries. Lancet Glob. Health 2021, 9, e1528–e1538.

- Kassa, M.; Grace, J. The global burden and perspectives on non-communicable diseases (NCDs) and the prevention, data availability and systems approach of NCDs in low-resource countries. In Public Health in Developing Countries-Challenges and Opportunities; IntechOpen: London, UK, 2019.

- Lunde, P.; Nilsson, B.B.; Bergland, A.; Kværner, K.J.; Bye, A. The effectiveness of smartphone apps for lifestyle improvement in noncommunicable diseases: Systematic review and meta-analyses. J. Med. Internet Res. 2018, 20, e9751.

- Sung, H.; Ferlay, J.; Siegel, R.L.; Laversanne, M.; Soerjomataram, I.; Jemal, A.; Bray, F. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 2021, 71, 209–249.

- Ferlay, J.; Colombet, M.; Soerjomataram, I.; Parkin, D.M.; Piñeros, M.; Znaor, A.; Bray, F. Cancer statistics for the year 2020: An overview. Int. J. Cancer 2021, 149, 778–789.

- Miller, K.D.; Nogueira, L.; Mariotto, A.B.; Rowland, J.H.; Yabroff, K.R.; Alfano, C.M.; Jemal, A.; Kramer, J.L.; Siegel, R.L. Cancer treatment and survivorship statistics, 2019. CA Cancer J. Clin. 2019, 69, 363–385.

- Chen, R.; Zheng, R.; Zhang, S.; Zeng, H.; Wang, S.; Sun, K.; Gu, X.; Wei, W.; He, J. Analysis of incidence and mortality of esophageal cancer in China, 2015. Chin. J. Prev. Med. 2019, 53, 1094–1097.

- Fan, J.; Liu, Z.; Mao, X.; Tong, X.; Zhang, T.; Suo, C.; Chen, X. Global trends in the incidence and mortality of esophageal cancer from 1990 to 2017. Cancer Med. 2020, 9, 6875–6887.

- sadat Yousefi, M.; Sharifi-Esfahani, M.; Pourgholam-Amiji, N.; Afshar, M.; Sadeghi-Gandomani, H.; Otroshi, O.; Salehiniya, H. Esophageal cancer in the world: Incidence, mortality and risk factors. Biomed. Res. Ther. 2018, 5, 2504–2517.

- D’souza, S.; Addepalli, V. Preventive measures in oral cancer: An overview. Biomed. Pharmacother. 2018, 107, 72–80.

- Pickens, A. Racial Disparities in Esophageal Cancer. Thorac. Surg. Clin. 2022, 32, 57–65.

- Wu, S.-G.; Zhang, W.-W.; Sun, J.-Y.; Li, F.-Y.; Lin, Q.; He, Z.-Y. Patterns of distant metastasis between histological types in esophageal cancer. Front. Oncol. 2018, 8, 302.

- Abnet, C.C.; Arnold, M.; Wei, W.-Q. Epidemiology of esophageal squamous cell carcinoma. Gastroenterology 2018, 154, 360–373.

- Coleman, H.G.; Xie, S.-H.; Lagergren, J. The epidemiology of esophageal adenocarcinoma. Gastroenterology 2018, 154, 390–405.

- Hazama, H.; Tanaka, M.; Kakushima, N.; Yabuuchi, Y.; Yoshida, M.; Kawata, N.; Takizawa, K.; Ito, S.; Imai, K.; Hotta, K. Predictors of technical difficulty during endoscopic submucosal dissection of superficial esophageal cancer. Surg. Endosc. 2019, 33, 2909–2915.

- Mönig, S.; Chevallay, M.; Niclauss, N.; Zilli, T.; Fang, W.; Bansal, A.; Hoeppner, J. Early esophageal cancer: The significance of surgery, endoscopy, and chemoradiation. Ann. N. Y. Acad. Sci. 2018, 1434, 115–123.

- Huang, F.-L.; Yu, S.-J. Esophageal cancer: Risk factors, genetic association, and treatment. Asian J. Surg. 2018, 41, 210–215.

- Then, E.O.; Lopez, M.; Saleem, S.; Gayam, V.; Sunkara, T.; Culliford, A.; Gaduputi, V. Esophageal cancer: An updated surveillance epidemiology and end results database analysis. World J. Oncol. 2020, 11, 55.

- He, H.; Chen, N.; Hou, Y.; Wang, Z.; Zhang, Y.; Zhang, G.; Fu, J. Trends in the incidence and survival of patients with esophageal cancer: A SEER database analysis. Thorac. Cancer 2020, 11, 1121–1128.

- Mukundan, A.; Tsao, Y.-M.; Artemkina, S.B.; Fedorov, V.E.; Wang, H.-C. Growth Mechanism of Periodic-Structured MoS2 by Transmission Electron Microscopy. Nanomaterials 2022, 12, 135.

- Mukundan, A.; Feng, S.-W.; Weng, Y.-H.; Tsao, Y.-M.; Artemkina, S.B.; Fedorov, V.E.; Lin, Y.-S.; Huang, Y.-C.; Wang, H.-C. Optical and Material Characteristics of MoS2/Cu2O Sensor for Detection of Lung Cancer Cell Types in Hydroplegia. Int. J. Mol. Sci. 2022, 23, 4745.

- Tseng, K.-W.; Hsiao, Y.-P.; Jen, C.-P.; Chang, T.-S.; Wang, H.-C. Cu2O/PEDOT:PSS/ZnO Nanocomposite Material Biosensor for Esophageal Cancer Detection. Sensors 2020, 20, 2455.

- Wu, I.C.; Weng, Y.-H.; Lu, M.-Y.; Jen, C.-P.; Fedorov, V.E.; Chen, W.C.; Wu, M.T.; Kuo, C.-T.; Wang, H.-C. Nano-structure ZnO/Cu2O photoelectrochemical and self-powered biosensor for esophageal cancer cell detection. Opt. Express 2017, 25, 7689–7706.

- Fang, Y.-J.; Mukundan, A.; Tsao, Y.-M.; Huang, C.-W.; Wang, H.-C. Identification of Early Esophageal Cancer by Semantic Segmentation. J. Pers. Med. 2022, 12, 1204.

- Huang, L.-M.; Yang, W.-J.; Huang, Z.-Y.; Tang, C.-W.; Li, J. Artificial intelligence technique in detection of early esophageal cancer. World J. Gastroenterol. 2020, 26, 5959.

- Zhang, Y.-H.; Guo, L.-J.; Yuan, X.-L.; Hu, B. Artificial intelligence-assisted esophageal cancer management: Now and future. World J. Gastroenterol. 2020, 26, 5256.

- Rahaman, M.M.; Li, C.; Yao, Y.; Kulwa, F.; Wu, X.; Li, X.; Wang, Q. DeepCervix: A deep learning-based framework for the classification of cervical cells using hybrid deep feature fusion techniques. Comput. Biol. Med. 2021, 136, 104649.

- Li, X.; Chen, L.; Luan, S.; Zhou, J.; Xiao, X.; Yang, Y.; Mao, C.; Fang, P.; Chen, L.; Zeng, X.; et al. The development and progress of nanomedicine for esophageal cancer diagnosis and treatment. Semin. Cancer Biol. 2022, in press.

- Teixeira Farinha, H.; Digklia, A.; Schizas, D.; Demartines, N.; Schäfer, M.; Mantziari, S. Immunotherapy for Esophageal Cancer: State-of-the Art in 2021. Cancers 2022, 14, 554.

- Horie, Y.; Yoshio, T.; Aoyama, K.; Yoshimizu, S.; Horiuchi, Y.; Ishiyama, A.; Hirasawa, T.; Tsuchida, T.; Ozawa, T.; Ishihara, S. Diagnostic outcomes of esophageal cancer by artificial intelligence using convolutional neural networks. Gastrointest. Endosc. 2019, 89, 25–32.

- de Groof, A.J.; Struyvenberg, M.R.; van der Putten, J.; van der Sommen, F.; Fockens, K.N.; Curvers, W.L.; Zinger, S.; Pouw, R.E.; Coron, E.; Baldaque-Silva, F. Deep-learning system detects neoplasia in patients with Barrett’s esophagus with higher accuracy than endoscopists in a multistep training and validation study with benchmarking. Gastroenterology 2020, 158, 915–929.e4.

- Maktabi, M.; Köhler, H.; Ivanova, M.; Jansen-Winkeln, B.; Takoh, J.; Niebisch, S.; Rabe, S.M.; Neumuth, T.; Gockel, I.; Chalopin, C. Tissue classification of oncologic esophageal resectates based on hyperspectral data. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1651–1661.

- Lu, B.; Dao, P.D.; Liu, J.; He, Y.; Shang, J. Recent advances of hyperspectral imaging technology and applications in agriculture. Remote Sens. 2020, 12, 2659.

- Mukundan, A.; Patel, A.; Saraswat, K.D.; Tomar, A.; Kuhn, T. Kalam Rover. In Proceedings of the AIAA SCITECH 2022 Forum, San Diego, CA, USA, 3–7 January 2022; p. 1047.

- Gross, W.; Queck, F.; Vögtli, M.; Schreiner, S.; Kuester, J.; Böhler, J.; Mispelhorn, J.; Kneubühler, M.; Middelmann, W. A multi-temporal hyperspectral target detection experiment: Evaluation of military setups. In Proceedings of the Target and Background Signatures VII, Online, 13–17 September 2021; pp. 38–48.

- Hsiao, Y.-P.; Mukundan, A.; Chen, W.-C.; Wu, M.-T.; Hsieh, S.-C.; Wang, H.-C. Design of a Lab-On-Chip for Cancer Cell Detection through Impedance and Photoelectrochemical Response Analysis. Biosensors 2022, 12, 405.

- Chen, C.-W.; Tseng, Y.-S.; Mukundan, A.; Wang, H.-C. Air Pollution: Sensitive Detection of PM2.5 and PM10 Concentration Using Hyperspectral Imaging. Appl. Sci. 2021, 11, 4543.

- Mukundan, A.; Huang, C.-C.; Men, T.-C.; Lin, F.-C.; Wang, H.-C. Air Pollution Detection Using a Novel Snap-Shot Hyperspectral Imaging Technique. Sensors 2022, 22, 6231.

- Gerhards, M.; Schlerf, M.; Mallick, K.; Udelhoven, T. Challenges and future perspectives of multi-/Hyperspectral thermal infrared remote sensing for crop water-stress detection: A review. Remote Sens. 2019, 11, 1240.

- Lee, C.-H.; Mukundan, A.; Chang, S.-C.; Wang, Y.-L.; Lu, S.-H.; Huang, Y.-C.; Wang, H.-C. Comparative Analysis of Stress and Deformation between One-Fenced and Three-Fenced Dental Implants Using Finite Element Analysis. J. Clin. Med. 2021, 10, 3986.

- Stuart, M.B.; McGonigle, A.J.; Willmott, J.R. Hyperspectral imaging in environmental monitoring: A review of recent developments and technological advances in compact field deployable systems. Sensors 2019, 19, 3071.

- Mukundan, A.; Wang, H.-C. Simplified Approach to Detect Satellite Maneuvers Using TLE Data and Simplified Perturbation Model Utilizing Orbital Element Variation. Appl. Sci. 2021, 11, 10181.

- Tsai, C.-L.; Mukundan, A.; Chung, C.-S.; Chen, Y.-H.; Wang, Y.-K.; Chen, T.-H.; Tseng, Y.-S.; Huang, C.-W.; Wu, I.-C.; Wang, H.-C. Hyperspectral Imaging Combined with Artificial Intelligence in the Early Detection of Esophageal Cancer. Cancers 2021, 13, 4593.

- Vangi, E.; D’Amico, G.; Francini, S.; Giannetti, F.; Lasserre, B.; Marchetti, M.; Chirici, G. The new hyperspectral satellite PRISMA: Imagery for forest types discrimination. Sensors 2021, 21, 1182.

- Zhang, X.; Han, L.; Dong, Y.; Shi, Y.; Huang, W.; Han, L.; González-Moreno, P.; Ma, H.; Ye, H.; Sobeih, T. A deep learning-based approach for automated yellow rust disease detection from high-resolution hyperspectral UAV images. Remote Sens. 2019, 11, 1554.

- Hennessy, A.; Clarke, K.; Lewis, M. Hyperspectral classification of plants: A review of waveband selection generalisability. Remote Sens. 2020, 12, 113.

- Terentev, A.; Dolzhenko, V.; Fedotov, A.; Eremenko, D. Current State of Hyperspectral Remote Sensing for Early Plant Disease Detection: A Review. Sensors 2022, 22, 757.

- De La Rosa, R.; Tolosana-Delgado, R.; Kirsch, M.; Gloaguen, R. Automated Multi-Scale and Multivariate Geological Logging from Drill-Core Hyperspectral Data. Remote Sens. 2022, 14, 2676.

- Khodadadzadeh, M.; Ding, X.; Chaurasia, P.; Coyle, D. A hybrid capsule network for hyperspectral image classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 11824–11839.

- Transon, J.; d’Andrimont, R.; Maugnard, A.; Defourny, P. Survey of hyperspectral earth observation applications from space in the sentinel-2 context. Remote Sens. 2018, 10, 157.

- Khan, M.J.; Khan, H.S.; Yousaf, A.; Khurshid, K.; Abbas, A. Modern trends in hyperspectral image analysis: A review. IEEE Access 2018, 6, 14118–14129.

- Sun, W.; Du, Q. Hyperspectral band selection: A review. IEEE Geosci. Remote Sens. Mag. 2019, 7, 118–139.

- Gounella, R.H.; Granado, T.C.; da Costa, J.P.C.; Carmo, J.P. Optical filters for narrow band light adaptation on imaging devices. IEEE J. Sel. Top. Quantum Electron. 2020, 27, 7200508.

- Rybicka-Jasińska, K.; Wdowik, T.; Łuczak, K.; Wierzba, A.J.; Drapała, O.; Gryko, D. Porphyrins as Promising Photocatalysts for Red-Light-Induced Functionalizations of Biomolecules. ACS Org. Inorg. Au 2022.

- Kumar, A.; Zhang, Z.J.; Lyu, H. Object detection in real time based on improved single shot multi-box detector algorithm. EURASIP J. Wirel. Commun. Netw. 2020, 2020, 204.

- Alippi, C.; Disabato, S.; Roveri, M. Moving convolutional neural networks to embedded systems: The alexnet and VGG-16 case. In Proceedings of the 2018 17th ACM/IEEE International Conference on Information Processing in Sensor Networks (IPSN), Porto, Portugal, 11–13 April 2018; pp. 212–223.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).