1. Introduction

Many serious health problems negatively affect people’s quality of life. One of these is stroke, which can leave a person unable to move and bedridden. Although individuals with this condition have normal brain activity, they are confined to bed due to neurological disorders and have difficulty meeting their daily needs. Brain–computer interface (BCI) systems have been developed to assist these individuals in fulfilling their basic daily needs. BCI systems are designed to produce meaningful outputs by measuring electrical potential differences in the human brain. Neurons located on the human brain’s surface constantly interact with each other. The rhythms resulting from these interactions are grouped according to their frequency into bands known as delta, theta, alpha, beta, and gamma waves.

Table 1 provides the frequency and amplitude values of these waves.

The delta wave, which has a frequency range of 0.5–4 Hz, is the slowest wave with the highest amplitude (Table 1). This type of wave is seen in infants up to one year old and in adults during deep sleep [1]. Theta waves, ranging from 4 to 7 Hz, are observed during light sleep and relaxation. Alpha waves, appearing in the 7–12 Hz range, are associated with eye closure and relaxation. Beta waves, observed in the 12–30 Hz range, dominate during states of alertness and anxiety. These waves are also elevated in individuals solving mathematical problems [2]. Gamma waves, with frequencies above 30 Hz, have the lowest amplitude and play a crucial role in detecting neurological diseases. They relate to perception, recognition, and similar cognitive functions [3]. These waves, categorized by their frequency values, are frequently used in BCI systems.
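The band boundaries quoted above can be expressed as a small lookup. The following is only an illustrative sketch: the function name `eeg_band` is hypothetical, and the handling of frequencies that fall exactly on a shared edge (4, 7, 12, and 30 Hz) is an assumption, since the text gives overlapping endpoints.

```python
# Band edges follow the ranges quoted in the text. Treating each band as
# half-open [low, high) is an assumption made here to resolve shared edges.
BANDS = [
    ("delta", 0.5, 4.0),
    ("theta", 4.0, 7.0),
    ("alpha", 7.0, 12.0),
    ("beta", 12.0, 30.0),
]


def eeg_band(freq_hz: float) -> str:
    """Return the name of the EEG band that contains freq_hz."""
    if freq_hz < 0.5:
        return "below delta"
    for name, low, high in BANDS:
        if low <= freq_hz < high:
            return name
    # The text only states that gamma lies above 30 Hz.
    return "gamma"
```

Under this edge convention, a 7 Hz stimulus falls at the lower edge of the alpha band.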

BCI systems typically use electroencephalography (EEG), a non-invasive method that measures a person’s brain signals without requiring surgical intervention. In this method, the signals measured from the individual’s brain activity are processed and taught to machines. Associating the output obtained from signal processing with a command allows for controlling electronic devices such as electric wheelchairs, beds, and lamps [

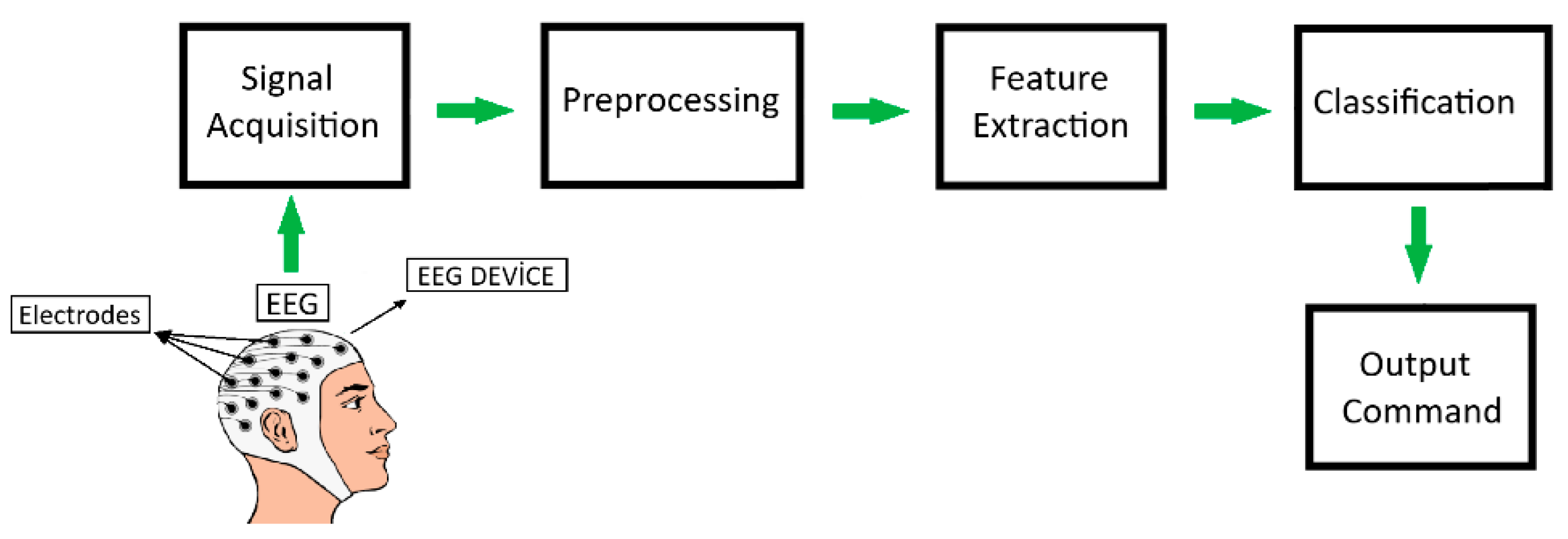

4]. This function enables individuals with paralysis to interact with their environment and meet their needs. The basic scheme used in BCI systems is depicted in

Figure 1.

As shown in Figure 1, BCI systems are fundamentally composed of four stages: signal acquisition, preprocessing, feature extraction, and classification. In the signal acquisition stage, brain signals are recorded using EEG devices. During the preprocessing stage, operations such as trimming, filtering, and normalization are applied to the recorded data. Feature extraction methods are then applied to the filtered data. The extracted features are classified in the classification stage, the final step of signal processing, using different learning algorithms. Finally, an output command is generated based on the values obtained from classification.
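The four stages can be pictured as a chain of small functions. The sketch below is purely illustrative and is not the processing used in this study: the z-score normalization, the two toy features, the nearest-centroid classifier, and all function and command names are assumptions chosen to keep the example short, and the filtering step is omitted.

```python
from statistics import mean, pstdev


def preprocess(samples, start, stop):
    """Trim to samples[start:stop] and z-score normalize (filtering omitted)."""
    window = samples[start:stop]
    mu, sigma = mean(window), pstdev(window)
    if sigma == 0:
        return [0.0 for _ in window]
    return [(x - mu) / sigma for x in window]


def extract_features(window):
    """Toy features: peak-to-peak range and mean absolute first difference.

    Requires at least two samples in the window.
    """
    diffs = [abs(b - a) for a, b in zip(window, window[1:])]
    return (max(window) - min(window), mean(diffs))


def classify(features, centroids):
    """Nearest-centroid classifier; centroids maps label -> feature tuple."""
    def dist2(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: dist2(features, centroids[label]))


def run_pipeline(samples, start, stop, centroids, commands):
    """Acquired samples -> preprocessing -> features -> class -> command."""
    window = preprocess(samples, start, stop)
    label = classify(extract_features(window), centroids)
    return commands[label]
```

In a real system the centroids would be replaced by a trained classifier, and the command table would map each class to a device action.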

In EEG-based BCI systems, methods such as visual evoked potential (VEP) [5], motor imagery (MI) [6], and P300 [7] are frequently used during the signal acquisition phase. The P300 method relies on a positive deflection in brain waves in response to certain stimuli such as lights, sounds, or various visual cues. P300 waves exhibit this positive deflection within a 300 ms to 600 ms time window and are recorded using EEG devices. In the MI method, the physical movements of the individual are replaced by imagined mental movements. When a person imagines a physical movement, specific patterns emerge in the brain, which are recorded using EEG devices. In the VEP method, visual stimuli such as flashing lights or images at different frequency values are presented to the subject, and the resulting voltage changes in the brain are recorded. When the stimulus frequency exceeds 6 Hz, a state known as steady-state visual evoked potential (SSVEP) occurs. The EEG signals recorded with all of these methods are processed on a computer using signal-processing techniques, and an output is generated through classification of the processed signals.

An SSVEP-based BCI system consists of three main components: data acquisition, signal processing, and output command. In this system, visual stimuli with different frequency values are shown to the subject, and SSVEPs are evoked in the brain [8]. Potential changes are recorded through EEG devices. The resulting dataset is processed on a computer and converted into output commands, which are used to control peripheral devices. SSVEP stands out for its non-invasive structure, high information transfer rate (ITR) and signal-to-noise ratio (SNR) values, short training time, small training data requirements, and low mental workload for the user [9]. SSVEP responses, which can be seen in the 1–100 Hz range, are stronger at frequency values below 15 Hz [10]. To evaluate the performance of an SSVEP-based BCI, the SSVEP response is first converted into the frequency domain and visualized using SNR. Then, the system’s classification accuracy, response time, and number of recognized targets are determined. Classification accuracy is largely affected by the strength and SNR value of the SSVEP response, while speed is related to the time required for the SSVEP signal to reach sufficient power. The number of targets, which determines the command options the system can offer, can have a direct impact on both accuracy and speed [11].
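As an illustration of the frequency-domain SNR evaluation described above, the sketch below computes a power spectrum with a naive discrete Fourier transform and compares the power at the stimulus frequency with the mean power of neighboring bins. This neighbor-ratio definition is one common convention and is an assumption here; the function names and the `n_neighbors` parameter are hypothetical.

```python
import cmath
import math


def power_spectrum(signal, fs):
    """Return (freqs, power) of the one-sided DFT (naive O(n^2) version)."""
    n = len(signal)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(signal))
        freqs.append(k * fs / n)
        power.append(abs(acc) ** 2 / n)
    return freqs, power


def ssvep_snr(signal, fs, target_hz, n_neighbors=4):
    """Power at the bin nearest target_hz divided by mean neighbor power."""
    freqs, power = power_spectrum(signal, fs)
    k = min(range(len(freqs)), key=lambda i: abs(freqs[i] - target_hz))
    neighbors = [power[i]
                 for i in range(k - n_neighbors, k + n_neighbors + 1)
                 if i != k and 0 <= i < len(power)]
    noise = sum(neighbors) / len(neighbors) if neighbors else 0.0
    if noise == 0:
        raise ValueError("neighboring bins carry no power; SNR is undefined")
    return power[k] / noise
```

For a one-second window sampled at 256 Hz, the frequency resolution is 1 Hz, so a 7 Hz response falls exactly on a bin; longer windows give finer resolution at the cost of response time.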

Compared to P300 and MI methods, SSVEP-based BCI systems stand out for their short training time, high accuracy, and high ITR. While MI-based BCI systems require users to mentally visualize movements of the hands, feet, and so on, and to go through long training processes, the SSVEP method is based on natural responses produced by the brain to direct visual stimuli. This makes the learning process of SSVEP-based systems significantly shorter and their use more practical. Additionally, SSVEP-based systems allow the simultaneous presentation of multiple visual stimuli at different frequencies. This feature eliminates the need for the sequential scanning methods commonly used in P300-based BCI systems, making multiple-target selection faster and more efficient. Because visual stimuli directly evoke a response in the occipital cortex, the system can achieve a high SNR, which increases the reliability of signal detection. With these advantages, SSVEP-based BCI systems offer an accessible and effective alternative for a wide range of users. They stand out as an important communication and control tool, especially for paralyzed individuals or patients with loss of motor control, thanks to their user-friendly interface and reliable performance [12,13]. Previous studies [14,15,16,17,18] show that, owing to these advantages, SSVEP-based systems are widely used in hybrid form with other signals such as electromyography (EMG) and electrooculography (EOG).

The primary goal of developing EEG-based BCI systems is to benefit individuals who have lost their ability to move. However, while these systems offer advantages, they also have some disadvantages. These issues can be listed as follows:

- Research and testing of the systems are only conducted in laboratory environments;
- The comfort of these systems, which users must rely on for life, is insufficient;
- The systems operate slowly;
- They are generally high cost, which limits their accessibility to a broader audience;
- The systems are not particularly suited for long-term use by the user [19].

Although EEG devices are relatively cost-effective compared to recording techniques such as magnetoencephalography (MEG) and functional magnetic resonance imaging (fMRI), they remain prohibitively expensive for daily use by individuals with disabilities [7]. Non-medical EEG devices produced for research on EEG-based BCI systems are slower than medical-grade EEG systems. For example, the low-cost Emotiv Epoc X EEG device produces lower-quality signals and operates at a lower speed compared to G.Tec, a medical-grade EEG system [20,21,22,23]. In a study comparing the synchronization of two devices, a delay of 162.69 ms was calculated for Emotiv EPOC, while a delay of 51.22 ms was calculated for G.Tec [24]. Another problem is that the visual and mental fatigue caused by flickering stimuli in visual stimulus-based BCI systems hinders users’ adoption of such systems and complicates their usage [25]. Furthermore, users must continuously focus on visual stimuli for the system to be active, yet research has shown that users experience eye problems over time due to prolonged and continuous exposure to visual stimuli [26]. This serious issue impedes long-term use of these systems and threatens eye health. Additionally, in visual stimulus-based systems, the stimulus frequencies evoke potentials in the brain via the eyes, rendering these systems unsuitable for individuals with preexisting eye health issues.

In this study, a new hybrid system combining the SSVEP method with EOG artifacts contained in EEG signals was developed. It is intended as an alternative that avoids the eye health problems caused by the visual stimuli in visual stimulus-based BCI systems and that increases the usage time, comfort, usability, and safety of the system. The SSVEP method is used more frequently than other common BCI paradigms because it can be more easily detected in EEG signals and does not require the creation of any training set [27]. Therefore, in the current study, the SSVEP method was preferred to activate the system. Additionally, synchronized operation is a known challenge of BCI systems [28].

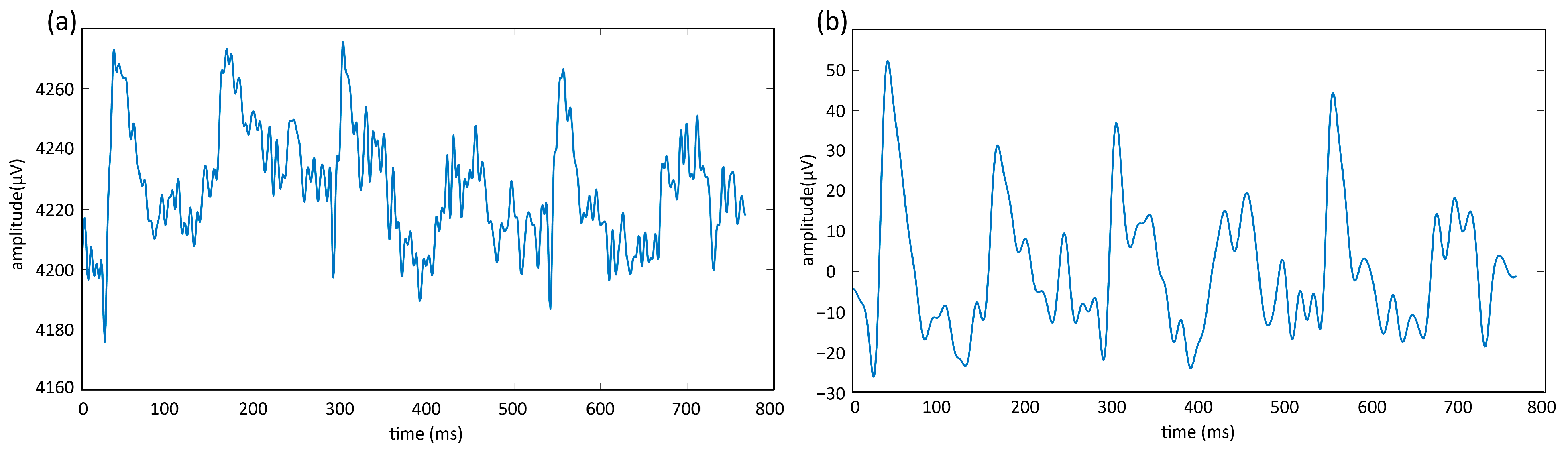

In EEG signals recorded with an EEG device, EOG artifacts occur as a result of eye movements, mostly in the channels located over the frontal lobe of the brain. Artifacts are unwanted signals that can interfere with the measurement of neurological activity. These signals may distort the characteristics of neurological events and may even be mistakenly perceived as control signals in BCI systems [29]. When EOG artifacts would contaminate the neurological signals of interest, they are filtered out through various methods. However, in studies where eye movements need to be detected [30], EOG artifacts found in EEG signals can be used as a source of information. Particular eye movements, including blinks, upward and downward glances, leftward and rightward shifts, and eye closures, can be identified, isolated, and categorized using EEG data. These detected movements can subsequently be linked to distinct command outputs for use in a BCI typing system [31].

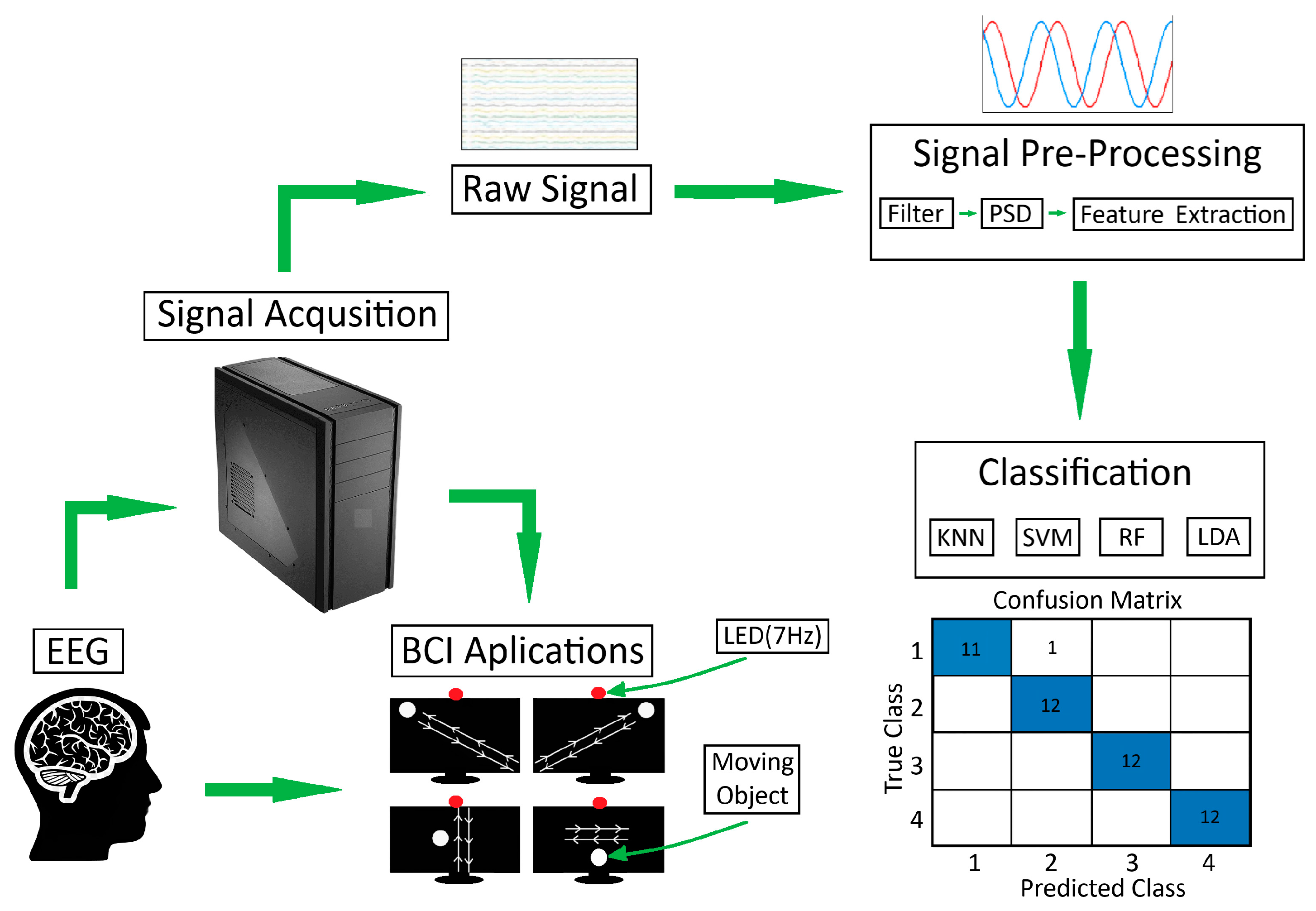

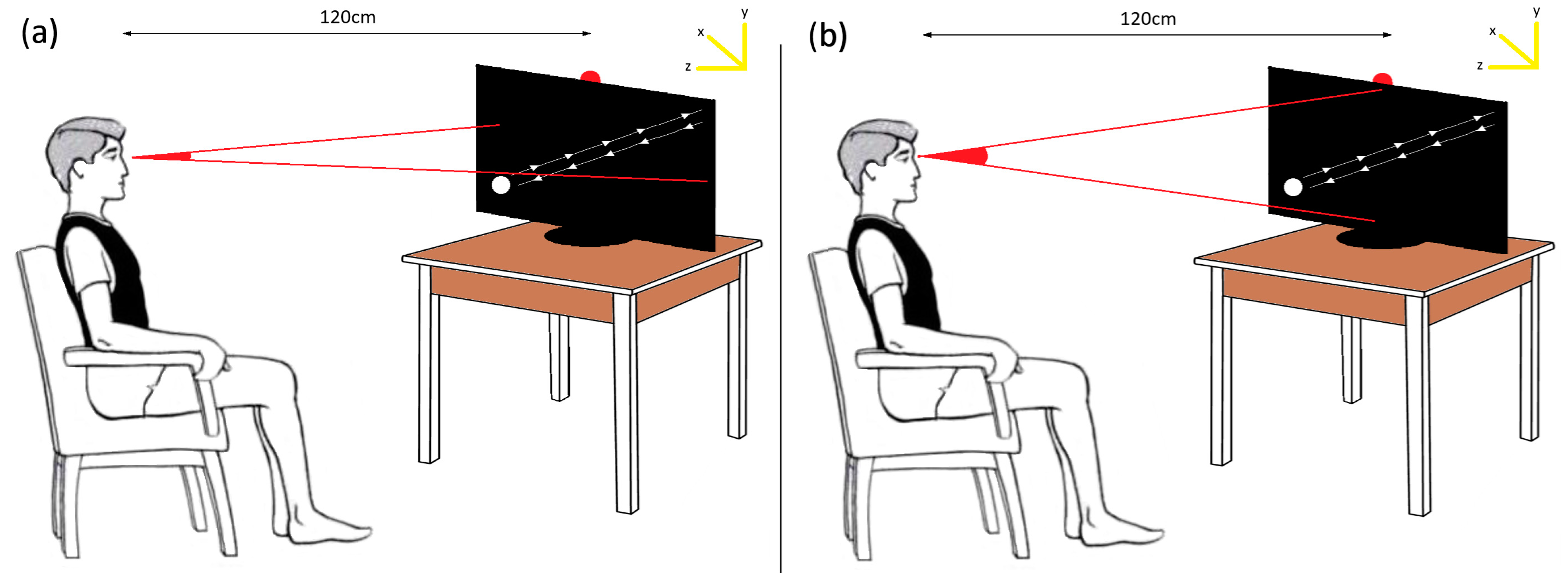

The hybrid system designed in this study replaces the visual stimuli of visual stimulus-based BCI systems with moving objects (white balls), each following a different route. The moving balls are shown to the user on a computer screen. The command assigned to the trajectory that the user follows with their eyes is sent as a control signal to peripheral devices (bed, wheelchair, etc.). However, this approach has a drawback: the user may involuntarily or unconsciously make the same eye movement as one of the moving balls without looking at the monitor, and the system may be activated unintentionally. To solve this problem, a light-emitting diode (LED) flickering at 7 Hz was placed at the top center of the screen as a condition for the system to be active. Before acting on an eye movement, the system checks through the SSVEP method whether the LED is in the user’s field of view and is not activated when the LED cannot be detected. The LED thus acts as a safety valve. The designed BCI system is shown in Figure 2.
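The safety-valve logic described above amounts to a simple gate: a command is issued only when the LED has been detected. The sketch below illustrates that control flow only; the function name, the movement labels used as dictionary keys, and the command strings are hypothetical, and the detection and classification steps are assumed to have been carried out elsewhere.

```python
def decide_command(led_detected, movement, command_map):
    """Return the command for an eye movement, or None if the gate is closed.

    led_detected: result of the SSVEP check for the 7 Hz LED.
    movement: label produced by the EOG-based movement classifier.
    command_map: hypothetical mapping from movement label to device command.
    """
    if not led_detected:
        # The eye movement is treated as unrelated to the system.
        return None
    return command_map.get(movement)


# Hypothetical mapping for illustration only.
EXAMPLE_COMMANDS = {
    "up-down": "raise_bed",
    "left-right": "lower_bed",
    "right-cross": "lamp_on",
    "left-cross": "lamp_off",
}
```

With this gate, the same eye movement produces a command when the LED is in view and produces nothing when it is not.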

In the system, whose general scheme is given in Figure 2, only one LED is used, so little light is emitted. In addition, the system does not require the user to look directly at or focus on the visual stimulus. In common SSVEP-based BCI systems, users are required to look at and focus on at least four flashing lights [32]. In P300-based systems, it is necessary to look at the light at least twice for each command [33]. In the proposed system, however, the user only needs to look in the direction of a single flashing visual stimulus without focusing on it. Thus, the user can operate the system safely through a single visual stimulus, without glare and without being exposed to the disturbing effect of light.

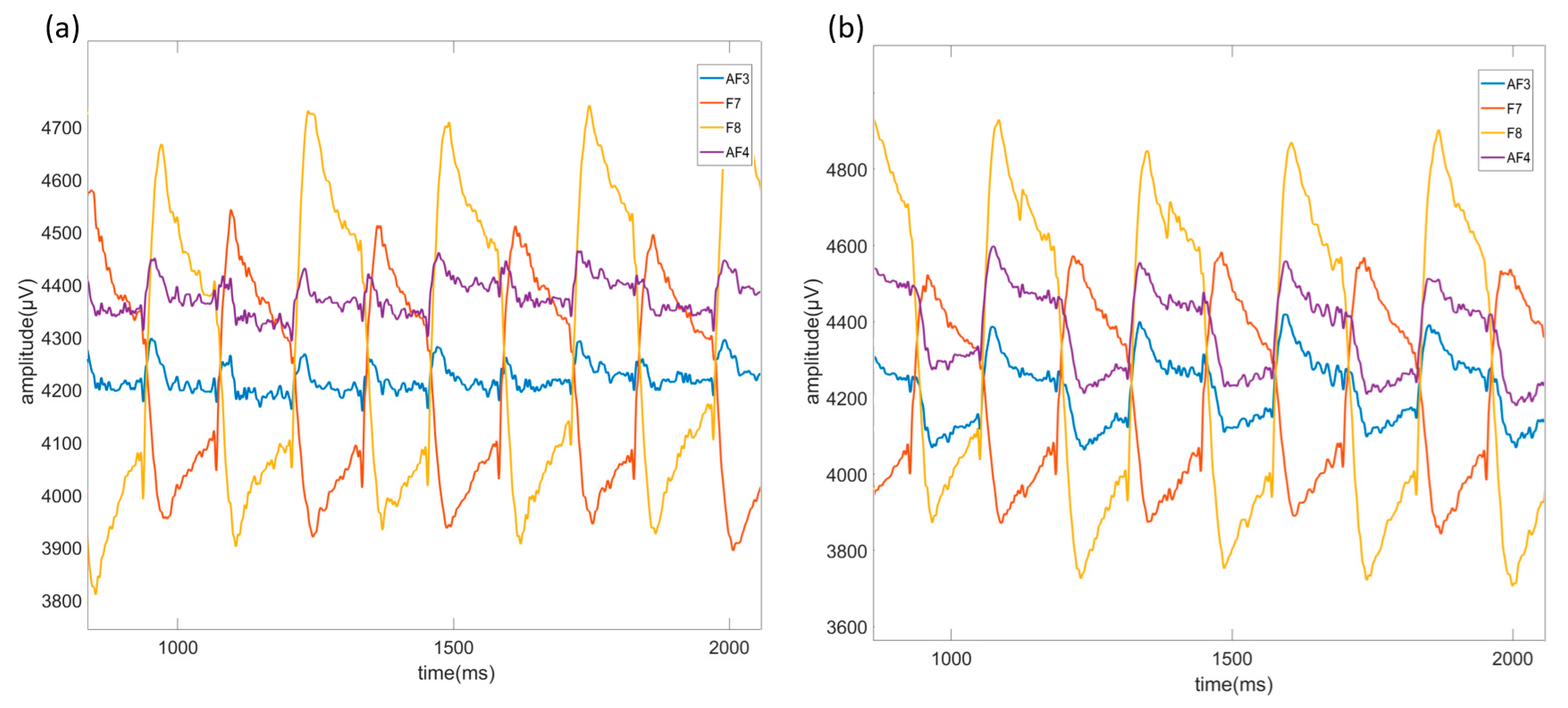

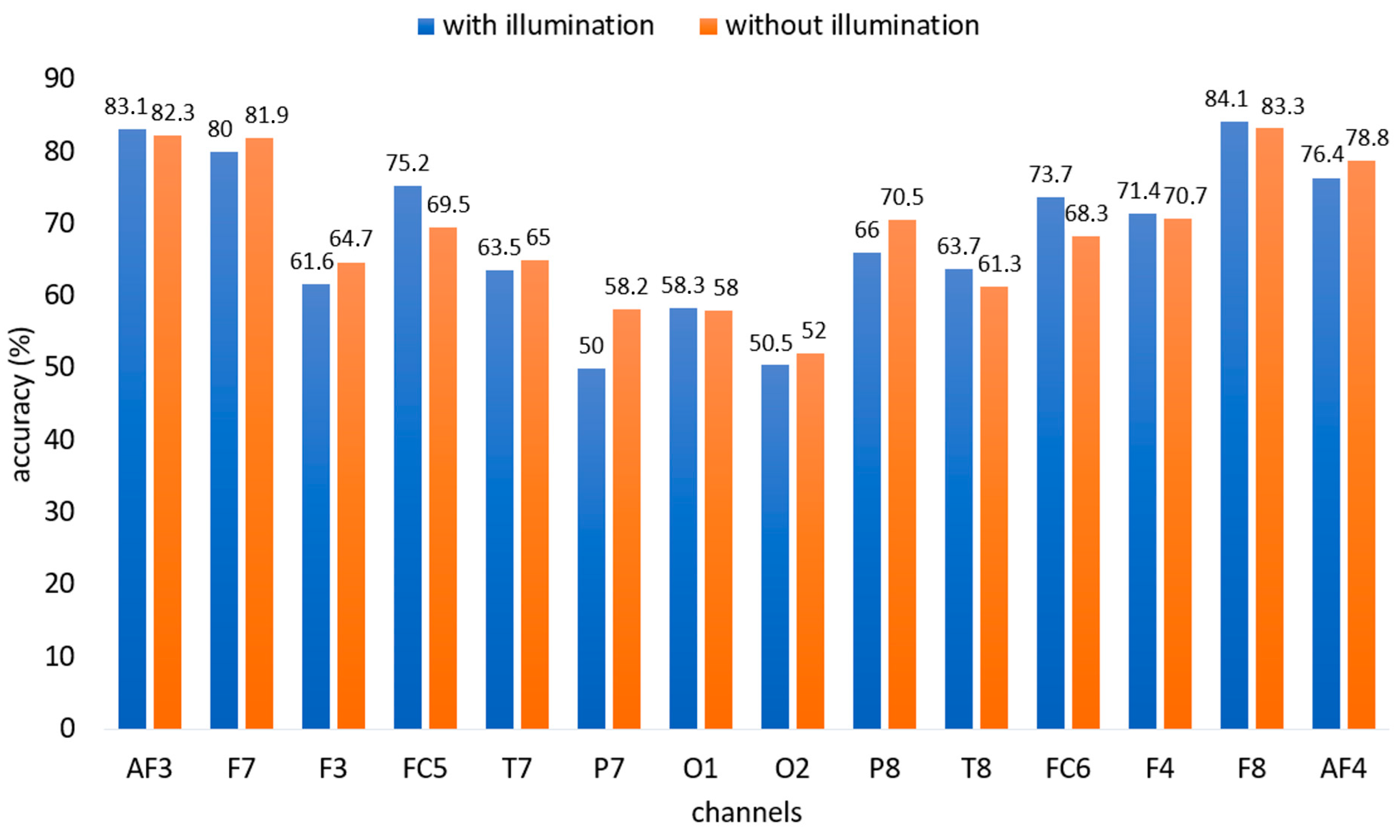

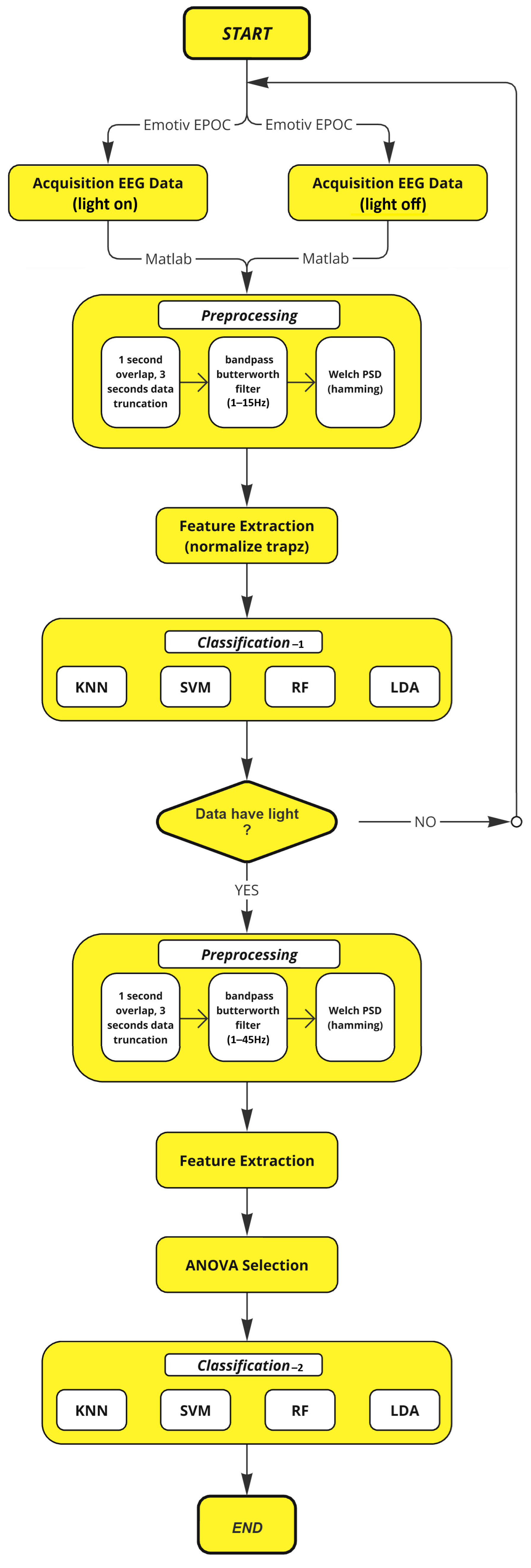

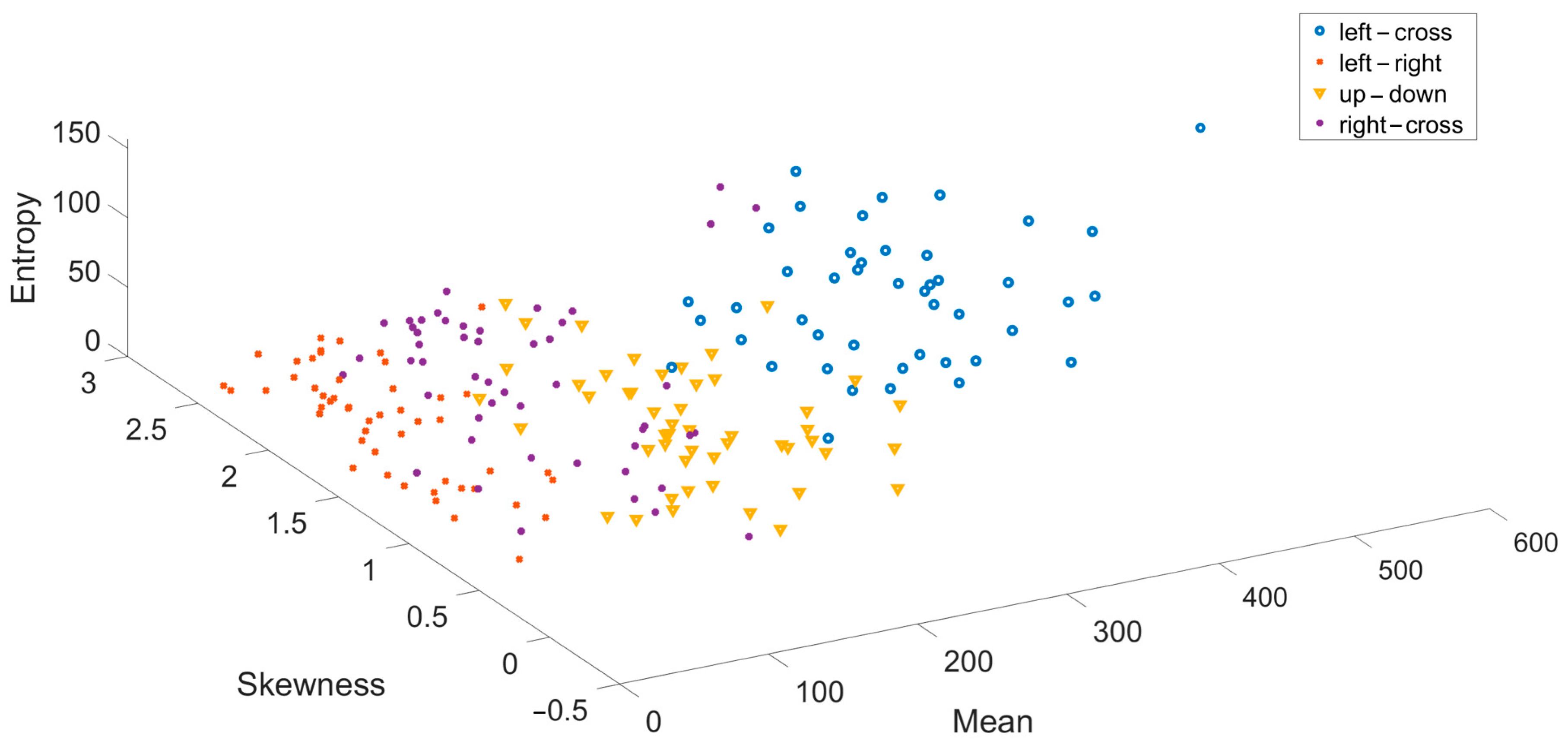

The designed system uses the 14-channel Emotiv Epoc X (EMOTIV Inc., San Francisco, CA, USA) EEG device, which is cost-effective, portable, wireless, and easy to clean and use. Data were recorded at a sampling frequency of 256 Hz in two conditions: with the LED on (illuminated) and with the LED off (non-illuminated). Preprocessing steps such as trimming and filtering were applied to the recorded data, features were extracted, and effective channels were selected. The features extracted from the active channels were classified in two stages. In the first stage, the data recorded with the light on and off were distinguished from each other using the trapezoidal method with the SSVEP method. In the second stage, EEG data containing EOG artifacts, recorded while the background LED was detected as active in the first stage, were classified to distinguish objects moving up-down, left-right, right-cross, and left-cross.
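This section does not spell out how the trapezoidal method is applied. One plausible reading, assumed here for illustration only, is that the power spectrum is integrated with the trapezoidal rule over a narrow band around the 7 Hz stimulus frequency and the resulting band power is compared with a threshold. The band half-width, the threshold, and all function names below are hypothetical.

```python
def trapezoid_area(xs, ys):
    """Trapezoidal-rule integral of the points (xs[i], ys[i])."""
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for x0, x1, y0, y1 in zip(xs, xs[1:], ys, ys[1:]))


def band_power(freqs, psd, low_hz, high_hz):
    """Integrate a power spectrum between low_hz and high_hz (inclusive)."""
    points = [(f, p) for f, p in zip(freqs, psd) if low_hz <= f <= high_hz]
    xs = [f for f, _ in points]
    ys = [p for _, p in points]
    return trapezoid_area(xs, ys)


def led_present(freqs, psd, threshold, center_hz=7.0, half_width_hz=1.0):
    """Assumed decision rule: band power around center_hz exceeds threshold."""
    power = band_power(freqs, psd, center_hz - half_width_hz,
                       center_hz + half_width_hz)
    return power > threshold
```

In practice the threshold would have to be calibrated from recordings made with the LED on and off, which is what the two recording conditions provide.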

This study aims to address the glare felt in the user’s eyes and the eye health problems that develop over time, both of which are caused by the visual stimuli in visual stimulus-based BCI systems. Since these problems directly reduce usage time, usability, and comfort, a more usable, safe, and comfortable system was designed with the proposed hybrid method and approach. EOG artifacts contained in EEG data were recorded while the user followed the moving objects proposed in the approach, both with the 7 Hz LED, which is the condition for the system to be active, switched on and with it switched off. Active channels were selected from the recorded signals, and the moving objects were classified with machine learning algorithms using the EOG artifacts of the selected channels. The LED, which acts as a safety valve, was detected through the trapezoidal method using the SSVEP method. The proposed system is hybrid because it uses EOG artifacts in EEG signals to classify moving objects and uses the SSVEP method to detect the presence of the LED. The study showed that the LED placed in the background can be detected without the need for focusing and that moving objects can be classified using the EOG artifacts in the EEG signals through the proposed approach. Thus, a hybrid BCI system has been proposed that is less harmful to eye health than visual stimulus-based BCI systems, more comfortable, suitable for long-term use, and safer in terms of control.

2. Related Works

BCI is a direct interaction, communication, and control system established between the brain and external devices [34]. SSVEP and P300 visual stimulus-based paradigms are frequently used in BCI systems. SSVEP is a classical BCI paradigm that has been extensively studied for more than 20 years [35] due to its advantages. SSVEP has a higher SNR value and requires less training than other methods such as P300 and MI [36]. Since the method does not require the mental effort that the MI method does, it is easier to apply and less tiring [37]. Studies have shown that the method gives relatively higher accuracy and ITR values than other methods [38]. It can also be detected more easily in EEG signals [27]. However, SSVEP-based BCI systems cause eye fatigue and a feeling of glare in users due to the visual stimuli they contain [39]. This significantly reduces the usage time of the systems and negatively affects system comfort. Another important disadvantage of the systems is their high cost [40]. To address these problems, this study proposes a hybrid system that uses SSVEP together with EOG artifacts occurring in EEG signals. The proposed system aims to free the user from visual stimuli through the moving objects approach. As a condition for the system to be active, a single LED at 7 Hz was used. In addition, the Emotiv Epoc X (EMOTIV Inc., San Francisco, CA, USA) wearable EEG device [41] was preferred in the designed system due to its low cost, long battery life, short installation time, and ease of use.

This section reviews hybrid BCI systems in which SSVEP and EOG signals are used together, as well as SSVEP-based BCI systems that use the Emotiv Epoc EEG device.

In one study [14], researchers proposed a hybrid BCI system combining the SSVEP method and EOG signals. To reduce the transition time between tasks in the SSVEP-based system, they obtained the prior probability distribution of the target with the SSVEP method and then used EOG signals to optimize the probability distribution and obtain the target prediction. In another study [30] where EOG and SSVEP signals were used together, researchers controlled a robotic arm with six degrees of freedom. Using an EOG-based switch triggered by a triple eye blink, they achieved an accuracy rate of 92.09% and an ITR of 35.98 bits/min. In another study [15], researchers proposed a new hybrid asynchronous BCI system based on a combination of SSVEP and EOG signals. The interface they designed used 12 buttons, each representing one of 12 characters and flickering at a different frequency, to trigger SSVEPs. They recorded signals from 10 subjects while varying the size of the buttons and concluded that the asynchronous hybrid BCI system has great potential for communication and control. In another study [16] where the SSVEP method and EOG signals were used in the same system, researchers used 20 buttons corresponding to 20 characters in the interface they designed. Ten healthy subjects were asked to look at the lights, all of which flashed simultaneously during the experiment, and EOG signals were recorded as the buttons moved in different directions. At the end of the experiments, an accuracy rate of 94.75% was achieved. In another study [17], which combined the SSVEP method and EOG signals, a hybrid printer system was developed using 36 targets. The researchers divided the targets into nine groups of letters, numbers, and characters. EOG signals were used to detect the target group, and SSVEP was used to identify the target within the selected group. The proposed system was tested on ten subjects and reached an accuracy rate of 94.16%. In a different study [18] that used both methods together, researchers presented a comparison dataset for BCI systems. The dataset consisted of data from an SSVEP-based BCI system and from SSVEP-EMG and SSVEP-EOG-based BCI systems. The experiments used a virtual keyboard containing nine visual stimuli flashing between 5.58 Hz and 11.1 Hz. Ten participants performed the copywriting task, and data were collected over 10 sessions for each system. The systems were evaluated with criteria such as accuracy, ITR, and NASA-TLX workload index.

In a visual stimulus-based BCI system [42], researchers designed a screen containing visual stimuli at frequencies of 7 Hz, 9 Hz, 11 Hz, and 13 Hz. They recorded signals over 10 min sessions. To augment the dataset, white noise with amplitudes of 0.5 and 5 was added, tripling the size of the training set. The classification was performed using support vector machine (SVM) and k-nearest neighbors (k-NN) classifiers. Without data augmentation, they achieved 51% and 54% accuracy rates, respectively. With augmented data, the accuracy rates improved to 55% and 58%. In another study [43], a drone controlled by EEG signals was developed and tested on 10 healthy subjects using visual stimuli at frequencies of 5.3 Hz, 7 Hz, 9.4 Hz, and 13.5 Hz. The system achieved an average accuracy of 92.5% and an ITR of 10 bits/min. In another study [44], four LEDs with frequencies of 13 Hz, 14 Hz, 15 Hz, and 16 Hz were placed outside a visual interface. Next, the system was tested on five participants who completed image flickering experiments at four different frequencies in four directions. In this research, 23 participants were asked to complete tasks in different rooms. However, 12 participants either could not complete the tasks or did not achieve sufficient results. Those who completed all three tasks obtained an average accuracy of 79.2% and an ITR of 15.23 bits/min. These studies indicate that systems incorporating multiple visual stimuli with different frequency values pose risks to users’ eye health due to the need for sustained focus. Consequently, these systems are uncomfortable and unsuitable for prolonged use. In this respect, some studies [44] have observed that users experienced visual stimulus-related issues during the experiment, leading to task failure. In addition, the signal recording durations in these studies are often long, and the ITR values of the systems are relatively low.

Various studies have been conducted in the literature to mitigate the negative effects of visual stimuli on system users. For instance, in [45], a BCI system operating at high frequencies (56–70 Hz) was proposed to reduce the sensation of flicker caused by flickering stimuli. The system was also tested with low-frequency (26–40 Hz) stimuli. The study achieved accuracy rates of 98% for low-frequency stimuli and 87.19% for high-frequency stimuli. The ITR of the system was calculated to be 29.64 bits/min. This study showed that accuracy rates decreased at higher frequency values, and the system did not provide a solution to the negative effects of visual stimuli on users. In another study [46], a BCI system based on a rotating wing structure was proposed, in which five healthy subjects aged between 27 and 32 participated. The designed interface had a black screen divided into four sections, each featuring a wing with an “A” mark. Each wing rotated at a different speed and in a different direction. Using the cubic SVM method, the researchers recorded data for 125 s per class and achieved a highest success rate of 93.72%. A review of these studies indicates that the results obtained by these systems do not provide a permanent solution to the existing problems. Moreover, these systems have long recording times, relatively low accuracy rates, and vulnerability to unintended eye movements.

Other relevant studies have focused on eye movements for BCI systems as an alternative to visual stimuli. For instance, in [47], eye movements were used to control a wheelchair. This research proposed a brain activity paradigm based on imagined tasks, including closing the eyes for alpha responses and focusing attention on upward, rightward, and leftward eye movements. The experiment was conducted with twelve volunteers to collect EEG signals. Employing a traditional MI paradigm, the researchers achieved an average accuracy of 83.7% for left and right commands. Another study [48] examined the relationship between eye blinking activity and the human brain. This research used channels AF3 and F7 for the left eye and AF4 and F8 for the right eye. In a different study focusing on eye movements [49], researchers used a convolutional neural network (CNN) structure to investigate the effect of visual stimuli on the classification accuracy of human brain signals. The study involved 16 healthy participants who were shown arrows indicating right and left directions while their brain beta waves were recorded. Using SVM methods, the researchers achieved an average accuracy of 70% in standard tests and an accuracy of 76% in tests with effective visual stimuli. These studies primarily focus on the eye movements of the system user. However, the systems remain vulnerable to unintended eye movements made by the user. Implementing sequential movement coding makes it challenging for users to adapt to the system, resulting in increased error rates. Additionally, these systems often have long recording times and relatively low accuracy rates.

The P300 method is among the frequently used techniques in the field. Using this method, researchers [50] classified P300 signals obtained from six healthy subjects aged 20 to 37 using deep learning (DL) techniques. The interface they designed required participants to follow two different scenarios. For classification, they employed a five-layer CNN deep learning module. With deep learning classification, 100% success was achieved on the training data. On the test data, 80% success was achieved with a 125 ms inter-stimulus interval and 40% success with a 250 ms inter-stimulus interval. In another study using the P300 method [51], researchers developed a hybrid BCI hardware platform incorporating both SSVEP and P300. They created a chipboard platform with four independent radial green visual stimuli at frequencies of 7 Hz, 8 Hz, 9 Hz, and 10 Hz. The green stimuli were designed to evoke SSVEPs, and four high-power red LEDs flashing at random intervals were used to evoke P300 events. The platform was tested with five healthy subjects. The researchers successfully detected P300 events concurrently with four event markers in the EEG signals. In another study [52], researchers investigated pattern recognition using the P300 component induced by visual stimuli. The study involved 19 healthy participants, who were instructed to look at a screen and count how many times it flashed while the data were recorded. The researchers found that Bayesian networks (BN) achieved the highest accuracy rate of 99.86%. These studies indicate that while visual stimuli are actively used in these methods, the systems often do not provide sufficient comfort for the user.

In [53], the MI method was used to examine the control of a spider robot. The researchers recorded EEG signals and tested the detection of imagined hand movements for controlling the robot. Specifically, the imagined opening of the hand was associated with forward movement of the robot, while the imagined closing of the hand was associated with backward movement. Through a CNN, the researchers achieved a maximum classification accuracy rate of 87.6%. In another study on MI [54], researchers analyzed MI data obtained over 20 days from a participant. The study involved commands for right, left, up, and down movements. During the classification phase, they employed the ensemble subspace discriminant classifier and achieved an optimal daily average accuracy of 61.44% for the four-class classification. For five participants, the average accuracy for the four-class classification was 50.42%, while the binary classification accuracy for right and left movements was 71.84%.

In [55], the control of a wheelchair was investigated. To this end, three white LEDs with frequencies of 8 Hz, 9 Hz, and 10 Hz were installed in the screen’s right, left, and upper corners, respectively. The setup was tested on five different participants aged between 29 and 58 years, with movements including left, right, and forward. The authors employed canonical correlation analysis (CCA) and multivariate synchronization index (MSI) methods to determine the dominant frequency and achieved an accuracy rate of 96% for both methods. In [56], the authors differentiated error-related potentials (ERP) in both online and offline conditions with 14 participants in a visual feedback task. Participants were shown red, blue, and green visual stimuli at periods of 500 ms, 700 ms, and 3000 ms. The results showed an accuracy rate of 81% using deep learning techniques. In another study [57], a system was designed to detect emotional parameters such as excitement, stress, focus, relaxation, and interest. Participants were shown a 15 min mathematics competition video to evoke excitement, attention, and focus. The researchers tested the collected experimental datasets using naive Bayes and linear regression learning algorithms. The linear regression classifier achieved an accuracy rate of 62%, while the naive Bayes classifier achieved an accuracy rate of 69%.

In a study investigating the impact of adjustable visual stimulus intensity [58], researchers designed a system to examine the effects of LED brightness on evoking SSVEP in the brain. The LED frequencies were set to 7 Hz, 8 Hz, 9 Hz, and 10 Hz; the brightness levels were adjusted to 25%, 50%, 75%, and 100%; and the system was tested on five individuals. The study found that the highest median response was achieved with a brightness level of 75%, which provided the highest SSVEP responses for all five participants. However, the 75% brightness level, despite yielding the best response, was found to be uncomfortably high for system users. Additionally, the number of visual stimuli used in the system was quite large. Thus, the applied method and obtained results do not provide an effective solution.

5. Discussion and Future Work

A review of the field shows that a significant part of the research on VEP-based BCI systems focuses on system performance [75]. However, the visual stimuli used in these systems seriously threaten users’ eye health, reduce system comfort, and shorten system usage time [25,26]. In some studies, subjects could not complete the experimental stages because of the negative effects of the visual stimuli [44]. VEP-based BCI systems are also unsuitable for individuals with inadequate eye health, as these systems require the ability to perceive the frequency values of visual stimuli. Additionally, while the EEG devices used in these systems are more cost-effective than many other brain recording methods (such as MEG and fMRI), they are still not sufficiently affordable to be widely adopted. Moreover, these devices are inadequate in terms of usability and comfort [7].

This study proposed an innovative approach based on a hybrid method to minimize the negative effects on the user of the visual stimuli in VEP-based BCI systems. Instead of visual stimuli, the approach uses four moving objects, each following a different trajectory. The moving objects were classified using EOG artifacts found in the recorded EEG signals. However, the system may be activated unintentionally when the user makes the same eye movement as one of the moving objects. This problem is also seen in many other eye movement-based BCI studies [47,48,49]. Although various actions (for example, serial blinking or looking in a certain direction) have been tried to solve the problem, these methods complicate the use of the system and make it difficult for users to focus, increasing error rates. As a solution in the developed system, the user activates the system through the 7 Hz LED placed at the top of the computer screen, without being exposed to the negative effects of any visual stimulus and without focusing on the stimulus. The system checks for the presence of the background LED via the SSVEP method when EEG data is received. Detection of the LED indicates that the user made a conscious eye movement to activate the system. When the LED is not detected, it is concluded that the eye movements are unrelated to the system and that the system should not be active. Since there is only one LED, the brightness of the stimulus is low, and the user does not have to focus directly on the LED. Thus, the system prevents users from experiencing a visual flash sensation, allowing them to use the system safely with moving objects without focusing on any visual stimuli.

In summary, the moving objects were controlled using the EOG artifacts contained in the EEG signals, while the LED placed in the background was detected using the SSVEP method. Classifying moving objects through EOG artifacts is proposed as an alternative to the visual stimuli used in visual stimulus-based BCI systems. The aim is to address the problems caused by visual stimuli: the feeling of glare in the eyes, the resulting loss of system comfort and usability, eye disorders caused by long-term use, and reduced system usage time. The SSVEP method, applied to the background LED, is intended to let the user safely activate and deactivate the system. The success rate of the activation phase is important, because systems that make wrong decisions will clearly cause difficulties for paralyzed individuals. Existing systems in the field require actions such as constant blinking for activation; this study provides an alternative to these methods.

Table 9 provides basic information on some visual stimulus-based studies using the Emotiv Epoc EEG device.

As can be inferred from previous studies [

50,

76,

79], the number of subjects in such work is often small relative to the general standard. The number of subjects directly affects the performance metrics of the systems. Compared to other studies, the number of subjects used in this study is moderate. The accuracy rate is among the most critical parameters for the usability of systems. As shown in

Table 8, the accuracy rate obtained in this study can be considered effective. Additionally, some studies [

77,

79,

80,

81] either do not report ITR values or report low ones. The ITR reflects the speed of the system and is the most important parameter for assessing its practical applicability. The ITR of 36.7 bits/min achieved in this study compares favorably with the values reported in the studies reviewed.
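For readers unfamiliar with the metric, the following sketch computes the ITR using the widely used Wolpaw definition. This section does not state which ITR formula was applied, so the choice of formula is an assumption, as is the function name `itr_bits_per_min`. The number of classes (four moving objects) comes from the text; the accuracy and selection time in the usage note are placeholders and are not results of this study.

```python
import math

def itr_bits_per_min(n_classes, accuracy, seconds_per_selection):
    """Information transfer rate in bits/min (Wolpaw definition, assumed here).

    n_classes: number of possible commands (N >= 2)
    accuracy: classification accuracy P in [0, 1]
    seconds_per_selection: time needed to issue one command, in seconds
    """
    if n_classes < 2:
        raise ValueError("n_classes must be at least 2")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    if seconds_per_selection <= 0:
        raise ValueError("seconds_per_selection must be positive")

    p = accuracy
    bits = math.log2(n_classes)
    if p > 0.0:
        bits += p * math.log2(p)                # vanishes in the limit p -> 0
    if p < 1.0:
        bits += (1.0 - p) * math.log2((1.0 - p) / (n_classes - 1))

    return bits * 60.0 / seconds_per_selection
```

For example, `itr_bits_per_min(4, 0.95, 2.0)` evaluates the rate for a hypothetical four-class system at 95% accuracy with one selection every 2 s. The formula shows why accuracy and selection time must be reported together: at chance level (25% for four classes) the rate is zero regardless of speed.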

During the experiments, data were recorded with the LED illuminated, without requiring subjects to focus on or look directly at the visual stimulus. Nevertheless, the visual stimulus still caused discomfort for the subjects over time. Although this study significantly reduces the user’s reliance on visual stimuli, such stimuli can still cause discomfort in various ways. Furthermore, the Emotiv Epoc X EEG device has its own disadvantages. These include the excessive pressure it applies to the user’s head during sessions exceeding 30 min, the inability to adjust the device to fit different head sizes, electrode oxidation, and continuous drying. In addition, the device’s sensor positions cannot be altered, so the contact points vary between individuals with different head sizes. Despite these issues, the device was preferred for its advantages, such as affordability, portability, long battery life, ease of use and setup, and no need for cleaning after use.

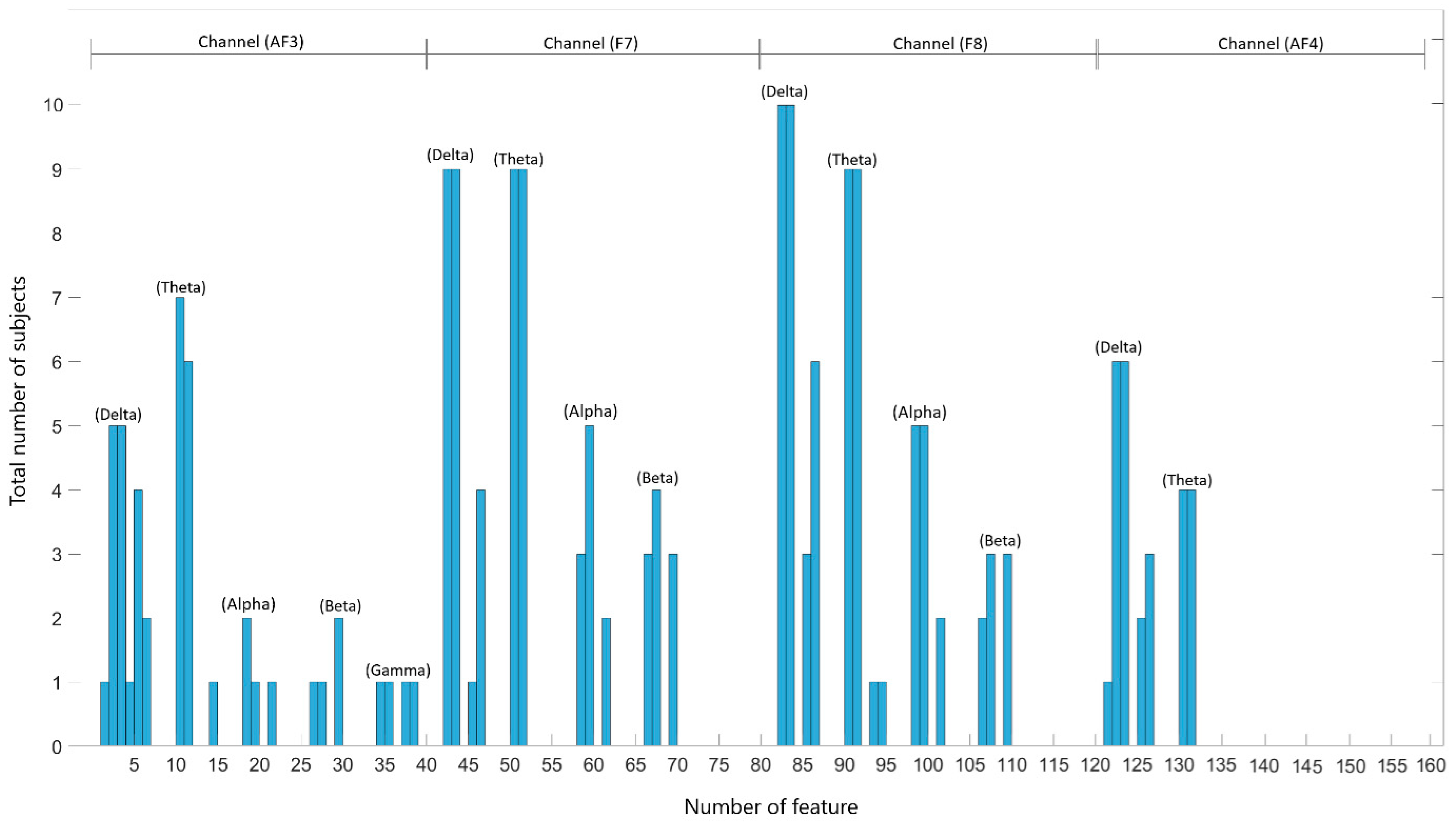

In BCI systems, using fewer EEG channels makes the system more efficient. According to the literature [

76,

80], researchers often increase the number of channels to enhance accuracy rates. In contrast, the current study utilized only channels F7, AF3, AF4, and F8 of the Emotiv Epoc X EEG device. Future research could further reduce the number of channels by evaluating the performance of effective waveforms for each channel.

In VEP-based BCI systems, brightness (measured in lux) is an important parameter because the amount of light reaching the eye from the source produces a potential change in the brain. Several studies in the field have examined the effect of light intensity on SSVEP. Most of this research has explored illumination levels under 30 lx, finding that the SSVEP response tends to improve as illumination intensity rises [

85,

86,

87,

88]. However, one study showed that the highest illumination value used during the experiments did not give the best results [

58]. Moreover, some studies have shown that higher brightness changes also lead to greater discomfort [

86,

88]. In this study, reducing the number of visual stimuli to one reduces the amount of light the user is exposed to and eliminates the need to focus on the stimulus. However, the brightness of the visual stimulus itself was not investigated. System performance could be increased by raising the brightness of the stimulus, or a more comfortable system could be designed by lowering it. Another issue is that the monitor used to display the stimuli emits light and must remain in front of the patient at all times, which is uncomfortable and impractical. Moreover, almost all of the studies reviewed consist of single-session or single-day recordings. However, systems should be capable of producing consistent responses for the same user on different days. In other words, a model built from data recorded on the first day for person A should remain valid for data recorded from the same person on the second day. A further limitation is that the designed system has not been tested in real time. Future research will address real-time implementation of the system and the other issues identified.