A Practical Multi-Stage Grasp Detection Method for Kinova Robot in Stacked Environments

Abstract

1. Introduction

- (1)

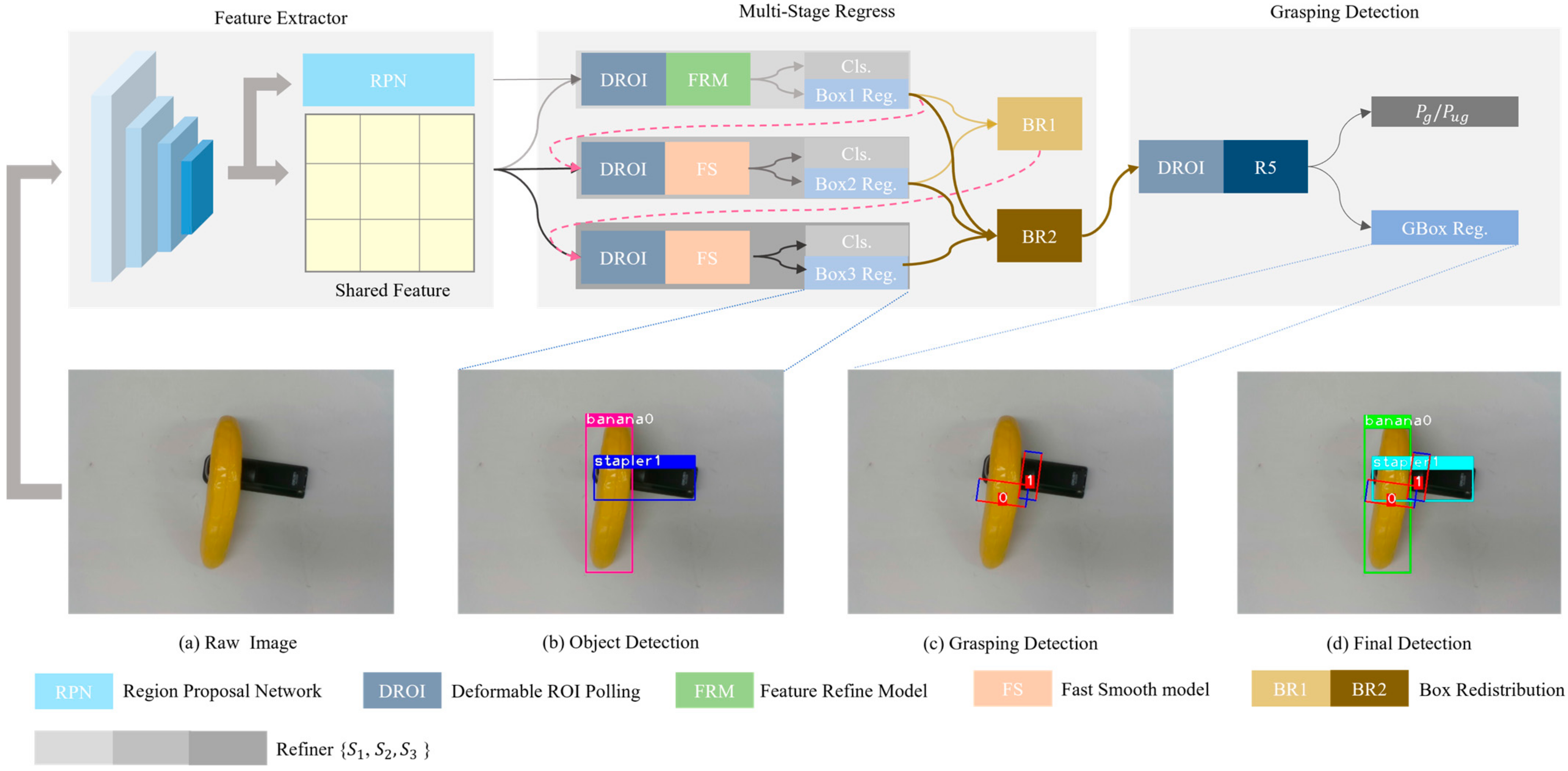

- A Cascade R-CNN implementation based on Faster-RCNN for grasp detection is provided. Our model allows for simultaneous grasp detection and target detection.

- (2)

- MMD is an improved multi-stage end-to-end grasp detection model that we proposed. This algorithm performs well on the VMRD dataset in stack scenarios and also shows great environmental adaptability on our homemade test sets.

- (3)

- A FRM (feature refine model) is proposed in MMD, thus allowing the network to improve the quality of the region proposal feature map. Its effectiveness in facilitating the detection of grasping has been proven through experiments.

- (4)

- A box redistribution strategy is proposed in MMD, which avoids filtering the false positive samples to a certain extent and increases the system's fault tolerance for detection. Experimental results also indicate that it can increase the accuracy of grasp detection.

- (5)

- In order to test the practicality of our model, we also carried out experiments on our homemade test sets and our Kinova robot.

2. Related Works

3. Proposed Method

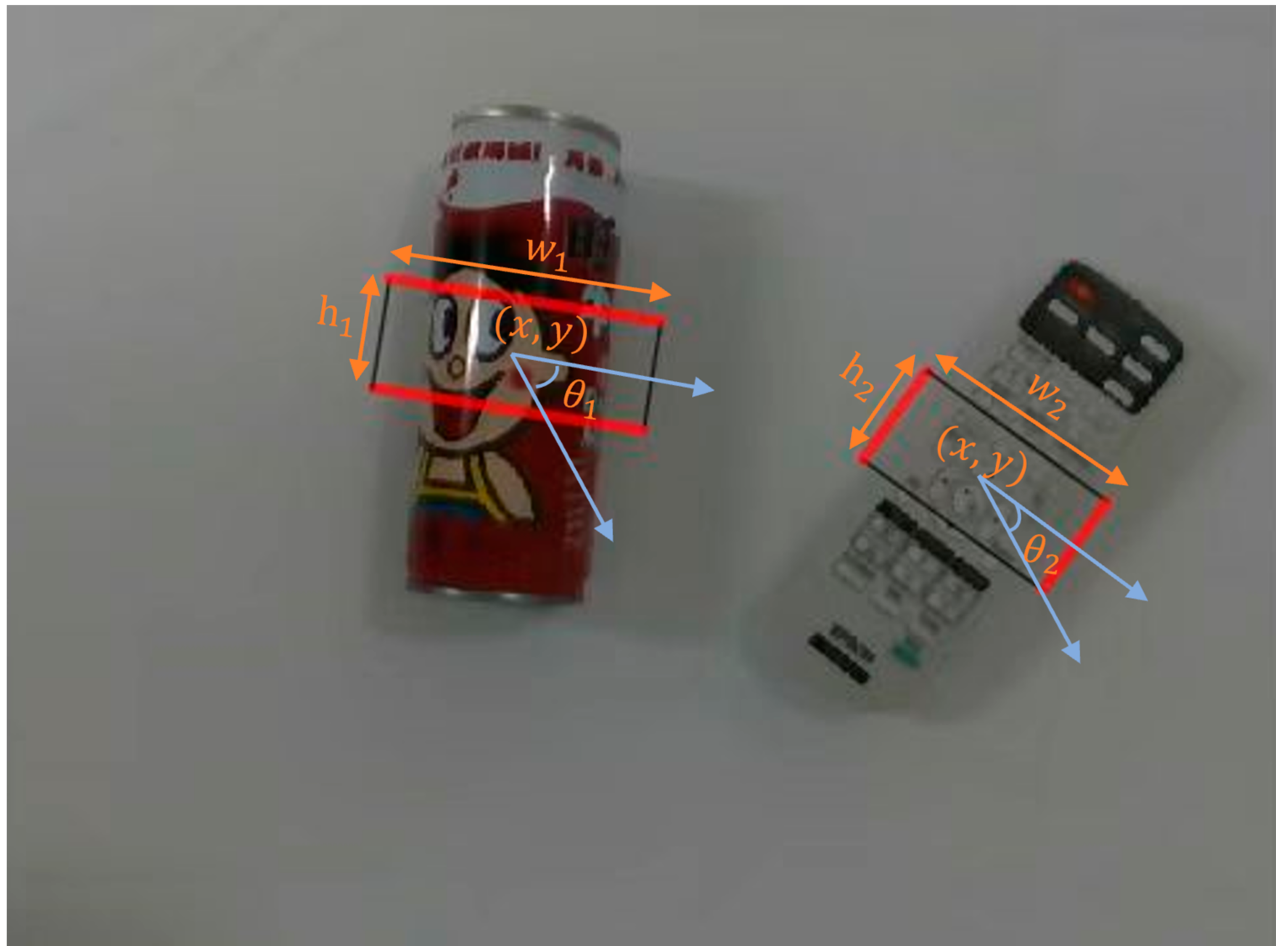

3.1. System Overview

3.2. Network Architecture

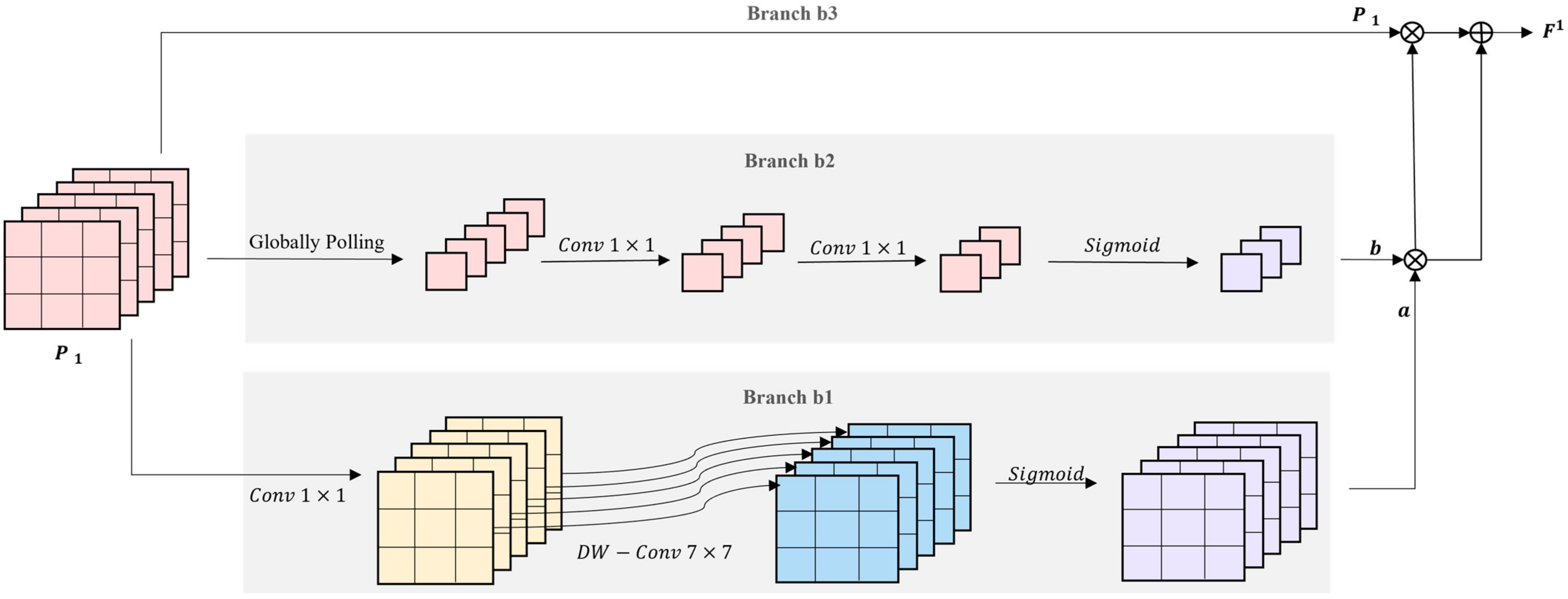

3.3. Feature Refine Module (FRM)

3.4. Box Redistribution (BR)

3.5. Loss Function

4. Experiment

4.1. Dataset

4.2. Training Details

4.3. Evaluation Metrics

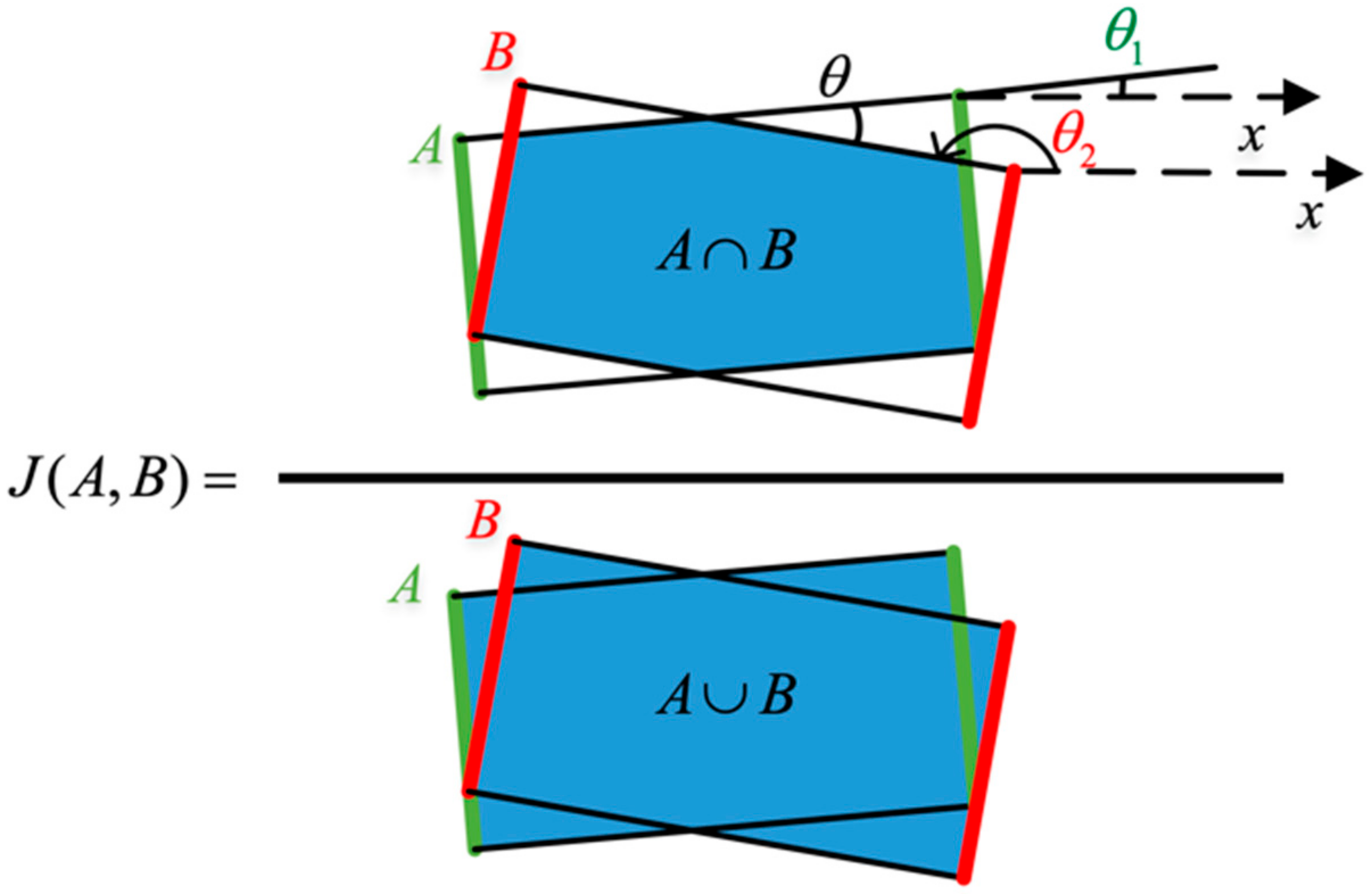

- (1) The angle difference between the predicted grasp rectangle and the ground-truth rectangle should be less than 30°.

- (2) The Jaccard index (intersection over union) between the predicted grasp rectangle and the ground-truth rectangle should be at least 25%. This is illustrated in Figure 4, where A is the ground-truth box and B is the predicted grasp box.
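The two conditions above can be checked with a short sketch. It assumes that each grasp is an oriented rectangle given as `(cx, cy, w, h, theta_deg)`, a parameterization that this section does not spell out. All function names are hypothetical, and the polygon overlap uses Sutherland-Hodgman clipping as an illustrative choice rather than the authors' implementation.

```python
import math


def rect_corners(cx, cy, w, h, theta_deg):
    """Corners of an oriented rectangle, in counter-clockwise order."""
    t = math.radians(theta_deg)
    c, s = math.cos(t), math.sin(t)
    half = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [(cx + x * c - y * s, cy + x * s + y * c) for x, y in half]


def polygon_area(pts):
    """Shoelace formula; returns 0.0 for an empty polygon."""
    n = len(pts)
    total = sum(pts[i][0] * pts[(i + 1) % n][1] - pts[(i + 1) % n][0] * pts[i][1]
                for i in range(n))
    return abs(total) / 2.0


def clip_convex(subject, clipper):
    """Sutherland-Hodgman: clip convex `subject` by convex CCW `clipper`."""
    output = list(subject)
    for i in range(len(clipper)):
        if not output:
            break
        a, b = clipper[i], clipper[(i + 1) % len(clipper)]

        def side(p):
            # >= 0 means p lies on the inner side of the directed edge a -> b
            return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

        current, output = output, []
        for j, cur in enumerate(current):
            prev = current[j - 1]
            d_cur, d_prev = side(cur), side(prev)
            if (d_cur >= 0) != (d_prev >= 0):
                # The segment prev -> cur crosses the clip edge: add the crossing
                t = d_prev / (d_prev - d_cur)
                output.append((prev[0] + t * (cur[0] - prev[0]),
                               prev[1] + t * (cur[1] - prev[1])))
            if d_cur >= 0:
                output.append(cur)
    return output


def jaccard_index(rect_a, rect_b):
    """Intersection over union of two oriented rectangles."""
    pa, pb = rect_corners(*rect_a), rect_corners(*rect_b)
    inter = polygon_area(clip_convex(pa, pb))
    union = polygon_area(pa) + polygon_area(pb) - inter
    return inter / union if union > 0 else 0.0


def angle_difference(theta_a, theta_b):
    """Smallest angle between two grasp orientations (180-degree symmetric)."""
    d = abs(theta_a - theta_b) % 180.0
    return min(d, 180.0 - d)


def is_correct_grasp(pred, gt, max_angle=30.0, min_jaccard=0.25):
    """Apply both conditions: angle < 30 degrees and Jaccard index >= 25%."""
    return (angle_difference(pred[4], gt[4]) < max_angle
            and jaccard_index(pred, gt) >= min_jaccard)


gt = (100.0, 100.0, 60.0, 20.0, 0.0)        # ground-truth box A
pred = (110.0, 100.0, 60.0, 20.0, 0.0)      # predicted box B, shifted by 10 px
print(round(jaccard_index(pred, gt), 3), is_correct_grasp(pred, gt))
```

In this example the shifted prediction overlaps the ground truth in a 50 × 20 region, so the Jaccard index is 1000 / 1400 and the grasp counts as correct. Rotating the same prediction by 45° would fail the angle condition regardless of overlap.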

4.4. Evaluation on VMRD Dataset

4.5. Ablation Study

- (1)

- Effectiveness of FRM

- (2)

- Position of FRM.

- (3)

- Effectiveness of Box Redistribution

- (4)

- Parameters of Box Redistribution

- (5)

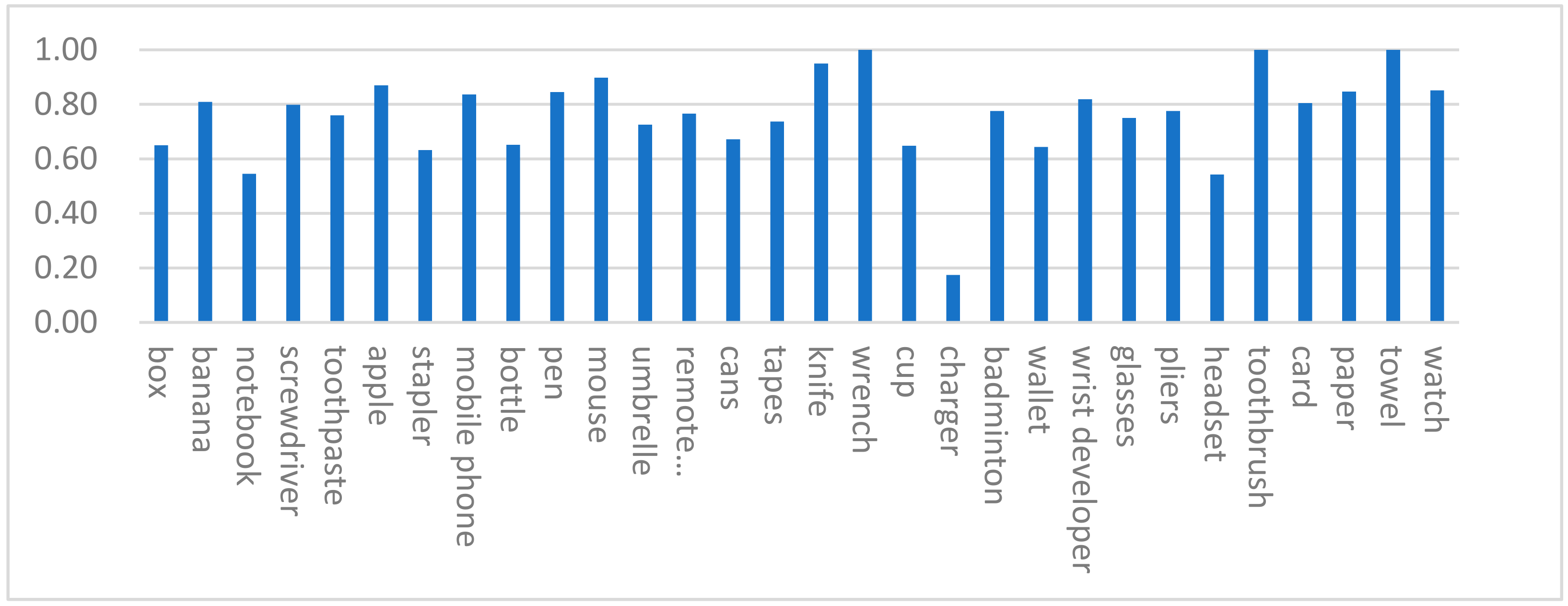

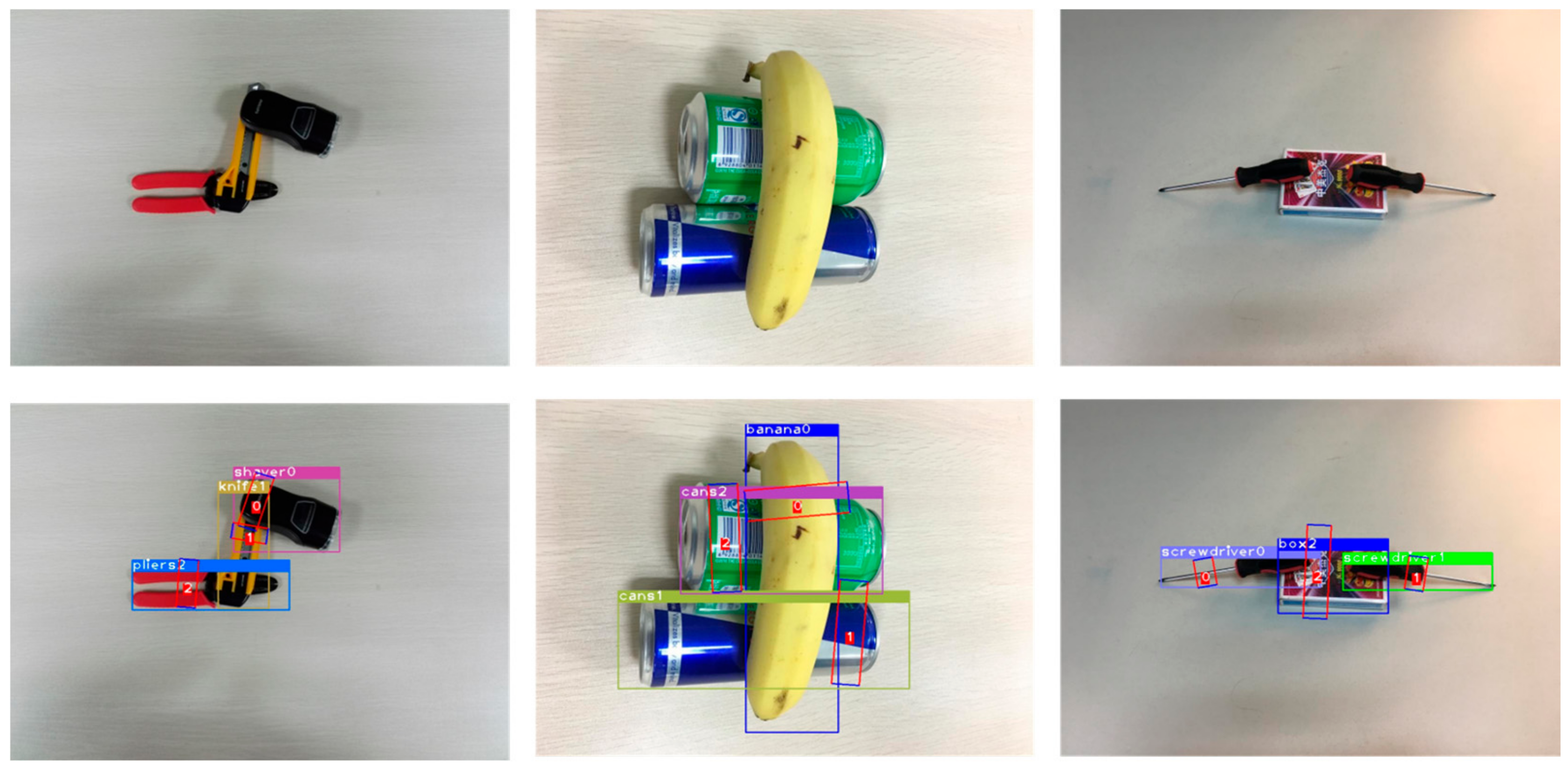

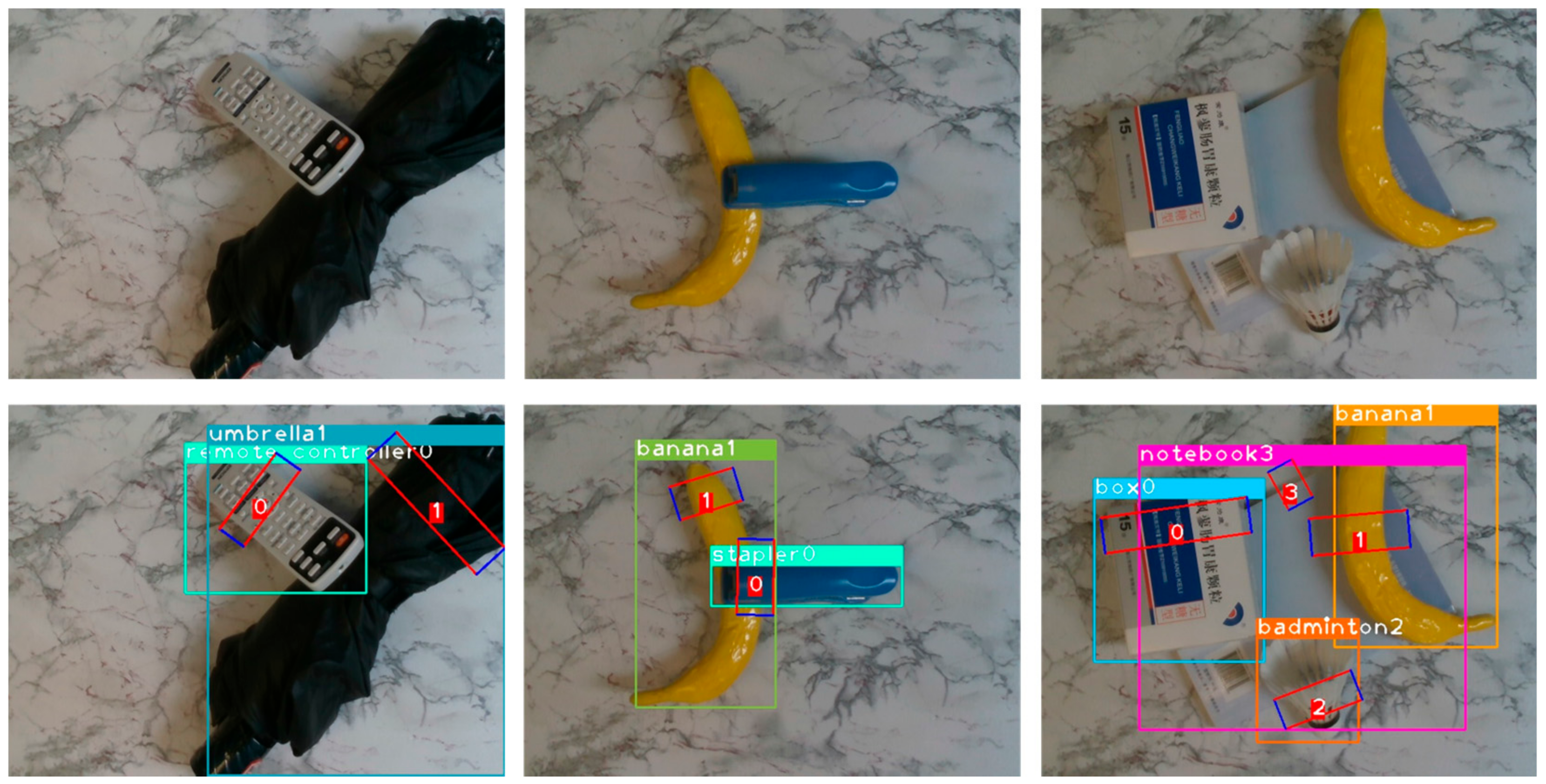

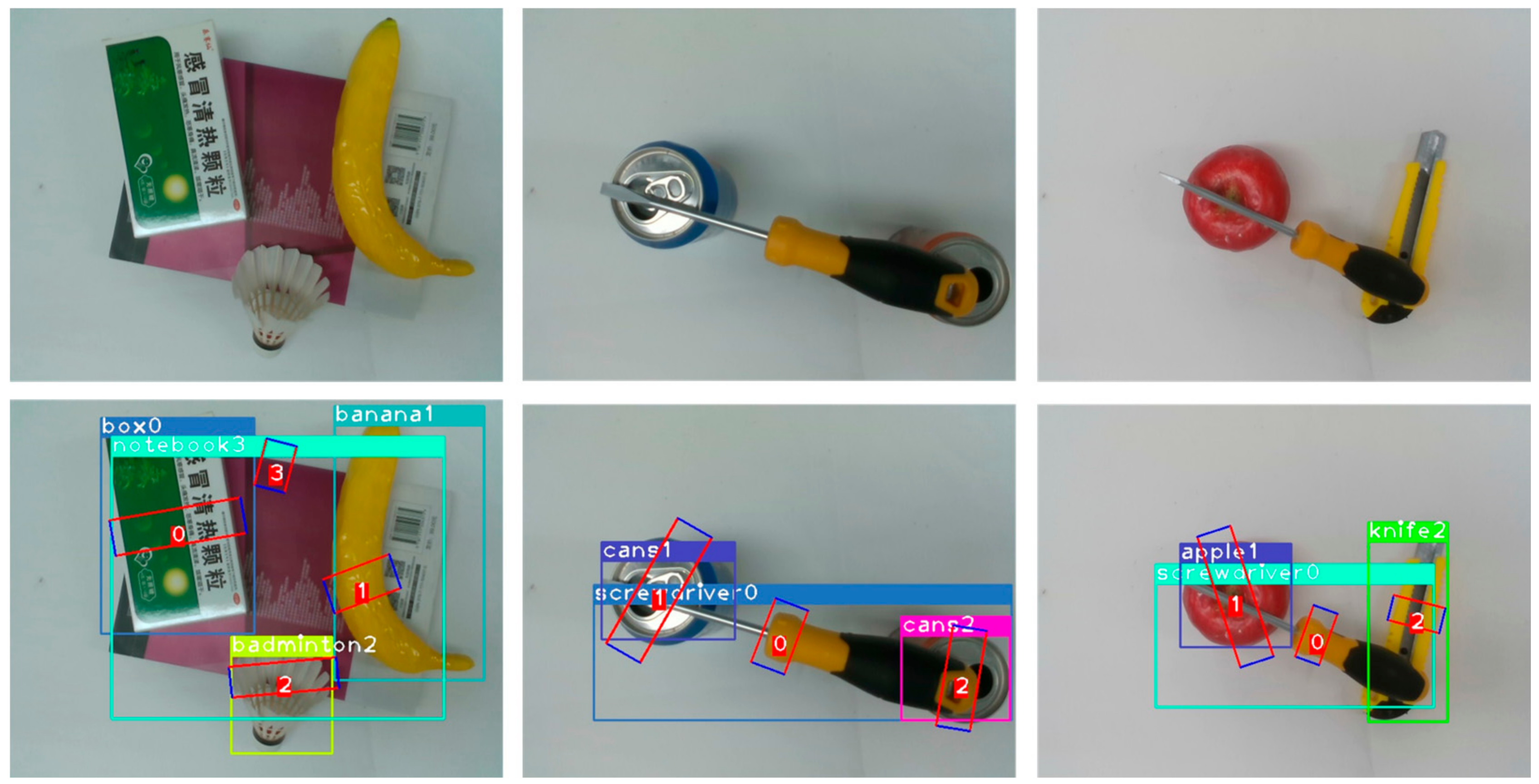

- Environmental adaptability Research

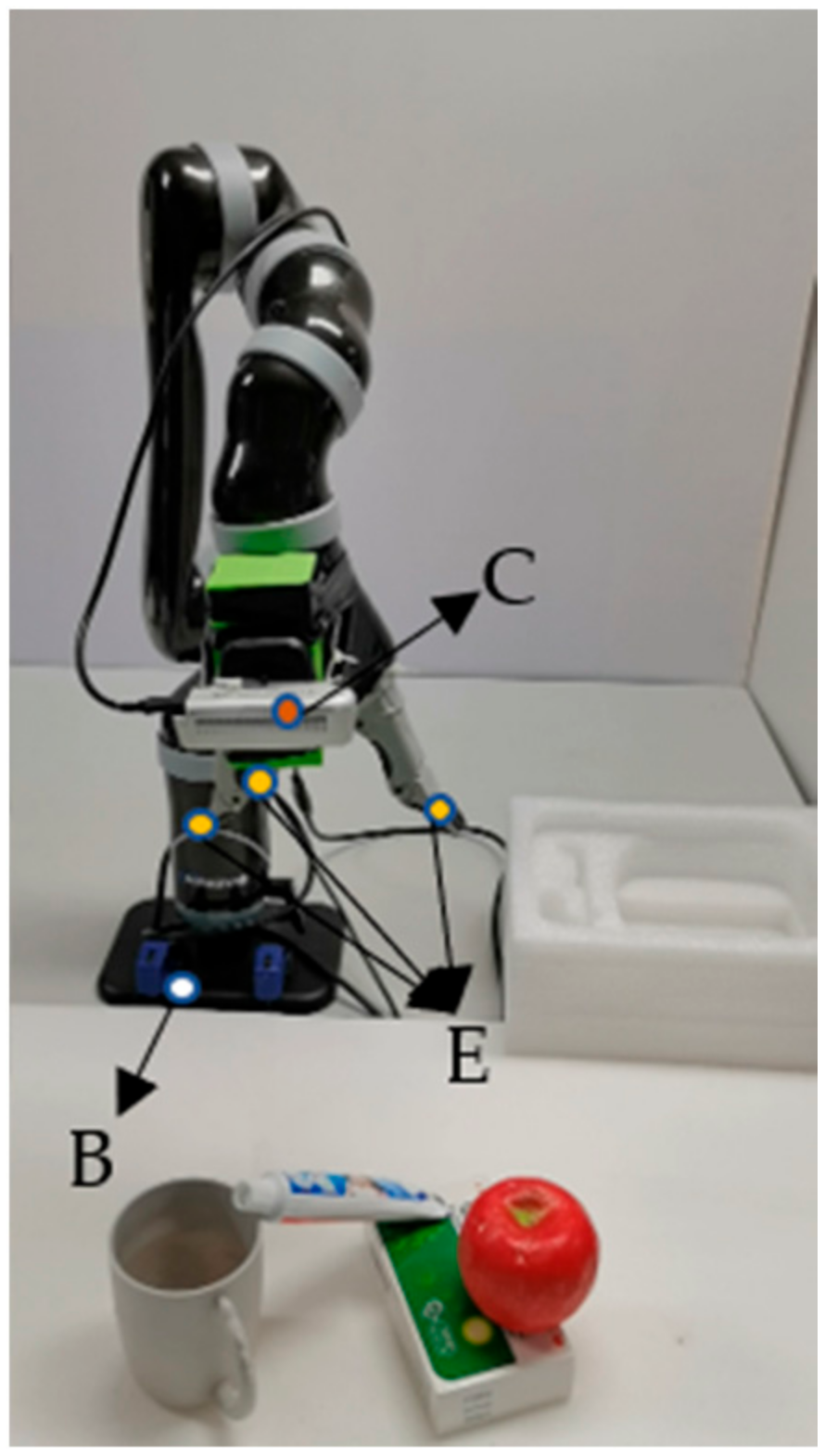

4.6. Experiment on Kinova Robotic Arm

4.6.1. Details of Equipment

4.6.2. Hand-Eye Calibration

4.6.3. Camera Calibration

4.6.4. Grasp Experiment on Kinova

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Jiang, Y.; Moseson, S.; Saxena, A. Efficient grasping from RGBD images: Learning using a new rectangle representation. In Proceedings of the International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 3304–3311.

- [2] Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 3406–3413.

- [3] Pinto, L.; Davidson, J.; Gupta, A. Supervision via competition: Robot adversaries for learning tasks. In Proceedings of the International Conference on Robotics and Automation, Singapore, 29 May–3 June 2017; pp. 1601–1608.

- [4] Chen, L.; Huang, P.; Meng, Z. Convolutional multi-grasp detection using grasp path for RGBD images. Robot. Auton. Syst. 2019, 113, 94–103.

- [5] Zhang, H.; Lan, X.; Bai, S.; Wan, L.; Yang, C.; Zheng, N. A multi-task convolutional neural network for autonomous robotic grasping in object stacking scenes. In Proceedings of the International Conference on Intelligent Robots and Systems, Macau, China, 3–8 November 2019; pp. 6435–6442.

- [6] Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- [7] Li, T.; Wang, F.; Ru, C.; Jiang, Y.; Li, J. Keypoint-based robotic grasp detection scheme in multi-object scenes. Sensors 2021, 21, 2132.

- [8] Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- [9] Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- [10] Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162.

- [11] Lenz, I.; Lee, H.; Saxena, A. Deep learning for detecting robotic grasps. Int. J. Robot. Res. 2015, 34, 705–724.

- [12] Yokota, Y.; Suzuki, K.; Kanazawa, Y.; Takebayashi, T. A multi-task learning framework for grasping-position detection and few-shot classification. In Proceedings of the International Symposium on System Integration, Honolulu, HI, USA, 12–15 January 2020; pp. 1033–1039.

- [13] Guo, D.; Kong, T.; Sun, F.; Liu, H. Object discovery and grasp detection with a shared convolutional neural network. In Proceedings of the International Conference on Robotics and Automation, Stockholm, Sweden, 16–21 May 2016; pp. 2038–2043.

- [14] Redmon, J.; Angelova, A. Real-time grasp detection using convolutional neural networks. In Proceedings of the International Conference on Robotics and Automation, Seattle, WA, USA, 26–30 May 2015; pp. 1316–1322.

- [15] Wu, G.; Chen, W.; Cheng, H.; Zuo, W.; Zhang, D.; You, J. Multi-object grasping detection with hierarchical feature fusion. IEEE Access 2019, 7, 43884–43894.

- [16] He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- [17] Wu, H.; Deng, J.; Wen, C.; Li, X.; Wang, C.; Li, J. CasA: A Cascade Attention Network for 3-D Object Detection from LiDAR Point Clouds. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–11.

- [18] Zhou, X.; Lan, X.; Zhang, H.; Tian, Z.; Zhang, Y.; Zheng, N. Fully convolutional grasp detection network with oriented anchor box. In Proceedings of the International Conference on Intelligent Robots and Systems, Madrid, Spain, 1–5 October 2018; pp. 7223–7230.

- [19] Park, D.; Seo, Y.; Shin, D.; Choi, J.; Chun, S.Y. A single multi-task deep neural network with post-processing for object detection with reasoning and robotic grasp detection. In Proceedings of the International Conference on Robotics and Automation, Paris, France, 31 May–31 August 2020; pp. 7300–7306.

- [20] Zhang, H.; Yang, D.; Wang, H.; Zhao, B.; Lan, X.; Ding, J.; Zheng, N. REGRAD: A large-scale relational grasp dataset for safe and object-specific robotic grasping in clutter. IEEE Robot. Autom. Lett. 2022, 7, 2929–2936.

- [21] Zhang, H.; Lan, X.; Zhou, X.; Tian, Z.; Zhang, Y.; Zheng, N. Visual Manipulation Relationship Network for Autonomous Robotics. In Proceedings of the IEEE-RAS 18th International Conference on Humanoid Robots (HUMANOIDS), Beijing, China, 6–9 November 2018.

- [22] Zhang, H.; Lan, X.; Bai, S.; Zhou, X.; Tian, Z.; Zheng, N. ROI-based robotic grasp detection for object overlapping scenes. In Proceedings of the International Conference on Intelligent Robots and Systems, Macau, China, 3–8 November 2019; pp. 4768–4775.

- [23] Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

| Algorithm | mAPg (%) |
|---|---|
| Faster-RCNN [9] + FCGN [18] | 54.5 |
| ROI-GD [22] | 68.2 |
| Zhang [5] | 70.5 |
| Keypoint-based scheme [7] | 74.3 |
| MMD | 74.57 |
| MMD + FRM | 76.02 |
| MMD + FRM + RR1 + RR2 | 76.71 (+2.41%) |

| FRM | RR1 | RR2 | mAPg (%) | mAPd (%) |
|---|---|---|---|---|
| | | | 74.57 | 94.68 |
| √ | | | 76.02 | 93.14 |
| | √ | | 75.73 | 94.46 |
| √ | √ | | 76.68 | 92.56 |
| | | √ | 74.58 | 94.73 |
| √ | | √ | 76.04 | 93.17 |
| | √ | √ | 75.76 | 94.48 |
| √ | √ | √ | 76.71 | 92.86 |

| | | | mAPg (%) | mAPd (%) |
|---|---|---|---|---|
| | | | 74.57 | 94.68 |
| √ | | | 76.71 | 92.86 |
| | √ | | 75.10 | 92.91 |
| | | √ | 1.84 | 94.60 |
| √ | √ | | 75.10 | 92.91 |
| √ | | √ | 1.84 | 94.60 |
| | √ | √ | 1.69 | 92.88 |
| √ | √ | √ | 1.69 | 92.88 |

| FRM | RR1 | RR2 | mAPg (%) | mAPd (%) |
|---|---|---|---|---|
| | | | 74.57 | 94.68 |
| | √ | | 75.73 | 94.46 |
| | | √ | 74.58 | 94.73 |
| | √ | √ | 75.76 | 94.48 |
| √ | | | 76.02 | 93.14 |
| √ | √ | | 76.68 | 92.56 |
| √ | | √ | 76.04 | 96.17 |
| √ | √ | √ | 76.71 | 92.86 |

| | | mAPg (%) | mAPd (%) |
|---|---|---|---|
| 0.1 | 0.9 | 75.61 | 93.13 |
| 0.2 | 0.8 | 76.71 | 92.86 |
| 0.3 | 0.7 | 74.35 | 92.63 |
| 0.4 | 0.6 | 74.03 | 92.51 |
| 0.5 | 0.5 | 72.12 | 91.9 |
| 0.6 | 0.4 | 72.05 | 91.91 |
| 0.7 | 0.3 | 72.94 | 91.7 |
| 0.8 | 0.2 | 72.25 | 91.2 |
| 0.9 | 0.1 | 73.29 | 91.15 |

| Method | Number of Experiments | Number of Successes | Grasp Success Rate (%) |
|---|---|---|---|
| Cascade R-CNN [10] | 150 | 142 | 94.67 |
| MMD + FRM + RR1 + RR2 | 150 | 146 | 97.33 |
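The grasp success rate in the table above is the number of successful grasps divided by the number of attempts. A minimal sketch of that arithmetic, using the counts reported in the table (the function name and the dictionary layout are hypothetical):

```python
def success_rate(successes, trials):
    """Grasp success rate in percent."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    return 100.0 * successes / trials


# (successes, trials) per method, as reported in the grasp experiment
results = {
    "Cascade R-CNN": (142, 150),
    "MMD + FRM + RR1 + RR2": (146, 150),
}

for method, (successes, trials) in results.items():
    print(f"{method}: {success_rate(successes, trials):.2f}%")
```

With these counts, the two methods differ by four successful grasps out of 150 attempts.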


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dong, X.; Jiang, Y.; Zhao, F.; Xia, J. A Practical Multi-Stage Grasp Detection Method for Kinova Robot in Stacked Environments. Micromachines 2023, 14, 117. https://doi.org/10.3390/mi14010117
