1. Introduction

Population ageing is becoming an increasingly serious problem. People with disabilities account for around 15% of the world’s population, of whom 285 million are visually impaired or blind. Wheelchairs are an indispensable mobility aid for people with disabilities, and annual demand for wheelchairs exceeds 30 million. At the same time, as wheelchairs have come into widespread use, wheelchair users and their guardians have become more concerned about wheelchair comfort and safety, which has driven the rapid development of research on smart wheelchairs [1]. Smart wheelchairs build sensor networks by installing cameras, lidar, EEG (electroencephalogram) sensors and other devices on traditional wheelchairs. They provide users with comprehensive sensing, human-machine interaction, remote monitoring and mobility control functions, are smarter and safer to use than traditional electric or manual wheelchairs, and represent the future direction of wheelchair development.

The perception function of an intelligent wheelchair covers both the external environment and the user. In terms of occupant perception, Zhang Zhen built a wheelchair safety monitoring system with sensors for positioning, pressure sensing and body temperature measurement, whose data could be viewed in real time on a mobile phone [2]. Rahimunnisa installed an MPU6050 sensor (InvenSense, Sunnyvale, CA, USA) and an SpO2 sensor on a wheelchair to detect the orientation of the user’s hand and the user’s health parameters, while synchronising the health data to the cloud for viewing [3]. Basak et al. developed a smart wheelchair based on gesture recognition by installing a gesture detection sensor on the wheelchair [4].

In the area of environment perception, Zhanyinze et al. proposed a vehicle target recognition algorithm based on the fusion of lidar and infrared images [5]. Chenguang Liu et al. built a real-time 3D lidar target recognition platform for unmanned boats, addressing key technologies such as laser point cloud processing, target segmentation and remote interaction with point cloud images [6]. Xiangmo Zhao et al. fused lidar range data with camera images, using the lidar to obtain regions of interest that were then fed, together with the image data, into a CNN (convolutional neural network) for training, achieving accurate recognition of objects around cars [7]. Radhika Ravi et al. studied lane width estimation from laser point clouds collected by a moving platform: road surface points were extracted from the point clouds, pavement markings were extracted and clustered using intensity thresholds, and lane widths were calculated from equally spaced centreline points with an accuracy of 3 cm [8]. Reza et al. achieved beacon recognition on roads from light detection and ranging (lidar) 3D data using linear and kernel (non-linear) support vector machines and multiple kernel learning [9].

Intelligent wheelchairs offer three movement control modes: handle control, occupant state detection and autonomous navigation. In handle control mode, the user controls the direction and speed of the wheelchair with an electric handle; in occupant state detection mode, the wheelchair is controlled by detecting the user’s head posture, pupil centre position, electromyographic signals or EEG signals [10,11,12,13]. Wang Beiyi designed an intelligent wheelchair that combines head movement control with traditional button control; it detects the user’s head posture to control the wheelchair and also provides distance detection and alarm functions [14]. Tang Xianlun et al. installed an EEG acquisition module on a wheelchair to collect the user’s motor imagery EEG signals for wheelchair control [15]. Wu Jiabao et al. installed a visual tracking device that captures and analyses the position of the user’s eyes to control the wheelchair’s movement [16]. Javeria Khan investigated a wheelchair control mode based on EEG signals [17], and Rosero-Montalvo investigated one based on human posture [18]. Al-Wesabi et al. combined brain activity, blink frequency and an Arduino controller to enable hands-free wheelchair mobility for disabled people [19]. Baiju et al. designed a smart wheelchair with both manual and automatic control modes, using infrared sensors to assist movement in manual mode and a camera with a Raspberry Pi for obstacle avoidance and image processing in automatic mode [20]. Autonomous navigation is achieved by installing inertial sensors, lidar, vision and range sensors on the wheelchair to sense its surroundings [21,22]. Megalingam et al. installed a 2D lidar on a wheelchair to enable autonomous navigation within a specified area without human control [23].

This paper studies the perception, control and human-machine interaction of intelligent wheelchairs. Its main contributions are as follows:

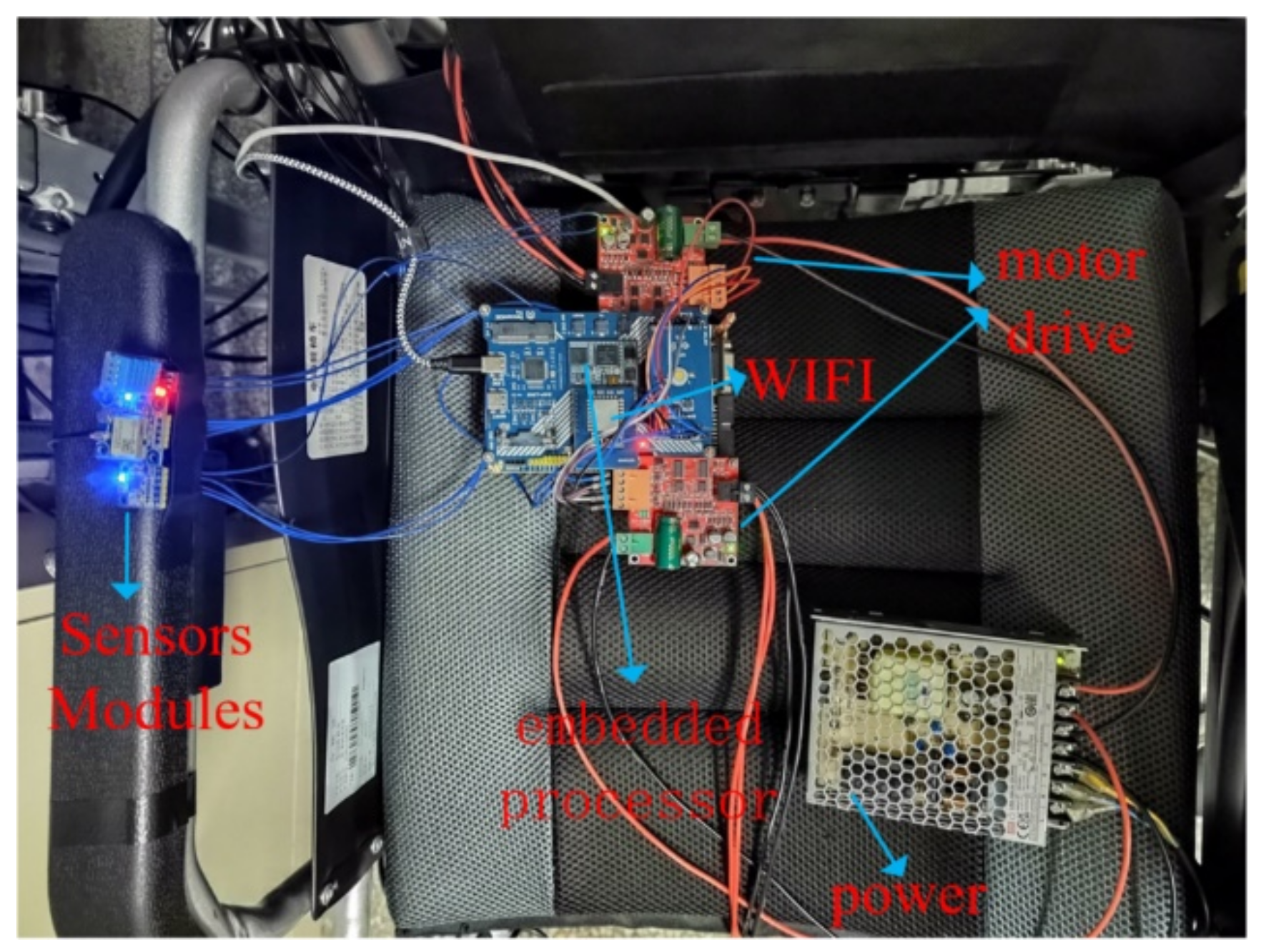

(1) Multi-mode human-wheelchair-environment sensing: changes in the occupant’s gestures are sensed with a gesture recognition sensor to achieve occupant perception; the attitude angles, position and speed of the moving wheelchair are detected with an attitude sensor and a GPS positioning sensor to achieve wheelchair state perception; and lidar together with temperature and humidity sensors identifies road information, such as lane lines and pedestrians, and environmental conditions to achieve environment perception.

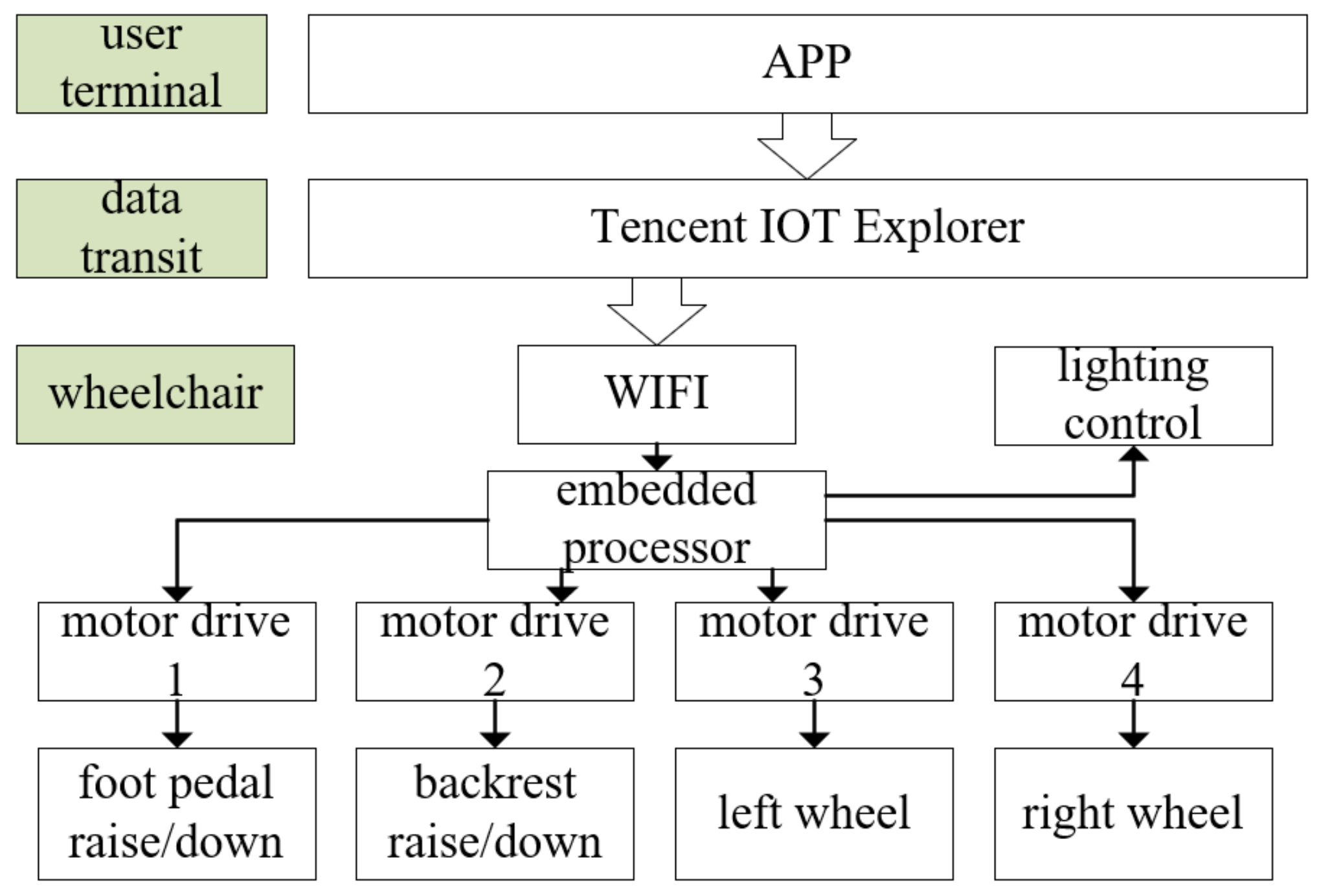

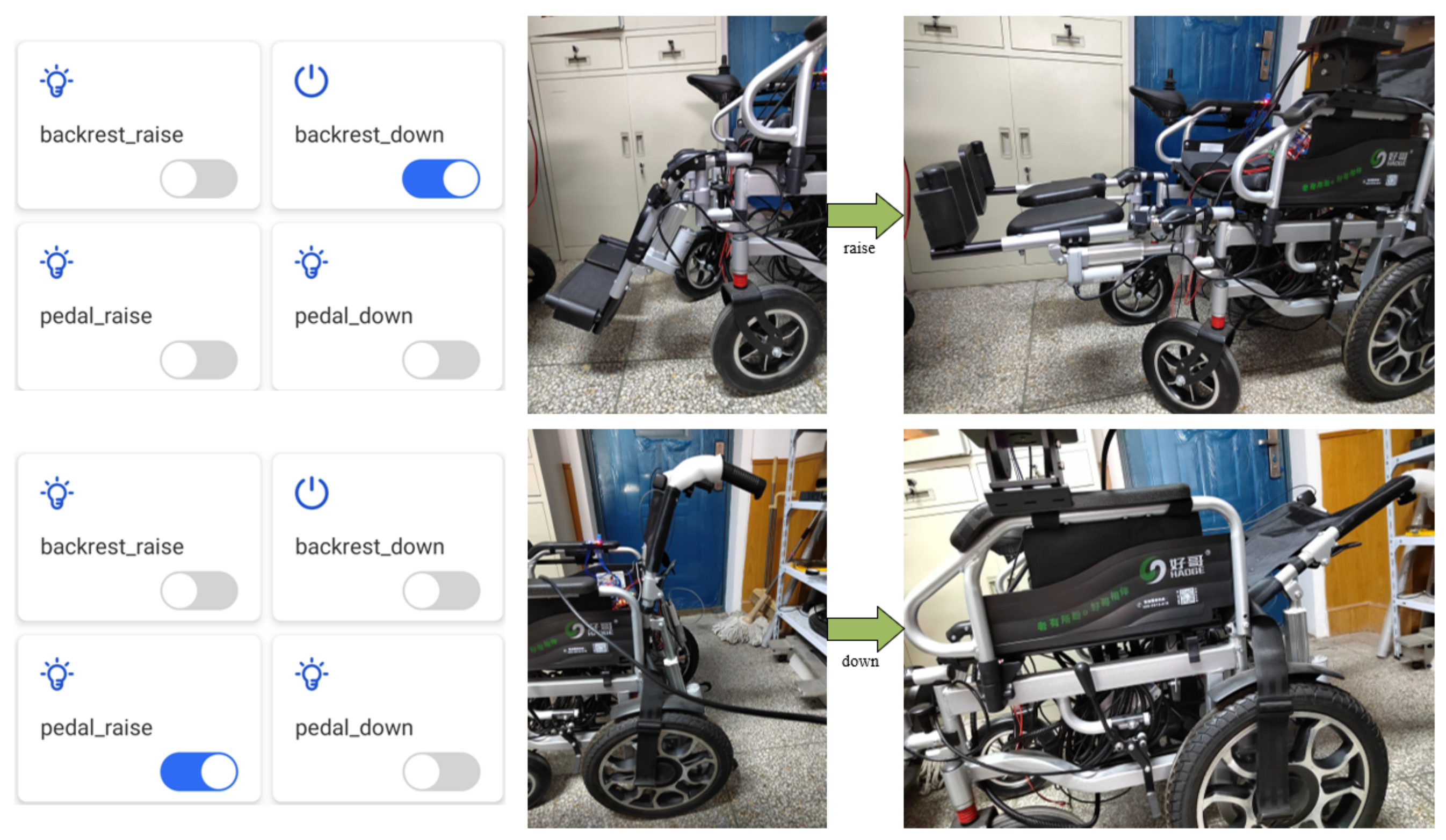

(2) A multi-mode wheelchair control solution: a handle is installed on a traditional hand-push wheelchair so that it can be controlled quickly and easily; a gesture control system uses the gestures identified by the gesture sensor, together with an embedded processor and motor driver, to control wheelchair movement, making it suitable for people with hand disabilities who cannot grasp the handle; and a remote control system is developed based on an app and the Tencent IoT Explorer. The wheelchair, Tencent Cloud and the mobile phone exchange sensing data and control commands via the MQTT protocol, so that users and guardians can view the sensing data and remotely control the wheelchair, including its movement, footrest and backrest, on their mobile phones, further improving the safety of the wheelchair.

The remainder of the paper is organised as follows. Section 2 presents the general design of the intelligent wheelchair. Section 3 presents the study of multimodal sensing technology for wheelchairs, including user sensing, wheelchair state sensing and environment sensing. Section 4 presents the local and remote control of the wheelchair. Experiments with the wheelchair are presented in Section 5, and Section 6 concludes the paper.

3. Smart Wheelchair Multi-Mode Sensing Technology

Traditional wheelchairs have the following problems in use: (1) In terms of mobility control, handle control is designed mainly for able-bodied users, so users with missing palms or who are unable to hold the handle must rely on others. An occupant detection device therefore needs to be installed on the wheelchair so that these users can operate it themselves. (2) With a conventional wheelchair, the user’s family members cannot obtain status information, such as the wheelchair’s location, in a timely manner, and so cannot respond promptly to emergencies experienced by the user. (3) The occupant must pay attention to the environment around the wheelchair at all times, which increases the burden of using it.

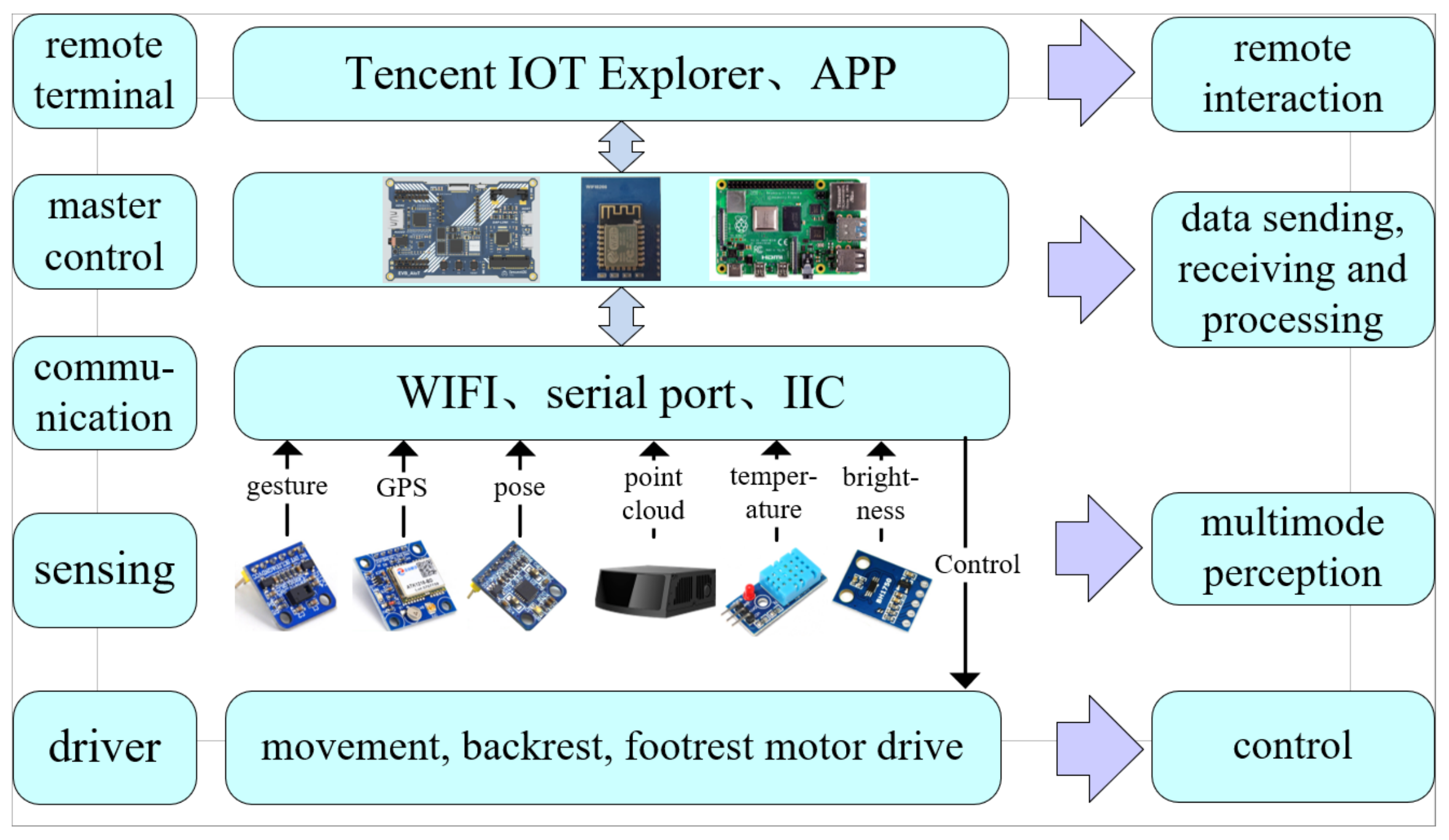

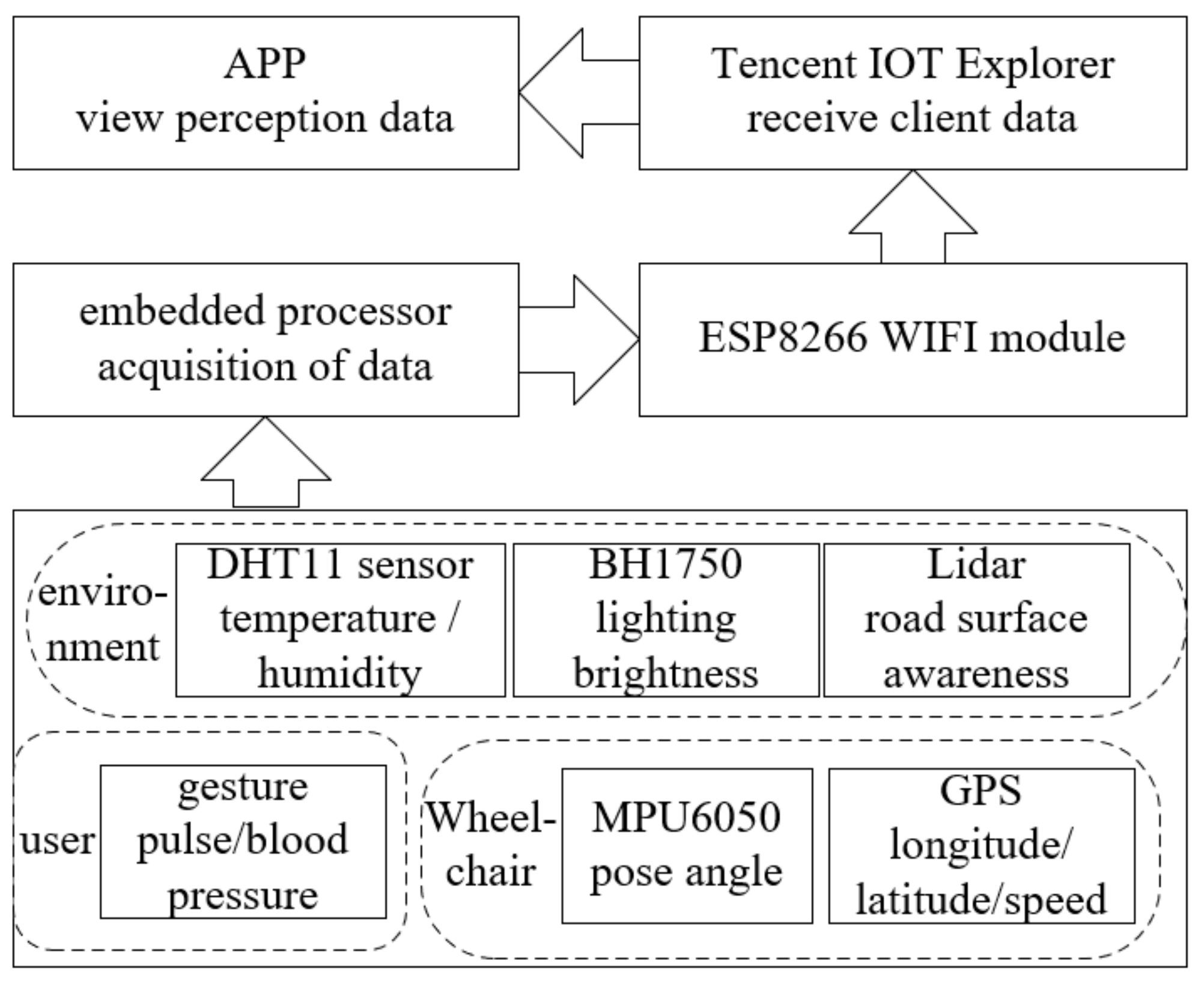

In response to these problems, this article proposes a multi-mode sensing scheme for wheelchairs and a remote viewing scheme for the sensing data, as shown in Figure 2. We first propose a multi-mode perception technology comprising occupant perception, state perception and environment perception. To solve problem (1), a gesture recognition sensor is installed on the wheelchair and the microcontroller receives the user’s hand movement data from it over the IIC protocol to achieve gesture perception. For problem (2), MEMS (Micro-Electro-Mechanical System) attitude sensors and positioning sensors are installed on the wheelchair to achieve state perception. For problem (3), lidar and weather sensors are installed on the wheelchair, and the lidar recognition algorithm is invoked to collect road information and weather data to achieve environment perception.

In addition, we designed an MQTT-based remote viewing solution for the wheelchair’s sensing data. The embedded processor encapsulates the sensing data into frames and uses the WiFi module to transmit them in real time to the Tencent IoT Explorer. The mobile phone app is also connected to the IoT Explorer and automatically refreshes its interface whenever the data are updated.

Figure 2 shows the architecture design of the multi-mode sensing solution.

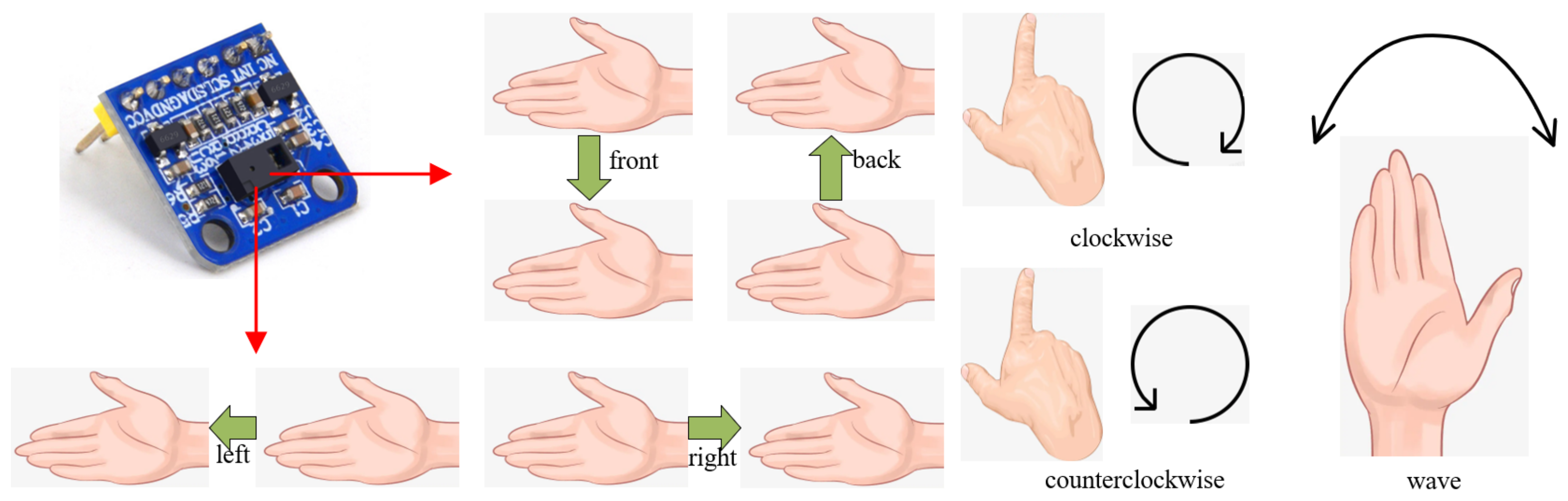

3.1. User State Awareness Based on Gesture Sensor

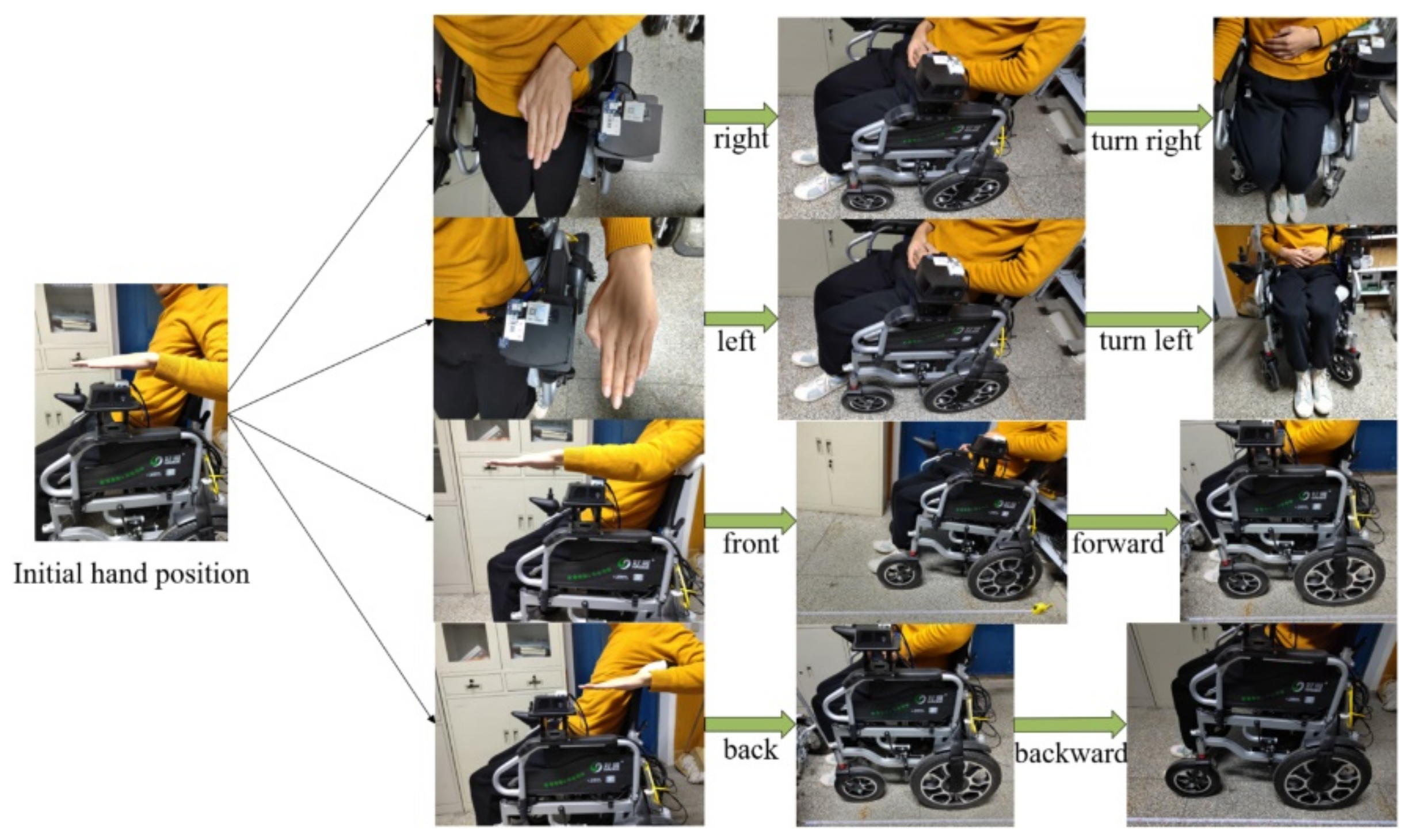

People with muscle atrophy or arm weakness cannot control the wheelchair with a joystick or by hand-pushing, and a joystick can be too sensitive for some elderly users. We therefore designed a user state sensing solution based on gesture recognition, in which a gesture recognition sensor installed on the wheelchair detects changes in the user’s gestures, and the recognised gestures are also used to control the movement of the wheelchair. In addition, pulse and blood pressure sensors are installed on the wheelchair to fully sense the physiological status of the user.

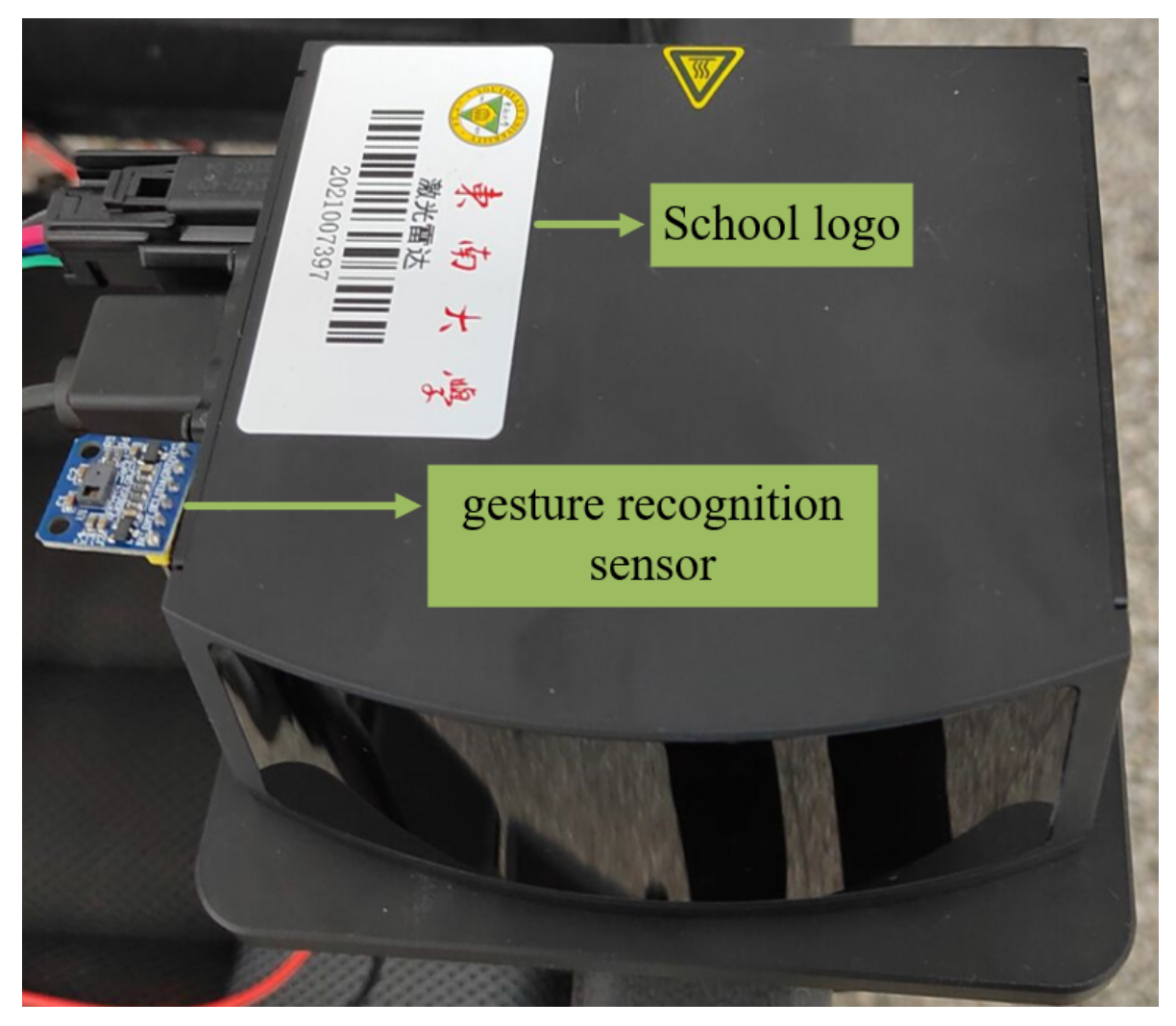

Considering recognition accuracy, cost and practicality, this paper uses the ATK-PAJ7620 high-performance gesture recognition sensor module from Alientek, Guangzhou, China, which is based on the PAJ7620U2 chip from PixArt Imaging and supports the recognition of 9 gesture types, such as left, right, forward and backward [24].

Figure 3 shows the physical diagram of the sensor and the gestures that can be recognized.

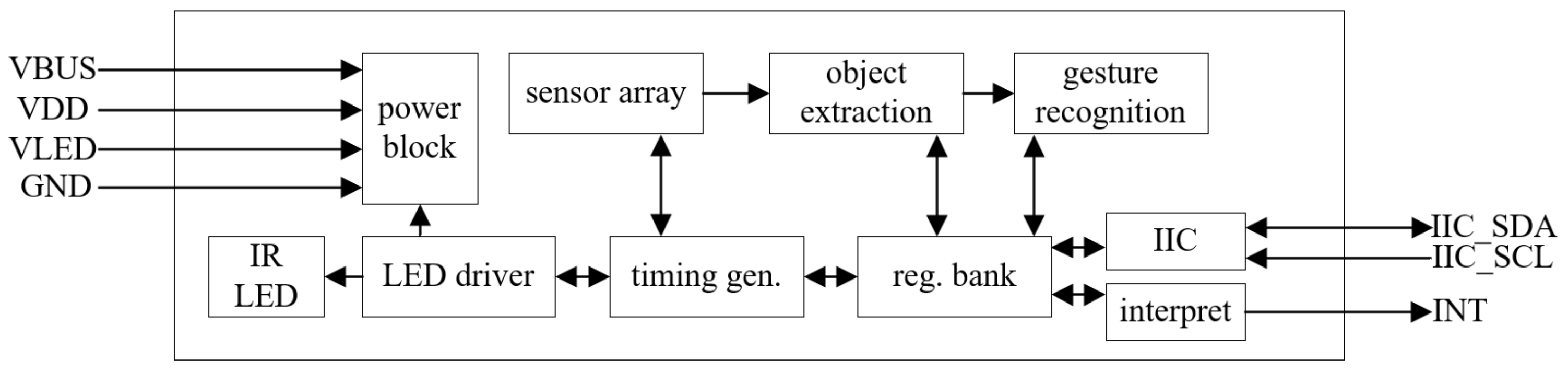

The functional block diagram of the PAJ7620U2 chip made by PixArt, Taiwan, China is shown in Figure 4. During operation, the internal LED (Light Emitting Diode) driver drives the infrared LED to emit infrared signals. When the sensor array detects an object within the effective distance, the target information extraction array acquires the characteristic raw data of the detected target and stores them in registers; the gesture recognition array then processes the raw data, stores the recognition result in a register and outputs it over the IIC bus.

To use the PAJ7620, the IIC interface of the microcontroller is connected to the sensor, which is then driven in three steps: wake-up, initialisation and recognition test. During the test, gesture information is obtained by reading and writing the sensor’s two internal register banks.
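The register read-out at the end of this procedure can be illustrated with a small decoder. This is a minimal sketch, not the Alientek driver: it assumes, from our reading of the PAJ7620U2 datasheet, that after Bank 0 is selected (writing 0x00 to register 0xEF) the gesture flags are held in registers 0x43 and 0x44 in the bit order of the hypothetical `FLAGS_0X43` table. The bit-to-direction mapping depends on how the sensor is mounted and should be checked against the datasheet; the IIC reads themselves are omitted.

```python
# Hypothetical decoder for the PAJ7620U2 gesture-result registers.
# Assumptions (verify against the PixArt datasheet and sensor orientation):
#   - Bank 0 is selected by writing 0x00 to the bank-select register 0xEF.
#   - Register 0x43 holds eight gesture flags; bit 0 of register 0x44 flags "wave".
from typing import List

FLAGS_0X43 = ["up", "down", "left", "right",
              "forward", "backward", "clockwise", "anticlockwise"]
FLAGS_0X44 = ["wave"]


def decode_gestures(reg43: int, reg44: int) -> List[str]:
    """Return the names of all gesture flags set in the two result bytes."""
    if not (0 <= reg43 <= 0xFF and 0 <= reg44 <= 0xFF):
        raise ValueError("register values must be single bytes")
    found = [name for bit, name in enumerate(FLAGS_0X43) if reg43 & (1 << bit)]
    found += [name for bit, name in enumerate(FLAGS_0X44) if reg44 & (1 << bit)]
    return found
```

Because several flags may be set in one read, a caller would need a selection rule (for example, taking the first flag) before turning a gesture into a motion command; that rule is a design choice the text does not specify.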

3.2. Wheelchair Status Awareness

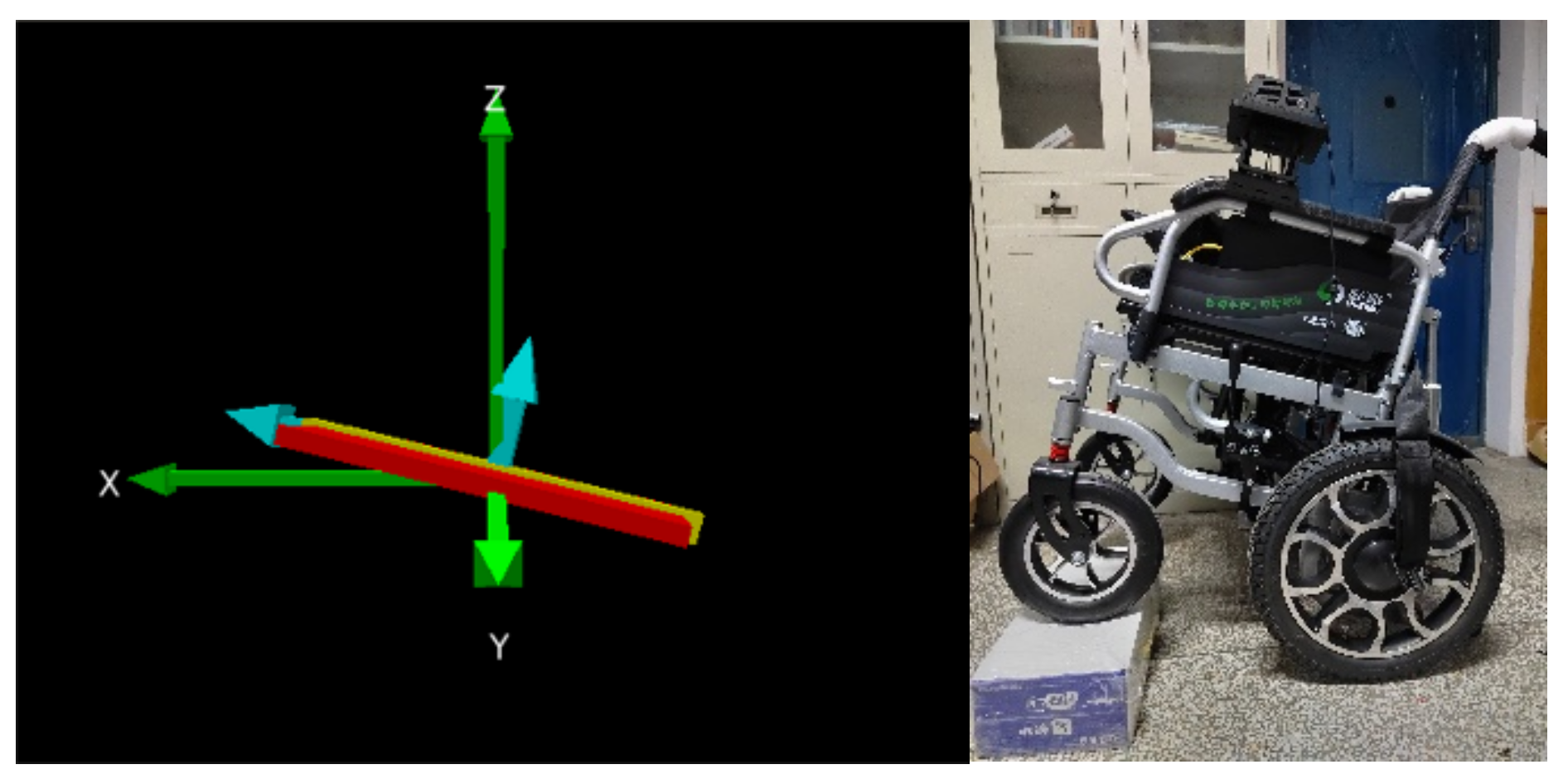

3.2.1. MEMS Sensor Based Wheelchair Posture Sensing

Wheelchairs can tip over during movement because of road conditions or improper handling by the user, so the posture of the wheelchair needs to be sensed and transmitted to the remote guardian in real time, so that a user whose wheelchair has tipped over is not left without rescue. We used the ATK-MPU6050 six-axis attitude sensor made by Alientek, Guangzhou, China [25], which communicates over the IIC (Inter-Integrated Circuit) protocol and outputs three-axis acceleration and three-axis gyroscope data, enabling real-time monitoring of the wheelchair’s attitude angles, as shown in Figure 5.

The raw output of the MPU6050 sensor (InvenSense, Sunnyvale, CA, USA) consists of acceleration and angular velocity data, which are not intuitive for the user to view, so this article uses the MPU6050’s built-in Digital Motion Processor (DMP) to convert the raw data into a quaternion, denoted $[q_0, q_1, q_2, q_3]$, and then converts the quaternion into attitude angles using Equation (1).

where pitch, yaw and roll are the pitch, yaw and roll angles, respectively, and $q_0$, $q_1$, $q_2$ and $q_3$ are the quaternion components solved by the sensor. Using this formula, the attitude angles can be obtained.
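Since Equation (1) is not reproduced here, the conversion can be illustrated with the widely used Z-Y-X (yaw-pitch-roll) formulas. This is a sketch under that assumed convention and may differ in sign or axis order from the authors’ Equation (1). DMP quaternions are commonly read as Q30 fixed-point integers (divided by 2^30); because the function normalises its input, scaled or unscaled components give the same angles.

```python
import math
from typing import Tuple


def quaternion_to_euler(q0: float, q1: float, q2: float, q3: float) -> Tuple[float, ...]:
    """Convert a quaternion (q0 = scalar part) to (pitch, yaw, roll) in degrees.

    Assumed convention: Z-Y-X (yaw, then pitch, then roll) rotation order.
    """
    norm = math.sqrt(q0 * q0 + q1 * q1 + q2 * q2 + q3 * q3)
    if norm == 0.0:
        raise ValueError("zero quaternion")
    q0, q1, q2, q3 = (q / norm for q in (q0, q1, q2, q3))
    roll = math.atan2(2.0 * (q0 * q1 + q2 * q3), 1.0 - 2.0 * (q1 * q1 + q2 * q2))
    # Clamp so that rounding noise cannot push asin outside its domain.
    sin_pitch = max(-1.0, min(1.0, 2.0 * (q0 * q2 - q3 * q1)))
    pitch = math.asin(sin_pitch)
    yaw = math.atan2(2.0 * (q0 * q3 + q1 * q2), 1.0 - 2.0 * (q2 * q2 + q3 * q3))
    return tuple(math.degrees(a) for a in (pitch, yaw, roll))
```

For example, a quaternion representing a pure 90° rotation about the vertical axis, `(cos 45°, 0, 0, sin 45°)`, yields a yaw of about 90° with zero pitch and roll.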

3.2.2. GPS Module Based Wheelchair Position Awareness

To help the guardian obtain the location of the wheelchair user in real time, the ATK-GPS/BeiDou module from Alientek, Guangzhou, China was installed [26]. It uses the compact S1216F8-BD module made by SkyTraq, Hsinchu, Taiwan, China, which has a positioning accuracy of 2.5 m CEP (2.0 m CEP with SBAS), allowing the loved ones of wheelchair users to obtain real-time information on the wheelchair’s location.

The positioning module communicates with the external embedded controller via a serial port and outputs GPS/BeiDou positioning data, including longitude, latitude and speed, according to the NMEA-0183 protocol; the module itself is configured using the SkyTraq protocol. NMEA-0183 transfers positioning information as ASCII text, and each frame has the following format:

`$aaccc,ddd,ddd,…,ddd*hh(CR)(LF)`

where `$` is the start character, aaccc is the address field (a two-character talker identifier followed by a three-character sentence type), ddd,…,ddd are the data fields, `*` is the checksum prefix, hh is the checksum and (CR)(LF) is the end-of-frame flag.
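The frame format above can be checked and parsed in a few lines. The sketch below validates the checksum, which NMEA-0183 defines as the XOR of all characters between `$` and `*`, and extracts latitude, longitude and speed from an RMC (recommended minimum) sentence. Which sentences the S1216F8-BD emits, and its talker identifier (for example GP, BD or GN), are assumptions to confirm against the module manual, so the parser checks only the sentence type.

```python
from functools import reduce
from typing import List, Optional, Tuple


def nmea_checksum(body: str) -> int:
    """XOR of every character between '$' and '*' (NMEA-0183 rule)."""
    return reduce(lambda acc, ch: acc ^ ord(ch), body, 0)


def split_frame(frame: str) -> Tuple[str, List[str]]:
    """Validate '$aaccc,ddd,...,ddd*hh(CR)(LF)' and return (address, data fields)."""
    frame = frame.strip()                      # drops the (CR)(LF) terminator
    if not frame.startswith("$") or "*" not in frame:
        raise ValueError("not an NMEA frame")
    body, hh = frame[1:].split("*", 1)
    if int(hh, 16) != nmea_checksum(body):
        raise ValueError("checksum mismatch")
    fields = body.split(",")
    return fields[0], fields[1:]


def to_degrees(value: str, hemisphere: str) -> float:
    """Convert NMEA ddmm.mmmm / dddmm.mmmm to signed decimal degrees."""
    dot = value.index(".")
    degrees = float(value[:dot - 2])
    minutes = float(value[dot - 2:])
    result = degrees + minutes / 60.0
    return -result if hemisphere in ("S", "W") else result


def parse_rmc(frame: str) -> Optional[Tuple[float, float, float]]:
    """Return (latitude, longitude, speed in km/h) from an RMC frame, or None without a fix."""
    address, d = split_frame(frame)
    if not address.endswith("RMC"):
        raise ValueError("not an RMC sentence: " + address)
    if d[1] != "A":                            # 'V' means no valid fix
        return None
    latitude = to_degrees(d[2], d[3])
    longitude = to_degrees(d[4], d[5])
    speed_kmh = float(d[6]) * 1.852            # speed over ground is reported in knots
    return latitude, longitude, speed_kmh
```

Rejecting frames with a bad checksum before parsing keeps corrupted serial data from reaching the app as a false location.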

Figure 6 shows the ATK-S1216F8-BD positioning module and its active antenna with an SMA connector.

3.3. Wheelchair External Environment Awareness

3.3.1. Road Surface Perception Based on 3D Lidar

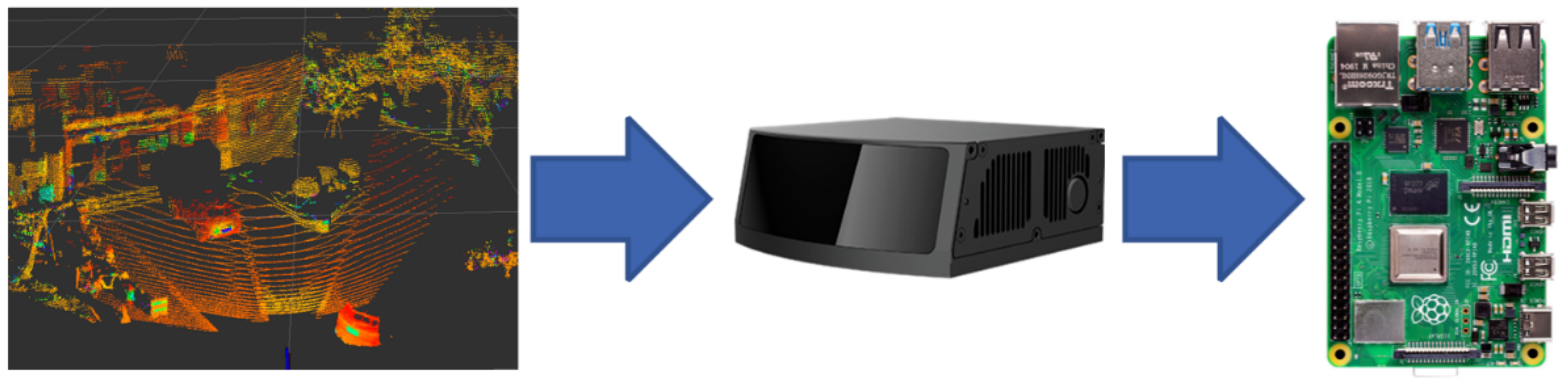

To sense the road environment in real time and assess the safety status of the moving wheelchair, this article uses the RS-LiDAR-M1 3D lidar from RoboSense, Shanghai, China, which the manufacturer describes as the world’s first and only lidar for self-driving passenger cars based on MEMS intelligent scanning and AI algorithm technology. It outputs 3D point cloud coordinates, reflection intensity and time stamps via its main data stream output protocol (MSOP).

Figure 7 shows a physical view of the lidar, the collected point cloud and the connection to the processor. The lidar parameters are listed in Table 1 [27].

In addition, the lidar integrates road environment sensing algorithms that combine traditional geometry-based point cloud algorithms with data-driven deep learning algorithms. First, the 3D point cloud is downsampled: the large-scale point cloud is divided into a 3D voxel grid, and the $k$ points in each voxel are replaced by their centroid

$$\bar{p} = \frac{1}{k}\sum_{i=1}^{k} p_i,$$

where $p_i = (x_i, y_i, z_i)$ is the coordinate of the $i$-th point and $\bar{p} = (\bar{x}, \bar{y}, \bar{z})$ is the centre-of-mass coordinate.
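The voxel-grid step described by the centroid formula can be sketched as follows. The RS-LiDAR-M1’s built-in implementation is not described in detail, so this only illustrates the technique; `voxel_size` is a hypothetical parameter whose value would be tuned to the point density.

```python
from collections import defaultdict
from math import floor
from typing import Dict, Iterable, List, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: Iterable[Point], voxel_size: float) -> List[Point]:
    """Replace the k points that fall in each cubic voxel by their centroid."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    # Per-voxel accumulator: [sum_x, sum_y, sum_z, k]
    sums: Dict[Tuple[int, int, int], list] = defaultdict(lambda: [0.0, 0.0, 0.0, 0])
    for x, y, z in points:
        key = (floor(x / voxel_size), floor(y / voxel_size), floor(z / voxel_size))
        acc = sums[key]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1
    # Centroid p_bar = (1/k) * sum(p_i) for every occupied voxel
    return [(sx / k, sy / k, sz / k) for sx, sy, sz, k in sums.values()]
```

Larger voxels discard more points and speed up later segmentation, at the cost of blurring thin structures such as lane markings.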

Road lines, boundaries and other structures are then extracted using geometric methods and fused with the output of neural network algorithms that classify other objects, and road object sensing information is output in seven categories: cylinders, pedestrians, cyclists, small vehicles, large vehicles, trailers and unknown.

3.3.2. Environment Awareness

While the wheelchair is moving, a DHT11 temperature and humidity sensor made by Asair, Guangzhou, China and a BH1750 light sensor collect the temperature, humidity and light intensity of the wheelchair’s environment [28,29]. This information is displayed in the app so that the real-time environmental status of the wheelchair can be viewed remotely.

The sensors are shown in Figure 8. The DHT11 sensor uses a single-bus data format in which each packet contains 8-bit humidity integer data, 8-bit humidity fractional data, 8-bit temperature integer data and 8-bit temperature fractional data, followed by an 8-bit checksum. The BH1750 sensor uses an IIC (Inter-Integrated Circuit) interface and has a built-in 16-bit AD converter, measuring brightness with a resolution of 1 lx.
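Decoding the raw outputs of both sensors is straightforward. This sketch assumes the 5-byte DHT11 packet has already been read from the single bus, and that the BH1750 was read in continuous high-resolution mode with the default measurement-time register, for which the datasheet gives lux = count / 1.2. Treating the DHT11 fractional bytes as tenths is an assumption; on many DHT11 units they read as zero.

```python
from typing import Tuple


def decode_dht11(frame: bytes) -> Tuple[float, float]:
    """Decode a 5-byte DHT11 packet into (relative humidity in %, temperature in degC)."""
    if len(frame) != 5:
        raise ValueError("DHT11 packet must be 5 bytes")
    rh_int, rh_dec, t_int, t_dec, checksum = frame
    # The fifth byte is the low 8 bits of the sum of the first four bytes.
    if (rh_int + rh_dec + t_int + t_dec) & 0xFF != checksum:
        raise ValueError("DHT11 checksum mismatch")
    # Assumption: fractional bytes are interpreted as tenths.
    return rh_int + rh_dec / 10.0, t_int + t_dec / 10.0


def bh1750_lux(msb: int, lsb: int) -> float:
    """Convert a 16-bit BH1750 reading (high-resolution mode, default MTreg) to lux."""
    return ((msb << 8) | lsb) / 1.2
```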

3.4. Remote Viewing of Sensory Data

After the embedded processor collects the multi-mode sensing data, a remote viewing solution based on the Tencent IoT Explorer allows the data to be viewed on the mobile phone app. Tencent IoT Explorer is an IoT PaaS platform launched by Tencent Cloud for smart-living and industrial applications. It supports connecting devices that use WiFi, cellular, LoRa, Bluetooth and other communication standards to the cloud, lets developers bring their devices online quickly, and provides efficient information processing and convenient data services. Tencent IoT Explorer also offers Tencent Connect, which allows developers to build the application for their devices through a development-free panel.

The remote viewing solution comprises (1) a communication protocol, which uses the MQTT server/client model to support two-way data exchange between the app, the embedded processor and the cloud platform, and (2) data updating and viewing. The embedded processor on the wheelchair encapsulates the collected data and uses a WiFi module to connect to Tencent IoT Explorer and publish sensing data packets. Meanwhile, the app subscribes to the data and obtains the real-time data uploaded by the wheelchair from the cloud platform, enabling the data to be viewed.

3.4.1. MQTT-Based Protocol for Wheelchair Data Communication

This article uses the MQTT server/client model, with the Tencent IoT Explorer as the MQTT server and the mobile app and wheelchair as MQTT clients. The server manages the messages published by client nodes, and client nodes can subscribe to topics held by the server to receive the corresponding data.

Table 2 shows the designed communication topics: a data topic, whose content is sensed data; an event topic, whose content is events reported by the wheelchair; and a control topic, whose content is control commands. When a client publishes to the data topic 'ID/${deviceName}/data' on the server, all clients subscribed to this topic receive the data, enabling dynamic data updates.
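The fan-out behaviour described above can be mimicked with a toy in-memory broker. This is not the Tencent IoT Explorer or any MQTT client library: `InMemoryBroker` and `topic_matches` are hypothetical helpers that reproduce only MQTT’s topic-filter rules (`+` matches one level, `#` matches all remaining levels) and omit QoS, retained messages and sessions. The device name `wheelchair01` used when exercising it is likewise made up.

```python
from typing import Callable, List, Tuple

Callback = Callable[[str, bytes], None]


def topic_matches(topic_filter: str, topic: str) -> bool:
    """MQTT-style matching: '+' matches one level, '#' matches all remaining levels."""
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    for i, level in enumerate(f_levels):
        if level == "#":
            return True
        if i >= len(t_levels):
            return False
        if level != "+" and level != t_levels[i]:
            return False
    return len(f_levels) == len(t_levels)


class InMemoryBroker:
    """Toy stand-in for the cloud MQTT server: forwards each message to matching subscribers."""

    def __init__(self) -> None:
        self._subscriptions: List[Tuple[str, Callback]] = []

    def subscribe(self, topic_filter: str, callback: Callback) -> None:
        self._subscriptions.append((topic_filter, callback))

    def publish(self, topic: str, payload: bytes) -> int:
        """Deliver payload to every matching subscriber; return the delivery count."""
        delivered = 0
        for topic_filter, callback in self._subscriptions:
            if topic_matches(topic_filter, topic):
                callback(topic, payload)
                delivered += 1
        return delivered
```

For example, an app subscribed to '788H526A3U/+/data' would receive data from every wheelchair under that ID, while each wheelchair would subscribe only to its own control topic.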

3.4.2. Remote Data Transmission and Encapsulation

The embedded processor must communicate with the MQTT server of the cloud platform to report the sensing data, so an ESP8266 WiFi communication module made by Espressif, Shanghai, China was installed at the wheelchair end; its physical appearance is shown in Figure 9a.

The embedded processor drives the WiFi module to join the network and connect to the server address of the Tencent IoT Explorer, so that the wheelchair can report data to the IoT Explorer as an MQTT client. When reporting, the embedded processor encapsulates the collected sensing data into one frame, as shown in Figure 9b. The frame is 9 bytes long: 3 bytes of attitude data, 3 bytes of positioning and velocity data, and 3 bytes of temperature, humidity and light intensity data. The sensing packet is then published on the topic '788H526A3U/${deviceName}/data', where 788H526A3U is the device ID number.
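The 9-byte frame and its topic can be assembled as below. Only the byte count of each group and the '<ID>/<deviceName>/data' topic pattern come from the text; how each byte encodes an angle, coordinate or reading (scaling, signedness) is not specified, so this sketch simply packs nine pre-scaled unsigned values, and the device name is hypothetical.

```python
import struct
from typing import Sequence, Tuple

# Nine unsigned bytes: 3 attitude, 3 position/velocity, 3 environment (assumed encoding)
FRAME_FORMAT = "9B"


def build_topic(device_id: str, device_name: str) -> str:
    """Custom data topic of the form '<device ID>/<device name>/data'."""
    return f"{device_id}/{device_name}/data"


def pack_frame(attitude: Sequence[int], position_speed: Sequence[int],
               environment: Sequence[int]) -> bytes:
    """Concatenate three 3-byte groups into one 9-byte frame."""
    groups = (attitude, position_speed, environment)
    if any(len(g) != 3 for g in groups):
        raise ValueError("each group must contain exactly 3 values")
    values = [v for g in groups for v in g]
    # struct raises struct.error if any value lies outside 0..255
    return struct.pack(FRAME_FORMAT, *values)


def unpack_frame(frame: bytes) -> Tuple[Tuple[int, ...], Tuple[int, ...], Tuple[int, ...]]:
    """Split a received 9-byte frame back into its three groups."""
    values = struct.unpack(FRAME_FORMAT, frame)
    return values[0:3], values[3:6], values[6:9]
```

Keeping the frame at a fixed size lets the receiving side split it by offset without any delimiters, which suits the small payloads the ESP8266 sends.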

Once IoT Explorer has received the packet, it sends the contents of the packet to the mobile app subscribed to this topic so that the user can view the perception data of the wheelchair on the mobile phone.

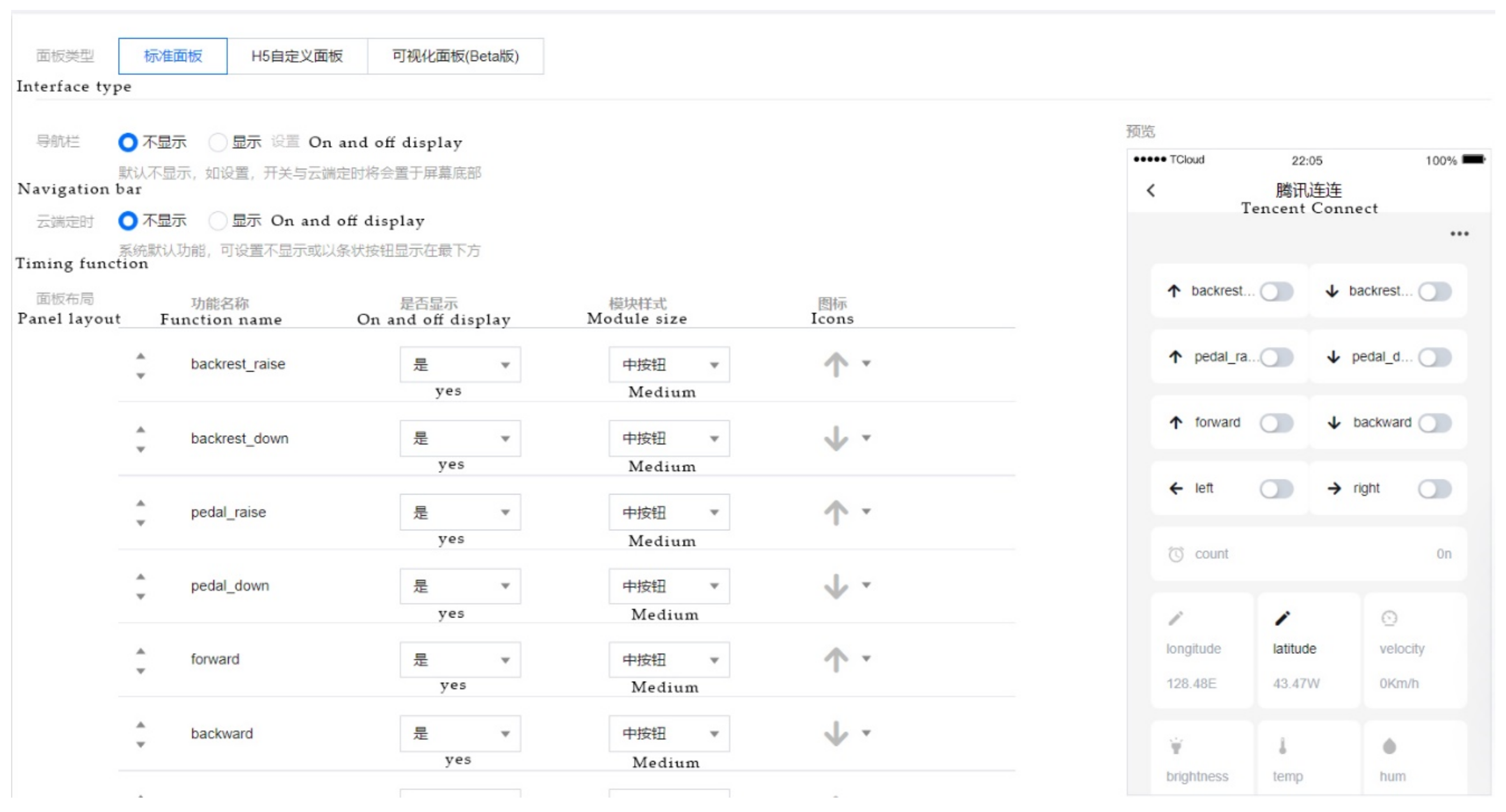

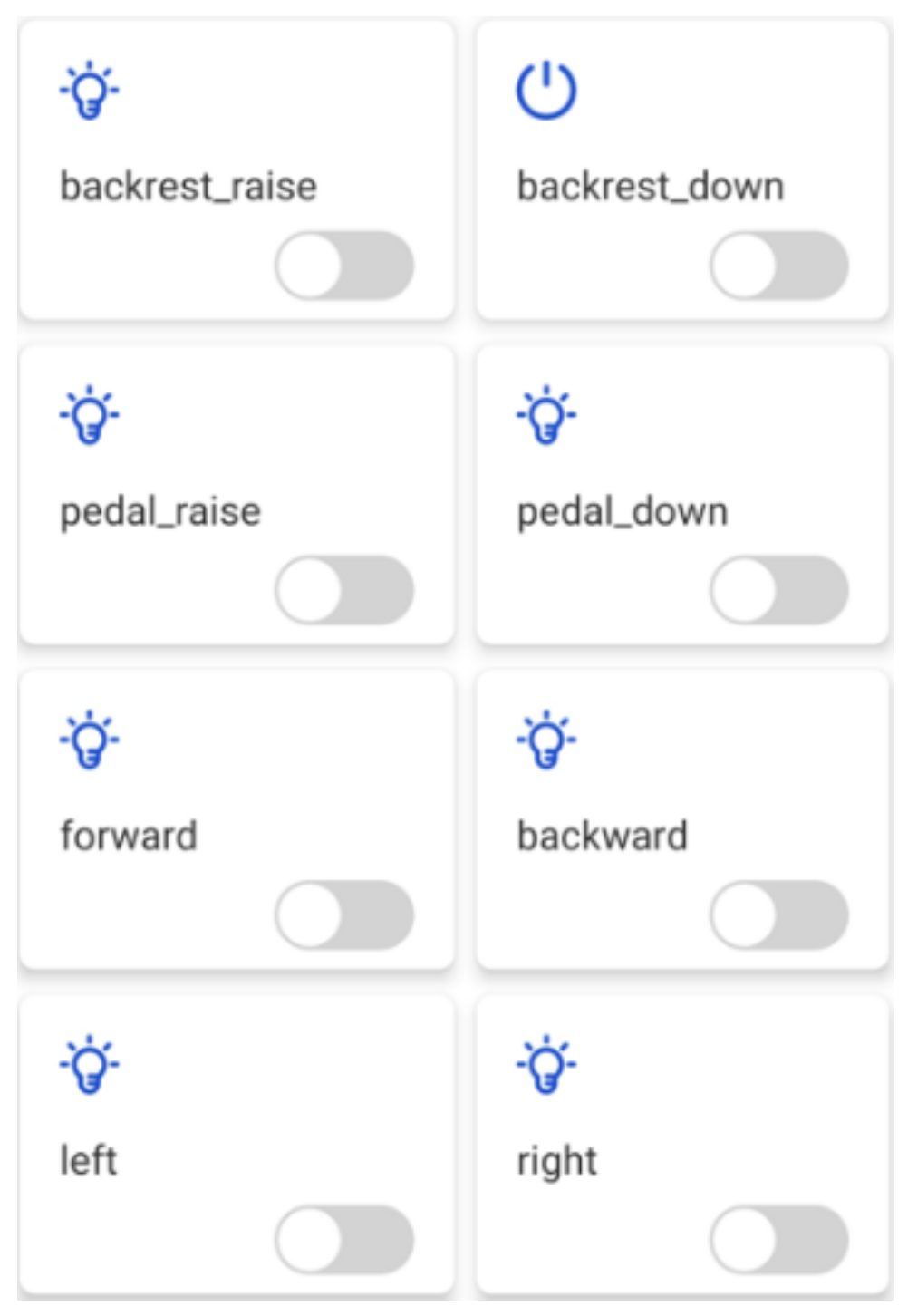

3.4.3. APP Interface

The Tencent IoT Explorer integrates the Tencent Connect APP design function, which allows developers to configure the functions of the APP using a graphical interface on top of the cloud platform, thus greatly reducing the development cycle. Once the APP is created, users can scan the APP QR code and start using it.

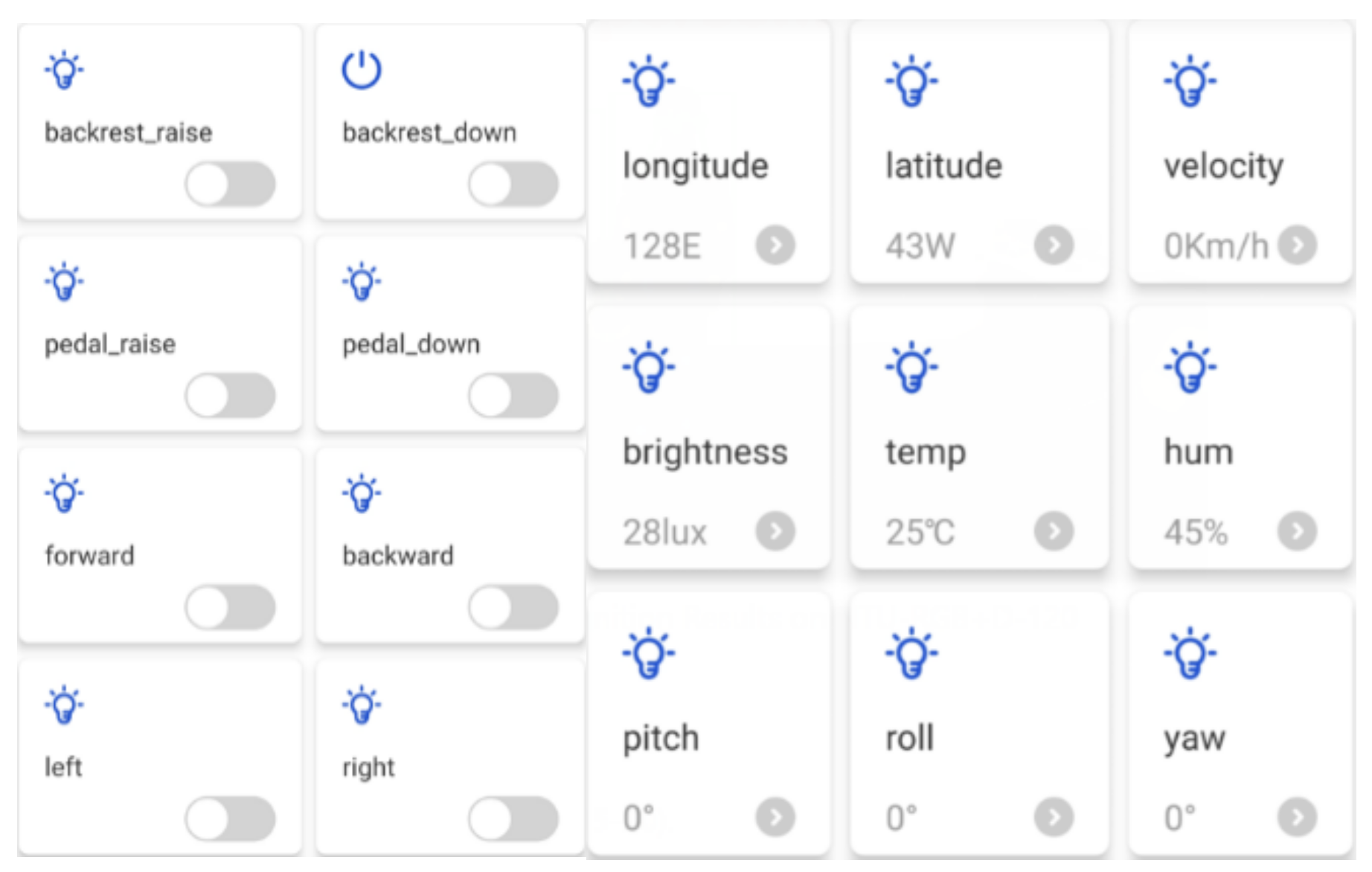

Figure 10 shows the app design interface.

Figure 11 shows the app interface designed in this paper for viewing perception information, including the wheelchair’s current position, speed, light intensity, temperature, humidity and three-axis attitude angles. The interface updates dynamically as new data arrive.

6. Conclusions

To improve the perception and control performance of traditional wheelchairs, so that they can be operated by different types of users while the users’ loved ones can view the wheelchair’s status remotely, we studied multi-mode perception and control technology for intelligent wheelchairs and explored IoT-based remote app control and data transmission. The main functions are as follows.

(1) Wheelchair perception: we designed and implemented occupant perception, state perception and environment perception for the wheelchair and tested the perception capability experimentally by comparing the results with reference data. Gesture perception runs at a recognition rate of 240 Hz and can quickly recognise 9 user gestures. State perception acquires position, velocity and attitude data; using BeiDou navigation, the positioning accuracy is around 1 m, and for attitude acquisition the accelerometer and gyroscope resolutions are 16,384 LSB/g (max) and 131 LSB/(°/s) (max), respectively. Environment perception comprises road surface sensing and meteorological sensing: within 50 m, the target classification and target recognition accuracies exceed 85% and 95%, respectively, and the humidity and temperature sensing accuracies are ±5% RH and ±2 °C, respectively.

(2) Wheelchair control: we implemented joystick control, gesture control and app remote control of the wheelchair. The three control methods suit different users and provide forward, backward, left-turn and right-turn functions. In the app, the user can also control the wheelchair’s footrest and backrest and view its real-time sensing data. The remote control delay is around 100 ms, and the accuracy of gesture and app control is about 90%.

The intelligent wheelchair developed in this paper has improved sensing capability, and its sensing data also enable safety monitoring of the wheelchair. In addition, the remote control app allows the user’s loved ones to check the status of the wheelchair and provide assistance in case of danger.

The intelligent wheelchair described in this paper offers a higher level of intelligence, multiple operation methods and better safety. Our experiments also revealed the following shortcomings: (1) the large number of sensors on the wheelchair makes the wiring cluttered, and it still needs to be integrated; (2) most of the electrical components are not waterproof, so a waterproofing solution needs to be designed. We aim to solve these two problems in future work. In addition, when the wheelchair travels up or down a slope, it may tip over because of factors such as speed; the attitude information obtained in this paper could be used to keep the seat level, ensuring safe operation and a safe user experience.