Dual-Arm Visuo-Haptic Optical Tweezers for Bimanual Cooperative Micromanipulation of Nonspherical Objects

Abstract

1. Introduction

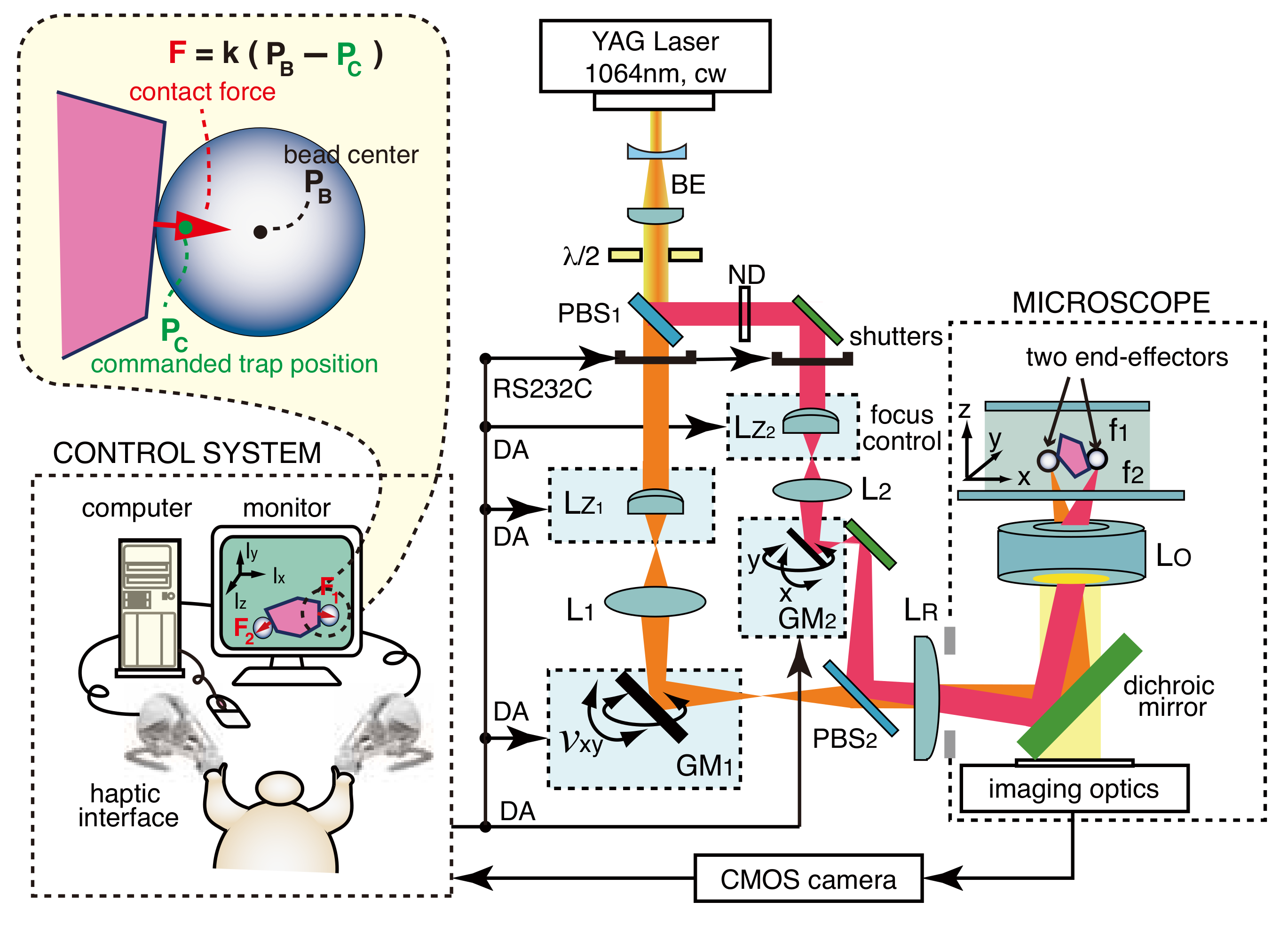

2. Dual-Arm Visuo-Haptic Optical Tweezers

2.1. System Design and Experimental Setup

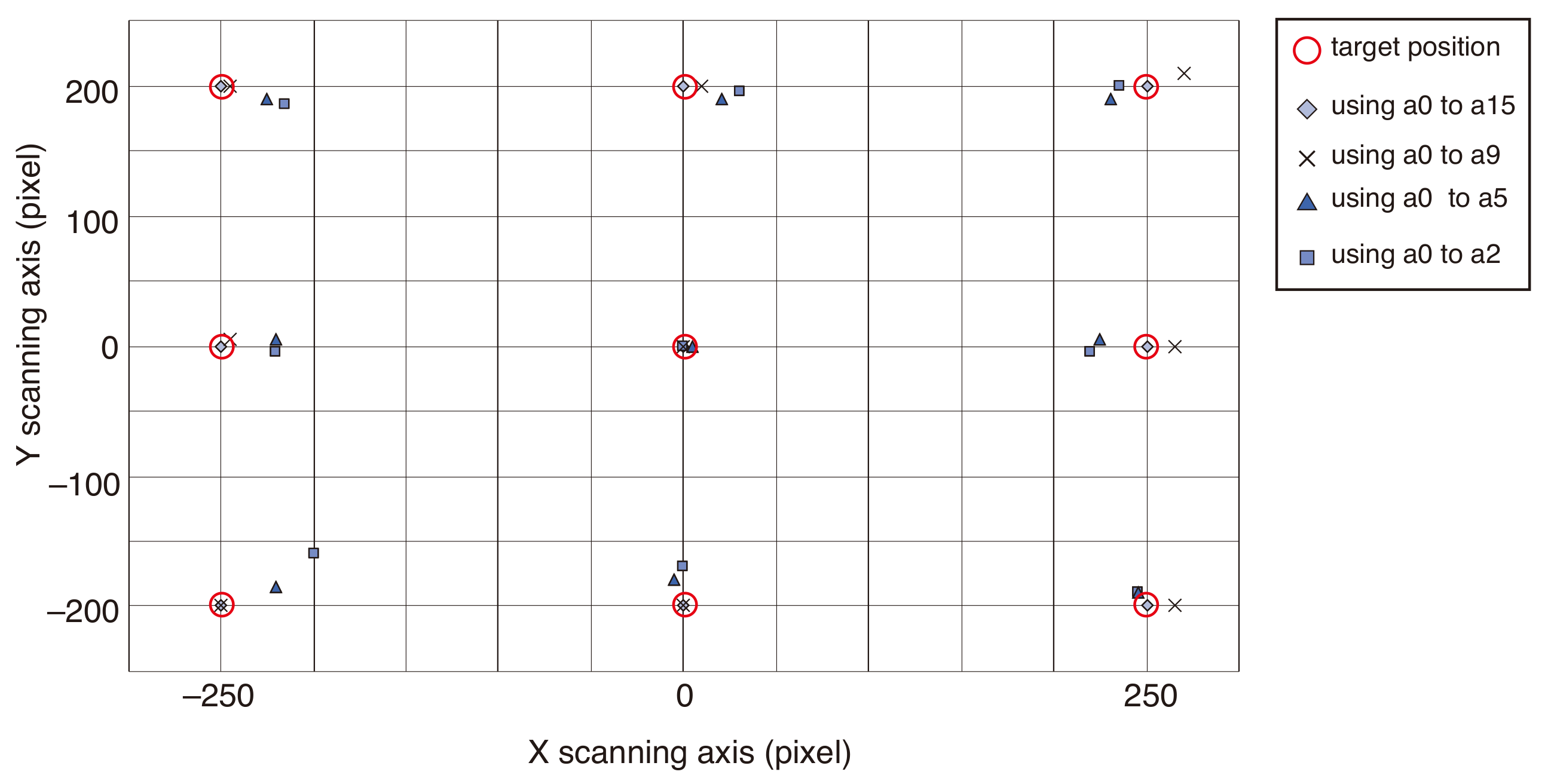

2.2. Correction Method for Field Distortion in a Dual-Arm System

3. Demonstrations and Discussion

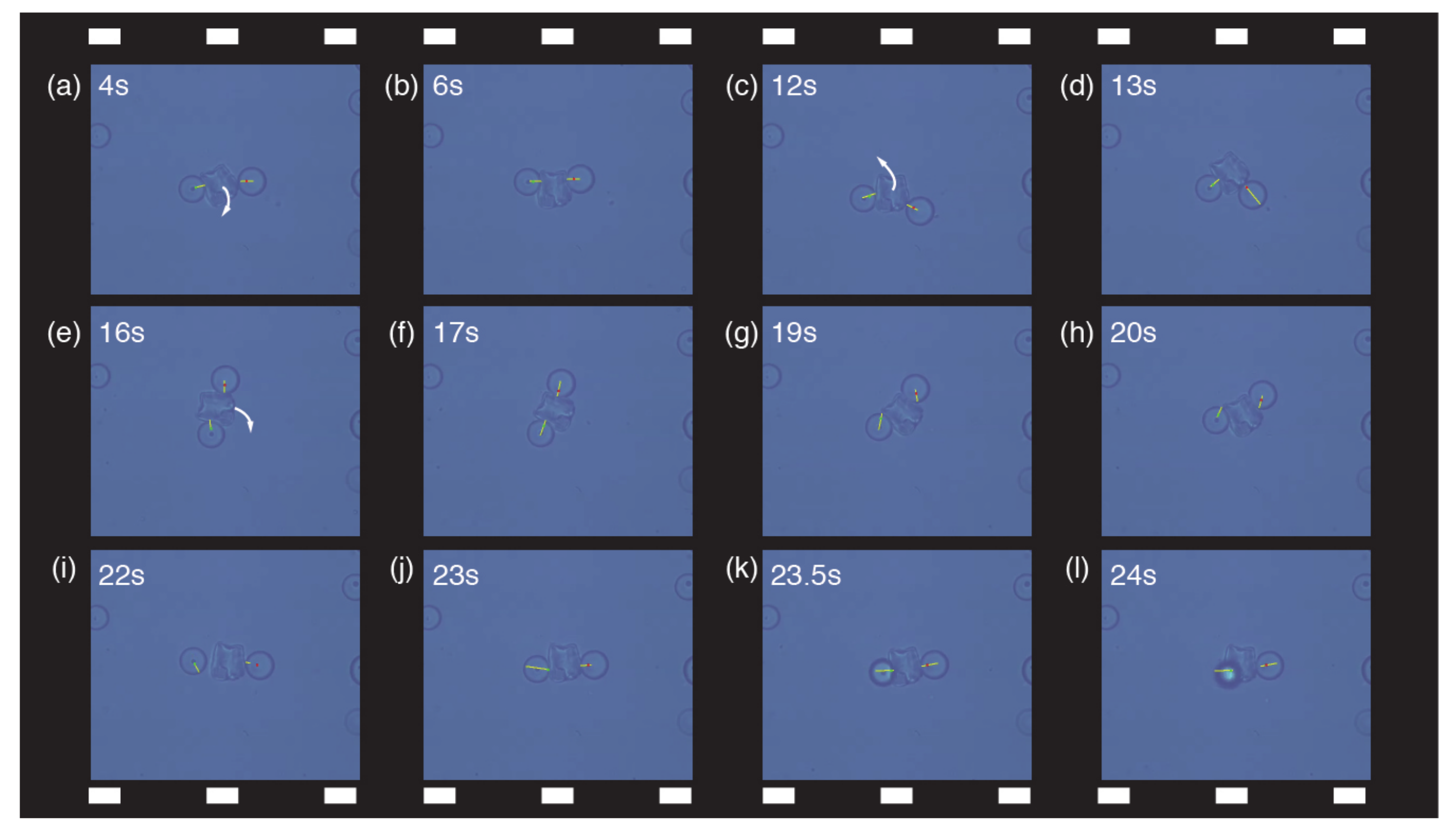

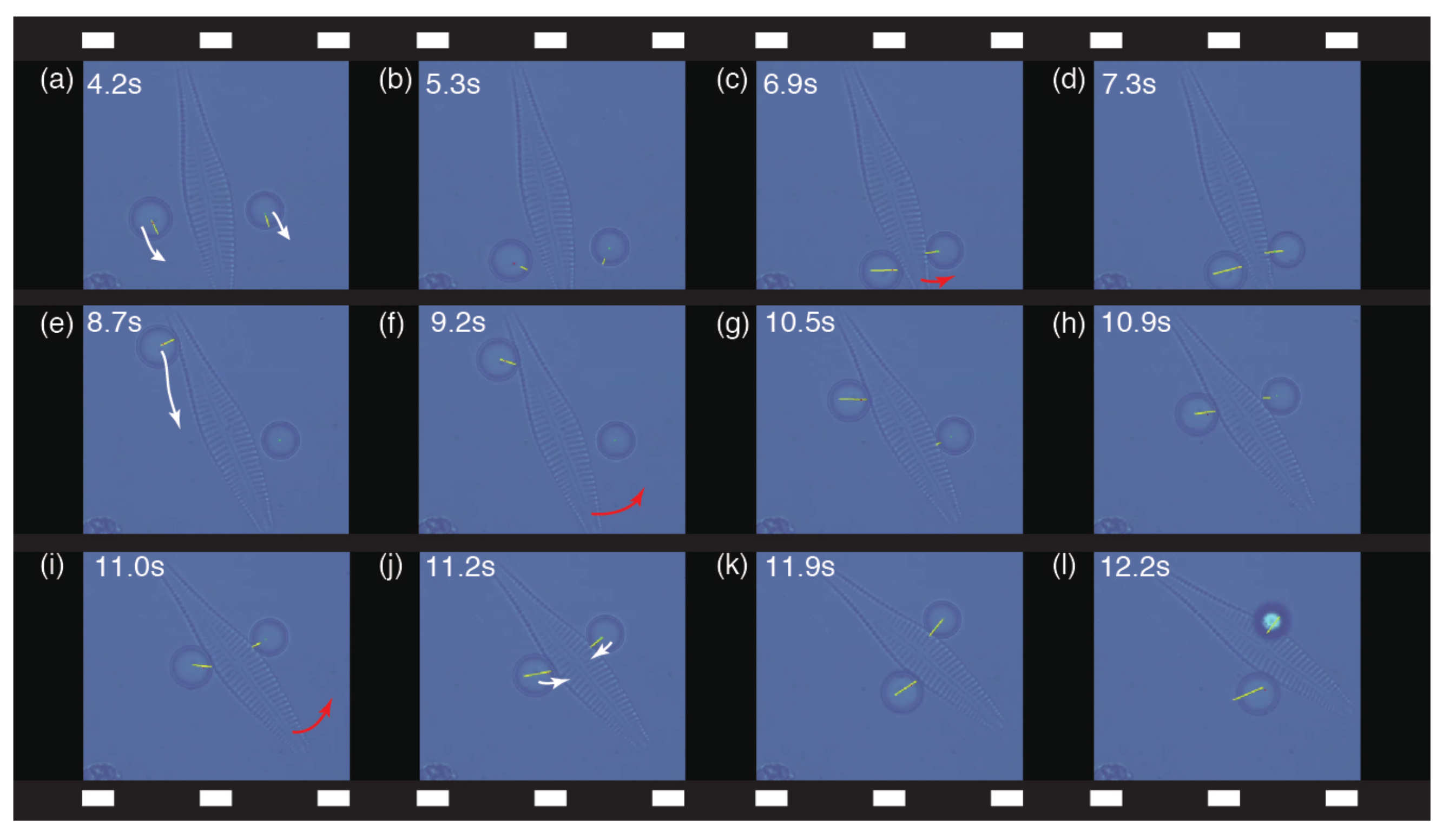

3.1. Bimanual Control of End-Effectors

3.2. Cooperative Micromanipulation of Nonspherical Objects

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tanaka, Y.; Fujimoto, K. Dual-Arm Visuo-Haptic Optical Tweezers for Bimanual Cooperative Micromanipulation of Nonspherical Objects. Micromachines 2022, 13, 1830. https://doi.org/10.3390/mi13111830
