Abstract

Cellular Nonlinear Networks (CNN) are a concept introduced in 1988 by Leon Chua and Lin Yang as a bio-inspired architecture capable of massively parallel computation. Since then, CNN have been enhanced by incorporating designs that incorporate memristors to profit from their processing and memory capabilities. In addition, Stochastic Computing (SC) can be used to optimize the quantity of required processing elements; thus it provides a lightweight approximate computing framework, quite accurate and effective, however. In this work, we propose utilization of SC in designing and implementing a memristor-based CNN. As a proof of the proposed concept, an example of application is presented. This application combines Matlab and a FPGA in order to create the CNN. The implemented CNN was then used to perform three different real-time applications on a 512 × 512 gray-scale and a 768 × 512 color image: storage of the image, edge detection, and image sharpening. It has to be pointed out that the same CNN was used for the three different tasks, with the sole change of some programmable parameters. Results show an excellent capability with significant accompanying advantages, such as the low number of needed elements further allowing for a low cost FPGA-based system implementation, something confirming the system’s capacity for real time operation.

1. Introduction

Cellular Nonlinear Networks (CNN) were introduced by Chua and Yang [1] in 1988, and can be described as a mixture between Cellular Networks and Artificial Neural Networks that can implement a parallel processing universal computer machine. This bio-inspired architecture is able to process in parallel massive amounts of data, thus being specially suitable for image processing, with single ASIC CMOS prototypes already implemented being able to deal with rates up to frames per second [2].

Memristors have been proposed as a device that may help to implement this kind of circuit [3,4], but experimental implementations are still lacking. These devices, memristors, are passive, two-pole elements also introduced by Chua in 1971 [5], as a theoretically possible basic circuit element. In 2008, Strukov et al. [6] realized their ReRAM devices were, actually, a kind of memristor. There have been many groups dedicated to creating either devices or emulators, ever since. One of the more classical mathematical memristor descriptions including memconductance G can be written as:

where Q (also known as ) is the integral over time of the current i:

Notice that the requirement for the device to be a memristor is mapped to the requirement for the characteristics of the device to be dependent on some internal variables, as will be further discussed below, in addition to some fingerprints [7,8].

One of the main problems for using memristors into circuits is that they are not yet readily available for implementation in usual technologies. In this paper we use Stochastic Computing to implement a fully digital realization of a CNN using memristors. To do so, we have used a memristor emulator presented in [9], as well as a Stochastic Computing implementation of a CNN using it, as in our previous work [10], where a simpler implementation was presented operating exclusively on gray images. The notion of Stochastic Computing (SC) was introduced by Von Neumann in the 1950s [11] as a theoretical framework to explain how (relatively) accurate results could be obtained using imprecise systems. In this framework, time and accuracy are balanced in a trade-off. It was later popularized by Gaines [12], and it has found a niche in approximate computing. There are many examples in the literature for diverse applications, such as data compression [13], chaotic equation calculation [14], data mining [15], FFT computation [16], control [17], image processing algorithms [18,19], or A/D conversion [20]. As of today, it seems probable that it may conquer an important share in edge computing applications, since it can decrease energy consumption and circuit complexity and area overhead for low numbers of bits. However, this comes at a price, since the time required to perform the operation also increases exponentially with the number of bits. This, in turn, may increase the total energy consumption when the number of bits exceeds 16–17 [21,22]. There are, however, techniques that allow this problem to be alleviated, and make this competitive even for higher bit-numbers [22].

Within this paradigm, strings of binary digits are used to represent real numbers p. These strings of digits are called stochastic computing numbers (SCN) and their mean value corresponds to the number p represented [23]. Thus, the absolute value ranges for any implementation of SCN must fall within . There are two distinct possibilities to map a real number to SCN: the first one is from the domain , and the second one corresponds to [].

Once the selected mapping has been chosen, the different SCN operations can be easily implemented, requiring only the use of fundamental logic gates or very simple digital circuits. As an example, in the case of the [] domain, multiplication of SCNs are implemented using an AND gate; in the second case, the [] domain, this same operation demands using a XNOR gate.

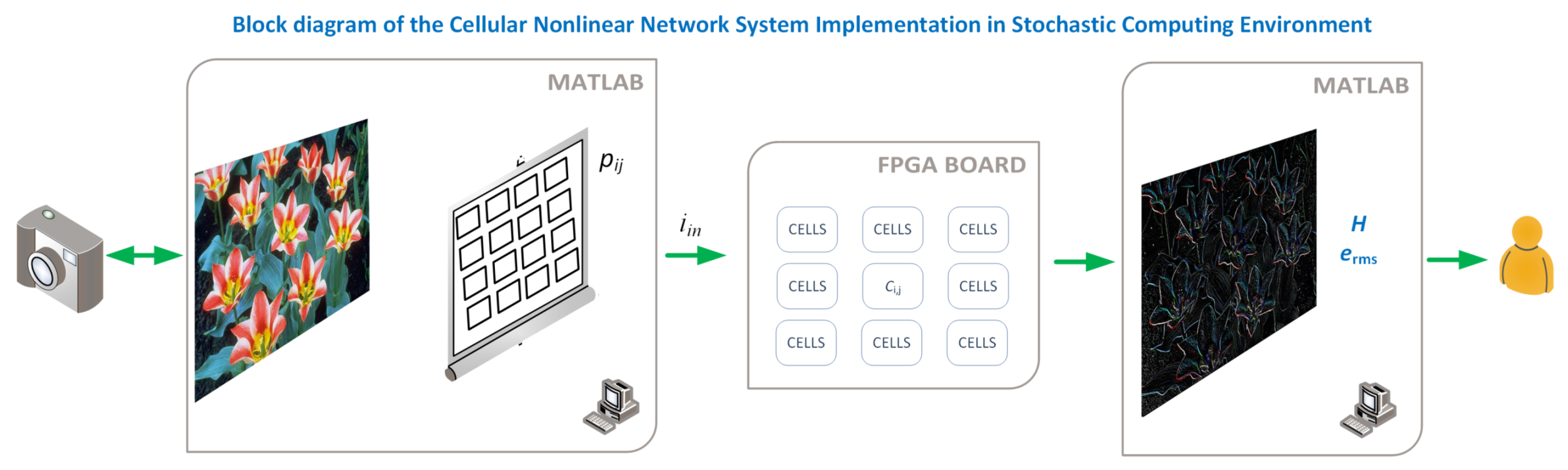

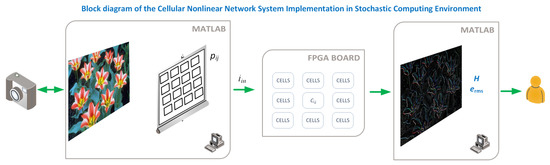

The full system we have implemented is depicted in Figure 1. The first part is processed in the computer, where the images are read using a Matlab script and converted to gray scale if needed. The resulting image is then sent to the FPGA board, which is used as an accelerator and connected using the FPGA-in-the-loop methodology that allows to integrate it in a seamless way. The FPGA performs the processing using a massively parallel implementation of the proposed Stochastic Memristive Cellular Nonlinear Network, and the result is then read back into the computer and represented to the user, along with the entropy of the image and the rms error if needed.

Figure 1.

Conceptual depiction of the system, showing the tasks assigned to Matlab and those performed by the FPGA. The FPGA and Matlab are used jointly by using the FPGA-in-the-loop tool from Matlab, where the VHDL code is automatically generated, uploaded, and integrated with the main script at the computer.

The paper is structured as follows: after this introduction, the basics of memristors and Cellular Nonlinear Networks are presented in Section 2, which is being used in Section 3 to implement the basic CNN cell in Stochastic Computing. Section 4 presents the results obtained using three different pictures (two gray, one color) with three different sets of parameters. These three sets of parameters allow the CNN to perform three different operations on the images: storing, edge detection, and image improvement. Finally, Section 5 concludes the paper.

2. Memristive Cellular Nonlinear Networks

2.1. Memristors and Memristive Systems Modeling

Among the possible theoretical descriptions of memristive systems, Corinto et al. present in [24] a very complete framework to study memristors and, in general, systems that may present memristive behavior. They propose using both the classical description using voltage and current, but they also discuss the flux–charge (–q) approach.

The memristive systems can be classified according to how far they are from ideality. Following the taxonomy proposed in [7], there are three distinct possibilities: the ideal, the generic, and the extended memristor. This extended categorization was a theory requirement, needed to cover the description of pinched, hysteretic behaviors found in numerous new various elements.

The most general class of memristors are the extended memristors. The memristors belonging to this class are described by extra internal state variables (in addition either to current and voltage, or to and q). As an example, Equations (3) to (5) implement the case of flux-controlled memristors:

This way, an extended memristor has a memristance M represented by the nonlinear memconductance G (or, more accurately, its inverse) in Equation (3), where is the flux, and v is the voltage between the terminals of the memristive device. The extra variables are grouped into the vector , and they may comprise different physical magnitudes depending on the specific memristive system; as examples, we can mention the radius of a conducting filament, the internal temperature of the system, as well as other non-electrical variables that may be used to describe the state of the memristor. These state variables present a dynamic behavior described by and Equation (4). As a side comment, it is worth noticing that all the devices described as being memristors are indeed extended memristors.

When no parasitic effects are present, those extended memristors are better described as generic memristors (or, simply, memristors), since function depends only on the state variables and . Ideal memristors, finally, are those corresponding to the original definition [5], and can be considered in this framework as generic memristors with no state variable dependence other than charge or flux.

As an example, let us consider the simplest memristor model that can be devised, similar to those discussed in [24] or, for real devices, in [25,26,27,28]. In this case, the model of an ideal memristor where the memresistance or the memconductance depends only on the charge or the flux can be written as:

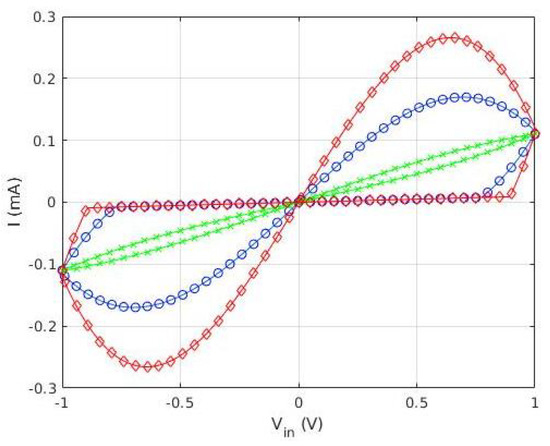

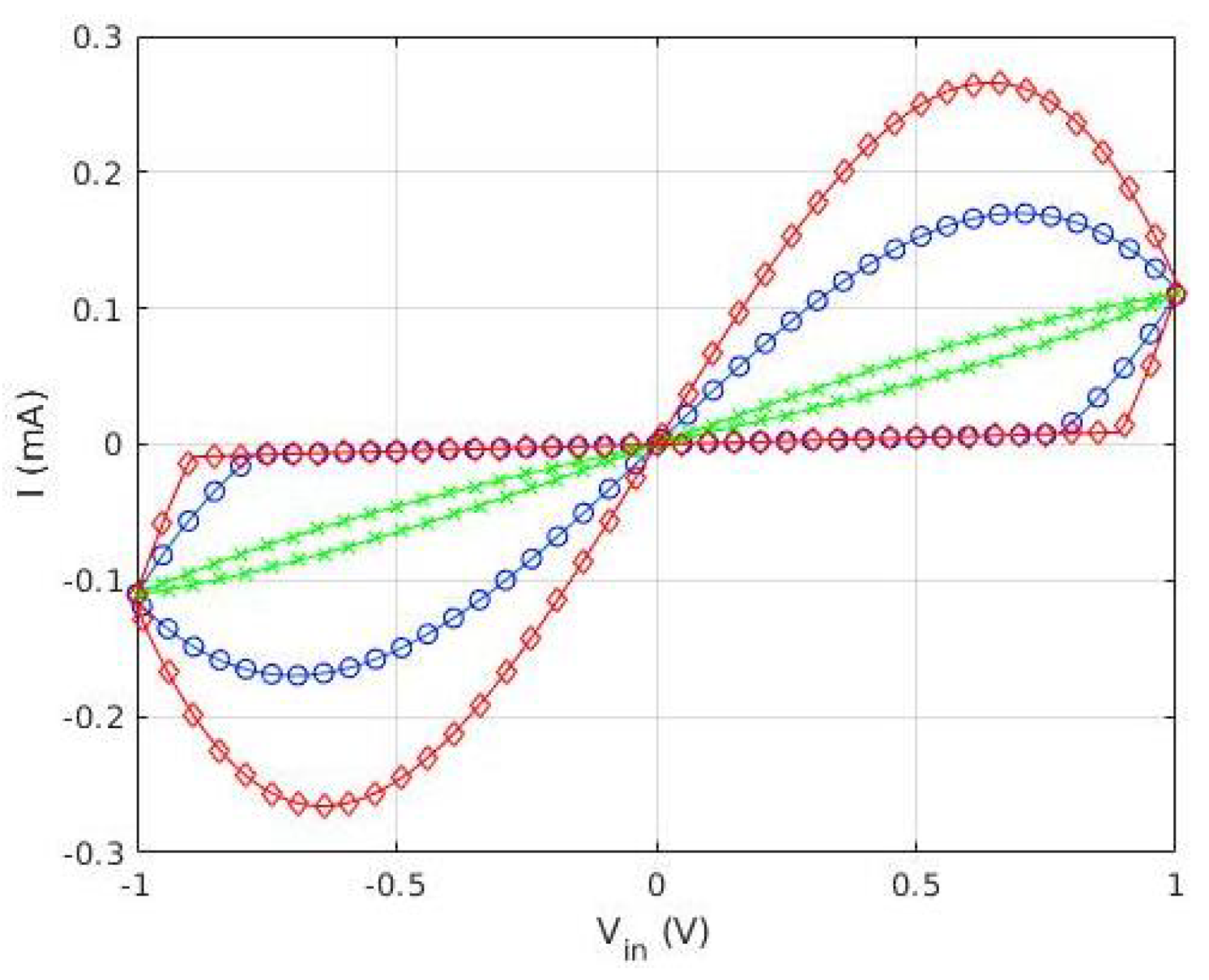

where is the unperturbed conductance, and includes the importance of the memristive effect. Notice that for , the behavior tends to be similar to that of an ideal resistor. The behavior of the device is represented in Figure 2, for , , and three different frequencies. Notice how the device reproduces the two fingerprints of a memristor [7,8]: it presents a pinched loop whose area tends to zero at high frequency (green line, ‘x’ symbol).

2.2. Cellular Nonlinear Networks

The system discussed in this paper, Cellular Nonlinear Network (CNNs) [1], is not to be confounded with Convolutional Neural Networks (also CNN), even if they share the same acronym. The CNNs discussed here represent a powerful massively parallel, multivariate signal processing paradigm. In their most basic description, they are made of independent processing units, called cells, where each cell has an input, an output which is fed back as another input, and also feels the effect of the inputs and outputs of its nearest neighbors. These effects are then processed internally into a state variable, and the output is linearly dependent on the result of this processing, with a positive and negative saturation.

As an example of hardware implementation of a CNN, we find [2], where each processing element typically accommodates additional data storage units, which allow the CNNs to store the programming parameters at the cell level. As a result, these Universal Machines (UMs) can be considered as one of the earliest examples of a non-von Neumann computer. Unfortunately, these memory blocks need a large integrated circuit (IC) area to be implemented, which increases significantly the size of each cell. As a consequence, the spatial resolution is quite poor compared with simple image sensors, which is a common problem that CNN-UMs and, as an inherited problem, arrays comprising sensor-processor cells based upon them, suffer from.

Mathematically, the behavior of the cell can be described by a differential equation as:

where is the state variable, and are the inputs and outputs, respectively, and and are the feedback and feed-forward coefficients. The stability of the system can be controlled by setting the value to an appropriate value [4], and is equivalent to a constant bias in the electrical equivalent. Function is a nonlinear function, saturating to a minimum and a maximum values ( and ). In this sense, it is similar to the output function of a neuron. However, the most usual shape for it is a piecewise linear function, defined as:

Notice that, even if the sum is made over the whole set of integers, we usually restrict ourselves to just the nearest neighbors. This Equation (10) can be implemented with a single element thanks to the unique nonlinear behavior of memristors. Thanks to this capability to process or store data within a common physical nanoscale medium, their use in future CNN cell designs may allow to remove the burden of extra memory blocks within each processing element, allowing the development of co-located sensor-processor arrays with enormous high-resolution levels, specially suited for the Internet of Things (IoT) industry.

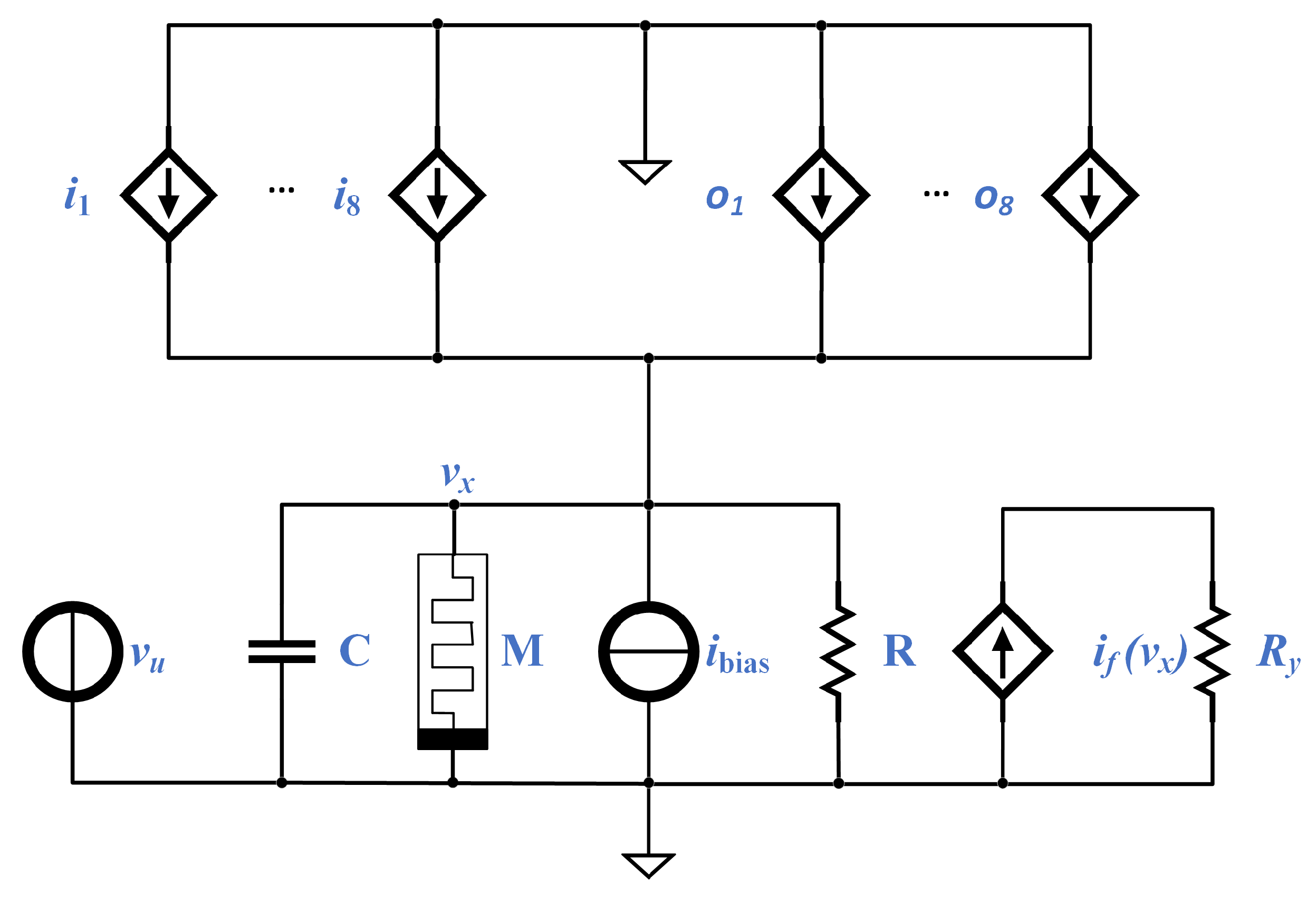

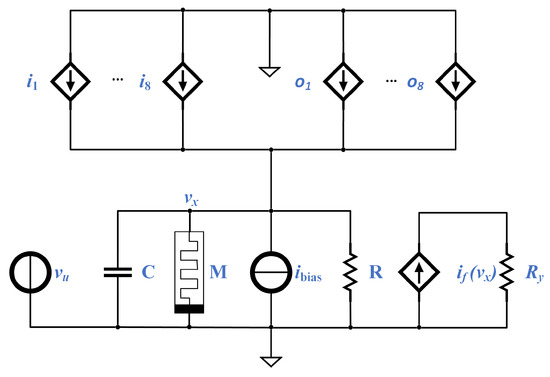

Referring to the determination of the coefficients, some methods have been published [29,30] for the traditional CNN, and, more recently, using the so-called Dynamic Route Map (DRM) [3,4,31,32], to the first-order approximate model of each cell of a Memristor CNN (M-CNN). Such an approximation is depicted as a circuit in Figure 3, and the evolution of the state variable () is described by an equation equivalent to Equation (9):

where i ∈ 1,..., M, j∈ 1,..., N, is the unit step function, stands for the voltage across the capacitor , whereas , and denote, respectively, the state and voltage of memristor , whose current is described via the generalized Ohm’s law from Equation (3): , with the memductance given by . The non-linear function proposed in [32] to characterize the memristive CNNs are:

with , featuring units , and denoting the minimum voltage needed by the memristor for switching. In addition, there are two different window functions, to ensure that the memristor stays in between the two possible states . These two window functions can be written in a compact way [32] as:

where when “+” (the upper boundary), and in the opposite case; p can be any integer (). In addition, the dynamical evolution of the voltage of each cell in system presented in Figure 3 is:

Figure 3.

Schematics and Stochastic implementation of a CNN cell, the processing element in cell ({1, ⋯, M}, {1, ⋯, N} ). The other elements (resistor, memristor, and capacitor) present the same values from cell to cell, i.e., , , and . Adapted from [3].

Notice the equivalence of Equation (14) with Equation (9): the last line of both equations is equivalent, while the first line of Equation (9) corresponds to the first two lines of Equation (14). Thus, we can conclude that the circuit in Figure 3 accurately represents a possible implementation of a CNN cell, and it is the circuit we will implement in the next section as a SC module.

3. M-CNN Stochastic Computing

3.1. Stochastic Computing Basics

The SC approach adopts real number representation by strings of N random binary numbers . The probability of “1” bits to appear within the bit-string is proportional to the number to be operated [23]:

These strings are called Stochastic Computing Numbers (SCN) or Stochastic Encoded Numbers (SEN). In this paper, we will opt for the second, using also the term Binary Encoded Numbers (BEN) for those encoded as classical binary numbers. Notice that the number of bits that can be encoded in a chain of length N is , since the relevant information is just the number of “1”s. There are two main ways to generate a map between a SCN and real numbers: first, we can map the desired range of real numbers to the real domain ; second, we can map them to the interval . Depending on which mapping is to be implemented, many different mathematical operations can then be performed using simple logic gates or simple sequential circuits.

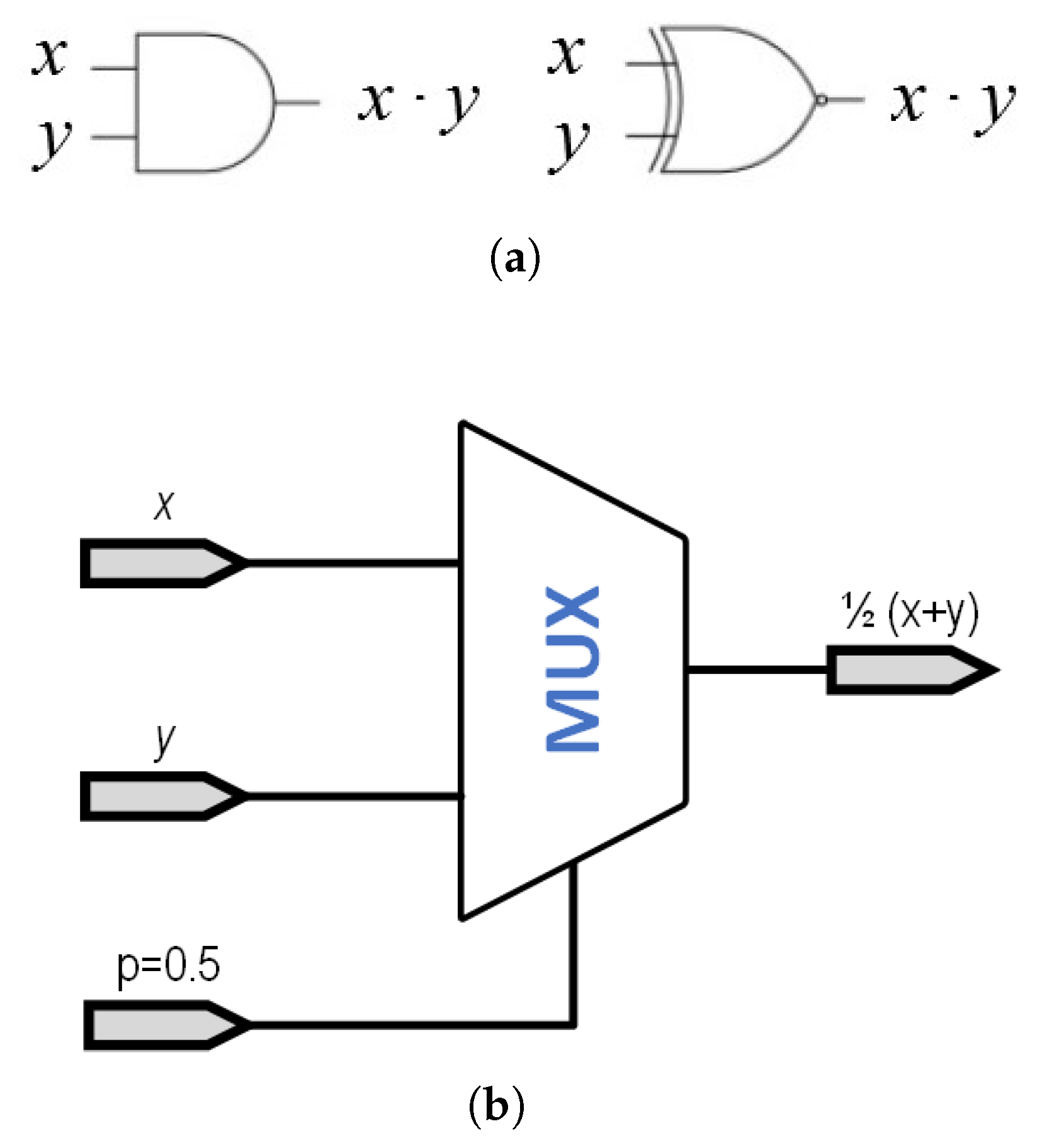

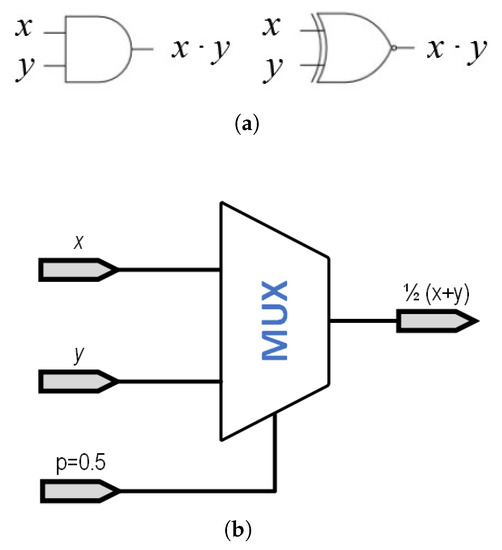

As an example, multiplication of SCN is performed using a simple AND gate when using the domain. Alternatively, considering the domain, the same multiplicative operation requires the use of an XNOR gate, as shown in Figure 4a.

Figure 4.

Basic implementation of basic operation in SC. (a) Basic implementation scheme of a SC multiplier in the range (AND gate, left) and in the range (XNOR gate, right). (b) Basic implementation scheme of a SC adder using a multiplexer.

Since we cannot represent any SC number as a probability higher than one, the case of addition becomes slightly more complex, since . Thus, the operation that should be implemented is , which would always return a maximum value of 1. This operation is usually implemented using a multiplexer, as shown in Figure 4b, where the p(0.5) indicates a signal with a probability of 50% of being ‘1’ or ‘0’. This necessary input signal is generated using one of the bits generated in the RNG, so no additional circuitry is needed. It is worth pointing out that this gate is the same in both the and the domains. Other more complex operations (division [17], square roots [17], reversible gates [33], etc., ⋯) are also discussed in the literature, although not presented in this paper.

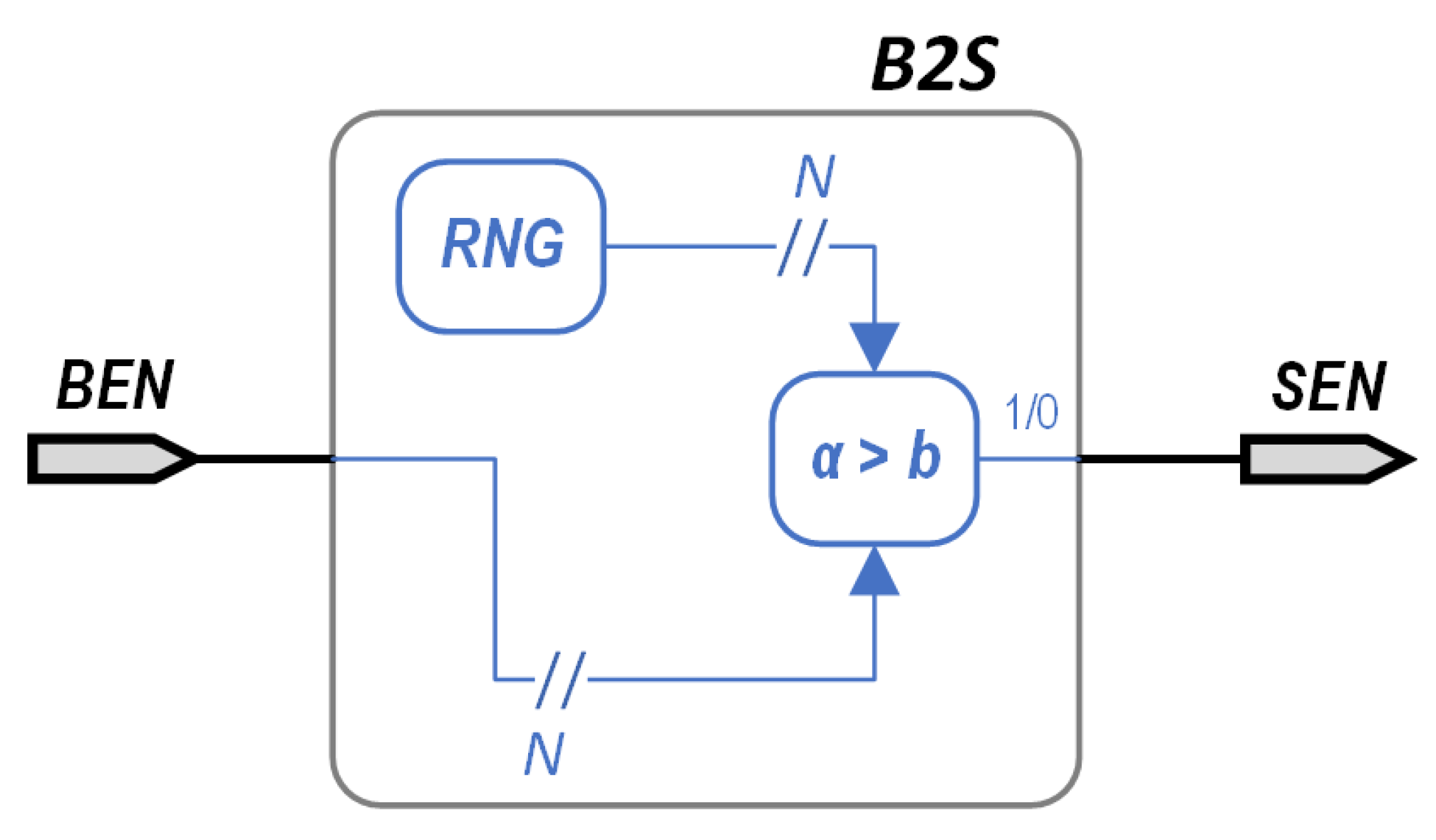

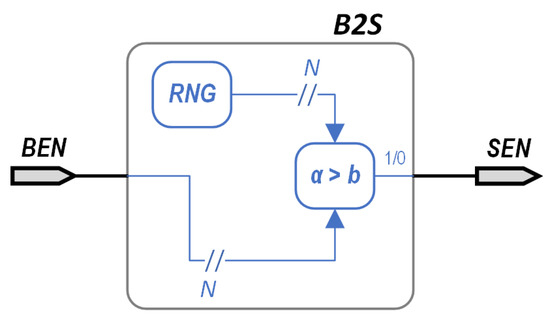

Another important point is the conversion from BEN to SEN. This is usually achieved by using a scheme similar to that in Figure 5, where an N-bit random number is generated by utilizing a random number generator (RNG) and compared to the value of the N-bit BEN. If the output of the RNG is below the BEN, the converter’s output would be bit “1”, and bit “0” otherwise. In the opposite operation, converting SEN back to its BEN representation, the number of 1’s included in the signal needs to be calculated; something that can be straightforwardly achieved by a simple counter.

Figure 5.

Basic implementation scheme of a Binary Encoded Number (BEN) to a Stochastic Encoded Number (SEN), using a Random Number Generator (RNG).

It is apparent that the error in the approximation of the SEN to its actual value is equivalent to the error provided by a random walk process of length n, and thus proportional to , as it has been discussed in the literature [34]. Therefore, using N bits, we may consider that all the noise caused by the process is included in the lowest bits. This way, the noise figure for a signal of power with noise power caused by the use of the SEN is:

Notice that for this equation we have considered the maximum possible amplitude for the input signal. In order to consider the minimum amplitude the noise, we consider we are 1 bit over the noise (). In this case, we obtain:

Thus, the system is expected to have a NF between 3 dB and dB. This desired would set the required number of bits, which is related to the sensitivity of the equation system, on noise. Empirically, we have seen that linear equations allow for a low N, while nonlinear systems call for higher values. Notice that a value of the dB calls for bits, while for bits the provided noise figure dB.

3.2. Stochastic Computing Implementation of a Memristor Emulator

The described advantages of SC framework were used by a stochastic computing implementation of a memristor emulator [9], which describes the memristor using Equation (6), and was written in the form of Equations (19)–(), so that it could be implemented in a discrete way. The mathematical operations Equations (19)–() were carried out by simple digital gates. A simple way to model memristors is using a linear relation of the charge Q with the memconductance , with an upper and a lower value, and , correspondingly. Then according to this approach:

It is apparent that now Stochastic Computing can be used to implement such a model within a digital environment (an FPGA, or an ASIC). Other complex, physically based models simulating memristive behavior in FPGAs can be found in the literature [35,36], but they are very mathematically complex models, requiring a very large number of gates.

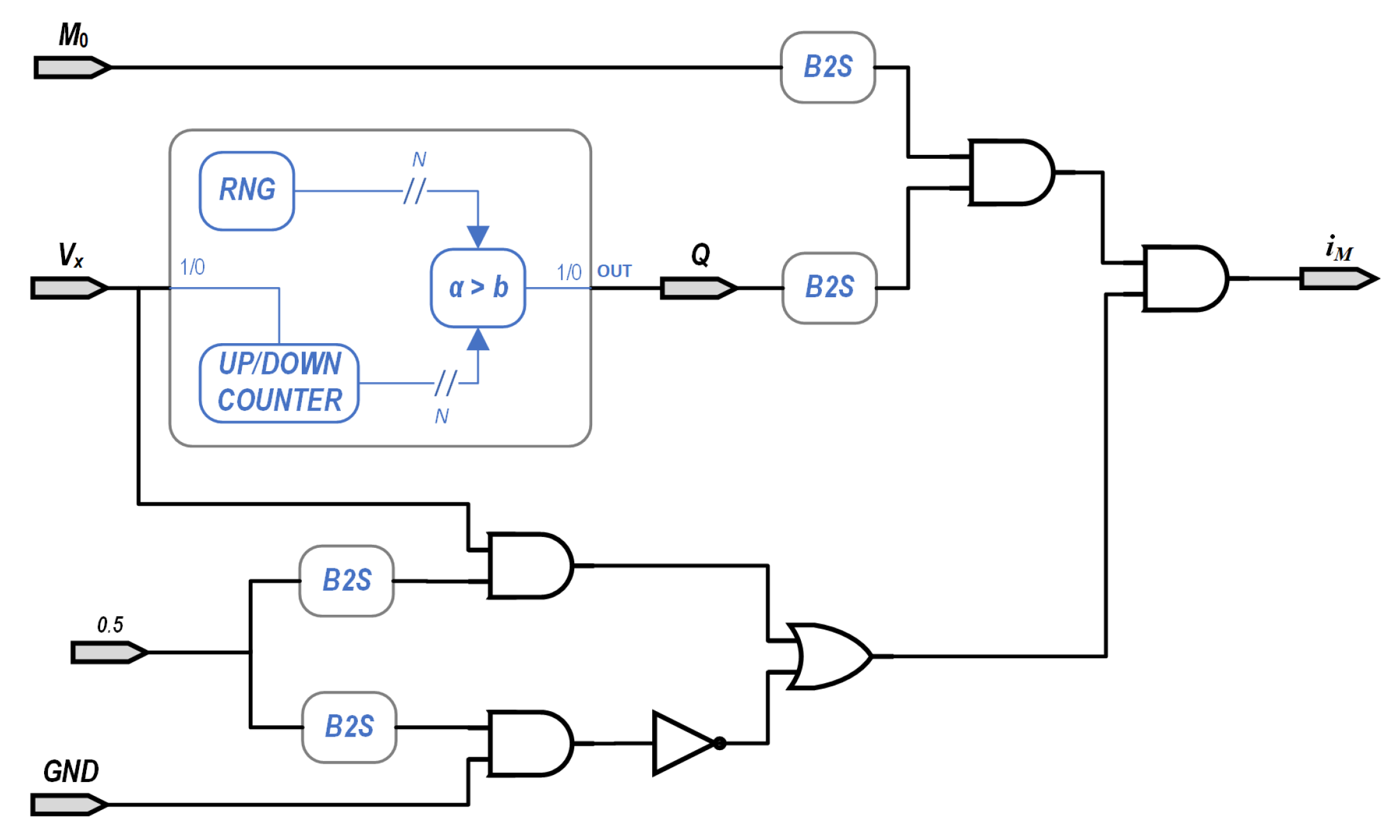

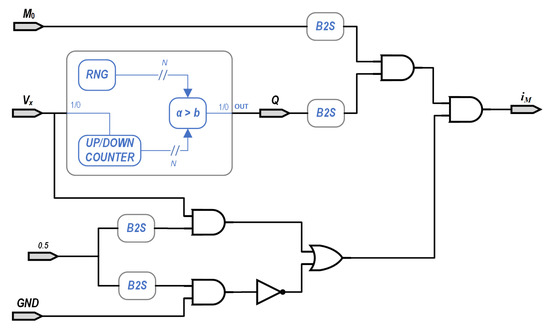

The emulator discussed above presents all the standard fingerprints of a memristor as required by the theory [8]. This implementation appears as a block diagram in Figure 6, where and are the SCN values of the positive and negative terminals of the memristor, respectively, while the calculated current is represented by the stochastic value . The SCN value of has to be represented by a probability of 0.5 for an “1”, since we are mapping the interval to an interval that includes negative and positive values. In our case, we used a public version of the Mersenne twister algorithm, available from GitHub [37].

Figure 6.

The Stochastic Computing memristor implementation. Inputs and GND are the SCN values of the positive and negative inputs of the memristor, respectively, and is the calculated SCN value of the current.

Rewriting Equations (19)–() to allow them to be implemented in discrete time results in:

where the integration step is . Using Equation (22), we can rewrite (19) as:

The adder needs to increase or decrease its output by a unit, depending on the inputs, since i and are both SEN. An increasing is performed when , while when the output is reduced. A single constant is used to group and , and the and functions are built into the adder by establishing a maximum and a minimum values. A SEN output is generated from the adder’s output by comparing it to a random number spanning . An AND gate is then used to obtain the current as per Equation (1), as in Figure 6.

3.3. Stochastic Computing Implementation of a M-CNN

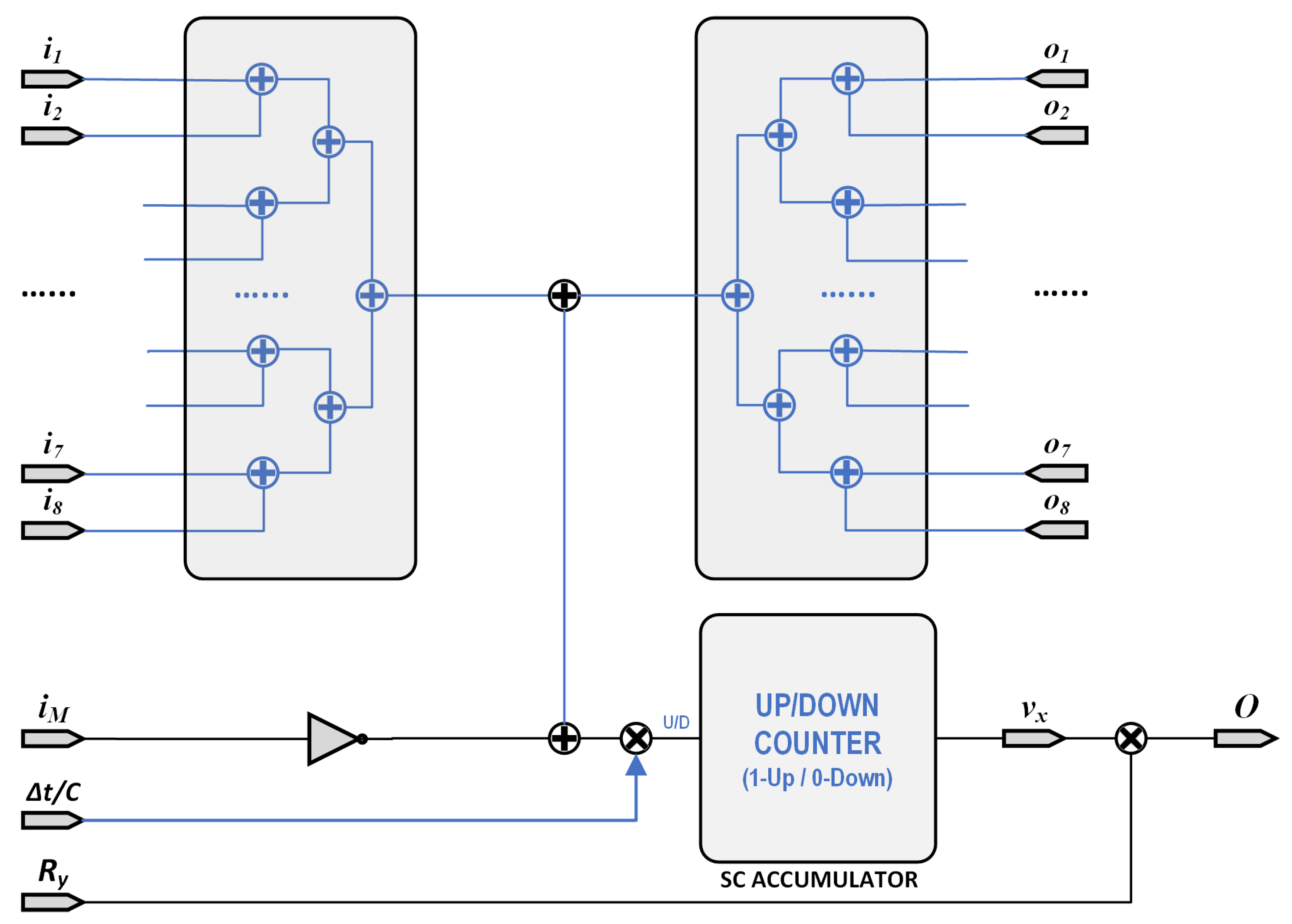

As discussed above, a very compact implementation of a single cell of a Cellular Nonlinear Network can be performed as in Figure 3 [3]. The output voltage presents a non-linear dependence on the internal voltage . The dynamic behavior of this is governed by a differential equation:

where are the input currents caused by the of the nearest cells. The currents correspond to the of those cells, while the current is that flowing through the memristor. As proposed in [3,4], we only consider the 8 closest neighbors. This way, Stochastic Computing can be used to make an implementation of this equation. As an initial step, we perform a first-order integration of (24):

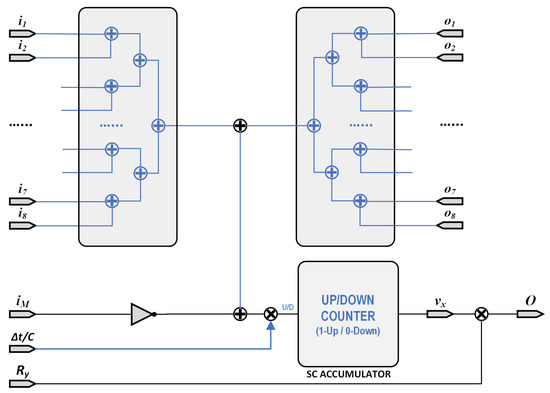

The above Equation (25) has been implemented in Figure 7 as a stochastic equivalent circuit, where all data circulation correspond to 1-bit lines. Thus, implementation of Equation (25) was performed with 16 adders each implemented using 1 gate with a 1-bit multiplexers, 2 multipliers implemented as gates, 1 inverter, and 1 accumulator. Additionally, a random number generator is also needed (note that it may be shared between different cells) along with the memristor emulator previously presented.

Figure 7.

Stochastic Computing Circuit implementation of the M-CNN processing element as in Figure 3.

The speed of the system can be estimated with the length of the chain needed to implement the SCN representation of the numbers to be used. These numbers are determined considering the input images. In our case, we are using both gray images and color images, all of them downloaded from http://www.hpca.ual.es/~vruiz/images (accessed on 20 June 2021). The gray images use a 8-bit single plane to store it, while the color images use three different 8-bit planes. Thus, at least 8 bits need to be recovered faithfully. As discussed above [34], the use of 14 bits corresponds to a chain length of 16,384 bits and can represent values with an error confined in the last 6 bits, with more than a 95% probability. Following this reasoning, we choose these 14 bits for both the length of the chain and the accumulators.

4. Image Processing Results

The CNN described above was simulated using Matlab, with the circuit parameters equivalent to those appearing in Table 1. Parameter values have been chosen to be similar to those proposed in [9], to optimize the emulator behavior. Another option would have been using the process described in [3,32] or [38], but then scaling of the values was differing significantly to allow the emulator performing efficiently. The FPGA-in-the-loop methodology, as shown in Figure 1, was implemented to speed up the simulation, using an Arria V development kit. This FPGA system was connected to the computer running the Matlab code via a cabled network.

The A and B matrices were changed to three different sets, corresponding to three different cases: store, edge, sharpening, as discussed below. The notation to represent the coefficients in the matrices is shown in Table 2.

Table 2.

Coefficient notation for . Notice that the coefficient for the current node is .

The images all have different sizes (notated as ), as reported in Table 3, and they were all initially color images with 8-bit color resolution at each RGB plane. We have processed these figures by performing two different experiments, both of them using three different sets of parameters: first, we used a color-to-gray conversion, and we processed the images through the three different CNN. In a second step, we used a color image, and we processed each color plane independently to finally reconstruct a color image from these three planes.

Table 3.

Picture size in pixels. The color depth is 8 bits per channel.

In order to keep a good NF during the stochastic processing, and according to Equation (17) and the discussion in the previous section, each pixel was converted from 8 to 14 bits by padding the least significant positions with zeros. After the processing, the stochastic images to normal images were converted back by disregarding the 6 least significant bits of the corresponding accumulator in Figure 6.

The results are shown in two different ways: as pictures, and also using the RMS error and the entropy of the image. The RMS error is defined in Equation (26), where and are the values of the pixel for the input and the processed image, respectively. The entropy H is calculated using the Matlab implementation of Equation (27), where contains the normalized histogram counts for each gray level. The entropy of the unprocessed images is reported in Table 4. Notice that for the color images we report the values of the entropy for each channel, while for the rest we report only the entropy of the gray image.

Table 4.

Calculated values of entropy of the images. The results for the color image show the three color planes separately.

4.1. Store Image

As a first example, we show the results of storing the image into the CNN. That is, the values of internal voltage are evolved until the output is equal to the input. As a comment, this is the easiest “program” that can be implemented into the SM-CNN, and can be used as a first step for more complex algorithms as can be, for instance, a background removal or a motion detection algorithms. The coefficients of the matrices are provided in Table 5.

Table 5.

Coefficients for the input and output weights in Equation (24) for the case of the image store setup.

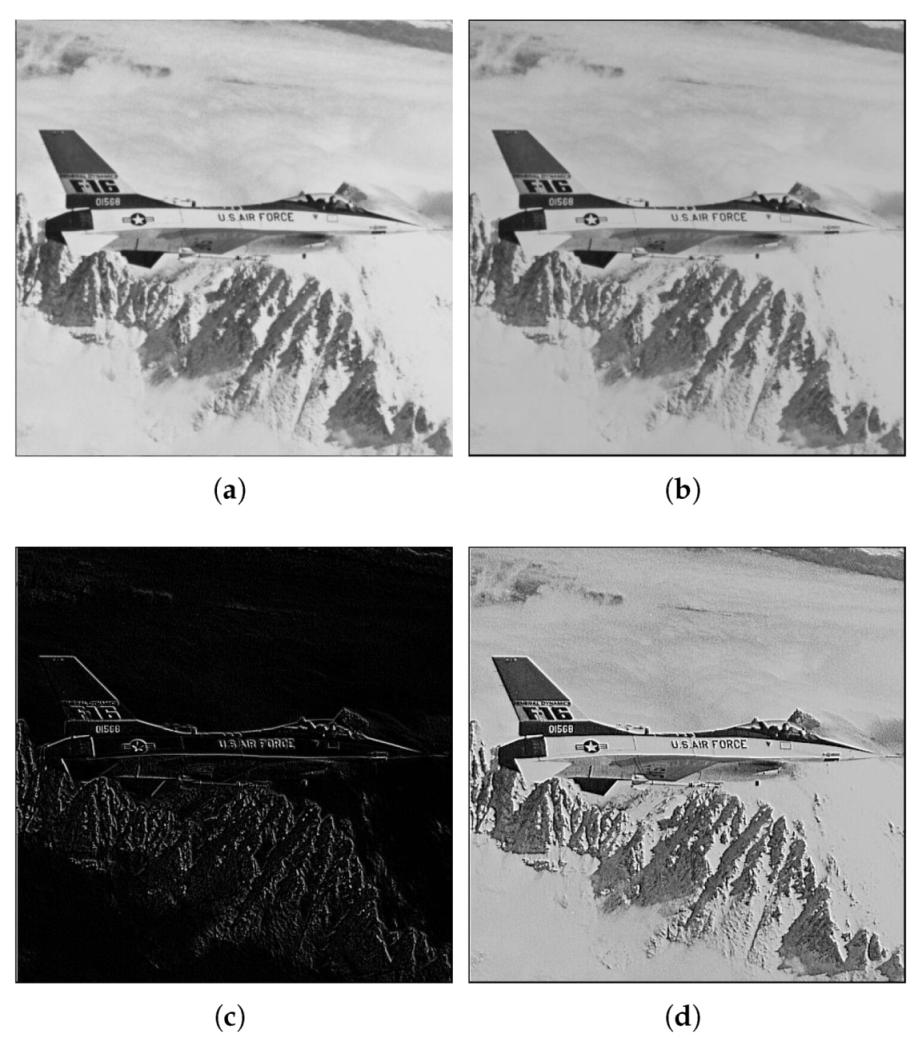

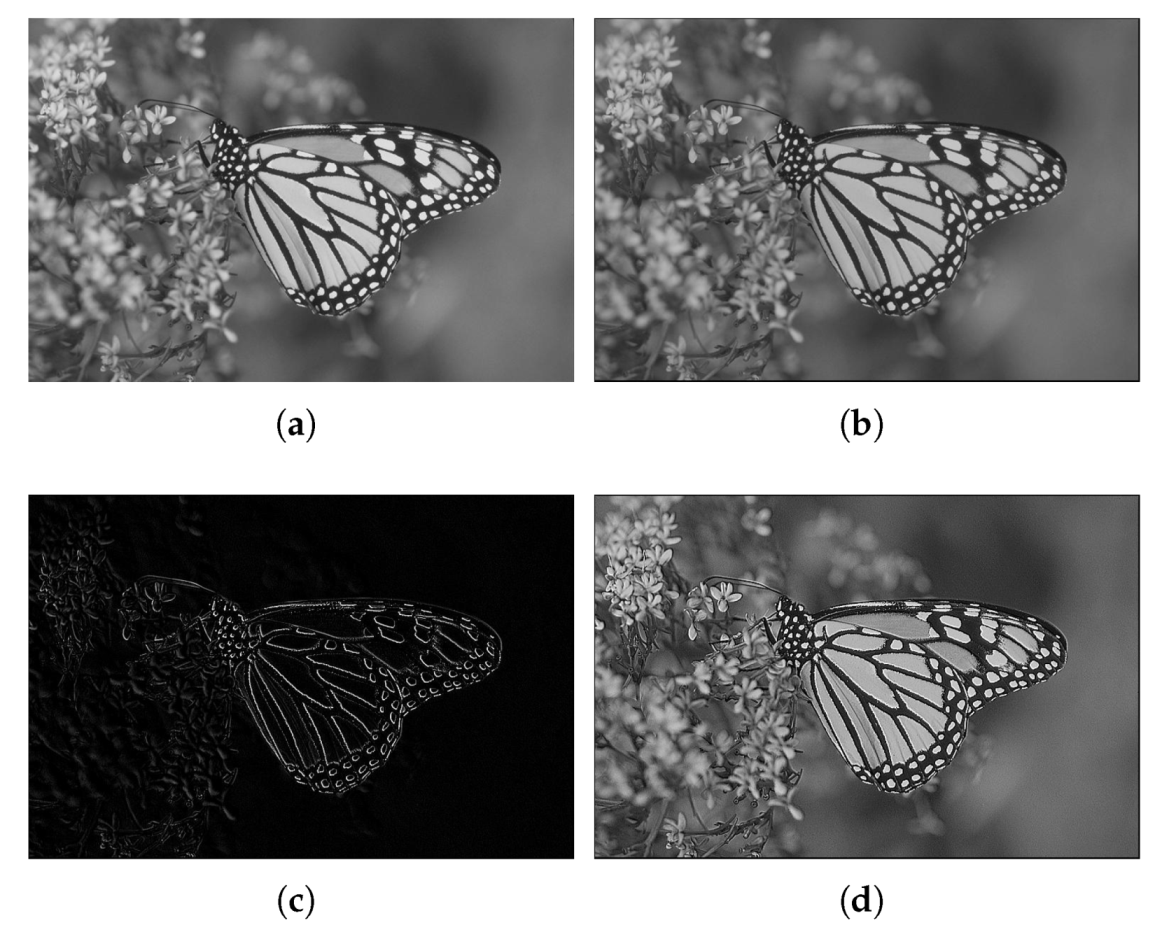

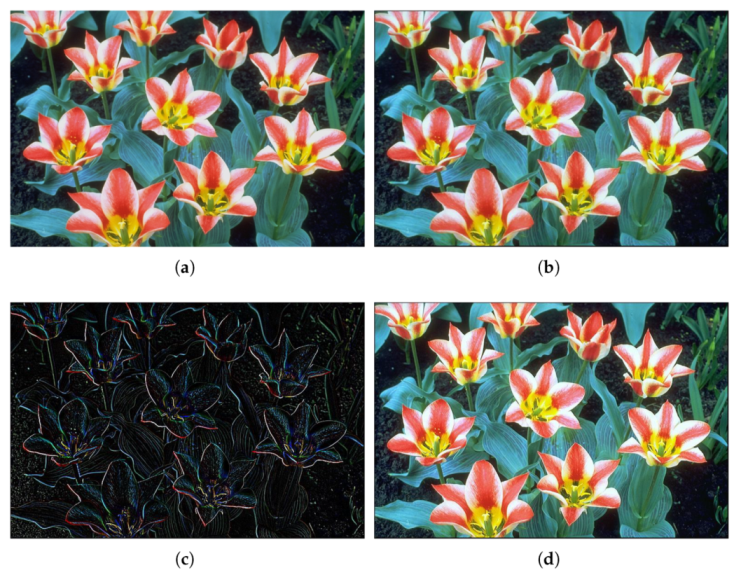

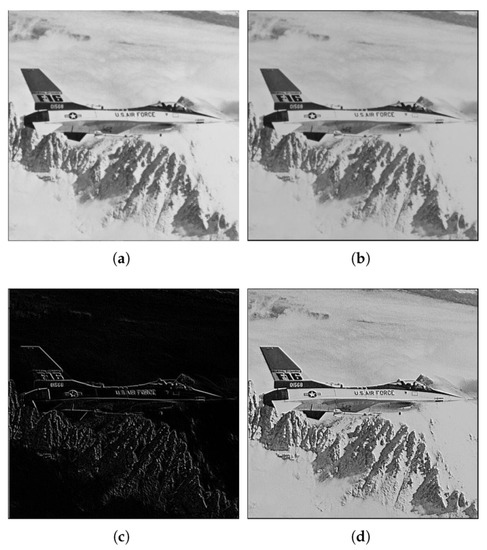

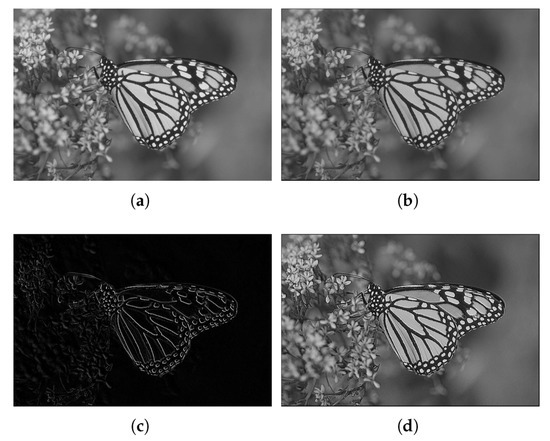

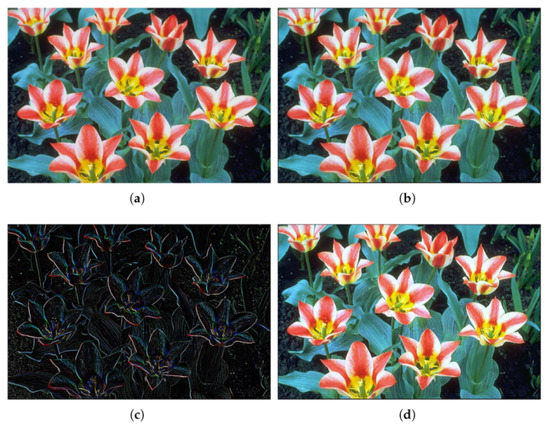

We have represented both the input and output images for two different cases input gray images namely Figure 8a and Figure 9a (a zoom-in of the latter, appears in Figure 10a) and a color image (Figure 11a). The results for the store process are shown in Figure 8b and Figure 9b for the gray images, while the stored color image is presented in Figure 11b. Visually, it can be seen there that the algorithm performs correctly.

Figure 8.

Example 1: results obtained using the three proposed stochastic computing CNN with different gene values. (a) Original image. (b) Stored image. (c) Edge detection result. (d) Sharpened image.

Figure 9.

Example 2: results obtained using the three proposed stochastic computing CNN with different gene values. (a) Original image. (b) Stored image. (c) Edge detection result. (d) Sharpened image.

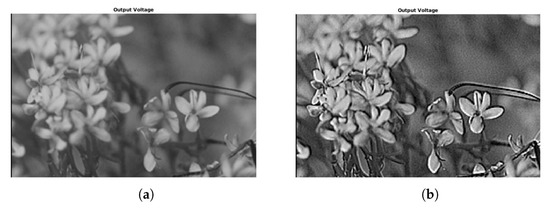

Figure 10.

Example 2: Zoom-in of Figure 9a,d, showing a detail of the sharpening results obtained using the proposed stochastic computing CNN. (a) Zoom-in of the original image. (b) Zoom-in of the sharpened image.

Figure 11.

Example 3: Color figure, showing the sharpening results obtained using the proposed stochastic computing CNN. (a) Original image. (b) Stored image. (c) Edge detection result. (d) Sharpened image.

Additionally, we have calculated the entropy of the images and the RMS error, as shown in Table 4 and Table 6. The entropy of the images is nearly the same, and the rms error is kept, at most, below 1.3%.

Table 6.

Calculated RMS of the stored images, referred to the original image. The results for the color image show the three color planes separately.

4.2. EDGE Detection Using SM-CNN

The proposed SM-CNN was further checked using an implementation of one of the stochastic systems proposed in [4]. Specifically, we have improved the EDGE routine presented in [10]. This routine performs a border detection algorithm in the image using the coefficients in Table 7. In the previous work, the routine was fixed, and no quantitative analysis was performed. The edge algorithm aims to detect changes between adjacent pixels, so the output value will evolve to a 1 or 0, depending on the change of color. The evolution will depend on the threshold of the output function and can thus be changed.

Table 7.

Coefficients for the input and output weights in Equation (24) for the case of the edge detection setup.

We used the same images as in the previous example, where two of the images were gray 8-bit images and another one was a 24-bit color image ( bits planes). They were processed as in the previous case to 14 bits by padding, and back to 8 bits by truncation. We have represented both the input and output images for two different gray images in Figure 8c and Figure 9c, while the result for the color image is shown in Figure 11c. It can be seen there that the algorithm performs as expected, showing also the corresponding decreasing in the values of the entropy in Table 4.

4.3. Sharpening

The sharpening algorithm is a variation of the EDGE detection, combined with the STORE genii. In fact, we calculated a new coefficient set as a linear combination of the two previous sets: for the store matrices, and for the edge detection. The new set is calculated as:

where M stands for both A and B. In this case, and , and the corresponding matrix coefficients are provided in Table 8.

Table 8.

Coefficients for the input and output weights in Equation (24) for the case of the image enhancement setup.

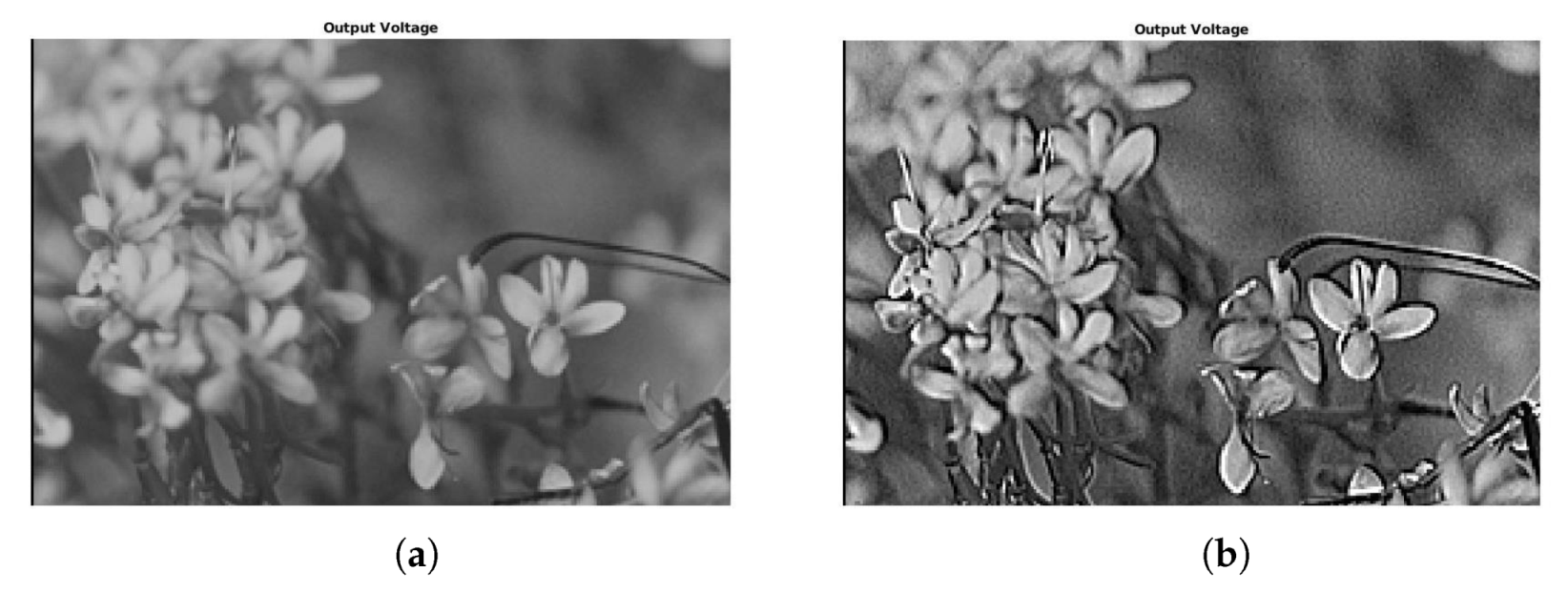

We used the same images as in the previous example, where two of the images were gray 8-bit images and another one was a 24-bit color image (3x8 bits planes). They were processed as in the previous case to 14 bits by padding, and back to 8 bits by truncation. Results of applying this set of coefficients to gray images are shown in Figure 8d and Figure 9d (with a zoom comparing original in Figure 10a against the processed output in Figure 10b). The results for a color image are depicted in Figure 11d, with a zoom-in shown in Figure 12a,b for the original and processed images, respectively. Visually, the images seem to be improved, with less fuzzy edges. This is further corroborated by the increase in the entropy shown in Table 4.

Figure 12.

Example 3: Zoom-in of Figure 11a,d, showing a detail of the sharpening results obtained using the proposed stochastic computing CNN. (a) Zoom-in of the original image. (b) Zoom-in of the sharpened image.

5. Conclusions

In this work we have performed a fully digital implementation of a Memristive Cellular Nonlinear Network profiting from the Stochastic Computing paradigm. The basic unitary cell of the proposed CNN features a digital memristor emulator, plus several arithmetic units that are implemented as very simple gates, allowing for an enormous number of cells in parallel, which can translate into a very fast image processor.

We have implemented this full CNN structure into an ARRIA V FPGA, and we have tested it along with Matlab by implementing three different procedures: a image store, an edge detection, and, finally, an image sharpening process. Notice that these three procedures involve only the change of the matrix coefficients, that are common to all the cells. As has been discussed, the results imply that the system performs smoothly, with errors lower than 1.3% in the storage, an excellent edge detection capability, and a very good detail sharpening. A full FPGA implementation of images with lower number of pixels would allow for a very high image processing speed, adequate for real time needs, and well inside the capabilities and requirements of edge computing. In addition, as shown in [38], the proposed kind of memristive CNNs are resilient to individual “pixel” failures.

As an example, in this paper we have used 14-bit stochastic numbers, which translates into a chain length of 16,384. An entry-level FPGA can run at 80MHz, so it could operate around 4800 operations per second. Since all the operations in the circuit are sequential, this is also the speed at which each step of the numerical integration is performed. Assuming that you need around 10–20 steps to reach the stable point, we could be processing more than 200 points per second. Then, the full resolution would be a matter of how many parallel threads can be implemented into an FPGA or an ASIC, but the numbers show that it seems to be adequate for real-time processing, even using low-speed systems.

Notice that in this paper only the proof of concept for the SC Memristive CNN has been discussed, not comparing it against any other improving algorithm using, for instance, a hard-wired algorithm implementation or Neural Networks, which may show much better image improvement. It has to be noted, however, that the method presented here is training-free, which simplifies the design when compared against NNs and also removes any possible bias introduced by the training. In addition, the facility to change the algorithm is also worth mentioning, since it reduces to changing the values of the coefficients in the the cells. This makes this approach especially preferable over a hard implementation of specific algorithms in, for instance, multi-purpose systems that can need to be swapping functions on the fly.

Author Contributions

Conceptualization, R.P. and S.G.S.; methodology, R.P.; validation, O.C., M.M.A.C. and R.P.; investigation, O.C. and R.P.; resources, O.C., M.M.A.C. and R.P.; writing—original draft preparation, O.C., S.G.S. and R.P.; writing—review and editing, O.C., M.M.A.C., S.G.S. and R.P.; visualization, O.C., S.G.S. and R.P.; supervision, R.P. and S.G.S.; funding acquisition, R.P. All authors have read and agreed to the published version of the manuscript.

Funding

Some of the authors wish to acknowledge support from DPI2017-86610-P, TEC2017-84877-R projects, awarded by the MICINN and also with partial support by the FEDER program.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All the images used in the work are publicly available in the internet for free download.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- Chua, L.O.; Yang, L. Cellular neural networks: Theory. IEEE Trans. Circuits Syst. 1988, 35, 1257–1272. [Google Scholar] [CrossRef]

- Rodriguez-Vazquez, A.; Fernandez-Berni, J.; Lenero-Bardallo, J.A.; Vornicu, I.; Carmona-Galan, R. CMOS vision sensors: Embedding computer vision at imaging front-ends. IEEE Circuits Syst. Mag. 2018, 18, 90–107. [Google Scholar] [CrossRef]

- Tetzlaff, R.; Ascoli, A.; Messaris, I.; Chua, L.O. Theoretical Foundations of Memristor Cellular Nonlinear Networks: Memcomputing With Bistable-Like Memristors. IEEE Trans. Circuits Syst. I Regul. Pap. 2020, 67, 502–515. [Google Scholar] [CrossRef]

- Ascoli, A.; Tetzlaff, R.; Kang, S.; Chua, L.O. Theoretical Foundations of Memristor Cellular Nonlinear Networks: A DRM2-Based Method to Design Memcomputers with Dynamic Memristors. IEEE Trans. Circuits Syst. I Regul. Pap. 2020, 67, 2753–2766. [Google Scholar] [CrossRef]

- Chua, L.O. Memristor-the missing circuit element. Circuit Theory IEEE Trans. 1971, 18, 507–519. [Google Scholar] [CrossRef]

- Strukov, D.B.; Snider, G.S.; Stewart, D.R.; Williams, R.S. The missing memristor found. Nature 2008, 453, 80–83. [Google Scholar] [CrossRef] [PubMed]

- Chua, L.O. Everything you wish to know about memristors but are afraid to ask. Radioengineering 2015, 24, 319. [Google Scholar] [CrossRef]

- Biolek, D.; Biolek, Z.; Biolková, V.; Kolka, Z. Some fingerprints of ideal memristors. In Proceedings of the 2013 IEEE International Symposium on Circuits and Systems (ISCAS), Beijing, China, 19–23 May 2013; pp. 201–204. [Google Scholar]

- Camps, O.; Picos, R.; de Benito, C.; Chawa, M.M.A.; Stavrinides, S.G. Emulating memristors in a digital environment using stochastic logic. In Proceedings of the 7th International Conference on Modern Circuits and Systems Technologies (MOCAST), Thessaloniki, Greece, 7–9 May 2018; pp. 1–4. [Google Scholar]

- Camps, O.; Stavrinides, S.G.; Picos, R. Efficient Implementation of Memristor Cellular Nonlinear Networks using Stochastic Computing. In Proceedings of the European Conference on Circuit Theory and Design (ECCTD), Sofia, Bulgaria, 7–10 September 2020; pp. 1–4. [Google Scholar]

- Von Neumann, J. Probabilistic logics and the synthesis of reliable organisms from unreliable components. Autom. Stud. 1956, 34, 43–98. [Google Scholar]

- Gaines, B.R. Stochastic computing systems. In Advances in Information Systems Science; Springer: Berlin/Heidelberg, Germany, 1969; pp. 37–172. [Google Scholar]

- Wang, R.; Han, J.; Cockburn, B.; Elliott, D. Stochastic circuit design and performance evaluation of vector quantization. In Proceedings of the IEEE 26th International Conference on Application-Specific Systems, Architectures and Processors (ASAP), Toronto, ON, Canada, 27–29 July 2015; pp. 111–115. [Google Scholar]

- Camps, O.; Stavrinides, S.G.; Picos, R. Stochastic Computing Implementation of Chaotic Systems. Mathematics 2021, 9, 375. [Google Scholar] [CrossRef]

- Morro, A.; Canals, V.; Oliver, A.; Alomar, M.L.; Rossello, J.L. Ultra-fast data-mining hardware architecture based on stochastic computing. PLoS ONE 2015, 10, e0124176. [Google Scholar] [CrossRef] [Green Version]

- Yuan, B.; Wang, Y.; Wang, Z. Area-efficient scaling-free DFT/FFT design using stochastic computing. IEEE Trans. Circuits Syst. II Express Briefs 2016, 63, 1131–1135. [Google Scholar] [CrossRef]

- Marin, S.T.; Reboul, J.Q.; Franquelo, L.G. Digital stochastic realization of complex analog controllers. IEEE Trans. Ind. Electron. 2002, 49, 1101–1109. [Google Scholar] [CrossRef]

- Najafi, M.H.; Jamali-Zavareh, S.; Lilja, D.J.; Riedel, M.D.; Bazargan, K.; Harjani, R. Time-encoded values for highly efficient stochastic circuits. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2017, 25, 1644–1657. [Google Scholar] [CrossRef]

- Fick, D.; Kim, G.; Wang, A.; Blaauw, D.; Sylvester, D. Mixed-signal stochastic computation demonstrated in an image sensor with integrated 2D edge detection and noise filtering. In Proceedings of the IEEE 2014 Custom Integrated Circuits Conference, San Jose, CA, USA, 15–17 September 2014; pp. 1–4. [Google Scholar]

- Toral, S.; Quero, J.; Ortega, J.; Franquelo, L. Stochastic A/D sigma-delta converter on FPGA. In Proceedings of the 42nd Midwest Symposium on Circuits and Systems (Cat. No.99CH36356), Las Cruces, NM, USA, 8–11 August 1999; Volume 1, pp. 35–38. [Google Scholar]

- Moons, B.; Verhelst, M. Energy-Efficiency and Accuracy of Stochastic Computing Circuits in Emerging Technologies. IEEE J. Emerg. Sel. Top. Circuits Syst. 2014, 4, 475–486. [Google Scholar] [CrossRef]

- Li, S.; Glova, A.O.; Hu, X.; Gu, P.; Niu, D.; Malladi, K.T.; Zheng, H.; Brennan, B.; Xie, Y. SCOPE: A Stochastic Computing Engine for DRAM-Based In-Situ Accelerator. In Proceedings of the 51st Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), Fukuoka, Japan, 20–24 October 2018; pp. 696–709. [Google Scholar]

- Toral, S.; Quero, J.; Franquelo, L. Stochastic pulse coded arithmetic. In Proceedings of the IEEE International Symposium on Circuits and Systems (ISCAS), Geneva, Switzerland, 28–31 May 2000; Volume 1, pp. 599–602. [Google Scholar]

- Corinto, F.; Civalleri, P.P.; Chua, L.O. A theoretical approach to memristor devices. IEEE J. Emerg. Sel. Top. Circuits Syst. 2015, 5, 123–132. [Google Scholar] [CrossRef] [Green Version]

- Al Chawa, M.; Picos, R.; Covi, E.; Brivio, S.; Garcia-Moreno, E.; Spiga, S. Flux-charge characterizing of reset transition in bipolar resistive-switching memristive devices. In Proceedings of the 11th Spanish Conference on Electron Devices, Barcelona, Spain, 8–10 February 2017. [Google Scholar]

- Picos, R.; Roldan, J.B.; Al Chawa, M.M.; Garcia-Fernandez, P.; Jimenez-Molinos, F.; Garcia-Moreno, E. Semiempirical Modeling of Reset Transitions in Unipolar Resistive-Switching Based Memristors. Radioengineering 2015, 24, 421. [Google Scholar] [CrossRef]

- Picos, R.; Roldan, J.; Al Chawa, M.; Jimenez-Molinos, F.; Villena, M.; Garcia-Moreno, E. Exploring ReRAM-based memristors in the charge-flux domain, a modeling approach. In Proceedings of the Memristive Systems (MEMRISYS) 2015 International Conference on, Paphos, Cyprus, 8–10 November 2015; pp. 1–2. [Google Scholar]

- Al Chawa, M.M.; Picos, R. A Simple Quasi-Static Compact Model of Bipolar ReRAM Memristive Devices. IEEE Trans. Circuits Syst. II Express Briefs 2019, 67, 390–394. [Google Scholar] [CrossRef]

- Itoh, M.; Chua, L.O. Designing CNN genes. Int. J. Bifurc. Chaos 2003, 13, 2739–2824. [Google Scholar] [CrossRef]

- Itoh, M. Some Interesting Features of Memristor CNN. arXiv 2019, arXiv:1902.05167. [Google Scholar]

- Maldonado, D.; Gonzalez, M.B.; Campabadal, F.; Jimenez-Molinos, F.; Al Chawa, M.M.; Stavrinides, S.G.; Roldan, J.B.; Tetzlaff, R.; Picos, R.; Chua, L.O. Experimental evaluation of the dynamic route map in the reset transition of memristive ReRAMs. Chaos Solitons Fractals 2020, 139, 110288. [Google Scholar] [CrossRef]

- Ascoli, A.; Tetzlaff, R.; Messaris, I.; Kang, S.; Chua, L. Image Processing by Cellular Memcomputing Structures. In Proceedings of the IEEE International Symposium on Circuits and Systems (ISCAS), Seville, Spain, 12–14 October 2020; pp. 1–5. [Google Scholar]

- Khanday, F.A.; Akhtar, R. Reversible stochastic computing. Int. J. Numer. Model. Electron. Netw. Devices Fields 2020, 33, e2711. [Google Scholar] [CrossRef]

- Camps, O.; Picos, R.; de Benito, C.; Al Chawa, M.M.; Stavrinides, S.G. Effective accuracy estimation and representation error reduction for stochastic logic operations. In Proceedings of the 7th International Conference on Modern Circuits and Systems Technologies (MOCAST), Thessaloniki, Greece, 7–9 May 2018; pp. 1–4. [Google Scholar]

- Vourkas, I.; Abusleme, A.; Ntinas, V.; Sirakoulis, G.C.; Rubio, A. A Digital Memristor Emulator for FPGA-Based Artificial Neural Networks. In Proceedings of the Verification and Security Workshop (IVSW), Sant Feliu de Guixols, Spain, 4–6 June 2016; pp. 1–4. [Google Scholar]

- Ranjan, R.; Ponce, P.M.; Kankuppe, A.; John, B.; Saleh, L.A.; Schroeder, D.; Krautschneider, W.H. Programmable memristor emulator asic for biologically inspired memristive learning. In Proceedings of the 2016 39th International Conference on Telecommunications and Signal Processing (TSP), Vienna, Austria, 27–29 June 2016; pp. 261–264. [Google Scholar]

- Forencich, A. Verilog Implementation of Mersenne Twister PRNG. 2018. Available online: https://github.com/alexforencich/verilog-mersenne (accessed on 20 June 2021).

- Duan, S.; Hu, X.; Dong, Z.; Wang, L.; Mazumder, P. Memristor-Based Cellular Nonlinear/Neural Network: Design, Analysis, and Applications. IEEE Trans. Neural Netw. Learn. Syst. 2015, 26, 1202–1213. [Google Scholar] [CrossRef] [PubMed]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).