Towards a Comprehensive and Robust Micromanipulation System with Force-Sensing and VR Capabilities

Abstract

1. Introduction

2. Materials and Methods

2.1. Micromanipulation System Overview

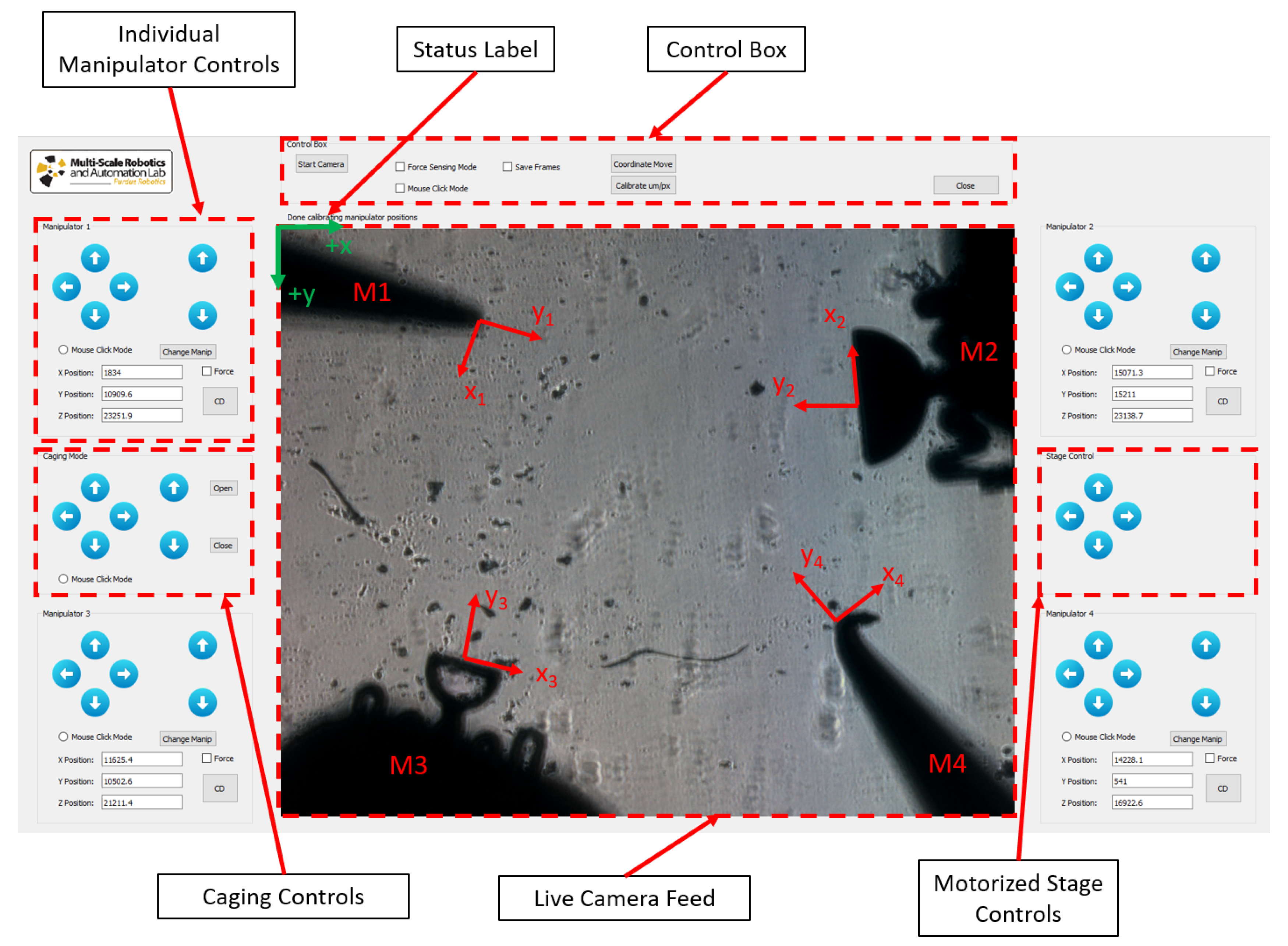

2.2. Graphical User Interface (GUI) Overview

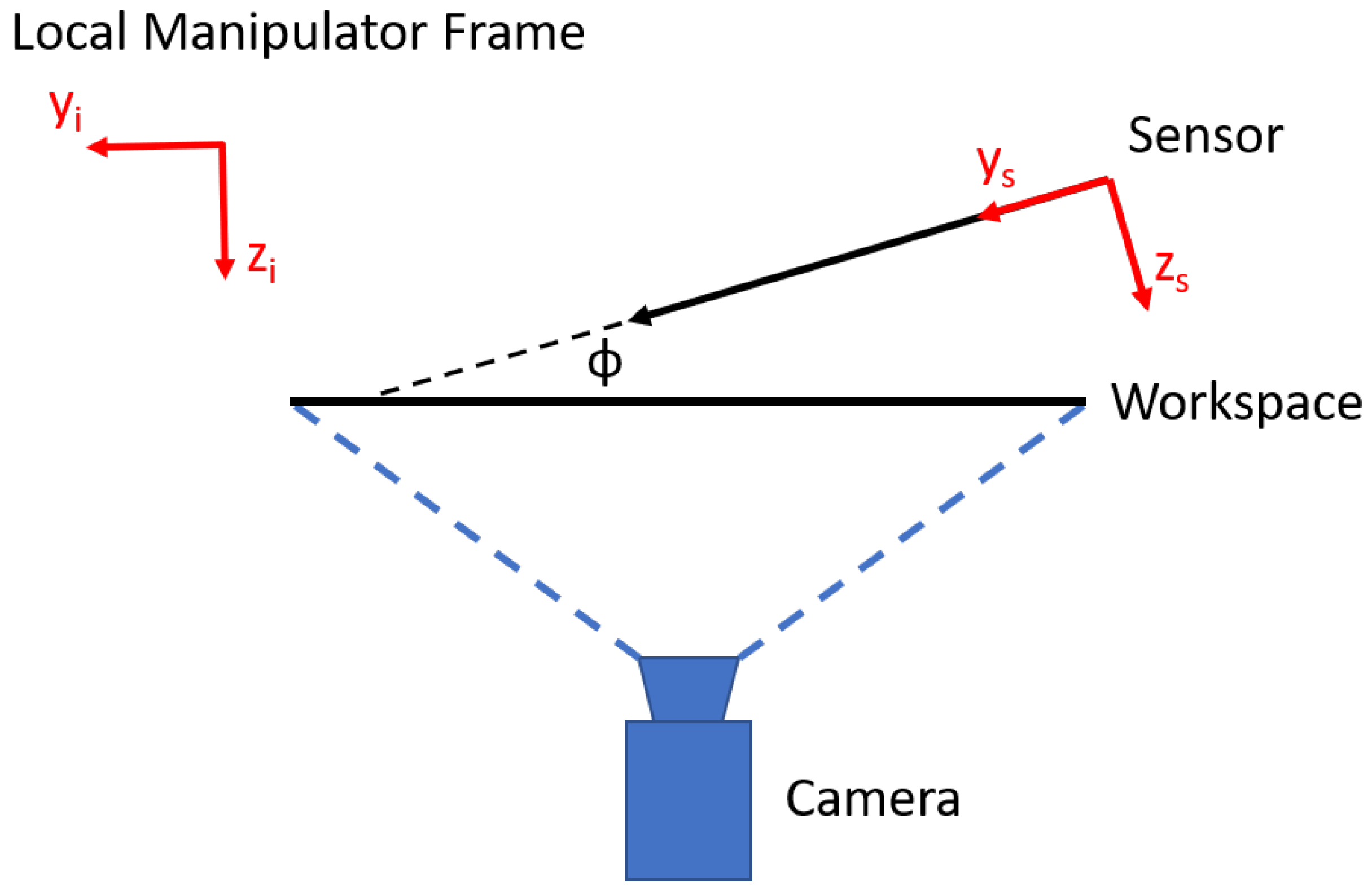

2.3. The Vision-Based Micro-Force Sensing Probe (VBFS-P)

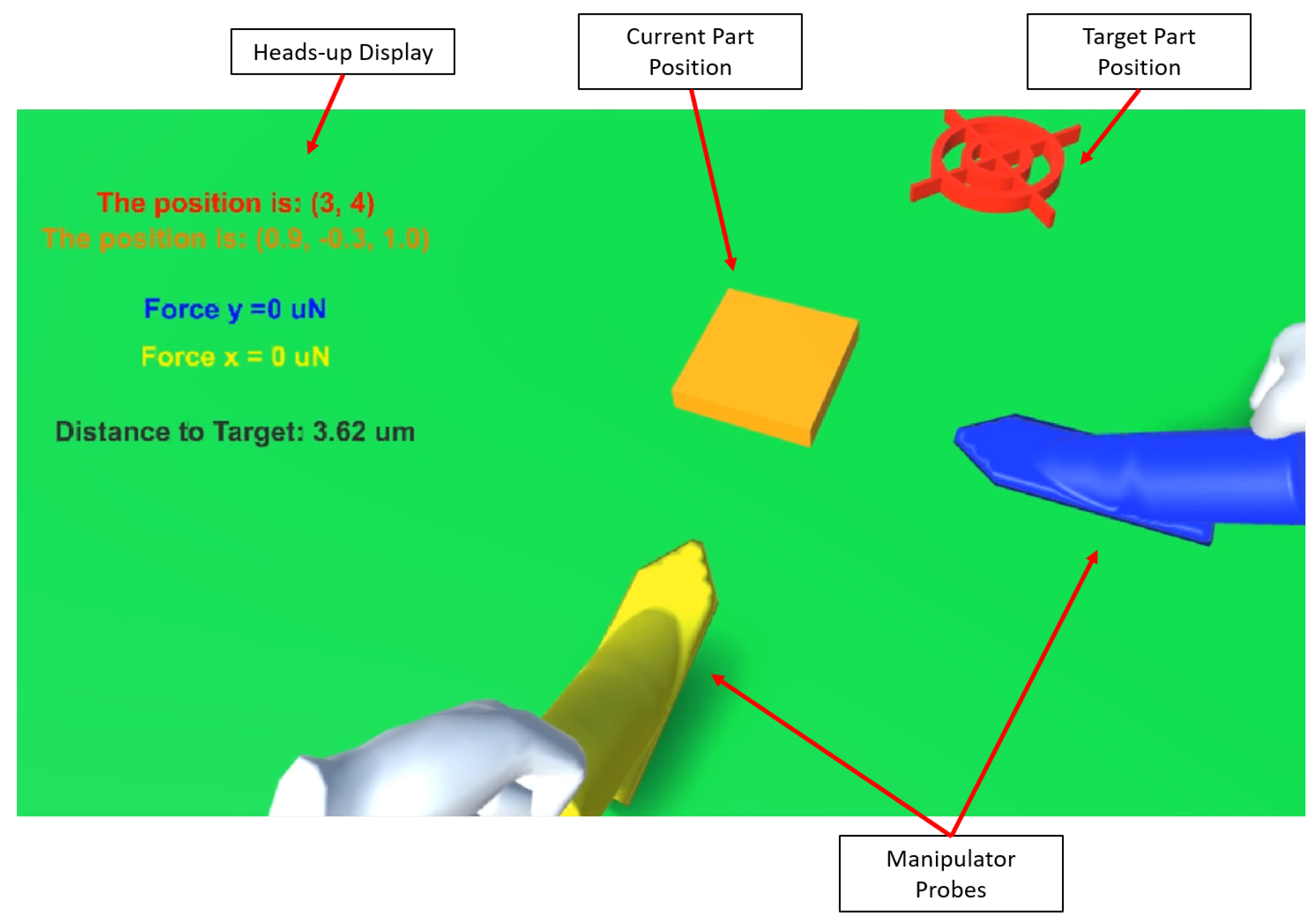

2.4. Virtual Reality (VR) System

3. Results

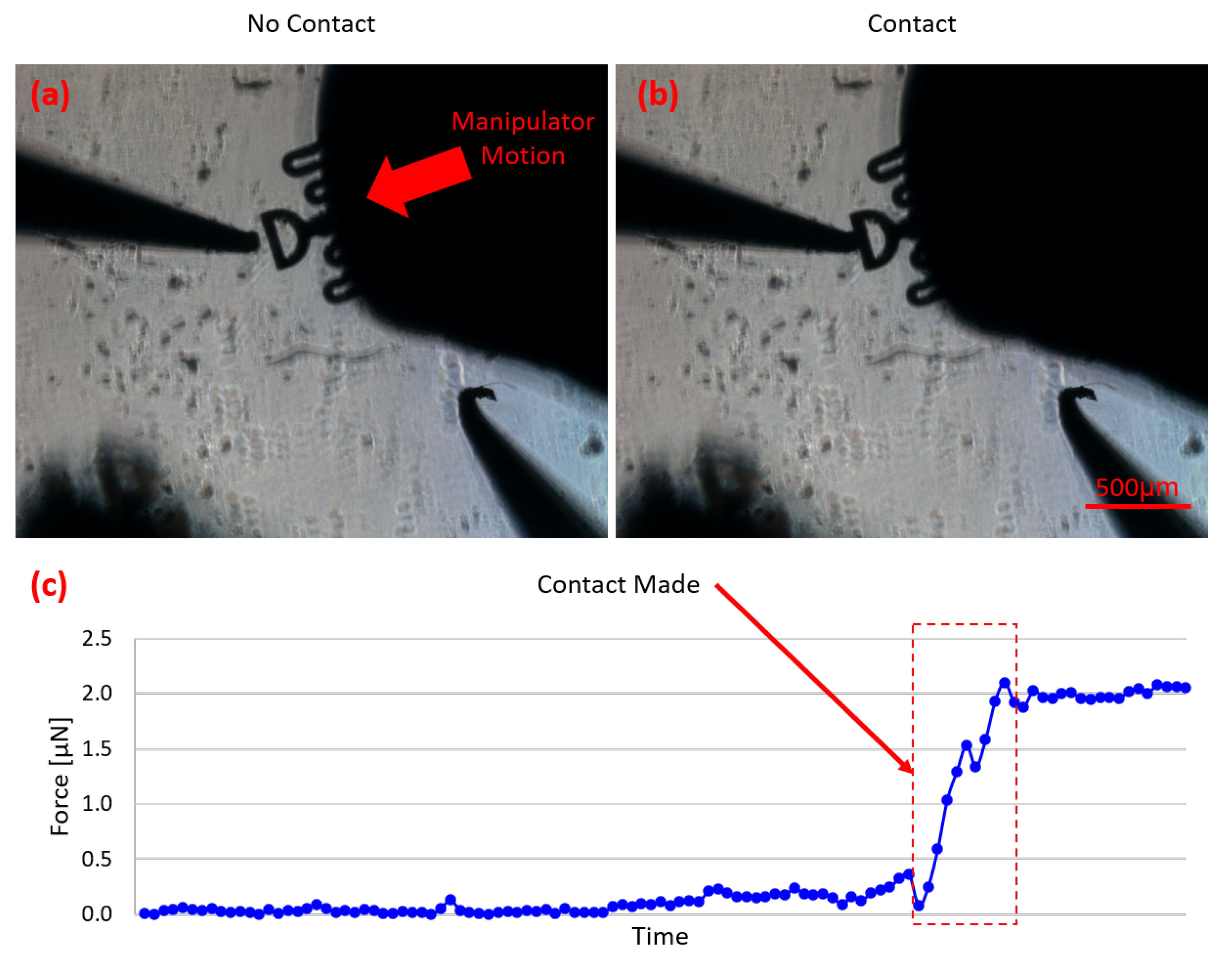

3.1. Contact Detection

3.2. Caging Accuracy

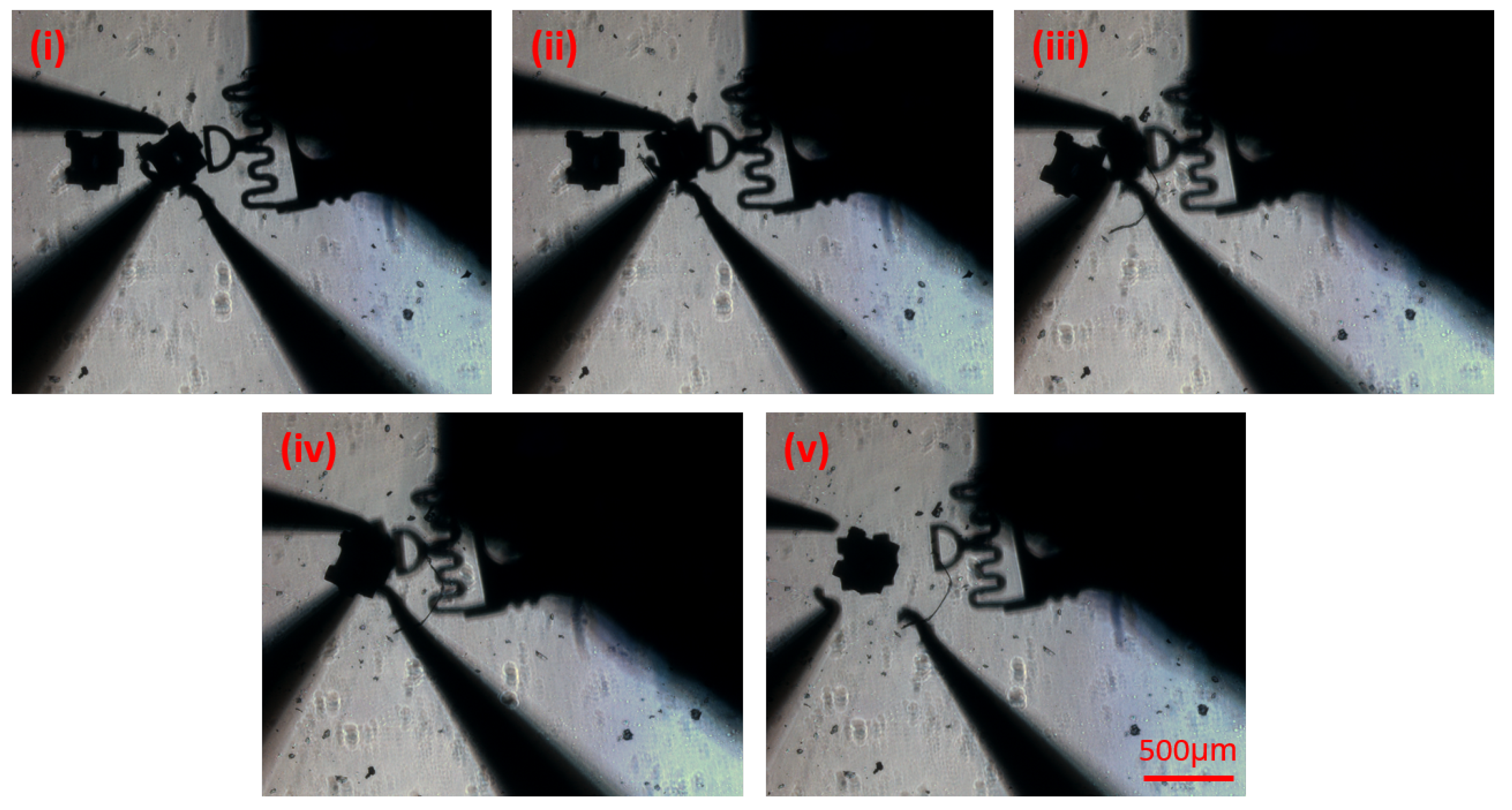

3.3. Caging Manipulation/Assembly

3.4. VR Experiments

4. Discussion

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DOF | Degrees of Freedom |
| AFM | Atomic Force Microscope |
| VBFS-MS | Vision-based Micro-Force Sensing Manipulation System |
| VBFS-P | Vision-based Micro-Force Sensing Probe |
| VR | Virtual Reality |
| GUI | Graphical User Interface |
| CD | Contact Detection |
| PDMS | Polydimethylsiloxane |
| DRIE | Deep Reactive-Ion Etching |
| MEMS | Microelectromechanical Systems |
| MOSSE | Minimum Output Sum of Squared Error |
| CSRT | Channel and Spatial Reliability Tracker |
| ROI | Region of Interest |
| GPU | Graphics Processing Unit |


| Sensor | Direction | Stiffness (N/m) | Resolution (μN) | Range (μN) |
|---|---|---|---|---|
| I | x | 0.24 | 0.92 | [0, 32] |
| | y | 0.43 | 1.65 | [0, 65] |
| | z | 0.05 | 0.19 | [0, 5] |
| II | x | 0.12 | 0.46 | [0, 17] |
| | y | 0.24 | 0.93 | [0, 37] |
| | z | 0.05 | 0.21 | [0, 6] |
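The sensor table lists a stiffness for each axis of the two probes. The minimal Python sketch below shows how a visually tracked probe deflection could be turned into a force estimate. It assumes that each axis behaves as an independent linear spring (F = kδ) and that the resolution and range columns are in micronewtons, the only reading consistent with stiffnesses of a fraction of a newton per meter. The dictionary `SENSORS` and the functions `force_from_deflection` and `implied_deflection_resolution_um` are hypothetical names for illustration, not the authors' implementation. Treating the listed range as a bound on force magnitude is also an assumption.

```python
"""Hypothetical sketch: per-axis force estimate F = k * delta for a
vision-based force-sensing probe. Stiffness and range values are copied
from the sensor table; the names and the linear model are assumptions."""
from typing import Dict, Tuple

# (stiffness in N/m, upper end of force range in uN) for each sensor/axis.
# Multiplying N/m by um yields uN directly, so no scale factor is needed.
SENSORS: Dict[str, Dict[str, Tuple[float, float]]] = {
    "I": {"x": (0.24, 32.0), "y": (0.43, 65.0), "z": (0.05, 5.0)},
    "II": {"x": (0.12, 17.0), "y": (0.24, 37.0), "z": (0.05, 6.0)},
}


def force_from_deflection(sensor: str, axis: str, deflection_um: float) -> float:
    """Return the estimated force (uN) for a tracked deflection (um).

    Assumes linear elasticity along the axis; raises ValueError when the
    force magnitude exceeds the tabulated range (assumed magnitude bound).
    """
    stiffness, max_force = SENSORS[sensor][axis]
    force = stiffness * deflection_um
    if abs(force) > max_force:
        raise ValueError(f"{force:.2f} uN exceeds the {max_force} uN range")
    return force


def implied_deflection_resolution_um(sensor: str, axis: str, resolution_un: float) -> float:
    """Smallest deflection (um) implied by a force resolution (uN)."""
    stiffness, _ = SENSORS[sensor][axis]
    return resolution_un / stiffness


if __name__ == "__main__":
    print(force_from_deflection("I", "x", 10.0))
    print(implied_deflection_resolution_um("I", "x", 0.92))
```

For example, a 10 μm x-axis deflection of Sensor I corresponds to about 2.4 μN under this model, and dividing Sensor I's x-axis resolution (0.92 μN) by its stiffness (0.24 N/m) gives roughly 3.8 μm, the smallest deflection that the stated force resolution implies.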

| Method | Average Offset [μm] | Maximum Offset [μm] | Standard Deviation [μm] | Percent Error |
|---|---|---|---|---|
| Caging with Polygonal parts | 15.46 | 23.45 | 1.83 | 7.73% |
| Caging with Circular parts | 17.55 | 28.08 | 2.29 | 8.78% |
| Pushing Method | 32.92 | 97.84 | 19.16 | 14.07% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Adam, G.; Chidambaram, S.; Reddy, S.S.; Ramani, K.; Cappelleri, D.J. Towards a Comprehensive and Robust Micromanipulation System with Force-Sensing and VR Capabilities. Micromachines 2021, 12, 784. https://doi.org/10.3390/mi12070784
