Powering Nutrition Research: Practical Strategies for Sample Size in Multiple Regression

Abstract

1. Introduction

2. Materials and Methods

2.1. Fundamentals of Sample Size Calculations

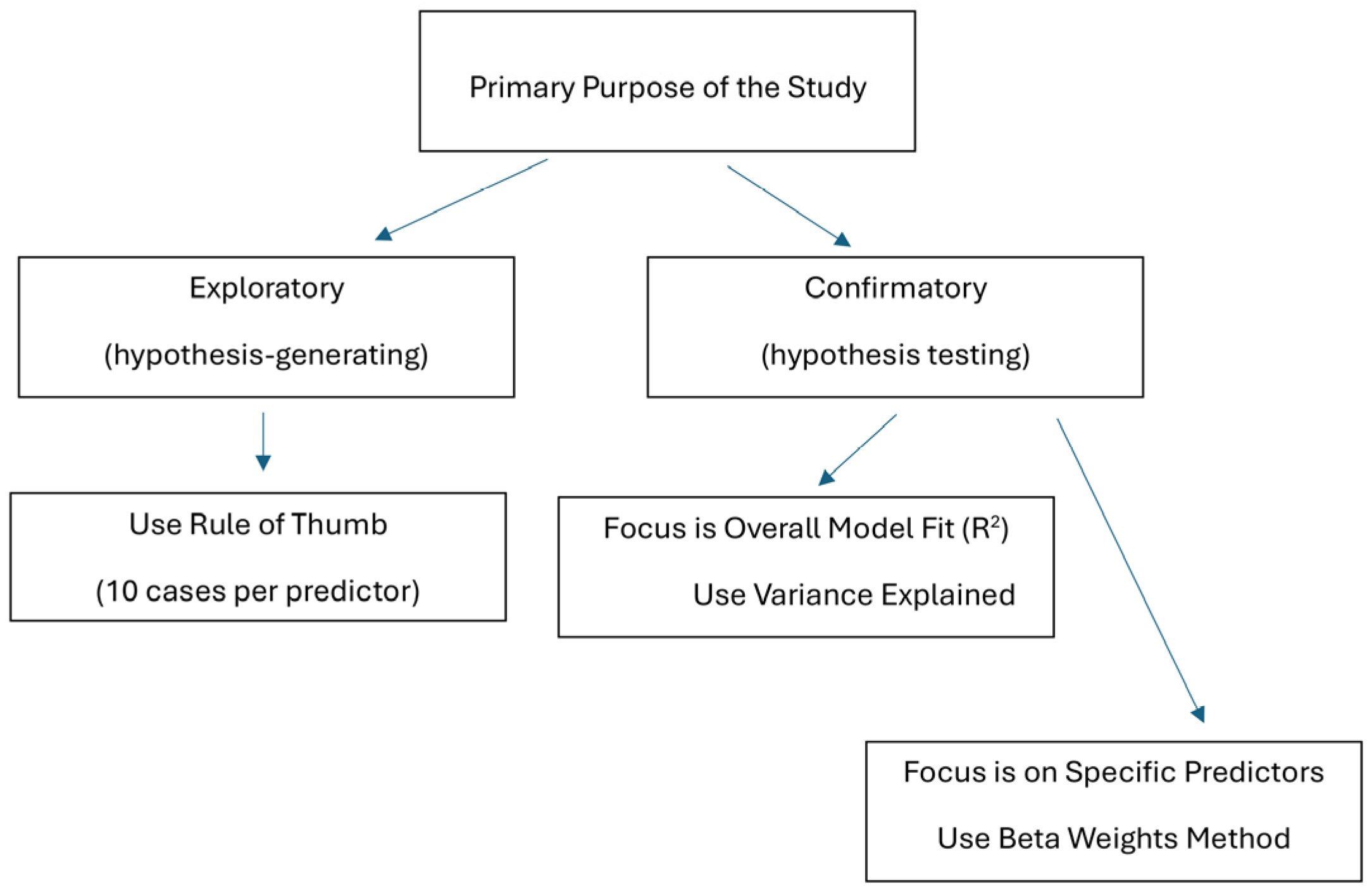

2.2. Approaches to Sample Size Calculations for Multiple Regression

3. Results

3.1. The Rule of Thumb

- N = the total sample size (i.e., the number of participants or observations in the study)

- k = the number of predictor variables (also called independent variables or regressors) in the multiple regression model

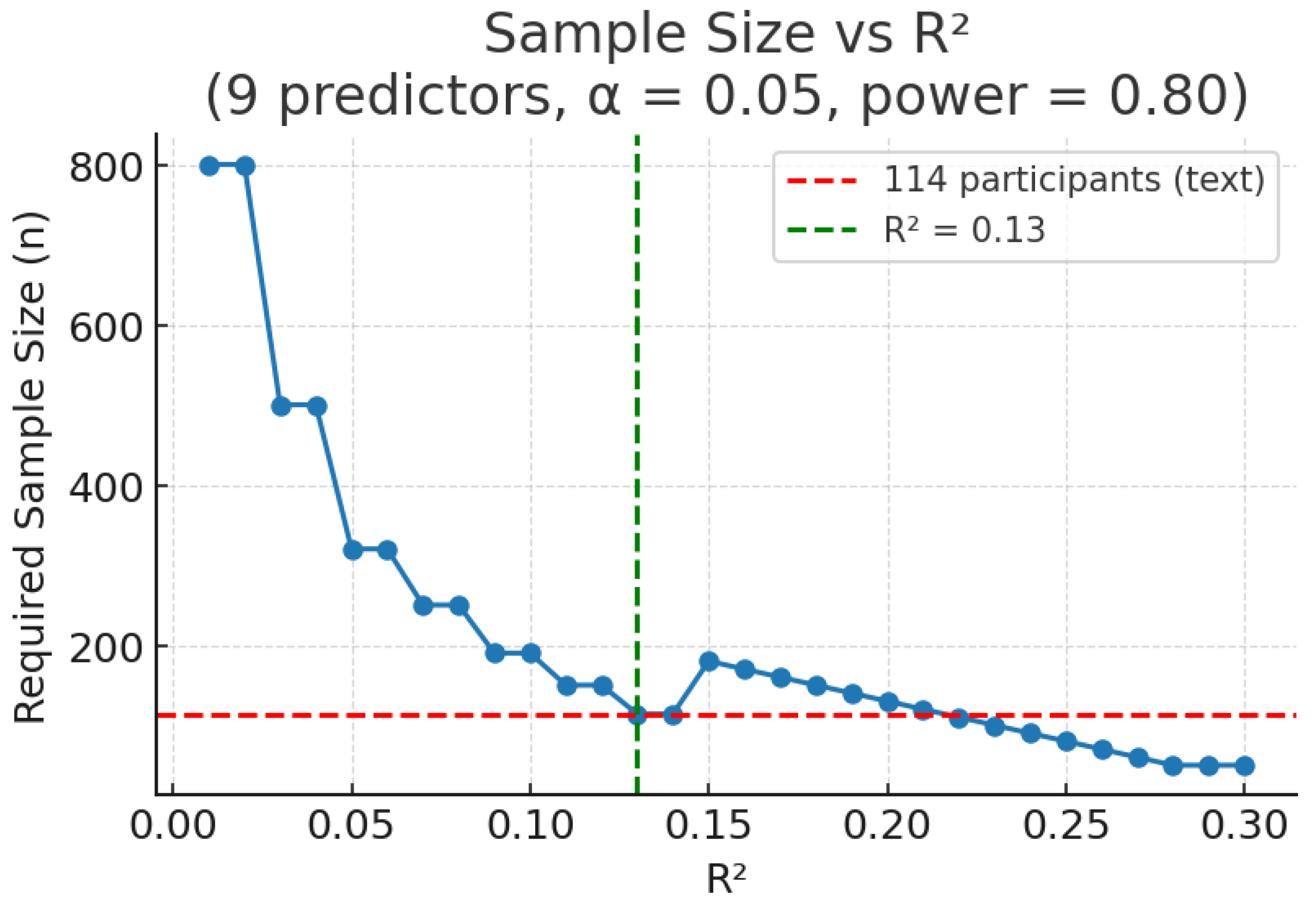
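
The rule of thumb can be written as a small function. This is a minimal Python sketch that assumes the widely cited formulation attributed to Green (1991). That formulation uses N ≥ 50 + 8k to test the overall model and N ≥ 104 + k to test individual predictors, and both rules target a medium effect size at α = 0.05 with power of about 0.80. With k = 5, the overall-model rule gives N = 90, which is the value listed for the rule of thumb in the method comparison table. The choice of k = 5 is inferred from that table and is not stated in this section. The function name `rule_of_thumb_n` is hypothetical.

```python
def rule_of_thumb_n(k: int) -> dict:
    """Minimum N under Green's (1991) rules of thumb for k predictors.

    Both rules assume a medium effect size, alpha = 0.05, and power of about 0.80.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    overall = 50 + 8 * k      # test of the overall model (R^2 different from zero)
    individual = 104 + k      # tests of individual regression coefficients
    return {
        "overall_model": overall,
        "individual_predictors": individual,
        # When both kinds of test matter, a common recommendation is the larger value.
        "both": max(overall, individual),
    }


if __name__ == "__main__":
    for k in (3, 5, 10):
        print(k, rule_of_thumb_n(k))
```

The two rules grow at different rates. For small k the individual-predictor rule dominates: with k = 5 it gives 109, compared with 90 for the overall model. The overall-model rule becomes the larger requirement only once k reaches 8.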

3.2. Variance Explained (R²) Method

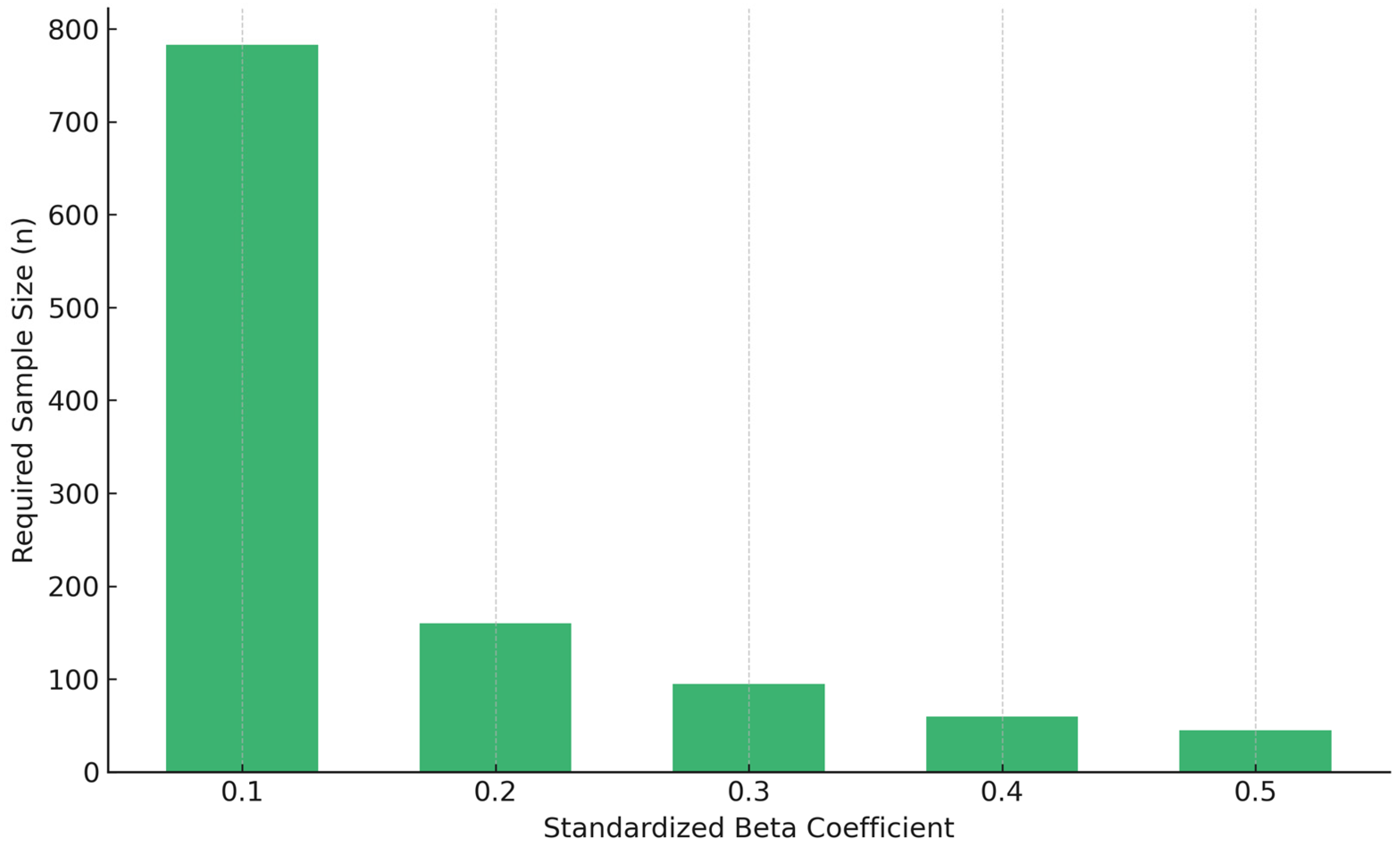
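
The R² method can be made concrete with a standard-library-only Python sketch. It first converts an anticipated R² into Cohen's f² = R²/(1 − R²). It then searches for the smallest N at which the overall F test reaches the target power. The sketch assumes the fixed-model setup used by Cohen (1988) and G*Power:

- numerator df u = k
- denominator df v = N − k − 1
- noncentrality λ = f²·N

The noncentral F distribution is evaluated as a Poisson mixture of regularized incomplete beta functions. All function names are hypothetical. This section does not restate the number of predictors, α, or power behind the tabulated estimates, so the sketch takes them as explicit inputs. Results should be cross-checked against G*Power before being used to plan a study.

```python
import math


def _betacf(a: float, b: float, x: float) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    c = 1.0
    d = 1.0 - (a + b) * x / (a + 1.0)
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, 2000):
        # One even and one odd term of the continued fraction per pass.
        for aa in (m * (b - m) * x / ((a + 2 * m - 1) * (a + 2 * m)),
                   -(a + m) * (a + b + m) * x / ((a + 2 * m) * (a + 2 * m + 1))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < 1e-13:
            break
    return h


def _betainc(a: float, b: float, x: float) -> float:
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def f_test_power(f2: float, u: int, n: int, alpha: float = 0.05) -> float:
    """Power of the regression F test with u numerator df and n observations."""
    v = n - u - 1                        # denominator (error) degrees of freedom
    if v < 1:
        return 0.0
    # Critical value on the beta scale, y = u*F / (u*F + v), found by bisection.
    lo, hi = 0.0, 1.0
    for _ in range(80):
        mid = (lo + hi) / 2.0
        if _betainc(u / 2.0, v / 2.0, mid) < 1.0 - alpha:
            lo = mid
        else:
            hi = mid
    y_crit = hi
    # Noncentral F CDF as a Poisson(lambda / 2) mixture, with lambda = f2 * n.
    half = f2 * n / 2.0
    j_max = int(half + 10.0 * math.sqrt(half) + 30)
    cdf = sum(
        math.exp(j * math.log(half) - half - math.lgamma(j + 1))
        * _betainc(u / 2.0 + j, v / 2.0, y_crit)
        for j in range(j_max + 1)
    )
    return 1.0 - cdf


def required_n(f2: float, k: int, alpha: float = 0.05, power: float = 0.80) -> int:
    """Smallest total N whose overall F test (u = k predictors) reaches `power`."""
    if f2 <= 0:
        raise ValueError("f2 must be positive")
    hi = k + 2
    while f_test_power(f2, k, hi, alpha) < power:
        hi *= 2                          # exponential search for an upper bound
    lo = k + 1                           # v = 0 here, so power is 0 by definition
    while hi - lo > 1:                   # bisection, assuming power rises with n
        mid = (lo + hi) // 2
        if f_test_power(f2, k, mid, alpha) >= power:
            hi = mid
        else:
            lo = mid
    return hi


def f2_from_r2(r2: float) -> float:
    """Cohen's f^2 for the overall model: R^2 / (1 - R^2)."""
    return r2 / (1.0 - r2)


if __name__ == "__main__":
    for r2 in (0.02, 0.13, 0.26):        # small, medium, large in Cohen's terms
        f2 = f2_from_r2(r2)
        print(f"R2={r2:.2f}  f2={f2:.3f}  N={required_n(f2, k=5)}")
```

Under these assumptions, Cohen's conventional R² values of 0.02, 0.13, and 0.26 correspond to f² ≈ 0.020, 0.149, and 0.351. These match his small, medium, and large f² benchmarks of 0.02, 0.15, and 0.35. The search doubles N until the target power is reached and then bisects, which relies on power increasing with N.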

3.3. Beta Weights Approach
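
The beta weights approach starts from the standardized coefficient expected for one predictor of interest and converts it into an effect size for a 1-df test. The Python sketch below does this under explicit assumptions, using Cohen's partial effect size f² = (R²_full − R²_reduced) / (1 − R²_full). If the predictor is uncorrelated with the other predictors, its unique contribution to R² equals β², so f² = β² / (1 − R²_full). The full-model R² is therefore a hypothetical input. The sample size then comes from the large-sample normal approximation N ≈ (z(1 − α/2) + z(power))² / f². This approximation ignores the heavier tails of the t distribution, so for small samples it tends to run slightly below exact calculations. Function names are hypothetical.

```python
import math
from statistics import NormalDist


def f2_from_beta(beta: float, r2_full: float) -> float:
    """Cohen's partial f^2 for one predictor, assuming its unique R^2 contribution is beta^2.

    That equality holds only when the predictor is uncorrelated with the other
    predictors, and r2_full should then be at least beta^2.
    """
    if not 0.0 <= r2_full < 1.0:
        raise ValueError("r2_full must be in [0, 1)")
    return beta ** 2 / (1.0 - r2_full)


def approx_n_single_predictor(f2: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Normal approximation for a two-sided 1-df test: N = (z_alpha + z_power)^2 / f^2."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1.0 - alpha / 2.0)
    z_power = z.inv_cdf(power)
    return math.ceil((z_alpha + z_power) ** 2 / f2)


if __name__ == "__main__":
    # Hypothetical scenario: this predictor is the only one explaining variance,
    # so the full-model R^2 equals beta^2.
    for beta in (0.10, 0.20, 0.30):
        f2 = f2_from_beta(beta, r2_full=beta ** 2)
        print(f"beta={beta:.2f}  f2={f2:.3f}  N~{approx_n_single_predictor(f2)}")
```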

4. Discussion

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| R² | Variance explained |
| BMI | Body mass index |


| Beta (Standardized) | Effect Size (f²) | Approximate Required Sample Size |
|---|---|---|
| 0.10 | 0.011 | 783 |
| 0.20 | 0.042 | 160 |
| 0.30 | 0.097 | 95 |
| 0.40 | 0.267 | 60 |
| 0.50 | 0.500 | 45 |

| Method | Assumed Effect Size | Sample Size Estimate |
|---|---|---|
| Rule of Thumb | Medium | 90 |
| R² Method (R² = 0.02) | Small | 395 |
| R² Method (R² = 0.13) | Medium | 114 |
| R² Method (R² = 0.26) | Large | 63 |
| Beta Weight (β = 0.10) | Small | 783 |
| Beta Weight (β = 0.20) | Medium | 160 |
| Beta Weight (β = 0.50) | Large | 40–70 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Seabrook, J.A. Powering Nutrition Research: Practical Strategies for Sample Size in Multiple Regression. Nutrients 2025, 17, 2668. https://doi.org/10.3390/nu17162668
