A Semi-Supervised Transformer with a Curriculum Training Pipeline for Remote Sensing Image Semantic Segmentation

Highlights

- A new curriculum-based pipeline effectively reduces overfitting and stabilizes the training of ViT models for semantic segmentation with very few labeled remote sensing images.

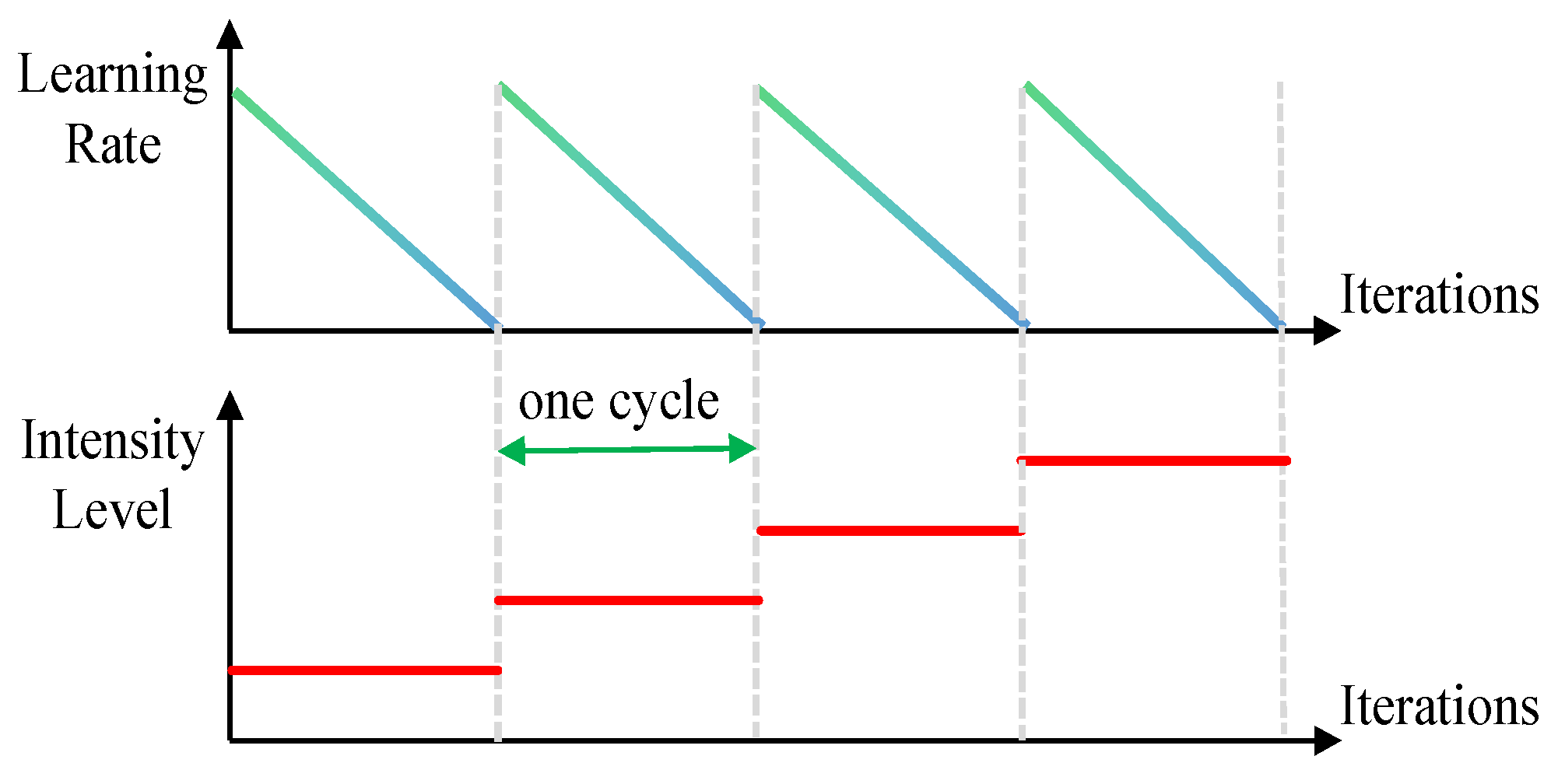

- A progressive augmentation strategy combining geometric and intensity adaptations further improves the model’s generalization ability.

- This approach allows for the training of ViT-based segmentation models with minimal annotation effort, facilitating more data-efficient remote sensing analysis.

- The cross-domain version of the pipeline successfully uses labeled data from a source domain to boost performance on a target domain with limited labels.

Abstract

1. Introduction

- (1)

- We propose a CSSP, a stable, curriculum-based training paradigm for large ViT-based models for RSI SSSS. Through a structured four-stage pipeline, the CSSP systematically addresses the critical issues of overfitting and training instability under limited annotations.

- (2)

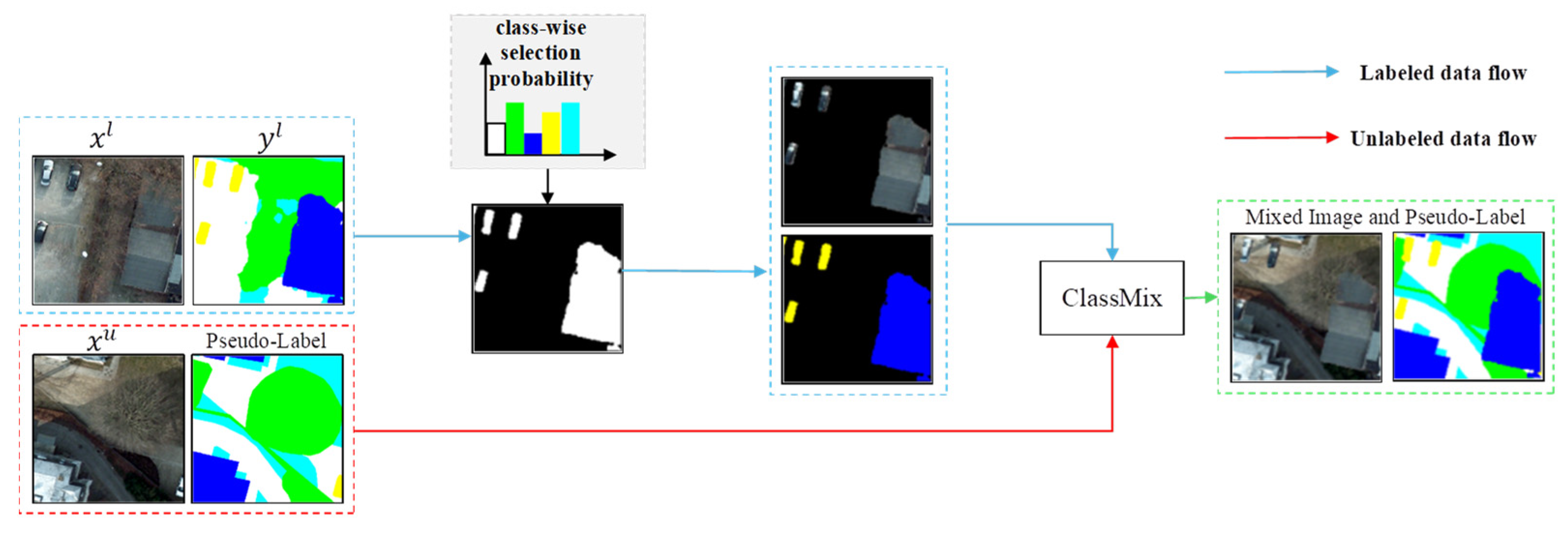

- Within the CSSP framework, we design and validate two key innovative components, the DA-ClassMix augmentation and the PIA strategy, which regulate data complexity progression from geometric-semantic and photometric perspectives, respectively.

- (3)

- By extending the CSSP’s core design, we propose a CDCSSP for the SSDA task. Experiments demonstrate that the CDCSSP achieves significant performance improvements over state-of-the-art (SoTA) UDA and SSDA methods, confirming the effectiveness and generalizability of our proposed training paradigm.

2. Related Works

2.1. Methods for RSI SSSS

2.2. ViT-Based Methods for RSI SSSS

2.3. Curriculum Learning for RSI SSSS

2.4. Domain Adaptive Semantic Segmentation

3. Method

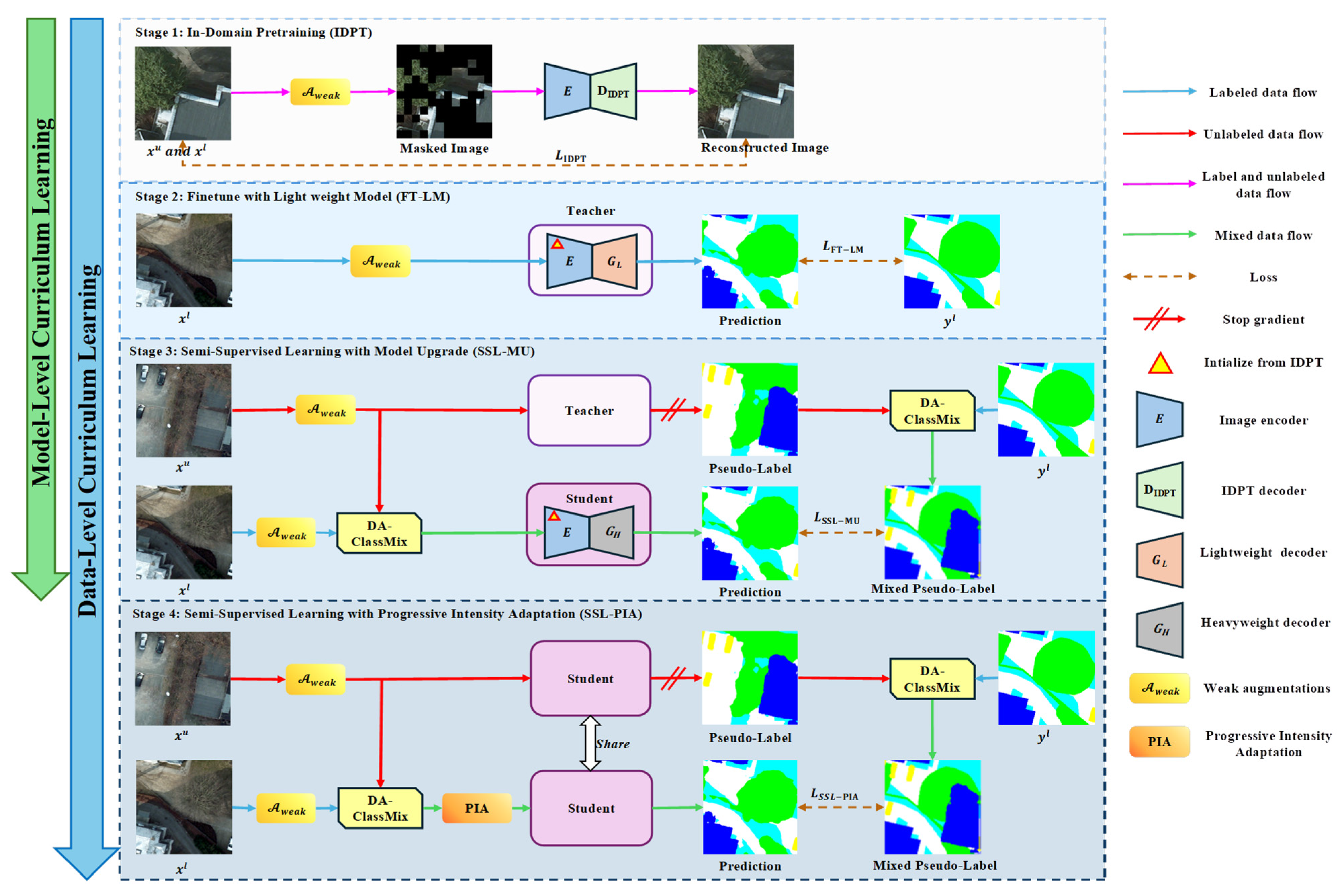

3.1. CSSP for RSI SSSS

3.1.1. Overall Framework

3.1.2. In-Domain Pretraining and Finetuning

3.1.3. SSL with Model Upgrade

3.1.4. SSL with Progressive Intensity Adaptation

3.2. Extension to SSDA: The CDCSSP Pipeline

4. Experiments and Analysis

4.1. Experimental Setup

4.1.1. Datasets

- (1) ISPRS Potsdam Dataset [85]: This benchmark comprises 38 high-resolution aerial images with a ground sampling distance (GSD) of 5 cm. We use 24 images for training and 14 for validation, utilizing only the RGB bands. The annotations follow a six-category scheme (impervious surfaces, buildings, low vegetation, trees, cars, and clutter), though the clutter category is excluded in both training and evaluation. All images are cropped into non-overlapping 512 × 512 patches, yielding 2904 training and 1694 validation patches.

- (2) ISPRS Vaihingen Dataset [85]: This dataset includes 16 training and 17 validation images with a GSD of 9 cm. Each image contains three spectral bands: near-infrared, red, and green. It uses the same six-class labeling scheme as Potsdam, and similarly, the clutter category is omitted in our experiments. Images are divided into non-overlapping 512 × 512 patches, resulting in 210 training and 249 validation samples.

- (3) Inria Aerial Image Labeling Dataset [86]: This dataset addresses a binary segmentation task, distinguishing between “building” and “not building” classes. A labeled subset of 180 images is used in our experiments. Each image has a size of 5000 × 5000 pixels and a GSD of 0.3 m. The subset covers five geographic regions: Austin, Chicago, Kitsap County, western Tyrol, and Vienna. Following the data partition in [44], we use images with IDs 1–5 from each region for validation and the remaining images for training, resulting in 155 training and 25 validation images. All images are cropped into non-overlapping 512 × 512 patches, yielding 12,555 training and 2025 validation samples.
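The patch counts quoted above follow from simple tiling arithmetic. The sketch below reproduces them under two assumptions that are not stated in the text: partial tiles at the image border are discarded, and each Potsdam tile is 6000 × 6000 pixels. The helper name `patches_per_image` is hypothetical. Vaihingen is left out because its images vary in size.

```python
def patches_per_image(height: int, width: int, patch: int = 512) -> int:
    """Count full, non-overlapping patch x patch tiles in one image.

    Assumption: border remainders smaller than `patch` are dropped.
    """
    return (height // patch) * (width // patch)


# Inria: 5000 x 5000 pixels per image (stated in the text) -> 9 * 9 tiles.
inria = patches_per_image(5000, 5000)

# Potsdam: 6000 x 6000 pixels per image (assumed, not stated) -> 11 * 11 tiles.
potsdam = patches_per_image(6000, 6000)

# Multiply by the number of training / validation images given in the text.
counts = {
    "potsdam_train": 24 * potsdam,
    "potsdam_val": 14 * potsdam,
    "inria_train": 155 * inria,
    "inria_val": 25 * inria,
}

for split, n in counts.items():
    print(f"{split}: {n}")
```

Under these assumptions the computed totals agree with the figures reported for Potsdam and Inria.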

4.1.2. Implementation Details

4.1.3. Metrics

4.2. Comprehensive Evaluation of CSSP

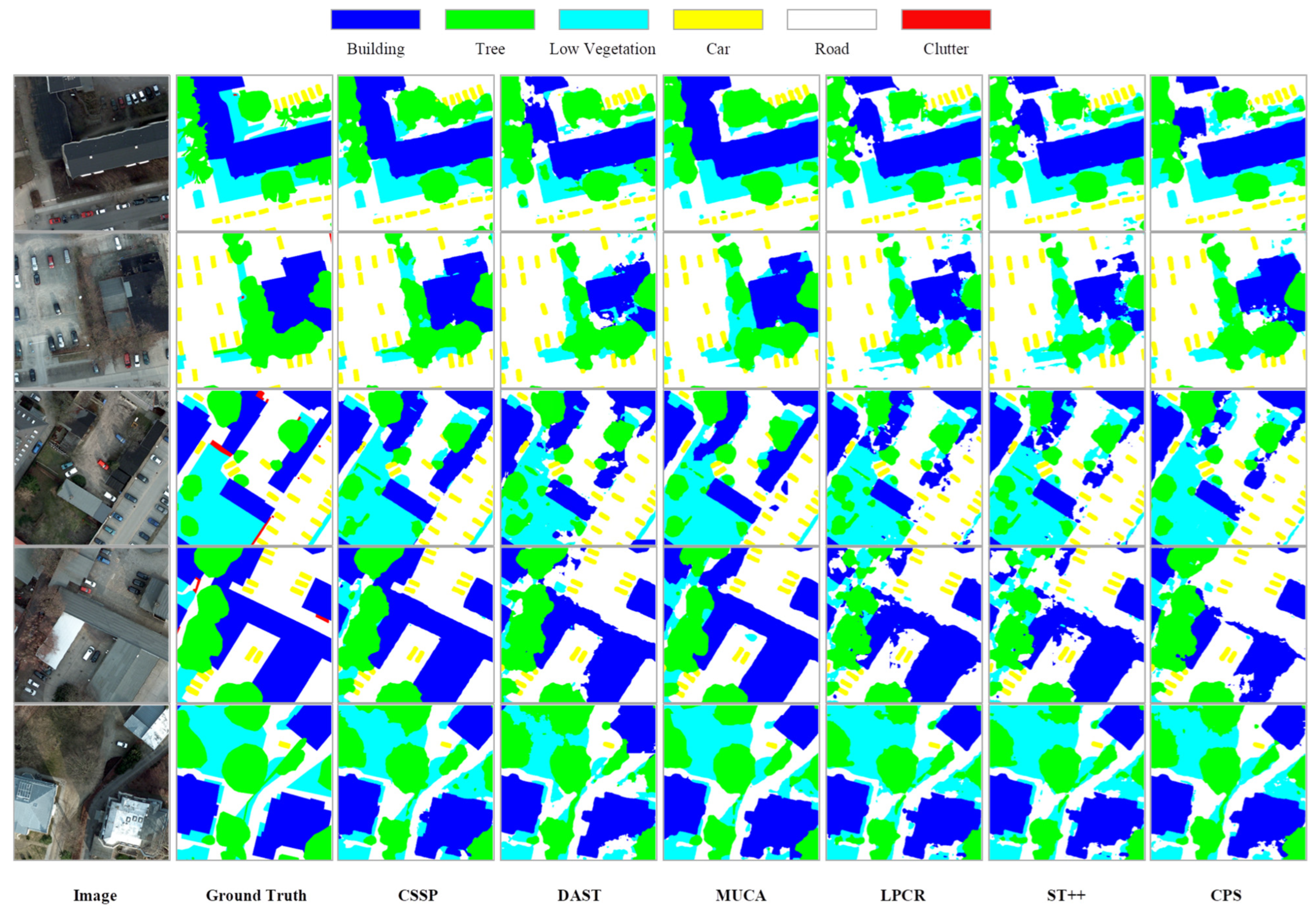

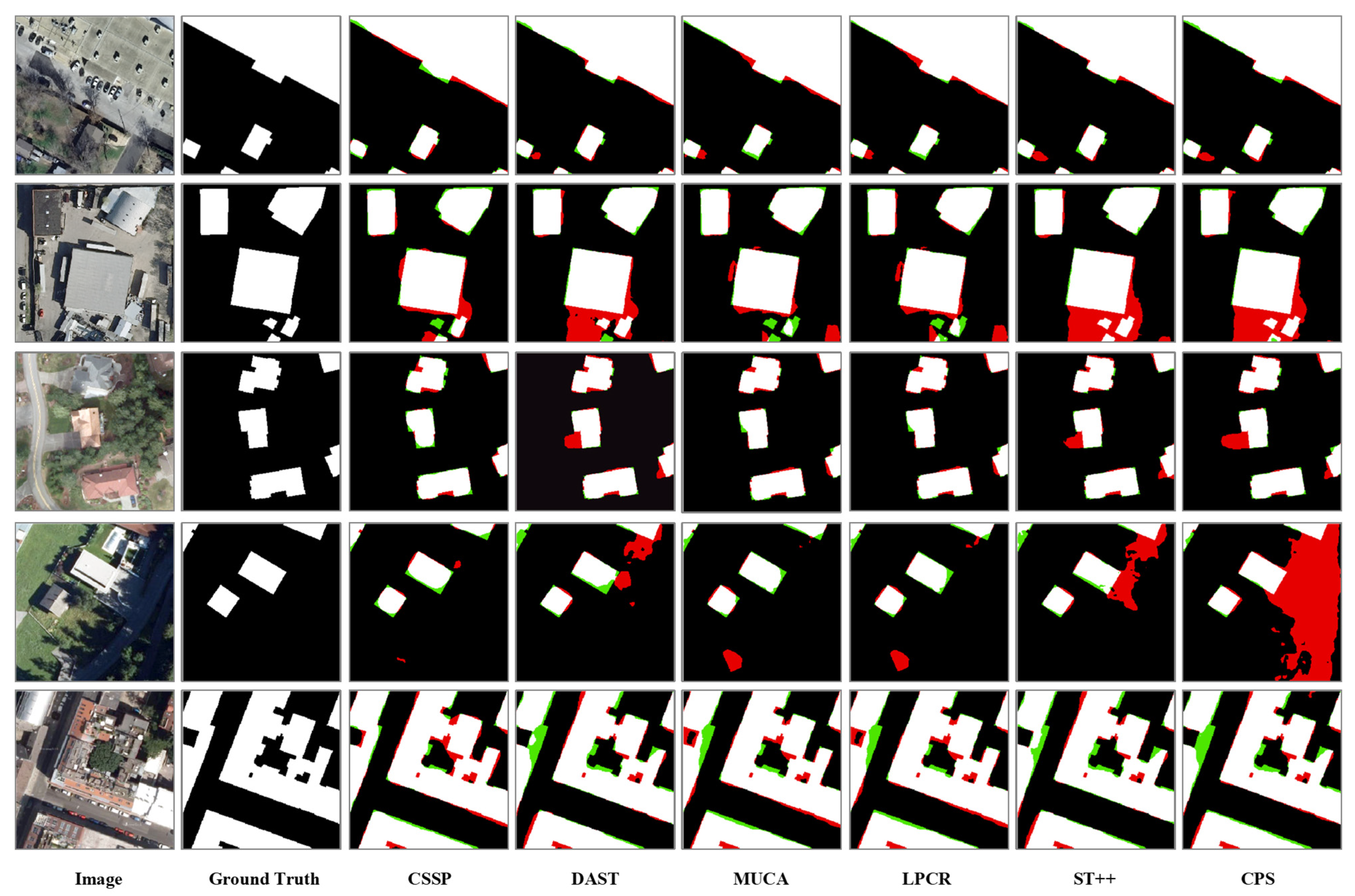

4.3. Comparison with SoTA Methods

4.4. Ablation Studies on CSSP

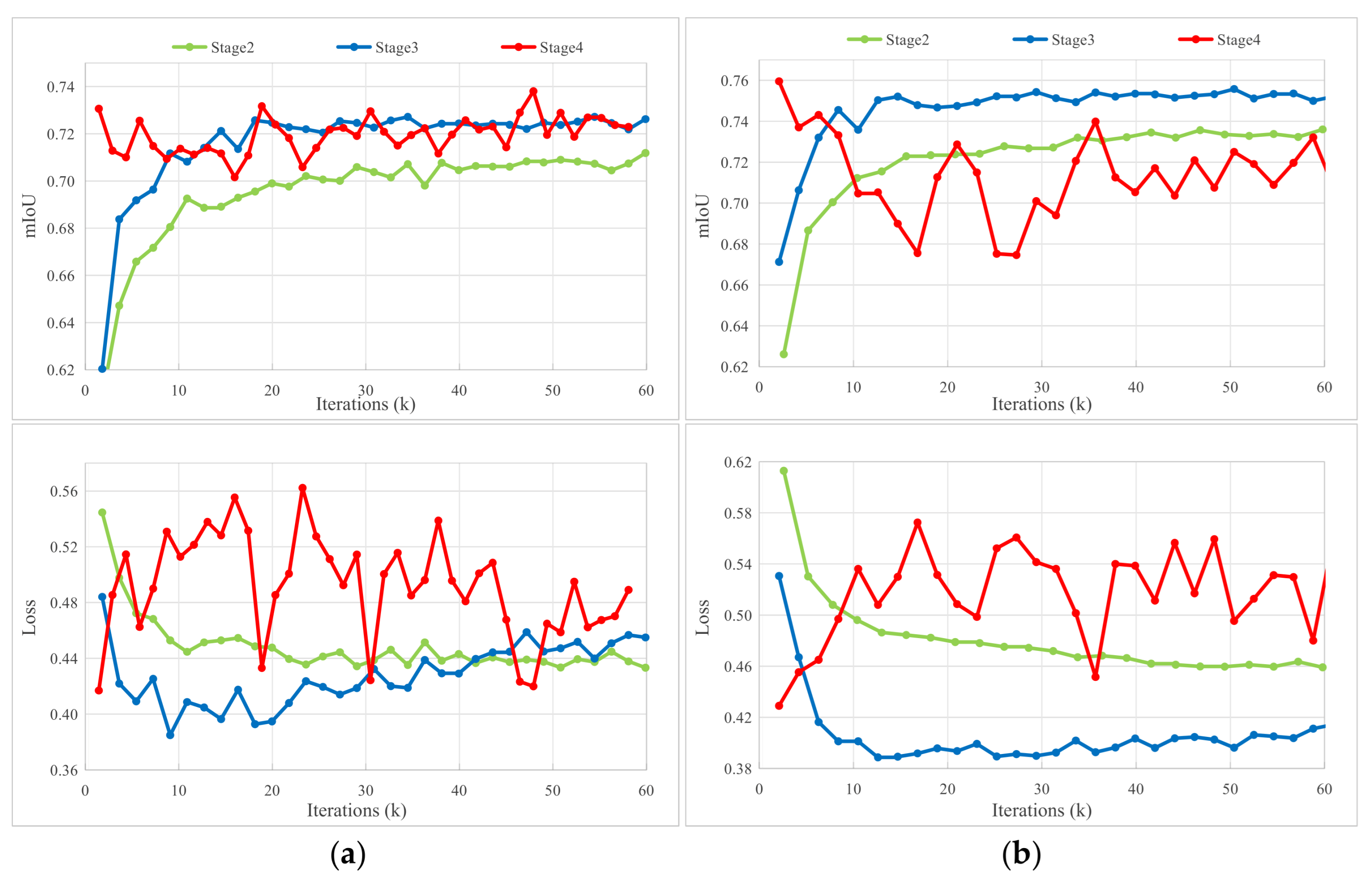

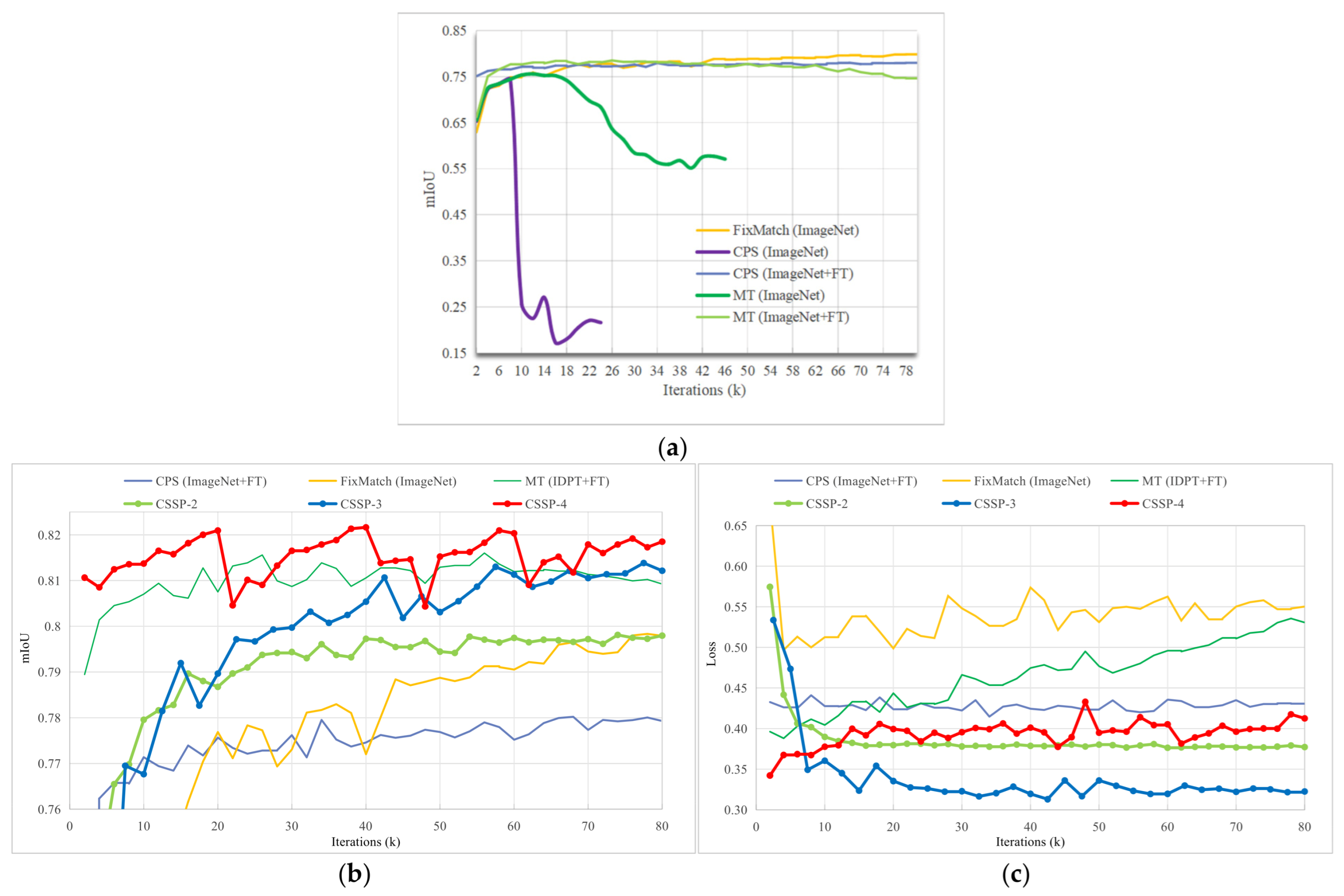

4.4.1. Revealing the Spectrum of Stability Needs

4.4.2. The Role of IDPT

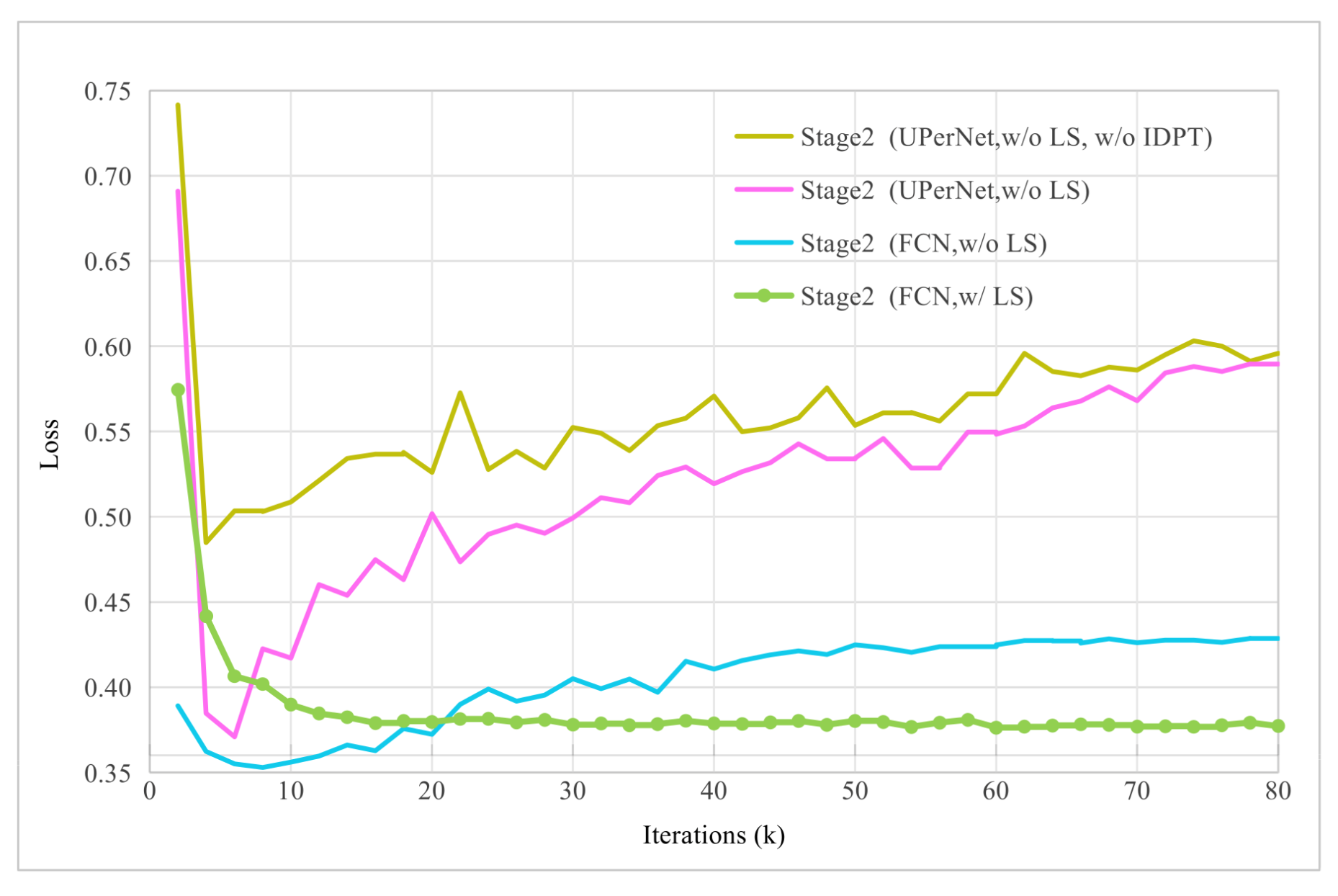

4.4.3. The Role of the Lightweight Decoder and Label Smoothing in FT-LM

4.4.4. Effects of Decoder Upgrade and DA-ClassMix in SSL-MU

4.4.5. Evaluation of Pseudo-Label Error Mitigation Strategies

4.4.6. Impact of Teacher–Student Coupling in SSL-PIA

4.4.7. The Effect of the Curriculum-Based Pipeline

4.4.8. Training Efficiency and Cost Analysis

4.4.9. Model Generalizability Experiments

4.5. Experiments on SSDA

4.5.1. Results on Pot2Vai Experiment

- (1) Baseline comparison: First, although the SSDA baseline adds only 10 annotated target domain images to the UDA setup, it gains 16.90 percentage points in mIoU over the UDA baseline (44.26% → 61.16%). Second, the supervised baseline attains an mIoU of only 69.73%, indicating that when the RSI dataset is small (Vaihingen contains only 210 training patches), using ImageNet pretrained weights alone to initialize swinV2-B is insufficient for satisfactory supervised training performance. After incorporating the proposed CDPT, the supervised baseline rises to 73.55% (a gain of 3.82 percentage points), demonstrating the importance of CDPT for enhancing the feature representation of RSIs.

- (2) Effectiveness of the proposed method: The proposed CDCSSP-2 achieves an mIoU of 71.01%, already surpassing all compared methods. CDCSSP-3 further improves the result to 72.72% mIoU, a gain of 1.71 percentage points over stage 2, validating the efficacy of our curriculum learning pipeline in the SSDA setting. The final performance exceeds the supervised baseline (69.73%) by 2.99 percentage points, indicating that our approach effectively leverages the large amount of labeled data from the source domain and unlabeled data from the target domain.

- (3) Comparison between different types of methods: First, the selected SoTA UDA method, FLDA-Net, achieves 55.97% mIoU, which is 5.19 percentage points below the SSDA baseline even though that baseline uses only 10 target domain annotations. This gap points to fundamental limitations of UDA methodologies, whereas SSDA approaches with few-shot supervision offer a more practical alternative to complex UDA methods. Second, existing SSDA methods perform similarly to the SSDA baseline, at around 61% mIoU, which is considerably lower than the proposed CDCSSP-3. We attribute this to their reliance on ResNet-based backbones, which struggle to simultaneously learn and adapt to the significantly different band configurations of RGB and IRRG imagery, thus limiting effective knowledge transfer from the source to the target domain. Third, although our SSL method, the CSSP, outperforms the supervised baseline, it remains 2.28 percentage points behind CDCSSP-3 in mIoU. This confirms the CDCSSP’s ability to utilize source domain annotations effectively, leading to further improvement over the CSSP.
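The gaps quoted in the Pot2Vai discussion are differences between reported mIoU values, so they can be rechecked with a few subtractions. The sketch below uses only the mIoU figures given in the text; the dictionary keys and the helper `gain` are illustrative names, not identifiers from the paper.

```python
# Reported Pot2Vai mIoU values (percent), copied from the discussion above.
pot2vai = {
    "UDA baseline": 44.26,
    "FLDA-Net": 55.97,
    "SSDA baseline": 61.16,
    "Supervised baseline": 69.73,
    "CDCSSP-2": 71.01,
    "CDCSSP-3": 72.72,
    "Supervised baseline + CDPT": 73.55,
}


def gain(method: str, reference: str) -> float:
    """mIoU difference in percentage points, rounded to two decimals."""
    return round(pot2vai[method] - pot2vai[reference], 2)


comparisons = [
    ("SSDA baseline", "UDA baseline"),
    ("Supervised baseline + CDPT", "Supervised baseline"),
    ("CDCSSP-3", "CDCSSP-2"),
    ("CDCSSP-3", "Supervised baseline"),
    ("SSDA baseline", "FLDA-Net"),
]

for method, reference in comparisons:
    print(f"{method} vs {reference}: {gain(method, reference):+.2f} points")
```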

4.5.2. Results on Vai2Pot Experiment

- (1) Baseline comparison: First, the UDA baseline achieves only 32.31% mIoU, which is considerably lower than the corresponding baseline in the Pot2Vai setting (44.26%). This suggests that a larger source domain dataset contributes positively to the model’s generalization capability in the target domain. Second, the SSDA baseline outperforms the UDA baseline by 34.10 percentage points in mIoU (reaching 66.41%), demonstrating that even a small number of target domain annotations can substantially enhance model performance. Third, unlike the Pot2Vai scenario, the fully supervised baseline achieves a high mIoU of 80.06%, which can be attributed to the large scale of the Potsdam dataset (2904 training patches).

- (2) Effectiveness of the proposed method: Our CDCSSP method improves the mIoU from 73.63% at stage 2 to 75.63% at stage 3, validating the continuous optimization capability of the curriculum learning strategy. The final performance exceeds the SSDA baseline by 9.22 percentage points, although a gap of 4.43 percentage points remains compared to the supervised baseline.

- (3) Comparison between different types of methods: First, similarly to the Pot2Vai case, UDA methods perform significantly worse than SSDA methods, further confirming that SSDA has greater research potential and practical value than UDA. Second, existing SoTA SSDA methods fail to surpass even the SSDA baseline. This can be attributed to the superior cross-domain feature representation capacity of the baseline’s ViT-based backbone, a capability that CNN-based architectures typically lack. Third, our proposed CSSP method significantly outperforms all existing SoTA SSDA methods, even though, unlike those methods, it has no access to labeled source domain data. However, CDCSSP-3 surpasses the CSSP by only 0.12 percentage points in mIoU. A plausible explanation for this marginal gain is the limited volume of source domain data (only 210 training patches in Vaihingen), which may restrict the additional contribution that domain adaptation can provide over the SSL approach.
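As a quick consistency check on the Vai2Pot numbers, the sketch below recomputes the stated gaps from the reported mIoU values. It also derives the CSSP score implied by the 0.12-point margin. That derived value is an inference from the text, not a separately reported figure. All variable names are illustrative.

```python
# Reported Vai2Pot mIoU values (percent), copied from the discussion above.
vai2pot = {
    "UDA baseline": 32.31,
    "SSDA baseline": 66.41,
    "CDCSSP-2": 73.63,
    "CDCSSP-3": 75.63,
    "Supervised baseline": 80.06,
}

# Differences in percentage points, rounded to two decimals.
ssda_over_uda = round(vai2pot["SSDA baseline"] - vai2pot["UDA baseline"], 2)
stage3_over_stage2 = round(vai2pot["CDCSSP-3"] - vai2pot["CDCSSP-2"], 2)
cdcssp_over_ssda = round(vai2pot["CDCSSP-3"] - vai2pot["SSDA baseline"], 2)
gap_to_supervised = round(vai2pot["Supervised baseline"] - vai2pot["CDCSSP-3"], 2)

# Derived, not reported: CSSP mIoU implied by "CDCSSP-3 surpasses CSSP by 0.12".
implied_cssp = round(vai2pot["CDCSSP-3"] - 0.12, 2)

print("SSDA baseline over UDA baseline:", ssda_over_uda)
print("Stage 3 over stage 2:", stage3_over_stage2)
print("CDCSSP-3 over SSDA baseline:", cdcssp_over_ssda)
print("Remaining gap to supervised baseline:", gap_to_supervised)
print("Implied CSSP mIoU:", implied_cssp)
```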

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Ablation Study on PIA in the SSDA

Appendix A.1. Experiment and Results

Appendix A.2. Discussion and Conclusion

References

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV); IEEE: New York, NY, USA, 2021; pp. 9992–10002.

- Ouali, Y.; Hudelot, C.; Tami, M. An Overview of Deep Semi-Supervised Learning. arXiv 2020, arXiv:2006.05278.

- Chen, X.; Yuan, Y.; Zeng, G.; Wang, J. Semi-Supervised Semantic Segmentation with Cross Pseudo Supervision. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); IEEE: New York, NY, USA, 2021; pp. 2613–2622.

- Tarvainen, A.; Valpola, H. Mean Teachers Are Better Role Models: Weight-Averaged Consistency Targets Improve Semi-Supervised Deep Learning Results. In Proceedings of the 31st Conference on Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 1195–1204.

- Liu, Z.; Hu, H.; Lin, Y.; Yao, Z.; Xie, Z.; Wei, Y.; Ning, J.; Cao, Y.; Zhang, Z.; Dong, L.; et al. Swin Transformer V2: Scaling Up Capacity and Resolution. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); IEEE: New York, NY, USA, 2022; pp. 11999–12009.

- Xiao, T.; Liu, Y.; Zhou, B.; Jiang, Y.; Sun, J. Unified Perceptual Parsing for Scene Understanding. In Proceedings of the European Conference on Computer Vision (ECCV); Springer: Cham, Switzerland, 2018; pp. 418–434.

- French, G.; Laine, S.; Aila, T.; Mackiewicz, M.; Finlayson, G. Semi-Supervised Semantic Segmentation Needs Strong, Varied Perturbations. arXiv 2019, arXiv:1906.01916.

- Yuan, J.; Liu, Y.; Shen, C.; Wang, Z.; Li, H. A Simple Baseline for Semi-Supervised Semantic Segmentation with Strong Data Augmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision; IEEE: New York, NY, USA, 2021; pp. 8229–8238.

- Xu, M.; Wu, M.; Chen, K.; Zhang, C.; Guo, J. The Eyes of the Gods: A Survey of Unsupervised Domain Adaptation Methods Based on Remote Sensing Data. Remote Sens. 2022, 14, 4380.

- Chen, J.; Sun, B.; Wang, L.; Fang, B.; Chang, Y.; Li, Y.; Zhang, J.; Lyu, X.; Chen, G. Semi-Supervised Semantic Segmentation Framework with Pseudo Supervisions for Land-Use/Land-Cover Mapping in Coastal Areas. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102881.

- Lu, X.; Jiao, L.; Liu, F.; Yang, S.; Liu, X.; Feng, Z.; Li, L.; Chen, P. Simple and Efficient: A Semisupervised Learning Framework for Remote Sensing Image Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5543516.

- Lu, X.; Jiao, L.; Li, L.; Liu, F.; Liu, X.; Yang, S.; Feng, Z.; Chen, P. Weak-to-Strong Consistency Learning for Semisupervised Image Segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5510715.

- Li, J.; Sun, B.; Li, S.; Kang, X. Semisupervised Semantic Segmentation of Remote Sensing Images with Consistency Self-Training. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5615811.

- Miao, W.; Xu, Z.; Geng, J.; Jiang, W. ECAE: Edge-Aware Class Activation Enhancement for Semisupervised Remote Sensing Image Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5625014.

- Li, Y.; Yi, Z.; Wang, Y.; Zhang, L. Adaptive Context Transformer for Semisupervised Remote Sensing Image Segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5621714.

- Meng, Y.; Yuan, Z.; Yang, J.; Liu, P.; Yan, J.; Zhu, H.; Ma, Z.; Jiang, Z.; Zhang, Z.; Mi, X. Cross-Domain Land Cover Classification of Remote Sensing Images Based on Full-Level Domain Adaptation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 11434–11450.

- Chen, Y.; Zhang, G.; Cui, H.; Li, X.; Hou, S.; Ma, J.; Li, Z.; Li, H.; Wang, H. A Novel Weakly Supervised Semantic Segmentation Framework to Improve the Resolution of Land Cover Product. ISPRS J. Photogramm. Remote Sens. 2023, 196, 73–92.

- Li, J.; Liu, Y.; Zhang, S.; Yu, B.; Zhou, Z.; Liu, J. Dynamic Category Preference Learning: Tackling Long-Tailed Semi-Supervised Segmentation in Remote Sensing. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4709514.

- Hu, H.; Wei, F.; Hu, H.; Ye, Q.; Cui, J.; Wang, L. Semi-Supervised Semantic Segmentation via Adaptive Equalization Learning. In Advances in Neural Information Processing Systems 34 (NeurIPS 2021); Curran Associates Inc.: Red Hook, NY, USA, 2021; pp. 22106–22118.

- Fu, Y.; Wang, M.; Vivone, G.; Ding, Y.; Zhang, L. An Alternating Guidance with Cross-View Teacher–Student Framework for Remote Sensing Semi-Supervised Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4402012.

- Yang, L.; Qi, L.; Feng, L.; Zhang, W.; Shi, Y. Revisiting Weak-to-Strong Consistency in Semi-Supervised Semantic Segmentation. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); IEEE: New York, NY, USA, 2023; pp. 7236–7246.

- Lv, L.; Zhang, L. Advancing Data-Efficient Exploitation for Semi-Supervised Remote Sensing Images Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5619213.

- Wang, J.-X.; Chen, S.-B.; Ding, C.H.Q.; Tang, J.; Luo, B. Semi-Supervised Semantic Segmentation of Remote Sensing Images with Iterative Contrastive Network. IEEE Geosci. Remote Sens. Lett. 2022, 19, 2504005.

- Li, Z.; Pan, J.; Zhang, Z.; Wang, M.; Liu, L. MTCSNet: Mean Teachers Cross-Supervision Network for Semi-Supervised Detection. Remote Sens. 2023, 15, 2040.

- Cui, M.; Li, K.; Li, Y.; Kamuhanda, D.; Tessone, C.J. Semi-Supervised Semantic Segmentation of Remote Sensing Images Based on Dual Cross-Entropy Consistency. Entropy 2023, 25, 681.

- Xin, Y.; Fan, Z.; Qi, X.; Zhang, Y.; Li, X. Confidence-Weighted Dual-Teacher Networks with Biased Contrastive Learning for Semi-Supervised Semantic Segmentation in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5614416.

- Liu, R.; Luo, T.; Huang, S.; Wu, Y.; Jiang, Z.; Zhang, H. CrossMatch: Cross-View Matching for Semi-Supervised Remote Sensing Image Segmentation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5650515.

- Zhang, J.; Lin, L.; Fan, Z.; Wang, W.; Liu, J. S5Mars: Semi-Supervised Learning for Mars Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4600915.

- Wang, J.-X.; Chen, S.-B.; Ding, C.H.Q.; Tang, J.; Luo, B. RanPaste: Paste Consistency and Pseudo Label for Semisupervised Remote Sensing Image Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 2002916.

- Wang, J.; Ding, C.H.Q.; Chen, S.; He, C.; Luo, B. Semi-Supervised Remote Sensing Image Semantic Segmentation via Consistency Regularization and Average Update of Pseudo-Label. Remote Sens. 2020, 12, 3603.

- Hobley, B.; Arosio, R.; French, G.; Bremner, J.; Dolphin, T.; Mackiewicz, M. Semi-Supervised Segmentation for Coastal Monitoring Seagrass Using RPA Imagery. Remote Sens. 2021, 13, 1741.

- Zhang, B.; Zhang, Y.; Li, Y.; Wan, Y.; Guo, H.; Zheng, Z.; Yang, K. Semi-Supervised Deep Learning via Transformation Consistency Regularization for Remote Sensing Image Semantic Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 5782–5796.

- Yang, Z.; Yan, Z.; Diao, W.; Zhang, Q.; Kang, Y.; Li, J.; Li, X.; Sun, X. Label Propagation and Contrastive Regularization for Semisupervised Semantic Segmentation of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5609818.

- Xin, Y.; Fan, Z.; Qi, X.; Geng, Y.; Li, X. Enhancing Semi-Supervised Semantic Segmentation of Remote Sensing Images via Feature Perturbation-Based Consistency Regularization Methods. Sensors 2024, 24, 730.

- Zhang, M.; Gu, X.; Qi, J.; Zhang, Z.; Yang, H.; Xu, J.; Peng, C.; Li, H. CDEST: Class Distinguishability-Enhanced Self-Training Method for Adopting Pre-Trained Models to Downstream Remote Sensing Image Semantic. Remote Sens. 2024, 16, 1293.

- Sun, W.; Lei, Y.; Hong, D.; Hu, Z.; Li, Q.; Zhang, J. RSProtoSemiSeg: Semi-Supervised Semantic Segmentation of High Spatial Resolution Remote Sensing Images with Probabilistic Distribution Prototypes. ISPRS J. Photogramm. Remote Sens. 2025, 228, 771–784.

- Sohn, K.; Berthelot, D.; Li, C.-L.; Zhang, Z.; Carlini, N.; Cubuk, E.D.; Kurakin, A.; Zhang, H.; Raffel, C. FixMatch: Simplifying Semi-Supervised Learning with Consistency and Confidence. In Advances in Neural Information Processing Systems 33 (NeurIPS 2020); Curran Associates Inc.: Red Hook, NY, USA, 2020; pp. 596–608.

- He, Y.; Wang, J.; Liao, C.; Shan, B.; Zhou, X. ClassHyPer: ClassMix-Based Hybrid Perturbations for Deep Semi-Supervised Semantic Segmentation of Remote Sensing Imagery. Remote Sens. 2022, 14, 879.

- Xu, Y.; Yan, L.; Jiang, J. EI-HCR: An Efficient End-to-End Hybrid Consistency Regularization Algorithm for Semisupervised Remote Sensing Image Segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4405015.

- Lu, X.; Cao, G.; Gou, T. Semi-Supervised Landcover Classification with Adaptive Pixel-Rebalancing Self-Training. In Proceedings of the IGARSS 2022 - 2022 IEEE International Geoscience and Remote Sensing Symposium; IEEE: New York, NY, USA, 2022; pp. 4611–4614.

- Ouali, Y.; Hudelot, C.; Tami, M. Semi-Supervised Semantic Segmentation with Cross-Consistency Training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; IEEE: New York, NY, USA, 2020; pp. 12674–12684.

- Lu, Y.; Zhang, Y.; Cui, Z.; Long, W.; Chen, Z. Multi-Dimensional Manifolds Consistency Regularization for Semi-Supervised Remote Sensing Semantic Segmentation. Knowl.-Based Syst. 2024, 299, 112032.

- Li, Q.; Shi, Y.; Zhu, X.X. Semi-Supervised Building Footprint Generation with Feature and Output Consistency Training. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5623217.

- Olsson, V.; Tranheden, W.; Pinto, J.; Svensson, L. ClassMix: Segmentation-Based Data Augmentation for Semi-Supervised Learning. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision; IEEE: New York, NY, USA, 2021; pp. 1369–1378.

- Zhou, L.; Duan, K.; Dai, J.; Ye, Y. Advancing Perturbation Space Expansion Based on Information Fusion for Semi-Supervised Remote Sensing Image Semantic Segmentation. Inf. Fusion 2025, 117, 102830.

- Luo, Y.; Sun, B.; Li, S.; Hu, Y. Hierarchical Augmentation and Region-Aware Contrastive Learning for Semi-Supervised Semantic Segmentation of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4401311.

- Kang, J.; Wang, Z.; Zhu, R.; Sun, X.; Fernandez-Beltran, R.; Plaza, A. PiCoCo: Pixelwise Contrast and Consistency Learning for Semisupervised Building Footprint Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10548–10559.

- Zhang, L.; Lu, W.; Zhang, J.; Wang, H. A Semisupervised Convolution Neural Network for Partial Unlabeled Remote-Sensing Image Segmentation. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6507305.

- Chen, J.; Chen, G.; Zhang, L.; Huang, M.; Luo, J.; Ding, M.; Ge, Y. Category-Sensitive Semi-Supervised Semantic Segmentation Framework for Land-Use/Land-Cover Mapping with Optical Remote Sensing Images. Int. J. Appl. Earth Obs. Geoinf. 2024, 134, 104160.

- Wang, S.; Su, C.; Zhang, X. A Class-Aware Semi-Supervised Framework for Semantic Segmentation of High-Resolution Remote Sensing Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 22372–22391.

- Patel, C.; Sharma, S.; Pasquarella, V.J.; Gulshan, V. Evaluating Self and Semi-Supervised Methods for Remote Sensing Segmentation Tasks. arXiv 2021, arXiv:2111.10079.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV); Springer: Cham, Switzerland, 2018; pp. 801–818.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); IEEE: New York, NY, USA, 2016; pp. 770–778.

- Huang, W.; Shi, Y.; Xiong, Z.; Zhu, X.X. Decouple and Weight Semi-Supervised Semantic Segmentation of Remote Sensing Images. ISPRS J. Photogramm. Remote Sens. 2024, 212, 13–26.

- Wang, S.; Sun, X.; Chen, C.; Hong, D.; Han, J. Semi-Supervised Semantic Segmentation for Remote Sensing Images via Multiscale Uncertainty Consistency and Cross-Teacher–Student Attention. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5517115.

- Jin, J.; Lu, W.; Yu, H.; Rong, X.; Sun, X.; Wu, Y. Dynamic and Adaptive Self-Training for Semi-Supervised Remote Sensing Image Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5639814.

- Lu, W.; Jin, J.; Sun, X.; Fu, K. Semi-Supervised Semantic Generative Networks for Remote Sensing Image Segmentation. In Proceedings of the IGARSS 2023 - 2023 IEEE International Geoscience and Remote Sensing Symposium; IEEE: New York, NY, USA, 2023; pp. 6386–6389.

- Jiang, Y.; Lu, W.; Guo, Z. Multi-Stage Semi-Supervised Transformer for Remote Sensing Semantic Segmentation with Various Data Augmentation. In Proceedings of the IGARSS 2023 - 2023 IEEE International Geoscience and Remote Sensing Symposium; IEEE: New York, NY, USA, 2023; pp. 6924–6927.

- Soviany, P.; Ionescu, R.T.; Rota, P.; Sebe, N. Curriculum Learning: A Survey. Int. J. Comput. Vis. 2022, 130, 1526–1565.

- Yang, L.; Zhuo, W.; Qi, L.; Shi, Y.; Gao, Y. ST++: Make Self-Training Work Better for Semi-Supervised Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; IEEE: New York, NY, USA, 2022; pp. 4268–4277.

- Zhang, B.; Wang, Y.; Hou, W.; Wu, H.; Wang, J.; Okumura, M.; Shinozaki, T. FlexMatch: Boosting Semi-Supervised Learning with Curriculum Pseudo Labeling. In Advances in Neural Information Processing Systems 34 (NeurIPS 2021); Curran Associates Inc.: Red Hook, NY, USA, 2021; pp. 18408–18419.

- Xue, X.; Zhu, H.; Li, X.; Wang, J.; Qu, L.; Hou, B. EGPO: Enhanced Guidance and Pseudo-Label Optimization for Semi-Supervised Semantic Segmentation of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5651913.

- Qi, X.; Mao, Y.; Zhang, Y.; Deng, Y.; Wei, H.; Wang, L. PICS: Paradigms Integration and Contrastive Selection for Semisupervised Remote Sensing Images Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5602119.

- Nambiar, K.G.; Morgenshtern, V.I.; Hochreuther, P.; Seehaus, T.; Braun, M.H. A Self-Trained Model for Cloud, Shadow and Snow Detection in Sentinel-2 Images of Snow- and Ice-Covered Regions. Remote Sens. 2022, 14, 1825.

- Hu, Q.; Wu, Y.; Li, Y. Semi-Supervised Semantic Labeling of Remote Sensing Images with Improved Image-Level Selection Retraining. Alex. Eng. J. 2024, 94, 235–247.

- Laine, S.; Aila, T. Temporal Ensembling for Semi-Supervised Learning. arXiv 2017, arXiv:1610.02242.

- Xie, Q.; Dai, Z.; Hovy, E.; Luong, M.T.; Le, Q.V. Unsupervised Data Augmentation for Consistency Training. In Advances in Neural Information Processing Systems 33 (NeurIPS 2020); Curran Associates Inc.: Red Hook, NY, USA, 2020; pp. 6256–6268.

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV); IEEE: New York, NY, USA, 2017; pp. 2242–2251.

- Hoffman, J.; Tzeng, E.; Park, T.; Zhu, J.-Y.; Isola, P.; Saenko, K.; Efros, A.; Darrell, T. CyCADA: Cycle-Consistent Adversarial Domain Adaptation. In Proceedings of the International Conference on Machine Learning; PMLR: Cambridge, MA, USA, 2018; pp. 1989–1998.

- Tsai, Y.-H.; Hung, W.-C.; Schulter, S.; Sohn, K.; Yang, M.-H.; Chandraker, M. Learning to Adapt Structured Output Space for Semantic Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition; IEEE: New York, NY, USA, 2018; pp. 7472–7481.

- Luo, Y.; Zheng, L.; Guan, T.; Yu, J.; Yang, Y. Taking a Closer Look at Domain Shift: Category-Level Adversaries for Semantics Consistent Domain Adaptation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); IEEE: New York, NY, USA, 2019; pp. 2502–2511.

- Chang, H.; Chen, K.; Wu, M. A Two-Stage Cascading Method Based on Finetuning in Semi-Supervised Domain Adaptation Semantic Segmentation. In Proceedings of the 2022 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC); IEEE: New York, NY, USA, 2022; pp. 897–902.

- Gao, Y.; Wang, Z.; Zhang, Y. Delve into Source and Target Collaboration in Semi-Supervised Domain Adaptation for Semantic Segmentation. In Proceedings of the 2024 IEEE International Conference on Multimedia and Expo (ICME); IEEE: New York, NY, USA, 2024; pp. 1–6.

- Chen, S.; Jia, X.; He, J.; Shi, Y.; Liu, J. Semi-Supervised Domain Adaptation Based on Dual-Level Domain Mixing for Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; IEEE: New York, NY, USA, 2021; pp. 11018–11027.

- Wang, Z.; Wei, Y.; Feris, R.; Xiong, J.; Hwu, W.-M.; Huang, T.S.; Shi, H. Alleviating Semantic-Level Shift: A Semi-Supervised Domain Adaptation Method for Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops; IEEE: New York, NY, USA, 2020; pp. 936–937.

- Alonso, I.; Sabater, A.; Ferstl, D.; Montesano, L.; Murillo, A.C. Semi-Supervised Semantic Segmentation with Pixel-Level Contrastive Learning from a Class-Wise Memory Bank. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV); IEEE: New York, NY, USA, 2021; pp. 8199–8208.

- Gao, K.; Yu, A.; You, X.; Qiu, C.; Liu, B.; Zhang, F. Cross-Domain Multi-Prototypes with Contradictory Structure Learning for Semi-Supervised Domain Adaptation Segmentation of Remote Sensing Images. Remote Sens. 2023, 15, 3398.

- Yang, Z.; Yan, Z.; Diao, W.; Ma, Y.; Li, X.; Sun, X. Active Bidirectional Self-Training Network for Cross-Domain Segmentation in Remote-Sensing Images. Remote Sens. 2024, 16, 2507.

- Xie, Z.; Zhang, Z.; Cao, Y.; Lin, Y.; Bao, J.; Yao, Z.; Dai, Q.; Hu, H. SimMIM: A Simple Framework for Masked Image Modeling. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); IEEE: New York, NY, USA, 2022; pp. 9643–9653.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; IEEE: New York, NY, USA, 2016; pp. 2818–2826.

- Xie, Q.; Luong, M.-T.; Hovy, E.; Le, Q.V. Self-Training with Noisy Student Improves ImageNet Classification. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); IEEE: New York, NY, USA, 2020; pp. 10684–10695.

- Zhu, Y.; Zhang, Z.; Wu, C.; Zhang, Z.; He, T.; Zhang, H.; Manmatha, R.; Li, M.; Smola, A. Improving Semantic Segmentation via Efficient Self-Training. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 1589–1602.

- Rottensteiner, F.; Sohn, G.; Jung, J.; Gerke, M.; Baillard, C.; Benitez, S.; Breitkopf, U. The ISPRS Benchmark on Urban Object Classification and 3D Building Reconstruction. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, I–3, 293–298.

- Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Can Semantic Labeling Methods Generalize to Any City? The Inria Aerial Image Labeling Benchmark. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS); IEEE: New York, NY, USA, 2017; pp. 3226–3229.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition; IEEE: New York, NY, USA, 2009; Volume 20, pp. 248–255.

- Bastani, F.; Wolters, P.; Gupta, R.; Ferdinando, J.; Kembhavi, A. SatlasPretrain: A Large-Scale Dataset for Remote Sensing Image Understanding. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV); IEEE: New York, NY, USA, 2023; pp. 16726–16736.

- Wang, F.; Wang, H.; Wang, D.; Guo, Z.; Zhong, Z.; Lan, L.; Yang, W.; Zhang, J. Harnessing Massive Satellite Imagery with Efficient Masked Image Modeling. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV); IEEE: New York, NY, USA, 2025; pp. 6935–6947.

| Method | Core Mechanism | Derived Works |
|---|---|---|
| MT [5] | Updates teacher via student’s EMA to stabilize pseudo-labels. | [29,30,31,32,33,34,35,36,37] |
| FixMatch [38] | Enforces consistency between weakly and strongly augmented views of the same image, sharing parameters between the teacher and student. | [12,13] |
| CPS [4] | Uses disagreement between two independent branches for mutual supervision. | [39,40,41] |
| CCT [42] | Uses a shared encoder with independent decoders; injects feature perturbations into student decoders. | [11,43,44] |
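
The EMA teacher update listed for MT in the table above can be illustrated with a minimal sketch. It assumes the weights are held in a plain dictionary of floats and uses a hypothetical decay value; neither the data layout nor the decay is taken from the paper, and a real model would apply the same rule to every tensor.

```python
def ema_update(teacher, student, decay=0.99):
    """Move each teacher weight toward the student weight by an exponential moving average.

    `decay` is a hypothetical value chosen for illustration only.
    """
    return {
        name: decay * teacher[name] + (1.0 - decay) * student[name]
        for name in teacher
    }


# Toy weights (illustrative values, not from the paper).
teacher = {"w": 1.0, "b": 0.0}
student = {"w": 0.0, "b": 1.0}

# One update step: the teacher moves 10% of the way toward the student.
teacher = ema_update(teacher, student, decay=0.9)
print(teacher)
```

A decay close to 1 makes the teacher change slowly, which is the property that MT relies on to keep pseudo-labels stable between iterations.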

| Type | Operation | Description |
|---|---|---|
| Weak Augmentations | Random Rotate | Rotates the image by 90 degrees with a probability of 0.5 |
| | Random Flip | Flips the image horizontally or vertically with a probability of 0.5 |
| Strong Geometrical Augmentation | DA-ClassMix | Mixes randomly selected regions from a labeled image with an unlabeled image |
| Strong Intensity-based Augmentations | Gaussian Blur | Blurs images with a Gaussian kernel of size 3 |
| | Brightness | Adjusts the brightness of images by [0.9, 1.1] |
| | Hue | Adjusts the hue of images by [0, 0.25] |
| | Saturation | Adjusts the saturation of images by [0.7, 2.0] |
| | Contrast | Adjusts the contrast of images by [0.5, 1.1] |
| | Identity | Keeps the image unchanged |
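
The two weak augmentations in the table above can be sketched as follows. This is a minimal illustration under stated assumptions: images and label masks are single-channel 2D Python lists, the rotation is clockwise, and the horizontal and vertical flips are chosen with equal chance. None of these details are specified in the table; only the 0.5 probabilities are.

```python
import random


def rotate90(grid):
    """Rotate a 2D list by 90 degrees clockwise (direction is an assumption)."""
    return [list(row) for row in zip(*grid[::-1])]


def hflip(grid):
    """Mirror a 2D list left to right."""
    return [row[::-1] for row in grid]


def vflip(grid):
    """Mirror a 2D list top to bottom."""
    return [list(row) for row in grid[::-1]]


def weak_augment(image, mask, rng):
    """Apply the same random rotation and flip to an image and its label mask."""
    if rng.random() < 0.5:  # Random Rotate, probability 0.5
        image, mask = rotate90(image), rotate90(mask)
    if rng.random() < 0.5:  # Random Flip, probability 0.5
        flip = rng.choice([hflip, vflip])
        image, mask = flip(image), flip(mask)
    return image, mask


rng = random.Random(0)
image = [[1, 2], [3, 4]]
mask = [[0, 0], [1, 1]]
aug_image, aug_mask = weak_augment(image, mask, rng)
print(aug_image, aug_mask)
```

Applying the identical geometric transform to the image and the mask keeps every pixel aligned with its label, which is why both are passed through the same branch.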

| Method | Label Ratio | Segmentation Model | Imp. Surf. IoU | Tree IoU | Building IoU | Car IoU | Low Veg. IoU | mIoU | mF1 |
|---|---|---|---|---|---|---|---|---|---|
| Sup | 1 | DeeplabV3+ | 86.00 | 75.14 | 92.32 | 82.06 | 74.69 | 82.04 | 89.99 |
| Sup | 1 | swinV2-UPerNet | 85.22 | 74.56 | 92.16 | 84.76 | 74.50 | 82.24 | 90.10 |
| Sup | 1/2 | swinV2-UPerNet | 84.09 | 73.50 | 91.33 | 84.07 | 73.29 | 81.26 | 89.50 |
| Sup baseline | 1/32 | swinV2-UPerNet | 80.44 | 70.26 | 86.29 | 80.16 | 69.56 | 77.34 | 87.07 |
| CSSP (Ours) | 1/32 | swinV2-UPerNet | 85.22 | 74.71 | 91.53 | 83.94 | 75.41 | 82.16 | 90.08 |
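
The mIoU column in the table above is consistent with an unweighted mean of the five per-category IoU values. The short check below recomputes it for two rows; the assumption that mIoU is a plain average over exactly these five categories is inferred from the numbers, and the helper name `mean_iou` is illustrative.

```python
def mean_iou(per_class_iou):
    """Unweighted mean of per-category IoU scores (in percent)."""
    return sum(per_class_iou) / len(per_class_iou)


# Per-category IoU copied from the table above, in the order
# Imp. Surf., Tree, Building, Car, Low Veg.
rows = {
    "Sup baseline (1/32)": [80.44, 70.26, 86.29, 80.16, 69.56],
    "CSSP (1/32)": [85.22, 74.71, 91.53, 83.94, 75.41],
}
reported_miou = {"Sup baseline (1/32)": 77.34, "CSSP (1/32)": 82.16}

for name, ious in rows.items():
    computed = mean_iou(ious)
    # Table entries are rounded to two decimals, so allow a small tolerance.
    assert abs(computed - reported_miou[name]) < 0.01
    print(f"{name}: computed mIoU = {computed:.2f}")
```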

| Method | Label Ratio | Segmentation Model | Imp. Surf. IoU | Tree IoU | Building IoU | Car IoU | Low Veg. IoU | mIoU | mF1 |
|---|---|---|---|---|---|---|---|---|---|
| Sup | 1 | DeeplabV3+ | 81.18 | 75.75 | 88.17 | 68.02 | 64.38 | 75.50 | 85.71 |
| Sup | 1 | swinV2-UPerNet | 80.40 | 75.98 | 86.38 | 66.55 | 63.54 | 74.57 | 85.16 |
| Sup baseline | 1/4 | swinV2-UPerNet | 75.18 | 72.67 | 80.96 | 57.34 | 57.37 | 68.71 | 81.06 |
| CSSP (Ours) | 1/4 | swinV2-UPerNet | 82.65 | 77.23 | 88.72 | 63.12 | 66.09 | 75.56 | 85.73 |

| Method | Label Ratio | Segmentation Model | Building IoU |
|---|---|---|---|
| Sup | 1 | DeeplabV3+ | 75.47 |
| Sup | 1 | swinV2-UPerNet | 76.15 |
| Sup baseline | 1/10 | swinV2-UPerNet | 73.40 |
| CSSP (Ours) | 1/10 | swinV2-UPerNet | 76.03 |

| Method | Segmentation Model | Imp. Surf. IoU | Tree IoU | Building IoU | Car IoU | Low Veg. IoU | mIoU |
|---|---|---|---|---|---|---|---|
| Sup baseline 1 | DeeplabV3+ | 81.44 | 68.47 | 87.98 | 81.96 | 69.64 | 77.90 |
| Sup baseline 2 | SegFormer-B2 | 81.55 | 70.95 | 86.60 | 79.63 | 71.76 | 78.10 |
| Sup baseline 3 | swinV2-UPerNet | 80.44 | 70.26 | 86.29 | 80.16 | 69.56 | 77.34 |
| ST++ | DeeplabV3+ | 82.74 | 72.91 | 89.95 | 80.99 | 70.36 | 79.39 |
| ClassMix | DeeplabV3+ | 83.88 | 69.35 | 87.89 | 81.52 | 70.88 | 78.70 |
| LPCR | DeeplabV3+ | 82.53 | 72.27 | 89.52 | 82.18 | 71.34 | 79.57 |
| MT | DeeplabV3+ | 82.17 | 70.15 | 88.96 | 77.98 | 71.15 | 78.08 |
| CPS | DeeplabV3+ | 82.65 | 74.06 | 86.81 | 83.05 | 68.05 | 78.92 |
| DWL | SegFormer-B2 | 83.06 | 73.73 | 88.50 | 81.58 | 73.00 | 79.98 |
| MUCA | SegFormer-B2 | 83.41 | 74.43 | 88.50 | 82.27 | 73.47 | 80.42 |
| DAST | swinV2-UPerNet | 84.21 | 73.42 | 90.22 | 82.92 | 72.12 | 80.58 |
| CSSP (Ours) | swinV2-UPerNet | 85.22 | 74.71 | 91.53 | 83.94 | 75.41 | 82.16 |

| Method | Segmentation Model | Building IoU |
|---|---|---|
| Sup baseline 1 | DeeplabV3+ | 73.80 |
| Sup baseline 2 | SegFormer-B2 | 73.68 |
| Sup baseline 3 | swinV2-UPerNet | 73.40 |
| ST++ | DeeplabV3+ | 74.84 |
| ClassMix | DeeplabV3+ | 74.66 |
| LPCR | DeeplabV3+ | 75.02 |
| MT | DeeplabV3+ | 74.21 |
| CPS | DeeplabV3+ | 74.48 |
| DWL | SegFormer-B2 | 74.78 |
| MUCA | SegFormer-B2 | 75.01 |
| DAST | swinV2-UPerNet | 75.57 |
| CSSP (Ours) | swinV2-UPerNet | 76.03 |

| Method | Initial Weights | Imp. Surf. IoU | Tree IoU | Building IoU | Car IoU | Low Veg. IoU | mIoU | mF1 |
|---|---|---|---|---|---|---|---|---|
| Sup baseline | ImageNet | 80.44 | 70.26 | 86.29 | 80.16 | 69.56 | 77.34 | 87.07 |
| CPS * | ImageNet | 80.66 | 71.62 | 86.86 | 81.31 | 69.65 | 78.02 | 87.51 |
| FixMatch | ImageNet | 82.33 | 72.36 | 90.29 | 82.96 | 71.22 | 79.83 | 88.61 |
| MT * | ImageNet | 78.77 | 67.59 | 86.92 | 73.07 | 66.32 | 74.53 | 85.20 |
| Sup | IDPT | 82.18 | 71.03 | 88.16 | 80.48 | 71.06 | 78.58 | 87.85 |
| MT * | IDPT | 84.25 | 74.75 | 91.33 | 83.65 | 74.02 | 81.60 | 89.73 |

| Segmentation Decoder | Label Smoothing | Imp. Surf. IoU | Tree IoU | Building IoU | Car IoU | Low Veg. IoU | mIoU | mF1 | Val Loss |
|---|---|---|---|---|---|---|---|---|---|
| UPerNet | No | 82.18 | 71.03 | 88.16 | 80.48 | 71.06 | 78.58 | 87.85 | 0.5018 |
| FCN | No | 83.07 | 72.89 | 89.04 | 78.88 | 72.68 | 79.31 | 88.33 | 0.4106 |
| FCN | Yes | 83.74 | 73.09 | 90.04 | 78.91 | 73.28 | 79.81 | 88.63 | 0.3768 |

| Decoder | DA-ClassMix | Imp. Surf. IoU | Tree IoU | Building IoU | Car IoU | Low Veg. IoU | mIoU | mF1 | Val Loss |
|---|---|---|---|---|---|---|---|---|---|
| FCN | No | 84.27 | 73.75 | 90.68 | 79.57 | 73.92 | 80.44 | 89.02 | 0.3711 |
| UPerNet | No | 84.72 | 74.38 | 90.86 | 81.19 | 74.43 | 81.12 | 89.44 | 0.3644 |
| UPerNet | Yes | 84.75 | 74.54 | 90.78 | 82.13 | 74.87 | 81.41 | 89.63 | 0.3292 |

| Pseudo-Label Error Mitigation: ConfFilt | Pseudo-Label Error Mitigation: ConfWeight | mF1 | Val Loss |
|---|---|---|---|
| No | No | 89.53 | 0.3379 |
| Threshold = 0.6 | No | 89.60 | 0.4144 |
| Threshold = 0.8 | No | 89.50 | 0.4313 |
| No | Yes | 89.48 | 0.3781 |
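
The two strategies compared in the table above are not defined in this part of the text. As a rough illustration only, the sketch below shows one common reading: confidence filtering (ConfFilt) discards pseudo-labels whose top class probability falls below a threshold, and confidence weighting (ConfWeight) scales each pixel's loss by that probability. The function names and the `ignore_index` value of 255 are assumptions, not the authors' implementation.

```python
def filter_pseudo_labels(probabilities, threshold=0.6, ignore_index=255):
    """Keep the argmax label only where the top probability reaches the threshold.

    `probabilities` holds one list of class probabilities per pixel.
    `ignore_index` is a hypothetical marker for pixels excluded from the loss.
    """
    labels = []
    for pixel_probs in probabilities:
        confidence = max(pixel_probs)
        label = pixel_probs.index(confidence)
        labels.append(label if confidence >= threshold else ignore_index)
    return labels


def confidence_weights(probabilities):
    """Per-pixel loss weights equal to the top class probability."""
    return [max(pixel_probs) for pixel_probs in probabilities]


# Two toy pixels with three classes each (illustrative values).
probs = [[0.7, 0.2, 0.1], [0.4, 0.35, 0.25]]
print(filter_pseudo_labels(probs, threshold=0.6))
print(confidence_weights(probs))
```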

| Parameter Relationship | Imp. Surf. IoU | Tree IoU | Building IoU | Car IoU | Low Veg. IoU | mIoU | mF1 |
|---|---|---|---|---|---|---|---|
| EMA update | 83.88 | 75.29 | 89.33 | 81.16 | 73.10 | 80.55 | 89.11 |
| Fixed teacher | 85.09 | 75.33 | 91.06 | 83.50 | 75.15 | 82.02 | 90.00 |
| Share parameters | 85.22 | 74.71 | 91.53 | 83.94 | 75.41 | 82.16 | 90.08 |

| Dataset | Label Ratio | Stage | Segmentation Model | mIoU |
|---|---|---|---|---|
| Potsdam | 1/32 | CSSP-2 | swinV2-FCN | 79.81 |
| | | CSSP-3 | swinV2-UPerNet | 81.41 |
| | | CSSP-4 | swinV2-UPerNet | 82.16 |
| Vaihingen | 1/4 | CSSP-2 | swinV2-FCN | 73.62 |
| | | CSSP-3 | swinV2-UPerNet | 75.23 |
| | | CSSP-4 | swinV2-UPerNet | 75.56 |
| Inria | 1/10 | CSSP-2 | swinV2-FCN | 75.55 |
| | | CSSP-3 | swinV2-UPerNet | 75.74 |
| | | CSSP-4 | swinV2-UPerNet | 76.03 |

| Method | IDPT Time | IDPT Mem | Finetuning Stage Time | Finetuning Stage Mem | SSL Stage Time | SSL Stage Mem | Total Time | mIoU |
|---|---|---|---|---|---|---|---|---|
| CPS | 0 | 0 | 10 | 11.5 | 24 | 22.1 | 34 | 78.02 |
| MT | 5 | 24.7 | 10 | 11.5 | 19.5 | 16.8 | 34.5 | 81.60 |
| FixMatch | 0 | 0 | 0 | 0 | 19 | 11.5 | 19 | 79.83 |
| CSSP (Ours) | 5 | 24.7 | 6 | 7.7 | 10 + 19.5 | 15.6 + 11.5 | 40.5 | 82.16 |

| Encoder | Decoder | FT-LM | SSL-MU | SSL-PIA | Best PIA Intensity |
|---|---|---|---|---|---|
| swinV2-B | FCN/UPerNet | 79.81 | 81.41 | 82.16 | 3 |
| ViT-B | Linear/DeeplabV3+ | 79.16 | 80.93 | 81.48 | 1 |

| Experiments | Settings | | | | |
|---|---|---|---|---|---|
| Pot2Vai | UDA | 2904 | 0 | 210 | 249 |
| | SSDA | 2904 | 10 | 200 | 249 |
| | SSL | 0 | 10 | 200 | 249 |
| | UDA baseline | 2904 | 0 | 0 | 249 |
| | SSDA baseline | 2904 | 10 | 0 | 249 |
| | Sup baseline | 0 | 210 | 0 | 249 |
| Vai2Pot | UDA | 210 | 0 | 2904 | 1694 |
| | SSDA | 210 | 10 | 2894 | 1694 |
| | SSL | 0 | 10 | 2894 | 1694 |
| | UDA baseline | 210 | 0 | 0 | 1694 |
| | SSDA baseline | 210 | 10 | 0 | 1694 |
| | Sup baseline | 0 | 2904 | 0 | 1694 |

| Type | Methods | Imp. Surf. IoU | Tree IoU | Building IoU | Car IoU | Low Veg. IoU | mIoU |
|---|---|---|---|---|---|---|---|
| UDA | FLDA-Net [17] | 60.34 | 59.94 | 78.57 | 46.89 | 34.11 | 55.97 |
| | CLAN [73] | 46.84 | 53.38 | 59.61 | 33.45 | 30.07 | 44.67 |
| SSDA | PCLMB [78] | 71.59 | 70.45 | 77.48 | 38.64 | 49.33 | 61.50 |
| | DDM [76] | 70.64 | 47.74 | 70.47 | 51.99 | 48.93 | 57.95 |
| | TCM [74] | 76.00 | 51.92 | 79.09 | 52.51 | 50.07 | 61.92 |
| | CDCSSP-2 (Ours) | 79.16 | 73.36 | 86.15 | 57.41 | 58.95 | 71.01 |
| | CDCSSP-3 (Ours) | 80.49 | 74.22 | 87.22 | 60.87 | 60.79 | 72.72 |
| SSL | CSSP (Ours) | 78.34 | 75.30 | 82.45 | 56.44 | 59.66 | 70.44 |
| Sup | UDA baseline | 53.75 | 61.00 | 54.06 | 42.75 | 9.76 | 44.26 |
| | SSDA baseline | 68.68 | 67.47 | 75.37 | 47.59 | 46.67 | 61.16 |
| | Sup baseline | 77.10 | 74.45 | 84.30 | 51.18 | 61.60 | 69.73 |
| | Sup baseline (CDPT) | 80.84 | 76.80 | 87.83 | 57.46 | 64.84 | 73.55 |

| Settings | Methods | Imp. Surf. IoU | Tree IoU | Building IoU | Car IoU | Low Veg. IoU | mIoU |
|---|---|---|---|---|---|---|---|
| UDA | FLDA-Net | 55.08 | 34.47 | 71.54 | 58.37 | 19.34 | 47.76 |
| | CLAN | 45.28 | 23.62 | 29.36 | 58.75 | 10.34 | 33.47 |
| SSDA | PCLMB | 71.94 | 55.72 | 69.77 | 66.59 | 55.38 | 63.88 |
| | DDM | 65.94 | 30.91 | 72.26 | 72.92 | 48.77 | 58.16 |
| | TCM | 70.30 | 50.11 | 67.11 | 73.28 | 41.94 | 60.55 |
| | CDCSSP-2 (Ours) | 78.45 | 65.74 | 85.91 | 70.11 | 67.93 | 73.63 |
| | CDCSSP-3 (Ours) | 79.96 | 67.40 | 87.37 | 74.62 | 68.78 | 75.63 |
| SSL | CSSP (Ours) | 79.22 | 69.85 | 84.64 | 75.98 | 67.85 | 75.51 |
| Sup | UDA baseline | 48.19 | 6.70 | 41.46 | 56.76 | 8.47 | 32.31 |
| | SSDA baseline | 68.55 | 61.21 | 75.48 | 68.55 | 58.22 | 66.41 |
| | Sup baseline | 83.38 | 74.26 | 90.96 | 78.67 | 73.05 | 80.06 |

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.

Liu, P.; Zhu, H.; Mi, X.; Meng, Y.; Zhao, H.; Gu, X. A Semi-Supervised Transformer with a Curriculum Training Pipeline for Remote Sensing Image Semantic Segmentation. Remote Sens. 2026, 18, 480. https://doi.org/10.3390/rs18030480