JFDet: Joint Fusion and Detection for Multimodal Remote Sensing Imagery

Highlights

- A Joint Fusion and Detection Network (JFDet) is proposed. It jointly optimizes low-level image fusion and high-level object detection through a dual-loss feedback strategy (fusion loss + detection loss), addressing the “task mismatch” between the two stages in traditional methods. On the VEDAI dataset, its mean Average Precision (mAP) reaches 79.6%, which is 1.7 percentage points higher than that of the strongest compared method, MMFDet (77.9%). On the FLIR-ADAS dataset, its mAP reaches 76.3%, matching the best compared result, and its per-class accuracy for the key categories (person, car, bicycle) is the highest among the compared methods that report per-class values.

- A collaborative architecture is designed around a Gradient-Enhanced Residual Module (GERM) and two detection enhancement modules (SOCA and MCFE). GERM combines dense feature connections with Fourier phase enhancement to better preserve edge structures and fine-grained texture details; SOCA captures higher-order feature dependencies; and MCFE expands the receptive field. Together, these modules improve detection robustness for small and variably scaled targets in remote sensing imagery.

- The method fuses optical and infrared images with high radiometric consistency, overcoming the limitations of single-modality data (e.g., degraded visible imagery under low-light or adverse weather conditions, and the lack of texture information in infrared imagery). It offers a reliable detection scheme for time-critical scenarios in complex environments, such as disaster monitoring and emergency search and rescue, and can help speed up emergency decision-making.

- The modular design (GERM, SOCA, MCFE) and the adaptive training strategy are compatible with different detection frameworks, such as Yolov5s and Faster RCNN. They can also supply high-quality multimodal features for downstream remote sensing tasks such as semantic segmentation and change detection, extending the application boundaries of multimodal fusion technology.

Abstract

1. Introduction

- We propose a novel dual-loss feedback strategy that achieves adaptive synergy between the low-level fusion task and the high-level detection task. The fusion process is explicitly guided by feedback from the detection loss, so the fused images are optimized specifically to improve detection accuracy.

- We introduce a Gradient-Enhanced Residual Module (GERM) within the fusion network. This module combines dense feature connections with gradient residual pathways to strengthen edges, structural representation, and fine-grained texture details, providing high-quality, task-aware input for the detection stage.

- We design a robust detection network that integrates two key components: a Second-Order Channel Attention (SOCA) module, which models higher-order feature dependencies to highlight semantically important regions, and a Multi-scale Contextual Feature Encoding (MCFE) module, which captures features across different scales. Together they enhance the network’s robustness in detecting the small and variably scaled targets common in remote sensing.

- Experimental results on the VEDAI and FLIR-ADAS datasets (79.6% and 76.3% mAP, respectively) demonstrate that our method achieves state-of-the-art performance, confirming the effectiveness of our joint optimization strategy, particularly in enhancing detection under challenging conditions.
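The dual-loss feedback described in the first contribution can be pictured as one scalar objective in which the detection term is added to the fusion terms, so that detector errors also influence the fused image. The sketch below is a minimal NumPy illustration under stated assumptions, not the authors' implementation: the element-wise-maximum targets, the forward-difference gradient operator, the weights `w_int`, `w_grad`, and `w_det`, and all function names are hypothetical, and `det_loss` stands in for whatever scalar the detector returns on the fused image.

```python
import numpy as np

def grad_mag(img):
    """Forward-difference gradient magnitude (|dx| + |dy|), same shape as img."""
    dx = np.abs(np.diff(img, axis=1, append=img[:, -1:]))
    dy = np.abs(np.diff(img, axis=0, append=img[-1:, :]))
    return dx + dy

def fusion_loss(fused, ir, vis, w_int=1.0, w_grad=1.0):
    """Assumed fusion objective: intensity term plus gradient term."""
    # Assumption: the fused image should keep the brighter pixel
    # and the stronger edge response of the two inputs.
    l_int = np.mean(np.abs(fused - np.maximum(ir, vis)))
    l_grad = np.mean(np.abs(grad_mag(fused)
                            - np.maximum(grad_mag(ir), grad_mag(vis))))
    return w_int * l_int + w_grad * l_grad

def joint_loss(fused, ir, vis, det_loss, w_det=1.0):
    """Fusion loss plus a weighted detection loss (hypothetical weighting)."""
    return fusion_loss(fused, ir, vis) + w_det * det_loss

rng = np.random.default_rng(0)
ir = rng.random((8, 8))            # stand-in infrared image
vis = rng.random((8, 8))           # stand-in visible image
fused = 0.5 * (ir + vis)           # naive average fusion as a placeholder
total = joint_loss(fused, ir, vis, det_loss=0.5)
print(f"fusion term: {fusion_loss(fused, ir, vis):.4f}, joint: {total:.4f}")
```

In an actual training loop both terms would be differentiable tensors, so that the detection term can backpropagate into the fusion network; NumPy is used here only to keep the arithmetic visible.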

2. Related Work

2.1. The Attention Mechanism in Multimodal Image Fusion

2.2. Image Fusion in Spatial and Transform Domains

3. Methodology

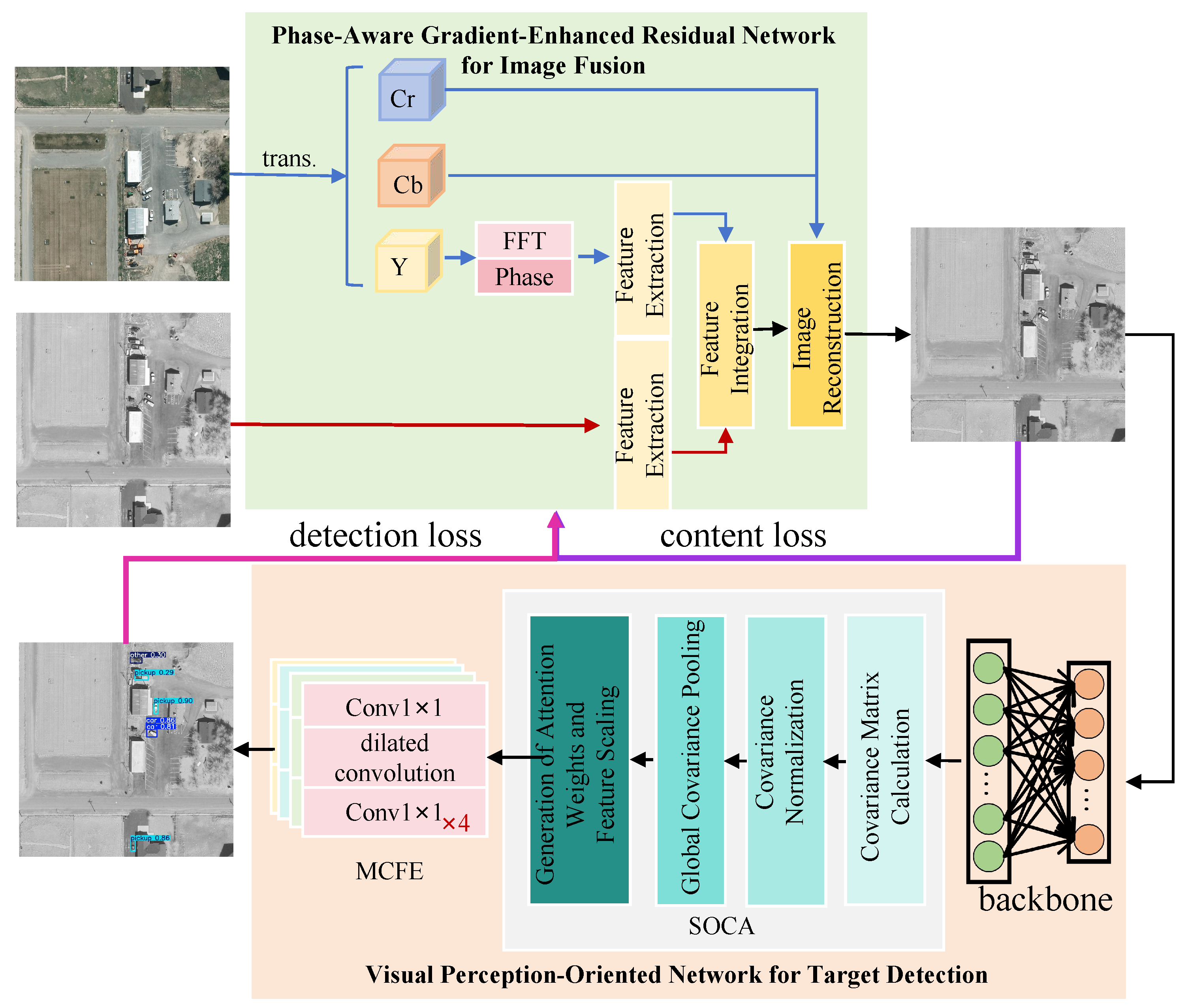

3.1. The Overall Architecture of JFDet

3.2. Phase-Aware Gradient-Enhanced Residual Network for Image Fusion

3.2.1. Phase-Aware Strategy Based on Fourier Transform
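To make the phase-aware idea concrete, the sketch below splits an image into its Fourier amplitude and phase with NumPy, rebuilds it, and then builds a hybrid from the amplitude of one image and the phase of another. It only illustrates the general observation that structural layout is carried largely by the phase spectrum, which is a common motivation for phase-aware designs; how JFDet actually enhances the phase is not reproduced here, and the random test images and function names are illustrative assumptions.

```python
import numpy as np

def split_amp_phase(img):
    """Return the Fourier amplitude and phase spectra of a 2-D image."""
    spec = np.fft.fft2(img)
    return np.abs(spec), np.angle(spec)

def merge_amp_phase(amp, phase):
    """Rebuild a real image from amplitude and phase spectra."""
    return np.real(np.fft.ifft2(amp * np.exp(1j * phase)))

def corr(a, b):
    """Pearson correlation between two images."""
    return float(np.corrcoef(a.ravel(), b.ravel())[0, 1])

rng = np.random.default_rng(0)
img_a = rng.random((32, 32))   # stand-in for one modality
img_b = rng.random((32, 32))   # stand-in for the other modality

amp_a, phase_a = split_amp_phase(img_a)
_, phase_b = split_amp_phase(img_b)

recon = merge_amp_phase(amp_a, phase_a)    # amplitude and phase of A: round trip
hybrid = merge_amp_phase(amp_a, phase_b)   # amplitude of A, phase of B

print("round-trip error:", np.max(np.abs(recon - img_a)))
print("hybrid vs amplitude donor:", corr(hybrid, img_a))
print("hybrid vs phase donor:", corr(hybrid, img_b))
```

The hybrid typically resembles the phase donor much more closely than the amplitude donor, which is why preserving or strengthening phase is a plausible route to preserving edges.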

3.2.2. Phase-Aware Gradient-Enhanced Residual Network for Image Fusion

3.3. Visual Perception-Oriented Network for Object Detection

3.3.1. Second-Order Channel Attention (SOCA)
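As a rough illustration of what "second-order" means here, the sketch below gates each channel using statistics taken from the channel covariance matrix rather than from a plain per-channel average. It follows the general recipe of the second-order attention network cited in the reference list (Dai et al.), but it is a simplified assumption-laden sketch: the covariance normalization step of that work is omitted, the bottleneck weights `w_down` and `w_up` are random placeholders rather than learned parameters, and the function name is hypothetical.

```python
import numpy as np

def second_order_channel_attention(feat, w_down, w_up):
    """Rescale the channels of feat (C, H, W) using covariance statistics.

    w_down (C//r, C) and w_up (C, C//r) stand in for learned bottleneck
    weights; they are hypothetical placeholders in this sketch.
    """
    c, h, w = feat.shape
    x = feat.reshape(c, h * w)
    x = x - x.mean(axis=1, keepdims=True)          # centre each channel
    cov = x @ x.T / (h * w)                        # (C, C) channel covariance
    z = cov.mean(axis=1)                           # one second-order statistic per channel
    hidden = np.maximum(w_down @ z, 0.0)           # ReLU bottleneck
    gate = 1.0 / (1.0 + np.exp(-(w_up @ hidden)))  # sigmoid gate in (0, 1)
    return feat * gate[:, None, None], gate

rng = np.random.default_rng(0)
feat = rng.standard_normal((8, 4, 4))              # toy feature map, C=8
w_down = rng.standard_normal((2, 8)) * 0.1         # reduction ratio r=4 (assumed)
w_up = rng.standard_normal((8, 2)) * 0.1
out, gate = second_order_channel_attention(feat, w_down, w_up)
print(out.shape, gate.round(3))
```

Because the gate depends on how channels co-vary, two channels with the same mean activation can still receive different weights, which is the property that first-order (average-pooling) channel attention lacks.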

3.3.2. Multi-Scale Contextual Feature Encoding (MCFE)

3.4. Loss Function and Training Mechanism

3.4.1. Loss Function

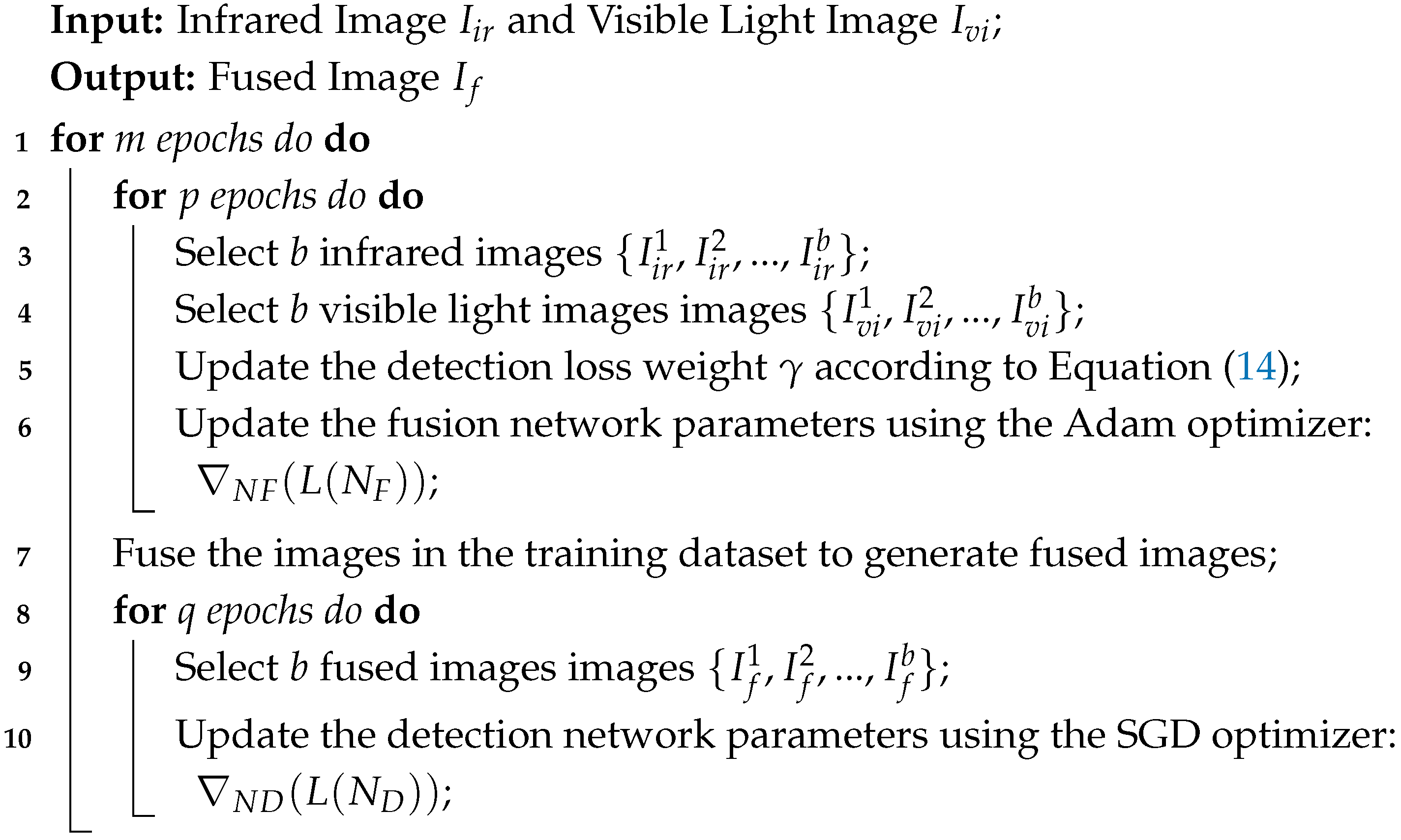

3.4.2. Adaptive Training Strategy

Algorithm 1: Adaptive training strategy for joint image fusion and object detection tasks.

4. Experiments and Discussion

4.1. Experimental Dataset

4.2. Experimental Setup

4.3. Evaluation Criteria

4.4. Ablation Experiments

4.5. Quantitative Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Tang, D.; Cao, X.; Wu, X.; Li, J.; Yao, J.; Bai, X.R.; Jiang, D.S.; Li, Y.; Meng, D.Y. AeroGen: Enhancing remote sensing object detection with diffusion-driven data generation. In Proceedings of the Computer Vision and Pattern Recognition Conference (CVPR2025), Nashville, TN, USA, 11–15 June 2025; pp. 3614–3624.

- Li, H.; Zhang, R.; Pan, Y.; Ren, J.C.; Shen, F. Lr-fpn: Enhancing remote sensing object detection with location refined feature pyramid network. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN2024), Piscataway, NJ, USA, 30 June–5 July 2024; pp. 1–8.

- Wang, J.; Ma, L.; Zhao, B.; Gou, Z.; Yin, Y.; Sun, G. MRLF: Multi-Resolution Layered Fusion Network for Optical and SAR Images. Remote Sens. 2025, 17, 3740.

- Ding, X.; Fang, J.; Wang, Z.; Liu, Q.; Yang, Y.; Shu, Z.Y. Unsupervised learning non-uniform face enhancement under physics-guided model of illumination decoupling. Pattern Recognit. 2025, 162, 111354.

- Khan, R.; Yang, Y.; Liu, Q.; Shen, J.L.; Li, B. Deep image enhancement for ill light imaging. J. Opt. Soc. Am. A 2021, 38, 827–839.

- Liu, Z.; Yang, Y.; Wu, K.; Liu, Q.; Xu, X.H.; Ma, X.X.; Tang, J. ASIFusion: An adaptive saliency injection-based infrared and visible image fusion network. ACM Trans. Multim. Comput. Commun. Appl. 2024, 20, 1–23.

- Khan, R.; Zhang, J.; Wang, Z.; Chen, J.; Wang, F.; Gao, L. An Automated Framework for Abnormal Target Segmentation in Levee Scenarios Using Fusion of UAV-Based Infrared and Visible Imagery. Remote Sens. 2025, 17, 3398.

- Atrey, P.K.; Hossain, M.A.; El Saddik, A.; Kankanhalli, M.S. Multimodal fusion for multimedia analysis: A survey. Multimed. Syst. 2010, 16, 345–379.

- Li, H.; Wu, X.J. DenseFuse: A fusion approach to infrared and visible images. IEEE Trans. Image Process. 2018, 28, 2614–2623.

- Wang, Z.; Wang, J.; Wu, Y.; Xu, J.W.; Zhang, X.Q. UNFusion: A unified multi-scale densely connected network for infrared and visible image fusion. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 3360–3374.

- Long, Y.; Jia, H.; Zhong, Y.; Jiang, Y.D.; Jia, Y.M. RXDNFuse: A aggregated residual dense network for infrared and visible image fusion. Inf. Fusion 2021, 69, 128–141.

- Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J.J. FusionGAN: A generative adversarial network for infrared and visible image fusion. Inf. Fusion 2019, 48, 11–26.

- Li, H.; Wu, X.J.; Kittler, J. RFN-Nest: An end-to-end residual fusion network for infrared and visible images. Inf. Fusion 2021, 73, 72–86.

- Xu, H.; Ma, J.; Jiang, J.; Guo, X.J.; Ling, H.B. U2Fusion: A unified unsupervised image fusion network. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 502–518.

- Zhang, H.; Xu, H.; Xiao, Y.; Guo, X.J.; Ma, J.Y. Rethinking the image fusion: A fast unified image fusion network based on proportional maintenance of gradient and intensity. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI2020), New York, NY, USA, 7–12 February 2020; pp. 12797–12804.

- Li, J.; Zhu, J.; Li, C.; Chen, X.; Yang, B. CGTF: Convolution-guided transformer for infrared and visible image fusion. IEEE Trans. Instrum. Meas. 2022, 71, 1–14.

- Zhou, H.; Hou, J.; Zhang, Y.; Ma, J.Y.; Ling, H.B. Unified gradient-and intensity-discriminator generative adversarial network for image fusion. Inf. Fusion 2022, 88, 184–201.

- Wang, J.; Xi, X.; Li, D.; Li, F. FusionGRAM: An infrared and visible image fusion framework based on gradient residual and attention mechanism. IEEE Trans. Instrum. Meas. 2023, 72, 1–12.

- Xiang, X.; Zhou, G.; Niu, B.; Pan, Z.; Huang, L.; Li, W.; Wen, Z.; Qi, J.; Gao, W. Infrared-Visible Image Fusion Meets Object Detection: Towards Unified Optimization for Multimodal Perception. Remote Sens. 2025, 17, 3637.

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR2018), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7794–7803.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR2018), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Ma, J.; Tang, L.; Fan, F.; Huang, J.; Mei, X.G.; Ma, Y. SwinFusion: Cross-domain long-range learning for general image fusion via swin transformer. IEEE-CAA J. Autom. Sin. 2022, 9, 1200–1217.

- Li, S.; Kang, X.; Fang, L.; Hu, J.W.; Yin, H.T. Pixel-level image fusion: A survey of the state of the art. Inf. Fusion 2017, 33, 100–112.

- Li, H.; Manjunath, B.S.; Mitra, S.K. Multisensor image fusion using the wavelet transform. CVGIP Graph. Model. Image Process. 1995, 57, 235–245.

- Rockinger, O. Image sequence fusion using a shift-invariant wavelet transform. In Proceedings of International Conference on Image Processing (ICIP1997), Washington, DC, USA, 26–29 October 1997; pp. 288–291.

- Haghighat, M.B.A.; Aghagolzadeh, A.; Seyedarabi, H. Multi-focus image fusion for visual sensor networks in DCT domain. Comput. Electr. Eng. 2011, 37, 789–797.

- Yao, Z.; Fan, G.; Fan, J.; Gan, M.; Chen, C.L.P. Spatial-frequency dual-domain feature fusion network for low-light remote sensing image enhancement. IEEE Trans. Geosci. Remote Sens. 2024, in press.

- Cao, L.; Jin, L.; Tao, H.; Li, G.N.; Zhuang, Z.; Zhang, Y.F. Multi-focus image fusion based on spatial frequency in discrete cosine transform domain. IEEE Signal Process. Lett. 2014, 22, 220–224.

- Liu, Y.; Wang, L.; Cheng, J.; Li, C.; Chen, X. Multi-focus image fusion: A survey of the state of the art. Inf. Fusion 2020, 64, 71–91.

- Dai, T.; Cai, J.; Zhang, Y.; Xia, S.T.; Zhang, L. Second-order attention network for single image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR2019), Long Beach, CA, USA, 16–20 June 2019; pp. 11065–11074.

- Qingyun, F.; Zhaokui, W. Cross-modality attentive feature fusion for object detection in multispectral remote sensing imagery. Pattern Recognit. 2022, 130, 108786.

- Zhang, J.; Lei, J.; Xie, W.; Fang, Z.M.; Li, Y.S.; Du, Q. SuperYOLO: Super resolution assisted object detection in multimodal remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–15.

- Shen, J.; Chen, Y.; Liu, Y.; Zuo, X.; Fan, H.; Yang, W.K. ICAFusion: Iterative cross-attention guided feature fusion for multispectral object detection. Pattern Recognit. 2024, 145, 109913.

- Zhang, Y.; Ye, M.; Zhu, G.; Liu, Y.; Guo, P.Y.; Yan, J.H. FFCA-YOLO for Small Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5611215.

- Zhao, W.; Zhao, Z.; Avignon, B. Differential multimodal fusion algorithm for remote sensing object detection through multi-branch feature extraction. Expert Syst. Appl. 2025, 265, 125826.

- Zhang, H.; Fromont, E.; Lefevre, S.; Wu, X.J.; Kittler, J. Multispectral fusion for object detection with cyclic fuse-and-refine blocks. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP2020), Virtual, 25–28 October 2020; pp. 276–280.

- Zhang, H.; Fromont, E.; Lefèvre, S.; Avignon, B. Guided attentive feature fusion for multispectral pedestrian detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV2021), Virtual, 5–9 January 2021; pp. 72–80.

- You, S.; Xie, X.; Feng, Y.; Mei, C.J.; Ji, Y.M. Multi-scale aggregation transformers for multispectral object detection. IEEE Signal Process. Lett. 2023, 30, 1172–1176.

- Zhou, H.; Sun, M.; Ren, X.; Wang, X. Visible-thermal image object detection via the combination of illumination conditions and temperature information. Remote Sens. 2021, 13, 3656.

- Jang, J.; Lee, J.; Paik, J. CAMDet: Condition-adaptive multispectral object detection using a visible-thermal translation model. In Proceedings of ICASSP 2025 - 2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP2025), Hyderabad, India, 6–11 April 2025; pp. 1–5.

| Image Fusion Methods | Object Detection Methods | mAP@0.5 |
|---|---|---|
| GTF | Yolov5s | 73.7 |
| DenseFuse | Yolov5s | 75.9 |
| IFCNN | Yolov5s | 74.8 |
| Ours | Yolov5s | 76.3 |
| GTF | Ours | 74.2 |
| DenseFuse | Ours | 76.7 |
| IFCNN | Ours | 75.0 |
| Ours | FCOS | 63.0 |
| Ours | Faster RCNN | 76.0 |
| Ours | Mask RCNN | 73.8 |
| Ours | Ours | 79.6 |

| GERM Without FFT | GERM with FFT | SOCA | MCFE | mAP@0.5 |

|---|---|---|---|---|

| 75.4 | ||||

| ✓ | 75.8 | |||

| ✓ | ✓ | 76.3 | ||

| ✓ | ✓ | 75.9 | ||

| ✓ | ✓ | ✓ | 77.8 | |

| ✓ | 76.2 | |||

| ✓ | ✓ | 77.5 | ||

| ✓ | ✓ | 77.4 | ||

| ✓ | ✓ | ✓ | 79.6 |

| Intensity Loss | Texture Loss | Gradient Loss | Detection Loss | mAP@0.5 |

|---|---|---|---|---|

| ✓ | ✓ | ✓ | 78.5 | |

| ✓ | ✓ | ✓ | 77.6 | |

| ✓ | ✓ | ✓ | 77.2 | |

| ✓ | ✓ | ✓ | ✓ | 79.6 |

| Methods | Car | Pickup | Camping | Truck | Tractor | Boat | Van | Other | mAP@0.5 |
|---|---|---|---|---|---|---|---|---|---|
| CMAFF [31] | 91.7 | 85.9 | 78.9 | 78.1 | 71.9 | 71.7 | 75.2 | 54.7 | 76.01 |
| SuperYOLO [32] | 91.13 | 85.66 | 79.3 | 70.18 | 80.41 | 60.24 | 76.5 | 57.33 | 75.09 |
| ICAFusion [33] | - | - | - | - | - | - | - | - | 76.62 |
| FFCA-YOLO [34] | 89.6 | 85.7 | 78.7 | 85.7 | 81.8 | 61.5 | 67.0 | 48.6 | 74.8 |
| MMFDet [35] | 88.3 | 78.5 | 81.6 | 59.8 | 86.2 | 76.0 | 88.3 | 63.5 | 77.9 |
| JFDet | 89.9 | 81.8 | 81.0 | 86.6 | 76.0 | 71.1 | 90.7 | 59.6 | 79.6 |
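The mAP@0.5 column can be read as the unweighted mean of the per-class AP values in the same row. The short check below recomputes it from the numbers in the table above; the dictionary and function names are illustrative, and ICAFusion is left out because no per-class values are listed for it. Small differences from the reported column are to be expected, since the per-class figures are already rounded.

```python
# Per-class AP values copied from the VEDAI comparison table
# (Car, Pickup, Camping, Truck, Tractor, Boat, Van, Other).
vedai_ap = {
    "CMAFF": [91.7, 85.9, 78.9, 78.1, 71.9, 71.7, 75.2, 54.7],
    "SuperYOLO": [91.13, 85.66, 79.3, 70.18, 80.41, 60.24, 76.5, 57.33],
    "FFCA-YOLO": [89.6, 85.7, 78.7, 85.7, 81.8, 61.5, 67.0, 48.6],
    "MMFDet": [88.3, 78.5, 81.6, 59.8, 86.2, 76.0, 88.3, 63.5],
    "JFDet": [89.9, 81.8, 81.0, 86.6, 76.0, 71.1, 90.7, 59.6],
}

# mAP@0.5 values as reported in the last column of the same table.
reported_map = {
    "CMAFF": 76.01,
    "SuperYOLO": 75.09,
    "FFCA-YOLO": 74.8,
    "MMFDet": 77.9,
    "JFDet": 79.6,
}

def mean_ap(per_class_ap):
    """Unweighted mean of per-class average precision values."""
    return sum(per_class_ap) / len(per_class_ap)

for name, aps in vedai_ap.items():
    recomputed = mean_ap(aps)
    gap = recomputed - reported_map[name]
    print(f"{name}: recomputed {recomputed:.2f}, "
          f"reported {reported_map[name]}, difference {gap:+.2f}")

# Margin of JFDet over MMFDet, in percentage points, from the reported column.
margin = reported_map["JFDet"] - reported_map["MMFDet"]
print(f"JFDet - MMFDet: {margin:.1f} percentage points")
```

For JFDet the recomputed mean rounds to the reported 79.6, and the gap to MMFDet's reported 77.9 is 1.7 percentage points.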

| Methods | Person | Car | Bicycle | mAP@0.5 |
|---|---|---|---|---|
| CFR_3 [36] | 74.49 | 84.91 | 57.77 | 72.39 |
| GAFF [37] | - | - | - | 72.9 |
| MSANet [38] | - | - | - | 76.2 |
| CAPTM_3 [39] | 77.04 | 84.61 | 57.79 | 73.15 |
| CAMDet(V2T) [40] | - | - | - | 75.4 |
| CAMDet(T2V) [40] | - | - | - | 76.3 |
| JFDet | 81.6 | 88.5 | 58.8 | 76.3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.

Xu, W.; Yang, Y. JFDet: Joint Fusion and Detection for Multimodal Remote Sensing Imagery. Remote Sens. 2026, 18, 176. https://doi.org/10.3390/rs18010176