Robust Object Detection for UAVs in Foggy Environments with Spatial-Edge Fusion and Dynamic Task Alignment

Highlights

- We introduce Fog-UAVNet, a lightweight detector with a unified design that fuses edge and spatial cues, adapts its receptive field to fog density, and aligns classification with localization.

- Across multiple fog benchmarks, Fog-UAVNet consistently achieves higher detection accuracy and more efficient inference than strong baselines, leading to a superior accuracy–efficiency trade-off under foggy conditions.

- Robust, real-time UAV perception is feasible without large models, enabling practical onboard deployment.

- The design offers a simple recipe for adverse weather detection and may generalize across aerial scenarios.

Abstract

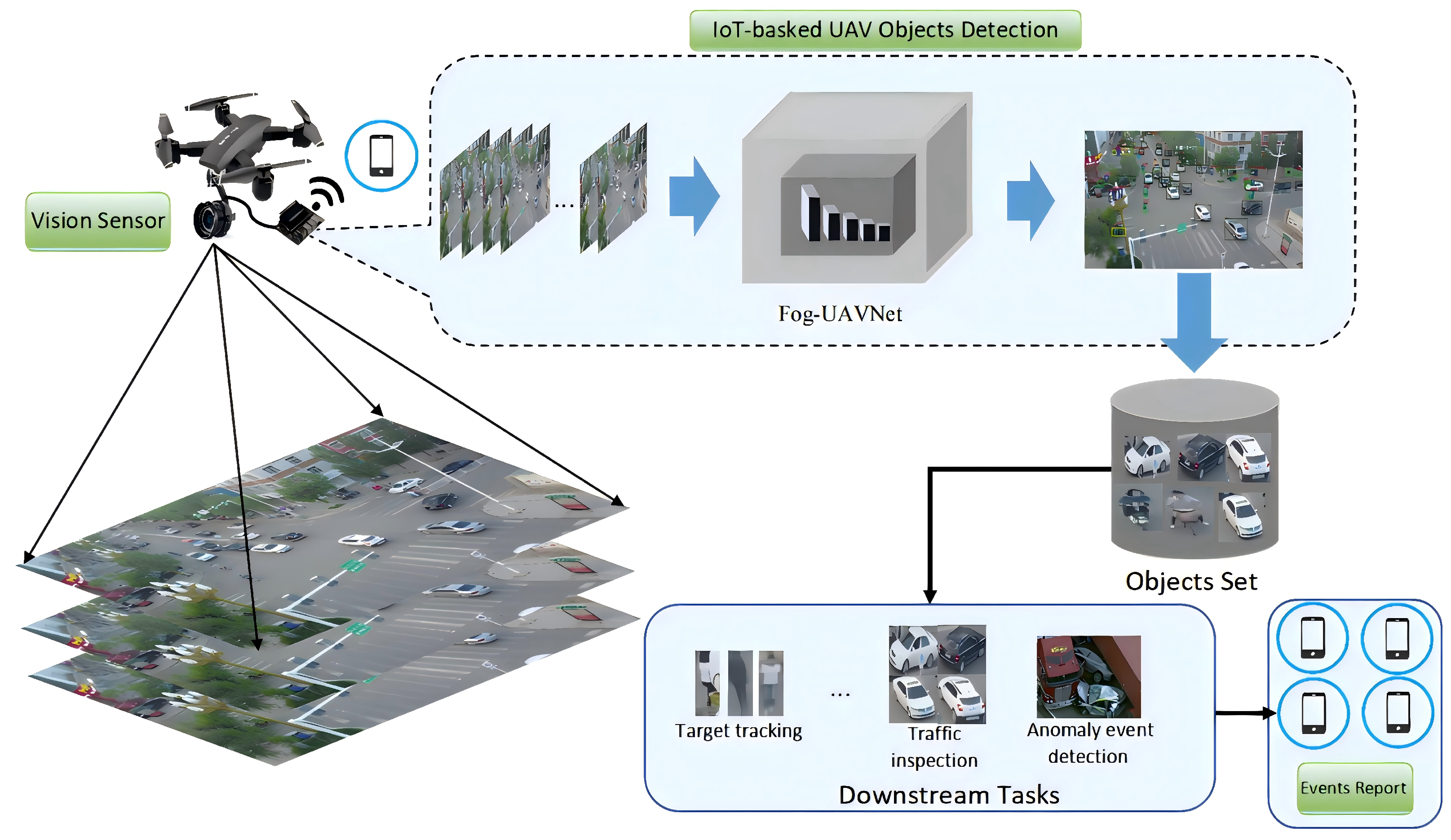

1. Introduction


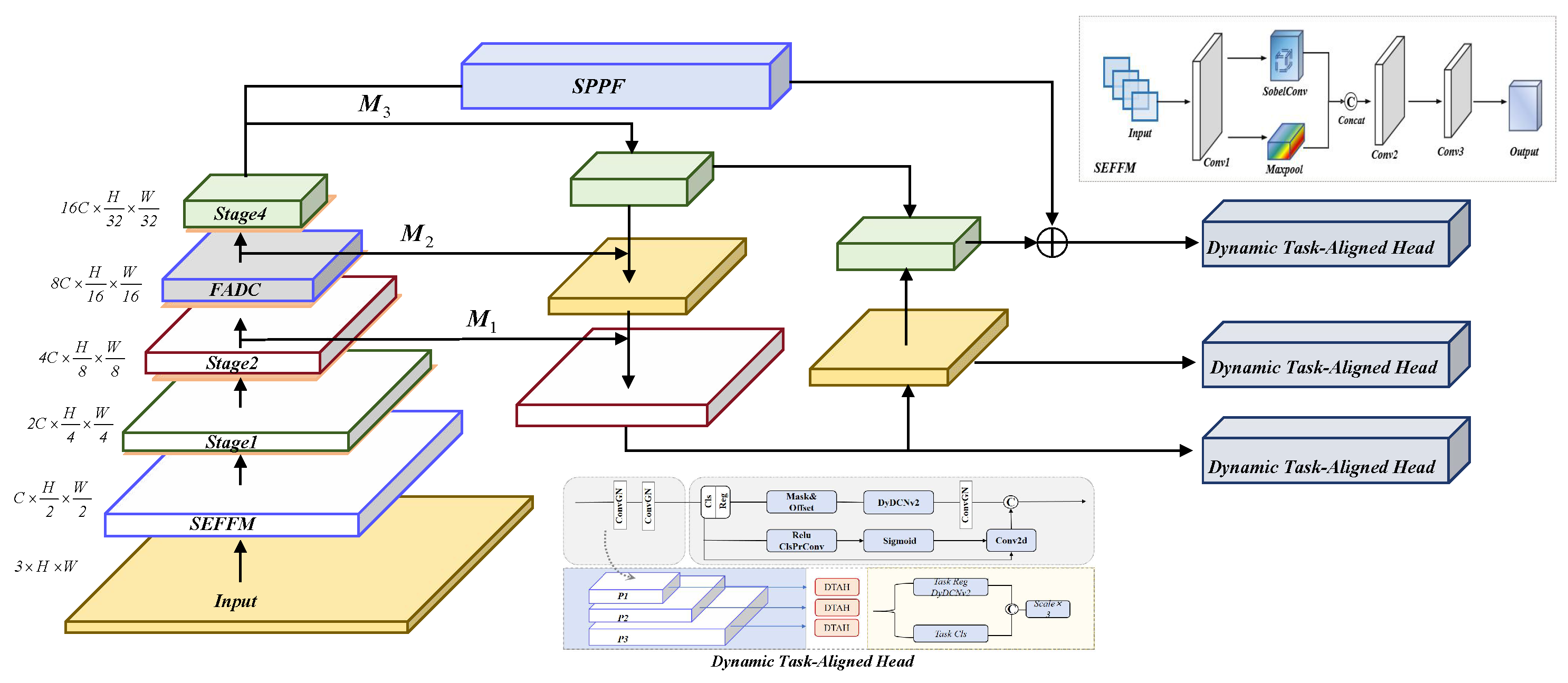

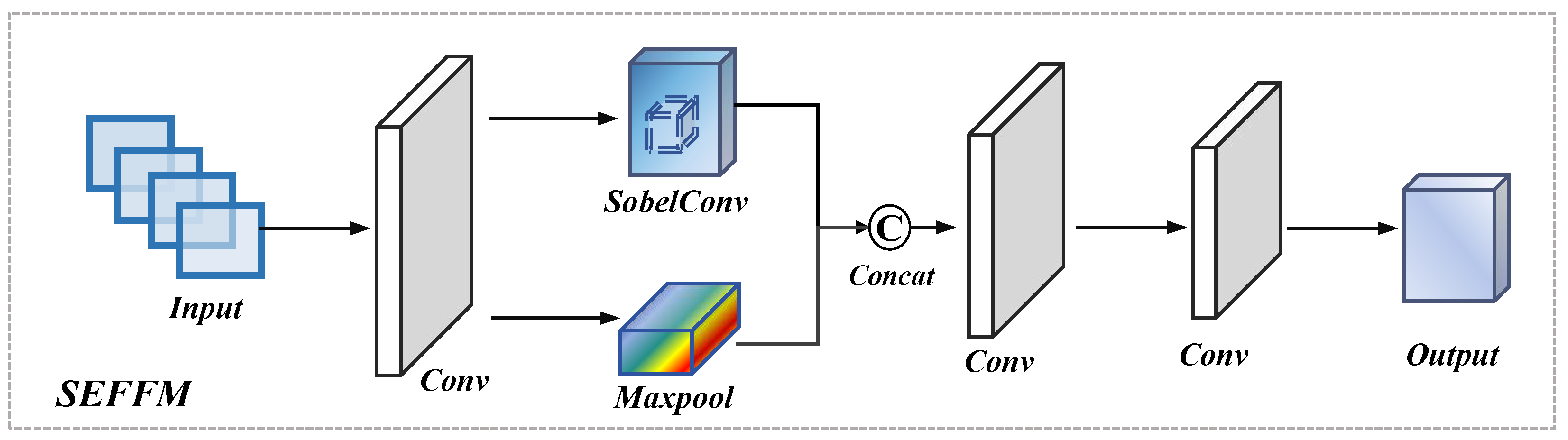

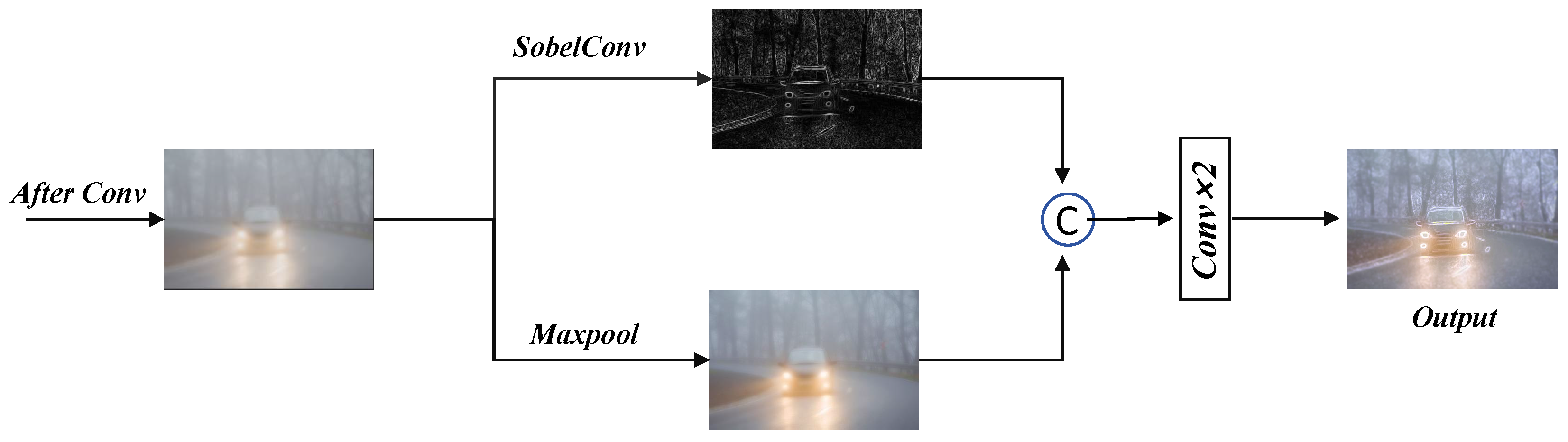

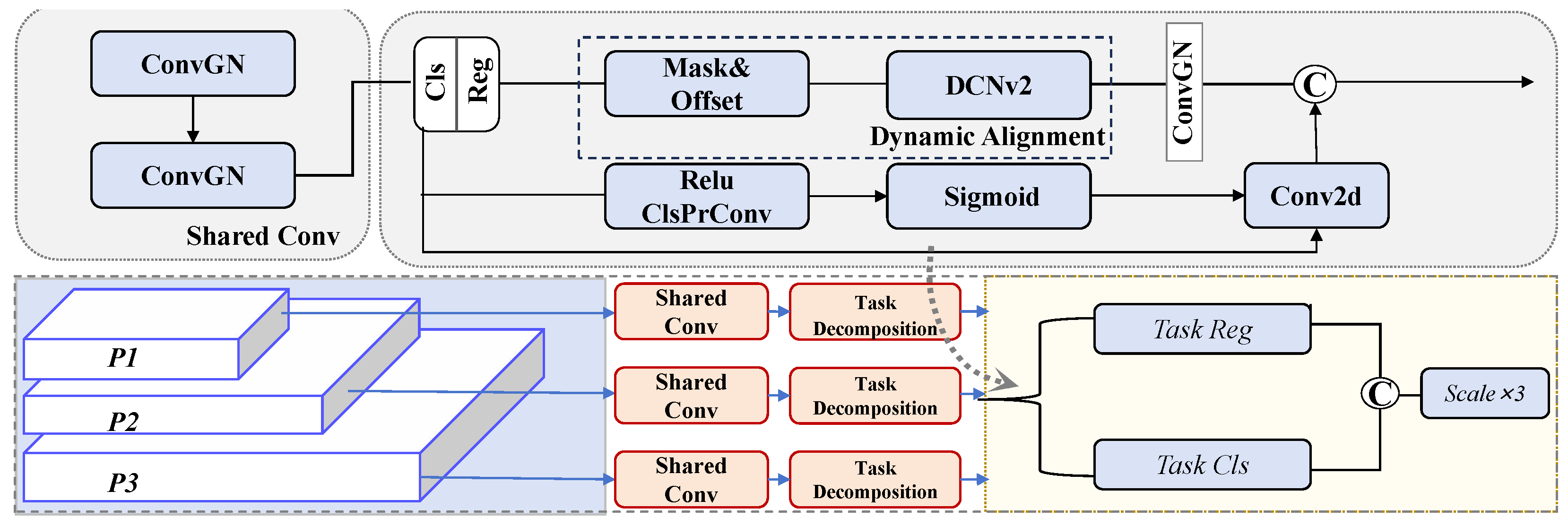

- We design Fog-UAVNet, a tailored detection framework for UAV deployment in foggy conditions. It incorporates three novel modules: (i) a Spatial-Edge Feature Fusion Module (SEFFM) for enhancing structural details under low visibility; (ii) a Frequency-Adaptive Dilated Convolution (FADC) for fog-aware receptive field modulation; and (iii) a Dynamic Task-Aligned Head (DTAH) for adaptive multi-task prediction. Together, they enable robust representation learning and task-consistent inference in adverse weather.

- We developed the VisDrone-Fog dataset by adding artificial fog to the original VisDrone dataset, creating a specialized resource for training and evaluating UAV object detection under reduced visibility and varying fog densities. The fog was synthesized with the atmospheric scattering model, which is based on the Mie scattering theory as formulated by McCartney in 1975. Using VisDrone-Fog, we demonstrate Fog-UAVNet's effectiveness in low-visibility environments and its adaptability across diverse foggy scenarios.

- To better reflect real conditions, we constructed Foggy Datasets, a real-world dataset that covers diverse fog densities and complex environmental conditions. It enables thorough evaluation of model generalization in practical UAV-based IoT scenarios beyond synthetic assumptions.

- We extensively validate Fog-UAVNet on six benchmarks (VisDrone, VisDrone-Fog, VOC-Foggy, RTTS, Foggy Datasets, and Foggy Driving), demonstrating consistent gains over state-of-the-art detectors in localization and classification accuracy, with a compact and efficient architecture suited to real-world deployment.

2. Related Work

2.1. Object Detection Under Complex and Adverse Conditions

2.2. IoT-Driven UAV Detection in Challenging Environments

3. Materials and Methods

3.1. Dataset Description

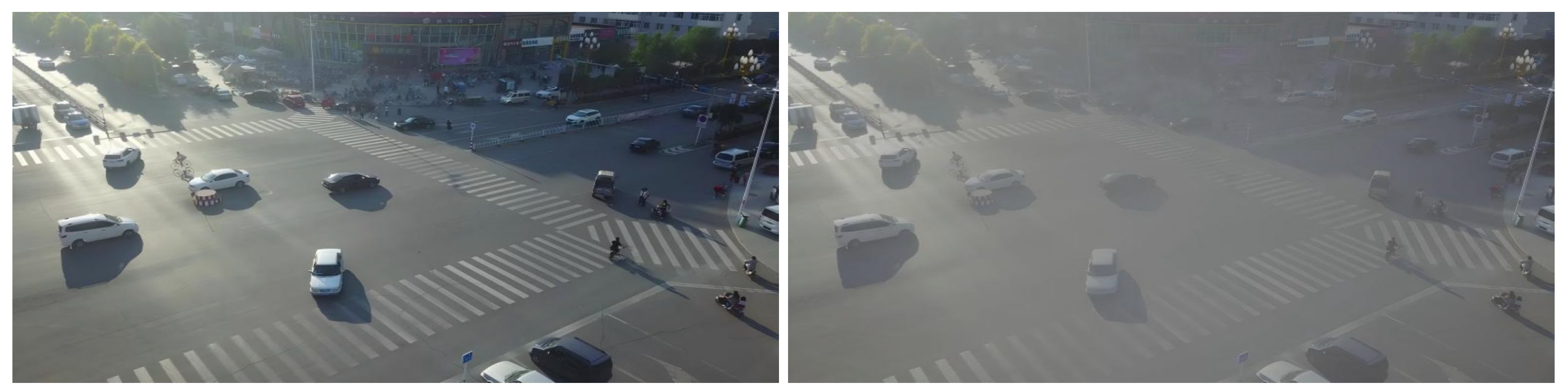

- VisDrone-Fog Dataset is derived from VisDrone [30], a large-scale dataset designed for drone-based object detection and tracking. VisDrone contains a wide variety of annotated images and video sequences captured by drones in diverse environments and under various weather conditions, including fog. It is instrumental for training and evaluating UAV detection systems in real-world scenarios, making it highly relevant for IoT applications such as surveillance, traffic monitoring, and disaster management. Because few public datasets target UAV object detection in adverse weather, we extended VisDrone into VisDrone-Fog by adding artificial fog to the original images with an atmospheric scattering model. This model, based on the Mie scattering theory as formulated by McCartney in 1975, divides the light received by optical sensors into two components: light reflected by the target object and ambient light surrounding the target. The model is defined as $I(x) = J(x)\,t(x) + A\,(1 - t(x))$ with transmission $t(x) = e^{-\beta d(x)}$, where $I(x)$ represents the observed foggy image, $J(x)$ is the corresponding clear image, $A$ is the global atmospheric light, and $d(x)$ denotes the scene depth. The parameter $\beta$ is the scattering coefficient and is set to ensure a uniform fog density across the dataset.

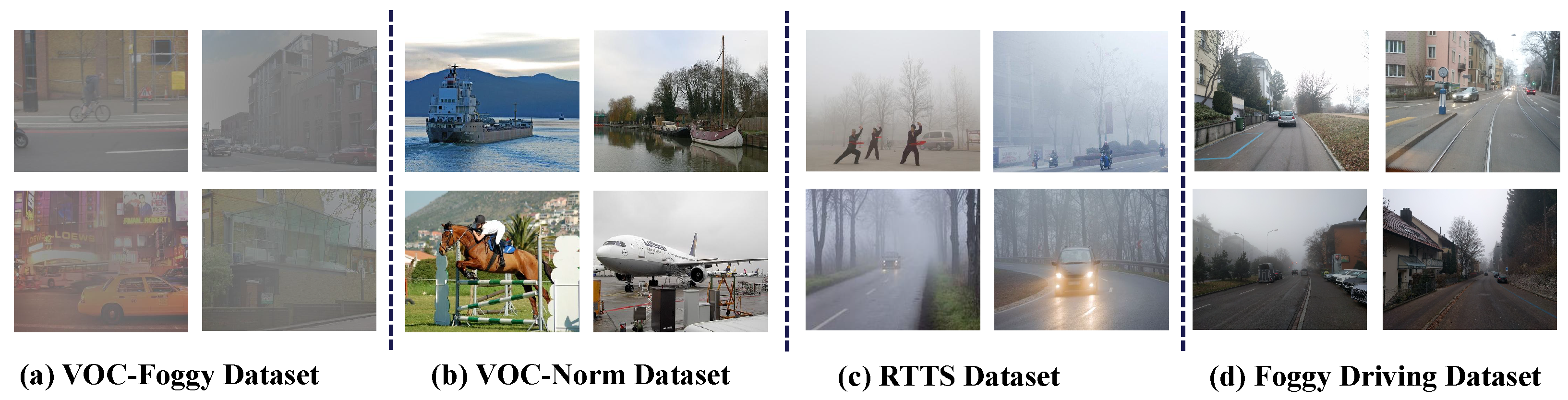

- VOC-Norm Dataset includes non-foggy images from VOC2007 and VOC2012 trainval splits, serving as a baseline for evaluating model robustness and calibration across clear and degraded environments.

- RTTS Dataset [32] consists of 4322 real-world foggy images with annotated vehicles and pedestrians, enabling performance evaluation in naturalistic urban fog scenarios, especially for smart city applications.

- Foggy Driving Dataset [33] comprises 101 annotated images of vehicles and pedestrians in real fog, reflecting typical challenges faced by UAVs in traffic management scenarios.

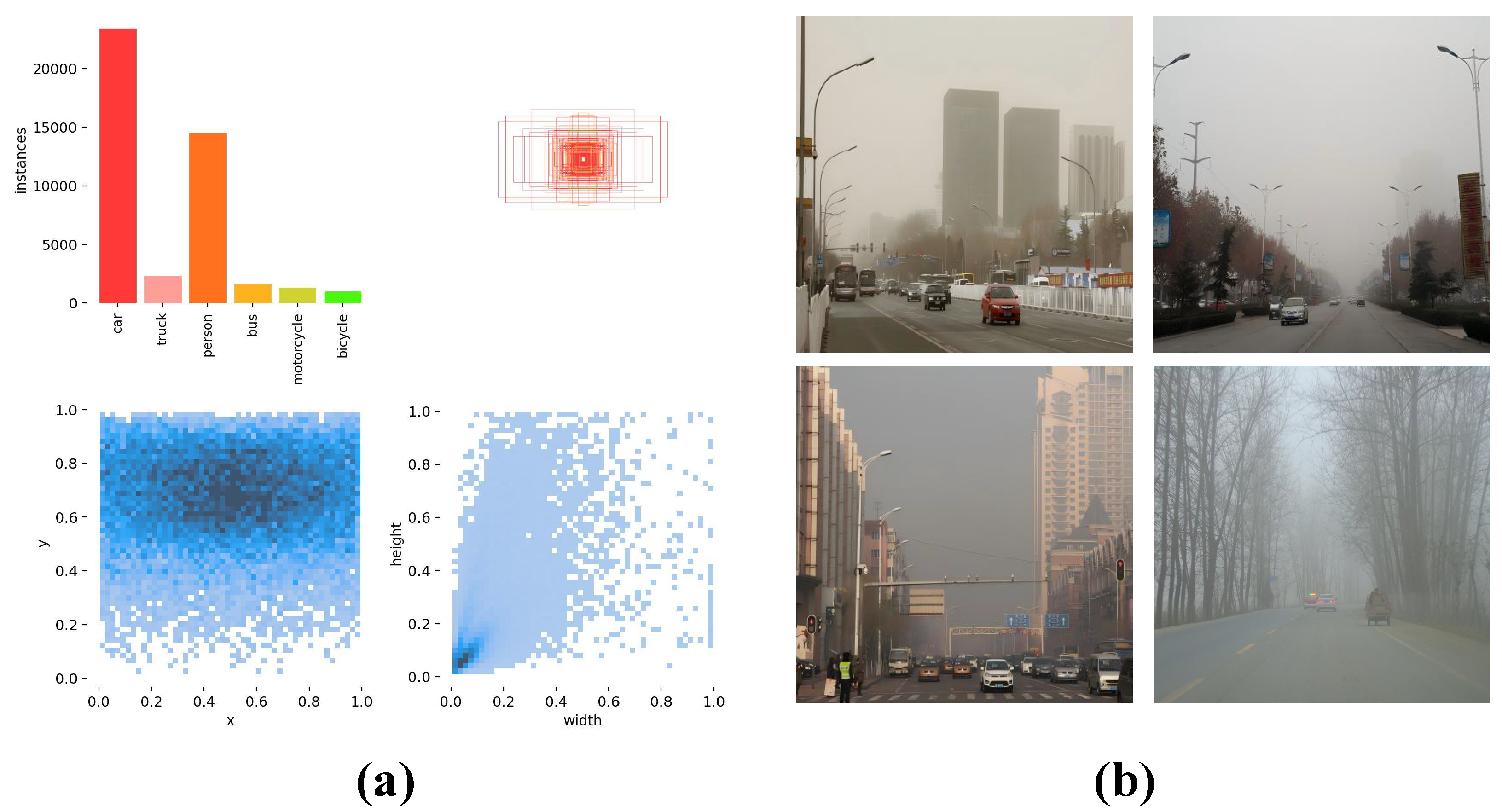

- Foggy Datasets is a dataset of real-world foggy scenes that we collected to improve UAV detection performance in diverse IoT contexts. Compiled through web scraping and careful annotation, it includes 8700 images with 55,701 instances across six classes: car, person, bus, bicycle, motorcycle, and truck. It covers various fog densities, times of day, and weather conditions, which makes it valuable for training models to handle the natural variability of foggy environments. Its scenes range from urban to rural areas, so it suits a wide range of UAV-based IoT applications, including urban surveillance, traffic monitoring, disaster management, and environmental monitoring. To promote further research, the Foggy Datasets, including the images and visualizations shown in Figure 4, are publicly available on our project website.
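The fog synthesis described for VisDrone-Fog can be illustrated with a minimal sketch. It assumes the standard form of the atmospheric scattering model, I = J·t + A·(1 − t) with t = exp(−β·d), applied to grayscale intensities in [0, 1]. The function names, the toy depth map, and the values of `beta` and `airlight` are illustrative assumptions, not the settings used to build the dataset; a real pipeline would typically vectorise this with an array library.

```python
import math


def transmission(depth, beta):
    """Fraction of scene light that survives a path of length `depth`."""
    return math.exp(-beta * depth)


def fog_pixel(clear_value, depth, beta, airlight):
    """Blend one clear intensity with the airlight according to depth."""
    t = transmission(depth, beta)
    return clear_value * t + airlight * (1.0 - t)


def add_fog(clear, depth, beta=1.0, airlight=0.8):
    """Apply the scattering model to a 2D grid of intensities in [0, 1].

    `clear` and `depth` are lists of rows with identical shapes.
    `beta` and `airlight` are hypothetical defaults for illustration.
    """
    return [
        [fog_pixel(j, d, beta, airlight) for j, d in zip(row_j, row_d)]
        for row_j, row_d in zip(clear, depth)
    ]


# Toy example: a dark row and a bright row, each seen at depth 0 and depth 2.
clear = [[0.0, 0.0], [1.0, 1.0]]
depth = [[0.0, 2.0], [0.0, 2.0]]
foggy = add_fog(clear, depth, beta=1.0, airlight=0.8)
```

At zero depth the pixel is unchanged, while distant pixels are pulled toward the airlight value, which is why far objects lose contrast first.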

3.2. Experimental Setup and Evaluation Metrics

- Average Precision (AP) quantifies detection accuracy as the area under the precision-recall curve. Mean Average Precision (mAP) averages AP across all $N$ categories: $\mathrm{mAP} = \frac{1}{N}\sum_{i=1}^{N} \mathrm{AP}_i$.

- Mean Average Precision at IoU 0.5 (mA) denotes mean AP at an intersection over union (IoU) threshold of 0.5.

- Parameters represents the total number of trainable parameters.

- Giga Floating Point Operations (GFLOPs) measures the computational cost.

- Speed refers to the latency per image during inference.

- Frames Per Second (FPS) measures how many frames a detector can process per second on a given hardware platform, so it directly reflects the model's throughput and practical real-time capability. In deployment, especially on resource-constrained UAVs, a higher FPS at comparable accuracy is crucial for timely perception and stable downstream control. In this work, we report FPS on a representative embedded device (NVIDIA Jetson Nano), which gives a realistic estimate of on-device inference speed and highlights the efficiency of Fog-UAVNet under typical edge-computing constraints.
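The metrics listed above can be sketched in plain Python. This is a minimal illustration rather than the evaluation code used in the paper: it assumes boxes in `(x1, y1, x2, y2)` format, assumes detections have already been sorted by descending confidence and matched to ground truth at an IoU threshold of 0.5, and integrates the precision-recall curve with a monotone precision envelope (one common convention). All function names are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(is_true_positive, num_ground_truth):
    """Area under the precision-recall curve for one class.

    `is_true_positive` holds one flag per detection, ordered by
    descending confidence (True if matched at IoU >= 0.5).
    """
    if num_ground_truth == 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [], []
    for hit in is_true_positive:
        tp += 1 if hit else 0
        fp += 0 if hit else 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Make precision non-increasing from right to left (envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum rectangle areas between consecutive recall values.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(ap_per_class):
    """Average the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


def fps_from_latency(latency_ms):
    """Convert per-image latency in milliseconds to frames per second."""
    return 1000.0 / latency_ms


# Toy example: three detections, two ground-truth objects.
ap = average_precision([True, False, True], num_ground_truth=2)
overlap = iou((0, 0, 2, 2), (1, 1, 3, 3))
```

Speed and FPS describe the same measurement from two sides: a latency of 12.5 ms per image corresponds to 80 frames per second when images are processed one at a time.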

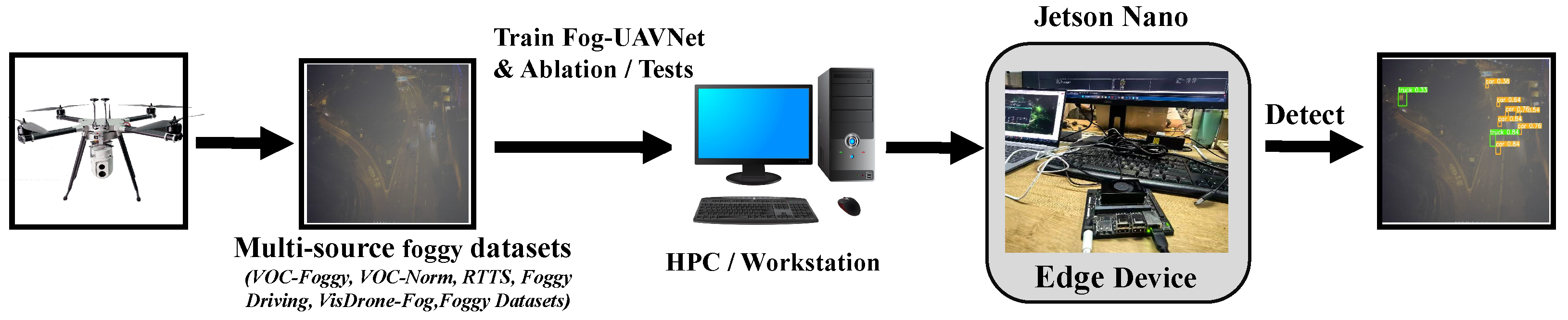

3.3. Fog-UAVNet

3.4. Spatial-Edge Feature Fusion Module

3.5. Dynamic Task-Aligned Head (DTAH)

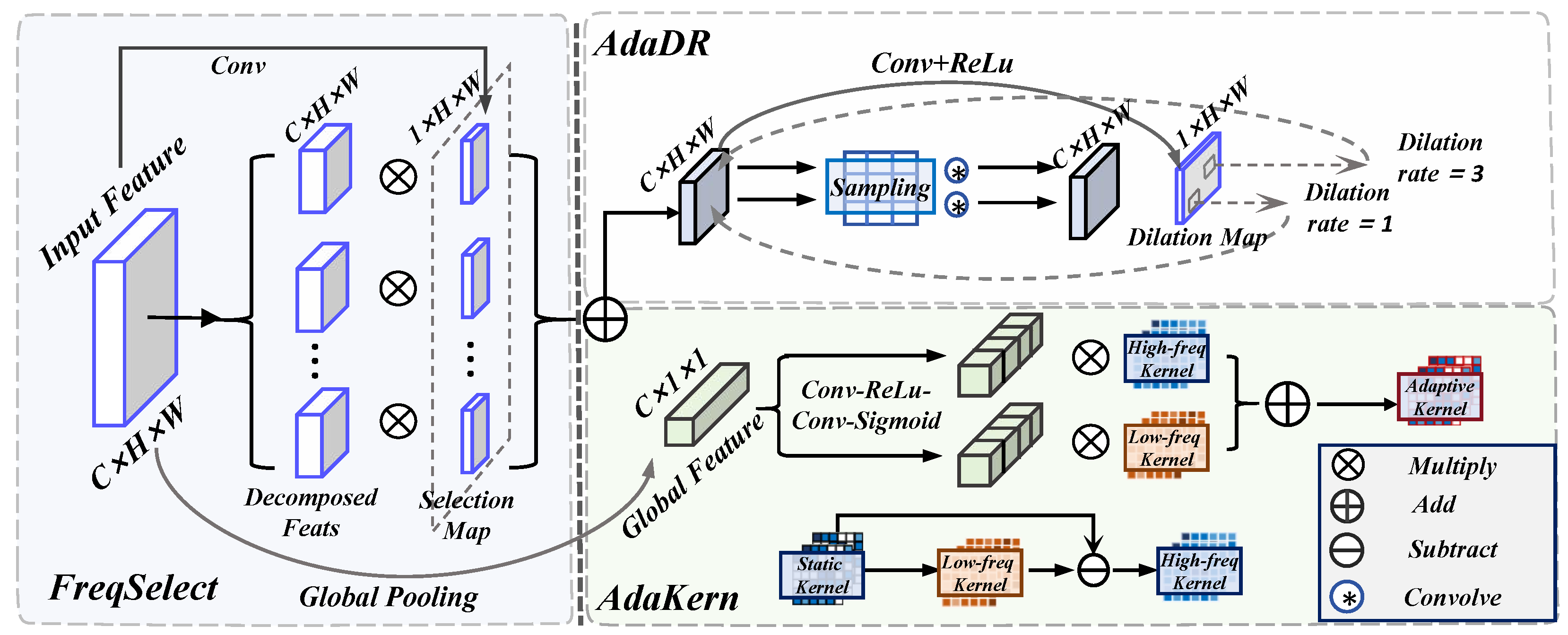

3.6. Frequency-Adaptive Dilated Convolution (FADC)

3.7. Embedded Deployment and Optimization

4. Results

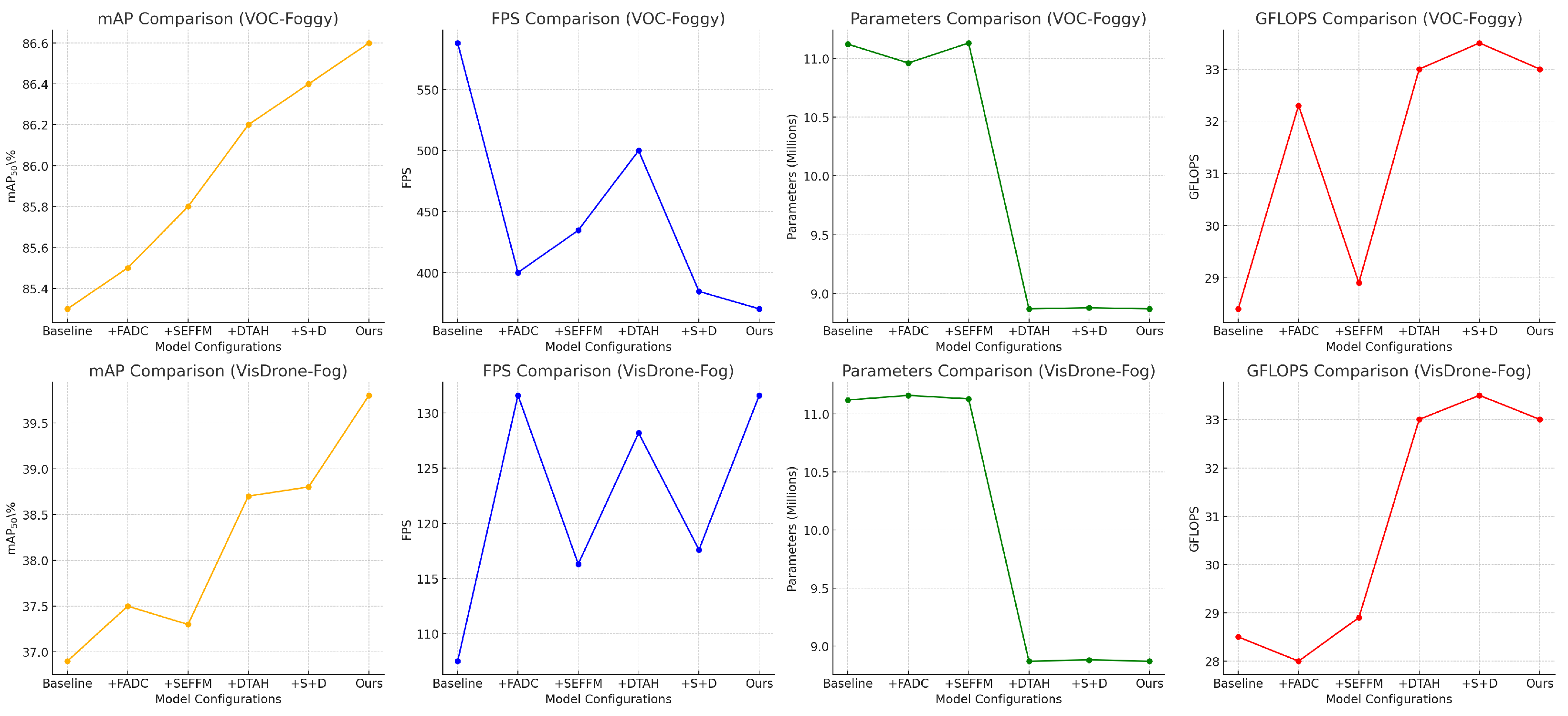

4.1. Ablation Studies

4.1.1. Ablation Studies on VOC-Foggy

4.1.2. Ablation Studies on VisDrone-Fog

4.2. Comparison with State-of-the-Art Methods

4.2.1. Comparison on Synthetic Dataset

4.2.2. Comparison on Real-World Datasets

4.2.3. Comparison on Foggy Datasets

4.3. Edge Deployment Evaluation

4.4. Object Detection on VisDrone and VisDrone-Fog Datasets

4.5. Visual Analysis of UAV Object Detection in Foggy Conditions

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Full name |

|---|---|

| Fog-UAVNet | Fog-aware Unmanned Aerial Vehicle Network |

| UAV | Unmanned Aerial Vehicle |

| IoT | Internet of Things |

| SEFFM | Spatial-Edge Feature Fusion Module |

| FADC | Frequency-Adaptive Dilated Convolution |

| DTAH | Dynamic Task-Aligned Head |

| SPPF | Spatial Pyramid Pooling-Fast |

| FreqSelect | Frequency Selection |

| AdaDR | Adaptive Dilation Rate |

| AdaKern | Adaptive Kernel |

| CNN | Convolutional Neural Network |

| AP | Average Precision |

| mAP | Mean Average Precision |

| mA | Mean Average Precision at IoU = 0.5 |

| IoU | Intersection over Union |

| FPS | Frames Per Second |

| VOC | Visual Object Classes |

| VOC-Foggy | Foggy Visual Object Classes (foggy PASCAL VOC) |

| VOC-Norm | Normal Visual Object Classes (clear PASCAL VOC) |

| VisDrone-Fog | Foggy VisDrone Dataset |

| RTTS | Real-world Task-driven Testing Set |

| SOTA | State of the Art |

References

- Buzcu, B.; Özgün, M.; Gürcan, G.; Aydoğan, R. Fully Autonomous Trustworthy Unmanned Aerial Vehicle Teamwork: A Research Guideline Using Level 2 Blockchain. IEEE Robot. Autom. Mag. 2024, 31, 78–88.

- Chandran, I.; Vipin, K. Multi-UAV networks for disaster monitoring: Challenges and opportunities from a network perspective. Drone Syst. Appl. 2024, 12, 1–28.

- Piao, S.; Li, N.; Miao, Z. Research on UAV Vision Landing Target Detection and Obstacle Avoidance Algorithm. In Proceedings of the 2023 42nd Chinese Control Conference (CCC), Tianjin, China, 24–26 July 2023; pp. 4443–4448.

- Yang, X.; Mi, M.B.; Yuan, Y.; Wang, X.; Tan, R.T. Object Detection in Foggy Scenes by Embedding Depth and Reconstruction into Domain Adaptation. In Proceedings of the Computer Vision – ACCV 2022: 16th Asian Conference on Computer Vision, Macao, China, 4–8 December 2022; pp. 303–318.

- Tahir, N.U.A.; Zhang, Z.; Asim, M.; Chen, J.; ELAffendi, M. Object Detection in Autonomous Vehicles under Adverse Weather: A Review of Traditional and Deep Learning Approaches. Algorithms 2024, 17, 103.

- Sarker, I.H. Machine Learning: Algorithms, Real-World Applications and Research Directions. SN Comput. Sci. 2021, 2, 160.

- Liu, S.; Bai, Y. Multiple UAVs collaborative traffic monitoring with intention-based communication. Comput. Commun. 2023, 210, 116–129.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision – ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Dong, Q.; Han, T.; Wu, G.; Qiao, B.; Sun, L. RSNet: Compact-Align Detection Head Embedded Lightweight Network for Small Object Detection in Remote Sensing. Remote Sens. 2025, 17, 1965.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Computer Vision – ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer: Cham, Switzerland, 2020; pp. 213–229.

- Dong, Q.; Han, T.; Wu, G.; Sun, L.; Huang, M.; Zhang, F. Industrial device-aided data collection for real-time rail defect detection via a lightweight network. Eng. Appl. Artif. Intell. 2025, 161, 112102.

- Chen, Z.; Yang, J.; Chen, L.; Li, F.; Feng, Z.; Jia, L.; Li, P. RailVoxelDet: A Lightweight 3-D Object Detection Method for Railway Transportation Driven by Onboard LiDAR Data. IEEE Internet Things J. 2025, 12, 37175–37189.

- Keshun, Y.; Yingkui, G.; Yanghui, L.; Yajun, W. A novel physical constraint-guided quadratic neural networks for interpretable bearing fault diagnosis under zero-fault sample. Nondestruct. Test. Eval. 2025, 1–31.

- Butilă, E.V.; Boboc, R.G. Urban Traffic Monitoring and Analysis Using Unmanned Aerial Vehicles (UAVs): A Systematic Literature Review. Remote Sens. 2022, 14, 620.

- Ye, T.; Zhang, Y.; Jiang, M.; Chen, L.; Liu, Y.; Chen, S.; Chen, E. Perceiving and Modeling Density for Image Dehazing. In Computer Vision – ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022, Proceedings, Part XIX; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer: Cham, Switzerland, 2022; pp. 130–145.

- Guo, C.; Yan, Q.; Anwar, S.; Cong, R.; Ren, W.; Li, C. Image Dehazing Transformer with Transmission-Aware 3D Position Embedding. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 5802–5810.

- Song, Y.; He, Z.; Qian, H.; Du, X. Vision Transformers for Single Image Dehazing. IEEE Trans. Image Process. 2023, 32, 1927–1941.

- Bai, H.; Pan, J.; Xiang, X.; Tang, J. Self-Guided Image Dehazing Using Progressive Feature Fusion. IEEE Trans. Image Process. 2022, 31, 1217–1229.

- Qiu, Y.; Lu, Y.; Wang, Y.; Jiang, H. IDOD-YOLOV7: Image-Dehazing YOLOV7 for Object Detection in Low-Light Foggy Traffic Environments. Sensors 2023, 23, 1347.

- Liu, W.; Ren, G.; Yu, R.; Guo, S.; Zhu, J.; Zhang, L. Image-Adaptive YOLO for Object Detection in Adverse Weather Conditions. In Proceedings of the AAAI Conference on Artificial Intelligence, Pomona, CA, USA, 24–28 October 2022.

- Wang, Y.; Yan, X.; Zhang, K.; Gong, L.; Xie, H.; Wang, F.L.; Wei, M. TogetherNet: Bridging Image Restoration and Object Detection Together via Dynamic Enhancement Learning. Comput. Graph. Forum 2022, 41, 465–476.

- Hnewa, M.; Radha, H. Multiscale Domain Adaptive Yolo For Cross-Domain Object Detection. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; IEEE: Piscataway, NJ, USA, 2021.

- Min, X.; Zhou, W.; Hu, R.; Wu, Y.; Pang, Y.; Yi, J. LWUAVDet: A Lightweight UAV Object Detection Network on Edge Devices. IEEE Internet Things J. 2024, 11, 24013–24023.

- Xiong, X.; He, M.; Li, T.; Zheng, G.; Xu, W.; Fan, X.; Zhang, Y. Adaptive Feature Fusion and Improved Attention Mechanism-Based Small Object Detection for UAV Target Tracking. IEEE Internet Things J. 2024, 11, 21239–21249.

- Bisio, I.; Haleem, H.; Garibotto, C.; Lavagetto, F.; Sciarrone, A. Performance Evaluation and Analysis of Drone-Based Vehicle Detection Techniques From Deep Learning Perspective. IEEE Internet Things J. 2022, 9, 10920–10935.

- Liu, K.; Peng, D.; Li, T. Multimodal Remote Sensing Object Detection Based on Prior-Enhanced Mixture-of-Experts Fusion Network. IEEE Trans. Geosci. Remote Sens. 2025, 63, 1–14.

- Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and tracking meet drones challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7380–7399.

- Everingham, M.; Gool, L.V.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Sindagi, V.A.; Oza, P.; Yasarla, R.; Patel, V.M. Prior-Based Domain Adaptive Object Detection for Hazy and Rainy Conditions. In Computer Vision – ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 763–780.

- Hahner, M.; Dai, D.; Sakaridis, C.; Zaech, J.N.; Gool, L.V. Semantic Understanding of Foggy Scenes with Purely Synthetic Data. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 3675–3681.

- Wu, Y.; He, K. Group Normalization. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- Chen, L.; Gu, L.; Zheng, D.; Fu, Y. Frequency-Adaptive Dilated Convolution for Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 3414–3425.

- Zhao, S.; Fang, Y. DCP-YOLOv7: Dark channel prior image-dehazing YOLOv7 for vehicle detection in foggy scene. In Proceedings of the International Conference on Computer Vision and Pattern Analysis, Hangzhou, China, 31 March–2 April 2023.

- Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. AOD-Net: All-in-One Dehazing Network. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4780–4788.

- Qin, X.; Wang, Z.; Bai, Y.; Xie, X.; Jia, H. FFA-Net: Feature fusion attention network for single image dehazing. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11908–11915.

- Li, C.; Wang, G.; Wang, B.; Liang, X.; Li, Z.; Chang, X. Dynamic Slimmable Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021.

| Dataset | Images | Person | Bicycle | Car | Bus | Motor | Truck | Total |

|---|---|---|---|---|---|---|---|---|

| VOC-Foggy-train | 8111 | 13,256 | 1064 | 3267 | 822 | 1052 | - | 19,561 |

| VOC-Foggy-val | 2734 | 4528 | 377 | 1201 | 213 | 325 | - | 6604 |

| RTTS | 4322 | 7950 | 534 | 18,413 | 1838 | 862 | - | 29,577 |

| Foggy Datasets | 8700 | 18,389 | 1227 | 29,521 | 2070 | 1606 | 2888 | 55,701 |

| Foggy Driving | 101 | 181 | 12 | 295 | 21 | 16 | - | 525 |

| Model | Person AP% | Bicycle AP% | Car AP% | Motorbike AP% | Bus AP% | mA% | FPS | Parameters (M) | GFLOPs (G) |

|---|---|---|---|---|---|---|---|---|---|

| baseline | 83.8 | 87.1 | 88.2 | 85.3 | 82.3 | 85.3 | 588.1 | 11.12 | 28.4 |

| +FADC | 84.0 | 86.8 | 88.2 | 84.9 | 83.2 | 85.5 | 400.0 | 10.96 | 32.3 |

| +SEFFM | 84.2 | 87.3 | 88.7 | 85.7 | 83.2 | 85.8 | 434.7 | 11.13 | 28.9 |

| +DTAH | 84.9 | 86.9 | 89.4 | 85.2 | 84.7 | 86.2 | 500.0 | 8.87 | 33.0 |

| +SEFFM + DTAH | 84.3 | 87.9 | 89.3 | 86.2 | 84.7 | 86.4 | 384.6 | 8.88 | 33.5 |

| Fog-UAVNet | 84.9 | 88.5 | 89.6 | 85.9 | 84.9 | 86.6 | 370.3 | 8.87 | 33.0 |

| Model | Pedestrian | People | Bicycle | Car | Van | Truck | Tricycle | Awning Tricycle | Bus | Motor | mA% | FPS | Params (M) | GFLOPs (G) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| baseline | 40.9 | 30.6 | 10.7 | 78.2 | 41.8 | 33.9 | 23.8 | 13.4 | 53.8 | 41.9 | 36.9 | 107.5 | 11.12 | 28.5 |

| +FADC | 40.2 | 31.9 | 10.9 | 78.4 | 40.9 | 34.8 | 25.9 | 14.0 | 56.6 | 41.8 | 37.5 | 131.6 | 11.16 | 28.0 |

| +SEFFM | 40.5 | 31.5 | 11.1 | 78.7 | 43.0 | 32.9 | 25.8 | 13.8 | 53.9 | 41.8 | 37.3 | 116.3 | 11.13 | 28.9 |

| +DTAH | 42.9 | 33.0 | 12.1 | 79.2 | 43.0 | 36.3 | 25.5 | 13.6 | 57.7 | 44.0 | 38.7 | 128.2 | 8.87 | 33.0 |

| +SEFFM + DTAH | 42.5 | 33.3 | 11.9 | 79.3 | 44.1 | 35.7 | 25.8 | 14.1 | 58.3 | 43.3 | 38.8 | 117.6 | 8.88 | 33.5 |

| Fog-UAVNet | 43.6 | 33.9 | 12.3 | 79.9 | 44.6 | 37.0 | 28.5 | 14.7 | 58.7 | 44.4 | 39.8 | 131.6 | 8.87 | 33.0 |

| Method | Train Dataset | Type | Person | Bicycle | Car | Motor | Bus | mA |

|---|---|---|---|---|---|---|---|---|

| YOLOv8s | VOC-FOG | Baseline | 83.81 | 87.13 | 86.20 | 84.01 | 82.32 | 85.30 |

| YOLOv8s * | VOC-Norm | Baseline | 79.97 | 67.95 | 74.75 | 58.62 | 83.12 | 72.88 |

| DCP-YOLO * [37] | VOC-Norm | Dehaze | 81.58 | 78.80 | 79.75 | 78.51 | 85.64 | 80.86 |

| AOD-Net * [38] | VOC-Norm | Dehaze | 81.26 | 73.56 | 76.98 | 71.18 | 83.08 | 77.21 |

| Semi-YOLO * | VOC-Norm | Dehaze | 81.15 | 76.94 | 76.92 | 72.89 | 84.88 | 78.56 |

| FFA-Net * [39] | VOC-Norm | Dehaze | 78.30 | 70.31 | 69.97 | 68.80 | 80.72 | 73.62 |

| MS-DAYOLO [25] | VOC-FOG | Dehaze | 82.52 | 75.62 | 86.93 | 81.92 | 90.10 | 83.42 |

| DS-Net [40] | VOC-FOG | Multi-task | 72.44 | 60.47 | 81.27 | 53.85 | 61.43 | 65.89 |

| IA-YOLO [23] | VOC-FOG | I-adaptive | 70.98 | 61.98 | 70.98 | 57.93 | 61.98 | 64.77 |

| TogetherNet [24] | VOC-FOG | Multi-task | 87.62 | 78.19 | 85.92 | 84.03 | 88.75 | 85.90 |

| Fog-UAVNet | VOC-FOG | Multi-task | 84.91 | 87.92 | 89.62 | 86.23 | 84.95 | 86.60 |

| Method | Person | Bicycle | Car | Motor | Bus | mA |

|---|---|---|---|---|---|---|

| YOLOv8s | 80.88 | 64.27 | 56.39 | 52.74 | 29.60 | 56.74 |

| AOD-Net | 77.26 | 62.43 | 56.70 | 53.45 | 30.01 | 55.83 |

| GCA-YOLO | 79.12 | 67.10 | 56.41 | 58.68 | 34.17 | 58.64 |

| DCP-YOLO | 78.69 | 67.99 | 55.50 | 57.57 | 33.27 | 58.32 |

| FFA-Net | 77.12 | 66.51 | 64.23 | 40.64 | 23.71 | 52.64 |

| MS-DAYOLO | 74.22 | 44.13 | 70.91 | 38.64 | 36.54 | 57.39 |

| DS-Net | 68.81 | 18.02 | 46.13 | 15.44 | 15.44 | 32.71 |

| IA-YOLO | 67.25 | 35.84 | 42.65 | 17.64 | 37.04 | 37.55 |

| TogetherNet | 82.70 | 57.27 | 75.31 | 55.40 | 37.04 | 61.55 |

| Ours | 79.60 | 56.90 | 79.90 | 51.30 | 56.94 | 63.21 |

| Methods | Person | Bicycle | Car | Motor | Bus | mA |

|---|---|---|---|---|---|---|

| YOLOv8s | 24.36 | 27.25 | 55.08 | 8.04 | 44.79 | 33.06 |

| AOD-Net | 26.15 | 33.72 | 56.95 | 6.44 | 34.89 | 32.51 |

| GCA-YOLO | 27.96 | 34.11 | 56.36 | 6.77 | 34.21 | 33.77 |

| DCP-YOLO | 22.64 | 11.07 | 56.37 | 4.66 | 36.03 | 31.56 |

| FFA-Net | 19.22 | 21.40 | 50.64 | 3.69 | 43.85 | 28.74 |

| MS-DAYOLO | 21.52 | 34.57 | 57.41 | 18.20 | 46.75 | 34.89 |

| DS-Net | 26.74 | 20.54 | 54.16 | 7.14 | 36.11 | 29.74 |

| IA-YOLO | 20.24 | 19.04 | 50.67 | 14.87 | 22.97 | 31.55 |

| TogetherNet | 30.48 | 30.47 | 57.87 | 14.87 | 40.88 | 36.75 |

| Ours | 35.69 | 35.26 | 59.15 | 16.17 | 45.88 | 38.41 |

| Methods | Person | Bicycle | Car | Bus | Motor | Truck | mA |

|---|---|---|---|---|---|---|---|

| Faster R-CNN | 92.96 | 92.41 | 59.36 | 91.42 | 34.81 | 80.57 | 79.74 |

| Grid R-CNN | 92.64 | 11.07 | 56.37 | 4.66 | 30.63 | 31.56 | 26.56 |

| dynamic R-CNN | 89.22 | 79.70 | 51.64 | 69.49 | 62.35 | 58.94 | 79.74 |

| YOLOv5 | 93.74 | 74.94 | 92.16 | 87.54 | 87.91 | 83.04 | 86.60 |

| YOLOv6 | 93.54 | 73.34 | 93.27 | 85.57 | 86.97 | 80.95 | 85.23 |

| YOLOv3-tiny | 90.24 | 78.04 | 89.67 | 88.57 | 86.97 | 80.95 | 85.93 |

| Cascade R-CNN | 91.15 | 92.90 | 66.95 | 92.61 | 79.89 | 84.21 | 83.27 |

| Ours | 96.50 | 90.00 | 96.25 | 91.90 | 92.40 | 83.10 | 91.90 |

| Model | Parameters (M) | FPS (Jetson Nano) | GFLOPs (G) |

|---|---|---|---|

| baseline | 11.12 | 19.4 | 28.5 |

| Fog-UAVNet | 8.87 | 78.5 | 33.0 |
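The trade-off in the edge deployment table can be made explicit with a short calculation. The sketch below only re-expresses the reported numbers as relative changes; it assumes the parameter column is in millions, and the dictionary keys and helper name are hypothetical.

```python
# Values copied from the edge deployment table (Jetson Nano).
baseline = {"params_m": 11.12, "fps": 19.4, "gflops": 28.5}
fog_uavnet = {"params_m": 8.87, "fps": 78.5, "gflops": 33.0}


def relative_change(new, old):
    """Percentage change of `new` with respect to `old`."""
    return (new - old) / old * 100.0


# Negative means Fog-UAVNet is smaller than the baseline.
param_change = relative_change(fog_uavnet["params_m"], baseline["params_m"])
# Positive means Fog-UAVNet performs more floating-point operations.
gflops_change = relative_change(fog_uavnet["gflops"], baseline["gflops"])
# Ratio of throughputs on the same device.
speedup = fog_uavnet["fps"] / baseline["fps"]

print(f"parameters: {param_change:+.1f}%")
print(f"GFLOPs:     {gflops_change:+.1f}%")
print(f"FPS ratio:  {speedup:.2f}x")
```

Read this way, the table reports roughly a fifth fewer parameters and about four times the throughput, alongside a moderate increase in GFLOPs.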

| Model | Pedestrian | People | Bicycle | Car | Van | Truck | Tricycle | Awning Tricycle | Bus | Motor | mA |

|---|---|---|---|---|---|---|---|---|---|---|---|

| VisDrone Test Set | | | | | | | | | | | |

| SSD | 18.7% | 9.0% | 7.3% | 63.2% | 29.9% | 33.1% | 11.7% | 11.1% | 49.8% | 19.1% | 25.3% |

| Faster R-CNN | 12.5% | 8.1% | 5.8% | 44.1% | 20.4% | 19.0% | 8.5% | 8.73% | 43.8% | 16.8% | 19.9% |

| Cascade-RCNN | 22.2% | 14.8% | 7.6% | 54.6% | 31.5% | 21.0% | 14.8% | 8.6% | 33.0% | 21.4% | 23.2% |

| CenterNet | 22.9% | 11.6% | 7.5% | 61.9% | 19.4% | 24.7% | 13.1% | 14.2% | 42.6% | 18.8% | 23.7% |

| YOLOv8 | 40.4% | 32.2% | 11.6% | 78.7% | 43.5% | 35.6% | 25.6% | 15.3% | 53.5% | 42.3% | 37.7% |

| FiFoNet | 45.1% | 35.4% | 12.8% | 79.1% | 40.1% | 34.3% | 22.0% | 12.1% | 48.6% | 42.4% | 37.3% |

| YOLOv10 | 43.4% | 25.1% | 8.65% | 75.7% | 38.1% | 27.7% | 20.5% | 11.3% | 45.5% | 36.9% | 36.6% |

| LWUAVDet-S | 44.0% | 34.6% | 14.2% | 78.9% | 45.6% | 35.8% | 25.7% | 15.8% | 56.7% | 43.4% | 40.3% |

| LW-YOLOv8 | 39.3% | 31.9% | 9.2% | 78.5% | 42.1% | 30.8% | 23.8% | 15.0% | 50.6% | 41.4% | 36.3% |

| Fog-UAVNet | 43.3% | 33.8% | 14.8% | 79.6% | 45.5% | 36.5% | 26.5% | 15.2% | 56.1% | 43.9% | 39.6% |

| VisDrone-Fog Test Set | | | | | | | | | | | |

| SSD | 16.2% | 8.0% | 6.2% | 54.3% | 26.7% | 29.5% | 10.4% | 10.0% | 43.1% | 17.2% | 22.1% |

| Faster R-CNN | 10.8% | 6.8% | 4.9% | 37.9% | 17.6% | 16.4% | 7.3% | 7.5% | 37.6% | 15.4% | 17.2% |

| Cascade-RCNN | 19.2% | 12.8% | 6.6% | 46.9% | 27.1% | 18.1% | 13.2% | 7.6% | 28.4% | 18.9% | 20.2% |

| CenterNet | 20.1% | 10.3% | 6.1% | 53.7% | 16.8% | 21.4% | 11.3% | 12.4% | 36.9% | 16.1% | 20.5% |

| YOLOv7-tiny | 34.2% | 31.2% | 8.2% | 66.5% | 32.9% | 26.0% | 16.6% | 8.7% | 41.2% | 37.1% | 30.2% |

| YOLOv8 | 40.5% | 31.5% | 11.1% | 78.5% | 43.4% | 32.1% | 25.8% | 13.5% | 53.2% | 41.9% | 36.8% |

| FiFoNet | 44.9% | 35.8% | 11.8% | 78.3% | 41.1% | 33.9% | 22.4% | 12.3% | 48.7% | 42.6% | 36.9% |

| YOLOv10 | 39.1% | 20.3% | 6.9% | 70.5% | 34.2% | 23.4% | 17.6% | 9.2% | 40.8% | 32.0% | 33.5% |

| LWUAVDet-S | 33.5% | 27.2% | 7.8% | 67.6% | 35.4% | 26.7% | 20.7% | 13.5% | 42.5% | 34.8% | 33.1% |

| LW-YOLOv8 | 30.5% | 22.2% | 6.9% | 57.1% | 30.2% | 23.7% | 15.1% | 9.5% | 41.8% | 32.8% | 29.9% |

| Fog-UAVNet | 43.6% | 33.9% | 12.3% | 79.9% | 44.6% | 37.0% | 28.5% | 14.7% | 58.7% | 44.1% | 39.8% |


© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.

Citation: Dong, Q.; Han, T.; Wu, G.; Sun, L.; Lu, Y. Robust Object Detection for UAVs in Foggy Environments with Spatial-Edge Fusion and Dynamic Task Alignment. Remote Sens. 2026, 18, 169. https://doi.org/10.3390/rs18010169