Abstract

Unmanned aerial vehicle (UAV) remote sensing has emerged as a powerful tool for precision agriculture, offering high-resolution crop monitoring capabilities. However, multi-flight UAV missions introduce radiometric inconsistencies that hinder the accuracy of vegetation indices and physiological trait estimation. This study investigates the efficacy of relative radiometric correction in enhancing canopy chlorophyll content (CCC) estimation for winter wheat. Dual UAV sensor configurations captured multi-flight imagery across three experimental sites and key wheat phenological stages (the green-up, heading, and grain filling stages). Sentinel-2 data served as an external radiometric reference. The results indicate that relative radiometric correction significantly improved spectral consistency, reducing RMSE values (in spectral bands by >86% and in vegetation indices by 38–96%) and enhancing correlations with Sentinel-2 reflectance. The predictive accuracy of CCC models improved after the relative radiometric correction, with validation errors decreasing by 17.1–45.6% across different growth stages and with full-season integration yielding a 44.3% reduction. These findings confirm the critical role of relative radiometric correction in optimizing multi-flight UAV-based chlorophyll estimation, reinforcing its applicability for dynamic agricultural monitoring.

1. Introduction

Precision agriculture technologies are revolutionizing global food security strategies by enabling real-time crop health diagnostics. As wheat is a major staple crop globally, the advanced monitoring of wheat growth dynamics enables targeted interventions that optimize resource allocation. In particular, chlorophyll content monitoring emerges as a critical physiological indicator, as it directly correlates with photosynthetic capacity and nitrogen status [1,2,3]. Therefore, precise quantification of wheat chlorophyll content is critical to optimizing precision agriculture frameworks, bridging physiological insights with actionable agronomic decisions.

Conventional wheat chlorophyll monitoring methodologies face fundamental tradeoffs between spatial granularity and operational scalability. Field surveys, while providing localized accuracy, suffer from labor intensiveness and limited spatial representativeness. Conversely, satellite platforms achieve regional-scale coverage but with inherent resolution constraints (>10 m pixels) that obscure critical field-scale heterogeneities. As an emerging technology, unmanned aerial vehicles (UAVs) have revolutionized field-scale agricultural monitoring [4,5,6]. However, current UAV operational constraints, including limited battery endurance (typically <30 min) and regulatory altitude restrictions (commonly <120 m), restrict single-flight coverage to approximately 2–3 km2 under standard operational parameters. This technological limitation has confined most existing research to localized experimental plots [7,8], significantly impeding the scalability of UAV applications for regional agricultural management.

Large-area precision mapping thus requires multiple UAV flights [9,10]. However, multi-flight UAV datasets exhibit inherent spectral variability: identical surface features may show different reflectance values across acquisition campaigns. The resulting spectral inconsistencies introduce substantial uncertainties in the comparative analysis of crop growth dynamics. Radiometric correction of multi-flight UAV data is therefore essential to maintain physiological signal fidelity in large-area UAV chlorophyll mapping [11].

Radiometric correction techniques are primarily classified into two categories: absolute radiometric correction and relative radiometric correction [12]. Absolute approaches, alternatively termed absolute atmospheric correction, aim to remove atmospheric effects, requiring synchronized meteorological measurements (atmospheric pressure, temperature, humidity, etc.) that are rarely obtainable at UAV operational scales [13,14,15,16,17]. Conversely, relative radiometric correction operates through reference-image normalization without requiring supplementary atmospheric data [18,19]. Due to the significant operational advantages of simplified computational workflow and reduced data dependency, relative radiometric correction has become the preferred methodology for enhancing radiometric consistency in remote sensing images acquired at different times [20,21]. A representative approach in relative radiometric correction involves pseudo-invariant-feature (PIF)-based methodologies. These techniques normalize target images to a reference image through linear/nonlinear transformations, predicated on two core assumptions: (1) sufficient spectrally stable PIFs exist across all images; (2) inter-image radiometric discrepancies follow mathematically definable relationships [19,22,23,24]. While effective for multi-temporal satellite imagery captured by identical sensors over consistent areas [25,26], PIF-based correction proves inadequate for multi-flight UAV datasets given their partial or non-overlapping coverage, where insufficient PIFs may exist. An alternative paradigm employs exterior reference normalization, utilizing concurrent imagery from auxiliary sensors (typically higher-quality, lower-resolution datasets) for cross-calibration. Originally developed for satellite sensor inter-calibration [27,28], these methods have been adapted for UAV applications [11,29,30,31]. 
In particular, a relative radiometric correction method for multi-flight unmanned aerial vehicle images based on concurrent satellite imagery (MACA) was proposed and has been successfully applied to the prediction of winter wheat nitrogen accumulation [11]. However, the operational efficacy of such exterior-reference strategies remains unverified for chlorophyll content prediction across heterogeneous sensor configurations and dynamic phenological stages.

To address these critical research gaps, this study investigated the effectiveness of relative radiometric correction in multi-flight UAV-based chlorophyll content estimation. The specific objectives were as follows: (1) to assess the applicability of relative radiometric correction across different UAV sensor systems; (2) to examine the enhancement potential of this correction method for cross-temporal UAV modeling throughout wheat phenological stages. To do this, we employed dual UAV sensor configurations to capture multi-flight imagery across three experimental sites and key growth stages. The MACA method was implemented using concurrent Sentinel-2 data as an external reference standard. Through comparative analysis of the relationships between Sentinel-2 observations and both uncorrected/corrected UAV datasets, we evaluated MACA’s performance at both spectral band and vegetation index (VI) levels. Furthermore, we quantified the methodological contributions to canopy chlorophyll content (CCC) prediction accuracy across study sites and wheat growth stages. Finally, the discussion emphasized the operational implications of relative radiometric correction for optimizing multi-sensor synergy in precision agriculture.

2. Materials and Methods

2.1. Experimental Sites and Design

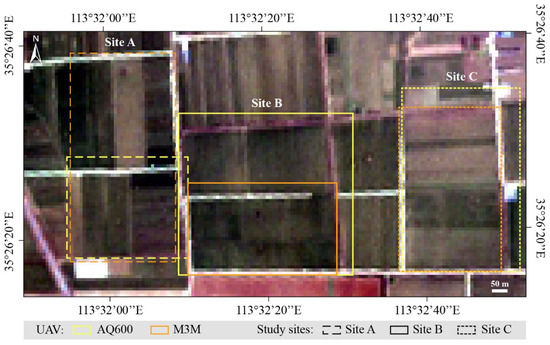

The study area is located in Baobi County, Xinxiang City, Henan Province (35.4448° N, 113.5305° E), one of the major wheat producing regions in China (Figure 1). The climate of this area is characterized by a warm temperate continental monsoon, with an annual average air temperature of 14 °C and mean annual precipitation of 590 mm. The soil type is predominantly tidal soil, which is highly fertile and conducive to agricultural cultivation. Winter wheat in this region is typically sown in October and harvested in June of the following year.

Figure 1.

Study sites in Baobi County, Xinxiang City, Henan Province, China. The displayed image is the Sentinel-2 RGB image on DOY 62 at 10 m. The coverage for each site indicates the extent of the AQ600 and Mavic 3 multispectral (M3M) imagery. The flight routes for each drone are shown in Figure S1 in the Supplementary Materials.

In the study area, we selected three sites with varying winter wheat growth status (Figure 1). Firstly, multi-flight UAV images were collected for each study site, along with the concurrent satellite data and field measurements (Section 2.2). Secondly, the relative radiometric correction method for multi-flight UAV images based on concurrent satellite imagery (i.e., MACA) was used to generate the radiometrically corrected UAV images (Section 2.3). Finally, to evaluate the impact of relative radiometric correction on wheat CCC estimation, the multi-flight UAV data before and after the radiometric correction were compared with satellite data, including comparisons of the corresponding spectral bands, VIs, and the performance of CCC inversion models (Section 2.4).

2.2. Data Acquisition

2.2.1. UAV Data

To assess the performance of relative radiometric correction on different UAV data, two UAV-mounted sensors were used in this study, i.e., the AQ600 Pro multispectral camera and the DJI Mavic 3 Multispectral (M3M). The AQ600 (YUSENSE, Qingdao, China) is a high-precision imaging system designed for UAV-based remote sensing, integrating five spectral bands, i.e., blue, green, red, near infrared (NIR), and red edge (RE) bands (Table 1). The AQ600 was mounted on a DJI Matrice 300 quadcopter (SZ DJI Technology Co., Ltd., Shenzhen, China). The M3M (SZ DJI Technology Co., Ltd., Shenzhen, China) is a compact UAV equipped with an integrated multispectral imaging system, featuring four bands, i.e., green, red, near infrared, and red edge (Table 1). Its built-in RTK module ensures centimeter-level positioning accuracy, while the sunlight intensity sensor compensates for irradiance variations.

Table 1.

The satellite and UAV data used in this study.

All UAV images were collected in cloud-free conditions between 10:00 and 13:00 local time (see detailed weather conditions in Table S1 in Supplementary Materials). Due to the logistical constraints of UAV deployment and equipment limitations, each UAV system was operated separately during different wheat growth stages. The AQ600 was used to collect the data on day of year (DOY) 62, and the M3M was operated on DOY 107 and 129. This scheduling approach allowed optimal use of clear weather windows and ensured standardized flight conditions for each sensor. Additionally, this temporal separation was intentionally designed to evaluate MACA’s ability to harmonize data acquired by heterogeneous sensors across varying phenological stages, thereby offering a more comprehensive assessment of its robustness under realistic operational scenarios. The AQ600 and M3M were flown at 180 m with a speed of 8 m/s and 75 m with a speed of 6 m/s, respectively. These flight parameters were optimized based on the inherent operational constraints and sensor specifications of each UAV system to balance battery endurance, equipment stability, and spatial coverage. For instance, the speed adjustments (8 m/s for AQ600 vs. 6 m/s for M3M) ensured stable image acquisition given differences in payload weight and gimbal stabilization capabilities. All images were collected once every second with 70% side overlap and 80% forward overlap, adhering to photogrammetric best practices for minimizing gaps and ensuring seamless orthomosaic generation. The AQ600 images were processed in Yusense Map (YUSENSE, Qingdao, China), and the M3M images were processed in Agisoft Metashape (Agisoft LLC, St. Petersburg, Russia). 
Generally, the UAV image pre-processing included six steps: (1) reflectance calibration based on calibration panels with surface reflectances of 25%, 50%, and 70%; (2) photo alignment; (3) geometric correction based on ground control points (GCPs) whose coordinates were measured with a T5 Pro RTK (Shanghai Huace Navigation Technology Ltd., Shanghai, China); (4) dense cloud construction; (5) digital elevation model (DEM) construction; (6) orthomosaic production. Consequently, UAV images with a spatial resolution of 0.1 m were generated for analysis.

2.2.2. Sentinel-2 Data

The Sentinel-2 multispectral instrument (MSI) Level-2A surface reflectance products were obtained from the Copernicus Data Space Ecosystem. These bottom-of-atmosphere (BOA) reflectance products are generated by the Sen2Cor atmospheric correction processor [32], which relies on the libRadtran radiative transfer model [33] to account for atmospheric scattering and absorption effects. Extensive validation studies [34] have demonstrated radiometric uncertainties below 3% across reflective wavelength bands (490–2190 nm), establishing these geometrically corrected products as a reliable reference dataset for relative radiometric correction.

Five bands with similar wavelength configurations to the bands of UAV data were used in this study (Table 1). The RE band data, with a resolution of 20 m, were resampled to 10 m using SNAP (ESA Sentinel Application Platform). Furthermore, the image pairs of UAV and Sentinel-2 data were manually co-registered based on GCPs and cropped to the same area in ENVI 5.3 (Exelis, Boulder, CO, USA).

2.2.3. Wheat CCC Measurement

This study focused on winter wheat (Triticum aestivum L., cultivar ‘Zhongmai 578’), sown in drills. Field measurements were synchronized with UAV data acquisition across three critical growth stages, employing a stratified random sampling strategy across Sites A, B, and C (Figure 1). A total of 84–93 georeferenced plots per field survey were positioned with a T5 Pro RTK GNSS receiver (sub-centimeter accuracy) (CHC Navigation, Shanghai, China) and characterized using destructive quantification methods. Destructive sampling was conducted within a 0.2 m × 0.2 m quadrat with stand closure exceeding 90%, ensuring plot homogeneity. At each plot, all leaves were excised from the plants. Leaf area was measured using a CI-110 plant canopy imager, and leaf area index (LAI, m2/m2) was calculated as the ratio of total leaf area to plot area. Leaf chlorophyll content (LCC, μg/cm2) was measured through standard wet chemistry procedures [35]. LAI and LCC ranges were 0.47–7.06 m2/m2 and 18.9–53.5 μg/cm2, respectively, across growth stages. Canopy chlorophyll content (CCC, g/m2) was derived as the product of LAI and LCC (CCC = LAI × LCC), ensuring biophysical consistency with canopy-scale photosynthetic capacity.
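The CCC derivation above can be illustrated with a minimal Python sketch. The function name and the explicit conversion factor are assumptions for illustration: the text states only CCC = LAI × LCC, and the factor of 0.01 is the unit conversion implied by reporting LCC in μg/cm2 and CCC in g/m2.

```python
def canopy_chlorophyll_content(lai: float, lcc_ug_per_cm2: float) -> float:
    """Return CCC in g/m2 from LAI (m2/m2) and LCC (ug/cm2)."""
    # Unit conversion (assumed from the stated units):
    # 1 ug/cm2 = 1e-6 g / 1e-4 m2 = 0.01 g/m2
    ug_cm2_to_g_m2 = 0.01
    return lai * lcc_ug_per_cm2 * ug_cm2_to_g_m2


# Bounding values built from the reported LAI and LCC ranges.
# These are not measured plot pairs, only the extremes of each range.
ccc_low = canopy_chlorophyll_content(0.47, 18.9)
ccc_high = canopy_chlorophyll_content(7.06, 53.5)
print(f"CCC bounds: {ccc_low:.3f} to {ccc_high:.3f} g/m2")
```

Because the minimum LAI and minimum LCC need not occur in the same plot, these bounds only bracket the possible CCC values and do not reproduce the measured CCC range.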

2.3. Relative Radiometric Correction Method

Among the existing relative radiometric correction methods, the MACA method [11] is designed specifically for the relative radiometric correction of multi-flight UAV data and shows higher robustness (especially in crop monitoring) than other methods, such as the mean-of-ratio [36] and PIF-based methods [22]. Therefore, we used MACA as the relative radiometric correction method in this study.

The MACA method employs a two-stage spectral harmonization framework to address radiometric inconsistencies in UAV imagery through the synergistic use of concurrent satellite observations. Firstly, a rule-based regression model (i.e., Cubist) was implemented to establish nonlinear relationships between UAV spectral bands and their Sentinel-2 counterparts. For each study site, band-specific models were trained using all spectral channels from the UAV sensor as predictors, thereby inherently compensating for spectral response mismatches between sensors. Model complexity was optimized through the iterative tuning of rule quantities (1–20 rules) based on the root mean square error (RMSE) minimization within training subsets. Then, the pretrained Cubist models were applied to native-resolution UAV orthomosaics (0.1 m) to generate fine-scale reference imagery spectrally aligned with Sentinel-2 standards. Per-band linear correction coefficients were then derived via least squares optimization:

ρcorrected,i = ai × ρUAV,i + bi,

where ai (slope) and bi (intercept) for band i were calculated by minimizing residuals between the original UAV reflectance (ρUAV,i) and the Cubist-generated reference values across all pixels. This dual-resolution approach decoupled spectral alignment (coarse scale) from radiometric adjustment (native scale), effectively mitigating spatial aggregation biases while preserving fine-grained spatial patterns. Consequently, the original multi-flight UAV data were calibrated to be more consistent at 0.1 m.
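The second, linear stage described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the MACA implementation of [11]: the Cubist-generated reference is taken as a precomputed list, the function names are hypothetical, and the reflectance values are synthetic.

```python
def fit_linear_correction(uav, reference):
    """Ordinary least squares fit of reference ~ slope * uav + intercept."""
    n = len(uav)
    if n != len(reference) or n < 2:
        raise ValueError("need two equal-length sequences with >= 2 pixels")
    mean_x = sum(uav) / n
    mean_y = sum(reference) / n
    sxx = sum((x - mean_x) ** 2 for x in uav)
    if sxx == 0:
        raise ValueError("UAV reflectance is constant; slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(uav, reference))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def apply_correction(uav, slope, intercept):
    """Apply the per-band linear correction to every pixel."""
    return [slope * x + intercept for x in uav]


# Synthetic single-band example (hypothetical values).
uav_red = [0.10, 0.20, 0.30, 0.40]
# Stand-in for the Cubist-generated reference at native resolution.
reference_red = [0.5 * x + 0.02 for x in uav_red]

a_red, b_red = fit_linear_correction(uav_red, reference_red)
corrected_red = apply_correction(uav_red, a_red, b_red)
```

In practice the fit would be repeated for each band and each flight, with the pixel lists drawn from the full orthomosaic.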

2.4. Comparison and Evaluation

The radiometric correction efficacy was quantitatively assessed through multispectral consistency analysis and agronomic predictive validation. The UAV images were resampled to 10 m and directly compared with the corresponding Sentinel-2 data across spectral bands and eight chlorophyll-sensitive VIs (Table 2). Inter-sensor agreement was quantified using the coefficient of determination (R2) and RMSE, with the relative difference (RD) between the RMSE values of corrected and uncorrected UAV-VIs serving as a normalized metric for assessing correction efficacy:

RD = (RMSEcorrected − RMSEuncorrected)/RMSEuncorrected × 100%.
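The comparison described above (aggregation to the Sentinel-2 grid, RMSE, and RD) can be sketched as follows. This is an illustrative sketch only: block averaging is assumed as the resampling method, which the text does not specify, and all function names and numeric values are hypothetical.

```python
import math


def block_mean(grid, factor):
    """Aggregate a 2-D list by averaging non-overlapping factor x factor blocks."""
    rows, cols = len(grid), len(grid[0])
    if rows % factor or cols % factor:
        raise ValueError("grid dimensions must be multiples of factor")
    out = []
    for r in range(0, rows, factor):
        out_row = []
        for c in range(0, cols, factor):
            block = [grid[i][j]
                     for i in range(r, r + factor)
                     for j in range(c, c + factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


def rmse(values, reference):
    """Root mean square error between two equal-length sequences."""
    return math.sqrt(
        sum((v - r) ** 2 for v, r in zip(values, reference)) / len(reference)
    )


def relative_difference(rmse_corrected, rmse_uncorrected):
    """RD in percent, following the formula given in the text."""
    return (rmse_corrected - rmse_uncorrected) / rmse_uncorrected * 100.0


# Toy aggregation: a 4 x 4 grid reduced by a factor of 2.
# Going from 0.1 m to 10 m pixels would correspond to factor=100.
coarse = block_mean([[1, 2, 3, 4], [5, 6, 7, 8],
                     [9, 10, 11, 12], [13, 14, 15, 16]], 2)

# Hypothetical 10 m NDVI values for four pixels.
sentinel = [0.30, 0.40, 0.50, 0.60]
uncorrected = [0.40, 0.50, 0.60, 0.70]
corrected = [0.31, 0.41, 0.51, 0.61]

rd = relative_difference(rmse(corrected, sentinel), rmse(uncorrected, sentinel))
```

With the formula as written, an improvement produces a negative RD; the percentage reductions quoted in the text appear to be reported as magnitudes.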

To further assess the operational value of relative radiometric correction in multi-flight UAV-based CCC monitoring, we implemented a dual-phase validation framework combining spectral correlation analysis and machine-learning prediction. The correlations between the VIs and measured CCC were quantified by the Pearson correlation coefficient (PCC). The random forest (RF) algorithm was employed to predict CCC due to its demonstrated efficacy in accurately estimating crop chlorophyll content [37,38,39]. Eight RF models were developed based on the following: (1) uncorrected and (2) corrected AQ600 imagery at the green-up stage; (3) uncorrected and (4) corrected M3M imagery at the heading stage; (5) uncorrected and (6) corrected M3M imagery at the grain filling stage; (7) uncorrected and (8) corrected UAV imagery at all stages. All RF models were developed using MATLAB’s TreeBagger implementation (MATLAB R2022b), with hyperparameters set to default mtry (predictors per split) and ntree = 1000 for variance stabilization. The selected VIs (Table 2) were used to build the RF models. Each one was trained on 66% stratified random samples (2 of 3 samples per dataset) and validated on the remaining data. Model performance was evaluated using R2 and relative RMSE (rRMSE).
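The data split and the evaluation metrics described above can be sketched in Python as follows. Several details here are assumptions, since the text does not define them: stratification is approximated by sorting samples by CCC and holding out one sample from each consecutive triplet, R2 is computed as 1 − SSres/SStot, and rRMSE is the RMSE divided by the mean measured value. The random forest itself is left out; any implementation with 1000 trees could be fitted on the training indices.

```python
import math
import random


def two_thirds_split(values, seed=0):
    """Return (train, valid) index lists, holding out 1 of every 3 sorted samples."""
    rng = random.Random(seed)
    order = sorted(range(len(values)), key=lambda i: values[i])
    train, valid = [], []
    for start in range(0, len(order), 3):
        group = order[start:start + 3]
        if len(group) < 3:
            # Incomplete final group: keep it for training.
            train.extend(group)
            continue
        held_out = rng.choice(group)
        valid.append(held_out)
        train.extend(i for i in group if i != held_out)
    return train, valid


def r_squared(measured, predicted):
    """Coefficient of determination as 1 - SS_res / SS_tot (assumed definition)."""
    mean_m = sum(measured) / len(measured)
    ss_res = sum((m - p) ** 2 for m, p in zip(measured, predicted))
    ss_tot = sum((m - mean_m) ** 2 for m in measured)
    return 1.0 - ss_res / ss_tot


def relative_rmse(measured, predicted):
    """RMSE as a percentage of the mean measured value (assumed definition)."""
    n = len(measured)
    err = math.sqrt(sum((m - p) ** 2 for m, p in zip(measured, predicted)) / n)
    return err / (sum(measured) / n) * 100.0


# Hypothetical CCC values (g/m2) for nine plots.
ccc = [0.4, 1.9, 0.8, 2.6, 1.2, 3.1, 0.6, 2.2, 1.5]
train_idx, valid_idx = two_thirds_split(ccc, seed=42)

# Hypothetical measured and predicted values for metric illustration.
measured = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]
r2 = r_squared(measured, predicted)
rrmse = relative_rmse(measured, predicted)
```

Holding out one sample per sorted triplet keeps the training and validation sets spread over the same CCC range, which is one simple way to realize a 2-of-3 stratified split.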

Table 2.

The vegetation indices (VIs) used in this study.

| VIs | Formulation | Reference |
|---|---|---|
| NDVI | (NIR − Red)/(NIR + Red) | [40] |
| NDRE | (NIR − RE)/(NIR + RE) | [41] |
| EVI | 2.5 × (NIR − Red)/(NIR + 6Red − 7.5Green + 1) | [42] |
| CIre | NIR/RE − 1 | [43] |
| CIgreen | NIR/Green − 1 | [44] |
| SARE | (NIR − RE)/(NIR + RE + 0.25) + 0.25 | [45] |
| REDVI | NIR − RE | [46] |
| MTCI | (NIR − RE)/(RE − Red) | [47] |
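The formulations in Table 2 translate directly into code. The sketch below computes all eight indices for a single pixel exactly as they are written in the table; the function name and the reflectance values are hypothetical.

```python
def vegetation_indices(green, red, re, nir):
    """Compute the eight VIs of Table 2 from band reflectances (0 to 1)."""
    return {
        "NDVI": (nir - red) / (nir + red),
        "NDRE": (nir - re) / (nir + re),
        # EVI is written here with the green band, as given in Table 2.
        "EVI": 2.5 * (nir - red) / (nir + 6 * red - 7.5 * green + 1),
        "CIre": nir / re - 1,
        "CIgreen": nir / green - 1,
        "SARE": (nir - re) / (nir + re + 0.25) + 0.25,
        "REDVI": nir - re,
        "MTCI": (nir - re) / (re - red),
    }


# Hypothetical reflectances for a dense wheat canopy pixel.
vis = vegetation_indices(green=0.08, red=0.05, re=0.20, nir=0.45)
for name, value in vis.items():
    print(f"{name}: {value:.3f}")
```

Ratio-based indices such as CIre and MTCI are undefined when their denominator is zero, so real imagery would need masking of such pixels before the indices are computed.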

3. Results

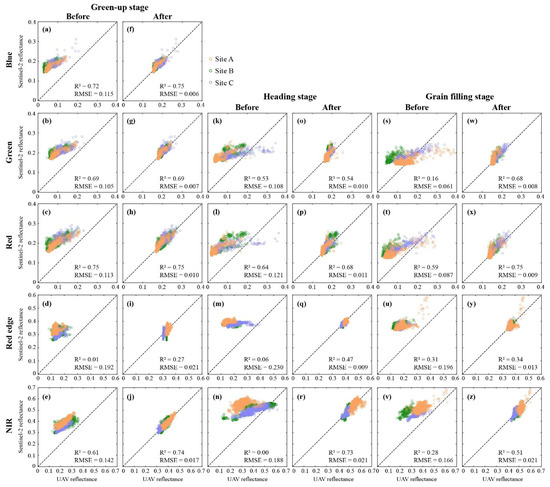

3.1. Comparison of Spectral Bands Before and After Correction

UAV reflectance values before and after radiometric correction were compared against Sentinel-2 reflectance values across three wheat growth stages (Figure 2). Prior to radiometric correction, UAV reflectance showed varying levels of agreement with Sentinel-2 reflectance across different growth stages and UAV sensors. After applying relative radiometric correction, significant improvements were observed across all stages and spectral bands. The R2 values increased notably, especially in the red edge band. For instance, in the green-up stage, the red edge band improved from R2 = 0.01 to R2 = 0.27. Similarly, the red edge band increased dramatically from R2 = 0.06 to R2 = 0.47 in the heading stage. The RMSE values also showed significant reductions (>86%), regardless of spectral band, UAV sensor, or growth stage. These results indicate that relative radiometric correction effectively improved the consistency and accuracy of UAV-derived reflectance across different UAV sensors and growth stages.

Figure 2.

Comparison of UAV-derived reflectance before and after relative radiometric correction against Sentinel-2 reflectance across three wheat growth stages, i.e. (a–j) green-up stage, (k–r) heading stage, and (s–z) grain filling stage, for different spectral bands.

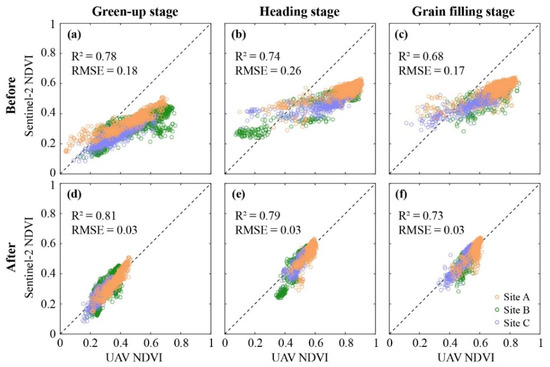

3.2. Comparison of VIs Before and After Correction

The impact of radiometric correction on various vegetation indices (VIs) was assessed (Table 3). Across all growth stages, RMSE values for VIs significantly decreased after correction, with reductions ranging from 38% to 96%. The NDVI, a key indicator of vegetation health, showed RMSE reductions of 81%, 88%, and 82% at the green-up, heading, and grain filling stages, respectively. Similar trends were observed in other indices, particularly NDRE and CIre, which experienced over 89% error reduction across wheat growth stages. The correction had a greater impact on M3M-derived VIs, which initially exhibited higher RMSE values. The scatter plots of NDVI (Figure 3) further illustrate this improvement, with R2 values increasing after correction (e.g., from 0.78 to 0.81 at the green-up stage and from 0.68 to 0.73 at the grain-filling stage), alongside notable reductions in RMSE. These results confirm that relative radiometric correction enhances the accuracy and consistency of UAV-based VI calculations, improving their reliability for monitoring wheat CCC.

Table 3.

The RMSE assessment of VIs before and after the radiometric correction.

Figure 3.

Comparison of UAV-derived NDVI (a–c) before and (d–f) after relative radiometric correction against Sentinel-2 NDVI across three wheat growth stages.

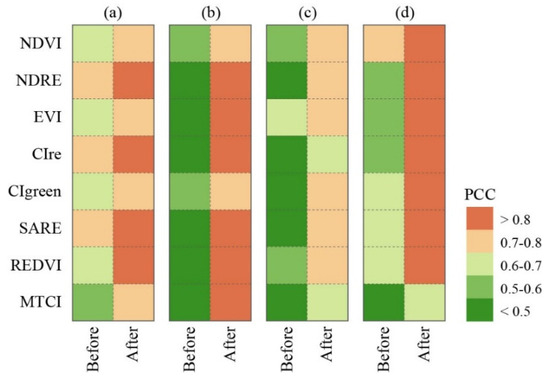

3.3. Evaluation of CCC Modelling Before and After Correction

Figure 4 presents the Pearson correlation coefficient (PCC) between measured CCC and VIs at different growth stages. Generally, all corrected VIs showed a higher correlation with CCC. The PCC values exhibited statistically significant improvements (paired t-test, p < 0.01), with mean PCC values increasing from 0.50 (before correction) to 0.78 (after correction). These improvements confirm that relative radiometric correction reduces the spectral variability of multi-flight UAV data, thereby amplifying the physiological signal captured by VIs and strengthening their utility for multi-flight UAV-based CCC quantification.

Figure 4.

Pearson correlation coefficient (PCC) between the measured canopy chlorophyll content (CCC) and vegetation indices (VIs) extracted from the multi-flight UAV imagery before and after relative radiometric correction at the (a) green-up, (b) heading, (c) grain filling, and (d) all stages.

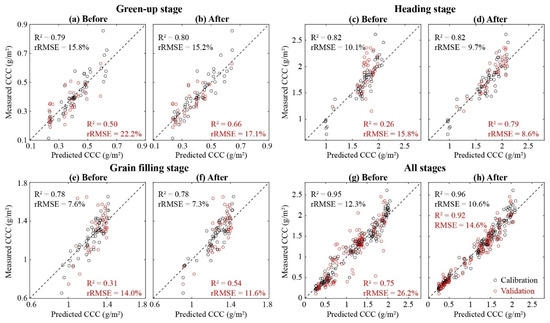

Furthermore, CCC modeling before and after radiometric correction was evaluated across all growth stages. The results are presented in Figure 5, which shows the relationship between predicted and measured CCC values for the calibration and validation datasets. Before correction, while the green-up model exhibited a strong correlation with measured CCC values (R2 = 0.79) and an rRMSE of 15.8% on the calibration dataset, the rRMSE for the validation dataset was higher at 22.2% (Figure 5a). After applying relative radiometric correction, the model’s performance showed significant improvements, with the validation R2 increasing to 0.66 and the validation rRMSE decreasing to 17.1% (Figure 5b). A similar pattern was observed across the other stages. The CCC estimation based on corrected UAV data demonstrated substantial improvements in model accuracy across wheat growth stages, with validation errors decreasing by 22.9% (from 22.2 to 17.1%), 45.6% (from 15.8 to 8.6%), and 17.1% (from 14.0 to 11.6%) at the green-up, heading, and grain filling stages, respectively. These results indicate that the enhanced radiometric consistency reduced discrepancies between calibration and validation performance after relative radiometric correction. Despite the significant improvement in CCC prediction performance after radiometric correction for each stage (Figure 5a–f), residual discrepancies were still observed in CCC intervals where the data were sparsely distributed. These prediction errors were especially pronounced in the lower and upper CCC ranges, which were underrepresented in the training dataset. Compared to the models trained on single-stage samples, the models trained using all samples improved the CCC predictions (Figure 5g,h), which is likely attributable to the larger range of training data, including the lowest and highest measured CCC values.
Furthermore, integrated multitemporal models exhibited superior robustness compared to single-stage configurations, achieving a 44.3% reduction in full-season validation errors (from 26.2 to 14.6%) (Figure 5g,h), confirming the model’s effectiveness in harmonizing spectral responses across heterogeneous growth conditions. Generally, these results highlight the significant improvements in model accuracy and consistency after applying radiometric correction, particularly for the validation dataset. It is also recommended to consider data-balanced sampling strategies (e.g., using full-season training data) to improve model robustness.

Figure 5.

Comparison of measured versus predicted canopy chlorophyll content (CCC) before and after relative radiometric correction across different wheat growth stages: (a,b) green-up stage, (c,d) heading stage, (e,f) grain filling stage, and (g,h) all stages.

4. Discussion

4.1. Benefits of Relative Radiometric Correction for Multi-Flight UAV Analysis

The integration of multi-flight UAV data into operational crop monitoring systems necessitates stringent radiometric consistency and model generalizability across temporal and spatial domains. Our findings demonstrate the critical role of relative radiometric correction in enabling reliable multi-flight UAV analysis through three principal mechanisms: (1) enhanced inter-sensor spectral agreement between UAV-derived and satellite reference observations across discrete flight campaigns; (2) mitigated cross-sensor discrepancies arising from heterogeneous imaging systems; (3) stabilized predictive performance of chlorophyll estimation models against phenological variations. This tripartite enhancement establishes relative radiometric correction as a foundational preprocessing step for scaling UAV-based crop monitoring from plot-level to regional applications while maintaining physiological relevance.

The enhanced spectral agreement between UAV and satellite observations stems from the capacity of relative radiometric correction to reconcile sensor-specific spectral response functions. This capability enables methods like MACA to harmonize data, even when UAV and satellite bands exhibit center wavelength offsets exceeding 20 nm (Table 1). Our results demonstrate that MACA achieved post-correction R2 improvements of 0.21–0.46 across bands with initial weak correlations (R2 < 0.3) (Figure 2), most notably in the red edge domain where vegetation exhibits heightened chlorophyll sensitivity [48]. Compared to spectral bands, vegetation indices demonstrated a greater degree of RMSE reduction after correction, indicating that VIs are more sensitive to radiometric inconsistencies and benefit more from correction. This disparity arises because VIs amplify subtle radiometric inconsistencies through band arithmetic [49], making their correction critical for reliable chlorophyll monitoring.

Concerning cross-sensor harmonization, the relative radiometric correction approach effectively addressed systematic biases between UAV platforms. Such discrepancies primarily originate from variations in sensor radiometric calibration protocols and spectral bandpass characteristics, which conventionally require laborious field calibration campaigns [50,51]. Even after the panel-based reflectance calibration applied in the preprocessing of each single-flight UAV dataset, sensor-specific inconsistencies were observed in the spectral bands and VIs of the uncorrected UAV data (Figure 2, Figure 3, and Table 3). These inconsistencies highlight the impact of radiometric variations across different flights and platforms. The exterior reference (i.e., the concurrent satellite data) employed in MACA circumvents these limitations by leveraging Sentinel-2’s rigorous vicarious calibration, effectively projecting UAV data onto a standardized radiometric scale. This paradigm shift enables interoperability between disparate UAV systems without requiring shared invariant targets, a critical advancement for scalable agricultural monitoring networks integrating heterogeneous sensor fleets.

The stabilized predictive performance across phenological stages demonstrates that relative radiometric correction fundamentally enhances the physiological relevance of UAV spectral data by suppressing sensor-induced variability while preserving chlorophyll-sensitive signals, which is a critical advancement for temporal crop monitoring [52,53]. Prior to correction, the substantial divergence between calibration and validation errors indicated model overfitting [54,55]. Post-correction validation error reductions (17.1–45.6% across stages) confirm that harmonizing multi-flight datasets mitigates spectral overfitting, enabling models to generalize beyond site-specific acquisition conditions. The multi-temporal model’s superior robustness (44.3% full-season error reduction) further arises from MACA’s capacity to normalize phenologically driven spectral variations [11]. These findings substantiate the critical role of radiometric normalization in strengthening the physiological relationship between spectral indices and photosynthetic pigments, thereby advancing the precision of multi-flight UAV-based chlorophyll quantification methodologies.

4.2. Limitations and Future Perspectives

While this study achieved robust relative radiometric correction, several limitations warrant further investigation. First, it is important to acknowledge the potential impact of heterogeneous UAV acquisition strategies on the radiometric correction outcomes. In this study, different UAV platforms (AQ600 and M3M) were employed at distinct time points, along with their varied flight parameters. This asynchronous acquisition strategy was necessitated by logistical constraints and was intentionally adopted to test MACA’s performance under more realistic, heterogeneous operational conditions. Nevertheless, future studies could benefit from controlled experiments with synchronized multi-sensor flights to further isolate sensor performance from temporal confounding factors.

Second, the current validation assumes minimal diurnal atmospheric variation, with all UAV flights conducted within a 3-hour window around solar noon (10:00–13:00 local time). Future work should account for significant variations in solar geometry or illumination conditions across multiple flights. In particular, performance should be tested under extended temporal gaps (e.g., >6 h) between UAV flights to quantify BRDF (Bidirectional Reflectance Distribution Function) effects under substantial solar angle variations (e.g., morning vs. noon vs. afternoon flights), as anisotropic reflectance patterns can introduce systematic biases in vegetation indices [56,57,58]. Additionally, the impact of heterogeneous cloud cover or diffuse/direct light ratios on MACA’s performance remains unexplored, which is critical for operational applications in dynamic crop monitoring.

Third, although Sentinel-2 serves as an effective radiometric anchor, its 5-day revisit cycle (under cloud-free conditions) constrains applications, especially in regions with frequent cloud cover and precipitation [59,60,61]. This temporal-resolution limitation may hinder MACA’s ability to capture rapid chlorophyll variations during critical growth phases (e.g., post-fertilization or drought stress). To enhance temporal flexibility, future implementations could integrate higher-spatiotemporal-resolution satellites such as Planet (3 m daily imagery) or commercial constellations (e.g., SkySat), which offer near-daily coverage. Combining these datasets with MACA’s normalization framework would enable a finer temporal interpolation of chlorophyll dynamics while maintaining radiometric consistency.

Finally, the demonstrated synergy between UAV and satellite data via MACA reveals broader potential for multi-source agricultural monitoring. Beyond multispectral data, future extensions could integrate hyperspectral UAV data to resolve narrowband chlorophyll absorption features (e.g., red edge inflection points), thermal imagery to account for canopy temperature-driven physiological changes, and SAR satellites to mitigate cloud interference, enabling cross-sensor data fusion for comprehensive crop trait retrieval [62,63,64]. In addition, future implementations could couple MACA with spatiotemporal fusion architectures [65] to generate chlorophyll maps blending UAV-scale spatial detail with satellite-grade temporal consistency.

These advancements align with emerging trends in digital agriculture that demand scalable solutions for field-to-landscape monitoring, particularly for chlorophyll-sensitive applications like precision nitrogen management. By addressing temporal-resolution constraints and embracing multi-sensor interoperability, MACA’s operational utility can be expanded to support real-time decision-making in heterogeneous agricultural systems.

5. Conclusions

This study highlights the importance of relative radiometric correction for multi-flight UAV-based wheat canopy chlorophyll content (CCC) estimation. By implementing the MACA method with Sentinel-2 data as an external reference, we demonstrated significant improvements in spectral consistency across multiple UAV platforms and flight campaigns. Corrected UAV-derived spectral data exhibited stronger alignment with Sentinel-2 observations, reducing RMSE values for vegetation indices by up to 96% and enhancing their correlation with CCC measurements. Furthermore, the predictive performance of random forest models for CCC estimation improved substantially, with validation errors reduced by 17.1–45.6% across different wheat growth stages.
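The idea of anchoring UAV reflectance to a satellite reference and then quantifying the gain in consistency can be illustrated with a generic per-band linear normalization. This is a minimal sketch under stated assumptions and is not the MACA algorithm itself: it assumes the UAV reflectance has already been aggregated to the Sentinel-2 pixel grid, it uses an ordinary least-squares gain and offset, and it runs on synthetic values rather than the study's data. The function names `fit_gain_offset`, `rmse`, and `rmse_reduction_pct` are hypothetical.

```python
import numpy as np


def fit_gain_offset(uav: np.ndarray, ref: np.ndarray) -> tuple[float, float]:
    """Least-squares gain and offset that map UAV reflectance onto the reference."""
    gain, offset = np.polyfit(uav, ref, deg=1)
    return float(gain), float(offset)


def rmse(a: np.ndarray, b: np.ndarray) -> float:
    """Root-mean-square error between two equally shaped arrays."""
    return float(np.sqrt(np.mean((a - b) ** 2)))


def rmse_reduction_pct(before: float, after: float) -> float:
    """Relative RMSE reduction in percent."""
    return 100.0 * (before - after) / before


# Synthetic single-band example (assumed values, not measurements).
rng = np.random.default_rng(0)
ref = rng.uniform(0.02, 0.5, size=200)                      # reference reflectance
uav = 1.3 * ref + 0.04 + rng.normal(0.0, 0.005, size=200)   # biased UAV reflectance

gain, offset = fit_gain_offset(uav, ref)
corrected = gain * uav + offset

before = rmse(uav, ref)
after = rmse(corrected, ref)
print(f"gain={gain:.3f}, offset={offset:.3f}")
print(f"RMSE before={before:.4f}, after={after:.4f}, "
      f"reduction={rmse_reduction_pct(before, after):.1f}%")
```

Because the corrected values are the least-squares fit to the reference, the RMSE after correction cannot exceed the uncorrected RMSE on the fitting pixels; an independent set of pixels would be needed for an unbiased assessment.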

Our findings emphasize the necessity of relative radiometric correction for achieving robust and scalable UAV-based crop monitoring. The improved radiometric consistency facilitates multi-temporal and multi-sensor data integration, enabling more accurate physiological assessments of crop status. However, further research is required to address potential limitations, including the influence of varying solar illumination angles and atmospheric conditions on radiometric correction accuracy. Additionally, future studies should explore the integration of higher-temporal-resolution satellite data and advanced data fusion techniques to enhance operational applicability.

Overall, the successful application of relative radiometric correction underscores its critical role in advancing multi-flight UAV-based agricultural monitoring. Implementing such methodologies can bridge the gap between high-resolution UAV imagery and broader regional-scale assessments, ultimately supporting precision agriculture initiatives aimed at optimizing crop management and resource allocation.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/rs17091557/s1.

Author Contributions

Conceptualization, J.J.; methodology, J.J.; validation, J.J. and Q.Z.; formal analysis, J.J.; investigation, Q.Z. and S.G.; writing—original draft preparation, J.J. and Q.Z.; writing—review and editing, Q.Z. and S.G.; funding acquisition, J.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (grant number 42301387) and the Science and Technology Program of Guangdong (No. 2024B1212070012).

Data Availability Statement

The Sentinel-2 data used in this paper are available in the Copernicus Data Space Ecosystem (https://browser.dataspace.copernicus.eu/ (accessed on 21 July 2024)). The UAV and field measurement data are available on request to the corresponding author.

Acknowledgments

The authors would like to thank Changchun Li’s group at Henan Polytechnic University for their assistance during the data and field collection campaigns. We would also like to thank Chengxi Cai for collating and debugging the algorithm code of MACA in Python 3.9.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Pan, W.D.; Cheng, X.D.; Du, R.Y.; Zhu, X.H.; Guo, W.C. Detection of chlorophyll content based on optical properties of maize leaves. Spectroc. Acta Pt. A-Molec. Biomolec. Spectr. 2024, 309, 7.

2. Taha, M.F.; Mao, H.P.; Wang, Y.F.; Elmanawy, A.I.; Elmasry, G.; Wu, L.T.; Memon, M.S.; Niu, Z.; Huang, T.; Qiu, Z.J. High-Throughput Analysis of Leaf Chlorophyll Content in Aquaponically Grown Lettuce Using Hyperspectral Reflectance and RGB Images. Plants 2024, 13, 22.

3. Zhang, K.Y.; Li, W.L.; Li, H.C.; Luo, Y.F.; Li, Z.; Wang, X.S.; Chen, X.D. A Leaf-Patchable Reflectance Meter for In Situ Continuous Monitoring of Chlorophyll Content. Adv. Sci. 2023, 10, 10.

4. Gokool, S.; Mahomed, M.; Kunz, R.; Clulow, A.; Sibanda, M.; Naiken, V.; Chetty, K.; Mabhaudhi, T. Crop Monitoring in Smallholder Farms Using Unmanned Aerial Vehicles to Facilitate Precision Agriculture Practices: A Scoping Review and Bibliometric Analysis. Sustainability 2023, 15, 18.

5. Phang, S.K.; Chiang, T.H.A.; Happonen, A.; Chang, M.M.L. From Satellite to UAV-Based Remote Sensing: A Review on Precision Agriculture. IEEE Access 2023, 11, 127057–127076.

6. Tanaka, T.S.T.; Wang, S.; Jorgensen, J.R.; Gentili, M.; Vidal, A.Z.; Mortensen, A.K.; Acharya, B.S.; Beck, B.D.; Gislum, R. Review of Crop Phenotyping in Field Plot Experiments Using UAV-Mounted Sensors and Algorithms. Drones 2024, 8, 21.

7. Agrawal, J.; Arafat, M.Y. Transforming Farming: A Review of AI-Powered UAV Technologies in Precision Agriculture. Drones 2024, 8, 25.

8. Mohsan, S.A.H.; Othman, N.Q.H.; Li, Y.L.; Alsharif, M.H.; Khan, M.A. Unmanned aerial vehicles (UAVs): Practical aspects, applications, open challenges, security issues, and future trends. Intell. Serv. Robot. 2023, 16, 109–137.

9. Tong, P.F.; Yang, X.R.; Yang, Y.J.; Liu, W.; Wu, P.Y. Multi-UAV Collaborative Absolute Vision Positioning and Navigation: A Survey and Discussion. Drones 2023, 7, 35.

10. Wang, L.; Huang, W.C.; Li, H.X.; Li, W.J.; Chen, J.J.; Wu, W.B. A Review of Collaborative Trajectory Planning for Multiple Unmanned Aerial Vehicles. Processes 2024, 12, 26.

11. Jiang, J.L.; Zhang, Q.F.; Wang, W.H.; Wu, Y.P.; Zheng, H.B.; Yao, X.; Zhu, Y.; Cao, W.X.; Cheng, T. MACA: A Relative Radiometric Correction Method for Multiflight Unmanned Aerial Vehicle Images Based on Concurrent Satellite Imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 14.

12. Yuan, D.; Elvidge, C.D. Comparison of relative radiometric normalization techniques. ISPRS-J. Photogramm. Remote Sens. 1996, 51, 117–126.

13. Chen, Y.; Sun, K.; Li, D.; Bai, T.; Huang, C. Radiometric Cross-Calibration of GF-4 PMS Sensor Based on Assimilation of Landsat-8 OLI Images. Remote Sens. 2017, 9, 811.

14. Padró, J.-C.; Pons, X.; Aragonés, D.; Díaz-Delgado, R.; García, D.; Bustamante, J.; Pesquer, L.; Domingo-Marimon, C.; González-Guerrero, Ò.; Cristóbal, J.; et al. Radiometric Correction of Simultaneously Acquired Landsat-7/Landsat-8 and Sentinel-2A Imagery Using Pseudoinvariant Areas (PIA): Contributing to the Landsat Time Series Legacy. Remote Sens. 2017, 9, 1319.

15. Song, C.; Woodcock, C.E.; Seto, K.C.; Lenney, M.P.; Macomber, S.A. Classification and Change Detection Using Landsat TM Data: When and How to Correct Atmospheric Effects? Remote Sens. Environ. 2001, 75, 230–244.

16. Teillet, P.M. Image correction for radiometric effects in remote sensing. Int. J. Remote Sens. 1986, 7, 1637–1651.

17. Zhou, J.; Wang, J.; Li, J.; Hu, D. Atmospheric correction of PROBA/CHRIS data in an urban environment. Int. J. Remote Sens. 2011, 32, 2591–2604.

18. Hall, F.G.; Strebel, D.E.; Nickeson, J.E.; Goetz, S.J. Radiometric rectification: Toward a common radiometric response among multidate, multisensor images. Remote Sens. Environ. 1991, 35, 11–27.

19. Xu, Q.; Hou, Z.; Tokola, T. Relative radiometric correction of multi-temporal ALOS AVNIR-2 data for the estimation of forest attributes. ISPRS-J. Photogramm. Remote Sens. 2012, 68, 69–78.

20. Chen, X.; Vierling, L.; Deering, D. A simple and effective radiometric correction method to improve landscape change detection across sensors and across time. Remote Sens. Environ. 2005, 98, 63–79.

21. Schroeder, T.A.; Cohen, W.B.; Song, C.; Canty, M.J.; Yang, Z. Radiometric correction of multi-temporal Landsat data for characterization of early successional forest patterns in western Oregon. Remote Sens. Environ. 2006, 103, 16–26.

22. Canty, M.J.; Nielsen, A.A.; Schmidt, M. Automatic radiometric normalization of multitemporal satellite imagery. Remote Sens. Environ. 2004, 91, 441–451.

23. Du, Y.; Teillet, P.M.; Cihlar, J. Radiometric normalization of multitemporal high-resolution satellite images with quality control for land cover change detection. Remote Sens. Environ. 2002, 82, 123–134.

24. Tokola, T.; Löfman, S.; Erkkilä, A. Relative Calibration of Multitemporal Landsat Data for Forest Cover Change Detection. Remote Sens. Environ. 1999, 68, 1–11.

25. Rahman, M.M.; Hay, G.J.; Couloigner, I.; Hemachandran, B.; Bailin, J. A comparison of four relative radiometric normalization (RRN) techniques for mosaicing H-res multi-temporal thermal infrared (TIR) flight-lines of a complex urban scene. ISPRS-J. Photogramm. Remote Sens. 2015, 106, 82–94.

26. Xu, H.Z.Y.; Wei, Y.C.; Li, X.; Zhao, Y.D.; Cheng, Q. A novel automatic method on pseudo-invariant features extraction for enhancing the relative radiometric normalization of high-resolution images. Int. J. Remote Sens. 2021, 42, 6155–6186.

27. Houborg, R.; McCabe, M.F. A Cubesat enabled Spatio-Temporal Enhancement Method (CESTEM) utilizing Planet, Landsat and MODIS data. Remote Sens. Environ. 2018, 209, 211–226.

28. Voskanian, N.; Wenny, B.N.; Tahersima, M.H.; Thome, K. Inter-calibration of Landsat 8 and 9 Operational Land Imagers. In Proceedings of the Conference on Earth Observing Systems XXVII, San Diego, CA, USA, 23–25 August 2022.

29. Li, Y.; Yan, W.; An, S.; Gao, W.L.; Jia, J.D.; Tao, S.; Wang, W. A Spatio-Temporal Fusion Framework of UAV and Satellite Imagery for Winter Wheat Growth Monitoring. Drones 2023, 7, 18.

30. Zhao, H.T.; Song, X.Y.; Yang, G.J.; Li, Z.H.; Zhang, D.; Feng, H.K. Monitoring of Nitrogen and Grain Protein Content in Winter Wheat Based on Sentinel-2A Data. Remote Sens. 2019, 11, 25.

31. Zhang, S.M.; Zhao, G.X.; Lang, K.; Su, B.W.; Chen, X.N.; Xi, X.; Zhang, H.B. Integrated Satellite, Unmanned Aerial Vehicle (UAV) and Ground Inversion of the SPAD of Winter Wheat in the Reviving Stage. Sensors 2019, 19, 17.

32. Richter, R.; Schläpfer, D. Atmospheric/Topographic Correction for Satellite Imagery: ATCOR-2/3 User Guide, Version 9.5; German Remote Sensing Data Center (DFD) of the German Aerospace Center (DLR): Mecklenburg-Vorpommern, Germany, 2023.

33. Mayer, B.; Kylling, A. Technical note: The libRadtran software package for radiative transfer calculations—Description and examples of use. Atmos. Chem. Phys. 2005, 5, 1855–1877.

34. Gascon, F.; Bouzinac, C.; Thépaut, O.; Jung, M.; Francesconi, B.; Louis, J.; Lonjou, V.; Lafrance, B.; Massera, S.; Gaudel-Vacaresse, A.; et al. Copernicus Sentinel-2A Calibration and Products Validation Status. Remote Sens. 2017, 9, 584.

35. Lichtenthaler, H.K.; Wellburn, A.R. Determinations of total carotenoids and chlorophylls a and b of leaf extracts in different solvents. Biochem. Soc. Trans. 1983, 11, 591–592.

36. Tuominen, S.; Pekkarinen, A. Local radiometric correction of digital aerial photographs for multi source forest inventory. Remote Sens. Environ. 2004, 89, 72–82.

37. Brewer, K.; Clulow, A.; Sibanda, M.; Gokool, S.; Naiken, V.; Mabhaudhi, T. Predicting the Chlorophyll Content of Maize over Phenotyping as a Proxy for Crop Health in Smallholder Farming Systems. Remote Sens. 2022, 14, 20.

38. Priyanka; Srivastava, P.K.; Rawat, R. Retrieval of leaf chlorophyll content using drone imagery and fusion with Sentinel-2 data. Smart Agric. Technol. 2023, 6, 14.

39. Shi, H.Z.; Guo, J.J.; An, J.Q.; Tang, Z.J.; Wang, X.; Li, W.Y.; Zhao, X.; Jin, L.; Xiang, Y.Z.; Li, Z.J.; et al. Estimation of Chlorophyll Content in Soybean Crop at Different Growth Stages Based on Optimal Spectral Index. Agronomy 2023, 13, 17.

40. Gitelson, A.A.; Merzlyak, M.N. Remote sensing of chlorophyll concentration in higher plant leaves. Adv. Space Res. 1998, 22, 689–692.

41. Sims, D.A.; Gamon, J.A. Relationships between leaf pigment content and spectral reflectance across a wide range of species, leaf structures and developmental stages. Remote Sens. Environ. 2002, 81, 337–354.

42. Huete, A.; Justice, C.; Liu, H. Development of vegetation and soil indices for MODIS-EOS. Remote Sens. Environ. 1994, 49, 224–234.

43. Gitelson, A.A.; Keydan, G.P.; Merzlyak, M.N. Three-band model for noninvasive estimation of chlorophyll, carotenoids, and anthocyanin contents in higher plant leaves. Geophys. Res. Lett. 2006, 33, L11402.

44. Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282.

45. Ali, M.; Montzka, C.; Stadler, A.; Menz, G.; Thonfeld, F.; Vereecken, H. Estimation and Validation of RapidEye-Based Time-Series of Leaf Area Index for Winter Wheat in the Rur Catchment (Germany). Remote Sens. 2015, 7, 2808–2831.

46. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

47. Dash, J.; Curran, P.J. The MERIS terrestrial chlorophyll index. Int. J. Remote Sens. 2004, 25, 5403–5413.

48. Jiang, J.L.; Johansen, K.; Tu, Y.H.; McCabe, M.F. Multi-sensor and multi-platform consistency and interoperability between UAV, Planet CubeSat, Sentinel-2, and Landsat reflectance data. GISci. Remote Sens. 2022, 59, 936–958.

49. Teillet, P.M.; Ren, X. Spectral band difference effects on vegetation indices derived from multiple satellite sensor data. Can. J. Remote Sens. 2008, 34, 159–173.

50. Cao, S.; Danielson, B.; Clare, S.; Koenig, S.; Campos-Vargas, C.; Sanchez-Azofeifa, A. Radiometric calibration assessments for UAS-borne multispectral cameras: Laboratory and field protocols. ISPRS-J. Photogramm. Remote Sens. 2019, 149, 132–145.

51. Jiang, J.; Zheng, H.; Ji, X.; Cheng, T.; Tian, Y.; Zhu, Y.; Cao, W.; Ehsani, R.; Yao, X. Analysis and Evaluation of the Image Preprocessing Process of a Six-Band Multispectral Camera Mounted on an Unmanned Aerial Vehicle for Winter Wheat Monitoring. Sensors 2019, 19, 747.

52. Aeberli, A.; Johansen, K.; Robson, A.; Lamb, D.W.; Phinn, S. Detection of Banana Plants Using Multi-Temporal Multispectral UAV Imagery. Remote Sens. 2021, 13, 24.

53. Delavarpour, N.; Koparan, C.; Nowatzki, J.; Bajwa, S.; Sun, X. A Technical Study on UAV Characteristics for Precision Agriculture Applications and Associated Practical Challenges. Remote Sens. 2021, 13, 25.

54. Bazrafkan, A.; Navasca, H.; Worral, H.; Oduor, P.; Delavarpour, N.; Morales, M.; Bandillo, N.; Flores, P. Predicting lodging severity in dry peas using UAS-mounted RGB, LIDAR, and multispectral sensors. Remote Sens. Appl.-Soc. Environ. 2024, 34, 20.

55. Sakamoto, T. Incorporating environmental variables into a MODIS-based crop yield estimation method for United States corn and soybeans through the use of a random forest regression algorithm. ISPRS-J. Photogramm. Remote Sens. 2020, 160, 208–228.

56. Guo, Y.H.; Mu, X.H.; Chen, Y.Y.; Xie, D.H.; Yan, G.J. Correction of Sun-View Angle Effect on Normalized Difference Vegetation Index (NDVI) With Single View-Angle Observation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 13.

57. Mao, Z.H.; Deng, L.; Duan, F.Z.; Li, X.J.; Qiao, D.Y. Angle effects of vegetation indices and the influence on prediction of SPAD values in soybean and maize. Int. J. Appl. Earth Obs. Geoinf. 2020, 93, 14.

58. Petri, C.A.; Galvao, L.S. Sensitivity of Seven MODIS Vegetation Indices to BRDF Effects during the Amazonian Dry Season. Remote Sens. 2019, 11, 18.

59. Lewinska, K.E.; Frantz, D.; Leser, U.; Hostert, P. Usable observations over Europe: Evaluation of compositing windows for Landsat and Sentinel-2 time series. Eur. J. Remote Sens. 2024, 57, 28.

60. Poussin, C.; Peduzzi, P.; Giuliani, G. Snow observation from space: An approach to improving snow cover detection using four decades of Landsat and Sentinel-2 imageries across Switzerland. Sci. Remote Sens. 2025, 11, 16.

61. Zhou, Y.; Hu, D.Y.; Li, T.H.; Chen, Y.H. Calibration of Urban Surface Simulated Radiance Using Sentinel-2 Satellite Image and the DART Model. IEEE Trans. Geosci. Remote Sens. 2024, 62, 8.

62. Ma, J.C.; Liu, B.H.; Ji, L.; Zhu, Z.C.; Wu, Y.F.; Jiao, W.H. Field-scale yield prediction of winter wheat under different irrigation regimes based on dynamic fusion of multimodal UAV imagery. Int. J. Appl. Earth Obs. Geoinf. 2023, 118, 12.

63. Shi, H.Z.; Liu, Z.Y.; Li, S.Q.; Jin, M.; Tang, Z.J.; Sun, T.; Liu, X.C.; Li, Z.J.; Zhang, F.C.; Xiang, Y.Z. Monitoring Soybean Soil Moisture Content Based on UAV Multispectral and Thermal-Infrared Remote-Sensing Information Fusion. Plants 2024, 13, 20.

64. Xiao, J.; Aggarwal, A.K.; Rage, U.K.; Katiyar, V.; Avtar, R. Deep Learning-Based Spatiotemporal Fusion of Unmanned Aerial Vehicle and Satellite Reflectance Images for Crop Monitoring. IEEE Access 2023, 11, 85600–85614.

65. Zhu, X.; Cai, F.; Tian, J.; Williams, T.K.-A. Spatiotemporal Fusion of Multisource Remote Sensing Data: Literature Survey, Taxonomy, Principles, Applications, and Future Directions. Remote Sens. 2018, 10, 527.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).