Abstract

In many extended object tracking applications (e.g., tracking vehicles using a millimeter-wave radar), the shape of an extended object (EO) remains unchanged while the orientation angle varies over time. Thus, it is reasonable to track the shape and the orientation angle as separate parameters. Moreover, the tight coupling between the orientation angle and the heading angle contains information that can improve estimation performance. Hence, this paper proposes a constrained filtering approach that exploits this information. First, an EO model is built using an orientation vector with a heading constraint, which is formulated from the relation between the orientation vector and the velocity vector. Second, based on the proposed model, a variational Bayesian (VB) approach is proposed to estimate the kinematic, shape, and orientation vector states. A pseudo-measurement is constructed from the heading constraint and incorporated into the VB framework. The proposed approach also resolves the ambiguity in orientation angle estimation. Simulation and real-data results are presented to illustrate the effectiveness of the proposed model and estimation approach.

1. Introduction

Recent advances in sensor technologies have increased sensor resolution, making the traditional point target assumption unsuitable for many practical applications, e.g., autonomous driving, where multiple measurements can be generated from the scattering centers of an object. In this case, an object is preferably treated as an extended object (EO), and tracking it using multiple measurements per time step is referred to as extended object tracking (EOT) [1,2,3,4,5,6]. EOT estimates not only the kinematic state but also the extension (orientation and shape). In recent decades, many EOT models have been investigated, such as the random matrix model [1,2,3,4,5] and the random hyper-surface model [6]. Overviews of EOT approaches can be found in [7,8].

In recent years, several approaches have been proposed to estimate the orientation angle of an EO that is assumed to be elliptical or rectangular [9,10,11,12,13]. These approaches parameterize the object extension by the semi-axes lengths and the orientation angle, a parameterization that has led to successful tracking frameworks. The work in [9] estimates these quantities using data fitting. A multiplicative error model is proposed in [10]. In [11,12], the EOT problem is cast as joint kinematic state estimation and object extension identification using the expectation maximization algorithm. Ref. [13] proposes a model that defines a Gaussian prior for the orientation angle and inverse Gamma priors for the shape parameters.

In particular, the tight coupling between the heading angle (the velocity direction of the object centroid) and the orientation angle contains information on both the kinematic state and the extension. To use this information directly for estimation, modeling the heading constraint is important. In [11,12,14], an angle is introduced to establish the relation between the heading angle and the orientation angle; however, it is difficult to model this angle directly. In [15], several approaches have been proposed for state estimation with constraints, but they are not specifically developed for EOT. In general, existing approaches do not use the heading constraint directly for EOT. Moreover, in the above approaches, the orientation angle is chosen as a state or a parameter to be estimated directly. However, the orientation angle is circular (angular) data. The orientation-based heading constraint may not hold because the value ranges of the orientation angle and the heading angle may differ, which can cause estimation problems [16]. For clarity, Table 1 summarizes existing orientation-based approaches for the heading constraint, including their limitations.

Table 1.

Summary of the heading constraint-related literature.

This paper proposes an orientation-vector-based (OVB) model for EOT based on the heading constraint and the orientation vector. The orientation vector is composed of the sine and cosine of the orientation angle, and a truncated Gaussian distribution is introduced to model it. The OVB heading constraint is then obtained from the relation between the orientation vector and the velocity vector. However, for the proposed model, it is hard to find a closed-form expression for the resulting posterior density. Hence, we use the variational Bayesian (VB) approach, which has been applied to complex filtering problems to obtain approximate posterior densities [17]. In this approach, a pseudo-measurement is constructed to incorporate the OVB heading constraint into the VB estimation framework. Moreover, the projection method is applied within this framework to enforce the unit-norm constraint on the orientation vector.

The main contributions are as follows.

(1) An OVB EOT model is proposed. This model introduces the orientation vector as a state and incorporates the OVB heading constraint to describe the coupling between the orientation vector and the velocity vector. Moreover, the orientation vector is modeled by a truncated Gaussian distribution and the shape has an inverse Gamma prior.

(2) An estimation approach is developed using both the VB approach and the projection method, and the pseudo-measurement constructed from the OVB heading constraint is naturally incorporated into the VB framework. The proposed approaches recursively and analytically estimate the kinematic, shape, and orientation vector states of an EO.

(3) The effectiveness of the proposed model and algorithms is demonstrated on simulated and real data in comparison with existing orientation-based EOT algorithms, and the evaluation results are discussed further.

The paper is organized as follows. Section 2 reviews existing EOT approaches that model the orientation angle separately and states the motivation of this paper. Section 3 proposes an OVB EOT model. The VB-based estimation approach is derived in Section 4. Section 5 presents experimental evaluation results using simulated and real data. Section 6 discusses the proposed approach, and Section 7 concludes the paper. Mathematical details are given in Appendices A, B, and C.

2. Existing Work and Motivations

Some popular approaches estimate not only the kinematic state but also the extension [10,11,12,13,18]. The extension can be represented by a symmetric positive definite matrix $\mathbf{X}_k \in \mathbb{R}^{d \times d}$, with $d$ being the dimension of the physical space; $\mathbf{X}_k$ contains information on both the orientation angle and the shape. In many scenarios, the orientation angle of an EO changes over time, while the shape remains unchanged. Thus, several orientation-based approaches have been proposed to model the orientation angle and the shape separately.

2.1. Existing EOT Approaches Modeling the Orientation Angle Separately

2.1.1. Shape and Orientation Angle Dynamic Models

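In [13], the shape is represented by $\mathbf{s}_k = [s_{k,1}, s_{k,2}]^T$, and the predicted density of $\mathbf{s}_k$ is given by

$$p(\mathbf{s}_k \mid \mathbf{Z}^{k-1}) = \prod_{i=1}^{2} \mathcal{IG}\big(s_{k,i};\ \alpha_{k|k-1,i},\ \beta_{k|k-1,i}\big), \quad (1)$$

where $\mathcal{IG}(s; \alpha, \beta)$ denotes the inverse Gamma distribution for $s$ with shape parameter $\alpha$ and scale parameter $\beta$. For an elliptical EO, $s_{k,1}$ and $s_{k,2}$ represent the squares of the semi-axes lengths. Given the parameters $\alpha_{k-1|k-1,i}$ and $\beta_{k-1|k-1,i}$ of $s_{k-1,i}$ at time step $k-1$, $\alpha_{k|k-1,i}$ and $\beta_{k|k-1,i}$ can be predicted as $\rho\,\alpha_{k-1|k-1,i}$ and $\rho\,\beta_{k-1|k-1,i}$, respectively, following [13], where $\rho$ is the forgetting factor.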
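To illustrate the forgetting-factor prediction described above, the following minimal sketch applies $\alpha \to \rho\alpha$, $\beta \to \rho\beta$ to a single shape element and compares the inverse Gamma mean and variance before and after prediction. It assumes that each shape element has an independent inverse Gamma density; the function names and all numerical values are hypothetical.

```python
def ig_predict(alpha, beta, rho):
    """Forgetting-factor prediction of inverse Gamma parameters: (alpha, beta) -> (rho*alpha, rho*beta)."""
    if not 0.0 < rho <= 1.0:
        raise ValueError("forgetting factor rho must lie in (0, 1]")
    return rho * alpha, rho * beta


def ig_mean_var(alpha, beta):
    """Mean and variance of IG(alpha, beta); both are finite only for alpha > 2."""
    if alpha <= 2.0:
        raise ValueError("variance is undefined for alpha <= 2")
    mean = beta / (alpha - 1.0)
    var = beta**2 / ((alpha - 1.0) ** 2 * (alpha - 2.0))
    return mean, var


# Hypothetical posterior of one squared semi-axis length at time step k-1
alpha_post, beta_post = 10.0, 90.0
alpha_pred, beta_pred = ig_predict(alpha_post, beta_post, rho=0.9)

print(ig_mean_var(alpha_post, beta_post))   # (10.0, 12.5)
print(ig_mean_var(alpha_pred, beta_pred))   # mean ~10.1, variance ~14.6 (larger)
```

With $\rho < 1$, the mean stays roughly unchanged while the variance grows. This allows the shape estimate to adapt to new measurements, and a smaller $\rho$ makes the estimate more responsive but also noisier.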

According to [13], the orientation angle $\theta_k$ is the counterclockwise angle of rotation from the x-axis, and its dynamic model is assumed as

$$\theta_k = \theta_{k-1} + w_{\theta,k-1}, \quad (2)$$

where $w_{\theta,k-1} \sim \mathcal{N}(0, \sigma_\theta^2)$ is white Gaussian process noise, with $\sigma_\theta^2$ being its variance.

2.1.2. Kinematic Dynamic Model

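To describe the evolution of the kinematic state $\mathbf{x}_k$, the following dynamic model is assumed:

$$\mathbf{x}_k = \mathbf{F}_{k-1}\mathbf{x}_{k-1} + \mathbf{w}_{k-1}, \quad (3)$$

where $\mathbf{F}_{k-1}$ is a transition matrix. Refs. [2,3] assume $\mathbf{F}_{k-1}$ to be a general matrix of appropriate dimensions and $\mathbf{w}_{k-1} \sim \mathcal{N}(\mathbf{0}, \mathbf{Q}_{k-1})$, with $\mathbf{Q}_{k-1}$ being the kinematic process noise covariance. For the nearly constant velocity (CV) model in [19], $\mathbf{F}_{k-1}$ and $\mathbf{Q}_{k-1}$ are given by

$$\mathbf{F}_{k-1} = \begin{bmatrix} 1 & T \\ 0 & 1 \end{bmatrix} \otimes \mathbf{I}_d, \qquad \mathbf{Q}_{k-1} = \sigma_q^2 \begin{bmatrix} T^3/3 & T^2/2 \\ T^2/2 & T \end{bmatrix} \otimes \mathbf{I}_d,$$

where T is the sampling interval, $\mathbf{I}_d$ is the $d \times d$ identity matrix, ⊗ denotes the Kronecker product, and $\sigma_q$ is the process noise standard deviation for the centroid position.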
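The Kronecker structure of the CV model can be illustrated with a short sketch that builds the matrices for a state ordered as [position, velocity] and performs one Gaussian prediction step under (3). The continuous white-noise-acceleration form used for Q is an assumption of this sketch, and the function names and numbers are hypothetical.

```python
import numpy as np


def cv_model(T, sigma_q, d=2):
    """Nearly constant velocity model for a state ordered [position (d), velocity (d)].

    F follows the Kronecker form; the Q form (continuous white-noise-acceleration
    discretization) is an assumption of this sketch.
    """
    F1 = np.array([[1.0, T], [0.0, 1.0]])
    Q1 = sigma_q**2 * np.array([[T**3 / 3.0, T**2 / 2.0],
                                [T**2 / 2.0, T]])
    I_d = np.eye(d)
    return np.kron(F1, I_d), np.kron(Q1, I_d)


def predict_kinematics(m, P, F, Q):
    """Standard Gaussian prediction of the kinematic state under (3)."""
    return F @ m, F @ P @ F.T + Q


F, Q = cv_model(T=0.1, sigma_q=1.0)
m = np.array([0.0, 0.0, 10.0, 5.0])   # hypothetical [px, py, vx, vy]
P = np.eye(4)
m_pred, P_pred = predict_kinematics(m, P, F, Q)
print(m_pred)   # values: 1.0, 0.5, 10.0, 5.0 (position advanced by T * velocity)
```

The Kronecker product with $\mathbf{I}_d$ applies the same one-dimensional CV dynamics independently to each Cartesian axis.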

2.1.3. Measurement Model

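At time step k, a sensor generates a set of $n_k$ measurements of an EO, denoted as $\mathbf{Z}_k = \{\mathbf{z}_k^j\}_{j=1}^{n_k}$. The following measurement model is proposed by [10,11,13,18]:

$$\mathbf{z}_k^j = \mathbf{H}\mathbf{x}_k + \mathbf{e}_k^j, \quad \mathbf{e}_k^j \sim \mathcal{N}\!\left(\mathbf{0},\ \lambda\,\mathbf{R}(\theta_k)\,\mathrm{diag}(\mathbf{s}_k)\,\mathbf{R}(\theta_k)^T + \mathbf{C}\right), \quad (4)$$

where $\mathbf{z}_k^j$ is the Cartesian position measurement; $\mathbf{H}$ is a known matrix; the scaling factor $\lambda$ provides a good Gaussian approximation for measurement sources uniformly distributed across the object surface; $\mathbf{C}$ is the covariance of the true-measurement noise; and $\mathbf{R}(\theta_k)$ is the rotation matrix defined as $\mathbf{R}(\theta_k) = \begin{bmatrix} \cos\theta_k & -\sin\theta_k \\ \sin\theta_k & \cos\theta_k \end{bmatrix}$.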

2.2. Motivations

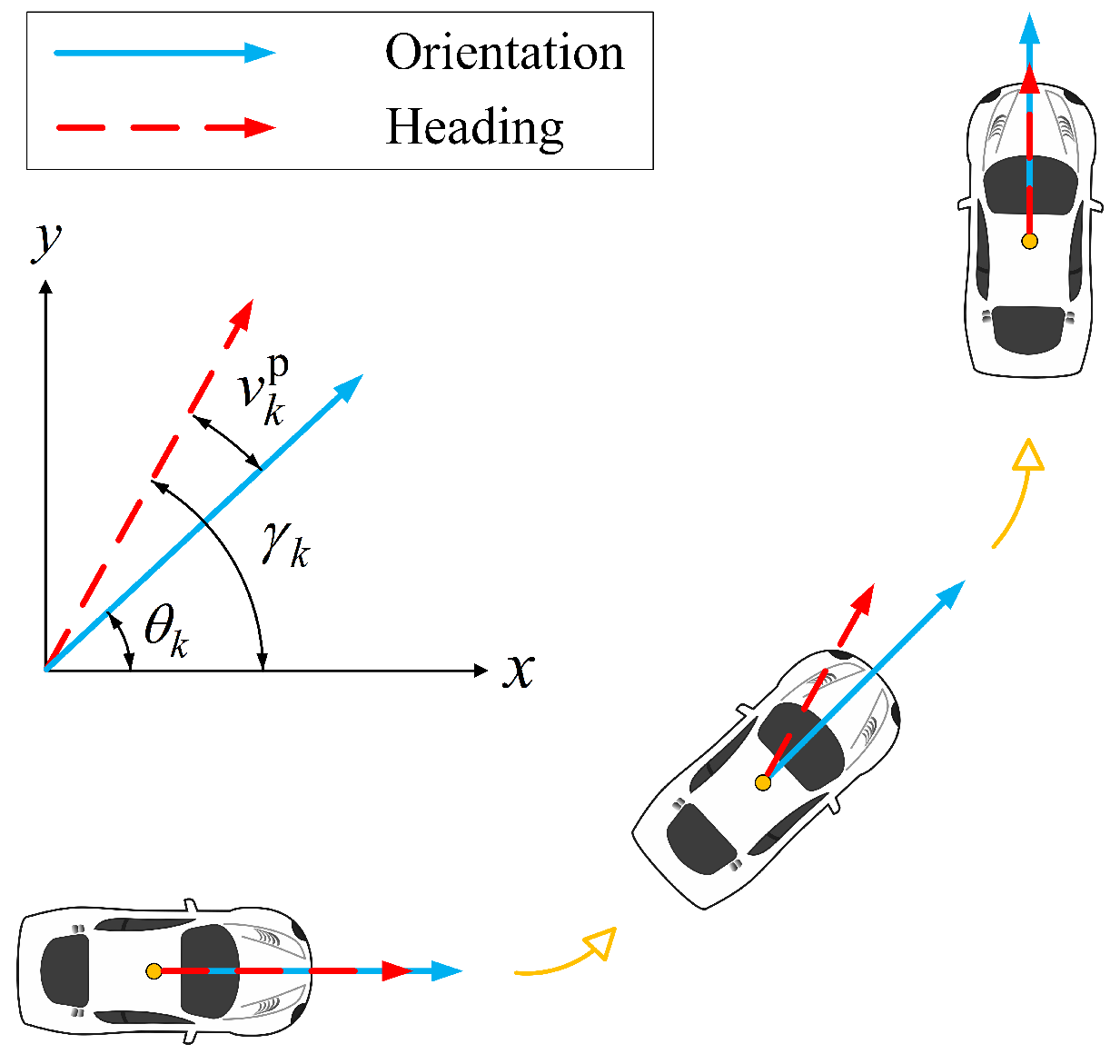

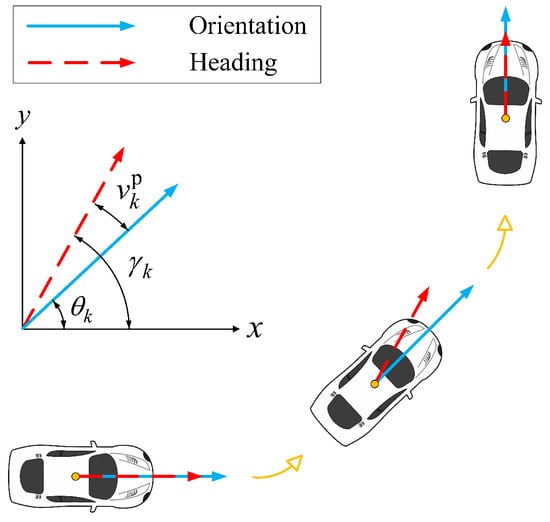

Orientation angle estimation is important for EOT. In practice, the orientation angle $\theta_k$ is closely related to the heading angle $\phi_k$. As shown in Figure 1, a nonzero angle $\delta_k$ may appear between $\theta_k$ and $\phi_k$, especially during turns.

Figure 1.

Illustration of orientation angle and heading angle.

Remark 1. (a) In [20], the orientation angle is defined as the angle of the vehicle's longitudinal axis. In comparison, the heading angle is the direction of motion of the centroid of the EO, which can vary during a turning maneuver. We follow the definitions of the heading angle and the orientation angle in [20], which are also used in [21].

(b) In fact, $\theta_k$ and $\phi_k$ are not always identical, but they are highly dependent. This is because the object centroid does not coincide with its rotation center [20]. $\theta_k$ is almost equal to $\phi_k$ during straight-line motion, whereas a systematic error is induced between them during a turning maneuver. Equation (21) in [20], which relates the heading to the orientation, is derived for the target vehicle. However, this equation is overdetermined, and analytic solutions for some of its variables are not available in practical applications. Thus, a new model is needed to describe the coupling between the heading angle and the orientation angle.

(c) In [22], a sideslip angle, defined as the angle between the heading and the orientation, is estimated using a large-scale experimental dataset of 216 maneuvers. This indicates that the heading angle and the orientation angle are indeed distinct.

Since $\delta_k$ is a small, time-varying angle that is difficult to measure [23], we treat it as a random variable and use a heading constraint to describe the interdependency of $\theta_k$ and $\phi_k$, as follows:

$$\theta_k = \mathrm{arctan2}(v_{y,k}, v_{x,k}) + \delta_k, \quad \delta_k \sim \mathcal{N}(0, \sigma_\delta^2), \quad (5)$$

where $\delta_k$ is the Gaussian noise, $\sigma_\delta^2$ is the noise variance, arctan2 refers to a four-quadrant inverse tangent function, and $\mathbf{v}_k = [v_{x,k}, v_{y,k}]^T$ is the Cartesian velocity vector, which can be obtained from the kinematic state $\mathbf{x}_k$. The heading constraint provides useful information on the orientation angle. However, it is difficult to handle both transition Equation (2) and heading constraint (5) directly in estimation.

First, the estimation approach should use the information in both transition Equation (2) and heading constraint (5) effectively. Second, the value ranges of the inverse tangent function and of $\theta_k$ in (5) may differ, so the discontinuity at the boundaries of the range of the inverse tangent function must be handled carefully. Third, $\theta_k$ always enters the measurement equation through a rotation matrix, which contains the terms $\cos\theta_k$ and $\sin\theta_k$ and therefore makes the measurement equation highly nonlinear in the orientation angle.
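To make the second difficulty concrete, the sketch below compares the angle residual in (5) with a residual formed directly from the orientation vector for an object moving almost along the negative x-axis. The convention of storing $\theta$ near $+\pi$, the helper `wrap_to_pi`, and the numbers are illustrative assumptions.

```python
import math


def wrap_to_pi(angle):
    """Map an angle (rad) to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


# Hypothetical object moving almost along the negative x-axis
vx, vy = -10.0, -0.01
heading = math.atan2(vy, vx)      # atan2 returns values in [-pi, pi]; here close to -pi
theta = math.pi - 0.005           # orientation angle stored near +pi

# Angle residual of (5) without wrapping: a spurious jump of about 2*pi
naive_residual = theta - heading
# Wrapping repairs it, but every angle operation must remember to wrap
wrapped_residual = wrap_to_pi(theta - heading)

# Orientation-vector residual: no branch cut is involved
u = (math.cos(theta), math.sin(theta))
speed = math.hypot(vx, vy)
vector_residual = (u[0] - vx / speed, u[1] - vy / speed)

print(round(naive_residual, 3), round(wrapped_residual, 3))   # 6.277 -0.006
print(round(math.hypot(*vector_residual), 3))                 # 0.006
```

The unwrapped angle residual is about $2\pi$ even though the true discrepancy is only 0.006 rad. The vector residual is small without any special treatment, which motivates working with the sine and cosine of the angle.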

In view of the above, we propose a model that uses an orientation vector with a heading constraint, together with a corresponding filtering approach. The orientation vector is composed of the sine and cosine of the orientation angle. To obtain a recursive algorithm, a variational Bayesian (VB) approach is proposed, and a pseudo-measurement constructed from the OVB heading constraint is incorporated into the VB estimation framework.

Remark 2. (a) Without further information, we model the angle $\delta_k$ in (5) by a Gaussian distribution for simplicity. This simple assumption can describe both the interdependency of the heading angle and the orientation angle and the randomness of $\delta_k$.

(b) The variance $\sigma_\delta^2$ is related to the object's motion and mainly depends on the maneuverability of the EO: the higher the maneuverability, the larger $\sigma_\delta^2$ could be.

3. Modeling

3.1. EO State

In this paper, we focus on tracking a single elliptical EO. The EO state is described by the kinematic state $\mathbf{x}_k$, the shape $\mathbf{s}_k$, and the orientation vector $\mathbf{u}_k$. $\mathbf{x}_k$ contains the centroid position $\mathbf{p}_k$ and velocity $\mathbf{v}_k$. Following [13], the shape is parameterized by the squares of the semi-axes lengths, and each element of $\mathbf{s}_k$ is modeled by an inverse Gamma distribution. The modeling of the orientation vector is discussed below. For notational simplicity, let $\boldsymbol{\Theta}_k = \{\mathbf{x}_k, \mathbf{s}_k, \mathbf{u}_k\}$.

3.2. Orientation Vector Model

3.2.1. Orientation Vector Distribution and Rotation Matrix

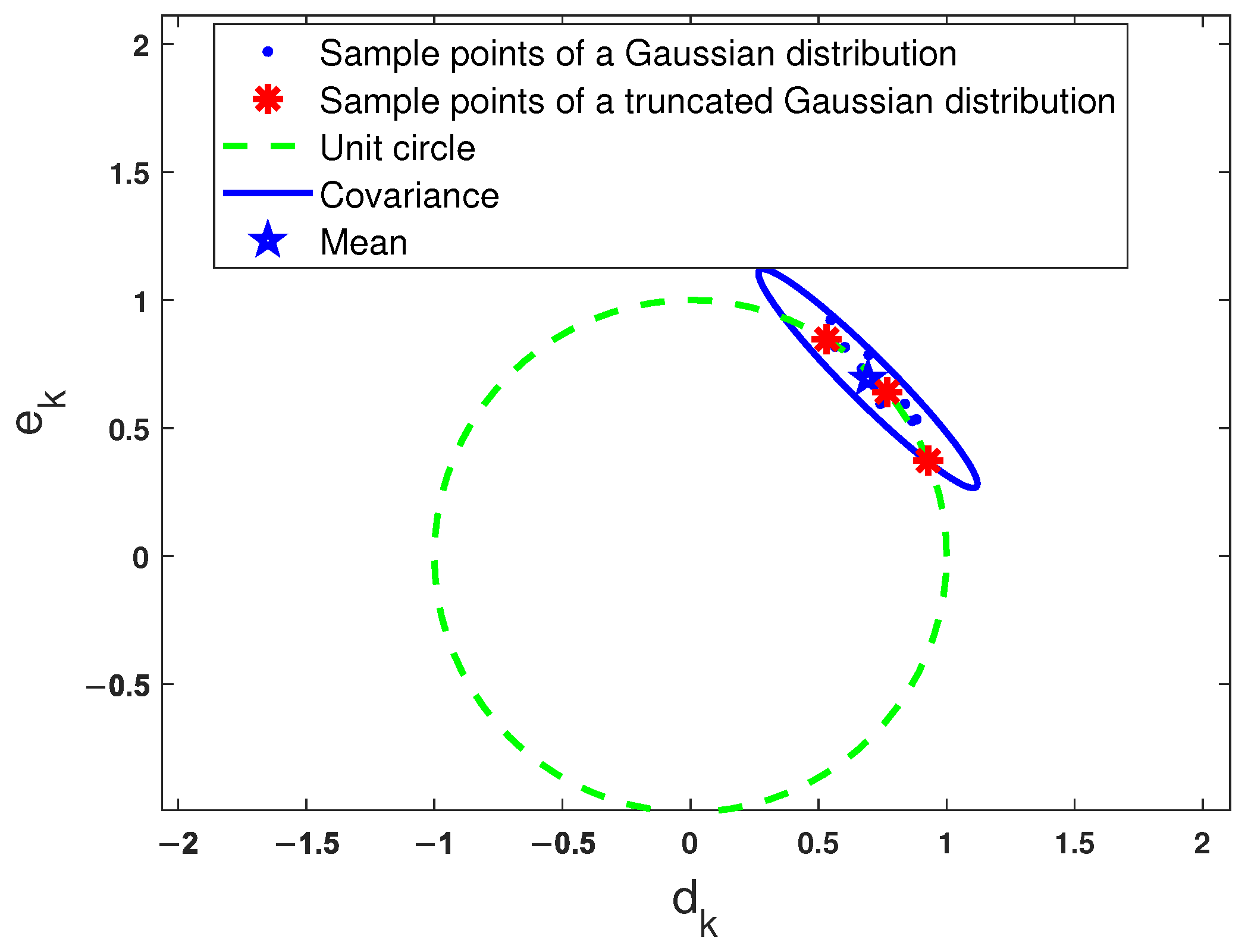

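The orientation vector $\mathbf{u}_k$ can be modeled as a truncated Gaussian distributed vector with PDF

$$p(\mathbf{u}_k) = \mathcal{TN}(\mathbf{u}_k; \hat{\mathbf{u}}_k, \mathbf{P}^u_k) = \frac{1}{c_k}\,\mathbb{1}_{\Omega}(\mathbf{u}_k)\,\mathcal{N}(\mathbf{u}_k; \bar{\mathbf{u}}_k, \bar{\mathbf{P}}^u_k), \quad (6)$$

where $\mathcal{TN}(\cdot)$ denotes the truncated Gaussian distribution; $\hat{\mathbf{u}}_k$ and $\mathbf{P}^u_k$ are the mean and covariance of the truncated Gaussian distribution, respectively; $\bar{\mathbf{u}}_k$ and $\bar{\mathbf{P}}^u_k$ are the mean and covariance of the "parent" Gaussian distribution, respectively, following [24]; $\mathbb{1}_{\Omega}(\cdot)$ is the indicator function on $\Omega$; and $c_k$ is the corresponding normalization factor. $\Omega$ specifies the support of the truncated Gaussian density, i.e., the region satisfying the following nonlinear constraint:

$$\|\mathbf{u}_k\| = 1, \quad (7)$$

where $\|\cdot\|$ denotes the 2-norm.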

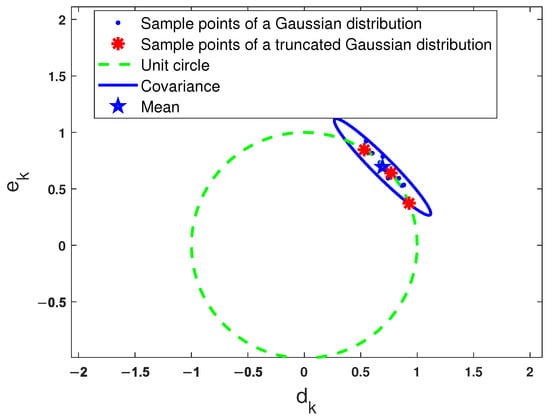

An illustration of the truncated Gaussian distribution is depicted in Figure 2. Each sample of $\mathbf{u}_k$ (in red) can be regarded as a sample generated from the parent Gaussian PDF (samples in red and blue) that satisfies constraint (7).

Figure 2.

Illustration of a truncated Gaussian distribution (the set of red asterisk sample points).

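Then, the rotation matrix can be expressed in terms of the orientation vector $\mathbf{u}_k = [u_{k,1}, u_{k,2}]^T$ satisfying constraint (7), as follows:

$$\mathbf{R}(\mathbf{u}_k) = \begin{bmatrix} u_{k,1} & -u_{k,2} \\ u_{k,2} & u_{k,1} \end{bmatrix}. \quad (9)$$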

Remark 3. (a) There are two reasons for modeling the orientation vector in (6) as a truncated Gaussian distribution. First, this distribution naturally accommodates constraint (7). Second, a Gaussian distribution is fully characterized by its mean and covariance, which makes it easy to parameterize, and a truncated Gaussian distribution retains this simplicity even when the variable is constrained.

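(b) Any vector that satisfies constraint (7) can be written as $[\cos\theta_k, \sin\theta_k]^T$ for some angle $\theta_k$ and can therefore be regarded as an orientation vector $\mathbf{u}_k$. Thus, the rotation matrix can be written as in (9).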
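The role of constraint (7) in the rotation matrix can be checked numerically. The sketch below assumes the ordering $\mathbf{u}_k = [\cos\theta_k, \sin\theta_k]^T$ and uses hypothetical helper names. It shows that the matrix built from the orientation vector equals $\mathbf{R}(\theta_k)$ when $\|\mathbf{u}_k\| = 1$, and that $\mathbf{R}^T\mathbf{R} = \|\mathbf{u}\|^2\mathbf{I}$ in general.

```python
import numpy as np


def rotation_from_angle(theta):
    """Counterclockwise rotation matrix R(theta)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])


def rotation_from_vector(u):
    """Rotation matrix built from u = [cos(theta), sin(theta)]^T (assumed ordering)."""
    u1, u2 = u
    return np.array([[u1, -u2], [u2, u1]])


theta = 0.7
u = np.array([np.cos(theta), np.sin(theta)])
R = rotation_from_vector(u)

# For a unit vector, R equals R(theta) and is orthogonal with det = 1.
print(np.allclose(R, rotation_from_angle(theta)), np.allclose(R.T @ R, np.eye(2)))  # True True

# If (7) is violated, R^T R = ||u||^2 I, i.e., R also scales the extension.
R_bad = rotation_from_vector(2.0 * u)
print(np.allclose(R_bad.T @ R_bad, 4.0 * np.eye(2)))  # True
```

The rotation matrix is linear in the orientation vector, so the unit-norm condition is exactly what makes it a proper rotation. This is why the norm constraint must be enforced after each unconstrained step.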

3.2.2. Dynamic Model of the Orientation Vector

3.3. Heading Constraint on the Orientation Vector

To introduce the heading constraint (5) into the OVB estimation framework, we substitute (5) into the orientation vector $\mathbf{u}_k = [\cos\theta_k, \sin\theta_k]^T$. Then, $\mathbf{u}_k$ can be expressed as follows.

where , ; is the Cartesian velocity vector in ; is the zero matrix; ; and .

Constraint Equation (11) can be rewritten equivalently in the form of a pseudo-measurement equation:

Remark 4. (a) Orientation-based approaches may suffer from ambiguity, i.e., the same ellipse can be parameterized in different ways [16]. For example, the semi-axes length estimates may be swapped while the orientation angle estimate deviates from the true value by $\pi/2$. The constraints in (5) and (12) describe the interdependency of the heading angle and the orientation angle, which aligns the orientation angle with the heading angle and prevents ambiguous EO representations.

(b) Special treatment is required to track an EO moving along the negative (positive) x-axis when the value range of (5) is limited to $(-\pi, \pi]$ ($[0, 2\pi)$), because the heading angle then crosses the discontinuity at the range boundary. Moreover, the unscented transformation (UT) of the inverse tangent function in (5) may give wrong results in these boundary regions [26]. In contrast, (12) does not involve the inverse tangent function and thus provides a convenient solution to this problem.

3.4. New Measurement Model

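At time step k, assume that a set of $n_k$ measurements $\mathbf{Z}_k = \{\mathbf{z}_k^j\}_{j=1}^{n_k}$ is used for filtering, where $\mathbf{z}_k^j$ is a two-dimensional (2D) Cartesian position measurement. For radar sensor applications, the polar measurement consists of the measured range $r_k^j$ and bearing $b_k^j$, from which the radar position measurement is obtained by the standard coordinate conversion, i.e., $x_k^j = r_k^j \cos b_k^j$ and $y_k^j = r_k^j \sin b_k^j$. By assuming that each position measurement source lies on the elliptical object surface and follows a uniform spatial distribution [10], the new measurement model can be written as

$$\mathbf{z}_k^j = \mathbf{H}\mathbf{x}_k + \mathbf{e}_k^j, \quad \mathbf{e}_k^j \sim \mathcal{N}\!\left(\mathbf{0},\ \lambda\,\mathbf{R}(\mathbf{u}_k)\,\mathrm{diag}(\mathbf{s}_k)\,\mathbf{R}(\mathbf{u}_k)^T + \mathbf{C}\right), \quad (13)$$

where $\mathbf{H}$ is the measurement matrix; $\mathbf{e}_k^j$ is the Gaussian noise that accounts for both the shape uncertainty and the true-measurement noise; $\mathbf{R}(\mathbf{u}_k)$ is the rotation matrix defined in (9); $\mathbf{s}_k$ is the shape; the scaling factor $\lambda$ is suitable for scattering centers uniformly distributed across the object extension [10]; and $\mathbf{C}$ is the covariance of the true-measurement noise.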
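To illustrate why a single scaling factor suffices for uniformly distributed sources, the sketch below simulates measurements from an ellipse and compares their sample covariance with $\lambda\mathbf{R}(\mathbf{u})\,\mathrm{diag}(\mathbf{s})\,\mathbf{R}(\mathbf{u})^T + \mathbf{C}$. The value $\lambda = 1/4$ is the per-coordinate variance of a uniform distribution on the unit disk and is an assumption here. All other numbers and helper names are hypothetical, and the polar conversion from the text is included for completeness.

```python
import numpy as np


def polar_to_cartesian(r, b):
    """Standard conversion of range r and bearing b to a 2D position."""
    return np.stack([r * np.cos(b), r * np.sin(b)], axis=-1)


def rotation_from_vector(u):
    return np.array([[u[0], -u[1]], [u[1], u[0]]])


def simulate_measurements(center, u, semi_axes, C, n, rng):
    """Sources uniform over an ellipse with orientation vector u, plus N(0, C) sensor noise."""
    r = np.sqrt(rng.uniform(0.0, 1.0, n))          # sqrt gives a uniform density on the disk
    phi = rng.uniform(0.0, 2.0 * np.pi, n)
    disk = np.stack([r * np.cos(phi), r * np.sin(phi)], axis=1)
    sources = (disk * semi_axes) @ rotation_from_vector(u).T + center
    return sources + rng.multivariate_normal(np.zeros(2), C, size=n)


rng = np.random.default_rng(0)
center = np.array([5.0, 2.0])                      # hypothetical centroid (H x_k)
theta = np.pi / 6
u = np.array([np.cos(theta), np.sin(theta)])
semi_axes = np.array([2.5, 1.0])                   # hypothetical semi-axes lengths
s = semi_axes**2                                   # shape = squared semi-axes lengths
C = 0.01 * np.eye(2)                               # hypothetical true-measurement noise
lam = 0.25                                         # scaling factor assumed for a uniform ellipse

Z = simulate_measurements(center, u, semi_axes, C, 200_000, rng)
R = rotation_from_vector(u)
model_cov = lam * R @ np.diag(s) @ R.T + C

print(np.round(np.cov(Z, rowvar=False), 2))
print(np.round(model_cov, 2))
```

The two printed matrices agree up to sampling error. In this setting, the Gaussian approximation in (13) matches the first two moments of the uniform-source model exactly.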

4. Variational Bayesian Approach to EOT

4.1. Pseudo-Measurement

Two nonlinear constraints, (7) and (12), are involved in this work. We use the pseudo-measurement method [27] to handle (12) in this subsection and the projection method [25,28] to handle (7) in Section 4.3.

To obtain an additive-noise representation, (12) can be approximated using a Taylor series expansion. The constraint in (12) is a function of the kinematic state $\mathbf{x}_k$ and the noise $\delta_k$. The Gaussian noise $\delta_k$ defined in (5) is assumed to be small and zero-mean. The velocity estimate can be obtained from the kinematic estimate of the previous iteration. Thus, (12) can be approximated by the following model via a first-order Taylor series expansion around the previous-iteration kinematic estimate and $\delta_k = 0$:

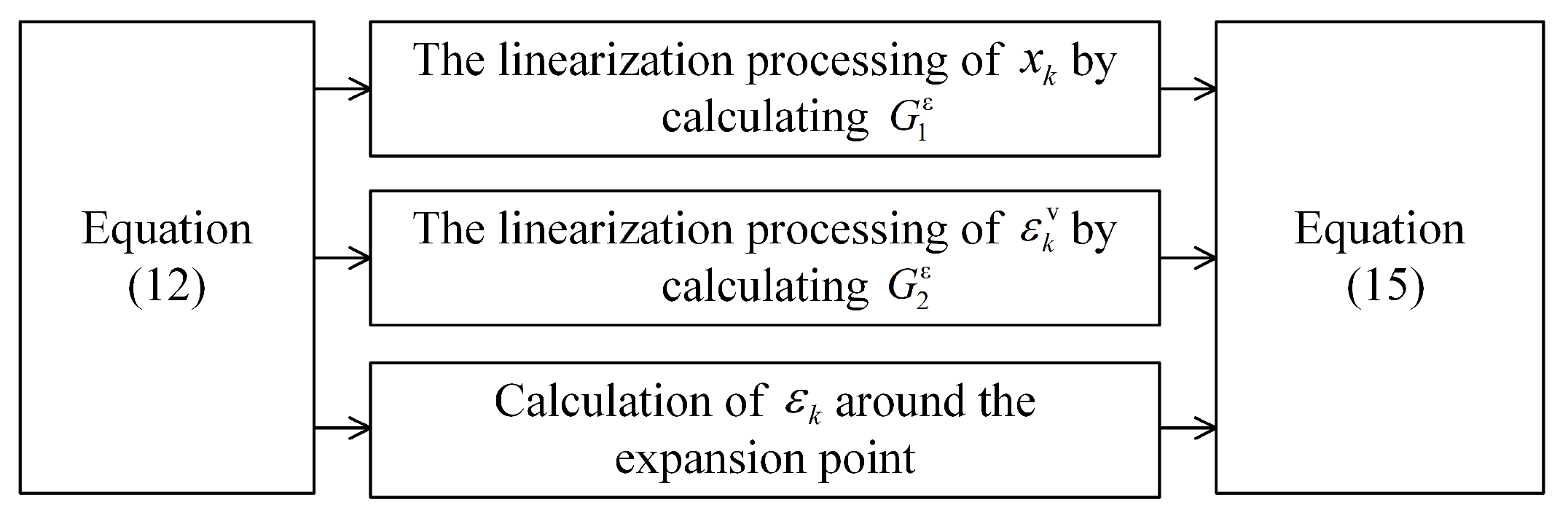

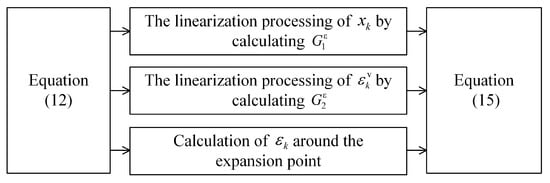

where h.o.t. denotes the higher-order terms, , , and . The linearization process scheme for obtaining (15) is shown in Figure 3.

Figure 3.

The linearization process scheme for obtaining (15).
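One plausible reading of the linearization (15), together with the Gaussian moment matching of its noise term, is sketched below. Because the exact forms of (12) and (15)–(17) are not reproduced here, the sketch assumes the constraint $\mathbf{u}_k = \mathbf{R}(\delta_k)\mathbf{v}_k/\|\mathbf{v}_k\|$, which follows from substituting (5) into $[\cos\theta_k, \sin\theta_k]^T$. It linearizes $\mathbf{v}/\|\mathbf{v}\|$ around a previous-iteration estimate and drops second-order cross terms. It then matches the first two moments of the $\delta$-dependent term using $\mathrm{E}[\cos\delta] = e^{-\sigma^2/2}$, $\mathrm{E}[\cos^2\delta] = (1 + e^{-2\sigma^2})/2$, and $\mathrm{E}[\sin\delta\cos\delta] = 0$. The names and numbers are hypothetical, and the result is not necessarily identical to (17a) and (17b).

```python
import numpy as np

J = np.array([[0.0, -1.0], [1.0, 0.0]])               # rotation by +90 degrees
G = np.hstack([np.zeros((2, 2)), np.eye(2)])          # selects velocity from [px, py, vx, vy]


def linearize_heading_constraint(x_hat, sigma_delta):
    """First-order model u ~ n + H_x (x - x_hat) + w for the assumed constraint
    u = R(delta) v / ||v||, v = G x, delta ~ N(0, sigma_delta^2).

    Returns n = v_hat/||v_hat||, the Jacobian H_x, and the moment-matched mean and
    covariance of w = (R(delta) - I) n.
    """
    v_hat = G @ x_hat
    speed = np.linalg.norm(v_hat)
    n = v_hat / speed
    H_x = (np.eye(2) - np.outer(n, n)) / speed @ G       # Jacobian of v/||v|| times G
    e_cos = np.exp(-0.5 * sigma_delta**2)                 # E[cos(delta)]
    e_cos2 = 0.5 * (1.0 + np.exp(-2.0 * sigma_delta**2))  # E[cos^2(delta)]
    e_sin2 = 1.0 - e_cos2                                 # E[sin^2(delta)]
    w_mean = (e_cos - 1.0) * n
    w_cov = (e_cos2 - e_cos**2) * np.outer(n, n) + e_sin2 * np.outer(J @ n, J @ n)
    return n, H_x, w_mean, w_cov


x_hat = np.array([0.0, 0.0, 8.0, 6.0])                 # hypothetical previous-iteration estimate
n, H_x, w_mean, w_cov = linearize_heading_constraint(x_hat, sigma_delta=0.1)

# Monte Carlo check of the matched moments
rng = np.random.default_rng(1)
delta = rng.normal(0.0, 0.1, 200_000)
rotated = np.stack([np.cos(delta) * n[0] - np.sin(delta) * n[1],
                    np.sin(delta) * n[0] + np.cos(delta) * n[1]], axis=1)
w = rotated - n
print(np.round(w.mean(axis=0), 4), np.round(w_mean, 4))
```

The Jacobian of $\mathbf{v}/\|\mathbf{v}\|$ is $(\mathbf{I} - \mathbf{n}\mathbf{n}^T)/\|\mathbf{v}\|$. It has no component along the heading direction, so this pseudo-measurement constrains only the direction of the velocity, not the speed.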

To incorporate (15) into the OVB framework for object tracking, the distribution of the noise term in (15) is approximated by a Gaussian distribution using the moment-matching method; that is, we assume that this noise is Gaussian with the same first two moments as the original noise term. Then, the resulting pseudo-measurement constructed from (15) can be approximated as

4.2. Likelihood Function

By using the matched linearization method proposed in [4], (13) can be rewritten as

where and . denotes the expectation of with respect to the variable . and are one-step predictions of the orientation vector and the shape at time step k, respectively. Note that the above matched linearization is applied to the noise rather than to the state to be estimated, so that the resulting linear model fits the VB framework.

Remark 5. (a) The measurement equation (13) is linear in the kinematic state. However, (13) shows that the measurements have two sources of uncertainty: the extension and the true-measurement noise. Following [4], to address the lack of conjugacy caused by the additive true-measurement noise covariance in (13), the matched linearization method is adopted to obtain Equation (18), which facilitates the derivation of the tracking algorithm within the VB framework.

(b) The proof of (18) is given in Appendix A, which justifies that (18) is a matched linearization form of (13).

Then, the likelihood function of the true measurements can be obtained as

We assume that and is the pseudo-measurement defined in (16). Then, the likelihood function is

According to (16), if , we can obtain

The above equation provides coupling information between the kinematic state and the orientation vector.

4.3. Prediction

Before $\mathbf{Z}_k$ is received at time step k, all available measurement information is contained in $\mathbf{Z}^{k-1} = \{\mathbf{Z}_1, \ldots, \mathbf{Z}_{k-1}\}$. To obtain a recursive filter, we make the following standard assumption:

where , , and are the parameters in the distributions of , , and , respectively.

Under assumption (22), for model (14) we directly obtain the predicted densities of the kinematic state and shape as

where

For the orientation vector $\mathbf{u}_k$, the predicted density is assumed to be

As illustrated in Figure 2, each sample of the truncated Gaussian distribution in (27) can be regarded as a sample generated from its parent Gaussian distribution subject to constraint (7). In this case, in accordance with (8), (27) can be equivalently formulated as [25]

Thus, we consider a two-stage estimation scheme to obtain the parameters in (27). First, we derive an analytical result under the Gaussian assumption in (28), i.e., ignoring constraint (7), to obtain the unconstrained parameters. Second, we apply constraint (7) to determine the constrained parameters.

In the first stage, we use the assumption in (22) and the dynamic model in (10) to calculate the predicted parameters of $\mathbf{u}_k$. However, the dynamic matrix in (10) is a function of the process noise, which makes it difficult to calculate the distribution in (28) exactly. To derive an analytical result under the assumption in (28), a moment-matching method is used: we calculate the expectation and covariance of $\mathbf{u}_k$ conditioned on $\mathbf{Z}^{k-1}$ without the constraint, i.e., $\bar{\mathbf{u}}_{k|k-1}$ and $\bar{\mathbf{P}}^u_{k|k-1}$. Details of $\bar{\mathbf{u}}_{k|k-1}$ and $\bar{\mathbf{P}}^u_{k|k-1}$ are given in Appendix B.
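One way to carry out this moment matching is sketched below. It assumes that (10) results from the random-walk model (2), i.e., $\mathbf{u}_k = \mathbf{R}(w_{\theta,k-1})\mathbf{u}_{k-1}$, which gives a dynamic matrix that depends on the noise as described above. Writing $\mathbf{R}(w) = \cos w\,\mathbf{I} + \sin w\,\mathbf{J}$ with $\mathbf{J}$ a 90° rotation, the predicted mean and second moment follow from the Gaussian identities for $\mathrm{E}[\cos w]$, $\mathrm{E}[\cos^2 w]$, and $\mathrm{E}[\sin w \cos w] = 0$. The function name and inputs are hypothetical, and the expressions are not necessarily those of Appendix B.

```python
import numpy as np

J = np.array([[0.0, -1.0], [1.0, 0.0]])


def predict_orientation_vector(m, P, sigma_theta):
    """Moment-matched prediction of u_k = R(w) u_{k-1}, w ~ N(0, sigma_theta^2),
    where u_{k-1} has mean m and covariance P and is independent of w.

    Uses R(w) = cos(w) I + sin(w) J and E[sin(w) cos(w)] = 0.
    """
    e_cos = np.exp(-0.5 * sigma_theta**2)
    e_cos2 = 0.5 * (1.0 + np.exp(-2.0 * sigma_theta**2))
    e_sin2 = 1.0 - e_cos2
    M = P + np.outer(m, m)                         # second moment of u_{k-1}
    second = e_cos2 * M + e_sin2 * J @ M @ J.T     # second moment of u_k
    mean = e_cos * m
    return mean, second - np.outer(mean, mean)


m_prev = np.array([np.cos(0.3), np.sin(0.3)])      # hypothetical constrained estimate at k-1
P_prev = 0.01 * np.eye(2)
mean_pred, cov_pred = predict_orientation_vector(m_prev, P_prev, sigma_theta=0.2)
print(np.linalg.norm(mean_pred))   # about 0.980, i.e., inside the unit circle
```

The predicted mean shrinks by the factor $e^{-\sigma_\theta^2/2}$, so it no longer satisfies (7). This illustrates why a second, constraint-enforcing stage follows the unconstrained prediction.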

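In the second stage, constraint (7) is used to determine the constrained parameters of $\mathbf{u}_k$. The projection method proposed in [28] can be used to project the unconstrained estimate onto the nonlinear quadratic constraint set, and the constrained estimate is obtained by minimizing a constrained cost function, as follows:

$$\hat{\mathbf{u}}_{k|k-1} = \arg\min_{\mathbf{u}}\ (\mathbf{u} - \bar{\mathbf{u}}_{k|k-1})^T \mathbf{W} (\mathbf{u} - \bar{\mathbf{u}}_{k|k-1}) \quad \text{s.t.}\ \|\mathbf{u}\| = 1, \quad (29)$$

where $\mathbf{W}$ is a positive definite weighting matrix. The closed-form solution to the constrained optimization in (29) is given in [28].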
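For $\mathbf{W} = \mathbf{I}$, the minimizer of a cost of the form (29) on the unit circle is the normalized vector, because the point of a circle closest to any nonzero point lies on the ray through that point. The sketch below checks this by grid search. It also shows that for a non-isotropic $\mathbf{W}$ the minimizer generally differs from plain normalization, so normalization should be understood as the isotropic-weighting solution. The helper names and numbers are hypothetical.

```python
import numpy as np


def project_to_unit_circle(u_bar):
    """Closest unit-norm vector to u_bar in the Euclidean sense (W = I), i.e., normalization."""
    norm = np.linalg.norm(u_bar)
    if norm == 0.0:
        raise ValueError("the zero vector has no unique projection onto the unit circle")
    return u_bar / norm


def brute_force_projection(u_bar, W, num=200_000):
    """Grid search of (u - u_bar)^T W (u - u_bar) over the unit circle (for checking only)."""
    ang = np.linspace(-np.pi, np.pi, num, endpoint=False)
    U = np.stack([np.cos(ang), np.sin(ang)], axis=1)
    D = U - u_bar
    cost = np.einsum("ij,jk,ik->i", D, W, D)       # d_i^T W d_i for every grid point
    return U[np.argmin(cost)]


u_bar = np.array([0.6, 0.5])                        # hypothetical unconstrained estimate
print(project_to_unit_circle(u_bar))
print(brute_force_projection(u_bar, np.eye(2)))     # agrees up to the grid resolution
print(brute_force_projection(u_bar, np.diag([1.0, 10.0])))  # differs for non-isotropic W
```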

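According to [28,29], a solution of the constrained estimate in (29) is equivalent to the normalization of the unconstrained estimate $\bar{\mathbf{u}}_{k|k-1}$, i.e.,

$$\hat{\mathbf{u}}_{k|k-1} = \frac{\bar{\mathbf{u}}_{k|k-1}}{\|\bar{\mathbf{u}}_{k|k-1}\|}, \quad (30)$$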

and the corrected covariance is given by

4.4. Measurement Update Using the Variational Bayesian Approach

EOT aims to estimate the kinematic state and the extension of the object. In [10], the semi-axes lengths are assumed to be Gaussian distributed, and the extended Kalman filter (EKF) is used to find the posterior density. However, in this paper the squares of the semi-axes lengths (represented by the shape) are modeled by inverse Gamma distributions, and the state parameters are coupled, which makes the posterior density hard to calculate. The VB framework is well suited to such intractable posterior densities. Thus, we seek an approximate analytical solution using the VB approach.

4.4.1. Measurement Update Without Using Pseudo-Measurement

The measurement update approach in this subsection does not consider pseudo-measurement (16); the approach using (16) is discussed in the next subsection.

The factorized approximation of the posterior density is given by

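For each factor in (32), the optimal factorized density can be obtained using the following equation [4]:

$$\log q(\boldsymbol{\vartheta}) = \mathrm{E}_{\boldsymbol{\Theta}_k \setminus \boldsymbol{\vartheta}}\left[\log p(\mathbf{Z}_k, \boldsymbol{\Theta}_k \mid \mathbf{Z}^{k-1})\right] + c_{\boldsymbol{\vartheta}}, \quad \boldsymbol{\vartheta} \in \{\mathbf{x}_k, \mathbf{s}_k, \mathbf{u}_k\}, \quad (33)$$

where $\mathrm{E}_{\boldsymbol{\Theta}_k \setminus \boldsymbol{\vartheta}}[\cdot]$ denotes the expectation with respect to all variables in the set $\boldsymbol{\Theta}_k = \{\mathbf{x}_k, \mathbf{s}_k, \mathbf{u}_k\}$ except $\boldsymbol{\vartheta}$, and $c_{\boldsymbol{\vartheta}}$ is a constant.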

By iteratively applying (33), we can obtain $q(\mathbf{x}_k)$, $q(\mathbf{s}_k)$, and $q(\mathbf{u}_k)$ after initialization. By assuming conditional independence of the measurements at time step k, the joint density can be written as

where the measurement likelihood is given in (19) and the predicted density of the state is the product of (23), (24), and (27).
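The fixed-point structure of (33), in which the factors are updated alternately until convergence, can be illustrated on a much simpler model than (34). The model is a static 2D position observed through many measurements whose per-axis noise variances are unknown and have inverse Gamma priors, a textbook mean-field VB example. The sketch shows only the coordinate-ascent mechanics with a Gaussian factor and an inverse Gamma factor. It is not the EOT-OV0 update, which also involves the orientation vector and the matched linearization (18). All names and values are hypothetical.

```python
import numpy as np


def vb_position_and_noise(Z, m0, P0, alpha0, beta0, n_iter=10):
    """Mean-field VB for z_j = x + r_j, r_j ~ N(0, diag(sig2)), x ~ N(m0, P0),
    sig2_i ~ IG(alpha0_i, beta0_i), with q(x, sig2) = q(x) q(sig2).

    Each pass applies log q(a) = E_{-a}[log p(Z, x, sig2)] + const
    to q(x) (Gaussian) and q(sig2) (inverse Gamma) in turn.
    """
    n = Z.shape[0]
    alpha = alpha0 + 0.5 * n                      # shape parameters do not change across passes
    beta = beta0.copy()
    m, P = m0.copy(), P0.copy()
    P0_inv = np.linalg.inv(P0)
    for _ in range(n_iter):
        R_inv = np.diag(alpha / beta)             # E[1 / sig2_i] under q(sig2)
        P = np.linalg.inv(P0_inv + n * R_inv)     # q(x) = N(m, P)
        m = P @ (P0_inv @ m0 + R_inv @ Z.sum(axis=0))
        resid = Z - m
        beta = beta0 + 0.5 * (np.sum(resid**2, axis=0) + n * np.diag(P))
    return m, P, alpha, beta


rng = np.random.default_rng(2)
x_true = np.array([1.0, -2.0])                    # hypothetical centroid
sig_true = np.array([0.5, 2.0])                   # hypothetical noise std per axis
Z = x_true + rng.normal(size=(400, 2)) * sig_true

m, P, alpha, beta = vb_position_and_noise(
    Z, np.zeros(2), 100.0 * np.eye(2), np.ones(2), np.ones(2))
print(np.round(m, 2), np.round(beta / (alpha - 1.0), 2))   # posterior means of x and sig2
```

Each factor update uses only expectations under the other factor, e.g., $\mathrm{E}[1/\sigma_i^2] = \alpha_i/\beta_i$ and $\mathrm{E}[(z - x)^2] = (z - m)^2 + P_{ii}$. This is the same pattern that yields closed-form VB updates when conjugate Gaussian and inverse Gamma factors are used.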

Substituting (34) into (33), we can obtain

with

where the details of , , and are given in Appendix C.

Additionally, we define and , then .

4.4.2. Measurement Update Using Pseudo-Measurement

The above measurement update approach does not use pseudo-measurement (16), which carries information on the coupling between the orientation angle and the heading angle that can improve the estimation performance. In view of this, we introduce a measurement update that uses (16). Specifically, we replace the likelihood function in (34) with that in (20). Thus, the new measurement updates of $q(\mathbf{x}_k)$ and $q(\mathbf{u}_k)$ can be obtained by combining the contribution of (21) with (34). The update parameters of $q(\mathbf{s}_k)$ remain the same as those in the previous subsection and can be obtained using (40) and (41).

For $q(\mathbf{x}_k)$, substituting (21) into (33) yields

where $c_{\mathbf{x}}$ denotes any constant terms with respect to $\mathbf{x}_k$. By combining (46) with (38) and (39), we obtain the VB update of $q(\mathbf{x}_k)$ with pseudo-measurement (16), as follows.

For $q(\mathbf{u}_k)$, substituting (21) into (33) yields

where $c_{\mathbf{u}}$ denotes any constant terms with respect to $\mathbf{u}_k$. Given (42), (43), and (49), the VB update of $q(\mathbf{u}_k)$ can be obtained similarly to that of $q(\mathbf{x}_k)$, as follows.

The constrained estimate and the covariance correction can also be calculated using (44) and (45), respectively.

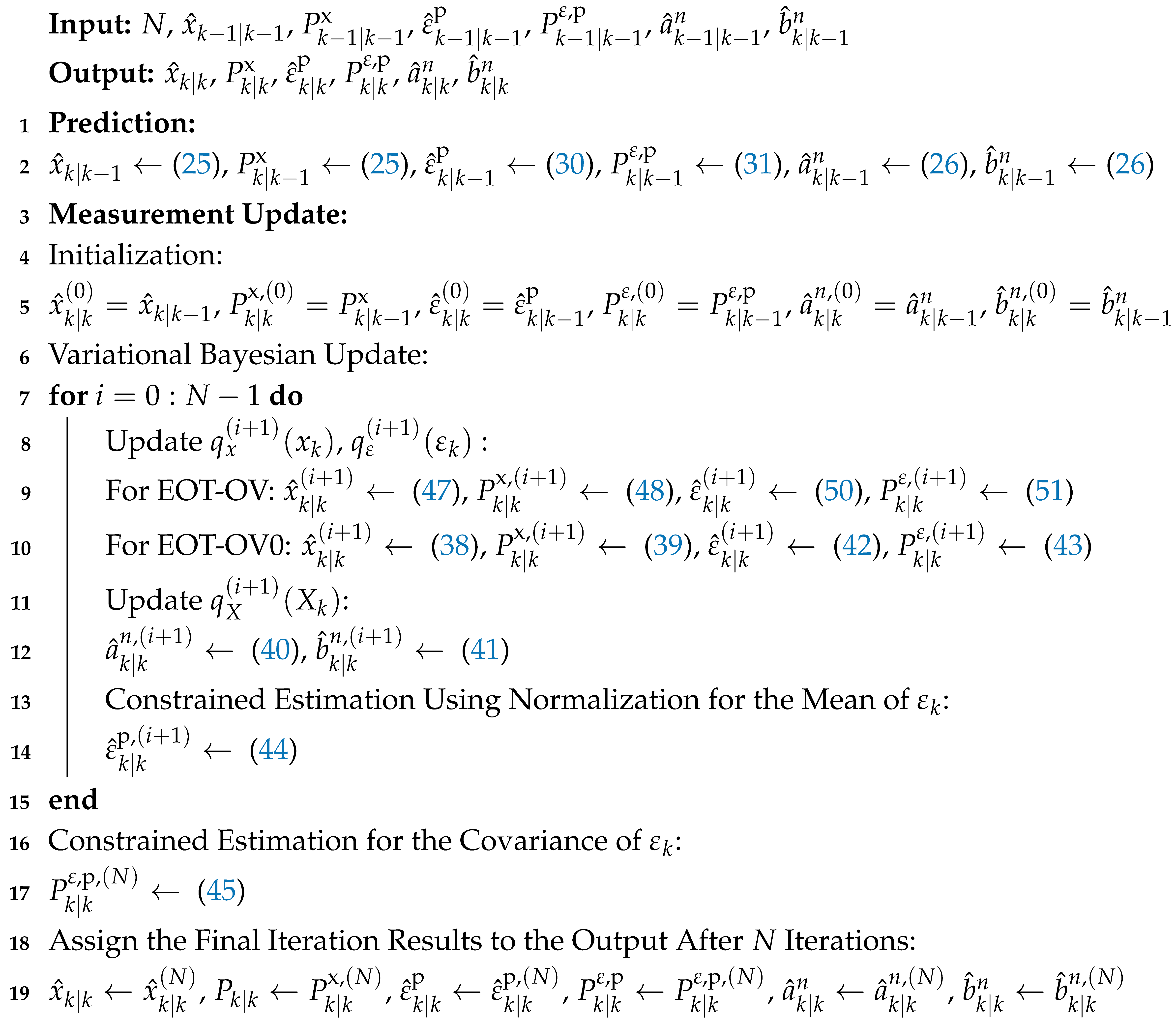

The algorithm using pseudo-measurement (16) in this part is referred to as “EOT-OV”. For comparison, the algorithm without using (16), which is proposed in Section 4.4.1, is referred to as “EOT-OV0”. One cycle of the proposed EOT-OV and EOT-OV0 is given in Algorithm 1, where N is a predetermined number of iterations.

Remark 6. (a) The proposed approaches recursively output estimates of the kinematic, shape, and orientation vector states through several iterations. As proven in [30], the iterations converge owing to a general property of the VB approach.

(b) The computational complexity of the proposed VB approaches can be approximated as at time step k, following [31] and [32]. Moreover, the tracking performance generally improves as the number of measurement points increases [33]. Therefore, a suitable tradeoff between performance and computational complexity needs to be considered in practical applications.

Algorithm 1.

One cycle of the proposed EOT-OV and EOT-OV0.

5. Results

In this section, our approaches are evaluated using simulated and real data. Our proposed algorithms (EOT-OV and EOT-OV0), the orientation-based EOT algorithm of [13] (EOT-OA), MEM-EKF proposed in [10], and the convolutional neural network (CNN)-based EOT algorithm NN-ETT proposed in [34] are compared. The root-mean-square errors (RMSEs) [2] of the centroid position and the orientation angle, as well as the average Gaussian–Wasserstein distances (GWDs) [10,35,36,37] of the joint centroid position and extension estimates over Monte Carlo (MC) runs, are used as performance metrics.
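For reference, the squared GWD between two ellipses with centers $\mathbf{m}_1, \mathbf{m}_2$ and extension matrices $\mathbf{X}_1, \mathbf{X}_2$ is $\|\mathbf{m}_1 - \mathbf{m}_2\|^2 + \mathrm{tr}\big(\mathbf{X}_1 + \mathbf{X}_2 - 2(\mathbf{X}_1^{1/2}\mathbf{X}_2\mathbf{X}_1^{1/2})^{1/2}\big)$. The sketch below computes it with a closed-form 2×2 matrix square root. The convention $\mathbf{X} = \mathbf{R}(\theta)\,\mathrm{diag}(a^2, b^2)\,\mathbf{R}(\theta)^T$ with semi-axes $a, b$ is an assumption, and conventions differ across papers, e.g., in whether the square root of the distance is reported. The check also shows that an ambiguous parameterization (swapped semi-axes and orientation shifted by $\pi/2$) describes the same ellipse and therefore has zero GWD.

```python
import numpy as np


def sqrtm_spd_2x2(M):
    """Principal square root of a 2x2 symmetric positive definite matrix, closed form."""
    s = np.sqrt(np.linalg.det(M))
    t = np.sqrt(np.trace(M) + 2.0 * s)
    return (M + s * np.eye(2)) / t


def extension_matrix(theta, semi_axes):
    """X = R(theta) diag(a^2, b^2) R(theta)^T (assumed convention)."""
    c, s = np.cos(theta), np.sin(theta)
    R = np.array([[c, -s], [s, c]])
    return R @ np.diag(np.asarray(semi_axes, dtype=float) ** 2) @ R.T


def gw_distance_sq(m1, X1, m2, X2):
    """Squared Gaussian Wasserstein distance between ellipses (m1, X1) and (m2, X2)."""
    S1 = sqrtm_spd_2x2(X1)
    cross = sqrtm_spd_2x2(S1 @ X2 @ S1)
    return float(np.sum((m1 - m2) ** 2) + np.trace(X1 + X2 - 2.0 * cross))


m = np.array([1.0, 2.0])
X_true = extension_matrix(0.4, [2.5, 1.0])
X_swapped = extension_matrix(0.4 + np.pi / 2, [1.0, 2.5])  # ambiguous parameterization
X_tilted = extension_matrix(0.9, [2.5, 1.0])               # genuine orientation error

print(abs(gw_distance_sq(m, X_true, m, X_swapped)) < 1e-9)  # True (same ellipse)
print(gw_distance_sq(m, X_true, m, X_tilted) > 0.0)         # True
```

Because the GWD is invariant to this ambiguity, orientation-angle RMSEs and per-axis plots remain useful for revealing swapped semi-axes that the GWD alone would not penalize.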

Following [13], the iteration number of VB approaches is set to 10 for all experiments, and a scaling factor is set to for the elliptical EO for all algorithms. For the shape dynamics, the forgetting factor is set to for all time steps. The CV model is used to model the object motion.

5.1. Experiments with Simulated Data

In the simulation, we consider an elliptical EO with semi-axes lengths of and that moves at a constant speed of .

At each time step, measurements are generated from a uniform distribution. The number of measurements is Poisson distributed with a mean of 10, and T is set to 0.1 s. Comparison results are obtained over Monte Carlo runs. The noise covariance in all the measurement models is set to . The initial kinematic state is , along with for all algorithms. The angle $\delta_k$ between the orientation and the heading is drawn according to the induced systematic error specified in [20], corrupted by additive noise uniformly distributed between and .

5.1.1. Scenario 1

This scenario considers an elliptical EO whose trajectory is a straight line with orientation angle . All the trackers are provided with the same motion parameters. The orientation angle parameters are initialized as and . For all algorithms except MEM-EKF and NN-ETT, the initial shape parameters are set to and . For EOT-OV, the variance in (5) is set to , and and are calculated using (17a) and (17b), respectively. As for MEM-EKF, the prior shape variables are specified using the mean vector and covariance . The vector is defined by , where and are semi-axis length 1 and semi-axis length 2, respectively. The process noise covariance for the shape variables is set to . The process noise variances for the kinematic state in (3) and the orientation angle in (2) are set to and , respectively. The used for NN-ETT can be calculated accordingly.

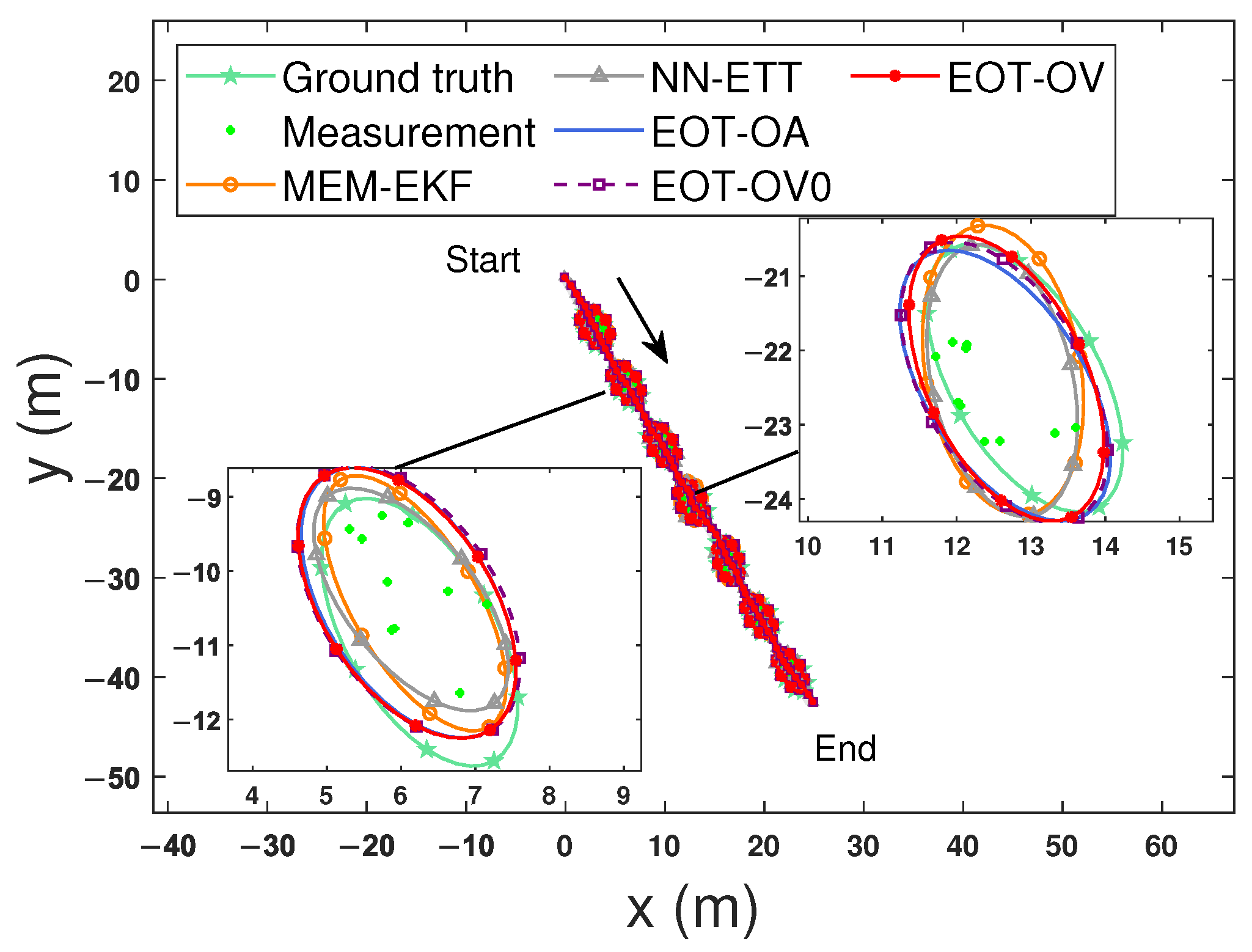

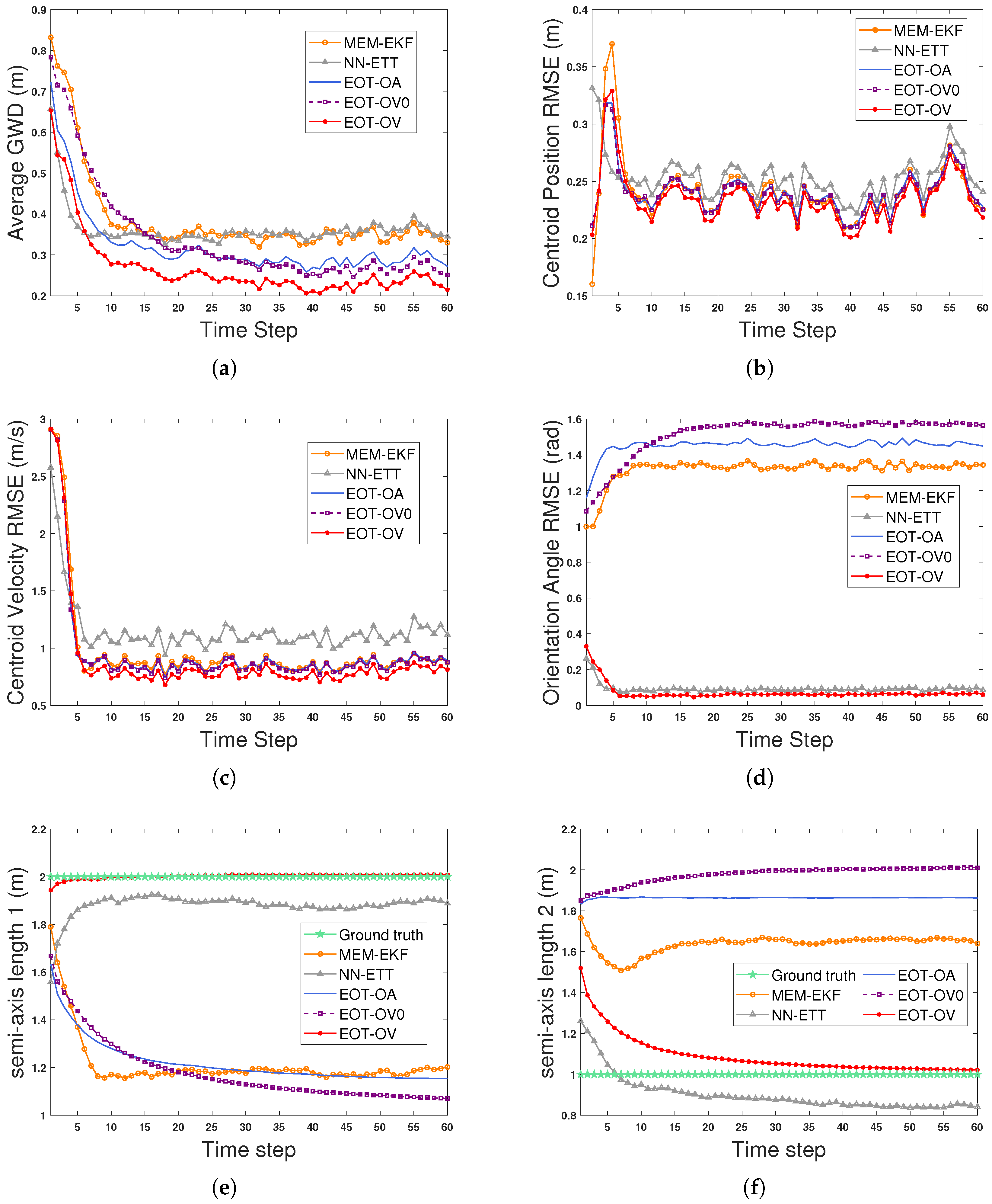

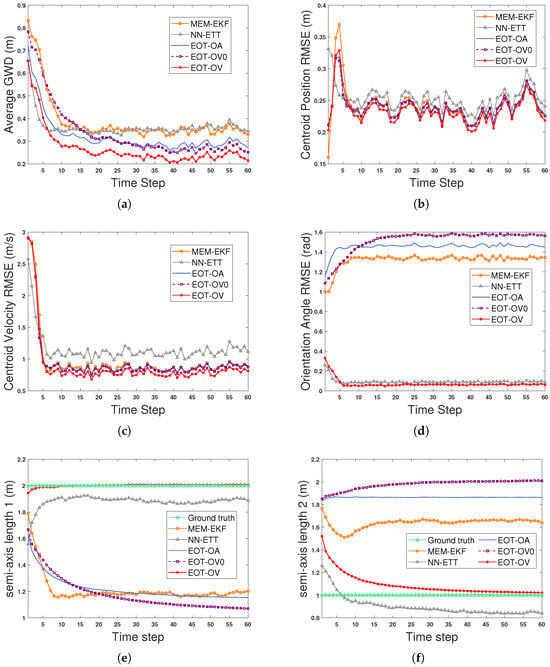

Figure 4 shows the estimated trajectories and extensions at selected time steps in scenario 1 (S1). The comparison results of S1 are shown in Figure 5, which contains the average GWDs, centroid position RMSEs, centroid velocity RMSEs, orientation angle RMSEs, semi-axis length 1, and semi-axis length 2. As shown in Figure 5, the advantage of using the OVB heading constraint (12) is clear. EOT-OV, which uses constraint (12), effectively estimates the kinematic state and extension and has the best overall performance in terms of the average GWDs. All algorithms have similar performance in centroid velocity estimation. The main reason is that all algorithms have similar centroid position estimation performance and the centroid velocity is the derivative of the centroid position, so the OVB heading constraint (12) can hardly improve the accuracy of the centroid velocity estimation. Moreover, EOT-OV copes with the mismatched initial parameters in this highly uncertain scenario, which makes the method more adaptive and practically applicable. In comparison, the algorithms that do not consider this constraint (MEM-EKF, EOT-OV0, and EOT-OA) may produce undesirable results: their long- and short-axis estimates are swapped (as shown in Figure 5e and Figure 5f, respectively), and the orientation angle estimate deviates from the true value by (as shown in Figure 5d). NN-ETT avoids this ambiguity because it uses the velocity estimate of the filter to produce an orientation estimate.

Figure 4.

Estimated trajectories and extensions at selected time steps for S1. The subfigures from bottom-left to top-right show the ground truth and estimated extensions of different algorithms at time steps 16 and 32, respectively.

Figure 5.

Estimation results of an EO for S1. (a) Average GWDs. (b) Centroid position RMSEs. (c) Centroid velocity RMSEs. (d) Orientation angle RMSEs. (e) Ground truth and estimation results for semi-axis length 1. (f) Ground truth and estimation results for semi-axis length 2.

Based on S1, the computational cost of each algorithm is evaluated using MATLAB R2021b. Table 2 shows the CPU (Intel Core i7-12700H) time averaged over the Monte Carlo runs, along with the time relative to EOT-OV0. EOT-OV0 is more efficient than EOT-OA. Moreover, the algorithm with the OVB heading constraint (EOT-OV) enhances the estimation performance without introducing a substantial extra computational burden.

Table 2.

Average computation time of the algorithms.

The number of iterations affects both the convergence of the VB approaches and the computational complexity. In Table 3, the tracking performance of the proposed EOT-OV is evaluated for different iteration numbers, using the average GWD averaged over all time steps (AGWDA) as the metric. As shown in Table 3, EOT-OV converges after only four iterations. For time-constrained applications, the iteration number should therefore be chosen carefully; one possible way is to determine it in advance through simulation experiments. Meanwhile, when the number of measurements is very large, clustering can be used as pre-processing to reduce the number of measurements, which simplifies the measurement update and reduces the computational complexity accordingly.

Table 3.

The AGWDA values of EOT-OV for different iteration numbers.

5.1.2. Scenario 2

In scenario 2 (S2), we assume that the object of interest has high maneuverability. The EO starts at the coordinate origin with the orientation angle . There are three turns in the whole trajectory, in which the three yaw rates are (time steps 12–26), (time steps 38–62), and (time steps 74–103), respectively.

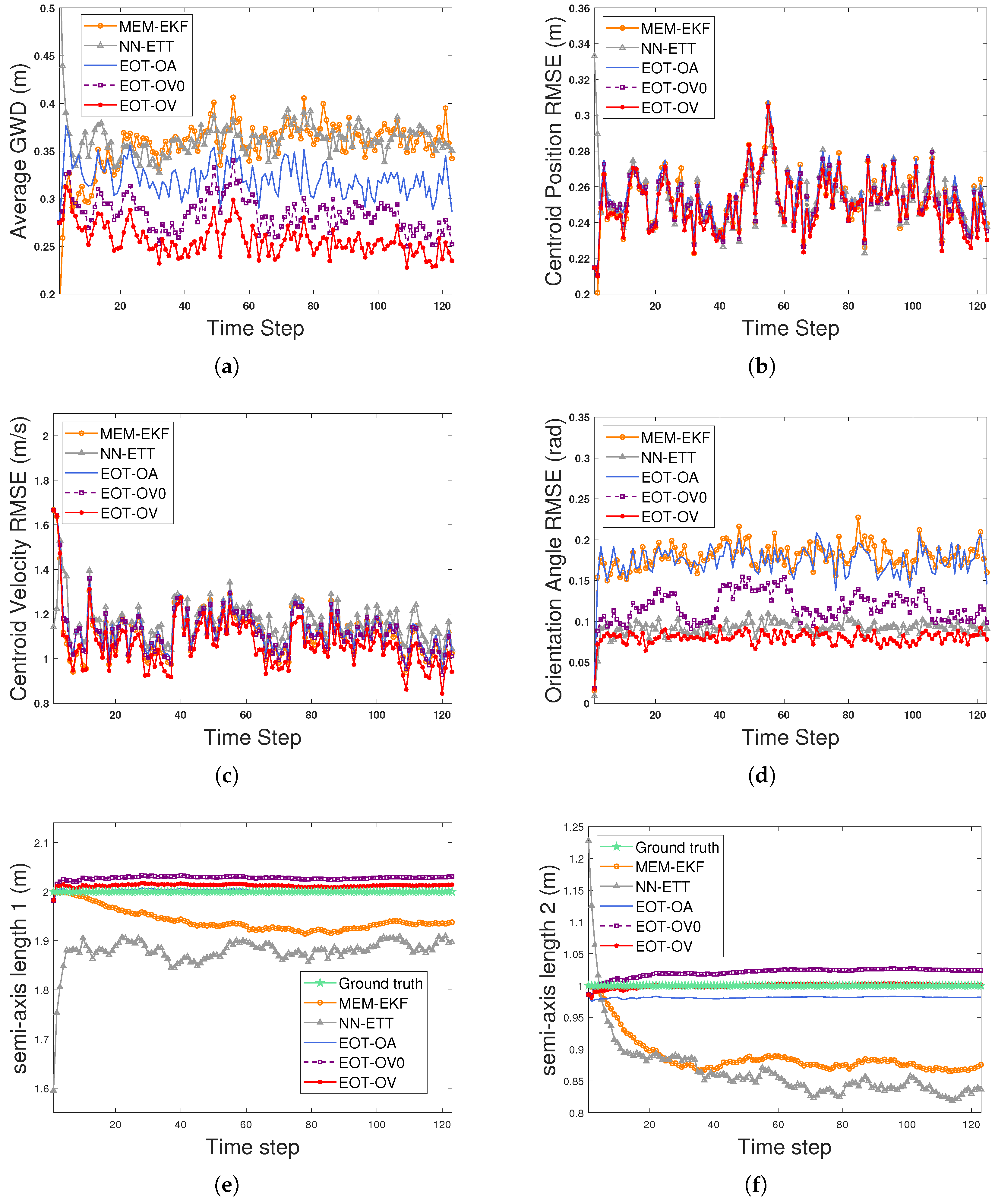

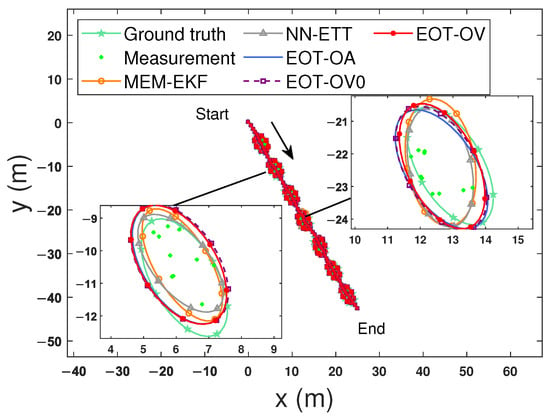

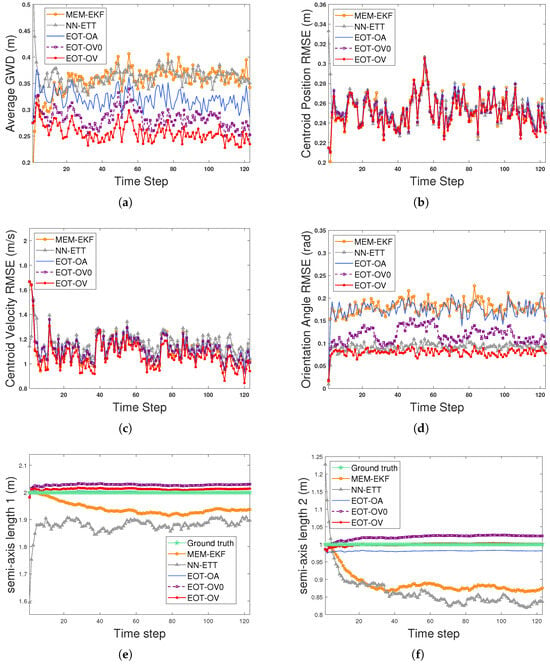

The experiment in S2 evaluates the performance of the five algorithms in a highly maneuvering scenario. In S2, the initial shape parameters are set to and . The orientation angle parameters are initialized as and . The process noise variances in (3) and (2) are set to ( can be calculated accordingly for NN-ETT) and , respectively. The variance in (5) is set to . As for MEM-EKF, , , and . The initial orientation vector parameters are calculated in the same way as in S1, and the other parameter settings are also the same as in S1. Figure 6 shows the average GWD, centroid position RMSE, centroid velocity RMSE, orientation angle RMSE, semi-axis length 1, and semi-axis length 2 of each algorithm. Figure 7 shows the ground truth and estimated ellipse contours of all algorithms. As shown in Figure 6, all the algorithms have similar performance in centroid position and centroid velocity estimation. EOT-OV outperforms EOT-OA, NN-ETT, and MEM-EKF in the estimation of the orientation angle and the extension (indicated by the GWD metric). EOT-OV also performs better than EOT-OV0 because EOT-OV considers the OVB heading constraint (12) in filtering, whereas EOT-OV0 does not. By using (12), EOT-OV exploits the accurate velocity information, which improves the extension estimation. These results indicate that (12) is effectively used by EOT-OV and demonstrate the effectiveness of the proposed modeling and estimation approach to EOT using the OVB heading constraint.

Figure 6.

Estimation results of an EO for S2. (a) Average GWDs. (b) Centroid position RMSEs. (c) Centroid velocity RMSEs. (d) Orientation angle RMSEs. (e) Ground truth and estimation results for semi-axis length 1. (f) Ground truth and estimation results for semi-axis length 2.

Figure 7.

Estimated trajectories and extensions at selected time steps for S2 with high maneuverability.

Based on S2, we evaluate the impact that has on the tracking performance of EOT-OV, as shown in Table 4, where the AGWDA values are calculated for typical values of . Ideally, EOT-OV would use an optimal value of at each time step in each experimental scenario; however, the results in Table 4 show that it performs well over a wide range of values.

Table 4.

The AGWDA values of EOT-OV for different values.

5.2. Experiments with Real Data in the VoD Dataset

In this subsection, we consider a scenario from the View-of-Delft (VoD) dataset [38] and use data collected by a 3+1D millimeter-wave radar. The VoD dataset was recorded in the city of Delft (the Netherlands), with an emphasis on scenarios containing vulnerable road users.

For each time step, the 3+1D radar outputs a point cloud with spatial, Doppler, and reflectivity channels, giving five features per point: range, azimuth, elevation, relative radial velocity, and RCS reflectivity. Since most point-cloud-based object detectors use Cartesian coordinates, the dataset also provides the radar point cloud: , where p denotes a point and x, y, z are its three spatial coordinates. We extract measurements from each point p and use only x and y as measurements for extended object tracking in this paper. The VoD dataset also provides annotated bounding boxes of the tracked objects, i.e., the true state parameters, which serve as ground truth for performance evaluation.
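The reduction from a radar scan to 2-D measurements can be sketched as follows. We assume, for illustration only, that a scan is stored as a flat float32 array with a fixed number of values per point and that x, y, z occupy the first three columns. The default width of 7 values per point is an assumption to check against the dataset's own documentation, and `xy_measurements` is a hypothetical helper.

```python
import numpy as np

def xy_measurements(raw, n_features=7):
    """Return an (N, 2) array of x, y measurements from a flat radar scan.

    Assumes (hypothetically) that each point occupies n_features consecutive
    float32 values with the Cartesian coordinates x, y, z first.
    """
    points = np.asarray(raw, dtype=np.float32)
    if points.size % n_features != 0:
        raise ValueError("scan length is not a multiple of n_features")
    points = points.reshape(-1, n_features)
    return points[:, :2]  # drop z and the Doppler/RCS channels for 2-D tracking

# Usage (the file path is a placeholder):
# scan = np.fromfile("path/to/scan.bin", dtype=np.float32)
# Z = xy_measurements(scan)
```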

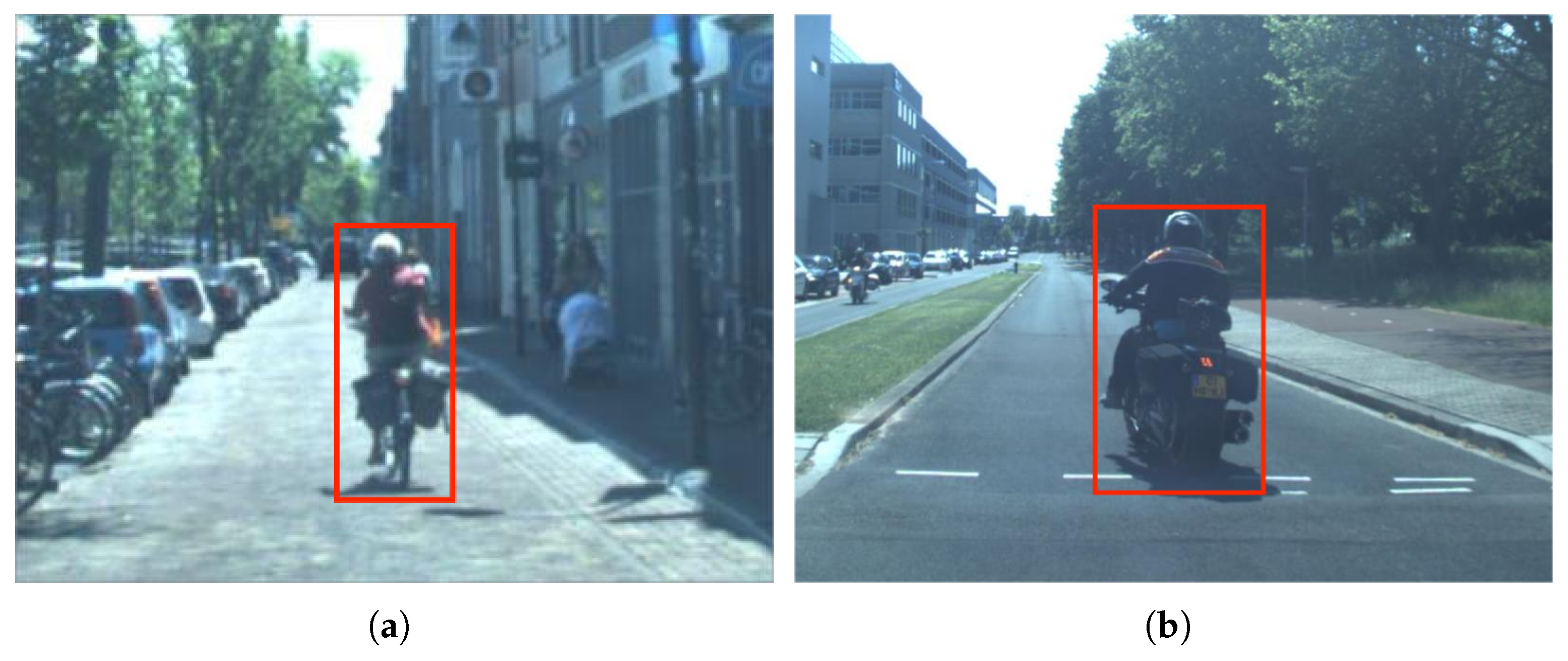

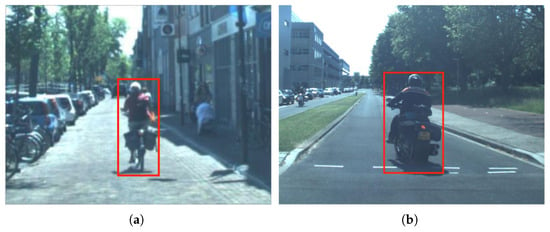

To evaluate the capabilities of the algorithms more thoroughly, two types of objects are considered: a bicyclist and a motorcyclist. The selected scenarios are shown in Figure 8.

Figure 8.

Bicyclist and motorcyclist tracking scenarios. (a) Bicyclist tracking scenario. (b) Motorcyclist tracking scenario.

5.2.1. Bicyclist Tracking

The VoD dataset contains a bicyclist observed over 43 consecutive time steps. For simplicity, we consider only 2D tracking in this paper and take the 2D oriented bounding box as the ground truth.

Since the VoD dataset does not specify the measurement noise, an approximate measurement noise covariance is used in this experiment. For all algorithms, the process noise variances in (3) and (2) are set to and , respectively. The variance in (5) is set to , , and for MEM-EKF. For all algorithms, the initial centroid position of the bicyclist is extracted from the first time step. The initial velocity is obtained by two-point differencing [27], and the initial orientation angle and semi-axis lengths are taken from the ground-truth annotations in the VoD dataset.
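The position and velocity initialization described above can be sketched as follows: the initial position is the centroid of the first scan, and the initial velocity is the difference between the first two scan centroids divided by the sampling interval T. Using the measurement centroid as the per-scan position estimate is our assumption, and `init_position_velocity` is a hypothetical helper.

```python
import numpy as np

def init_position_velocity(scan_1, scan_2, T):
    """Initial centroid position and velocity from the first two scans (two-point differencing)."""
    c1 = np.mean(np.asarray(scan_1, dtype=float), axis=0)  # centroid at the first time step
    c2 = np.mean(np.asarray(scan_2, dtype=float), axis=0)  # centroid at the second time step
    velocity = (c2 - c1) / T                               # finite difference over one interval
    return c1, velocity
```

Classical two-point differencing assigns the position of the second scan; the sketch instead returns the first-scan centroid to match the text, which extracts the initial position from the first time step.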

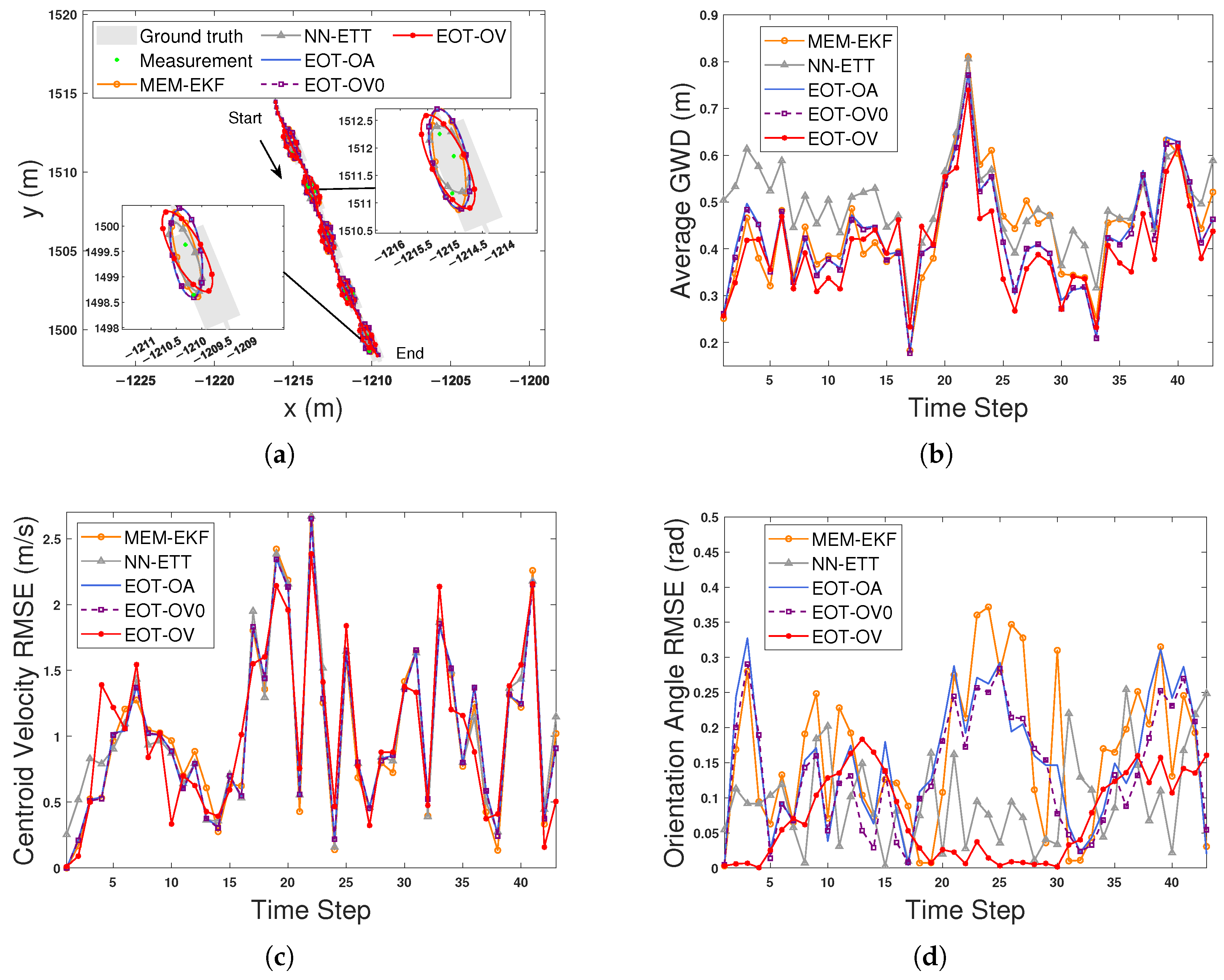

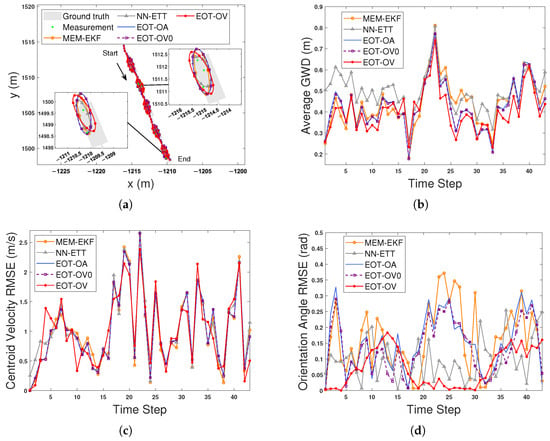

Figure 9a shows the estimated trajectories of the tracked bicyclist and the estimated extensions at selected time steps. The extension estimation errors of all algorithms are large because the measurements are non-uniformly distributed, but EOT-OV still gives accurate orientation angle estimates, even in this demanding scenario. As shown in Figure 9c, all algorithms perform similarly in centroid velocity estimation.

Figure 9.

Estimation results using five algorithms for bicyclist tracking. (a) Estimated trajectories and extensions. (b) Average GWDs. (c) Centroid velocity RMSEs. (d) Orientation angle RMSEs.

Figure 9b,d show the average GWDs and orientation angle RMSEs, respectively. Note that both metrics are calculated from a single MC run. As the figure shows, at most time steps EOT-OV outperforms MEM-EKF, NN-ETT, EOT-OA, and EOT-OV0 in orientation angle accuracy and in overall performance as measured by the average GWD.
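The per-time-step orientation angle RMSE can be computed over runs as sketched below; with a single run, as here, it reduces to the absolute orientation error. We assume the error is wrapped with period 2π, so an estimate pointing opposite to the true heading counts as a large error instead of being folded away as it would be under the π-periodic ambiguity of an ellipse. This convention and the helper names are our assumptions.

```python
import math

def wrap_to_pi(angle):
    """Map an angle (rad) to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi

def orientation_rmse(estimates, truth):
    """Per-time-step orientation RMSE.

    estimates: list of runs, each a list of estimated angles (one per time step)
    truth: list of true angles (one per time step)
    """
    n_runs = len(estimates)
    rmse = []
    for k, theta_true in enumerate(truth):
        sq = sum(wrap_to_pi(run[k] - theta_true) ** 2 for run in estimates)
        rmse.append(math.sqrt(sq / n_runs))
    return rmse
```

Wrapping the difference, rather than the individual angles, avoids spurious errors of nearly 2π when the truth and the estimate lie on opposite sides of the ±π boundary.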

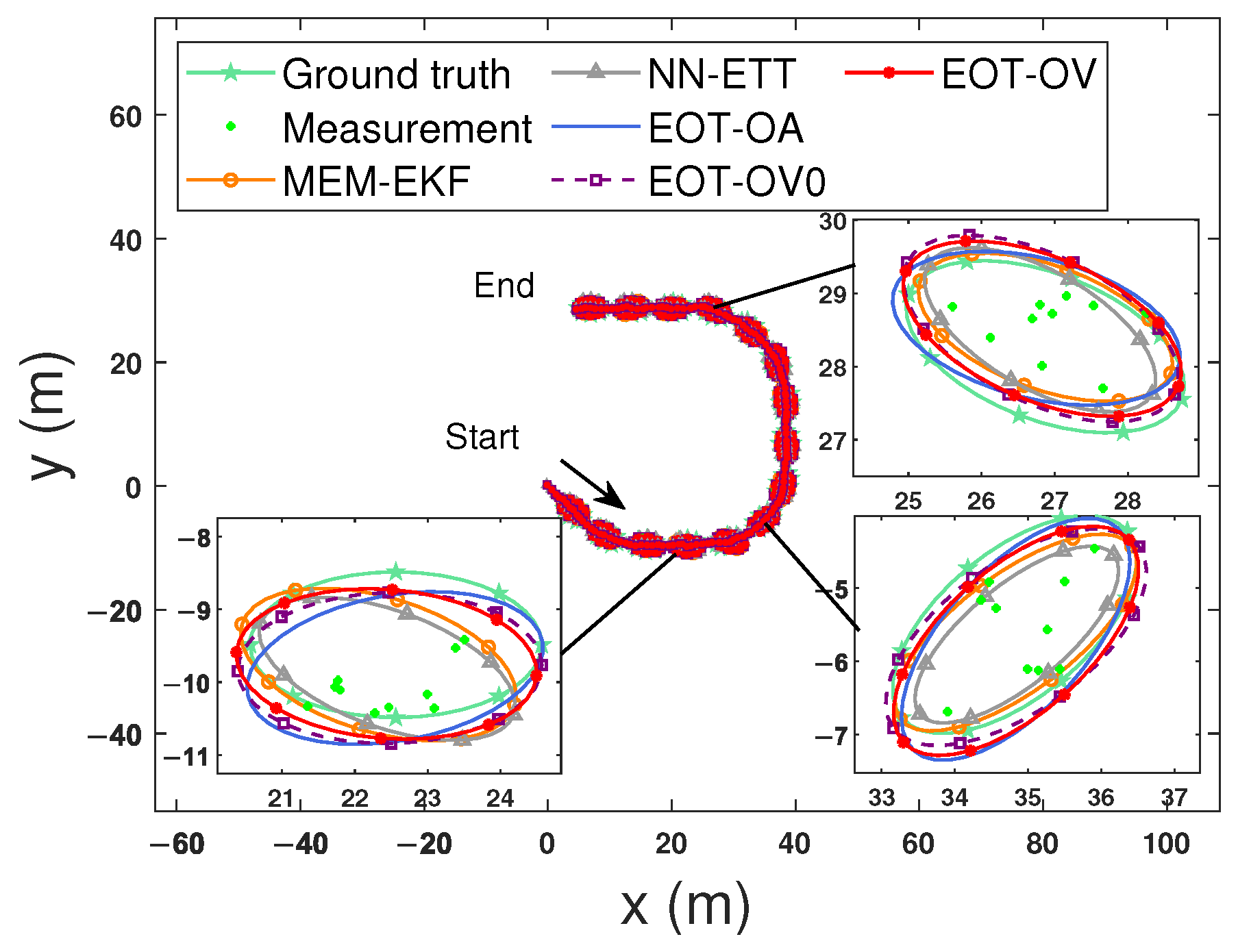

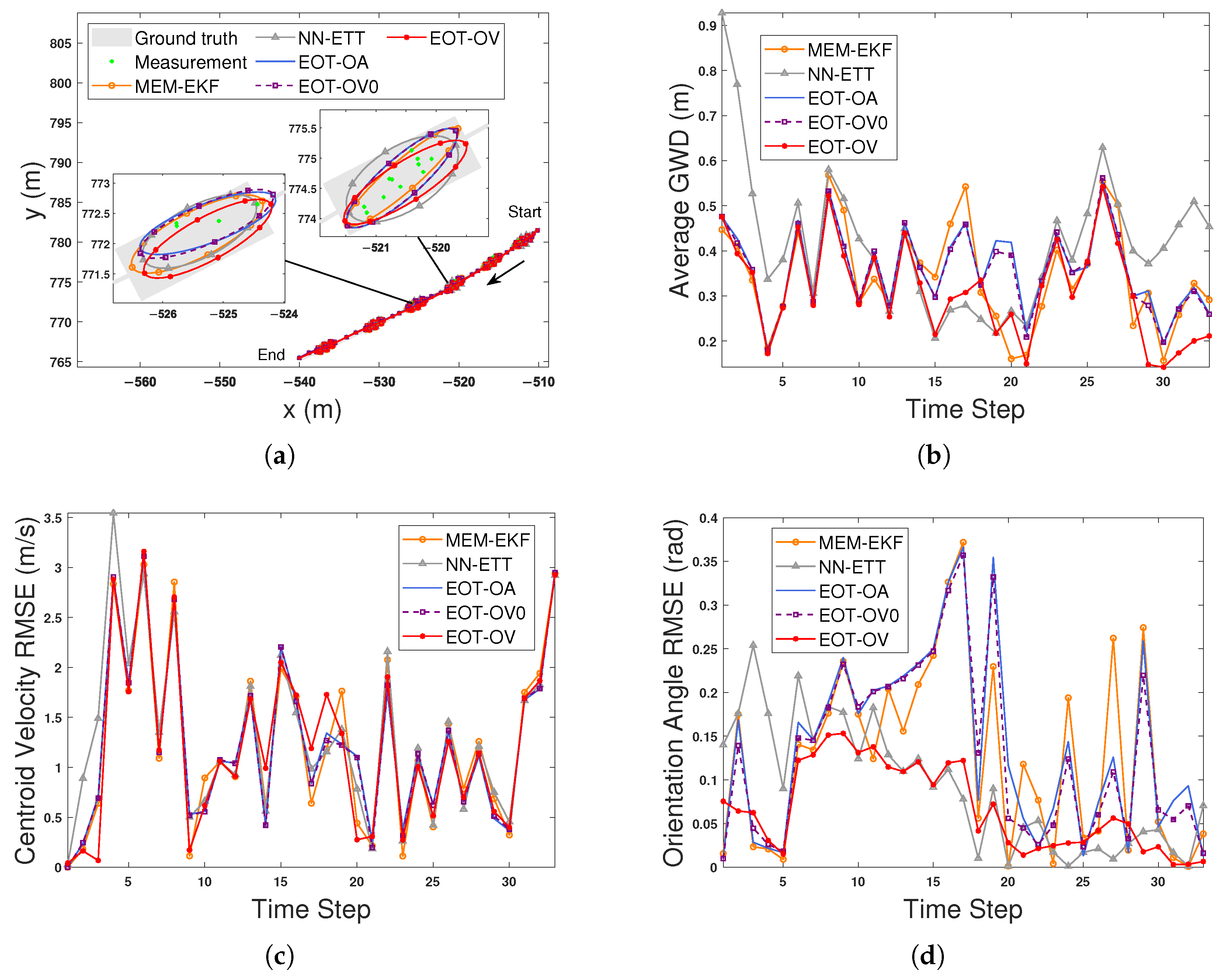

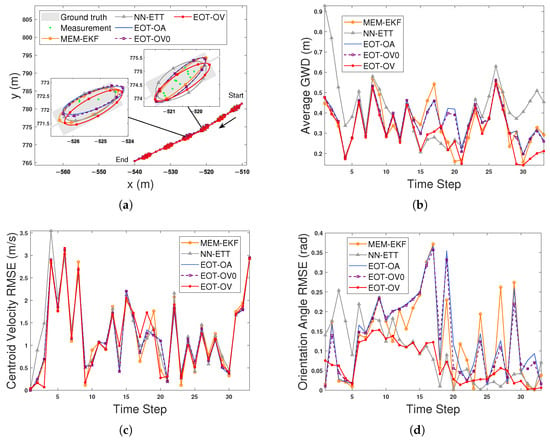

5.2.2. Motorcyclist Tracking

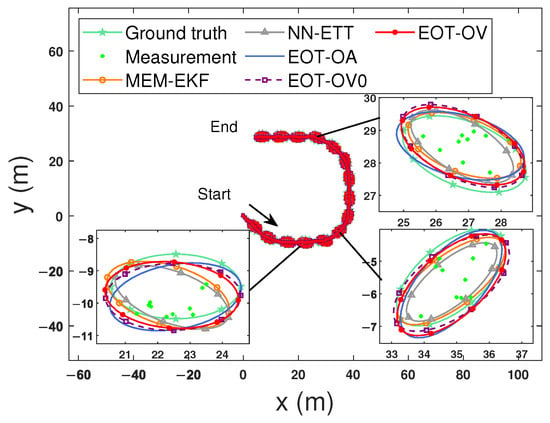

A motorcyclist traveling in a straight line is tracked until the motorcyclist turns and disappears. The initialization methods and parameter settings are the same as those in the bicyclist tracking experiment. Figure 10a shows the estimated trajectories and extensions of the tracked motorcyclist at selected time steps, and Figure 10b–d present the average GWDs, centroid velocity RMSEs, and orientation angle RMSEs, respectively. As Figure 10 shows, EOT-OV performs favorably in orientation angle estimation, which is consistent with the bicyclist tracking results.

Figure 10.

Estimation results using five algorithms for motorcyclist tracking. (a) Estimated trajectories and extensions. (b) Average GWDs. (c) Centroid velocity RMSEs. (d) Orientation angle RMSEs.

5.3. Experiment with Real Data in the nuScenes Dataset

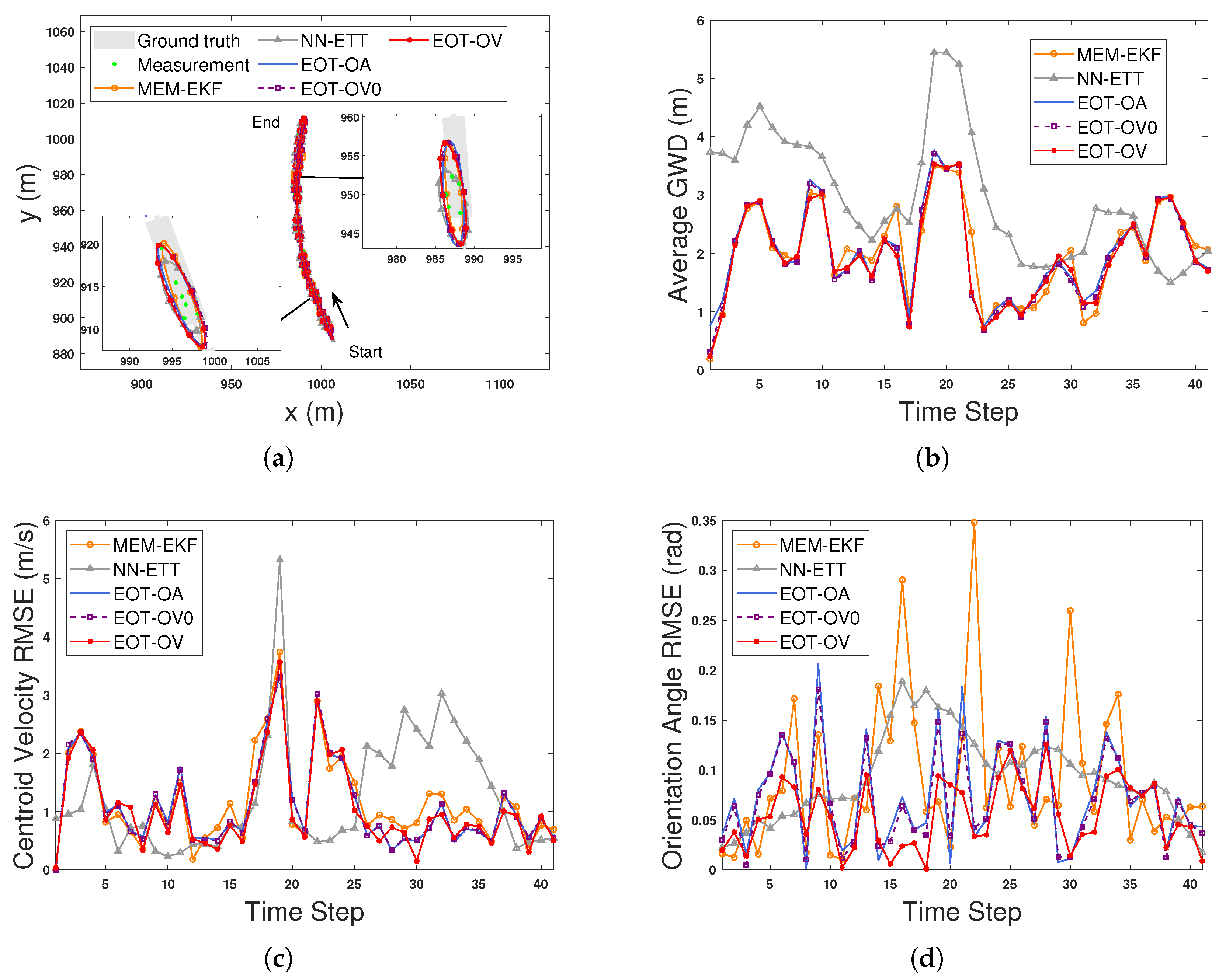

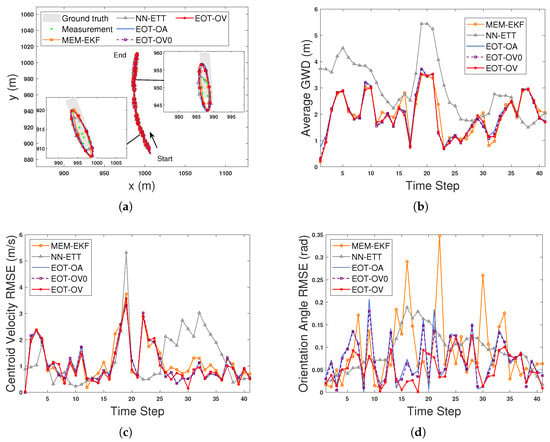

This subsection uses real data from the nuScenes dataset [39] to assess the tracking performance of the five algorithms in a turning maneuver scenario. To this end, we choose scene-14 and scene-15 of the dataset, in which a bus (target no. 1 in scene-14 and no. 3 in scene-15) with a length of 12.971 m and a width of 2.741 m turns right at an intersection. The nuScenes dataset provides annotated bounding boxes of the objects of interest, which serve as ground truth. The measurements are collected by a millimeter-wave radar (ARS-408-21) mounted on the front of the ego vehicle and are transformed from ego-frame coordinates to global Cartesian coordinates. More details can be found in [39].
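The ego-to-global conversion amounts to a rigid transform by the ego pose. The planar sketch below assumes the ego pose is given as a 2-D position and a unit quaternion (w, x, y, z) from which only the yaw is used, ignoring pitch and roll. In the nuScenes devkit, points are typically mapped from sensor to ego to global frame using the `calibrated_sensor` and `ego_pose` records in 3-D, so this is a simplification, and the helper names are hypothetical.

```python
import math
import numpy as np

def quaternion_to_yaw(w, x, y, z):
    """Yaw (rotation about the vertical axis) of a unit quaternion."""
    return math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))

def ego_to_global_2d(points_ego, ego_xy, ego_yaw):
    """Rotate (N, 2) ego-frame points by ego_yaw, then translate by the ego position."""
    c, s = math.cos(ego_yaw), math.sin(ego_yaw)
    R = np.array([[c, -s], [s, c]])
    return np.asarray(points_ego, dtype=float) @ R.T + np.asarray(ego_xy, dtype=float)
```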

We use only the measurements at the key frames of the nuScenes dataset to test the algorithms, and the sampling interval is . Based on the range and angular variances provided by the nuScenes dataset, we set the measurement noise to . The process noise variances in (4) and (2) are set to and , respectively, and and for MEM-EKF. The other initialization methods and parameter settings are the same as those in the bicyclist tracking experiment in Section 5.2.1.
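One standard way to turn range and angle variances into a Cartesian measurement noise covariance is first-order linearization of the polar-to-Cartesian mapping, R ≈ J diag(σ_r², σ_θ²) Jᵀ, where J is the Jacobian of (r cos θ, r sin θ) with respect to (r, θ). Whether the value used here was obtained this way is not stated; the sketch below, with hypothetical inputs and helper name, only illustrates the conversion.

```python
import numpy as np

def polar_to_cartesian_cov(r, theta, sigma_r, sigma_theta):
    """Linearized Cartesian covariance of a range/bearing measurement (r, theta)."""
    J = np.array([[np.cos(theta), -r * np.sin(theta)],
                  [np.sin(theta),  r * np.cos(theta)]])  # d(x, y) / d(r, theta)
    S = np.diag([sigma_r ** 2, sigma_theta ** 2])
    return J @ S @ J.T
```

The resulting covariance grows with range in the cross-range direction, so a single fixed covariance is an approximation evaluated at a representative range and bearing.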

Figure 11a shows the estimated trajectories and extensions of the tracked bus at selected time steps. Most measurements are distributed over the rear of the bus rather than over its whole body, yet all algorithms can still track the object in this difficult situation. Figure 11b–d compare the average GWDs, centroid velocity RMSEs, and orientation angle RMSEs. These figures show that, owing to the OVB heading constraint, the proposed EOT-OV estimates the orientation angle more accurately than the other four algorithms during the turning maneuver. This result is consistent with the above experimental results using the VoD dataset.

Figure 11.

Estimation results using five algorithms for bus tracking with nuScenes dataset. (a) Estimated trajectories and extensions. (b) Average GWDs. (c) Centroid velocity RMSEs. (d) Orientation angle RMSEs.

6. Discussion

To exploit the coupling between the orientation angle and the heading angle, we propose a new extended object tracking approach that incorporates the orientation vector and the OVB heading constraint. Simulation and real-data results demonstrate that the proposed approach avoids ambiguity in extension estimation and significantly improves the accuracy of orientation angle estimation. Furthermore, a variational Bayesian approach is proposed that recursively estimates all states of an EO analytically.

However, the proposed model requires manual tuning of , and further research is needed to make the model more accurate and adaptable to more complex scenarios. To address these issues, we plan to combine the proposed approach with adaptive or multiple-model methods to account for changing scenarios. Another direction for future research is to model the heading constraint accurately for different types of targets.

7. Conclusions

In this paper, an EO model using an orientation vector with a heading constraint is proposed. The model effectively describes the tight coupling between the heading angle and the orientation angle. Moreover, the model fits into the VB framework through a pseudo-measurement constructed from the OVB heading constraint. A VB approach is thus derived that recursively estimates the kinematic, orientation vector, and shape states. The proposed constrained filtering approach effectively addresses the ambiguity in orientation angle estimation. In addition, by using the OVB heading constraint, the approach exploits the coupling information to improve estimation performance. The approach also has a simple, analytical form, which facilitates its application. Results using both simulation and real data demonstrate the effectiveness of the proposed approach.

Author Contributions

Conceptualization, Z.W. and L.Z.; Data curation, Z.W.; Formal analysis, Z.W.; Funding acquisition, L.Z. and T.Z.; Investigation, Z.W., L.Z. and T.Z.; Methodology, Z.W. and T.Z.; Project administration, L.Z. and T.Z.; Resources, L.Z. and T.Z.; Software, Z.W.; Supervision, L.Z. and T.Z.; Validation, Z.W.; Visualization, Z.W.; Writing—original draft, Z.W.; Writing—review and editing, Z.W. and L.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Grants 62401059 and 62388102) and the Shandong Provincial Natural Science Foundation (Grant ZR2024QF029).

Data Availability Statement

The data presented in this study include two parts. The first part is openly available in [Multi-Class Road User Detection With 3+1D Radar in the View-of-Delft Dataset] at [10.1109/LRA.2022.3147324], reference number [38]. The second part is openly available in [nuScenes: A Multimodal Dataset for Autonomous Driving] at [10.1109/CVPR42600.2020.01164], reference number [39].

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. A Proof of ML Equation

The measurement equation is assumed to be

where . The ML equation of can be obtained by

with

where

denotes the covariance of x. Hence, the ML equation can be written as

Appendix B. Calculation of and

Based on (10), (27), , and , we obtain the predicted mean of the orientation vector , as follows.

where is defined in (10).

As for the predicted covariance , we have

Appendix C. Calculation of , , and

Through algebraic manipulations, the following equations hold:

References

- Koch, W. Bayesian Approach to Extended Object and Cluster Tracking Using Random Matrices. IEEE Trans. Aerosp. Electron. Syst. 2008, 44, 1042–1059.

- Feldmann, M.; Fränken, D.; Koch, W. Tracking of Extended Objects and Group Targets Using Random Matrices. IEEE Trans. Signal Process. 2011, 59, 1409–1420.

- Li, P.; Ge, H.; Yang, J.; Wang, W. Modified Gaussian Inverse Wishart PHD Filter for Tracking Multiple Non-Ellipsoidal Extended Targets. Signal Process. 2018, 150, 191–203.

- Lan, J.; Li, X.R. Extended-Object or Group-Target Tracking Using Random Matrix with Nonlinear Measurements. IEEE Trans. Signal Process. 2019, 67, 5130–5142.

- Hu, Q.; Ji, H.; Zhang, Y. A Standard PHD Filter for Joint Tracking and Classification of Maneuvering Extended Targets Using Random Matrix. Signal Process. 2018, 144, 352–363.

- Sun, L.; Yu, H.; Fu, Z.; He, Z.; Zou, J. Modeling and Tracking of Maneuvering Extended Object with Random Hypersurface. IEEE Sensors J. 2021, 21, 20552–20562.

- Granström, K.; Baum, M.; Reuter, S. Extended Object Tracking: Introduction, Overview and Applications. J. Adv. Inf. Fusion 2017, 12, 139–174.

- Mihaylova, L.; Carmi, A.Y.; Septier, F.; Gning, A.; Pang, S.K.; Godsill, S. Overview of Bayesian Sequential Monte Carlo Methods for Group and Extended Object Tracking. Digit. Signal Process. 2014, 25, 1–16.

- Degerman, J.; Wintenby, J.; Svensson, D. Extended Target Tracking Using Principal Components. In Proceedings of the IEEE 14th International Conference on Information Fusion, Chicago, IL, USA, 5–8 July 2011; pp. 1–8.

- Yang, S.; Baum, M. Tracking the Orientation and Axes Lengths of an Elliptical Extended Object. IEEE Trans. Signal Process. 2019, 67, 4720–4729.

- Liu, S.; Liang, Y.; Xu, L. Maneuvering Extended Object Tracking Based on Constrained Expectation Maximization. Signal Process. 2022, 201, 108729.

- Liu, S.; Liang, Y.; Xu, L.; Li, T.; Hao, X. EM-based Extended Object Tracking without A Priori Extension Evolution Model. Signal Process. 2021, 188, 108181.

- Tuncer, B.; Özkan, E. Random Matrix Based Extended Target Tracking with Orientation: A New Model and Inference. IEEE Trans. Signal Process. 2021, 69, 1910–1923.

- Li, Z.; Liang, Y.; Xu, L. Distributed Extended Object Tracking Using Coupled Velocity Model From WLS Perspective. IEEE Trans. Signal Inform. Process. Netw. 2022, 8, 459–474.

- Zhang, Z.; Li, K.; Zhou, G. State Estimation with Heading Constraints for On-Road Vehicle Tracking. IEEE Trans. Intell. Transp. Syst. 2022, 23, 13614–13635.

- Li, M.; Lan, J.; Li, X.R. Tracking of Elliptical Object with Unknown but Fixed Lengths of Axes. IEEE Trans. Aerosp. Electron. Syst. 2023, 59, 6518–6533.

- Bishop, C.M. Pattern Recognition and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2006.

- Yang, S.; Baum, M. Extended Kalman Filter for Extended Object Tracking. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, New Orleans, LA, USA, 5–9 March 2017; pp. 4386–4390.

- Li, X.R.; Jilkov, V.P. Survey of Maneuvering Target Tracking. Part I. Dynamic Models. IEEE Trans. Aerosp. Electron. Syst. 2003, 39, 1333–1364.

- Kellner, D.; Barjenbruch, M.; Klappstein, J.; Dickmann, J.; Dietmayer, K. Tracking of Extended Objects with High-resolution Doppler Radar. IEEE Trans. Intell. Transp. Syst. 2016, 17, 1341–1353.

- Jeon, W.; Zemouche, A.; Rajamani, R. Tracking of Vehicle Motion on Highways and Urban Roads Using a Nonlinear Observer. IEEE/ASME Trans. Mechatron. 2019, 24, 644–655.

- Bertipaglia, A.; Alirezaei, M.; Happee, R.; Shyrokau, B. An Unscented Kalman Filter-informed Neural Network for Vehicle Sideslip Angle Estimation. IEEE Trans. Veh. Technol. 2024, 73, 12731–12746.

- Gräber, T.; Lupberger, S.; Unterreiner, M.; Schramm, D. A Hybrid Approach to Side-Slip Angle Estimation with Recurrent Neural Networks and Kinematic Vehicle Models. IEEE Trans. Intell. Veh. 2019, 4, 39–47.

- Burkardt, J. The Truncated Normal Distribution. Dep. Sci. Comput. Website Fla. State Univ. 2014, 1, 35.

- Julier, S.J.; LaViola, J.J. On Kalman Filtering with Nonlinear Equality Constraints. IEEE Trans. Signal Process. 2007, 55, 2774–2784.

- Crouse, D.F. Cubature/Unscented/Sigma Point Kalman Filtering with Angular Measurement Models. In Proceedings of the IEEE 18th International Conference on Information Fusion, Washington, DC, USA, 6–9 July 2015; pp. 1550–1557.

- Cao, X.; Lan, J.; Li, X.R.; Liu, Y. Automotive Radar-Based Vehicle Tracking Using Data-Region Association. IEEE Trans. Intell. Transp. Syst. 2022, 23, 8997–9010.

- Yang, C.; Blasch, E. Kalman Filtering with Nonlinear State Constraints. IEEE Trans. Aerosp. Electron. Syst. 2009, 45, 70–84.

- Zanetti, R.; Majji, M.; Bishop, R.H.; Mortari, D. Norm-Constrained Kalman Filtering. J. Guid. Control Dyn. 2009, 32, 1458–1465.

- Šmídl, V.; Quinn, A. The Variational Bayes Method in Signal Processing; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2006.

- Tuncer, B.; Orguner, U.; Özkan, E. Multi-Ellipsoidal Extended Target Tracking with Variational Bayes Inference. IEEE Trans. Signal Process. 2022, 70, 3921–3934.

- Liu, B.; Tharmarasa, R.; Jassemi, R.; Brown, D.; Kirubarajan, T. Extended Target Tracking with Multipath Detections, Terrain-Constrained Motion Model and Clutter. IEEE Trans. Intell. Transp. Syst. 2020, 22, 7056–7072.

- Zhang, L.; Lan, J. Extended Object Tracking Using Random Matrix with Skewness. IEEE Trans. Signal Process. 2020, 68, 5107–5121.

- Steuernagel, S.; Thormann, K.; Baum, M. CNN-Based Shape Estimation for Extended Object Tracking Using Point Cloud Measurements. In Proceedings of the 2022 25th International Conference on Information Fusion (FUSION), Linköping, Sweden, 4–7 July 2022; pp. 1–8.

- Givens, C.R.; Shortt, R.M. A Class of Wasserstein Metrics for Probability Distributions. Mich. Math. J. 1984, 31, 231–240.

- Yang, S.; Baum, M.; Granström, K. Metrics for Performance Evaluation of Elliptic Extended Object Tracking Methods. In Proceedings of the IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems, Baden-Baden, Germany, 19–21 September 2016; pp. 523–528.

- Zhang, L.; Lan, J. Tracking of Extended Object Using Random Matrix with Non-Uniformly Distributed Measurements. IEEE Trans. Signal Process. 2021, 69, 3812–3825.

- Palffy, A.; Pool, E.; Baratam, S.; Kooij, J.F.; Gavrila, D.M. Multi-Class Road User Detection with 3+1D Radar in the View-of-Delft Dataset. IEEE Robot. Autom. Lett. 2022, 7, 4961–4968.

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A Multimodal Dataset for Autonomous Driving. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11618–11628.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).