Abstract

Deep learning techniques have garnered significant attention in remote sensing scene classification. However, obtaining a large volume of labeled data for supervised learning (SL) remains challenging. Additionally, SL methods frequently struggle with limited generalization ability. To address these limitations, self-supervised multi-mode representation learning (SSMMRL) is introduced for local climate zone classification (LCZC). Unlike conventional supervised learning methods, SSMMRL utilizes a novel encoder architecture that exclusively processes augmented positive samples (PSs), eliminating the need for negative samples. An attention-guided fusion mechanism is integrated, using positive samples as a form of regularization. The novel encoder captures informative representations from the unannotated So2Sat-LCZ42 dataset, which are then leveraged to enhance performance in a challenging few-shot classification task with limited labeled samples. Co-registered Synthetic Aperture Radar (SAR) and Multispectral (MS) images are used for evaluation and training. This approach enables the model to exploit extensive unlabeled data, enhancing performance on downstream tasks. Experimental evaluations on the So2Sat-LCZ42 benchmark dataset show the efficacy of the SSMMRL method. Our method for LCZC outperforms state-of-the-art (SOTA) approaches.

1. Introduction

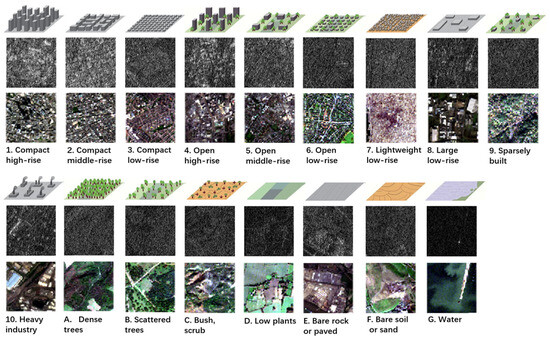

A classification method called Local Climate Zone (LCZ) classification is used to group urban and non-urban areas according to their climatic and environmental features. When describing the microclimates of certain regions, LCZs take into account elements such as vegetation, surface types, and urban morphology. By using supervised learning [1], we can automatically classify different urban and rural zones into meaningful climate categories, but challenges like the need for large, high-quality annotated datasets and problems with data annotation can impede progress. By addressing these issues, self-supervised learning significantly increases the scalability and efficacy of LCZ classification, particularly in cases of limited resources. Local climate zones are divided into two primary categories: urban LCZs and natural LCZs. Urban LCZs pertain to urban areas and are numbered from LCZ1 to LCZ10, while natural LCZs are associated with non-urban areas, represented by LCZA to LCZG. Figure 1 describes each climate zone, and further details are provided in [2].

Figure 1.

SAR and multispectral scenes for local climate zone classification [2].

Self-supervised learning (SSL) is used to learn representations from unlabeled data. However, a key challenge in SSL lies in learning effective representations without relying on negative samples, which have been a cornerstone of many traditional SSL approaches. Existing methods, such as contrastive learning techniques including MoCo [3], often depend on the construction of negative samples to enforce distinct feature representations. However, similarity among negative samples may limit performance; the authors of [4] mitigated this issue with a clustering approach, but performance is still limited by negative samples. Data-to-Vector [5] has demonstrated potential in managing different modalities, but it encounters difficulties if the data lack diversity.

We aim to contribute to the development of SSL techniques that can effectively learn meaningful representations from positive pairs alone. This is crucial for advancing the applicability of SSL, as negative samples may not always be available or well defined.

Supervised learning methods are also used for LCZC, but their reliance on labeled data, which are often costly and time-consuming to obtain, remains a major drawback. Additionally, these techniques are prone to overfitting, particularly when the amount of labeled data is limited.

SAR and MS data have been used for LCZ classification because of their large imaging widths [6]. MS image interpretation is relatively easy because reflectance and emission from ground objects can provide sufficient details [7]. However, clouds and atmospheric conditions can make temporal requirements difficult for MS images [8]. In contrast to MS imaging, terrain and object backscattering affect SAR images—in particular, the form, shape, and composition of objects. Additionally, SAR has the unique feature of coherent imaging, which means it captures amplitude and phase information [9]. Moreover, SAR can penetrate through clouds and provides all-day image acquisition capability. However, SAR images are less intuitive to interpret and more challenging compared to MS images due to a limited number of bands and issues such as speckle noise, non-vertical range imaging, projected shortening, signal voids, surface overlap distortion, and shadowing effects [10]. Given the complementary nature of MS and SAR data, the fusion of MS and SAR has been used for LCZC [11]. In this paper, we introduce an SSL method built upon an innovative fusion network, drawing inspiration from BYOL [12].

Recent studies on Local Climate Zone Classification (LCZC) have explored various methodologies, datasets, and applications. In [13], a classification approach for LCZs in Xi’an City was proposed, leveraging 192 spatial indicators and remote sensing (RS) data. Expanding on large-scale LCZ mapping, ref. [14] developed comprehensive LCZ land use datasets for key Chinese cities and urban clusters to enhance urban climate and environmental modeling. The role of LCZC in urban heat island (UHI) analysis was examined in [15], where Zhuhai-1 satellite imagery was used to study daytime temperature variations. Similarly, ref. [16] investigated LCZ transformations over time in five major global cities, providing insights into urban climate dynamics. A broader review of LCZC methodologies and applications was conducted in [17], contributing valuable perspectives for urban climate research.

The practical applications of LCZC in sustainable urban development were highlighted in [18], while [19] applied LCZC to identify surface urban heat islands (SUHIs) and improve the understanding of urban thermal effects. The relevance of LCZC for ventilation assessment in urban planning was explored in [20]. Spatio-temporal variations of SUHIs in Beijing and their correlation with population density were analyzed in [21]. Additionally, ref. [22] reviewed LCZ mapping applications across European cities, identifying emerging trends in urban environmental studies. Studies such as that reported in [23] have assessed the effectiveness of LCZC for land surface temperature (LST) analysis, focusing on UHI pattern variability.

Advancements in data fusion techniques for LCZC have also been explored. In [24], the integration of multispectral (MS) and PALSAR-2 data was investigated for LCZ classification in Nanchang, China. Deep learning methods such as a multi-cascaded fusion network [25] and a dual graph convolutional neural network (CNN) [26] have been introduced to improve LCZ classification accuracy. The dynamic changes in LCZs across three cities and their impact on the UHI phenomenon were examined in [27]. Furthermore, ref. [28] evaluated the thermal characteristics of Riyadh’s LCZs and their implications for urban planning. Seasonal UHI analyses were conducted in Wuhan using LCZ classification in [29], while [30] explored spatial trends in urban environments through LCZ classification. Variations in LCZs across three Yangtze River mega cities were studied in [31]. The application of SAR for global LCZ classification was discussed in [32], while [33] investigated the fusion of SAR and multispectral data for LCZC using a dual-branch CNN.

SSL methods based on generative networks like the Autoencoder (AE) architecture [34] are based on the encoding and reconstruction of input data. The model is simple but suffers from overfitting and cannot capture complex distributions, which limits the generalization ability of the model. Sparse AEs [35] are based on sparsity constraints to improve efficiency. However, the sparsity constraint can reduce the model’s expressiveness and may lead to instability during training if not carefully tuned. Denoising AEs [36] focus on reconstructing clear images from noisy inputs, which helps in learning robust representations. However, they struggle when noise levels are high or when the noise distribution is not well understood, requiring a large amount of clean data for optimal performance. Variational Autoencoders (VAEs) [37] model the data distribution by encoding the input into a normal distribution. While VAEs have gained popularity for their probabilistic approach, the assumption of a Gaussian distribution can be restrictive, leading to poor results in more complex datasets. Masked Autoencoders (MAEs) [38] use random masking of image patches and leverage vision transformers for reconstruction. While MAEs have demonstrated strong performance on various vision tasks, they require significant computational resources and may lose fine-grained details during reconstruction due to masking.

Generative Adversarial Networks (GANs) [39] are among the most influential models in generative learning. They consist of two neural networks, a generator and a discriminator, that are trained adversarially to produce realistic data. Adversarial Autoencoders (AAEs) [40] combine the principles of VAEs and GANs by incorporating adversarial training into the autoencoder framework. Bidirectional GANs (BiGANs) [41] extend GANs by adding an encoder that maps data samples to their latent representations. This additional encoder increases the model’s complexity and computational cost while also introducing new challenges in balancing the training of both the generator and encoder.

One of the foundational techniques in SSL is negative sampling, which aims to maximize the distance between dissimilar data points. Methods such as triplet loss [42], MoCo, and SimCLR [43] have proven effective in various single-mode applications. These approaches are sensitive to negative sample selection and require large batch sizes. DeepCluster [44] and SwAV [45] generate pseudo-labels for contrastive learning but suffer from poor computational efficiency. DINO [46] is based on positive sample generation but suffers from hyperparameter tuning and scaling issues. Barlow Twins [47] and VICReg [48] are based on redundancy reduction but suffer from loss of features and instability during training.

Existing SSL methods based on negative sampling, clustering, knowledge distillation, and redundancy reduction have improved performance but still struggle when applied to multimodal data. In contrast, SSMMRL is more efficient and scalable, making it a promising solution for multi-modal classification.

The advantages of using only positive samples for model training include the simplicity of the objective function as compared to using negative and positive samples. Contrastive loss due to the use of positive and negative samples may cause instability if the samples are unbalanced and large. Models based on positive samples are more efficient and perform better in cases of limited labels.

Main Contribution

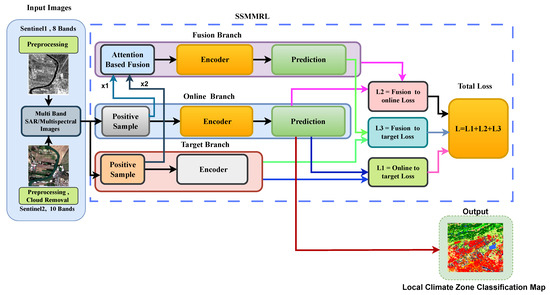

The main contributions of this work are listed below. It is pertinent to mention that, unlike BYOL, our SSMMRL has three branches, namely online, target, and fusion branches, whereas BYOL has two branches, namely teacher and student branches. SSMMRL offers greater flexibility and adaptability, making it suitable for more complex and diverse tasks that involve multiple views or modalities.

- We propose a method that actively combines positive samples to create a new fused sample, driven by an attention mechanism. By encouraging the model to create more useful features and projections from aggregated data, this regularization technique improves feature generalization.

- An additional fusion branch is added to extract meaningful features from the SAR and multispectral data and reduce overall loss.

- A dynamic convolutional module is proposed that works with different input sizes by dynamically modifying the mixed input channels to support the attention strategy.

- The suggested SSL approach requires less labeled data and performs better on multi-modal data when compared to other self-supervised learning approaches.

The structure of this article is outlined as follows. Section 2 describes the study areas. Section 3 presents the methodology. Section 4 introduces the datasets and experimental settings. Section 5 reports the results, and Section 6 discusses them. Section 7 concludes the article and outlines future research.

2. Study Area

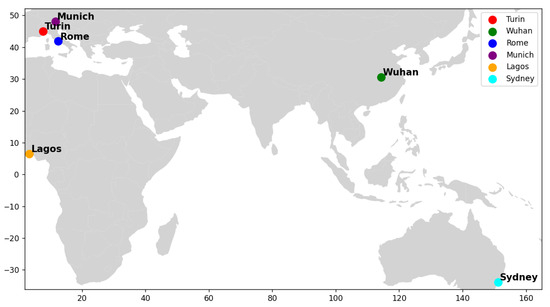

In this article, six cities were selected for performance evaluation, namely Lagos, Wuhan, Rome, Munich, Sydney, and Turin. The Google imagery of the regions of interest (ROIs) for all these cities is shown in Figure 2. The locations of these cities on the global map are shown in Figure 3. The exact coordinates of the ROIs are given in Table 1.

Figure 2.

Google images of selected cities.

Figure 3.

Selected cities.

Table 1.

Regions of interest (ROIs) for selected cities.

3. Methodology

In this section, the framework for SSMMRL is discussed. The methodology involves data preprocessing and augmentation, feature extraction using dynamic convolution and squeeze-and-excitation, and self-supervised training with a fusion-branch framework. The fusion branch leverages an attention-based mechanism to fuse information from two augmented views of the input, enhancing the learned representations.

3.1. Proposed Framework

The proposed framework is shown in Figure 4. Multispectral and SAR images are preprocessed and applied to the online, target, and fusion branches. The input image (x) is passed through the positive sample generator, which produces one augmented view for the online branch and one for the target branch. Both views are passed through the feature encoders to generate their respective representations. The online and target branches each have their own encoder.

Figure 4.

Proposed scheme for LCZC.

The predictions are generated from the representations, and the predictor is denoted by g. The fusion branch receives both augmented views, and attention-based fusion is applied to achieve better feature representation. The predictions from the online and fusion branches, along with the encoder outputs, are used to compute the weighted loss. The target branch network is updated using online network parameters. Initially, the network is pretrained without any labeled samples; then, a few annotated training samples are used for fine-tuning and classification tasks.

The online feature extractor produces two representations, one per augmented view, and the target feature extractor likewise produces two.

Both augmented views are generated from x.

Representations are mapped to predictions using the operator g, called the predictor.

An attention mechanism is applied to obtain the attention map. The attention map helps in weighting the importance of each pixel or feature in the input images, resulting in a hybrid sample.

The target branch network is updated using online network parameters, with a momentum parameter controlling the update. Initially, the network is pretrained without any labeled samples; then, a small portion of annotated samples is used for fine-tuning and classification tasks.
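The momentum update of the target branch can be sketched as follows. This is a minimal illustration that assumes a BYOL-style exponential moving average; the function name `ema_update`, the flat parameter lists, and the momentum value used in the toy example are assumptions for illustration, not values reported in this article.

```python
def ema_update(target_params, online_params, tau=0.99):
    """Move each target parameter toward its online counterpart.

    tau is the momentum parameter: values close to 1 make the target
    branch change slowly. The default 0.99 is an illustrative assumption.
    """
    if len(target_params) != len(online_params):
        raise ValueError("parameter lists must have the same length")
    return [tau * t + (1.0 - tau) * o
            for t, o in zip(target_params, online_params)]


# Toy example with two scalar "parameters".
target = [0.0, 1.0]
online = [1.0, 1.0]
target = ema_update(target, online, tau=0.9)
```

With this form, only the online branch receives gradients, while the target branch follows it as a slowly moving average.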

3.2. Preprocessing and Sample Generation

Augmentation includes a random crop with specific aspect-ratio and scale constraints, as well as a random horizontal flip. The random crop has a height (ph) in the range of [0.5, 1.0] and a width (pw) tied to the height (between 0.67 and 1.0 times the height). The crop size is fixed at 32 × 32. The horizontal flip is applied with a probability of 0.5. The number of channels is preserved throughout, since neither transformation alters the channel dimension. The augmented image is obtained by applying the random crop to the input image (x), followed by the horizontal flip.
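The augmentation pipeline described above can be sketched in plain Python. This is a hedged illustration, not the authors' code: it assumes that the height range [0.5, 1.0] is a fraction of the image height, that the crop is brought back to 32 × 32 by nearest-neighbour resizing, and that images are stored as nested lists (channels × rows × columns). The names `sample_crop_box` and `augment` are hypothetical.

```python
import random


def sample_crop_box(height, width, rng):
    """Sample a crop box following the constraints stated in the text.

    Crop height is 0.5-1.0 of the image height (assumed to be a fraction);
    crop width is 0.67-1.0 times the crop height, clipped to the image width.
    """
    crop_h = max(1, round(rng.uniform(0.5, 1.0) * height))
    crop_w = max(1, min(width, round(rng.uniform(0.67, 1.0) * crop_h)))
    top = rng.randint(0, height - crop_h)
    left = rng.randint(0, width - crop_w)
    return top, left, crop_h, crop_w


def augment(image, rng, out_size=32, flip_prob=0.5):
    """Random crop, nearest-neighbour resize to out_size, then random flip.

    image is a list of channels, each a list of rows (C x H x W).
    The same crop and flip are applied to every channel.
    """
    height, width = len(image[0]), len(image[0][0])
    top, left, crop_h, crop_w = sample_crop_box(height, width, rng)
    flip = rng.random() < flip_prob
    out = []
    for channel in image:
        rows = []
        for i in range(out_size):
            src_row = channel[top + i * crop_h // out_size]
            row = [src_row[left + j * crop_w // out_size]
                   for j in range(out_size)]
            rows.append(row[::-1] if flip else row)
        out.append(rows)
    return out


# Two positive views of the same 18-band, 32 x 32 toy patch.
rng = random.Random(0)
x = [[[float(c + r + k) for k in range(32)] for r in range(32)]
     for c in range(18)]
view_online = augment(x, rng)
view_target = augment(x, rng)
```

Calling `augment` twice on the same input yields the two positive samples used by the online and target branches; the channel count is unchanged because both operations act only on the spatial axes.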

It is pertinent to mention that data augmentation is a powerful tool for improving the performance and generalization of machine learning models, especially when training data are limited. It helps the model learn more robust features by introducing variability in the data, making it more capable of handling real-world data. However, it is important to apply augmentations judiciously, as too many extreme transformations can lead to overfitting or unrealistic data, thereby decreasing the model’s ability to generalize. It also comes with trade-offs in terms of increased training time and computational cost. Hence, a balanced approach, where augmentations are both realistic and diverse, is key to leveraging the full potential of this technique. The ablation study shows the effect of data augmentation.

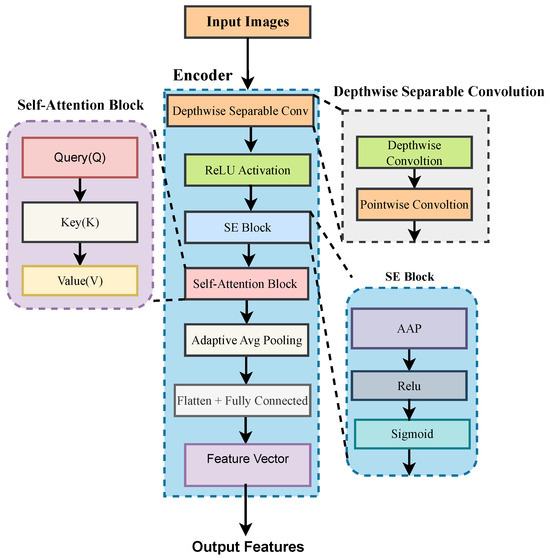

3.3. Encoder Architecture

A detailed diagram of the encoder is shown in Figure 5. The Dynamic-Separable Convolution-Encoder (DS-Conv) is a neural network that integrates depthwise separable convolution (DSC), a squeeze-and-excitation block (SEB), and a self-attention block (SAB) to encode the input data. It also applies an adaptive average-pooling (A-AP) operation to reduce the spatial dimensions and a fully connected layer to output the final feature representation. DSC splits a convolution into depthwise and pointwise steps to reduce computation. Unlike in a standard convolution, each input channel is filtered independently with its own filter, which results in fewer parameters. After the depthwise convolution, a pointwise convolution is performed. The output of the DSC is passed to the SEB, which recalibrates feature maps by learning channel-wise dependencies and consists of A-AP, ReLU, and sigmoid modules. The objective of the SEB is to capture global information (squeezing) and selectively emphasize certain features (excitation), so that the network focuses on more important features and ignores less important ones. After the SEB, the features are passed through the SAB, which contains query (Q), key (K), and value (V) modules. The attention weights are applied to the value matrix, and the result is summed with the original input tensor. The objective is to compute the attention scores and identify important data. The output of the SAB is passed through A-AP and, lastly, flattened.
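The parameter saving of depthwise separable convolution can be checked with a short calculation. The sketch below counts weights only (biases omitted); the 18 input channels correspond to the stacked SAR and MS bands, whereas the 64 output channels and the 3 × 3 kernel are illustrative assumptions rather than the settings of the proposed encoder.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights in a standard k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Weights in a depthwise k x k conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# 18 input channels (SAR + MS bands); 64 outputs and k = 3 are assumptions.
standard = standard_conv_params(18, 64, 3)
separable = depthwise_separable_params(18, 64, 3)
```

For these assumed sizes, the standard layer has 10,368 weights and the separable layer has 1,314, roughly an eightfold reduction.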

Figure 5.

Encoder architecture for SSL.

Algorithm 1 shows the entire pretraining process. The unlabeled data (x) are used to obtain the pretrained encoder (f). The algorithm shows all steps, including positive sample generation, loss computation, and backpropagation.

| Algorithm 1 Self-Supervised Learning Pretraining |
| --- |
| Input: Unlabeled training images x |
| Output: Trained encoder f |

Similarly, Algorithm 2 shows the entire fine-tuning process for the pretrained model. In this step, labeled data are used, and the loss is computed as the cross entropy between the true labels and the predictions.

| Algorithm 2 Fine Tuning a Pretrained Encoder for Classification |
| --- |
| Input: Training dataset file, Pretrained encoder weights, Number of epochs |
| Output: Fine-tuned classifier |
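The cross-entropy loss used during fine tuning can be illustrated for a single sample. This is a minimal sketch assuming a softmax over 17 class logits (one per local climate zone); the helper names `softmax` and `cross_entropy` are illustrative and not taken from the authors' implementation.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, label):
    """Negative log-probability assigned to the true class index."""
    return -math.log(softmax(logits)[label])


# Uniform logits over 17 classes give a loss of log(17).
uniform_loss = cross_entropy([0.0] * 17, label=3)

# A confident, correct prediction gives a much smaller loss.
confident_logits = [0.0] * 17
confident_logits[3] = 10.0
confident_loss = cross_entropy(confident_logits, label=3)
```

The uniform case is a useful sanity check: a classifier whose loss stays near log(17) has not yet learned anything from the labeled samples.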

Algorithm 3 is used to obtain the classification results using the fine-tuned encoder and unlabeled test data. Once the predicted labels are stored, they can be used later to compute the confusion matrix, output accuracy, average accuracy, and kappa values.

| Algorithm 3 Evaluation of Fine-Tuned Classifier on Test Data |
| --- |
| Input: Testing dataset file, Fine-tuned classifier |
| Output: Model accuracy on test dataset |
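Once the predicted labels are available, the three reported metrics can be derived from the confusion matrix. The sketch below assumes the usual definitions: OA is the fraction of correctly classified samples, AA is the mean per-class recall, and kappa compares OA with the agreement expected by chance. The function name and the 2 × 2 toy matrix are illustrative only.

```python
def classification_metrics(confusion):
    """Return (OA, AA, kappa) from a square confusion matrix.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n_classes))
    oa = correct / total

    # AA: mean of per-class recall, skipping classes with no samples.
    recalls = [confusion[i][i] / sum(confusion[i])
               for i in range(n_classes) if sum(confusion[i]) > 0]
    aa = sum(recalls) / len(recalls)

    # EA: chance agreement computed from the row and column marginals.
    col_sums = [sum(confusion[i][j] for i in range(n_classes))
                for j in range(n_classes)]
    ea = sum(sum(confusion[k]) * col_sums[k]
             for k in range(n_classes)) / total ** 2
    kappa = (oa - ea) / (1.0 - ea)
    return oa, aa, kappa


# Toy two-class example (made-up counts, for illustration only).
oa, aa, kappa = classification_metrics([[8, 2], [1, 9]])
```

For the 17-class problem, the same function applies unchanged to a 17 × 17 matrix.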

3.4. Attention-Based Mixing

Attention-based mixing helps the model attend to different parts of the data, making the network more flexible and capable of handling varying feature relationships between the two inputs. This step mixes the two inputs, which come from the online and target branches, respectively, based on the attention map. The attention map acts as a weighting function to blend the two inputs, and the result is the final augmented image.
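One plausible form of this blending is a convex combination weighted by the attention map. The sketch below is an assumption, since the exact mixing rule is not reproduced here: it treats the attention values as lying in [0, 1] and works on flattened inputs. The name `attention_mix` is hypothetical.

```python
def attention_mix(view_a, view_b, attention):
    """Blend two equally sized inputs element-wise.

    attention values are assumed to lie in [0, 1]: a value of 1 keeps
    view_a at that position, and a value of 0 keeps view_b.
    """
    if not (len(view_a) == len(view_b) == len(attention)):
        raise ValueError("inputs must have the same length")
    return [a * va + (1.0 - a) * vb
            for va, vb, a in zip(view_a, view_b, attention)]


# Three positions: keep the first view, average, keep the second view.
mixed = attention_mix([1.0, 2.0, 3.0], [5.0, 6.0, 7.0], [1.0, 0.5, 0.0])
```

Under this assumption, the fused sample always stays between the two positive samples, which is consistent with its role as a regularizer.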

3.5. Loss Computation

The final loss is computed as the sum of the individual losses. As there are three branches in our model, all of them contribute to the final loss, which comprises three terms: the online-to-target loss (between the online and target branches), the fusion-to-online loss (between the fusion and online branches), and the fusion-to-target loss (between the fusion and target branches).

3.5.1. Online-to-Target Loss

In order to ensure that the representations learned by the online branch match a set of target representations, we minimize a cosine-similarity loss computed over each batch. The loss combines two cosine-similarity terms, each comparing an online-branch output with a target-branch output, and M denotes the batch size.
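A loss of this kind can be sketched as follows. The exact expression is not reproduced here, so the code assumes the symmetric BYOL-style form in which each sample contributes 2 minus the two cosine similarities, averaged over the batch; all names and the toy vectors are assumptions.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + 1e-12)


def online_to_target_loss(preds_1, targets_2, preds_2, targets_1):
    """Symmetric cosine loss averaged over a batch of size M.

    Each sample contributes 2 - sim(p1, t2) - sim(p2, t1), which is zero
    when both online predictions align with their target representations.
    """
    m = len(preds_1)
    total = 0.0
    for p1, t2, p2, t1 in zip(preds_1, targets_2, preds_2, targets_1):
        total += 2.0 - cosine_similarity(p1, t2) - cosine_similarity(p2, t1)
    return total / m


# Aligned pairs give a loss near 0; orthogonal pairs give a loss of 2.
aligned_loss = online_to_target_loss(
    [[1.0, 0.0]], [[2.0, 0.0]], [[0.0, 1.0]], [[0.0, 3.0]])
orthogonal_loss = online_to_target_loss(
    [[1.0, 0.0]], [[0.0, 1.0]], [[0.0, 1.0]], [[1.0, 0.0]])
```

Because only the direction of the vectors matters, the loss is insensitive to the scale of the representations.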

3.5.2. Fusion-to-Online Loss

The online representation should be in line with the fused representation, which is expected to capture significant information from both branches. This is enforced by the fusion-to-online loss, which compares the fusion-branch representation vector with the online-branch projection.

3.5.3. Fusion-to-Target Loss

To further ensure that the fused representation retains significant target information, we add the fusion-to-target loss, which compares the fusion-branch representation vector with the target representation.

3.5.4. Final Loss Function

The final loss is a weighted sum of the three individual losses, with one weight per term controlling its contribution. These weights allow for flexibility in balancing the different objectives during training. The dataset, augmentation technique, and model architecture are some of the variables that affect the ideal weight values. Typically, the weights are selected experimentally based on training performance.
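The weighted combination can be written as a short function. This is a sketch only: the argument names are hypothetical, and the default of equal weights is an assumption made for illustration, not the setting used in the experiments.

```python
def total_loss(loss_online_target, loss_fusion_online, loss_fusion_target,
               weights=(1.0, 1.0, 1.0)):
    """Weighted sum of the three branch losses.

    weights holds one coefficient per loss term; equal weights are an
    illustrative default, not the values used in this article.
    """
    w_ot, w_fo, w_ft = weights
    return (w_ot * loss_online_target
            + w_fo * loss_fusion_online
            + w_ft * loss_fusion_target)


equal = total_loss(0.5, 0.25, 0.25)
reweighted = total_loss(1.0, 2.0, 3.0, weights=(0.5, 0.25, 0.25))
```

Setting one weight to zero removes the corresponding term, which is a convenient way to run the loss ablations.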

4. Data and Experimental Framework

4.1. Dataset

The So2Sat-LCZ42 [2] dataset contains co-registered SAR and multispectral images of local climate zones. Three versions of the dataset have been released (https://github.com/zhu-xlab/So2Sat-LCZ42, accessed on 5 January 2025). In this article, version 3 is used. It consists of 352,366 training samples and 48,307 test samples. The dataset was chosen for the following reasons. First, it contains cities around the world. Second, the cities have complex morphologies. Third, the number of samples is large. Fourth, the samples are collected from culturally diverse cities across the continents. These reasons make it a challenging dataset. The training and testing patches have dimensions of 32 × 32 pixels. Sentinel-2 has ten bands, and Sentinel-1 has eight bands, with a ground sampling distance of 10 m. Descriptions of the Sentinel-1 bands are presented in Table 2, and the Sentinel-2 bands are described in Table 3. Sentinel-1 consists of VH, HH, and VV polarization. Some of the bands are unfiltered, and some are speckle-filtered by applying a Lee filter. Similarly, the Sentinel-2 data comprise multiple bands, including visible and infrared bands. We used all 18 bands in the training and testing process. It is pertinent to mention that MS images are normalized before testing.

Table 2.

Sentinel-1 bands.

Table 3.

Description of Sentinel-2 data.

4.2. Performance Evaluation and Experimental Configuration

The performance of LCZC is evaluated using Average Accuracy (AA), Output Accuracy (OA), and the kappa measure. The kappa measure is defined as

$$\kappa = \frac{\mathrm{OA} - \mathrm{EA}}{1 - \mathrm{EA}}$$

where OA is the observed accuracy and EA is the expected accuracy. We selected hyperparameters based on preliminary experiments. We used a batch size of 64, as it provided a good trade-off between computational efficiency and stable convergence. Smaller batch sizes (e.g., 32) resulted in noisier gradient updates, while larger batch sizes increased memory requirements without notable improvements in performance. The initial learning rate was set to 0.001. We tested alternative values (0.01, 0.0005, and 0.0001) and observed that 0.01 caused instability, while 0.0001 led to very slow convergence. A cosine decay learning-rate schedule was also used to gradually reduce the learning rate for improved optimization.
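The cosine decay schedule can be sketched as follows. The initial learning rate of 0.001 and the 100 epochs come from the experimental settings; the absence of warm-up and the final learning rate of zero are assumptions, and the function name is illustrative.

```python
import math


def cosine_decay_lr(epoch, total_epochs=100, base_lr=0.001, min_lr=0.0):
    """Learning rate at a given epoch under cosine decay (no warm-up).

    min_lr = 0.0 is an assumption; the schedule falls smoothly from
    base_lr at epoch 0 to min_lr at total_epochs.
    """
    progress = min(max(epoch / total_epochs, 0.0), 1.0)
    cosine = 1.0 + math.cos(math.pi * progress)
    return min_lr + 0.5 * (base_lr - min_lr) * cosine


# Learning rate for every epoch from 0 to 100.
schedule = [cosine_decay_lr(e) for e in range(101)]
```

The rate changes slowly at the start and end of training and fastest around the midpoint, where it equals half the initial value.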

We implemented pretraining for 100 epochs with an initial learning rate of 0.001. The batch size was set as 64. The loss weights were set as , , and . For few-shot classification, the dimension of the fully connected layer was equal to the number of local climate zones. For fine tuning, the model was trained for 100 epochs with a learning rate of 0.001 and a batch size of 64. Table 4 shows the effect of batch size on accuracy.

Table 4.

Performance based on batch size and learning rate.

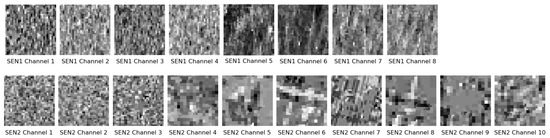

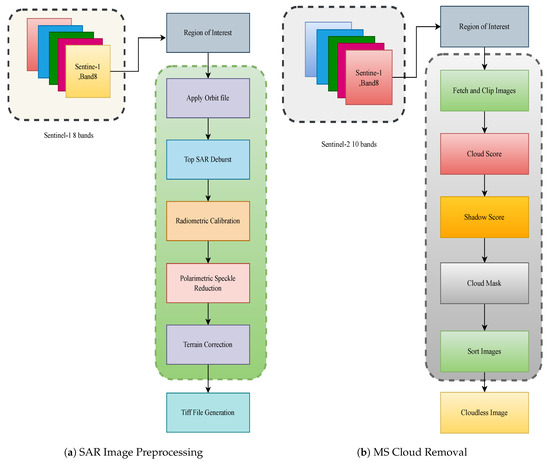

4.3. SAR and MS Preprocessing

SAR image preprocessing is a necessary step. Once the SAR and multispectral data have been downloaded, the preprocessing steps are used to generate ten MS bands and eight SAR bands, as shown in Figure 6. The entire process is shown in Figure 7 for both SAR and MS images.

Figure 6.

Sentinel-1 (row1) and Sentinel-2 (row2) patches for training.

Figure 7.

Preprocessing for SAR and multispectral data.

Region selection is the first step, in which the area of interest (AOI) is selected from the larger dataset. For both SAR and MS images, it is important to define the region where further analysis will occur. This step could involve cropping or selecting a specific geographic region. The orbit file contains information about the satellite’s position and its path in space; it is required for georeferencing so that images are aligned with geographical coordinates. Debursting stitches together the separate bursts of a SAR acquisition to create a single, seamless image. Radiometric calibration removes distortions caused by the sensor so that the reflectance values in the image match the true physical characteristics of the Earth’s surface. Speckle noise, caused by the interference of radar waves, is very common; speckle reduction techniques suppress this noise and improve the clarity and interpretability of the images. Terrain correction modifies an image to account for distortions caused by the Earth’s surface topography, such as valleys and mountains, so that the image depicts the landscape accurately.

Clouds are frequently captured in MS images and can hide the land surface. Cloud removal is therefore an essential preprocessing step that makes the analysis more accurate. Like SAR images, multispectral images are cropped to the region of interest to eliminate irrelevant areas. Cloud score computation identifies areas in the image that are likely to contain clouds: cloud detection algorithms assign a cloud score to each pixel, which helps to classify and filter out cloud-covered regions. Shadows cast by clouds or terrain can also obscure the surface in multispectral images, so a shadow score is computed to identify these areas, allowing for their removal or correction. After cloud and shadow removal, the remaining images are sorted or processed in a way that enhances their usability for further analysis, typically focusing on the areas of interest with clear visibility.

In essence, these preprocessing steps ensure that the SAR and multispectral data are clean, accurate, and ready for the next stages of processing. The outcome of MS preprocessing and SAR preprocessing is 18 bands, which are concatenated. The 18-band concatenated images are then divided into 32 × 32 patches, and the patches are applied to the fine-tuned network for classification. For pretraining and fine tuning, only the So2Sat-LCZ42 dataset is used. Table 5 and Table 6 show the preprocessing steps for Sentinel-1 and Sentinel-2, respectively.
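The band concatenation and patch extraction at the end of preprocessing can be sketched in plain Python. The sketch assumes non-overlapping tiles and drops border pixels that do not fill a whole tile; neither choice is stated in the text, and the function names and the all-zero toy scene are illustrative.

```python
def stack_bands(sar_bands, ms_bands):
    """Concatenate SAR and MS bands along the channel axis."""
    return list(sar_bands) + list(ms_bands)


def extract_patches(image, patch=32):
    """Split a C x H x W image into non-overlapping patch x patch tiles.

    Rows and columns that do not fill a whole tile are dropped
    (an assumption made for this sketch).
    """
    height, width = len(image[0]), len(image[0][0])
    patches = []
    for top in range(0, height - patch + 1, patch):
        for left in range(0, width - patch + 1, patch):
            patches.append([[row[left:left + patch]
                             for row in channel[top:top + patch]]
                            for channel in image])
    return patches


# Toy 64 x 96 scene: 8 SAR bands and 10 MS bands filled with zeros.
sar = [[[0.0] * 96 for _ in range(64)] for _ in range(8)]
ms = [[[0.0] * 96 for _ in range(64)] for _ in range(10)]
patches = extract_patches(stack_bands(sar, ms))
```

The toy scene yields six patches, each with 18 channels of 32 × 32 pixels, matching the input shape expected by the network.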

Table 5.

Preprocessing steps for Sentinel-1 (SAR) images.

Table 6.

Preprocessing steps for Sentinel-2 (Optical) images.

The preprocessing steps for both SAR and multispectral images have a significant impact on the final classification results.

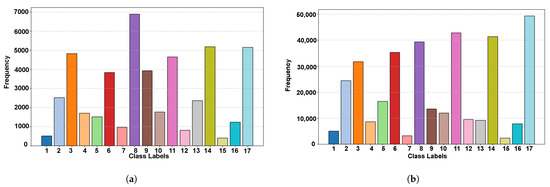

Histograms of the training and testing samples are shown in Figure 8, giving the number of samples per class for both sets. The histograms show that the number of samples per class differs, so the classes need to be balanced before training the network.

Figure 8.

Training and testing data. (a) Histogram of testing samples. (b) Histogram of training samples.

5. Results

5.1. Results Comparison

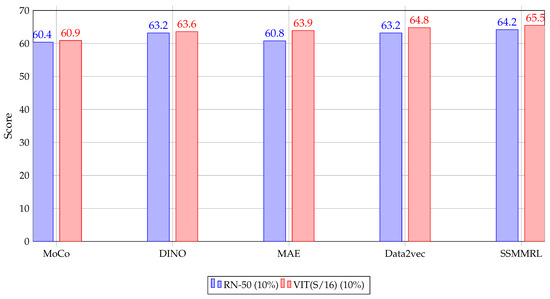

The So2Sat-LCZ42 dataset is used to determine the classification accuracy. First, unlabeled data are used to pretrain the network. The pretraining results are presented in Table 7, and Figure 9 shows a comparative graph for OA with different encoders and models. We compared the results with state-of-the-art SSL models [49] such as MoCo, DINO, MAE, and Data2vec. Instead of using ResNet (RN-50) or Vision Transformer (VIT), we used the novel SSMMRL encoder. The percentages of samples used for performance comparison are 10% and 100%. SSMMRL achieved an output accuracy of 61.4% with 10% of the data and 62.5% with 100% of the data, which is better than the other algorithms.

Table 7.

Linear evaluation [49].

Figure 9.

Comparison of performance (RN-50, VIT(S/16)) with 10% and 100% samples for different models.

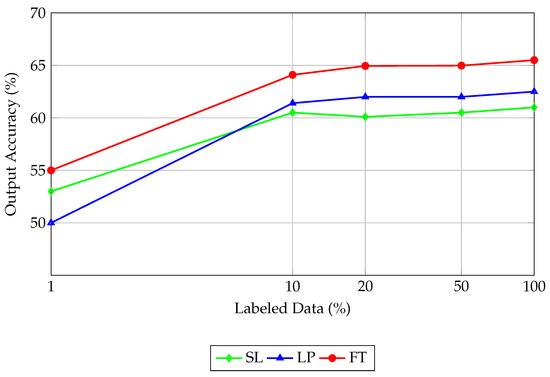

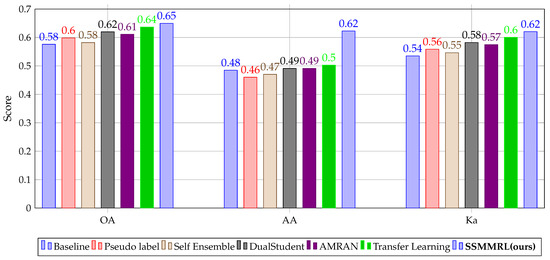

After the pretraining, fine tuning of the model is performed using labeled data. However, very few labels are required to train the model because the model has already learned features in the pretraining stage. The results are depicted in Table 8 and Figure 10. It can be seen that our model achieves better results than the other state-of-the-art models. We achieved 64.1% and 65.5% for 10% and 100% samples, respectively. Furthermore, Figure 11 shows a comparison of linear, fine-tuning, and supervised methods. Here, we increase the percentage of labeled data from 1% to 100% and evaluate the performance accordingly for pretraining, also called linear probing (LP), fine tuning (FT), and supervised learning (SL). Fine-tuning results are better than the pretraining and supervised learning results. The results were also compared with other models [50], like pseudo labels, self-ensemble, dual student, AMRAN, and transfer learning models as shown in Table 9 and Figure 12. SSMMRL results are better than the rest of the models in terms of OA, AA, and Ka.

Table 8.

Fine tuning [49].

Figure 10.

Comparison of fine-tuning performance (RN-50, VIT(S/16)) with 10% and 100% samples for different models.

Figure 11.

OA (%) vs. annotated samples (%).

Table 9.

Comparison of results [50].

Figure 12.

Comparison of OA, AA, and Ka scores across different models.

In order to show that SSMMRL is computationally efficient, the training time for 100 epochs is shown for different SSL models in Table 10 using NVIDIA A100 GPUs.

Table 10.

Training times of the studied SSL methods (100 epochs) [50].

A statistical test was performed to compare the performance of the self-supervised learning models. Table 11 shows that SSMMRL is significantly better than the other models. The p-values and t-values were obtained using the average of five experiments for each model. A p-value below 0.05 indicates a statistically significant difference; similarly, high t-values indicate significant differences.

Table 11.

Comparison of SSMMRL with different methods using a t-test.

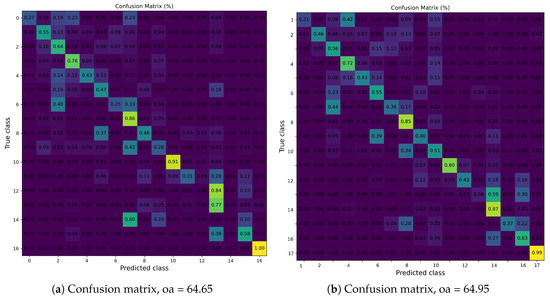

In order to evaluate the class-wise accuracy, the confusion matrices for OA = 64.65% and OA = 64.95%, as determined in the ablation study, are shown in Figure 13. The diagonal values show the per-class accuracy; class 17 has the maximum accuracy of 99%.

Figure 13.

Confusion matrix.
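The three summary metrics used throughout this section (OA, AA, and Ka) can all be derived from a confusion matrix. The sketch below shows the standard definitions on a made-up 3-class matrix; the function name and the convention that rows are true classes and columns are predictions are assumptions for the example, and the counts are not taken from Figure 13.

```python
def summarize_confusion_matrix(matrix):
    """Return (OA, AA, kappa) for a square matrix of raw counts.

    Assumption: rows are true classes and columns are predicted classes.
    """
    n_classes = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_sums = [sum(row) for row in matrix]
    col_sums = [sum(row[j] for row in matrix) for j in range(n_classes)]
    correct = sum(matrix[i][i] for i in range(n_classes))

    overall_acc = correct / total
    # Per-class accuracy is the diagonal entry divided by its row sum.
    per_class = [matrix[i][i] / row_sums[i]
                 for i in range(n_classes) if row_sums[i] > 0]
    average_acc = sum(per_class) / len(per_class)
    # Cohen's kappa corrects OA for the agreement expected by chance.
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (overall_acc - expected) / (1.0 - expected)
    return overall_acc, average_acc, kappa


# Toy 3-class matrix with made-up counts.
toy = [
    [8, 1, 1],
    [2, 6, 2],
    [0, 0, 10],
]
oa, aa, ka = summarize_confusion_matrix(toy)
print(f"OA = {oa:.3f}, AA = {aa:.3f}, Ka = {ka:.3f}")
```

OA weights every sample equally, whereas AA weights every class equally, so the two diverge when classes are imbalanced, as is typical for LCZ data.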

Overall, the classification is effective. The detailed classification report in Table 12 lists the precision, recall, and F1 score for each class.

Table 12.

Classification Report: Precision, Recall, and F1-Score.

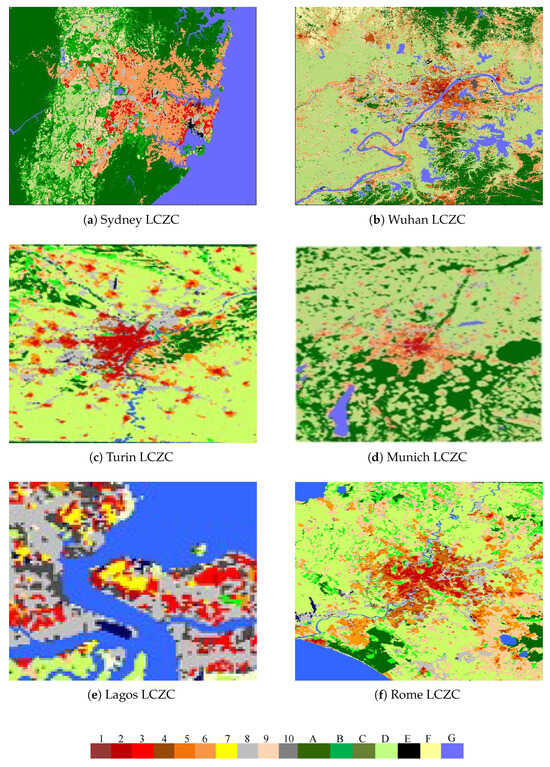
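For reference, the per-class quantities in a classification report follow directly from confusion-matrix counts. The sketch below uses the standard definitions of precision, recall, and F1 on a made-up matrix; the function name `per_class_report` and the row/column convention are assumptions, and the numbers are unrelated to Table 12.

```python
def per_class_report(matrix):
    """Return a list of (precision, recall, f1) tuples, one per class.

    Assumption: rows are true classes and columns are predicted classes.
    """
    n_classes = len(matrix)
    report = []
    for k in range(n_classes):
        true_pos = matrix[k][k]
        predicted = sum(matrix[i][k] for i in range(n_classes))  # column sum
        actual = sum(matrix[k])                                  # row sum
        precision = true_pos / predicted if predicted else 0.0
        recall = true_pos / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        report.append((precision, recall, f1))
    return report


# Made-up counts for three classes.
counts = [
    [8, 1, 1],
    [2, 6, 2],
    [0, 0, 10],
]
for k, (p, r, f1) in enumerate(per_class_report(counts)):
    print(f"class {k}: precision = {p:.3f}, recall = {r:.3f}, F1 = {f1:.3f}")
```

A class with high recall but low precision, like the last class in this toy matrix, absorbs samples from other classes, which is the pattern to look for when reading the report alongside the confusion matrix.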

5.2. LCZ Maps

The local climate zone classification of six cities is shown in Figure 14, with the 17 classes drawn in different colors. The LCZ maps show that most of the classes are correctly classified. The maximum accuracy is achieved for class G (water): water bodies, shown in blue, are easily classified. The accuracy for the remaining classes follows the confusion matrix discussed earlier.

Figure 14.

Local climate zone classification for 17 classes.

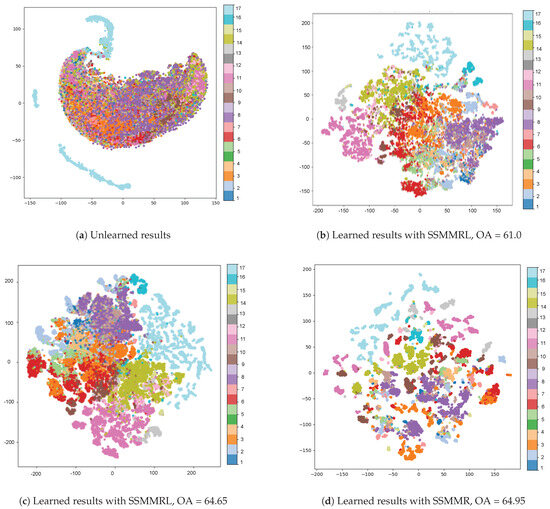

5.3. Data Visualization

A t-SNE visualization is presented in Figure 15. The plots show how SSMMRL improves the data representation, turning initially scattered points (before learning) into well-separated clusters (after learning), which reflects the ability of the learned model to organize the data and extract meaningful patterns.

Figure 15.

t-SNE visualization of So2Sat-LCZ42 data.

6. Discussion

6.1. Ablation Study

Table 13 shows the results of an ablation study conducted to evaluate the effects of the structural components, loss functions, and positive sample generation methods. With only the SEB, the model achieves an OA of 60.85% and a Ka of 59.97%. Adding an SAB yields a slight increase, with an OA of 61.14% and a Ka of 60.98%. Incorporating DS-Conv in the SEB and SAB improves the OA to 64.65% and the Ka to 61.57%. Including all components (SEB, SAB, DS-Conv, and A-AP) and all three losses with the full augmentation pipeline leads to the highest observed OA of 64.95% (with only 20% of the labels) and a Ka of 62.4%. These results suggest that combining the SEB, SAB, DS-Conv, and A-AP with full augmentation and three loss terms gives the best performance in terms of both OA and Ka. Removing any of the components, losses, or augmentations lowers the results below this maximum.

Table 13.

Ablation study with structural components, loss functions, and positive sample generation methods (L1 = , L2 = , and L3 = ).

6.2. Interpretation of Classification Performance

It is pertinent to mention that for eight classes, the accuracy is below 50%. Class 13 (bush) and class 14 (low plants) have the highest misclassification rates, for several reasons. First, their contents are similar. Second, the distinctions between these classes depend primarily on the proportion of vegetation coverage and the vegetation species. Third, seasonal fluctuations affect the performance. Fourth, the low resolution of Sentinel-1 and Sentinel-2 data limits the ability to distinguish these variations.

Classes 3 (compact low-rise) and 7 (lightweight low-rise) are also misclassified, for several reasons. First, these classes exhibit comparable patterns of compactness. Second, the primary difference between them is the weight of the construction materials, which is difficult to determine from Sentinel-1 and Sentinel-2 images. Third, reference data for the lightweight low-rise class are scarce.

6.3. Custom Data Results

A custom dataset was generated using co-registered Sentinel-1 and Sentinel-2 images. Small patches with dimensions of 8 × 8, 16 × 16, and 32 × 32 were generated and saved as training data. Only 10% of the total samples were used with labels for fine-tuning. The classification results are shown in Table 14. The accuracy on the custom data is lower than on the So2Sat-LCZ42 dataset because the custom dataset was created using only one city, whereas the So2Sat-LCZ42 dataset contains cities around the world.

Table 14.

Results on the custom dataset.
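The patch generation step for the custom dataset can be sketched as a simple tiling routine. The text gives only the patch sizes, so the non-overlapping stride, the dropping of incomplete border patches, and the function name `extract_patches` are assumptions made for illustration. Plain Python lists are used to keep the example dependency-free. Because the Sentinel-1 and Sentinel-2 images are co-registered, the same tiling grid could be applied to both modalities so that patches stay aligned.

```python
def extract_patches(image, patch_size):
    """Split a 2-D grid (list of rows) into non-overlapping square patches.

    Assumption: border pixels that do not fill a whole patch are dropped.
    """
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height - patch_size + 1, patch_size):
        for left in range(0, width - patch_size + 1, patch_size):
            patch = [row[left:left + patch_size]
                     for row in image[top:top + patch_size]]
            patches.append(patch)
    return patches


# Toy 64 x 64 single-band "image"; each pixel stores its (row, col) index.
toy_image = [[(r, c) for c in range(64)] for r in range(64)]
for size in (8, 16, 32):
    patches = extract_patches(toy_image, size)
    print(f"{size} x {size}: {len(patches)} patches")
```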

7. Conclusions

In this paper, a novel feature extractor for self-supervised learning using only positive samples is introduced. The So2Sat-LCZ42 dataset is used without labels to pretrain the network, and only a few annotated samples are used to fine-tune it. The novel encoder and positive sample-based regularization enhance the overall efficacy. The proposed method achieves superior results relative to SOTA methods while using few labeled samples and avoiding overfitting. While our approach demonstrates strong performance in the context of multi-modal learning, the generalization ability of the model could be explored further, particularly in scenarios where the data domains vary significantly. Furthermore, similarity between types of climate zones, such as bush and shrub, limits the performance of the algorithm. Future work will focus on enhancing the encoder’s performance for transfer learning and domain adaptation.

Author Contributions

Resources, W.Y., H.Z. and Y.W.; Writing—original draft, A.N.; Supervision, J.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (NNSFC) under Grant U2241202.

Data Availability Statement

The original data presented in the study are openly available in So2Sat LCZ42 at http://doi.org/10.14459/2018mp1483140 (accessed on 5 January 2025).

Acknowledgments

The authors would like to thank Xiaoxiang Zhu and Yuanyuan Wang from TUM. Their support and the provision of the dataset for local climate zone classification have been invaluable. This dataset has played a key role in this research.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhu, X.X.; Qiu, C.; Hu, J.; Shi, Y.; Wang, Y.; Schmitt, M.; Taubenböck, H. The Urban Morphology on Our Planet – Global Perspectives from Space. Remote Sens. Environ. 2022, 269, 112794.

- Zhu, X.X.; Hu, J.; Qiu, C.; Shi, Y.; Kang, J.; Mou, L.; Bagheri, H.; Häberle, M.; Hua, Y.; Huang, R.; et al. So2Sat LCZ42: A Benchmark Data Set for the Classification of Global Local Climate Zones [Software and Data Sets]. IEEE Geosci. Remote Sens. Mag. 2020, 8, 76–89.

- He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum Contrast for Unsupervised Visual Representation Learning. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 9729–9738.

- Zhang, L.; Zhang, S.; Zou, B.; Dong, H. Unsupervised Deep Representation Learning and Few-Shot Classification of Pol-SAR Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5100316.

- Baevski, A.; Hsu, W.-N.; Xu, Q.; Babu, A.; Gu, J.; Auli, M. Data2Vec: A General Framework for Self-Supervised Learning in Speech, Vision, and Language. In Proceedings of the 39th International Conference on Machine Learning (ICML), PMLR, Baltimore, MD, USA, 17–23 July 2022; Volume 162, pp. 1298–1312. Available online: https://proceedings.mlr.press/v162/baevski22a.html (accessed on 5 January 2025).

- Mills, G.; Ching, J.; See, L.; Bechtel, B.; Foley, M. An Introduction to the WUDAPT Project. In Proceedings of the 9th International Conference on Urban Climate, Toulouse, France, 20–24 July 2015.

- Bechtel, B.; Foley, M.; Mills, G.; Ching, J.; See, L.; Alexander, P.; O’Connor, M.; Albuquerque, T. CENSUS of Cities: LCZ-Classification of Cities (Level-0) Workflow Initial Results from Various Cities. In Proceedings of the 9th International Conference on Urban Climate, Toulouse, France, 20–24 July 2015.

- Seo, D.K.; Kim, Y.H.; Eo, Y.D.; Lee, M.H.; Park, W.Y. Fusion of SAR/Multispectral Images with Random Forest Regression for Change Detection. ISPRS Int. J. Geo-Inf. 2018, 7, 401.

- Oliver, C.; Quegan, S. Understanding Synthetic Aperture Radar Images; SciTech Publishing: Raleigh, NC, USA, 2004.

- Blaes, X.; Vanhalle, L.; Defourny, P. Efficiency of Crop Identification Using Optical/SAR Image Time-Series. Remote Sens. Environ. 2005, 96, 352–365.

- Xu, G.; Zhu, X.; Tapper, N.; Bechtel, B. Urban Climate Zones Classification Using CNN and Ground-Level Images. Prog. Phys. Geogr. Earth Environ. 2019, 43, 410–424.

- Grill, J.-B.; Strub, F.; Altché, F.; et al. Bootstrap Your Own Latent: A New Approach to Self-Supervised Learning. In Proceedings of the 34th International Conference on Neural Information Processing Systems (NeurIPS), Vancouver, BC, Canada, 6–12 December 2020; Volume 33, pp. 21271–21284.

- Xu, D.; Zhan, Q.; Zhou, D.; Yan, Y.; Wan, Y.; Rogoa, A. Local Climate Zones in Xian City: A New Classification Approach Employing Spatial Indicators and Supervised Classification. Buildings 2023, 13, 2806.

- Wan, Y.; Zhou, D.; Ma, Q. Developing a Comprehensive Local Climate Zones Land Use Dataset for Advanced High-Resolution Urban Climate and Environment Modeling. Remote Sens. 2023, 15, 3111.

- Lian, Y.; Song, W.; Cao, S.; Du, M. Local Climate Zones Classification Using Daytime Zhuhai1 Hyperspectral Imagery and Night-Time Light Datasets. Remote Sens. 2023, 15, 3351.

- Moix, E.; Giulini, G. Mapping Local Climate Zone Change in the 5 Largest Cities of Switzerland. Urban Sci. 2024, 8, 120.

- Fen, W.; Liu, J. A Literature Survey of Local Climate Zones Classification: Status, Applications, and Prospects. Buildings 2022, 12, 1693.

- Xu, J.; Yo, R.; Lu, W.; Chen, C.; Laai, D. Applications of Local Climate Zone Classification Schemes to Improve Urban Sustainability: A Bibliometric Review. Sustainability 2020, 12, 8083.

- Fernandes, R.; Nascimanto, V.; Freitas, M.; Ometo, J. Local Climate Zones to Identify Surface Urban Heat Islands (SUHI): A Systematic Review. Remote Sens. 2023, 15, 884.

- Zhao, Z.; Shen, L.; Le, L.; Wang, H.; He, B.-J. Local Climate Zones Classification Schemes Can Also Indicate Local-Scale Urban Ventilation Performances: An Evidence-Based Study. Atmosphere 2020, 11, 776.

- Zhang, Y.; Li, D.; Liu, L.; Liang, Z.; Shen, J.; Wei, F.; Li, S. Spatio-temporal Characteristics of the Surface Urban Heat Island and Its Driving Factors Based on Local Climate Zones and Population in Beijing, China. Atmosphere 2021, 12, 1271.

- Lehnert, M.; Saveć, S.; Milšević, D.; Dunjeć, J.; Gelatić, J. Mapping Local Climate Zones and Their Applications in European Urban Environments: A Systematic Literature Review and Future Development Trends. ISPRS Int. J. Geo-Inf. 2021, 10, 260.

- Zhao, Z.; Sharifi, A.; Dong, X.; Shen, L.; He, B.-J. Spatial Variability and Temporal Heterogeneity of Surface Urban Heat Island (SUHI) Patterns and the Suitability of Local Climate Zones for Land Surface Temperature Characterization. Remote Sens. 2021, 13, 4338.

- Chen, C.; Bagen, H.; Xi, X.; Laa, Y.; Yamagata, Y. Combination of Sentinel-2 and PALSAR-2 for Local Climate Zone Classification: A Case Study of Nancheng, China. Remote Sens. 2021, 13, 1902.

- Ji, W.; Chen, Y.; Li, K.; Dai, X. Multicascaded Feature Fusion-Based Deep Learning Network for Local Climate Zone Classification Based on the So2Sat LCZ42 Benchmark Dataset. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2023, 16, 449–467.

- Yu, Y.; Li, J.; Yuan, Q.; Shi, Q.; Shen, H.; Zhang, L. Coupling Dual Graph Convolution Network and Residual Network for Local Climate Zone Mapping. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2022, 15, 1221–1234.

- Luu, Y.; Yang, J.; Ma, S. Dynamic Changes of Local Climate Zones in the Guangdong–Hong Kong–Macao Greater Bay Area and Their Spatio-Temporal Impacts on the Surface Urban Heat Island Effects Between 2005 and 2015. Sustainability 2021, 13, 6374.

- Alghamdi, A.S.; Alzhrani, A.I.; Alanazi, H.H. Local Climate Zones and Thermal Characteristics in Riyadh City, Saudi Arabia. Remote Sens. 2021, 13, 4526.

- Shi, L.; Linge, F.; Foody, G.M.; Yang, Z.; Liu, X.; Du, Y. Seasonal SUHI Analysis Using Local Climate Zone Classification: A Case Study of Wuhan, China. Int. J. Environ. Res. Public Health 2021, 18, 7242.

- Wang, R.; Wang, M.; Zhang, Z.; Hu, T.; Xin, J.; He, Z.; Liu, X. Geographical Detection of Urban Thermal Environment Based on the Local Climate Zones: A Case Study in Wuhan, China. Remote Sens. 2022, 14, 1067.

- Xian, Y.; Tan, Y.; Wan, Z.; Peng, C.; Huang, C.; Dian, Y.; Teng, M.; Zhao, Z. Seasonal Variation of the Relationships between Spectral Indices and Land Surface Temperatures Based on Local Climate Zones: A Study in Three Yangtze River Megacities. Remote Sens. 2023, 15, 870.

- Hu, J.; Ghamisi, P.; Zhu, X.X. Feature Extraction and Selection of Sentinel-1 Dual-Pol Data for Global-Scale Local Climate Zones Classification. ISPRS Int. J. Geo-Inf. 2018, 7, 379.

- He, G.; Dong, Z.; Guan, J.; Feng, P.; Jin, S.; Zhang, X. SAR and Multi-Spectral Data Fusion for Local Climate Zones Classification with Multi-Branch CNN. Remote Sens. 2023, 15, 434.

- Ballard, D.H. Modular Learning in Neural Networks. In Proceedings of the Sixth National Conference on Artificial Intelligence, Seattle, WA, USA, 13–17 July 1987; Volume 647, pp. 279–284.

- Ng, A. Sparse Autoencoder. CS294A Lect. Notes 2011, 72, 1–19.

- Vincent, P.; Larochelle, H.; Lajoie, I.; Bengio, Y.; Manzagol, P.A.; Bottou, L. Stacked Denoising Autoencoders: Learning Useful Representations in a Deep Network with a Local Denoising Criterion. J. Mach. Learn. Res. 2010, 11, 3371–3408.

- Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. arXiv 2013, arXiv:1312.6114.

- He, K.; Chen, X.; Xie, S.; Li, Y.; Dollár, P.; Girshick, R. Masked Autoencoders Are Scalable Vision Learners. arXiv 2021, arXiv:2111.06377.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. Adv. Neural Inf. Process. Syst. 2014, 27. Available online: https://proceedings.neurips.cc/paper_files/paper/2014/file/f033ed80deb0234979a61f95710dbe25-Paper.pdf (accessed on 5 January 2025).

- Makhzani, A.; Shlens, J.; Jaitly, N.; Goodfellow, I.; Frey, B. Adversarial Autoencoders. arXiv 2015, arXiv:1511.05644.

- Donahue, J.; Krahenbuhl, P.; Darrell, T. Adversarial Feature Learning. arXiv 2016, arXiv:1605.09782.

- Chopra, S.; Hadsell, R.; LeCun, Y. Learning a Similarity Metric Discriminatively, with Application to Face Verification. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 539–546.

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A Simple Framework for Contrastive Learning of Visual Representations. In Proceedings of the International Conference on Machine Learning, PMLR, Vienna, Austria, 13–18 July 2020; pp. 1597–1607.

- Caron, M.; Bojanowski, P.; Joulin, A.; Douze, M. Deep Clustering for Unsupervised Learning of Visual Features. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 132–149.

- Caron, M.; Misra, I.; Mairal, J.; Goyal, P.; Bojanowski, P.; Joulin, A. Unsupervised Learning of Visual Features by Contrasting Cluster Assignments. arXiv 2020, arXiv:2006.09882.

- Caron, M.; Touvron, H.; Misra, I.; Jégou, H.; Mairal, J.; Bojanowski, P.; Joulin, A. Emerging Properties in Self-Supervised Vision Transformers. arXiv 2021, arXiv:2104.14294.

- Zbontar, J.; Jing, L.; Misra, I.; LeCun, Y.; Deny, S. Barlow Twins: Self-Supervised Learning via Redundancy Reduction. arXiv 2021, arXiv:2103.03230.

- Bardes, A.; Ponce, J.; LeCun, Y. VICReg: Variance-Invariance-Covariance Regularization for Self-Supervised Learning. arXiv 2021, arXiv:2105.04906.

- Wang, Y.; Braham, N.A.A.; Xiong, Z.; Liu, C.; Albrecht, C.M.; Zhu, X.X. SSL4EO-S12: A Large-Scale Multimodal, Multitemporal Dataset for Self-Supervised Learning in Earth Observation [Software and Data Sets]. IEEE Geosci. Remote Sens. Mag. 2023, 11, 98–106.

- Zhao, X.; Hu, J.; Mou, L.; Xiong, Z.; Zhu, X.X. Cross-City Landuse Classification of Remote Sensing Images via Deep Transfer Learning. Int. J. Appl. Earth Obs. Geoinf. 2023, 122, 103358.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).