Evaluation of Sentinel-2 Deep Resolution 3.0 Data for Winter Crop Identification and Organic Barley Yield Prediction

Abstract

1. Introduction

2. Materials and Methods

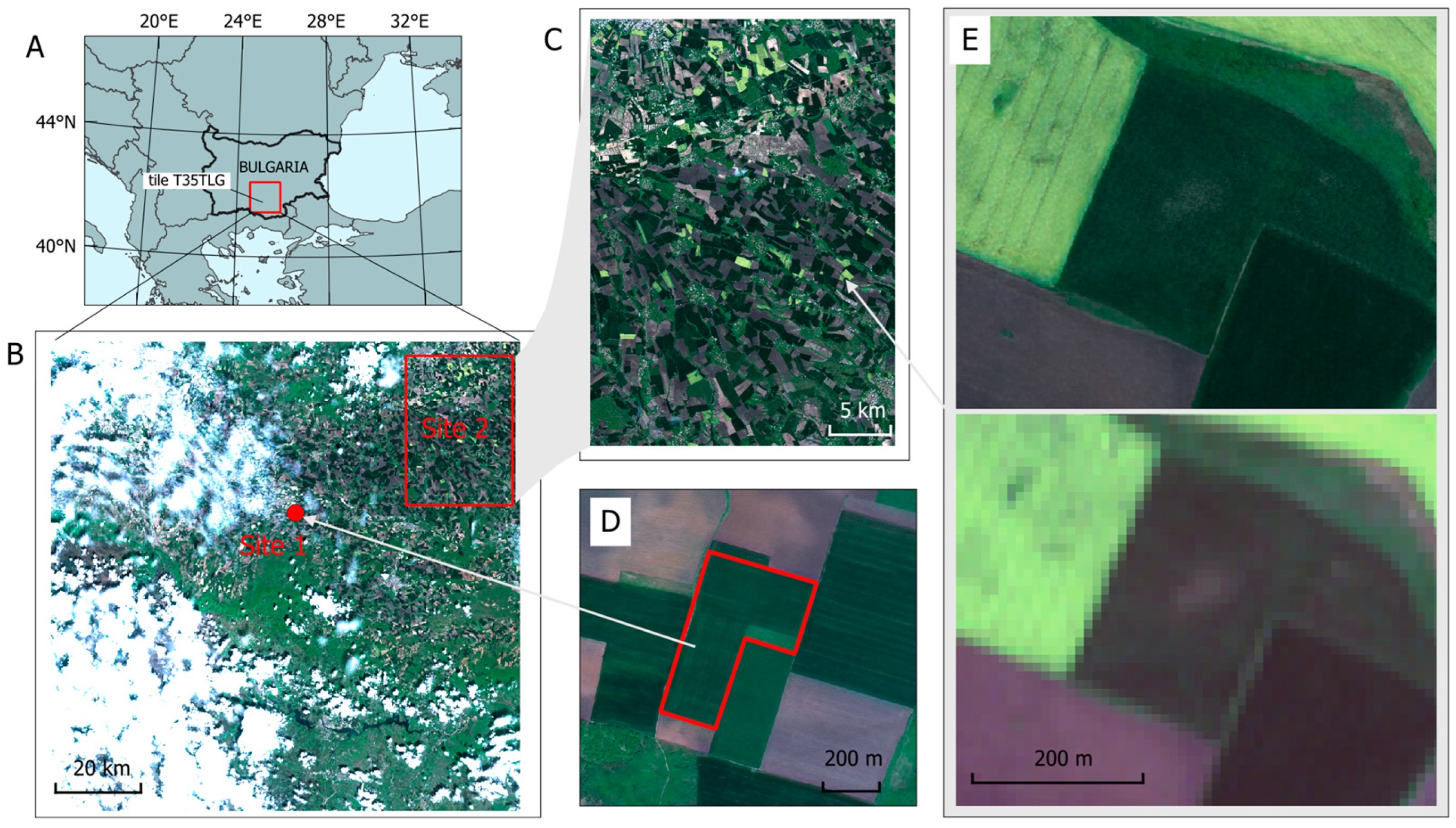

2.1. Study Sites and Satellite Image Datasets

2.1.1. Barley Yield Data

2.1.2. Crop Type Data

2.2. Methods

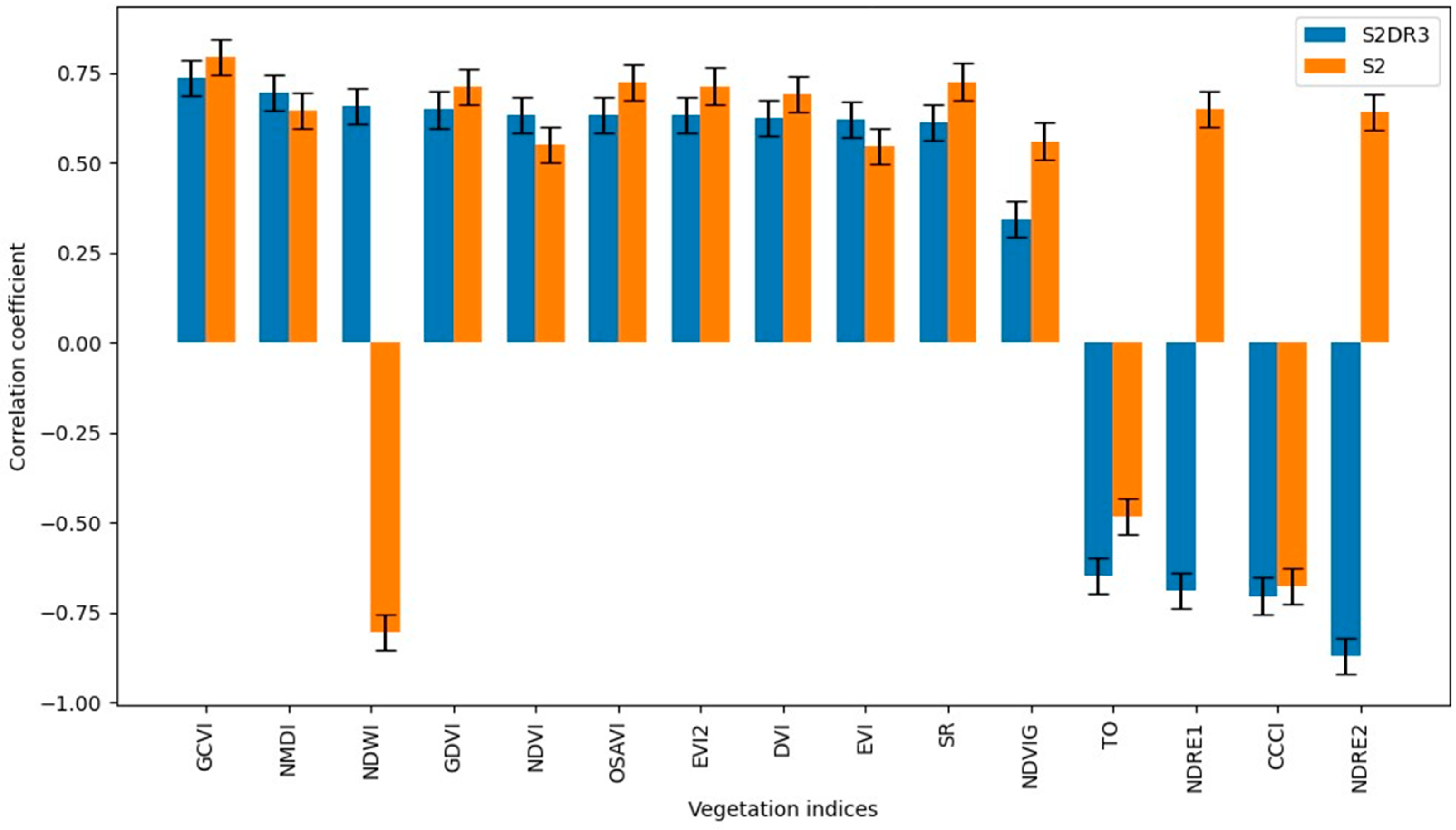

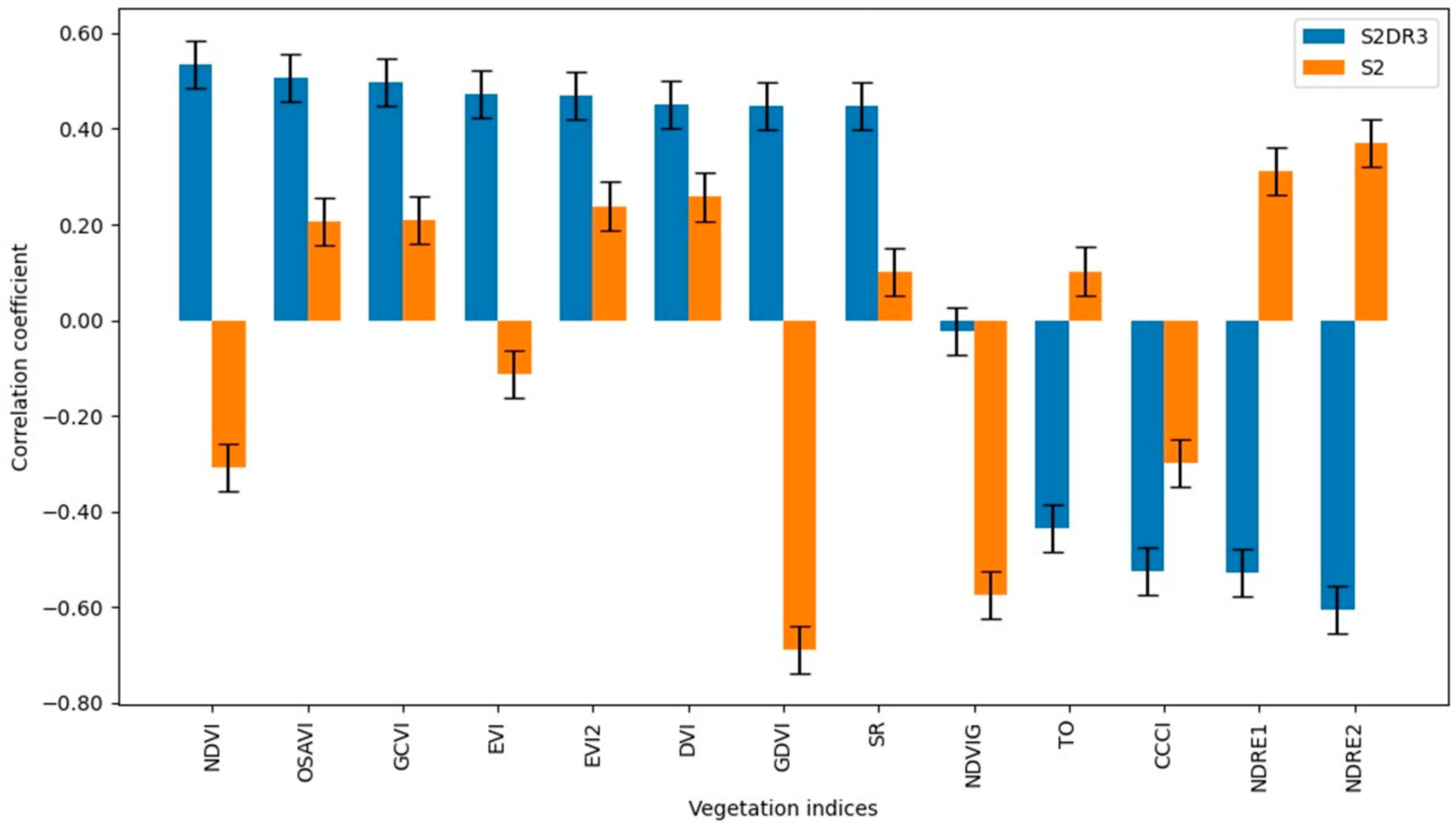

2.2.1. Correlation of Yield with Vegetation Indices

2.2.2. Crop Classification

3. Results

3.1. Results of the Correlation Between Crop Yield and Vegetation Indices

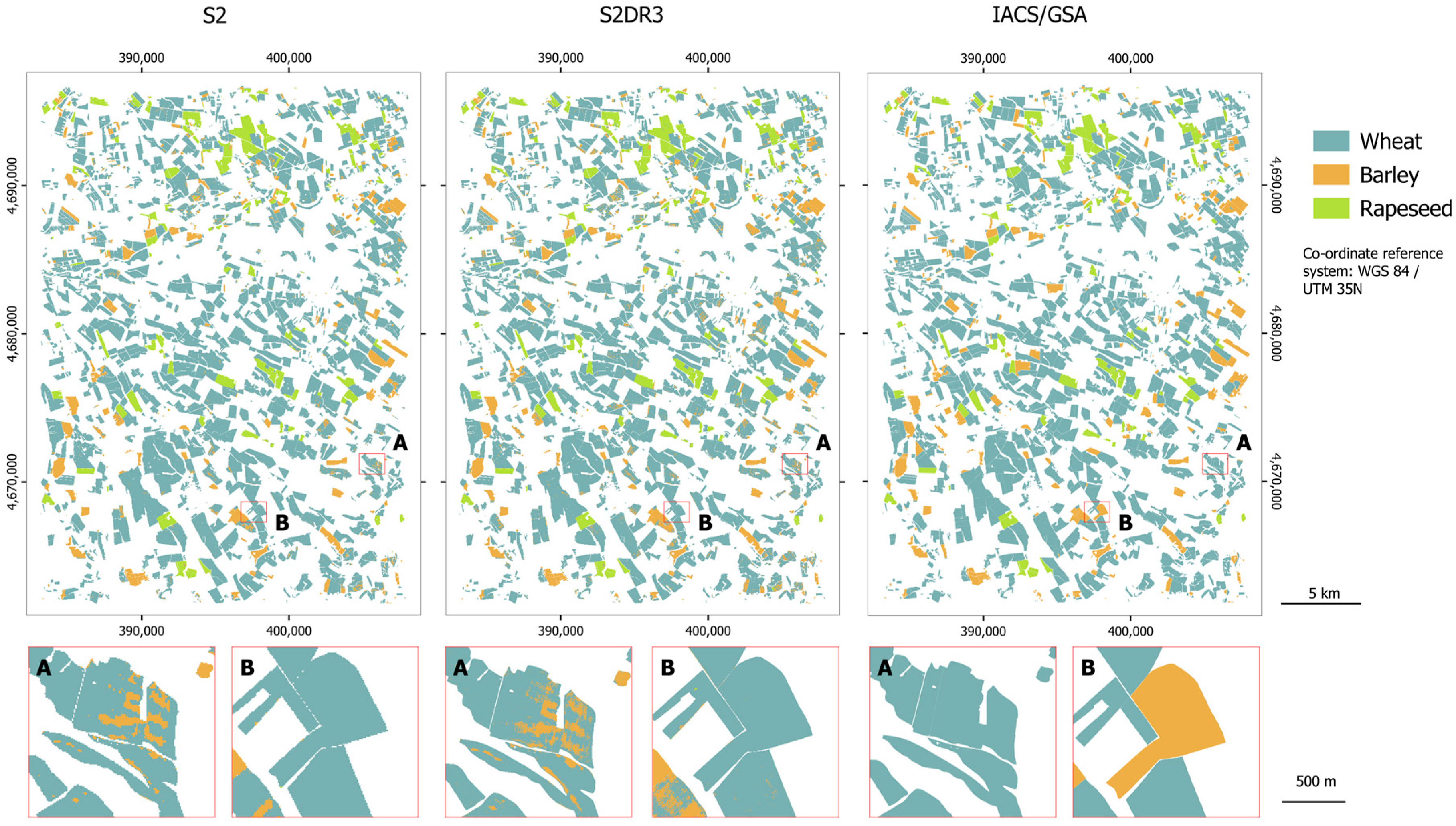

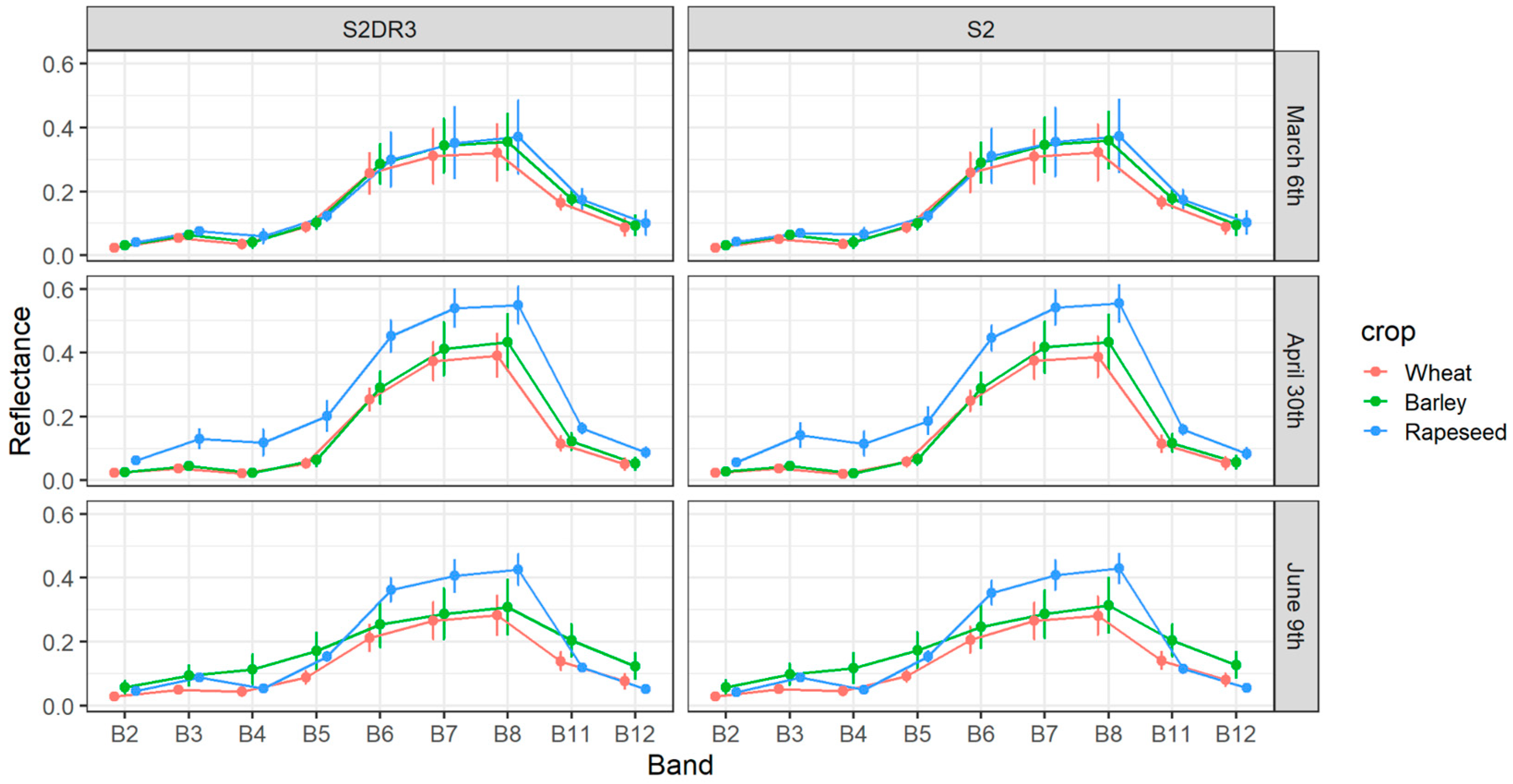

3.2. Crop Classification

4. Discussion

4.1. Winter Crop Discrimination

4.2. The Performance of S2DR3

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BBCH | Biologische Bundesanstalt, Bundessortenamt und CHemische Industrie (Phenological Scale) |
| CCCI | Canopy Chlorophyll Content Index |
| CAP | Common Agricultural Policy |
| DR | Deep Resolution |
| DVI | Difference Vegetation Index |
| EOSDA | EOS Data Analytics |
| EVI | Enhanced Vegetation Index |
| EVI2 | Two-band Enhanced Vegetation Index |
| GCVI | Green Chlorophyll Vegetation Index |
| GIS | Geographic Information System |
| GSA | Geospatial Application |
| IACS | Integrated Administration and Control System |
| MDPI | Multidisciplinary Digital Publishing Institute |
| NDRE1 | Normalised Difference Red Edge Index 1 |
| NDRE2 | Normalised Difference Red Edge Index 2 |
| NDVI | Normalised Difference Vegetation Index |
| NDVIG | Green Normalised Difference Vegetation Index |
| NDWI | Normalised Difference Water Index |
| NMDI | Normalised Multi-band Drought Index |
| OSAVI | Optimised Soil-Adjusted Vegetation Index |
| QGIS | Quantum Geographic Information System |
| RF | Random Forest |
| S2 | Sentinel-2 |
| S2DR3 | Sentinel-2 Deep Resolution 3.0 |
| SISR | Single-Image Super-Resolution |
| SR | Simple Ratio |
| TO | Triangular Optical Reflectance |
| VI | Vegetation Index |

References

1. Leitner, C.; Vogl, C.H. Farmers’ Perceptions of the Organic Control and Certification Process in Tyrol, Austria. Sustainability 2020, 12, 9160.

2. Novikova, A.; Rocchi, L.; Startiene, G. Integrated Assessment of Farming System Outputs: Lithuanian Case Study. Inžinerinė Ekon. 2020, 31, 282–290.

3. Novikova, A.; Zemaitiene, R.; Marks-Bielska, R.; Bielski, S. Assessment of the Environmental Public Goods of the Organic Farming System: A Lithuanian Case Study. Agriculture 2024, 14, 362.

4. Aleksiev, G. Sustainable Development of Bulgarian Organic Agriculture. Trakia J. Sci. 2020, 18, 603–606.

5. Georgieva, N.; Kosev, V.; Vasileva, I. Suitability of Lupinus albus L. Genotypes for Organic Farming in Central Northern Bulgaria. Agronomy 2024, 14, 506.

6. Lone, A.H.; Rashid, I. Sustainability of Organic Farming: A Review via Three Pillar Approach. Sustain. Agri Food Environ. Res. Discontin. 2023, 11, 1–24.

7. Rozaki, Z.; Yudanto, R.S.B.; Triyono; Rahmawati, N.; Alifah, S.; Ardila, R.A.; Pamungkas, H.W.; Fathurrohman, Y.E.; Man, N. Assessing the sustainability of organic rice farming in Central Java and Yogyakarta: An economic, ecological, and social evaluation. Org. Farming 2024, 10, 142–158.

8. Kociszewski, K.; Graczyk, A.; Mazurek-Łopacińska, K.; Sobocińska, M. Social Values in Stimulating Organic Production Involvement in Farming—The Case of Poland. Sustainability 2020, 12, 5945.

9. Gitelson, A.A. Remote Sensing Estimation of Crop Biophysical Characteristics at Various Scales. Hyperspectral Remote Sens. Veget. 2016, 20, 329.

10. Atanasova, D.; Bozhanova, V.; Biserkov, V.; Maneva, V. Distinguishing Areas of Organic, Biodynamic and Conventional Farming by Means of Multispectral Images—A Pilot Study. Biotechnol. Biotechnol. Equip. 2021, 35, 977–993.

11. Li, G.; Wan, S.; Zhou, J.; Yang, Z.; Qin, P. Leaf Chlorophyll Fluorescence, Hyperspectral Reflectance, Pigments Content, Malondialdehyde and Proline Accumulation Responses of Castor Bean (Ricinus communis L.) Seedlings to Salt Stress Levels. Ind. Crops Prod. 2010, 31, 13–19.

12. Usha, K.; Singh, B. Potential Applications of Remote Sensing in Horticulture—A Review. Sci. Hortic. 2013, 153, 71–83.

13. Chanev, M.; Filchev, L.; Ivanova, D. Opportunities for Remote Sensing Applications in Organic Cultivation of Cereals—A Review. J. Bulg. Geogr. Soc. 2020, 43, 31–36.

14. Nadzirah, R.; Qurrohman, T.; Indarto, I.; Putra, B.T.W.; Andriyani, I. Estimating Harvest Yield Using Sentinel-2 Derivative Vegetation Indices (Case Study: Organic Paddy in Candipuro, Lumajang). In AIP Conference Proceedings; AIP Publishing: Melville, NY, USA, 2024; Volume 3176.

15. Tarasiewicz, T.; Nalepa, J.; Farrugia, R.A.; Valentino, G.; Chen, M.; Briffa, J.A.; Kawulok, M. Multitemporal and multispectral data fusion for super-resolution of Sentinel-2 images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–19.

16. Jumaah, H.J.; Rashid, A.A.; Saleh, S.A.R.; Jumaah, S.J. Deep neural remote sensing and Sentinel-2 satellite image processing of Kirkuk City, Iraq for sustainable prospective. J. Opt. Photonics Res. 2024, 1–9.

17. Michel, J.; Vinasco-Salinas, J.; Inglada, J.; Hagolle, O. SEN2VENµS, a Dataset for the Training of Sentinel-2 Super-Resolution Algorithms. Data 2022, 7, 96.

18. Weiss, M.; Jacob, F.; Duveiller, G. Remote sensing for agricultural applications: A meta-review. Remote Sens. Environ. 2020, 236, 111402.

19. Akhtman, Y. Sentinel-2 Deep Resolution. Available online: https://medium.com/@ya_71389/sentinel-2-deep-resolution-3-0-c71a601a2253 (accessed on 23 January 2024).

20. Kawulok, M.; Kawulok, J.; Smolka, B.; Celebi, M.E. (Eds.) Super-Resolution for Remote Sensing; Springer: Cham, Switzerland, 2024; 384p.

21. Ye, S.; Zhao, S.; Hu, Y.; Xie, C. Single-Image Super-Resolution Challenges: A Brief Review. Electronics 2023, 12, 2975.

22. Dixit, M.; Yadav, R.N. A Review of Single Image Super Resolution Techniques using Convolutional Neural Networks. Multimed. Tools Appl. 2024, 83, 29741–29775.

23. Al-Mekhlafi, H.; Liu, S. Single image super-resolution: A comprehensive review and recent insight. Front. Comput. Sci. 2024, 18, 181702.

24. Wang, P.; Bayram, B.; Sertel, E. A comprehensive review on deep learning based remote sensing image super-resolution methods. Earth-Sci. Rev. 2022, 232, 104110.

25. Lei, S.; Shi, Z.; Zou, Z. Super-Resolution for Remote Sensing Images via Local–Global Combined Network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1243–1247.

26. Ninov, N. Chapter 4: Soils. In Geography of Bulgaria; Kopralev, I., Yordanova, M., Mladenov, C., Eds.; ForCom: Sofia, Bulgaria, 2002; pp. 277–315. (In Bulgarian)

27. Shishkov, T.; Kolev, N. The Soils of Bulgaria; Springer: Cham, Switzerland, 2014; 208p.

28. Kopralev, I.; Yordanova, M.; Mladenov, C. (Eds.) Geography of Bulgaria; ForCom: Sofia, Bulgaria, 2002; 760p. (In Bulgarian)

29. Marinkov, E.; Dimova, D. Opitno Delo i Biometriya; Akademichno Izdatelstvo na VSI: Plovdiv, Bulgaria, 1999; 262p. (In Bulgarian)

30. Meier, U. Growth Stages of Mono- and Dicotyledonous Plants: BBCH Monograph; Open Agrar Repositorium: Quedlinburg, Germany, 2018.

31. Shanin, J. Metodika na Polskija Opit; Izdatelstvo na BAN: Sofia, Bulgaria, 1977; 383p. (In Bulgarian)

32. Integrated Administration and Control System (IACS). Available online: https://agriculture.ec.europa.eu/common-agricultural-policy/financing-cap/assurance-and-audit/managing-payments_en (accessed on 24 January 2024).

33. Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring the Vernal Advancement and Retrogradation (Green Wave Effect) of Natural Vegetation. NASA Technical Reports Server (NTRS), USA, NASA-CR-132982. 1973. Available online: https://ntrs.nasa.gov/api/citations/19730017588/downloads/19730017588.pdf (accessed on 24 January 2024).

34. Metternicht, G. Vegetation Indices Derived from High-Resolution Airborne Videography for Precision Crop Management. Int. J. Remote Sens. 2003, 24, 2855–2877.

35. Gao, B.C. Normalized Difference Water Index for Remote Sensing of Vegetation Liquid Water from Space. Imaging Spectrom. 1995, 2480, 225–236.

36. Wang, L.; Qu, J.J. NMDI: A Normalized Multi-Band Drought Index for Monitoring Soil and Vegetation Moisture with Satellite Remote Sensing. Geophys. Res. Lett. 2007, 34, L20405.

37. Rondeaux, G.; Steven, M.; Baret, F. Optimization of Soil-Adjusted Vegetation Indices. Remote Sens. Environ. 1996, 55, 95–107.

38. Jordan, C.F. Derivation of Leaf-Area Index from Quality of Light on the Forest Floor. Ecology 1969, 50, 663–666.

39. Steven, M.D. The Sensitivity of the OSAVI Vegetation Index to Observational Parameters. Remote Sens. Environ. 1998, 63, 49–60.

40. Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the Radiometric and Biophysical Performance of the MODIS Vegetation Indices. Remote Sens. Environ. 2002, 83, 195–213.

41. Daughtry, C.S.; Walthall, C.L.; Kim, M.S.; De Colstoun, E.B.; McMurtrey, J.E., III. Estimating Corn Leaf Chlorophyll Concentration from Leaf and Canopy Reflectance. Remote Sens. Environ. 2000, 74, 229–239.

42. Haboudane, D.; Miller, J.R.; Tremblay, N.; Zarco-Tejada, P.J.; Dextraze, L. Integrated Narrow-Band Vegetation Indices for Prediction of Crop Chlorophyll Content for Application to Precision Agriculture. Remote Sens. Environ. 2002, 81, 416–426.

43. Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships Between Leaf Chlorophyll Content and Spectral Reflectance and Algorithms for Non-Destructive Chlorophyll Assessment in Higher Plant Leaves. J. Plant Physiol. 2003, 160, 271–282.

44. Gitelson, A.; Merzlyak, M.N. Spectral Reflectance Changes Associated with Autumn Senescence of Aesculus hippocastanum L. and Acer platanoides L. Leaves. Spectral Features and Relation to Chlorophyll Estimation. J. Plant Physiol. 1994, 143, 286–292.

45. Barnes, E.M.; Clarke, T.R.; Richards, S.E.; Colaizzi, P.D.; Haberland, J.; Kostrzewski, M.; Waller, P.; Choi, C.; Riley, E.; Thompson, T.; et al. Coincident Detection of Crop Water Stress, Nitrogen Status and Canopy Density Using Ground Based Multispectral Data. In Proceedings of the Fifth International Conference on Precision Agriculture, Bloomington, MN, USA, 16–19 July 2000; Volume 1619.

46. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

47. Drusch, M.; Del Bello, U.; Carlier, S.; Colin, O.; Fernandez, V.; Gascon, F.; Hoersch, B.; Isola, C.; Laberinti, P.; Martimort, P. Sentinel-2: ESA’s Optical High-Resolution Mission for GMES Operational Services. Remote Sens. Environ. 2012, 120, 25–36.

48. Vapnik, V.N. The Nature of Statistical Learning Theory. Nat. Stat. Learn. Theory 1995, 38, 409.

49. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

50. Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

51. Peña-Barragán, J.M.; Ngugi, M.K.; Plant, R.E.; Six, J. Object-based crop identification using multiple vegetation indices, textural features and crop phenology. Remote Sens. Environ. 2011, 115, 1301–1316.

52. Peştemalci, V.; Dinç, U.; Yeğingil, I.; Kandirmaz, M.; Çullu, M.A.; Öztürk, N.; Aksoy, E. Acreage estimation of wheat and barley fields in the province of Adana, Turkey. Int. J. Remote Sens. 1995, 16, 1075–1085.

53. Toulios, L.; Tournaviti, A. Cereals discrimination based on spectral measurements. In Proceedings of the International Symposium on Remote Sensing, Crete, Greece, 23–27 September 2002.

54. Gerstmann, H.; Möller, M.; Gläßer, C. Optimization of spectral indices and long-term separability analysis for classification of cereal crops using multi-spectral RapidEye imagery. Int. J. Appl. Earth Obs. Geoinf. 2016, 52, 115–125.

55. Harfenmeister, K.; Itzerott, S.; Weltzien, C.; Spengler, D. Detecting Phenological Development of Winter Wheat and Winter Barley Using Time Series of Sentinel-1 and Sentinel-2. Remote Sens. 2021, 13, 5036.

56. Ashourloo, D.; Nematollahi, H.; Huete, A.; Aghighi, H.; Azadbakht, M.; Shahrabi, H.S.; Goodarzdashti, S. A new phenology-based method for mapping wheat and barley using time-series of Sentinel-2 images. Remote Sens. Environ. 2022, 280, 113206.

57. Heupel, K.; Spengler, D.; Itzerott, S. A Progressive Crop-Type Classification Using Multitemporal Remote Sensing Data and Phenological Information. J. Photogramm. Remote Sens. Geoinf. Sci. 2018, 86, 53–69.

58. Nasrallah, A.; Baghdadi, N.; Mhawej, M.; Faour, G.; Darwish, T.; Belhouchette, H.; Darwich, S. A Novel Approach for Mapping Wheat Areas Using High Resolution Sentinel-2 Images. Sensors 2018, 18, 2089.

59. Pfeil, I.; Reuß, F.; Vreugdenhil, M.; Navacchi, C.; Wagner, W. Classification of Wheat And Barley Fields Using Sentinel-1 Backscatter. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2020), Waikoloa, HI, USA, 26 September–2 October 2020; pp. 140–143.

60. Marini, M.F. Discriminación de trigo y cebada empleando imágenes satelitales ópticas y radar: Estudio de caso: Partido de Coronel Rosales (Argentina). Investig. Geográficas 2021, 104, e60173.

| Image Date | Clouds (%) | Usage |
|---|---|---|
| 6 March 2023 | 13 | Crop classification |
| 15 April 2023 | 37 | Yield |
| 30 April 2023 | 40 | Yield/Crop classification |
| 9 June 2023 | 2 | Yield/Crop classification |

| Vegetation Index | Formula | Reference |
|---|---|---|
| NDVI | (B8 − B4)/(B8 + B4) | [33] |
| NDVIG | (B6 − B3)/(B3 + B6) | [34] |
| NDWI | (B8 − B12)/(B8 + B12) | [35] |
| NMDI | (B8a − (B11 − B12))/(B8a + (B11 − B12)) | [36] |
| OSAVI | (B8 − B4)/(B8 + B4 + 0.16) | [37] |
| SR | B8/B4 | [38] |
| DVI | B8 − B4 | [39] |
| EVI | 2.5 × (B8 − B4)/(B8 + 6 × B4 − 7.5 × B2 + 1) | [40] |
| EVI2 | 2.5 × (B8 − B4)/(B8 + 2.4 × B4 + 1) | [41] |
| TO | TCARI/OSAVI | [42] |
| GDVI | B8 − B3 | [43] |
| GCVI | B8/B3 − 1 | [43] |
| NDRE1 | (B6 − B5)/(B6 + B5) | [44] |
| NDRE2 | (B7 − B5)/(B7 + B5) | [44] |
| CCCI | NDRE1/OSAVI | [45] |
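The index formulas in the table above can be sketched in Python as follows. This is a minimal illustration, not the processing chain used in the study. It assumes the bands are surface reflectance scaled to 0–1 (the constants in OSAVI, EVI and EVI2 presuppose that scale) and that they are passed in a dictionary with hypothetical keys such as `"B8a"`. NMDI is written with (B11 − B12) in both numerator and denominator, following the definition of Wang and Qu [36]. TCARI, needed for TO, is not defined in the table; the sketch uses the Haboudane et al. [42] form with B5, B4 and B3 standing in for 700, 670 and 550 nm, which is an assumption of this example.

```python
import numpy as np


def vegetation_indices(b):
    """Compute the vegetation indices of the table from Sentinel-2 bands.

    `b` maps band names ("B2", "B3", "B4", "B5", "B6", "B7", "B8", "B8a",
    "B11", "B12") to reflectance values or NumPy arrays, assumed to be
    surface reflectance scaled to 0-1. No masking of zero or invalid
    pixels is done, so divisions may yield inf/nan there.
    """
    B2, B3, B4, B5 = b["B2"], b["B3"], b["B4"], b["B5"]
    B6, B7, B8, B8a = b["B6"], b["B7"], b["B8"], b["B8a"]
    B11, B12 = b["B11"], b["B12"]

    vi = {
        "NDVI": (B8 - B4) / (B8 + B4),
        "NDVIG": (B6 - B3) / (B3 + B6),
        "NDWI": (B8 - B12) / (B8 + B12),
        # NMDI with (B11 - B12) in both terms, following Wang and Qu [36].
        "NMDI": (B8a - (B11 - B12)) / (B8a + (B11 - B12)),
        "OSAVI": (B8 - B4) / (B8 + B4 + 0.16),
        "SR": B8 / B4,
        "DVI": B8 - B4,
        "EVI": 2.5 * (B8 - B4) / (B8 + 6 * B4 - 7.5 * B2 + 1),
        "EVI2": 2.5 * (B8 - B4) / (B8 + 2.4 * B4 + 1),
        "GDVI": B8 - B3,
        "GCVI": B8 / B3 - 1,
        "NDRE1": (B6 - B5) / (B6 + B5),
        "NDRE2": (B7 - B5) / (B7 + B5),
    }
    # TCARI (Haboudane et al. [42]); mapping 700/670/550 nm to B5/B4/B3
    # is an assumption of this sketch, not taken from the source.
    tcari = 3 * ((B5 - B4) - 0.2 * (B5 - B3) * (B5 / B4))
    vi["TO"] = tcari / vi["OSAVI"]
    vi["CCCI"] = vi["NDRE1"] / vi["OSAVI"]
    return vi


# Illustrative single-pixel reflectances (made up, not from the study).
pixel = {k: np.array([v]) for k, v in {
    "B2": 0.05, "B3": 0.08, "B4": 0.10, "B5": 0.15, "B6": 0.30,
    "B7": 0.35, "B8": 0.40, "B8a": 0.42, "B11": 0.25, "B12": 0.15,
}.items()}
indices = vegetation_indices(pixel)
```

Because every operation is element-wise, the same function applies unchanged to full S2 or S2DR3 rasters loaded as equally shaped arrays.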

| Classifier | Parameter [49] | Description [49] | Candidate Values | Default Value in EnMAP-Box v3.15 |
|---|---|---|---|---|
| RF | n_estimators | The number of trees in the forest | 100, 200, 300, 400, 500, 600, 700, 800, 900, 1000 | 100 |
| RF | max_features | The number of features to consider when looking for the best split | 3, 4, 5, 6, 7 | Square root of the number of features |
| RF | min_samples_split | The minimum number of samples required to split an internal node | 2, 3, 5, 10, 15, 20, 30 | 2 |
| RF | min_samples_leaf | The minimum number of samples required to be at a leaf node | 1, 2, 3, 4 | 1 |
| SVC | gamma | Kernel coefficient | 0.00001, 0.0001, 0.001, 0.01, 0.1, 1, 10, 100 | - |
| SVC | C | Regularisation parameter | 0.01, 0.1, 1, 10, 100, 1000, 10,000, 100,000 | - |
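A grid search over the candidate values in the hyperparameter table could be set up with scikit-learn [49] roughly as below. The grids are copied from the table. Everything else is an assumption of this sketch rather than the EnMAP-Box configuration used in the study: 5-fold cross-validation, the RBF kernel for SVC, standardisation of the features before SVC, and the hypothetical helper name `build_searches`. Note that `max_features` values of 3–7 require at least seven input features.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Candidate values copied from the hyperparameter table.
RF_GRID = {
    "n_estimators": [100, 200, 300, 400, 500, 600, 700, 800, 900, 1000],
    "max_features": [3, 4, 5, 6, 7],  # needs at least 7 input features
    "min_samples_split": [2, 3, 5, 10, 15, 20, 30],
    "min_samples_leaf": [1, 2, 3, 4],
}
# make_pipeline names the SVC step "svc", hence the "svc__" prefix.
SVC_GRID = {
    "svc__gamma": [1e-5, 1e-4, 1e-3, 1e-2, 1e-1, 1, 10, 100],
    "svc__C": [0.01, 0.1, 1, 10, 100, 1000, 10_000, 100_000],
}


def build_searches(cv=5, n_jobs=-1, random_state=0):
    """Return unfitted grid searches for RF and SVC (hypothetical helper).

    Assumptions not taken from the source: an integer `cv` (scikit-learn
    then uses stratified k-fold for classifiers), the RBF kernel, and
    feature standardisation before SVC.
    """
    rf_search = GridSearchCV(
        RandomForestClassifier(random_state=random_state),
        RF_GRID, cv=cv, n_jobs=n_jobs,
    )
    svc_search = GridSearchCV(
        make_pipeline(StandardScaler(), SVC(kernel="rbf")),
        SVC_GRID, cv=cv, n_jobs=n_jobs,
    )
    return rf_search, svc_search
```

Typical use would be `rf_search, svc_search = build_searches()` followed by `rf_search.fit(X, y)` and `svc_search.fit(X, y)` on a per-pixel feature matrix `X` and crop labels `y`, then reading `best_params_`. The full RF grid has 10 × 5 × 7 × 4 = 1400 combinations, so it is costly with up to 1000 trees per fit.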

| Crop | Metric | Support Vector Classification, S2 | Support Vector Classification, S2DR3 | Random Forest, S2 | Random Forest, S2DR3 |
|---|---|---|---|---|---|
| Winter wheat | User’s accuracy | 0.96 (0.94; 0.98) | 0.96 (0.94; 0.98) | 0.97 (0.95; 0.98) | 0.96 (0.95; 0.98) |
| Winter wheat | Producer’s accuracy | 0.97 (0.95; 0.98) | 0.95 (0.87; 0.97) | 0.90 (0.87; 0.94) | 0.90 (0.85; 0.93) |
| Winter barley | User’s accuracy | 0.78 (0.69; 0.86) | 0.70 (0.49; 0.82) | 0.53 (0.34; 0.67) | 0.52 (0.35; 0.65) |
| Winter barley | Producer’s accuracy | 0.70 (0.59; 0.88) | 0.70 (0.59; 0.88) | 0.74 (0.65; 0.87) | 0.73 (0.63; 0.86) |
| Winter rapeseed | User’s accuracy | 0.95 (0.90; 0.99) | 0.95 (0.90; 0.98) | 0.93 (0.86; 0.95) | 0.91 (0.85; 0.95) |
| Winter rapeseed | Producer’s accuracy | 0.98 (0.97; 0.99) | 0.98 (0.96; 0.99) | 0.99 (0.98; 1.00) | 0.99 (0.98; 0.99) |
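User’s accuracy is the share of samples mapped as a class that truly belong to it, and producer’s accuracy is the share of reference samples of a class that were mapped correctly. The sketch below computes both from a confusion matrix and attaches percentile-bootstrap intervals. The bootstrap is an assumption of this example, since the method behind the bracketed intervals in the accuracy table is not described here, and the function names are hypothetical.

```python
import numpy as np


def confusion(y_true, y_pred, classes):
    """Confusion matrix: rows = reference class, columns = mapped class."""
    idx = {c: i for i, c in enumerate(classes)}
    cm = np.zeros((len(classes), len(classes)), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[idx[t], idx[p]] += 1
    return cm


def users_producers(cm):
    """Per-class user's and producer's accuracy (nan where undefined)."""
    diag = np.diag(cm).astype(float)
    with np.errstate(divide="ignore", invalid="ignore"):
        users = diag / cm.sum(axis=0)      # correct / all mapped as the class
        producers = diag / cm.sum(axis=1)  # correct / all reference samples of the class
    return users, producers


def bootstrap_ci(y_true, y_pred, classes, n_boot=1000, alpha=0.05, seed=0):
    """Percentile-bootstrap bounds for user's and producer's accuracy.

    Returns two arrays of shape (2, n_classes): row 0 = lower bound,
    row 1 = upper bound.
    """
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    rng = np.random.default_rng(seed)
    n = len(y_true)
    ua_samples, pa_samples = [], []
    for _ in range(n_boot):
        i = rng.integers(0, n, size=n)  # resample validation samples with replacement
        ua, pa = users_producers(confusion(y_true[i], y_pred[i], classes))
        ua_samples.append(ua)
        pa_samples.append(pa)
    q = [100 * alpha / 2, 100 * (1 - alpha / 2)]
    # nanpercentile skips resamples in which a class was absent.
    return (np.nanpercentile(ua_samples, q, axis=0),
            np.nanpercentile(pa_samples, q, axis=0))
```

With `classes = ["winter wheat", "winter barley", "winter rapeseed"]` and per-sample reference and predicted labels, `users_producers(confusion(...))` gives the point estimates and `bootstrap_ci(...)` the lower and upper bounds, in the same "value (lower; upper)" spirit as the table.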

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chanev, M.; Kamenova, I.; Dimitrov, P.; Filchev, L. Evaluation of Sentinel-2 Deep Resolution 3.0 Data for Winter Crop Identification and Organic Barley Yield Prediction. Remote Sens. 2025, 17, 957. https://doi.org/10.3390/rs17060957
