1. Introduction

Perennial cash crops such as tea, citrus, and grape play a vital role in China’s agricultural economy [1], contributing to farmers’ income, rural revitalization, and the stability of market supply chains. However, the large-scale expansion of these perennial crops, driven by economic benefits and environmental suitability, has increasingly competed with grain production for arable land, posing new challenges for optimizing land-use structures and achieving fine-scale territorial management. Against this background, balancing food security with economic development requires accurate and dynamic mapping of perennial crops [2], which has become a critical issue in agricultural remote sensing and land management [3]. Traditional crop classification methods often rely on single-date Sentinel-2 imagery, which fails to capture temporal dynamics and therefore limits both accuracy and generalization [4]. To overcome these limitations, time-series remote sensing has emerged as a key tool for crop mapping, as it effectively captures phenological variations throughout the growing season.

Among existing datasets, Sentinel-2 optical imagery provides 10 m spatial resolution and multi-spectral coverage, including red-edge and near-infrared bands that are sensitive to crop canopy variations. Multi-temporal optical data have been widely used for crop mapping and have shown significant performance improvements compared with single-date imagery. For example, Chong et al. [5] used monthly composites of Sentinel-1 and Sentinel-2 imagery on Google Earth Engine to map crops in Heilongjiang, demonstrating that time-series data provide higher classification accuracy than single-period observations. Yi et al. [6] generated crop maps from multi-temporal Sentinel-2 imagery in the Shiyang River Basin but noted that manual selection of phenological stages introduces subjectivity and fails to capture continuous crop development. Wei et al. [7] showed that Sentinel-2 time-series observations can identify early-season growth dynamics of rice, maize, and soybean in the Sanjiang Plain, enabling earlier and more accurate mapping. Similarly, Valero et al. [8] confirmed the advantages of dense Sentinel-2 time series for seasonal crop mapping, and Feng et al. [9] highlighted the potential of domain adaptation to enhance robustness across heterogeneous regions.

Nevertheless, the usability of optical time series is often constrained by cloud cover and temporal gaps [10], reducing their reliability, especially in monsoon and mountainous regions. To address this limitation, radar remote sensing has been increasingly integrated with optical imagery. Sentinel-1 Synthetic Aperture Radar (SAR), with its VV/VH dual-polarization capability, provides all-weather, day-and-night imaging that is sensitive to crop structure and moisture conditions. Numerous studies have demonstrated the benefits of SAR–optical fusion for crop classification. For instance, Chabalala et al. [11] mapped smallholder fruit plantations using Sentinel-1 and Sentinel-2 data, showing that radar data complement optical observations under cloudy conditions. Sun et al. [12] combined time-series Sentinel-1 and Sentinel-2 data to improve crop type discrimination, emphasizing the complementary nature of radar–optical integration. Likewise, Felegari et al. [13] employed a random forest classifier to fuse Sentinel-1/2 data for winter wheat mapping, while Razzano et al. [14] used supervised machine learning on Google Earth Engine for land cover classification, both confirming the value of multi-source integration. Niculescu et al. [15] demonstrated that random forest classification on fused Sentinel-1/2 time series improved vegetation monitoring in France, and Eisfelder et al. [16] showed that SAR–optical integration enables large-scale crop type classification in Ethiopia.

Despite these advances, traditional machine-learning fusion strategies often rely on manual feature selection, are computationally expensive, and adapt poorly to heterogeneous or large-scale datasets. Deep learning provides a promising alternative by enabling automatic feature extraction and hierarchical representation learning from high-dimensional, multi-source remote sensing data [17,18]. Recent studies have validated its potential in crop classification and mapping. Ge et al. [19] developed XM-UNet to fuse Sentinel-1 and Sentinel-2 for rice mapping, effectively addressing optical data gaps. Xu et al. [20] proposed a DCM framework incorporating attention-based LSTM networks for high-resolution soybean mapping, while Wang et al. [21] designed a dual-branch model for winter wheat classification and yield prediction. Zhang et al. [22] applied 1D CNNs to classify summer crops in Yolo County, showing that temporal features can enhance classification accuracy. Rustowicz et al. [23] combined U-Net and ConvLSTM to map rice, corn, and sorghum at high resolution, and Ndikumana et al. [24] applied RNN-based classifiers for rice crop mapping using multi-temporal Sentinel-1 data. Jiang et al. [25] further demonstrated the potential of deep learning for multi-crop classification using high-resolution temporal sequences.

Although these studies confirm that deep models can exploit cross-sensor temporal features and improve robustness, most focus on annual crops such as rice [24], wheat [21], or soybean [20], leaving perennial cash crops relatively underexplored. Unlike annual crops, which follow compact and relatively consistent seasonal cycles, perennial species exhibit long, overlapping, and highly variable growth stages that extend across multiple seasons. Their phenological timing is strongly influenced by topography, microclimate, and long-term hydrothermal accumulation, resulting in substantial spatial heterogeneity and cross-regional phase shifts. Such variability increases spectral confusion and makes perennial crop mapping inherently more challenging than annual crop classification. Tea, citrus, and grape, three representative perennial species in southern China, typify these difficulties. Although a few works have explored multi-crop recognition [23,25], phenology is often simplified or treated as static, limiting cross-regional generalization. Recent efforts have attempted to incorporate phenological information, but most still depend on fixed calendars or predefined time windows. For instance, Nie et al. [26] mapped rice cultivation by selecting temporal windows tied to specific phenological events, improving local accuracy but neglecting inter-annual variability. Zhang et al. [27] retrieved phenological dates from LAI inflection points to build cropping calendars across Asia, assuming uniform temporal patterns. Qin et al. [28] employed deep learning to recognize rice phenological stages but constrained the framework to static, calendar-based labels. These studies underline the importance of phenology but reveal that fixed calendars cannot capture dynamic variations driven by topography, climate, and management, thus limiting transferability across regions [29,30].

To overcome these limitations, this study proposes a Region-Adaptive Multi-Head Phenology-Aware Network (RAM-PAMNet) for semantic segmentation of perennial cash crops. The framework integrates three main components: (1) a Multi-source Temporal Attention Fusion (MTAF) module that dynamically fuses Sentinel-1 SAR and Sentinel-2 optical time series to improve temporal consistency and cloud robustness; (2) a Region-Aware Module (RAM) that encodes topographic and climatic factors to estimate phenological phase shifts and adaptively recalibrate phenological windows across regions; and (3) a Multi-Head Phenology-Aware Module (MHA-PAM) that models phenological dynamics across multiple scales—short-term, mid-term, long-term, and region-modulated—to enhance adaptability and generalization. Unlike most existing research focusing on annual crops such as rice or wheat, this study explicitly targets three perennial cash crops—tea, citrus, and grape—which dominate the study regions and exhibit distinct phenological rhythms. Using Changde (Hunan) as the core region and Yaan (Sichuan) as the cross-regional validation area, the proposed RAM-PAMNet is evaluated through comparative and ablation experiments to verify its robustness, transferability, and interpretability, providing technical support for fine-scale perennial crop recognition and sustainable agricultural management.

2. Study Area and Data

2.1. Study Area

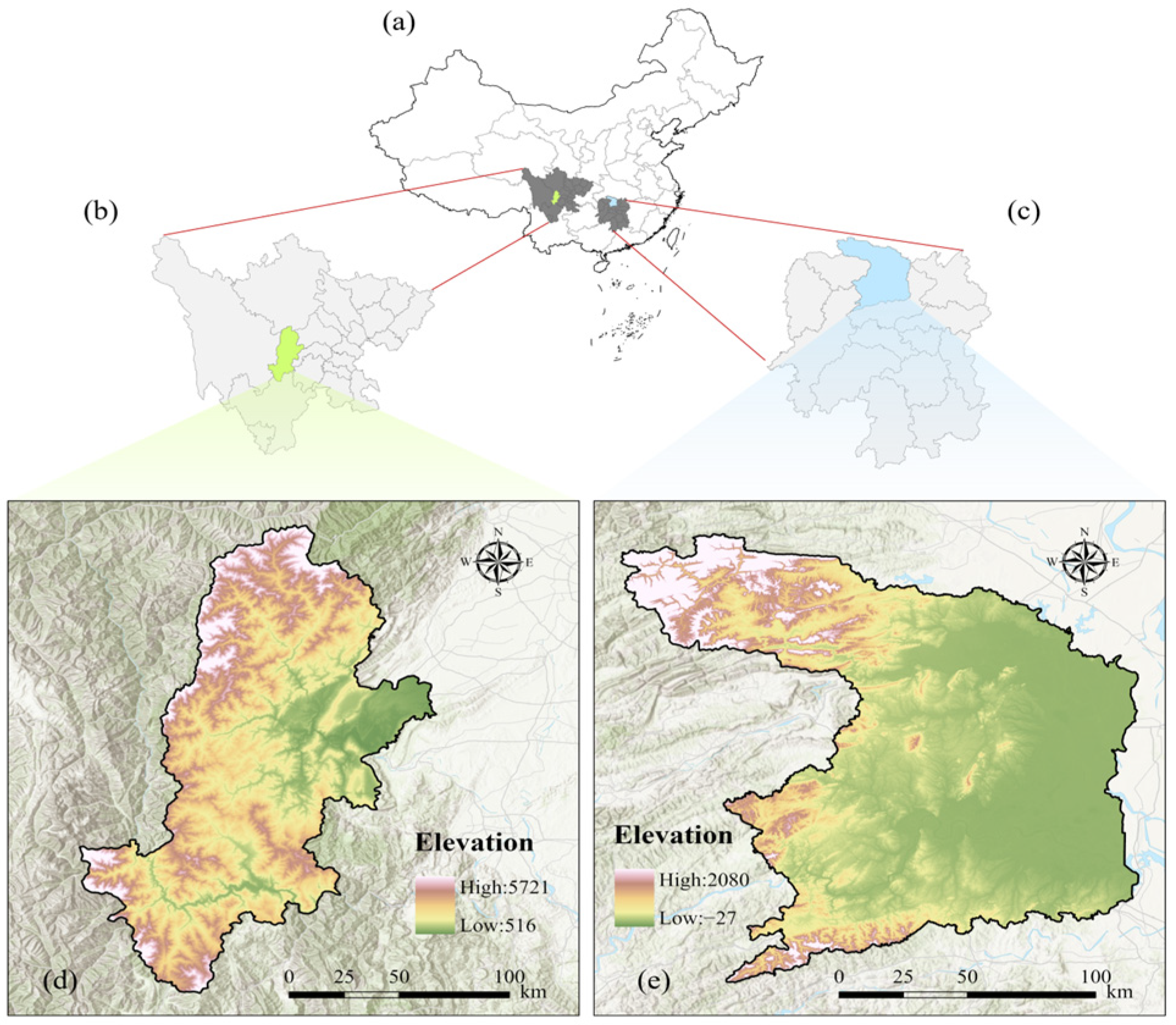

Both Changde City in Hunan Province and Yaan City in Sichuan Province are major production regions for tea, citrus, and grape, the three perennial cash crops examined in this study. The Changde region covers approximately 18,189.8 km², spanning 110.29°E–112.18°E and 28.24°N–30.07°N, while the Yaan region covers approximately 15,046 km², spanning 101.55°E–103.20°E and 29.24°N–30.56°N. These two regions represent strongly contrasting agroecological environments: Changde features a plain–hill mosaic with moderate elevations ranging from 27 to 2080 m, whereas Yaan exhibits steep mountainous terrain ranging from 516 to 5721 m. The pronounced differences in hydrothermal conditions (annual precipitation of 1200–1500 mm in Changde versus more than 1800 mm in Yaan, and annual mean temperatures of 16.5–17.5 °C versus 14.1–16.2 °C) drive substantial phenological shifts, providing an ideal setting for evaluating the cross-regional adaptability of the proposed RAM-PAMNet framework.

In this study, Changde serves as the core training and validation area, whereas Yaan is used as an independent cross-regional testing area to assess model transferability under contrasting environmental conditions. The geographical locations and topographic characteristics of the two study areas are shown in Figure 1.

Changde is located on the western bank of Dongting Lake and features a landscape of interlaced plains and low hills. Fertile soils and diverse terrain support extensive perennial crop cultivation. Tea is mainly grown in the hilly red-soil zones, with harvesting occurring from March to June and minor north–south timing shifts (3–5 days) driven by local microclimatic variations. Citrus is concentrated in low hills and river terraces, with fruit expansion from June to August and ripening from October to December. Grapes are typically planted in valley plains and gentle slopes, exhibiting budburst in March–April and ripening during July–September, with distinct spectral responses across phenological stages.

Yaan lies on the western margin of the Sichuan Basin and is strongly influenced by the Qinghai–Tibet Plateau. Its high mountains, deep valleys, and humid subtropical climate contribute to a delayed phenological schedule relative to Changde. Tea is predominantly cultivated above 1000 m, with leaf expansion and harvesting shifted to May–July. Citrus is mainly distributed in low- and mid-elevation valleys, where ripening often extends beyond November. Grapes are planted in valley bottoms, with budburst and flowering postponed to late spring and ripening during August–October, indicating an approximate one-month phenological delay.

2.2. Data

To ensure temporal completeness for perennial crop phenology analysis, Sentinel-1 SAR and Sentinel-2 optical imagery covering a full phenological cycle (January–December 2022) were obtained from the Google Earth Engine (GEE) platform. Sentinel-1 provides VV/VH dual-polarization backscatter measurements, while Sentinel-2 Multispectral Instrument (MSI) imagery captures vegetation reflectance patterns across visible, NIR, and SWIR bands.

Static geographical factors, including elevation, slope, and aspect, were extracted from the Shuttle Radar Topography Mission (SRTM) digital elevation model and resampled to 10 m. Dynamic climatic variables included monthly mean temperature, precipitation, and cumulative sunshine duration. Temperature and precipitation were obtained from the China Meteorological Forcing Dataset (CMFD). Sunshine duration was derived from CMFD downward shortwave radiation and ERA5-Land surface solar radiation (SSRD) using the standard 120 W/m² threshold.

The experimental dataset consists of two regions: Changde (Hunan), used for model training and validation, and Yaan (Sichuan), used as an independent cross-regional testing site. The characteristics and sources of all remote sensing, topographic, and climatic datasets used in this study are summarized in Table 1. For both regions, the dataset comprises Sentinel-1/2 time series, DEM-derived geographical layers, climate variables, and crop labels for tea, citrus, and grape.

2.3. Data Preparation

A unified preprocessing workflow was applied to ensure spatial and temporal consistency across Sentinel-1, Sentinel-2, climate variables, and crop labels. Sentinel-2 Level-2A bottom-of-atmosphere (BOA) reflectance images were cloud-masked using the QA60 band and aggregated into monthly median composites. Sentinel-1 GRD images underwent radiometric calibration, radiometric terrain correction (RTC), and speckle filtering, and were subsequently co-registered to the Sentinel-2 grid. All datasets were resampled to a common spatial resolution of 10 m.
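The cloud-masked monthly median compositing step can be illustrated with a minimal NumPy sketch. This is not the Google Earth Engine workflow used in the study: the function name `monthly_median_composite`, the array layout, and the choice to leave months without any clear observation as NaN are all assumptions made for illustration.

```python
import numpy as np

def monthly_median_composite(stack, months, cloud_mask):
    """Per-pixel median of clear observations for each calendar month.

    stack:      (N, H, W) reflectance of one band for N acquisitions
    months:     (N,) acquisition month of each scene, 1..12
    cloud_mask: (N, H, W) bool, True where the pixel is flagged cloudy
    Returns a (12, H, W) array; pixels with no clear view stay NaN.
    """
    stack = np.asarray(stack, dtype=float)
    months = np.asarray(months)
    clear = np.where(cloud_mask, np.nan, stack)   # hide cloudy pixels
    out = np.full((12,) + stack.shape[1:], np.nan)
    for m in range(1, 13):
        sel = clear[months == m]
        if sel.shape[0] == 0:
            continue                               # no scene this month
        valid = ~np.isnan(sel).all(axis=0)         # at least one clear view
        if valid.any():
            out[m - 1][valid] = np.nanmedian(sel[:, valid], axis=0)
    return out
```

Months that remain NaN in the optical composite are exactly the gaps that the SAR branch is expected to compensate for during fusion.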

Crop label masks were derived from agricultural census data and manually validated using high-resolution imagery and field surveys. The vector labels were rasterized to 10 m and aligned with the composite grid. Each study area was then partitioned into non-overlapping 512 × 512 pixel patches (5.12 km × 5.12 km, approximately 26.2 km² each). This produced 694 patches in Changde and 574 in Yaan.
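The tiling arithmetic can be checked with a short sketch. The helper `tile` is hypothetical, and dropping incomplete edge tiles is an assumption, since the text does not say how region borders were handled.

```python
import numpy as np

PATCH = 512     # patch side in pixels (from the text)
PIXEL_M = 10    # pixel size in metres (from the text)

def tile(raster, patch=PATCH):
    """Split a (C, H, W) raster into non-overlapping (C, patch, patch) tiles.

    Incomplete tiles along the bottom and right edges are dropped
    (an assumption made for this sketch).
    """
    c, h, w = raster.shape
    rows, cols = h // patch, w // patch
    cropped = raster[:, :rows * patch, :cols * patch]
    tiles = cropped.reshape(c, rows, patch, cols, patch)
    tiles = tiles.transpose(1, 3, 0, 2, 4)        # (rows, cols, C, p, p)
    return tiles.reshape(rows * cols, c, patch, patch)

side_km = PATCH * PIXEL_M / 1000                  # 5.12 km per side
area_km2 = side_km ** 2                           # about 26.2 km^2 per patch
```

Dividing the stated region areas by this patch area gives roughly 694 patches for Changde (18,189.8 km²) and 574 for Yaan (15,046 km²), consistent with the reported counts.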

To increase spatial diversity and reduce overfitting, three offline augmentation strategies—90° rotation, horizontal flipping, and Gaussian noise injection—were applied to all training patches. Each augmentation was applied once, expanding the Changde training set from 694 to 2776 patches. The Yaan test set remained unchanged to ensure an unbiased evaluation of cross-regional generalization.
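A minimal sketch of the three offline augmentations follows. The function name `augment`, the array layout, and the noise standard deviation `noise_std` are illustrative assumptions; the text does not report the noise level.

```python
import numpy as np

def augment(patch, label, rng, noise_std=0.01):
    """Return the original sample plus three augmented copies.

    patch: (T, C, H, W) time-series features; label: (H, W) class map.
    noise_std is a hypothetical value, not taken from the paper.
    """
    # 90-degree rotation applied identically to features and labels
    rotated = (np.rot90(patch, k=1, axes=(-2, -1)), np.rot90(label, k=1))
    # horizontal flip along the width axis
    flipped = (patch[..., ::-1], label[:, ::-1])
    # Gaussian noise perturbs the features only; labels are unchanged
    noisy = (patch + rng.normal(0.0, noise_std, size=patch.shape), label)
    return [(patch, label), rotated, flipped, noisy]
```

Applying each augmentation once yields four samples per patch, which matches the stated growth from 694 to 2776 patches (694 × 4).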

Table 2 presents the pixel-level distribution and estimated patch counts of the four land-cover categories (background, tea, citrus, and grape) in Changde and Yaan. These statistics reflect the inherent class imbalance in perennial crop mapping and guide the design of augmentation and loss functions.

3. Methodology

The RAM-PAMNet model architecture comprises four core modules (Figure 2): MTAF, RAM, MHA-PAM, and the Decoder. Through the cascading and feedback mechanisms among these modules, the framework adaptively accounts for geographical differences in phenological processes, thereby enhancing the robustness and generalization of cross-regional multi-crop recognition [31].

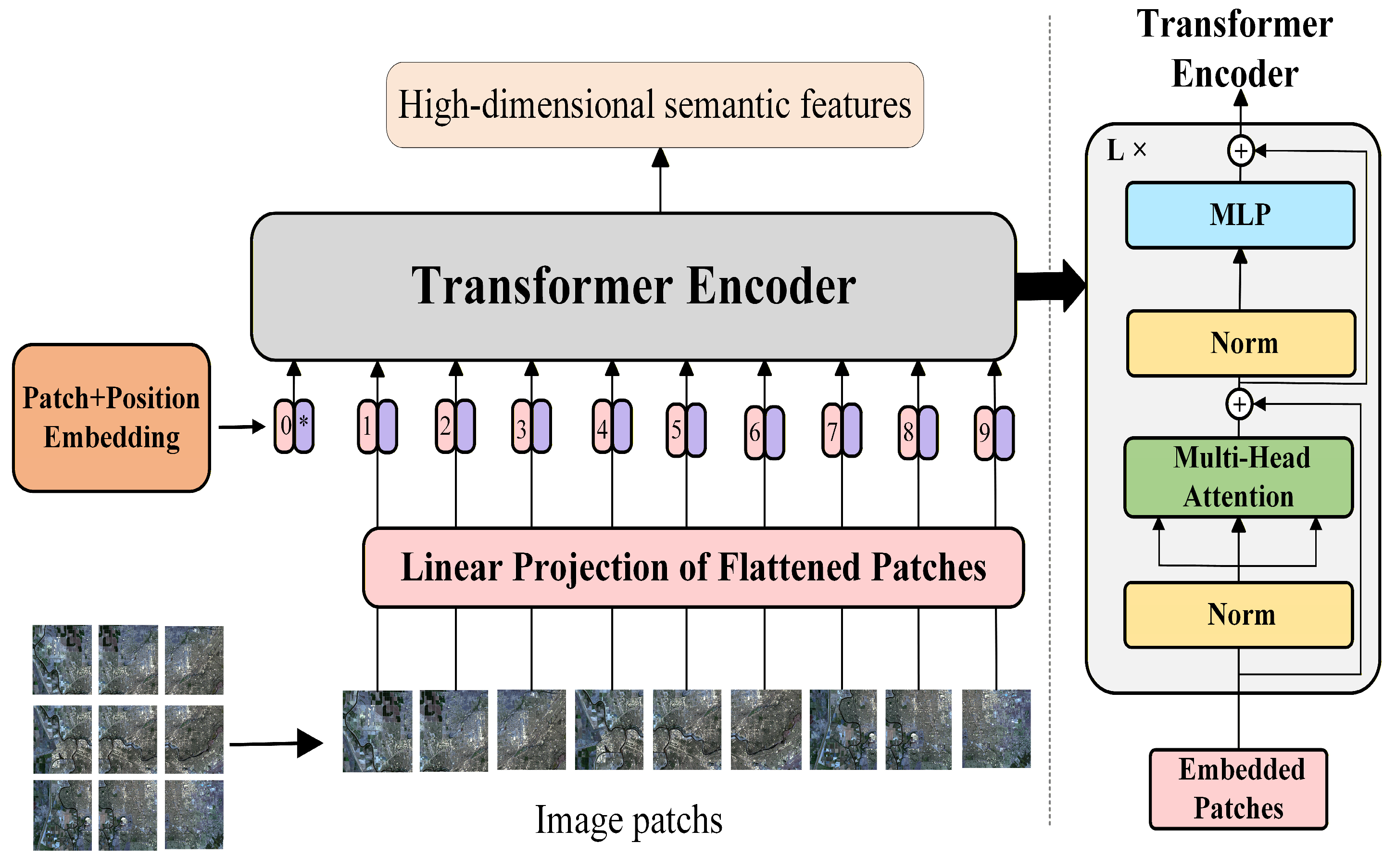

Specifically, the preprocessed multi-source time-series remote sensing data (Sentinel-1/2) are first fed into the ViT backbone to extract global spatial dependencies through patch projection and multi-layer Transformer encoding [32,33]. MTAF then dynamically allocates feature weights between optical and SAR modalities at each time step, improving temporal consistency and cloud robustness [34,35]. RAM fuses topographic and climatic factors into regional embedding vectors to estimate crop-specific phenological shifts across regions, thereby generating region-specific phenological weights. Conditioned on RAM outputs, MHA-PAM employs four attention heads (short-term, mid-term, long-term, and region-modulated) to model temporal variations in phenological features of tea, citrus, and grape across key growth stages, achieving multi-scale perception of phenological rhythms and regional heterogeneity [36]. Finally, the decoder, following a U-Net-style design, integrates multi-level semantic features from the ViT backbone through skip connections and progressively upsamples them to recover spatial resolution, yielding fine-grained segmentation maps of the three target crops [37].

3.1. Backbone

The Vision Transformer (ViT) is adopted as the backbone for spatial feature extraction. With its self-attention mechanism, ViT effectively models long-range spatial dependencies, enabling the capture of large-scale patterns related to crop phenology and inter-regional heterogeneity [38,39]. In this study, the backbone integrates multi-source inputs, including six Sentinel-2 spectral bands and Sentinel-1 VV/VH polarization features, across a full-year time series to represent the phenological development of tea, citrus, and grape.

As shown in Appendix A (Figure A1), images are first normalized and partitioned into non-overlapping 16 × 16 patches, which are linearly projected into 768-dimensional embeddings and combined with positional encodings. These patch embeddings are processed by a ViT-Base/16 encoder consisting of 12 Transformer layers, each with a 12-head self-attention block followed by a feed-forward network. This backbone provides globally contextualized spatial features that support the downstream RAM and MHA-PAM modules, which further incorporate regional and phenological constraints for cross-regional crop mapping [31,40].
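The patch projection step can be sketched in NumPy as follows. The sketch assumes that each monthly composite is embedded separately with 8 input channels (six Sentinel-2 bands plus VV and VH); how the time dimension enters the backbone is not specified here, and the random `weight` and zero `pos` arrays merely stand in for learned parameters.

```python
import numpy as np

def patch_embed(image, weight, pos=None, patch=16):
    """Linear patch projection as used at the input of a ViT.

    image:  (C, H, W) single-date input, H and W divisible by `patch`
    weight: (C * patch * patch, D) projection matrix (stand-in for a
            learned parameter)
    pos:    optional (N, D) positional encoding added to the tokens
    Returns an (N, D) token matrix with N = (H // patch) * (W // patch).
    """
    c, h, w = image.shape
    gh, gw = h // patch, w // patch
    x = image.reshape(c, gh, patch, gw, patch).transpose(1, 3, 0, 2, 4)
    x = x.reshape(gh * gw, c * patch * patch)     # flatten each patch
    tokens = x @ weight
    if pos is not None:
        tokens = tokens + pos
    return tokens

# A 512 x 512 input with 16 x 16 patches gives 32 x 32 = 1024 tokens.
n_tokens = (512 // 16) ** 2
```

With 768-dimensional embeddings, a single 512 × 512 patch therefore enters the encoder as a 1024 × 768 token matrix under these assumptions.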

3.2. Multi-source Temporal Attention Fusion (MTAF) Module

The Multi-source Temporal Attention Fusion (MTAF) module is designed to address the limitations of conventional fusion methods for optical and SAR satellite image time series (SITS). The conceptual workflow of MTAF is illustrated in Appendix A (Figure A2), where the detailed fusion architecture is provided.

In this module, the monthly optical and SAR time series are first projected into a shared feature space through a lightweight two-layer temporal encoder. Each branch produces a sequence of feature vectors with identical dimensionality, allowing cross-modal attention to be applied symmetrically. MTAF then adopts a four-head temporal cross-attention mechanism, where each attention head captures dependencies at different temporal scales—from short-term fluctuations to seasonal growth patterns.

Specifically, the radar time series attends to the optical sequence to enrich radar features with spectral and textural details derived from optical observations [34]. In turn, the optical time series attends to the radar sequence to incorporate the continuous temporal information captured by SAR. This reciprocal attention enhances temporal consistency and ensures robust performance under cloudy or data-sparse conditions [41,42].
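The reciprocal attention described above can be sketched with plain scaled dot-product attention. This is a simplified illustration rather than the MTAF implementation: learned query/key/value projections are omitted, and combining the two enriched sequences by residual addition and concatenation is an assumption.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(query, context, n_heads=4):
    """Multi-head temporal cross-attention without learned projections.

    query, context: (T, D) monthly feature sequences, D divisible by
    n_heads. Returns the attended sequence (T, D) and the attention
    maps (n_heads, T, T).
    """
    t, d = query.shape
    dh = d // n_heads
    q = query.reshape(t, n_heads, dh).transpose(1, 0, 2)      # (h, T, dh)
    k = context.reshape(-1, n_heads, dh).transpose(1, 0, 2)   # (h, T, dh)
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(dh))    # (h, T, T)
    out = attn @ k                       # context also serves as values
    return out.transpose(1, 0, 2).reshape(t, d), attn

def mtaf_fuse(optical, sar):
    """Bidirectional fusion: each modality attends to the other."""
    sar_enriched, _ = cross_attention(sar, optical)   # SAR queries optical
    opt_enriched, _ = cross_attention(optical, sar)   # optical queries SAR
    # residual connection plus concatenation (assumed combination rule)
    return np.concatenate([optical + opt_enriched, sar + sar_enriched], axis=-1)
```

With T = 12 monthly steps, each head produces a 12 × 12 attention map, which is what allows a cloudy optical month to borrow information from SAR observations at neighbouring time steps.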

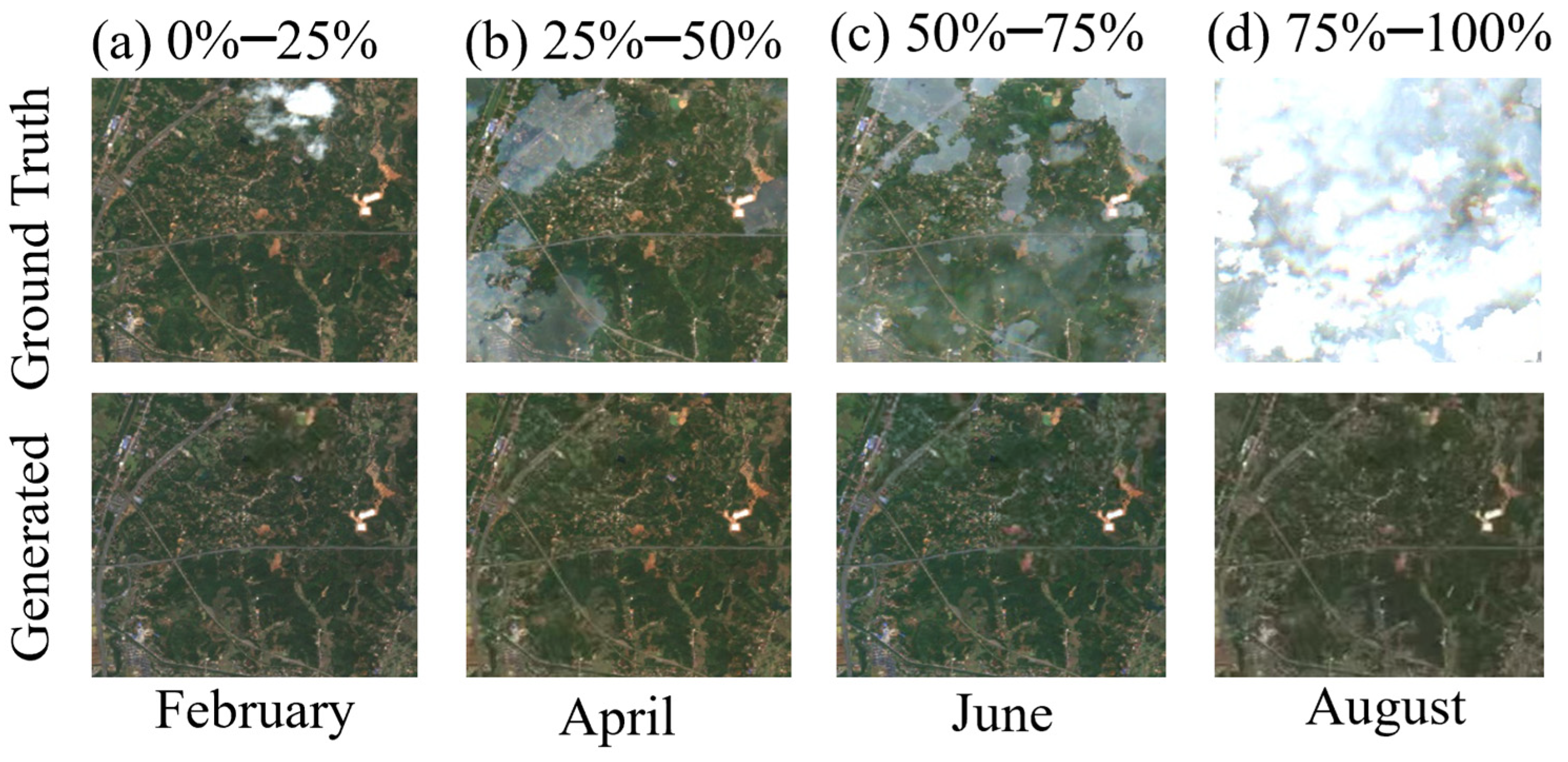

To evaluate the influence of cloud contamination on the MTAF module, we performed a month–cloud analysis using Sentinel-2 observations. Four representative months (February, April, June, and August) and four cloud-cover intervals (0–25%, 25–50%, 50–75%, 75–100%) were examined to assess how temporal fusion mitigates cloud-induced signal degradation. A visual comparison between raw observations and MTAF-generated outputs is provided in Appendix B (Figure A6), showing that MTAF effectively restores spatial–spectral consistency under varying cloud conditions.

3.3. Region-Aware Module (RAM)

The Region-Aware Module (RAM) explicitly incorporates crop-specific phenological knowledge and region-dependent environmental variability to produce adaptive temporal modulations for perennial crop mapping. The overall architectural design of RAM is provided in Appendix A (Figure A3), while the crop phenological calendars used as biological priors are summarized in Appendix A (Figure A4). For tea, citrus, and grape, baseline phenological calendars were constructed from agricultural records, field surveys, and published agronomic observations, and consolidated into a unified set of key growth stages (including budburst, flowering, fruit expansion, veraison, and harvest), which guide the temporal alignment process [43].

Geographical feature encoding: Static and dynamic environmental factors are transformed into a compact regional embedding (RE) summarizing the environmental context of each pixel. Static geographical features—including elevation, slope, and aspect—are encoded using a modified ResNet-18, generating a 64-dimensional terrain representation. Dynamic climatic variables (monthly mean temperature, precipitation, and sunshine duration; T = 12) are processed through three parallel 1D convolution branches (kernel sizes 3, 5, and 7), enabling the extraction of short-, mid-, and long-term hydrothermal trends. A gating mechanism with global pooling fuses these components into a unified RE capturing both long-term terrain constraints and seasonal climatic fluctuations. To ensure robustness to climatic variability, the three climate branches are designed to respond differently to perturbations in temperature and precipitation. Temperature-related inputs influence the regional embedding more strongly—consistent with their dominant role in controlling perennial phenology—while moderate fluctuations in precipitation produce only minor changes in the embedding representation. This design consideration allows RAM to remain stable under typical noise levels in climate products while still capturing meaningful environmental gradients.
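The multi-kernel climate encoding and gated fusion can be illustrated with a small NumPy sketch. Uniform averaging kernels stand in for the learned 1D filters, the 64-dimensional terrain vector is taken as given rather than computed by a ResNet-18, and the sigmoid gate parameterised by `gate_w` is one assumed form of the gating mechanism.

```python
import numpy as np

def climate_branches(climate, kernel_sizes=(3, 5, 7)):
    """Three parallel 1D convolution branches over monthly climate series.

    climate: (V, T) array, here V = 3 variables and T = 12 months.
    Uniform kernels are placeholders for learned filters.
    Returns (len(kernel_sizes) * V, T) feature maps ('same' zero padding).
    """
    feats = []
    for k in kernel_sizes:
        kernel = np.full(k, 1.0 / k)
        for series in climate:
            feats.append(np.convolve(series, kernel, mode="same"))
    return np.stack(feats)

def regional_embedding(terrain_vec, climate, gate_w):
    """Fuse terrain and climate descriptors with a sigmoid gate.

    terrain_vec: (64,) terrain representation (assumed precomputed)
    gate_w:      (D, D) hypothetical gate weights, D = 64 + 9
    """
    clim = climate_branches(climate).mean(axis=1)   # global average pooling
    joint = np.concatenate([terrain_vec, clim])
    gate = 1.0 / (1.0 + np.exp(-(gate_w @ joint)))  # values in (0, 1)
    return gate * joint
```

The wider kernels average over more months, so the size-7 branch responds mainly to seasonal trends while the size-3 branch retains month-to-month changes.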

Phenological phase-shift estimation: The unified RE is fed into a two-layer MLP that estimates temporal offsets for each crop and each phenological stage. This adjusts the baseline calendars according to local conditions, such as elevation-driven temperature accumulation or delayed development in humid mountainous environments [44]. The adjustment preserves the biological ordering of stages while aligning their temporal positions to regional phenological rhythms. The construction process ensures that the resulting aligned windows maintain agronomic interpretability while adapting flexibly to environmental gradients.
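One way to apply stage-wise offsets while keeping the stage order intact is sketched below. The clipping bound `max_shift` and the use of a running maximum to enforce ordering are assumptions for illustration; the text states only that ordering is preserved, not how. The example values approximate the grape budburst and ripening periods described in Section 2.1.

```python
import numpy as np

def shift_calendar(baseline_months, offsets, max_shift=2.0):
    """Shift stage centres by predicted offsets and keep them ordered.

    baseline_months: (S,) non-decreasing stage centres, in months
    offsets:         (S,) predicted shifts in months (e.g., MLP outputs)
    max_shift:       hypothetical bound on the absolute shift
    """
    bounded = np.clip(np.asarray(offsets, dtype=float), -max_shift, max_shift)
    shifted = np.asarray(baseline_months, dtype=float) + bounded
    # a running maximum prevents a later stage from preceding an earlier one
    return np.maximum.accumulate(shifted)

# Grape in Changde: budburst around March-April, ripening around August.
changde = np.array([3.5, 8.0])
# A uniform one-month delay mimics the shift reported for Yaan.
yaan = shift_calendar(changde, [1.0, 1.0])
```

Any monotone projection would serve the same purpose; the running maximum is simply the shortest one to state.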

Region–time attention fusion: The refined phenological windows are converted into region-specific temporal weight matrices highlighting biologically important periods and suppressing less informative ones. Gaussian smoothing promotes temporal continuity and reduces susceptibility to noise from clouds or atmospheric anomalies. These smooth temporal priors are passed to the MHA-PAM module to modulate attention allocation under local environmental conditions [45].
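The conversion of phenological windows into smooth temporal weights can be sketched as follows. The function `window_to_weights`, the kernel width `sigma`, and the normalisation to unit sum are illustrative assumptions; the example windows use the tea harvesting periods given in Section 2.1.

```python
import numpy as np

def window_to_weights(windows, sigma=1.0, n_months=12):
    """Turn phenological windows into a smooth monthly weight vector.

    windows: list of (start_month, end_month), 1-based and inclusive
    sigma:   width of the Gaussian smoothing kernel in months (assumed)
    Returns an (n_months,) vector that sums to 1.
    """
    mask = np.zeros(n_months)
    for start, end in windows:
        mask[start - 1:end] = 1.0                 # binary window indicator
    radius = max(1, int(round(3 * sigma)))
    offsets = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (offsets / sigma) ** 2)
    kernel /= kernel.sum()
    smooth = np.convolve(mask, kernel, mode="same")
    return smooth / smooth.sum()

tea_changde = window_to_weights([(3, 6)])   # harvest March to June
tea_yaan = window_to_weights([(5, 7)])      # harvest shifted to May to July
```

The smoothed weights decay gradually at the window edges, so a single cloudy month at the boundary changes the prior only slightly.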

Because RAM integrates static terrain features and low-frequency climatic trends, it can be applied in regions where high-resolution climate data are incomplete, provided that the overall environmental gradients are comparable.

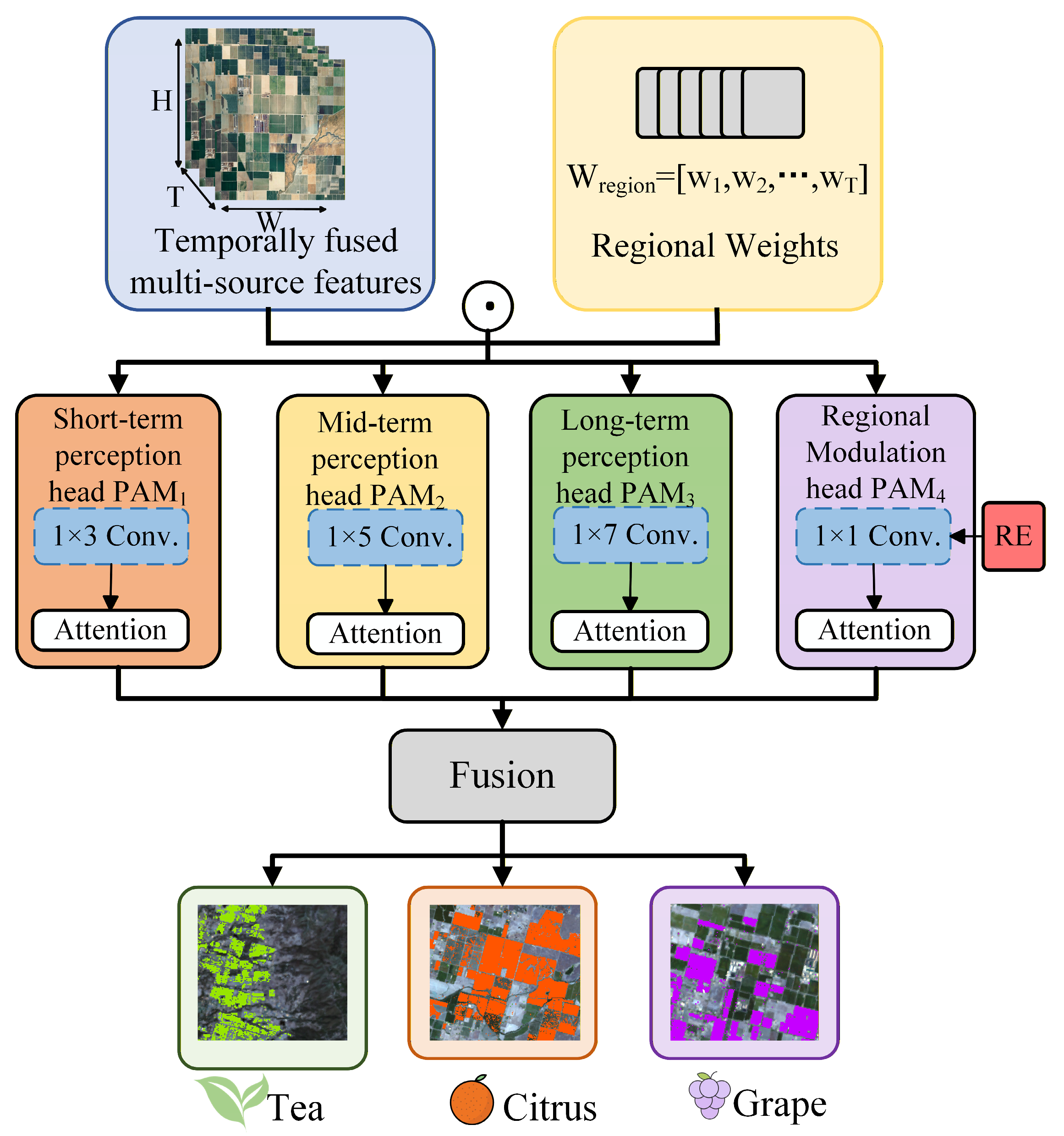

3.4. Multi-Head Phenology-Aware Module (MHA-PAM)

The Multi-Head Phenology-Aware Module (MHA-PAM) is designed to model phenological dynamics across multiple temporal scales and adapt them to region-specific environmental conditions. As illustrated conceptually in Figure A5 of Appendix A (detailed equations provided in Appendix C), the module consists of four complementary attention heads (short-term, mid-term, long-term, and region-modulated), each capturing a distinct aspect of perennial crop phenology. MHA-PAM receives two inputs: (1) multi-source time-series features from the MTAF module and (2) region-specific temporal weights produced by RAM. By combining these two components, the module emphasizes phenologically meaningful transitions while suppressing noise or irregular fluctuations.

Unlike multiscale temporal architectures such as Temporal Convolutional Networks (TCN) and InceptionTime, which apply fixed multi-scale kernels uniformly across the time series, MHA-PAM explicitly integrates phenological priors via RAM. This ensures that the temporal receptive fields focus on biologically meaningful stages rather than generic temporal intervals. As a result, MHA-PAM maintains sensitivity to key phenological transitions even under incomplete optical observations, offering superior interpretability and cross-regional robustness.

3.5. Decoder and Loss Function

The decoder integrates multi-scale spatial features through skip connections within a U-Net architecture, enabling fine-grained segmentation at a spatial resolution of 10 m. This structure ensures that both local details and high-level contextual information are preserved during upsampling, producing precise crop boundary delineation.

To achieve a balance between sample distribution, boundary accuracy, and cross-regional generalization, a composite loss function is employed for model optimization, as defined in Equation (1):

$$L = \lambda_1 L_{\mathrm{CE}} + \lambda_2 L_{\mathrm{Dice}} + \lambda_3 L_{\mathrm{Reg}} \qquad (1)$$

where the empirically determined weights ($\lambda_1 = 0.4$, $\lambda_2 = 0.4$, $\lambda_3 = 0.2$) control the relative contributions of each term.

The cross-entropy loss ($L_{\mathrm{CE}}$) enforces accurate pixel-wise classification. The Dice loss ($L_{\mathrm{Dice}}$) is particularly effective in handling the moderate class imbalance observed in perennial crop distributions (Table 2): by optimizing the overlap between predictions and ground truth, it amplifies the contribution of minority classes such as tea and grape, which occupy substantially smaller spatial extents than background and citrus. The regularization term ($L_{\mathrm{Reg}}$) stabilizes training across heterogeneous regions by constraining feature distributions, thereby improving model transferability from Changde to the more topographically complex Yaan region.
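A minimal NumPy sketch of the composite loss is given below. The pairing of the weights 0.4, 0.4, and 0.2 with the cross-entropy, Dice, and regularization terms follows the order in which the terms are discussed and is an assumption. The regularization term is passed in as a precomputed scalar `reg` because its exact form is not specified in this section.

```python
import numpy as np

def cross_entropy(probs, labels, eps=1e-7):
    """Mean pixel-wise cross-entropy.

    probs: (K, H, W) softmax outputs; labels: (H, W) integer classes.
    """
    h, w = labels.shape
    p_true = probs[labels, np.arange(h)[:, None], np.arange(w)[None, :]]
    return float(-np.log(p_true + eps).mean())

def dice_loss(probs, labels, eps=1e-7):
    """One minus the class-averaged soft Dice coefficient."""
    k = probs.shape[0]
    onehot = (np.arange(k)[:, None, None] == labels[None]).astype(float)
    inter = (probs * onehot).sum(axis=(1, 2))
    denom = probs.sum(axis=(1, 2)) + onehot.sum(axis=(1, 2))
    return float(1.0 - ((2.0 * inter + eps) / (denom + eps)).mean())

def composite_loss(probs, labels, reg, lambdas=(0.4, 0.4, 0.2)):
    """Weighted sum of cross-entropy, Dice, and a regularization scalar."""
    l_ce, l_dice, l_reg = lambdas
    return (l_ce * cross_entropy(probs, labels)
            + l_dice * dice_loss(probs, labels)
            + l_reg * reg)
```

Because the Dice term averages over classes rather than pixels, a small class such as grape contributes as much to that term as the background class does.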

3.6. Evaluation Metrics

To comprehensively assess the performance of the proposed model, four metrics were employed: Overall Accuracy (OA), mean F1-score (F1), mean Intersection over Union (mIoU), and the mIoU Decay Rate (DecayRate). The first three metrics evaluate classification accuracy within a single region, while DecayRate specifically measures cross-regional robustness by quantifying the performance decline between the source and target regions. Their formulations are given as follows:

$$\mathrm{OA} = \frac{\sum_{c=1}^{C} TP_c}{N}$$

$$\mathrm{F1} = \frac{1}{C}\sum_{c=1}^{C}\frac{2\,TP_c}{2\,TP_c + FP_c + FN_c}$$

$$\mathrm{mIoU} = \frac{1}{C}\sum_{c=1}^{C}\frac{TP_c}{TP_c + FP_c + FN_c}$$

where $C$ is the total number of classes; $N$ is the total number of evaluated pixels; $TP_c$, $FP_c$, and $FN_c$ denote the number of true positives, false positives, and false negatives for class $c$, respectively; and F1 and mIoU are averaged across all classes (macro-average) to avoid bias toward dominant categories.

$$\mathrm{DecayRate} = \frac{\mathrm{mIoU}_{src} - \mathrm{mIoU}_{tgt}}{\mathrm{mIoU}_{src}} \times 100\%$$

Here, $\mathrm{mIoU}_{src}$ denotes the mIoU on the source region (training/testing within the same region), while $\mathrm{mIoU}_{tgt}$ represents the mIoU on the target region (zero-shot inference).
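The metrics can be computed from a confusion matrix as in the following sketch. It uses the standard macro-averaged definitions and assumes that every class occurs at least once; the relative form of `decay_rate` (returned as a fraction, to be multiplied by 100 for a percentage) is an assumed reading of the DecayRate definition.

```python
import numpy as np

def confusion(y_true, y_pred, k):
    """K x K confusion matrix with true classes on the rows."""
    idx = np.asarray(y_true).ravel() * k + np.asarray(y_pred).ravel()
    return np.bincount(idx, minlength=k * k).reshape(k, k)

def scores(cm):
    """Return (OA, macro F1, mIoU) from a confusion matrix."""
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp          # predicted as c, actually another class
    fn = cm.sum(axis=1) - tp          # actually c, predicted as another class
    oa = tp.sum() / cm.sum()
    f1 = np.mean(2 * tp / (2 * tp + fp + fn))
    miou = np.mean(tp / (tp + fp + fn))
    return oa, f1, miou

def decay_rate(miou_src, miou_tgt):
    """Relative mIoU drop from the source to the target region."""
    return (miou_src - miou_tgt) / miou_src
```

For example, a source mIoU of 0.80 and a target mIoU of 0.60 give a decay rate of 0.25 under this definition, that is, a 25% relative decline.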

4. Experiments and Analysis

To comprehensively evaluate the performance and generalization capability of the proposed RAM-PAMNet, two groups of experiments were conducted: (1) ablation studies and (2) comparative experiments.

The ablation studies were designed to isolate and quantify the contribution of each core module—MTAF, RAM, and MHA-PAM—by selectively removing or replacing them under identical training conditions. This analysis clarifies the specific role of each component in improving classification accuracy and cross-regional robustness.

The comparative experiments benchmarked the proposed framework against several representative models, including both traditional machine-learning and deep-learning approaches. All models were trained using the same multi-source Sentinel-1/2 inputs and training protocols to ensure fair comparison. Together, these experiments provide a comprehensive assessment of the model’s accuracy, robustness, and transferability across regions with contrasting environmental conditions.

4.1. Experimental Setup

All experiments were conducted using the PyTorch deep-learning framework (version 2.4.1) with the Adam optimizer. RAM-PAMNet was trained as a unified multi-class semantic segmentation model, producing a single output map with four categories: background, tea, citrus, and grape. The decoder generates four probability channels normalized by a softmax activation, and supervision is provided through a single multi-class ground-truth label map.

The composite loss function defined in Equation (1) was adopted, with empirically tuned weights to jointly balance classification accuracy, boundary precision, and cross-regional generalization. The initial learning rate was set to 0.0001 and reduced by a factor of 0.5 whenever the validation F1-score failed to improve for 15 consecutive epochs. Each model was trained for up to 200 epochs using a batch size of 8, and random seeds were fixed to ensure full reproducibility. Training and evaluation were conducted on a workstation equipped with an Intel i7-12700H CPU and an NVIDIA RTX 5060 GPU.
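The learning-rate schedule can be expressed in a few lines of framework-independent Python. The class name `PlateauHalver` is hypothetical. PyTorch provides a comparable built-in scheduler, `ReduceLROnPlateau`, whose patience counting may differ by one epoch from this sketch.

```python
class PlateauHalver:
    """Halve the learning rate after `patience` epochs without a new best F1."""

    def __init__(self, lr=1e-4, factor=0.5, patience=15):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.best = float("-inf")
        self.stale = 0                # epochs since the last improvement

    def step(self, val_f1):
        """Update the state with this epoch's validation F1; return the LR."""
        if val_f1 > self.best:
            self.best = val_f1
            self.stale = 0
        else:
            self.stale += 1
            if self.stale >= self.patience:
                self.lr *= self.factor
                self.stale = 0
        return self.lr
```

With a 200-epoch budget and a patience of 15, the rate can be halved at most 13 times, so it stays above roughly 1e-8 even in the worst case.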

To ensure a fair comparison across all baseline models, the same temporally aligned Sentinel-1/2 monthly composites (T = 12), DEM-derived terrain factors, and climatic variables described in Section 2 were used. DEM and climate features were incorporated according to the structural requirements of each baseline architecture. For Random Forest, elevation, slope, aspect, and the 12-month climatic variables were appended as pixel-wise auxiliary attributes to form a tabular feature vector. For CNN + LSTM and U-Net + ConvLSTM, DEM was concatenated as an additional static spatial channel, while monthly climatic variables were broadcast to full-resolution grids and fused with the temporal image sequence. All models used the same patch partitions (694 for Changde, 574 for Yaan). Data augmentation was applied only to the deep-learning baselines, as Random Forest operates on non-spatial pixel vectors and does not benefit from spatial perturbations. This unified protocol ensures that all comparison methods operate under equivalent data conditions while remaining compatible with their respective input structures.
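The tabular input for the Random Forest baseline can be assembled as in the sketch below. The function name and array layouts are assumptions. With T = 12 months, 8 image channels (six Sentinel-2 bands plus VV and VH), three terrain layers, and three climate variables, each pixel receives 12 × 8 + 3 + 12 × 3 = 135 features.

```python
import numpy as np

def rf_feature_table(s1s2, terrain, climate):
    """Flatten per-pixel inputs into one row per pixel.

    s1s2:    (T, C, H, W) monthly Sentinel-1/2 composites
    terrain: (3, H, W) elevation, slope, aspect
    climate: (T, V, H, W) monthly climate grids
    Returns an (H * W, T * C + 3 + T * V) feature table; row p belongs
    to the pixel at (p // W, p % W).
    """
    t, c, h, w = s1s2.shape
    spectral = s1s2.reshape(t * c, h * w).T
    topo = terrain.reshape(terrain.shape[0], h * w).T
    clim = climate.reshape(-1, h * w).T
    return np.concatenate([spectral, topo, clim], axis=1)
```

The same arrays, kept in their gridded form, correspond to the extra static channel and broadcast climate grids used by the two deep-learning baselines.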

4.2. Experimental Datasets

To evaluate the proposed RAM-PAMNet, a multi-source, multi-region dataset was constructed based on the preprocessed Sentinel-1/2 time-series, geographical, and climatic data described in Section 2.2. The dataset covers two representative regions, Changde, Hunan (core area) and Yaan, Sichuan (cross-regional validation area), and focuses on three perennial cash crops: tea, citrus, and grape.

Each sample is defined as a 512 × 512 pixel patch at 10 m spatial resolution, paired with a temporal stack representing a complete phenological year (January–December 2022, T = 12). Each patch contains multi-source time-series features (SAR and optical), static geographical factors, dynamic climatic variables, and corresponding crop labels. All samples were co-registered and temporally aligned to ensure spatial and temporal consistency across datasets.

For experimental design, the Changde dataset was divided into 70% training, 15% validation, and 15% intra-regional testing subsets, while the Yaan dataset was reserved as an independent cross-regional testing set. This configuration allows systematic evaluation of model performance under both intra- and inter-regional conditions, providing a rigorous benchmark for assessing cross-regional generalization across contrasting topographic and climatic environments.
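The 70/15/15 partition of the Changde patches can be sketched as follows. A seeded random permutation is assumed here; the text does not state whether the split was random or spatially blocked, and the helper `split_indices` is hypothetical.

```python
import numpy as np

def split_indices(n, fractions=(0.70, 0.15, 0.15), seed=0):
    """Partition n patch indices into train, validation, and test subsets.

    The test subset receives whatever remains after rounding the
    first two fractions, so the three parts always sum to n.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(n)
    n_train = int(round(fractions[0] * n))
    n_val = int(round(fractions[1] * n))
    train = order[:n_train]
    val = order[n_train:n_train + n_val]
    test = order[n_train + n_val:]
    return train, val, test

train_idx, val_idx, test_idx = split_indices(694)
```

For the 694 Changde patches this yields 486 training, 104 validation, and 104 intra-regional test patches.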

All auxiliary variables were resampled to 10 m and co-registered with the Sentinel-2 reference grid. Crop labels were derived from agricultural census data and further verified through field surveys to ensure reliability. The distribution of each crop class in the Changde and Yaan datasets is summarized in Table 2, where tea, citrus, and grape represent the target crop categories, and all other land-cover types are grouped as background.

4.3. Comparative Study

To evaluate the effectiveness of the proposed RAM-PAMNet, a set of representative baseline models was selected to cover the three dominant methodological paradigms used in time-series crop mapping.

- (1) Random Forest (RF) [46] represents traditional machine-learning classifiers that remain widely applied to Sentinel-1/2 agricultural mapping due to their robustness and computational efficiency. RF provides a strong non-deep baseline and reflects the performance level achievable without deep feature learning.

- (2) CNN-LSTM [47] represents hybrid deep architectures that separately encode spatial and temporal structures. This family of models has been extensively used in phenology-aware crop classification tasks and thus serves as a standard reference for sequence modeling.

- (3) U-Net + ConvLSTM [48] represents fully spatio-temporal semantic segmentation networks and is regarded as one of the strongest deep-learning baselines for dense prediction in remote sensing time series.

Although Transformer-based architectures have recently gained attention in remote sensing, their effective deployment typically requires large-scale multi-temporal training datasets and high-end GPUs (e.g., A100), and many are designed for generic vision tasks rather than crop-specific phenological modeling. Given the spatial resolution, training sample size, and hardware constraints of this study, the above baselines offer a balanced comparison in terms of performance, computational feasibility, and widespread adoption in current literature. Importantly, RAM-PAMNet already incorporates a Transformer-based backbone via ViT, implicitly providing comparison against Transformer-style feature extractors.

Using these three representative baselines, the comparative study evaluates both in-region performance (Changde) and cross-regional generalization (Yaan), as well as computational efficiency and robustness across heterogeneous agroecological conditions.

4.4. Ablation Study

To assess the effectiveness and necessity of each component in the proposed framework, an ablation study was conducted. This experiment aimed to separate the contributions of multi-scale phenological modeling and region-adaptive modulation, and to verify whether both are essential for robust cross-regional crop classification.

Four model configurations were tested to systematically evaluate the impact of each module: (1) the Baseline model (ViT + MTAF), which performs multi-source temporal fusion without phenological adaptation; (2) Baseline + MHA-PAM, designed to assess the contribution of multi-scale phenology modeling; (3) Baseline + RAM (with single-head PAM), used to examine the effect of region-adaptive modulation; and (4) the full RAM-PAMNet, which combines both mechanisms. All variants were trained using the same data splits, preprocessing procedures, and hyperparameter settings, and evaluated with identical metrics (OA, mean F1-score, mIoU, and DecayRate) to ensure fair comparison. The results directly reveal how each module contributes to overall accuracy, robustness, and generalization across regions.
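For reference, the three accuracy metrics named above can be derived from a pixel-level confusion matrix. The following is a minimal sketch using the standard definitions; it assumes that mean F1 and mIoU are unweighted averages over all classes in the matrix, and whether the background class is included in those averages is not stated in this section. The function name and the toy matrix are hypothetical.

```python
def segmentation_metrics(cm):
    """Compute (OA, mean F1, mIoU) from a square confusion matrix.

    cm[i][j] = number of pixels whose true class is i and predicted class is j.
    Assumption: per-class scores are averaged without weighting.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    oa = sum(cm[k][k] for k in range(n)) / total
    f1_scores, ious = [], []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp   # predicted k, true class differs
        fn = sum(cm[k]) - tp                        # true k, predicted class differs
        denom = tp + fp + fn
        f1_scores.append(2 * tp / (denom + tp) if denom else 0.0)
        ious.append(tp / denom if denom else 0.0)
    return oa, sum(f1_scores) / n, sum(ious) / n


# Toy two-class matrix for illustration only; these are not values from the paper.
toy_cm = [[8, 2],
          [1, 9]]
oa, mean_f1, miou = segmentation_metrics(toy_cm)
```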

4.5. Results and Analysis

4.5.1. Comparison with Baseline Methods

In the core region (Changde, Hunan), the proposed RAM-PAMNet achieved an OA of 83.3%, F1 of 78.8%, and mIoU of 65.4%, surpassing the baseline models—RF, CNN + LSTM, and U-Net + ConvLSTM—by 4.3–5.5%, 3.5–6.8%, and 3.1–4.4%, respectively, across the three metrics. Compared with these baselines, RAM-PAMNet produced notably sharper crop boundaries and demonstrated stronger robustness across different phenological stages, effectively reducing confusion in complex intercropped areas.

In the cross-regional validation (Yaan, Sichuan), the model maintained strong generalization, achieving an OA of 79.6%, an F1-score of 72.1%, and an mIoU of 59.2%, representing absolute declines of 3.7, 6.7, and 6.2 percentage points, respectively, relative to the core region. This degradation is largely attributable to the markedly higher terrain complexity of Yaan, where steep elevation gradients, deep valleys, and topographic shadows create strong anisotropic illumination and heterogeneous canopy signals. These factors increase intra-class spectral variability and make cross-regional transfer more challenging. Even under these conditions, the cross-regional mIoU DecayRate of RAM-PAMNet (9.5) remained substantially lower than those of RF (15.6), CNN + LSTM (15.1), and U-Net + ConvLSTM (13.3), indicating that the proposed framework effectively mitigates region-induced performance degradation and achieves superior cross-regional robustness. Quantitative results are summarized in Table 3.
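The DecayRate values quoted above are consistent with a relative drop from the core-region score to the cross-regional score, expressed in percent. The sketch below assumes that definition (the formal definition is not repeated in this section) and checks it against the reported RAM-PAMNet mIoU values; the function name `decay_rate` is hypothetical.

```python
def decay_rate(core_score, cross_score):
    """Relative performance drop from core to cross-regional test, in percent.

    Assumed definition: (core - cross) / core * 100.
    """
    return (core_score - cross_score) / core_score * 100.0


# Reported RAM-PAMNet mIoU: 65.4% in Changde and 59.2% in Yaan.
ram_pamnet_decay = decay_rate(65.4, 59.2)   # about 9.48, reported as 9.5
```

Under this reading, DecayRate is a relative quantity, whereas the 6.2-point mIoU decline is an absolute difference between the same two scores.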

Spatial classification maps for Changde and Yaan are shown in Figure 3 and Figure 4, respectively. In Changde, RAM-PAMNet generates clearer and more continuous crop boundaries, especially in hilly intercropped areas where tea gardens and citrus orchards coexist. Compared with baseline models, it more accurately delineates crop distributions and suppresses noise in mixed or fragmented agricultural landscapes. In Yaan, despite challenges such as frequent cloud cover and complex mountainous terrain, RAM-PAMNet maintains stable recognition and produces classification maps that closely match the ground truth. In contrast, baseline models show blurred boundaries and frequent misclassifications in heterogeneous regions. These visual results further confirm the quantitative findings, demonstrating that RAM-PAMNet enhances boundary precision and spatial robustness across different geographic environments.

Beyond accuracy, we further compared the computational efficiency of RAM-PAMNet and all baseline models under the unified experimental protocol described in Section 4.1. Random Forest achieved the fastest inference and required negligible GPU memory, but its lack of spatial modeling led to the weakest performance. CNN + LSTM maintained low computational cost due to its lightweight architecture but remained limited in capturing fine-grained spatial patterns. U-Net + ConvLSTM showed significantly higher memory usage and slower inference time because convolutional operations were applied across all temporal steps. In contrast, RAM-PAMNet introduced only moderate computational overhead despite incorporating multi-source temporal fusion and multi-head phenology-aware attention. Its parameter count and peak memory usage remained comparable to those of U-Net + ConvLSTM, while achieving substantially higher accuracy and cross-regional robustness. The computational comparison, including parameter scale, memory usage, and per-patch inference time, is summarized in Table 4.

4.5.2. Ablation Study and Module Contribution Analysis

The ablation results (Table 5, Figure 5) further demonstrate the individual and joint contributions of the RAM and MHA-PAM modules. Incorporating only the MHA-PAM module increased the cross-regional mIoU from 52.0% to 56.0%, underscoring the importance of multi-scale temporal attention in capturing short-, mid-, and long-term phenological rhythms. When only the RAM (with single-head PAM) was added, the cross-regional mIoU improved to 57.5% and the DecayRate decreased to 10.0, confirming that region-adaptive modulation effectively alleviates phenological misalignment caused by environmental and climatic differences between Changde and Yaan.

With the full RAM-PAMNet, the cross-regional mIoU reached 59.5% and the DecayRate further decreased to 8.7. The resulting gain over the baseline exceeds the gain from either module alone (4.0 points for MHA-PAM and 5.5 points for RAM), although it remains below the arithmetic sum of the two individual gains, which indicates that the modules are complementary with partially overlapping benefits. This complementarity arises because the two modules address different components of the temporal modeling task. RAM corrects region-dependent phase shifts by producing region-normalized phenological weights informed by terrain and climatic cues, thereby stabilizing the temporal structure and suppressing noise-induced fluctuations. MHA-PAM then operates on this aligned and denoised temporal foundation, enabling its multi-head attention mechanism to more effectively capture intrinsic phenological dynamics and salient temporal transitions across multiple scales.

Because RAM determines when phenological events occur, while MHA-PAM enhances how these events are represented and distinguished, their functions are complementary rather than redundant. Their combination improves feature separability, reduces temporal ambiguity, and produces clearer crop boundary delineation—ultimately yielding a synergistic performance gain that neither module can achieve independently.
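As a quick arithmetic check, the cross-regional mIoU gains quoted in this subsection can be tabulated directly. The values below are the ones stated in the text (52.0, 56.0, 57.5, and 59.5); the variable names are illustrative only.

```python
# Cross-regional mIoU (%) for the four ablation configurations, as quoted above.
baseline, mha_only, ram_only, full = 52.0, 56.0, 57.5, 59.5

gain_mha = mha_only - baseline        # gain from MHA-PAM alone
gain_ram = ram_only - baseline        # gain from RAM alone
gain_full = full - baseline           # gain from the full model
additive_sum = gain_mha + gain_ram    # sum of the two single-module gains

print(f"MHA-PAM: +{gain_mha:.1f}, RAM: +{gain_ram:.1f}, "
      f"full: +{gain_full:.1f}, sum of single gains: +{additive_sum:.1f}")
```

With these figures the combined gain is larger than either single-module gain and smaller than their arithmetic sum.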

4.5.3. Interpretability: Region–Phenology Attention Analysis

To further understand how the model captures cross-regional phenological dynamics, we analyze the region–crop attention weights generated by the RAM (Figure 6). These attention profiles quantify the temporal importance assigned to each month for each crop–region pair, providing a transparent view of how RAM aligns temporal features with local phenological rhythms.

For tea, Changde exhibits peak attention during March–May, corresponding to budburst and early plucking, whereas Yaan shifts to April–June due to delayed warming in its higher and more humid terrain. Citrus shows high weights in June–August in Changde, associated with fruit expansion and early coloring, while Yaan displays its maximum attention from July to September, reflecting a consistent one-month phenological delay. For grape, the model focuses on July–September in Changde (veraison to ripening) and August–October in Yaan, again capturing the characteristic lag in development at higher elevations.

Beyond qualitative interpretation, the temporal attention maps show strong agreement with independent agroclimatic observations. Local agricultural monitoring stations in Changde report tea budburst in March–April and citrus expansion in June–August, matching the model-derived attention peaks with correlation coefficients of 0.72–0.84 across crops. In Yaan, high-altitude phenology stations document delayed temperature accumulation and a one-month shift in crop development, which is closely reflected in the shifted attention peaks. These consistencies confirm that RAM captures biologically meaningful phenological signals rather than relying solely on spectral cues.
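The peak-window and lag analysis described above can be sketched as follows, assuming each crop–region pair yields a 12-element vector of monthly attention weights. The weight vectors are invented purely to illustrate a one-month shift and are not values from the model. The text does not state which correlation coefficient was used, so the Pearson helper is likewise an assumption, and all function names are hypothetical.

```python
from math import sqrt


def peak_window(weights, width=3):
    """Return the 1-based start month of the consecutive window with the largest total weight."""
    sums = [sum(weights[s:s + width]) for s in range(len(weights) - width + 1)]
    return sums.index(max(sums)) + 1


def pearson(x, y):
    """Pearson correlation between two equal-length sequences (0.0 if either is constant)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y) if var_x and var_y else 0.0


# Invented monthly attention profiles (January..December) for tea; illustration only.
tea_changde = [0.03, 0.05, 0.14, 0.18, 0.15, 0.09, 0.07, 0.07, 0.07, 0.06, 0.05, 0.04]
tea_yaan = [0.04, 0.03, 0.05, 0.14, 0.18, 0.15, 0.09, 0.07, 0.07, 0.07, 0.06, 0.05]

start_changde = peak_window(tea_changde)   # window starting in March (March-May)
start_yaan = peak_window(tea_yaan)         # window starting in April (April-June)
lag_months = start_yaan - start_changde    # one-month delay in this toy example
```

A station-derived monthly indicator could be passed to `pearson` together with an attention profile to obtain an agreement score of the kind reported above, provided both series share the same monthly sampling.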

In addition, the attention maps reveal two characteristic behaviors of RAM: (1) Region-aware temporal alignment—months affected by cloud contamination, topographic shading, or low illumination systematically receive low attention, suggesting that RAM effectively suppresses noise-driven fluctuations; and (2) Crop-specific temporal sensitivity—distinct crops exhibit distinct high-attention periods, indicating that the module learns crop-dependent temporal patterns rather than applying uniform temporal weights.

Although no explicit uncertainty experiment was performed, the structure of the RAM attention mechanism is expected to stabilize temporal weighting under moderate climatic noise. Because the regional embedding is derived from smoothed terrain descriptors and monthly climatic trends rather than instantaneous values, reasonable perturbations in temperature or precipitation would be expected to affect only the amplitude of the embedding without altering the ordering of phenological stages. As a result, the timing of peak attention should remain largely stable, which suggests that the region–phenology attention mechanism can provide consistent temporal cues under plausible environmental uncertainty, although this remains to be verified experimentally.

Overall, the region–phenology attention patterns confirm that RAM adaptively calibrates its temporal focus to reflect both crop-specific developmental rhythms and region-specific climatic shifts. These behaviors provide interpretable evidence for the enhanced cross-regional robustness of RAM-PAMNet and reinforce the model’s ability to generalize across heterogeneous agroecological environments.

5. Discussion

This study demonstrates that integrating multi-source temporal fusion with region-aware phenology modeling substantially enhances cross-regional perennial crop mapping [49]. By embedding crop-specific phenological calendars and adapting them to local topographic–climatic conditions [50], the RAM achieves biologically consistent temporal alignment between regions, effectively mitigating the degradation typically observed when transferring models from Changde to Yaan [51]. The temporal attention maps generated by MHA-PAM further strengthen interpretability by highlighting growth stages such as tea flushing, citrus expansion, and grape veraison [20].

Phenology-driven temporal modeling is inherently sensitive to noise, particularly cloud contamination in Sentinel-2 and speckle in Sentinel-1. RAM-PAMNet alleviates these effects by down-weighting irregular temporal responses through RAM and emphasizing stable phenological transitions in MHA-PAM. The attention maps show that cloud-affected winter months consistently receive low weights, indicating strong robustness of the region–phenology attention mechanism. Nonetheless, persistent winter cloud cover in mountainous areas, such as western Yaan, can still weaken optical signals. Future work may incorporate higher-frequency observations, improved optical–SAR harmonization, or cloud-aware temporal masking to further enhance reliability [46].

Beyond the two demonstration regions, RAM-PAMNet shows potential for scaling to national applications. Because RAM relies on environmental embeddings rather than site-specific calibration, it can, in principle, generalize across broader climatic zones by integrating multi-source DEM products, nationwide agroclimatic priors, and representative samples from diverse agroecological regions. Expanding the training dataset to cover latitudinal, management, and landscape variability will be essential for robust large-scale deployment.

To reduce potential overfitting, particularly when training on limited regional samples, future work may employ stronger regularization strategies, such as enhanced data augmentation, dropout in temporal attention layers, and cross-regional pretraining, to expose the model to wider phenological diversity. Semi-supervised learning and domain adaptation may further improve generalization when labeled samples are scarce. Model scalability also deserves attention. RAM-PAMNet adopts a moderately sized Transformer backbone to balance accuracy and efficiency. Larger Transformer variants may provide richer spatial–temporal representations but also increase computation and risk overfitting in regions with limited diversity. Designing scalable variants that preserve cross-regional robustness while leveraging higher-capacity architectures is an important direction for future research.

Overall, RAM-PAMNet provides a robust and interpretable framework for cross-regional perennial crop mapping [52]. While not yet fully production-ready, the model demonstrates strong potential for operational deployment as preprocessing automation and broader regional validation improve. Future research will explore higher-temporal-resolution inputs, expanded crop categories, and cloud-resilient attention mechanisms to support scalable phenology-aware monitoring across heterogeneous agricultural landscapes.

6. Conclusions and Future Work

In summary, this study presents RAM-PAMNet, a novel framework that integrates multi-source temporal fusion, region-aware modulation, and multi-head phenology-aware attention to address the challenges of cross-regional crop recognition. Applied to tea, citrus, and grape, the model achieved an OA of 83.3%, an F1 of 78.8%, and an mIoU of 65.4% in the core region (Changde, Hunan), while maintaining strong generalization in Yaan, Sichuan, with an mIoU of 59.2% and a DecayRate of only 9.5. These results confirm that explicitly encoding crop-specific phenological calendars enables interpretable and region-adaptive recognition, effectively mitigating cross-regional performance degradation. The proposed framework offers a practical and explainable solution for fine-scale mapping and management of perennial cash crops in heterogeneous landscapes.

Future work will focus on three main directions: (1) Integrating higher-resolution climatic and topographic datasets to better capture fine-scale environmental variations among tea, citrus, and grape plantations; (2) Exploring federated and self-supervised learning strategies to reduce reliance on labeled data and enhance generalization under limited training conditions; and (3) Extending the framework to other perennial crops with distinct phenological rhythms—such as apple or olive—through the construction of crop-specific phenological priors and transfer learning schemes.

Finally, to support operational deployment, future work will explore integrating RAM-PAMNet with widely used geospatial platforms—such as Google Earth Engine, SNAP, and Open DataCube—to enable automated data ingestion, large-scale time-series processing, and region-specific phenological calendar generation. Linking the model with national or local agricultural information systems may further facilitate routine crop monitoring and decision support.

These advancements will strengthen the adaptability, transparency, and practical relevance of RAM-PAMNet for large-scale phenology-aware agricultural monitoring.