4.2. Datasets

We use the RICE1, RICE2, T-cloud, and WHUS2-CR datasets for evaluation. These multi-source datasets provide a robust testbed for evaluating the model’s cross-platform generalization capability. The RICE dataset provides a cross-source validation challenge: RICE1 contains optical images sourced via Google Earth, while RICE2 is based on Landsat 8 imagery with thick clouds. The T-cloud dataset offers a multispectral Landsat 8 benchmark, and WHUS2-CR introduces the distinct spectral profile of Sentinel-2A across diverse regions, collectively forming a comprehensive evaluation framework.

We adopt the cross-source RICE dataset [7] to train and evaluate our model. The RICE dataset is composed of two subsets: RICE1 and RICE2. RICE1 contains 500 pairs of images sourced from Google Earth. RICE2 comprises 450 pairs of images derived from the Landsat 8 OLI/TIRS dataset; its image pairs are selected from images captured at the same location within a 15-day window to ensure consistency in the underlying ground features. The T-cloud dataset [24] comprises 2939 pairs of images sourced from Landsat 8 multispectral imagery. We randomly select 400 pairs for training and 100 pairs for testing. WHUS2-CR is a cloud removal evaluation dataset with paired cloudy/cloud-free Sentinel-2A images from the Copernicus Open Access Hub, selected for (1) wide distribution and (2) all-season coverage [25].

Sentinel-2A is a high-resolution multispectral imaging satellite that carries the Multispectral Instrument (MSI), which covers 13 spectral bands in the visible, near-infrared, and shortwave-infrared ranges [25]. Band 2 is the blue band, with a central wavelength of 0.490 µm, a bandwidth of 98 nm, and a spatial resolution of 10 m. Band 3 is the green band, with a central wavelength of 0.560 µm, a bandwidth of 45 nm, and a spatial resolution of 10 m. Band 4 is the red band, with a central wavelength of 0.665 µm, a bandwidth of 38 nm, and a spatial resolution of 10 m. In our experiments, we select Band 2/3/4 images from two regions: an urban area in Ukraine and an urban area in Australia. For each region, we randomly select 400 pairs for training and 100 pairs for testing. The image pairs are resized to

.
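The random 400/100 split described above can be sketched as follows. This is a minimal illustration, not the authors' data pipeline: the function name `split_pairs`, the fixed seed, and the use of integer pair indices are assumptions made for the example.

```python
import random


def split_pairs(pair_ids, n_train=400, n_test=100, seed=0):
    """Randomly pick disjoint training and test subsets of image-pair IDs.

    The seed is a hypothetical choice so that the split is reproducible.
    """
    rng = random.Random(seed)
    chosen = rng.sample(list(pair_ids), n_train + n_test)
    return chosen[:n_train], chosen[n_train:]


# Example: T-cloud has 2939 pairs, of which 400 + 100 are used.
train_ids, test_ids = split_pairs(range(2939))
```

Sampling once and then slicing guarantees that no pair appears in both subsets.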

4.3. Comparisons with State-of-the-Art Methods

In this section, our method is evaluated qualitatively and quantitatively against state-of-the-art methods based on hand-crafted priors (DCP [26]) and deep convolutional neural networks (AOD-Net [27], CloudGAN [28], McGANs [29], CycleGAN [30], DC-GAN-CL [31], Vanilla GAN [32], U-net GAN [32], MAE-CG [33], PM-LSMN [11]). The compared methods span different technical paradigms. They include the physics-based classical baseline DCP and efficient CNN architectures such as the lightweight AOD-Net. Our primary contemporary baseline is PM-LSMN, which is specifically designed for thin cloud removal. We also compare with popular GAN-based approaches, including the standard unpaired training framework CycleGAN. This category contains recent improvements such as DC-GAN-CL and U-net GAN, along with earlier variants such as CloudGAN and McGANs. Additionally, we include the recent attention-enhanced method MAE-CG, which incorporates multi-attention mechanisms. This selection encompasses both established benchmarks and the latest advances, ensuring a comprehensive performance comparison.

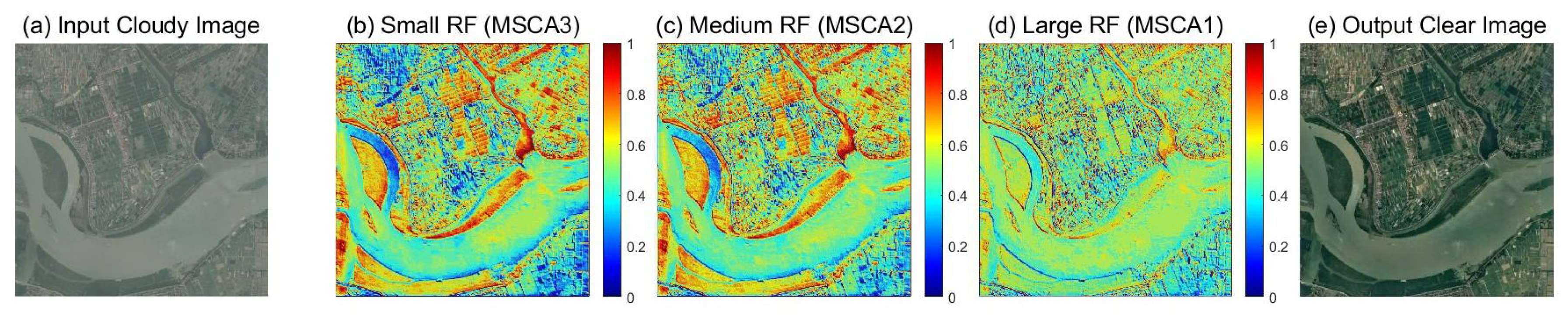

To demonstrate the effectiveness of our method, we conducted training and evaluation on the RICE1, RICE2, T-cloud, and WHUS2-CR datasets. First, we quantitatively compare our method with the other methods on the RICE1 dataset, as shown in Table 9. For the PSNR metric, our method ranks first with a value of 27.258, followed by CycleGAN (26.888) in second place and PM-LSMN (25.939) in third. In terms of SSIM, our method achieves the highest value of 0.930. Regarding the SAM metric, our method places third with a value of 5.423. For the ERGAS metric, our method attains the lowest (best) score of 0.390 among all methods, ahead of the second-ranked PM-LSMN (0.41) and the other competitors. Our method therefore achieves the top rank in three of the four evaluation metrics (PSNR, SSIM, ERGAS) and a competitive third rank in SAM, showing that it matches or exceeds the compared methods on RICE1.
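For readers who want to reproduce this kind of comparison, the sketch below computes two of the reported metrics, PSNR and SAM, using their common textbook definitions. The paper's exact metric definitions are not given in this section, so the formulas, the function names `psnr` and `sam_degrees`, and the `[0, 1]` intensity range are assumptions. Pure Python is used for self-containment; an array library would normally be used in practice.

```python
import math


def psnr(pred, target, max_value=1.0):
    """Peak signal-to-noise ratio in dB over flat sequences of pixel values."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def sam_degrees(pred_pixels, target_pixels, eps=1e-12):
    """Mean spectral angle in degrees; each pixel is a tuple of band values."""
    angles = []
    for p, t in zip(pred_pixels, target_pixels):
        dot = sum(a * b for a, b in zip(p, t))
        norm = math.sqrt(sum(a * a for a in p)) * math.sqrt(sum(b * b for b in t))
        # Clamp to the valid acos domain to absorb rounding error.
        cos = max(-1.0, min(1.0, dot / (norm + eps)))
        angles.append(math.degrees(math.acos(cos)))
    return sum(angles) / len(angles)
```

Higher PSNR is better, while lower SAM is better, which is why a method can lead on one metric and trail on the other.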

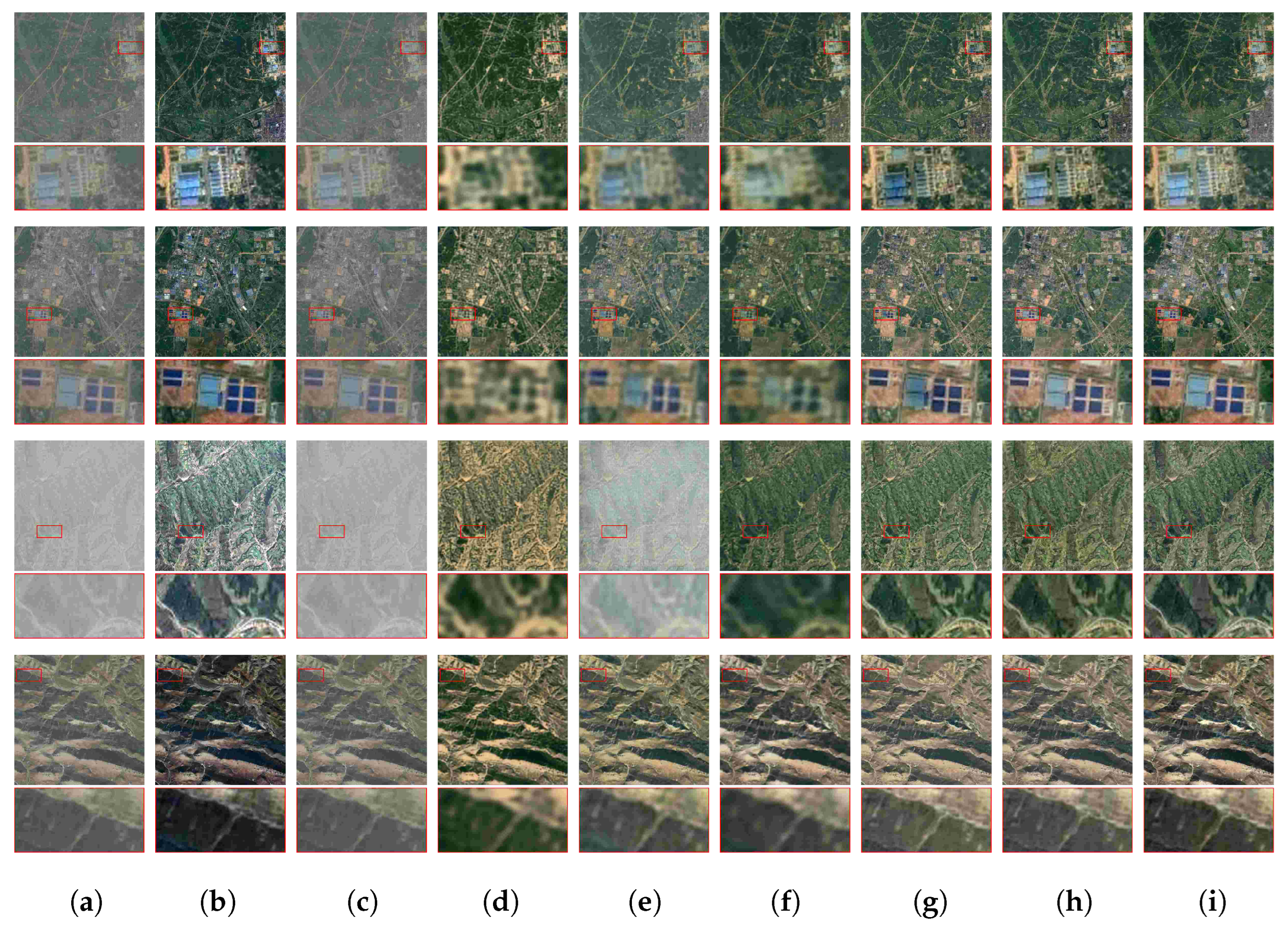

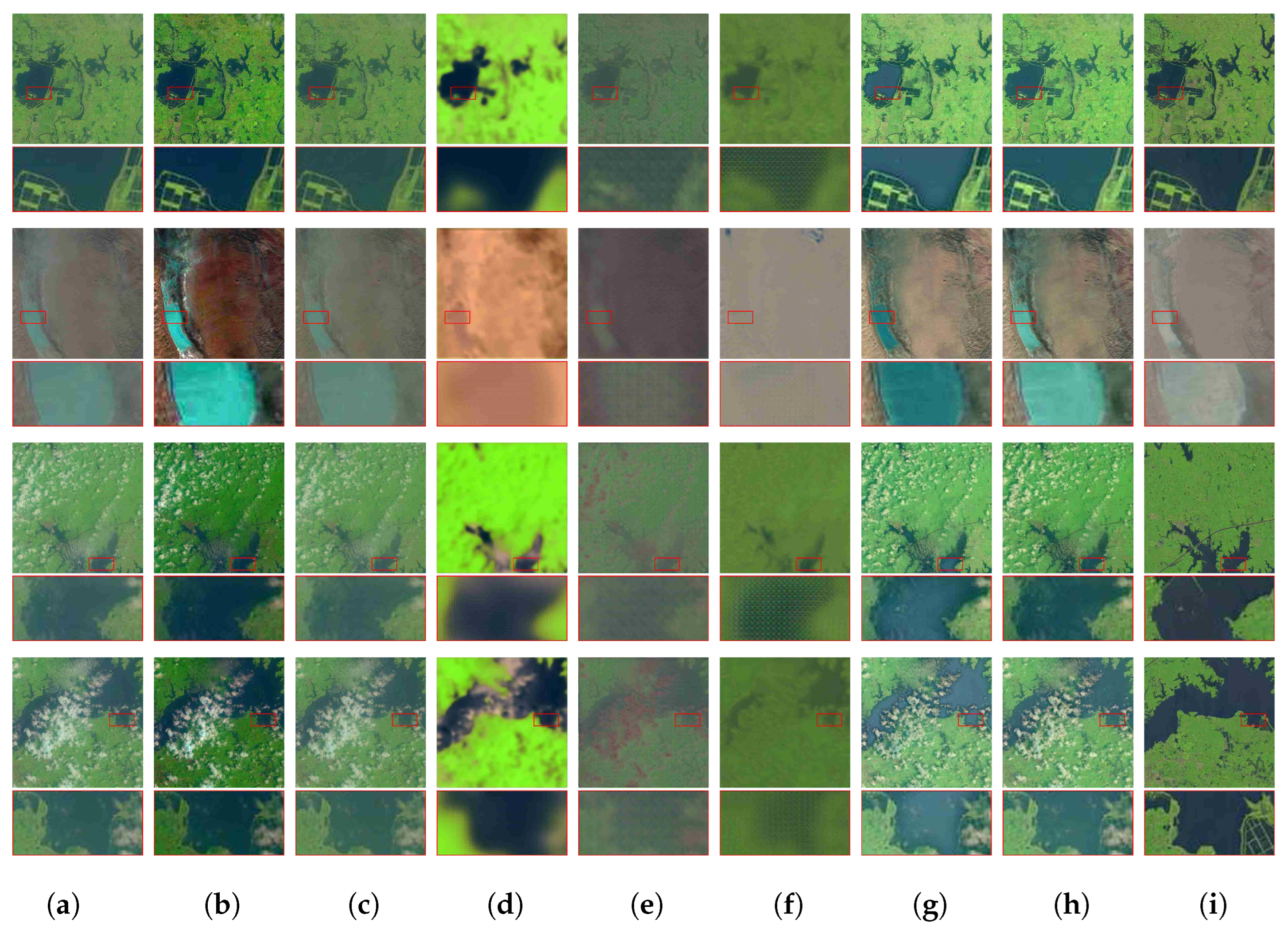

Qualitative results on RICE1 are shown in Figure 5. Our method effectively removes cloud haze while preserving fine details and maintaining natural, vivid colors. The resulting images are visually clear and closely resemble the ground truth shown in Figure 5i. In the magnified views of the first and second rows of Figure 5h, the color of the blue lake closely matches that of the corresponding ground truth.

Figure 6 shows the pixel-wise mean absolute error (MAE) between different methods and the ground truth. The error maps clearly show that our method achieves the lowest reconstruction error. This is visible from the dominant blue colors in subfigure (d), which represent small errors. In comparison, CloudGAN (b) and PM-LSMN (c) show more warm-colored areas. These warm colors mean larger errors remain. The input image (a) has the highest errors, especially where clouds are present. These visual comparisons confirm that our approach better preserves original scene details while effectively eliminating cloud interference.
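An error map of this kind can be computed as in the sketch below. This is a minimal illustration under assumptions: images are represented as nested lists of shape H × W × C, the per-pixel error is averaged over channels, and the function name `mae_map` is hypothetical. The colormap used to render Figure 6 is not specified here and is not reproduced.

```python
def mae_map(pred, target):
    """Per-pixel mean absolute error, averaged over channels.

    pred and target are H x W x C nested lists; the result is H x W.
    """
    return [
        [
            sum(abs(p - t) for p, t in zip(pred_px, target_px)) / len(pred_px)
            for pred_px, target_px in zip(pred_row, target_row)
        ]
        for pred_row, target_row in zip(pred, target)
    ]


# Tiny 1 x 2 RGB example: the first pixel matches, the second differs in red.
pred = [[[0.5, 0.5, 0.5], [1.0, 0.0, 0.0]]]
target = [[[0.5, 0.5, 0.5], [0.0, 0.0, 0.0]]]
errors = mae_map(pred, target)
```

Rendering `errors` with a cool-to-warm colormap would give small errors cool colors and large errors warm colors, as described for Figure 6.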

Unlike RICE1, the RICE2 dataset contains thick cloud layers that completely obscure the underlying image content. Recovering such heavily occluded details is inherently challenging and typically requires additional input sources, as the algorithm cannot reconstruct information that is entirely absent. Despite this limitation, which precludes full reconstruction in heavily occluded areas, our experiments demonstrate that our method maintains robust performance. As shown in Table 10, it achieves superior results on metrics that assess overall fidelity (PSNR, SSIM, ERGAS). Visually, as illustrated in Figure 7, our approach retains better color fidelity and detail preservation with fewer artifacts compared to other methods, which often exhibit severe color distortion.

For PSNR, our method ranks first with 28.855, followed by PM-LSMN (28.144) in second and CycleGAN (27.794) in third. For SSIM, our method reaches 0.87, tying with PM-LSMN for the highest score. Both outperform competitors like CycleGAN (0.823) and CloudGAN (0.764). For SAM, our method takes second place with 5.689; CycleGAN (4.248) is first, and PM-LSMN (6.183) ranks third. For ERGAS, our method obtains the lowest score of 0.476 among all methods. These results show that our method achieves the top or joint-top rank in three out of the four evaluation metrics (first in PSNR and ERGAS, joint-first in SSIM) and a highly competitive second place in SAM.

Figure 7 shows results on the challenging RICE2 dataset with thick clouds. Although the heavy occlusion poses difficulties, our method removes thin clouds while retaining relatively high fidelity in both the original color tone and spatial details, whereas other methods exhibit severe color distortion or artifacts. The baseline PM-LSMN exhibits a color discrepancy along the lake boundaries, whereas our approach mitigates this issue without introducing visible color artifacts.

While the quantitative results on RICE2 appear superior to those on RICE1, this should be interpreted in the context of fundamental dataset differences. Performance variation stems from the inherent characteristics of each dataset. RICE1 contains high-resolution details that are difficult to recover perfectly. This challenge often leads to a lower PSNR score. In contrast, RICE2 features thick clouds that frequently obscure the underlying scene. As a result, the task shifts from thin-cloud removal to content inpainting. Our model addresses this by generating semantically plausible content in the missing regions. This approach can result in higher PSNR when the generated content aligns well with the reference. Therefore, the superior performance on RICE2 reflects the model’s strong generative capability rather than better thick-cloud removal. The core challenge remains the recovery of subtle details in RICE1’s thin cloud scenarios.

Table 11 presents quantitative results on the T-cloud dataset. For PSNR, our method ranks first with 24.164, followed by PM-LSMN (23.791) in second and CycleGAN (23.719) in third. For SSIM, PM-LSMN achieves the highest score of 0.848, while our method closely follows with 0.846 in second place, and MAE-CG ranks third with 0.830. For SAM, CycleGAN obtains the best score of 5.490, followed by McGANs (6.998) in second and U-net GAN (7.69) in third, while our method ranks seventh with 9.007. For ERGAS, CloudGAN achieves the lowest score of 0.590, and our method is reported in third place at 0.612 (AOD-Net: 0.768). These results show that our method achieves the top rank in one metric (PSNR), a close second place in SSIM, and third place in ERGAS, but trails in SAM (seventh).

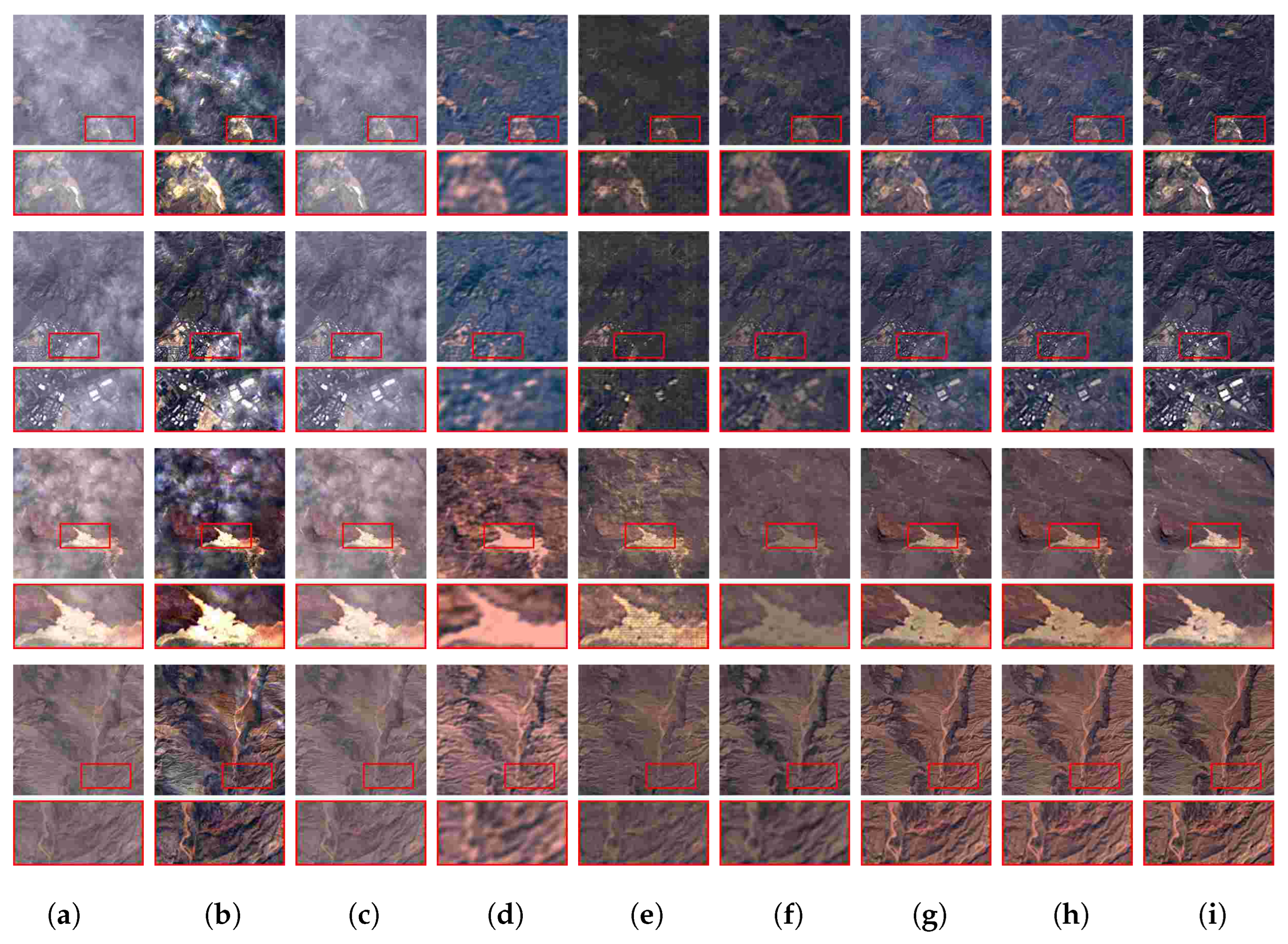

On the T-cloud dataset, which contains diverse cloud types, our method behaves similarly to PM-LSMN but removes clouds more effectively, leaving less residual cloud cover, as shown in Figure 8. In the first and second rows, the output images in Figure 8h contain less cloud cover than those from PM-LSMN in Figure 8g.

The T-cloud dataset contains diverse cloud types, ranging from thin, semi-transparent clouds to thick, complex coverings. Without multi-modal inputs, the experiments show limited performance in these scenarios. Nevertheless, our approach maintains moderate overall visual quality in terms of color accuracy, detail clarity, and cloud removal.

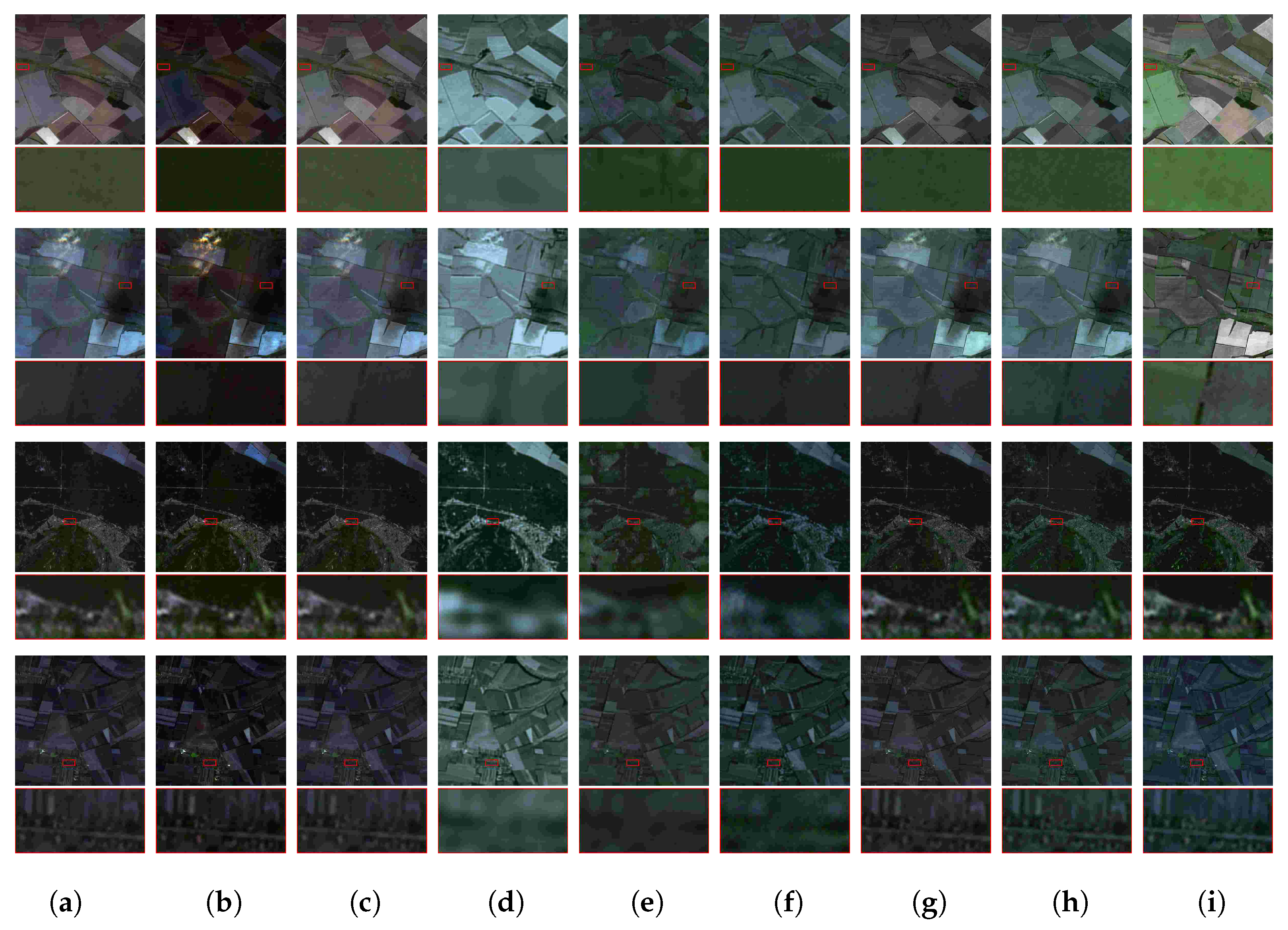

WHUS2-CR consists of real Sentinel-2A images. For our experiments, the Blue (B2), Green (B3), and Red (B4) bands were concatenated to form a true-color composite image. We conducted two tests targeting two regions: Test 1, an urban area in Ukraine, and Test 2, an urban area in Australia. To demonstrate the visual characteristics of individual bands, we present the following for Test 1: the standard RGB composite and the separate grayscale images from Band 2 (Blue), Band 3 (Green), and Band 4 (Red).
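The band-to-channel mapping for the true-color composite can be made explicit with a short sketch. It assumes each band is available as an H × W nested list of equal size; the function name `true_color_composite` is hypothetical, and any radiometric scaling applied by the authors is not shown.

```python
def true_color_composite(b2_blue, b3_green, b4_red):
    """Stack Sentinel-2 bands into RGB order: R = B4, G = B3, B = B2."""
    return [
        [(r, g, b) for r, g, b in zip(red_row, green_row, blue_row)]
        for red_row, green_row, blue_row in zip(b4_red, b3_green, b2_blue)
    ]


# 1 x 1 example with distinct values per band to show the channel order.
rgb = true_color_composite([[1]], [[2]], [[3]])
```

Note that the band numbering runs blue, green, red, so the bands must be reversed to obtain the conventional RGB channel order.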

Table 12 presents quantitative results on the WHUS2-CR Test 1 data. For PSNR, our method ranks first with 23.084, followed by CycleGAN (22.736) in second and U-net GAN (22.536) in third. For SSIM, our method achieves the highest score of 0.789, followed by PM-LSMN (0.764) in second place and MAE-CG (0.762) in third. For SAM, McGANs obtains the best score of 7.901, with CycleGAN (8.726) ranking second and Vanilla GAN (9.101) third, while our method ranks eighth with 15.451. For ERGAS, CycleGAN attains the lowest score of 0.168, followed by CloudGAN (0.429) in second and U-net GAN (0.857) in third, while our method ranks fifth with 0.967. These results show that our method achieves the top rank in two metrics (PSNR and SSIM) but trails in SAM (eighth) and ERGAS (fifth).

For the real Sentinel-2A images in WHUS2-CR (Figure 9), our method and PM-LSMN show comparable cloud removal, but our results exhibit more accurate color representation, closer to the ground truth. The WHUS2-CR Test 1 data contains diverse scenes with varying cloud conditions. Our approach maintains better visual quality in terms of color accuracy, detail clarity, and cloud removal compared to other methods, producing results that are more consistent with the ground truth.

Our proposed PMSAF-Net achieves state-of-the-art or comparable performance across all benchmark datasets with merely 0.32 M trainable parameters, demonstrating its superior accuracy-efficiency trade-off for multi-platform deployment. Qualitative evaluations reveal that the method effectively removes thin clouds while preserving spectral continuity and color consistency.

4.4. Ablation Study and Configuration Analysis

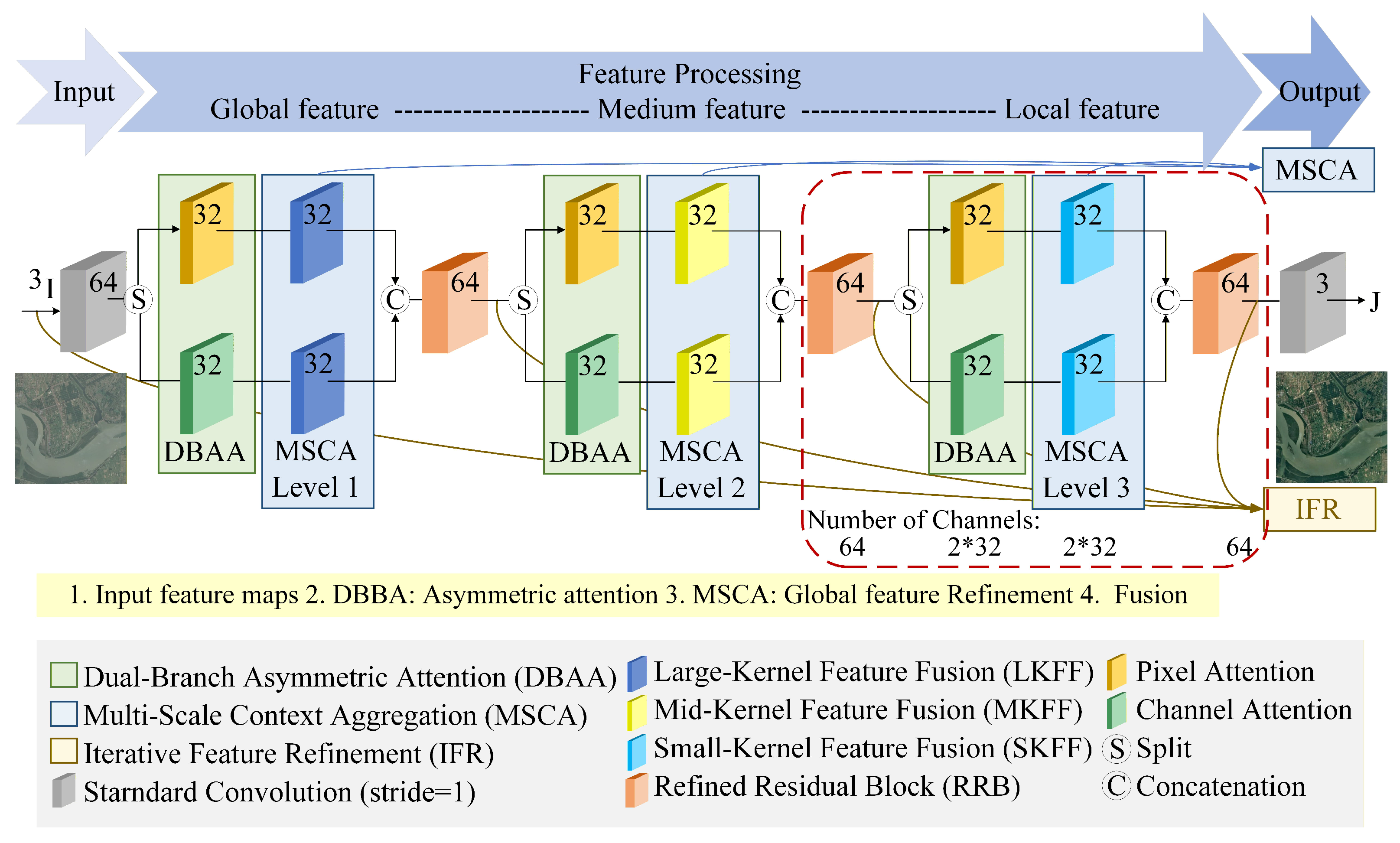

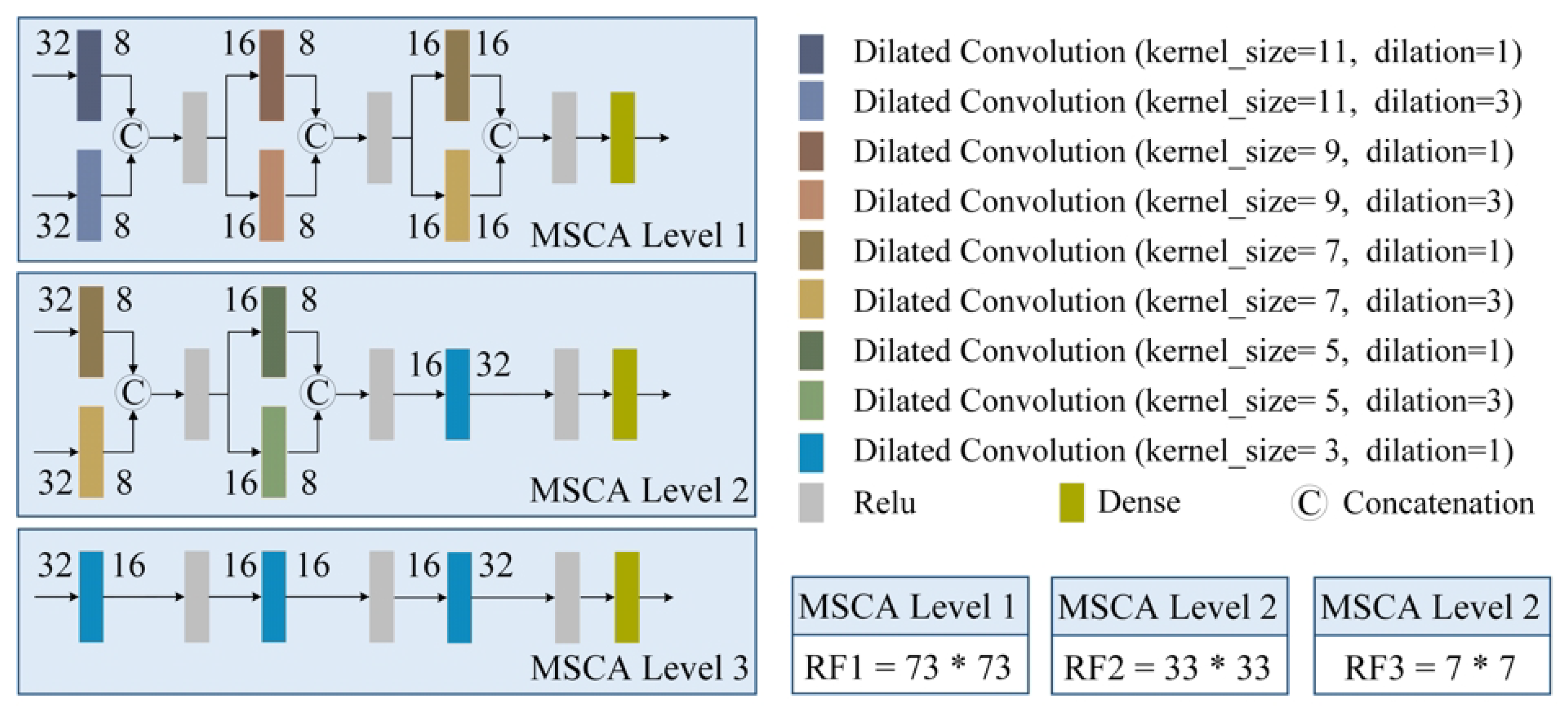

Based on our model, we construct six variants with different component combinations: (a) Variant model A is the baseline PM-LSMN model. (b) Variant model B adopts the DBAA module. (c) Variant model C adopts the RRB block. (d) Variant model D adopts the MSCA module. (e) Variant model E adopts the IFR modules. (f) Proposed model F adopts all the introduced modules (DBAA, RRB, MSCA, and IFR). In Table 13, models B, C, D, and E achieve PSNR gains of 0.863 dB, 0.753 dB, 0.361 dB, and 0.236 dB, respectively, compared to model A, demonstrating the effectiveness of the individual modules. Our proposed model F achieves the best performance with a PSNR of 27.258 dB and an SSIM of 0.930, outperforming all variant models across multiple metrics. Furthermore, model F achieves the best scores in SAM (5.423) and ERGAS (0.390), demonstrating comprehensive performance improvements. With approximately 0.32 M parameters, model F maintains a compact model size comparable to the baseline model A (0.33 M parameters). Therefore, the introduced DBAA, RRB, MSCA, and IFR modules collectively enable our model to achieve better performance while maintaining computational efficiency.

4.4.1. Effectiveness of Proposed Modules

All values for Variants B–F are formatted as “measured value (change rate relative to the baseline, in %)”, where the change rate is calculated as [(Variant Value − Baseline Value)/Baseline Value] × 100. For positive indicators (PSNR, SSIM; higher is better), a positive change rate denotes improvement. For negative indicators (SAM, ERGAS, Parameters; lower is better), a negative change rate denotes improvement. The results discussed below were obtained on the RICE1 dataset. The ablation study reveals distinct contributions from each module:
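The change-rate formula can be checked with a few lines of code. The sketch below assumes that the baseline PSNR of model A equals the PM-LSMN value reported for RICE1 (25.939), since model A is described as the baseline PM-LSMN model; the function name `change_rate` is hypothetical.

```python
def change_rate(variant_value, baseline_value):
    """Relative change of a variant with respect to the baseline, in percent."""
    return (variant_value - baseline_value) / baseline_value * 100.0


baseline_psnr = 25.939    # assumed: PM-LSMN (model A) PSNR on RICE1
full_model_psnr = 27.258  # model F PSNR on RICE1

rate_f = change_rate(full_model_psnr, baseline_psnr)        # about 5.1 %
rate_b = change_rate(baseline_psnr + 0.863, baseline_psnr)  # about 3.3 %
```

Under this assumption, both values agree with the PSNR change rates quoted for model F and for the DBAA variant to within rounding.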

DBAA enhances performance by increasing PSNR by 3.33% through the separate optimization of spatial and channel attention. This approach significantly reduces computational redundancy while improving feature extraction efficiency. RRB achieves superior spectral preservation, reducing SAM by 5.08%, through the use of reflection padding and residual calibration. This effectively minimizes boundary artifacts, ensuring more accurate spectral information retention. MSCA contributes to ERGAS improvement by 0.98% by expanding the receptive field to 111 × 111 through hierarchical dilated convolutions. This enables better global context capture, crucial for handling complex cloud distributions. IFR provides moderate but consistent improvements across all metrics through dense cross-stage connections. This ensures progressive feature refinement and error reduction, enhancing overall image quality. The integrated model (F) achieves synergistic performance, with a 5.08% improvement in PSNR and a 5.36% reduction in SAM, while maintaining a compact parameter size of 0.32 M, slightly smaller than the baseline.
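To illustrate how dilated convolutions enlarge the receptive field, the sketch below applies the standard formula for a stack of stride-1 convolutions, where each layer adds (k − 1) × d pixels. The layer list in the example is hypothetical and does not reproduce the MSCA configuration; the 111 × 111 figure depends on the full layer stack, which is not specified in this section.

```python
def receptive_field(layers):
    """Receptive field (one side, in pixels) of stacked stride-1 convolutions.

    layers is an iterable of (kernel_size, dilation) pairs.
    """
    rf = 1
    for kernel_size, dilation in layers:
        rf += (kernel_size - 1) * dilation
    return rf


# Hypothetical example: two 3 x 3 convolutions with dilation rates 1 and 3.
rf_example = receptive_field([(3, 1), (3, 3)])
```

Because dilation multiplies the reach of each kernel without adding weights, the receptive field grows while the parameter count stays fixed.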

The ablation study was first conducted on the RICE1 dataset, which provides a controlled benchmark for isolating the effect of each module without the added complexity of multiple datasets. To verify that these findings generalize, the study was then extended to the RICE2 dataset, which presents different cloud characteristics. The results, summarized in Table 13 and Table 14, show consistent performance improvements from the DBAA, RRB, MSCA, and IFR modules on both datasets, indicating that the proposed architectural components remain effective under varying cloud scenarios.

These results demonstrate the value of each module within our architecture. Furthermore, the performance of our full model across all four datasets shows that these benefits generalize well. Our complete model achieves competitive results on RICE1, RICE2, T-cloud, and WHUS2-CR, which have different cloud types and sensors. This consistent performance confirms that our architectural improvements are effective across diverse real-world conditions.

4.4.2. Study of the Network Configuration

We evaluate our method on RICE1 under different network configurations, shown in Table 15. Considering the trade-off between model performance and hardware efficiency, we selected the configuration that offers the best balance. In terms of Channel Count (CC), the baseline (64 channels) is chosen as it provides superior performance (PSNR: 27.094) with an acceptable parameter count, while half (0.08 M) and quarter (0.02 M) channels lead to significant performance drops. For the Kernel Size (KS), the multi-scale configuration is selected for its robust performance across metrics. For the Dilation Rate (DR), the setting d = (1,3) is adopted because it achieves the highest PSNR (27.258) and excellent SSIM (0.930) without increasing the parameter count. This combination ensures optimal performance while maintaining computational efficiency.
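The parameter counts for the reduced-channel settings (0.08 M and 0.02 M, against roughly 0.32 M for the full model) are consistent with the quadratic dependence of convolution weights on channel width. The sketch below shows this scaling for a single convolution layer; the 3 × 3 kernel and the helper name `conv2d_params` are assumptions for illustration, and the real network contains other layer types as well.

```python
def conv2d_params(c_in, c_out, kernel_size, bias=True):
    """Number of trainable parameters in one standard 2D convolution."""
    weights = kernel_size * kernel_size * c_in * c_out
    return weights + (c_out if bias else 0)


full = conv2d_params(64, 64, 3, bias=False)     # 64-channel layer
half = conv2d_params(32, 32, 3, bias=False)     # half the channels
quarter = conv2d_params(16, 16, 3, bias=False)  # a quarter of the channels
```

Halving both input and output channels divides the weight count by four, which matches the roughly fourfold steps between 0.32 M, 0.08 M, and 0.02 M.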

4.5. Benchmark Tests on Edge Computing Platforms

To validate the practical deployment capability of PMSAF-Net, we performed benchmark tests on a typical edge device. The following section details the setup and results.

We used a development board with a Rockchip RK3588 SoC (Rockchip Electronics Co., Ltd., Fuzhou, Fujian, China). This processor is designed for edge AI applications. The model was optimized with the official RKNN toolkit (v2.3.2). The input tensor shape was set to (1, 512, 512, 3). All tests ran under normal conditions without active cooling. The results confirm the model’s efficiency in resource-limited settings. Key metrics are listed in Table 16.

The model achieved a single-image inference time of 508.68 ms, demonstrating its capability for near-real-time processing on edge hardware. This sub-second latency is suitable for applications such as onboard satellite or UAV image processing.

Memory usage was monitored before and after inference. Prior to execution, the system reported 0.54 GB of memory in use and 7.44 GB free, with a reported memory utilization rate of 10.4%, indicating a low system load and a clean baseline for measurement. After inference, memory in use was 0.55 GB, an increase of only about 0.01 GB (10 MB). This negligible increase supports the model’s lightweight design and its suitability for memory-sensitive edge devices without significant resource contention.

The chip temperature remained stable at 33.3 °C before and after inference. This minimal thermal fluctuation suggests low power draw and operational stability, which is critical for always-on or battery-powered edge devices.

Taken together, the benchmark results indicate that PMSAF-Net meets the key requirements for edge deployment: it balances accuracy with low latency, a minimal memory footprint, and stable thermal behavior, which fulfills the core design objective of enabling multi-platform deployment. With only 0.32 M parameters and a 10 MB incremental memory footprint, PMSAF-Net operates well within the limits of typical deployment scenarios. For instance, it is compatible with nano-satellites such as CubeSats, which typically have memory constraints under 2 GB. The model also suits drone processors like the NVIDIA Jetson series, designed for 5–15 W power budgets.
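A latency measurement of this kind can be sketched with a small timing harness. This is an illustrative outline only: `run_inference` stands in for the deployed model call and is a hypothetical placeholder, the warm-up and run counts are assumptions, and no RKNN toolkit API is used or implied.

```python
import statistics
import time


def benchmark(run_inference, warmup=3, runs=20):
    """Return (mean, standard deviation) of latency in milliseconds."""
    for _ in range(warmup):
        run_inference()  # warm-up runs are excluded from the statistics
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        run_inference()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(samples), statistics.stdev(samples)


def throughput_fps(latency_ms):
    """Images per second implied by a single-image latency."""
    return 1000.0 / latency_ms


# The reported 508.68 ms latency corresponds to just under 2 images per second.
fps = throughput_fps(508.68)
```

Reporting the spread alongside the mean helps distinguish a stable sub-second latency from one that only holds on average.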