1. Introduction

Trees are an indispensable part of the earth’s ecosystem: they provide valuable resources such as oxygen, timber, and medicinal herbs, and they play a vital role in regulating the climate, purifying the air, and preventing soil erosion. By absorbing carbon dioxide, forest ecosystems reduce greenhouse gas concentrations and mitigate global warming, making them a key component of the global carbon cycle. However, the continued spread of invasive alien species (IAS) poses a serious threat to tree growth. IAS not only compete with native tree species for living space, nutrients, and water, but also disturb the ecological balance of forests by spreading pests and diseases. These invasions have already caused severe damage to forest ecosystems, affecting forest health and sustainable development. As the problem grows, it has become imperative to protect and restore forest ecosystems, resist invasive alien species, and detect and count the trees they have damaged.

With the advancement of remote sensing technology, image recognition, computer vision, and artificial intelligence, more and more studies are exploring automated tree disease detection methods [1] based on image analysis. These efforts aim to improve the efficiency, accuracy, and intelligence of forest pest and disease monitoring. By collecting tree images and processing them with deep learning models, automatic identification and localization of diseases can be achieved, greatly reducing the workload of manually detecting diseased trees.

Following these advancements, numerous detection and recognition models have been developed. For instance, the You Only Look Once (YOLO) series [2] enables the identification of diseased trees by extracting visual features and performing multi-scale predictions on input images, while the Segment Anything Model (SAM) [3] provides powerful segmentation capabilities that precisely delineate tree regions for accurate disease localization and analysis. In 2020, Alaa et al. [4] proposed a palm tree disease detection method combining a convolutional neural network (CNN) [5] and a support vector machine (SVM) [6], effectively integrating the deep learning model’s feature extraction ability with the robust classification strength of traditional machine learning to distinguish visually similar disease symptoms. More recently, Lin et al. [7] introduced a Transformer-based model with graph-structured modulation, in which attention and feature centrality graphs jointly refine self-attention and feature representations, mitigating attention homogenization and improving detection accuracy. Despite these advances, current models still face significant challenges that limit their performance in complex real-world scenarios.

In many complex and dense scenes, large numbers of trees of different sizes are clustered together. This high density and scale variation significantly increases detection difficulty and degrades the model’s recognition and localization ability.

The generalization ability of many models is weak: they usually perform well only on data similar to their training set and are less effective at recognizing unseen samples, which makes it difficult to adapt them to complex and changing practical scenarios.

Traditional models rely on single-modal information only, so they lack the semantic expressiveness needed to capture richer and deeper semantic features, and their recognition accuracy drops sharply when they face visually similar categories.

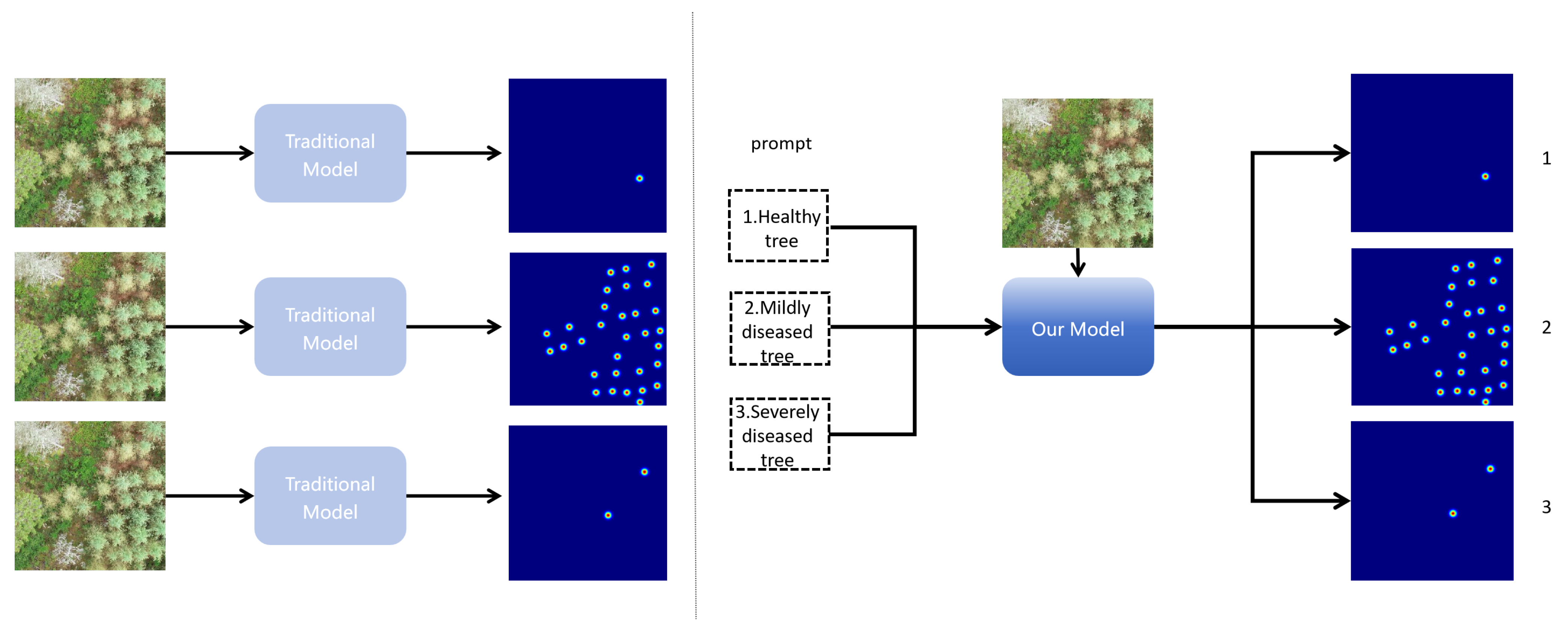

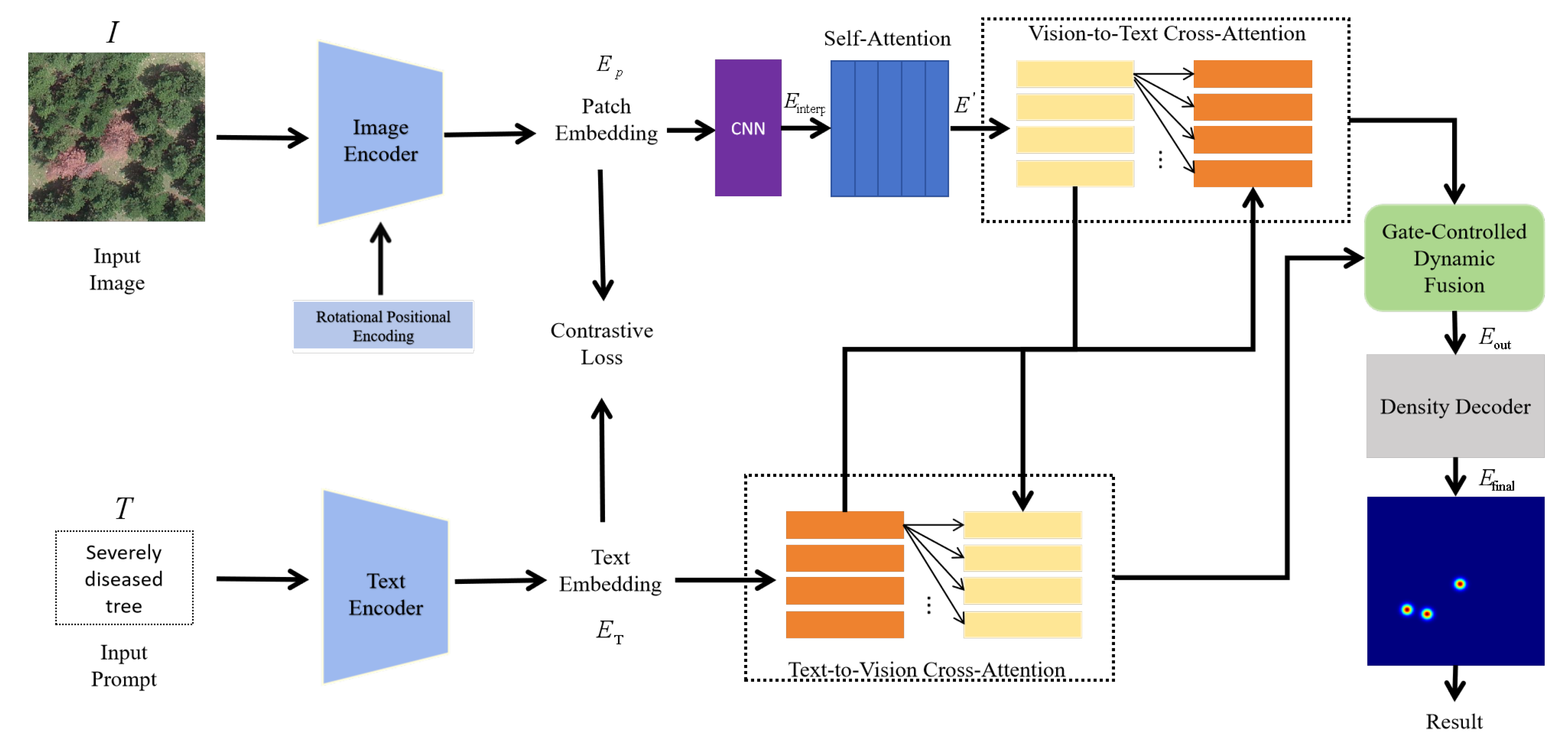

To cope with these challenges, this paper proposes a multimodal tree disease detection model, shown in Figure 1. Unlike traditional models that accept only a single input modality, it can distinguish different classes of trees within one image. Thanks to the introduction of a textual modality [8], our model adapts to multiple scenes: a single model can be trained on, and produce separate counts for, tree images taken by unmanned aerial vehicles (UAVs) under a variety of complex conditions. The design covers different physiological states, including healthy, diseased, and insect-infested trees. We introduce rotational position encoding [9] in the visual encoder to enable the model to capture local and global features in the image more efficiently. To better fuse image and text information, we introduce gated bidirectional cross-attention [10] and a contrastive loss function, which let the two modalities interact deeply and strengthen the alignment and fusion of multimodal features. Finally, the fused multimodal features are fed into the downstream module for diseased tree detection and counting, yielding accurate estimates of tree condition and providing an efficient technical solution for forestry ecological protection.
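The fusion step described above can be illustrated with a minimal sketch. This is not the authors’ implementation: it assumes identity query/key/value projections (a real module would use learned linear layers and multiple heads), and it assumes a tanh-gated residual, a common gating choice in which a gate of zero leaves the input unchanged. The function names and the `gate_img` / `gate_txt` parameters are hypothetical.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, context):
    """Scaled dot-product attention; identity projections are an assumption."""
    scale = 1.0 / math.sqrt(len(queries[0]))
    attended = []
    for q in queries:
        scores = [scale * sum(a * b for a, b in zip(q, c)) for c in context]
        weights = softmax(scores)
        attended.append([sum(w * c[d] for w, c in zip(weights, context))
                         for d in range(len(q))])
    return attended

def gated_bidirectional_fusion(image_tokens, text_tokens, gate_img=0.0, gate_txt=0.0):
    """Each modality attends to the other; tanh gates scale the residual update."""
    img_ctx = cross_attention(image_tokens, text_tokens)  # image queries, text context
    txt_ctx = cross_attention(text_tokens, image_tokens)  # text queries, image context
    g_i, g_t = math.tanh(gate_img), math.tanh(gate_txt)
    fused_img = [[x + g_i * c for x, c in zip(tok, ctx)]
                 for tok, ctx in zip(image_tokens, img_ctx)]
    fused_txt = [[x + g_t * c for x, c in zip(tok, ctx)]
                 for tok, ctx in zip(text_tokens, txt_ctx)]
    return fused_img, fused_txt

# Two image tokens and one text token in a shared 2-D embedding space.
image_tokens = [[1.0, 0.0], [0.0, 1.0]]
text_tokens = [[2.0, 2.0]]
fused_img, fused_txt = gated_bidirectional_fusion(image_tokens, text_tokens, gate_img=0.5)
```

With both gates at zero the module is an identity mapping, so under this assumed gating the text branch can be blended in gradually during training rather than perturbing the pretrained image features from the first step.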

In summary, our contributions are as follows:

We propose an end-to-end deep learning model for disease detection that incorporates rotational position encoding in the image encoder, which allows the model to better understand the positional information of the trees in the image, and improves the model’s ability to capture the trees in complex scenes, thus increasing the accuracy.

Our model demonstrates exceptional generalization capabilities, enabling it to extract universal features based on existing knowledge and adapt to diverse datasets, even when distributions shift. When confronted with unseen data scenarios, it maintains robust recognition performance, overcoming the limitations of traditional models that require extensive re-training in new environments.

Our model fuses visual and textual information in a gated bidirectional cross-attention module, and the ability to incorporate textual information enables the model to be dynamically adapted to a wider range of real-world scenarios based on user-input descriptions or specific needs.
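To make the rotational position encoding named in the first contribution concrete, the sketch below shows the standard one-dimensional rotary formulation, in which consecutive feature pairs are rotated by position-dependent angles. How the paper applies it to two-dimensional image patches is not specified in this section, so the 1-D form, the `base` value of 10000, and the function names are assumptions.

```python
import math

def rope_rotate(vec, position, base=10000.0):
    """Rotate consecutive (even, odd) feature pairs by angles that grow with position."""
    dim = len(vec)
    if dim % 2 != 0:
        raise ValueError("feature dimension must be even")
    out = []
    for i in range(0, dim, 2):
        theta = position * base ** (-i / dim)  # one frequency per feature pair
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * c - y * s, x * s + y * c])
    return out

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

q = [1.0, 2.0, 3.0, 4.0]
k = [0.5, -1.0, 2.0, 0.0]

# The attention score depends only on the relative offset (here 2), not on absolute positions.
score_near_origin = dot(rope_rotate(q, 3), rope_rotate(k, 1))
score_shifted = dot(rope_rotate(q, 10), rope_rotate(k, 8))
```

Because the two scores agree, a tree canopy and its neighbour receive the same relative encoding wherever they appear in the image, which is the property the contribution appeals to.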

The rest of the paper is organized as follows: In Section 2, we describe the development of traditional disease detection with the emerging large model. In Section 3, we present the general architecture of our model, with each subsection describing one part of the model. In Section 4, we describe the experimental setup and experimental results, and show visualization images. In Sections 5 and 6, we present our discussion and conclusions.

4. Experimentation and Analysis

4.1. Experimental Setup

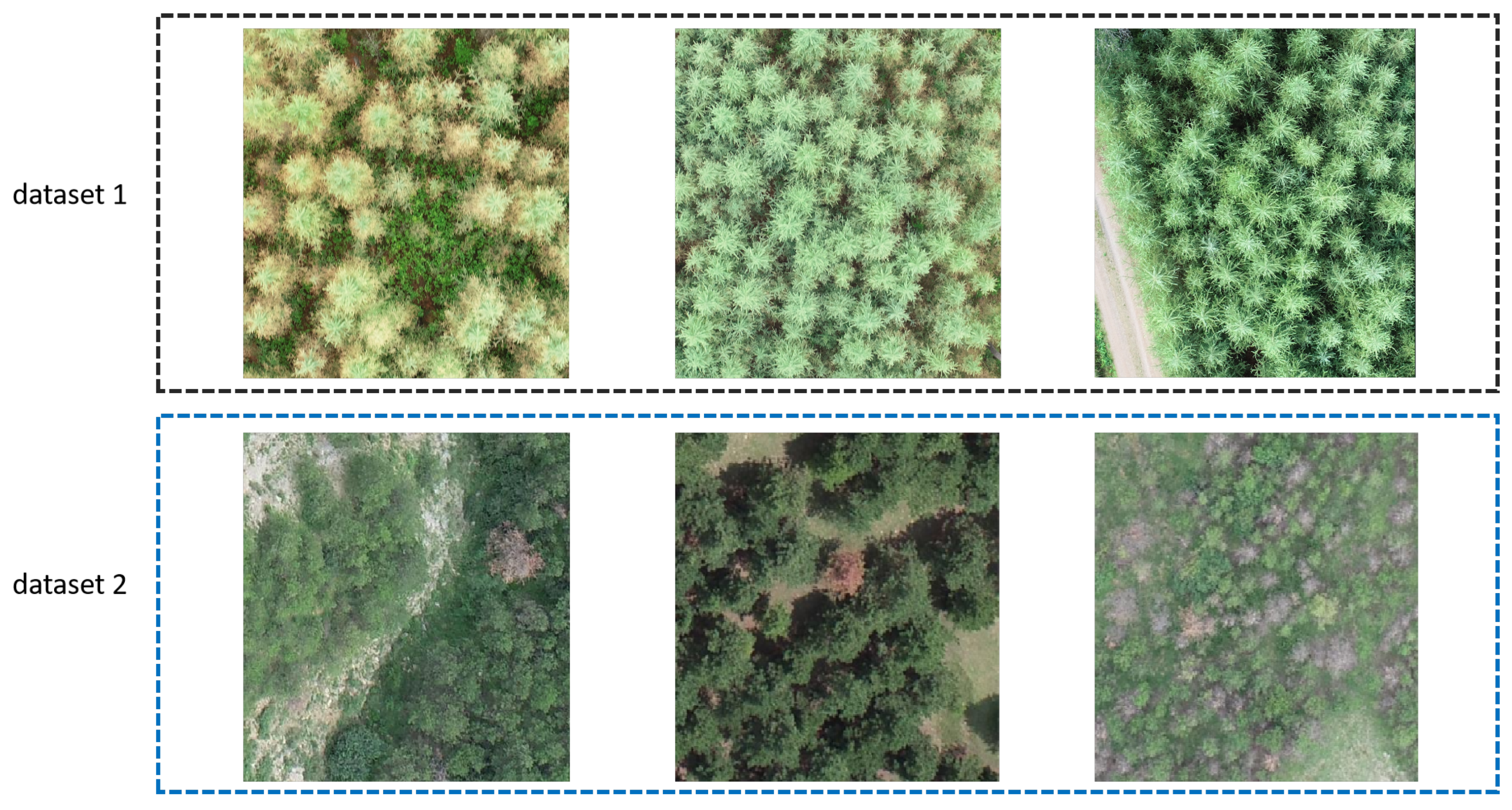

Datasets: (1) We utilize the open-source dataset Larch Casebearer (Swedish Forest Agency (2021): Forest Damages – Larch Casebearer 1.0. National Forest Data Lab, Dataset) [38], which is a project initiated by the Swedish Forest Agency with support from Microsoft that monitors forest areas affected by the white moth. The project annotates the locations of healthy, mildly diseased, and severely diseased trees across five distinct regions. Healthy trees exhibit green foliage with no visible discoloration. Mildly diseased trees show slight yellowing of leaves or minor thinning. Severely diseased trees display severe discoloration or even dieback. In total, the dataset contains more than 1500 images of over 40,000 trees. Several sample images from dataset 1 are displayed within the black dashed box in Figure 3.

(2) We also use the open-source PDT dataset (PDT: Uav Target Detection Dataset for Pests and Diseases Tree; Mingle Zhou, Rui Xing, Delong Han, Zhiyong Qi, Gang Li*; ECCV 2024) [39] from the Shandong Computer Science Center, which consists of aerial images of pine forest areas. It contains more than 4000 images, each with two types of trees: healthy trees and trees damaged by the red turpentine beetle. Several sample images from dataset 2 are displayed within the blue dashed box in Figure 3.

Both datasets consist of aerial forest images captured vertically by unmanned aerial vehicles (UAVs). For both datasets, images with incomplete or missing annotations were removed to ensure data validity. In the Larch Casebearer dataset, this resulted in approximately 800 images with roughly 44,000 annotated instances used for experiments. Similarly, in the PDT dataset, around 3900 images with approximately 110,000 annotations were retained after filtering. To ensure consistency, all images were resized to a uniform resolution of 384 × 384 pixels. The datasets were divided into training, validation, and test sets with a ratio of approximately 5:1:1. All textual inputs in our framework are expressed as natural language prompts.
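The approximate 5:1:1 partition described above can be sketched as follows. The shuffling, the fixed seed, and the function name are assumptions; the paper does not state how images were assigned to each subset.

```python
import random

def split_dataset(items, ratios=(5, 1, 1), seed=0):
    """Shuffle items and split them into train/val/test by integer ratios."""
    items = list(items)
    random.Random(seed).shuffle(items)  # seeded for reproducibility (assumption)
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # remainder goes to the test set
    return train, val, test

# Roughly 800 filtered Larch Casebearer images, identified here by index.
train, val, test = split_dataset(range(800))
```

When the image count is not divisible by seven, integer division leaves a few extra images in the test set, which is one reason the ratio is only approximate.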

4.2. Comparison Methods

To validate the effectiveness of our model, we compare it with the following deep learning methods:

ALEXNET [40]: The first model to apply deep convolutional neural networks to large-scale image classification and achieve a breakthrough.

RESNET50 [5]: A 50-layer convolutional neural network that introduces residual connections to solve the difficulty of training deep networks.

UNET [41]: An encoder-decoder segmentation network whose skip connections fuse multilayer features; its fine segmentation capability supports density map generation and suits small-target or sparse counting tasks.

EFFICIENTNET [42]: An image classification model that combines high accuracy and efficiency through a compound scaling strategy.

ICC [43]: A counting model that extracts rich features through multi-scale convolution and balances accuracy against computational efficiency.

MCNN [44]: A crowd counting model based on multi-column convolutional branches that adapts to different density and scale variations.

COUNTX [45]: A single-stage, class-agnostic model that can count the instances of any category based on an image and a text description.

CLIP-COUNT [35]: A text-guided zero-shot object counting model capable of automatically completing object counting based on natural language prompts, demonstrating considerable application potential.

MSTNET [46]: A Transformer-based network for remote sensing segmentation that captures multi-scale features to improve accuracy.

GRAMFORMER [7]: A graph-modulated Transformer for crowd counting that diversifies attention maps via an anti-similarity graph and modulates node features using centrality encoding to address homogenization in dense scenes.

VL-COUNTER [47]: A text-guided zero-shot object counting model that leverages CLIP’s visual-linguistic alignment capability to generate high-quality density maps for unseen open-vocabulary objects.

DAVE [48]: A zero-shot object counting model that identifies and leverages high-quality exemplars to accurately count objects.

VA-COUNT [25]: An object counting model that combines detection and verification to reliably estimate counts.

4.3. Evaluation Metrics

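In this paper, we use the mean absolute error (MAE) and the root mean square error (RMSE) to evaluate the performance of the model:

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|\hat{y}_i - y_i\right|$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(\hat{y}_i - y_i\right)^2}$$

where $\hat{y}_i$ is the predicted number of the $i$th sample, $y_i$ is the true number of the $i$th sample, and $n$ is the total number of samples.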
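As a small worked example of these two count-error metrics, the sketch below computes them for three hypothetical images; the counts are invented for illustration and are not results from the paper.

```python
import math

def mae(predicted, actual):
    """Mean absolute error between predicted and true per-image counts."""
    return sum(abs(p - t) for p, t in zip(predicted, actual)) / len(actual)

def rmse(predicted, actual):
    """Root mean square error; penalizes large per-image errors more than MAE does."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(predicted, actual)) / len(actual))

predicted_counts = [10, 12, 7]  # hypothetical model outputs
true_counts = [8, 12, 10]       # hypothetical annotations

print(round(mae(predicted_counts, true_counts), 3))   # 1.667
print(round(rmse(predicted_counts, true_counts), 3))  # 2.082
```

RMSE is never smaller than MAE on the same data, so a wide gap between the two indicates that a few images contribute disproportionately large counting errors.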

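We also use the structural similarity index measure (SSIM) and the peak signal-to-noise ratio (PSNR) to evaluate the similarity between the predicted and ground truth density maps. Equation (21) presents the formula for SSIM, and Equation (22) presents the formula for PSNR.

$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)} \tag{21}$$

$$\mathrm{PSNR} = 10\log_{10}\left(\frac{\mathrm{MAX}^2}{\mathrm{MSE}}\right) \tag{22}$$

where $\mu_x$, $\mu_y$, $\sigma_x^2$, $\sigma_y^2$, and $\sigma_{xy}$ represent the means, variances, and covariance of $x$ and $y$, $C_1$ and $C_2$ are constant values that prevent division by zero, $\mathrm{MSE}$ is the mean squared error between $x$ and $y$, and $\mathrm{MAX}$ signifies the density map’s maximum value.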
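The two map-similarity metrics can be sketched in a few lines. This version computes SSIM once over the whole map rather than over sliding windows, and it sets the stabilizing constants to (0.01·L)² and (0.03·L)² for a data range L, following common practice; both choices are assumptions, since the section does not state the window or constants used.

```python
import math

def _flatten(grid):
    return [v for row in grid for v in row]

def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """SSIM from global means, variances, and covariance (no sliding window)."""
    xs, ys = _flatten(x), _flatten(y)
    n = len(xs)
    mu_x, mu_y = sum(xs) / n, sum(ys) / n
    var_x = sum((a - mu_x) ** 2 for a in xs) / n
    var_y = sum((b - mu_y) ** 2 for b in ys) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(xs, ys)) / n
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    return ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))

def psnr(x, y, max_value):
    """Peak signal-to-noise ratio in decibels; infinite for identical maps."""
    xs, ys = _flatten(x), _flatten(y)
    mse = sum((a - b) ** 2 for a, b in zip(xs, ys)) / len(xs)
    if mse == 0:
        return math.inf
    return 10 * math.log10(max_value ** 2 / mse)

truth = [[0.0, 0.2], [0.5, 1.0]]       # toy ground-truth density map
prediction = [[0.1, 0.2], [0.4, 0.9]]  # toy predicted density map
similarity = global_ssim(prediction, truth)
quality_db = psnr(prediction, truth, max_value=1.0)
```

The reported SSIM values (for example 92.1) appear to be on a 0 to 100 scale, so a value from this function would need to be multiplied by 100 to be comparable, assuming that interpretation is right.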

4.4. Measurement Setup

In the experiments, we use the ViT-B/16 encoder from the CLIP model as the image encoder, with the decoder depth set to 4 and the number of decoder attention heads set to 8. We first train with the contrastive loss for 28 epochs and then with the MSE loss for 200 epochs, using a batch size of 32, a learning rate of 0.0001, and the AdamW optimizer. Training is performed on an NVIDIA RTX 4090 GPU. All input images are 384 × 384 pixels.
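For reference, the settings above can be gathered into a single configuration object. The field names and the `stage_for_epoch` helper are hypothetical, and the sketch assumes the two training stages run strictly one after the other with epochs counted from zero; whether the contrastive term remains active during the MSE stage is not stated.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainConfig:
    image_encoder: str = "CLIP ViT-B/16"
    image_size: int = 384          # square input resolution in pixels
    decoder_depth: int = 4
    decoder_heads: int = 8
    contrastive_epochs: int = 28   # first stage
    mse_epochs: int = 200          # second stage
    batch_size: int = 32
    learning_rate: float = 1e-4
    optimizer: str = "AdamW"

def stage_for_epoch(epoch, cfg=TrainConfig()):
    """Return which loss drives training at a given zero-based epoch."""
    total = cfg.contrastive_epochs + cfg.mse_epochs
    if not 0 <= epoch < total:
        raise ValueError(f"epoch must be in [0, {total})")
    return "contrastive" if epoch < cfg.contrastive_epochs else "mse"

cfg = TrainConfig()
schedule = [stage_for_epoch(e, cfg) for e in range(cfg.contrastive_epochs + cfg.mse_epochs)]
```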

4.5. Comparison Experiment

To demonstrate the reliability of our model, we introduce multiple comparative experiments across two datasets. In the Larch Casebearer dataset, we test three types: healthy, mildly diseased, and severely diseased. In the PDT dataset, we conduct tests on the severely diseased condition.

In the Larch Casebearer dataset, single-modal models struggle to train effectively across multiple tree types simultaneously. For these models, we therefore train and test each tree type independently. In contrast, our approach enables unified training of all types within a single framework by using a distinct prompt for each type. For a fair comparison with the other models, we also evaluate our model type by type.
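One way to picture prompt-driven unified training is that every image yields one training sample per tree type, each pairing the same image with a different prompt and target. The prompt wording, dictionary keys, and record layout below are hypothetical; the paper’s actual prompts are not given in this section.

```python
# Hypothetical prompt per tree type; the real prompt text is not specified.
PROMPTS = {
    "healthy": "healthy larch trees",
    "mild": "mildly diseased larch trees",
    "severe": "severely diseased larch trees",
}

def build_samples(image_id, points_by_class):
    """Expand one annotated image into one (image, prompt, target) sample per type."""
    samples = []
    for tree_type, prompt in PROMPTS.items():
        points = points_by_class.get(tree_type, [])
        samples.append({
            "image": image_id,
            "prompt": prompt,
            "points": points,       # annotated tree centers for this type
            "count": len(points),   # counting target for this prompt
        })
    return samples

annotations = {"healthy": [(12, 40), (88, 91)], "severe": [(200, 150)]}
samples = build_samples("img_001", annotations)
```

A single-modal baseline has no such switch, which is why it needs a separately trained model for each tree type.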

As shown in Table 1, our model consistently achieves lower MAE and RMSE, and higher SSIM and PSNR, than the other models across all test scenarios. In addition, Table 2 shows that while the MAE of the other models generally exceeds 3, our model achieves an MAE of 2.85, which is about 0.4 lower than the lowest MAE among the other models. Moreover, our model attains an SSIM of 92.1, whereas none of the other models exceed 90, and achieves a PSNR of 28.4, which is 0.7 higher than the best among the others. Our model thus achieves the lowest MAE and RMSE, as well as the highest SSIM and PSNR, on both datasets, suggesting that it not only delivers more accurate count estimates but also generates density maps with improved structural consistency. These comparative results demonstrate the advantage of our model in category-specific scenarios and further validate its effectiveness.

By accurately counting diseased trees within the target areas, our model not only proves its high precision and robustness in practical applications, but also provides a scientific foundation for disease monitoring and forest management. Specifically, the model enables efficient identification and counting of diseased trees, substantially reducing the cost and time of manual surveys while improving data accuracy and reliability. This achievement offers strong technical support for forest protection and ecological restoration, highlighting the great potential of deep learning technologies in natural resource management.

4.6. Ablation Experiment

To better validate each module in the model, we perform ablation experiments on it.

Our design uses the image encoder and the text encoder to produce the embeddings of their respective modalities. We apply visual cue optimization in the image encoder and place a contrastive loss between the corresponding outputs of the two modalities. We then fuse the two kinds of information, generate the corresponding density maps with the decoder, and compare them with the ground truth density maps.
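A contrastive loss between paired image and text embeddings is commonly written as a symmetric cross-entropy over cosine-similarity logits, as in CLIP. The sketch below shows that form. Pairing whole-image embeddings with prompts inside a batch and the temperature of 0.07 are assumptions; the paper may instead contrast patch-level features with the text embedding.

```python
import math

def normalize(vec):
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec]

def cross_entropy_diagonal(logits):
    """Mean cross-entropy where the correct column for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_sum_exp - row[i]
    return total / len(logits)

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE: image-to-text and text-to-image directions averaged."""
    img = [normalize(v) for v in image_embs]
    txt = [normalize(v) for v in text_embs]
    logits = [[sum(a * b for a, b in zip(i, t)) / temperature for t in txt] for i in img]
    logits_t = [list(col) for col in zip(*logits)]  # transpose for text-to-image
    return 0.5 * (cross_entropy_diagonal(logits) + cross_entropy_diagonal(logits_t))

aligned = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
mismatched = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

The loss is near zero when each image embedding points in the same direction as its own prompt embedding and large when the pairs are swapped, which is the alignment pressure the ablation later switches on and off.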

First, we verify the benefit of the multimodal design by training with and without textual information. As shown in Table 3 and Table 4, experiments are conducted on the two datasets. The MAE and RMSE values are consistently lower, and the SSIM and PSNR values higher, when textual input is included. These findings validate the effectiveness of the proposed multimodal model.

We define three variables: rotational position encoding (Rotate), contrastive loss (Contrast), and fusion module (Fusion). ✓ indicates that the variable is enabled, while ✗ indicates that it is disabled. In the fusion module, Cross represents the fusion of image information and text information through the gated bidirectional cross-attention mechanism, and Add represents element-wise addition of image information and text information. Enabling and disabling each variable in turn shows its individual effect on the model. Val MAE and Val RMSE represent the mean absolute error and root mean square error on the validation set, and Test MAE and Test RMSE represent the mean absolute error and root mean square error on the test set, respectively. SSIM and PSNR denote the structural similarity index measure and peak signal-to-noise ratio, which are reported as the average values across the validation and test sets. Table 5 shows that the model achieves the best results when using the rotational position encoding, contrastive loss, and gated bidirectional cross-attention module together, with a mean absolute error of 3.50 and a root mean square error of 4.75 on the validation set, and a mean absolute error of 3.62 and a root mean square error of 5.02 on the test set. In addition, the model attains an SSIM of 91.2 and a PSNR of 28.2, further demonstrating its superior reconstruction quality.

Following the same design, Table 6 shows that the model again achieves the best results when using the rotational position encoding, contrastive loss, and gated bidirectional cross-attention module, with a mean absolute error of 2.85 and a root mean square error of 4.17 on the validation set, and a mean absolute error of 2.91 and a root mean square error of 4.18 on the test set. Moreover, the model attains an SSIM of 92.1 and a PSNR of 28.4, further confirming the superior reconstruction quality and the value of each module.

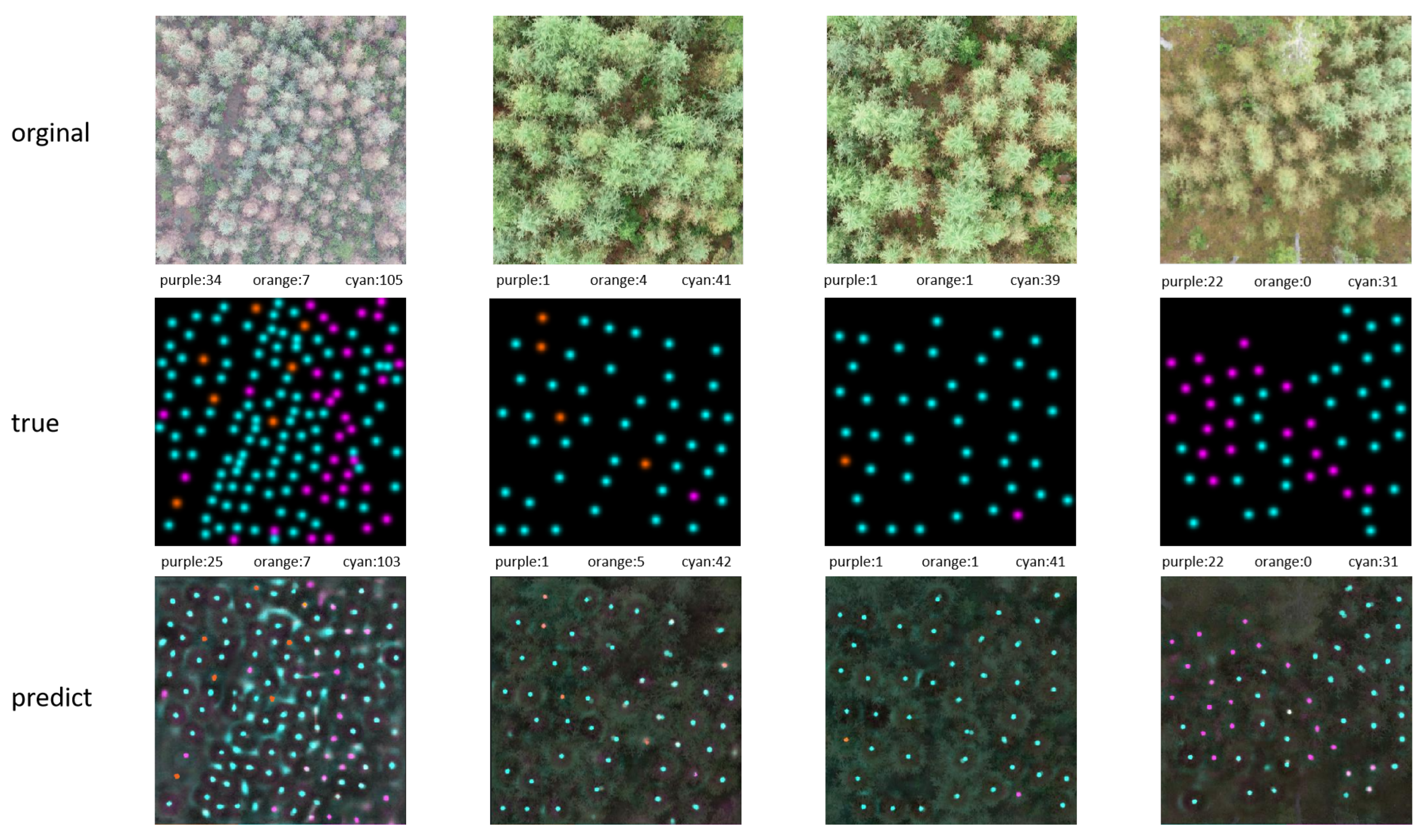

4.7. Case Study

To illustrate the test results of our model on the two datasets, this section presents specific visualization results that show the model’s prediction performance in complex scenarios and convey the reliability of its predictions.

Figure 4 shows the effect of the model’s visual recognition of diseased trees. The first row is the original image, the second row is the real point map, and the third row is the model-predicted point map. The colors of the points indicate different tree health states: orange for healthy trees, cyan for mildly diseased trees, and purple for severely diseased trees. The number following the color above each image is the number of trees of that type represented by that color. All images are taken from the Larch Casebearer dataset.
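The link between annotated point maps and density-based counts can be sketched as follows. A common convention in density-map counting, assumed here rather than taken from the paper, is to place a Gaussian normalized to sum to one at every annotated tree, so that the sum of the map equals the number of trees. The kernel width `sigma` and the function names are hypothetical.

```python
import math

def density_map(points, height, width, sigma=1.5):
    """Sum of per-point Gaussians, each normalized to total mass 1 over the grid."""
    grid = [[0.0] * width for _ in range(height)]
    for px, py in points:
        kernel = [[math.exp(-((x - px) ** 2 + (y - py) ** 2) / (2 * sigma ** 2))
                   for x in range(width)] for y in range(height)]
        mass = sum(map(sum, kernel))
        for y in range(height):
            for x in range(width):
                grid[y][x] += kernel[y][x] / mass
    return grid

def count_from_density(grid):
    """The estimated object count is the integral (sum) of the density map."""
    return sum(map(sum, grid))

tree_centers = [(2, 3), (10, 4), (7, 12)]  # hypothetical (x, y) annotations
ground_truth = density_map(tree_centers, height=16, width=16)
estimated_count = count_from_density(ground_truth)  # close to 3.0
```

Under this convention, overlapping canopies simply add their densities, so the count stays correct even where individual trees are hard to separate visually.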

From the four examples in Figure 4, we can observe that even in complex scenarios such as dense trees and overlapping tree canopies, our model is still able to accurately localize the trees in the images according to the text prompts. Traditional tree detection models, by comparison, can usually be trained only for a single scene or a specific class of trees and cannot handle detection tasks under different conditions. By handling multiple tree types in a single training session through a multi-task learning strategy, our approach improves the adaptability and robustness of the model and makes it simpler and more practical to use.

4.8. Generalization Ability Test

To rigorously assess the generalization capability of our model, we conducted experiments on previously unseen test datasets from different geographic regions. This evaluation relies on quantitative performance metrics, allowing us to systematically examine the model’s robustness and adaptability across diverse spatial contexts. The results provide a comprehensive validation of the model’s effectiveness and reliability in scenarios not encountered during training.

The Kampe area is one of the five regions included in the Larch Casebearer dataset. It contains the largest number of annotations and also has the highest average number of annotations per image. There are approximately 170 images from the Kampe area, with around 15,000 annotations. As shown in Table 7, although our model exhibits some decline in MAE, RMSE, SSIM, and PSNR, these metrics remain within a reasonable range. This shows that the model not only learns the features of a specific dataset but also has some cross-domain adaptability, which supports the effectiveness and robustness of our approach on unseen data.
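A held-out-region evaluation of this kind can be sketched as a simple filter over annotated records. The record layout and the placeholder region names other than Kampe are hypothetical, and the sketch assumes that all Kampe images are excluded from training.

```python
def hold_out_region(records, region):
    """Split records so that one geographic region is used only for testing."""
    train = [r for r in records if r["region"] != region]
    test = [r for r in records if r["region"] == region]
    return train, test

# Toy records; real entries would reference image files and annotations.
records = [
    {"image": "a.png", "region": "Kampe"},
    {"image": "b.png", "region": "region_B"},
    {"image": "c.png", "region": "region_C"},
    {"image": "d.png", "region": "Kampe"},
]
train, test = hold_out_region(records, "Kampe")

# Annotation density implied by the approximate figures quoted above.
kampe_trees_per_image = round(15000 / 170, 1)  # 88.2
```

At roughly 88 trees per image, the held-out region is also the densest one, so this test combines a geographic shift with a harder counting regime.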

We attribute this generalization across classification, detection, and counting to the transfer learning mechanism: the model extracts general features from existing knowledge and applies them to unseen data distributions, so it can detect and approximately count trees in new scenarios even without being trained on that specific dataset.

5. Discussion

This study proposes an efficient approach to distinguish trees with varying health conditions for forestry statistical analysis, providing robust technical support for ecological monitoring and tree disease prevention. The proposed method demonstrates significant potential in assessing tree health status based on remote sensing data, offering strong support for the efficient management of forest resources. However, certain uncertainties remain, as the model’s performance may be affected by factors such as illumination conditions, shooting angles, atmospheric variations, and background interference.

In the experimental stage, two large-scale datasets were employed to provide a solid and representative foundation for model training. In the future, with the incorporation of more diverse and heterogeneous datasets, the diversity and coverage of training data are expected to be further enhanced. We plan to continuously collect and integrate additional relevant data resources to improve the model’s robustness and generalization ability under varying data distributions. The support of larger-scale data will not only optimize the model training process but also enhance its stability and accuracy in complex and diverse scenarios, thereby improving its adaptability and practical value. Furthermore, we intend to extend this method to broader geographic regions and integrate it with UAV-based canopy feature analysis to enhance its spatial scalability in diverse forest environments.

Our model is about 1.1 GB in size and processes each image in approximately 46 ms. It performs efficiently, but the speed is still not sufficient for real-time UAV aerial analysis. In future work, we will develop lighter and more optimized structures to improve real-time performance and deployment feasibility.
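The real-time gap can be made explicit with a short calculation. The 30 frames-per-second target is an assumed reference point for video-rate processing, not a figure from the paper.

```python
def images_per_second(latency_ms):
    """Throughput implied by a per-image latency, assuming sequential processing."""
    return 1000.0 / latency_ms

latency_ms = 46.0                      # per-image latency reported above
throughput = images_per_second(latency_ms)
target_fps = 30.0                      # assumed real-time target
required_latency_ms = 1000.0 / target_fps

print(round(throughput, 2))            # 21.74
print(round(required_latency_ms, 1))   # 33.3
```

Under that assumption, reaching video rate would require cutting per-image latency by a little over a quarter, before accounting for any tiling of larger UAV frames into 384 × 384 inputs.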

6. Conclusions

In this paper, we extended the CLIP model into a deep learning model for diseased tree counting. We introduced rotational position encoding in the image encoder to improve the model’s ability to perceive diseased trees at different locations in the image, aligned and fused multimodal features through the gated bidirectional cross-attention module and the contrastive loss function, and used the decoder to generate density maps for counting.

This deep learning model is applied to the task of recognizing and counting diseased trees in forests. It effectively extracts key regions from disease-affected images and integrates textual descriptions, such as disease types and characteristic terms, for accurate identification and classification. By enhancing the automation and precision of diseased tree detection and tree counting, the proposed model holds promise for supporting forest resource protection and pest management. It can assist relevant authorities in early warning and informed decision-making, thereby providing technological support for ecological security and sustainable development.