DLiteNet: A Dual-Branch Lightweight Framework for Efficient and Precise Building Extraction from Visible and SAR Imagery

Highlights

- A dual-branch lightweight multimodal framework (DLiteNet) is proposed. It decouples building extraction into a context branch for global semantics (via STDAC) and a CDAM-guided spatial branch for edges and details, with MCAM adaptively fusing visible–SAR features. Removing the complex decoding stage enables efficient segmentation.

- DLiteNet consistently outperforms state-of-the-art multimodal building-extraction methods in IoU and F1-score on the DFC23 Track2 and MSAW datasets while using far fewer parameters and GFLOPs, achieving a favorable efficiency–precision trade-off and showing strong potential for real-time on-board deployment.

- By removing complex decoding and adopting a dual-branch, task-decoupled design, DLiteNet shows that accurate visible–SAR building extraction is achievable under tight compute and memory budgets. This enables large-area, high-frequency mapping and provides a reusable blueprint for other multimodal segmentation tasks (e.g., road extraction, damage assessment, and change detection).

- Its lightweight yet precise architecture makes real-time on-board deployment on UAVs and other edge platforms practical for city monitoring and rapid disaster response.

Abstract

1. Introduction

- 1.

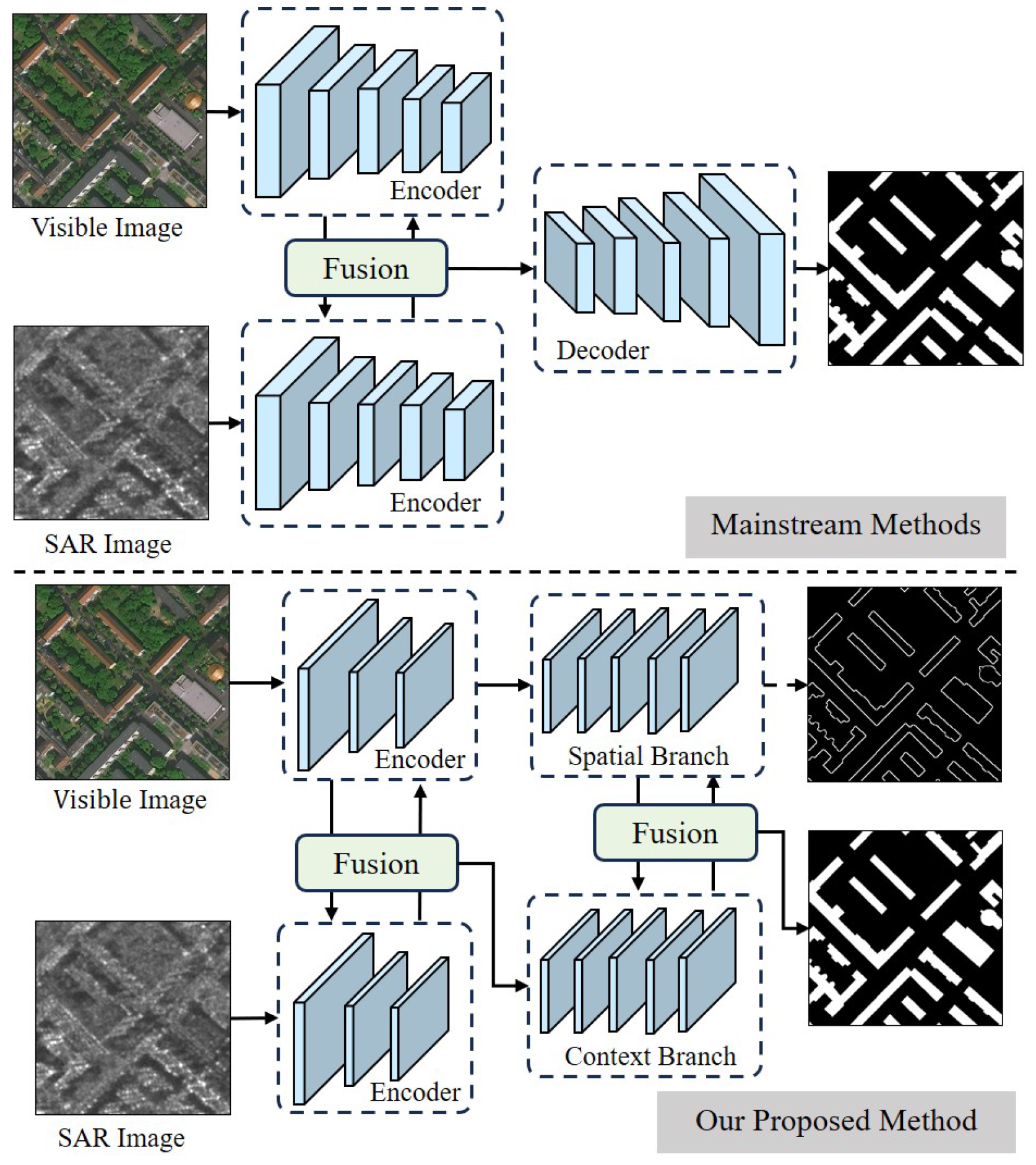

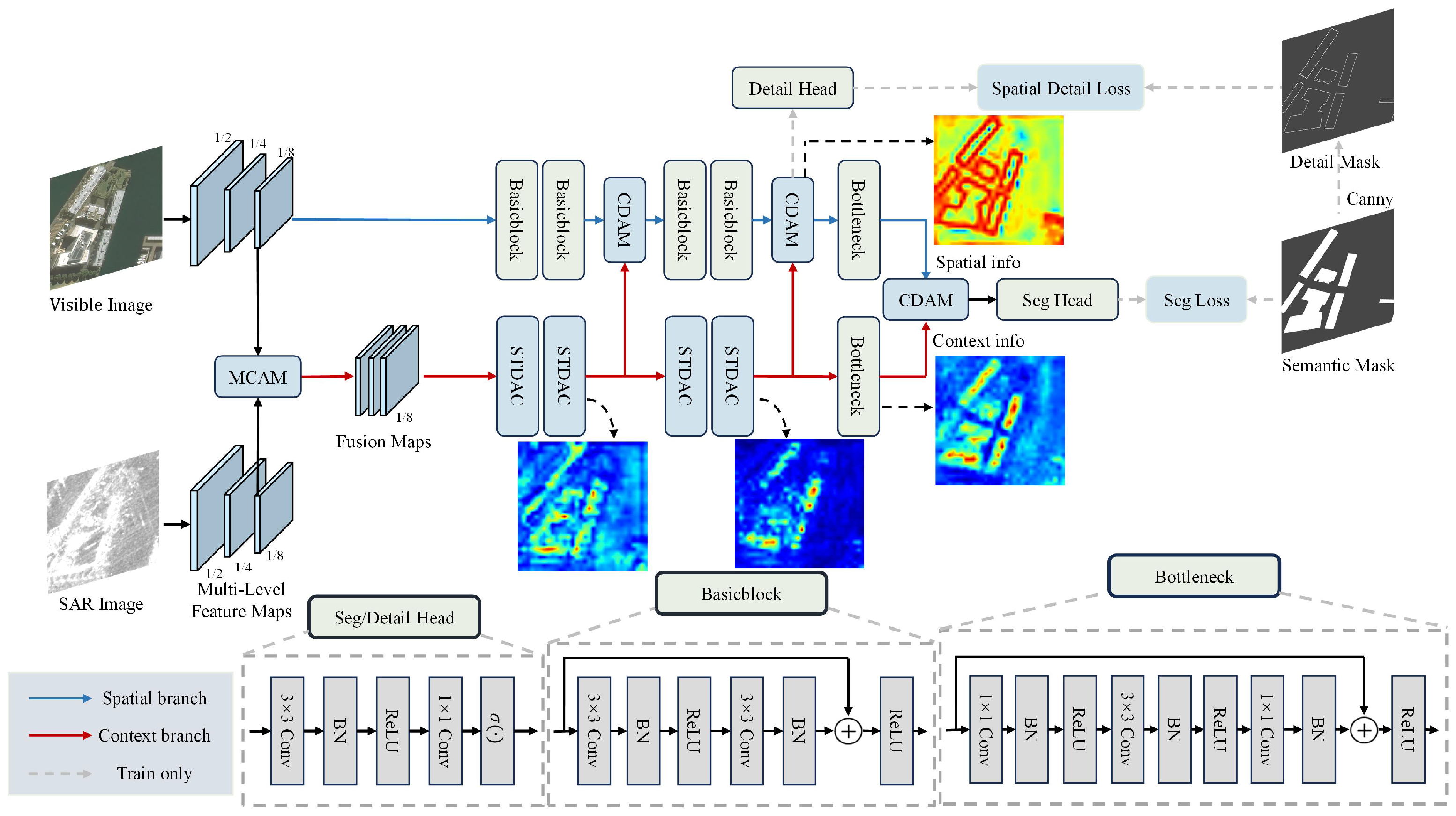

- We propose DLiteNet, a dual-branch lightweight network for multimodal building extraction using visible and SAR imagery. The overall task is functionally decoupled into two subtasks: global context modeling and spatial detail capturing. Accordingly, we design a context branch to learn global semantic representations by jointly leveraging visible and SAR information, and a spatial branch to extract fine-grained structural and texture features from visible images. This design eliminates the need for a conventional decoder, enabling an efficient balance between accuracy and computational cost.

- 2.

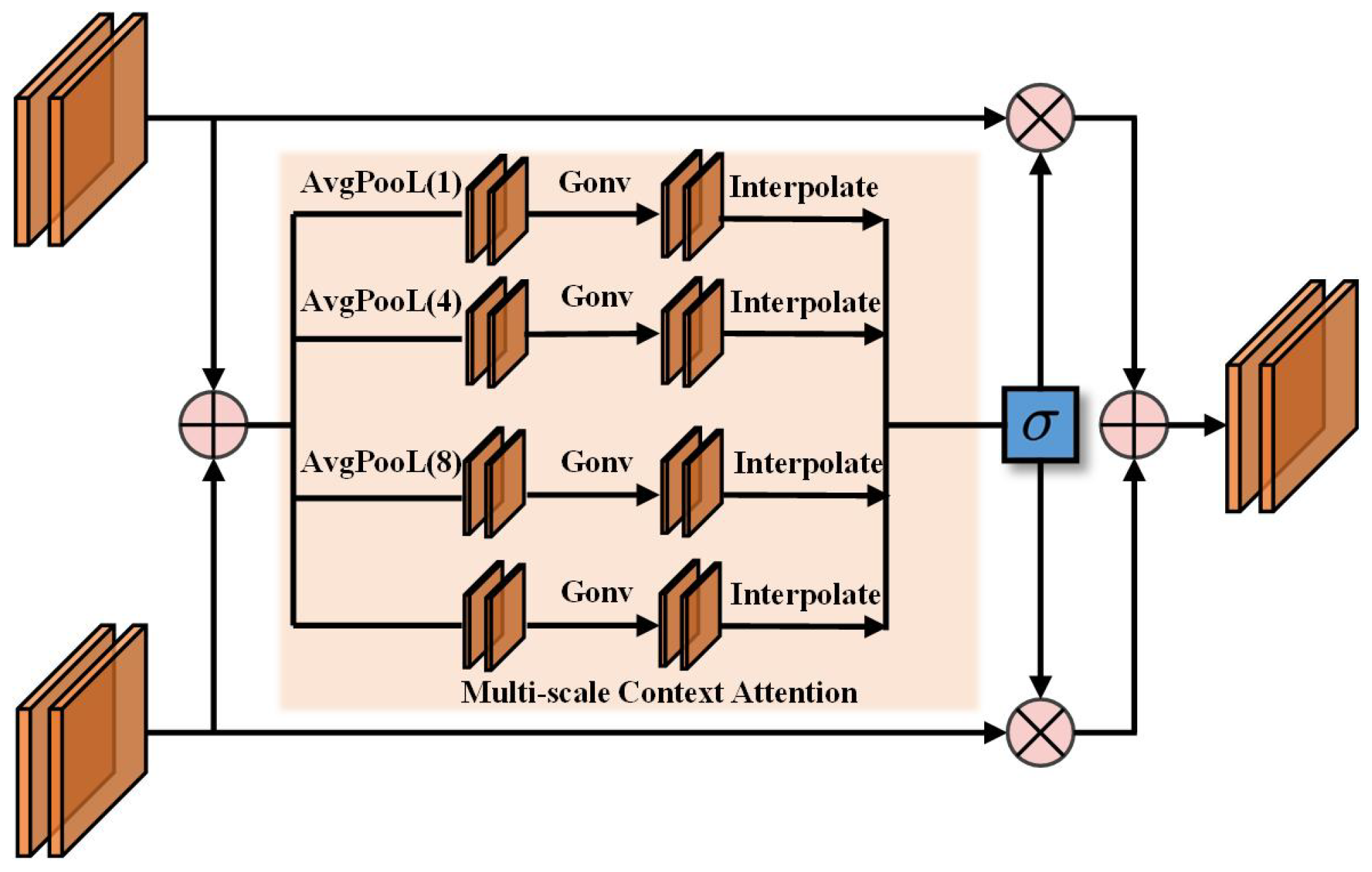

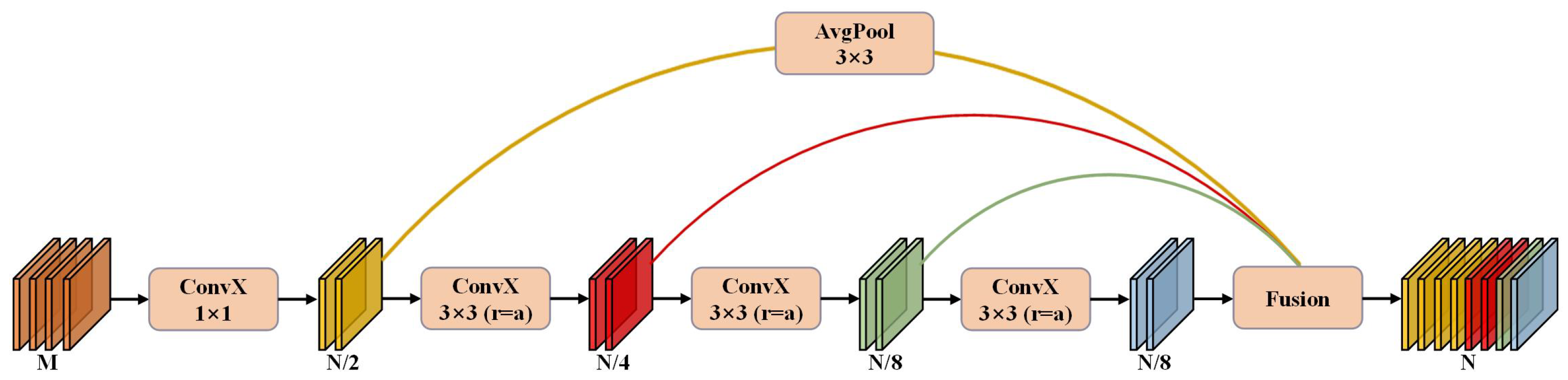

- In the context branch, we propose the Multi-scale Context Attention Module (MCAM), which facilitates adaptive multimodal feature interaction through multi-scale context attention and a complementary selection gate. This module dynamically selects and integrates complementary contextual information from both modalities. Additionally, we propose the Short-Term Dense Atrous Concatenate (STDAC) module, which employs multi-rate atrous convolutions and hierarchical feature fusion to enhance multi-scale contextual understanding of buildings while significantly reducing the parameter burden.

- 3.

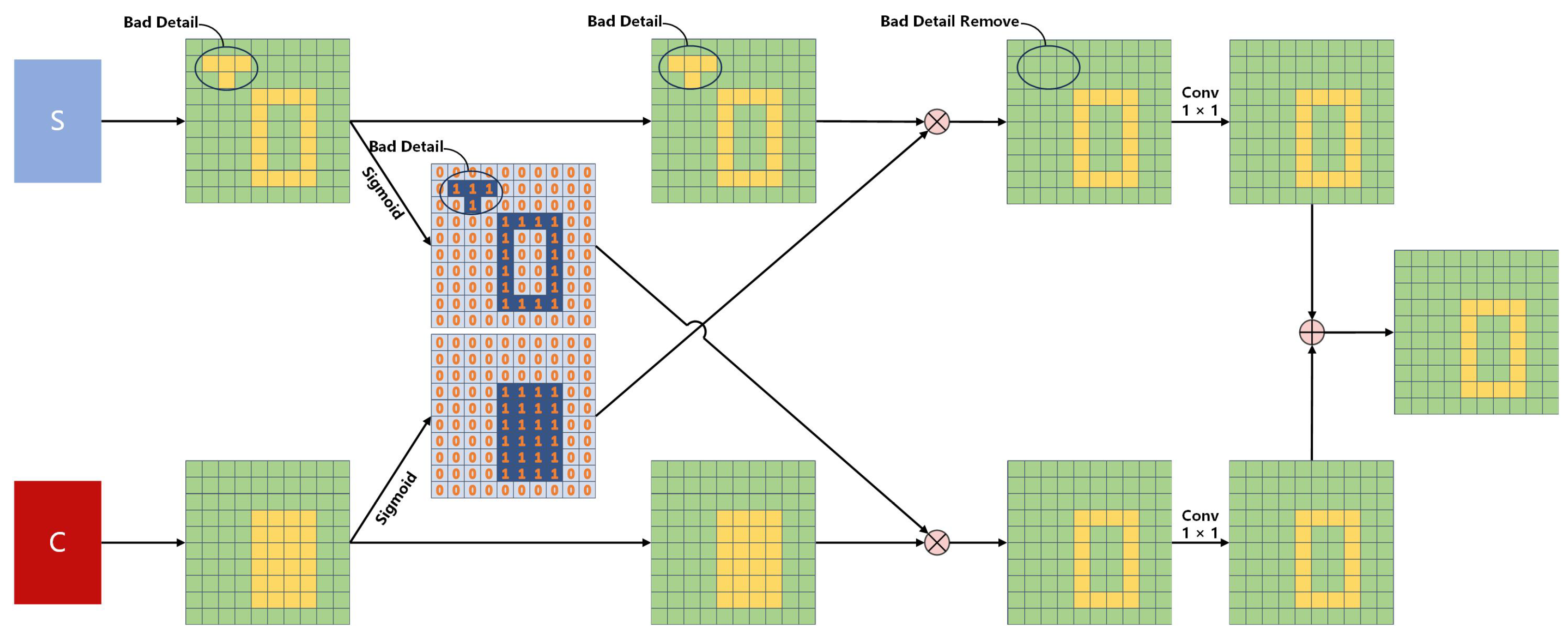

- In the spatial branch, a sequence of basic residual blocks is employed to extract high-resolution structural and textural features from visible images. To enhance spatial feature learning, we propose the Context-Detail Aggregation Module (CDAM), which incorporates contextual priors from the context branch to guide detail refinement. Furthermore, explicit edge supervision is incorporated to reinforce building boundary delineation, leading to more precise and structurally coherent segmentation results.

2. Related Work

2.1. Real-Time Semantic Segmentation in Natural Scenes

2.2. Semantic Segmentation in Building Extraction

3. Materials and Methods

3.1. Datasets

3.1.1. MSAW Dataset

3.1.2. DFC23 Track2 Dataset

3.2. Evaluation Metrics

3.2.1. Accuracy Metrics

- Precision indicates the fraction of correctly predicted building pixels among all pixels predicted as buildings.

- Recall captures the fraction of actual building pixels correctly detected by the model.

- F1-Score combines Precision and Recall into a single measure as their harmonic mean, so it is high only when both are high.

- IoU evaluates spatial consistency by measuring the overlap ratio between predicted regions and ground truth annotations.
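
The four accuracy metrics above follow from pixel-level counts of true positives (TP), false positives (FP) and false negatives (FN) for the building class: $\text{Precision} = TP/(TP+FP)$, $\text{Recall} = TP/(TP+FN)$, $F1 = 2PR/(P+R)$ and $\text{IoU} = TP/(TP+FP+FN)$. These are the standard definitions. The minimal sketch below assumes the counts are pooled over the whole test set (per-image averaging would give slightly different numbers); the function and class names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Pixel counts for the building class, pooled over a dataset."""
    tp: int  # building pixels predicted as building
    fp: int  # background pixels predicted as building
    fn: int  # building pixels predicted as background


def confusion_from_masks(pred, gt):
    """Count TP/FP/FN from two equally sized binary masks (nested lists of 0/1)."""
    tp = fp = fn = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            if p and g:
                tp += 1
            elif p:
                fp += 1
            elif g:
                fn += 1
    return Confusion(tp, fp, fn)


def building_metrics(c):
    """Precision, recall, F1 and IoU (as fractions) for the building class."""
    precision = c.tp / (c.tp + c.fp) if c.tp + c.fp else 0.0
    recall = c.tp / (c.tp + c.fn) if c.tp + c.fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    iou = c.tp / (c.tp + c.fp + c.fn) if c.tp + c.fp + c.fn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}


counts = confusion_from_masks([[1, 1, 0], [0, 1, 0]], [[1, 0, 0], [0, 1, 1]])
print(counts, building_metrics(counts))
```

Because $F1 = 2TP/(2TP+FP+FN)$, pooled counts satisfy $\text{IoU} = F1/(2 - F1)$. For DLiteNet on DFC23 Track2, 0.903/(2 - 0.903) ≈ 0.823, which matches the reported IoU of 82.3%.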

3.2.2. Computational Cost Metrics

- Parameters denote the count of trainable weights within the model, reflecting both storage requirements and architectural complexity. A smaller number of parameters typically indicates a more lightweight model.

- GFLOPs measure the number of floating-point operations (in billions) required for one forward pass, giving a hardware-independent estimate of inference cost. Lower GFLOPs usually correspond to higher efficiency.

- FPS indicates the number of frames the model can process per second. In this paper, FPS is measured using an NVIDIA A5000 GPU.

- Inference Time refers to the per-frame latency of the model. For a given FPS value, the inference time in milliseconds is computed as $T_{\mathrm{inf}} = 1000 / \mathrm{FPS}$.
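
The FPS and inference-time columns in the result tables are related by $T_{\mathrm{inf}} = 1000 / \mathrm{FPS}$; for example, 1000/80.28 ≈ 12.46 ms for DLiteNet on DFC23 Track2. The sketch below shows that conversion plus a generic timing loop. The warm-up and iteration counts are assumptions rather than the authors' benchmarking protocol, and `infer` is a hypothetical callable standing in for a model forward pass.

```python
import time


def fps_to_latency_ms(fps):
    """Per-frame latency in milliseconds for a throughput given in frames per second."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def measure_fps(infer, sample, warmup=10, iters=100):
    """Estimate FPS by timing repeated calls of `infer` on one input.

    On a GPU, `infer` must block until the result is ready (e.g. by
    synchronizing the device); otherwise the measured FPS is too optimistic.
    """
    for _ in range(warmup):  # warm-up calls are excluded from the timing
        infer(sample)
    start = time.perf_counter()
    for _ in range(iters):
        infer(sample)
    elapsed = time.perf_counter() - start
    return iters / elapsed


print(round(fps_to_latency_ms(80.28), 2))  # DLiteNet, DFC23 Track2 table
```

Timing many iterations and dividing, rather than timing a single call, averages out scheduler noise and the resolution limits of the clock.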

3.3. Implementation Details

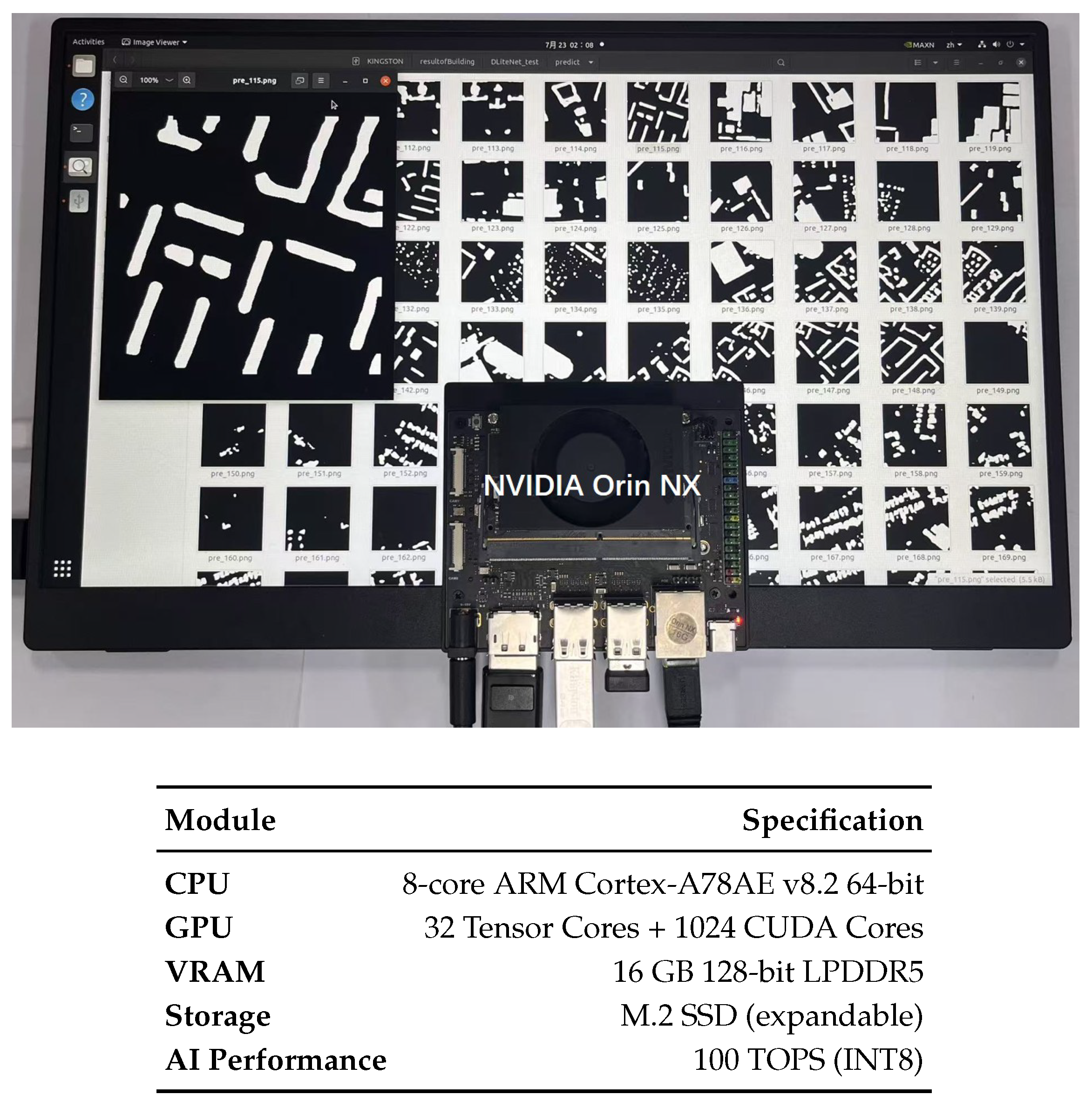

3.3.1. Computing Facilities

High-Performance Training and Inference Platform

Edge Inference Platform

3.3.2. Training and Inference Settings

3.4. Overall Network Architecture
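
To illustrate why the dual-branch design described in the Introduction needs no conventional decoder, the sketch below traces feature-map sizes through a hypothetical BiSeNet-style layout [16]: a spatial branch at stride 8, a context branch at stride 32, one fusion at the spatial resolution, and a single final upsampling. The strides and the function name are illustrative assumptions, not DLiteNet's actual configuration.

```python
import math


def ceil_div(size, stride):
    """Feature-map size after downsampling by `stride` with 'same' padding."""
    return -(-size // stride)


def trace_dual_branch(h, w, spatial_stride=8, context_stride=32):
    """Trace sizes through a hypothetical decoder-free dual-branch layout."""
    return {
        # high-resolution detail features
        "spatial_branch": (ceil_div(h, spatial_stride), ceil_div(w, spatial_stride)),
        # low-resolution global semantics
        "context_branch": (ceil_div(h, context_stride), ceil_div(w, context_stride)),
        # context is upsampled once to the spatial resolution and fused there
        "context_upsample_factor": context_stride // spatial_stride,
        # a single upsampling of the fused prediction restores the input size
        "head_upsample_factor": spatial_stride,
        # a decoder that upsamples 2x per stage would need log2(stride) stages
        "unet_decoder_stages": int(math.log2(context_stride)),
    }


print(trace_dual_branch(512, 512))
```

Under these assumptions, the fused features are processed once at 1/8 resolution instead of passing through five progressively larger decoder stages, which is where most of the computation in encoder-decoder models such as U-Net [9] is spent.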

3.5. MCAM: Balancing the Context of Visible and SAR
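
As a rough illustration of the complementary selection gate described for MCAM in the Introduction, the sketch below computes a multi-scale context score for each channel of the visible and SAR features, turns their difference into a gate $g_c$, and fuses the two modalities as $g_c \cdot \text{vis} + (1 - g_c) \cdot \text{SAR}$, so the two weights always sum to one. The pyramid pooling, the max over regions, and the fixed `temperature` are illustrative assumptions; a trained module would learn its gate from data, and this is not the paper's MCAM.

```python
import math


def mean(values):
    return sum(values) / len(values)


def multi_scale_context(channel, grid_sizes=(1, 2)):
    """Context score of one 2D channel from region pooling at several grid sizes.

    For each grid size g the map is split into g x g regions, each region is
    average-pooled, and the strongest region is kept; scores are then averaged.
    The map must be at least max(grid_sizes) pixels in each dimension.
    """
    h, w = len(channel), len(channel[0])
    per_scale = []
    for g in grid_sizes:
        region_means = []
        for i in range(g):
            for j in range(g):
                rows = range(i * h // g, (i + 1) * h // g)
                cols = range(j * w // g, (j + 1) * w // g)
                region_means.append(mean([channel[r][c] for r in rows for c in cols]))
        per_scale.append(max(region_means))
    return mean(per_scale)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(vis, sar, temperature=1.0):
    """Fuse two feature stacks [C][H][W] with a complementary per-channel gate."""
    fused, gates = [], []
    for v_ch, s_ch in zip(vis, sar):
        # gate > 0.5 favours the visible channel, < 0.5 favours SAR
        g = sigmoid(temperature * (multi_scale_context(v_ch) - multi_scale_context(s_ch)))
        gates.append(g)
        fused.append([[g * v + (1 - g) * s for v, s in zip(v_row, s_row)]
                      for v_row, s_row in zip(v_ch, s_ch)])
    return fused, gates


fused, gates = gated_fusion([[[1.0, 1.0], [1.0, 1.0]]], [[[0.0, 0.0], [0.0, 0.0]]])
print(gates)
```

The complementary form $g$ and $1 - g$ means that emphasizing one modality for a channel automatically de-emphasizes the other, which is one simple way to let the network fall back on SAR where the visible context is weak.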

3.6. STDAC: Short-Term Dense Atrous Concatenate
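
The STDAC ablation varies the dilation rates d of the atrous convolutions (1, 1, 1), (2, 2, 2) and (3, 3, 3). The sketch below shows why dilation helps a lightweight block: it enlarges the receptive field of each stage without adding weights. It assumes an STDC-style layout following [18] (a 1×1 convolution to half the width, then three 3×3 convolutions halving the width, the last keeping it, with all intermediate outputs concatenated); these widths and the function names are assumptions, not the paper's exact STDAC configuration.

```python
def conv_params(c_in, c_out, k, bias=False):
    """Weight count of a k x k convolution; the dilation rate does not change it."""
    return c_in * c_out * k * k + (c_out if bias else 0)


def stdac_like_block(channels, dilations=(3, 3, 3)):
    """Receptive fields and weights of a hypothetical STDC-style block with atrous 3x3 convs."""
    widths = [channels // 2, channels // 4, channels // 8, channels // 8]
    params = conv_params(channels, widths[0], 1)  # leading 1x1 conv
    rf = 1  # receptive field of the 1x1 output, relative to the block input
    receptive_fields = [rf]
    for c_in, c_out, d in zip(widths[:-1], widths[1:], dilations):
        params += conv_params(c_in, c_out, 3)
        rf += 2 * d  # a stride-1 3x3 conv with dilation d widens the field by 2*d
        receptive_fields.append(rf)
    # concatenating every stage output yields multi-scale features of `channels` width
    return {"out_channels": sum(widths), "params": params, "receptive_fields": receptive_fields}


print(stdac_like_block(256, (1, 1, 1)))
print(stdac_like_block(256, (3, 3, 3)))
print(2 * conv_params(256, 256, 3))  # basic block: two 3x3 convs at full width
```

With 256 channels, both dilation settings use 134,144 weights, but the concatenated outputs cover receptive fields of 1, 3, 5, 7 pixels for d = 1 versus 1, 7, 13, 19 pixels for d = 3. Under these assumed widths, a basic block of two full-width 3×3 convolutions needs 1,179,648 weights.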

3.7. CDAM: Fast Aggregation of Contexts and Details
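
The Introduction describes CDAM as using contextual priors from the context branch to guide detail refinement. A minimal sketch of that idea, similar in spirit to the guided aggregation of BiSeNetV2 [17], upsamples a coarse context map to the detail resolution, uses its sigmoid as a spatial weight on the detail features, and adds the context back. The single-channel maps, nearest-neighbour upsampling and the exact combination rule are assumptions for illustration, not the paper's CDAM.

```python
import math


def upsample_nearest(fmap, factor):
    """Nearest-neighbour upsampling of a 2D list by an integer factor."""
    return [[fmap[r // factor][c // factor] for c in range(len(fmap[0]) * factor)]
            for r in range(len(fmap) * factor)]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def context_guided_detail(detail, context):
    """Reweight a high-resolution detail map with an upsampled context prior.

    `context` must be coarser than `detail` by the same integer factor in both
    dimensions. Each detail value is scaled by sigmoid(context), emphasising
    regions the context branch considers building-like, and the upsampled
    context is then added back.
    """
    factor = len(detail) // len(context)
    ctx_up = upsample_nearest(context, factor)
    return [[d * sigmoid(c) + c for d, c in zip(d_row, c_row)]
            for d_row, c_row in zip(detail, ctx_up)]


print(context_guided_detail([[2.0, 2.0], [2.0, 2.0]], [[0.0]]))
```

Because the weighting is elementwise, the cost of such a fusion grows only linearly with the number of detail pixels, which suits a lightweight model.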

3.8. Loss Functions
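
The explicit edge supervision mentioned in the Introduction requires an edge target. One common way to derive it, assumed here rather than taken from the paper, is to mark building pixels that touch background in their 4-neighbourhood, and then to add a binary cross-entropy (BCE) term on an edge output to the BCE on the segmentation output. The loss terms, the `edge_weight` parameter and all function names below are hypothetical; DLiteNet's actual loss composition and weights may differ.

```python
import math

NEIGHBOURS = ((-1, 0), (1, 0), (0, -1), (0, 1))


def boundary_map(mask):
    """Edge target: building pixels with at least one 4-connected background neighbour.

    Neighbours outside the tile are ignored, so a building cut by the tile
    border is not marked along that border.
    """
    h, w = len(mask), len(mask[0])

    def touches_background(r, c):
        for dr, dc in NEIGHBOURS:
            rr, cc = r + dr, c + dc
            if 0 <= rr < h and 0 <= cc < w and mask[rr][cc] == 0:
                return True
        return False

    return [[1 if mask[r][c] and touches_background(r, c) else 0 for c in range(w)]
            for r in range(h)]


def binary_cross_entropy(probs, targets, eps=1e-7):
    """Mean BCE over all pixels of two equally sized 2D lists."""
    total, n = 0.0, 0
    for p_row, t_row in zip(probs, targets):
        for p, t in zip(p_row, t_row):
            p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
            total -= t * math.log(p) + (1 - t) * math.log(1 - p)
            n += 1
    return total / n


def total_loss(seg_prob, edge_prob, mask, edge_weight=1.0):
    """Segmentation BCE plus a weighted edge BCE against targets derived from the mask."""
    edge_target = boundary_map(mask)
    return (binary_cross_entropy(seg_prob, mask)
            + edge_weight * binary_cross_entropy(edge_prob, edge_target))
```

Edge pixels are a small fraction of each tile, so in practice the edge term is often given a larger weight or a class-balanced formulation; `edge_weight` exposes that choice.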

4. Results

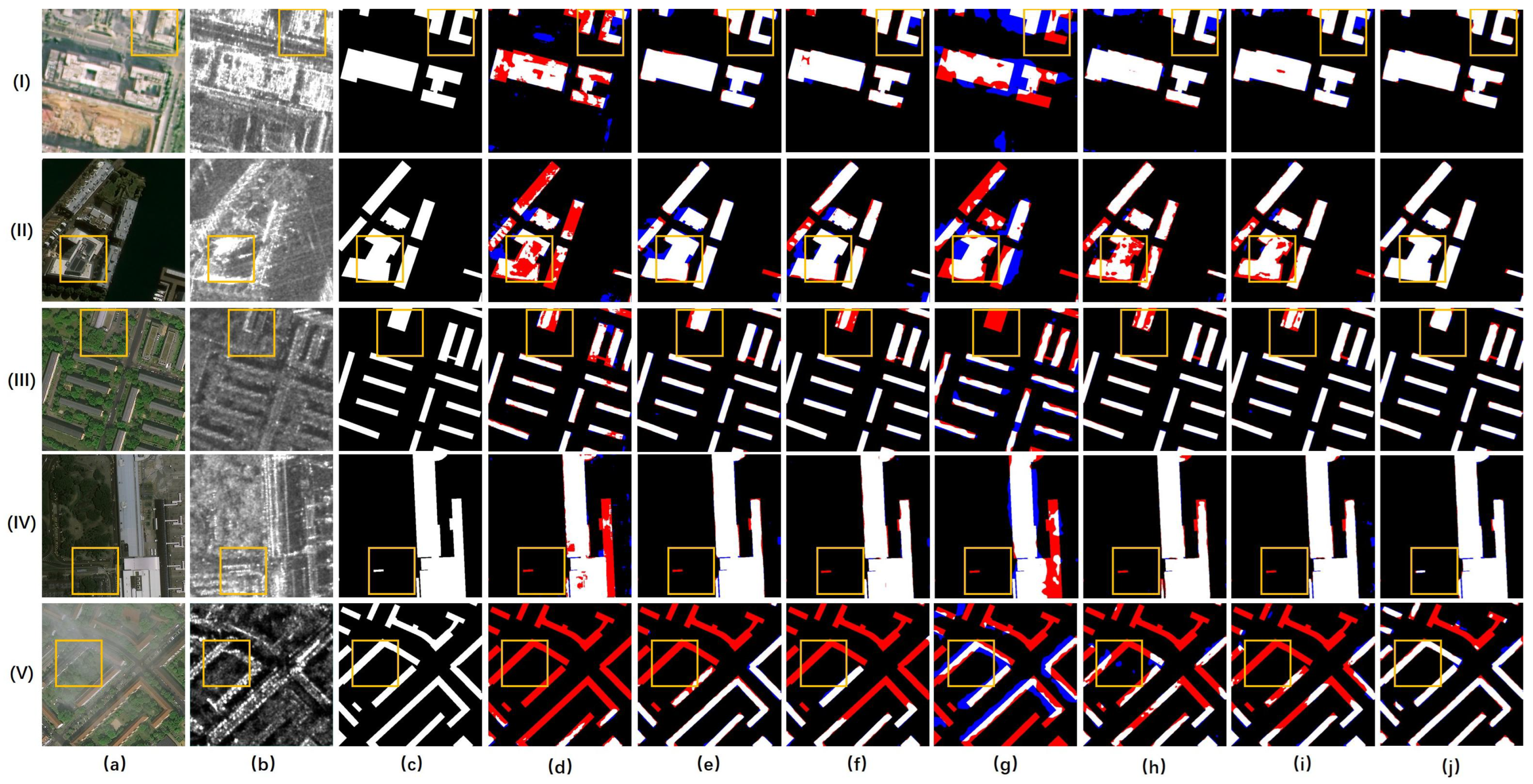

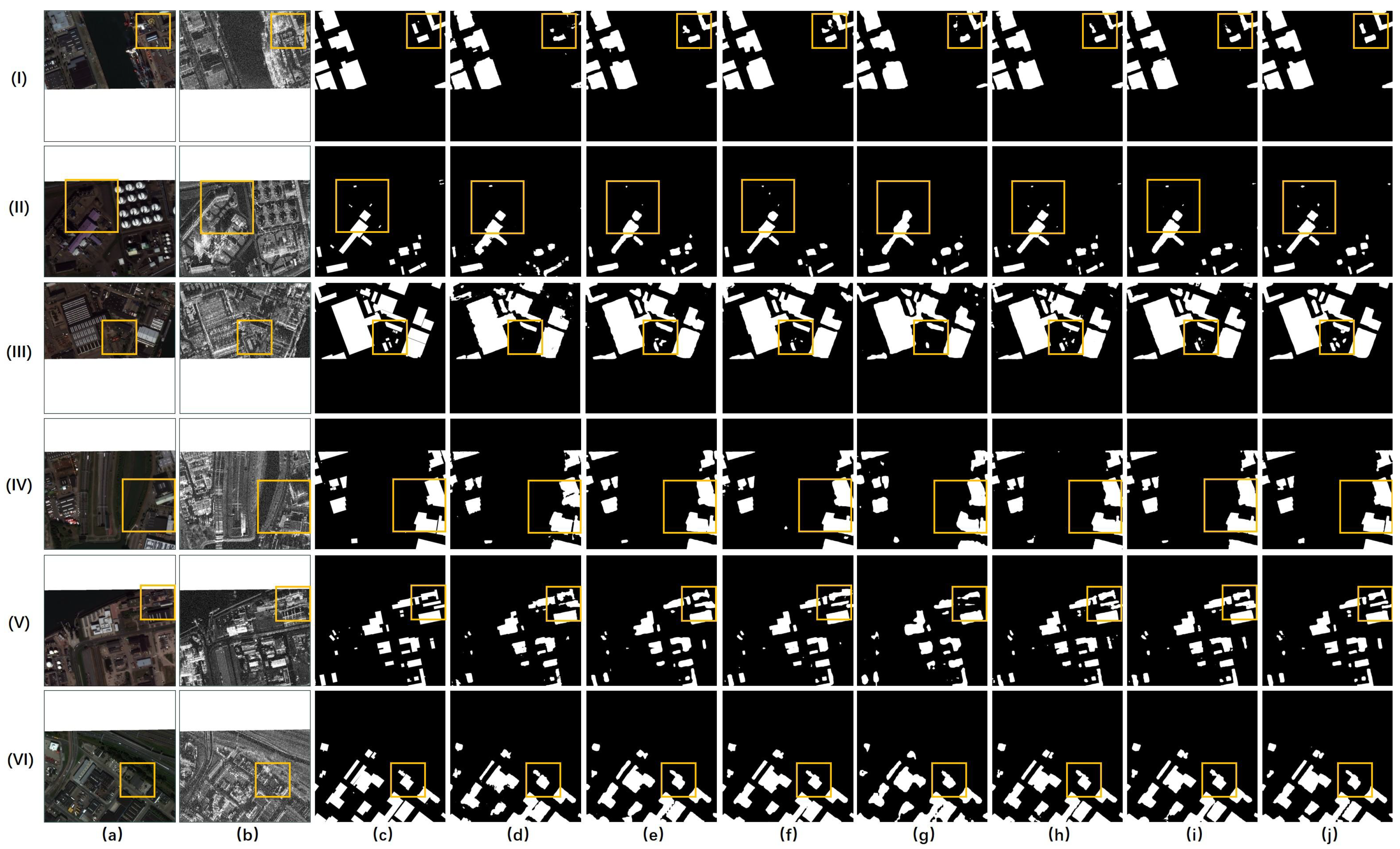

4.1. Results on DFC23 Track2 Dataset

4.2. Results on MSAW Dataset

4.3. Deployment Results on Edge Platform (Jetson Orin NX)

5. Discussion

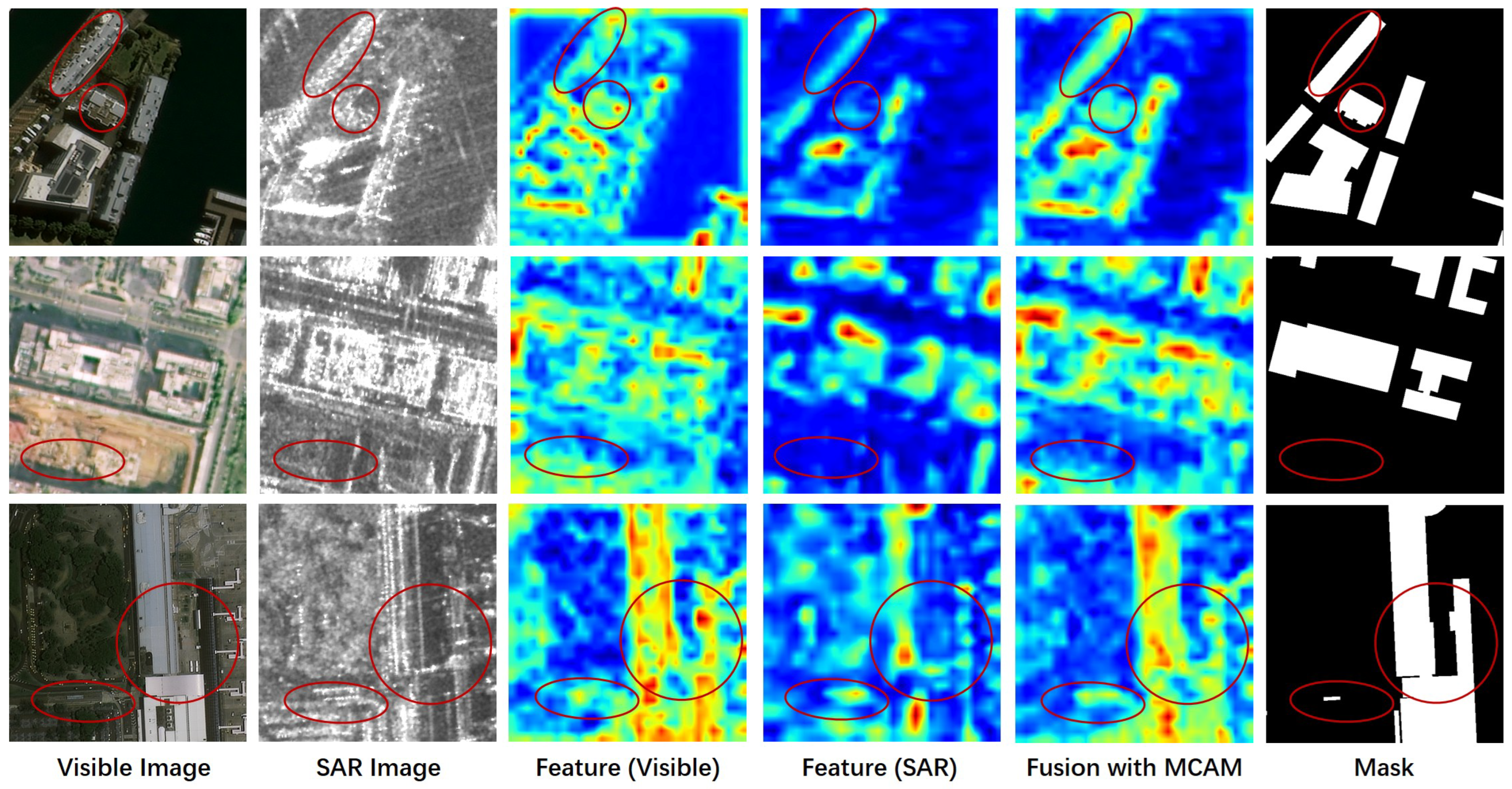

5.1. Effectiveness of MCAM

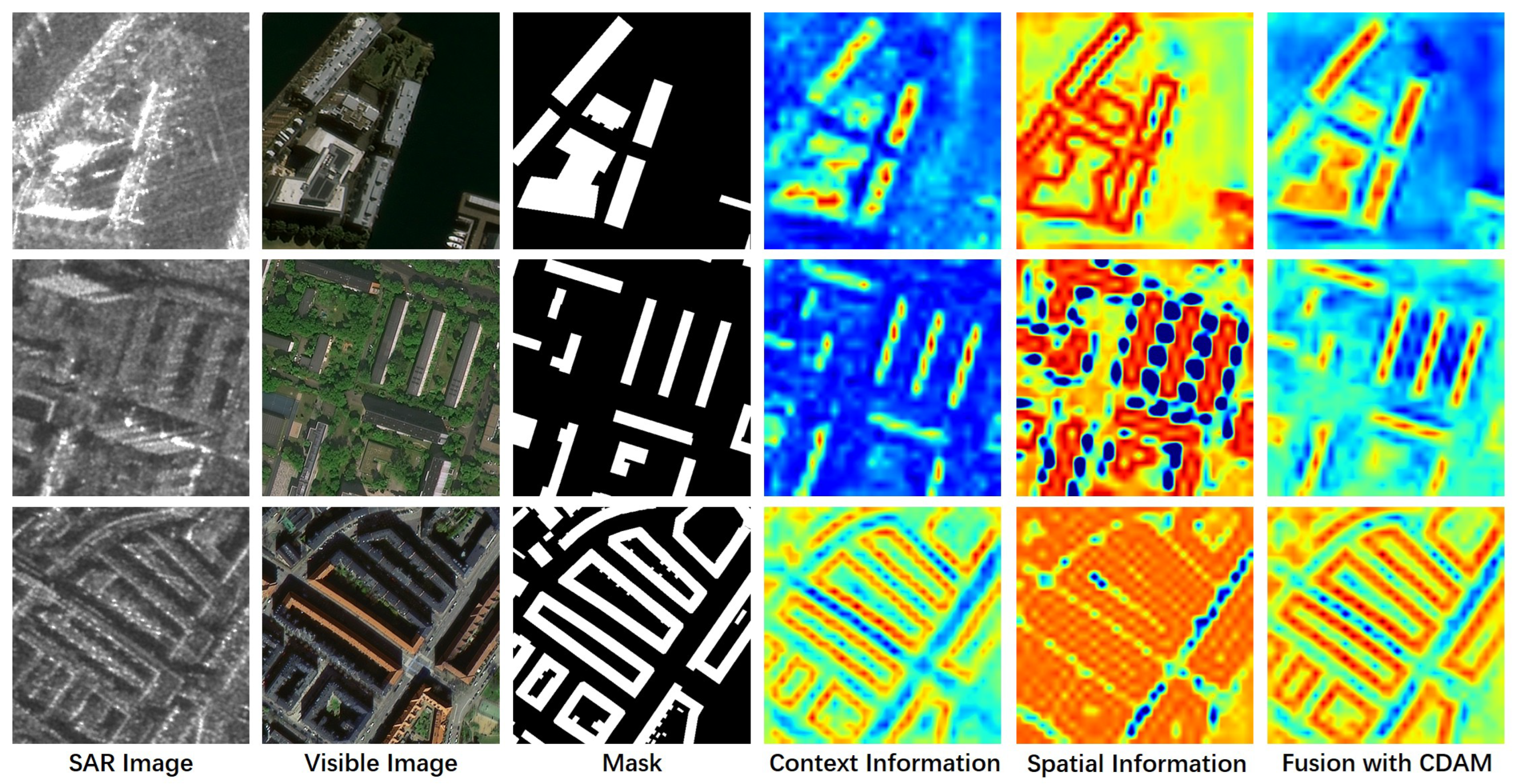

5.2. Effectiveness of CDAM

5.3. Effectiveness of STDAC vs. Bottleneck and Basic Block

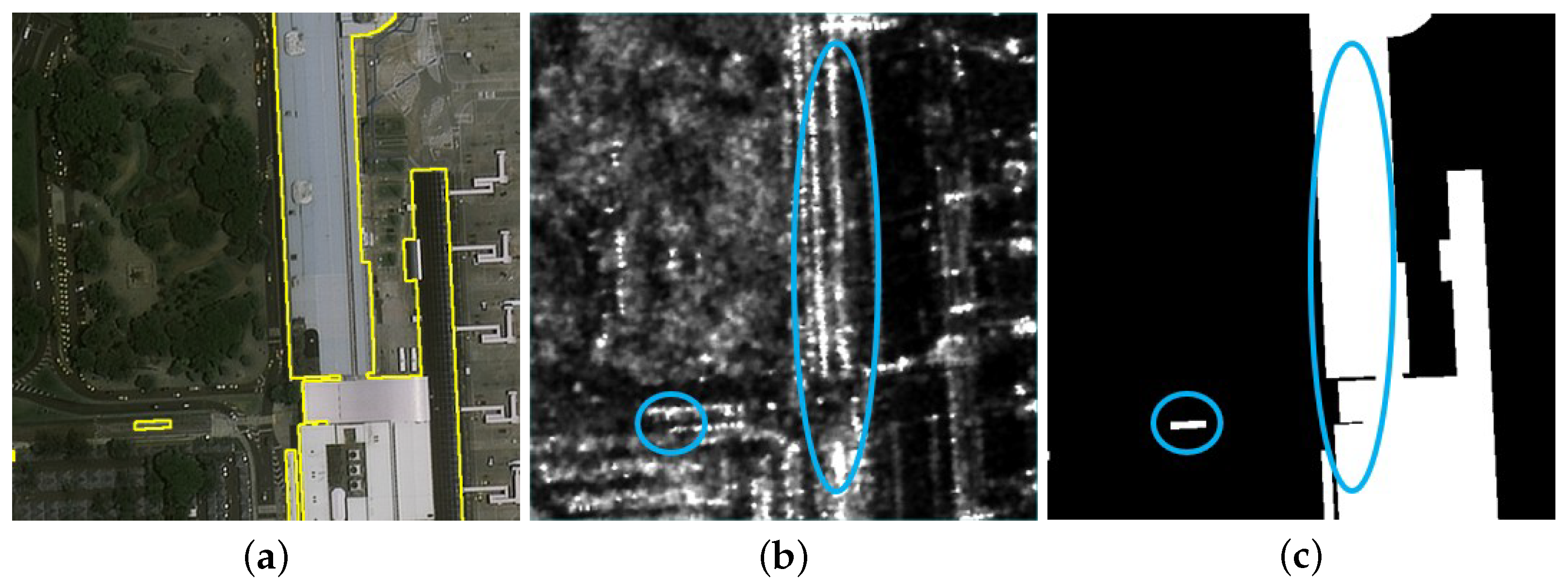

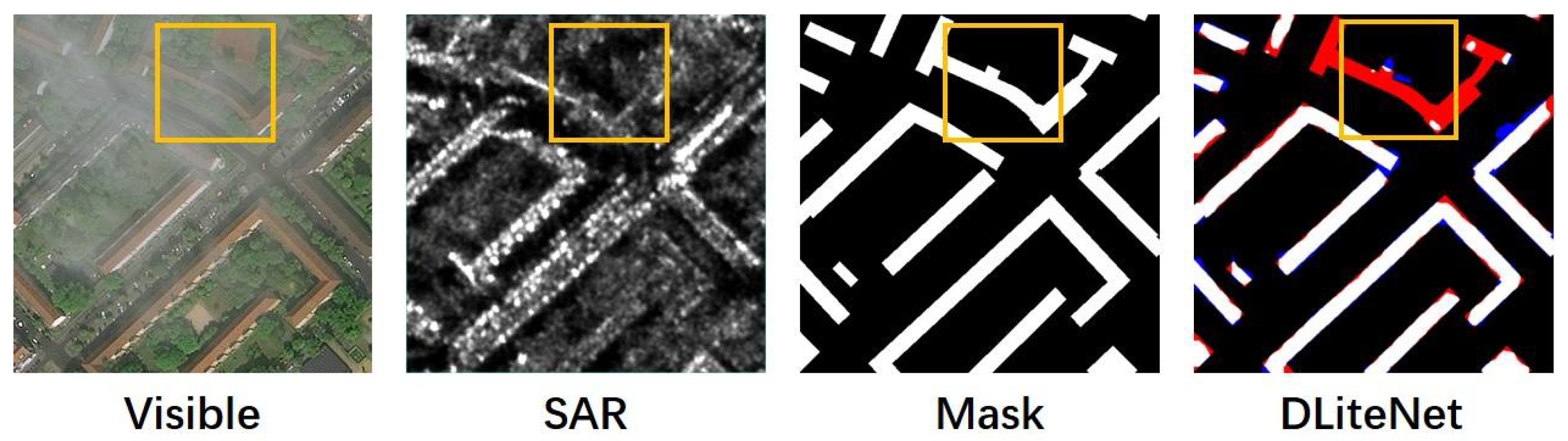

5.4. Limitations and Failure Cases

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Benedek, C.; Descombes, X.; Zerubia, J. Building Development Monitoring in Multitemporal Remotely Sensed Image Pairs with Stochastic Birth–Death Dynamics. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 33–50.

2. Hou, Z.; Qu, Y.; Zhang, L.; Liu, J.; Wang, F.; Yu, Q.; Zeng, A.; Chen, Z.; Zhao, Y.; Tang, H.; et al. War City Profiles Drawn from Satellite Images. Nat. Cities 2024, 1, 359–369.

3. Sirmacek, B.; Unsalan, C. Urban-Area and Building Detection Using SIFT Keypoints and Graph Theory. IEEE Trans. Geosci. Remote Sens. 2009, 47, 1156–1167.

4. Zhang, B.; Wang, C.; Zhang, H.; Wu, F. A Review on Building Extraction and Reconstruction from SAR Image. Remote Sens. Technol. Appl. 2012, 27, 496–503.

5. Li, X.; Zhang, G.; Cui, H.; Hou, S.; Wang, S.; Li, X.; Chen, Y.; Li, Z.; Zhang, L. MCANet: A Joint Semantic Segmentation Framework of Optical and SAR Images for Land Use Classification. Int. J. Appl. Earth Obs. Geoinf. 2022, 106, 102638.

6. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

7. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

8. Cheng, B.; Misra, I.; Schwing, A.G.; Kirillov, A.; Girdhar, R. Masked-Attention Mask Transformer for Universal Image Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022.

9. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; Lecture Notes in Computer Science, Volume 9351, pp. 234–241.

10. Li, X.; Lei, L.; Sun, Y.; Li, M.; Kuang, G. Multimodal Bilinear Fusion Network with Second-Order Attention-Based Channel Selection for Land Cover Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 1011–1026.

11. Orsic, M.; Kreso, I.; Bevandic, P.; Segvic, S. In Defense of Pre-Trained ImageNet Architectures for Real-Time Semantic Segmentation of Road-Driving Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 12607–12616.

12. Li, H.; Xiong, P.; Fan, H.; Sun, J. DFANet: Deep Feature Aggregation for Real-Time Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 9522–9531.

13. Gamal, M.; Siam, M.; Abdel-Razek, M. ShuffleSeg: Real-Time Semantic Segmentation Network. arXiv 2018, arXiv:1803.03816.

14. Paszke, A.; Chaurasia, A.; Kim, S.; Culurciello, E. ENet: A Deep Neural Network Architecture for Real-Time Semantic Segmentation. arXiv 2016, arXiv:1606.02147.

15. Zhao, H.; Qi, X.; Shen, X.; Shi, J.; Jia, J. ICNet for Real-Time Semantic Segmentation on High-Resolution Images. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 405–420.

16. Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. BiSeNet: Bilateral Segmentation Network for Real-Time Semantic Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 334–349.

17. Yu, C.; Gao, C.; Wang, J.; Yu, G.; Shen, C.; Sang, N. BiSeNet V2: Bilateral Network with Guided Aggregation for Real-Time Semantic Segmentation. Int. J. Comput. Vis. 2021, 129, 3051–3068.

18. Fan, M.; Lai, S.; Huang, J.; Wei, X.; Chai, Z.; Luo, J.; Wei, X. Rethinking BiSeNet for Real-Time Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 9716–9725.

19. Pan, H.; Hong, Y.; Sun, W.; Jia, Y. Deep Dual-Resolution Networks for Real-Time and Accurate Semantic Segmentation of Traffic Scenes. IEEE Trans. Intell. Transp. Syst. 2022, 24, 3448–3460.

20. Xu, J.; Xiong, Z.; Bhattacharyya, S.P. PIDNet: A Real-Time Semantic Segmentation Network Inspired by PID Controllers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 19529–19539.

21. Alshehhi, R.; Marpu, P.R.; Woon, W.L.; Dalla Mura, M. Simultaneous Extraction of Roads and Buildings in Remote Sensing Imagery with Convolutional Neural Networks. ISPRS J. Photogramm. Remote Sens. 2017, 130, 139–149.

22. Yang, H.L.; Yuan, J.; Lunga, D.; Laverdiere, M.; Rose, A.; Bhaduri, B. Building Extraction at Scale Using Convolutional Neural Network: Mapping of the United States. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 2600–2614.

23. Hui, J.; Du, M.; Ye, X.; Qin, Q.; Sui, J. Effective Building Extraction from High-Resolution Remote Sensing Images with Multitask Driven Deep Neural Network. IEEE Geosci. Remote Sens. Lett. 2019, 16, 786–790.

24. Rapuzzi, A.; Nattero, C.; Pelich, R.; Chini, M.; Campanella, P. CNN-Based Building Footprint Detection from Sentinel-1 SAR Imagery. In Proceedings of the 2020 IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2020), Waikoloa, HI, USA, 26 September–2 October 2020; pp. 1707–1710.

25. Wu, F.; Wang, C.; Zhang, H.; Li, J.; Li, L.; Chen, W.; Zhang, B. Built-Up Area Mapping in China from GF-3 SAR Imagery Based on the Framework of Deep Learning. Remote Sens. Environ. 2021, 262, 112515.

26. Kang, J.; Wang, Z.; Zhu, R.; Xia, J.; Sun, X.; Fernandez-Beltran, R.; Plaza, A. DisOptNet: Distilling Semantic Knowledge from Optical Images for Weather-Independent Building Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4706315.

27. Li, Q.; Mou, L.; Sun, Y.; Hua, Y.; Shi, Y.; Zhu, X.X. A Review of Building Extraction from Remote Sensing Imagery: Geometrical Structures and Semantic Attributes. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4702315.

28. Guo, H.; Du, B.; Zhang, L.; Su, X. A Coarse-to-Fine Boundary Refinement Network for Building Footprint Extraction from Remote Sensing Imagery. ISPRS J. Photogramm. Remote Sens. 2022, 183, 240–252.

29. Li, X.; Zhang, G.; Cui, H.; Hou, S.; Chen, Y.; Li, Z.; Li, H.; Wang, H. Progressive Fusion Learning: A Multimodal Joint Segmentation Framework for Building Extraction from Optical and SAR Images. ISPRS J. Photogramm. Remote Sens. 2023, 195, 178–191.

30. Zhang, P.; Peng, B.; Lu, C.; Huang, Q.; Liu, D. ASANet: Asymmetric Semantic Aligning Network for RGB and SAR Image Land Cover Classification. ISPRS J. Photogramm. Remote Sens. 2024, 218, 574–587.

31. Zhao, Z.; Zhao, B.; Wu, Y.; He, Z.; Gao, L. Building Extraction From High-Resolution Multispectral and SAR Images Using a Boundary-Link Multimodal Fusion Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 3864–3878.

32. Shermeyer, J.; Hogan, D.; Brown, J.; Van Etten, A.; Weir, N.; Pacifici, F.; Hansch, R.; Bastidas, A.; Soenen, S.; Bacastow, T. SpaceNet 6: Multi-Sensor All Weather Mapping Dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 768–777.

33. Huang, X.; Ren, L.; Liu, C.; Wang, Y.; Yu, H.; Schmitt, M.; Hänsch, R.; Sun, X.; Huang, H.; Mayer, H. Urban Building Classification (UBC) – A Dataset for Individual Building Detection and Classification from Satellite Imagery. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), New Orleans, LA, USA, 19–20 June 2022; pp. 565–571.

34. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

35. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

36. Milletari, F.; Navab, N.; Ahmadi, S.A. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 565–571.

37. Peng, C.; Zhang, X.; Yu, G.; Luo, G.; Sun, J. Large Kernel Matters: Improve Semantic Segmentation by Global Convolutional Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1743–1751.

38. Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual Attention Network for Scene Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 3146–3154.

39. Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. In Proceedings of the Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support: 4th International Workshop, Granada, Spain, 16 September 2018; pp. 3–11.

40. Chen, L.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking Atrous Convolution for Semantic Image Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1–11.

41. Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X. Deep High-Resolution Representation Learning for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3349–3364.

42. Li, C.; Liu, X.; Li, W.; Wang, C.; Liu, H.; Yuan, Y. U-KAN Makes Strong Backbone for Medical Image Segmentation and Generation. arXiv 2024, arXiv:2406.02918.

43. Jiang, J.; Zheng, L.; Luo, F.; Zhang, Z. RedNet: Residual Encoder-Decoder Network for Indoor RGB-D Semantic Segmentation. arXiv 2018, arXiv:1806.01054.

44. Chen, X.; Lin, K.; Wang, J.; Wu, W.; Qian, C.; Li, H.; Zeng, G. Bi-Directional Cross-Modality Feature Propagation with Separation-and-Aggregation Gate for RGB-D Semantic Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 561–577.

45. Hosseinpour, H.; Samadzadegan, F.; Javan, F.D. CMGFNet: A Deep Cross-Modal Gated Fusion Network for Building Extraction from Very High-Resolution Remote Sensing Images. ISPRS J. Photogramm. Remote Sens. 2022, 184, 96–115.

Quantitative comparison on the DFC23 Track2 dataset (Precision, Recall, IoU, and F1-Score in %).

| Method | Modality | Precision↑ | Recall↑ | IoU↑ | F1-Score↑ | GFLOPs↓ | Params (M)↓ | FPS↑ | Inf. Time (ms)↓ |
|---|---|---|---|---|---|---|---|---|---|
| U-Net [9] | Visible | 82.9 | 73.0 | 63.5 | 77.7 | 124.7 | 31.0 | 77.12 | 12.97 |
| GCNet [37] | Visible | 86.2 | 88.1 | 77.1 | 87.1 | 183.3 | 85.2 | 41.34 | 24.19 |
| DANet [38] | Visible | 87.3 | 86.6 | 76.9 | 86.9 | 500.6 | 46.2 | 26.54 | 37.68 |
| U-Net++ [39] | Visible | 87.8 | 87.0 | 77.6 | 87.4 | 800.5 | 45.1 | 16.89 | 59.21 |
| DeepLabv3+ [40] | Visible | 89.3 | 90.6 | 81.7 | 89.9 | 48.1 | 39.0 | 163.69 | 6.11 |
| HRNet [41] | Visible | 90.1 | 89.8 | 81.7 | 89.9 | 161.8 | 70.0 | 42.82 | 23.35 |
| U-KAN [42] | Visible | 89.6 | 88.0 | 79.9 | 88.8 | 27.6 | 9.4 | 39.14 | 25.55 |
| BiSeNet [16] | Visible | 86.2 | 84.8 | 74.7 | 85.5 | 40.7 | 23.1 | 382.68 | 2.61 |
| BiSeNetV2 [17] | Visible | 87.3 | 88.6 | 78.5 | 87.9 | 38.0 | 21.6 | 131.84 | 7.58 |
| DDRNet-Slim [19] | Visible | 85.4 | 83.1 | 72.8 | 84.2 | 4.53 | 5.7 | 258.23 | 3.87 |
| U-Net [9] | SAR | 44.3 | 45.7 | 29.1 | 45.0 | 124.4 | 31.0 | 77.16 | 12.96 |
| DeepLabv3+ [40] | SAR | 63.8 | 51.6 | 39.9 | 57.1 | 47.3 | 39.0 | 163.78 | 6.11 |
| HRNet [41] | SAR | 52.2 | 43.0 | 30.9 | 47.2 | 161.7 | 70.0 | 42.90 | 23.31 |
| RedNet [43] | Visible + SAR | 87.2 | 87.7 | 77.7 | 87.4 | 84.5 | 81.9 | 71.48 | 13.99 |
| SA-Gate [44] | Visible + SAR | 88.8 | 87.4 | 78.7 | 88.1 | 276.8 | 53.4 | 37.70 | 26.53 |
| CMGFNet [45] | Visible + SAR | 91.2 | 79.1 | 73.5 | 84.7 | 155.3 | 85.2 | 76.76 | 13.03 |
| MCANet [5] | Visible + SAR | 89.6 | 90.5 | 81.9 | 90.1 | 375.9 | 71.2 | 39.98 | 25.01 |
| DLiteNet (Ours) | Visible + SAR | 90.2 | 90.5 | 82.3 | 90.3 | 5.8 | 5.6 | 80.28 | 12.46 |

Core design, model complexity, and IoU of lightweight and multimodal methods on both datasets.

| Dataset | Method | Modality | Core Design | Params (M) | GFLOPs | IoU (%) |
|---|---|---|---|---|---|---|
| DFC23 Track2 | BiSeNet | Visible | two-branch lightweight CNN | 23.1 | 40.7 | 74.7 |
| | BiSeNetV2 | Visible | two-branch lightweight CNN | 21.6 | 38.0 | 78.5 |
| | DDRNet-Slim | Visible | two-branch lightweight CNN | 5.7 | 4.53 | 72.8 |
| | RedNet | Visible + SAR | dual-encoder multimodal CNN with decoder | 81.9 | 84.5 | 77.7 |
| | SA-Gate | Visible + SAR | dual-encoder multimodal CNN with decoder | 53.4 | 276.8 | 78.7 |
| | CMGFNet | Visible + SAR | dual-encoder multimodal CNN with decoder | 85.2 | 155.3 | 73.5 |
| | MCANet | Visible + SAR | dual-encoder multimodal CNN with decoder | 71.2 | 375.9 | 81.9 |
| | DLiteNet (Ours) | Visible + SAR | dual-branch lightweight multimodal fusion | 5.6 | 5.8 | 82.3 |
| MSAW | BiSeNet | Visible | two-branch lightweight CNN | 23.1 | 128.4 | 76.8 |
| | BiSeNetV2 | Visible | two-branch lightweight CNN | 21.7 | 118.8 | 77.2 |
| | DDRNet-Slim | Visible | two-branch lightweight CNN | 5.7 | 14.2 | 70.3 |
| | SA-Gate | Visible + SAR | dual-encoder multimodal CNN with decoder | 53.4 | 849.7 | 79.0 |
| | CMGFNet | Visible + SAR | dual-encoder multimodal CNN with decoder | 85.2 | 490.9 | 73.6 |
| | MCANet | Visible + SAR | dual-encoder multimodal CNN with decoder | 71.2 | 874.5 | 82.6 |
| | DLiteNet (Ours) | Visible + SAR | dual-branch lightweight multimodal fusion | 5.6 | 51.7 | 83.6 |

Quantitative comparison on the MSAW dataset (Precision, Recall, IoU, and F1-Score in %).

| Method | Modality | Precision↑ | Recall↑ | IoU↑ | F1-Score↑ | GFLOPs↓ | Params (M)↓ | FPS↑ | Inf. Time (ms)↓ |
|---|---|---|---|---|---|---|---|---|---|
| U-Net [9] | Visible | 84.6 | 80.0 | 69.8 | 82.2 | 494.0 | 31.0 | 22.32 | 44.80 |
| GCNet [37] | Visible | 86.9 | 82.3 | 73.2 | 84.6 | 578.8 | 85.2 | 17.56 | 56.95 |
| DANet [38] | Visible | 85.9 | 81.0 | 71.5 | 83.4 | 1552.2 | 46.2 | 7.18 | 139.28 |
| DeepLabv3+ [40] | Visible | 87.8 | 86.4 | 77.1 | 87.1 | 151.8 | 39.0 | 51.91 | 19.26 |
| HRNet [41] | Visible | 88.6 | 87.2 | 78.4 | 87.9 | 504.2 | 70.0 | 15.35 | 65.15 |
| U-KAN [42] | Visible | 86.5 | 85.0 | 75.0 | 85.7 | 85.0 | 9.4 | 13.79 | 72.52 |
| BiSeNet [16] | Visible | 87.4 | 86.3 | 76.8 | 86.9 | 128.4 | 23.1 | 145.06 | 6.89 |
| BiSeNetV2 [17] | Visible | 86.5 | 87.8 | 77.2 | 87.1 | 118.8 | 21.7 | 70.94 | 14.10 |
| DDRNet-Slim [19] | Visible | 83.9 | 81.3 | 70.3 | 82.6 | 14.2 | 5.7 | 221.72 | 4.51 |
| U-Net [9] | SAR | 46.6 | 53.7 | 33.2 | 49.9 | 493.1 | 31.0 | 22.42 | 44.60 |
| DeepLabv3+ [40] | SAR | 79.3 | 77.3 | 64.3 | 78.3 | 149.2 | 39.0 | 51.98 | 19.24 |
| HRNet [41] | SAR | 66.3 | 69.4 | 51.3 | 67.9 | 503.2 | 70.0 | 15.39 | 64.98 |
| SA-Gate [44] | Visible + SAR | 89.0 | 87.6 | 79.0 | 88.3 | 849.7 | 53.4 | 14.82 | 67.48 |
| CMGFNet [45] | Visible + SAR | 88.4 | 81.5 | 73.6 | 84.8 | 490.9 | 85.2 | 23.24 | 43.03 |
| MCANet [5] | Visible + SAR | 91.0 | 89.9 | 82.6 | 90.5 | 874.5 | 71.2 | 15.47 | 64.64 |
| DLiteNet (Ours) | Visible + SAR | 91.7 | 90.4 | 83.6 | 91.1 | 51.7 | 5.6 | 76.51 | 13.07 |

Model size and inference time of DLiteNet under different quantization precisions.

| Quantization | Model Size (MB) | Inf. Time (ms) |
|---|---|---|
| FP32 | 27.4 | 14.97 |
| FP16 | 16.6 | 12.33 |
| INT8 | 11.0 | 11.18 |
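
To make the INT8 row concrete, the sketch below shows symmetric per-tensor quantization of a weight list: each weight is approximated by `scale * q` with an integer `q` in [-127, 127], so the rounding error per weight is at most half a quantization step. This is a generic illustration under that assumption, not the toolchain or calibration procedure used for the reported numbers (deployment toolchains usually also calibrate activations); the function names are hypothetical.

```python
def quantize_int8_symmetric(weights):
    """Symmetric per-tensor INT8 quantization: each w is approximated by scale * q.

    q is an integer in [-127, 127]; scale maps the largest |w| to 127.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    """Map INT8 codes back to approximate floating-point values."""
    return [scale * v for v in q]


def storage_bytes(num_values, bits):
    """Raw storage needed for num_values parameters at the given bit width."""
    return num_values * bits // 8


q, scale = quantize_int8_symmetric([0.5, -1.27, 0.0, 1.27])
print(q, dequantize_int8(q, scale))
print(storage_bytes(5_600_000, 32), storage_bytes(5_600_000, 8))
```

Raw weight storage shrinks by exactly 4× from FP32 to INT8, whereas the model files in the table shrink by about 2.5× (27.4 MB to 11.0 MB), so file size alone understates the reduction in weight storage.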

Ablation study of MCAM and CDAM on the DFC23 Track2 dataset.

| Dataset | MCAM | CDAM | Precision (%)↑ | Recall (%)↑ | IoU (%)↑ | F1-Score (%)↑ |
|---|---|---|---|---|---|---|
| DFC23 Track2 | | | 89.2 | 87.1 | 78.8 | 88.1 |
| | ✓ | | 89.0 | 88.9 | 80.1 | 88.9 |
| | | ✓ | 88.9 | 89.1 | 80.2 | 89.0 |
| | ✓ | ✓ | 90.2 | 90.5 | 82.3 | 90.3 |

Comparison of context feature extraction blocks in DLiteNet on the DFC23 Track2 dataset (d denotes the dilation rates of STDAC).

| Model | Context Feature Extraction | IoU (%) ↑ | F1-Score (%) ↑ |
|---|---|---|---|
| DLiteNet | STDAC (d = 1, 1, 1) | 80.8 | 89.4 |
| DLiteNet | STDAC (d = 2, 2, 2) | 81.2 | 89.7 |
| DLiteNet | STDAC (d = 3, 3, 3) | 82.3 | 90.3 |
| DLiteNet | Bottleneck | 80.6 | 89.3 |
| DLiteNet | Basic Block | 79.5 | 88.6 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, Z.; Zhao, B.; Du, R.; Wu, Y.; Chen, J.; Zheng, Y. DLiteNet: A Dual-Branch Lightweight Framework for Efficient and Precise Building Extraction from Visible and SAR Imagery. Remote Sens. 2025, 17, 3939. https://doi.org/10.3390/rs17243939