1. Introduction

Change detection aims to identify surface changes by comparing multi-temporal remote sensing images. It has broad applications, including disaster assessment [

1], environmental monitoring [

2], urban planning [

3], and military reconnaissance [

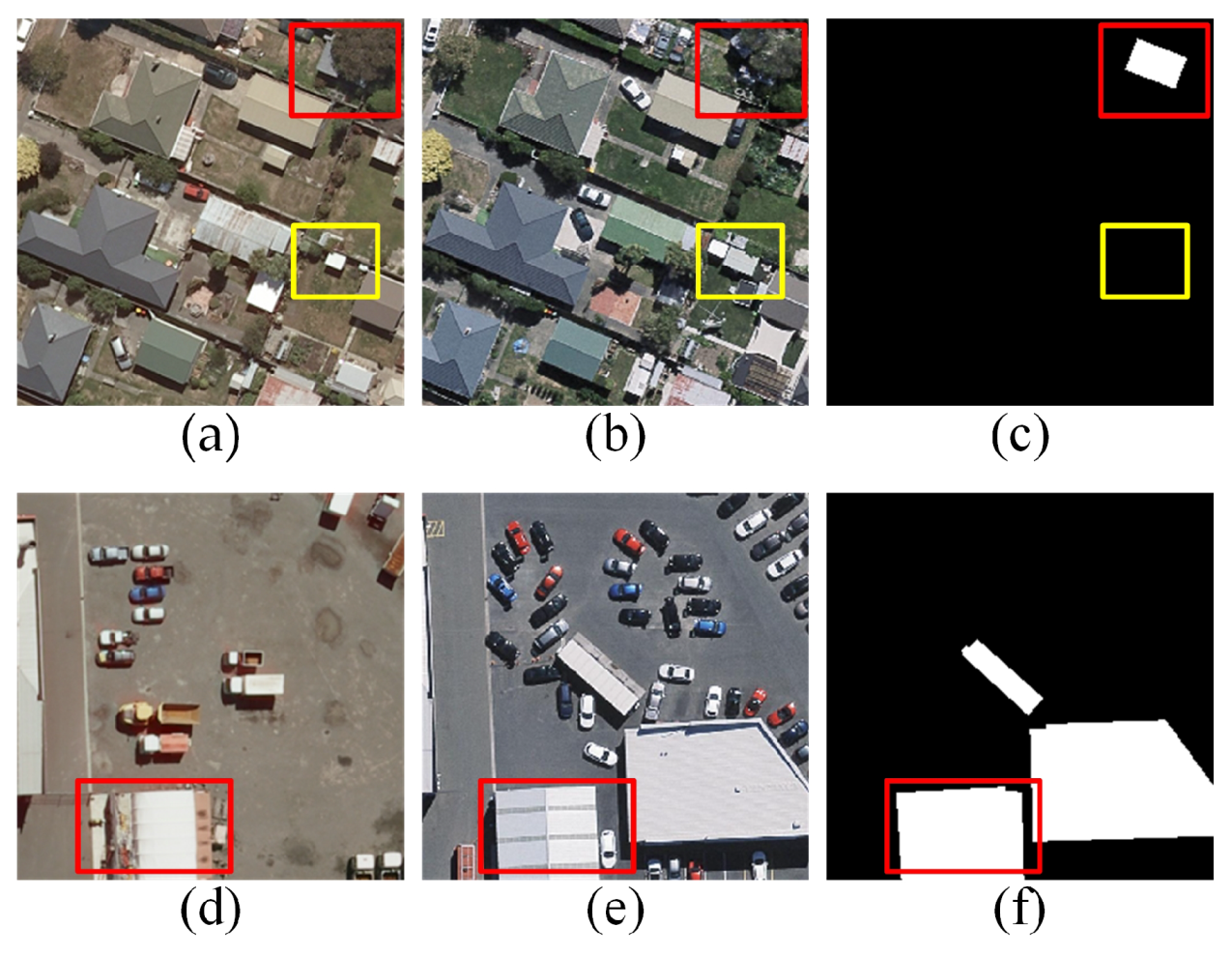

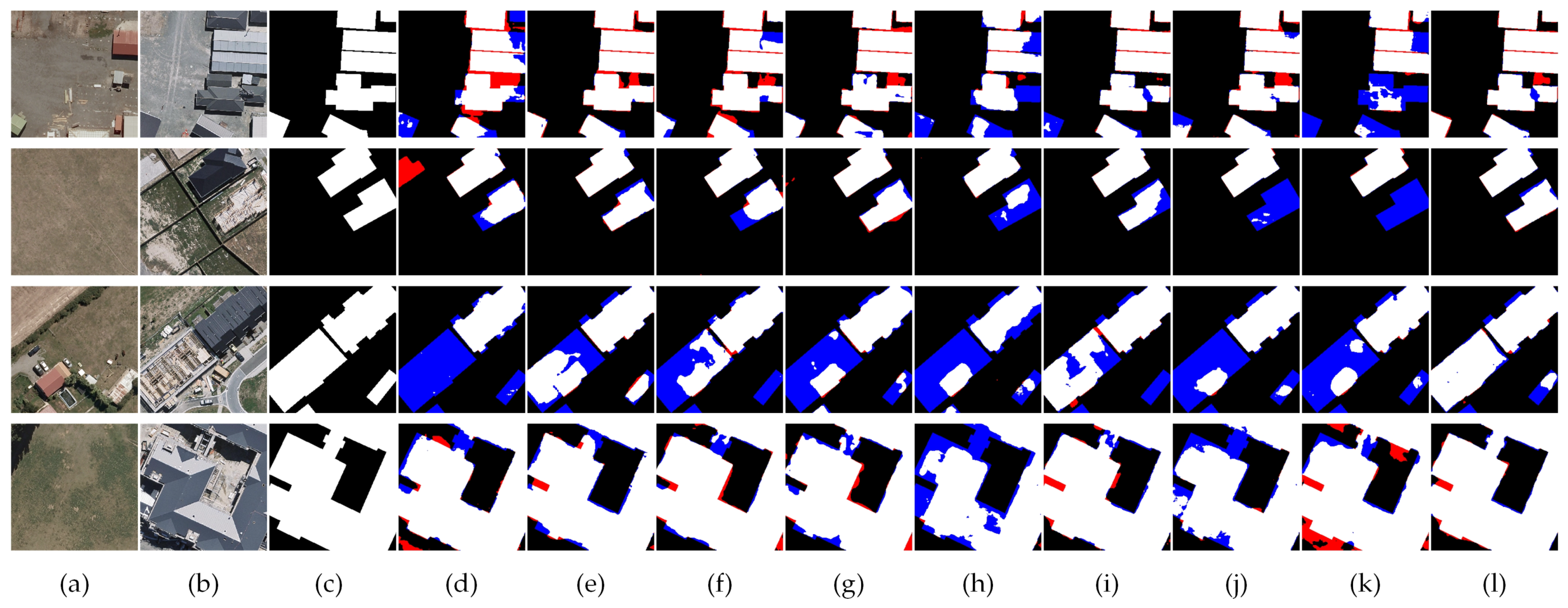

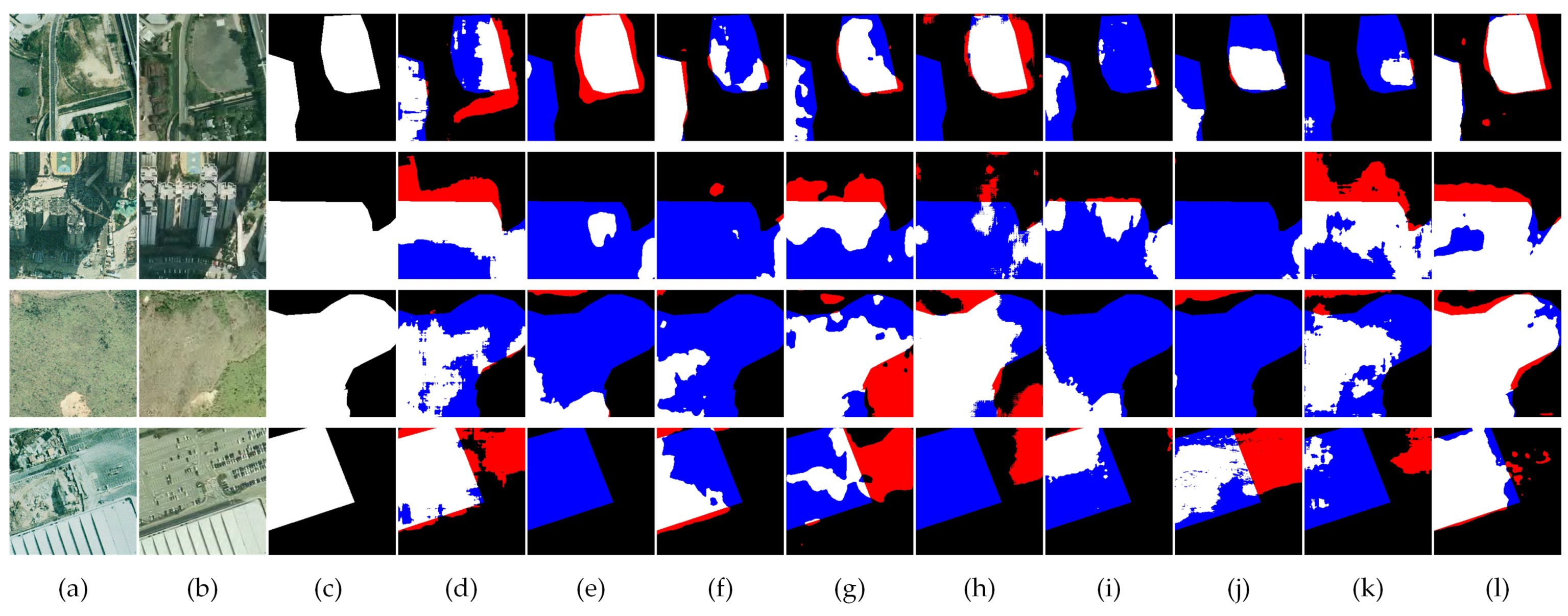

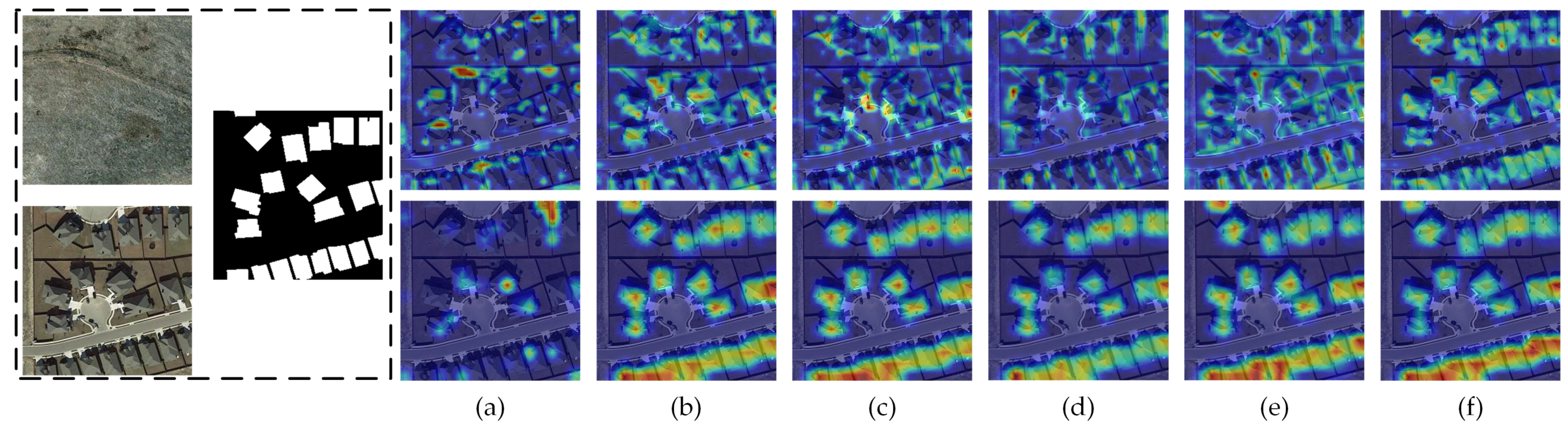

4]. Recent advances in earth-observation sensors have made high-resolution imagery increasingly accessible, thereby driving the evolution of change detection methods. However, this progress also brings new challenges, such as inter-sensor discrepancies, seasonal and illumination variations, pseudo-changes, and complex scene characteristics including occlusions, shadows, and diverse textures. To illustrate these issues,

Figure 1 presents a representative example: regions (a–c) demonstrate the difficulty of distinguishing true changes from pseudo-changes caused by tree occlusions and non-target objects, whereas regions (d–f) depict a building replaced by a new structure, which—despite their high visual similarity—still constitutes a true change.

Prior to deep learning, change detection relied on three traditional paradigms: algebra-based, transformation-based, and classification-based methods. Algebra-based methods construct difference maps via image differencing, ratioing, or regression analysis, followed by thresholding to identify changed pixels. In transformation-based methods, Principal Component Analysis (PCA) [5,6] and Change Vector Analysis (CVA) [7] are the most commonly used statistical techniques, which identify changes by mapping spectral features into transformed spaces. Classification-based methods utilize classifiers such as K-means or SVM [8,9] to categorize pixels into changed and unchanged classes. However, these methods are highly sensitive to image quality, radiometric consistency, and noise. The proliferation of high-resolution imagery, with its rich details and variations, has outpaced the capacity of these methods to model complex scenes accurately.

Deep learning has opened up promising new avenues for change detection, significantly improving both accuracy and efficiency. Since Daudt et al. [10] introduced FCNs [11] to this task, CNN-based methods have long dominated the field. To meet the demands of change detection, researchers have successively proposed various innovative network architectures. For instance, IFN [12] enhances multi-scale features via deep supervision and fusion, while FC-Siam-diff-PA [13] improves differential feature extraction through a pyramid attention layer built upon FC-Siam-diff [10]. Despite strong performance on public datasets, CNNs’ limited receptive fields hinder long-range dependency capture and global modeling. Consequently, existing methods struggle with accurate detection in complex scenarios involving significant cross-temporal and cross-resolution variations.

Since Dosovitskiy et al. [14] introduced the Vision Transformer (ViT), Transformers have overcome CNNs’ global modeling limitations. Through self-attention, ViT captures local-global feature relationships for richer contextual representation. This has spurred numerous Transformer-based change detection approaches. For example, ChangeFormer [15] employs a purely Transformer-based encoder, achieving competitive performance on multiple benchmarks, while BIT [16] integrates semantic tokens to enhance change region perception and global modeling. However, Transformers’ quadratic complexity with input size incurs prohibitive overhead for dense prediction on high-resolution imagery. To mitigate this, Swin Transformer [17] employs window-based attention, reducing computation and serving as the backbone in models like SwinSUNet [18]. Nonetheless, window partitioning inherently constrains the global receptive field, leaving long-range dependency modeling incompletely resolved.

Recent studies have introduced State Space Model (SSM) architectures to balance global modeling with computational efficiency. Mamba [19] exemplifies this approach, leveraging structured state space modeling for efficient long-sequence modeling with linear complexity, and demonstrating strong performance in NLP [20,21] and vision tasks [22,23]. SSMs capture global dependencies while reducing computational cost, offering an emerging alternative to Transformers for dense prediction in remote sensing [24,25,26,27,28]. However, existing SSM-based methods remain limited by their reliance on fixed scanning directions, lacking adaptability for complex change regions.

Currently, deep learning-based change detection methods generally consist of three key stages: bi-temporal feature extraction, difference feature generation, and multi-scale feature fusion with classification. Although existing models have achieved notable progress in terms of accuracy, they still suffer from certain limitations. First, most methods encode bi-temporal images independently, neglecting cross-temporal interaction. This yields insufficient cross-temporal representation and undermines difference feature quality. Second, current difference generation methods rely on simple concatenation or absolute difference, neglecting rich frequency-domain information and limiting downstream classification accuracy.

To tackle the limitations of existing methods, this paper proposes AGFNet, a novel change detection model that integrates Visual State Space Models with a two-dimensional Discrete Cosine Transform (2D-DCT). AGFNet is designed to address three key challenges: (1) the lack of explicit interaction in Siamese encoders, which leads to underutilized spatiotemporal features; (2) the sensitivity of difference features to high-frequency noise, which results in pervasive pseudo-changes; and (3) the inflexibility of fixed scanning orders in SSMs, which hinders the modeling of irregular structural changes. To this end, AGFNet introduces three core components. First, the Cross-Spatial Guidance Attention (CSGA) module is embedded into the Visual State Space (VSS) backbone to enable direct spatial interaction between bi-temporal features during encoding, thereby producing richer and more discriminative spatiotemporal representations. Second, the Frequency-guided Adaptive Difference Module (FADM) applies lightweight adaptive filtering in the frequency domain to suppress high-frequency noise and enhance genuine change cues, effectively reducing pseudo-changes. Finally, in the decoding stage, the Dual-Stage Multi-Scale Residual Integrator (DS-MRI) incorporates an Attention-Guided State Space (AGSS) block, which dynamically adjusts its scanning sequence based on attention maps from CSGA. By progressively integrating and reconstructing multi-scale features, AGFNet achieves efficient and accurate change detection.

The main contributions of this paper are summarized as follows:

AGFNet builds a Siamese feature extraction backbone based on VSS Blocks and introduces the CSGA module. Unlike traditional Siamese networks that extract features independently, CSGA enables explicit interaction and guidance between bi-temporal images during the extraction stage, effectively enhancing cross-temporal feature alignment and representation while reducing the interference of pseudo-changes in subsequent modeling.

The FADM is designed to address the challenge that high-frequency noise and texture differences often cause pseudo-changes. It employs lightweight adaptive frequency-domain filtering to suppress high-frequency pseudo-change components, integrates a channel attention mechanism to align frequency-domain channels, and enhances true change differences in the spatial domain, thereby significantly improving the discriminability of difference features.

We propose DS-MRI and the Attention-Guided State Space (AGSS) mechanism. AGSS adaptively adjusts scanning sequences using attention maps generated by CSGA, breaking the limitations of fixed scanning patterns. This enables dynamic modeling of irregular change patterns and further enhances multi-scale feature fusion and change region reconstruction.

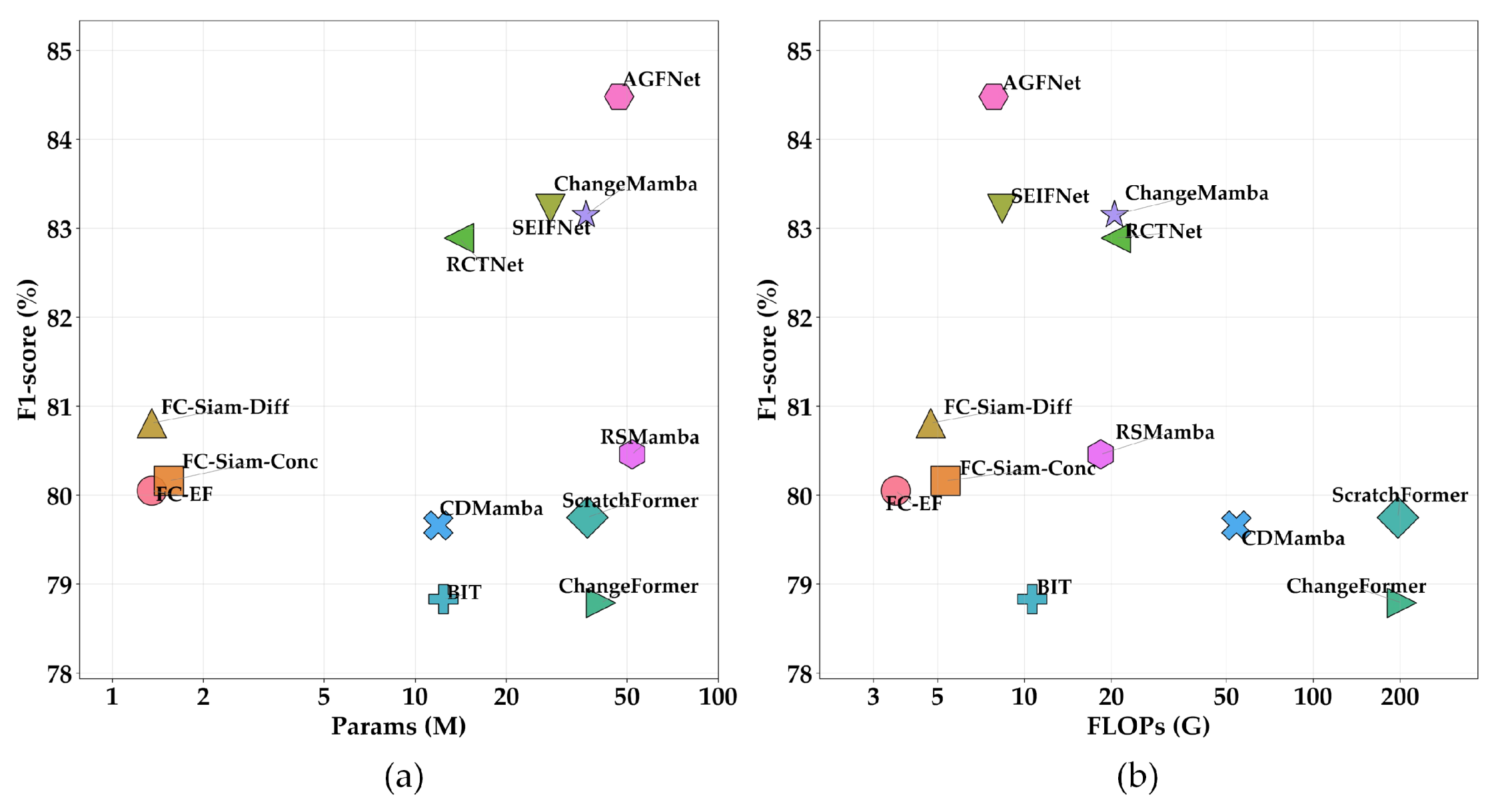

Extensive experiments on multiple public change detection datasets demonstrate the superior performance of AGFNet. The results show that our method achieves higher accuracy and robustness than existing mainstream approaches under lower computational complexity, with particularly strong performance in complex backgrounds and weak-change scenarios.

The remainder of this paper is organized as follows: Section 2 reviews the related work; Section 3 presents the detailed methodology of AGFNet; Section 4 reports experimental results and discussion; Section 5 concludes the paper.

3. Methodology

3.1. Preliminaries

In recent years, SSMs have gradually emerged as a promising class of methods for handling long-sequence problems in deep learning. These models are based on linear time-invariant systems, with the core idea of modeling the dynamic evolution from an input sequence $x(t) \in \mathbb{R}$ to an output sequence $y(t) \in \mathbb{R}$ through recursive hidden variables $h(t) \in \mathbb{R}^{N}$. This enables long-range dependency modeling while maintaining computational efficiency. The key characteristic lies in describing the temporal evolution of hidden variables using a set of linear ordinary differential equations:

$$
h'(t) = A h(t) + B x(t), \qquad y(t) = C h(t), \tag{1}
$$

where $N$ denotes the state space dimension, $A \in \mathbb{R}^{N \times N}$ represents the state transition matrix, $B \in \mathbb{R}^{N \times 1}$ denotes the input matrix, and $C \in \mathbb{R}^{1 \times N}$ represents the output matrix. To integrate SSMs into deep learning models, the continuous formulation must first be discretized using the Zero-Order Hold (ZOH) method applied to the continuous parameters $A$ and $B$, as follows:

$$
\bar{A} = \exp(\Delta A), \qquad \bar{B} = (\Delta A)^{-1}\left(\exp(\Delta A) - I\right)\Delta B,
$$

where $\bar{A}$ and $\bar{B}$ denote the discretized state transition matrix and input matrix for a time step of size $\Delta$, respectively, with the assumption that the input remains constant within each time step. After discretization, Equation (1) can be expressed as:

$$
h_k = \bar{A} h_{k-1} + \bar{B} x_k, \qquad y_k = C h_k.
$$

The final output is then obtained through convolution operations.
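The discretization and the two equivalent ways of computing the output can be sketched in a few lines of NumPy. This is a minimal illustration, not the implementation used in this paper: it assumes a diagonal state matrix (so the matrix exponential reduces to an elementwise one), a scalar input sequence, and randomly drawn parameters; the function names are hypothetical.

```python
import numpy as np

def zoh_discretize(a_diag, b, delta):
    """Zero-Order Hold for a diagonal state matrix A = diag(a_diag)."""
    a_bar = np.exp(delta * a_diag)
    # For diagonal A, (dA)^-1 (exp(dA) - I) dB reduces elementwise to:
    b_bar = (a_bar - 1.0) / a_diag * b
    return a_bar, b_bar

def ssm_recurrent(a_bar, b_bar, c, x):
    """Step through h_k = A_bar h_{k-1} + B_bar x_k, y_k = C h_k."""
    h = np.zeros_like(a_bar)
    y = np.empty(len(x))
    for k, x_k in enumerate(x):
        h = a_bar * h + b_bar * x_k
        y[k] = c @ h
    return y

def ssm_convolutional(a_bar, b_bar, c, x):
    """Same output from the kernel K_j = C A_bar^j B_bar and a causal convolution."""
    length = len(x)
    powers = a_bar[None, :] ** np.arange(length)[:, None]  # (L, N)
    kernel = powers @ (c * b_bar)                           # (L,)
    return np.convolve(x, kernel)[:length]

rng = np.random.default_rng(0)
a_diag = -np.abs(rng.normal(size=4)) - 0.1  # negative entries keep the system stable
b = rng.normal(size=4)
c = rng.normal(size=4)
x = rng.normal(size=16)

a_bar, b_bar = zoh_discretize(a_diag, b, delta=0.1)
y_rec = ssm_recurrent(a_bar, b_bar, c, x)
y_conv = ssm_convolutional(a_bar, b_bar, c, x)
```

The recurrent form costs one state update per step, while the convolutional form precomputes a kernel once; both give the same sequence because the parameters do not depend on the input.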

However, the parameters in conventional state-space models are fixed, limiting their expressive capacity. To address this, Mamba [19] introduces a selective scanning mechanism that makes the parameters $\Delta$, $B$, and $C$ data-dependent and learnable. Combined with hardware-aware algorithms, Mamba efficiently filters irrelevant information while extracting critical details, with linear computational complexity and memory consumption.
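To make "data-dependent parameters" concrete, the toy scan below recomputes the step size and the input and output projections from each token before updating the state. It is a sequential sketch under stated assumptions, not Mamba's hardware-aware kernel: the projection weights `w_delta`, `w_b`, and `w_c` are hypothetical, the state matrix is diagonal per channel, and the input matrix uses the simplified product of step size and projection rather than the full ZOH expression.

```python
import numpy as np

def softplus(z):
    return np.logaddexp(0.0, z)

def selective_scan(x, a, w_delta, w_b, w_c):
    """Toy selective scan.

    x: (L, D) input sequence; a: (D, N) negative diagonal state entries.
    w_delta (D, D), w_b (D, N), w_c (D, N): hypothetical projection weights.
    """
    length, dim = x.shape
    h = np.zeros_like(a)                        # hidden state, (D, N)
    y = np.empty((length, dim))
    for t in range(length):
        delta = softplus(x[t] @ w_delta)        # (D,) input-dependent step size
        b_t = x[t] @ w_b                        # (N,) input-dependent input matrix
        c_t = x[t] @ w_c                        # (N,) input-dependent output matrix
        a_bar = np.exp(delta[:, None] * a)      # discretized transition
        b_bar = delta[:, None] * b_t[None, :]   # simplified discretization of B
        h = a_bar * h + b_bar * x[t][:, None]
        y[t] = h @ c_t
    return y

rng = np.random.default_rng(0)
length, dim, state = 12, 3, 4
x = rng.normal(size=(length, dim))
a = -np.exp(rng.normal(size=(dim, state)))
w_delta = rng.normal(size=(dim, dim))
w_b = rng.normal(size=(dim, state))
w_c = rng.normal(size=(dim, state))
y = selective_scan(x, a, w_delta, w_b, w_c)
```

Because the parameters now change at every step, the fixed convolution kernel of the time-invariant model no longer applies, which is why a scan is needed.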

3.2. Overview

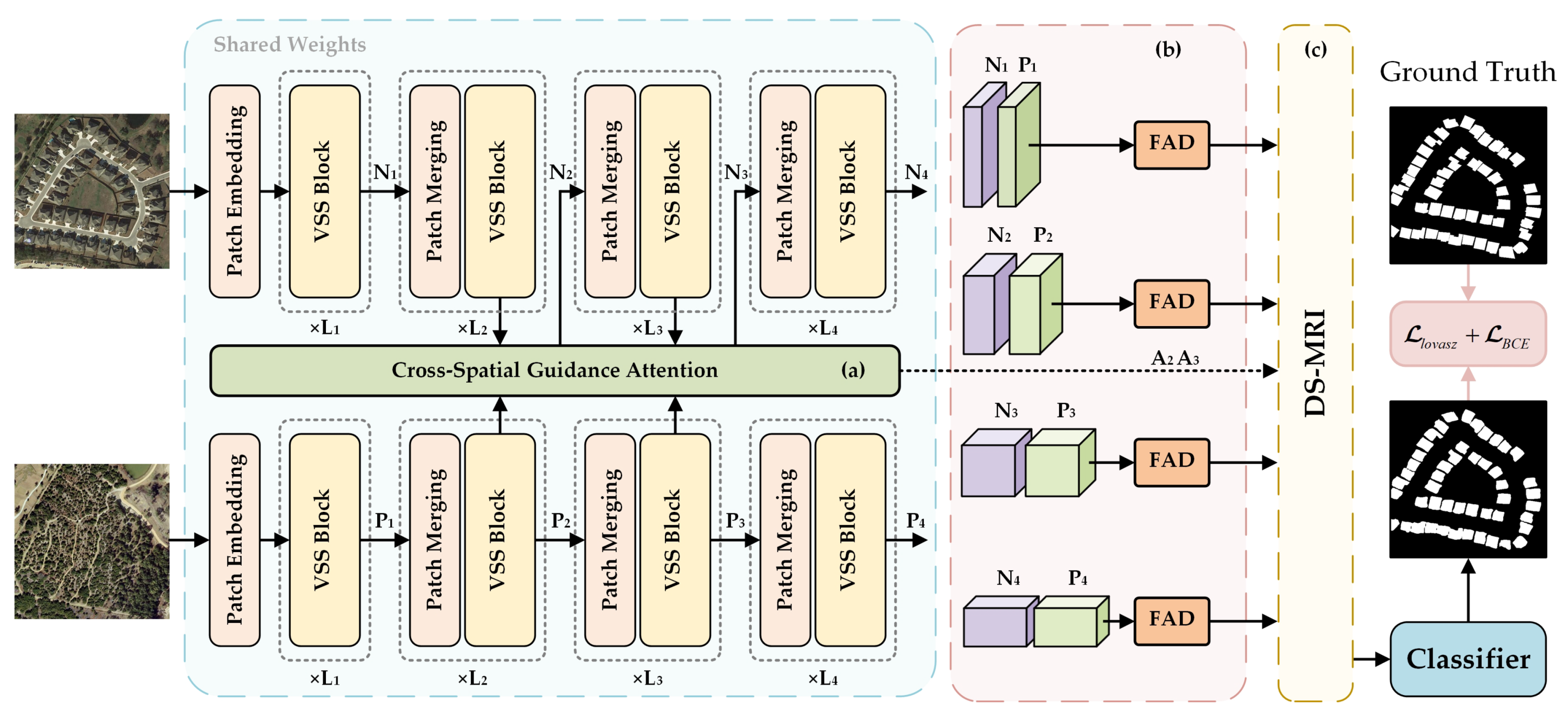

The architecture of the proposed AGFNet is illustrated in Figure 2. It is composed of three main components: a Siamese feature extraction backbone with the CSGA module, a difference feature generation module, and a decoder.

Figure 2. Overview of the proposed AGFNet architecture. (a) CSGA module: provides guided feature maps for the backbone network, together with the attention maps that serve as scan guidance maps. Specific details are illustrated in Figure 3. (b) FADM. Specific details are illustrated in Figure 4. (c) DS-MRI decoder. Specific structural details are illustrated in Figure 5.

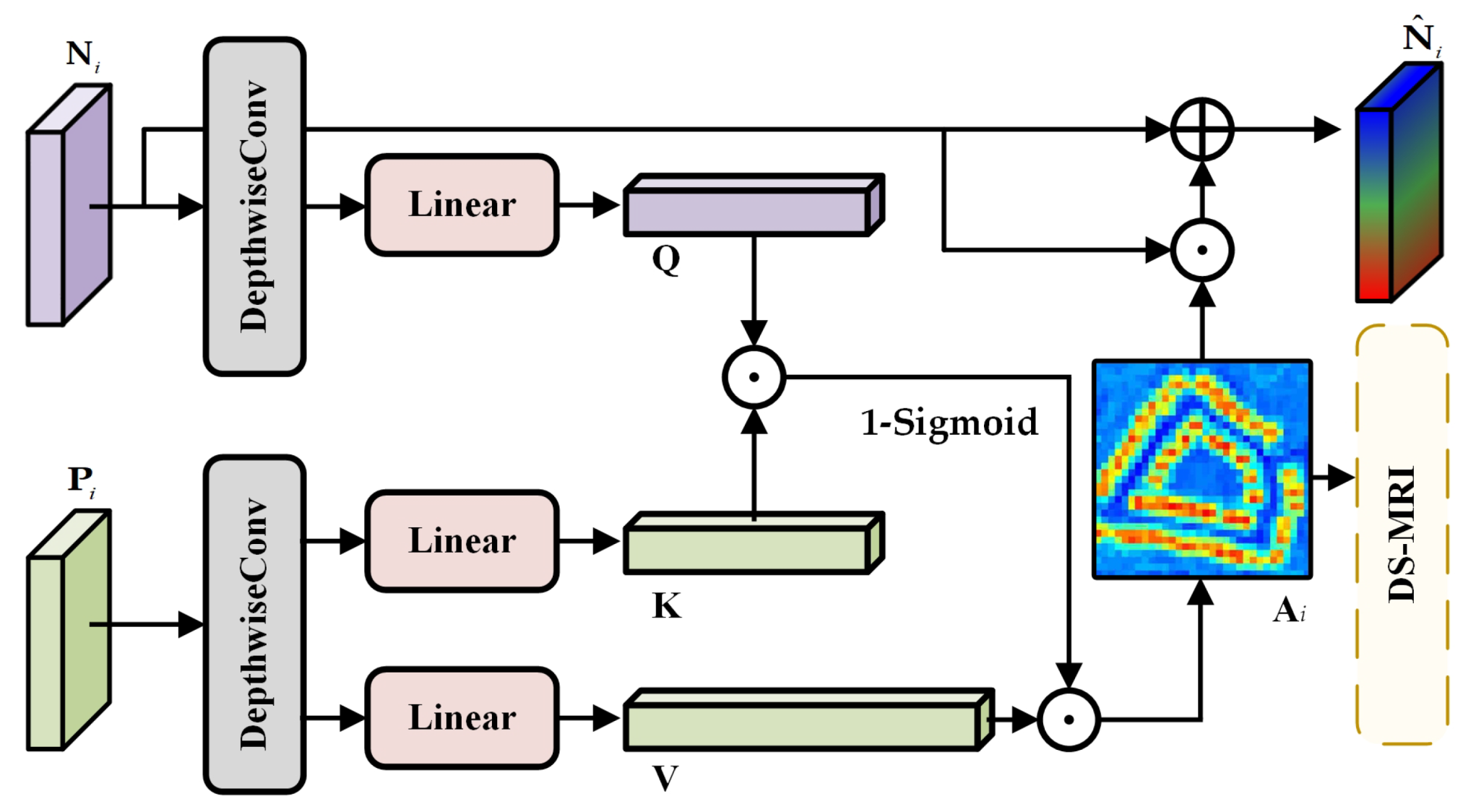

Figure 3. Illustration of the CSGA module. The two inputs are the i-th layer outputs from the T2 and T1 branches, where i ∈ {2, 3}. The refined output replaces the original feature map of the guided branch, while the attention map serves as the guidance map for subsequent scanning.

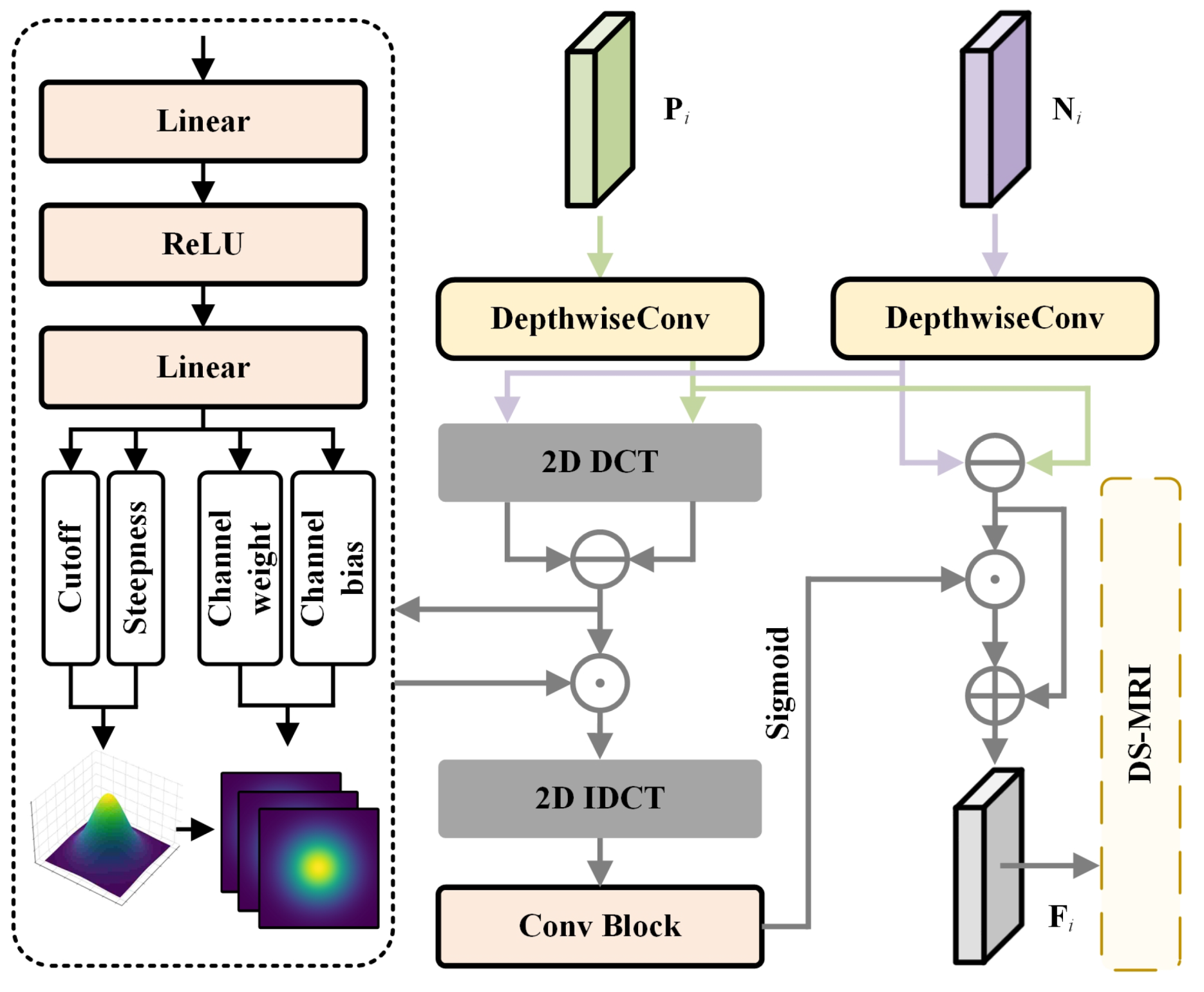

Figure 4. Illustration of the FADM. The module takes bi-temporal feature maps from the feature extraction network as input and outputs differential feature maps after adaptive difference processing. The set of differential feature maps from all levels constitutes the multi-level feature maps for the DS-MRI.

First, in the feature extraction stage, we adopt a Siamese network structure based on the VSS Block [22] to encode bi-temporal images separately. Each branch consists of a Patch Embedding layer, multiple VSS Blocks, and a Patch Merging module. Unlike conventional independent extraction strategies, we integrate the CSGA module into the backbone network. Given the bi-temporal remote sensing images T1 and T2, we process both through the feature extraction network for layer-by-layer encoding. At the second and third stages, outputs from the T1 and T2 branches undergo cross-spatial guidance in the CSGA module. The guided feature maps are subsequently used as inputs for further extraction. Finally, we obtain four-level feature maps from each of the two temporal branches.

Next, in the difference stage, we propose the FADM to model differences between multi-scale feature pairs. Specifically, the input bi-temporal features are first processed with frequency-domain filtering to suppress high-frequency pseudo-change components, then aligned in dimensionality through a frequency-domain channel attention mechanism, and finally highlighted in the spatial domain to emphasize true change regions. This process effectively enhances the discriminability of differential features, thereby providing high-quality inputs for subsequent fusion.

In the decoding stage, we design DS-MRI, which progressively integrates differential features across multiple scales. Specifically, we propose the Attention-Guided State Space (AGSS) mechanism within this structure, which leverages attention maps from the CSGA module to generate dynamic scanning orders, thereby overcoming the limitations of conventional fixed scanning patterns. AGSS can adaptively model multi-scale contextual information based on the spatial distribution of change regions, significantly improving the localization and reconstruction of change areas.

Finally, the fused features are fed into a classification head to generate prediction results, and the model is trained through joint optimization of binary cross-entropy loss and Lovasz loss.
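A joint objective of this kind can be sketched as follows. The sketch makes two assumptions that the text does not specify: it uses the binary Lovász hinge variant of the Lovász loss, and it combines the two terms with a hypothetical weight `lovasz_weight` set to 1.

```python
import numpy as np

def bce_with_logits(logits, labels):
    """Numerically stable binary cross-entropy on raw logits."""
    return float(np.mean(np.maximum(logits, 0.0) - logits * labels
                         + np.log1p(np.exp(-np.abs(logits)))))

def lovasz_grad(gt_sorted):
    """Gradient of the Lovasz extension of the Jaccard loss w.r.t. sorted errors."""
    total = gt_sorted.sum()
    intersection = total - np.cumsum(gt_sorted)
    union = total + np.cumsum(1.0 - gt_sorted)
    jaccard = 1.0 - intersection / union
    jaccard[1:] = jaccard[1:] - jaccard[:-1]
    return jaccard

def lovasz_hinge(logits, labels):
    """Binary Lovasz hinge over a flat vector of pixels."""
    signs = 2.0 * labels - 1.0
    errors = 1.0 - logits * signs
    order = np.argsort(-errors)            # largest hinge error first
    grad = lovasz_grad(labels[order])
    return float(np.dot(np.maximum(errors[order], 0.0), grad))

def joint_loss(logits, labels, lovasz_weight=1.0):
    """BCE plus weighted Lovasz hinge; the weighting is an assumption."""
    logits = np.asarray(logits, dtype=float).ravel()
    labels = np.asarray(labels, dtype=float).ravel()
    return bce_with_logits(logits, labels) + lovasz_weight * lovasz_hinge(logits, labels)

labels = np.array([[1, 0], [0, 1]])
confident_right = 10.0 * (2 * labels - 1)   # large margin, correct sign
confident_wrong = -confident_right
loss_right = joint_loss(confident_right, labels)
loss_wrong = joint_loss(confident_wrong, labels)
```

The cross-entropy term scores each pixel independently, whereas the Lovász term is a surrogate for the region-level Jaccard index, which is why the two are commonly paired when changed pixels are rare.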

Section 3.3, Section 3.4 and Section 3.5 provide detailed descriptions of these three key components.

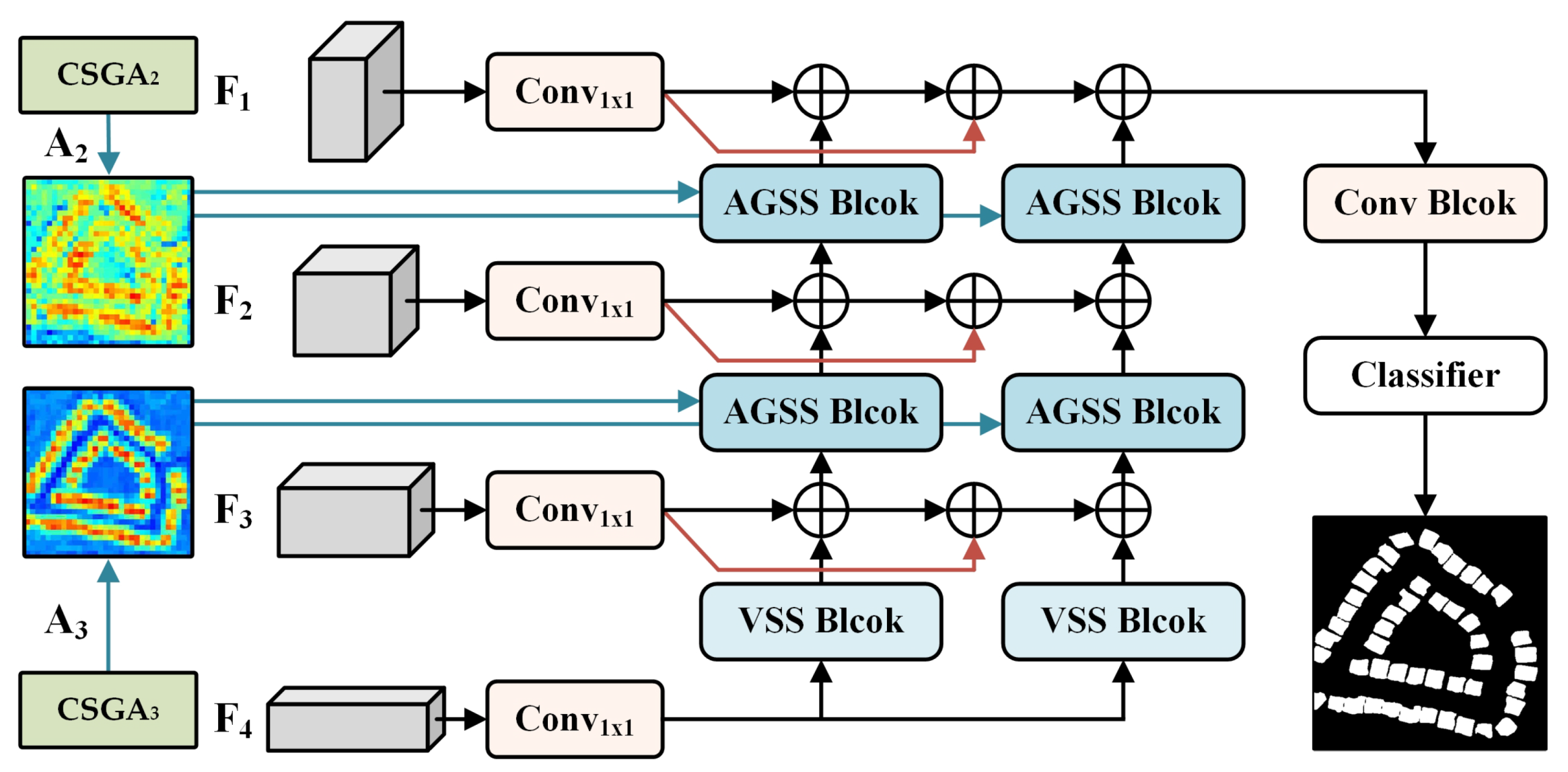

Figure 5. Illustration of the DS-MRI. The two guidance inputs are the attention maps output by CSGA2 and CSGA3, and the remaining inputs are the feature maps output by FADM at each layer.

3.3. Siamese Feature Extraction Network Based on VSS Block with Cross-Spatial Guidance Attention Module

In the feature extraction stage, AGFNet employs a Siamese network based on VSS Blocks, where two parameter-sharing branches encode bi-temporal images separately to ensure feature consistency. The input images are first split into non-overlapping patches and embedded into high-dimensional features via a patch embedding module. These features are then passed through alternating stacks of VSS Blocks and patch merging layers to progressively extract multi-scale representations. As a state-space modeling unit, the VSS Block captures long-range dependencies with linear complexity, providing superior contextual modeling in large-scale remote sensing scenarios.

However, conventional Siamese architectures typically perform independent feature extraction for bi-temporal images, neglecting cross-temporal interactions at the early stage, which often results in amplified pseudo-changes and poor alignment of actual changes. To establish stronger spatial dependencies between bi-temporal features, we design the Cross-Spatial Guidance Attention (CSGA) module, whose structure is illustrated in Figure 3. The core idea of this module is to leverage the spatial contextual information of one temporal branch as guidance to dynamically refine the features of the other branch.

Specifically, given the bi-temporal feature representations produced by the T1 and T2 branches, we first apply a depthwise separable convolution block, DWConv(·), to each of them. This block models local contextual dependencies, extracting detailed contextual information and suppressing pseudo-changes. Subsequently, the feature of one branch is taken as the guiding feature and that of the other as the guided feature, and a query mapping is performed.

Then, cross-temporal spatial guidance weights are computed via an attention mechanism built on the Sigmoid activation function $\sigma$. Unlike conventional cross-attention mechanisms, we employ a reverse activation scheme, i.e., the attention values are inverted, whereas the standard attention mechanism is typically formulated as:

$$
\mathrm{Attention}(Q, K, V) = \mathrm{Softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V.
$$

Essentially, traditional attention mechanisms aim to identify “matching regions”, where locations with high similarity between the query and key features are amplified. However, in the context of change detection, the focus shifts from similarity to differences. In addition, Softmax normalization introduces competition among pixels for attention allocation. In change detection tasks, it is common that certain regions exhibit no significant changes or that all regions have changed. When some regions show no noticeable change, they are still forced to receive a portion of the attention; conversely, when all regions change, the attention is either evenly distributed or allocated unpredictably, causing the guidance to become misleading. Therefore, we adopt the inverted Sigmoid, $1 - \sigma(\cdot)$, as a replacement for Softmax, making the cross-attention mechanism more consistent with the sparse and global response characteristics required for change detection tasks.
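The argument against Softmax can be checked on a toy example. The sketch below is illustrative only and does not reproduce the CSGA equations: it assumes a per-pixel scaled dot product as the similarity score and synthetic features, and it simply contrasts Softmax weights with inverted-Sigmoid weights in a scene with no change and a scene with one changed pixel.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def similarity(guided, guiding):
    """Scaled dot product between co-located feature vectors, shapes (P, C)."""
    return (guided * guiding).sum(axis=1) / np.sqrt(guided.shape[1])

rng = np.random.default_rng(0)
base = rng.normal(size=(6, 16)) * 2.0   # 6 pixels, 16 channels

# Scene 1: nothing changed, so both dates carry identical features.
sim_unchanged = similarity(base, base)

# Scene 2: only pixel 0 changed (its feature is negated for a clear contrast).
changed = base.copy()
changed[0] = -changed[0]
sim_one_change = similarity(changed, base)

softmax_unchanged = softmax(sim_unchanged)         # always sums to 1
inv_sig_unchanged = 1.0 - sigmoid(sim_unchanged)   # may be small everywhere
inv_sig_one_change = 1.0 - sigmoid(sim_one_change)
```

In the unchanged scene, Softmax still distributes a full unit of attention across the pixels, while the inverted Sigmoid stays low everywhere; in the second scene, the inverted Sigmoid responds strongly at the changed pixel only, without affecting the others.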

Subsequently, the guidance weights are applied to update the guided feature through element-wise multiplication (⊙), scaled by a learnable factor. In this way, the guided features are explicitly aligned and enhanced under the guidance of the other branch, thereby suppressing pseudo-changes and highlighting true change regions at an early stage.

The CSGA module is deployed only in the 2nd and 3rd layers of the encoder. Shallow features (1st layer) lack semantic information and are sensitive to both noise and misregistration, while deep features (4th layer) lose spatial details due to excessive abstraction. Intermediate layers strike an optimal balance between spatial detail and semantic richness, making them suitable for cross-temporal guidance.

By integrating CSGA, AGFNet achieves explicit interaction between bi-temporal features during encoding. The guided branch is refined under the guidance of the other branch, enhancing feature consistency and alignment, and providing a more robust foundation for subsequent difference modeling and decoding.

3.4. Frequency-Guided Adaptive Difference Module

In remote sensing change detection tasks, bi-temporal images are often affected by pseudo-change interference, primarily arising from illumination differences, seasonal variations, and geometric misregistration errors. Conventional strategies such as absolute differencing or feature concatenation tend to propagate these non-semantic discrepancies into subsequent representations, thereby weakening the model’s discriminative capability. To address this issue, we propose the Frequency-guided Adaptive Difference Module (FADM), whose structure is illustrated in Figure 4. By combining frequency-domain filtering with spatial-domain enhancement, FADM suppresses pseudo differences while preserving genuine changes.

Given the bi-temporal feature maps, we first employ non-shared depthwise separable convolution branches, denoted DWConv(·), to extract preliminary contextual features. Subsequently, the two resulting features are projected into the frequency domain, where a two-dimensional Discrete Cosine Transform (2D-DCT) is applied to obtain their spectra. Finally, the frequency-domain difference is computed from the two spectra.

The low-frequency components usually capture the global consistency of the scene, corresponding to stable semantic structures, whereas the high-frequency components are more susceptible to noise, misregistration errors, and environmental variations, in which pseudo-changes are predominantly concentrated. To suppress such pseudo-changes, we design an adaptive low-pass filter. Specifically, inspired by FCANet [49], we introduce a lightweight MLP that predicts four filtering parameters from the features of the low-frequency sub-block: the cutoff frequency, the steepness, the channel weight w, and the bias b. The filtering mask is built on the distance matrix of normalized frequency positions, which serves as the basis of the filter. By adjusting the steepness, the filter can achieve either smooth transitions or approximately ideal hard cutoffs, thereby offering a diverse range of frequency responses. To ensure the positivity and training stability of the steepness parameter, we apply the Softplus activation function to the predicted raw steepness values. In this manner, the filter can adaptively determine which high-frequency components should be suppressed and which should be preserved, dynamically accommodating varying noise levels across different scenarios.

This design offers several advantages. Unlike fixed high- or low-pass operations, the adaptive filter can automatically adjust to input image characteristics, thereby enhancing model robustness and generalization capability. Moreover, it achieves differentiable adaptive low-pass filtering with minimal parameters, rather than relying on convolution to globally predict the filter. Subsequently, the filtered frequency-domain differences are mapped back to the spatial domain via the inverse transformation, yielding a purified change signal.

This signal generates a spatial weight map, which encapsulates prior knowledge from frequency-domain analysis regarding the locations of significant changes. Meanwhile, another spatial branch directly computes the absolute difference between the features. Finally, the output is obtained by modulating the spatial difference with the frequency-derived weight map.

In summary, FADM balances the complementary strengths of frequency and spatial domains: frequency-domain differencing emphasizes global energy consistency and suppresses noise, while spatial differencing preserves local details and structural integrity. This integration effectively suppresses pseudo-changes while retaining true variations. A channel-wise weighting and bias mechanism further enhances robustness through selective emphasis and compensation. Moreover, predicting parameters via low-frequency sub-blocks enables efficient global modeling with minimal cost, which is significantly lighter than convolutional kernels or quadratic-complexity attention. The use of low-pass filtering aligns with the observation that high-frequency components often correspond to pseudo-changes, thereby preserving sensitivity to genuine semantic variations. Overall, FADM offers an efficient and robust dual-domain differencing paradigm for change detection.
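The dual-domain pipeline summarized above can be sketched end to end in NumPy. This is a sketch under several assumptions, not the FADM implementation: the four filter parameters are fixed constants rather than MLP predictions, the mask is taken to be a Sigmoid of steepness times (cutoff minus distance), the channel weight and bias are applied as a scale and offset on that mask, the weight map is a Sigmoid of the magnitude of the purified signal, and the depthwise convolution branches are omitted. All function names are hypothetical.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix of size n x n."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    m = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    m[0] /= np.sqrt(2.0)
    return m

def dct2(x):
    h, w = x.shape[-2:]
    return dct_matrix(h) @ x @ dct_matrix(w).T

def idct2(x):
    h, w = x.shape[-2:]
    return dct_matrix(h).T @ x @ dct_matrix(w)

def softplus(z):
    return np.logaddexp(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lowpass_mask(h, w, cutoff, raw_steepness):
    """Soft low-pass mask over normalized frequency distances in [0, 1]."""
    u = np.arange(h)[:, None] / max(h - 1, 1)
    v = np.arange(w)[None, :] / max(w - 1, 1)
    dist = np.sqrt(u ** 2 + v ** 2) / np.sqrt(2.0)
    steepness = softplus(raw_steepness)      # keeps the steepness positive
    return sigmoid(steepness * (cutoff - dist))

def fadm_sketch(f1, f2, cutoff=0.5, raw_steepness=3.0, weight=1.0, bias=0.0):
    """f1, f2: (C, H, W) feature maps; filter parameters are fixed assumptions."""
    _, h, w = f1.shape
    freq_diff = dct2(f1) - dct2(f2)                        # frequency-domain difference
    mask = weight * lowpass_mask(h, w, cutoff, raw_steepness) + bias
    purified = idct2(mask * freq_diff)                     # back to the spatial domain
    weight_map = sigmoid(np.abs(purified))                 # frequency-derived weights
    return weight_map * np.abs(f1 - f2)                    # modulated spatial difference

rng = np.random.default_rng(0)
f1 = rng.normal(size=(4, 8, 8))
f2 = f1 + 0.05 * rng.normal(size=(4, 8, 8))   # small pixel-level noise
f2[:, 2:5, 2:5] += 2.0                        # a spatially coherent change
out = fadm_sketch(f1, f2)
```

A larger raw steepness sharpens the transition around the cutoff, and a smaller one gives a gradual roll-off, which is the trade-off between hard and smooth filtering described in the text.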

3.5. Dual-Stage Multi-Scale Residual Integrator

In change detection, the decoding stage not only requires the progressive recovery of spatial resolution but also the effective integration of cross-temporal difference features. To this end, we propose the Dual-Stage Multi-Scale Residual Integrator (DS-MRI) decoder, whose core lies in a two-stage multi-scale residual fusion process: the first stage focuses on progressively enhancing cross-temporal alignment at both local and global levels, while the second stage further restores semantic consistency through residual iteration. The overall structure is illustrated in Figure 5.

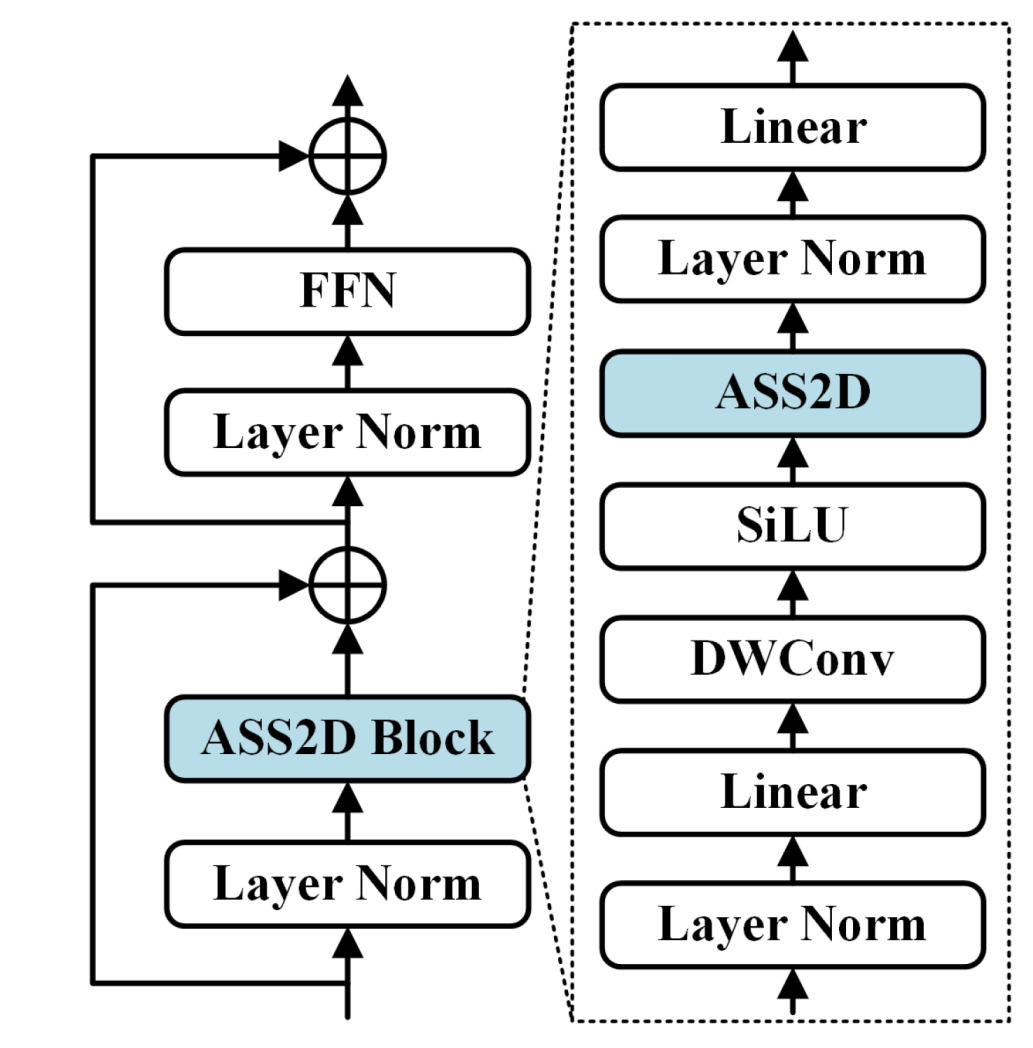

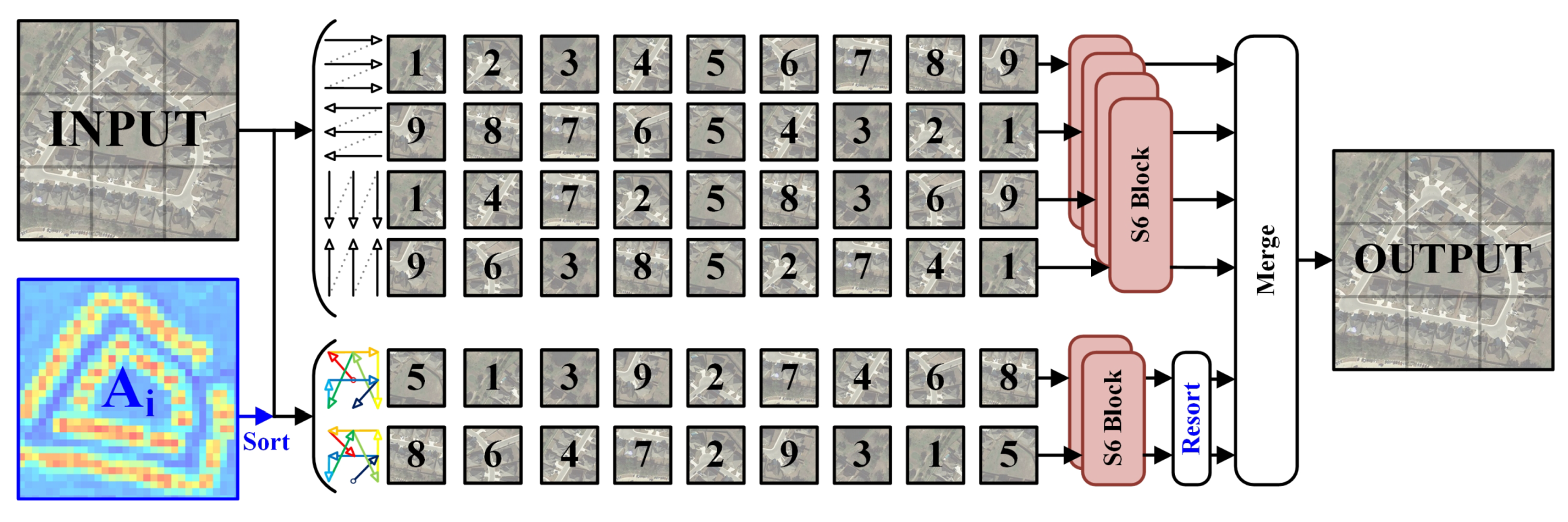

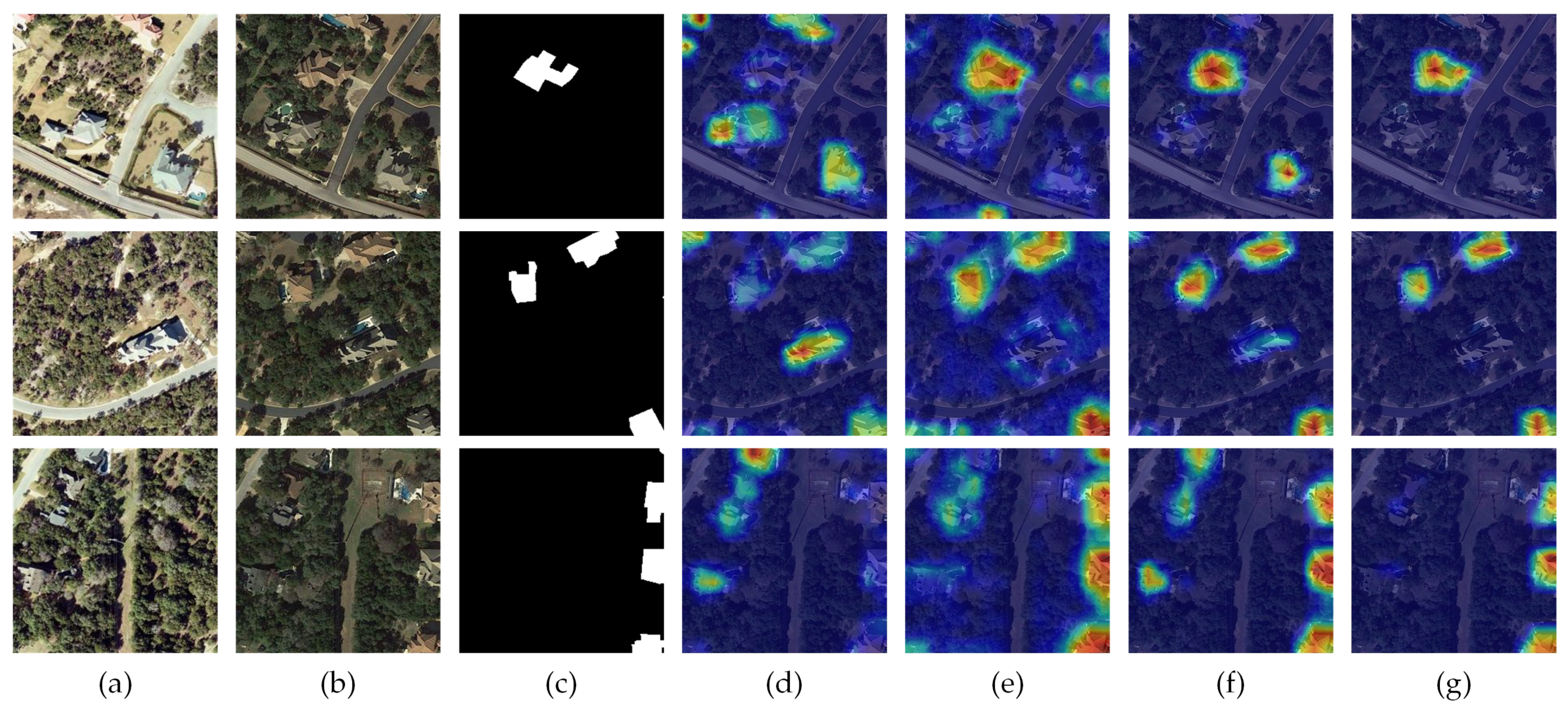

In DS-MRI, we design the Attention-Guided Selective Scan (AGSS) Block (Figure 6) to replace conventional self-attention or unconditional scanning. Unlike fixed-order scans, AGSS incorporates the spatial change probability map from the CSGA module to guide its scanning strategy. Specifically, we introduce a six-directional scanning approach: four original fixed-direction scans from the VSS Block are retained to capture anisotropic structures and ensure robust spatial propagation, while two new adaptive scan orders are introduced. The first order scans from high to low confidence regions, prioritizing early modeling of salient changes to prevent their dilution by background features. The second order scans from low to high confidence, gradually aggregating contextual information into high-confidence areas to correct boundary predictions and suppress isolated false differences.

These two orders are complementary: the first emphasizes early modeling of prominent regions, while the second provides semantic compensation from background areas. By fusing the four fixed-direction scans with the two adaptive scans in parallel, the AGSS Block combines the stability of spatial priors with the flexibility of attention guidance, effectively avoiding biases from a single scanning strategy and significantly improving overall robustness. Given the input feature map and the corresponding attention map output from CSGA, the ASS2D module in Figure 7 proceeds in three steps. A scan expansion operation unfolds the feature map along the four directions of the fixed scanning strategy and the two directions of the adaptive scanning strategy. The selective scanning operation, which serves as the core of the module, then processes each resulting sequence. Finally, a scan aggregation operation merges the scanned sequences back into a feature map.
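The six scan orders and the expand, scan, and aggregate steps can be sketched as index permutations. This is a simplified illustration rather than the ASS2D module itself: the selective scan is replaced by a causal exponential moving sum (`toy_scan`), aggregation is assumed to be a plain sum over the six paths, and all function names are hypothetical.

```python
import numpy as np

def scan_orders(attention):
    """Six permutations of the flattened H*W positions: four fixed, two adaptive."""
    h, w = attention.shape
    row_major = np.arange(h * w)
    col_major = row_major.reshape(h, w).T.ravel()
    high_to_low = np.argsort(-attention.ravel(), kind="stable")
    low_to_high = high_to_low[::-1]
    return [row_major, row_major[::-1], col_major, col_major[::-1],
            high_to_low, low_to_high]

def toy_scan(seq, decay):
    """Stand-in for the selective scan: a causal exponential moving sum."""
    out = np.empty_like(seq)
    state = np.zeros(seq.shape[1])
    for t in range(seq.shape[0]):
        state = decay * state + seq[t]
        out[t] = state
    return out

def guided_scan_2d(features, attention, decay=0.5):
    """features: (H, W, C) floats; attention: (H, W) change-confidence map."""
    h, w, c = features.shape
    flat = features.reshape(h * w, c)
    merged = np.zeros_like(flat)
    for order in scan_orders(attention):
        scanned = toy_scan(flat[order], decay)   # expansion, then sequence modeling
        merged[order] += scanned                 # aggregation: scatter back and sum
    return merged.reshape(h, w, c)

rng = np.random.default_rng(0)
attention = rng.random((4, 5))
features = rng.normal(size=(4, 5, 3))
orders = scan_orders(attention)
fused = guided_scan_2d(features, attention)
```

In the high-to-low order the most confident change position is visited first, so its state is propagated to every later position; the low-to-high order reverses this, letting background context accumulate before the salient positions are reached.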

In the DS-MRI processing pipeline, the inputs are the highly condensed difference maps obtained via FADM processing, one per scale, each associated with its own downsampling factor. First, a linear projection is applied to map features across different scales to a uniform channel dimension d, thereby eliminating channel discrepancies. Subsequently, the first stage employs a top-down, layer-wise residual propagation to gradually transfer high-level semantic information to shallower features, guided by the attention maps generated by the CSGA module introduced in Section 3.3. In the second stage, the outputs from the first stage are re-aggregated with the original input features and then progressively fed back to higher-resolution outputs through residual paths. Finally, the decoder output is produced by integrating these features via a series of convolutional blocks.

In summary, the DS-MRI decoder leverages a six-direction fusion mechanism (fixed spatial priors plus attention-guided adaptive scanning) to achieve comprehensive modeling of cross-temporal differential features. Driven by this two-stage residual integration framework, the model effectively balances the semantic relationships between salient change regions and background areas while restoring spatial resolution, ultimately yielding more robust and fine-grained change detection results.

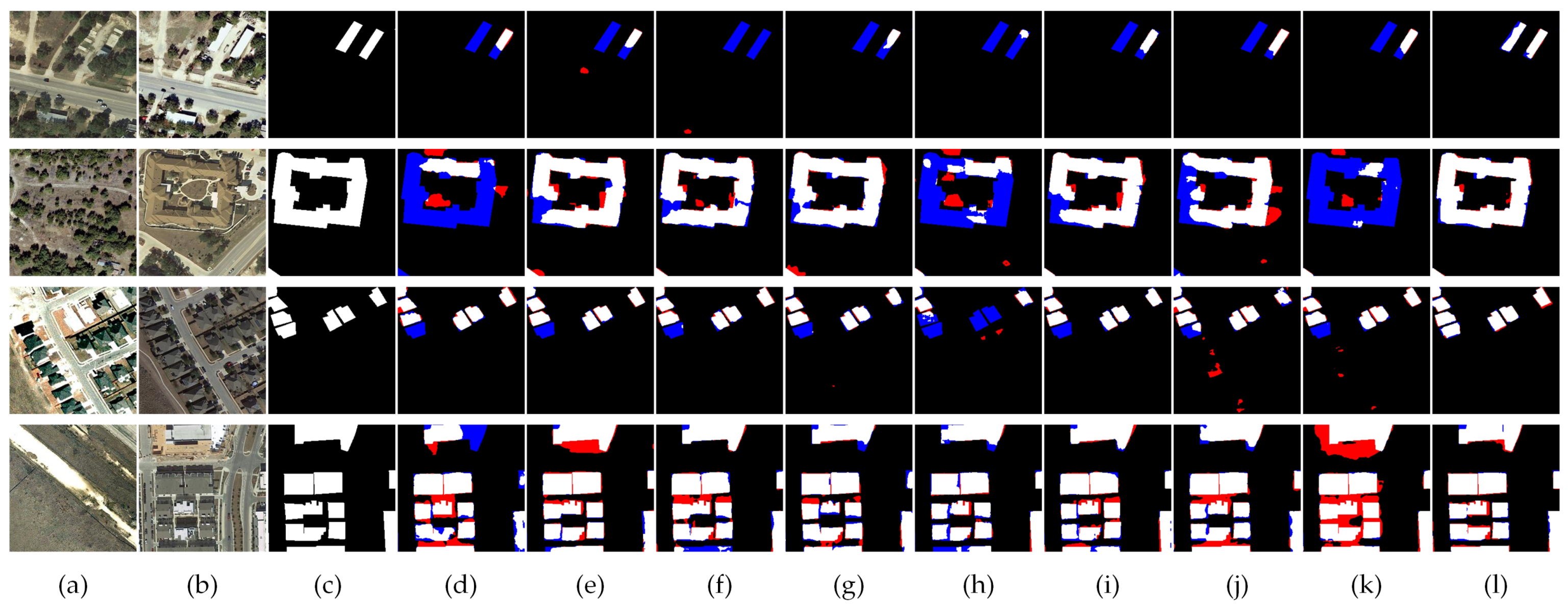

6. Conclusions

This paper addresses key challenges in high-resolution remote sensing change detection, such as insufficient exploitation of cross-temporal relationships and strong interference from irrelevant variations. We introduce AGFNet, a novel change detection framework that integrates a visual state space (VSS) model with 2D-DCT-based frequency-domain analysis. In the early feature extraction stage, the CSGA module performs unidirectional guidance to complement the backbone, aligning channel responses and providing explicit cues that facilitate subsequent feature extraction and difference modeling. Next, FADM processes bi-temporal feature pairs through parallel frequency–spatial operations within a lightweight architecture, efficiently producing highly discriminative difference representations. Finally, the DS-MRI decoder equipped with the AGSS Block adaptively models change regions through attention-guided scanning and progressively fuses multi-scale features.

Comprehensive experiments on three public datasets show that AGFNet achieves state-of-the-art performance in both accuracy and computational efficiency, demonstrating strong robustness in complex scenes and weak-change scenarios. Compared with competing methods, AGFNet delivers notably lower computational cost. Ablation studies further reveal the importance of both the VSS Block and the AGSS Block for enhancing semantic consistency and spatial detail reconstruction, while FADM effectively suppresses pseudo-change interference.

Although AGFNet exhibits strong detection accuracy and statistically significant improvements, its overall model efficiency still presents limitations for deployment in resource-constrained environments. Future work will explore model compression strategies—such as knowledge distillation and pruning—as well as architectural refinements to better balance accuracy and complexity. We believe these enhancements will further improve practical applicability while preserving the model’s statistical performance advantages.