4.2. Experimental Design

Six benchmark models were selected for comparison: the traditional bilinear interpolation method; two models based on simple CNN architectures, LinearCNN and SRCNN; and three models built on more complex CNN architectures, U-Net, Attention U-Net, and PMDRnet. All of these approaches are widely adopted, classical baselines in SR tasks.

4.2.1. Analysis of the Impact of Input Days on Model Performance

To establish a scientifically sound experimental workflow, we first conducted an exploratory analysis of the input sequence length to assess its impact on model performance. This step is essential for understanding the model’s sensitivity to temporal information and identifying the optimal input configuration, which in turn helps enhance the effectiveness and efficiency of subsequent SR reconstruction. Specifically, we evaluated the performance of SR estimation under different input durations (1 day, 3 days, 5 days, and 7 days). This comparison aims to explore how various network architectures respond to extended temporal inputs.

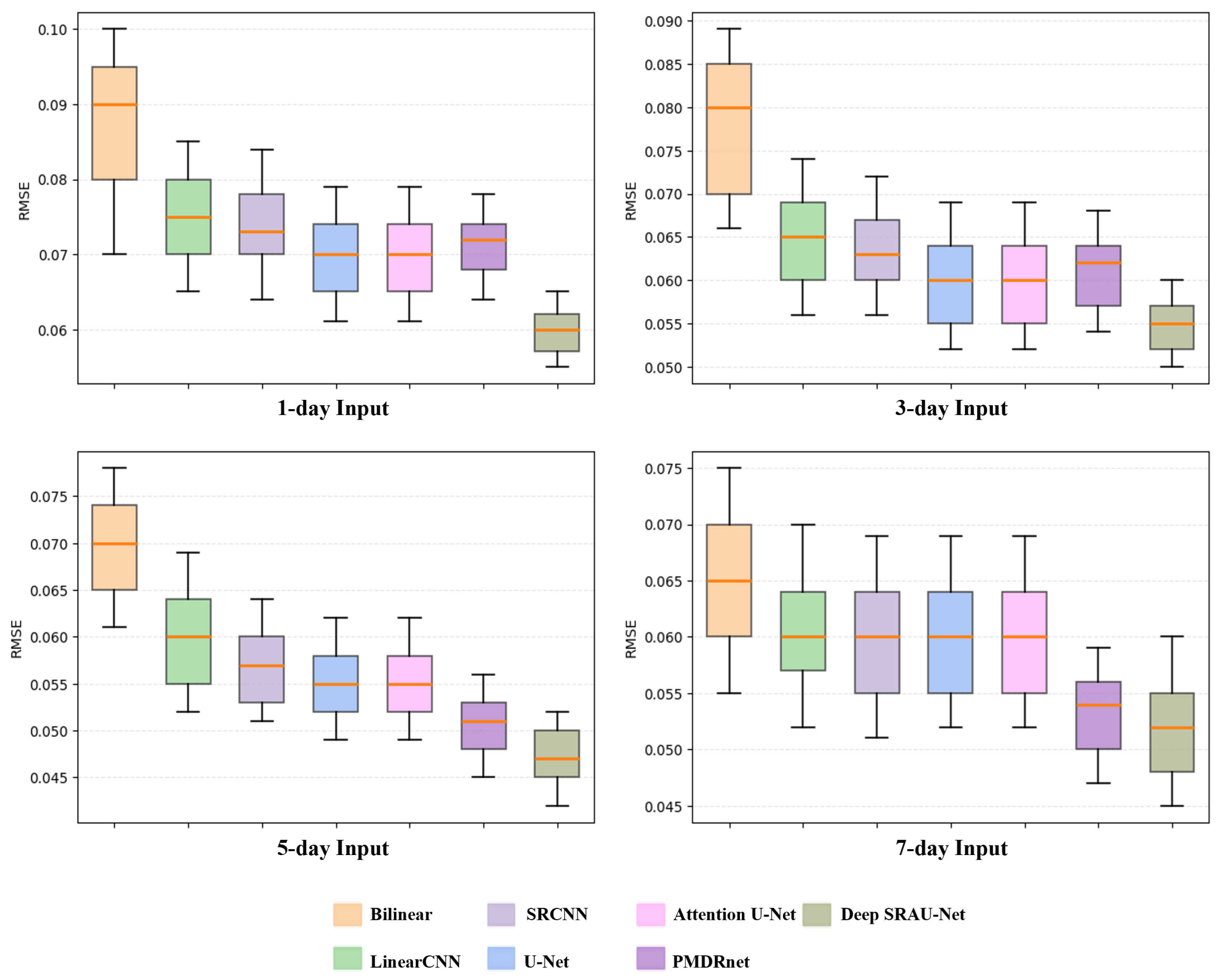

Figure 5 presents the impact of different input sequence lengths on model performance.

Figure 5 shows the RMSE distribution for various models with different input sequence lengths. Generally, the RMSE decreases progressively as the input sequence length increases from 1 to 5 days, suggesting that model performance improves with more input days, provided there is no overfitting on the validation set. It is noteworthy that deep CNNs, particularly U-Net, Attention U-Net, PMDRnet, and Deep SARU-Net, exhibit more concentrated RMSE distributions. Among them, Deep SARU-Net demonstrates the tightest distribution, followed by PMDRnet, while U-Net and Attention U-Net show comparable performance. This indicates that Deep SARU-Net achieves the least data fluctuation, the most stable distribution, and the best overall performance.

However, as the input sequence length increases, the risk of overfitting also rises. A longer input sequence provides the model with more temporal context, which helps capture the evolving patterns of SIC, but an excess of input information can lead the model to learn noise rather than generalizable features. In our experiments, when the input sequence length reached 7 days, most networks showed signs of overfitting, resulting in a noticeable decline in reconstruction performance: both the magnitude and the spread of the RMSE fluctuate at 7 input days, which is consistent with overfitting.

To mitigate overfitting and improve model performance, we selected a 5-day input sequence for the subsequent experiments. Under this configuration, Deep SARU-Net achieved a notable reduction in average RMSE, dropping to 0.0449. Furthermore, its correlation coefficient (r) increased to 0.9962, PSNR reached 32.03 dB, and SSIM rose to 0.9744, demonstrating significant improvements across all metrics.

4.2.2. Performance of the Self-Attention Mechanism

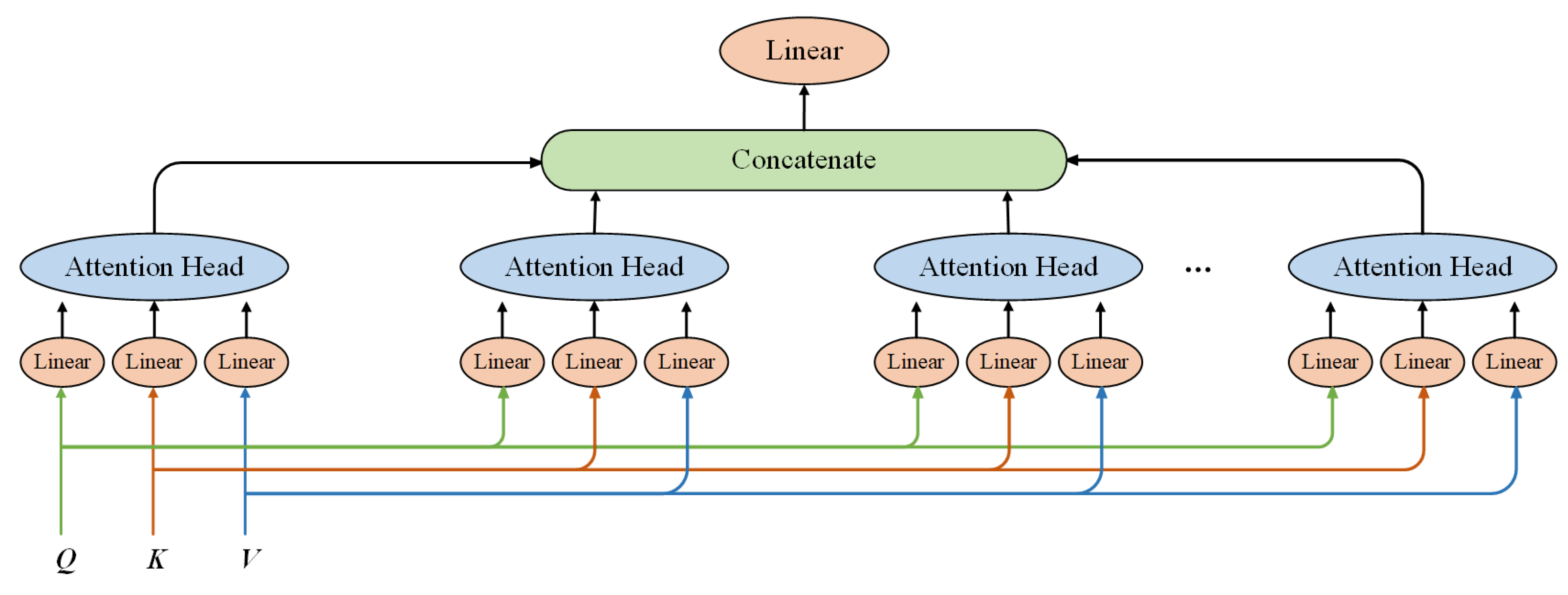

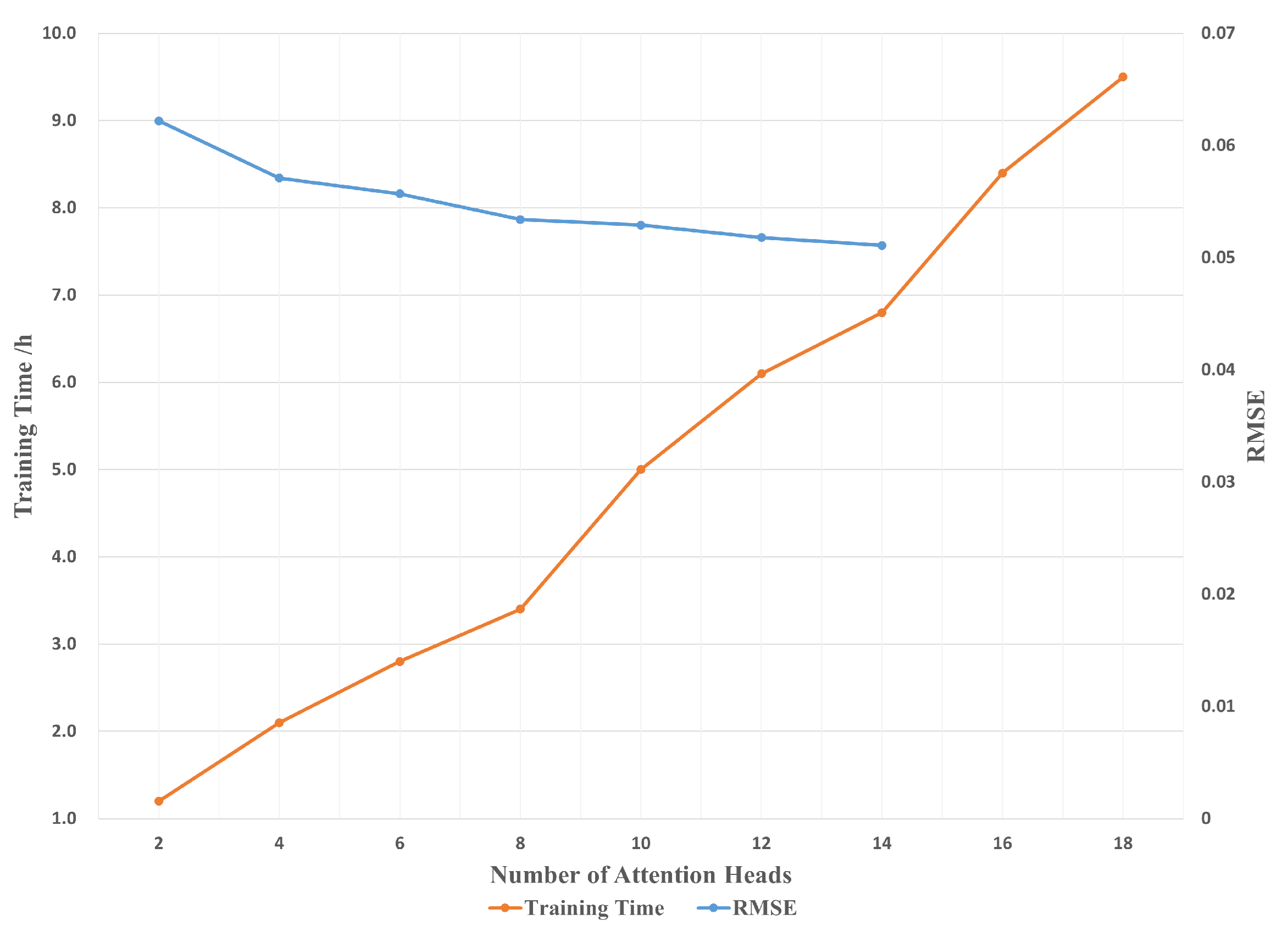

In the previous section, we identified 5 days as the optimal input sequence length. In this section, we further investigate the role and effectiveness of the self-attention mechanism embedded in the model architecture. Deep SARU-Net incorporates a multi-head self-attention mechanism, where each head captures different spatial and temporal feature correlations, thereby enhancing the model’s ability to learn complex dependencies. However, increasing the number of attention heads introduces notable computational and memory overhead, as each head involves intensive matrix operations. Moreover, an excessive number of heads may lead to overfitting, particularly when training data is limited, because the dimensionality of features processed by each head decreases, potentially causing information loss. Therefore, selecting an appropriate number of attention heads is critical for balancing computational efficiency, model capacity, and generalization performance. Based on a series of optimization trials, we set the number of attention heads to 8. The experimental results supporting this choice are presented in Figure 6.
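To make the trade-off concrete, the following is a minimal NumPy sketch of generic multi-head self-attention. It is not the Deep SARU-Net implementation: the function name, the random weight matrices, and the sizes (`d_model = 64`, 10 tokens) are illustrative assumptions. It shows that the per-head feature dimension is `d_model // num_heads`, so it shrinks as the number of heads grows.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the maximum for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """Generic scaled dot-product self-attention.

    x: (tokens, d_model); w_q, w_k, w_v, w_o: (d_model, d_model).
    Returns the attended features and the per-head attention weights.
    """
    tokens, d_model = x.shape
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads  # per-head dimension shrinks as heads grow

    def split(t):
        # (tokens, d_model) -> (num_heads, tokens, d_head)
        return t.reshape(tokens, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ w_q), split(x @ w_k), split(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (heads, tokens, tokens)
    weights = softmax(scores, axis=-1)
    heads = weights @ v  # (heads, tokens, d_head)
    merged = heads.transpose(1, 0, 2).reshape(tokens, d_model)
    return merged @ w_o, weights


# Hypothetical sizes chosen only for illustration.
rng = np.random.default_rng(0)
d_model, tokens = 64, 10
x = rng.normal(size=(tokens, d_model))
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) * 0.1 for _ in range(4))
out, attn = multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads=8)
```

With `d_model = 64`, 8 heads leave 8 features per head, while 16 heads would leave only 4. This is the mechanism behind the information-loss concern raised above.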

The curves in the chart clearly demonstrate that increasing the number of attention heads leads to a longer total training duration, reflecting higher computational resource demands. In terms of RMSE, which measures model accuracy, a lower value indicates a better fit to the observed data. As the number of attention heads increases to 8, RMSE consistently decreases, signifying improved model performance. However, beyond 8 attention heads, the reduction in RMSE plateaus, indicating diminishing returns on performance relative to the rising computational cost.

Additionally, when the number of attention heads exceeds 14, the model begins to exhibit signs of overfitting, suggesting that it may be capturing noise rather than true underlying patterns in the data. A comprehensive analysis indicates that the optimal balance between low RMSE and reasonable training time is achieved at 8 attention heads. This configuration ensures an effective trade-off between computational efficiency and model performance, maintaining strong spatiotemporal feature capture without excessive overfitting. Ultimately, we selected an input sequence length of 5 days and set the number of attention heads to 8 as the unified configuration for all subsequent experiments.

4.2.3. Run-Time Performance and Memory Requirements

In this study, we focus on a comprehensive analysis of several key aspects during the model execution process, including trainable parameters, memory consumption, total training time, and single SR estimation time. To ensure scientific validity and reliability, we implemented rigorous experimental designs and method controls.

To ensure consistency and comparability, we process data in batches of 40 to 60 samples, depending on memory availability. This experiment focuses on single-channel input and output, specifically targeting SR estimation for single-day data. When calculating trainable parameters, we account for the increased computational complexity as the network deepens. We consider the entire training cycle, including SR estimation, loss computation, and parameter optimization, to comprehensively evaluate model performance. For a single SR estimation time, we use the average inference time on the validation set as a representative measure.

To minimize errors caused by hardware response time and process delays, we conducted five experimental measurements for each model and averaged the results. This includes the total training duration (500 epochs, 4-year training set) and the total SR estimation time (1-year validation set). The single-step estimation time is calculated as the ratio of the total estimation time to the number of validation set inputs. Detailed metrics from different experimental runs for each model are summarized in Table 3.

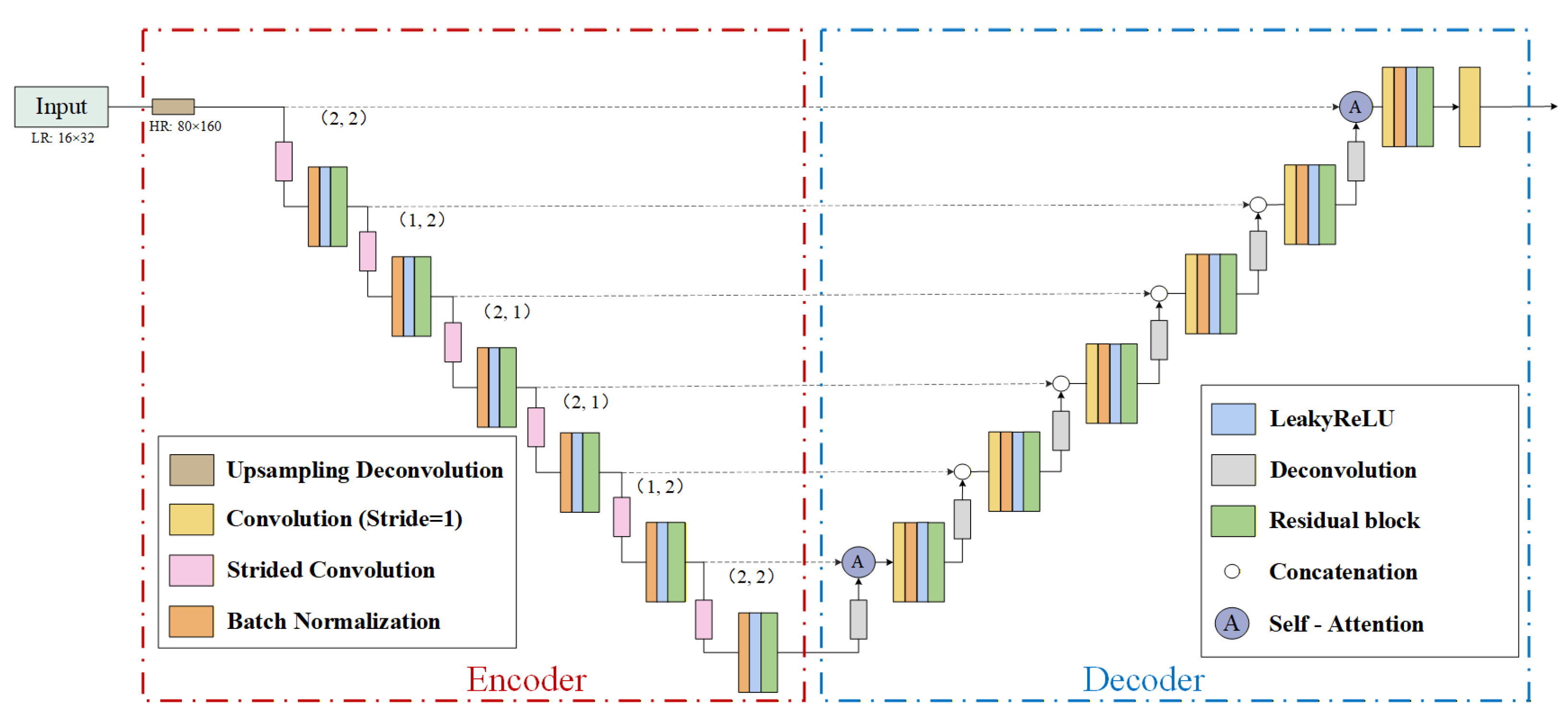
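The timing protocol described above (five repeated measurements, averaged, with the single-step time taken as total time divided by the number of inputs) can be sketched as follows. The helper names and the `dummy_estimate` stand-in are hypothetical. In practice the timed call would be the model's inference over the validation set.

```python
import time
from statistics import mean


def time_call(fn, *args):
    # Wall-clock time of one call, using a monotonic high-resolution timer.
    start = time.perf_counter()
    fn(*args)
    return time.perf_counter() - start


def average_timing(fn, inputs, repeats=5):
    """Return (mean total time, mean time per input) over `repeats` runs."""
    totals = [time_call(fn, inputs) for _ in range(repeats)]
    total = mean(totals)
    return total, total / len(inputs)


def dummy_estimate(batch):
    # Placeholder for SR inference; NOT the model used in the paper.
    return [sum(sample) for sample in batch]


# Assumed validation set size: one input per day for one year.
validation_inputs = [[0.1, 0.2, 0.3]] * 365
total_s, per_input_s = average_timing(dummy_estimate, validation_inputs)
```

Averaging over repeated runs reduces the influence of one-off delays such as process scheduling, which is the stated motivation for the five measurements.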

Experimental results demonstrate that increasing model complexity leads to a substantial rise in computational costs. Compared to the simpler LinearCNN and SRCNN models, deep CNNs based on the U-Net architecture show a significant escalation in both parameter scale and computational time. The Attention U-Net, due to the incorporation of the attention mechanism, also exhibits a slight increase in parameters and computation time compared to the standard U-Net. PMDRnet, with its parallel multi-branch design, registers the highest parameter count and total training duration. To address this challenge, our proposed Deep SARU-Net adopts a sequential convolutional design and incorporates self-attention mechanisms solely in the skip connections at the highest and lowest resolution levels, thereby reducing the number of trainable parameters. Compared to U-Net, Attention U-Net, and PMDRnet, Deep SARU-Net reduces the parameter count by 11.9%, 38.8%, and 75.1%, respectively. Furthermore, by strategically introducing residual blocks, we enhance the predictive performance without inflating the parameter count, giving Deep SARU-Net an advantage over the conventional U-Net and yielding marked improvements in memory consumption, total training time, and single-step estimation time.

Although Deep SARU-Net contains fewer parameters than the other three deep networks, its reconstruction performance has not yet been fully validated. Therefore, in the next section, we analyze the downscaling performance of different SR models.

4.2.4. Performance Evaluation of SR Estimation Models

This study aims to evaluate the performance of Deep SARU-Net in SIC SR estimation and compare it with six baseline models. All experiments are conducted under a unified configuration of 5-day input and 1-day output, with model performance assessed on the validation dataset using RMSE, correlation coefficient (r), PSNR, and SSIM. The detailed results are presented in Table 4.
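For reference, three of the four metrics can be written in a few lines of NumPy. This is a sketch under stated assumptions, not the evaluation code used here: SIC is assumed to be stored as a fraction in [0, 1], so the PSNR `data_range` defaults to 1.0, and the synthetic 80 × 160 fields are illustrative only. The source does not state the peak value or averaging convention used for PSNR, so values from this sketch need not match the reported ones. SSIM is omitted because it requires a windowed computation; a library routine such as scikit-image's `structural_similarity` is one option.

```python
import numpy as np


def rmse(sr, hr):
    # Root-mean-square error between the SR estimate and the HR reference.
    return float(np.sqrt(np.mean((sr - hr) ** 2)))


def pearson_r(sr, hr):
    # Pearson correlation over all grid points, flattened to 1-D.
    return float(np.corrcoef(sr.ravel(), hr.ravel())[0, 1])


def psnr(sr, hr, data_range=1.0):
    # Peak signal-to-noise ratio in dB; data_range is an ASSUMED peak value.
    mse = np.mean((sr - hr) ** 2)
    if mse == 0:
        return float("inf")
    return float(10 * np.log10(data_range ** 2 / mse))


# Synthetic example fields (not real SIC data).
rng = np.random.default_rng(0)
hr = rng.uniform(0, 1, size=(80, 160))
sr = np.clip(hr + rng.normal(scale=0.05, size=hr.shape), 0, 1)
scores = {"rmse": rmse(sr, hr), "r": pearson_r(sr, hr), "psnr": psnr(sr, hr)}
```

Lower RMSE and higher r and PSNR indicate a closer match to the HR reference.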

The table provides a comparative analysis of different models for single-day SIC SR estimation. Among the seven methods, bilinear interpolation exhibits the weakest performance, with the highest overall error and the lowest PSNR and SSIM values, indicating poor spatial detail enhancement. LinearCNN and SRCNN, as basic CNN models, improve spatial detail representation through convolution operations, outperforming bilinear interpolation. However, both employ uniform linear convolution kernels across the entire sea domain, producing general estimates while limiting precision, particularly for complex regions like sea ice edges and sea-land interfaces. As a result, their SR performance remains suboptimal in capturing fine-scale SIC variations.

The remaining four methods, U-Net, Attention U-Net, PMDRnet, and Deep SARU-Net, are more complex deep neural networks and overall demonstrate better performance. Standard U-Net and Attention U-Net achieve RMSE values of 0.0685 and 0.0642 on the validation set, respectively, but still fall short compared to the other two models. PMDRnet attains an RMSE of 0.0532, second only to Deep SARU-Net. This indicates that increasing the number of trainable parameters and incorporating attention mechanisms can indeed improve performance. However, higher model complexity also introduces the risk of overfitting. Even with L2 regularization and dropout applied, signs of overfitting remain observable. In addition, although PMDRnet achieves relatively strong performance in terms of RMSE, its SSIM is slightly lower than that of Attention U-Net. On the one hand, this is partly attributed to overfitting; on the other hand, it is related to the mismatch between data characteristics and model design. The original PMDRnet was specifically developed for processing AMSR2 passive microwave remote sensing images, whereas this study employs multi-source satellite remote sensing products that have been fused and regridded. These data differ substantially from raw AMSR2 imagery in terms of spatial resolution, noise properties, and statistical distribution, which reduces PMDRnet’s effectiveness in SR reconstruction.

Deep SARU-Net effectively mitigates overfitting by redesigning the encoder–decoder architecture and optimizing the integration of attention mechanisms with residual connections. This optimization not only reduces the number of trainable parameters and simplifies the network structure but also preserves strong generalization capability. Experimental results demonstrate that the model achieves the best performance in SIC SR estimation, with an RMSE of 0.0449, correlation coefficient (r) of 0.9962, PSNR of 32.03 dB, and SSIM of 0.9744. Compared to bilinear interpolation, RMSE is reduced by 59.29%, r is increased by 5.00%, PSNR improves by 51.77%, and SSIM rises by 13.15%. Compared with the next-best model, PMDRnet, the RMSE is reduced by 15.60%, the correlation coefficient r increases by 0.34%, the PSNR improves by 8.69%, and the SSIM rises by 1.67%. These improvements clearly highlight the model’s remarkable ability to enhance image quality and spatial correlation.
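The percentage changes quoted above read as relative differences with respect to the comparison model. The short sketch below assumes that definition (the function name is hypothetical) and checks it against the two RMSE values given in the text for PMDRnet (0.0532) and Deep SARU-Net (0.0449).

```python
def relative_change_percent(baseline, value):
    # Signed change of `value` relative to `baseline`, in percent.
    return (value - baseline) / abs(baseline) * 100.0


# RMSE of PMDRnet (baseline) versus Deep SARU-Net, both taken from the text.
rmse_reduction = -relative_change_percent(0.0532, 0.0449)  # about 15.60
```

For error metrics such as RMSE a negative change is an improvement, so the reduction is reported with the sign flipped. For r, PSNR, and SSIM the positive change is reported directly.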

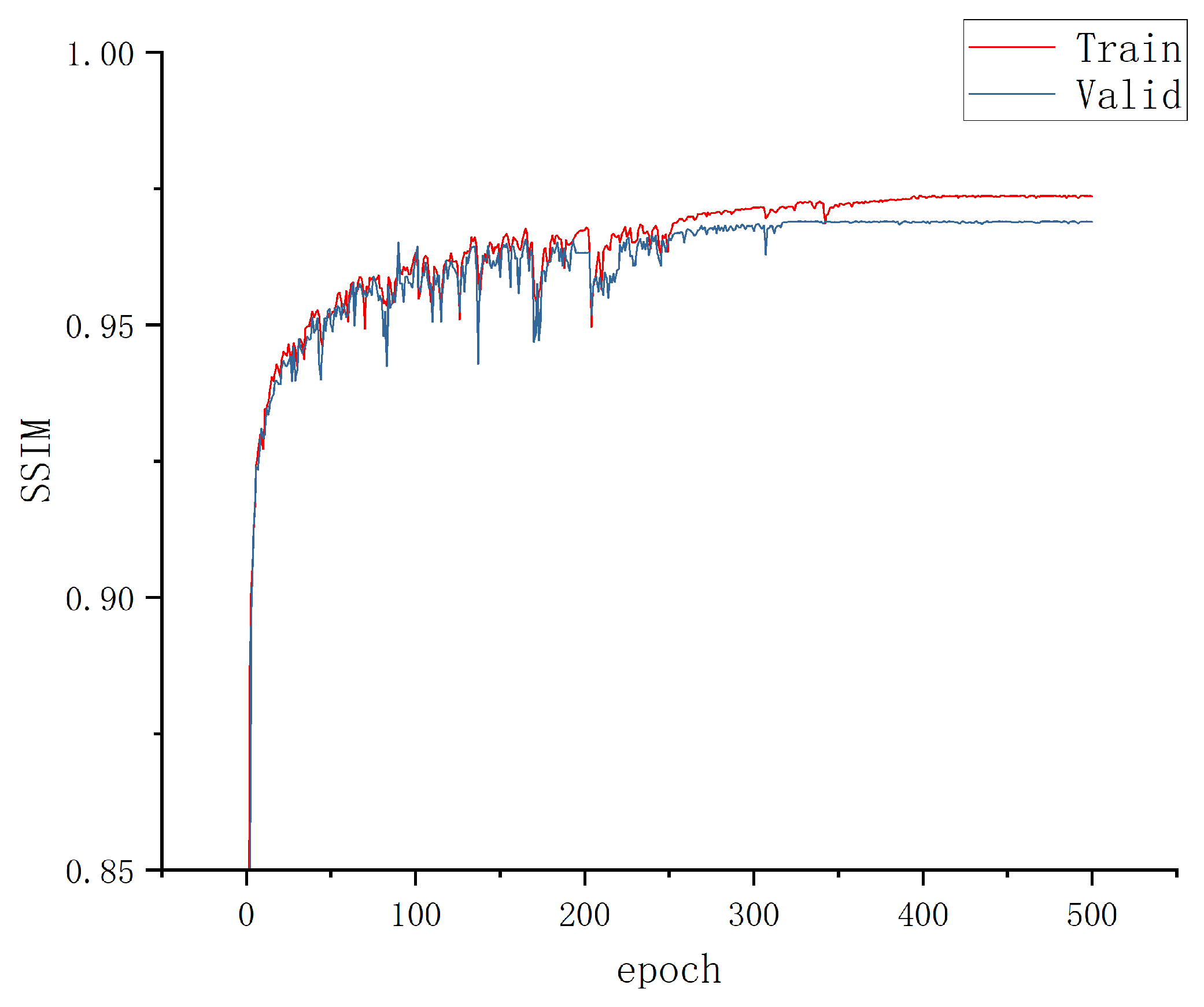

Figure 7 presents the training and validation loss curves (SSIM and RMSE) of Deep SARU-Net, showing no signs of overfitting.

4.2.5. Spatial Distribution of Estimation Results

The analysis of the above experimental results primarily relies on data-based metrics. However, these metrics provide limited insight and may not intuitively or comprehensively present the SIC SR estimation outcomes and their spatial error distributions. In this section, we conduct a more detailed analysis from a spatial distribution perspective, focusing on critical areas such as the seacoast, sea ice edges, and the interior of sea ice. By comparing HR ground truth with the SR estimations produced by the model, we aim to provide a more intuitive understanding of the model’s performance in key locations, facilitating a deeper evaluation of its capabilities.

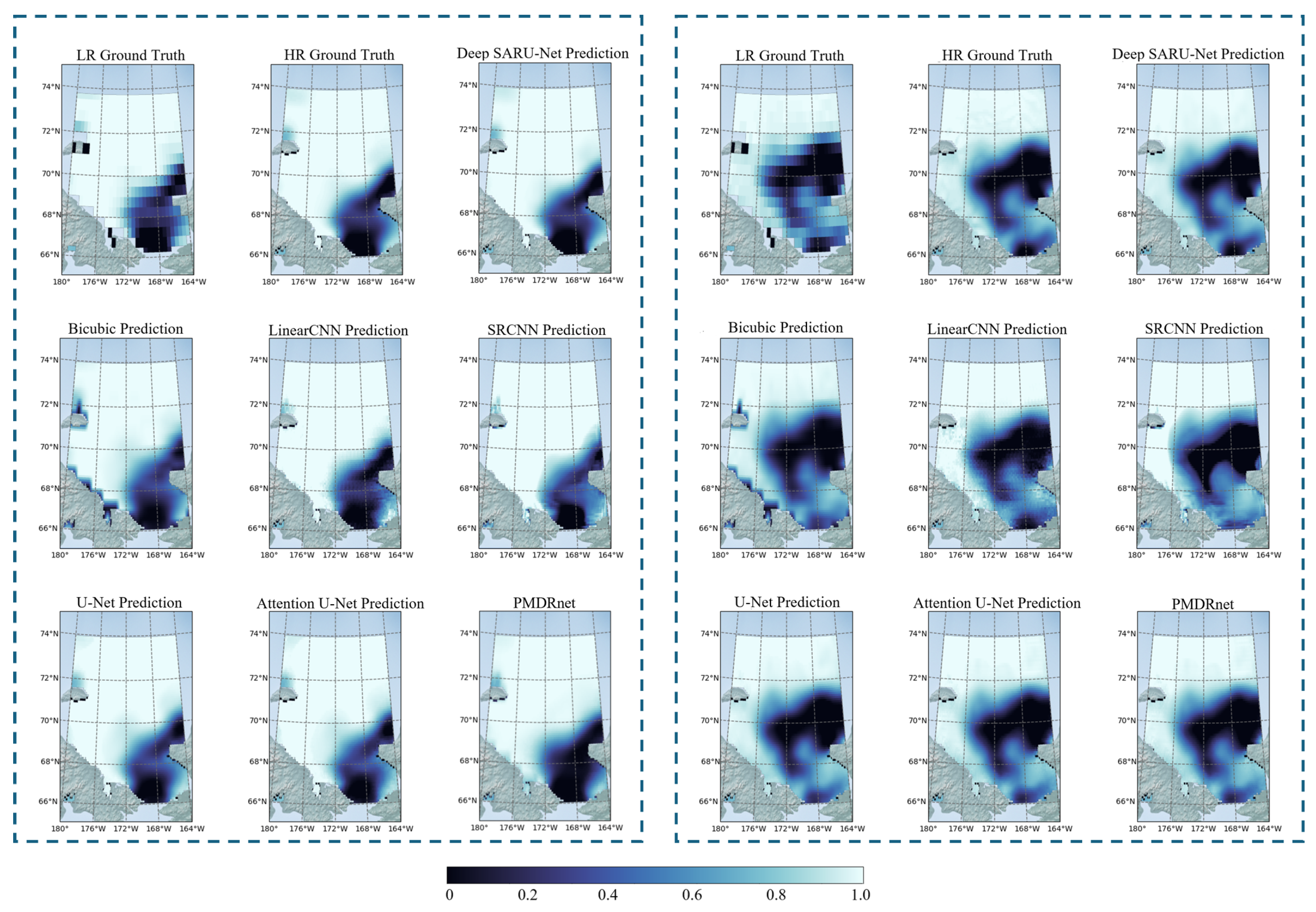

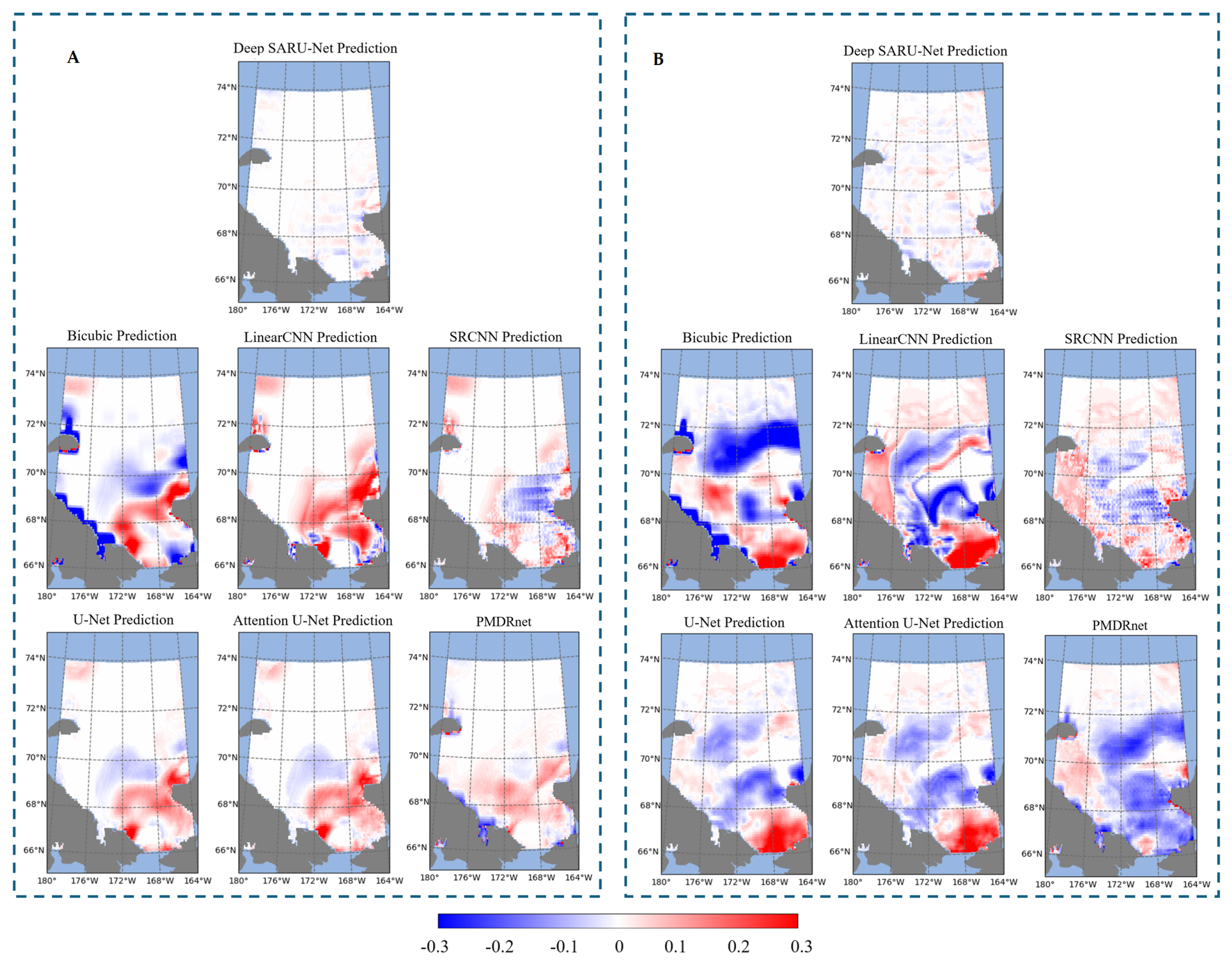

As mentioned earlier, the input sequence length is set to 5 days, using LR SIC data from days T−5 to T−1 to reconstruct the SR result for day T. Among the various models considered, we selected the group that performed best in the SSIM evaluation. The spatial distribution of the SIC SR estimation results is illustrated in Figure 8.
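The input and target pairing described above can be sketched as a sliding window over the daily fields. The function name and array shapes are assumptions for illustration: a 16 × 32 LR grid and an 80 × 160 HR grid correspond to a 5× SR factor, and the LR values here simply encode the day index so that the windowing is easy to inspect.

```python
import numpy as np


def make_windows(lr_series, hr_series, n_days=5):
    """Pair LR fields from days T-n_days..T-1 with the HR field on day T."""
    if len(lr_series) != len(hr_series):
        raise ValueError("LR and HR series must cover the same days")
    inputs, targets = [], []
    for t in range(n_days, len(lr_series)):
        inputs.append(lr_series[t - n_days:t])  # days T-n_days .. T-1
        targets.append(hr_series[t])            # day T
    return np.stack(inputs), np.stack(targets)


# Synthetic 30-day series whose values equal the day index (not real data).
days = np.arange(30, dtype=float)[:, None, None]
lr = days * np.ones((16, 32))
hr = days * np.ones((80, 160))
x, y = make_windows(lr, hr, n_days=5)
```

Here `x` has shape `(25, 5, 16, 32)` and `y` has shape `(25, 80, 160)`: the first 5 days have no complete history, so they yield no sample.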

Figure 8 shows the SIC SR estimation results for 1 June 2022 (first half of the year) and 28 December 2022 (second half of the year), respectively. In the SIC LR data, the sea ice distribution near the ice edge and coastal areas is largely inaccurate, especially in the southeastern part of the Chukchi Sea, where the floating ice appears blurry. Compared to the LR data, all seven models successfully perform SIC SR estimation, providing more accurate results for the detailed distribution of sea ice at the ice edge and within the sea ice.

Taking the left panel as an example, it is evident that bilinear interpolation produces blurred boundaries near the coastline. Within the ice edge and interior sea ice regions, the estimated results appear overly smoothed, lacking small-scale variations. In addition, checkerboard-like artifacts can be observed along the coast and in the southeastern Chukchi Sea. These artifacts are likely caused by differences in spatial resolution and grid structure between the LR and HR datasets, as well as the bilinear interpolation method applied during visualization.

LinearCNN and SRCNN, as relatively simple CNN-based models, improve the clarity of land–sea boundaries to some extent. However, significant inaccuracies remain at the sea ice edge. While LinearCNN performs better than SRCNN in certain aspects, it also introduces more pronounced artifacts that undermine reliability. In contrast, more complex deep CNN models—U-Net, Attention U-Net, PMDRnet, and Deep SARU-Net—achieve sharper boundaries and higher spatial SR estimation accuracy. Although Attention U-Net incorporates the CBAM attention mechanism, its performance improvement over U-Net remains limited. This limitation is primarily due to its large number of trainable parameters, which causes parameter redundancy, and the fact that its attention mechanism focuses only on spatial and channel features without effectively capturing more complex contextual dependencies. PMDRnet, despite employing temporal attention to integrate information from different time steps, still shows suboptimal reconstruction performance in the interior ice regions.

Overall, Deep SARU-Net demonstrates the best performance. By redesigning the network architecture and integrating residual networks with multi-head self-attention mechanisms, its performance is significantly enhanced. The residual network facilitates more efficient gradient propagation and accelerates training, while the multi-head self-attention mechanism enables the model to capture complex dependencies among sequence elements. As a result, Deep SARU-Net exhibits outstanding SR estimation capability in mixed ice–water regions, with superior detail preservation. A similar analysis applies to the right panel of Figure 8.

The SIC SR estimation maps alone do not clearly convey the small-scale distribution and magnitude of errors for the various methods. Therefore, we computed the differences between the SR estimates and the HR ground truth, referred to as residuals, to reveal each model’s performance more precisely.

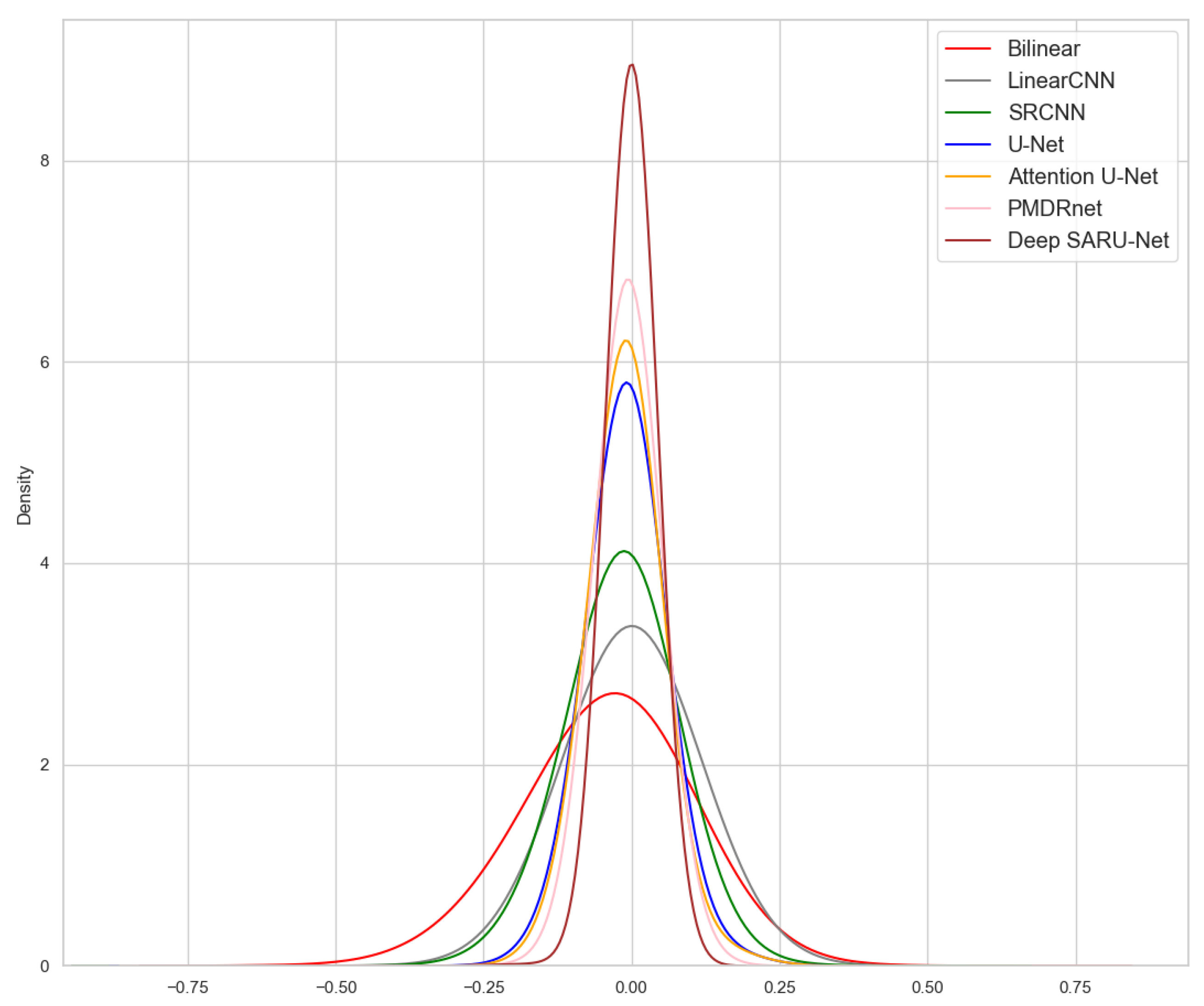

Figure 9 contrasts the residuals of different SR estimation models, vividly illustrating the disparities between the estimated outcomes and HR ground truth.

In the SR estimation results for 1 June 2022 (left panel), the bilinear interpolation model exhibits substantial residuals across large sea areas, underscoring its limitations in capturing the complex spatial patterns of sea ice distribution. Although LinearCNN and SRCNN, as convolutional neural networks, outperform interpolation overall, they still produce considerable residuals in dynamically complex regions such as coastlines and sea ice edges. This indicates that simpler models with fewer parameters struggle to fully represent the intricacies of sea ice dynamics. In contrast, U-Net and Attention U-Net demonstrate better generalization, with Attention U-Net showing a slight improvement in reducing residuals in critical areas. PMDRnet achieves smaller overall errors compared to Attention U-Net, with residuals generally constrained within the range of [−0.15, 0.15], though notable deviations remain in coastal regions. Most notably, Deep SARU-Net achieves the smallest residuals overall, with error values largely confined to [−0.1, 0.1], particularly excelling along sea ice edges and coastlines. These findings suggest that the redesigned architecture of Deep SARU-Net enables it to more effectively capture the complex spatiotemporal dynamics of sea ice, resulting in more accurate estimations.

In the SR estimation results for 28 December 2022 (right panel), the residual distributions across models remain consistent with the earlier findings. A comparison between the left and right panels reveals that winter residuals are generally larger than those in summer. This can largely be attributed to the prolonged “triple-dip” La Niña event since 2020, which lowered Pacific SSTs and influenced Arctic sea ice dynamics, including those in the Chukchi Sea. La Niña typically strengthens Arctic oscillations, intensifies westerlies, and reduces sea ice extent. In summer, cooler temperatures may stabilize sea ice cover, leading to more accurate estimations; in contrast, winter introduces greater variability in sea ice due to shifts in atmospheric circulation, complicating reconstructions. Despite these challenges, Deep SARU-Net successfully constrains residuals within [−0.06, 0.06], underscoring its robust SR performance.

To evaluate the residual distribution across the entire validation set, we applied Gaussian kernel density estimation, a non-parametric method widely used in statistical analysis. This experiment analyzed SIC SR estimations over 365 days (80 × 160 grid points per day, totaling over 4.6 million points). Using kernel density estimation, we statistically examined the residual distribution of different methods, as shown in Figure 10. This figure provides a comprehensive visualization of residual distributions across the validation set.
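As an illustration of the technique, a one-dimensional Gaussian kernel density estimate can be written directly in NumPy. This is a sketch under stated assumptions, not the analysis code behind Figure 10: the bandwidth follows Scott's rule of thumb, which the source does not specify, and the residuals are synthetic. For millions of points, the pairwise matrix built here would be too large, so subsampling or a library routine such as `scipy.stats.gaussian_kde` would be a more practical choice.

```python
import numpy as np


def gaussian_kde_1d(samples, grid, bandwidth=None):
    """Evaluate a Gaussian KDE of `samples` at the points in `grid`."""
    samples = np.asarray(samples, dtype=float).ravel()
    grid = np.asarray(grid, dtype=float)
    n = samples.size
    if bandwidth is None:
        # Scott's rule of thumb for 1-D data (an assumed default).
        bandwidth = samples.std(ddof=1) * n ** (-1.0 / 5.0)
    # Pairwise standardized distances: shape (len(grid), n).
    z = (grid[:, None] - samples[None, :]) / bandwidth
    kernel_sum = np.exp(-0.5 * z ** 2).sum(axis=1)
    return kernel_sum / (n * bandwidth * np.sqrt(2.0 * np.pi))


# Synthetic residuals (not real data): zero mean, standard deviation 0.05.
rng = np.random.default_rng(0)
residuals = rng.normal(loc=0.0, scale=0.05, size=5000)
grid = np.linspace(-0.5, 0.5, 401)
density = gaussian_kde_1d(residuals, grid)
```

A taller, narrower density curve centred on zero corresponds to residuals that are more tightly concentrated around the HR reference.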

Figure 10 illustrates the distinct residual distribution patterns across different methods. Bilinear interpolation exhibits the widest range and lowest kurtosis, with residuals primarily spanning [−0.5, 0.3], indicating lower accuracy. In contrast, LinearCNN and SRCNN produce more compact distributions with higher kurtosis, suggesting that CNNs can effectively capture spatiotemporal features for finer SR estimation. Moreover, the U-Net–based models demonstrate superior performance, with residuals becoming more concentrated and kurtosis substantially increased. The symmetric structure and skip connections of U-Net facilitate efficient feature integration, enhancing its capability for spatiotemporal SR tasks, while Attention U-Net further improves residual concentration through its attention mechanism. PMDRnet achieves a peak height second only to Deep SARU-Net, though the difference from Attention U-Net is marginal. Deep SARU-Net delivers the best overall performance, with the smallest residual variance and the most compact distribution, primarily confined to [−0.1, 0.1]. Its near-zero mean residuals and approximately random distribution indicate higher reconstructive accuracy.
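The descriptors used in this comparison (mean, variance, kurtosis, and the share of residuals inside a given interval) can be computed as in the sketch below. The function name is hypothetical, and kurtosis is taken here as excess kurtosis (zero for a normal distribution), which is an assumption because the source does not state which convention it uses.

```python
import numpy as np


def residual_summary(residuals, bound=0.1):
    """Summary statistics of a residual sample."""
    r = np.asarray(residuals, dtype=float).ravel()
    centered = r - r.mean()
    variance = np.mean(centered ** 2)
    # Fourth standardized moment minus 3 (excess kurtosis).
    excess_kurtosis = np.mean(centered ** 4) / variance ** 2 - 3.0
    return {
        "mean": float(r.mean()),
        "variance": float(variance),
        "excess_kurtosis": float(excess_kurtosis),
        "fraction_within": float(np.mean(np.abs(r) <= bound)),
    }


# Small hand-checkable sample and a large synthetic normal sample.
small = residual_summary([-0.2, -0.05, 0.0, 0.05, 0.2], bound=0.1)
rng = np.random.default_rng(0)
normal = residual_summary(rng.normal(scale=0.05, size=100_000), bound=0.1)
```

In the small sample, three of the five residuals lie within [−0.1, 0.1], so `fraction_within` is 0.6.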

4.2.6. Ablation Experiments on Different Modules

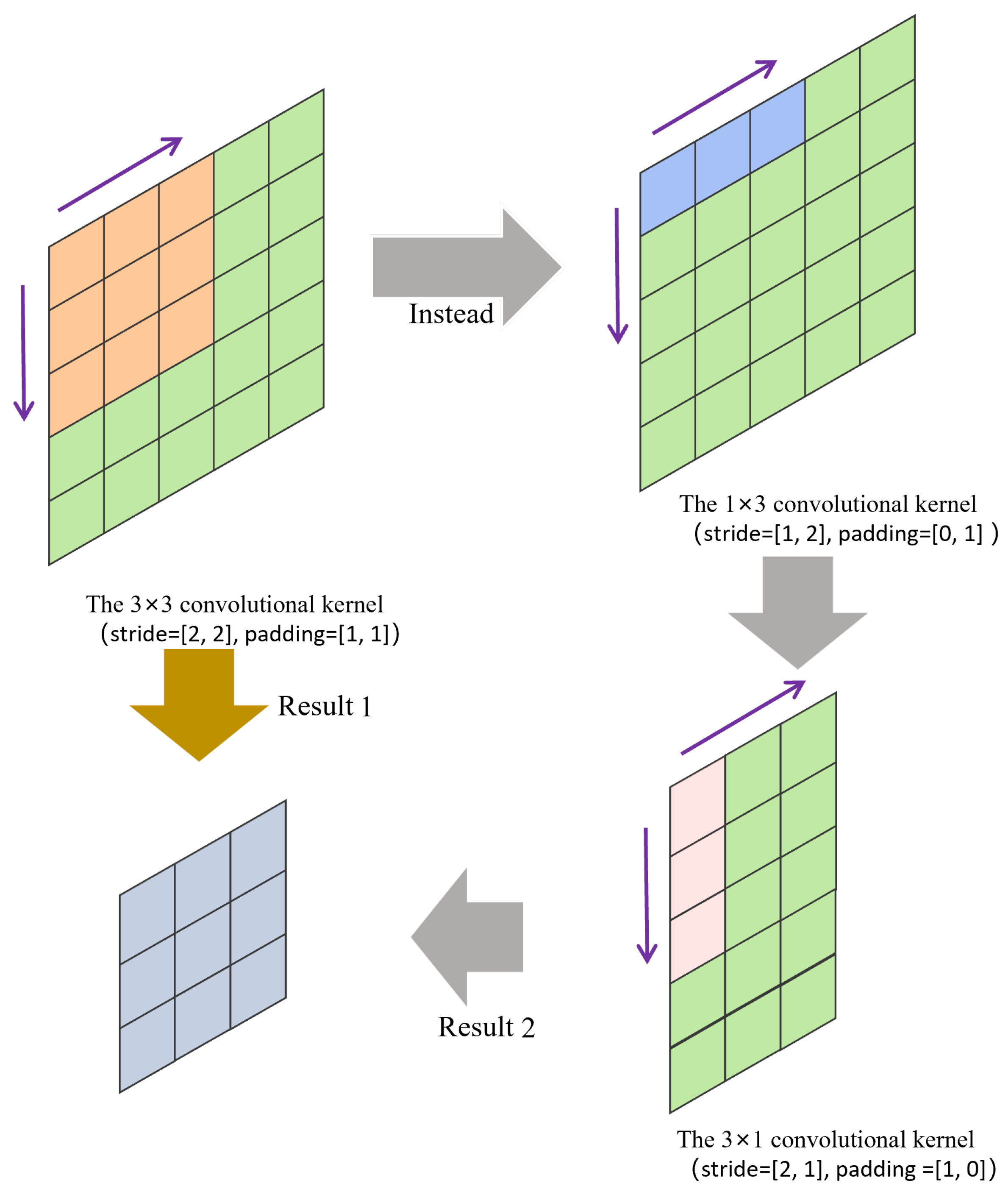

Following the previous experiments that validated the strong performance of Deep SARU-Net in Arctic SIC SR estimation, we conducted a series of ablation studies to systematically assess the effectiveness of each proposed architectural enhancement. By progressively removing key modules from the full model, we quantitatively evaluated their individual contributions to SR performance. This section focuses on three components: orthogonal rectangular convolutional kernels, residual blocks, and multi-head self-attention mechanisms.

To ensure fairness, all compared models were trained with the same dataset, loss functions, and optimization strategies, using a consistent input sequence length of 5 days and 8 attention heads (unless the attention mechanism was removed). Four model variants were constructed for comparison: (1) Baseline Model, a standard U-Net architecture with all enhancements removed; (2) Model 1, which adds orthogonal rectangular convolutional kernels to replace max pooling layers; (3) Model 2, which builds on Model 1 by incorporating residual connections; and (4) Deep SARU-Net, which adds multi-head self-attention mechanisms to form the full model.

Table 5 presents the performance metrics of each model on the validation set. The results show that each module contributes significantly to performance improvement, with accuracy and stability increasing as more enhancements are included. Compared to the baseline, adding orthogonal convolutions reduced RMSE from 0.0736 to 0.0619 and increased PSNR by approximately 1.15 dB, indicating better spatial feature representation. Introducing residual blocks further reduced RMSE to 0.0527 and raised the correlation coefficient r to 0.9939, confirming the benefit of residual paths in mitigating gradient vanishing and enhancing feature flow.

With the integration of the attention mechanism, the model achieved optimal performance across all metrics (RMSE = 0.0449, r = 0.9962, PSNR = 32.03, SSIM = 0.9744). This highlights the self-attention mechanism’s effectiveness in modeling complex spatial dependencies and enhancing sensitivity to subtle structures, especially in transition zones such as sea-land boundaries and fine-scale cracks.

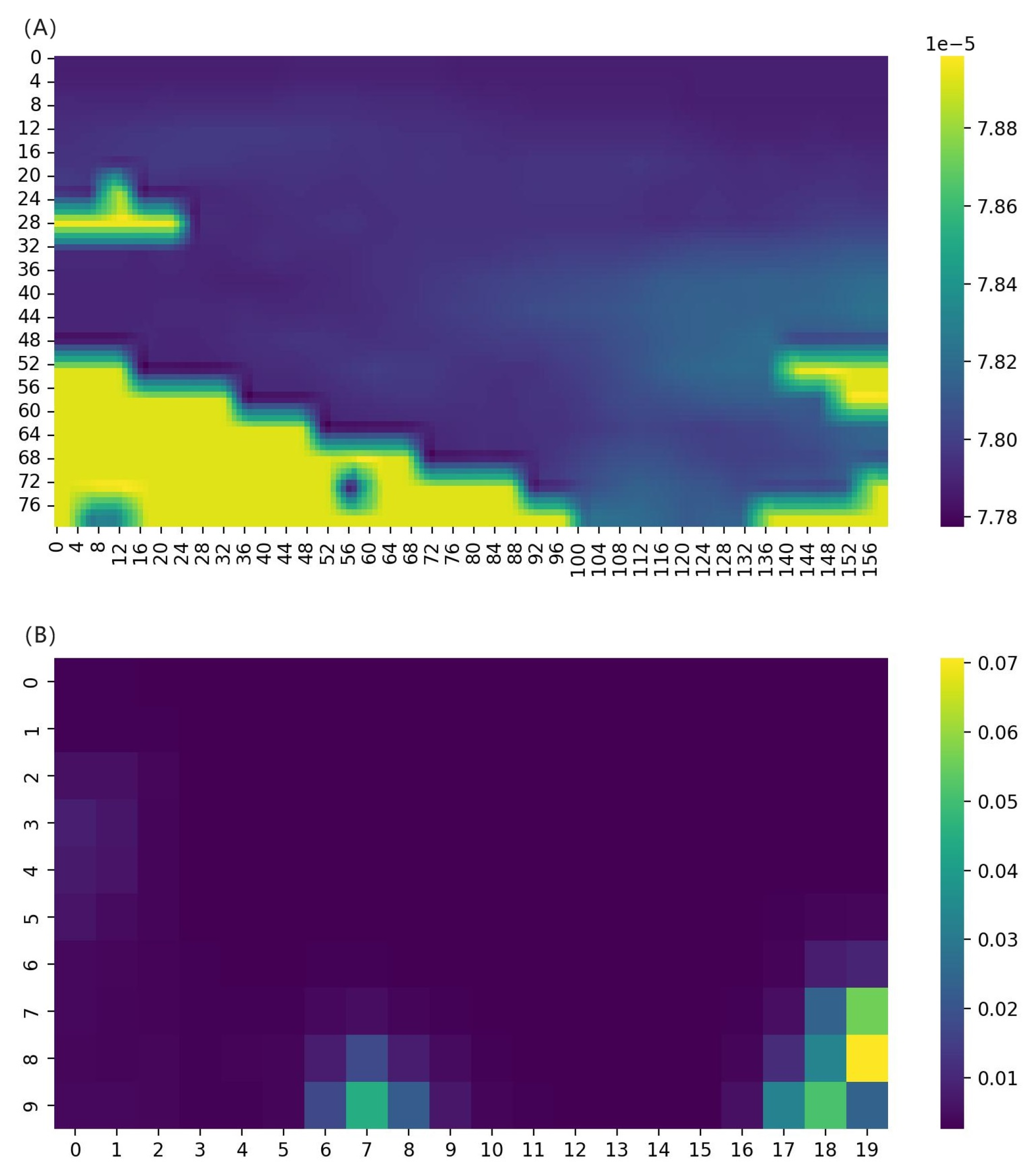

To further explore the spatial behavior of self-attention, we visualized the attention weight distributions at the skip connections of the first and last resolution stages. These results are shown in Figure 11.

Figure 11 presents the weight distribution of the self-attention mechanism in Deep SARU-Net across two resolution stages with skip connections. The color depth corresponds to attention weight magnitude.

Figure 11A,B depict the spatial distribution of attention weights for a specific channel. In Figure 11A, representing the highest resolution stage, the weights are concentrated along sea-land boundaries, emphasizing the model’s focus on capturing spatial correlations, which is crucial for distinguishing sea ice from land. Conversely, Figure 11B, at the lowest resolution stage, displays a more dispersed distribution, with heightened attention in key regions, likely corresponding to high SIC areas. These observations indicate that the multi-stage self-attention mechanism enhances the model’s capacity to capture both local and global sea ice structures.

4.2.7. Model Performance Across Sea Domains and Observation Datasets

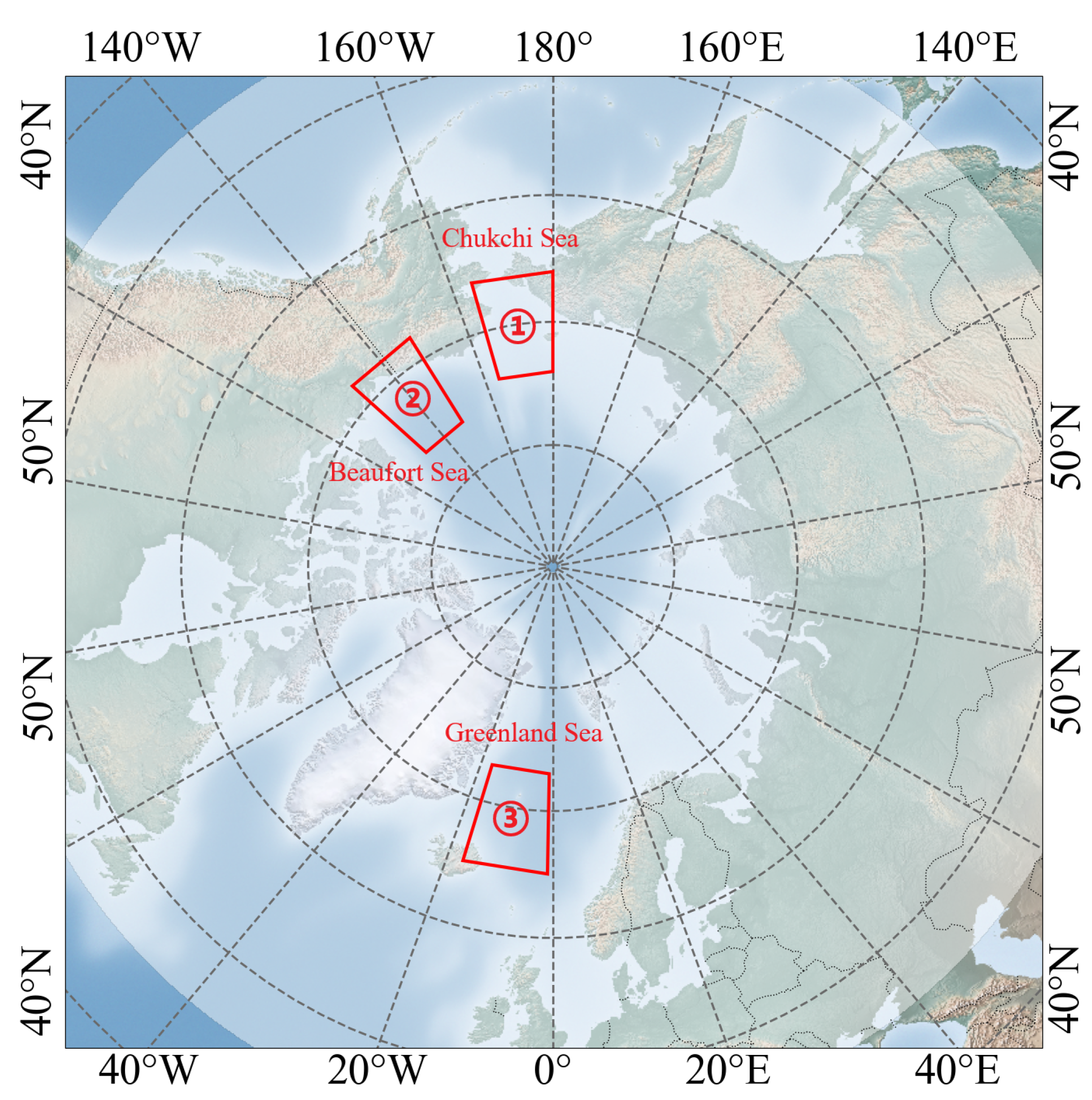

The preceding experiments analyzed Deep SARU-Net’s performance in SIC spatial SR estimation, primarily focusing on the Chukchi Sea. However, this localized evaluation limits our understanding of the model’s generalization across the broader Arctic region. For reference, mainstream HR SIC numerical models, such as global and polar models, typically achieve spatial resolutions around 1/10°. To assess whether our model can achieve comparable performance, we conducted experiments evaluating its generalization capability across different Arctic sea regions.

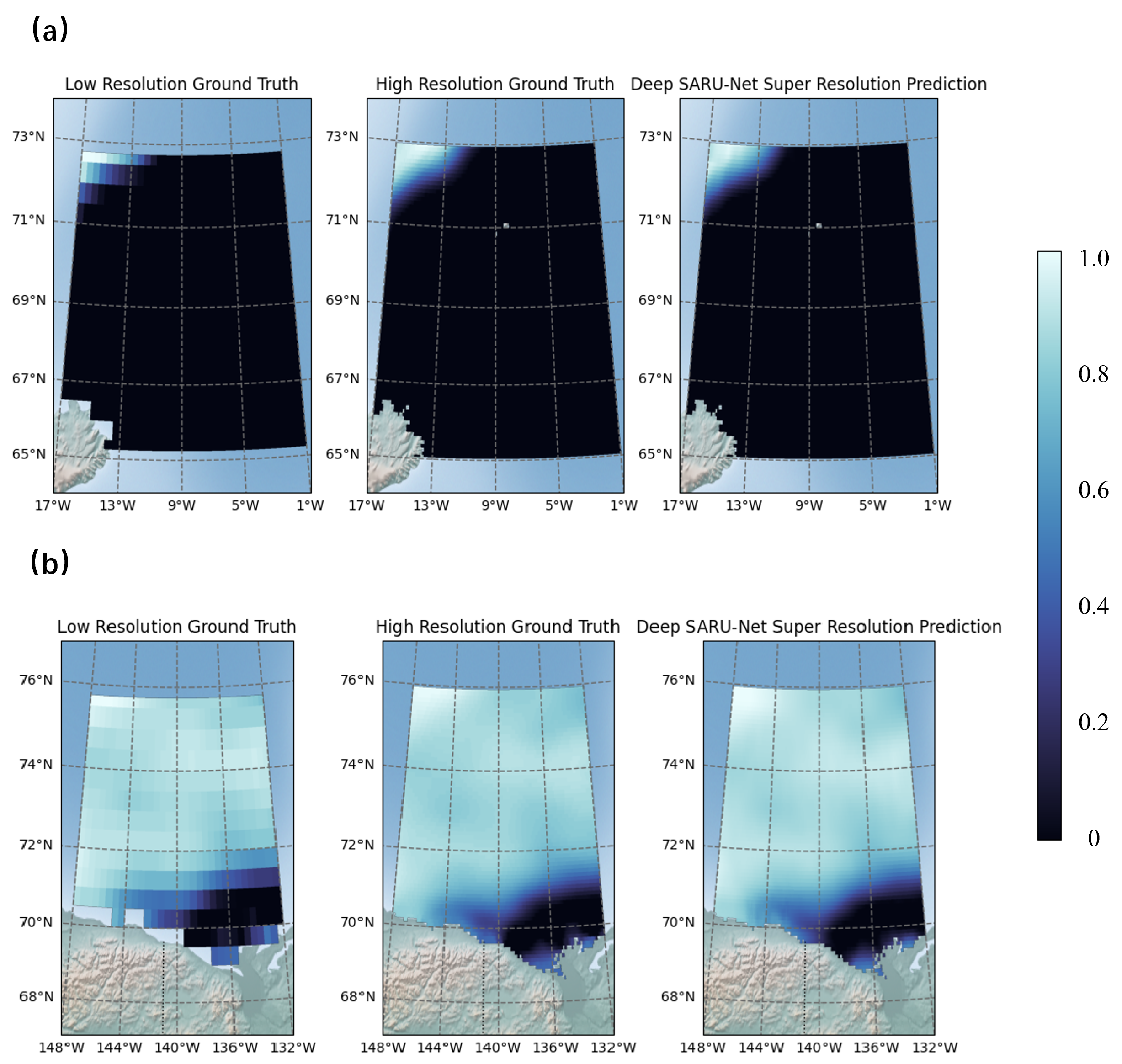

Two additional sea areas, the Beaufort Sea and the Greenland Sea, were chosen to evaluate the model’s generalization performance in SIC SR estimation. The Beaufort Sea, a marginal sea of the Arctic Ocean, remains mostly frozen year-round, with a narrow open-water passage from August to September. Due to Arctic climate change, the ice-free area has expanded, enabling Arctic navigation. The Greenland Sea, part of the Nordic Seas, is important for studies of thermohaline circulation and contains potential natural gas and oil resources. OSTIA satellite remote sensing data are again used as the experimental data. The SIC SR results for these regions are shown in Figure 12.

Figure 12 shows the SIC downscaling results of Deep SARU-Net for the two sea regions. In Figure 12a, the model effectively distinguishes sea ice from water, with estimations closely matching HR ground truth and capturing detailed concentration gradients. The model achieves a PSNR of 31.17 dB and an SSIM of 0.9716 in this area. Figure 12b demonstrates the model’s accuracy in capturing the extent and internal concentration of sea ice near Greenland, despite the limited ice coverage, with a PSNR of 40.32 dB and SSIM of 0.9867.

A comprehensive analysis of the SIC SR estimations in these two marine areas shows that our model closely matches HR ground truth, especially in concentration gradients and sea ice distribution continuity. As in the Chukchi Sea experiments, our model achieves an average RMSE below 0.05 and correlation coefficients above 0.99 across multiple marine areas. This suggests that our model’s performance is on par with HR numerical simulations. Additionally, the AI-based Deep SARU-Net model offers significant advantages in terms of parameter efficiency and computational time, while excelling in capturing the detailed distribution and smooth concentration gradients.

In our previous experiments, the SR estimation of SIC was primarily conducted using the OSTIA satellite-derived dataset. Although OSTIA provides relatively high spatial resolution, it is fundamentally generated from passive microwave sensors (e.g., AMSR2, SSMIS) and infrared sensors (e.g., AVHRR) through multi-source data fusion and interpolation. As a result, a substantial portion of the dataset consists of interpolated values rather than direct observations, which reduces its ability to accurately represent fine-scale sea ice structures and ice-edge morphology, particularly under cloudy, harsh-weather, or polar night conditions. In contrast, SAR observations offer significantly higher spatial resolution and, as an active microwave system, can penetrate cloud cover and operate independently of illumination conditions. This enables SAR to capture detailed sea ice texture and ice-edge variations more reliably. However, SAR data also suffer from limited spatial coverage and relatively long revisit intervals, making it difficult to construct large-scale, continuous time-series datasets.

To address these limitations, we adopted a transfer-learning-based strategy: sparse SAR observations were used to retrain and fine-tune the OSTIA-pretrained Deep SARU-Net, enabling us to evaluate its cross-sensor generalization capability and enhance its robustness and applicability for SR estimation under real observational conditions. Specifically, we used 257 days of SAR observations from 2018 to 2021 for fine-tuning. The LR and HR pairs were generated via cubic interpolation of the SAR data. The model was fine-tuned until reconvergence, allowing it to adapt to the spatial patterns and textural characteristics inherent to SAR observations. The fine-tuned model was then applied to the 2022 SAR LR data to estimate SIC, and the results were validated using 64 days of SAR HR data from 2022. The evaluation metrics are summarized in Table 6.

The results indicate that Deep SARU-Net exhibits strong generalization capability when applied to SAR observations. Using the OSTIA-pretrained weights directly, the model achieved an average RMSE of 0.0725, correlation coefficient r of 0.9744, PSNR of 29.27 dB, and SSIM of 0.9361 on the 2022 validation set, demonstrating that the model can produce reasonably accurate SIC SR estimates even without retraining on SAR data. Furthermore, after fine-tuning with SAR observations, the model required only 56 epochs to reach performance comparable to its OSTIA-based results. The fine-tuned model achieved an RMSE of 0.0535 (a reduction of 26.21%), an r of 0.9945 (an increase of 2.06%), a PSNR of 30.42 dB (an increase of 6.67%), and an SSIM of 0.9602 (an increase of 2.57%). These findings show that Deep SARU-Net can rapidly adapt to SAR-specific characteristics with only limited additional training, achieving SR estimation accuracy similar to that obtained from satellite reanalysis data. This confirms the strong cross-dataset transferability and practical applicability of the proposed model.

4.2.8. Pan-Arctic SR Estimation Performance

Previously, we validated the capability of Deep SARU-Net for SIC SR estimation in several small Arctic regions. Now, we extend its application to the entire Arctic region to further explore its SR estimation performance on a larger spatial scale. For the study domain, we select the pan-Arctic region (45°N–90°N, 180°W–180°E), which encompasses the Arctic Ocean and its surrounding landmasses, including ecosystems, climate systems, and socio-economic factors related to the Arctic environment. Conducting SIC SR estimation in this region is crucial for enhancing the precision of Arctic environmental monitoring and estimation.

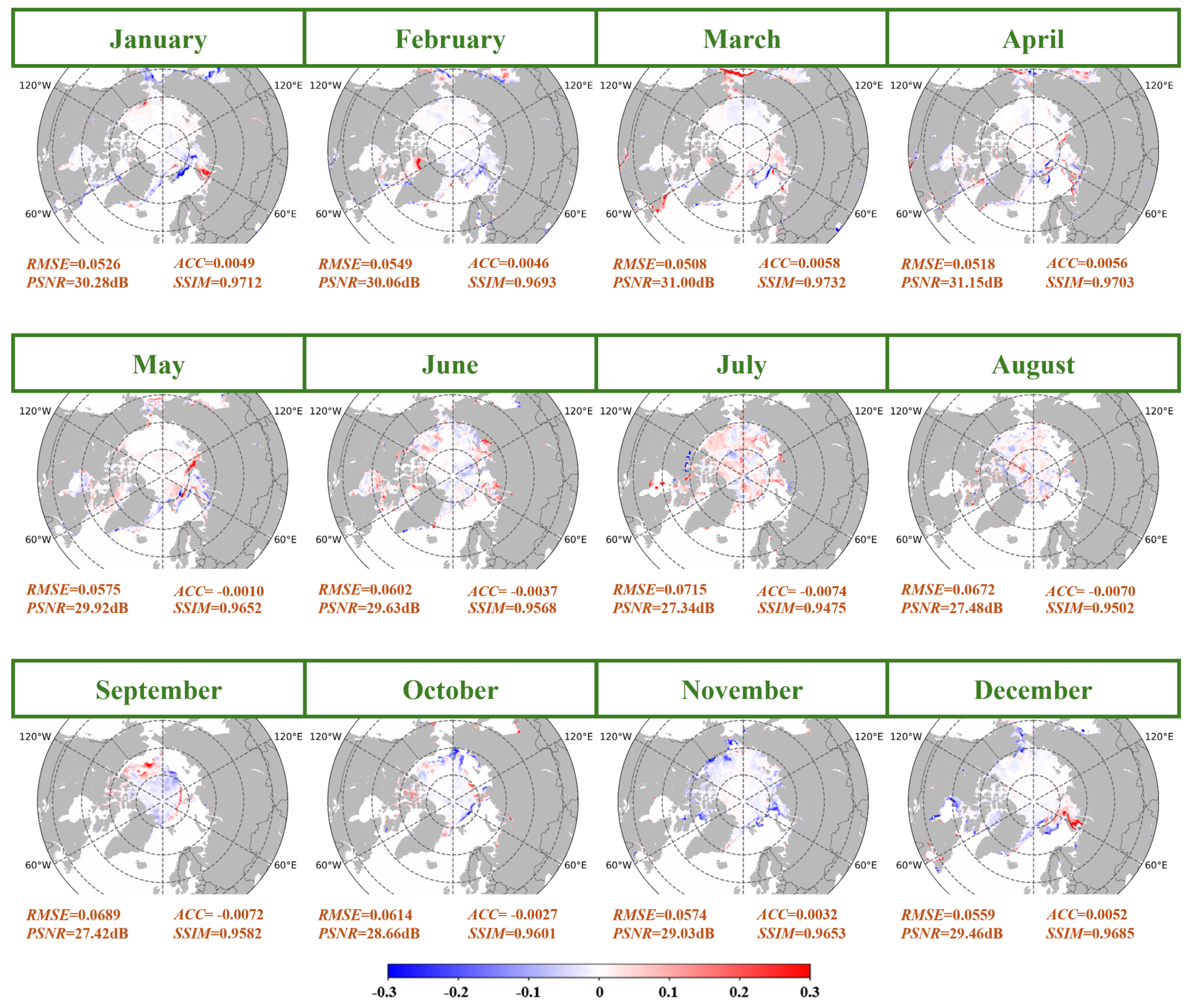

For data selection, we continue to use the OSTIA satellite remote sensing data in our experiments. In earlier stages, a 5× SR factor was adopted to achieve a fine-scale resolution of 1/10° × 1/10° (less than 2 km at high latitudes) to represent detailed SIC structures. However, considering the significant expansion of the study domain, along with constraints on computational cost, hardware resources, and the required level of spatial detail, we have adjusted the SR factor to 2×. Specifically, the LR input data are set to 1/2° × 1/2°, and the HR target data to 1/4° × 1/4°. The Deep SARU-Net is trained under this setting and applied to the pan-Arctic validation set after convergence. Monthly mean estimations are then calculated, and the spatial residuals are visualized in Figure 13.

Although the Pearson correlation coefficient r has been widely used to evaluate overall agreement, it primarily reflects similarity in spatial patterns and is often dominated by the climatological mean. In our experiments, we observed that the monthly average r across the validation set reaches 0.9902, indicating high correlation. However, this high value may mask the model’s ability to capture anomalous sea ice variability, especially during dynamic seasons. To address this limitation, we adopt the Anomaly Correlation Coefficient (ACC) as a more meaningful metric. ACC measures the correlation between the reconstructed and observed anomalies (i.e., deviations from the mean state), which better reflects the model’s capacity to reconstruct interannual and spatial fluctuations beyond the climatological baseline. In our analysis, the monthly mean climatology is computed from the training data and subtracted from both the reconstructions and observations before computing ACC.
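The ACC computation described above can be sketched as follows. This uses the uncentered form of the anomaly correlation, in which anomalies are correlated without removing their spatial mean. The source does not say whether the centered or uncentered variant was used, so this choice, the function name, and the synthetic fields are all assumptions.

```python
import numpy as np


def anomaly_correlation(recon, obs, climatology):
    """Uncentered anomaly correlation between reconstruction and observation."""
    recon_anom = (recon - climatology).ravel()  # deviations from climatology
    obs_anom = (obs - climatology).ravel()
    numerator = np.sum(recon_anom * obs_anom)
    denominator = np.sqrt(np.sum(recon_anom ** 2) * np.sum(obs_anom ** 2))
    return float(numerator / denominator)


# Synthetic fields (not real SIC data) on an arbitrary 90 x 180 grid.
rng = np.random.default_rng(0)
climatology = rng.uniform(0.2, 0.8, size=(90, 180))
obs = climatology + rng.normal(scale=0.05, size=climatology.shape)
recon = obs + rng.normal(scale=0.01, size=climatology.shape)
acc = anomaly_correlation(recon, obs, climatology)
```

Because the climatology is subtracted first, a reconstruction that only reproduces the mean state scores near zero, whereas it could still obtain a high Pearson r.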

According to the ACC-based evaluation, Deep SARU-Net demonstrates robust performance across the pan-Arctic region under the 2× SR setting. In winter and spring (January to May), ACC values consistently exceed 0.980, accompanied by RMSE values below 0.0560, PSNR above 30 dB, and SSIM greater than 0.9650—indicating both accurate anomaly reconstruction and high spatial fidelity. During the summer and autumn melting season (June to September), despite greater dynamic complexity, the model still maintains ACC values above 0.960, with RMSE under 0.0750, PSNR above 27 dB, and SSIM above 0.9450. These results confirm that the model effectively captures the evolving deviations from climatology, even under conditions of rapid sea ice retreat.

In terms of spatial residual distribution, the overall residuals of Deep SARU-Net in the 12-month SIC SR estimation lie within [−0.3, 0.3], with 95% of the residuals constrained within [−0.2, 0.2]. During January to May and October to December, the residuals in high-latitude regions are nearly zero, with noticeable errors only appearing along the sea ice edges and coastal areas at lower latitudes, primarily within the range of [−0.1, 0.1]. This indicates that the multi-head self-attention mechanism introduced at both the lowest and highest resolution stages effectively enhances the model’s ability to reconstruct variations in SIC, leading to superior performance.

During the melting season from June to September, the rapid retreat of Arctic sea ice significantly increases the difficulty of SR reconstruction. Although the overall sea ice extent decreases, residuals are more widely distributed across the pan-Arctic region. Nevertheless, Deep SARU-Net maintains high estimation accuracy, with errors mostly confined within [−0.15, 0.15] and no significant systematic bias. This suggests that the model effectively constrains the reconstruction of sea ice edges, land-sea transitions, and internal concentration variations, thereby improving the accuracy of spatial distribution reconstruction. These results further confirm that Deep SARU-Net exhibits strong learning capabilities in capturing the spatial distribution patterns of Arctic sea ice, enhancing its SR estimation performance during the melting season, and improving feature representation at sea-land boundaries. These advancements are critical for achieving higher accuracy in SIC SR estimation across the pan-Arctic region.