Highlights

What are the main findings?

- Proposed a Parallel Multi-Scale Receptive Field Unit (PMSRFU) and C2f-PMSRFU module that enhance receptive field diversity while preserving fine-grained semantic details of small objects under a lightweight computational scale.

- Designed a Shared-Decoupled Interactive Dynamic Alignment Head (SDIDA-Head) with shared convolutions for efficient feature reuse, task decoupling, dynamic alignment, and adaptive scale modulation, significantly enhancing detection accuracy.

What are the implication of the main findings?

- Enhances detection accuracy and robustness for small targets in UAV imagery under complex environmental conditions.

- Demonstrates the effectiveness of task decoupling and dynamic alignment in balancing precision and computational efficiency for UAV-based small-target detection in complex environments, providing valuable insights and references for future research.

Abstract

Unmanned aerial vehicles (UAVs) have been widely used in aerial photography and target detection tasks due to their flexibility and unique perspective. However, small targets often suffer from insufficient resolution, uneven scale distribution, and complex background clutter, which are constrained by imaging conditions such as high-altitude imaging, long-distance capture, and wide field of view. These factors weaken the feature representation and generalization ability of the model, becoming the key bottleneck that restricts the improvement of small target detection accuracy in UAV scenarios. To address the above issues, this paper proposes a small target detection algorithm for UAV perspective, namely MTD-YOLO. First, a Parallel Multi-Scale Receptive Field Unit (PMSRFU) is designed. This unit effectively enhances the receptive field range of feature extraction and the fusion ability of multi-scale contextual information by introducing parallel branches with different-sized convolutional kernels. Second, we embed PMSRFU into a C2f block to form C2f-PMSRFU, which reuses shallow details and fuses multi-scale features to clarify edges and textures in small targets, yielding stronger fine-grained representations. Finally, an efficient detection head with task decoupling, dynamic alignment, and adaptive scale adjustment capabilities, namely SDIDA-Head, is proposed, which significantly improves the model’s small target detection accuracy. Extensive experiments on the VisDrone2019 and HazyDet datasets demonstrate that MTD-YOLO achieves a 7.6% and 6.6% increase in mAP@0.5 compared to the baseline YOLOv8n, respectively. Meanwhile, the Precision is improved by 6.0% and 1.1%, and the Recall is enhanced by 7.5% and 6.9%, respectively. These results fully validate the effectiveness and superiority of the proposed method in UAV small target detection tasks.

1. Introduction

In recent years, Unmanned Aerial Vehicles (UAVs) have revitalized the field of target detection with their unique aerial data acquisition capabilities, expanding the application scenarios of detection tasks from traditional ground perspectives to complex aerial ones. UAVs’ inherent advantages of high flexibility, ease of deployment, and unique top-down view have made them indispensable tools in a variety of tasks, such as infrastructure inspection [1], precision agriculture [2], environmental monitoring [3], and disaster response [4,5]. The deep integration of advanced computer vision algorithms and multifunctional UAV platforms has greatly stimulated and promoted research and application innovations in UAV-based target detection. However, target detection from the UAV perspective faces numerous challenges [6]. First, due to imaging conditions such as high-altitude imaging, long-distance shooting, and wide-angle coverage, targets in images often exhibit characteristics such as small size, weak texture, low contrast, and blurred edges. These factors significantly weaken the model’s ability to perceive fine-grained features. Traditional feature extraction modules struggle to effectively capture the contextual semantics and structural information of targets, leading to targets being easily overwhelmed by redundant interference in complex backgrounds, thereby reducing the distinctiveness and discriminability of features. Second, there is often a semantic shift and spatial misalignment between multi-level features, making it difficult for the detection head to achieve effective feature alignment and information fusion, which can easily lead to classification confusion and localization bias. Moreover, targets in UAV scenarios frequently change in scale and are often accompanied by occlusions, pose changes, and background clutter, which further increase the difficulty of feature matching and spatial localization. In addition, traditional detection head designs typically lack a scale-adaptive mechanism and dynamic response capability for targets, resulting in insufficient robustness of the model in complex scenes, ultimately manifesting as significant false positives and false negatives.

Early target detection methods mainly relied on traditional manual feature extraction techniques, such as Scale-Invariant Feature Transform (SIFT) [7] and Histogram of Oriented Gradients (HOG) [8]. These methods usually combined a sliding window with a classifier for target localization. Although they achieved certain results in specific scenarios, they had several limitations, including insufficient sensitivity to complex background textures, weak adaptability to multi-scale targets, and low resolution of small target features. These issues made it difficult for them to generalize to diverse UAV aerial images. With the continuous development of deep learning and Convolutional Neural Networks (CNNs), their powerful feature extraction and representation capabilities have gradually replaced traditional manual feature extraction methods and have become the mainstream technology for detection and recognition in the field of computer vision [9,10]. The Deep Belief Network (DBN) proposed by Hinton et al. [8] is widely regarded as the formal beginning of deep learning research. Subsequently, a series of high-performance CNN models such as VGGNet [11], GoogLeNet [12], and ResNet [13] were proposed, which greatly promoted the development and application of deep learning in image recognition and other fields. Based on this, researchers have successively proposed a variety of object detection frameworks based on deep neural networks. According to the differences in the detection process, they can mainly be divided into two categories: one is the two-stage detector based on region proposals, with representative methods including Faster R-CNN [14] and Cascade R-CNN [15]. These methods first generate region proposals and then classify and perform bounding box regression on them. They have high detection accuracy but are computationally complex and have relatively slow inference speeds. The other category is the one-stage detector based on regression, such as SSD [16] and the YOLO series [17]. These methods directly perform object classification and location regression on the entire image, omitting the region proposal generation process. They maintain high accuracy while significantly improving detection speed and are more suitable for scenarios with limited computational resources or requiring real-time processing.

Focusing on the challenges of small target detection in UAVs, researchers have conducted in-depth exploration in two aspects: structural design and training strategies. In terms of structural design, Lin et al. [18] proposed the Feature Pyramid Network (FPN), which effectively improves the detection performance of small targets by integrating high-level semantic information with low-level spatial details through top-down feature fusion. Xie et al. [19] proposed KL-YOLO, which integrates the WIoUv3 loss function, GAM attention mechanism, and an additional detection branch for small objects based on YOLOv8s. This method achieves a mAP@0.5 of 43.1% on the VisDrone2019 dataset with a compact model size of only 3.16 MB. Jiang et al. [20] designed MFFSODNet, which combines a Multi-scale Feature Enhancement Module (MSFEM) with a Bi-directional Dense Feature Pyramid (BDFPN), demonstrating excellent detection accuracy and robustness in multiple small target detection tasks. Sun et al. [21] proposed MIS-YOLOv8, which effectively reduces feature loss by using a Multi-level Feature Extraction (MFE) module, Spatially Dilated Convolution (SDA), and a small target branch, resulting in a 9.0% improvement in mAP@0.5 on the VisDrone2019 dataset. Zhu et al. [22] introduced TPH-YOLOv5, which incorporates Transformer Prediction Heads, attention enhancement mechanisms, and multi-scale testing and data augmentation strategies, achieving approximately a 7% performance improvement on the VisDrone2021 DET test challenge.

In terms of training optimization, Zeng et al. [23] proposed PA-YOLO for UAV scenarios, which effectively reduced false negatives and false positives through a small target feature enhancement module and Soft-NMS post-processing. On the VisDrone2019 dataset, this method achieved a 42.3% mAP@0.5, an improvement of approximately 11.3% over the baseline YOLOv8, demonstrating its effectiveness in UAV aerial small target detection tasks. Feng et al. [24] introduced Task-aligned One-stage Object Detection (TOOD), which incorporates a task alignment strategy during the label assignment phase to dynamically coordinate classification and regression, thereby enhancing stability and accuracy. It achieved a 51.1% AP on the MS-COCO dataset. Li et al. [25] proposed Generalized Focal Loss (GFL), which integrates classification scores with localization quality and introduces a quality-aware mechanism, achieving a 45.0% AP on COCO test-dev using a ResNet-101 backbone. Zhang et al. [26] proposed Adaptive Training Sample Selection (ATSS), which adaptively selects positive and negative samples based on target scale, effectively alleviating detection bias across different scales and ultimately achieving a 50.7% AP on the MS-COCO dataset. These innovations in structural design and training strategies have provided feasible paths for improving the performance of UAV small target detection.

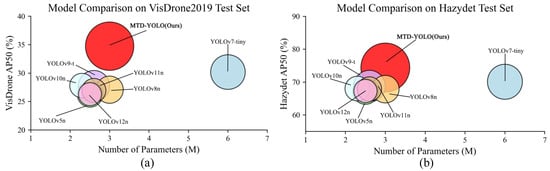

Despite the achievements of the aforementioned methods in feature fusion, multi-scale representation, and training strategies, few studies have focused on the joint optimization of task decoupling and dynamic alignment mechanisms in multi-scale feature modeling. To address this gap, this paper proposes a UAV small target detection algorithm based on multi-scale perception, task decoupling, and dynamic alignment—MTD-YOLO. The proposed MTD-YOLO was systematically evaluated on the VisDrone2019 [6] and HazyDet [27] datasets. The experimental results show that this method significantly outperforms various mainstream detection algorithms in terms of small target detection performance. Figure 1a,b illustrate the performance comparison of MTD-YOLO and the YOLO series object detection algorithms on the VisDrone2019 and HazyDet test sets, respectively.

Figure 1.

Comparison of our MTD-YOLO with other algorithms in terms of accuracy and efficiency. Comparison on the VisDrone2019 test set (a), comparison on the HazyDet test set (b), where the radius of the circle represents GFLOPs.

The main contributions of this paper are as follows:

- To address the issue of drastic scale variation in small targets, a Parallel Multi-Scale Receptive Field Unit (PMSRFU) is designed, which effectively enhances the model’s ability to perceive and express features of targets of different sizes.

- Based on the PMSRFU, a lightweight C2f-PMSRFU module is constructed. While inheriting the multi-scale target feature representation capability of the PMSRFU, it retains the efficient feature extraction advantages of the C2f module, achieving a good balance between model parameter scale and detection accuracy.

- To address the issues of insufficient interaction between task branches and the lack of scale-adaptive adjustment in traditional detection heads, this paper proposes a novel detection head, termed Shared-Decoupled Interactive Dynamic Alignment Head (SDIDA-Head). This architecture significantly enhances detection accuracy in small object detection tasks by introducing shared feature extraction, task-decoupled interaction, dynamic alignment strategies, and a scale-adaptive adjustment module.

- The proposed model is extensively evaluated on two different UAV aerial detection datasets. Both quantitative metrics and feature visualization results fully demonstrate the effectiveness and superiority of the proposed method.

2. Related Works

2.1. Small Object Detection in UAV Images

Current research on small target detection using UAVs focuses on improving the robustness and accuracy of detection under complex backgrounds, perspective changes, and imaging limitations. In this domain, the classification of object sizes widely adopts the criteria proposed by the AI-TOD dataset [28], which defines objects with sizes from 2 to 8 pixels as “very tiny,” from 8 to 16 pixels as “tiny,” from 16 to 32 pixels as “small,” from 32 to 64 pixels as “medium,” and objects larger than 64 pixels as “large.”

Li et al. [29] proposed the RT-DETR-UAVs model, specifically designed for small target detection in UAV images. This method reconstructs the Feature Fusion Path, introduces a Global Feature Aggregation Module (GFAM) to enhance cross-layer information integration, and combines the BiFormer Routing Attention Mechanism to increase the encoder’s focus on tiny targets. This approach significantly improves the detection performance of the Transformer architecture in Dense Small Object Scenarios. Song et al. [30] introduced the MHA-YOLOv5 model, which integrates three core components: Multi-Scale Attention (MSA), Foreground Enhancement Module (FEM), and Depthwise Separable Channel Attention (DSCA). These components are used to establish scale associations between small and large targets, focus on foreground information, and extract multi-dimensional channel features, respectively. Experimental results show that this method improves the mAP by 2.82%, 2.25%, and 3.07% on the VisDrone2019-DET, UAVDT, and CARPK datasets compared to YOLOv5. Wang et al. [31] proposed the RPLFDet network, which introduces Rational Stride Convolution (RSConv) to achieve non-integer downsampling, thereby preserving more low-level detail features while controlling computational costs. The designed Downsampling Residual Block (DSR) effectively reduces redundancy in high-resolution feature maps and improves computational efficiency. Additionally, the proposed Differential Set Balanced IoU Loss (DSB-IoU) optimizes the bounding box regression accuracy for small targets, achieving an excellent balance between detection performance, computational cost, and model size. Liu et al. [32] proposed DFAS-YOLO to mitigate feature misalignment and detail loss in remote sensing images. The Soft-Aware Adaptive Fusion (SAAF) module corrects upsampling deviation, while the Global Dense Local Aggregation (GDLA) module preserves fine-grained information after downsampling, achieving competitive performance on the VisDrone2019 and HIT-UAV datasets. Xu et al. [33] proposed SRTSOD-YOLO, a YOLO11-based model series designed for efficient small-object detection in UAV imagery. The Multi-scale Feature Complementary Aggregation Module (MFCAM) enhances small-target representation, while the Gated Activation Convolutional Fusion Pyramid Network (GAC-FPN) improves multi-scale feature fusion and suppresses background noise, achieving notable mAP improvements on the VisDrone2019 and UAVDT datasets. Zhao et al. [34] proposed DVIF-Net, a visible–infrared fusion network for UAV small-object detection that exploits the complementary properties of dual modalities. By employing a cross-modal fusion strategy and dual context-guided fusion modules, the model enhances feature representation and edge sensitivity, achieving superior accuracy and robustness on the DroneVehicle and VEDAI datasets compared with state-of-the-art methods.Despite the performance improvements achieved by the aforementioned methods from the perspectives of feature fusion, attention mechanisms, loss function design, and multi-modal learning, they have overlooked the issue of fine-grained information loss caused by feature misalignment, which restricts the further enhancement of detection accuracy.

2.2. Multiscale Feature Extraction Methods

In object detection tasks, targets of different scales often exhibit significant differences in appearance and contextual features. Fixed convolutional kernels struggle to balance fine-grained features with global information, and their single receptive field limits the expression of feature diversity. This limitation significantly increases the demand for feature representation capabilities of detection algorithms in complex scale distribution scenarios. Therefore, researchers have proposed various multi-scale feature extraction methods to enhance the network’s feature representation and adaptability [35]. Zhang et al. [36] proposed an Adaptive Multi-Scale Feature Extraction Encoder (AMFEFormer), which combines a Multi-Scale Contextual Information Extraction Module (MCIEM) with an Interactive Extraction Transformer Module (IETrans). This approach efficiently and interactively transmits input features from different layers of the FPN, achieving adaptive multi-scale information collection and compensating for the shortcomings of traditional FPNs. Deng et al. [37] introduced a Multi-Scale Feature Extraction and Cross-Level Feature Fusion Network (MCFN), which captures target features of different scales using a Multi-Scale Feature Extraction Module (MSFE) and fuses high-level abstract features with low-level detailed features through a Cross-Stage Feature Pyramid Network (CSFPN) to improve small object detection. Wang et al. [38] proposed a Multi-Scale and Multi-Directional Feature Fusion Network, which enhances multi-scale perception in remote sensing images through Direction-Controlled Convolutions (SCM-D), Multi-Scale Residual Encoders (MSR), and Multi-Directional Feature Fusion Modules (MDFF). Liu et al. [39] introduced LFEMAP-Net, which strengthens low-level spatial details based on edge priors and achieves high-precision building extraction through a Multi-Scale Attention Pyramid in the decoding stage. Kong et al. [40] proposed a Multi-Scale Residual Dense Network for super-resolution tasks, introducing Cross-Layer Multi-Scale Feature Connections in feature fusion. This allows each layer to not only integrate current features but also all previous layer outputs, thereby maximizing the use of hierarchical features in the original low-resolution image and achieving more efficient adaptive feature learning. Liao et al. [41] proposed MSSA, a Multi-Scale Semantic-Aware image–text retrieval framework that enhances cross-modal alignment in remote sensing. By introducing progressive spatial–channel attention and image-guided text attention, the method captures hierarchical semantic associations and achieves state-of-the-art retrieval performance across multiple datasets. Chen et al. [42] proposed WetSegNet, an edge-guided network that integrates Multi-Scale Feature Interaction (MFI) and Multi-Feature Fusion (MFF) modules for wetland classification. These modules enhance multi-scale feature representation and boundary delineation within a CNN–Swin Transformer U-Net framework, achieving state-of-the-art accuracy on GF-2 wetland datasets. Wen et al. [43] proposed MambaSegNet, an encoder–decoder segmentation network based on the Mamba architecture for high-resolution ship extraction. By employing MambaLayer and ResMambaBlock for multi-scale feature processing, the model achieves superior accuracy and robustness on the DIOR-SHIP and LEVIR-SHIP datasets. Li et al. [44] proposed RCF-YOLOv8, an enhanced detection framework for forward-looking sonar imagery that improves underwater target recognition. By integrating CoordConv for spatial encoding and an Efficient Multi-Scale Attention (EMA) mechanism for sparse target fusion, the model achieves superior detection accuracy and robustness on the URPC2021 dataset.Although the aforementioned multi-scale feature extraction methods have strengthened representation power through structural design, their fusion processes still predominantly follow fixed paradigms, failing to effectively resolve cross-scale semantic misalignment and fine-grained information conflicts, thereby constraining further improvements in detection performance.

2.3. Task Decoupling and Alignment Methods

In object detection tasks, the classification task focuses on global semantic information, while the regression task is concerned with local geometric information. Direct coupling of the two can easily lead to feature competition and mutual interference, thereby limiting the improvement of detection performance. To address this, researchers have proposed various task decoupling and task alignment methods. Through structural optimization and feature interaction strategies, these methods enable the two types of tasks to obtain the feature representations they need while achieving effective collaboration on key information, thereby improving overall detection accuracy. Zuo et al. [45] proposed an efficient anchor-free detector combining Dynamic Receptive Field Allocation (DRFA) and task alignment mechanisms. By introducing DRFA in the feature pyramid, the receptive field is adaptively adjusted to fit targets of different scales. The task decoupling prediction mechanism and task-specific information enhancers are used to optimize classification and localization separately. The anchor-free task alignment deep supervision ensures the consistency of predictions from both tasks, significantly improving detection performance. Ding et al. [46] proposed EdgeSight, a detection framework tailored for edge computing. This approach enhances feature extraction while maintaining computational efficiency by incorporating RepViTBlock in the backbone. In the detection head, a Task-Dynamic Alignment Detection Head is introduced, which leverages Deformable Convolution v2 (DCNv2) to adaptively adjust the receptive field, thereby optimizing both classification and regression tasks. Additionally, the framework employs LAMP pruning and dual distillation to reduce computational complexity while minimizing accuracy degradation. Zuo et al. [47] proposed the Task-Guided Attention Decoupling Head, composed of a Task Decoupling Attention Distributor (TDAD) and a Task-Correlated Network (TCN). The TDAD designs dedicated attention modules for classification and localization, while the TCN transfers key information across tasks, maintaining branch independence while ensuring prediction consistency, thereby improving accuracy and stability in one-stage detection. Bai et al. [48] proposed an efficient framework for Incremental Object Detection (IOD) based on Task-Aligned Learning and Knowledge Distillation to address the issues of multi-task transfer and catastrophic forgetting in IOD. By employing a tailored Task-Alignment Mechanism and a Decoupled Classification Network, this method mitigates conflicts between different optimization objectives and achieves high detection accuracy in multi-step incremental scenarios on MS COCO and PASCAL VOC datasets. Zhou et al. [49] proposed ScaleViM-PDD, a state-space-model-based dehazing network that applies task decoupling and multi-scale fusion to remote-sensing image restoration. The Multi-Scale EfficientViM with Physical Decoupling module and Dual-Domain Fusion module separate physical and perceptual learning tasks while combining spatial and frequency features, significantly enhancing detail recovery and generalization across diverse hazy scenes.While the aforementioned methods have alleviated feature conflicts to a certain extent, their optimization processes are not embedded within a multi-scale joint modeling framework, resulting in cross-scale collaboration bottlenecks under complex and crowded multi-object scenes and thus limiting further performance gains.

3. Methods

This section first provides an overview of the overall architecture of the proposed method, followed by a detailed introduction to the implementation details of each designed module.

3.1. Overall Architecture of MTD—YOLO

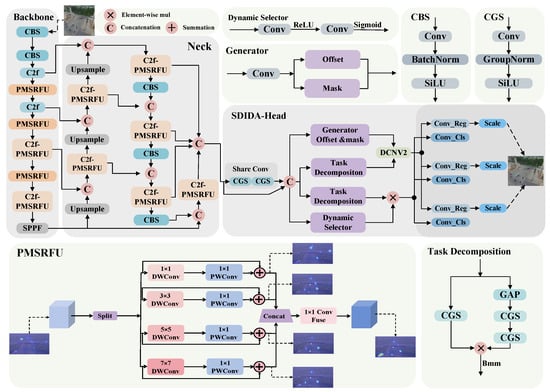

The overall structure of MTD-YOLO is shown in Figure 2. First, the PMSRFU is integrated into the Backbone to enhance the model’s target perception ability across multiple scales. Second, the C2f-PMSRFU module is constructed to replace the original C2f module, reducing the model’s parameter count while retaining the original C2f’s efficient feature extraction capability. By alternately stacking PMSRFU and C2f-PMSRFU modules, the model’s receptive field is further expanded, significantly improving its representation ability for small targets. Finally, a detection head with task collaborative interaction and alignment mechanisms is designed. By introducing feature collaborative interaction and dynamic alignment mechanisms between classification and regression tasks, it enhances the information sharing and alignment capabilities between branches. Meanwhile, combined with the Scale layer, it achieves dynamic adaptation to multi-scale targets, significantly improving the model’s detection accuracy in complex scenarios.

Figure 2.

The overall structure diagram of MTD-YOLO.

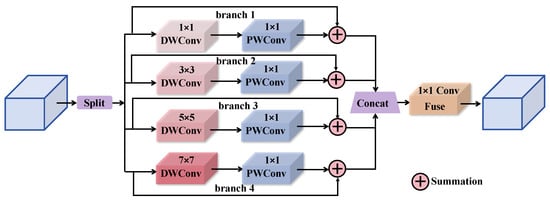

3.2. Parallel Multi-Scale Receptive Field Unit

To enhance the model’s perception ability for multi-scale targets in UAV scenarios with complex backgrounds and significant scale variations, this paper designs a Parallel Multi-Scale Receptive Field Unit. The structure of PMSRFU is shown in Figure 3. The input feature map is first evenly divided into four groups along the channel dimension and fed into four parallel convolutional branches to achieve multi-scale feature extraction. Assuming the input feature is , where C, H, and W represent the number of channels, height, and width, respectively. The input feature is divided into four groups along the channel dimension as , and each group is fed into a parallel convolutional branch for multi-scale feature extraction. Each branch consists of a DepthWise Convolution (DWConv) and a PointWise Convolution (PWConv). The specific calculation process of each branch is shown in Equation (1), where represents a depthwise convolution with a kernel size of , represents a pointwise convolution, and represents the i-th group of features after channel division.

Figure 3.

The structure diagram of PMSRFU.

To enhance the diversity of receptive fields and semantic expression capabilities, the PMSRFU introduces four parallel convolutional branches with kernel sizes of 1 × 1, 3 × 3, 5 × 5, and 7 × 7 within the same layer. The values of these kernels are approximately logarithmically spaced, enabling sparse sampling from local to larger contexts within the same layer. Compared to densely stacking multiple layers of 3 × 3 convolutions, a small number of heterogeneous kernels efficiently expand the receptive field while keeping computations manageable and reducing the risks of latency and optimization degradation associated with excessively deep networks. This design aligns with the scale characteristics of FPN and is well-suited to the statistical properties of UAV data, which are characterized by “small sizes and dense occlusions”: the 1 × 1 branch focuses on fine-grained local features and channel integration; the 3 × 3 branch captures medium-range contexts; the 5 × 5 branch strengthens the modeling of medium-to-large scale structures; and the 7 × 7 branch further extends the receptive range to represent long-range dependencies. Moreover, this module works in conjunction with the SDIDA-Head. The large-kernel branches provide ample global cues, while the dynamic alignment head achieves fine-grained geometric correction, with the two components complementing each other. Relying solely on small kernels would easily result in the loss of global consistency under complex backgrounds and scale variations. In implementation, the outputs of each branch are first fused with the input features via residual connections to obtain These are then concatenated along the channel dimension to form Z, which is subsequently compressed and uniformly mapped through a 1 × 1 convolution to produce the output feature map O; the specific calculations are shown in Equation (2).

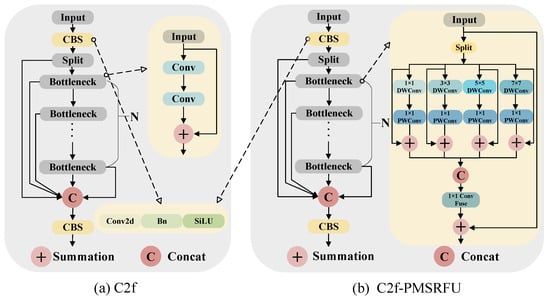

3.3. C2f-PMSRFU Module

To further extend the expression ability of PMSRFU for targets of different scales and improve the model’s feature extraction efficiency, this paper proposes an improved structure based on the original C2f module—the C2f-PMSRFU module, the structure of which is shown in Figure 4b. The C2f module mainly consists of CBS convolutional structures and multiple Bottleneck modules, and has good feature extraction capabilities. However, when dealing with targets with significant scale changes and complex backgrounds, its limited receptive field coverage makes it difficult to fully capture contextual information. To alleviate the above problems, the C2f-PMSRFU module integrates a parallel multi-scale receptive field mechanism while retaining the overall framework of the original C2f module. Similar to PMSRFU, after channel division, four parallel branches are added to the original feature channels. These branches use DWConv and PWConv with different kernel sizes (1 × 1, 3 × 3, 5 × 5, 7 × 7) to extract multi-scale semantic information, thereby enhancing the module’s scale diversity in feature representation. Subsequently, the features extracted by each branch are fused with the corresponding input features through residual connections, further enhancing local semantic stability and detail retention. The multi-scale features are then concatenated along the channel dimension to integrate semantic information from different receptive fields. Next, a 1 × 1 convolution is used to complete feature compression and unified mapping. Finally, the processed features are fused with the features from the main path through a residual connection, outputting a feature map with higher semantic richness and stronger feature representation capabilities.

Figure 4.

The structure diagram of the C2f-PMSRFU module.

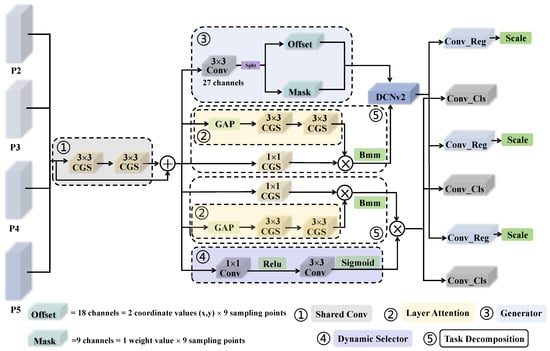

3.4. Shared-Decoupled Interactive Dynamic Alignment Head

To further enhance the overall performance of the detection head in small target detection tasks, this paper proposes a Shared-Decoupled Interactive Dynamic Alignment Head, the structure of which is shown in Figure 5. The detection head introduces strategies such as shared feature extraction, task decoupling, dynamic interactive alignment, and scale adaptive adjustment, greatly improving the model’s detection accuracy and multi-scale generalization ability.

Figure 5.

The structure diagram of SDIDA-Head.

Firstly, to address the issue of parameter redundancy in the detection head, SDIDA-Head introduces a shared convolutional structure that uniformly processes multi-scale feature maps from P2 to P5 derived from the FPN. This design significantly reduces the number of parameters and computational overhead, while ensuring consistent feature representation across scales, mitigating feature distribution shifts caused by scale variations, and improving detection accuracy and generalization. Let the input feature be , where l represents the index of the feature map level, corresponding to the feature levels in the Feature Pyramid Network, and , , represent the number of channels, height, and width, respectively. This feature is processed by a shared convolutional structure composed of two consecutive Convolutional Group and Squeeze (CGS) modules to generate the shared features required for subsequent decoupling and alignment modules. The computation process is shown in Equation (3).

The CGS module introduces GroupNorm operations, which have been proven in numerous studies to significantly enhance the stability and generalization ability of the detection head for classification and regression tasks. At the same time, the shared convolutional structure significantly reduces the model’s parameter size while effectively minimizing redundant calculations.

Secondly, to address the issue of complete independence between the classification and regression paths in the detection head, and the lack of interaction between tasks, SDIDA-Head has designed a task decoupling and dynamic alignment mechanism for the classification and regression paths to enhance the collaborative expression ability between feature branches. As shown in the Figure 5, in the regression branch, the generated shared feature is first sent to the Generator module, which outputs a 27-channel feature map through a convolution, used to produce the offset and mask features required by the deformable convolution DCNv2. In the channel feature map, the first 18 channels serve as the offset branch output, representing the two-dimensional spatial offsets of 9 sampling points, which are used for the sampling bias of the DCNv2 convolution kernel, allowing the kernel to dynamically adjust the sampling position according to the target shape, thereby enhancing the spatial perception ability of the regression branch for target boundaries; the last 9 channels serve as the mask branch output, generating corresponding weight coefficients for each sampling point, to weight and regulate the feature response of the sampling points, achieving dynamic weight modulation, thereby enhancing the regression branch’s expression and discrimination ability for fine-grained targets in complex backgrounds. The specific calculation process is as follows: let the output feature of the Generator module be , the offset branch output feature after division be , and the mask branch output feature be , where represents the two-dimensional spatial offsets of the 9 sampling points, and m represents the modulation weight coefficients corresponding to the sampling points. The modulated convolution sampling process of DCNv2 is shown in Equation (4).

In the formula, represents the coordinate of the center point of the convolutional kernel on the input feature map, is the fixed offset of the n-th sampling point of the convolutional kernel relative to , is the learnable sampling position offset, is the modulation coefficient for the sampling point, and represents the weight of the n-th position of the convolutional kernel.

At the same time, the shared features are fed into the Task Decomposition module for feature decoupling and expression supplementation of the regression task. This module consists of two sub-paths: the main path processes the shared features through a CGS module to extract initial task features; the auxiliary path is a Layer Attention branch, which first performs Global Average Pooling (GAP) to extract global information from the shared features, and then generates guiding attention weights through two successive CGS modules. The attention weights are fused with the features from the main path through element-wise multiplication. Additionally, SDIDA-Head uses Batch Matrix Multiplication (BMM) operations to achieve efficient and low-latency computation between weights and features in the batch dimension. Ultimately, the features output by Task Decomposition are synergistically fused with the spatially dynamic sampling features from the Generator, thereby guiding DCNv2 to output more accurate regression prediction results.

In the classification branch, in addition to achieving task decoupling through the Task Decomposition module, a Dynamic Selector module is further proposed to spatially dynamically weight the classification features, thereby enhancing the discriminative power and semantic representation accuracy of the classification branch. As shown in Figure 5, the Dynamic Selector takes the shared features as input, first generates a compact feature channel representation through a 1 × 1 convolution, then passes it through a ReLU activation function to enhance the discriminability between features. Subsequently, it obtains spatial contextual information through a 3 × 3 convolution, and finally outputs channel weights through a Sigmoid function. The calculation process of the channel weights is shown in Equation (5).

In the formula, represents the Sigmoid activation function. Subsequently, the channel weights are element-wise multiplied with the classification task features, enhancing the response to the target areas while suppressing background interference and noise, thereby effectively improving the recognition accuracy and discrimination ability for multi-class targets.

In the final output stage, considering the differences in target scales corresponding to different feature layers, SDIDA-Head introduces a Scale module in each detection branch to adaptively adjust the feature maps at various scales, further enhancing the consistency of the model’s multi-scale feature representation.

4. Experiments and Results

This section first introduces the two datasets used in the experiments, the corresponding experimental parameter settings, and the evaluation criteria. Subsequently, detailed experimental comparison results are provided, including overall performance comparison, detailed category-specific performance comparison, Precision-Recall (PR) curve analysis, and corresponding visualizations.

4.1. Experimental Environment and Parameter Configuration

All experiments in this paper were conducted on the same deep learning workstation. The training processes were initiated from scratch without utilizing any pre-trained weights. The specific configuration includes the Windows 11 operating system, NVIDIA GeForce RTX 4080s-16gb GPU, Intel I7-14700KF processor, deep learning framework PyTorch 1.12.0 with CUDA 11.6. During model training, the input image size was set to , the batch size was 16, and the number of training epochs was 300. The initial learning rate was , and the minimum learning rate was . Model optimization used stochastic gradient descent with a momentum of 0.937 and a weight decay of . During testing, the IoU threshold for Non-Maximum Suppression (NMS) was set to 0.7.

4.2. Datasets

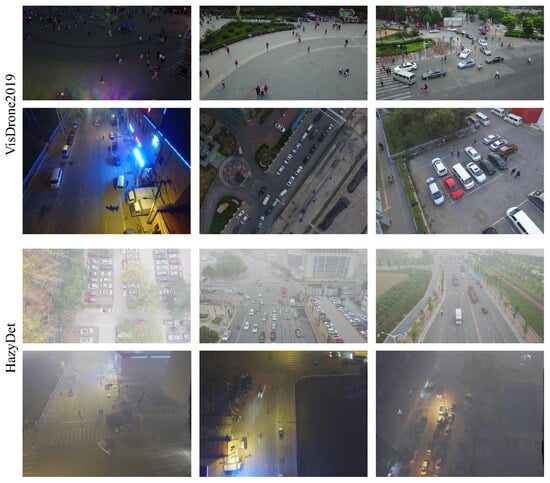

To comprehensively verify the detection performance and generalization ability of the proposed algorithm in complex scenarios, this paper uses two public UAV aerial photography datasets, VisDrone2019 and HazyDet, to conduct a comprehensive evaluation of the proposed method. These two datasets exhibit complementary scene characteristics and can provide a thorough assessment of UAV detection performance in different and challenging environments. Figure 6 shows image samples from the two different datasets.

Figure 6.

Samples from the VisDrone2019 and HazyDet datasets.

The VisDrone2019 dataset was published by the Machine Learning and Data Mining Lab (AISKYEYE team) at Tianjin University. Images were collected from various cities in China, covering different times, scenes, weather conditions, and environmental settings. The dataset includes a total of 10,290 high-resolution images with complete target annotations, specifically divided into 6471 training images, 1610 testing images, 548 validation images, and 1580 images for the challenge task. The annotation categories cover 10 common objects such as pedestrians, vehicles, and bicycles. This dataset is characterized by rich scene diversity and target distribution features and is widely used in research areas such as small target detection, target tracking, and multi-object recognition. In contrast, the HazyDet dataset was jointly published by the PLA Army Engineering University, Nankai University, Nanjing University of Posts and Telecommunications, and Nanjing University of Science and Technology. It focuses on images captured by UAVs under low visibility conditions such as haze, fog, and insufficient lighting, aiming to evaluate and enhance the robustness of object detection algorithms in adverse atmospheric and lighting environments. HazyDet includes 11,000 unique UAV images, with each image provided in both hazy and haze-free versions, totaling 22,000 images. It covers three common traffic target categories: cars, trucks, and buses, with a total of 383,000 annotated instances. The dataset is divided into 8000 training images, 1000 validation images, and 2000 testing images. HazyDet excels in image authenticity, annotation completeness, and sample size, providing high-quality data support for research in object detection, image dehazing, and multi-task perception under low visibility scenarios.

4.3. Evaluation Metrics

To comprehensively evaluate the efficiency and detection performance of the algorithm proposed in this paper, we use GFLOPs and Parameters to measure the computational overhead of the model. GFLOPs represent the total number of floating-point operations required by the model during a single forward inference process, reflecting the computational complexity of the model; Parameters refer to the total number of trainable parameters in the model.

In terms of detection performance, this paper selects Precision, Recall, and mean Average Precision at IoU = 0.5 (mAP@0.5) as the main evaluation metrics. Among these, Precision measures the proportion of true positives among the samples predicted by the model as positive, and its calculation formula is shown in Equation (7);

In the formula, P represents the precision, True Positive (TP) is the number of instances that are predicted as positive and actually are positive, and False Positive (FP) represents the number of instances that are predicted as positive but actually are negative. Recall measures how many of all true positives are successfully detected, and its calculation formula is shown in Equation (8);

In the formula, R represents the recall rate, TP and FP are consistent with Equation (8), and False Negative (FN) indicates the number of instances that are predicted as negative but actually are positive. mAP@0.5 is the mean value of Average Precision across all categories at an IoU threshold of 0.5, commonly used to measure the overall detection performance of a model across all categories, and its calculation formula is shown in Equation (9);

In the formula, N represents the total number of categories, is the Average Precision for the i-th category, and IoU = 0.5 indicates that the Intersection over Union threshold is set to 0.5 when calculating the Average Precision.

Additionally, this paper further introduces metrics such as , , , , and to measure the detection accuracy of extremely tiny, tiny, small, medium, and large targets, respectively. This allows for a more fine-grained assessment of the model’s detection performance across different target sizes.

4.4. Comparison Experiment

4.4.1. Comprehensive Performance Comparison on the VisDrone2019 Test Set

Based on the aforementioned experimental environment and evaluation metrics, the proposed MTD-YOLO algorithm was comprehensively compared with various YOLO series models and the latest FBRT-YOLO on the test set of the VisDrone2019 UAV dataset in this paper. In addition to evaluating the overall detection performance, the detection results for each target category were also meticulously analyzed. The experimental results are shown in Table 1 and Table 2.

Table 1.

Performance Comparison of MTD-YOLO and YOLO Series Algorithms on the VisDrone2019 Test Set.

Table 2.

Detailed Category-wise Performance Comparison of MTD-YOLO and YOLO Series Algorithms on the VisDrone2019 Test Set.

On the VisDrone2019 test set, MTD-YOLO outperforms all compared models across core evaluation metrics. Specifically, the mAP@0.5 of MTD-YOLO reaches 34.7%, which is 7.4% higher than that of the state-of-the-art FBRT-YOLO. For small-object detection metrics, , , and increase by 2%, 3.3%, and 4.9%, respectively, highlighting MTD-YOLO’s advantage in tiny-object recognition. Additionally, the precision and recall of MTD-YOLO reach 46% and 36.7%, respectively, both of which are significantly higher than those of all compared models, indicating that MTD-YOLO achieves a more favorable balance between detection precision and recall.

As can be seen from Table 2, MTD-YOLO achieves the highest detection accuracy in all categories of the VisDrone2019 test set. Its advantage is particularly significant in small target categories such as pedestrian, people, and bicycle, where it leads FBRT-YOLO by 10.4%, 10%, and 4.4%, respectively. In medium and large target categories such as car, van, and bus, MTD-YOLO also achieves the best accuracy, demonstrating the model’s good scale adaptability.

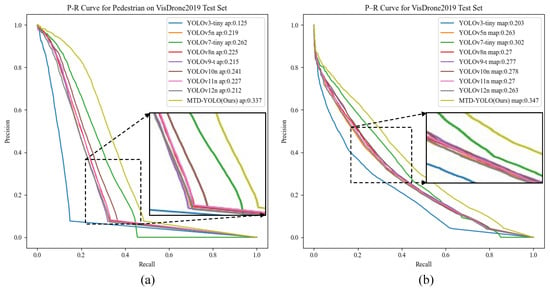

This study further plots the overall Precision-Recall (PR) curves for all models on the VisDrone2019 test set from the UAV perspective. Additionally, to provide a more fine-grained analysis of model performance, the PR curves for the representative small target category “pedestrian” in the VisDrone2019 test set are also presented, as shown in Figure 7.

Figure 7.

Overall Precision–Recall (PR) curves of the models on the VisDrone2019 Test Set (a). Precision–Recall (PR) curves of the models for the pedestrian category (b).

As shown in Figure 7a, MTD-YOLO almost consistently maintains the highest precision across the entire recall range, fully demonstrating its superior overall discriminative ability and good generalization performance. Figure 7b further validates the model’s advantages in dense small target scenarios, indicating its stronger feature extraction and target localization capabilities, which enable stable and efficient detection outputs in complex environments.

4.4.2. Comprehensive Performance Comparison on the HazyDet Test Set

After completing the performance comparison analysis on the VisDrone2019 test set, this paper further conducted a comprehensive comparison of MTD-YOLO with several YOLO series models on the HazyDet test set, including overall detection performance and detection performance for each target category. The experimental results are detailed in Table 3 and Table 4.

Table 3.

Performance Comparison of MTD-YOLO and YOLO Series Algorithms on the HazyDet Validation Set.

Table 4.

Detailed Category-wise Performance Comparison of MTD-YOLO and YOLO Series Algorithms on the HazyDet Test Set.

On the HazyDet test set, MTD-YOLO also demonstrates excellent detection performance, with the mAP@0.5 increasing to 74.5%, which is 6% higher than FBRT-YOLO’s 68.5%. In the small target detection task, , , are increased by 0.7%, 3.7%, and 7.5%, respectively, significantly enhancing the model’s ability to distinguish small-scale targets in low-clarity images. In addition, the precision and recall of MTD-YOLO are also superior to all comparison methods, further verifying the model’s detection robustness and generalization ability under low-quality image conditions.

As can be seen from Table 4, MTD-YOLO shows significant advantages over various versions of YOLO series algorithms on the HazyDet test set. In the target categories of car, truck, and bus, MTD-YOLO achieves mAP@0.5 values of 88.9%, 51.9%, and 82.6%, respectively, all of which are the highest values currently, fully verifying its adaptability and detection robustness for medium and large targets in low-visibility and complex environments.

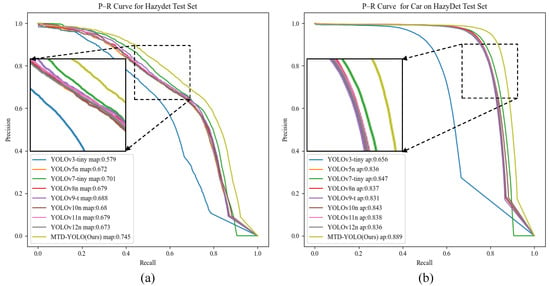

We also plotted the PR curves for all models on the HazyDet test set and for the car category, as shown in Figure 8.

Figure 8.

Overall Precision–Recall (PR) curves of the models on the HazyDet test set (a). Precision–Recall (PR) curves of the models for the Car category (b).

As can be seen from Figure 8a, MTD-YOLO maintains a high precision curve across the entire recall range, reflecting its stronger feature extraction capability and robustness in complex environments with heavy haze interference. The PR curve for the car category in Figure 8b further validates this advantage. This fully indicates that MTD-YOLO still possesses stronger category discrimination ability and detection accuracy in scenarios where target features are weakened.

The comprehensive comparison results indicate that MTD-YOLO not only achieves a significant improvement in overall detection accuracy but also demonstrates higher robustness and environmental adaptability in small target detection tasks from the UAV perspective. This fully validates the effectiveness and superiority of the proposed method in complex scenarios with severe occlusion and variable scales. Meanwhile, MTD-YOLO also achieves leading performance in the detection of medium and large targets, reflecting its good generalization ability and stability for targets of different scales. While enhancing the overall performance, the model size of MTD-YOLO is comparable to that of YOLOv8n. However, due to the introduction of multi-scale feature fusion, task decoupling, and dynamic alignment strategies, its GFLOPs are increased by 17.5 G compared with YOLOv8n, which reflects a reasonable trade-off between enhanced model representation ability and increased computational cost.

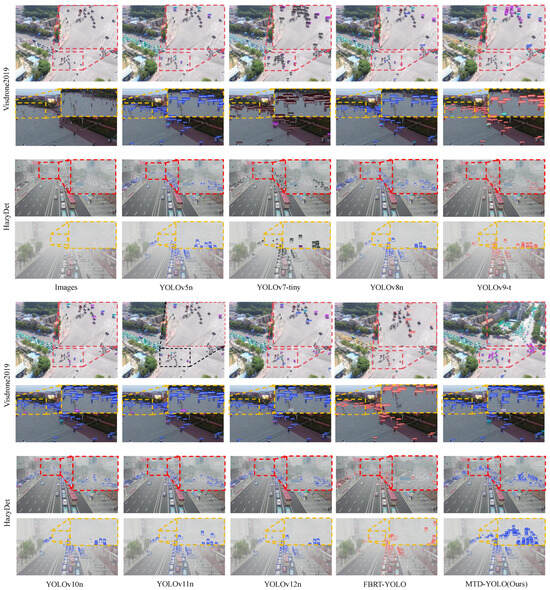

To more intuitively compare the detection performance of different models, this paper selected two sample images from both the VisDrone2019 and HazyDet test sets for visualization of detection results, covering typical extreme detection scenarios such as dense crowd gatherings and severe haze occlusions. The visualization results are shown in Figure 9. In dense small target scenarios, comparison models such as YOLOv8n are prone to problems like missed detections, boundary offsets, or box overlaps, while MTD-YOLO can accurately locate target boundaries, maintaining high detection integrity and boundary fit. Under low-visibility conditions such as haze, MTD-YOLO can still correctly identify fine-grained targets and effectively suppress background noise and false positives. These visualization results, together with the quantitative evaluation metrics, fully demonstrate the superiority of MTD-YOLO in small target detection tasks.

Figure 9.

Visualization results of various models on the VisDrone2019 and HazyDet test sets.

4.4.3. Comparison with Other Representative Detection Algorithms

Following the comparative analysis with YOLO-series algorithms, this study further evaluates MTD-YOLO against other representative detection algorithms on the VisDrone2019 and HazyDet test sets to comprehensively validate its superior performance in small target detection tasks under UAVs perspectives. The experimental results are presented in Table 5 and Table 6.

Table 5.

Performance Comparison of MTD-YOLO with Other Detection Algorithms on the VisDrone2019 Test Set.

Table 6.

Performance Comparison of MTD-YOLO with Other Detection Algorithms on the HazyDet Test Set.

The results from Table 5 and Table 6 indicate that MTD-YOLO demonstrates significant overall performance advantages on both the VisDrone2019 and HazyDet test sets. On the VisDrone2019 test set, compared to the second-best algorithm DINO-R50, MTD-YOLO reduces the model parameter count by 93.6% and computational load by 65.1%. With only 3.07M parameters and 25.6 GFLOPs, it achieves a 2.9% improvement in mAP@0.5. On the HazyDet test set, MTD-YOLO similarly achieves the best detection performance with the least computational resources and parameter scale. The aforementioned results substantiate the superiority of MTD-YOLO, showing that it achieves a high balance between detection accuracy and computational efficiency.

4.5. Ablation Analysis

This paper conducted ablation experiments to verify the effectiveness of the proposed modules. The experimental results are shown in Table 7 and Table 8.

Table 7.

Ablation Study of MTD-YOLO on the VisDrone2019 Test Set.

Table 8.

Ablation Study of MTD-YOLO on the HazyDet Test Set.

4.5.1. Effect of PMSRFU and C2f-PMSRFU

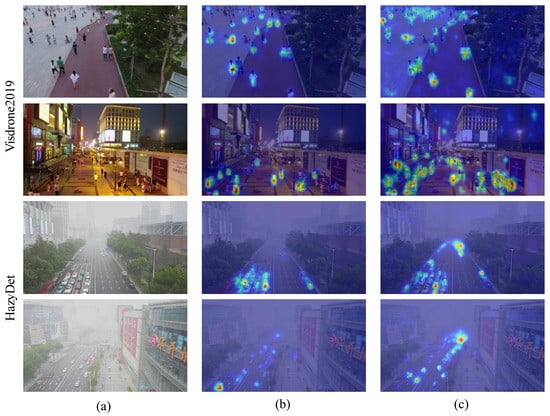

To address the issue of large scale variations and difficult feature extraction in small targets of UAV aerial images, the multi-branch parallel structure of PMSRFU proposed in this paper effectively enhances the model’s feature extraction capability and multi-scale expression ability, thereby improving detection accuracy. As shown in Table 7 and Table 8, the introduction of PMSRFU increased mAP@0.5 by 1.1% and 2% on the VisDrone2019 and HazyDet test sets, respectively, but this improvement in accuracy came with an increase in the number of parameters and GFLOPs. In contrast, the introduction of the C2f-PMSRFU module slightly improved the model’s detection accuracy while reducing the number of model parameters and computational load. When used in combination, the multi-scale expression ability of the model was further enhanced, with mAP@0.5 increased by 1.4% and 2.4% on the VisDrone2019 and HazyDet test sets, respectively, while keeping the number of parameters at 3.37 M and GFLOPs at 9. The overall detection accuracy was improved without a significant computational burden. Figure 10 shows the visualization heatmap after the introduction of the PMSRFU and C2f-PMSRFU modules. From Figure 10, it can be observed that the combined use of PMSRFU and C2f-PMSRFU modules significantly enhances the network’s attention to densely populated small target areas.

Figure 10.

Heatmap Comparison, Original Image (a), with PMSRFU (b), with C2f-PMSRFU (c).

4.5.2. Effect of PMSRFU SDIDA-Head

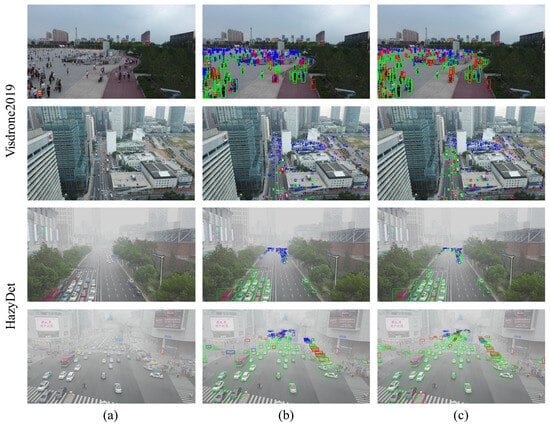

To address the existing issue of lack of effective feature interaction between task branches and the absence of scale adaptive adjustment in detection heads, this paper proposes a novel detection head structure—SDIDA-Head. This structure, based on shared convolutional feature extraction, introduces task decoupling and dynamic interaction alignment mechanisms, combined with scale adaptive adjustment strategies, to enhance the semantic and spatial consistency between classification and regression branches. This reduction in missed detections and false positives significantly improves the detection accuracy of small targets. From Table 7 and Table 8, it can be seen that the introduction of SDIDA-Head simultaneously increased the mAP@0.5 on the VisDrone2019 and HazyDet test sets by 6.9%, verifying the effectiveness of this strategy. Figure 11 shows the visualization of detection results after the introduction of SDIDA-Head and illustrates the comparison of True Positive, False Positive and False Negative for each image with the ground truth annotations. As shown in Figure 11, SDIDA-Head effectively reduces the occurrence of missed detections and false positives.

Figure 11.

Visualization comparison of detection results with ground truth annotations. Original image (a). Visualization of YOLOv8 detection results (b). Visualization of detection results with SDIDA-Head (c) (green boxes represent True Positives, blue boxes represent False Positives, and red boxes represent False Negatives).

4.6. Real-Time Analysis of the Algorithm

From the results presented in Table 1, Table 3, Table 5 and Table 7, it can be observed that the MTD-YOLO model achieves inference speeds of 175 FPS and 217 FPS on the VisDrone2019 and HazyDet datasets, respectively, when conducting image inference on the RTX 4080S GPU platform. Although its frame rate is not the highest among YOLO models of similar scale, it still demonstrates satisfactory real-time inference capability. The ablation studies in Table 7 and Table 8 further indicate that the introduction of the PMSRFU and C2f-PMSRFU modules has a minimal impact on the model’s inference speed. In contrast, the SDIDA-Head, which incorporates dynamic alignment, deformable sampling and multi-branch task decoupling operations, increases the computational complexity of the model, thereby resulting in a decrease in FPS. However, this computational cost is offset by significant gains in accuracy, overall reflecting a favorable trade-off between precision and speed. Considering the differences in computational power and memory bandwidth between the RTX 4080S and typical UAV onboard edge platforms, approximate scaling analysis based on computational power and bandwidth suggests that MTD-YOLO can achieve inference performance that meets the requirements for real-time object detection on mainstream UAV onboard SoC platforms such as AGX Orin and Orin NX. This validates the deployability of the proposed method in UAV edge computing scenarios.

5. Conclusions

This paper addresses the challenges of drastic scale variation and complex background information in small target detection within UAV aerial images by proposing a small target detection algorithm oriented towards the UAV perspective—MTD-YOLO. Firstly, a Parallel Multi-Scale Receptive Field Unit (PMSRFU) was designed to effectively enhance the model’s feature extraction and multi-scale representation capabilities. On this basis, a C2f-PMSRFU module was constructed to work in conjunction with PMSRFU, further improving detection performance without increasing model complexity. Lastly, a Shared-Decoupled Interactive Dynamic Alignment Head (SDIDA-Head) was introduced, which significantly enhances detection accuracy by incorporating task decoupling and dynamic interaction alignment mechanisms along with scale adaptive adjustment strategies. Extensive experimental results on the VisDrone2019 and HazyDet datasets demonstrate that MTD-YOLO achieves remarkable performance in both detection accuracy and inference speed. Specifically, the mAP@0.5 is improved by 7.6% and 6.6% compared to the YOLOv8 baseline, and by 7.4% and 6.0% compared to FBRT-YOLO, respectively. On the RTX 4080S platform, MTD-YOLO achieves inference speeds of 175 FPS and 217 FPS, respectively, showcasing its strong real-time processing capability and potential for engineering deployment. The favorable trade-off between accuracy and speed ensures that the model can maintain reliable and efficient detection performance under the limited onboard computational resources of UAVs. In view of the practical deployment requirements for UAVs, future work will focus on the collaborative optimization of model lightweighting and generalization capabilities. Techniques such as model distillation and structured pruning will be employed to further reduce computational complexity and enhance the algorithm’s adaptability and robustness under various target types and environmental conditions. This will facilitate the deployment of MTD-YOLO in typical UAV application scenarios, such as inspection monitoring and emergency response.

Author Contributions

Conceptualization, H.X. and M.W.; methodology, H.X. and M.W.; software, H.X.; validation, H.X.; formal analysis, H.X.; investigation, H.X.; resources, M.W.; data curation, H.X.; writing—original draft preparation, H.X.; writing—review and editing, M.W., L.J., R.C., J.W., Y.J. and Q.H.; visualization, H.X.; supervision, M.W.; project administration, M.W.; funding acquisition, M.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially supported by the National Natural Science Foundation of China (Nos. 41775165 and U22B2002), and by the Anhui Provincial University Outstanding Youth Research Project (No. 2023AH020022).

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Acknowledgments

The authors would also like to express their sincere gratitude to all colleagues and collaborators for their valuable discussions and constructive suggestions during the development of this research. Their insights and feedback greatly contributed to improving the quality and completeness of this work.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Guo, J.; Cao, S.; Wang, T.; Wang, K.; Xiao, J.; Meng, X. Transformer-based InspecNet for improved UAV surveillance of electrical infrastructure. Int. J. Appl. Earth Obs. Geoinf. 2025, 137, 104424. [Google Scholar] [CrossRef]

- Hajjaji, Y.; Boulila, W.; Farah, I.R.; Koubaa, A. Enhancing palm precision agriculture: An approach based on deep learning and UAVs for efficient palm tree detection. Ecol. Inform. 2025, 85, 102952. [Google Scholar] [CrossRef]

- Liu, K.; Zheng, J. UAV trajectory optimization for time-constrained data collection in UAV-enabled environmental monitoring systems. IEEE Internet Things J. 2022, 9, 24300–24314. [Google Scholar] [CrossRef]

- Alotaibi, J.; Oubbati, O.S.; Atiquzzaman, M.; Alromithy, F.; Altimania, M.R. Optimizing Disaster Response with UAV-Mounted RIS and HAP-Enabled Edge Computing in 6G Networks. J. Netw. Comput. Appl. 2025, 241, 104213. [Google Scholar] [CrossRef]

- Zhang, R.; Li, L.; Zhang, Q.; Zhang, J.; Xu, L.; Zhang, B.; Wang, B. Differential Feature Awareness Network Within Antagonistic Learning for Infrared-Visible Object Detection. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 6735–6748. [Google Scholar] [CrossRef]

- Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and tracking meet drones challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7380–7399. [Google Scholar] [CrossRef]

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893. [Google Scholar]

- Hinton, G.E. Deep belief networks. Scholarpedia 2009, 4, 5947. [Google Scholar] [CrossRef]

- Zhang, R.; Xu, L.; Yu, Z.; Shi, Y.; Mu, C.; Xu, M. Deep-IRTarget: An Automatic Target Detector in Infrared Imagery Using Dual-Domain Feature Extraction and Allocation. IEEE Trans. Multimed. 2022, 24, 1735–1749. [Google Scholar] [CrossRef]

- Zhang, R.; Cao, Z.; Huang, Y.; Yang, S.; Xu, L.; Xu, M. Visible-Infrared Person Re-Identification With Real-World Label Noise. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 4857–4869. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef]

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6154–6162. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125. [Google Scholar]

- Xie, J.; Yuan, B.; Guo, C.; Li, H.; Wang, F.; Chu, P.; Tian, J.; Wang, Y.; Liu, Z.; Wang, C. KL-YOLO: A lightweight adaptive global feature enhancement network for small object detection in low-altitude remote sensing imagery. IEEE Trans. Instrum. Meas. 2025, 74, 5037213. [Google Scholar] [CrossRef]

- Jiang, L.; Yuan, B.; Du, J.; Chen, B.; Xie, H.; Tian, J.; Yuan, Z. MFFSODNet: Multiscale feature fusion small object detection network for UAV aerial images. IEEE Trans. Instrum. Meas. 2024, 73, 5015214. [Google Scholar] [CrossRef]

- Tao, S.; Shengqi, Y.; Liu, H.; Gu, J.; Deng, L.; Liu, L. MIS-YOLOv8: An improved algorithm for detecting small objects in UAV aerial photography based on YOLOv8. IEEE Trans. Instrum. Meas. 2025, 74, 5020212. [Google Scholar] [CrossRef]

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 based on transformer prediction head for object detection on drone-captured scenarios. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2778–2788. [Google Scholar]

- Zeng, M.; He, S.; Zeng, Q.; Niu, Y.; Zhang, R. PA-YOLO: Small target detection algorithm with enhanced information representation for UAV aerial photography. IEEE Sens. Lett. 2025, 9, 7500304. [Google Scholar] [CrossRef]

- Feng, C.; Zhong, Y.; Gao, Y.; Scott, M.R.; Huang, W. TOOD: Task-aligned one-stage object detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 3490–3499. [Google Scholar]

- Li, X.; Wang, W.; Wu, L.; Chen, S.; Hu, X.; Li, J.; Tang, J.; Yang, J. Generalized focal loss: Learning qualified and distributed bounding boxes for dense object detection. Adv. Neural Inf. Process. Syst. 2020, 33, 21002–21012. [Google Scholar]

- Zhang, S.; Chi, C.; Yao, Y.; Lei, Z.; Li, S.Z. Bridging the gap between anchor-based and anchor-free detection via adaptive training sample selection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9759–9768. [Google Scholar]

- Feng, C.; Chen, Z.; Li, X.; Wang, C.; Yang, J.; Cheng, M.-M.; Dai, Y.; Fu, Q. HazyDet: Open-source benchmark for drone-view object detection with depth-cues in hazy scenes. arXiv 2025, arXiv:2409.19833. [Google Scholar]

- Wang, J.; Yang, W.; Guo, H.; Zhang, R.; Xia, G.-S. Tiny Object Detection in Aerial Images. In Proceedings of the 25th International Conference on Pattern Recognition (ICPR), Virtual, 10–15 January 2021; pp. 3791–3798. [Google Scholar]

- Tan, L.; Liu, Z.; Liu, H.; Li, D.; Zhang, C. A real-time unmanned aerial vehicle (UAV) aerial image object detection model. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; pp. 1–7. [Google Scholar]

- Song, G.; Du, H.; Zhang, X.; Bao, F.; Zhang, Y. Small object detection in unmanned aerial vehicle images using multi-scale hybrid attention. Eng. Appl. Artif. Intell. 2024, 128, 107455. [Google Scholar] [CrossRef]

- Wang, R.; Lin, C.; Li, Y. RPLFDet: A lightweight small object detection network for UAV aerial images with rational preservation of low-level features. IEEE Trans. Instrum. Meas. 2025, 74, 5013514. [Google Scholar] [CrossRef]

- Liu, X.; Zhou, S.; Ma, J.; Sun, Y.; Zhang, J.; Zuo, H. DFAS-YOLO: Dual Feature-Aware Sampling for Small-Object Detection in Remote Sensing Images. Remote Sens. 2025, 17, 3476. [Google Scholar] [CrossRef]

- Xu, Z.; Zhao, H.; Liu, P.; Wang, L.; Zhang, G.; Chai, Y. SRTSOD-YOLO: Stronger Real-Time Small Object Detection Algorithm Based on Improved YOLO11 for UAV Imageries. Remote Sens. 2025, 17, 3414. [Google Scholar] [CrossRef]

- Zhao, X.; Zhang, H.; Li, C.; Wang, K.; Zhang, Z. DVIF-Net: A Small-Target Detection Network for UAV Aerial Images Based on Visible and Infrared Fusion. Remote Sens. 2025, 17, 3411. [Google Scholar] [CrossRef]

- Zhang, R.; Yang, B.; Xu, L.; Huang, Y.; Xu, X.; Zhang, Q.; Jiang, Z.; Liu, Y. A Benchmark and Frequency Compression Method for Infrared Few-Shot Object Detection. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5001711. [Google Scholar] [CrossRef]

- Zhang, Z.; Zhang, J.; Wei, J.; Han, L.; Li, T. AIFE-Net: Transformer-based adaptive multi-scale feature extraction encoder for object detection. Neurocomputing 2025, 640, 130366. [Google Scholar] [CrossRef]

- Bian, D.; Tang, M.; Ling, M.; Xu, H.; Lv, S.; Tang, Q.; Hu, J. A refined methodology for small object detection: Multi-scale feature extraction and cross-stage feature fusion network. Digit. Signal Process. 2025, 164, 105297. [Google Scholar] [CrossRef]

- Wang, Y.; Tong, L.; Luo, S.; Xiao, F.; Yang, J. A multiscale and multidirection feature fusion network for road detection from satellite imagery. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5615718. [Google Scholar] [CrossRef]

- Liu, Y.; Li, E.; Liu, W.; Li, X.; Zhu, Y. LFEMAP-Net: Low-level feature enhancement and multiscale attention pyramid aggregation network for building extraction from high-resolution remote sensing images. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 2024, 17, 2718–2730. [Google Scholar] [CrossRef]

- Kong, D.; Gu, L.; Li, X.; Gao, F. Multiscale residual dense network for the super-resolution of remote sensing images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5612612. [Google Scholar] [CrossRef]

- Liao, Y.; Hu, Z.; Jin, F.; Liu, J.; Chen, N.; Lv, J.; Duan, Q. MSSA: A Multi-Scale Semantic-Aware Method for Remote Sensing Image–Text Retrieval. Remote Sens. 2025, 17, 3341. [Google Scholar] [CrossRef]

- Chen, L.; Xia, S.; Liu, X.; Xie, Z.; Chen, H.; Long, F.; Wu, Y.; Zhang, M. WetSegNet: An Edge-Guided Multi-Scale Feature Interaction Network for Wetland Classification. Remote Sens. 2025, 17, 3330. [Google Scholar] [CrossRef]

- Wen, R.; Yuan, Y.; Xu, X.; Yin, S.; Chen, Z.; Zeng, H.; Wang, Z. MambaSegNet: A Fast and Accurate High-Resolution Remote Sensing Imagery Ship Segmentation Network. Remote Sens. 2025, 17, 3328. [Google Scholar] [CrossRef]

- Li, X.; Chen, Y.; Liu, X.; Qin, Z.; Wan, J.; Yan, Q. RCF-YOLOv8: A Multi-Scale Attention and Adaptive Feature Fusion Method for Object Detection in Forward-Looking Sonar Images. Remote Sens. 2025, 17, 3288. [Google Scholar] [CrossRef]

- Zuo, F.; Liu, J.; Fu, M.; Wang, L.; Zhao, Z. An efficient anchor-free defect detector with dynamic receptive field and task alignment. IEEE Trans. Ind. Informat. 2024, 20, 8536–8547. [Google Scholar] [CrossRef]

- Ding, Y.; Wu, W.; Zhai, Y.; Zhang, L.; Pan, L.; Zhao, J. Adaptive feature fusion and task-dynamic alignment for real-time object detection on edge devices. Expert Syst. Appl. 2025, 290, 128341. [Google Scholar] [CrossRef]

- Zuo, F.; Liu, J.; Chen, Z.; Fu, M.; Wang, L. TGADHead: An efficient and accurate task-guided attention-decoupled head for single-stage object detection. Knowl.-Based Syst. 2024, 302, 112349. [Google Scholar] [CrossRef]

- Bai, L.; Song, H.; Feng, T.; Fu, T.; Yu, Q.; Yang, J. Revisiting class-incremental object detection: An efficient approach via intrinsic characteristics alignment and task decoupling. Expert Syst. Appl. 2024, 257, 125057. [Google Scholar] [CrossRef]

- Zhou, H.; Wang, Y.; Peng, W.; Guan, X.; Tao, T. ScaleViM-PDD: Multi-Scale EfficientViM with Physical Decoupling and Dual-Domain Fusion for Remote Sensing Image Dehazing. Remote Sens. 2025, 17, 2664. [Google Scholar] [CrossRef]

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar] [CrossRef]

- Khanam, R.; Hussain, M. What is YOLOv5: A deep look into the internal features of the popular object detector. arXiv 2024, arXiv:2407.20892. [Google Scholar] [CrossRef]

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475. [Google Scholar]

- Jocher, G.; Chaurasia, A.; Qiu, J. YOLOv8 by Ultralytics YOLO. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 20 August 2025).

- Wang, C.-Y.; Yeh, I.-H.; Liao, H.-Y.M. YOLOv9: Learning what you want to learn using programmable gradient information. In Computer Vision–ECCV 2024; Springer: Cham, Switzerland, 2025; pp. 1–21. [Google Scholar]

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458. [Google Scholar]

- Jocher, G.; Qiu, J. YOLOv11 by Ultralytics YOLO. 2024. Available online: https://github.com/ultralytics/ultralytics (accessed on 20 August 2025).

- Tian, Y.; Ye, Q.; Doermann, D.S. YOLOv12: Attention-centric real-time object detectors. arXiv 2025, arXiv:2502.12524. [Google Scholar]

- Xiao, Y.; Xu, T.; Xin, Y.; Li, J. FBRT-YOLO: Faster and Better for Real-Time Aerial Image Detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 8673–8681. [Google Scholar]

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO series in 2021. arXiv 2021, arXiv:2107.08430. [Google Scholar] [CrossRef]

- Zhang, H.; Li, F.; Liu, S.; Zhang, L.; Su, H.; Zhu, J.-J.; Ni, L.M.; Shum, H.-Y. DINO: DETR with improved denoising anchor boxes for end-to-end object detection. arXiv 2022, arXiv:2203.03605. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).