1. Introduction

Coastal restoration continues to expand as managers work to mitigate wetland loss and degradation, yet effective monitoring remains challenging, especially in areas with limited accessibility [1]. Vegetation community composition data are critical for monitoring restoration performance and informing adaptive management strategies [2]. Traditional field-based surveys provide detailed plot- or quadrat-level data (e.g., plant species composition and coverage, plant survivability, and diversity) to assess restoration outcomes [3,4,5,6,7], yet they are labor-intensive, spatially limited, and often insufficient for capturing conditions across large or complex sites [2]. As restoration efforts scale up, practitioners are increasingly seeking ways to supplement existing monitoring approaches with methods that provide broader spatial coverage and greater efficiency.

Remote sensing has become a way to evaluate restoration performance, such as characterizing vegetation composition, abundance, structure, function, diversity, colonization rates, and condition [3,7,8,9,10]. In wetland systems, where vegetation is foundational to habitat provision and ecological processes, remote sensing can offer valuable inputs for understanding restoration trajectories [2,6,7]. Uncrewed aircraft system (UAS) technology is an emerging tool that can complement and maximize field-based methods by providing very high spatial resolution imagery and elevation data [11,12,13]. However, its rapid evolution, shifting guidelines, and technical demands can pose challenges for implementation [6,14]. As a result, practitioners continue to seek clarity on questions such as when UASs are appropriate for vegetation monitoring, how accurately species or vegetation types can be identified, and which data sources or classification approaches are most effective.

A growing body of research evaluates UAS capabilities for assessing restoration performance, including species-level or habitat type identification in coastal marsh sites [2,10,12,13]. These studies generally evaluate how such factors as UAS data types (e.g., imagery or digital elevation model [DEM]), data collection timing (e.g., seasonality), classification method (e.g., pixel- or object-based image analysis [OBIA], supervised, etc.), or classification inputs (e.g., single spectral bands, vegetation indices [VIs], canopy height models [CHMs], etc.) contribute to classification performance. Such studies examine how combining various UAS data types and classification methods can influence classification accuracy. For example, one study used high-resolution RGB imagery modified for near-infrared (NIR), along with plant-height estimates and an OBIA approach, to map basic vegetation classes [15]. Another study found that OBIA coupled with digital surface model (DSM) inputs and high-resolution multispectral imagery can improve identification of both individual and dominant species groups [16]. Similarly, others reported higher accuracies when elevation layers were added and when using red–green–NIR composites with pixel-based classifiers to identify salt marsh species [17], or that classification performance was improved with finer spatial resolution and when including DSM, CHM, and rugosity-derived variables [18]. Generally, these studies reported high accuracies (70–90%) across a range of marsh vegetation types, with accuracy depending on spectral configuration and classifier type.

Other studies have compared or integrated UAS and satellite imagery to classify coastal marsh vegetation. For instance, one study used modified RGB UAS imagery and WorldView-2/3 multispectral data to map dominant vegetation types, noting moderate species-level agreement and limitations associated with NIR capture and training data [19]. Another study combined UAS and WorldView-3 data to evaluate multiple classifiers and spectral inputs for several wetland species, achieving high overall accuracies (>85%) but with reduced performance for some species [20]. Similarly, another found that UAS imagery served as an effective supplement for training and validating satellite-based classifications, producing high classification accuracies for nine habitat types [21]. Such studies often include VIs estimated from imagery, such as the Normalized Difference Vegetation Index (NDVI), a normalized difference ratio of the NIR and red bands that serves as a proxy for vegetation extent, density, and biomass [22,23]. Collectively, these studies demonstrate that UASs can strengthen satellite-derived vegetation mapping, though species-specific challenges remain.

While these studies show UAS-based remote sensing potential for wetland vegetation classification, they also reveal opportunities to understand related technological and methodological capabilities in a restoration context [14,24]. Better integration of traditional field surveys, especially routine collections conducted for restoration monitoring, with remote sensing is needed but constrained by several challenges, including limited collaboration among disciplines, incompatibilities between plot-based data and pixel-based classifications, and reliance on point-based “ground truth” data collected specifically for training and validation [3,25]. Traditional accuracy assessments are typically based on ground truth (e.g., ground observations) and a confusion or error matrix [26]; they have changed little despite advances in remote sensing methods and may impose unrealistic expectations that underestimate classification performance [27,28]. Furthermore, they do not quantify or express relevance to restoration metrics. Because field monitoring data are collected at the plot level (capturing species composition, percent cover, and community structure) and remote sensing relies on pixel-level observations, discrepancies between plots and pixels often hinder direct comparison and highlight the need for evaluating more integrated and comparative approaches [25]. More specifically, ecological information summarized over the plot area is often at a different spatial scale and sampling unit than a classification based on a spectral measurement integrated at the pixel level [25]. This can lead to unreliable or invalid comparisons in which plot-based data do not translate well to training or validation (site-based observations) used in most traditional accuracy assessments of pixel-based classifications [25].

With most UAS studies relying on point-based ground truth collected solely for training and validation, few address how classifications compare to the traditional monitoring plot data that restoration practitioners rely on to evaluate project performance. As a result, it is unclear how well UAS-derived classifications align with restoration-relevant plot-level metrics (e.g., taxa richness, species assemblage, etc.), revealing a gap in understanding. This disconnect poses challenges for integrating field and remote sensing methods, even though improved integration would maximize their complementary strengths and help overcome limitations of each [3,5,29]. In turn, improved integration of field and remote sensing techniques has direct implications for selecting suitable UAS data and analysis methods appropriate for addressing specific restoration goals, restoration performance assessment, and ultimately, the use of remotely sensed data in vegetation monitoring practices.

Given this gap, this study focuses on evaluating the comparability of UAS-derived wetland vegetation classifications with traditional field monitoring data that are fundamental to restoration monitoring. It builds on the evolving body of work that aims to understand the utility and performance of UAS multispectral imagery and lidar elevation data to identify restored wetland vegetation by utilizing two coastal restoration sites in southeast Louisiana [11]. Specifically, the work examines the effects of classification method (maximum likelihood and random forest), spectral data source (5- and 10-band multispectral imagery), and lidar-derived elevation surfaces on classification performance across metrics relevant to restoration monitoring: taxa richness, community assemblage similarity (species and percent cover), species presence identification (precision, recall, and F1 scores), and percent-cover prediction. The evaluation steps described in this paper provide an evidence-based, foundational approach to determine when, and under what conditions, practitioners can apply and adapt the technology to other restoration sites. The results provide insight into how well UAS-based classifications align with traditional field monitoring data and identify practical pathways by which UAS technology can be used to assess restoration progress and outcomes related to vegetation.
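As a rough illustration of the species presence metrics named above, the sketch below compares the set of species recorded in one field plot with the set of species a classification places inside the same plot. The exact definitions used later in the study may differ; the function name `presence_scores` and the two species lists are hypothetical and serve only to show how precision, recall, and F1 relate to each other.

```python
def presence_scores(field_species: set, classified_species: set):
    """Precision, recall, and F1 for species presence within one plot.

    Assumption: a species counts as a true positive when it appears in
    both the field survey and the classification for the same plot.
    """
    tp = len(field_species & classified_species)   # found in both
    fp = len(classified_species - field_species)   # mapped but not surveyed
    fn = len(field_species - classified_species)   # surveyed but not mapped
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return precision, recall, f1


# Hypothetical plot: species lists are illustrative, not study data.
field = {"Paspalum vaginatum", "Morus rubra", "Lycium carolinianum"}
mapped = {"Paspalum vaginatum", "Morus rubra", "Unknown grass"}

precision, recall, f1 = presence_scores(field, mapped)
taxa_richness_field = len(field)     # number of taxa recorded in the plot
taxa_richness_mapped = len(mapped)   # number of classes mapped in the plot
print(round(precision, 2), round(recall, 2), round(f1, 2))
```

Because the comparison is made per plot rather than per pixel, the same function can be applied to each monitoring plot and the scores summarized across plots.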

2. Materials and Methods

2.1. Study Sites

Two coastal study sites in southeast Louisiana were selected from an inventory of potential candidate sites: Spanish Pass Ridge and Marsh Restoration (BA-0191) and Bayou LaBranche Wetlands Creation (PO-17), referred to hereafter as Spanish Pass and LaBranche, respectively (Figure 1, [11]). These sites were selected because they represent a range of wetland restoration types and conditions, with LaBranche being one of the first wetland restoration projects in the state as a typical constructed marsh platform and Spanish Pass being more recently restored as a unique marsh ridge system, both created from dredged sediment. The two study areas are likewise part of U.S. Army Corps of Engineers (USACE) research aimed at exploring technological advancements, such as UASs, for ecosystem restoration monitoring [11,30].

2.1.1. Bayou LaBranche Wetland Creation Site

LaBranche was the first restoration project constructed under the Coastal Wetlands Planning, Protection and Restoration Act (CWPPRA) and was completed in 1994, with the USACE as the federal sponsor and the Coastal Protection and Restoration Authority (CPRA) as the state sponsor. The constructed marsh platform totals 1.76 km² in size and is located in St. Charles Parish, LA, between Lake Pontchartrain and U.S. Interstate 10 near the Bonnet Carre Floodway. The restoration goal was to create new vegetated marsh utilizing dredged material, including 1.23 km² of shallow-water habitat for emergent wetland vegetation and a marsh to open-water ratio of 70% marsh to 30% water five years after construction [31]. Prior to restoration, the site experienced almost complete wetland loss from altered hydrology and increased salinity compounded by railroad construction, draining to support farming, flooding, hurricanes, storm surge, and canals, ultimately converting to open water [31]. Restoration included 2.1 million m³ of sediment dredged from the lake and deposited into earthen containment berms, followed by aerial seeding to help stabilize the sediment. Two years after construction, an additional 1600 emergent wetland plants were placed along the marsh edge to increase marsh protection and encourage vegetation growth [31]. Over 20 years of monitoring was conducted with a partial focus on habitat mapping to evaluate emergent marsh, including traditional vegetation surveys to assess species composition changes. Overall, project goals were met, with final land–water analyses done in 2012 showing that the percentage of land increased to 94%, resulting in the creation of 327 acres of land, while most of the remaining open water areas (27 acres) occurred in canals [31]. Although it took longer than expected, by 2013 the project area elevation had settled to that of the surrounding natural marsh, with predominantly emergent marsh vegetation, resulting in greater project longevity than expected. For field demonstration purposes, a western section of the larger project area was subset to meet logistical and UAS line-of-sight requirements, resulting in an area of 0.5 km² for evaluation in this study (Figure 2A).

2.1.2. Spanish Pass Ridge and Marsh Restoration Site

Spanish Pass is a unique ridge restoration and marsh creation project completed in 2018 and located in Plaquemines Parish, west of Venice, LA [32]. It totals 0.4 km² of constructed ridge (~1.5 km long; highest point at 1.8 m elevation) with a marsh platform (~137 m wide) created from 531,000 m³ of dredged sediment (Figure 2B). The project is part of the Louisiana Coastal Area, Beneficial Use of Dredged Material Program, which serves to restore naturally historic ridges in coastal LA. Sediment was dredged through routine operation and maintenance dredging of the USACE Authorized Navigation Project within the Hopper Dredge Disposal Area. Marsh ridges were historically found in LA, but degradation of natural channel banks and adjacent marsh has resulted from a variety of natural and anthropogenic factors [32]. Project benefits include essential habitat (e.g., habitat for neotropical migratory birds) and protection from saltwater intrusion and storm surge. No funding was allocated for vegetation plantings in the restored area after construction; however, the Barataria-Terrebonne National Estuary Program (BTNEP) received support from Shell Oil Company through the Saving Marshes and Ridges Together (SMART) initiative to plant native vegetation in 2019. SMART volunteers planted the area with over 4300 native trees and grasses, including 1500 herbaceous seashore paspalum (Paspalum vaginatum), 1450 red mulberry (Morus rubra), 450 salt matrimony vine (Lycium carolinianum), and 900 other transplants of assorted species to promote stabilization of sediment and provision of habitat to local fauna. Approximately one third of the planted vegetation survived, and natural vegetation establishment has likewise been observed, producing a mix of herbaceous and woody vegetation. Formal restoration monitoring was not planned following construction because of funding limitations.

2.2. Field Vegetation Survey Data Collection and Processing

Prior to conducting field surveys, vegetation inventory plots were established for a planning-level assessment in both study sites. For LaBranche, these included previously monitored plots described in Richardi (2014) [31] as well as new plots to be surveyed for determining habitat and species diversity and documenting any invasive species. New plot locations were delineated based on areas of representative habitat type or vegetation density changes using Google Earth imagery. A total of 46 pre-determined plots were selected for monitoring at LaBranche (n = 20) and Spanish Pass (n = 26). Plot centers were loaded onto a Trimble Geo 7X handheld Global Navigation Satellite System (GNSS) receiver (Trimble Inc., Westminster, CO, USA) for navigation in the field. At each plot center, a 0.02 hectare circular sampling plot (8 m radius) was designated via a hypsometer and surveyed. Latitude and longitude coordinates were recorded at each plot center. Within each plot, habitat type and percent cover were assessed, with all vegetation recorded by species, cover/abundance, diameter-at-breast height (DBH) for woody vegetation, height, phenology (buds, flowers, fruit/seed), and major strata community structure. This method was analogous to a granular level “tier 3” survey as described in Theel et al. (2024) [33]. Values greater than 100% coverage were possible when vertical layering of species within the plot occurred. Any invasive species within the plot were noted, and a general plot level description was recorded. Ultimately, due to site restrictions, the number of plots was reduced to seven at LaBranche and 13 at Spanish Pass (Figure 3A,B), yet the retained plots were selected to capture ecological variability. Plot data were reserved for comparison to the remote sensing classification results.
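The plot geometry above can be checked with a few lines of arithmetic. The sketch below confirms that an 8 m radius circle is roughly 0.02 hectare and, as an illustration of the plot-versus-pixel scale difference discussed in the Introduction, estimates how many 7.5 cm pixels (the multispectral ground sample distance reported in Section 2.3) fall inside one plot. The function and variable names are illustrative only.

```python
import math

PLOT_RADIUS_M = 8.0        # plot radius stated in the text
M2_PER_HECTARE = 10_000.0
PIXEL_SIZE_M = 0.075       # 7.5 cm multispectral GSD (Section 2.3)


def circular_plot_area_m2(radius_m: float) -> float:
    """Area of a circular sampling plot in square meters."""
    return math.pi * radius_m ** 2


area_m2 = circular_plot_area_m2(PLOT_RADIUS_M)
area_ha = area_m2 / M2_PER_HECTARE

# Approximate pixel count; ignores partial pixels along the plot edge.
pixels_per_plot = area_m2 / PIXEL_SIZE_M ** 2

print(f"{area_m2:.1f} m^2, {area_ha:.3f} ha, ~{pixels_per_plot:,.0f} pixels")
```

A single plot record therefore summarizes tens of thousands of classified pixels, which is one reason plot-level and pixel-level data are not directly interchangeable.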

In addition to plots, individual points were collected to assist with classification training, attributing the dominant plant type at the recorded point location using the same equipment used to collect the plot center coordinates. Points focused on characteristic or foundational vegetation types (e.g., representative, restored, or invasive species) to help differentiate them in the remotely sensed data (e.g., used as classification training data). For the same reasons that limited the number of field plots, it was not possible to collect points randomly, and thus, points were selected to help balance the number and variety of vegetation types (e.g., possible strata) while being practically accessible [26], though some low-abundance species were less frequently encountered. At LaBranche, species included those listed in a previous monitoring report [31], while at Spanish Pass they included restored plants as well as species that established naturally. In total, 55 individual points were collected at the two study sites (Figure 3A,B). Note that at LaBranche, accessibility constraints precluded plot and point collection in the southeast part of the study site. Individual point and plot center location coordinates collected in the field were post-processed using GPS Pathfinder Version 5.6 differential correction, which combines data from the field GPS rover and a known reference point or base station to improve GPS accuracy and correct atmospheric and satellite geometry errors. All field data were collected 24–28 October 2022 and compiled into spreadsheet and geospatial formats for use in remote sensing analysis.

2.3. UAS Data Collection

UAS imagery and lidar were collected using two platforms with various mounted sensors for evaluation. Lidar and RGB imagery were collected using an Inspired Flight IF1200 heavy lift multirotor UAS with a GeoCue TrueView 640 survey-grade lidar system (e.g., pulse repetition rate up to 200 k per second). The system consists of a Riegl miniVUX-3UAV lidar scanner with an Applanix Position and Orientation System (POS) APX-20 and two Sony complementary metal-oxide semiconductor (CMOS) IMX-183 20-megapixel (MP) RGB cameras positioned off-nadir for an expanded 120° field of view. Multispectral imagery was also collected using the IF1200 and a fixed-wing Vertical Take-Off and Landing (VTOL) WingtraOne GEN I UAS. The AgEagle MicaSense Dual 5-band integrated sensor consisted of RedEdge-MX and RedEdge-MX Blue sensors configured for operation on the IF1200 (herein referred to as 10-band), while the AgEagle MicaSense Altum 6-band imager was mounted on the WingtraOne GEN I (Table 1). The sixth band for the Altum is an alpha channel and was not used in this study; therefore, the sensor will be referenced herein as 5-band. Both MicaSense imagers were equipped with a downwelling light sensor (DLS) to accurately calibrate the at-sensor radiance. Table 2 lists the visible to NIR multispectral band names, band numbers, center wavelengths, and bandwidths for the multi-band imagers used in this study. All UAS imagery and lidar data were collected 24–26 October 2022 at LaBranche and 27–28 October 2022 at Spanish Pass (Table 1).

Flight plans were generated for all sensors and respective platforms (Table 3). The TrueView sensor suite was used to collect data at an altitude of 70 m with a mean lidar point density of roughly 100 points/m² in non-overlap regions and an image ground sample distance (GSD) of 1.9 cm. The 5-band imager was flown at 120 m resulting in an image GSD of 5.1 cm, and products were resampled to 7.5 cm to be consistent with the 10-band imagery GSD. The 10-band imager was flown at 110 m with an image GSD of 7.5 cm.

2.4. UAS Data Processing

The IF1200 with the TrueView 640 is a survey-grade mapping system capable of 3 cm vertical accuracy using post-processed kinematic (PPK) positioning with high-end Applanix APX-20 GNSS and inertial measurement hardware. This level of accuracy requires post-processed corrections derived from a localized static GNSS base station. Lidar processing was accomplished using GeoCue’s TrueView Evo software version 2021.1.47.0 (GeoCue, Madison, AL, USA), which packages many third-party applications into a workflow-focused processing stream. GeoCue’s Evo software runs Applanix’s POSPac-UAV (version 8.7) to ensure an accurate and reliable post-processed solution.

Raw cycle data from the TrueView were ingested into Evo, including onboard generated point cloud data and GNSS and inertial navigation data from the sensor. The Applanix POSPac-UAV software version 8.7 was utilized to generate a post-processed kinematic trajectory solution from the GNSS inertial navigation data and the GNSS base station data. This trajectory provided accuracies better than 3 cm Root Mean Square Error (RMSE) and precision better than 2.5 cm at 1 σ. Once the trajectory was checked for quality assurance (QA) and quality control (QC) using Applanix’s accuracy plots, Evo generated the lidar point cloud. An automated point cloud classification algorithm was applied to classify objects and terrain features, such as bare ground, vegetation, buildings, and other structures.

Classification attributes from the American Society for Photogrammetry and Remote Sensing (ASPRS) LAS 1.4 Specification (version 1.4-R15; July 2019, [36]) were used for the classification nomenclature. A DSM was created using all valid classified points. Additional quality control measures were utilized to ensure points were classified correctly, specifically ground points, and that there were no surface anomalies in the derived products. The bare ground points were subsequently extracted to generate a digital terrain model (DTM). In both cases, the models were created using a 50 cm GSD, and the DTM was used as input in Evo image processing.

During Evo project creation, raw images collected from both 20 MP cameras were imported, including GPS coordinates and camera parameters locally stored within the Joint Photographic Experts Group (JPEG) image as Exchangeable Image File Format (EXIF) metadata. Evo utilized third-party software, Agisoft Metashape Professional version 1.7.4 build 12,950 (Agisoft, St. Petersburg, Russia), for a seamless photogrammetric Structure from Motion (SfM) workflow. The embedded GNSS coordinates were used as input to perform a bundle adjustment and camera calibration to refine the spatial orientation of the images and correct for any geometric distortions introduced during flight. The SfM workflow reconstructed the 3D geometry by analyzing the overlapping images and extracting key tie-point features, resulting in sparse point clouds from the estimated camera positions. Depth maps were generated using a “mild” filtering mode to control noise. Mild settings preserve the finer detail of the points while removing excessive noise [37]. Dense point clouds were created and can be used for orthomosaic creation; however, the DTM created during the lidar processing was used as input for orthomosaic creation. Orthomosaic resolution is based on flying height for optimal resolution, which resulted in a GSD of 1.9 cm. Final mosaics were exported in BigTIFF format (as files were over the 4 GB threshold for standard Tag Image File Format [TIFF]) and compressed using Lempel-Ziv-Welch (LZW) compression at full resolution.

The MicaSense 10-band and 5-band data were stored in TIFF files (one image per band). The UAS on-board navigation provided planned GNSS exposure points, while the on-camera GNSS sensor provided positioning of each frame location. The RedEdge-MX camera was synced to the RedEdge-MX Blue camera to collate the same positioning information for each image file. Positioning data (latitude, longitude, and altitude) were stored as TIFF tags. Metadata from the images were extracted with the Phil Harvey ExifTool software version 12.61. Additionally, the MicaSense cameras have a DLS for integration and accurate ambient light calibration; however, the DLS data were not used for calibration during processing because of dynamic environmental lighting conditions caused by broken clouds and multiple cloud layers.

The unrectified TIFF files, navigation data, DTM file, DLS and reflectance calibration panel images, as well as camera calibration parameters provided by the manufacturer, were given as input to SimActive’s Correlator 3D (C3D) software version 9.2.0 (SimActive, Montreal, QC, Canada). Aerial triangulation (AT) was performed using unconstrained optimization for interior orientation (IO) parameters (focal length, principal points, radial and tangential distortion coefficients) and exterior orientation (EO) parameters (omega, phi, kappa). AT collected tie-points for each image to perform a bundle adjustment. Unconstrained optimization allowed the IO and EO parameters to float (meaning here that the values are unlocked so that they can be adjusted) to find the best bundle adjustment and camera calibration, which is assessed based on an average projection error of less than 1 pixel and 1 σ. Photo identifiers (PID) were used to further constrain the tie point optimization and bundle adjustment. When using photo identifiers, C3D adjusts for user error and uncertainties in PID selection of the XYZ coordinates.

MicaSense’s radiometric calibration model [38] was used to convert raw pixel values into absolute spectral radiance values with units of watts per square meter per steradian per nanometer (W/m²/sr/nm). This radiometric calibration process compensates for sensor gain and exposure settings, lens vignette effects, and black level. These metadata are stored in the TIFF tags of the image and are read by the software during the calibration process. The equation to compute spectral radiance is as follows:

$$L = V(x,y)\,\frac{a_1}{g}\,\frac{p - p_{BL}}{t_e + a_2 y - a_3 t_e y}$$

where

$p$ is the normalized raw pixel value;

$p_{BL}$ is the normalized black level value;

$a_1$, $a_2$, $a_3$ are the radiometric calibration coefficients;

$V(x,y)$ is the vignette polynomial function for pixel location (x,y);

$t_e$ is the image exposure time;

$g$ is the sensor gain setting;

$x, y$ are the pixel column and row number;

$L$ is the spectral radiance in W/m²/sr/nm.
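The per-pixel radiance conversion can be sketched as a small function. This is a minimal illustration that assumes the commonly published form of the MicaSense model, L = V(x,y) · (a1/g) · (p − pBL) / (te + a2·y − a3·te·y), using the symbols defined above. The function names, the 16-bit normalization helper, and all numeric values are hypothetical; in practice the coefficients, black level, exposure, and gain are read from the image TIFF tags.

```python
def normalize_dn(dn: int, bit_depth: int = 16) -> float:
    """Scale a raw digital number to 0-1 (assumed 16-bit imagery)."""
    return dn / float(2 ** bit_depth)


def raw_to_radiance(p, p_bl, a1, a2, a3, vignette, t_e, g, y):
    """Spectral radiance (W/m^2/sr/nm) for one pixel.

    p, p_bl   : normalized raw pixel value and normalized black level
    a1, a2, a3: radiometric calibration coefficients
    vignette  : V(x, y), vignette correction already evaluated at the pixel
    t_e, g    : exposure time (s) and sensor gain
    y         : pixel row number (row-dependent exposure correction)
    """
    row_corrected_exposure = t_e + a2 * y - a3 * t_e * y
    return vignette * (a1 / g) * (p - p_bl) / row_corrected_exposure


# Hypothetical values chosen only to exercise the function.
p = normalize_dn(32768)      # 0.5
p_bl = normalize_dn(6554)    # roughly 0.1
radiance = raw_to_radiance(p, p_bl, a1=1.5e-4, a2=0.0, a3=0.0,
                           vignette=1.0, t_e=0.001, g=1.0, y=480)
print(radiance)
```

With a2 and a3 set to zero the denominator reduces to the exposure time, which makes it easy to verify the arithmetic by hand before real coefficients are substituted.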

The lidar-derived DTM was used to assist in further constraining the image positions to ground for geometric correction and final orthorectification. Prior to and after each flight, calibration reflectance panels (CRP) were imaged with each camera to convert radiance images to absolute reflectance during orthorectification. Reflectance was calculated by taking the average value of radiance for the image pixels located inside the captured panel area of the before- and after-flight images. A transfer function from radiance to reflectance was then computed for each band using the following equation [39]:

$$F_i = \frac{\rho_i}{\overline{L_i}}$$

where

$F_i$ is the reflectance calibration factor for band i;

$\rho_i$ is the average reflectance of the CRP for the ith band (from the calibration data of the panel provided by MicaSense Inc.);

$\overline{L_i}$ is the average value of the radiance for the pixels inside the panel for band i.
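The panel-based transfer function can be illustrated with a short sketch. It assumes the usual form of the calibration factor for band i, F_i = (panel reflectance for band i) / (mean panel radiance for band i), and that image radiance is multiplied by F_i to obtain reflectance. The panel reflectance and radiance values below are hypothetical, not values from this study.

```python
from statistics import fmean


def reflectance_factor(panel_reflectance: float, panel_radiances) -> float:
    """Calibration factor F_i for one band.

    panel_reflectance: known panel reflectance for the band (0-1)
    panel_radiances  : radiance of pixels inside the panel area, pooled
                       from the before- and after-flight panel images
    """
    return panel_reflectance / fmean(panel_radiances)


def radiance_to_reflectance(radiance: float, factor: float) -> float:
    """Convert one radiance value to reflectance with the band factor."""
    return radiance * factor


# Hypothetical panel pixels for a single band (W/m^2/sr/nm).
panel_pixels = [0.09, 0.10, 0.11, 0.10]
f_band = reflectance_factor(0.5, panel_pixels)

scene_reflectance = radiance_to_reflectance(0.04, f_band)
print(round(f_band, 3), round(scene_reflectance, 3))
```

One factor is computed per band, so a 5-band image needs five factors and a 10-band image needs ten.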

A 7.5 cm GSD mosaic was created from the orthorectified images and inspected for quality assurance and quality control; where necessary, seamlines were adjusted to improve accuracy. The final reflectance mosaic was exported at full resolution as a 16-bit TIFF file in a single image block over the study sites.

Photo identification and ground control points (GCPs) were collected at both study sites for an accuracy check. The MicaSense data processing utilized PIDs to help constrain the geometric calibration and improve accuracy. More specifically, thirteen points were collected across the western portion of Spanish Pass, while points in the eastern portion were limited due to site access constraints. In contrast, only four points were collected at LaBranche due to site access limitations and dense vegetation. GCPs consisted of checkerboard markers, and centers were surveyed using a survey-grade Leica® GS18 RTK GNSS base-rover system. All lidar, imagery, and survey data were processed with ellipsoid heights tied to the Geodetic Reference System 1980 (GRS80) in the North American Datum (NAD) of 1983 with the 2011 realization and Universal Transverse Mercator (UTM) Zone 15 north for LaBranche and UTM Zone 16 north for Spanish Pass. Derivative products (e.g., DTMs and DSMs) were processed in the NAVD88 Geoid18 vertical datum.
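One common way to summarize an accuracy check against surveyed control points is the root mean square error (RMSE) of the offsets between surveyed and mapped positions. The text does not state which statistic was computed from the GCPs, so the sketch below is only an assumed example; the coordinates are hypothetical and the function names are illustrative.

```python
import math


def rmse(residuals) -> float:
    """Root mean square error of a sequence of residuals."""
    residuals = list(residuals)
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))


def horizontal_rmse(surveyed, mapped) -> float:
    """RMSE of horizontal offsets between paired (easting, northing) points."""
    distances = [math.hypot(mx - sx, my - sy)
                 for (sx, sy), (mx, my) in zip(surveyed, mapped)]
    return rmse(distances)


# Hypothetical UTM coordinates (m) for three checkerboard targets.
surveyed_xy = [(1000.00, 2000.00), (1050.00, 2010.00), (1100.00, 1990.00)]
mapped_xy = [(1000.03, 2000.04), (1050.00, 2010.00), (1099.94, 1990.08)]

print(f"Horizontal RMSE: {horizontal_rmse(surveyed_xy, mapped_xy):.3f} m")
```

With only four points at LaBranche, an RMSE of this kind would be based on a very small sample and should be read with that limitation in mind.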

2.5. UAS Analysis and Classification

All imagery and lidar data were analyzed in ArcGIS Pro Desktop software (version 3.0.1; Esri, Inc., Redlands, CA, USA) and ENVI® (version 5.7; NV5 Geospatial Inc., Broomfield, CO, USA) and clipped to the study site boundaries shown in Figure 2A,B. To focus the classification analysis on land pixels only, water pixels were masked from the 5- and 10-band reflectance images generated in Section 2.4. This was accomplished using the Normalized Difference Water Index (NDWI) of McFeeters (1996) [40], a normalized ratio of the green and NIR bands that was applied to each reflectance image, NDWI(5 band) and NDWI(10 band), as follows:

$$NDWI_{(5\ band)} = \frac{B2 - B4}{B2 + B4}$$

$$NDWI_{(10\ band)} = \frac{B7 - B4}{B7 + B4}$$

where B2 is the green band in the 5-band reflectance image, B7 is the green band in the 10-band reflectance image, and B4 is the NIR band in both reflectance images. Field survey points and visual image inspection were used to help guide the selection of an index value threshold to mask water pixels. An incremental threshold adjustment was made so that water pixels that appeared to have submerged or emergent vegetation were included, so vegetation at, above, or just below the water surface could be classified. In addition to spectral bands, a DSM and CHM were also prepared for use in the classification analysis. The CHM was created for each site by subtracting the DTM from the DSM, resulting in a digital surface of vegetation height. Layer stacks, or composites, were created using an intersection option to encompass overlap between rasters for each image type (5- and 10-band), plus the DSM and CHM, which were resampled to match the spatial resolution of the reflectance image at each site. This resulted in two composites per site as follows: (1) spectral bands 1–5 + DSM + CHM, and (2) spectral bands 1–10 + DSM + CHM (following spectral band order in Table 2), totaling four composites as classification inputs. This allowed the comparative focus to be on the combination of the different reflectance image types with the lidar-derived surfaces. When used with multispectral bands, such elevation surfaces have shown utility for improving wetland vegetation classification accuracy and for helping discriminate between spectrally similar species [41].
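The masking and compositing steps in this subsection can be sketched for a single pixel. The example computes NDWI as (green − NIR) / (green + NIR), derives canopy height as DSM minus DTM, and appends both elevation layers to the spectral bands to form one feature vector. The study performed these steps on rasters in ArcGIS Pro and ENVI; the function names, the threshold value of 0.0, and the reflectance and elevation values here are hypothetical, since the text does not report the threshold that was chosen.

```python
def ndwi(green: float, nir: float) -> float:
    """Normalized Difference Water Index from green and NIR reflectance."""
    total = green + nir
    return (green - nir) / total if total != 0 else 0.0


def canopy_height(dsm: float, dtm: float) -> float:
    """Canopy height model value: surface elevation minus bare ground."""
    return dsm - dtm


def build_feature_vector(bands, dsm, dtm, green_idx, nir_idx,
                         water_threshold=0.0):
    """Return bands + DSM + CHM for a land pixel, or None for water.

    green_idx / nir_idx are zero-based positions in `bands`
    (B2 -> 1 and B4 -> 3 for the 5-band image; B7 -> 6 for 10-band green).
    water_threshold is an assumed placeholder, not the study's value.
    """
    if ndwi(bands[green_idx], bands[nir_idx]) > water_threshold:
        return None  # masked as open water
    return list(bands) + [dsm, canopy_height(dsm, dtm)]


# Hypothetical 5-band reflectance values in band order 1-5.
marsh_pixel = [0.03, 0.08, 0.05, 0.40, 0.20]
water_pixel = [0.05, 0.06, 0.04, 0.02, 0.03]

marsh_features = build_feature_vector(marsh_pixel, dsm=1.2, dtm=0.4,
                                      green_idx=1, nir_idx=3)
water_features = build_feature_vector(water_pixel, dsm=0.1, dtm=0.1,
                                      green_idx=1, nir_idx=3)
print(marsh_features, water_features)
```

Lowering the threshold masks more pixels as water, while raising it retains pixels with submerged or emergent vegetation, which mirrors the incremental adjustment described above.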

Two pixel-based classification methods were used to classify the composites, maximum likelihood (ML) and random forest (RF), in ENVI software version 5.7. Pixel-based classifiers were selected since they can result in superior classification accuracies for wetland mapping [18,20], while segmentation/OBIA methods require additional user input and parameterization [6]. Although there are many supervised classifiers, ML and RF were selected for this study because they have been demonstrated successfully in published studies [6] and represent contrasting methods. ML has a long-standing history and wide use in remote sensing starting in the late 1970s [42,43], while RF is a relative newcomer in the field of remote sensing [44], with increasing popularity since the 2010s, owing to advances in computational power and growing adoption of machine learning methods. While they both utilize training samples, ML is a probabilistic method that assumes that the statistics for each class in each band are normally distributed and calculates the probability that a given pixel belongs to a particular class type [45], whereas RF is a non-parametric method that creates a set of decision trees from a random subset of training samples and then creates a model to perform the classification [44]. Despite their different approaches and histories, classification accuracies for wetland vegetation vary, though they are generally commensurate, with RF tending towards higher levels of consistency on the upper end of classification accuracies [6]. In this study, RF parameters in ENVI included the following: balanced classes (yes), estimators, or number of trees (100), max features, or number of features to consider (sqrt), and max depth, or maximum depth of the tree (undefined so nodes are expanded until all leaves are pure). Default ML parameters were used, including an undefined threshold probability, meaning that each pixel is assigned to the class with the highest statistical likelihood.
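To make the ML decision rule concrete, the sketch below implements a Gaussian maximum likelihood classifier for two features with a full 2 × 2 covariance per class and no probability threshold, so every pixel is assigned to the most likely class. It is a simplified stand-in for the ENVI implementation, which was applied to 7- and 12-layer composites. Equal class priors are assumed, and the class names, feature choice (NIR reflectance and canopy height), and training values are hypothetical.

```python
import math


def fit_class(samples):
    """Mean vector and 2x2 covariance terms from (x, y) training samples."""
    n = len(samples)
    mx = sum(s[0] for s in samples) / n
    my = sum(s[1] for s in samples) / n
    sxx = sum((s[0] - mx) ** 2 for s in samples) / (n - 1)
    syy = sum((s[1] - my) ** 2 for s in samples) / (n - 1)
    sxy = sum((s[0] - mx) * (s[1] - my) for s in samples) / (n - 1)
    return (mx, my), (sxx, sxy, syy)


def log_likelihood(pixel, mean, cov):
    """Gaussian log-likelihood up to a constant shared by all classes."""
    sxx, sxy, syy = cov
    det = sxx * syy - sxy ** 2
    dx, dy = pixel[0] - mean[0], pixel[1] - mean[1]
    # Mahalanobis distance via the closed-form inverse of a 2x2 matrix.
    maha = (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det
    return -0.5 * (math.log(det) + maha)


def classify(pixel, models):
    """Assign the class with the highest likelihood (no threshold)."""
    return max(models, key=lambda name: log_likelihood(pixel, *models[name]))


# Hypothetical training samples: (NIR reflectance, canopy height in m).
training = {
    "marsh_grass": [(0.40, 0.5), (0.45, 0.7), (0.38, 0.6), (0.42, 0.4)],
    "shrub": [(0.50, 2.0), (0.55, 1.8), (0.48, 2.5), (0.53, 2.2)],
}
models = {name: fit_class(samples) for name, samples in training.items()}

print(classify((0.41, 0.55), models), classify((0.52, 2.10), models))
```

The example also shows why canopy height can help: the two hypothetical classes overlap in NIR reflectance but separate clearly once the height feature is included.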

Individual field points (described in

Section 2.2) and UAS 2 cm RGB imagery were used to train the classifiers, while field plots were reserved for comparison to the classification results to assess their performance. This is because the primary goal of the study was to compare UAS-based classifications to traditional field survey monitoring data. Such a comparison is intended to illustrate the integration of field and remote sensing techniques, especially in the context of better understanding UAS capabilities and limitations to characterize important vegetation metrics used in restoration monitoring. To help augment individual field points, the UAS 2 cm RGB imagery was used to identify training samples, especially to supplement underrepresented or lower abundance vegetation types with limited field points where possible. Such an approach works well to create additional training samples, especially in access-limited wetlands [

16,

21]. RGB-based samples focused on the same characteristic or foundational vegetation types (e.g., representative, restored, or invasive species), while being stratified across study sites and resulting in a combination of random and non-random training samples [

26]. RGB imagery was likewise used to confirm vegetation types and extents of monotypic stands that were noted during field surveys. Regions of interest (ROIs) were delineated in ENVI with the ROI tool as groups of pixels, using the 55 individual points and the 2 cm RGB imagery as a visual reference so that ROIs encompassed the geographic and spectral variability within classes. In addition to vegetation types, bare classes, namely dry (light) sand, wet (dark) sand/mud, and wrack (e.g., dead vegetation typically along the shoreline at Spanish Pass), and a shadow class (primarily accounting for dark pixels in the 5-band imagery collected at Spanish Pass) were also included. Approximately 160 ROIs (462 m²) were delineated across 11 classes at LaBranche, while 203 ROIs (325 m²) were delineated across 15 classes at Spanish Pass (not including bare and shadow). Some species had few training samples because they were low in abundance across the sites.

Table 4 lists the number and area of training samples delineated as ROIs for classified vegetation types (species) using both the individual points and 2 cm RGB imagery at LaBranche and Spanish Pass study sites. The same ROIs were used in the ML and RF classifiers to train the four datasets (e.g., 5- and 10-band/DSM/CHM composites) per study site. A post classification majority filter (3 × 3 kernel size) was used to minimize isolated pixels in the classification results, thus reassigning individually occurring pixels considered “noise”. Summary statistics were calculated for each dataset, totaling the amount of area and percent area per species within each plot. The areal statistics for all four classification results were consolidated and exported to tabular format to enable comparison with the field vegetation plot data.
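The post-classification steps above (a 3 × 3 majority filter followed by per-plot percent area) can be sketched in plain Python. This is an illustration under stated assumptions, not the ENVI routine: a pixel is reassigned only when one class holds a strict majority of its window, edge pixels use only the neighbors that exist, and the small grid of class labels is hypothetical.

```python
from collections import Counter


def majority_filter(grid):
    """Apply a 3 x 3 majority filter to a 2D grid of class labels."""
    rows, cols = len(grid), len(grid[0])
    out = [row[:] for row in grid]
    for r in range(rows):
        for c in range(cols):
            window = [
                grid[i][j]
                for i in range(max(0, r - 1), min(rows, r + 2))
                for j in range(max(0, c - 1), min(cols, c + 2))
            ]
            top_label, top_count = Counter(window).most_common(1)[0]
            # Reassign only on a strict majority; ties keep the original label.
            if top_count > len(window) / 2:
                out[r][c] = top_label
    return out


def percent_area(grid):
    """Percent of pixels per class within one plot."""
    total = sum(len(row) for row in grid)
    counts = Counter(label for row in grid for label in row)
    return {label: 100.0 * n / total for label, n in counts.items()}


# Hypothetical classified plot with one isolated "B" pixel at row 1, column 1.
classified = [
    ["A", "A", "A", "B"],
    ["A", "B", "A", "B"],
    ["A", "A", "A", "B"],
]
filtered = majority_filter(classified)
cover = percent_area(filtered)
```

In this example the isolated pixel is absorbed into the surrounding class while the contiguous column of "B" pixels is preserved, so the plot-level cover shifts only slightly.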

2.6. Field and Remote Sensing Vegetation Comparison Methods

Several analyses were conducted to compare the field plot monitoring data to the remote sensing classification results. Species in common between the remote sensing classifications and field surveys were compared in classified plots. Some species identified in field plots could not be identified in the remote sensing classifications, such as low-abundance species (i.e., too infrequent in number or spatial coverage to be visible in imagery), understory species (e.g., occluded by overstory vegetation), and mixed species (e.g., one species growing in or on another). Conversely, some species were identified at the individual points (and subsequently in the 2 cm RGB imagery) but were not encountered in field plots. For example, at LaBranche, these included cutgrass (Zizaniopsis miliacea), common reed (Phragmites australis), and giant bulrush (Schoenoplectus californicus), while at Spanish Pass they included seaoats (Uniola paniculata), Chloris sp., saltmeadow cordgrass (Spartina patens), and smooth cordgrass (Spartina alterniflora). Thus, species not in common between the two methods were not included in statistical comparisons.

LaBranche and Spanish Pass sites were analyzed separately. As a general comparison, taxa richness, or the count of uniquely identified species or ground cover types (such as bare), was considered first. Here, classification method (ML and RF), data source (band number of the primary UAS image type used in the composite, 5 or 10), and plot (identifier number) were the model predictor variables for the response, richness. Poisson generalized linear models were fitted for each site, and least significant difference pairwise comparisons were completed for predictor variables if significant. In this context, non-significant results indicate high similarity of models per respective factor.

To assess overall vegetation community assemblage (species and percent coverage) similarities between field and classification results, Bray–Curtis similarity scores were calculated at each plot for each method-data source combination, or model (Random Forest-10 band [RF-10], Maximum Likelihood-10 band [ML-10], Random Forest-5 band [RF-5], and Maximum Likelihood-5 band [ML-5]). The closer a score is to one, the more similar the communities (species and coverages), that is, the more closely a particular model result matched the field data. As in the richness analysis, the predictive factors (method, data source, and plot) were analyzed inferentially via gamma log-link generalized linear models for their influence on the response variable, Bray–Curtis similarity score. Least significant difference pairwise comparisons were completed for predictor variables if significant. Additionally, vegetation variables derived from field measurements (maximum and mean canopy height, canopy-forming coverage, and taxa richness) were compared to model similarity scores with linear and inverse regression, because these factors may play a role in any observed model differences.
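For reference, the Bray–Curtis similarity between a field assemblage and a classified assemblage can be computed as one minus the summed absolute cover differences divided by the summed covers. The sketch below assumes this standard form; the function name and the percent cover values are hypothetical and are not taken from the study data.

```python
def bray_curtis_similarity(field_cover, model_cover):
    """Return 1 - sum|a - b| / sum(a + b) over all species in either assemblage."""
    species = set(field_cover) | set(model_cover)
    diff = sum(
        abs(field_cover.get(s, 0.0) - model_cover.get(s, 0.0)) for s in species
    )
    total = sum(
        field_cover.get(s, 0.0) + model_cover.get(s, 0.0) for s in species
    )
    if total == 0:
        raise ValueError("both assemblages are empty")
    return 1.0 - diff / total


# Hypothetical percent cover for one plot.
field = {"Spartina patens": 60.0, "Panicum repens": 30.0, "bare": 10.0}
model = {"Spartina patens": 50.0, "Panicum repens": 30.0, "bare": 20.0}
score = bray_curtis_similarity(field, model)  # 1 - 20 / 200
```

Identical assemblages score one, and assemblages with no species in common score zero, so a species missed entirely by the classifier lowers the score by its full field cover.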

Lastly, precision, recall, and F1 scores were developed to ascertain species presence identification performance between field data and model results within the plots [

46]. For each plot, confusion, or error, matrices were developed for each model combination (RF-10, ML-10, RF-5, ML-5). True positives, false positives, true negatives, and false negatives were counted for each field-model combination for classified species. For remote sensing classification results, species were evaluated if their coverage was greater than 1%. While this method assessed species presence, coverage differences per species, data source, and plot were also analyzed via generalized linear models with a normal distribution and identity link to illustrate the intensity of coverage similarity between model results and field data at a species level (i.e., if field and model results were identical, the resulting ‘coverage difference’ equaled zero). Equations for the performance assessment were as follows [

46]:
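In their standard form, these metrics are precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 = 2 × precision × recall / (precision + recall), where TP, FP, and FN are the counts of true positives, false positives, and false negatives. The Python sketch below applies these definitions to species presence. It rests on several assumptions that are not confirmed by the text: counts are pooled across plots for each species, a classified species counts as present when its cover exceeds the 1% threshold, and an undefined score is returned as `None`. The plot names and cover values are hypothetical.

```python
def presence_scores(field_present, model_cover, species, threshold=1.0):
    """Precision, recall, and F1 for one species, pooled across plots.

    field_present: {plot: set of species recorded in the field}
    model_cover:   {plot: {species: percent cover from the classification}}
    """
    tp = fp = fn = tn = 0
    for plot, present in field_present.items():
        predicted = model_cover.get(plot, {}).get(species, 0.0) > threshold
        observed = species in present
        if predicted and observed:
            tp += 1
        elif predicted:
            fp += 1
        elif observed:
            fn += 1
        else:
            tn += 1
    # Undefined ratios (zero denominators) are reported as None.
    precision = tp / (tp + fp) if (tp + fp) else None
    recall = tp / (tp + fn) if (tp + fn) else None
    if precision is None or recall is None or (precision + recall) == 0:
        f1 = None
    else:
        f1 = 2 * precision * recall / (precision + recall)
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "precision": precision, "recall": recall, "f1": f1}


# Hypothetical field records and classified cover for four plots.
field_present = {"P1": {"X"}, "P2": {"X"}, "P3": set(), "P4": set()}
model_cover = {"P1": {"X": 5.0}, "P2": {"X": 0.5}, "P3": {"X": 3.0}, "P4": {}}
result = presence_scores(field_present, model_cover, "X")
```

Here plot P2 counts as a false negative because the classified cover (0.5%) falls below the threshold even though the species was mapped, which shows how the threshold choice affects recall.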

4. Discussion

This study addresses two research topics: (1) assessing the utility of UAS multispectral imagery and lidar elevation data to identify restored wetland vegetation, and (2) integrating traditional plot-level surveys with remote sensing-based approaches by examining how well UAS-based wetland classification results compare to traditional monitoring data used in restoration monitoring. Addressing both topics works to collectively understand UAS technology for assessing restoration performance related to vegetation targets, while maximizing field and remote sensing methods and illustrating how well classification products align with essential monitoring data. Related research tends to focus purely on evaluating the utility of remote sensing technology to evaluate wetland ecosystems [

6] or characterize vegetation type and condition [

3], offering an account of classification accuracy expectations using typical ground validation data collected for remote sensing analysis. Our study expounds upon that understanding by evaluating the representativeness of species-level wetland classifications compared to traditional monitoring data, and thus, their utility in assessing restoration performance.

In comparing plot-level richness, community assemblage, species presence identification, and percent cover, this study evaluated the effect of model factors (predictors): method (ML vs. RF), data source (5- and 10-band/DSM/CHM composites), and plot at two coastal restoration sites in southeast Louisiana, LaBranche and Spanish Pass. In general, for taxa richness, predictors did not influence how well model results compared to field monitoring data except for method at Spanish Pass, where ML performed relatively better than RF. For community assemblage, similarity scores were higher at LaBranche than at Spanish Pass, with plot being a factor at both sites and, once again, method affecting comparisons at Spanish Pass. Species presence identification performance was relatively better at LaBranche, and ML outperformed RF at Spanish Pass, depending on the species. For percent cover, plot was a factor at both sites, though model performance was species dependent. Generally, classification underestimations were more common than overestimations at both sites, but percent cover differences were smaller at LaBranche than at Spanish Pass. Data source was not a factor in any of the comparisons, indicating that the number of spectral bands (5- versus 10-band) did not significantly affect results in this study.

Section 4.1 discusses the effect of model factors in more detail.

4.1. Effect of Model Factors

4.1.1. Classification Method

Some studies show that RF has higher overall classification accuracies for wetland vegetation compared to ML, depending on individual species or habitat type [

6]; however, in this study, RF sometimes underperformed compared to ML. Machine learning algorithms like RF often require more training samples than traditional supervised classifiers like ML [

47]. If individual decision trees are grown deep, the RF model can overfit the training data rather than generalize to the dataset as a whole. Thus, classification errors may occur when the training data are too few, limited, or do not adequately represent the whole dataset, and the classifier often requires specific, iterative tuning to work properly [

6,

48,

49]. In this study, training samples may have been too limited for some species, especially at Spanish Pass, where some lower abundance species, such as herb of grace (

Bacopa monnieri), were attempted in the classification, but only had five or six samples (

Table 4). As more studies attempt to evaluate the utility of high spatial resolution data to increase classification discrimination capabilities, reliance on comprehensive training data will become even more critical. Thus, taking advantage of routinely collected field monitoring data will likewise become more important to capitalize on machine learning methods that require expansive training data. While our study only utilized traditional plot-level monitoring data for the classification performance evaluation, and not in training, traditional field data and methods could be modified for use in both tasks [

50]. Regardless, the findings in this study were consistent with others that experienced reliable classification performance, especially with ML, for detailed wetland classification [

6,

18,

20].

At LaBranche, both methods performed comparably for taxa richness estimation and overall community assemblage similarity, suggesting that vegetation types were spectrally and structurally distinct enough to sufficiently characterize both metrics. However, at Spanish Pass, ML demonstrated better performance across multiple metrics, achieving significantly higher Bray–Curtis similarity scores (mean = 0.52) compared to RF (mean = 0.33), with RF-5 performing particularly poorly (mean = 0.28). As stated above, this performance differential may be due to RF experiencing overfitting as a result of limited training samples for some species, especially at Spanish Pass where classification detail was higher and more complex than at LaBranche (e.g., more species were included in the classification, including woody types), and the 5-band imagery had image quality issues. In cases where data are more normally distributed and some classes have a relatively small number of training samples, ML may perform better than RF, which requires a larger training dataset to establish an ensemble of decision trees. Yet, this can be difficult to acquire in wetlands with site accessibility constraints resulting in infrequent observations of lower abundance species.

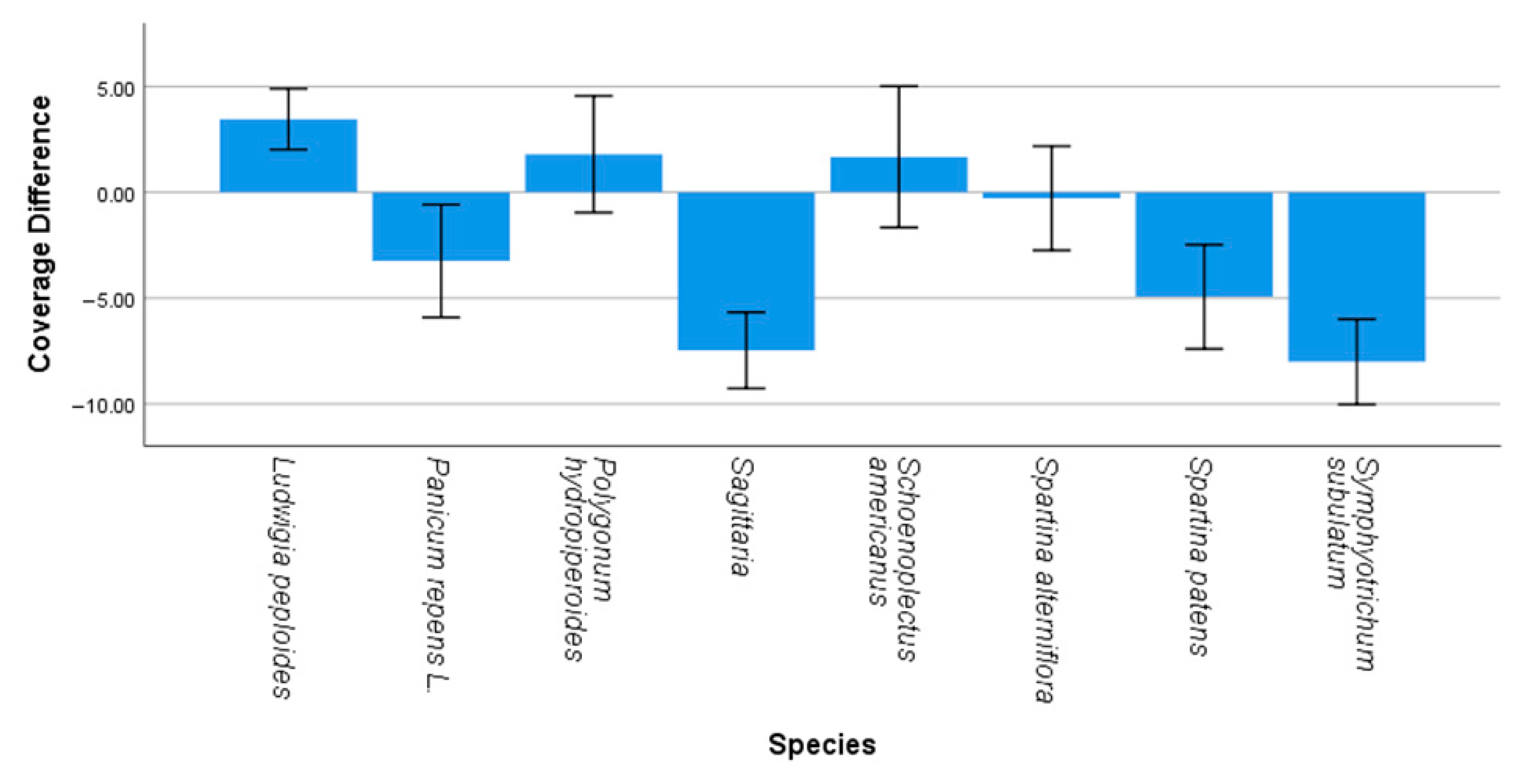

The general underestimation of species coverage by both methods at both sites suggests limitations (

Figure 11 and

Figure 12). That method did not significantly affect coverage prediction performance may suggest that the challenge lies in translating UAS data to coverage estimates rather than in the choice of classifier. This finding has important implications for monitoring programs, as both methods faced similar constraints when quantifying vegetation coverages from UAS data. Coverage underestimation was also seen in Tay et al. (2018) [

51], who used UAS imagery to classify common ragwort (

Jacobaea vulgaris) and found UAS-based abundances underestimated compared to field data. They attributed this to a difference in what was measured: field-based methods assessed the whole rosette, while UAS-derived methods were aerial estimates of the flower heads. Thus, site-specific model calibration, training, and method selection may be necessary, and hybrid approaches combining algorithms or incorporating ancillary environmental data could improve classification reliability for coverage estimation across diverse coastal restoration sites.

4.1.2. Data Source

Neither site showed consistent significant differences between 5-band and 10-band data sources for taxa richness, community assemblage similarity, species presence identification, or coverage prediction, suggesting that the additional spectral information provided by 10-band data may not translate to improved classification performance for these vegetation types. This finding contradicts expectations that higher spectral resolution or more spectral bands enhance species discrimination, particularly for spectrally similar species [

6]. The lack of clear advantages for 10-band data may reflect confounding factors for discrimination. First, the increased dimensionality of 10-band data may introduce noise or redundancy that does not improve discrimination among the species present at these sites. Second, the heterogeneous and often mixed nature of coastal vegetation communities may mask the benefits of additional spectral bands, as pure or distinct spectral signatures may be diluted in complex vegetation assemblages. Such spectral distinctiveness was reduced when species were intermixed in Jochems et al. (2021) [

18], leading to potential classification confusion. While Lane et al. (2014) [

52] found that added satellite multispectral bands led to a modest increase in overall classification accuracy of wetland vegetation (79% with 4 bands versus 82.9% with 8 bands), most UAS-based studies focus on comparison of RGB versus RGB plus NIR combinations to show the benefit of four spectral bands versus three [

17,

53]. In our study, both sensors included an NIR band and at least one red edge band, both known to enhance wetland vegetation discrimination [

6]. Although the 10-band sensor included additional bands (e.g., an additional red edge band and a coastal blue band), these did not appear to improve classification performance relative to traditional plot monitoring data in this study. Additional research is needed to identify the optimal UAS spectral bands and spectral resolution for discriminating specific wetland species.

The 5-band imagery at Spanish Pass had illumination issues that caused problems with the vegetation classification. These arose from flight timing, which produced dark patches from cloud and tree shadows. These illumination issues seemed especially apparent in the RF-5 model, which had the lowest mean similarity score (0.28) and lower similarity scores for most plots than the RF-10 model. While other models performed more uniformly, the poor performance of RF-5 at Spanish Pass may suggest that the interaction between classification method and 5-band imagery was more influential than either factor alone. Regardless, poor UAS image quality has negative impacts on vegetation characterization [

12,

51], emphasizing the importance of optimized flight planning and UAS survey designs in operational monitoring programs.

4.1.3. Plot

The performance differences between LaBranche and Spanish Pass highlighted the influence of local environmental conditions and vegetation assemblage complexity on model results compared to traditional field plot data even within individual restoration sites. The significant correlations between model similarity scores and field-based vegetation variables (mean and maximum height, canopy-forming coverage, and taxa richness) at both sites (r² = 0.52, 0.29, and 0.67, respectively) demonstrated that vegetation assemblage complexity (e.g., species composition diversity and canopy structural characteristics) was an influential factor in classification performance (

Figure 9 and

Figure 10).

Model performance across all combinations at LaBranche was relatively better across all metrics (e.g., taxa richness detection, community similarity scores > 60%, and coverage differences < 10%) than at Spanish Pass. This likely reflects spatially and spectrally distinctive vegetation, where dominant species like torpedograss (

Panicum repens) and saltmeadow cordgrass (

Spartina patens) created spatially coherent patches with clear spectral characteristics for select plots at LaBranche. At this site, plots with relatively higher taxa richness (e.g., PO17-02 and LB9) had lower similarity scores than more homogeneous plots (e.g., LB1 and LB2); that is, classification performance relative to field monitoring data decreased across model combinations as plots became more mixed. This trend is consistent with reduced classification accuracies for class types prone to increased intermixing of species or species with more heterogeneous features, in which increased spectral variability can lead to classification confusion [

6,

18].

Spanish Pass presented greater classification challenges, with comparatively lower community assemblage similarity scores (<60%) and larger coverage estimation errors (up to 15%), reflecting greater canopy structural heterogeneity than LaBranche. The presence of taller, canopy-forming vegetation occluded understory vegetation, representing a fundamental limitation of nadir-viewing imagery in structurally complex coastal systems [

54]. As a result, similarity scores were relatively lower in many plots (e.g., SP3 and SP8) than at LaBranche. Increased classification confusion likely occurred in plots characterized by greater structural complexity (e.g., multilayered canopy) that diminished model similarity scores. This finding suggests that restoration sites transitioning toward woody vegetation communities may require specific timing of UAS surveys to coincide with phenological stages offering maximal detection of understory or obscured species [

55] as well as integrated monitoring protocols to maintain thorough vegetation surveys, including sub-canopy strata.

4.2. Field Versus Remote Sensing Techniques: Challenges and Solutions

Traditional field monitoring data (e.g., collected via pre-existing and established survey methods used in routine monitoring programs and protocols) and remote sensing data are not like-for-like. Their differences create incompatibilities that hinder comparison and integration. Although the approach in this study reflects the structure and constraints of actual restoration monitoring data, it carries statistical limitations related to cross-scale integration, classifier behavior, and the structure of the ecological datasets used. The following sections discuss some of the challenges encountered in this study, along with potential solutions.

4.2.1. Species Location

One of the major differences between traditional monitoring and remote sensing methods is that traditional field-based surveys do not include the specific location of various species observed within a plot. This is an obstacle in their comparison, especially since most accuracy assessments are reliant on the geographic location of known species (e.g., ground truth). In our analysis of remote sensing and field data, plot-level assemblages and species presence identification were assessed to understand UAS capabilities for identifying dominant or characteristic plant types, specifically compared to traditional plot monitoring surveys. However, we were unable to evaluate species-level misclassifications because field plot data did not have the location of species to support such an analysis. For example, if the classifier identified a pixel as poisonbean (

Sesbania drummondii) and it was common reed (

Phragmites australis), the comparative approach demonstrated in this study was not able to identify such species-level confusion. While typical plot surveys do not include species locations, field methods could be amended to include individual point locations or geolocated sub-plots or quadrats of dominant species in the overstory (or species targeted in a remote sensing classification) throughout a plot to better evaluate misclassifications. The amount, size, and type of locations within a plot (e.g., individual points, sub-plots or quadrats) need to be commensurate with the spatial resolution of the imagery being evaluated for appropriate comparison [

25].

4.2.2. Species Specificity

Another major difference is that the species types that can be identified from remote sensing and field-based methods are not necessarily the same. This is because remote sensing cannot identify all species encountered in a field plot. Such species can include low-abundance, understory, mixed, and ones occurring in small patch sizes, with fine-scale features and interspersed with other cover types (e.g., leaf litter, floating leaves, etc.) that are not readily visible or are obscured in imagery [

11,

18]. Typically, field surveys attempt to record all species observed in plots within a designated project site, while remote sensing methods attempt to classify dominant, characteristic, or target species over a project site. This difference can present yet another potential obstacle, affecting their comparison or integration. Moreover, in traditional field methods, ocular estimates of species coverage are recorded in different canopy strata. As such, the same species can be represented multiple times in a single plot. In this study, coverages were summed across strata to get a total cover percentage for a species in a plot (

Section 4.2.3). Furthermore, there were some species with few training samples since they had relatively lower abundances or were obscured by taller canopy-forming vegetation (e.g., herb of grace [

Bacopa monnieri],

Chloris sp., saltgrass [

Distichlis spicata] etc.), causing potential classification errors and confusion with other species. Conversely, there were some species that were identified from the individual field points and UAS 2 cm RGB imagery that were not encountered in field plots (at LaBranche, these included cutgrass [

Zizaniopsis miliacea], common reed [

Phragmites australis], and giant bulrush [

Schoenoplectus californicus], while at Spanish Pass they included seaoats [

Uniola paniculata],

Chloris sp., saltmeadow cordgrass [

Spartina patens], and smooth cordgrass [

Spartina alterniflora]), which could not be included in statistical comparisons since they were not recorded in the field plots. Thus, the performance of those classified species could not be assessed. Although plot-based surveys are considered detailed and comprehensive in nature, they can sometimes miss certain species or overrepresent others across a project site simply due to their spatial distribution. The number and distribution of plots used in the comparative analysis in this study were somewhat limited owing to site access limitations and time and resource constraints. Additional plots may have reduced or eliminated the set of species that could not be compared (e.g., species in the remote sensing classifications but not in field data) and would likewise have strengthened statistical analyses, ultimately improving understanding of the suitability of UAS-based wetland classifications for use in restoration monitoring. Field survey designs should strive to encompass the range of targeted habitat across a project site to optimize the number and type of species encountered, while likewise accommodating their use in remote sensing applications [

25]. To that end, coupled monitoring strategies using both traditional field monitoring and remote sensing methods can help reduce occurrences of missed species and bolster reliability surrounding estimations via multiple lines of evidence.

When possible, it is helpful to develop a target list of species ahead of field surveys and remote sensing analyses to address or mitigate differences by attempting to either align targeted species or include additional field survey techniques specific to the targeted species [

6]. However, this is not always possible, and steps must then be taken post hoc to make the datasets comparable. Our study fell into the latter scenario, and comparison of species was limited to those in common between the field survey data and those that could be classified using the remotely sensed data. This made sense from a comparative standpoint but left some species out of the assessment altogether. In cases where time and resources allow, early field reconnaissance, communication with local practitioners, and reviewing previous monitoring reports can provide guidance on selecting target species. This is valuable not only for comparing or integrating field and remote sensing data and methods, but also to ensure that the target species are relevant to restoration monitoring needs. Even so, some species are not possible or are too challenging to identify via remote sensing, and tradeoffs must be weighed relative to restoration monitoring needs to determine a suitable identification method [

11].

At LaBranche, several species demonstrated relatively higher presence identification scores across multiple models, including smartweed mix (

Polygonum hydropiperoides), bulltongue arrowhead (

Sagittaria sp.), saltmeadow cordgrass (

Spartina patens), and torpedograss (

Panicum repens), with F1 scores consistently above 0.80 (

Table 7). The presence of these species could be reliably identified relative to traditional monitoring data, suggesting spectral features or growth forms conducive to identification by the various models. Conversely, eastern annual saltmarsh aster (

Symphyotrichum subulatum) showed no detection with RF-10 but achieved variable performance (F1 = 0.33–0.75) with other models. In addition, the performance of common threesquare (

Schoenoplectus americanus) illustrated a critical pattern observed across all models: perfect recall (1.00) coupled with low precision (0.17–0.33), indicating that while the models did not miss any occurrences of the species, they also generated false positives, classifying other vegetation types as this species. Lastly, smooth cordgrass (

Spartina alterniflora) had consistently poor to moderate F1 scores (0.33–0.50) across all models. This is consistent with other studies that found classification confusion tendencies in sites with multiple wetland grass species [

17].

At Spanish Pass, seaside goldenrod (

Solidago sempervirens) demonstrated consistently high identification scores across three models (F1 = 0.92) except for RF-5 (F1 = 0.47), while common reed (

Phragmites australis) had moderate to high scores across three models (F1 = 0.56–0.71) except for ML-5 (F1 = 0.29;

Table 7). Other species had variable performance scores, including bushy bluestem (

Andropogon glomeratus, F1 = 0.33–0.86), or consistent performance scores, such as wax myrtle (

Morella cerifera, F1 = 0.70–0.83), black willow (

Salix nigra, F1 = 0.67), and poisonbean (

Sesbania drummondii, F1 = 0.70–0.78). In contrast, the frequent occurrence of NA values (

Table 7), particularly for RF models, illustrated presence detection failure for those species. NA values occurred for eastern baccharis (

Baccharis halimifolia), herb of grace (

Bacopa monnieri), saltgrass (

Distichlis spicata), common rush (

Juncus spp.), and bigpod (

Sesbania herbacea), while some of these same species showed improved detection with ML models (e.g., eastern baccharis [

Baccharis halimifolia]). Except for eastern baccharis (

Baccharis halimifolia), these species all had relatively few training samples, and as mentioned previously, this can be problematic, resulting in misclassifications. While it may not always be feasible to add more training samples (such as through field methods), especially for low-abundance species in access-limited sites, one approach that can help address this challenge is to combine or reduce the number of species targeted in the classification. Durgan et al. (2020) [

16] reduced their classification scheme from 17 targeted wetland species (some of which experienced classification confusion) to 10 major ones, which resulted in improved classification accuracy. Alternatively, some studies opt to group species in their classifications, especially spectrally similar ones or species that tend to be mixed, since additional classes (species) can lead to classification confusion between species [

19]. Though merging or reducing classes can be helpful from a remote sensing perspective, it may not be ideal for monitoring, depending on the species being targeted. Other approaches, such as soft classification techniques using fuzzy logic (e.g., fractional class membership), offer an alternative to binary classification approaches and may help to manage intra-class heterogeneity and mixed pixel situations, potentially reducing misclassifications [

54]. Regardless, the observed species-specific responses suggest that optimal remote sensing approaches may need to be tailored not only to site conditions but to individual target species.

4.2.3. Percent Cover

Another noteworthy difference between field and remote sensing data in this study is the mismatch in how percent cover is quantified. In traditional field monitoring surveys, percent cover does not have to equal 100% in a plot (e.g., it can be more or less), whereas in remote sensing classification results, cover estimates will total 100% in a plot (assuming all pixels are classified). In the field data used in this study, percent cover was summed for species occurring in multiple strata in a given plot. While the effect of this was not assessed in this study, it is important to point out that this was limited to taller species (e.g., greater than or within mid-canopy). Thus, this approach of summing coverage could have resulted in overestimated coverages used in comparisons, ultimately making model performances appear worse for some species. This is another possible issue in which field methods could be modified to accommodate remote sensing. For example, a secondary field cover estimation of dominant overstory species or species targeted for remote sensing classification could be made to total 100% in a plot. A primary and secondary cover estimation would allow for a more direct comparison of percent cover between the two methods, while still including a traditional cover estimation of all species in a plot.
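The cover mismatch can be made concrete with a short Python sketch. It sums hypothetical field cover estimates across strata, so the plot total can exceed 100%, and compares them with classified cover that totals 100%. The sign convention (model minus field, with negative values indicating underestimation) and all values are assumptions made for illustration.

```python
def total_field_cover(strata_records):
    """Sum ocular cover estimates per species across canopy strata."""
    totals = {}
    for species, _stratum, cover in strata_records:
        totals[species] = totals.get(species, 0.0) + cover
    return totals


def coverage_difference(model_cover, field_cover):
    """Model minus field cover for species present in both datasets."""
    shared = set(model_cover) & set(field_cover)
    return {s: model_cover[s] - field_cover[s] for s in shared}


# Hypothetical plot: one species recorded in two strata.
records = [
    ("Salix nigra", "overstory", 40.0),
    ("Salix nigra", "midstory", 25.0),
    ("Solidago sempervirens", "understory", 50.0),
]
field = total_field_cover(records)  # plot total is 115%
model = {"Salix nigra": 70.0, "Solidago sempervirens": 30.0}  # plot total is 100%
diff = coverage_difference(model, field)
```

In this example the understory species appears underestimated by 20 percentage points even if every visible pixel were classified correctly, because the field total exceeds what a single nadir view can represent.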

The tendency toward coverage underestimation observed at both sites and across models revealed limitations in translating remote sensing data to quantitative vegetation assessments. The prevalence of underestimation (five of eight species at LaBranche, eight of 11 species at Spanish Pass) suggested consistent challenges in detection. Low-coverage occurrences and mixed-pixel scenarios, where target species contributed to but did not dominate spectral signatures, are prone to confusion and may have led to reduced classification performance. Species with the highest coverage underestimations likely suffered from such confusion. For example, at LaBranche, even though bulltongue arrowhead (Sagittaria sp.) had high F1 scores for species presence detection, its coverage was underestimated in comparison to field data (though the difference was still considered low at ~7%). This may have been because it occurred in plots with relatively higher taxa richness (e.g., LB4, LB9, and PO17-02), in which increased spectral diversity and variability between and within species tend to reduce classification reliability due to confusion [6,16,17,18,20,21]. Such confusion could have occurred with floating primrose-willow (Ludwigia peploides), another emergent aquatic species that occurred in many of the same plots yet had overestimated coverage. Similarly, at Spanish Pass, seaside goldenrod (Solidago sempervirens) showed high F1 scores but also had underestimated coverage. This may have happened for several reasons: (1) the species tends to have fine-scale physical features, (2) it was often interspersed with bare sand along the central ridge section of the study area, and (3) it also occurred in plots dominated by canopy-forming vegetation, all of which could have contributed to reduced coverage estimations. Additionally, at Spanish Pass, herb of grace (Bacopa monnieri) not only had fewer training samples but also tended to occur in plots with relatively higher coverage of canopy-forming vegetation. This co-occurrence with taller vegetation affected classification performance, as illustrated by lower F1 scores and coverage underestimation across models. This is consistent with studies that examine challenges with using remote sensing to classify understory vegetation [55,56]. Beyond canopy occlusion, other factors may also contribute to vegetation cover underestimation for some species or in some project areas: spectral mixing with water, bare ground or mud, and senescent material; bidirectional reflectance behavior related to vegetation structure; and adjacency effects impacting spectral signatures at fine spatial scales. The trend toward coverage underestimation has implications for restoration monitoring, as early successional or establishing populations may be under-detected, potentially leading to misrepresentation of restoration progress or species colonization rates.

4.2.4. Other Remote Sensing Considerations

Other classification iterations could be evaluated to improve or build upon this study. These include adding other layers to the composite, such as vegetation indices (e.g., NDVI). Such indices were not evaluated in this study; however, they have been shown to help improve vegetation classification accuracy [57]. We elected not to include them in this study to keep the number and type of classification iterations manageable in the performance evaluation. This allowed the focus to remain on evaluating the different imagery (5- and 10-band) and classifiers (ML and RF), while, more importantly, assessing the utility of comparing classification results to traditional field survey monitoring data. Additionally, other classifiers or classification methods, such as SVM or OBIA, respectively, have shown utility in classifying wetland vegetation [6,58]; however, they were not considered in this study. Again, this was to keep the number of iterations manageable, and because published studies report somewhat conflicting outcomes in terms of classification accuracy between methods. Some studies show improved classification accuracy using OBIA, while others report superior performance with pixel-based methods. A future study could consider adding vegetation indices to the composite as well as evaluating other classification methods, such as OBIA, and examine those as factors for predicting taxa richness, species identification, and community assemblage.
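As an illustration of what adding a vegetation index layer to the composite would involve, the sketch below computes NDVI with its standard definition, (NIR − Red)/(NIR + Red), and appends it to a pixel's band values. This was not done in this study; the band order, index positions, reflectance values, and zero-denominator handling are all hypothetical assumptions.

```python
def ndvi(nir, red):
    """Normalized difference vegetation index: (NIR - Red) / (NIR + Red)."""
    denom = nir + red
    if denom == 0:
        # Assumption: return 0.0 for pixels with no signal in either band.
        return 0.0
    return (nir - red) / denom


def add_ndvi_band(pixel, nir_index, red_index):
    """Return a copy of the pixel's band values with NDVI appended as an extra layer."""
    return list(pixel) + [ndvi(pixel[nir_index], pixel[red_index])]


# Hypothetical 5-band reflectance values; the band order is assumed for illustration.
pixel = [0.05, 0.08, 0.10, 0.20, 0.50]
print(add_ndvi_band(pixel, nir_index=4, red_index=2))
```

The resulting six-value vector could then be passed to a classifier in place of the original five bands, which is the kind of additional classification iteration a future study could evaluate.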

5. Conclusions

Few remote sensing studies attempt to address technical issues surrounding integration with traditional plot-based survey data collected for restoration monitoring because of the inherent differences in how data are collected, quantified, analyzed, assessed, summarized, and reported. Chasmer et al. (2020) [6] is one example that makes theoretical recommendations for how to collect traditional-style field data specifically to validate remote sensing classifications of wetland vegetation, primarily using transect-based designs. The field data collection recommendations contain elements that are present in traditional monitoring surveys (e.g., wetland species, structural and composition characteristics), and thus could be used to support restoration monitoring; however, the authors stop short of providing the critical link with how to compare those data to remote sensing products, such as to assess remote sensing classification performance or agreement. Additionally, Lawley et al. (2016) [3] mention the difficulty of integrating traditional site-based data in remote sensing applications for wetlands, calling for more work to improve their integration. Similarly, Reinke and Jones (2006) [25] attempt to address specific integration issues by describing plot-size and image sample mismatches, number of reference points, and temporal mismatches, among others. Among applied studies, Shuman and Ambrose (2003) [5] is an early example attempting to address the question of integration for determining overall wetland vegetation cover by comparing airborne image-based classification with two different traditional field methods (quadrat vs. line-intercept), citing challenges with obtaining sufficient field samples (especially in patchy habitats), yet determining that cover was comparable across methods. DiGiacomo et al. (2022) [12] is a more recent study that compared percent cover values from fixed long-term monitoring plots to those derived from UAS imagery with mixed results, while Thomsen et al. (2022) [13] also utilized long-term monitoring transects (quadrats) to examine trends in overall percent cover via UAS imagery. Lastly, Chabot and Bird (2013) [50] utilized traditional plot data to classify and validate UAS-based results, making statistical comparisons to assess percent cover agreement between field and UAS data. Agreement between methods was good for basic wetland types (water, cattail (Typha), and a mixed other class), as opposed to more detailed classes that could not be distinguished in the UAS imagery.

Traditional field monitoring and remote sensing data are complementary [29], and future work should continue to address their respective shortcomings while exploiting their individual strengths. The aim is to adapt or modify traditional field methods to accommodate their use in remote sensing applications, further expanding their capacity to inform restoration monitoring. Additionally, statistical methods and metrics typically used in biological field-based assessments in monitoring reports could be employed and adapted to strengthen remote sensing analyses and outputs, bolstering their relevance in restoration science. Moreover, field data are commonly reported as lacking in many published remote sensing studies, and thus, routinely collected field monitoring data could help address that shortage. A hybridization, integration, and adaptation of methods across geography and ecology disciplines could not only help bridge methodological divides or gaps but also increase the amount of high-quality monitoring data to better support restoration decision-making. In turn, that information could be used to help optimize field- and remote sensing-based data collection efforts, increasing efficiency by better focusing resources [29]. This study attempts to move in this direction by shedding light on the capabilities and limitations of UAS technology for wetland vegetation classification and how those results relate to traditional plot survey data, providing a foundational step toward more formal integration. By directly comparing UAS-derived classifications with plot-based metrics used in restoration monitoring, this study provides an evidence-based approach needed to determine when, and under what conditions, UAS products are aligned with the traditional field data fundamental to assessing restoration performance.