1. Introduction

Synthetic Aperture Radar (SAR) systematic geocorrected products are typically geocoded to a map projection without ground control points (GCPs), relying on the range-Doppler (RD) signal model [1,2,3,4]. However, due to cumulative errors from aircraft positioning/attitude determination, payload time calibration, radio wave propagation delays, and local average elevation inaccuracies, even rigorous geolocation models may result in SAR images with non-negligible systematic geolocation errors, failing to meet the demands of high-precision applications [4,5]. Meter-level precise geolocation, a critical geometric correction step, traditionally requires a rigorous geometric model calibrated with user-collected GCPs [6]. Yet, GCP acquisition is often arduous in remote regions, featureless terrains (e.g., glaciers, deserts), or restricted areas [5,7], where reliable GCPs may be inaccessible or cost-prohibitive. Moreover, SAR-specific radiometric and geometric effects, such as speckle noise, foreshortening, layover, and shadow, render GCP identification and collection far more challenging than in optical imagery. Thus, developing GCP-free precise geolocation methods for SAR images remains an urgent need [5,7,8].

Recent efforts have explored stereo localization via multi-view SAR image fusion [9]. Chen and Dowman [10] proposed a spaceborne SAR 3D intersection algorithm based on weighted least-squares. Luo et al. [11] achieved robust multi-view spaceborne SAR 3D positioning using the range-Doppler model. Yin et al. [12] developed a two-stage stereo positioning method for multi-view spaceborne SAR, involving initial and secondary positioning on a normalized RD model to enhance accuracy and stability. However, these methods cannot eliminate positioning offsets induced by SAR system calibration parameter errors.

To address such errors, researchers have investigated high-precision GCP-free geolocation by fusing multi-pass, multi-view SAR data of the same area [13,14]. In the absence of GCPs, calibration parameters can be corrected using geometric relationships between overlapping SAR images under specific observation configurations [8,15]. For instance, a self-calibration model with symmetric geometric constraints [16] enables systematic error estimation but requires two images from different orbits with identical incident angles. Zhang et al. [17] proposed a self-calibration method using at least three overlapping images, leveraging spatial intersection residuals of conjugate points to detect timing offsets. For dual-view airborne SAR, Zhang et al. [18] estimated self-calibration parameters via a simple affine transformation model on ground-range images, improving GCP-free geolocation accuracy.

Key geometric calibration parameters for SAR include range-direction fast time and azimuth-direction slow time, which originate from systematic errors (e.g., instrument internal delays, GNSS-radar time synchronization errors). These parameters are generally assumed stable when the same radar acquires images from different perspectives [16,17,18], forming the basis for geometric self-calibration via multi-view data fusion.

However, for three or more multi-view SAR images, even from the same system, estimates of 2D calibration parameters (azimuth and range) derived from different dual-view combinations often exhibit large fluctuations. In some cases, individual images may show significant positioning offsets (see experimental results), qualifying as outliers. This arises because non-ideal factors causing systematic positioning errors are complex and diverse: minor error terms, such as platform positioning errors, antenna phase center offsets, atmospheric delays, and ground elevation projection errors, exhibit randomness across different viewing angles [19]. Consequently, effective outlier detection and removal are critical to avoid compromising self-calibration.

Notably, the stability of self-calibration and the handling of ill-conditioned problems in multi-view scenarios have attracted research attention. For spaceborne SAR, Yin et al. [20] proposed a sensitive geometric self-calibration method based on the RD model, utilizing the determinant and an accuracy stabilization factor to filter images, thereby mitigating singular solutions and enhancing robustness. That study identified satellite position error as the primary error source and recommended acquiring ipsilateral images with incidence angles greater than 8° to improve geometric configuration. For UAV-borne SAR with spatially variant slant range errors, Luo et al. [21] proposed a geometric auto-calibration method that incorporated a tie-point quality-based weighting strategy and an iterative eigenvalue-correction least-squares solution, achieving significant accuracy improvements. These methods underscore the importance of image selection, error source analysis, and robust solving in multi-view self-calibration. Nevertheless, the effective detection of outlier images and the optimal fusion of multi-view data for consistent and high-precision geolocation remain challenging.

This study presents a novel GCP-free high-precision geolocation approach based on multi-view synthetic aperture radar (SAR) image fusion. Focusing on multi-view airborne SAR ground-range images, the proposed method is implemented through the following steps:

- (1) A positioning error correlation model for homologous point pairs in multi-view SAR images is established, where model parameters are derived from an affine transformation-based geometric positioning model constructed using the four corner points of the ground-range image.

- (2) Under the assumption of approximately equal positioning errors, initial error estimates are obtained for all dual-view combinations. Potential outlier images are then detected and eliminated to prevent interference with the self-calibration process.

- (3) The remaining multi-view data are fused in two successive steps using the weighted least squares method and the minimum norm least squares method, respectively, to suppress inconsistent errors across different views.

This approach significantly enhances both the GCP-free geolocation accuracy and algorithm robustness for multi-view SAR images. The main contributions of this work are summarized as follows:

- (1) By leveraging the stability of SAR geometric calibration parameters, an outlier detection and removal method is proposed to retain the subset of multi-view SAR images with the most consistent calibration parameters.

- (2) A weighted least squares fusion strategy based on a multi-view error propagation model is designed to accurately estimate self-calibration parameters. This strategy balances the influence of angular geometric relationships on positioning error estimation, with a particular focus on suppressing large error propagation caused by small angle differences.

- (3) The initial weighted fusion estimation results are further refined using the minimum norm least-squares principle. By minimizing residuals and balancing the respective equations, more accurate and robust results are obtained.

The remainder of this paper is organized as follows: Section 2 introduces the multi-view positioning error correlation model. Section 3 elaborates on the principles and steps of the proposed outlier removal and multi-view SAR fusion positioning method. Section 4 validates the method using both simulated and measured data, with performance analysis conducted via ablation and comparative experiments. Some factors affecting algorithm accuracy are discussed in Section 5. Finally, conclusions are drawn in Section 6.

2. Multi-View Positioning Error Correlation Model

In practical airborne SAR image processing, a simple first-order affine transformation model is typically established using the latitude and longitude coordinates of the four corner points attached to ground-range SAR images. This model describes the transformation from pixel coordinates $(x, y)$ to the systematic geolocation coordinates $(B, L)$ of scatterers:

$$\begin{bmatrix} B \\ L \end{bmatrix} = \mathbf{C} \begin{bmatrix} x \\ y \end{bmatrix} + \mathbf{D} \quad (1)$$

where $B$ and $L$ denote the geographic latitude and longitude of the scatterer’s systematic geolocation, respectively. $\mathbf{C}$ and $\mathbf{D}$ represent the coefficient matrices for the linear term and constant term of the affine transformation model, respectively, which can be derived inversely from the latitude and longitude coordinates of the image’s four corner points [18].
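The corner-point fit described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the pixel coordinates are written as (x, y), that the four corners are supplied as pixel and latitude/longitude pairs, and that an ordinary least-squares fit is acceptable. The function names `fit_affine` and `pixel_to_latlon` and all numeric values are hypothetical.

```python
import numpy as np

def fit_affine(pixels, latlon):
    """Least-squares fit of [B, L]^T = C @ [x, y]^T + D from corner points."""
    pixels = np.asarray(pixels, dtype=float)   # shape (4, 2): (x, y) per corner
    latlon = np.asarray(latlon, dtype=float)   # shape (4, 2): (B, L) per corner
    # Design matrix [x, y, 1] so the constant term is estimated jointly.
    design = np.hstack([pixels, np.ones((len(pixels), 1))])
    coef, *_ = np.linalg.lstsq(design, latlon, rcond=None)  # shape (3, 2)
    C = coef[:2].T   # 2x2 linear-term matrix
    D = coef[2]      # constant term, length 2
    return C, D

def pixel_to_latlon(C, D, xy):
    """Apply the fitted affine model to one pixel coordinate."""
    return C @ np.asarray(xy, dtype=float) + D

# Hypothetical corner data generated from a known affine model.
corners = np.array([[0, 0], [999, 0], [0, 1999], [999, 1999]], dtype=float)
C_true = np.array([[-2e-5, 1e-6], [2e-6, 3e-5]])
D_true = np.array([34.5, 108.9])
corner_latlon = corners @ C_true.T + D_true

C_fit, D_fit = fit_affine(corners, corner_latlon)
centre = pixel_to_latlon(C_fit, D_fit, [500, 1000])
```

With exactly four corners the system has eight equations for six unknowns, so the least-squares fit also absorbs small inconsistencies in the corner coordinates.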

Considering residual errors in the SAR system’s geometric calibration parameters, the actual geographic coordinates of ground scatterers strictly satisfy:

where

and

are the true latitude and longitude of the scatterer

in the SAR image;

and

are the ground sampling distances (GSD) in the range and azimuth directions, respectively;

r and

a denote the positioning offsets in the range and azimuth directions. These offsets are closely related to the SAR system’s range electronic delay and azimuth timing offset; they remain numerically stable during multi-view image acquisition, with only slight random fluctuations across different views. The objective is to achieve geometric self-calibration by fusing multi-view SAR images, thereby estimating the 2D positioning offsets (range and azimuth) of each image as accurately as possible.

After system-level positioning, rotational and scale differences between multi-view images are eliminated. Therefore, a high-precision area-based matching method [22] is employed to align multi-view SAR images, yielding multiple sets of homologous points (sharing identical true geographic coordinates). Compared to feature-based methods, this approach yields more reliable homologous point pairs. Based on these points, the positioning error correlation model for dual-view SAR homologous point pairs is established as follows:

where

m and

n correspond to SAR images from different views. Formula (3) can be rewritten as:

where

and

are the systematic geolocation coordinates of homologous points (Hps) in images

m and

n, respectively, calculated using (

1);

represents the systematic geolocation difference of Hps between the dual-view images. Further transformation of (

4) yields:

Formula (5) can be rewritten as:

where

denotes the element in the

i-th row and

j-th column of

, and

denotes the element in the

i-th row and

j-th column of

. Since

,

,

, and

are unknowns, this system of equations is rank-deficient and cannot be solved directly. Equation (6) establishes the relationship between range-azimuth positioning errors in dual-view images and latitude-longitude differences; its matrix elements reflect the influence of the affine model coefficients and ground sampling distances on error propagation. To avoid the rank deficiency, dual-view fusion positioning typically assumes equal positioning errors for the two images. However, this assumption often leads to significant positioning accuracy loss in multi-view observation scenarios.

Generalizing to the case of

N multi-view SAR images, the positioning error correlation model for multi-view SAR images is established as:

where

is a 2D zero matrix. Although this system remains rank-deficient, the increased observation dimension enables optimal estimation in the sense of minimum norm least squares.

3. Methodology

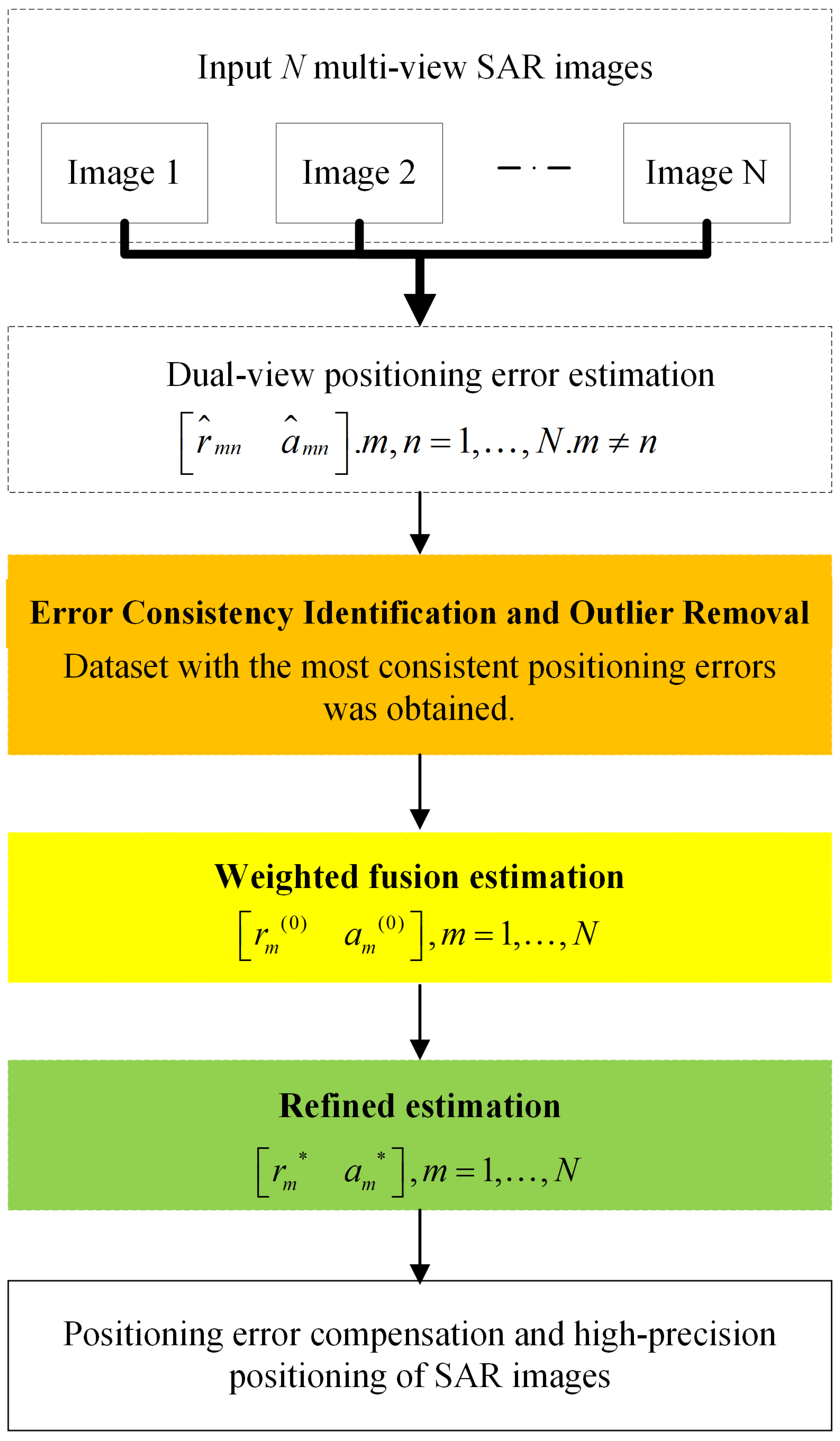

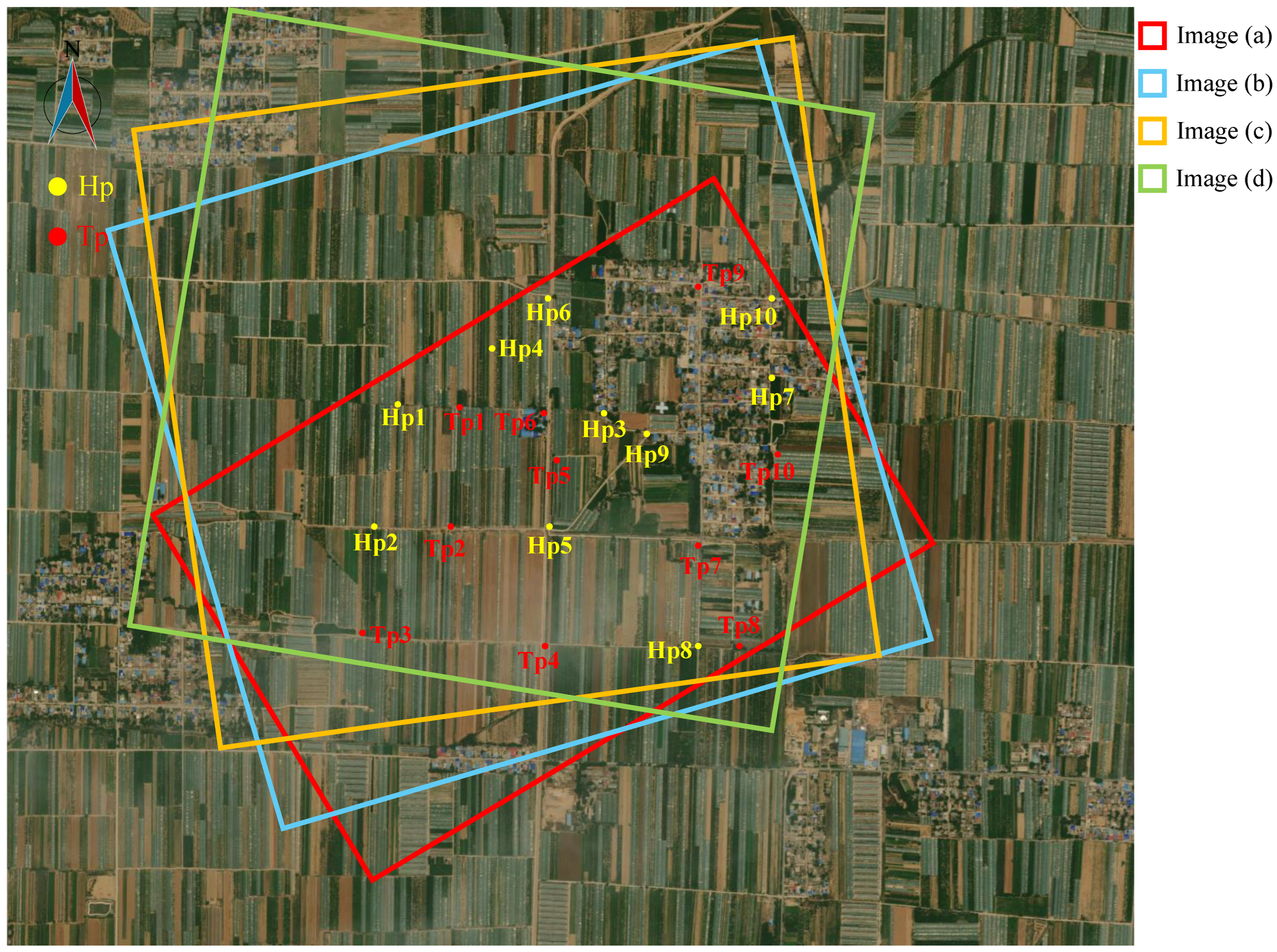

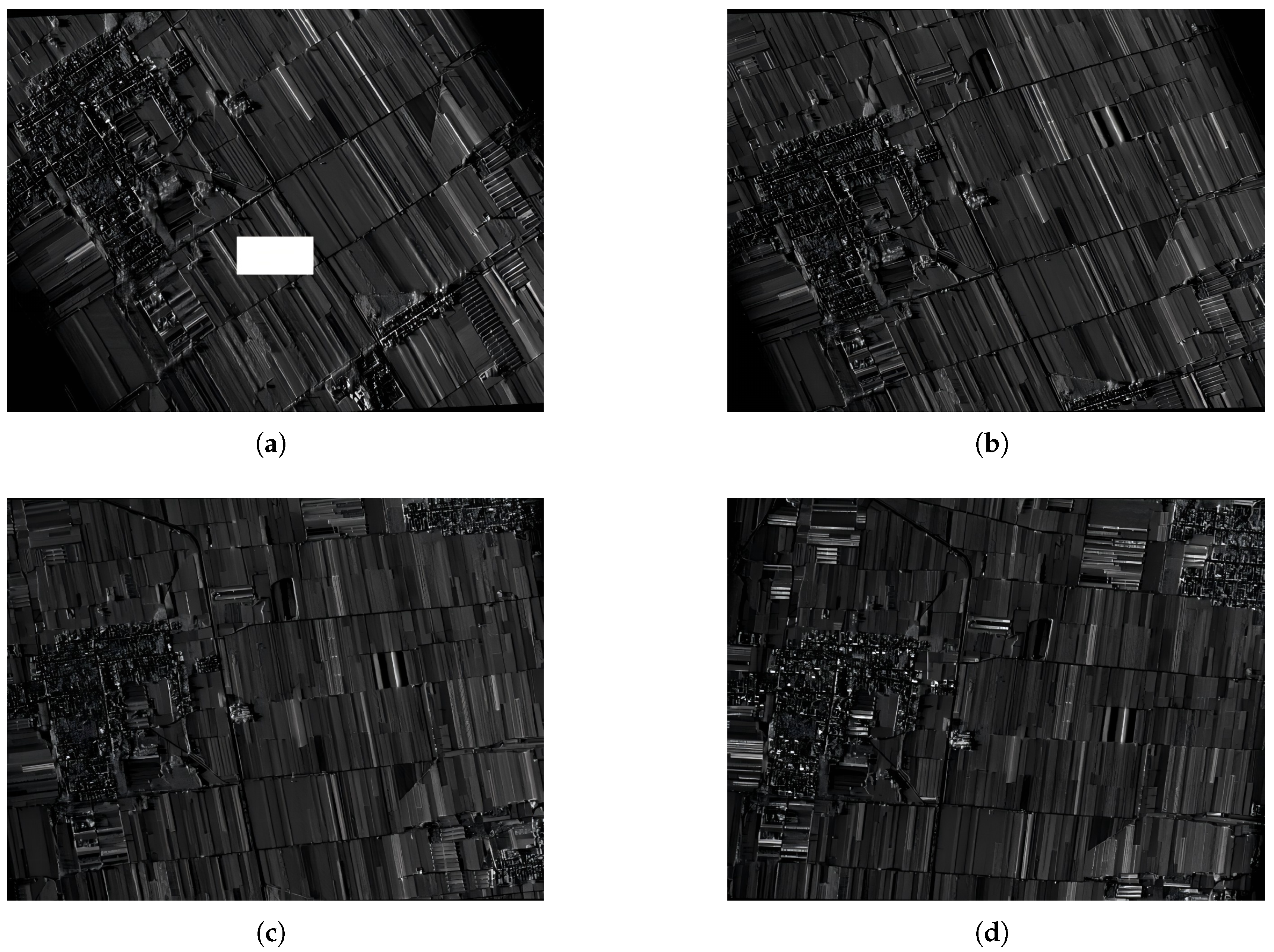

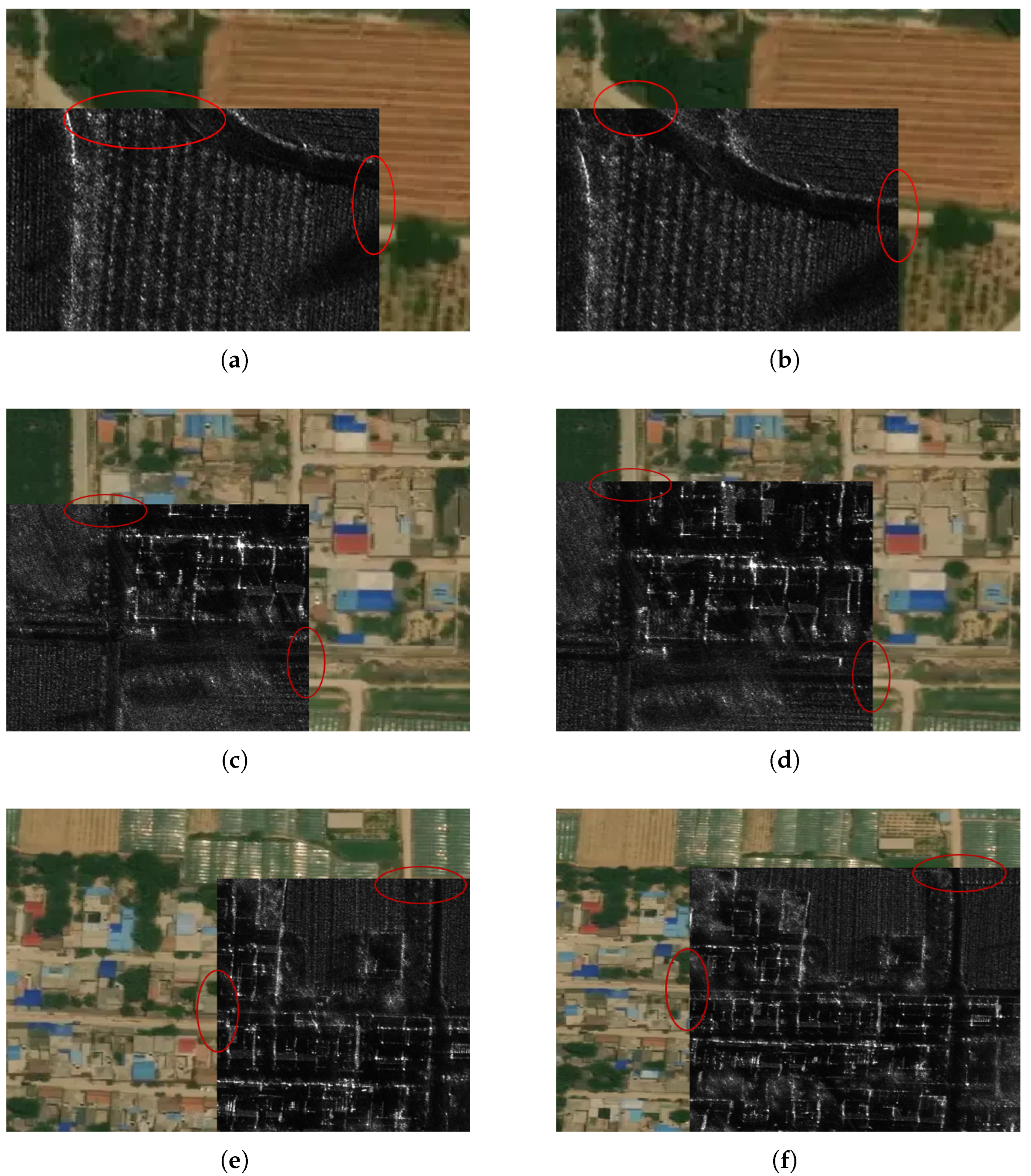

Figure 1 illustrates the main workflow of the proposed method. First, multi-view images are paired to perform dual-view fusion positioning, yielding rough estimates of 2D positioning offsets for each pair. While systematic 2D positioning offsets of multi-view SAR images are generally consistent, anomalous offsets may arise under complex influences (e.g., aircraft trajectory deviations caused by intense airflow disturbances). To address this, a coefficient of variation-based method is proposed to identify consistency in multi-view positioning errors and eliminate anomalous images, enabling outlier removal prior to multi-view fusion. Next, considering that error estimation accuracy of dual-view pairs is closely tied to their geometric relationships (e.g., angular differences), weighting coefficients are computed using the sensitivity of the error propagation model to fuse positioning error offsets across multi-view SAR images. Finally, fusion estimates are refined under the minimum norm least-squares criterion to achieve high-precision planar positioning for each SAR image. This method effectively suppresses the impact of inconsistent multi-view positioning errors. Additionally, the weighted fusion strategy accounts for error sensitivity differences arising from angular geometric characteristics, leveraging complementary and redundant information in multi-view images to maximize algorithm accuracy and robustness.

3.1. Consistency Identification of Multi-View Image Positioning Errors and Outlier Removal

Assuming equal systematic positioning errors for images m and n, Equation (3) can be simplified for any homologous point as:

where

and

denote the range and azimuth positioning errors, respectively. Let

where

represents the element in the

i-th row and

j-th column of

. Substituting (9) into (8) yields the range and azimuth positioning error estimates from dual-view fusion:

The accuracy of dual-view estimates depends on both the consistency of positioning errors between views and the inherent angular difference in dual-view observation geometry. With additional multi-view SAR images, the subset with the most consistent positioning errors can be selected based on the dispersion of error estimates across all dual-view pairs.
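The dependence on angular difference can be illustrated with a small sketch. The notation here is assumed rather than taken from the paper: `M_m` and `M_n` are hypothetical 2×2 matrices that map an image's (range, azimuth) offset into a latitude/longitude shift, modelled as scaled rotations by a view heading, and `d` stands for the systematic geolocation difference of one homologous point pair. The sign convention and the scale factor are also assumptions.

```python
import numpy as np

def view_matrix(heading_deg, deg_per_metre=1e-5):
    """Hypothetical offset-to-lat/lon mapping for a view with the given heading."""
    t = np.deg2rad(heading_deg)
    rot = np.array([[np.cos(t), -np.sin(t)],
                    [np.sin(t),  np.cos(t)]])
    return deg_per_metre * rot

def dual_view_estimate(M_m, M_n, d):
    """Solve (M_m - M_n) @ e = d for a common offset e shared by both views."""
    return np.linalg.solve(M_m - M_n, d)

def error_gain(M_m, M_n):
    """Largest amplification of a perturbation in d into the estimated offset."""
    return np.linalg.norm(np.linalg.inv(M_m - M_n), 2)

true_offset = np.array([30.0, -12.0])       # metres, made-up values
M_a, M_b, M_c = view_matrix(0.0), view_matrix(90.0), view_matrix(5.0)

d_ab = (M_a - M_b) @ true_offset            # noise-free observation
estimate = dual_view_estimate(M_a, M_b, d_ab)

gain_wide = error_gain(M_a, M_b)            # 90 degree angular difference
gain_narrow = error_gain(M_a, M_c)          # 5 degree angular difference
```

In this toy geometry the narrow pair amplifies any inconsistency in `d` far more than the wide pair, which is the behaviour the weighting in Section 3.2 is designed to counter.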

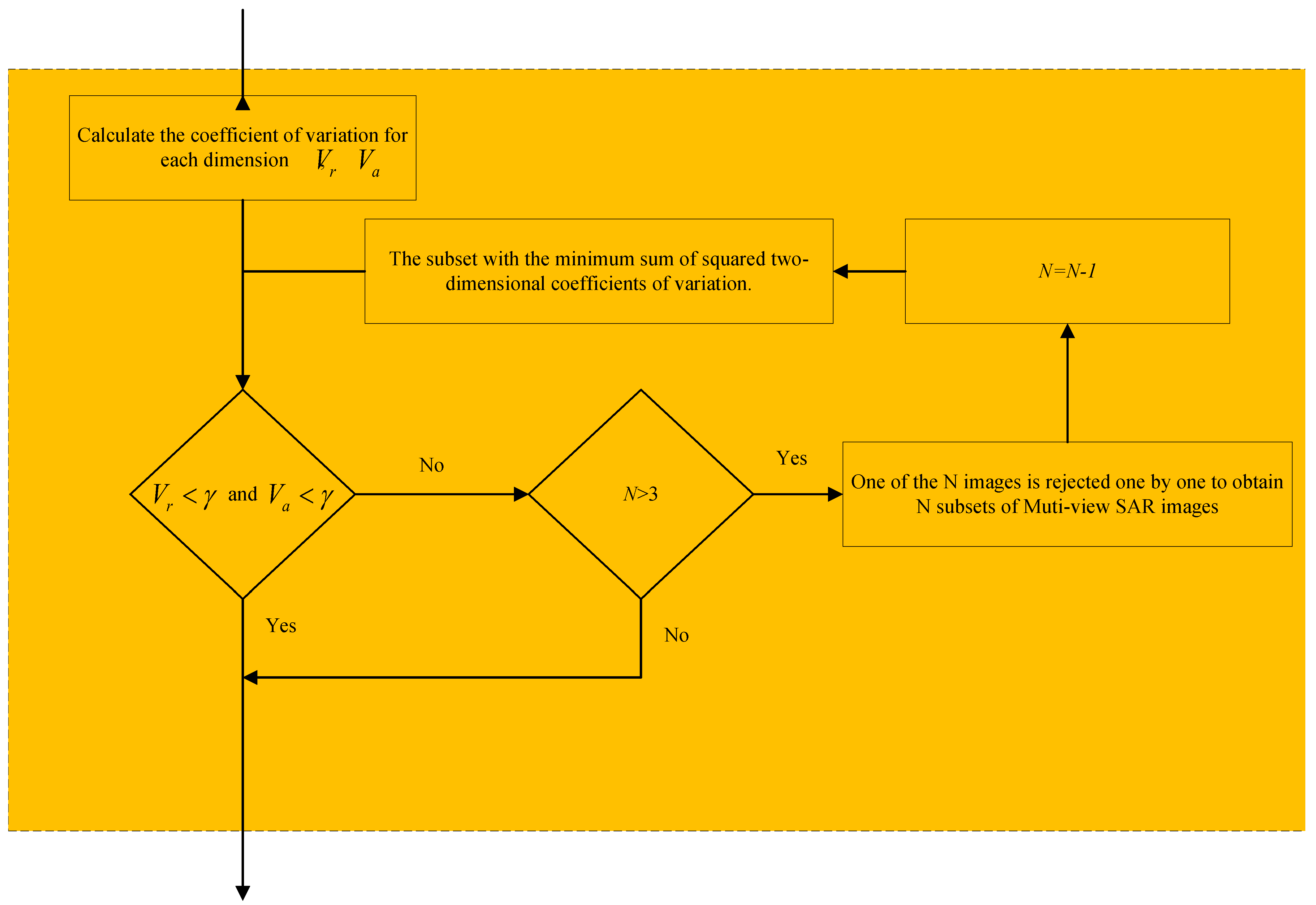

The workflow for identifying multi-view positioning error consistency and outlier removal is shown in Figure 2. For $N$ multi-view SAR images, pairwise correlation yields $N(N-1)/2$ estimates via dual-view fusion. The dispersion of these estimates reflects the consistency of true positioning errors across multi-view images. Thus, the coefficient of variation [23] is employed to quantify this consistency, calculated separately for range and azimuth errors:

$$c_r = \frac{\sigma_r}{\mu_r}, \qquad c_a = \frac{\sigma_a}{\mu_a} \quad (11)$$

where $c_r$ and $c_a$ are the coefficients of variation for range and azimuth errors, respectively; $\sigma_r$ and $\sigma_a$ denote their standard deviations; $\mu_r$ and $\mu_a$ represent their means.

Consistency is evaluated by comparing the range and azimuth coefficients of variation against a threshold. While a 20% threshold is typical in practice, error propagation effects inflate estimation dispersion, so the threshold is adjusted to 1 in this algorithm.

Specifically, if either 2D coefficient of variation exceeds 1, actual positioning error discrepancies between images are unacceptably large, precluding accurate estimation. In such cases, images are sequentially excluded one at a time from the N images, generating N subsets each containing N − 1 images. For each subset, the pairwise estimates and 2D coefficients of variation are computed. The subset with the smallest coefficients is selected, and if both values are <1, it is adopted as the optimally consistent dataset. If not, exclusion continues until a subset with relatively consistent systematic positioning errors is identified.

If coefficients of variation in either dimension remain >1 even after reducing the subset to three images, the three images with the most consistent errors are selected for fusion positioning.
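The exclusion procedure above can be sketched as a greedy loop. Several details are assumptions made for illustration: the dual-view estimates are supplied in a dictionary keyed by image pair, the population standard deviation and the absolute value of the mean are used, and subsets are ranked by the larger of their two coefficients of variation. The names `select_consistent_subset` and `pair_estimates` and all numbers are hypothetical.

```python
import itertools
import numpy as np

def coeff_of_variation(values):
    """Standard deviation divided by |mean| (assumed form of the coefficient)."""
    values = np.asarray(values, dtype=float)
    mean = values.mean()
    return np.inf if mean == 0 else values.std() / abs(mean)

def subset_cv(images, pair_estimates):
    """Range and azimuth coefficients of variation over all pairs in a subset."""
    pairs = list(itertools.combinations(sorted(images), 2))
    rng = [pair_estimates[p][0] for p in pairs]
    azi = [pair_estimates[p][1] for p in pairs]
    return coeff_of_variation(rng), coeff_of_variation(azi)

def select_consistent_subset(images, pair_estimates, threshold=1.0, min_images=3):
    """Drop one image at a time until both coefficients fall within the threshold."""
    current = sorted(images)
    while True:
        cv_r, cv_a = subset_cv(current, pair_estimates)
        if max(cv_r, cv_a) <= threshold or len(current) <= min_images:
            return current
        # Try every leave-one-out subset and keep the most consistent one.
        candidates = [[i for i in current if i != drop] for drop in current]
        current = min(candidates, key=lambda s: max(subset_cv(s, pair_estimates)))

# Made-up dual-view (range, azimuth) estimates in metres; image 3 is inconsistent.
pair_estimates = {
    (0, 1): (30.0, -12.0), (0, 2): (31.0, -11.5), (1, 2): (29.5, -12.4),
    (0, 3): (-80.0, 60.0), (1, 3): (120.0, -95.0), (2, 3): (-40.0, 70.0),
}
kept = select_consistent_subset(range(4), pair_estimates)
```

When the loop reaches three images it returns them regardless of the threshold, mirroring the fallback to the three most consistent images.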

3.2. Multi-View Positioning Error Fusion Estimation Based on a Geometric Error Propagation Model

Consistency identification and outlier removal yield a subset of multi-view SAR images with relatively consistent systematic positioning errors. Concurrently, a geometric error propagation model for dual-view SAR positioning estimates is established, enabling a weighted fusion strategy to compute multi-view positioning error estimates. To quantify positioning errors between any two images, an equivalent equation is derived using true geographic coordinates of Hps:

Let

where

and

are the true range and azimuth positioning errors of images

m and

n, respectively;

and

denote their range and azimuth error differences. Substituting (13) into (12) yields

Rearranging (14) gives

Thus, the estimation error introduced by image

n into image

m during dual-view positioning is

where

and

are the range and azimuth positioning error estimates obtained when the positioning errors of images m and n are assumed to be equal. Equation (16) indicates that, owing to discrepancies in the true systematic positioning errors, the error propagation matrix governing error transfer from image n to image m satisfies

where

is the error propagation matrix from n to m. The larger the absolute value of an element, the stronger the interference of image n’s error with the estimate for image m, which provides a basis for weight design in the subsequent weighted fusion. Substituting (17) into (16) yields:

Larger magnitudes of

,

,

, and

indicate greater estimation errors induced by actual positioning error differences. This implies that errors introduced by SAR images at different angles to a reference image depend on their mutual geometric relationship. Let

and

denote the range and azimuth influence factors, defined as

Systematic positioning error estimates from all pairwise combinations of multi-view images are then fused via a weighted strategy to enhance per-view error estimation accuracy. The fused positioning error for image

m is

where

and

are the fused range and azimuth error estimates for image

m. The weights

and

satisfy

and

This yields multi-view positioning error estimates consistent with the relative consistency of true errors. Subsequently, positioning errors of excluded views can be estimated using equivalent equations derived from Hps’ true geographic coordinates.

Substituting (18) into (20) shows that

Estimation accuracy is ensured when errors are relatively consistent (i.e.,

). Equation (23) decomposes the fused range and azimuth error estimates into two parts: the true error and the weighted sum of the dual-view estimation errors. The weight coefficients are determined by the geometric error propagation matrix, so images with smaller angular differences (i.e., larger propagation errors) receive lower weights. When the errors of the multi-view images are highly consistent, the weighted sum tends to zero, and the fusion result approaches the true error.
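A minimal sketch of such a weighted fusion is given below for one image and one direction. The exact weight definition is not reproduced here, so the sketch assumes weights inversely proportional to the magnitude of the influence factor and normalized to sum to one. The function name `fuse_estimates` and the numbers are hypothetical.

```python
import numpy as np

def fuse_estimates(estimates, influence):
    """Fuse dual-view estimates with weights inversely related to influence factors."""
    estimates = np.asarray(estimates, dtype=float)
    influence = np.abs(np.asarray(influence, dtype=float))
    weights = 1.0 / influence          # assumed weighting rule
    weights /= weights.sum()           # normalize so the weights sum to one
    return float(weights @ estimates), weights

# Made-up range error estimates (metres) for one image paired with three others.
# The third pair has a small angular difference, hence a large influence factor.
estimates = [30.5, 29.0, 48.0]
influence = [1.2, 0.9, 11.0]

fused, weights = fuse_estimates(estimates, influence)
plain_mean = float(np.mean(estimates))
```

Because the poorly conditioned pair receives a small weight, the fused value stays close to the two well-conditioned estimates, whereas the unweighted mean is pulled toward the third.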

3.3. Refinement of Fusion Estimation Based on Minimum Norm Least Squares

Following multi-view positioning error fusion, results are refined using the minimum norm least-squares criterion [24,25], which relies on the Moore–Penrose generalized inverse [26] for solution, to achieve accurate error estimation, enabling high-precision planar positioning of SAR images. Equivalent equations between different views are used to derive the minimum norm least-squares solution for weighted fusion estimates.

Taking three images as an example:

Let

denote the fusion estimates. The residuals of the equation system satisfy

Due to rank deficiency, the Moore–Penrose generalized inverse of the coefficient matrix is used to obtain the minimum norm least-squares solution of (25):

where

is the Moore–Penrose generalized inverse matrix of

. The final positioning error estimate is

Compared to initial fusion estimates, refined results exhibit smaller equation residuals and higher accuracy. This method balances residuals across equations, preventing dominance by extreme errors and enhancing robustness. Incorporating refined error estimates into latitude/longitude calculations and repositioning SAR images accordingly yields high-precision multi-view SAR positioning results.
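The refinement step can be sketched generically as a minimum norm correction added to the initial fusion estimate. The coefficient matrix below is a simplified stand-in (pairwise differences of one scalar offset per image), not the paper's matrix, and the names `refine_min_norm`, `A`, `b`, and `x0` are assumptions made for illustration.

```python
import numpy as np

def refine_min_norm(A, b, x0):
    """Add the minimum norm least-squares correction to an initial estimate x0."""
    residual = b - A @ x0
    # An explicit cutoff keeps the numerically zero singular value from being inverted.
    delta = np.linalg.pinv(A, rcond=1e-10) @ residual
    return x0 + delta

# Rank-deficient stand-in system for three images: each row compares two views.
A = np.array([[1.0, -1.0,  0.0],
              [1.0,  0.0, -1.0],
              [0.0,  1.0, -1.0]])
b = np.array([-1.1, 0.9, 2.1])       # made-up observed differences
x0 = np.array([30.8, 30.2, 29.9])    # made-up weighted fusion estimates

x_ref = refine_min_norm(A, b, x0)
res_before = np.linalg.norm(b - A @ x0)
res_after = np.linalg.norm(b - A @ x_ref)
```

The correction is the smallest change to `x0` that reaches the least-squares residual, so the component that the equations cannot observe (here, a common shift of all three offsets) is inherited unchanged from the fusion estimate.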

5. Discussion

- (1) The Impact of Radar Target Anisotropy

The proposed method needs to extract multiple sets of homologous point pairs from multi-view SAR images through high-precision registration. Strictly speaking, the anisotropy of artificial targets inevitably reduces the registration accuracy of multi-view images, especially in urban high-rise building areas. However, the multi-view airborne SAR images used in the experiments of this paper cover a large area, and only a limited number of targets exhibit significant anisotropy, so pixel-level registration accuracy and the reliability of the homologous points are maintained. The positional error introduced by this non-ideal registration is far smaller than the systematic geolocation error (at least tens of pixels) of each SAR image. Therefore, the impact of radar target anisotropy on the proposed method is negligible.

- (2) The Impact of Complex Terrain Scenarios

The method proposed in this paper focuses on correcting the overall geolocation offset of images, and it possesses both high efficiency and high precision, thus holding significant engineering application value. The space-variant systematic geolocation errors introduced by the errors of geolocation model parameters account for a relatively small proportion. The main source of the spatial variability of errors usually stems from terrain relief factors. Addressing this issue will significantly increase the complexity of the algorithm, which is not the research focus of this paper.

This study uses multi-view airborne SAR images of flat terrain as the experimental data to verify the effectiveness of the core innovations. For multi-view SAR images of mountainous and urban high-rise building scenarios, terrain undulations and tall buildings may locally cause layover, shadows, and additional relative positioning errors. On one hand, this tends to reduce the image registration accuracy in local areas; on the other hand, it will weaken the consistency of the systematic positioning errors of multi-view images in local areas, thereby posing technical challenges to the application of the method proposed in this paper.

In future work, we plan to avoid selecting Hps in urban high-rise building areas through regional masking, correct terrain-induced errors by integrating a Digital Elevation Model (DEM) to address the issue of spatial variability of errors in mountainous scenarios, and acquire more multi-view SAR image data under different scenarios to experimentally verify the adaptability of this method in complex terrains.

- (3) The Number of Multi-View Images and the Proportion of Outlier Images

When only two images from different viewing angles are available, the method in Reference [18] can be adopted directly. When more multi-view SAR images can be acquired, the method proposed in this paper should be used. The new method not only makes full use of multi-view geometric structure information to improve fusion accuracy but also automatically eliminates a small number of SAR images with inconsistent systematic errors, thereby significantly enhancing the accuracy and robustness of the algorithm.

Theoretically, the more multi-view images there are, the higher the fusion processing accuracy will be. Considering the limited sources of current multi-view SAR image data, this paper only compares the processing performance when 2, 3, or 4 images are input. Experimental results show that the fusion of 4 multi-view images yields results under different combinations that are significantly better than those obtained by fusing 2 or 3 images, with the highest positioning accuracy reaching 1.63 m, which is already close to the level of methods corrected using control points.

However, in practical applications, considering multiple factors such as geometric distortion of terrain and ground objects, differences in multi-view target scattering, and a certain degree of inconsistency in multi-view positioning errors, inputting too many images will not only fail to continuously improve algorithm performance but also significantly increase algorithm complexity. Therefore, considering both algorithm complexity and accuracy convergence, we suggest that the number of multi-view images in practical applications should be 3–7, among which the proportion of outlier images should not exceed 30% (i.e., 1–2 images are appropriate).