1. Introduction

The widespread adoption of LiDAR and multi-sensor platforms has established 3D point clouds as a cornerstone for environmental perception in applications such as autonomous driving. While UAV-based monitoring represents a promising future extension of this research, the present study focuses on ground-level LiDAR perception for urban road scenes. Among various perception tasks, 3D object detection and localization are particularly crucial, as they provide essential spatial information for downstream applications such as path planning, dynamic monitoring, and disaster response. Reliable object identification and localization in complex environments form the foundation for dynamic scene interpretation, supporting intelligent systems in autonomous navigation and cooperative sensing. Moreover, accurate perception of small, occluded, or non-cooperative objects is critical for ensuring operational safety and facilitating effective data fusion across sensing platforms.

Despite remarkable progress in deep learning-based 3D detection [

1,

2,

3], several challenges persist under real-world conditions. Severe occlusion, edge ambiguity, and the detection of small or non-cooperative objects in cluttered scenes remain major obstacles. Pioneering work such as PointNet [

4] introduced direct point-based feature learning, while voxel-based methods like VoxelNet [

5] and SECOND [

6] enhanced spatial representation through 3D convolution on structured grids. Subsequent multi-view frameworks, including MV3D [

7] and PointPillars [

8], aimed to achieve a better balance between accuracy and efficiency by projecting 3D data into 2D spaces. Meanwhile, multi-stage detectors such as PointRCNN [

9] and RSN [

10] adopted coarse-to-fine refinement pipelines [

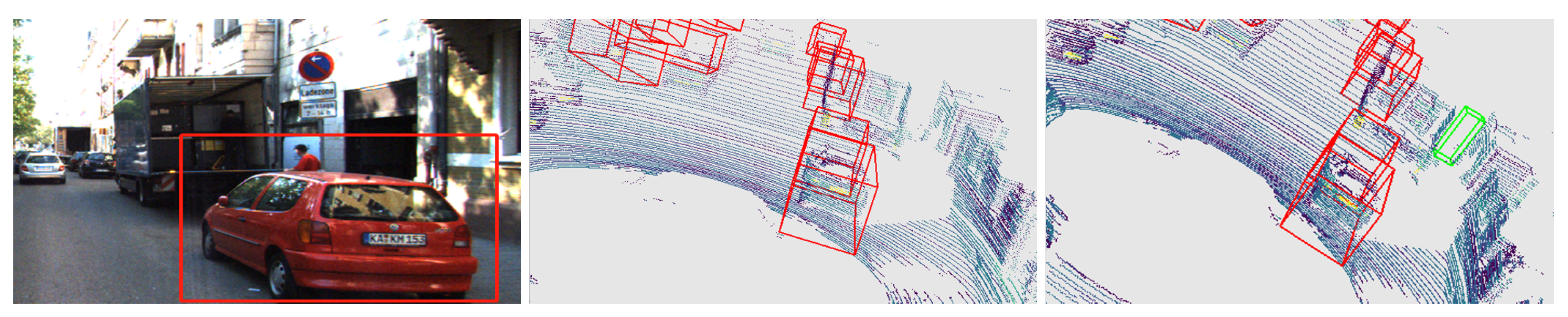

11]. Nevertheless, a common limitation of these approaches is the progressive loss of fine geometric details due to downsampling and pooling operations. This degradation in localization precision is particularly pronounced along object boundaries and for small or occluded instances, as illustrated in

Figure 1. These limitations not only reduce detection accuracy but also hinder downstream tasks such as object tracking, motion prediction, and multi-source data fusion. Although evaluated on autonomous driving datasets such as KITTI [

12] and Waymo [

13], we emphasize that these benchmarks represent ground-based LiDAR sensing environments that share fundamental challenges with remote sensing, including sparse sampling, occlusion, and background clutter. Consequently, the advancements achieved in this demanding near-range domain underscore the potential of our method for broader remote sensing applications, particularly for large-scale 3D scene reconstruction and spatial structure understanding across diverse viewing geometries.

To overcome these limitations, we propose Edge-Aware Semantic Feature Fusion for Detection (EAS-Det), a lightweight, plug-and-play framework that explicitly integrates geometric edge cues with high-level semantic features to enhance structural awareness and contextual reasoning. EAS-Det can be integrated with various 3D detection backbones without modifying their internal architectures. At the core of the framework lies the Edge–Semantic Interaction (ESI) module, which employs a dual-attention strategy to produce structure-preserving and context-aware representations. Unlike existing approaches that simply concatenate semantic and geometric features, the ESI module uses a bidirectional edge–semantic attention mechanism that adaptively re-weights low-level geometric gradients and high-level semantic cues, dynamically balancing features across multiple receptive fields. By capturing fine-grained geometric details together with semantic context, the framework is robust in sparse point clouds and achieves substantial gains on small objects such as pedestrians and cyclists, which are particularly challenging to detect.

The ESI module comprises three complementary branches: (1) a geometric branch, which projects the raw point cloud into the bird’s-eye view (BEV) and applies Sobel filtering to extract pixel-wise gradient information, thereby capturing salient edge structures; (2) a fusion branch, which employs a channel–spatial attention mechanism to adaptively weight and integrate raw and edge-enhanced features, yielding context-rich multi-scale representations; and (3) a semantic branch, which processes edge-augmented features through a 3D semantic encoder to produce high-level semantic maps with confidence scores. The outputs from these branches are combined into a unified representation that improves detection accuracy and robustness while providing a reliable foundation for temporal consistency in multi-frame tracking and cross-platform perception.

We integrate EAS-Det into several mainstream 3D detection backbones, including PointPillars, PointRCNN, and PV-RCNN [14], and evaluate it on the KITTI and Waymo benchmarks. Experimental results show consistent performance improvements, particularly for small and heavily occluded objects, confirming that EAS-Det effectively preserves geometric integrity and semantic coherence.

In summary, the main contributions of this work are as follows:

We propose EAS-Det, a lightweight and plug-and-play framework for fine-grained 3D object detection, which integrates multi-scale edge, geometric, and semantic features to enhance spatial perception and localization accuracy.

We design an ESI module that employs a dual-attention mechanism to adaptively integrate edge and semantic cues, improving boundary precision, contextual understanding, and temporal consistency in complex and cluttered environments.

Comprehensive experiments on the KITTI and Waymo datasets demonstrate the superior accuracy, robustness, and generalization of EAS-Det, particularly for small objects.

2. Related Work

Deep learning has significantly advanced the field of 3D object detection. Existing methods can be broadly categorized into two major paradigms: two-stage detectors and one-stage detectors. These paradigms embody distinct design philosophies, aiming to balance accuracy, efficiency, and robustness under diverse sensing conditions.

Two-stage detectors typically generate candidate proposals before refining classification and localization. In 2D vision, pioneering methods like R-CNN [15], Fast R-CNN [16], Faster R-CNN [17], and Mask R-CNN [18] established the proposal-based paradigm, which enables high detection accuracy through iterative region refinement. Extending this to 3D perception, PV-RCNN [14] effectively fuses voxel and point features using a novel voxel set abstraction module, while CT3D [19] employs transformer-based encoders to capture long-range dependencies and spatial correlations. Building on these principles, methods like AVOD-FPN [20] and DetectoRS [21] emphasize multi-scale fusion and small-object detection via hierarchical feature pyramid integration. Recent advances in sparse representation, such as VoxelNeXt [22] and SparseBEV [23], have introduced fully sparse 3D convolutional networks, significantly improving detection efficiency while maintaining accuracy. Although these methods achieve state-of-the-art performance, they involve substantial computational costs and are prone to error propagation from inaccurate region proposals. This limits their deployment on real-time or resource-limited remote sensing platforms such as UAVs and satellites, highlighting the need for approaches that maintain accuracy while reducing computational complexity.

One-stage detectors, in contrast, perform classification and regression in a single pass, offering faster inference suitable for real-time applications. Representative 2D frameworks include YOLO [24,25,26] and SSD [27]. For 3D point clouds, VoxelNet applies 3D convolutions to voxelized representations, and SECOND replaces them with sparse 3D convolutions for efficiency. PointPillars encodes point clouds into pseudo-images for efficient 2D convolution, and CenterPoint [28] detects objects directly from heatmap-predicted centroids in an anchor-free manner. More recent approaches, such as IA-SSD [29], introduce instance-aware downsampling to preserve critical points, and FAR-Pillar [30] employs feature adaptive refinement for oriented 3D detection. These approaches strike a balance between efficiency and accuracy but often lack fine-grained spatial granularity and explicit boundary modeling. Consequently, their performance suffers on small, occluded, or edge-ambiguous objects, which are prevalent in remote sensing imagery where object scale, density, and viewpoint vary drastically. The absence of explicit geometric modeling in these methods highlights the importance of incorporating structural priors for robust detection in complex environments.

Fusion-based methods further enhance perception by integrating complementary modalities at various stages of the detection pipeline. Early fusion approaches like F-PointNet [31] leverage 2D detections to constrain the 3D search space through frustum estimation, while PointPainting [32] augments LiDAR points with semantic predictions from RGB images in a sequential pipeline. More recently, SeSame [33] learns semantic features directly from point clouds through a dedicated segmentation head, reducing dependency on auxiliary modalities. Advanced fusion strategies such as VoxelNextFusion [34] implement unified voxel-based cross-modal integration, and Fast-CLOCs [35] demonstrate improved real-time performance through efficient camera-LiDAR candidate fusion. Despite these advances, fusion-based strategies remain sensitive to the quality, temporal alignment, and calibration accuracy of multimodal data, which limits their scalability in heterogeneous systems like satellite–aerial–ground sensor networks. Moreover, reliance on auxiliary sensors or annotations reduces generalization to resource-limited or single-modality settings, highlighting the value of approaches that achieve robust performance using LiDAR data alone.

In summary, two-stage detectors achieve high accuracy at substantial computational costs, one-stage methods offer real-time efficiency with limited robustness, and fusion-based frameworks enhance semantic understanding while introducing external dependencies. These limitations highlight the need for a unified approach that integrates geometric fidelity and semantic context from point cloud data. To address this gap, the proposed EAS-Det framework incorporates an ESI module that explicitly integrates geometric boundary cues with high-level semantic features through a dual-attention mechanism. By preserving structural details while enhancing discriminative power through adaptive feature re-weighting, EAS-Det achieves accurate and robust 3D detection suitable for large-scale, heterogeneous remote sensing environments.

3. Method Overview

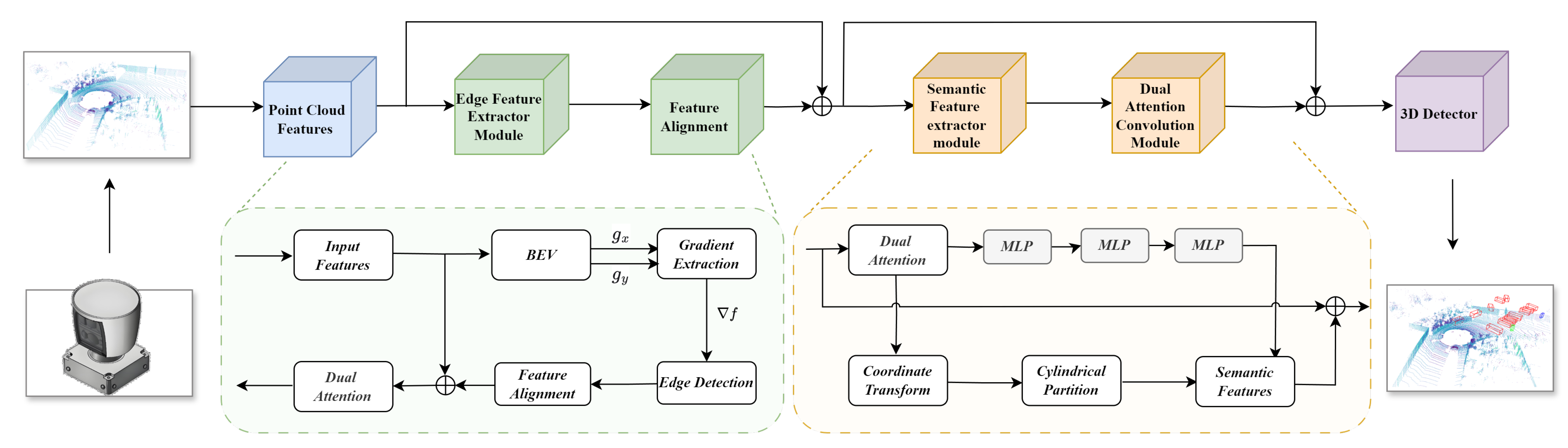

The proposed EAS-Det architecture processes raw LiDAR point clouds to predict oriented 3D bounding boxes, effectively integrating geometric, edge, and semantic cues for robust detection in complex environments. As illustrated in Figure 2, the framework comprises three main stages: (1) Feature Extraction and Interaction through the ESI module, which extracts and balances high-precision edge and semantic features using a dual-attention mechanism; (2) Feature Concatenation and Fusion, where multi-scale representations are constructed by aligning and aggregating geometric, edge, and semantic cues; and (3) LiDAR-based 3D Object Detection, where fused features are fed into a detection head for oriented 3D bounding box prediction, enabling accurate localization and classification under challenging conditions.

3.1. Feature Extraction and Interaction

Robust 3D object detection requires capturing both fine-grained geometric structures and high-level semantic context. Relying solely on geometric cues or semantic information proves inadequate, particularly in remote sensing scenarios characterized by sparse, occluded, or cluttered point clouds. To address this challenge, the proposed ESI module integrates three interdependent components: the Edge Feature Extraction (EF) Module, the Dual Attention (DA) Module, and the 3D Semantic Feature Extraction (SF) Module. By explicitly modeling geometric edges and semantic representations through attention-driven interaction, EAS-Det effectively captures both local object boundaries and global contextual dependencies.

3.1.1. Edge Feature Extraction Module

The EF module extracts precise geometric edge features encoding local structure and object contours, which are critical for detecting small, thin, or sparsely represented objects. The raw point cloud is denoted as

$$\mathcal{P} = \{\mathbf{p}_i\}_{i=1}^{N},$$

and is initially downsampled to $N' < N$ points using farthest point sampling (FPS) to reduce computational cost while preserving spatial coverage. Each point is then projected onto a bird’s-eye view (BEV) grid, whose cells aggregate statistics including maximum height, intensity, and point density. This BEV representation enables efficient 2D convolution while maintaining spatial locality.
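The downsampling and rasterization steps can be illustrated with a minimal, dependency-free Python sketch. It assumes points are `(x, y, z, intensity)` tuples, starts FPS from an arbitrary seeded point, and aggregates intensity per cell by its maximum; the function names, the max-intensity choice, and the zero fill for empty cells are illustrative assumptions rather than the exact EAS-Det implementation.

```python
import math
import random


def farthest_point_sampling(points, num_samples, seed=0):
    """Greedy FPS: repeatedly pick the point farthest from the already selected set.

    points: list of (x, y, z, intensity) tuples; only x, y, z enter the distance.
    Returns the indices of the selected points.
    """
    n = len(points)
    if num_samples >= n:
        return list(range(n))
    rng = random.Random(seed)
    selected = [rng.randrange(n)]  # seeded, arbitrary starting point
    # min_dist[j]: squared distance from point j to its nearest selected point
    min_dist = [math.inf] * n
    while len(selected) < num_samples:
        last = points[selected[-1]]
        for j, p in enumerate(points):
            d = sum((p[k] - last[k]) ** 2 for k in range(3))
            if d < min_dist[j]:
                min_dist[j] = d
        selected.append(max(range(n), key=min_dist.__getitem__))
    return selected


def bev_project(points, x_range, y_range, cell_size):
    """Rasterize points into BEV maps of max height, max intensity, and point count."""
    nx = math.ceil((x_range[1] - x_range[0]) / cell_size)
    ny = math.ceil((y_range[1] - y_range[0]) / cell_size)
    height = [[-math.inf] * nx for _ in range(ny)]
    intensity = [[0.0] * nx for _ in range(ny)]
    density = [[0] * nx for _ in range(ny)]
    for x, y, z, inten in points:
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue  # outside the region of interest
        ix = min(int((x - x_range[0]) / cell_size), nx - 1)
        iy = min(int((y - y_range[0]) / cell_size), ny - 1)
        height[iy][ix] = max(height[iy][ix], z)
        intensity[iy][ix] = max(intensity[iy][ix], inten)
        density[iy][ix] += 1
    # Empty cells get height 0.0 so the map stays finite (an arbitrary choice).
    height = [[0.0 if h == -math.inf else h for h in row] for row in height]
    return height, intensity, density
```

A practical pipeline would run both steps vectorized on the GPU; the quadratic loop here only mirrors the greedy definition of FPS.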

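Edge detection applies Sobel operators along the $x$ and $y$ directions of the BEV map $\mathbf{B}$:

$$G_x = K_x * \mathbf{B}, \qquad G_y = K_y * \mathbf{B},$$

where $*$ denotes convolution, with convolution kernels

$$K_x = \begin{bmatrix} -1 & 0 & 1 \\ -2 & 0 & 2 \\ -1 & 0 & 1 \end{bmatrix}, \qquad K_y = \begin{bmatrix} -1 & -2 & -1 \\ 0 & 0 & 0 \\ 1 & 2 & 1 \end{bmatrix}.$$

The gradient magnitude and orientation are computed as

$$G = \sqrt{G_x^2 + G_y^2}, \qquad \phi = \operatorname{atan2}(G_y, G_x).$$

Non-maximum suppression (NMS) and dual thresholding then refine the edge maps. To accommodate objects at varying scales, multi-scale BEV grids generate edge maps $\{E^{(s)}\}_{s=1}^{S}$, which are fused to provide scale-invariant edge cues.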
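As a sketch of the gradient computation, the following Python code applies the standard 3×3 Sobel kernels to a small BEV height map and returns per-cell gradient magnitude and orientation. It implements the kernels as cross-correlation with replicated borders, as most CNN libraries do; a true (flipped) convolution would negate both gradient components, which leaves the magnitude unchanged and rotates the orientation by π. Non-maximum suppression, dual thresholding, and multi-scale fusion are omitted, and the function names are hypothetical.

```python
import math

# Standard 3x3 Sobel kernels (x: left to right, y: top to bottom).
KX = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
KY = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def filter3x3(grid, kernel):
    """Apply a 3x3 kernel as cross-correlation, replicating values at the border."""
    h, w = len(grid), len(grid[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    ii = min(max(i + di, 0), h - 1)  # replicate border rows
                    jj = min(max(j + dj, 0), w - 1)  # replicate border columns
                    acc += kernel[di + 1][dj + 1] * grid[ii][jj]
            out[i][j] = acc
    return out


def sobel_edges(bev):
    """Return (magnitude, orientation) maps of a 2D BEV height map."""
    gx = filter3x3(bev, KX)
    gy = filter3x3(bev, KY)
    h, w = len(bev), len(bev[0])
    mag = [[math.hypot(gx[i][j], gy[i][j]) for j in range(w)] for i in range(h)]
    ori = [[math.atan2(gy[i][j], gx[i][j]) for j in range(w)] for i in range(h)]
    return mag, ori
```

On a height step (for example, the side of a vehicle rising above the ground), the magnitude peaks in the two columns adjacent to the step and vanishes on the flat regions on either side.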

Through lightweight operations including FPS sampling, BEV projection, and Sobel convolution, the EF module achieves robust feature extraction with minimal overhead, enabling real-time deployment.

While the Sobel operator originates from image gradient computation, in this context the term edge specifically denotes two-dimensional gradient features computed on the BEV height map that correspond to object contours or elevation discontinuities in the 3D scene. The extracted edge cues therefore serve as a compact yet physically meaningful approximation of local surface variations. Moreover, this gradient-based extraction is inherently robust to point cloud sparsity, as it relies on relative value differences between adjacent BEV cells rather than on absolute point density. This property preserves salient edge structures even in sparse BEV projections, which is crucial for maintaining detection performance under challenging sensing conditions.

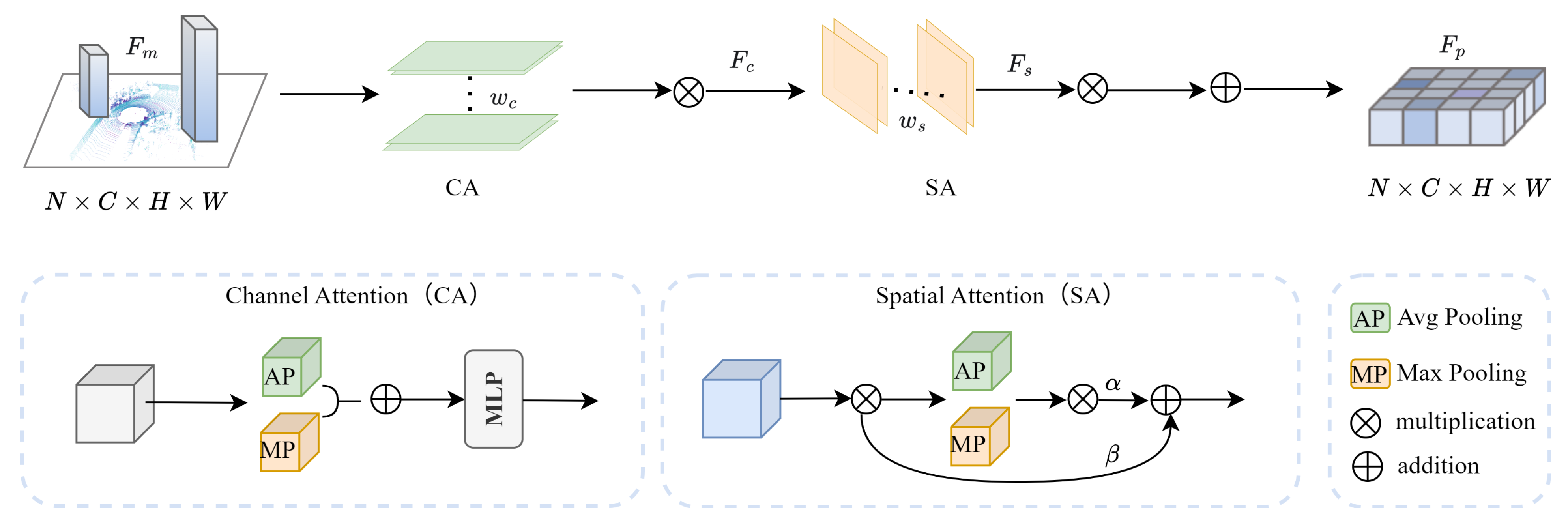

3.1.2. Dual Attention Convolution Module

The Dual Attention (DA) module enhances feature representations by selectively emphasizing informative channels and spatial regions, enabling precise boundary delineation and robust detection of small or sparse objects. This module integrates edge and semantic features from preceding layers, capturing both geometric and contextual information essential for 3D object detection.

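The fused feature tensor obtained from the initial edge and point feature mapping is denoted as

$$\mathbf{F} \in \mathbb{R}^{C \times H \times W},$$

where $C$ is the number of channels and $H \times W$ is the spatial resolution. The DA module sequentially applies channel attention and spatial attention, followed by adaptive fusion, to refine $\mathbf{F}$.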

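Channel attention emphasizes informative feature types while suppressing irrelevant or noisy channels. Global descriptors of the fused feature map $\mathbf{F}$ (with $C$ channels) are obtained via average and max pooling across the spatial dimensions,

$$\mathbf{F}^{c}_{\mathrm{avg}} = \operatorname{AvgPool}(\mathbf{F}), \qquad \mathbf{F}^{c}_{\max} = \operatorname{MaxPool}(\mathbf{F}),$$

capturing both overall and extreme channel responses. These descriptors are processed by a shared two-layer multi-layer perceptron (MLP) with reduction ratio $r$:

$$\mathbf{M}_c = \sigma\big(\mathbf{W}_1\,\delta(\mathbf{W}_0\,\mathbf{F}^{c}_{\mathrm{avg}}) + \mathbf{W}_1\,\delta(\mathbf{W}_0\,\mathbf{F}^{c}_{\max})\big), \qquad \mathbf{F}_c = \mathbf{M}_c \odot \mathbf{F},$$

where $\mathbf{W}_0 \in \mathbb{R}^{(C/r) \times C}$ and $\mathbf{W}_1 \in \mathbb{R}^{C \times (C/r)}$ are learnable weights, $\delta$ is the hidden-layer nonlinearity, $\sigma$ denotes sigmoid activation, and $\odot$ represents element-wise multiplication, with $\mathbf{M}_c$ broadcast over the spatial dimensions. This mechanism selectively emphasizes channels carrying critical edge or semantic cues.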
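A minimal sketch of the channel-attention step, written on nested Python lists so that it runs without a deep learning framework. It assumes a feature map of shape `[C][H][W]`, a shared two-layer MLP whose hidden nonlinearity is ReLU (as in the common CBAM formulation; the hidden activation is an assumption here), and hand-supplied weight matrices that would be learned during training.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(W, v):
    """Dense matrix-vector product with W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def channel_attention(F, W0, W1):
    """CBAM-style channel attention on a feature map F of shape [C][H][W].

    W0 has shape (C/r) x C and W1 has shape C x (C/r); the same two-layer MLP is
    applied to both pooled descriptors. Returns the re-weighted features F_c and
    the per-channel weights M_c.
    """
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in F]  # global average pooling
    mx = [max(map(max, ch)) for ch in F]                              # global max pooling

    def mlp(z):
        hidden = [max(0.0, h) for h in matvec(W0, z)]  # ReLU (assumed hidden activation)
        return matvec(W1, hidden)

    M_c = [sigmoid(a + b) for a, b in zip(mlp(avg), mlp(mx))]
    # Broadcast each channel weight over that channel's H x W map.
    F_c = [[[M_c[c] * x for x in row] for row in F[c]] for c in range(len(F))]
    return F_c, M_c
```

With all-zero weights every channel receives the neutral weight 0.5; weights that route a strongly responding channel through the MLP push its weight above the others.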

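Following channel refinement, spatial attention focuses on important regions within the feature maps. The channel-refined features $\mathbf{F}_c$ are aggregated along the channel dimension using average and max pooling,

$$\mathbf{F}^{s}_{\mathrm{avg}} = \operatorname{AvgPool}_{\mathrm{ch}}(\mathbf{F}_c), \qquad \mathbf{F}^{s}_{\max} = \operatorname{MaxPool}_{\mathrm{ch}}(\mathbf{F}_c),$$

and are then concatenated and processed with a convolution $f(\cdot)$:

$$\mathbf{M}_s = \sigma\big(f([\mathbf{F}^{s}_{\mathrm{avg}};\, \mathbf{F}^{s}_{\max}])\big), \qquad \mathbf{F}_s = \mathbf{M}_s \odot \mathbf{F}_c,$$

where $[\cdot\,;\cdot]$ denotes channel-wise concatenation and $\sigma$ is the sigmoid function. This step captures spatial dependencies and highlights regions critical for detecting small, thin, or occluded objects.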

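Finally, the channel-refined features $\mathbf{F}_c$ and spatial-refined features $\mathbf{F}_s$ are adaptively fused:

$$\mathbf{F}_{\mathrm{out}} = \alpha\,\mathbf{F}_c + \beta\,\mathbf{F}_s,$$

where $\alpha$ and $\beta$ are learnable parameters balancing the contributions of channel and spatial attention.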
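The spatial-attention and fusion steps can be sketched in the same framework-free style. The convolution over the concatenated average and max maps is written as two k×k kernels (one per input map) with zero padding; the kernel size, the bias, and the function names are illustrative assumptions, since the exact kernel size is not restated here. The two fusion weights are passed in as plain numbers, whereas in the network they would be learnable scalars.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def spatial_attention(F_c, kernel_avg, kernel_max, bias=0.0):
    """CBAM-style spatial attention on channel-refined features F_c of shape [C][H][W].

    kernel_avg and kernel_max are k x k weights (k odd) for the channel-wise average
    and max maps; together they form one convolution over the 2-channel concatenation.
    """
    C, H, W = len(F_c), len(F_c[0]), len(F_c[0][0])
    avg = [[sum(F_c[c][i][j] for c in range(C)) / C for j in range(W)] for i in range(H)]
    mx = [[max(F_c[c][i][j] for c in range(C)) for j in range(W)] for i in range(H)]
    k = len(kernel_avg)
    pad = k // 2  # zero padding keeps the H x W resolution
    M_s = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            acc = bias
            for di in range(k):
                for dj in range(k):
                    ii, jj = i + di - pad, j + dj - pad
                    if 0 <= ii < H and 0 <= jj < W:
                        acc += kernel_avg[di][dj] * avg[ii][jj] + kernel_max[di][dj] * mx[ii][jj]
            M_s[i][j] = sigmoid(acc)
    # Broadcast the H x W mask over all channels.
    F_s = [[[M_s[i][j] * F_c[c][i][j] for j in range(W)] for i in range(H)] for c in range(C)]
    return F_s, M_s


def adaptive_fusion(F_c, F_s, alpha, beta):
    """Weighted sum alpha * F_c + beta * F_s (alpha, beta are learnable in the network)."""
    return [[[alpha * a + beta * b for a, b in zip(row_c, row_s)]
             for row_c, row_s in zip(ch_c, ch_s)]
            for ch_c, ch_s in zip(F_c, F_s)]
```

Using a weighted sum rather than replacing the channel-refined features with the spatially masked ones keeps a direct path for the channel-attention output, so a poorly calibrated spatial mask cannot suppress a location entirely.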

The sequential design exploits the complementary roles of the two attention types: channel attention selects relevant feature types (e.g., edge or semantic cues), while spatial attention localizes critical regions. Together, they let the network focus on fine structures and object boundaries, which is particularly effective for 3D detection of small, sparse, or partially occluded objects.

By jointly modeling channel and spatial dependencies, the DA module enhances boundary delineation and improves occlusion robustness. Empirical evaluations demonstrate consistent improvements in precision and recall for small and occluded objects, validating its effectiveness in complex point cloud environments.

Figure 3 illustrates the DA module’s overall structure and workflow.

3.1.3. 3D Semantic Feature Extraction Module

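The Semantic Feature (SF) module extracts rich contextual representations from 3D point clouds, preserving geometric fidelity while mitigating point sparsity. We adopt Cylinder3D [36], which transforms points from Cartesian coordinates $(x, y, z)$ to cylindrical coordinates $(\rho, \theta, z)$, where $\rho = \sqrt{x^2 + y^2}$ and $\theta = \operatorname{atan2}(y, x)$. This transformation enables distance-adaptive voxelization: because each cylindrical cell spans a fixed angular width, cells farther from the sensor are larger, so sparsely sampled distant regions are covered by larger cells. The result is a more balanced distribution of points per cell across near and far regions, which helps maintain critical geometric structures for downstream detection.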
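The coordinate transform and a cylindrical cell lookup can be sketched as follows. The grid resolution `(480, 360, 32)`, the radial limit, and the height range are placeholder values chosen for illustration, not settings reported for EAS-Det; the helper `cell_arc_width` only makes the distance-adaptive property explicit.

```python
import math


def to_cylindrical(x, y, z):
    """Cartesian (x, y, z) -> cylindrical (rho, theta, z)."""
    rho = math.hypot(x, y)    # radial distance in the ground plane
    theta = math.atan2(y, x)  # azimuth in [-pi, pi]
    return rho, theta, z


def cylinder_voxel_index(point, rho_max, z_min, z_max, grid=(480, 360, 32)):
    """Index of the (rho, theta, z) cell containing a Cartesian point.

    `grid` gives the number of cells along rho, theta, and z (placeholder values).
    Points beyond rho_max or outside [z_min, z_max] are clipped to the border cells.
    """
    n_rho, n_theta, n_z = grid
    rho, theta, z = to_cylindrical(*point)
    i = min(int(rho / rho_max * n_rho), n_rho - 1)
    j = min(int((theta + math.pi) / (2 * math.pi) * n_theta), n_theta - 1)
    zc = min(max(z, z_min), z_max)
    k = min(int((zc - z_min) / (z_max - z_min) * n_z), n_z - 1)
    return i, j, k


def cell_arc_width(rho, n_theta=360):
    """Tangential width of a cell at radius rho; grows linearly with distance."""
    return rho * 2 * math.pi / n_theta
```

A cell at 40 m is four times as wide tangentially as one at 10 m, which is exactly the property that compensates for the lower point density at long range.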

An asymmetric 3D convolutional network with residual blocks is used to extract semantic features. Asymmetric, direction-specific kernels capture orientation-dependent patterns, which suits the anisotropic and often elongated shapes of urban objects such as vehicles and pedestrians. A dimension-decomposition-based contextual module aggregates features along each axis, efficiently encoding global scene context without introducing significant computational overhead. The resulting semantic features complement the edge information extracted by the EF module, providing a unified representation that captures both local object boundaries and global semantic context. This design is especially effective for small, sparse, or partially occluded objects commonly found in remote sensing point clouds.

3.1.4. Feature Concatenation and Fusion

To integrate geometric, edge, and semantic cues, each point is assigned a semantic label and descriptor, aligned across datasets (e.g., KITTI and Waymo) via one-hot encoding. The final feature vector for point $i$ is constructed as $\mathbf{f}_i = [x_i, y_i, z_i, e_i, \mathbf{s}_i]$, where $(x_i, y_i, z_i)$ are the 3D coordinates, $e_i$ represents the reflectance, and $\mathbf{s}_i$ corresponds to the one-hot semantic encoding of the point.
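A direct transcription of the per-point feature vector described above (3D coordinates, reflectance, and one-hot semantic code), assuming the three detection categories of Section 4.1.1 and assuming that points outside these categories receive an all-zero semantic code; the handling of background points and the function names are illustrative assumptions.

```python
# Detection-relevant categories used for the one-hot semantic code (Section 4.1.1).
CLASSES = ("car", "pedestrian", "cyclist")


def one_hot(label):
    """One-hot code over CLASSES; any other label is treated as background (all zeros)."""
    return [1.0 if label == name else 0.0 for name in CLASSES]


def point_feature(x, y, z, reflectance, label):
    """Concatenate 3D coordinates, reflectance, and the one-hot semantic code."""
    return [x, y, z, reflectance] + one_hot(label)


def build_features(points, labels):
    """Per-point features for a cloud of (x, y, z, reflectance) tuples and their labels."""
    if len(points) != len(labels):
        raise ValueError("points and labels must have the same length")
    return [point_feature(*p, lab) for p, lab in zip(points, labels)]
```

Because the semantic code only appends channels, a backbone's input layer needs to accept three extra features, while the rest of the detector stays unchanged.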

Multi-scale alignment is applied to fuse fine-grained local edge details and global semantic context. This ensures that the network preserves critical geometric boundaries while integrating high-level semantic cues, enhancing robustness to sparse sampling, occlusion, and cluttered scenes. The fused feature representation thus provides a comprehensive point description, supporting reliable and accurate 3D object detection.

3.2. LiDAR-Based 3D Object Detection

The fused feature vectors from the SF, EF, and DA modules are input to a LiDAR-based detection head, which predicts oriented 3D bounding boxes represented by center coordinates, dimensions, orientation, and category. Because EAS-Det modifies only point-level features and leaves the detector architecture unchanged, it is compatible with both one-stage and two-stage detectors. One-stage detectors regress box parameters directly from dense feature maps, either relative to predefined anchors (as in PointPillars) or in an anchor-free manner (as in CenterPoint), which keeps the pipeline simple and efficient. Two-stage detectors, on the other hand, generate coarse proposals and refine them using the enriched features.

The network is trained with a multi-task loss that combines classification, 3D box regression, and orientation supervision. The classification loss guides category prediction, the regression loss ensures accurate box localization, and the orientation loss captures heading angles via sine-cosine or bin-based formulations. This ensures that the detector distinguishes object classes while precisely encoding geometric and directional properties, which is particularly beneficial for small, sparse, or partially occluded objects.
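To make the two orientation formulations concrete, the sketch below encodes a heading angle either as a (sine, cosine) pair or as an angular bin index plus a residual offset from the bin center, and decodes it back. The number of bins (12) and the function names are illustrative assumptions; the loss terms applied to these targets are not shown.

```python
import math


def wrap_angle(a):
    """Wrap an angle to [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def encode_sincos(yaw):
    """Continuous target with no discontinuity at +/-pi."""
    return math.sin(yaw), math.cos(yaw)


def decode_sincos(s, c):
    return math.atan2(s, c)


def encode_bin(yaw, num_bins=12):
    """Bin index (classification target) plus residual from the bin center (regression target)."""
    bin_size = 2 * math.pi / num_bins
    a = yaw % (2 * math.pi)  # shift to [0, 2*pi)
    idx = int(a // bin_size) % num_bins
    residual = a - (idx + 0.5) * bin_size
    return idx, residual


def decode_bin(idx, residual, num_bins=12):
    bin_size = 2 * math.pi / num_bins
    return wrap_angle((idx + 0.5) * bin_size + residual)
```

The sine-cosine form avoids the jump between +π and -π in a directly regressed angle, while the bin-based form turns most of the problem into classification and leaves only a small residual to regress.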

Extensive experiments on KITTI and Waymo demonstrate that EAS-Det consistently improves mean average precision across all categories, with notable gains for challenging classes such as pedestrians and cyclists. By leveraging the enriched feature representations, the framework achieves a favorable balance between detection accuracy, robustness to sparsity and occlusion, and computational efficiency. Its modular design allows integration with existing LiDAR pipelines with minimal additional overhead, making EAS-Det well suited for real-world remote sensing scenarios.

4. Experiments and Results

This section presents the experimental setup and comprehensive evaluation of the proposed EAS-Det framework. We begin by introducing the datasets and implementation details, followed by performance comparisons with state-of-the-art 3D object detection methods. Finally, we conduct extensive ablation studies to validate the effectiveness and generalizability of the proposed modules across different detection architectures.

4.1. Implementation Details

4.1.1. Datasets and Evaluation Metrics

We evaluate the effectiveness of EAS-Det on two widely adopted benchmarks: the KITTI dataset and the Waymo Open Dataset. The KITTI dataset contains 7481 training samples and 7518 testing samples. Following the standard protocol, we use 3712 samples for training and 3769 for validation. Evaluation follows the KITTI 3D object detection benchmark, employing Average Precision (AP) and mean Average Precision (mAP) as primary metrics. The IoU thresholds are set to 0.7 for cars and 0.5 for pedestrians and cyclists. Detection difficulty is categorized into Easy, Moderate, and Hard levels based on occlusion and truncation degrees, which help assess model performance under different object conditions.
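For reference, the KITTI difficulty levels are defined by three per-object criteria: minimum 2D box height in the image, maximum occlusion level, and maximum truncation. The sketch below encodes the official thresholds; the strict height comparison is an assumption following commonly used evaluation scripts, and the function name is hypothetical. The levels are cumulative in evaluation: an object that qualifies as Easy is also counted at Moderate and Hard.

```python
# KITTI difficulty criteria: (name, min 2D box height [px], max occlusion level,
# max truncation fraction). Occlusion levels: 0 = fully visible,
# 1 = partly occluded, 2 = largely occluded, 3 = unknown.
KITTI_LEVELS = (
    ("Easy", 40, 0, 0.15),
    ("Moderate", 25, 1, 0.30),
    ("Hard", 25, 2, 0.50),
)


def kitti_difficulty(box_height_px, occlusion, truncation):
    """Return the easiest level an object satisfies, or None if it is ignored.

    Levels are cumulative: an "Easy" object is also evaluated at Moderate and Hard.
    """
    for name, min_height, max_occlusion, max_truncation in KITTI_LEVELS:
        if (box_height_px > min_height  # assumed strict comparison
                and occlusion <= max_occlusion
                and truncation <= max_truncation):
            return name
    return None
```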

For semantic segmentation pre-training, we utilize the SemanticKITTI dataset, which provides point-wise annotations for 28 semantic classes, such as road, vegetation, and building. After consolidating dynamic and static object variants and filtering out underrepresented categories, we retain 19 classes. The semantic branch is pre-trained on this dataset, and we remap the 19-class annotations into three detection-relevant categories: car, pedestrian, and cyclist. This remapping employs a predefined rule-based one-hot encoding scheme, ensuring a deterministic correspondence between the segmentation labels and detection targets, which is crucial for seamless integration.
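The rule-based remapping can be expressed as a small lookup table. The 19 class names below are the standard SemanticKITTI evaluation classes; the rule table itself is HYPOTHETICAL, since the exact correspondence used for EAS-Det is not listed in the text, and it maps every unlisted class to an all-zero (background) code.

```python
# The 19 SemanticKITTI evaluation classes.
SEMKITTI_19 = (
    "car", "bicycle", "motorcycle", "truck", "other-vehicle", "person",
    "bicyclist", "motorcyclist", "road", "parking", "sidewalk", "other-ground",
    "building", "fence", "vegetation", "trunk", "terrain", "pole", "traffic-sign",
)
DETECTION_CLASSES = ("car", "pedestrian", "cyclist")

# HYPOTHETICAL rule table: segmentation class -> detection class.
# Classes not listed here are treated as background.
REMAP_RULES = {"car": "car", "person": "pedestrian", "bicyclist": "cyclist"}


def remap_to_one_hot(seg_class):
    """Deterministically map a SemanticKITTI class name to a 3-way one-hot code."""
    if seg_class not in SEMKITTI_19:
        raise KeyError(f"unknown SemanticKITTI class: {seg_class}")
    target = REMAP_RULES.get(seg_class)  # None means background
    return [1 if target == name else 0 for name in DETECTION_CLASSES]
```

Keeping the rules in a single explicit table is what makes the correspondence deterministic: the same segmentation label always yields the same detection code, independent of the dataset being processed.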

The Waymo Open Dataset features high-resolution LiDAR scans, with an average of 180,000 points per frame. The dataset is designed for autonomous driving applications and includes various real-world driving scenarios. We follow the official evaluation protocol, which includes two difficulty levels: LEVEL_1 (L_1) for nearby, minimally occluded objects, and LEVEL_2 (L_2) for distant or heavily occluded objects. Experiments are conducted under both settings to evaluate the model’s cross-domain generalization capabilities in complex, dynamic driving environments.

4.1.2. Experimental Setup

All experiments were conducted on a workstation equipped with an NVIDIA GeForce RTX 2080 Ti GPU (11 GB VRAM), an Intel Core i7-10700K CPU, and 32 GB of system memory. The implementation is based on PyTorch 1.11.0 with Python 3.8 and CUDA 11.3, and is built upon the OpenPCDet framework [37]. The models are trained with a learning rate of 0.01 on KITTI and 0.003 on Waymo, using batch sizes of 8 and 16, respectively. We employ the OneCycleLR scheduler [38] to adjust the learning rate during training. Each model is trained for 80 epochs with a weight decay of 0.01 and a momentum of 0.9.
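The one-cycle policy can be illustrated with a simplified, framework-free cosine schedule: the learning rate warms up from `max_lr / div_factor` to `max_lr` and then anneals to a much smaller final value. The default hyperparameters mirror those of PyTorch's `torch.optim.lr_scheduler.OneCycleLR`, but the values actually used in the EAS-Det configuration are not stated, and this sketch is neither OpenPCDet's scheduler nor an exact replica of PyTorch's (step boundaries may differ slightly, and momentum cycling is omitted).

```python
import math


def one_cycle_lr(step, total_steps, max_lr, pct_start=0.3, div_factor=25.0,
                 final_div_factor=1e4):
    """Learning rate at `step` (0-based) of a two-phase cosine one-cycle schedule."""
    initial_lr = max_lr / div_factor
    min_lr = initial_lr / final_div_factor
    warmup_steps = max(1, int(pct_start * total_steps))
    if step < warmup_steps:
        # Phase 1: cosine warm-up from initial_lr to max_lr.
        start, end, t = initial_lr, max_lr, step / warmup_steps
    else:
        # Phase 2: cosine annealing from max_lr to min_lr over the remaining steps.
        span = max(1, total_steps - 1 - warmup_steps)
        start, end, t = max_lr, min_lr, min(1.0, (step - warmup_steps) / span)
    # Cosine interpolation: returns `start` at t = 0 and `end` at t = 1.
    return end + (start - end) * (1 + math.cos(math.pi * t)) / 2
```

Under these assumed defaults, the KITTI setting with `max_lr=0.01` would start at 4e-4, peak at 0.01 after 30% of the steps, and end near 4e-8.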

4.1.3. Data Augmentation and Training Details

To enhance model robustness and reduce overfitting, we adopt comprehensive data augmentation strategies following established practices [5]. A sample database is constructed from the training set annotations, from which ground-truth objects are randomly sampled and inserted into training scenes. The augmentation pipeline includes random scaling, axis-aligned flipping, and rotation applied to both bounding boxes and point cloud data. These augmentations simulate variations in object poses and orientations, enabling the model to generalize better to different object arrangements. For the Waymo dataset, additional techniques such as range cropping and random downsampling are applied to accommodate its higher point density and larger sensing range. Range cropping focuses on sampling more points from the object regions of interest, while downsampling reduces computational complexity and ensures that the model can handle the high-density point clouds efficiently.
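The geometric augmentations can be sketched as transforms applied jointly to points and boxes, so that every point keeps its position relative to its box. The sketch assumes boxes are stored as `(x, y, z, dx, dy, dz, heading)` in the LiDAR frame, as in OpenPCDet, and that points are `(x, y, z, ...)` tuples; the random ranges in `random_augment` are hypothetical, and ground-truth database sampling is not shown.

```python
import math
import random


def _rotate_xy(x, y, angle):
    """Rotate a 2D vector counter-clockwise by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return c * x - s * y, s * x + c * y


def rotate_z(points, boxes, angle):
    """Rotate points and boxes about the z-axis; box headings increase by `angle`."""
    new_pts = [(*_rotate_xy(x, y, angle), z, *rest) for x, y, z, *rest in points]
    new_boxes = [(*_rotate_xy(x, y, angle), z, dx, dy, dz, h + angle)
                 for x, y, z, dx, dy, dz, h in boxes]
    return new_pts, new_boxes


def flip_x_axis(points, boxes):
    """Mirror the scene across the x-axis (y -> -y); box headings are negated."""
    new_pts = [(x, -y, z, *rest) for x, y, z, *rest in points]
    new_boxes = [(x, -y, z, dx, dy, dz, -h) for x, y, z, dx, dy, dz, h in boxes]
    return new_pts, new_boxes


def scale(points, boxes, factor):
    """Uniformly scale coordinates and box sizes; headings and extra features are unchanged."""
    new_pts = [(x * factor, y * factor, z * factor, *rest) for x, y, z, *rest in points]
    new_boxes = [(x * factor, y * factor, z * factor, dx * factor, dy * factor, dz * factor, h)
                 for x, y, z, dx, dy, dz, h in boxes]
    return new_pts, new_boxes


def random_augment(points, boxes, rng: random.Random):
    """Random flip, rotation, and scaling (hypothetical parameter ranges)."""
    if rng.random() < 0.5:
        points, boxes = flip_x_axis(points, boxes)
    points, boxes = rotate_z(points, boxes, rng.uniform(-math.pi / 4, math.pi / 4))
    return scale(points, boxes, rng.uniform(0.95, 1.05))
```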

4.2. Comparison with State-of-the-Art

We evaluate EAS-Det on the KITTI and Waymo benchmarks, with hyperparameters optimized on the respective validation sets. Comprehensive comparisons across different backbones, object categories, and difficulty levels demonstrate the effectiveness of our approach, with results summarized in Table 1 and Table 2.

4.2.1. On the KITTI Dataset

EAS-Det achieves consistent performance improvements across all categories and difficulty levels on the KITTI benchmark. As shown in Table 1, our framework delivers substantial AP gains over the best-performing multimodal method [34]: 10.34%, 9.71%, and 8.95% for pedestrians under the easy, moderate, and hard settings, respectively, and 8.01%, 8.21%, and 8.66% for cyclists. These improvements underscore the effectiveness of the ESI module in capturing both fine-grained geometric details and global semantic context.

The results also reveal the architectural advantages of EAS-Det. EAS-Point excels in preserving structural details through point-based representations, which are critical for detecting small or occluded objects. In contrast, EAS-Pillar, while using efficient voxel-based processing, achieves competitive performance with minimal computational overhead, demonstrating the complementary nature of our design across different backbone architectures.

4.2.2. On the Waymo Dataset

Evaluation on the large-scale Waymo Open Dataset validation set under challenging real-world conditions confirms the strong generalization capability of EAS-Det. EAS-Pillar improves LEVEL_1 Vehicle AP by 3.17 percentage points over the PointPillars baseline, while EAS-PV achieves competitive performance across all categories. Notably, EAS-PV establishes a new state-of-the-art in pedestrian detection on the validation set with 75.93% AP under LEVEL_1, significantly outperforming all compared methods.

These results underscore the robustness of the proposed modules, which yield consistent improvements across diverse sensing environments. Integrating edge geometry with semantic context via the ESI module improves resilience in sparse and cluttered LiDAR scenes.

4.2.3. Visualization Analysis

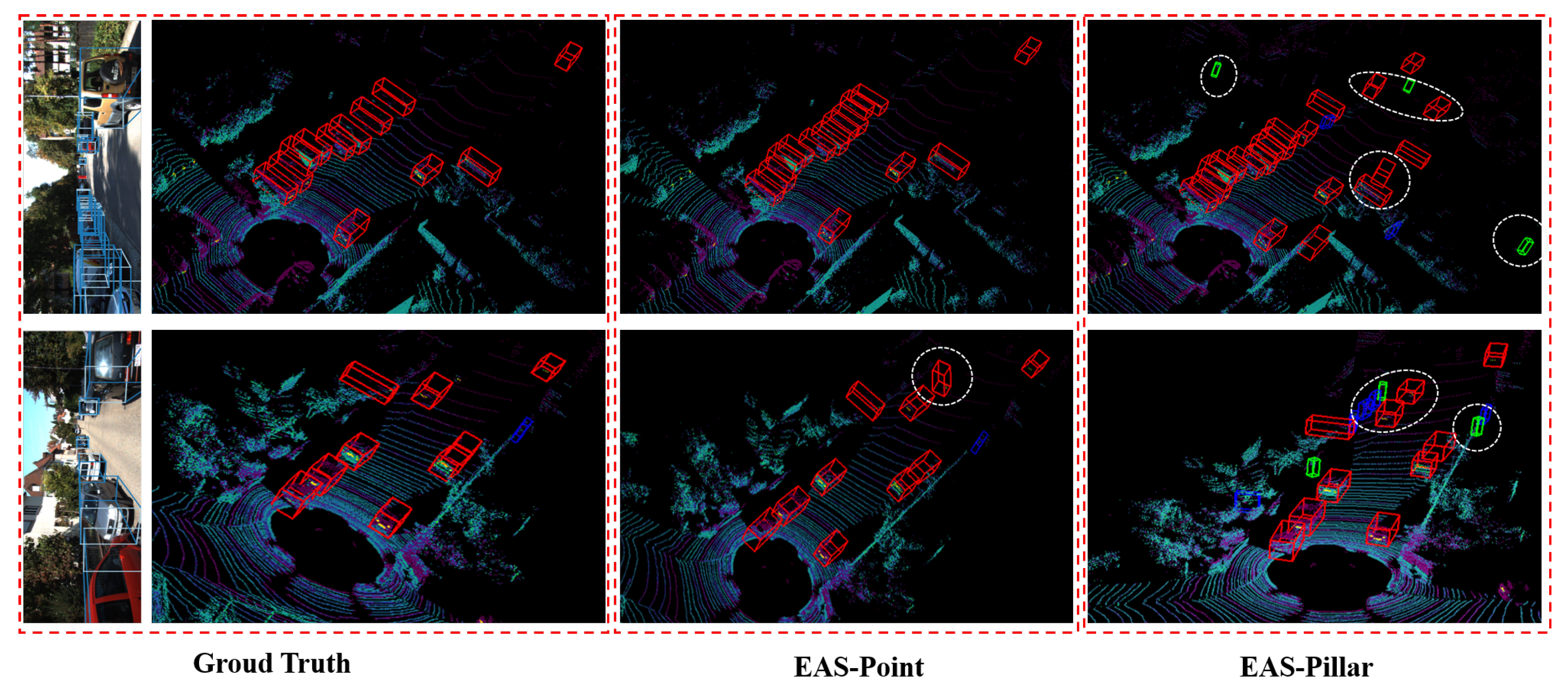

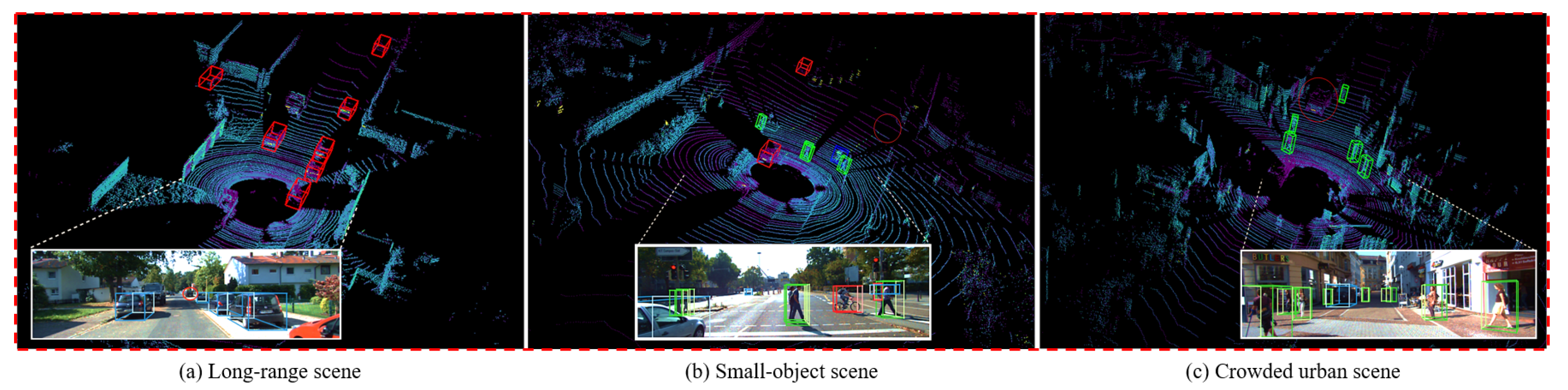

To qualitatively demonstrate the operational effectiveness of our approach, we conduct visualization analyses on representative scenes from the KITTI dataset.

Figure 4 presents a comparative visualization framework, contrasting detection results of PointRCNN and PointPillars with and without EAS-Det integration. The visualizations show that our method reliably detects diverse objects even under challenging conditions, including crowded scenes, background clutter, and partial occlusion, highlighting its robustness and real-world applicability.

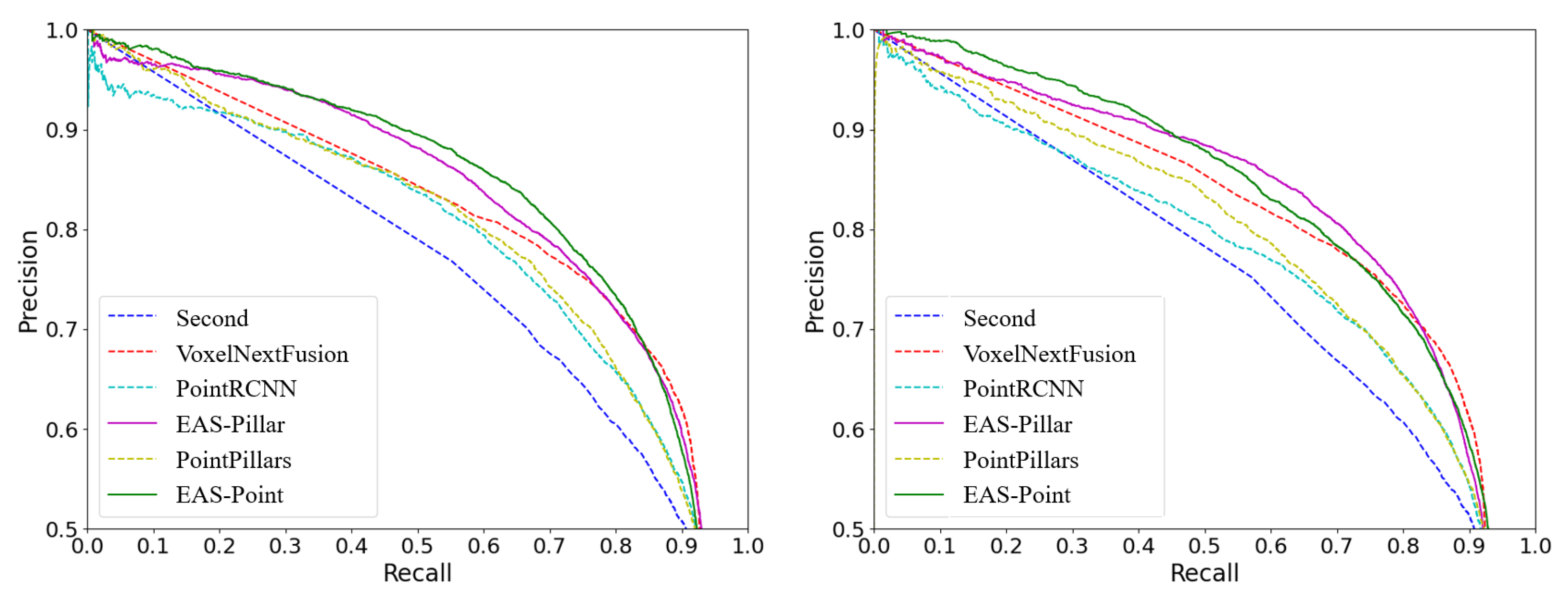

Furthermore, the precision–recall (PR) curves in Figure 5 confirm that EAS-Det achieves a superior balance between precision and recall across both 3D and bird’s-eye view (BEV) detection tasks. The curves maintain consistently higher precision across recall thresholds, validating the performance advantages of our edge-semantic fusion approach over the baseline detectors.

4.3. Ablation Study

To comprehensively evaluate the contributions of each module and design choice, we conduct systematic ablation studies on the KITTI validation set. All variants are trained with identical hyperparameters, input resolutions, and optimization strategies to ensure fair comparison. Each configuration is trained independently until convergence, and performance metrics on the validation set are reported. In addition to accuracy, we analyze inference efficiency and model complexity to provide a holistic assessment.

4.3.1. Effectiveness of the Edge-Semantic Interaction Module

Table 3 presents the progressive integration of EF, DA, and ESI modules, highlighting their complementary contributions. The DA module yields substantial gains in 3D mAP, with improvements of +4.37% for EAS-Point and +1.28% for EAS-Pillar, while EF enhances geometric fidelity and boundary sharpness, providing 1.5–2.0% improvements across both backbones. These enhancements are critical for precise object contour modeling and bounding box alignment.

The synergistic effect of EF and DA is evident when combined: EAS-Point mAP increases from 63.42% to 66.39% with EF, indicating that EF reinforces geometric integrity while DA improves feature discriminability via channel and spatial attention. Together, they substantially enhance detection robustness and accuracy, particularly in complex scenes with small or partially occluded objects.

4.3.2. Inference Speed and Model Size

We assess deployment efficiency across architectures in Table 3. EAS-Pillar maintains real-time performance at 24–32 FPS with only 5–10 ms of additional latency. In contrast, EAS-Point achieves higher accuracy, with a 68.94% 3D mAP at 5–6 FPS (212 ms per frame), making it suitable for offline high-precision tasks such as HD mapping and autonomous driving log analysis.

All variants remain lightweight with parameter sizes below 56 MB, introducing minimal computational overhead. Compared to conventional LiDAR detectors such as SECOND and PointPillars, EAS-Det achieves a favorable balance between detection accuracy and efficiency, demonstrating scalability for both embedded and cloud-based deployments.

4.3.3. Fusion Strategy Analysis

We compare three feature fusion strategies, simple concatenation (Concat), element-wise addition (Add), and the proposed EAS mechanism, as summarized in Table 4. Concat and Add achieve 77.53% and 78.22% mAP, respectively, while EAS outperforms both with 80.91% mAP, including a +4.25% improvement in Pedestrian AP over Concat. This gain is attributed to EAS’s adaptive weighting mechanism, which balances geometric and semantic information by suppressing redundant channels while emphasizing task-relevant features.

Unlike conventional fusion approaches that treat all features equally, the DA module within EAS performs adaptive feature re-weighting, which selectively enhances discriminative cues while attenuating less informative responses. By dynamically recalibrating feature activations according to both local geometric patterns and global semantic context, it enables a more synergistic integration of edge-aware and contextual information. This design proves particularly effective in LiDAR-based detection, where sparse and irregular point clouds require more structured feature interaction than simple concatenation or averaging can provide.

4.3.4. Robustness to Point Cloud Sparsity

To assess robustness under practical sensing conditions, we simulate point cloud sparsity by randomly downsampling input LiDAR data. Experiments use the EAS-Point configuration on the KITTI validation set under Moderate difficulty.
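A sparsity setting such as 25% density can be simulated by sampling a fixed fraction of the points uniformly at random without replacement. The sketch below assumes points stored as `(x, y, z, intensity)` tuples and a fixed seed for repeatability; the function name `random_downsample` and these conventions are illustrative assumptions, not the exact experimental procedure.

```python
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float, float]  # x, y, z, intensity (assumed layout)


def random_downsample(points: Sequence[Point], keep_ratio: float,
                      seed: int = 0) -> List[Point]:
    """Keep roughly keep_ratio of the points, chosen uniformly at random."""
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    k = max(1, round(len(points) * keep_ratio)) if points else 0
    rng = random.Random(seed)  # fixed seed so every run sees the same subset
    # Sample indices without replacement, then sort them to keep scan order.
    idx = sorted(rng.sample(range(len(points)), k))
    return [points[i] for i in idx]


if __name__ == "__main__":
    scan = [(float(i), 0.5 * i, 0.0, 1.0) for i in range(1000)]
    for ratio in (1.0, 0.75, 0.5, 0.25):
        print(ratio, len(random_downsample(scan, ratio)))
```

Sorting the sampled indices is a convenience that preserves the original scan order; uniform sampling removes points independently of range, so it does not reproduce the distance-dependent sparsity of a real sensor.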

As shown in Table 5, the EF module exhibits notable stability: even at 25% point cloud density, the overall mAP decreases by only 2.96 percentage points, from 72.34% to 69.38%. This indicates that geometrically grounded edge cues remain informative despite severe sparsity.

Performance remains stable for categories with fewer points, such as pedestrians and cyclists, which are typically more challenging to detect. The edge features effectively capture salient contours and spatial boundaries, confirming that EAS-Det is accurate and reliable across varying point cloud densities, supporting its deployment in real-world autonomous perception systems.

4.3.5. Parameter Sensitivity Analysis

Following common practice in attention design [45], we conducted a comprehensive sensitivity analysis of the key hyperparameters in EAS-Det.

Table 6 presents the effect of varying the Reduction Ratio ($r$) and the Fusion Initialization Weights on 3D detection performance on the KITTI validation set. When $r$ is varied among {2, 4, 8}, the overall mAP remains within a narrow range, indicating that the default value of $r$ is a robust and stable configuration. Similarly, the initialization of the two fusion weights has a negligible impact on final performance: only slight mAP variations are observed with alternative initializations (e.g., 0.2/0.8 or 0.8/0.2). These findings indicate that EAS-Det is largely insensitive to these hyperparameters, which eases reproduction and adaptation to different settings.
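The cost side of the reduction ratio can be quantified directly. Assuming an SE-style bottleneck of two fully connected layers with biases ($C \to C/r \to C$), the attention block has $2C^2/r + C/r + C$ parameters, so doubling $r$ roughly halves its size. The sketch below evaluates this for a hypothetical width of $C = 64$; the actual channel widths in EAS-Det may differ.

```python
def se_bottleneck_params(channels: int, r: int) -> int:
    """Parameters of a two-layer C -> C/r -> C bottleneck, including biases."""
    if r < 1 or channels % r != 0:
        raise ValueError("r must be a positive divisor of channels")
    hidden = channels // r
    first_fc = channels * hidden + hidden     # C -> C/r weights + biases
    second_fc = hidden * channels + channels  # C/r -> C weights + biases
    return first_fc + second_fc


if __name__ == "__main__":
    # Hypothetical channel count chosen for illustration.
    for r in (2, 4, 8):
        print(f"r={r}: {se_bottleneck_params(64, r)} parameters")
    # r=2: 4192, r=4: 2128, r=8: 1096
```

Combined with the small mAP variation across $r$, this suggests that the reduction ratio mainly trades a modest number of attention parameters rather than accuracy.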

5. Discussion

5.1. Module Contributions and Synergies

The ablation study demonstrates the distinct contributions and synergistic effects of each proposed component. The Edge Feature (EF) module enhances geometric representations, improving the boundary localization that is critical for accurate object detection. The Dual Attention (DA) module adaptively balances spatial and channel dependencies, refining contextual relationships across multi-scale features. The Edge-Semantic Interaction (ESI) module effectively integrates structural and semantic cues, yielding the most significant performance gains, particularly for small or heavily occluded objects. These findings confirm the complementary nature of the proposed modules and their value as enhancements to mainstream 3D detection architectures.

A pivotal outcome of our experimental evaluation is the superior performance of EAS-Det in detecting small objects, namely pedestrians and cyclists. As quantitatively established in Section 4.2 (Table 1), our framework achieves substantial AP gains of up to +10.34% for pedestrians and +8.66% for cyclists on the KITTI benchmark, with consistent improvements across all difficulty levels. This demonstrates that our edge-semantic fusion approach is particularly effective in capturing the fine-grained structural details that are critical for recognizing objects with minimal point cloud signatures.

5.2. Architectural Compatibility and Performance

EAS-Det delivers consistent performance improvements across both single-stage and two-stage detection paradigms. Single-stage detectors maintain real-time performance (24–32 FPS) while benefiting from edge-aware feature fusion, which is particularly effective for medium- and large-scale objects. Two-stage detectors achieve higher precision in fine-grained localization, with the ESI modules significantly enhancing accuracy for small or partially occluded objects. Notably, EAS-Det improves detection across all object categories and difficulty levels, with the largest gains for challenging small or occluded objects. We attribute this improvement to the framework’s ability to preserve object boundaries and enhance feature alignment through explicit geometric-semantic interaction, which prior fusion-based approaches do not model explicitly.

5.3. Robustness Under Sparse and Noisy Conditions

EAS-Det demonstrates strong robustness to sparse and noisy LiDAR data, a key requirement for real-world deployment. Its performance remains stable even at 25% of the original point density, as edge-aware features effectively preserve structural information under sparsity. The EF module’s gradient-based edge extraction captures key geometric boundaries, ensuring reliable detection in sparse or long-range sensing. Compared to density- or semantics-only methods, our edge-semantic fusion provides superior resilience in challenging perceptual conditions.
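As a concrete illustration of gradient-based edge extraction, the sketch below computes a Sobel gradient magnitude on a BEV height map. The Sobel operator, the height-map input, and the zeroed one-cell border are assumptions chosen for clarity; the EF module's actual operator may differ.

```python
import math
from typing import List

Grid = List[List[float]]  # bev[i][j]: e.g., max point height in BEV cell (i, j)

SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel_edge_magnitude(bev: Grid) -> Grid:
    """Gradient magnitude of a BEV grid; the one-cell border is left at zero."""
    h, w = len(bev), len(bev[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(1, h - 1):
        for j in range(1, w - 1):
            gx = gy = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    v = bev[i + di][j + dj]
                    gx += SOBEL_X[di + 1][dj + 1] * v
                    gy += SOBEL_Y[di + 1][dj + 1] * v
            # Large values mark abrupt height changes, e.g., object boundaries.
            out[i][j] = math.hypot(gx, gy)
    return out


if __name__ == "__main__":
    # Flat ground on the left, a 1 m high object surface on the right.
    height_map = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
    for row in sobel_edge_magnitude(height_map):
        print(row)
```

Only cells adjacent to the height step respond, while uniform regions, whether ground or object interior, produce zero gradient, which is the boundary-focused behavior the EF module relies on.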

The framework also handles noisy inputs effectively. Gradient-based edge features emphasize structural variations while suppressing local noise during BEV voxelization and convolution, preserving clear object contours. In the sparsity experiments (Table 5), EAS-Det remains competitive even with 50% of the points removed. Improved performance on challenging KITTI and Waymo scenes involving occlusion, clutter, and sensor noise further validates its robustness and practical viability for real-world LiDAR perception systems.

5.4. Computational Efficiency and Deployment

EAS-Det achieves a favorable balance between detection accuracy and computational efficiency. As summarized in Table 3, the complete EAS-Pillar model improves the 3D mAP by +4.71% over the baseline with only an 8.1% increase in FLOPs (from 120.5 G to 130.2 G) and an almost unchanged model size of 55.7 MB, achieving real-time inference at 24 FPS. Similarly, EAS-Point attains a +9.89% mAP gain while introducing 11.7% additional FLOPs and maintaining a compact 47.0 MB model.

The modular architecture allows seamless integration into mainstream backbones without full retraining, enabling flexible adaptation to diverse sensing configurations. These results show that EAS-Det delivers substantial performance improvements with minimal computational and memory overhead, suggesting suitability for embedded and edge-computing platforms. This favorable efficiency–accuracy trade-off further underscores its potential for safety-critical, real-time applications such as autonomous driving and remote sensing.

5.5. Qualitative Analysis

To visually validate the performance of EAS-Det, particularly its superior capability in small-object detection, we provide a qualitative comparison in Figure 6. Empowered by edge-aware semantic fusion, our framework exhibits remarkable robustness in detecting challenging instances such as distant pedestrians and cyclists, where baseline detectors (e.g., PointPillars) often fail to generate bounding boxes due to sparse point distributions and weak semantic features. This qualitative evidence directly correlates with the significant quantitative improvements for pedestrians and cyclists reported in Table 1, collectively substantiating that our approach effectively enhances the perception of small-scale targets in complex point clouds.

5.6. Implications for Multi-Platform Sensing

While validated on ground-based LiDAR datasets, EAS-Det’s core principles address a key challenge in remote sensing: the effective fusion of structural and contextual cues across heterogeneous platforms such as satellite, aerial, and ground-based systems. The proposed edge-semantic interaction mechanism offers a unified formulation that could serve as a foundation for cross-domain perception models integrating multi-source sensing perspectives. Insights gained from autonomous driving applications can further drive advances in large-scale 3D scene understanding and collaborative sensing, contributing to more efficient and generalizable remote sensing systems.

6. Limitations and Future Work

Despite its advantages, EAS-Det exhibits several limitations that suggest directions for future research.

First, the current implementation relies solely on LiDAR data. While LiDAR is robust in low-light and adverse weather, its performance degrades under extreme sparsity, such as during long-range perception or heavy precipitation. Future work will explore multi-modal fusion with RGB cameras, radar, and event-based sensors to develop adaptive strategies for selecting the most informative cues under varying conditions.

Second, although EAS-Det is architecturally extendable to multi-modal fusion, practical implementation faces challenges including spatiotemporal calibration, feature alignment, and modality conflicts. Future work will develop robust fusion frameworks incorporating uncertainty-aware weighting and self-supervised alignment strategies to prevent performance degradation.

Third, the model’s decision-making process remains largely opaque despite some interpretability provided by edge and semantic visualizations. This black-box nature poses challenges for debugging and safety certification. Future research will integrate explainable AI techniques, such as attention map visualization, saliency-based feature attribution, and uncertainty quantification, to enhance transparency and facilitate failure diagnosis.

Fourth, EAS-Det currently lacks explicit modeling of predictive uncertainty, which is crucial for safety-critical tasks. Future work will integrate Bayesian deep learning and ensemble methods to estimate calibrated confidence measures, enabling reliable decision-making under domain shifts and sensor degradation.

Finally, while EAS-Det achieves acceptable efficiency on high-end GPUs, its deployment on resource-constrained embedded systems remains unverified. Future efforts will focus on model optimization through compression, pruning, quantization, and knowledge distillation. For specific application scenarios such as drones and autonomous vehicles, we will extend evaluation to large-scale, cross-domain datasets to ensure robustness in challenging real-world environments.

7. Conclusions

This paper presents EAS-Det, a novel LiDAR-based 3D object detection framework that explicitly integrates semantic context, geometric structure, and edge information through a dual-attention mechanism. By enabling multi-scale feature fusion, EAS-Det produces highly discriminative and robust representations. Extensive experiments on the KITTI and Waymo datasets demonstrate that EAS-Det consistently outperforms baseline methods across both single-stage and two-stage architectures, exhibiting remarkable robustness in detecting small and challenging objects such as pedestrians and cyclists under dense traffic and sparse point conditions.

The proposed approach enhances detection accuracy without sacrificing efficiency, while maintaining high inference speed and a compact footprint suitable for deployment. Future work will focus on extending EAS-Det to multi-modal detection by incorporating RGB and radar inputs, and on further optimizing its implementation for embedded and edge-computing platforms. These efforts will pave the way for unified cross-domain perception in autonomous systems.