Graph-Based Multi-Resolution Cosegmentation for Coarse-to-Fine Object-Level SAR Image Change Detection

Highlights

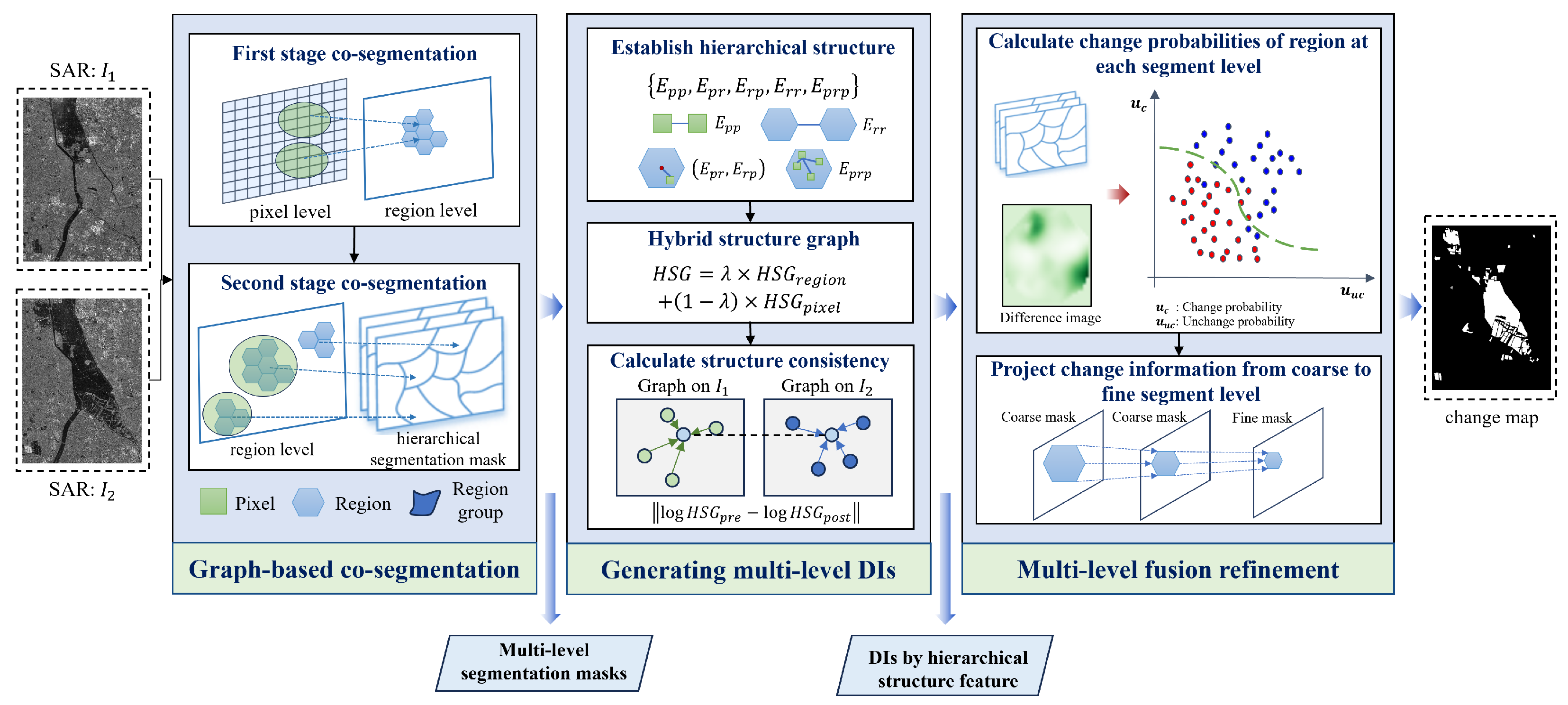

- We propose a novel SAR change detection framework based on multi-level structural feature embedding and graph consistency analysis.

- The method is robust to speckle noise and improves the detection of small-scale targets while maintaining the structural integrity of changed regions.

- The method models the spatially correlated structural relationships among analysis units at different scales within the image.

- The method improves the accuracy and efficiency of SAR image change detection in large-scale, complex scenes.

Abstract

1. Introduction

- (1)

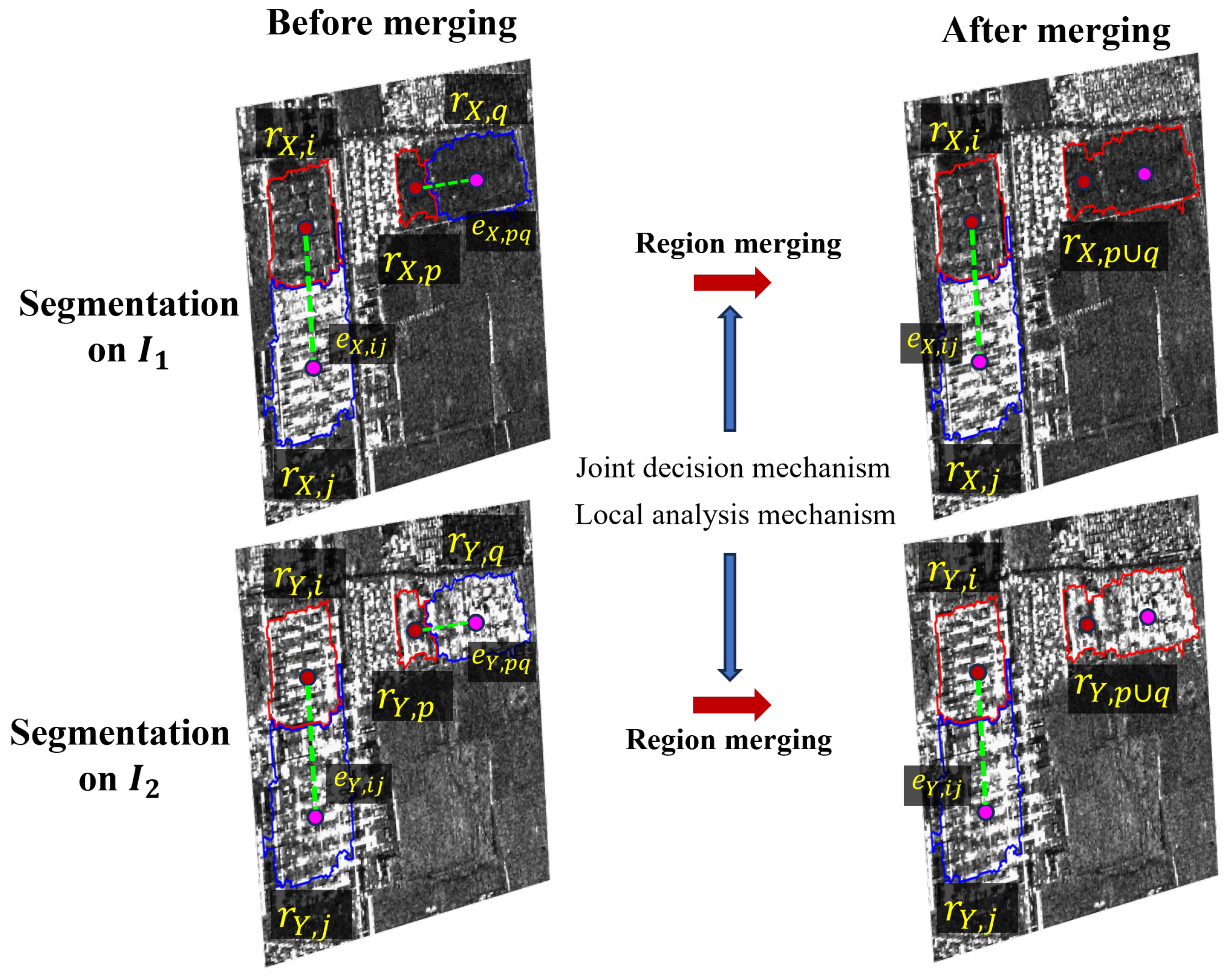

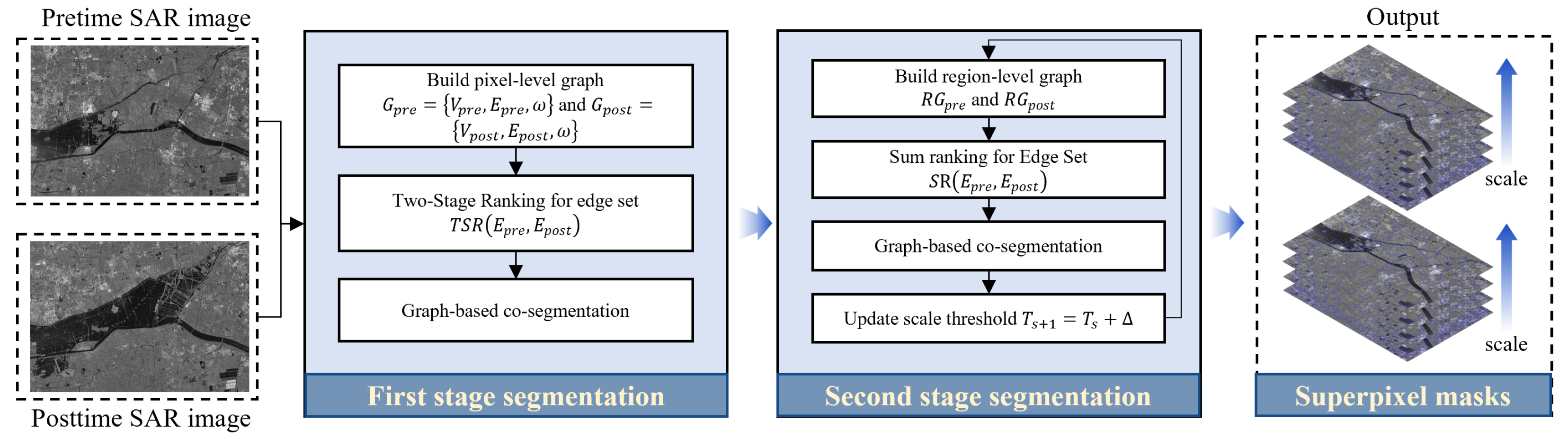

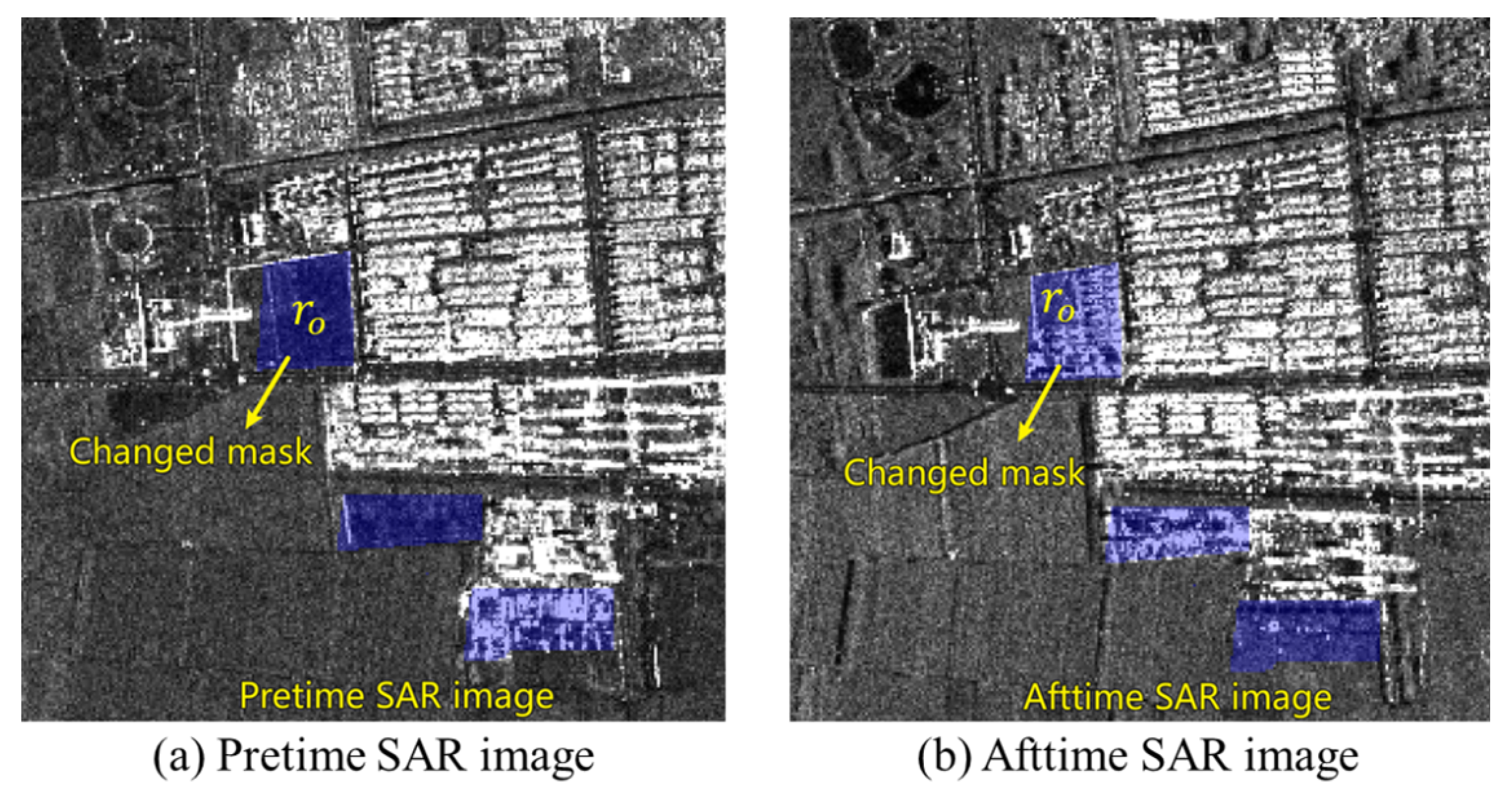

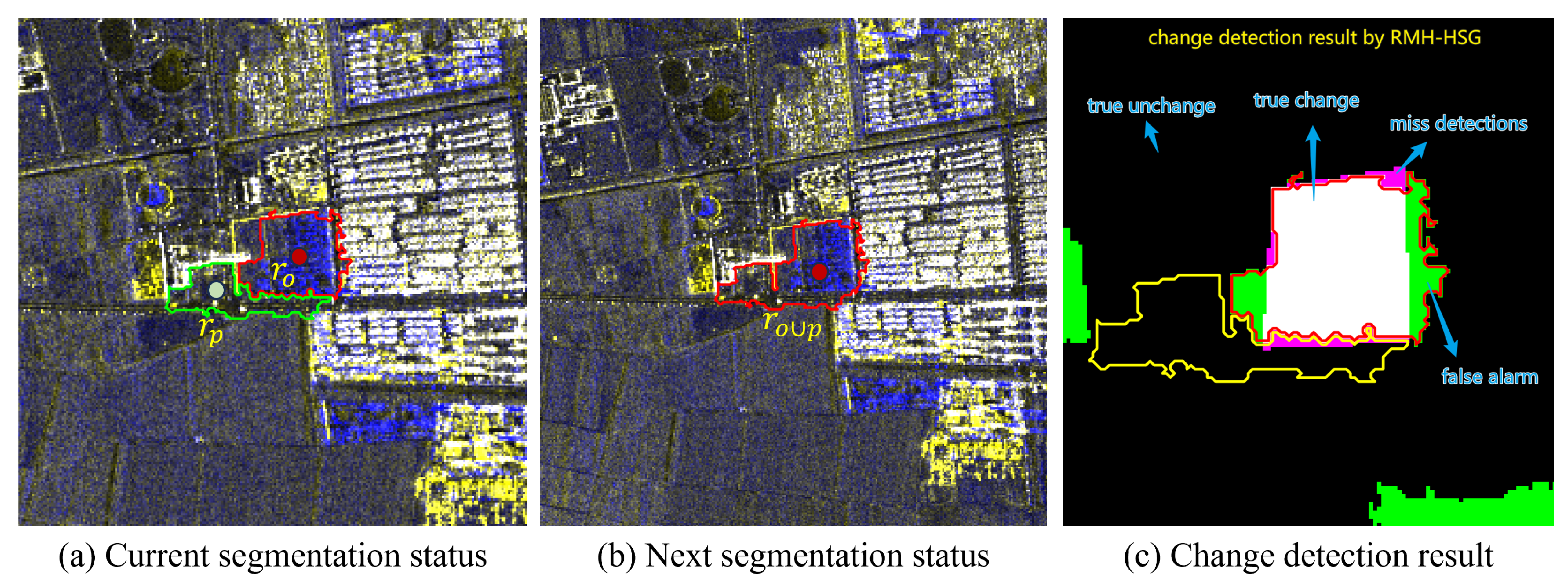

- A graph-based multi-resolution co-segmentation (GMRCS) method is proposed, which is guided by a two-stage ranking (TSR) strategy of edges. This approach jointly segments bi-temporal SAR images to generate hierarchically nested superpixel masks while effectively preserving the structural information of change regions.

- (2)

- A hybrid structural graph is constructed to represent multi-level spatial relationships within the SAR image, integrating pixel–pixel, pixel–region, and region–region connections. Change intensity is quantified from the perspective of graph structural consistency, effectively mitigating the impact of speckle noise.

- (3)

- A region-level fusion refinement model is developed to integrate change information across multiple segmentation scales. By progressively propagating coarse-scale changes to finer levels, this strategy maintains the spatial integrity of change regions and enhances the detection of small-scale variations.
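The nesting property named in contribution (1) can be illustrated with a minimal sketch. This is not the authors' GMRCS or TSR strategy: it assumes a plain 4-connected pixel graph, a hypothetical joint edge weight (the larger of the two dates' absolute differences, so a boundary in either image blocks merging), and fixed merge thresholds. Because merges accumulate as the threshold grows, every finer region lies inside exactly one coarser region.

```python
import numpy as np

def find(parent, i):
    # Union-find lookup with path halving.
    while parent[i] != i:
        parent[i] = parent[parent[i]]
        i = parent[i]
    return i

def nested_cosegmentation(img1, img2, thresholds):
    """Return one label map per threshold, ordered fine to coarse.

    img1, img2: co-registered 2-D arrays (e.g. log-intensity images).
    thresholds: hypothetical merge thresholds on the joint edge weight.
    """
    h, w = img1.shape
    idx = np.arange(h * w).reshape(h, w)

    # Horizontal then vertical 4-neighbour edges.
    u = np.concatenate([idx[:, :-1].ravel(), idx[:-1, :].ravel()])
    v = np.concatenate([idx[:, 1:].ravel(), idx[1:, :].ravel()])
    w_h = np.maximum(np.abs(img1[:, :-1] - img1[:, 1:]),
                     np.abs(img2[:, :-1] - img2[:, 1:]))
    w_v = np.maximum(np.abs(img1[:-1, :] - img1[1:, :]),
                     np.abs(img2[:-1, :] - img2[1:, :]))
    weight = np.concatenate([w_h.ravel(), w_v.ravel()])

    order = np.argsort(weight, kind="stable")
    parent = np.arange(h * w)
    masks, k = [], 0
    for t in sorted(thresholds):
        # Merge every not-yet-processed edge whose weight is within t.
        while k < len(order) and weight[order[k]] <= t:
            a = find(parent, u[order[k]])
            b = find(parent, v[order[k]])
            if a != b:
                parent[b] = a
            k += 1
        roots = np.array([find(parent, i) for i in range(h * w)])
        _, labels = np.unique(roots, return_inverse=True)
        masks.append(labels.reshape(h, w))
    return masks
```

The same union-find state is reused across thresholds, which is what guarantees the hierarchy; re-segmenting each scale independently would not.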

2. Methodology

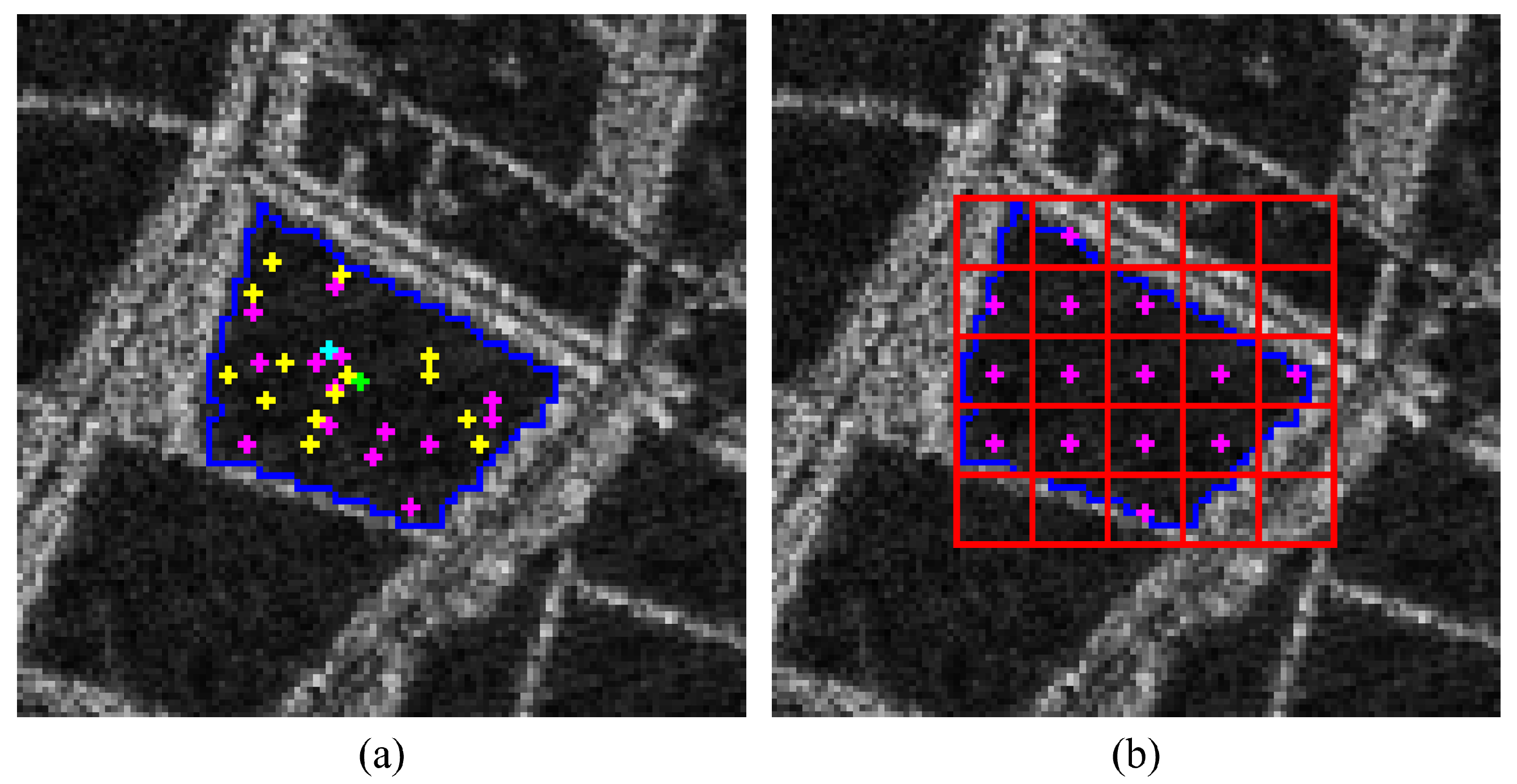

2.1. Graph-Based Multi-Resolution Co-Segmentation

2.2. Hybrid Structure Graph Change Detector

2.3. Region-Level Fusion Refinement Model

3. Experimental Results and Analysis

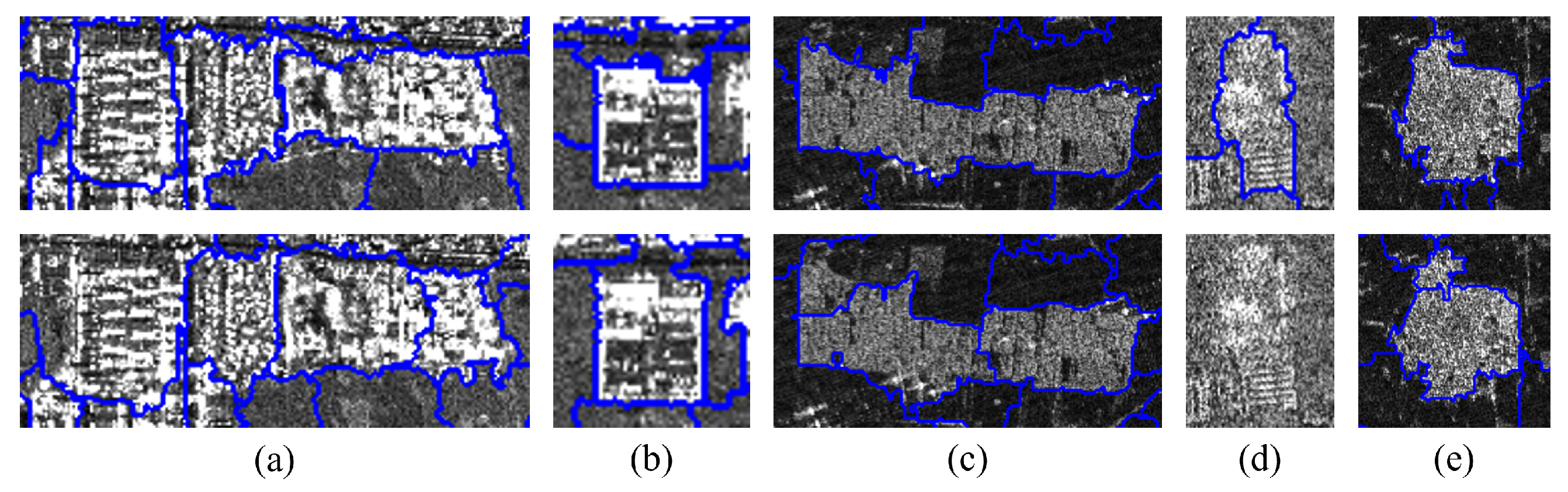

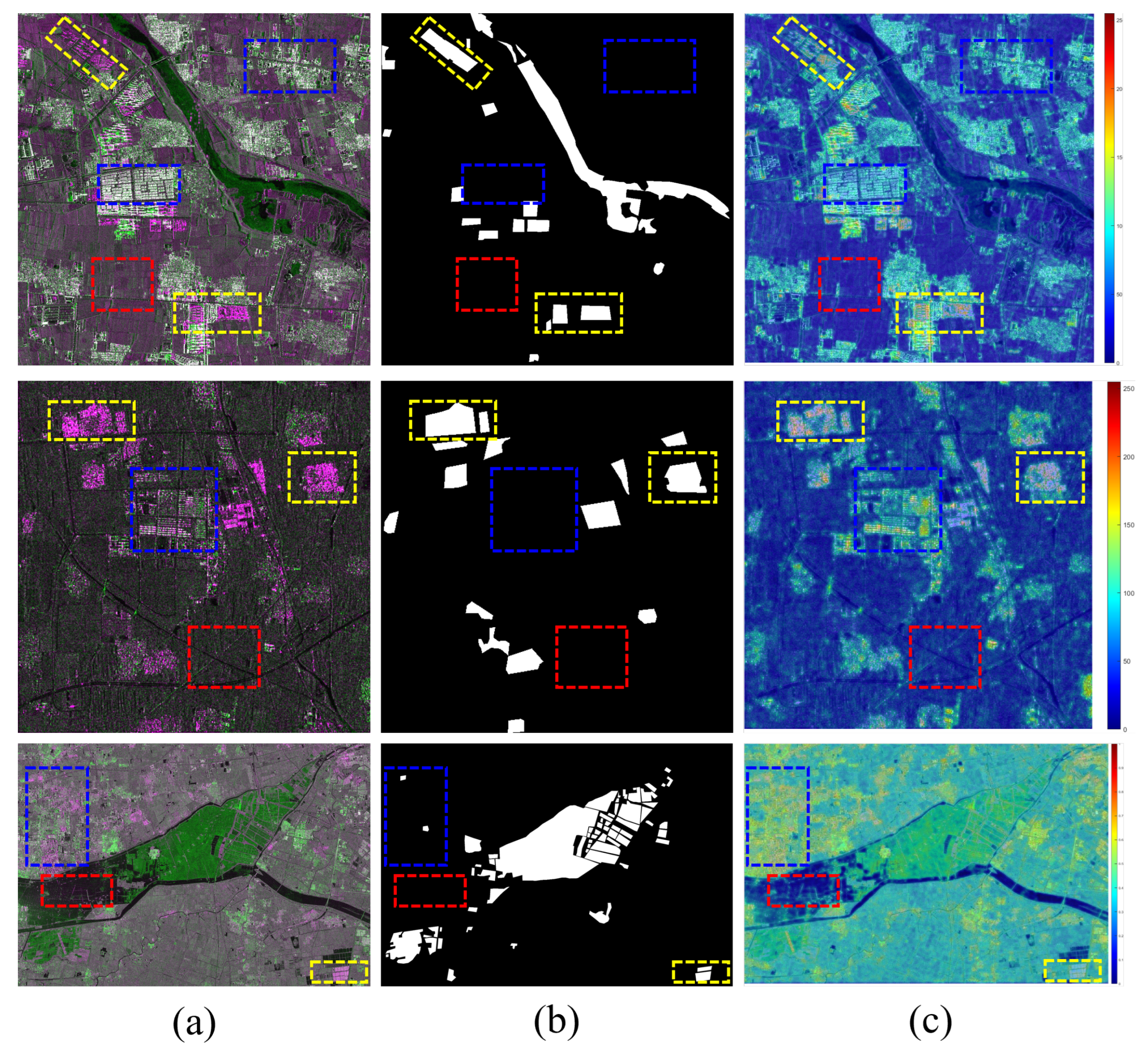

3.1. Experiment Dataset Description

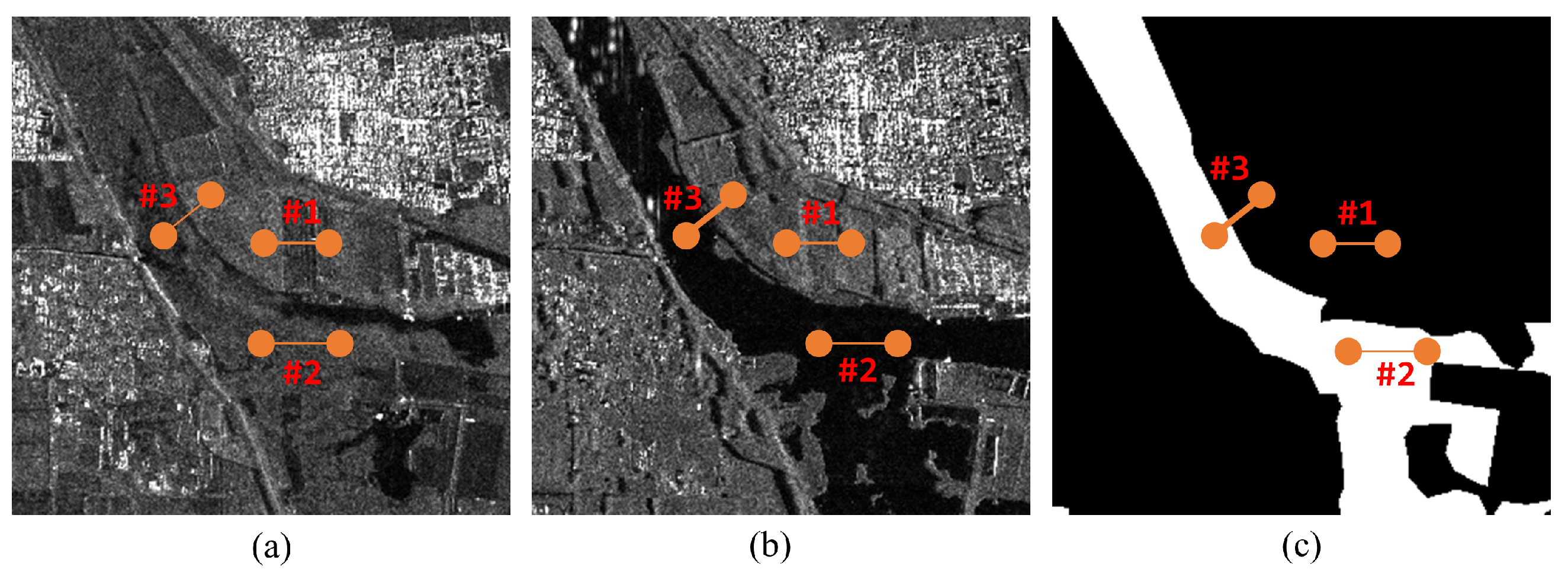

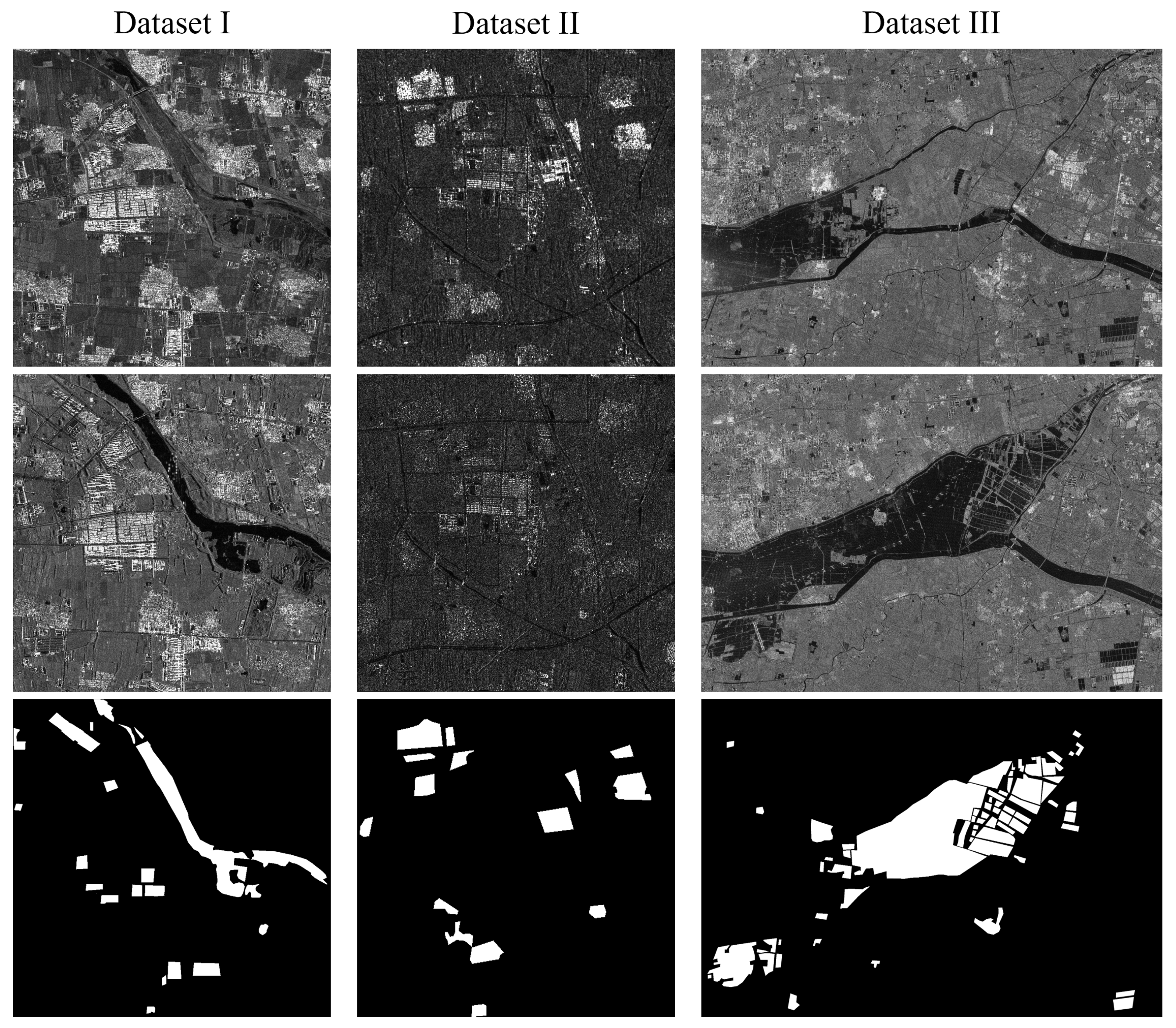

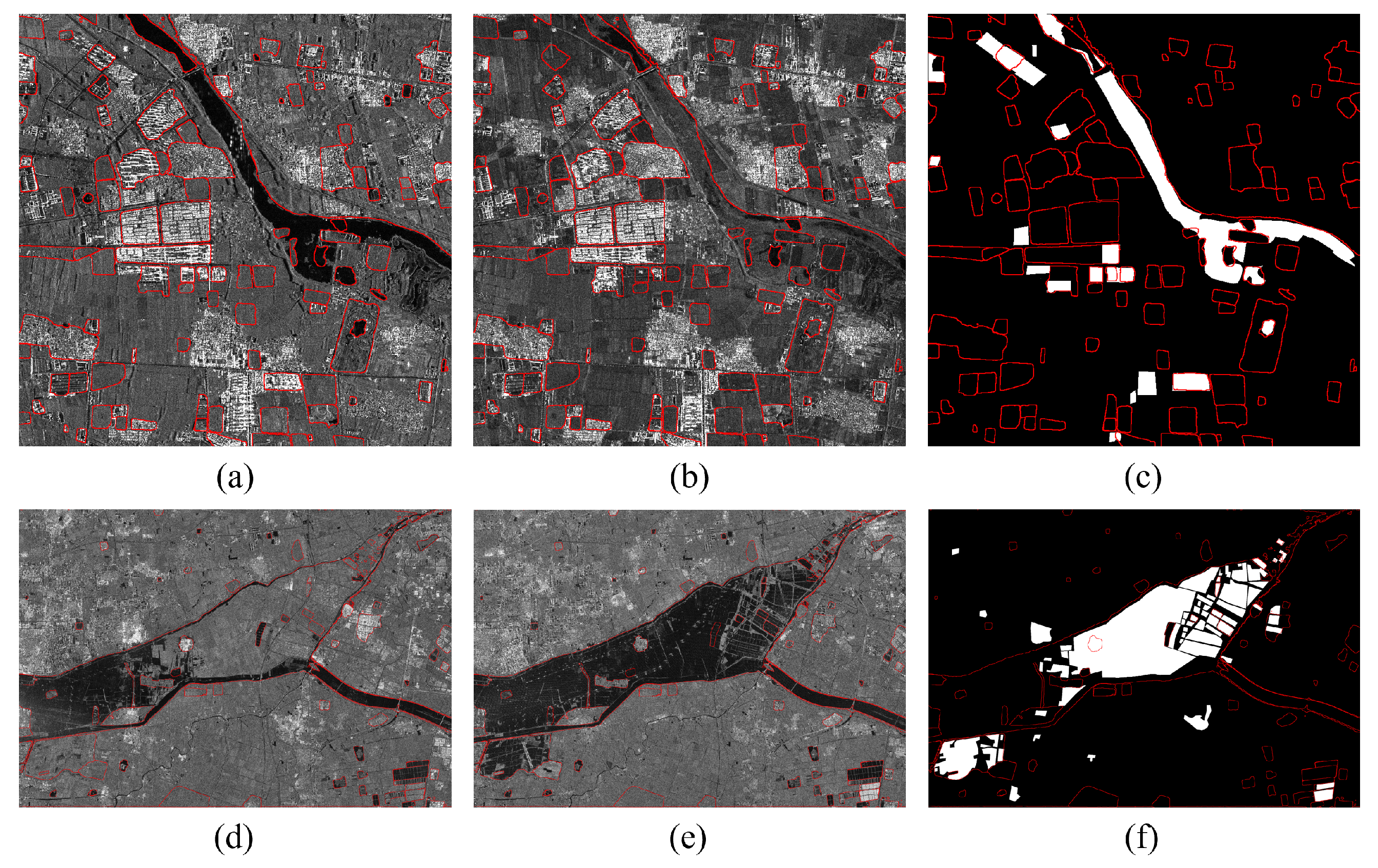

- Dataset I: Two TerraSAR images of 1000 × 1000 pixels, acquired in January 2014 and August 2015 (HH polarization, 3 m resolution, StripMap mode, ENL ≈ 6.3). These high-resolution images capture changes in water bodies and buildings between the two acquisitions.

- Dataset II: Two GF-3 images of 600 × 600 pixels, acquired in July and August 2023 (HH polarization, 10 m resolution, Fine StripMap mode, ENL ≈ 1.6). They are used to detect building changes following a flood event in Zhou Zhou, Hebei, China.

- Dataset III: This dataset covers a different geographic area from Dataset II within the same imaging task. The images are 1965 × 2848 pixels (HH polarization, 10 m resolution, Fine StripMap mode, ENL ≈ 5.7). The region shows significant post-flood surface changes in a floodplain in Hebei, including farmland inundation and water body expansion.
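The equivalent number of looks (ENL) quoted for each dataset indicates speckle strength: the lower the ENL, the stronger the speckle. How the authors estimated it is not stated here. A common estimator, shown below as a sketch, is the squared mean divided by the variance of an intensity image over a homogeneous region. The simulated patch and its parameters are assumptions for illustration only.

```python
import numpy as np

def estimate_enl(intensity_patch):
    """Moment-based ENL estimate: mean^2 / variance.

    Assumes `intensity_patch` holds SAR intensity (not amplitude or dB)
    sampled from a visually homogeneous area.
    """
    patch = np.asarray(intensity_patch, dtype=np.float64)
    return patch.mean() ** 2 / patch.var()

# Illustration on simulated fully developed speckle: L-look intensity over
# a constant-reflectivity area follows a gamma law with shape L, mean 1.
rng = np.random.default_rng(0)
looks = 6.0  # hypothetical value, close to the ENL reported for Dataset I
simulated = rng.gamma(shape=looks, scale=1.0 / looks, size=(200, 200))
print(f"estimated ENL: {estimate_enl(simulated):.2f}")
```

Under this reading, Dataset II (ENL ≈ 1.6) is close to single-look data and is the noisiest of the three.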

3.2. Comparison Methods and Parameter Setting

3.3. Evaluation Criteria

3.4. Change Detection Results

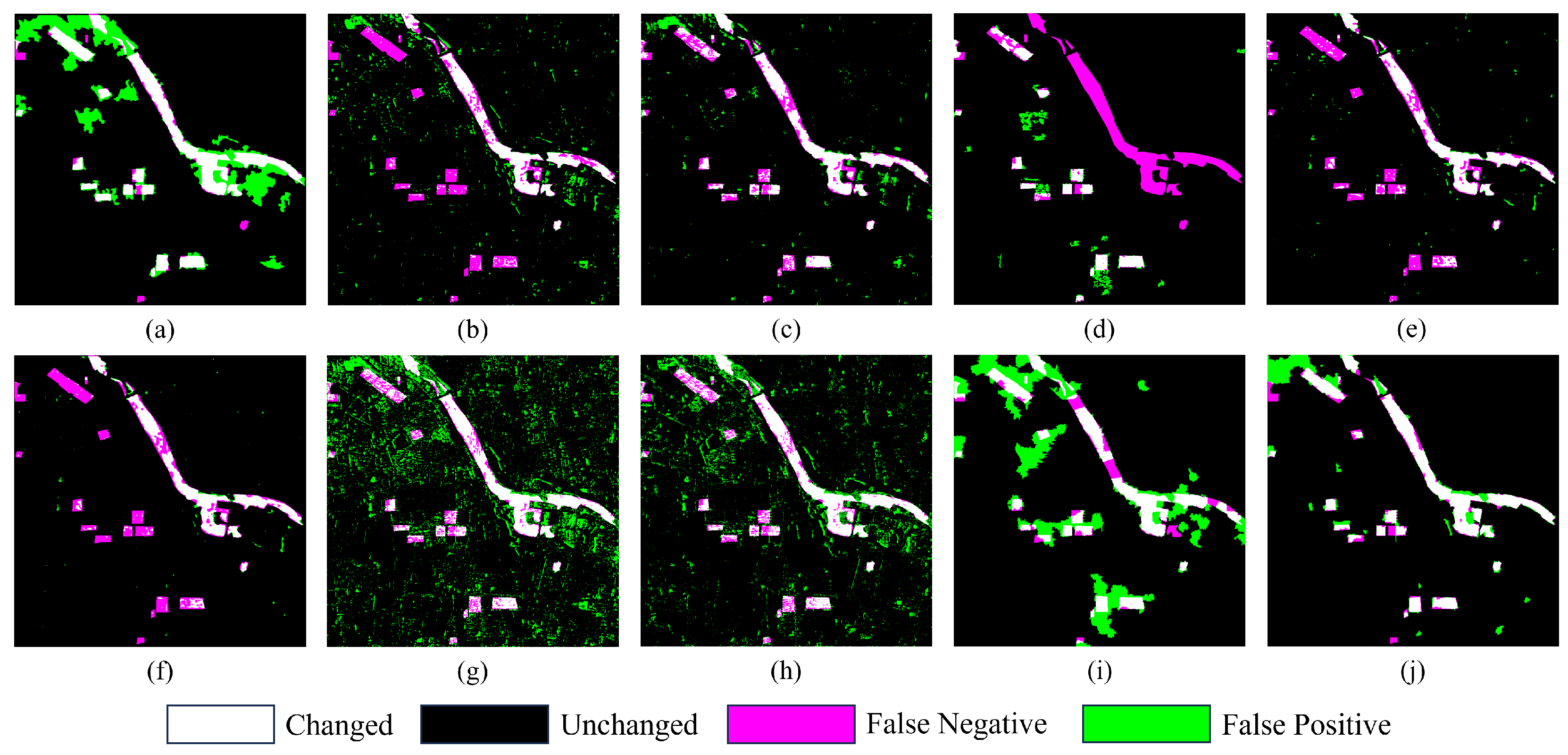

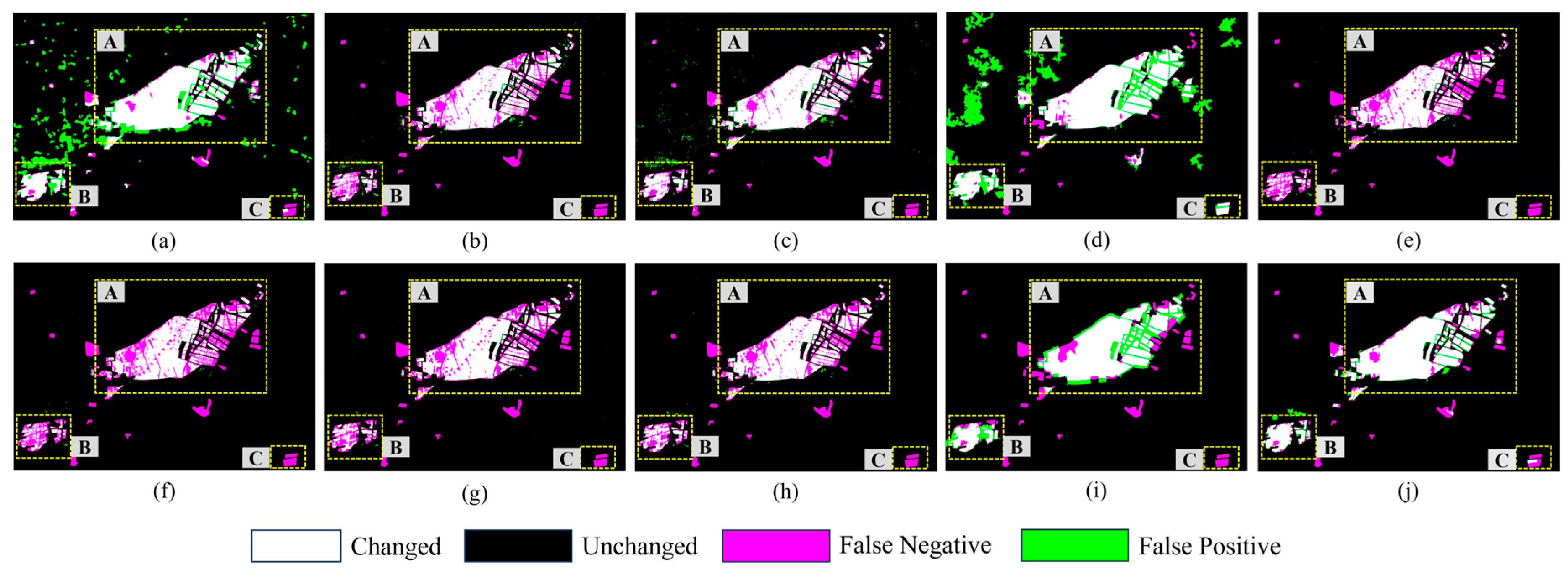

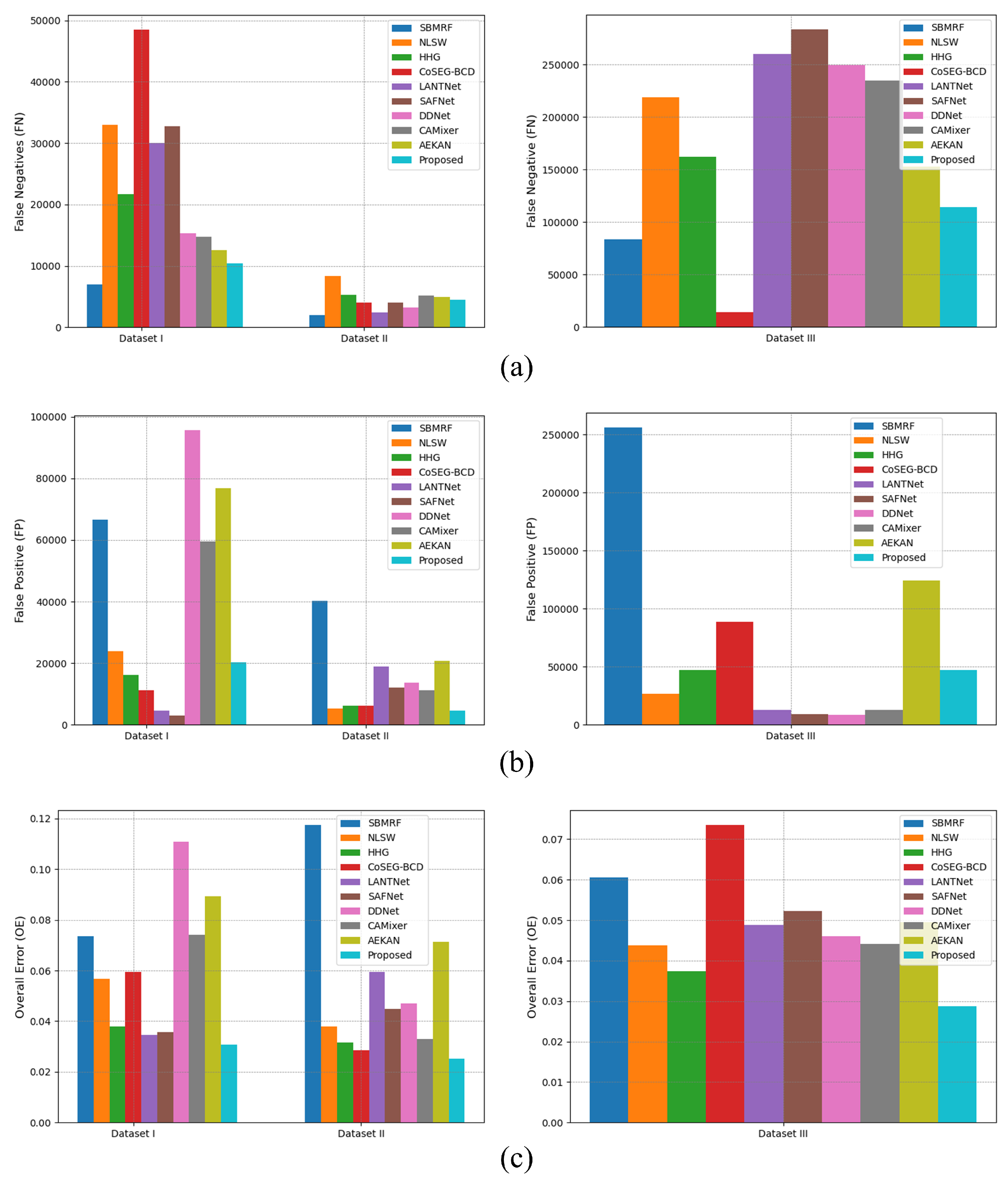

3.4.1. Change Detection Results on Dataset I

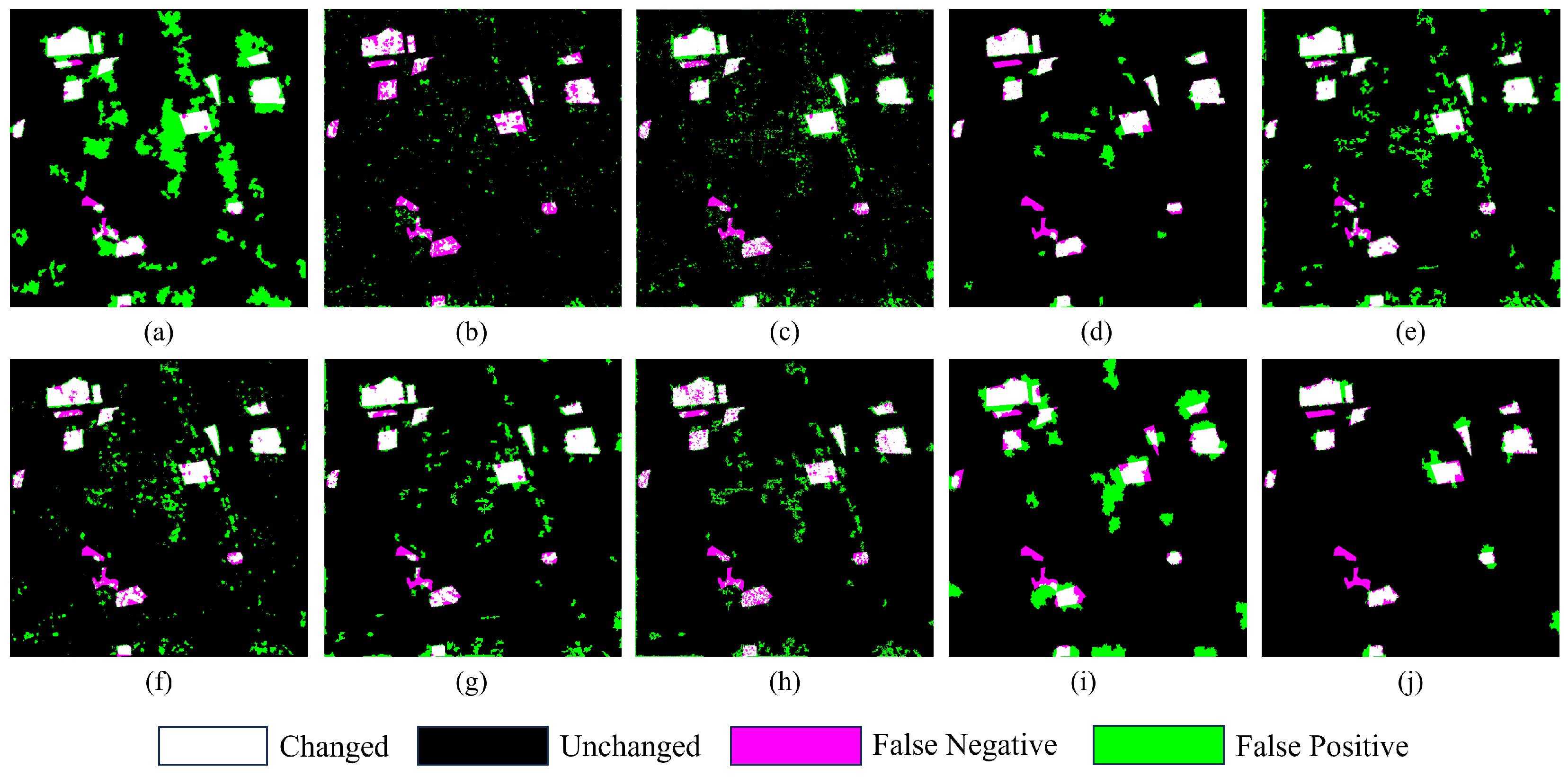

3.4.2. Change Detection Results on Dataset II

3.4.3. Change Detection Results on Dataset III

3.5. Ablation Study

3.5.1. Ablation Study for Graph-Based Strategy

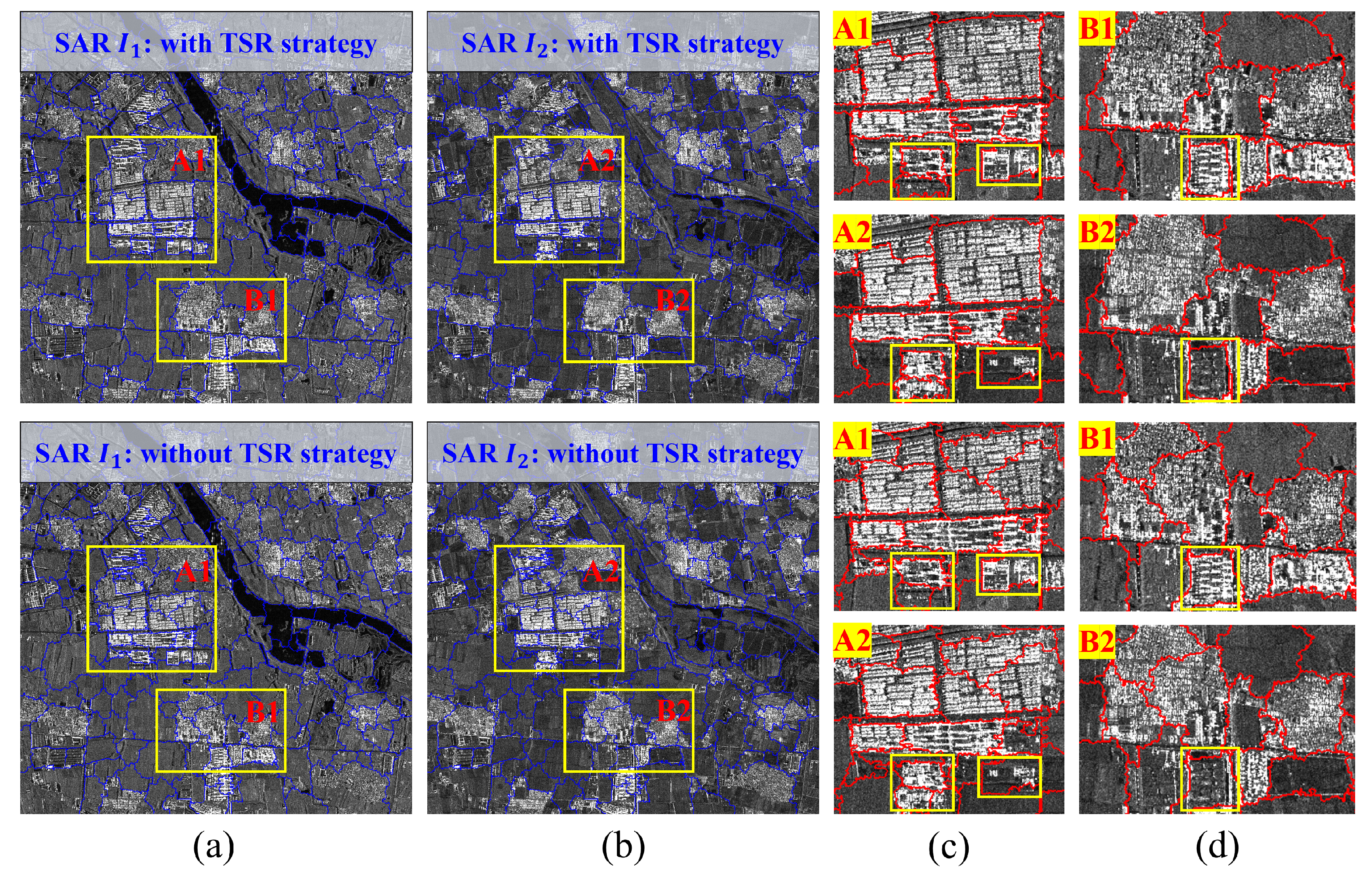

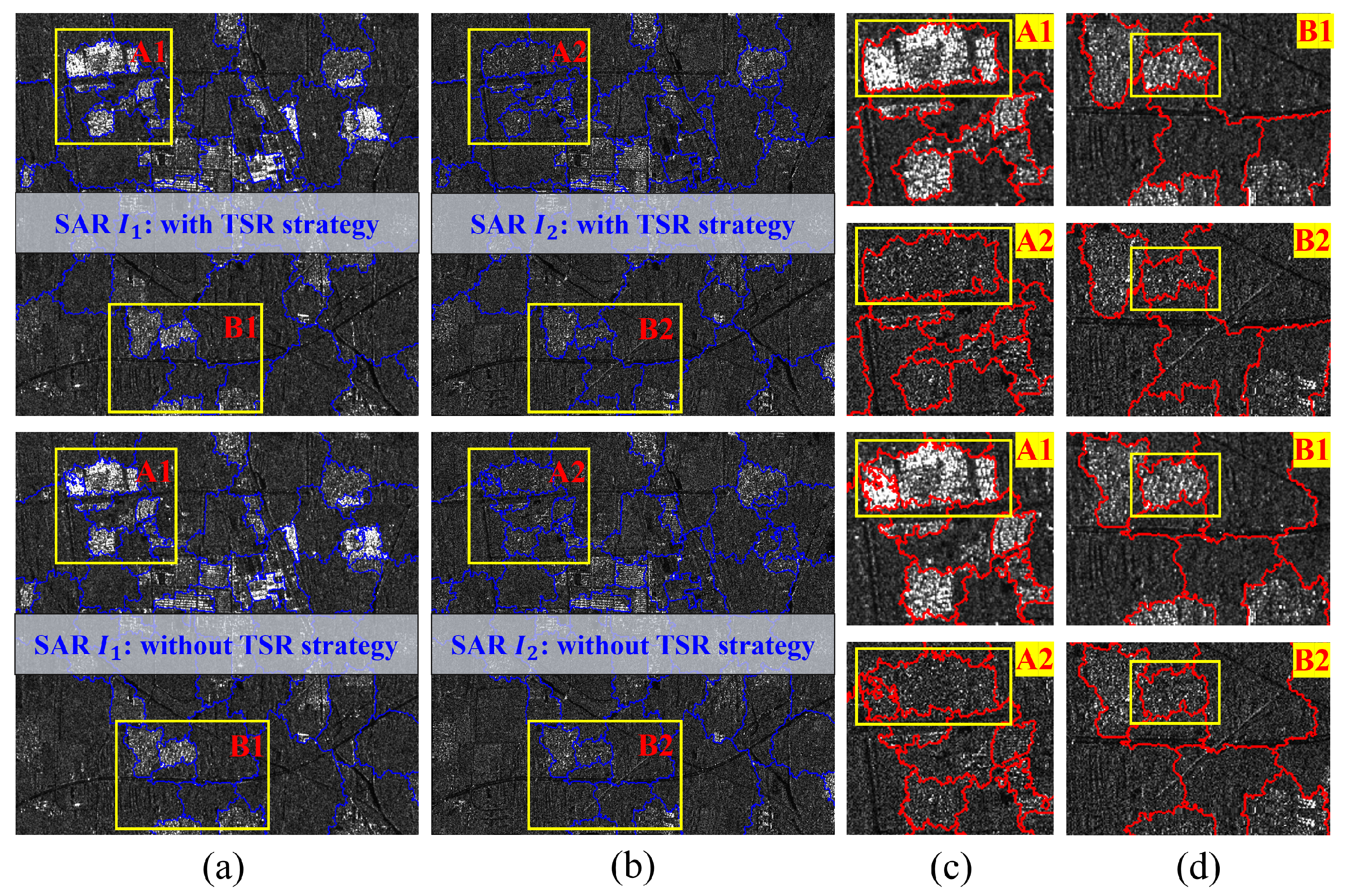

3.5.2. Ablation Study for Two-Stage Ranking

3.5.3. Ablation Study for Fusion Refinement Module

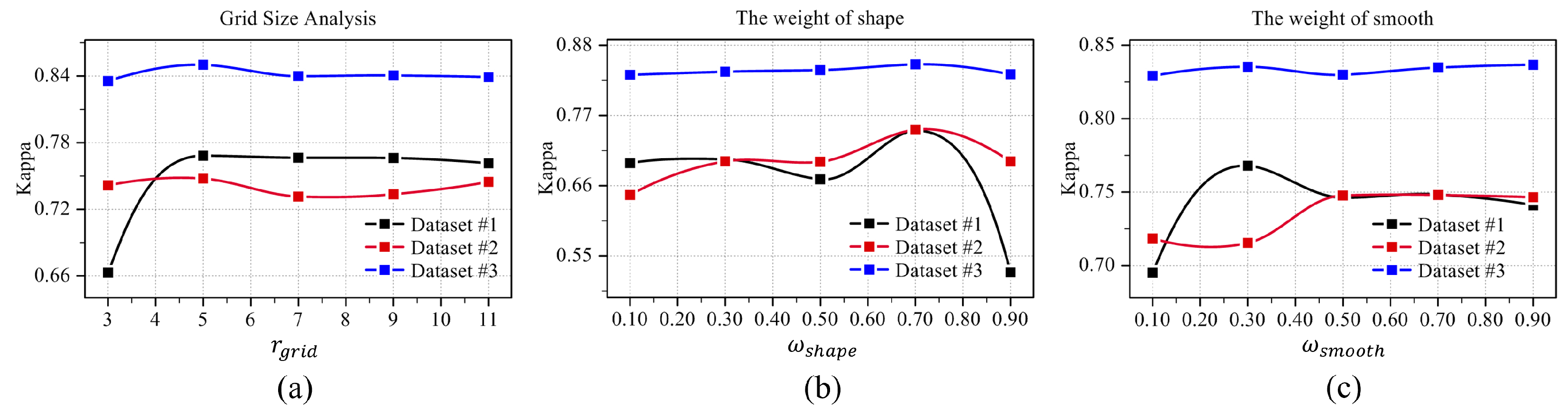

3.6. Key Parameter Analysis

3.7. Comparison of GMRCS and SAM for Change Detection

4. Discussion

- (1) Algorithm Effectiveness Analysis: The proposed RMF-HSG method is built on multi-level spatial structural associations and targets two key problems in SAR change detection: the high false-alarm rate caused by speckle noise, and the inconsistent boundaries and poor internal connectivity produced by pixel-level methods. Its effectiveness is reflected in three aspects. First, the GMRCS module introduces spatiotemporal constraints into multi-temporal co-segmentation, ensuring precise boundaries and regional integrity of change areas across multiple scales. Second, the HSG module employs a pixel–region–structure hierarchical graph consistency measure, which suppresses the influence of speckle noise on change intensity estimation. Finally, the FR module fuses multi-scale change information to enhance the detection of small-scale change targets. The three modules work together to improve both the accuracy and the stability of change detection. As shown in the experimental results (Figures 7–9), the proposed method produces change maps with clear boundaries, few false alarms, and coherent change regions.

- (2) Comparison with Existing Methods: Existing SAR change detection methods can be broadly categorized into hand-crafted and deep learning-based approaches. The proposed method belongs to the former category. In the experiments, we compared the proposed algorithm with several representative methods, and it performed better in both the visual and the quantitative results. Compared with hand-crafted methods (SBMRF, NLSW, HHG, CoSEG-BCD), the proposed method exploits the stability of spatial structural associations to achieve stronger noise suppression. Moreover, by introducing spatiotemporal constraints (the TSR strategy) into multi-temporal co-segmentation, it achieves more precise boundary localization at the object level. In contrast, deep learning-based change detection (DLCD) methods (LANTNet, DDNet, CAMixer) often suffer from limited training data quality and quantity, which makes complex change scenarios difficult to handle. Furthermore, their small patch-based training leads to fragmented detection results, reducing precision and usability. In addition, DLCD methods require pseudo-sample extraction, network training, and inference, resulting in longer processing times. As shown in Table 3, the proposed method runs significantly faster than the three representative DLCD methods.

- (3) Algorithm Generalization Analysis: The generalization capability of the algorithm is mainly reflected in its adaptability to different scenarios and its stability with respect to parameter settings. The proposed method is not tailored to any specific scene and is theoretically applicable to various surface types. From a modular perspective, GMRCS guides segmentation by assessing feature consistency among adjacent regions and considering temporal changes. In highly heterogeneous areas such as dense urban scenes, however, layover, double bounce, and shadows may cause mis-segmentation, which can in turn affect the structural relationship description in HSG. The spectral and shape weighting parameters in GMRCS can mitigate this issue to some extent. The parameter sensitivity analysis (Section 3.6) shows that the algorithm is relatively stable with respect to key parameters; moreover, detection performance improves when the geometric feature weight is higher. This indicates that emphasizing geometric feature modeling in SAR imagery is more beneficial for change detection.

- (4) Algorithm Limitations: The algorithm assumes high-precision registration between multi-temporal images; its performance may therefore degrade under severe geometric distortions or large viewing angle differences. For example, in ultra-high-resolution SAR images of dense urban areas, the algorithm may fail to capture change boundaries precisely. Although the proposed method is more efficient and produces more coherent results than DLCD methods, its computational efficiency is slightly lower than that of pixel-level approaches because of the multi-temporal segmentation process. Furthermore, its performance across different scenarios (e.g., urban, vegetation, coastal, mountainous) remains sensitive to parameter settings and requires manual adjustment for optimal results.

- (5) Future Research: Future work will focus on adapting the algorithm to different scenarios. Specifically, GMRCS will be enhanced to adjust segmentation thresholds automatically based on scene characteristics, and HSG will be extended to construct spatial associations at the semantic feature level rather than only at the low-level feature level. In addition, scene-specific SAR scattering priors will be incorporated to further improve detection performance in complex environments.
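The multi-scale fusion attributed to the FR module in item (1) can be pictured with a small sketch. The details of the authors' model are not given in this section, so the code below is only one plausible reading: each region's score is the mean change intensity inside it, and the score inherited from the coarser parent scale is blended with the finer scale's own score. The blending weight `alpha` and both function names are hypothetical.

```python
import numpy as np

def region_means(labels, values):
    """Per-pixel map holding the mean of `values` over each labelled region.

    labels: 2-D array of non-negative integer region ids.
    values: 2-D array of per-pixel change intensity, same shape.
    """
    flat = labels.ravel()
    sums = np.bincount(flat, weights=values.ravel())
    counts = np.bincount(flat)
    means = sums / np.maximum(counts, 1)  # guard against unused ids
    return means[labels]

def coarse_to_fine_fusion(change_intensity, label_maps, alpha=0.5):
    """Blend region-level change scores from coarse to fine.

    label_maps: nested label maps ordered coarse to fine.
    alpha: hypothetical weight given to the score propagated from
           the coarser scale (0 ignores it, 1 ignores the finer scale).
    """
    fused = region_means(label_maps[0], change_intensity)
    for labels in label_maps[1:]:
        own = region_means(labels, change_intensity)
        fused = alpha * fused + (1.0 - alpha) * own
    return fused
```

With this kind of blending, a fine region inside a strongly changed coarse region keeps a raised score even if its own evidence is weak, which supports region integrity, while a small fine region with strong evidence can still stand out from an unchanged parent.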

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Zhu, J.; Zhou, G.; Wang, F.; Liu, R.; Xiang, Y.; Wang, W.; You, H. Multi-temporal SAR images change detection considering ambiguous co-registration errors: A unified framework. IEEE Geosci. Remote Sens. Lett. 2024, 21, 4019405.

- [2] Quan, S.; Xiong, B.; Xiang, D.; Zhao, L.; Zhang, S.; Kuang, G. Eigenvalue-based urban area extraction using polarimetric SAR data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 458–471.

- [3] Wang, B.; Zhao, C.; Zhang, Q.; Lu, Z.; Pepe, A. Long-term continuously updated deformation time series from multisensor InSAR in Xi’an, China from 2007 to 2021. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7297–7309.

- [4] Zou, B.; Li, W.; Zhang, L. Built-up area extraction using high-resolution SAR images based on spectral reconfiguration. IEEE Geosci. Remote Sens. Lett. 2020, 18, 1391–1395.

- [5] Adeli, S.; Salehi, B.; Mahdianpari, M.; Quackenbush, L.J.; Brisco, B.; Tamiminia, H.; Shaw, S. Wetland monitoring using SAR data: A meta-analysis and comprehensive review. Remote Sens. 2020, 12, 2190.

- [6] Tsokas, A.; Rysz, M.; Pardalos, P.M.; Dipple, K. SAR data applications in Earth observation: An overview. Expert Syst. Appl. 2022, 205, 117342.

- [7] Brunner, D.; Lemoine, G.; Bruzzone, L. Earthquake damage assessment of buildings using VHR optical and SAR imagery. IEEE Trans. Geosci. Remote Sens. 2010, 48, 2403–2420.

- [8] AlAli, Z.T.; Alabady, S.A. A survey of disaster management and SAR operations using sensors and supporting techniques. Int. J. Disaster Risk Reduct. 2022, 82, 103295.

- [9] Sun, Z.; Dai, M.; Leng, X.; Lei, Y.; Xiong, B.; Ji, K.; Kuang, G. An anchor-free detection method for ship targets in high-resolution SAR images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7799–7816.

- [10] Zhao, Y.; Zhao, L.; Xiong, B.; Kuang, G. Attention receptive pyramid network for ship detection in SAR images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 2738–2756.

- [11] Xiang, Y.; Teng, F.; Wang, L.; Jiao, N.; Wang, F.; You, H. Orthorectification of high-resolution SAR images in island regions based on fast multimodal registration. J. Radars 2024, 13, 1–19.

- [12] Xiang, Y.; Jiao, N.; Liu, R.; Wang, F.; You, H.; Qiu, X.; Fu, K. A geometry-aware registration algorithm for multiview high-resolution SAR images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5234818.

- [13] Xiang, Y.; Jiang, L.; Wang, F.; You, H.; Qiu, X.; Fu, K. Detector-free feature matching for optical and SAR images based on a two-step strategy. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5214216.

- [14] Jiao, N.; Wang, F.; Hu, Y.; Xiang, Y.; Liu, R.; You, H. SAR true digital ortho maps production for target geometric structure preservation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 10279–10286.

- [15] Gong, M.; Cao, Y.; Wu, Q. A neighborhood-based ratio approach for change detection in SAR images. IEEE Geosci. Remote Sens. Lett. 2011, 9, 307–311.

- [16] Zhuang, H.; Tan, Z.; Deng, K.; Yao, G. Adaptive generalized likelihood ratio test for change detection in SAR images. IEEE Geosci. Remote Sens. Lett. 2019, 17, 416–420.

- [17] Saha, S.; Bovolo, F.; Bruzzone, L. Building change detection in VHR SAR images via unsupervised deep transcoding. IEEE Trans. Geosci. Remote Sens. 2020, 59, 1917–1929.

- [18] Pirrone, D.; De, S.; Bhattacharya, A.; Bruzzone, L.; Bovolo, F. Unsupervised change detection in built-up areas by multi-temporal polarimetric SAR images. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 4554–4557.

- [19] Wang, J.; Yang, X.; Jia, L. Pointwise SAR image change detection based on stereograph model with multiple-span neighbourhood information. Int. J. Remote Sens. 2019, 40, 31–50.

- [20] Wang, J.; Zeng, F.; Niu, S.; Zheng, J.; Jiang, X. SAR image change detection via heterogeneous graph with multi-order and multi-level connections. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 11386–11401.

- [21] Zhu, J.; Wang, F.; You, H. Unsupervised SAR image change detection based on structural consistency and CFAR threshold estimation. Remote Sens. 2023, 15, 1422.

- [22] Fu, B.; Wang, Y.; Campbell, A.; Li, Y.; Zhang, B.; Yin, S.; Xing, Z.; Jin, X. Comparison of object-based and pixel-based random forest algorithm for wetland vegetation mapping using high spatial resolution GF-1 and SAR data. Ecol. Indic. 2017, 73, 105–117.

- [23] Wan, L.; Zhang, T.; You, H. Object-based multiscale method for SAR image change detection. J. Appl. Remote Sens. 2018, 12, 025004.

- [24] Wan, L.; Zhang, T.; You, H. An object-based method based on a novel statistical distance for SAR image change detection. In Proceedings of the 2018 IEEE 3rd International Conference on Signal and Image Processing (ICSIP), Shenzhen, China, 13–15 July 2018; pp. 172–176.

- [25] Zhu, J.; Wang, F.; You, H. Segmentation-based VHR SAR images built-up area change detection: A coarse-to-fine approach. J. Appl. Remote Sens. 2024, 18, 016503.

- [26] Cheng, G.; Huang, Y.; Li, X.; Lyu, S.; Xu, Z.; Zhao, H.; Zhao, Q.; Xiang, S. Change detection methods for remote sensing in the last decade: A comprehensive review. Remote Sens. 2024, 16, 2355.

- [27] Xue, W.; Ai, J.; Zhu, Y.; Chen, J.; Zhuang, S. AIS-FCANet: Long-term AIS data assisted frequency-spatial contextual awareness network for salient ship detection in SAR imagery. IEEE Trans. Aerosp. Electron. Syst. 2025, 61, 15166–15171.

- [28] Wang, R.; Chen, J.-W.; Wang, Y.; Jiao, L.; Wang, M. SAR image change detection via spatial metric learning with an improved Mahalanobis distance. IEEE Geosci. Remote Sens. Lett. 2019, 17, 77–81.

- [29] Gao, F.; Dong, J.; Li, B.; Xu, Q. Automatic change detection in synthetic aperture radar images based on PCANet. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1792–1796.

- [30] Gao, F.; Wang, X.; Gao, Y.; Dong, J.; Wang, S. Sea ice change detection in SAR images based on convolutional-wavelet neural networks. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1240–1244.

- [31] Zhang, X.; Su, H.; Zhang, C.; Gu, X.; Tan, X.; Atkinson, P.M. Robust unsupervised small area change detection from SAR imagery using deep learning. ISPRS J. Photogramm. Remote Sens. 2021, 173, 79–94.

- [32] Qu, X.; Gao, F.; Dong, J.; Du, Q.; Li, H.-C. Change detection in synthetic aperture radar images using a dual-domain network. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4013405.

- [33] Zhang, H.; Lin, Z.; Gao, F.; Dong, J.; Du, Q.; Li, H.-C. Convolution and attention mixer for synthetic aperture radar image change detection. IEEE Geosci. Remote Sens. Lett. 2023, 20, 4012105.

- [34] Xie, J.; Gao, F.; Zhou, X.; Dong, J. Wavelet-based bi-dimensional aggregation network for SAR image change detection. IEEE Geosci. Remote Sens. Lett. 2024, 21, 4013705.

- [35] Zhang, X.; Liu, G.; Zhang, C.; Atkinson, P.M.; Tan, X.; Jian, X.; Zhou, X.; Li, Y. Two-phase object-based deep learning for multi-temporal SAR image change detection. Remote Sens. 2020, 12, 548.

- [36] Ji, L.; Zhao, J.; Zhao, Z. A novel end-to-end unsupervised change detection method with self-adaptive superpixel segmentation for SAR images. Remote Sens. 2023, 15, 1724.

- [37] Ai, J.; Mao, Y.; Luo, Q.; Jia, L.; Xing, M. SAR target classification using the multikernel-size feature fusion-based convolutional neural network. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5214313.

- [38] Ning, X.; Zhang, H.; Zhang, R.; Huang, X. Multi-stage progressive change detection on high resolution remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2024, 207, 231–244.

- [39] Zhao, C.; Ma, L.; Wang, L.; Ohtsuki, T.; Mathiopoulos, P.T.; Wang, Y. SAR image change detection in spatial-frequency domain based on attention mechanism and gated linear unit. IEEE Geosci. Remote Sens. Lett. 2023, 20, 4002205.

- [40] Yi, W.; Wang, S.; Ji, N.; Wang, C.; Xiao, Y.; Song, X. SAR image change detection based on Gabor wavelets and convolutional wavelet neural networks. Multimed. Tools Appl. 2023, 82, 30895–30908.

- [41] Li, T.; Liang, Z.; Zhao, S.; Gong, J.; Shen, J. Self-learning with rectification strategy for human parsing. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 9260–9269.

- [42] Peng, Y.; Cui, B.; Yin, H.; Zhang, Y.; Du, P. Automatic SAR change detection based on visual saliency and multi-hierarchical fuzzy clustering. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 7755–7769.

- [43] Meng, D.; Gao, F.; Dong, J.; Du, Q.; Li, H.-C. Synthetic aperture radar image change detection via layer attention-based noise-tolerant network. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4026505.

- [44] Fang, S.; Qi, C.; Yang, S.; Li, Z.; Wang, W.; Wang, Y. Unsupervised SAR change detection using two-stage pseudo labels refining framework. IEEE Geosci. Remote Sens. Lett. 2024, 21, 4005405.

- [45] Zhang, H.; Lin, M.; Yang, G.; Zhang, L. ESCNet: An end-to-end superpixel-enhanced change detection network for very-high-resolution remote sensing images. IEEE Trans. Neural Netw. Learn. Syst. 2021, 34, 28–42.

- [46] Gong, M.; Zhan, T.; Zhang, P.; Miao, Q. Superpixel-based difference representation learning for change detection in multispectral remote sensing images. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2658–2673.

- [47] Duan, Y.; Sun, K.; Li, W.; Wei, J.; Gao, S.; Tan, Y.; Zhou, W.; Liu, J.; Liu, J. WCMU-Net: An effective method for reducing the impact of speckle noise in SAR image change detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 18, 2880–2892.

- [48] Zhang, W.; Xiang, D.; Su, Y. Fast multiscale superpixel segmentation for SAR imagery. IEEE Geosci. Remote Sens. Lett. 2020, 19, 4001805.

- [49] Wang, J.; Zhao, T.; Jiang, X.; Lan, K. A hierarchical heterogeneous graph for unsupervised SAR image change detection. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4516605.

- [50] Hao, M.; Zhou, M.; Jin, J.; Shi, W. An advanced superpixel-based Markov random field model for unsupervised change detection. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1401–1405.

- [51] Zhang, K.; Fu, X.; Lv, X.; Yuan, J. Unsupervised multitemporal building change detection framework based on cosegmentation using time-series SAR. Remote Sens. 2021, 13, 471.

- [52] Gao, Y.; Gao, F.; Dong, J.; Du, Q.; Li, H.-C. Synthetic aperture radar image change detection via Siamese adaptive fusion network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10748–10760.

- [53] Liu, T.; Xu, J.; Lei, T.; Wang, Y.; Du, X.; Zhang, W.; Lv, Z.; Gong, M. AEKAN: Exploring superpixel-based autoencoder Kolmogorov-Arnold network for unsupervised multimodal change detection. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5601114.

- [54] Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.-Y.; et al. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 4015–4026.

| Methods | Dataset I PCC(%) ↑ | Dataset I PRE(%) ↑ | Dataset I RC(%) ↑ | Dataset I F1(%) ↑ | Dataset I Kappa(%) ↑ | Dataset II PCC(%) ↑ | Dataset II PRE(%) ↑ | Dataset II RC(%) ↑ | Dataset II F1(%) ↑ | Dataset II Kappa(%) ↑ | Dataset III PCC(%) ↑ | Dataset III PRE(%) ↑ | Dataset III RC(%) ↑ | Dataset III F1(%) ↑ | Dataset III Kappa(%) ↑ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SBMRF [50] | 92.65 | 47.21 | 89.51 | 61.81 | 58.17 | 88.26 | 29.68 | 89.59 | 44.59 | 39.82 | 93.94 | 68.35 | 86.91 | 76.52 | 73.10 |
| NLSW [21] | 94.32 | 58.40 | 50.34 | 54.07 | 51.06 | 96.22 | 66.81 | 56.18 | 61.04 | 59.06 | 95.62 | 94.03 | 65.65 | 77.31 | 74.97 |
| HHG [49] | 96.22 | 73.50 | 67.37 | 70.30 | 68.29 | 96.84 | 69.16 | 72.45 | 70.77 | 69.10 | 96.26 | 90.98 | 74.47 | 81.90 | 79.84 |
| CoSEG-BCD [51] | 94.04 | 61.75 | 27.10 | 37.67 | 35.04 | 97.15 | 70.60 | 78.62 | 74.40 | 72.89 | 92.65 | 62.01 | 91.16 | 73.81 | 69.71 |
| LANTNet [43] | 96.53 | 88.66 | 54.86 | 67.78 | 66.06 | 94.05 | 46.55 | 87.05 | 60.67 | 57.76 | 95.12 | 96.76 | 59.09 | 73.37 | 70.86 |
| SAFNet [52] | 96.44 | 91.98 | 50.79 | 65.44 | 63.73 | 95.52 | 55.28 | 79.09 | 65.08 | 62.76 | 94.78 | 97.49 | 55.47 | 70.71 | 68.08 |
| DDNet [32] | 88.90 | 34.84 | 77.01 | 47.98 | 42.84 | 95.29 | 53.46 | 82.98 | 65.02 | 62.63 | 95.40 | 97.85 | 60.83 | 75.02 | 72.64 |
| CAMixer [33] | 92.58 | 46.50 | 77.86 | 58.23 | 54.44 | 95.44 | 55.16 | 72.69 | 62.73 | 60.35 | 95.58 | 97.00 | 63.08 | 76.44 | 74.12 |
| AEKAN [53] | 91.06 | 41.22 | 81.08 | 54.65 | 50.27 | 92.85 | 40.32 | 74.00 | 52.20 | 48.69 | 95.04 | 79.52 | 75.95 | 77.69 | 74.91 |
| RMF-HSG | 96.93 | 73.40 | 84.30 | 78.47 | 76.83 | 97.47 | 75.81 | 76.40 | 76.11 | 74.77 | 97.12 | 91.74 | 82.04 | 86.62 | 85.01 |
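The five scores reported above can be related to a binary confusion matrix. The text of Section 3.3 is not reproduced here, so the sketch below assumes the standard definitions (PCC as overall accuracy, PRE as precision, RC as recall, Cohen's Kappa); the function names are hypothetical. A quick consistency check is possible from the table alone: the F1 value should equal the harmonic mean of PRE and RC.

```python
def change_detection_metrics(tp, fp, fn, tn):
    """Standard binary change-detection scores, as fractions in [0, 1].

    tp: changed pixels detected as changed
    fp: unchanged pixels detected as changed (false alarms)
    fn: changed pixels detected as unchanged (missed detections)
    tn: unchanged pixels detected as unchanged
    """
    n = tp + fp + fn + tn
    pcc = (tp + tn) / n
    pre = tp / (tp + fp)
    rc = tp / (tp + fn)
    f1 = 2 * pre * rc / (pre + rc)
    # Expected agreement by chance, used by Cohen's Kappa.
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    kappa = (pcc - pe) / (1 - pe)
    return {"PCC": pcc, "PRE": pre, "RC": rc, "F1": f1, "Kappa": kappa}

def f1_from_precision_recall(pre, rc):
    # Harmonic mean; works with fractions or percentages alike.
    return 2 * pre * rc / (pre + rc)

# Consistency check against the RMF-HSG row for Dataset I (PRE 73.40, RC 84.30).
print(round(f1_from_precision_recall(73.40, 84.30), 2))  # 78.47, as tabulated
```

Kappa is the more demanding score on these datasets because changed pixels are a small minority, so a high PCC can be reached by chance agreement alone.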

| Scale number | Dataset I MRJS | Dataset I GMRCS | Dataset II MRJS | Dataset II GMRCS | Dataset III MRJS | Dataset III GMRCS |
|---|---|---|---|---|---|---|
| | 35.02 s | 19.88 s | 12.73 s | 5.85 s | 53.09 s | 25.08 s |
| | 40.17 s | 30.71 s | 14.15 s | 9.61 s | 67.59 s | 40.47 s |
| | 43.94 s | 38.80 s | 15.62 s | 12.17 s | 73.57 s | 52.08 s |

| Method | Dataset I | Dataset II | Dataset III |
|---|---|---|---|
| LANTNet | 135.02 | 93.49 | 733.48 |
| DDNet | 763.92 | 452.49 | 248.21 |
| CAMixer | 359.95 | 142.91 | 1393.41 |
| RMF-HSG | 51.591 | 15.170 | 122.498 |
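The running-time comparison above can be turned into speed-up factors with a few lines of arithmetic. The sketch below copies the tabulated values and divides each deep learning method's time by the RMF-HSG time on the same dataset; the ratio is unitless, so no assumption about the time unit is needed. The variable and function names are hypothetical.

```python
# Running times copied from the table above, ordered (Dataset I, II, III).
runtimes = {
    "LANTNet": (135.02, 93.49, 733.48),
    "DDNet": (763.92, 452.49, 248.21),
    "CAMixer": (359.95, 142.91, 1393.41),
    "RMF-HSG": (51.591, 15.170, 122.498),
}

def speedups(times, reference="RMF-HSG"):
    """Ratio of each method's time to the reference method's time."""
    ref = times[reference]
    return {
        name: tuple(t / r for t, r in zip(vals, ref))
        for name, vals in times.items()
        if name != reference
    }

for name, ratios in speedups(runtimes).items():
    print(name, ", ".join(f"{x:.1f}x" for x in ratios))
```

On these numbers every ratio exceeds 2, with the smallest margin being DDNet on Dataset III.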

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, J.; Yu, M.; Wang, F.; Zhou, G.; Jiao, N.; Xiang, Y.; You, H. Graph-Based Multi-Resolution Cosegmentation for Coarse-to-Fine Object-Level SAR Image Change Detection. Remote Sens. 2025, 17, 3736. https://doi.org/10.3390/rs17223736
