Semi-BSU: A Boundary-Aware Semi-Supervised Semantic Segmentation Framework with Superpixel Refinement for Coastal Aquaculture Pond Extraction from Remote Sensing Images

Highlights

- A boundary-aware semi-supervised framework (Semi-BSU) improves segmentation accuracy along the boundaries of ground features in remote sensing images, demonstrated on coastal aquaculture ponds.

- Superpixel-guided pseudo-label refinement reduces pseudo-label noise and intra-class inconsistency.

- Semi-BSU achieves high-quality segmentation of remote sensing features with few labeled samples, demonstrated on coastal aquaculture ponds.

- Provides a practical method for large-scale remote-sensing image interpretation.

Abstract

1. Introduction

2. Materials and Methods

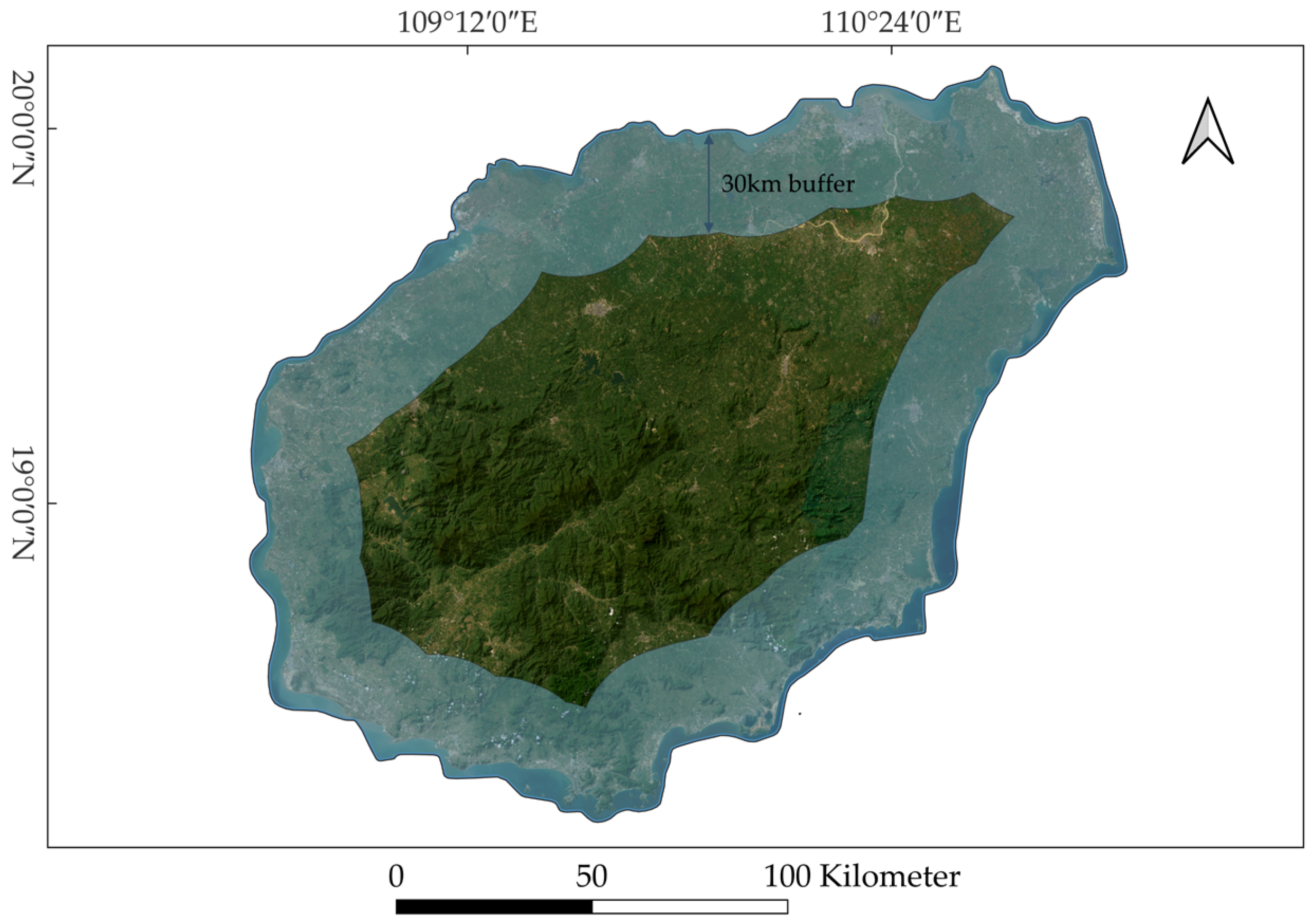

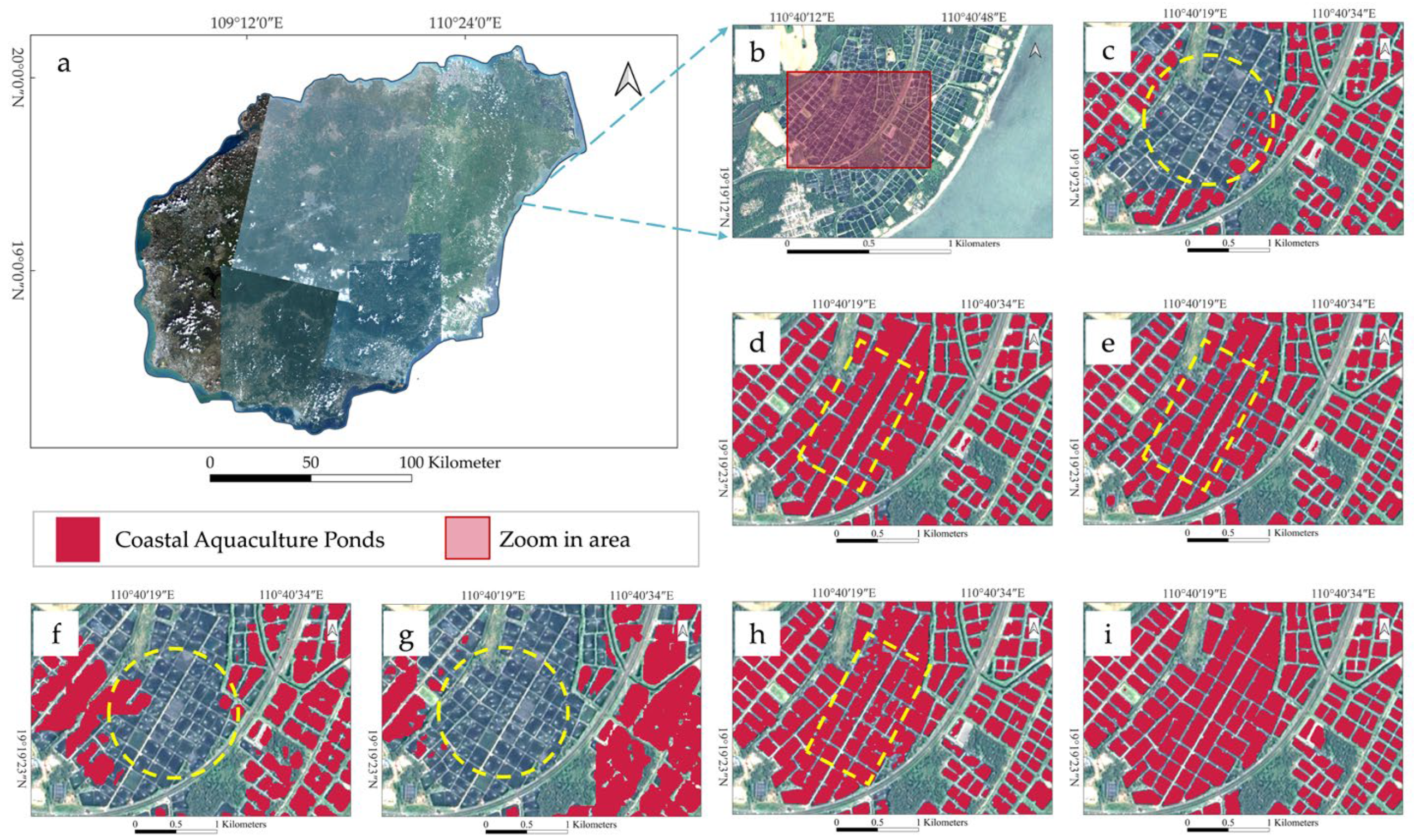

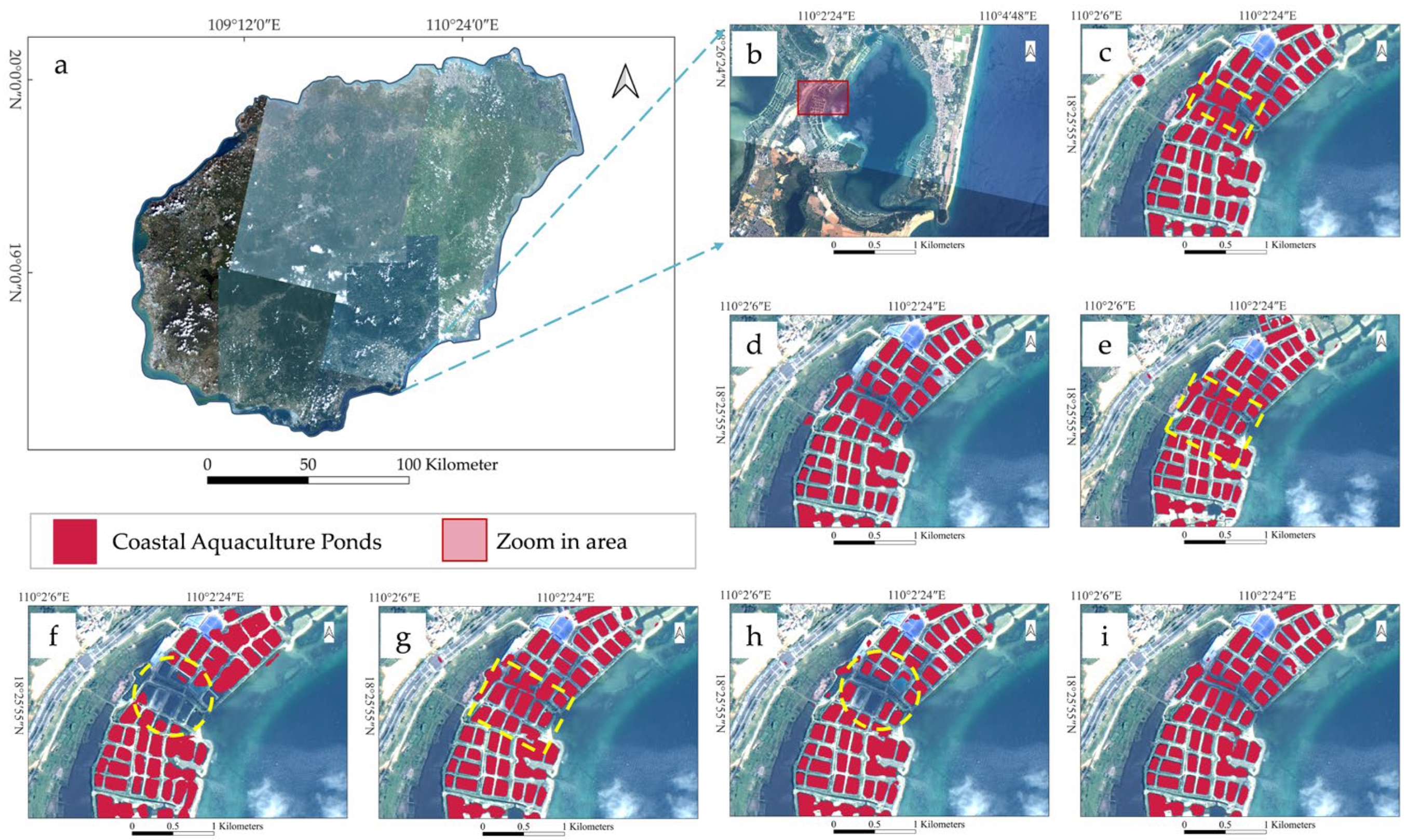

2.1. Study Area

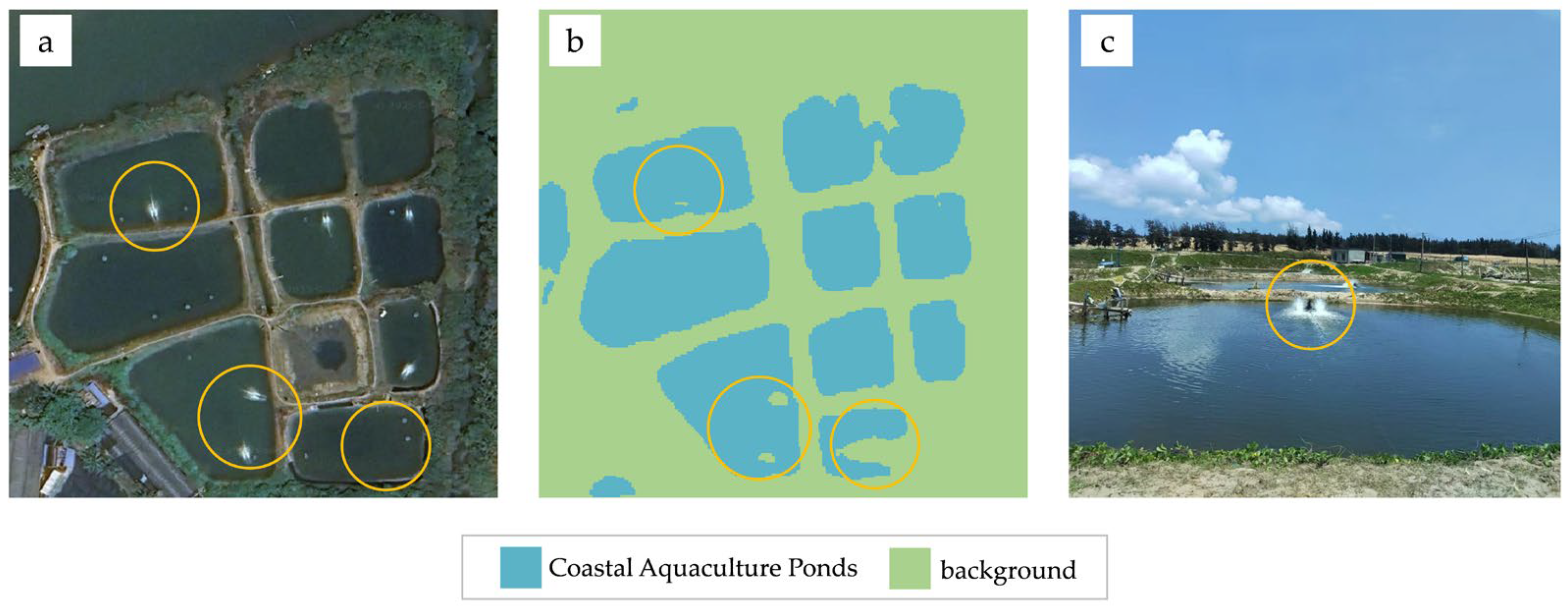

2.2. Dataset and Preprocessing

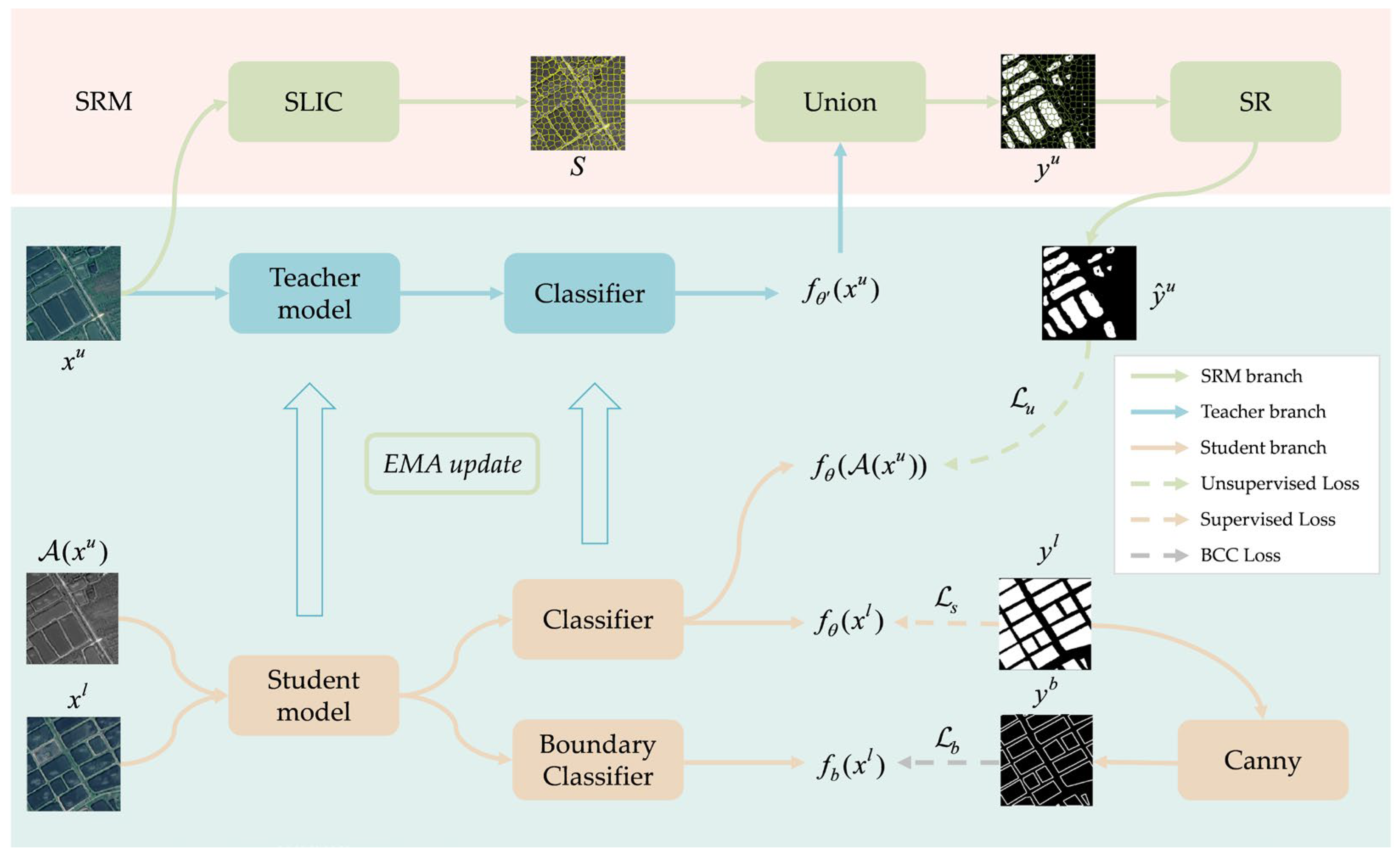

2.3. Methods

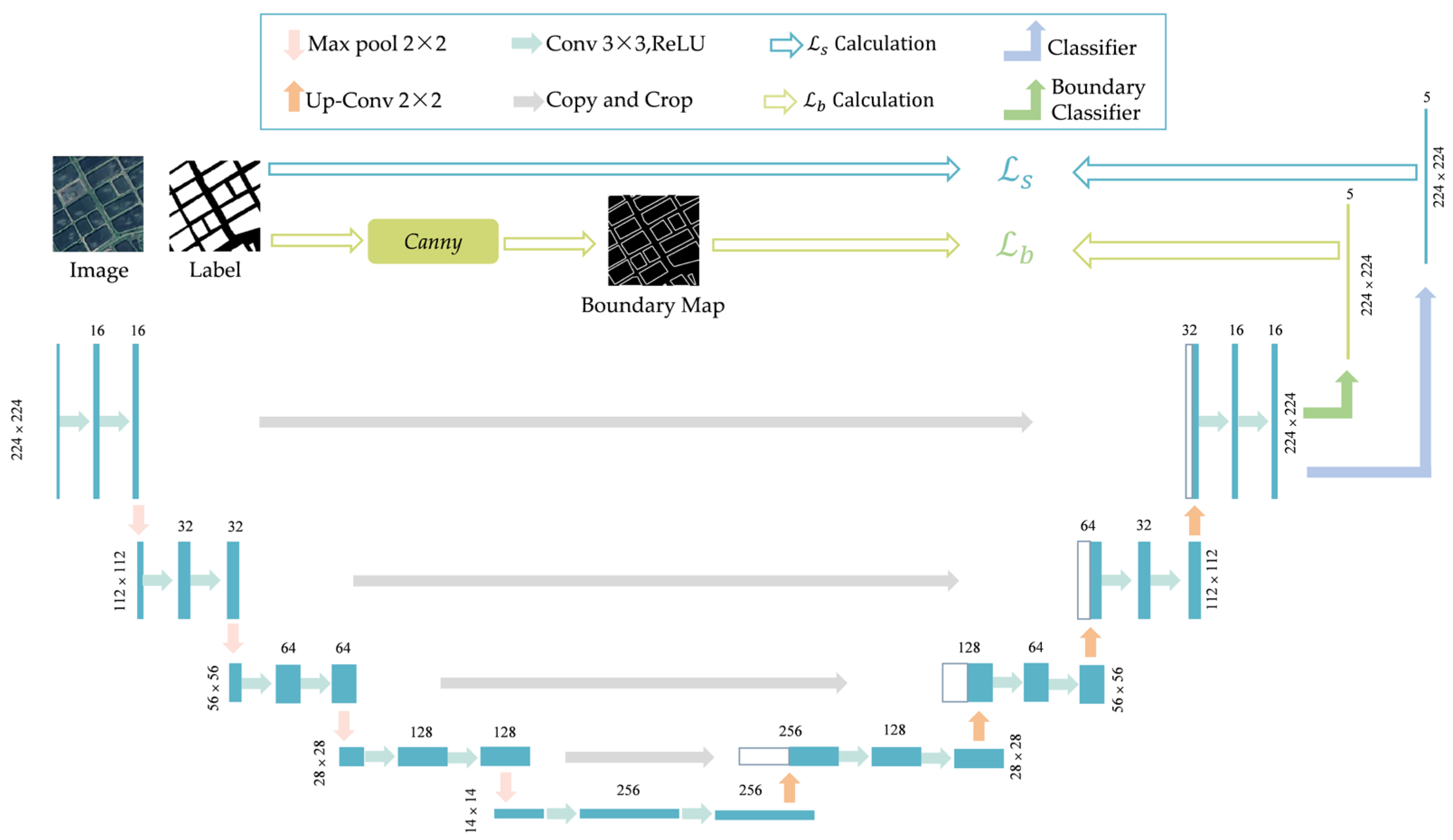

2.3.1. Network Architecture

2.3.2. Student Branch

2.3.3. Teacher Branch

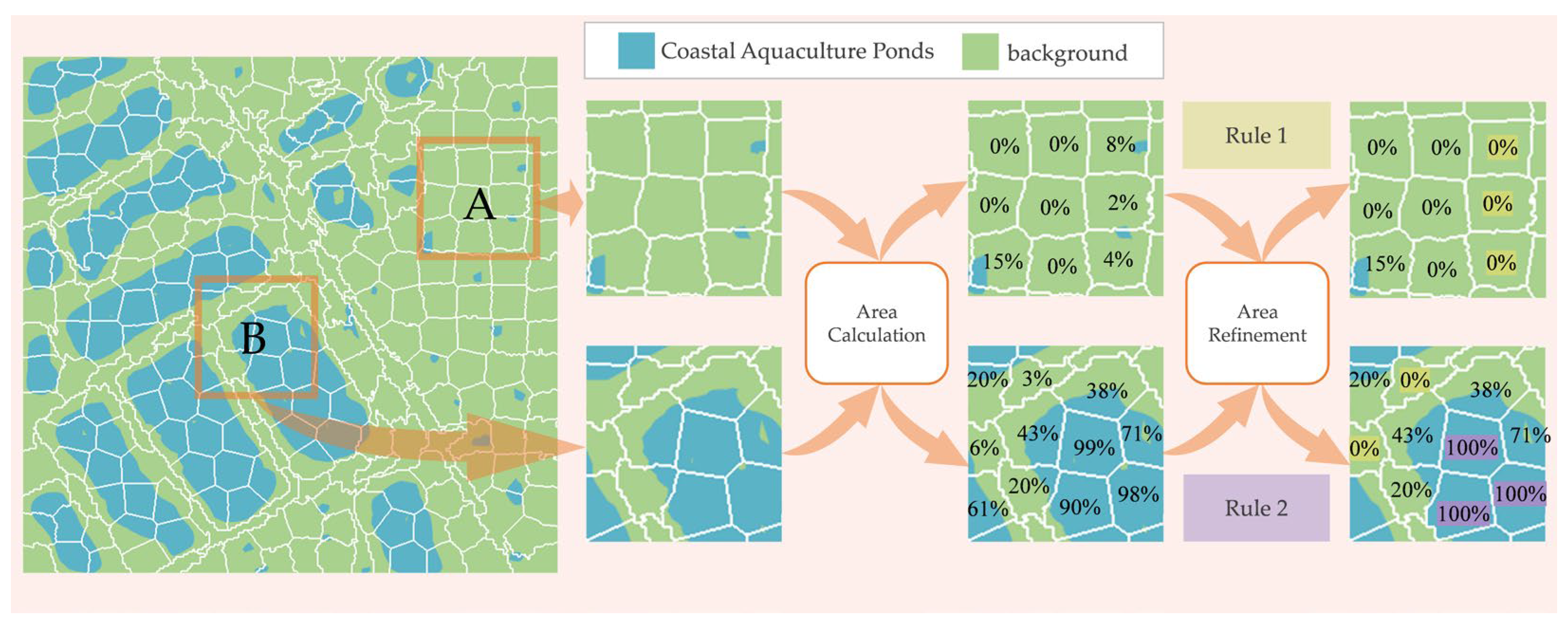

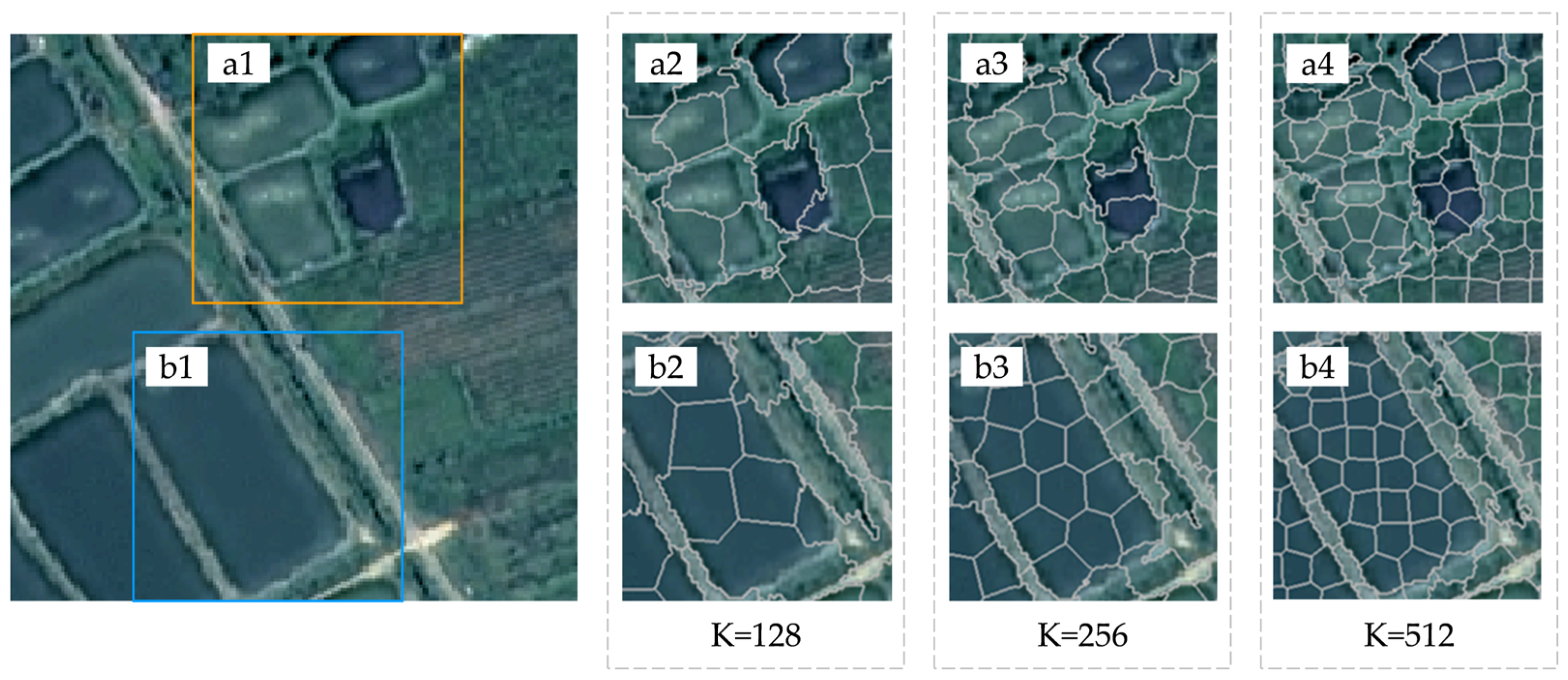

2.3.4. Superpixel Refinement
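
As a minimal sketch of what superpixel-guided pseudo-label refinement can look like, the example below assumes a precomputed superpixel map (for instance from an algorithm such as SLIC) and replaces each pixel's pseudo-label with the majority label inside its superpixel. The majority-vote rule, the function name `refine_with_superpixels`, and the toy data are illustrative assumptions, not a description of the refinement module as implemented by the authors.

```python
from collections import Counter, defaultdict


def refine_with_superpixels(pseudo_label, superpixels):
    """Replace each pixel's pseudo-label with its superpixel's majority label.

    Both inputs are 2-D lists of ints with the same shape. Majority voting is
    an assumed refinement rule used here for illustration only.
    """
    votes = defaultdict(Counter)
    for label_row, sp_row in zip(pseudo_label, superpixels):
        for label, sp in zip(label_row, sp_row):
            votes[sp][label] += 1
    # Pick the most frequent label inside every superpixel.
    majority = {sp: counts.most_common(1)[0][0] for sp, counts in votes.items()}
    return [[majority[sp] for sp in sp_row] for sp_row in superpixels]


# Toy 4x4 example: superpixel 0 is the left half, superpixel 1 the right half.
superpixels = [[0, 0, 1, 1] for _ in range(4)]
pseudo_label = [
    [1, 1, 0, 0],
    [1, 0, 0, 0],  # one noisy 0 inside superpixel 0
    [1, 1, 0, 1],  # one noisy 1 inside superpixel 1
    [1, 1, 0, 0],
]
refined = refine_with_superpixels(pseudo_label, superpixels)
for row in refined:
    print(row)
```

In this toy case the two isolated noisy pixels are overwritten by the dominant label of their superpixel, which is the kind of intra-class smoothing the Highlights attribute to superpixel refinement.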

3. Results

3.1. Experimental Design

3.1.1. Sample Allocation and Label-Scarcity Simulation

3.1.2. Implementation Details

3.1.3. Accuracy Assessment Indicators

3.2. Experimental Results

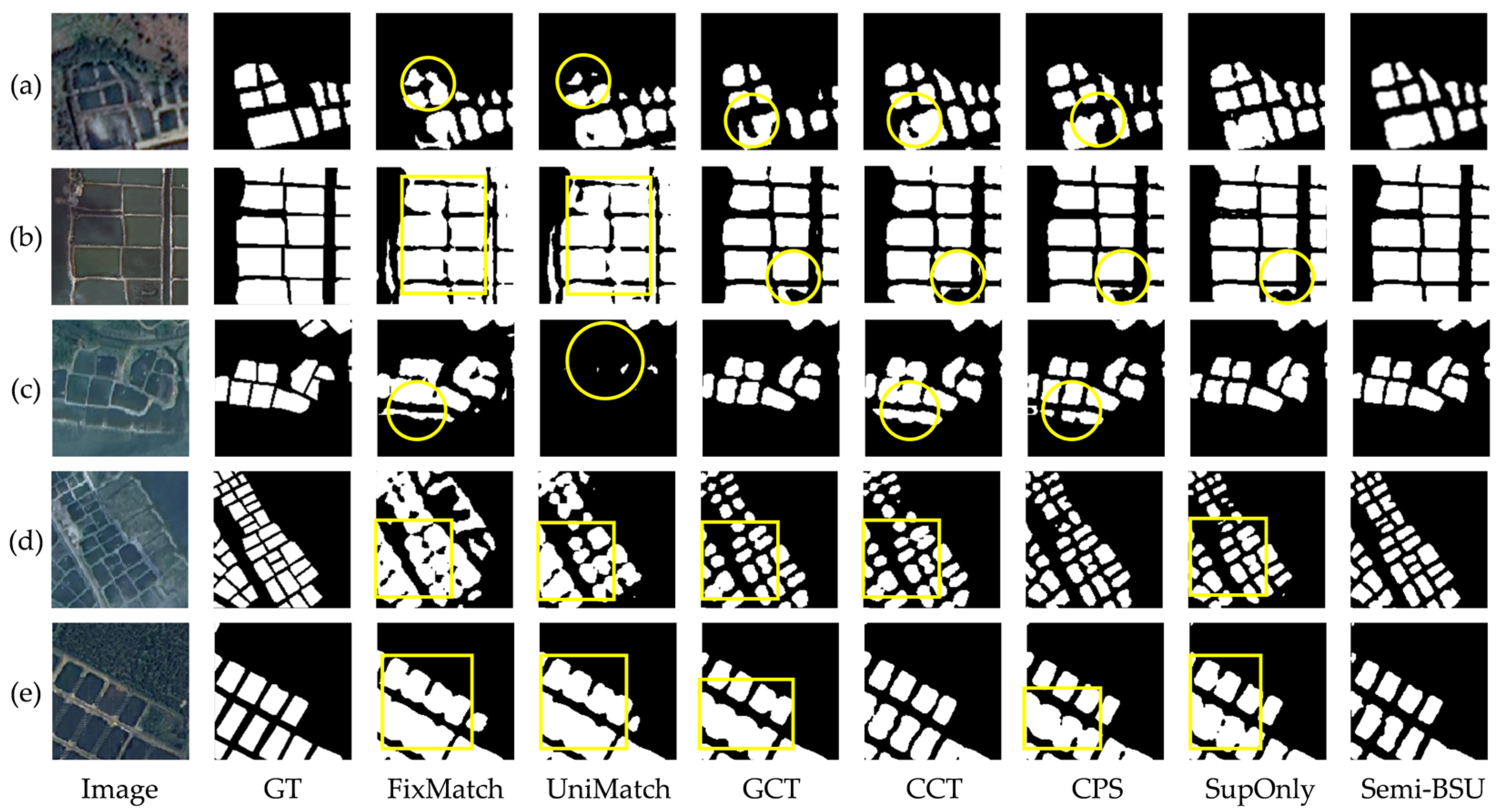

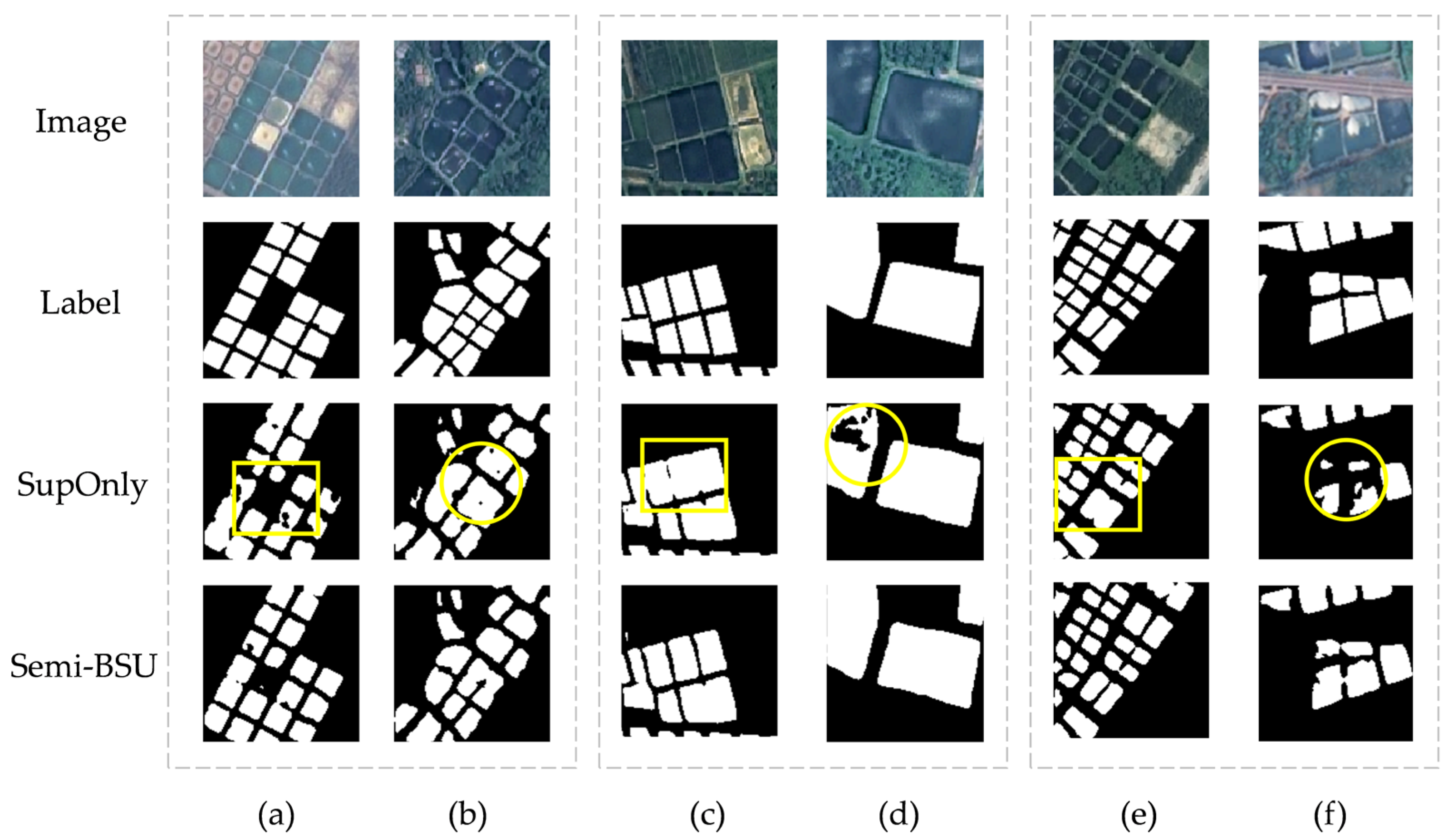

3.2.1. Comparison with Different Semi-Supervised Learning Frameworks

3.2.2. Comparison of Typical Scene Extraction

4. Discussion

4.1. Analysis of Ablation Experiment Results

4.2. Related Analysis of SRM

4.3. Related Analysis of BCC

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Boyd, C.E.; McNevin, A.A.; Davis, R.P. The contribution of fisheries and aquaculture to the global protein supply. Food Secur. 2022, 14, 805–827.

2. FAO. Fishery and Aquaculture Statistics Yearbook 2020; FAO: Rome, Italy, 2020.

3. Hou, T.; Sun, W.; Chen, C.; Yang, G.; Meng, X.; Peng, J. Marine floating raft aquaculture extraction of hyperspectral remote sensing images based decision tree algorithm. Int. J. Appl. Earth Obs. Geoinf. 2022, 111, 102846.

4. Afroz, T.; Alam, S. Sustainable shrimp farming in Bangladesh: A quest for an Integrated Coastal Zone Management. Ocean Coast. Manag. 2013, 71, 275–283.

5. Zou, Z.; Chen, C.; Liu, Z.; Zhang, Z.; Liang, J.; Chen, H.; Wang, L. Extraction of Aquaculture Ponds along Coastal Region Using U2-Net Deep Learning Model from Remote Sensing Images. Remote Sens. 2022, 14, 4001.

6. Loisel, H.; Vantrepotte, V.; Ouillon, S.; Ngoc, D.D.; Herrmann, M.; Tran, V.; Mériaux, X.; Dessailly, D.; Jamet, C.; Duhaut, T.; et al. Assessment and analysis of the chlorophyll-a concentration variability over the Vietnamese coastal waters from the MERIS ocean color sensor (2002–2012). Remote Sens. Environ. 2017, 190, 217–232.

7. Guo, H.; Nativi, S.; Liang, D.; Craglia, M.; Wang, L.; Schade, S.; Corban, C.; He, G.; Pesaresi, M.; Li, J.; et al. Big Earth Data science: An information framework for a sustainable planet. Int. J. Digit. Earth 2020, 13, 743–767.

8. Kolli, M.K.; Opp, C.; Karthe, D.; Pradhan, B. Automatic extraction of large-scale aquaculture encroachment areas using Canny Edge Otsu algorithm in Google earth engine—The case study of Kolleru Lake, South India. Geocarto Int. 2022, 37, 11173–11189.

9. Ottinger, M.; Bachofer, F.; Huth, J.; Kuenzer, C. Mapping Aquaculture Ponds for the Coastal Zone of Asia with Sentinel-1 and Sentinel-2 Time Series. Remote Sens. 2021, 14, 153.

10. Fu, T.; Zhang, L.; Yuan, X.; Chen, B.; Yan, M. Spatio-temporal patterns and sustainable development of coastal aquaculture in Hainan Island, China: 30 Years of evidence from remote sensing. Ocean Coast. Manag. 2021, 214, 105897.

11. Ottinger, M.; Clauss, K.; Kuenzer, C. Aquaculture: Relevance, distribution, impacts and spatial assessments—A review. Ocean Coast. Manag. 2016, 119, 244–266.

12. Naylor, R.L.; Hardy, R.W.; Buschmann, A.H.; Bush, S.R.; Cao, L.; Klinger, D.H.; Little, D.C.; Lubchenco, J.; Shumway, S.E.; Troell, M. A 20-year retrospective review of global aquaculture. Nature 2021, 591, 551–563.

13. Gong, P.; Niu, Z.; Cheng, X.; Zhao, K.; Zhou, D.; Guo, J.; Liang, L.; Wang, X.; Li, D.; Huang, H.; et al. China’s wetland change (1990–2000) determined by remote sensing. Sci. China Earth Sci. 2010, 53, 1036–1042.

14. Fan, J.; Huang, H.; Fan, H.; Gao, A. Extracting aquaculture area with RADARSAT-1. Mar. Sci. 2004, 10, 46–49.

15. Tew, Y.L.; Tan, M.L.; Samat, N.; Chan, N.W.; Mahamud, M.A.; Sabjan, M.A.; Lee, L.K.; See, K.F.; Wee, S.T. Comparison of Three Water Indices for Tropical Aquaculture Ponds Extraction using Google Earth Engine. Sains Malays. 2022, 51, 369–378.

16. Li, B.; Gong, A.; Chen, Z.; Pan, X.; Li, L.; Li, J.; Bao, W. An Object-Oriented Method for Extracting Single-Object Aquaculture Ponds from 10 m Resolution Sentinel-2 Images on Google Earth Engine. Remote Sens. 2023, 15, 856.

17. Peng, Y.; Sengupta, D.; Duan, Y.; Chen, C.; Tian, B. Accurate mapping of Chinese coastal aquaculture ponds using biophysical parameters based on Sentinel-2 time series images. Mar. Pollut. Bull. 2022, 181, 113901.

18. Duan, Y.; Li, X.; Zhang, L.; Chen, D.; Liu, S.a.; Ji, H. Mapping national-scale aquaculture ponds based on the Google Earth Engine in the Chinese coastal zone. Aquaculture 2020, 520, 734666.

19. Wang, M.; Mao, D.; Xiao, X.; Song, K.; Jia, M.; Ren, C.; Wang, Z. Interannual changes of coastal aquaculture ponds in China at 10-m spatial resolution during 2016–2021. Remote Sens. Environ. 2023, 284, 113347.

20. Hu, Y.; Zhang, L.; Chen, B.; Zuo, J. An Object-Based Approach to Extract Aquaculture Ponds with 10-Meter Resolution Sentinel-2 Images: A Case Study of Wenchang City in Hainan Province. Remote Sens. 2024, 16, 1217.

21. Hernandez-Suarez, J.S.; Nejadhashemi, A.P.; Ferriby, H.; Moore, N.; Belton, B.; Haque, M.M. Performance of Sentinel-1 and 2 imagery in detecting aquaculture waterbodies in Bangladesh. Environ. Model. Softw. 2022, 157, 105534.

22. Yu, J.; He, X.; Yang, P.; Motagh, M.; Xu, J.; Xiong, J. Coastal Aquaculture Extraction Using GF-3 Fully Polarimetric SAR Imagery: A Framework Integrating UNet++ with Marker-Controlled Watershed Segmentation. Remote Sens. 2023, 15, 2246.

23. Fu, Y.; Ye, Z.; Deng, J.; Zheng, X.; Huang, Y.; Yang, W.; Wang, Y.; Wang, K. Finer Resolution Mapping of Marine Aquaculture Areas Using WorldView-2 Imagery and a Hierarchical Cascade Convolutional Neural Network. Remote Sens. 2019, 11, 1678.

24. Fu, Y.; You, S.; Zhang, S.; Cao, K.; Zhang, J.; Wang, P.; Bi, X.; Gao, F.; Li, F. Marine aquaculture mapping using GF-1 WFV satellite images and full resolution cascade convolutional neural network. Int. J. Digit. Earth 2022, 15, 2047–2060.

25. Chen, Y.; Zhang, L.; Chen, B.; Qiu, Y. Information extraction from offshore aquaculture ponds based on improved U-Net model. SmartTech Innov. 2023, 29, 8–14.

26. Zhang, X.; Dai, P.; Li, W.; Ren, N.; Mao, X. Extracting the images of freshwater aquaculture ponds using improved coordinate attention and U-Net neural network. Trans. Chin. Soc. Agric. Eng. 2023, 39, 153–162.

27. Chen, C.; Zou, Z.; Sun, W.; Yang, G.; Song, Y.; Liu, Z. Mapping the distribution and dynamics of coastal aquaculture ponds using Landsat time series data based on U2-Net deep learning model. Int. J. Digit. Earth 2024, 17, 2346258.

28. Liang, C.; Cheng, B.; Xiao, B.; He, C.; Liu, X.; Jia, N.; Chen, J. Semi-/Weakly-Supervised Semantic Segmentation Method and Its Application for Coastal Aquaculture Areas Based on Multi-Source Remote Sensing Images—Taking the Fujian Coastal Area (Mainly Sanduo) as an Example. Remote Sens. 2021, 13, 1083.

29. Zhou, R.; Zhang, W.; Yuan, Z.; Rong, X.; Liu, W.; Fu, K.; Sun, X. Weakly Supervised Semantic Segmentation in Aerial Imagery via Explicit Pixel-Level Constraints. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5634517.

30. Lian, R.; Huang, L. Weakly Supervised Road Segmentation in High-Resolution Remote Sensing Images Using Point Annotations. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4501013.

31. Zhu, Q.; Sun, Y.; Guan, Q.; Wang, L.; Lin, W. A Weakly Pseudo-Supervised Decorrelated Subdomain Adaptation Framework for Cross-Domain Land-Use Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5623913.

32. Miao, W.; Geng, J.; Jiang, W. Semi-Supervised Remote-Sensing Image Scene Classification Using Representation Consistency Siamese Network. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5616614.

33. Chen, H.; Li, Z.; Wu, J.; Xiong, W.; Du, C. SemiRoadExNet: A semi-supervised network for road extraction from remote sensing imagery via adversarial learning. ISPRS J. Photogramm. Remote Sens. 2023, 198, 169–183.

34. Shu, Q.; Pan, J.; Zhang, Z.; Wang, M. MTCNet: Multitask consistency network with single temporal supervision for semi-supervised building change detection. Int. J. Appl. Earth Obs. Geoinf. 2022, 115, 103110.

35. Sun, C.; Chen, H.; Du, C.; Jing, N. SemiBuildingChange: A Semi-Supervised High-Resolution Remote Sensing Image Building Change Detection Method With a Pseudo Bitemporal Data Generator. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5622319.

36. Fang, F.; Xu, R.; Li, S.; Hao, Q.; Zheng, K.; Wu, K.; Wan, B. Semisupervised Building Instance Extraction From High-Resolution Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5619212.

37. Guo, J.; Hong, D.; Liu, Z.; Zhu, X.X. Continent-wide urban tree canopy fine-scale mapping and coverage assessment in South America with high-resolution satellite images. ISPRS J. Photogramm. Remote Sens. 2024, 212, 251–273.

38. Dersch, S.; Schöttl, A.; Krzystek, P.; Heurich, M. Semi-supervised multi-class tree crown delineation using aerial multispectral imagery and lidar data. ISPRS J. Photogramm. Remote Sens. 2024, 216, 154–167.

39. Amirkolaee, H.A.; Shi, M.; Mulligan, M. TreeFormer: A Semi-Supervised Transformer-Based Framework for Tree Counting From a Single High-Resolution Image. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4406215.

40. Jiang, S.; Wu, H.; Chen, J.; Zhang, Q.; Qin, J. PH-Net: Semi-Supervised Breast Lesion Segmentation via Patch-Wise Hardness. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024.

41. Luo, F.; Zhou, T.; Liu, J.; Guo, T.; Gong, X.; Gao, X. DCENet: Diff-Feature Contrast Enhancement Network for Semi-Supervised Hyperspectral Change Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5511514.

42. Wang, Y.; Chen, H.; Heng, Q.; Hou, W.; Fan, Y.; Wu, Z.; Wang, J.; Savvides, M.; Shinozaki, T.; Raj, B.; et al. FreeMatch: Self-Adaptive Thresholding for Semi-Supervised Learning. In Proceedings of the Eleventh International Conference on Learning Representations (ICLR), Kigali, Rwanda, 1–5 May 2023.

43. Tarvainen, A.; Valpola, H. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017.

44. Yi, Z.; Wang, Y.; Zhang, L. Revolutionizing Remote Sensing Image Analysis With BESSL-Net: A Boundary-Enhanced Semi-Supervised Learning Network. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5620215.

45. Zhang, X.; Yang, Y.; Ran, L.; Chen, L.; Wang, K.; Yu, L.; Wang, P.; Zhang, Y. Remote Sensing Image Semantic Change Detection Boosted by Semi-Supervised Contrastive Learning of Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5624113.

46. Lin, H.; Wang, H.; Yin, J.; Yang, J. Local Climate Zone Classification via Semi-Supervised Multimodal Multiscale Transformer. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5212117.

47. Administration of Fisheries and Fisheries Administration of the Ministry of Agriculture and Rural Affairs (Ed.) China Fishery Yearbook; China Agricultural Press: Beijing, China, 2023.

48. Fu, Y.; Deng, J.; Wang, H.; Comber, A.; Yang, W.; Wu, W.; You, S.; Lin, Y.; Wang, K. A new satellite-derived dataset for marine aquaculture areas in China’s coastal region. Earth Syst. Sci. Data 2021, 13, 1829–1842.

49. Ren, C.; Wang, Z.; Zhang, Y.; Zhang, B.; Chen, L.; Xi, Y.; Xiao, X.; Doughty, R.B.; Liu, M.; Jia, M.; et al. Rapid expansion of coastal aquaculture ponds in China from Landsat observations during 1984–2016. Int. J. Appl. Earth Obs. Geoinf. 2019, 82, 101902.

50. Liu, Y.; Wang, Z.; Yang, X.; Zhang, Y.; Yang, F.; Liu, B.; Cai, P. Satellite-based monitoring and statistics for raft and cage aquaculture in China’s offshore waters. Int. J. Appl. Earth Obs. Geoinf. 2020, 91, 102118.

51. Aguilar-Manjarrez, J.; Soto, D.; Brummett, R. Aquaculture Zoning, Site Selection and Area Management Under the Ecosystem Approach to Aquaculture. A Handbook; FAO: Rome, Italy, 2017.

52. Canny, J.F. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 679–698.

53. Wang, Z.; Zhang, J.; Yang, X.; Huang, C.; Su, F.; Liu, X.; Liu, Y.; Zhang, Y. Global mapping of the landside clustering of aquaculture ponds from dense time-series 10 m Sentinel-2 images on Google Earth Engine. Int. J. Appl. Earth Obs. Geoinf. 2022, 115, 103100.

54. Laine, S.; Aila, T. Temporal Ensembling for Semi-Supervised Learning. In Proceedings of the International Conference on Learning Representations (ICLR), Toulon, France, 24–26 April 2017.

55. Sohn, K.; Berthelot, D.; Carlini, N.; Zhang, Z.; Zhang, H.; Raffel, C.A.; Cubuk, E.D.; Kurakin, A.; Li, C.-L. FixMatch: Simplifying Semi-Supervised Learning with Consistency and Confidence. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Virtual, 6–12 December 2020.

56. Yang, L.; Qi, L.; Feng, L.; Zhang, W.; Shi, Y. Revisiting Weak-to-Strong Consistency in Semi-Supervised Semantic Segmentation. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023.

57. Ke, Z.; Qiu, D.; Li, K.; Yan, Q.; Lau, R.W.H. Guided Collaborative Training for Pixel-wise Semi-Supervised Learning. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020.

58. Ouali, Y.; Hudelot, C.; Tami, M. Semi-Supervised Semantic Segmentation with Cross-Consistency Training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

59. Chen, X.; Yuan, Y.; Zeng, G.; Wang, J. Semi-Supervised Semantic Segmentation with Cross Pseudo Supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021.

60. Li, Y.; Yi, Z.; Wang, Y.; Zhang, L. Adaptive Context Transformer for Semisupervised Remote Sensing Image Segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5621714.

| Band type | Band | GF6 resolution | GF6 spectral range | ZY1E resolution | ZY1E spectral range |
|---|---|---|---|---|---|
| Pan | | 2 m | 450–900 nm | 2.5 m | 452–902 nm |
| Spectral | Blue | 8 m | 450–520 nm | 10 m | 452–521 nm |
| Spectral | Green | 8 m | 520–600 nm | 10 m | 522–607 nm |
| Spectral | Red | 8 m | 630–690 nm | 10 m | 635–694 nm |
| Spectral | NIR | 8 m | 760–900 nm | 10 m | 766–895 nm |

| Satellite | Size (pixels) | Resolution | Number of samples |
|---|---|---|---|
| GF6 | 224 × 224 | 2 m | 430 |
| ZY1E | 224 × 224 | 2.5 m | 670 |

| Labeled ratio | Train set (labeled) | Train set (unlabeled) | Validation set | Test set |
|---|---|---|---|---|
| Full | 880 | - | 110 | 110 |
| 1/2 | 440 | 440 | 110 | 110 |
| 1/4 | 220 | 660 | 110 | 110 |
| 1/8 | 110 | 770 | 110 | 110 |
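
The split sizes above follow from simple arithmetic on the 880 training samples: a labeled ratio r keeps 880 × r samples labeled and treats the remainder as unlabeled. The sketch below reproduces those counts; the random shuffle, the fixed seed, and the function name `split_labeled` are assumptions for illustration, since the table only reports the resulting subset sizes.

```python
import random


def split_labeled(sample_ids, ratio, seed=0):
    """Split training sample IDs into labeled and unlabeled subsets.

    `ratio` is the labeled fraction (for example 1/8). The shuffle and seed
    are illustrative assumptions, not taken from the source.
    """
    ids = list(sample_ids)
    random.Random(seed).shuffle(ids)
    n_labeled = round(len(ids) * ratio)
    return ids[:n_labeled], ids[n_labeled:]


train_ids = range(880)  # 880 training samples, as reported in the table
sizes = {}
for name, ratio in [("1/2", 1 / 2), ("1/4", 1 / 4), ("1/8", 1 / 8)]:
    labeled, unlabeled = split_labeled(train_ids, ratio)
    sizes[name] = (len(labeled), len(unlabeled))
    print(name, len(labeled), len(unlabeled))
```

The validation and test sets (110 samples each) stay fixed across all ratios, so only the labeled/unlabeled partition of the training set changes.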

| Method | 1/8 MIOU | 1/8 F1 | 1/8 Kappa | 1/4 MIOU | 1/4 F1 | 1/4 Kappa | 1/2 MIOU | 1/2 F1 | 1/2 Kappa | Params | FLOPs |
|---|---|---|---|---|---|---|---|---|---|---|---|
| FixMatch [55] | 0.7564 | 0.8149 | 0.6558 | 0.7514 | 0.8139 | 0.6531 | 0.8093 | 0.8579 | 0.7382 | 40.35 M | 478.14 G |
| UniMatch [56] | 0.7576 | 0.8066 | 0.6480 | 0.7444 | 0.8094 | 0.6390 | 0.8110 | 0.8522 | 0.7340 | 40.35 M | 637.52 G |
| GCT [57] | 0.8090 | 0.8328 | 0.7068 | 0.8513 | 0.8737 | 0.7854 | 0.8504 | 0.8732 | 0.7840 | 88.98 M | 637.52 G |
| CCT [58] | 0.7948 | 0.8350 | 0.7044 | 0.8314 | 0.8594 | 0.7575 | 0.8423 | 0.8666 | 0.7716 | 40.35 M | 159.38 G |
| CPS [59] | 0.8346 | 0.8623 | 0.7639 | 0.8542 | 0.8771 | 0.7924 | 0.8576 | 0.8878 | 0.8040 | 80.70 M | 637.52 G |
| Semi-BSU | 0.8321 | 0.8587 | 0.7558 | 0.8554 | 0.8876 | 0.7991 | 0.8606 | 0.8896 | 0.8080 | 1.81 M | 55.71 G |
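
The MIOU, F1, and Kappa values above can all be derived from a pixel-level confusion matrix. The sketch below uses the standard binary definitions and assumes that MIOU averages the IoU of the pond and background classes and that F1 refers to the pond class; the source may define the indicators differently, and the counts in the example are made up for illustration.

```python
def segmentation_metrics(tp, fp, fn, tn):
    """Return (mIoU, F1, kappa) for a binary pond/background confusion matrix.

    Assumes mIoU is the mean IoU over both classes and F1 is computed for
    the pond (positive) class. These choices are assumptions.
    """
    total = tp + fp + fn + tn
    iou_pond = tp / (tp + fp + fn)
    iou_background = tn / (tn + fp + fn)
    miou = (iou_pond + iou_background) / 2

    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)

    # Cohen's kappa: observed agreement corrected for chance agreement.
    p_observed = (tp + tn) / total
    p_chance = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / total**2
    kappa = (p_observed - p_chance) / (1 - p_chance)
    return miou, f1, kappa


# Toy pixel counts, not taken from the paper's experiments.
miou, f1, kappa = segmentation_metrics(tp=40, fp=10, fn=10, tn=40)
print(round(miou, 4), round(f1, 4), round(kappa, 4))
```

Kappa is typically lower than F1 on the same predictions because it discounts the agreement expected by chance, which matches the ordering of the three columns in the table.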

| Method | BCC | SRM | 1/8 MIOU | 1/8 F1 | 1/8 Kappa | 1/4 MIOU | 1/4 F1 | 1/4 Kappa | 1/2 MIOU | 1/2 F1 | 1/2 Kappa | Full MIOU | Full F1 | Full Kappa |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SupOnly | | | 0.7964 | 0.8270 | 0.6947 | 0.8163 | 0.8455 | 0.7307 | 0.8366 | 0.8620 | 0.7620 | 0.8573 | 0.8870 | 0.8027 |
| I | ✓ | | 0.7969 | 0.8351 | 0.7017 | 0.8470 | 0.8713 | 0.7810 | 0.8407 | 0.8656 | 0.7696 | - | - | - |
| II | | ✓ | 0.8119 | 0.8483 | 0.7270 | 0.8476 | 0.8781 | 0.7940 | 0.8507 | 0.8809 | 0.7911 | - | - | - |
| III | ✓ | ✓ | 0.8321 | 0.8587 | 0.7558 | 0.8554 | 0.8876 | 0.7991 | 0.8606 | 0.8896 | 0.8080 | - | - | - |

| Method | 1/8 B-IOU | 1/8 B-F1 | 1/4 B-IOU | 1/4 B-F1 | 1/2 B-IOU | 1/2 B-F1 | Full B-IOU | Full B-F1 |
|---|---|---|---|---|---|---|---|---|
| SupOnly | 0.1739 | 0.2854 | 0.1928 | 0.3134 | 0.2013 | 0.3258 | 0.2360 | 0.3726 |
| +BCC | 0.1817 | 0.2966 | 0.2198 | 0.3511 | 0.2075 | 0.3346 | - | - |
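
Boundary metrics such as B-IOU and B-F1 score only the pixels along object outlines, which is why their values are much lower than region-level MIOU. The sketch below is one simple way to compute them, assuming a boundary pixel is any foreground pixel with a 4-neighbour that is background or outside the image, and using no distance tolerance; the exact boundary definition used in the paper is not given here, and the function names are hypothetical.

```python
def boundary_pixels(mask):
    """Return the set of (row, col) foreground pixels that touch background.

    A foreground pixel counts as boundary if any 4-neighbour is background
    or lies outside the image. This boundary definition is an assumption.
    """
    h, w = len(mask), len(mask[0])
    boundary = set()
    for r in range(h):
        for c in range(w):
            if not mask[r][c]:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if not (0 <= nr < h and 0 <= nc < w) or not mask[nr][nc]:
                    boundary.add((r, c))
                    break
    return boundary


def boundary_iou_f1(pred, target):
    """Return (IoU, F1) between the boundary pixel sets of two binary masks."""
    bp, bt = boundary_pixels(pred), boundary_pixels(target)
    inter = len(bp & bt)
    union = len(bp | bt)
    iou = inter / union if union else 1.0
    f1 = 2 * inter / (len(bp) + len(bt)) if (bp or bt) else 1.0
    return iou, f1


# Toy 5x5 masks: the prediction is one column wider than the target.
target = [[1 if 1 <= r <= 3 and 1 <= c <= 3 else 0 for c in range(5)] for r in range(5)]
pred = [[1 if 1 <= r <= 3 and 1 <= c <= 4 else 0 for c in range(5)] for r in range(5)]
b_iou, b_f1 = boundary_iou_f1(pred, target)
print(round(b_iou, 4), round(b_f1, 4))
```

Even a one-pixel shift of an edge removes several boundary pixels from the intersection, so small gains in these metrics can reflect a visible improvement in edge alignment.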

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gan, Y.; Cheng, B.; Li, C.; Fu, W.; Zhang, X. Semi-BSU: A Boundary-Aware Semi-Supervised Semantic Segmentation Framework with Superpixel Refinement for Coastal Aquaculture Pond Extraction from Remote Sensing Images. Remote Sens. 2025, 17, 3733. https://doi.org/10.3390/rs17223733