Highlights

What are the main findings?

- A hierarchical prompting framework that makes coarse-to-fine decisions significantly improves remote sensing scene recognition, especially on fine-grained categories.

- With lightweight parameter-efficient adaptation (LoRA/QLoRA), even a 7B vision–language model can match or surpass full fine-tuning while using much less compute and labeled data.

What are the implications of the main findings?

- The method generalizes well to new datasets and sensing domains with limited or no retraining, showing strong zero-shot and few-shot transferability for real-world remote sensing tasks.

- We provide five structured AID dataset variants and a reproducible evaluation protocol, offering a practical benchmark for robust and transparent assessment of remote sensing VLMs.

Abstract

Vision–language models (VLMs) show strong potential for remote-sensing scene classification but still struggle with fine-grained categories and distribution shifts. We introduce a hierarchical prompting framework that decomposes recognition into a coarse-to-fine decision process with structured outputs, combined with parameter-efficient adaptation using LoRA/QLoRA. To evaluate robustness without depending on external benchmarks, we construct five protocol variants of the AID (V0–V4) that systematically vary label granularity, class consolidation, and augmentation settings. Each variant is designed to align with a specific prompting style and hierarchy. The data pipeline follows a strict split-before-augment strategy, in which augmentation is applied only to the training split to avoid train-test leakage. We further audit leakage using rotation/flip–invariant perceptual hashing across splits to ensure reproducibility. Experiments on all five AID variants show that hierarchical prompting consistently outperforms non-hierarchical prompts and matches or exceeds full fine-tuning, while requiring substantially less compute. Ablation studies on prompt design, adaptation strategy, and model capacity—together with confusion matrices and class-wise metrics—indicate improved recognition at both coarse and fine levels, as well as robustness to rotations and flips. The proposed framework provides a strong, reproducible baseline for remote-sensing scene classification under constrained compute and includes complete prompt templates and processing scripts to support replication.

1. Introduction

1.1. Background and Significance

Remote sensing scene classification has become a pivotal task in the field of geospatial analysis, driven by its critical applications in urban planning, agricultural monitoring, disaster management, and environmental surveillance. Accurately identifying and categorizing land cover and land-use types from aerial or satellite imagery provides essential information for decision-making in both civilian and governmental sectors. Traditionally, scene classification approaches have relied on handcrafted feature extraction methods or supervised deep learning frameworks, such as convolutional neural networks (CNNs), which require extensive labeled datasets and considerable computational resources [1]. Although deep learning methods have substantially improved classification performance, their dependence on task-specific annotations and limited generalization to unseen scenarios remain significant challenges.

The advent of large-scale vision-language models (VLMs), such as CLIP [2] and Flamingo [3], has opened new avenues for remote sensing applications. By pretraining on massive image-text pairs, VLMs possess the ability to encode rich multimodal representations that generalize across diverse domains without requiring extensive retraining. This capability is particularly promising for remote sensing, where annotated data are often scarce or costly to obtain. However, effectively adapting these powerful models to specialized tasks such as scene classification remains a non-trivial problem. The performance of VLMs heavily depends on the design of prompts used to query the model, and naive prompting often fails to elicit optimal task-specific behavior.

1.2. Related Work

Remote sensing scene classification has been a longstanding research topic in geospatial analytics. Early methods predominantly relied on handcrafted features, such as textures, edges, or spectral signatures, combined with traditional machine learning classifiers [4]. With the advent of deep learning, convolutional neural networks (CNNs) became the dominant paradigm, achieving substantial performance improvements on various remote sensing benchmarks [5,6,7,8,9,10,11]. Despite their success, these models typically require large amounts of labeled data for each task and often lack generalization capabilities across different domains or sensor types [12]. More recent efforts have also explored Transformer-style backbones and attention mechanisms for remote sensing recognition, but these approaches still tend to assume supervised training on each new dataset, which limits scalability in low-label or cross-sensor regimes.

In parallel, the development of large-scale vision–language models (VLMs) has revolutionized multimodal understanding tasks. Models like CLIP [13] and Flamingo [3], trained on billions of image–text pairs, exhibit remarkable zero-shot transferability and cross-modal reasoning abilities. While such models have demonstrated impressive results in natural-image domains, their application to remote sensing remains relatively under-explored, with challenges arising from domain shifts, specialized scene semantics, and differences in visual patterns compared to natural images [14]. Remote sensing imagery often contains fine-grained land-use categories, multi-scale spatial structures, and sensor-dependent appearance variations (e.g., seasonal, off-nadir, or hyperspectral effects) that are not present in typical web-scale image–text data. Recent efforts such as RemoteCLIP [14] have begun adapting large vision–language pretraining to the remote sensing domain, but performance can still degrade under cross-domain shifts or for rare classes.

Prompt engineering has emerged as a crucial technique for adapting pretrained VLMs to downstream tasks without requiring extensive retraining. Structured prompt designs have been shown to significantly influence model outputs by steering attention and reasoning pathways [15,16]. Hierarchical reasoning strategies [17,18], which decompose complex classification tasks into sequential sub-decisions, further align model processing with human cognitive mechanisms and have been successfully applied in few-shot and multimodal contexts [19,20,21]. These approaches suggest that the way the task is posed (for example, through a coarse-to-fine taxonomy or stepwise instructions) can be as important as the backbone itself. However, most existing prompting strategies in remote sensing either (i) assume a flat label space or (ii) do not explicitly exploit the hierarchical structure of land-cover semantics.

In current remote sensing VLM usage, two prompting paradigms dominate. First, many works adopt a one-shot, zero-shot classification style in which the model is prompted once and forced to choose a single label from the entire taxonomy of scene categories in a single step [14,22,23]. This “flat” formulation treats all fine-grained classes as competing simultaneously and returns only one final label, without exposing any intermediate rationale or enforcing consistency with higher-level land-use groupings. Second, other works frame remote sensing understanding as open-ended captioning, prompting the model to freely describe the scene in natural language rather than commit to a controlled label space [12,17,24]. These caption-style prompts emphasize human-readable descriptions (e.g., enumerating visible structures or land-use cues) but impose no structural constraints: the output is unstructured text rather than a controlled set of semantic fields, and is therefore difficult to parse automatically or align with standard land-cover taxonomies. Neither paradigm explicitly encodes the hierarchical, multi-label nature of remote sensing scene semantics, and neither guarantees a stable, machine-readable output format.

In contrast, our Hierarchical Prompt Engineering (HPE) formalizes inference as a two-stage decision process aligned with an explicit ontology (Table 1): the model first predicts a coarse superclass and then refines the decision within that branch. We further constrain the model’s response to predefined slots rather than free-form text, which enables consistent parsing, error analysis, and reproducibility. By reducing the effective label search space at inference time, HPE mitigates confusion between visually similar subclasses (e.g., “dense residential” vs. “medium residential”), yields interpretable justifications, and improves robustness under domain shift. Importantly, this prompting scheme is lightweight: it can be applied across different VLM backbones (7B–72B) and datasets (AID variants, RSSCN7) without retraining, making it suitable for practical remote sensing workflows.

Table 1.

Mapping from all 45 fine-grained scene categories to the four coarse classes used by the proposed Hierarchical Prompt Engineering (HPE). This ontology is fixed for all experiments and inference.

Parameter-efficient fine-tuning approaches, such as Low-Rank Adaptation (LoRA) [25] and Quantized LoRA (QLoRA) [26], have been proposed to enable the efficient adaptation of large models with minimal computational overhead. These methods introduce a small number of trainable parameters into the frozen backbone of the model, allowing for rapid task-specific specialization without full-parameter updates. In resource-constrained environments or when fine-tuning ultra-large models, such techniques offer a practical alternative to traditional training pipelines, balancing performance gains with efficiency. Recent work in remote sensing has begun to investigate similar lightweight adaptation schemes for multimodal or hyperspectral models, indicating that full end-to-end retraining is no longer the only viable path for high-quality scene understanding [27].
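To make the LoRA idea [25] concrete, the NumPy sketch below wraps a single frozen dense layer with a trainable low-rank update scaled by alpha / r. It is a minimal illustration under stated assumptions: the class name `LoRALinear`, the defaults `rank=8` and `alpha=16`, and the initialization scale are illustrative choices, not the configuration used in this work and not the ms-swift or PEFT implementation.

```python
import numpy as np


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / rank) * B @ A.

    Illustrative sketch only; names and defaults are hypothetical.
    """

    def __init__(self, weight: np.ndarray, rank: int = 8, alpha: float = 16.0, seed: int = 0):
        d_out, d_in = weight.shape
        rng = np.random.default_rng(seed)
        self.weight = weight                               # frozen: never updated
        self.A = rng.normal(0.0, 0.01, size=(rank, d_in))  # trainable down-projection
        self.B = np.zeros((d_out, rank))                   # trainable, zero-init => adapter starts as a no-op
        self.scale = alpha / rank

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # x: (batch, d_in) -> (batch, d_out); frozen path plus low-rank path
        return x @ self.weight.T + self.scale * (x @ self.A.T) @ self.B.T

    def merged_weight(self) -> np.ndarray:
        # After training, the update can be folded into W so inference cost is unchanged.
        return self.weight + self.scale * self.B @ self.A

    def trainable_params(self) -> int:
        return self.A.size + self.B.size
```

For a 4096 × 4096 projection with rank 8, the adapter trains 8 × (4096 + 4096) = 65,536 parameters instead of 16,777,216, i.e., about 0.39% of the layer, which is the source of the memory savings discussed above.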

Beyond generic CNN/Transformer pipelines and generic VLMs, there is a rapidly growing line of domain-specific architectures tailored to remote sensing imagery. Capsule-style attention networks explicitly encode each land-cover entity as a vector “capsule”, whose magnitude and orientation capture semantic attributes such as texture, shape, or material. By integrating attention at both the feature extraction and routing stages, Capsule Attention Networks have been shown to outperform strong CNN, Transformer, and state-space (e.g., sequence-model) baselines on hyperspectral image (HSI) classification, while using fewer parameters and lower computational cost [27]. A second direction treats remote sensing data—especially hyperspectral cubes—as structured, approximately low-rank tensors. Multi-order or multi-dimensional low-rank formulations decompose the data along spatial and spectral modes, enforce low-rankness (e.g., via Schatten p-norm style constraints), and model anomalies as sparse residuals. These methods achieve state-of-the-art anomaly detection accuracy and robustness to noise with efficient alternating-direction solvers, highlighting that carefully designed tensor factorization can deliver competitive performance under limited supervision [28]. A third emerging direction frames scene understanding as large-scale graph learning. Doubly stochastic graph projected clustering jointly learns pixel–anchor and anchor–anchor graphs and enforces probabilistic consistency (symmetry, non-negativity, row-stochastic constraints) to directly yield high-quality cluster assignments for large hyperspectral scenes, improving overall accuracy, normalized mutual information, and purity without resorting to heavy iterative post-processing [29]. 
Together, these methods illustrate that the remote sensing community is already pushing toward architectures that (i) exploit domain structure, (ii) reduce annotation cost, and (iii) scale to large scenes with better efficiency than naïve CNN baselines.

However, these specialized architectures are typically designed and trained for a specific sub-task (e.g., supervised HSI classification, hyperspectral anomaly detection, or unsupervised large-scale clustering) and often require dataset-specific re-engineering when moving to a new geographic region, sensor, or label space. They do not directly address open-vocabulary or hierarchical multi-label understanding, and they generally do not provide natural-language rationales.

Building upon these prior advancements, this work explores the integration of hierarchical prompting and LoRA-based fine-tuning to adapt large vision–language models for remote sensing scene classification, multi-label tagging, and cross-domain generalization. Our approach aims to bridge the gap between (i) powerful but generic multimodal pretraining and (ii) highly specialized remote sensing architectures. Concretely, we (1) impose a coarse-to-fine hierarchical prompting scheme that mirrors the semantic structure of land-use taxonomies, (2) apply parameter-efficient adaptation to large VLM backbones rather than retraining a task-specific model from scratch, and (3) evaluate both in-domain accuracy and out-of-domain transfer. We therefore position Hierarchical Prompt Engineering with VLMs as a complementary alternative to recent capsule-attention, low-rank tensor, and doubly stochastic graph models [27,28,29], rather than as a replacement whose case rests only on comparisons with weaker baselines.

1.3. Proposed Approach and Contributions

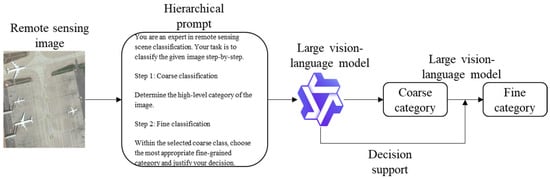

In this work, we investigate how structured, Hierarchical Prompt Engineering (Figure 1) can enhance the performance of VLMs for remote sensing scene classification. Instead of relying on a single-step prediction, we propose a hierarchical framework that decomposes the task into two sequential stages: an initial coarse classification followed by fine-grained category selection. This structure mirrors the human cognitive process of progressively refining visual interpretations and enables the model to leverage contextual reasoning more effectively. To rigorously evaluate the proposed framework, we conduct systematic comparisons across different prompt designs, fine-tuning techniques (including LoRA-based adaptation and quantization strategies), and model scales ranging from 7B to 72B parameters.

Figure 1.

Implementation of the hierarchical prompting pipeline.

Moreover, we present comprehensive ablation studies and accuracy analyses across multiple variants of the Aerial Image Dataset (AID) [30], progressively refining both data quality and task complexity. Our results demonstrate that hierarchical prompting not only improves classification accuracy but also enhances model robustness and interpretability, offering a scalable solution for applying VLMs to remote sensing tasks with limited labeled data.

2. Materials and Methods

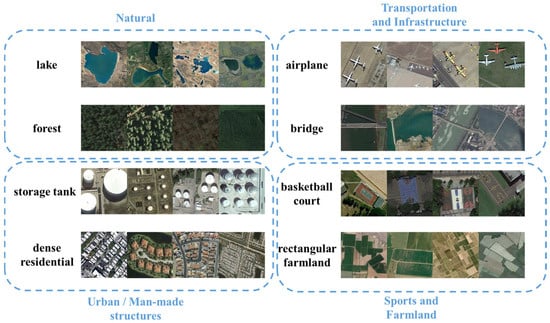

We present our hierarchical prompting framework for coarse-to-fine remote sensing classification. Example images from selected AID categories are shown in Figure 2.

Figure 2.

Example images of selected categories from the AID.

2.1. AID Preparation

We construct five progressively refined variants of the Aerial Image Dataset (AID) to improve data quality, reduce label noise, and provide fair and reproducible evaluation. For all variants, unless otherwise stated, we first define a fixed train/validation/test split on the original images and their labels, and all subsequent processing (augmentation, cleaning, class merging) is applied only to the training portion. This prevents any leakage of test samples into training.

V0: The original AID with 45 scene categories. No data augmentation is applied. The fixed train/validation/test split is defined directly on the raw images.

V1: Standard geometric data augmentation (e.g., rotation, flipping, scaling) is applied to all samples before splitting. In other words, augmented images are treated as independent samples, and only then is the dataset split into train/validation/test. This increases data volume, but augmented copies of the same source image may end up in different splits, which introduces a mild form of train–test leakage.

V2: To avoid such leakage, we first split the original images into train/validation/test and only then apply augmentation to the training set. The validation and test sets remain untouched, so no augmented version of a test image ever appears in training.

V3: We reduce label noise in AID by consolidating visually overlapping scene categories and removing ambiguous samples. In total, we merged 6 groups of near-synonymous classes, which reduces the label space from 45 to 39 categories, and we discarded 17 borderline images that could not be confidently assigned. A full list of merged categories and cleaning rules is provided in Appendix B.2.

V4: We apply post-cleaning augmentation to the V3 training set only, again without altering the validation and test sets. This produces the final, high-quality benchmark variant [31].
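The split-before-augment rule used for V2 and V4 can be sketched as follows: the split is made on source image IDs (stratified per class), and only then are training images expanded with augmented copies. The split ratios, seed, augmentation names, and the `#` suffix convention for derived sample IDs are all hypothetical choices for illustration; the paper's actual split sizes are summarized in Table 2.

```python
import random
from collections import defaultdict

# Hypothetical augmentation names; the paper lists rotation, flipping, and scaling.
AUG_OPS = ("orig", "rot90", "rot180", "rot270", "hflip")


def split_then_augment(samples, ratios=(0.7, 0.1, 0.2), seed=0):
    """samples: list of (image_id, label). Returns {"train", "val", "test"} lists.

    The split is decided on source image IDs first (per class); augmentation
    is applied afterwards to the training split only.
    """
    by_label = defaultdict(list)
    for image_id, label in samples:
        by_label[label].append(image_id)

    rng = random.Random(seed)
    splits = {"train": [], "val": [], "test": []}
    for label in sorted(by_label):
        ids = sorted(by_label[label])
        rng.shuffle(ids)
        n_train = int(round(len(ids) * ratios[0]))
        n_val = int(round(len(ids) * ratios[1]))
        splits["train"] += [(i, label) for i in ids[:n_train]]
        splits["val"] += [(i, label) for i in ids[n_train:n_train + n_val]]
        splits["test"] += [(i, label) for i in ids[n_train + n_val:]]

    # Augment only after splitting, and only the training split.
    splits["train"] = [(f"{i}#{op}", label) for i, label in splits["train"] for op in AUG_OPS]
    return splits


def source_id(sample_id: str) -> str:
    """Recover the original image ID from a (possibly augmented) sample ID."""
    return sample_id.split("#", 1)[0]
```

Reversing the order (augmenting first, as in V1) would let, for example, `img001#orig` land in training and `img001#rot90` in testing; splitting on source IDs rules this out by construction.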

A summary of the construction process is provided in Table 2.

Table 2.

Summary of AID versions.
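The abstract mentions a rotation/flip-invariant perceptual-hash audit across splits. The sketch below shows one way such an audit could work, assuming an 8 × 8 average hash (aHash) on square grayscale images; the paper does not name the specific hash, grid size, or distance threshold, so `max_dist=4` and all function names are hypothetical. Invariance comes from hashing all eight rotations/flips of the test image and taking the minimum Hamming distance to the training hash.

```python
import numpy as np


def _block_sums(img: np.ndarray, size: int = 8) -> np.ndarray:
    """Downsample a square grayscale image to a size x size grid of integer block sums."""
    h, w = img.shape
    if h != w or h % size:
        raise ValueError("expected a square image whose side is a multiple of size")
    b = h // size
    return img.astype(np.int64).reshape(size, b, size, b).sum(axis=(1, 3))


def _ahash(grid: np.ndarray) -> int:
    # Bit is 1 where a block is brighter than the grid mean (integer comparison, no rounding).
    bits = (grid * grid.size > grid.sum()).ravel()
    value = 0
    for bit in bits:
        value = (value << 1) | int(bit)
    return value


def dihedral_hashes(img: np.ndarray) -> list:
    """Average hashes of all 8 rotations/flips of the downsampled image."""
    grid = _block_sums(img)
    hashes = []
    for k in range(4):
        rotated = np.rot90(grid, k)
        hashes.append(_ahash(rotated))
        hashes.append(_ahash(np.fliplr(rotated)))
    return hashes


def hamming(a: int, b: int) -> int:
    return bin(a ^ b).count("1")


def cross_split_duplicates(train_imgs: dict, test_imgs: dict, max_dist: int = 4) -> list:
    """Return (test_name, train_name) pairs that look like near-duplicates under rotation/flip."""
    train_hash = {name: dihedral_hashes(img)[0] for name, img in train_imgs.items()}
    hits = []
    for test_name, img in test_imgs.items():
        variants = dihedral_hashes(img)
        for train_name, h in train_hash.items():
            if min(hamming(v, h) for v in variants) <= max_dist:
                hits.append((test_name, train_name))
    return hits
```

The pairwise loop is quadratic in the number of images; for a dataset of AID's size that is still tractable, although an index over hash prefixes would scale better.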

2.2. Prompt Design

To effectively guide large vision–language models (VLMs) in remote sensing scene classification, we propose a structured prompting scheme that we refer to as Hierarchical Prompt Engineering (HPE).

In this work, structured prompting means that the prompt is not a single free-form instruction, but an explicit, task-aligned template, as follows:

- (i) a predefined decision flow that first predicts a coarse scene type and then refines it to a fine-grained category;

- (ii) an ontology-based label space (Table 1) that restricts each decision step to only the relevant subset of classes;

- (iii) a constrained output schema that forces the model to answer in machine-readable fields rather than produce an unconstrained caption.

This differs from conventional “flat” prompts that either (a) ask the model to pick one label from the full list in a single step (“Which category best matches this image?”), or (b) ask the model to freely describe the scene in natural language. Such flat prompting does not exploit the hierarchical structure of land-use taxonomies, tends to confuse visually similar subclasses, and produces outputs that are harder to evaluate quantitatively or reuse downstream. By contrast, our structured prompting turns scene understanding into a controlled, two-stage reasoning process aligned with how remote sensing analysts (and human vision in general) move from a broad functional context to a specific site type.

As shown in Table 3, we instantiate this idea through three progressively refined prompt templates, denoted V0–V2, which evolve from unstructured direct classification to fully structured hierarchical reasoning. The detailed prompt wordings are provided in Appendix A.

Table 3.

Summary of prompt designs (V0–V2).

V0 (Direct classification). The model is given the full list of fine-grained scene categories and asked to select the single best match without justification. This emphasizes speed and simplicity, but it also increases the risk of misclassification in fine-grained or visually ambiguous cases because all classes compete simultaneously.

V1 (Coarse-to-fine hierarchical reasoning). The model is first asked to assign the image to one of four coarse superclasses (Natural; Urban/Man-made; Transportation and Infrastructure; Sports and Farmland), and then to choose a specific fine-grained class within that superclass only. At each step, the model is explicitly prompted to provide a short explanation, which improves interpretability and allows us to inspect whether the reasoning is consistent with the visual evidence. This two-step decomposition mirrors cognitive theories of visual recognition, where humans typically categorize scenes by first identifying high-level context and then narrowing down to specific subtypes. Progressing from high-level to low-level categories reduces semantic confusion across unrelated classes, promotes focused discrimination among contextually similar options, and ultimately improves fine-grained accuracy.

V2 (Streamlined hierarchical reasoning). V2 preserves the same hierarchical decision flow as V1 but simplifies the instructions and enforces a fixed response format. Instead of open-ended sentences, the model must output three slots (the coarse class, the fine-grained class, and a brief justification) in a consistent, parseable template. This reduces the cognitive load on the model and stabilizes its behavior across images and datasets.
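Because V2 fixes the response layout, its answers can be parsed and checked mechanically. The sketch below assumes the slot layout shown in Section 3.2.1 (`|<Coarse class>|…|<Fine class>|…|<Reasoning>|…`), with each slot tag followed by its value and no `|` inside values; the function names are hypothetical and the exact template is given in Appendix A. It also flags answers whose fine class falls outside the predicted coarse branch.

```python
SLOTS = ("<Coarse class>", "<Fine class>", "<Reasoning>")


def parse_slots(response: str) -> dict:
    """Parse a V2-style answer, e.g.
    '|<Coarse class>|Natural|<Fine class>|forest|<Reasoning>|dense canopy|'.
    Assumes each tag is followed by its value and values contain no '|'."""
    tokens = [t.strip() for t in response.strip().split("|")]
    slots = {}
    for tag, value in zip(tokens, tokens[1:]):
        if tag in SLOTS and value not in SLOTS:
            slots[tag] = value
    missing = [s for s in SLOTS if not slots.get(s)]
    if missing:
        raise ValueError(f"unparseable response, missing slots: {missing}")
    return slots


def is_consistent(slots: dict, ontology: dict) -> bool:
    """True if the fine class lies inside the branch of the predicted coarse class."""
    return slots["<Fine class>"] in ontology.get(slots["<Coarse class>"], ())
```

Raising on missing slots, rather than guessing, keeps malformed generations visible in the error analysis instead of silently counting them as some class.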

Detailed construction rules for the dataset variants (V0–V4) and the rationale for aligning prompt styles with each variant are provided in Table A1.

To make the proposed hierarchical taxonomy explicit, we list the mapping from all fine-grained scene categories to their corresponding coarse-level parent categories. This fixed mapping is used throughout all experiments to (i) construct the hierarchical prompts and (ii) guide the two-stage inference procedure (first predict the coarse domain, then refine to a fine-grained label). The complete mapping is summarized in Table 1.

Table 1 shows how each of the 45 fine-grained classes is assigned to one of four coarse-level superclasses: Natural, Urban/Man-made, Transportation and Infrastructure, and Sports and Farmland. This explicit ontology is the foundation of our Hierarchical Prompt Engineering (HPE): the model is first prompted with a coarse-level question and is then prompted again with only the fine-grained options that belong to the predicted superclass. By restricting the second-stage candidates to the relevant branch, HPE reduces label ambiguity and improves robustness on visually similar categories across datasets.
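The two-stage procedure can be sketched as a small driver around a model call. Here `ask(image, question, options)` is a hypothetical stand-in for a VLM query that returns one of the offered options, the question wording is illustrative (the real prompts are in Appendix A), and the ontology is a hypothetical excerpt: only "airport" under "Transportation and Infrastructure" is stated in the text, so the other placements are placeholders rather than Table 1 entries.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical excerpt of the Table 1 ontology (placements other than "airport" are placeholders).
ONTOLOGY: Dict[str, List[str]] = {
    "Natural": ["forest", "river"],
    "Urban/Man-made": ["dense residential", "medium residential"],
    "Transportation and Infrastructure": ["airport", "bridge"],
    "Sports and Farmland": ["farmland", "stadium"],
}

# Hypothetical VLM interface: ask(image, question, options) -> one option string.
Ask = Callable[[object, str, Sequence[str]], str]


def hierarchical_classify(image, ask: Ask, ontology: Dict[str, List[str]] = ONTOLOGY) -> Tuple[str, str]:
    """Two-stage coarse-to-fine inference over a fixed ontology."""
    coarse_options = list(ontology)
    coarse = ask(image, "Step 1: Which coarse scene group does this image belong to?", coarse_options)
    if coarse not in ontology:
        raise ValueError(f"coarse answer outside the ontology: {coarse!r}")
    # Stage 2 only sees the fine classes of the predicted branch.
    fine_options = ontology[coarse]
    fine = ask(image, f"Step 2: Within '{coarse}', which specific scene class fits best?", fine_options)
    if fine not in fine_options:
        raise ValueError(f"fine answer {fine!r} is not in branch {coarse!r}")
    return coarse, fine


def options_per_step(ontology: Dict[str, List[str]]) -> Tuple[int, int]:
    """(candidates in one flat prompt, largest candidate list in any hierarchical step)."""
    flat = sum(len(v) for v in ontology.values())
    hierarchical = max(len(ontology), max(len(v) for v in ontology.values()))
    return flat, hierarchical
```

With the full ontology, a flat prompt exposes all 45 classes at once, whereas each hierarchical step exposes at most the larger of 4 and the biggest branch; `options_per_step` makes that reduction explicit for any mapping.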

The detailed implementation process of the hierarchical prompt is shown in Figure 4 of Section 3.2.1.

2.3. External Evaluation Datasets and Label Mapping

We further evaluate cross-domain generalization on three small but widely used remote-sensing datasets: UC Merced [5] (UCM, 21 classes), WHU-RS19 [33] (19 classes), and RSSCN7 [34] (7 classes). To ensure a consistent hierarchy, classes from each dataset are mapped to our four coarse groups (Natural, Urban/Man-made, Transportation and Infrastructure, Sports and Farmland). Fine-grained evaluation is conducted on the intersection of class names with AID; non-overlapping classes contribute to the coarse metric only. Detailed class-name mapping is provided in Appendix C.
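The evaluation rule for external datasets (every sample counts toward coarse accuracy, but only classes shared with AID count toward fine-grained accuracy) can be sketched as below. The name normalization (lower-case, letters and digits only) is an assumption standing in for the explicit class-name mapping in Appendix C, and the function names are hypothetical.

```python
def _norm(name: str) -> str:
    """Hypothetical name normalisation: lower-case, keep letters and digits only."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def evaluate_external(records, coarse_of, aid_fine_classes):
    """records: iterable of (true_class, pred_coarse, pred_fine) for one external dataset.
    coarse_of: external class name -> coarse group (stand-in for the Appendix C mapping).
    aid_fine_classes: AID class names; fine-grained accuracy uses only samples whose
    true class also exists in AID, while every sample counts toward coarse accuracy."""
    coarse_of = {_norm(k): v for k, v in coarse_of.items()}
    shared = {_norm(c) for c in aid_fine_classes}
    coarse_hits = coarse_total = fine_hits = fine_total = 0
    for true_class, pred_coarse, pred_fine in records:
        key = _norm(true_class)
        coarse_total += 1
        coarse_hits += int(pred_coarse == coarse_of[key])
        if key in shared:
            fine_total += 1
            fine_hits += int(_norm(pred_fine) == key)
    return {
        "coarse_acc": coarse_hits / coarse_total,
        "fine_acc": fine_hits / fine_total if fine_total else None,
        "fine_support": fine_total,
    }
```

Reporting `fine_support` alongside the fine-grained accuracy makes it clear how many samples the intersection actually covers for each dataset.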

3. Results

3.1. Experimental Setup

3.1.1. Hyperparameters

VLM Baselines. We used a fixed set of hyperparameters across all experiments unless otherwise specified. The learning rate was set to and the number of training epochs was typically 5, 10, or 15, depending on the experimental configuration. The primary training strategy was Low-Rank Adaptation (LoRA), while full fine-tuning and LoRA combined with DeepSpeed ZeRO-3 optimization [35] were also explored for comparison. A size scaling factor of 8 was employed by default, with some experiments adopting a larger factor of 28 to assess its impact on memory and performance. For large models such as Qwen2VL-72B and Qwen2.5VL-72B, 4-bit quantization was applied to enable efficient training and inference [36,37]. All experiments were implemented in the ms-swift framework [38] and optimized with AdamW, using mixed-precision (FP16) training to reduce memory use and increase training throughput.
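To show what 4-bit weight quantization does to a weight tensor, the sketch below implements plain symmetric absmax int4 quantization per block of 64 values. This is a deliberately simpler substitute: it is not QLoRA's NF4 data type, it omits double quantization, and it stores values in `int8` containers instead of packed nibbles. The block size and function names are hypothetical.

```python
import numpy as np


def quantize_int4_blockwise(w: np.ndarray, block: int = 64):
    """Symmetric absmax 4-bit quantization (levels -7..7) with one scale per block."""
    flat = w.ravel().astype(np.float32)
    pad = (-flat.size) % block
    flat = np.pad(flat, (0, pad))                 # zero-pad so the length divides into blocks
    blocks = flat.reshape(-1, block)
    scales = np.abs(blocks).max(axis=1, keepdims=True) / 7.0
    scales[scales == 0] = 1.0                     # all-zero blocks: avoid division by zero
    q = np.clip(np.round(blocks / scales), -7, 7).astype(np.int8)
    return q, scales, w.shape, pad


def dequantize_int4_blockwise(q: np.ndarray, scales: np.ndarray, shape, pad: int) -> np.ndarray:
    """Map 4-bit codes back to floats and restore the original shape."""
    flat = (q.astype(np.float32) * scales).ravel()
    if pad:
        flat = flat[:-pad]
    return flat.reshape(shape)
```

Per element, the reconstruction error is at most half a quantization step, i.e., the block's absolute maximum divided by 14; per-block scales keep one outlier from inflating the error across the whole tensor.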

CNN Baselines. To contextualize the benefits of hierarchical prompting beyond VLMs, we train classical CNNs under the same data splits and augmentations as our VLM experiments. We use ImageNet-pretrained ResNet-50, MobileNetV2, and EfficientNet-B0 with a linear classification head. Training uses SGD with momentum 0.9, cosine learning-rate decay from 0.01, weight decay 1 × 10−4, label smoothing 0.1, batch size 64, early stopping (patience = 5), and a maximum of 5 epochs. We report Top-1 accuracy and macro-F1, and profile efficiency (trainable parameters, peak GPU memory) to assess deployability.
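Two of the CNN training choices above have short closed forms: cosine learning-rate decay starting at 0.01, and label smoothing with ε = 0.1. The sketch assumes per-step decay to zero with no warm-up (the paper does not state the schedule's floor or warm-up) and uses the common formulation that mixes the one-hot target with a uniform distribution over classes; function names are hypothetical.

```python
import math

import numpy as np


def cosine_lr(step: int, total_steps: int, base_lr: float = 0.01) -> float:
    """Cosine decay from base_lr at step 0 to 0 at total_steps (no warm-up assumed)."""
    progress = min(step, total_steps) / total_steps
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * progress))


def smoothed_targets(labels, num_classes: int, eps: float = 0.1) -> np.ndarray:
    """Label smoothing: (1 - eps) * one_hot + eps / num_classes for every class."""
    labels = np.asarray(labels)
    targets = np.full((labels.size, num_classes), eps / num_classes)
    targets[np.arange(labels.size), labels] += 1.0 - eps
    return targets
```

With 45 classes and ε = 0.1, the true class receives 0.9 + 0.1/45 ≈ 0.902 and every other class about 0.002, which discourages over-confident logits on visually similar scenes.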

3.1.2. Evaluation Metrics

To evaluate the performance of the models, we primarily adopted Top-1 accuracy and macro-F1-score as the metrics for both coarse- and fine-grained classification. Coarse-classification accuracy measures the model’s ability to correctly classify images into high-level categories such as Natural, Urban/Man-made, Transportation and Infrastructure, and Sports and Farmland. Fine-grained accuracy evaluates correctness within each coarse category, targeting specific subclasses. Additionally, we report per-category coarse accuracy to analyze model performance across different scene types. All results are reported on the validation set corresponding to each dataset split.
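The three reported quantities can be computed from lists of true and predicted labels as sketched below. This is an illustrative plain-Python version, not the paper's evaluation script; following a common convention (also scikit-learn's default), macro-F1 averages over the union of true and predicted labels, so a label that is predicted but never true contributes an F1 of 0.

```python
from collections import Counter


def top1_accuracy(y_true, y_pred) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred) -> float:
    """Unweighted mean of per-class F1 over the union of true and predicted labels."""
    classes = sorted(set(y_true) | set(y_pred))
    f1s = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1s.append(2 * precision * recall / (precision + recall) if precision + recall else 0.0)
    return sum(f1s) / len(f1s)


def per_category_accuracy(y_true, y_pred) -> dict:
    """Fraction of each true category that was predicted correctly (per-class recall)."""
    totals = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {c: correct[c] / n for c, n in totals.items()}
```

Macro-F1 weights every class equally, so it exposes weak minority categories that a high overall Top-1 accuracy can hide.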

3.2. Main Results

3.2.1. Ablation Study

- (a) Prompt Engineering Analysis

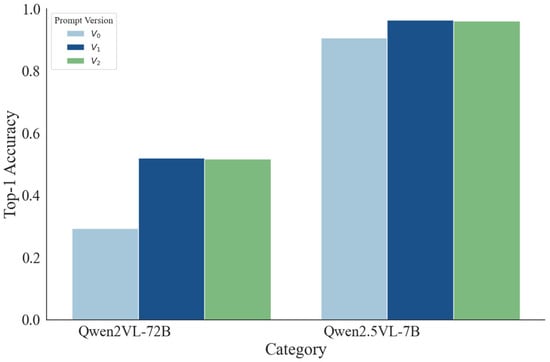

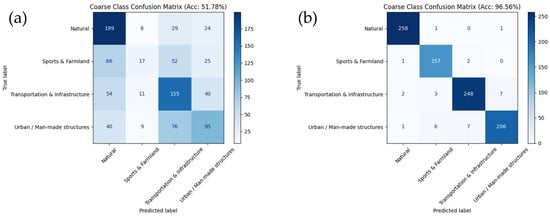

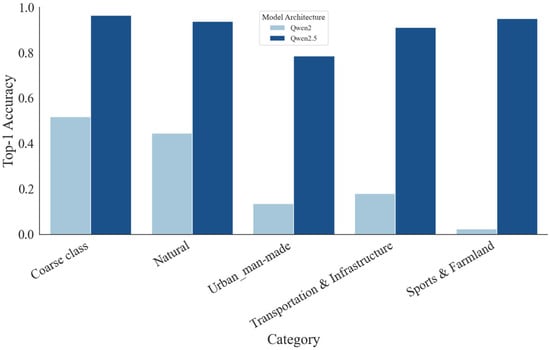

As illustrated in Table 4 and Figure 3, we compare three prompt versions (V0–V2) with Qwen2VL-72B and Qwen2.5VL-7B, both fine-tuned using LoRA and evaluated in the ms-swift framework. V0 is a generic, unstructured instruction; V1 introduces explicit coarse-to-fine hierarchical cues aligned with the label taxonomy; V2 preserves the same hierarchical content as V1 but expresses it in a more compact format. Across both model scales, the decisive factor is the presence of hierarchical guidance: moving from V0 to V1 increases Top-1 accuracy from 29.33% to 52.00% on Qwen2VL-72B and from 90.67% to 96.44% on Qwen2.5VL-7B, whereas changing the format alone (V1→V2) yields only marginal differences (51.78% vs. 52.00% for 72B; 96.11% vs. 96.44% for 7B). These results indicate that structuring the decision process to mirror the label hierarchy, rather than cosmetic prompt reformatting, drives the gains, with noticeable error reductions in categories prone to cross-group confusion (e.g., Transportation and Infrastructure, Urban/Man-made).
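Because the two models start from very different baselines, absolute gains are hard to compare. One way to normalize is the relative error reduction (RER, our shorthand here), computed from the Top-1 values above with error e = 100% − Top-1:

$$
\mathrm{RER} = \frac{e_{V0} - e_{V1}}{e_{V0}}, \qquad
\text{Qwen2VL-72B: } \frac{70.67 - 48.00}{70.67} \approx 0.32, \qquad
\text{Qwen2.5VL-7B: } \frac{9.33 - 3.56}{9.33} \approx 0.62
$$

By this measure, hierarchical guidance removes roughly a third of V0's errors on Qwen2VL-72B and about 62% of them on Qwen2.5VL-7B, so the benefit is substantial even where the absolute gain looks small.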

Table 4.

Top-1 accuracy details of models across different prompt versions.

Figure 3.

Top-1 accuracy comparison between different prompt versions.

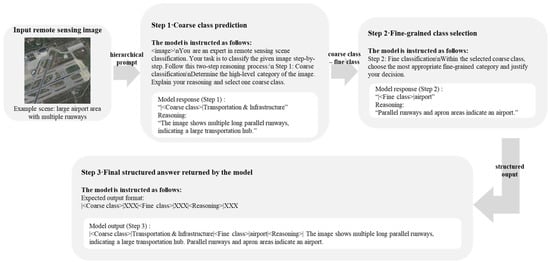

To illustrate how the hierarchical prompt operates in practice, Figure 4 shows a concrete example. In Step 1, the model is explicitly asked to assign the input image to one of four coarse scene groups (“Natural”, “Urban/Man-made”, “Transportation and Infrastructure”, or “Sports and Farmland”) and briefly explain its decision. In Step 2, conditioned on the selected group, the model chooses the most specific fine-grained class (e.g., “airport” within “Transportation and Infrastructure”) and again provides a short justification. The final answer is returned in the structured format |<Coarse class>|…|<Fine class>|…|<Reasoning>|…. This two-stage guidance narrows the search space and prevents the model from confusing semantically unrelated categories, which is the main reason V1/V2 outperform the flat, single-shot baseline V0.

Figure 4.

Qualitative example of the proposed hierarchical prompting pipeline.

- (b) Model Architecture Comparison

To isolate the effect of architecture, we compare Qwen2VL-72B with its upgraded variant Qwen2.5VL-72B, both fine-tuned via LoRA under 4-bit quantization with an identical prompting/training protocol. As shown in Table 5, Figure 5 and Figure 6, the upgraded model delivers a substantial gain in coarse-level classification, raising Top-1 accuracy from 51.78% to 96.56% (+44.78 pp). Consistent improvements appear in every coarse category: Natural improves from 44.62% to 93.85%, Urban/Man-made from 13.64% to 78.64%, Transportation and Infrastructure from 18.08% to 91.15%, and Sports and Farmland from 2.50% to 95.00%. The coarse-level confusion matrices show markedly cleaner diagonals and far fewer cross-group confusions for Qwen2.5VL, particularly within classes that are small-scale or visually ambiguous, whereas Qwen2VL exhibits frequent misallocations across these groups. Taken together, these results indicate that the architectural advances in Qwen2.5VL—enhancing multimodal representation and vision–language alignment—are pivotal for robust remote-sensing scene understanding under the same fine-tuning and quantization budget.
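Coarse-level confusion matrices like those in Figure 5 can be built as sketched below. One design choice is an assumption on our part: an extra column collects answers that fall outside the four coarse groups (for example, unparseable generations), so that such outputs remain in the row totals. Since the diagonal of a row-normalized matrix is per-class recall, dropping invalid answers instead would quietly inflate it. Names are hypothetical.

```python
import numpy as np

COARSE = ["Natural", "Urban/Man-made", "Transportation and Infrastructure", "Sports and Farmland"]
INVALID = "<invalid>"  # hypothetical bucket for answers outside the ontology


def coarse_confusion(y_true, y_pred, labels=COARSE) -> np.ndarray:
    """Row-normalised confusion matrix: rows are true classes, columns are predicted
    classes plus one extra column for unparseable or out-of-ontology answers."""
    cols = list(labels) + [INVALID]
    index = {c: i for i, c in enumerate(cols)}
    counts = np.zeros((len(labels), len(cols)))
    for t, p in zip(y_true, y_pred):
        counts[index[t], index.get(p, index[INVALID])] += 1
    totals = counts.sum(axis=1, keepdims=True)
    # Rows with no samples stay all-zero instead of producing NaNs.
    return np.divide(counts, totals, out=np.zeros_like(counts), where=totals > 0)
```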

Table 5.

Top-1 accuracy details of models based on Qwen2.5 and Qwen2 architectures.

Figure 5.

Coarse-level confusion matrix of Qwen2-based and Qwen2.5-based models. (a) Coarse-level confusion matrix of Qwen2-based model. (b) Coarse-level confusion matrix of Qwen2.5-based model.

Figure 6.

Top-1 accuracy comparison between models based on Qwen2.5 and Qwen2 architectures.

Beyond raw accuracy, we attribute the performance gap primarily to architectural upgrades between Qwen2VL and Qwen2.5VL. Qwen2VL employs a high-capacity vision encoder that supports dynamic input resolution and injects 2D spatial positional information (e.g., via rotary/relative position encodings) before fusing visual tokens into the language backbone. This design already allows the model to preserve fine-grained spatial structure from high-resolution inputs without collapsing them to a fixed size, and to align these spatially grounded tokens with text within a single unified decoder. These choices give Qwen2VL a nontrivial advantage over conventional CNN-based backbones when dealing with complex overhead scenes [39].

Qwen2.5VL extends this recipe in three directions that are directly beneficial for remote sensing. First, the visual encoder is further optimized for very high-resolution imagery by introducing more efficient local-to-global attention patterns and improved normalization/activation schemes. In practice, Qwen2.5VL allocates most attention computation to localized windows while selectively preserving global context, which makes it possible to model dense, cluttered scenes (e.g., ports, industrial blocks, road networks, farmland parcels) at native spatial detail without overwhelming the language backbone. At the same time, upgraded normalization (e.g., RMSNorm-style stabilizers) and modern feed-forward blocks (e.g., gated activations such as SwiGLU) improve the quality and stability of the visual embeddings that are passed to the language model. These changes strengthen the multimodal representation itself: the model can maintain sharper boundaries between semantically adjacent land-cover types and small man-made structures that otherwise tend to be confused [39,40].

Second, Qwen2.5VL includes a refined cross-modal alignment stage that is explicitly trained to bind localized visual evidence to textual concepts. Rather than treating the image as a single holistic scene description, the newer model is further supervised to ground objects, regions, or layout structure in language and to express these bindings in structured outputs. This additional supervision encourages the model to form tighter, more disentangled correspondences between image subregions and semantic labels. For hierarchical remote-sensing classification, this means that fine-grained cues (e.g., “runway”, “container stacks”, “greenhouse fields”) are more reliably attached to the correct coarse-level parent (e.g., Transportation and Infrastructure, Urban/Man-made, Sports and Farmland) rather than leaking across categories as in Qwen2VL [40].

Finally, Qwen2.5VL is trained to operate robustly under long-context, heterogeneous visual inputs (including extremely wide scenes and document-like layouts), and to fuse them with textual instructions under a unified prompting interface. Although our task here is static overhead imagery rather than long video, this training regime implicitly regularizes spatial reasoning at large geographic scales: the upgraded model better handles scenes where multiple land-use types co-exist in one frame (urban fringe with surrounding cropland, highway–harbor interfaces, etc.), and therefore reduces cross-group misclassification in exactly those mixed or boundary cases that are most challenging for Qwen2VL [40].

In summary, Qwen2.5VL-72B is not just a larger or differently fine-tuned checkpoint: it incorporates a high-resolution-aware vision encoder with efficient local/global attention, stabilized visual tokenization and fusion blocks, and stronger region-level vision–language grounding objectives. These architectural advances improve multimodal representation quality and vision–language alignment, which likely accounts for the marked reduction in confusion across coarse-level categories under identical LoRA fine-tuning and quantization settings [39,40].
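For readers less familiar with the two building blocks named above, the sketch below shows the generic textbook forms of RMSNorm and a SwiGLU feed-forward block in NumPy. It is a minimal illustration under stated assumptions, not Qwen2.5-VL's actual implementation: the hidden sizes, the pre-norm residual arrangement, and the random weights are all hypothetical.

```python
import numpy as np

def rms_norm(x: np.ndarray, gain: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """RMSNorm: divide each token vector by its root-mean-square (no mean subtraction), then scale."""
    rms = np.sqrt(np.mean(x * x, axis=-1, keepdims=True) + eps)
    return x / rms * gain

def silu(x: np.ndarray) -> np.ndarray:
    """SiLU (swish) activation: x * sigmoid(x)."""
    return x / (1.0 + np.exp(-x))

def swiglu_ffn(x, w_gate, w_up, w_down):
    """Gated feed-forward block: (SiLU(x @ W_gate) * (x @ W_up)) @ W_down."""
    return (silu(x @ w_gate) * (x @ w_up)) @ w_down

# Toy example: 4 visual tokens, hidden size 8, feed-forward size 16 (illustrative sizes).
rng = np.random.default_rng(0)
d_model, d_ff = 8, 16
tokens = rng.normal(size=(4, d_model))
gain = np.ones(d_model)
w_gate = rng.normal(scale=0.1, size=(d_model, d_ff))
w_up = rng.normal(scale=0.1, size=(d_model, d_ff))
w_down = rng.normal(scale=0.1, size=(d_ff, d_model))

# Pre-norm residual block: normalize, apply the gated FFN, add back the input.
normed = rms_norm(tokens, gain)
out = tokens + swiglu_ffn(normed, w_gate, w_up, w_down)
print(out.shape)  # (4, 8)
```

Unlike LayerNorm, RMSNorm skips mean-centering, and the SwiGLU gate lets one projection modulate the other element-wise.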

(c) Effect of Model Size

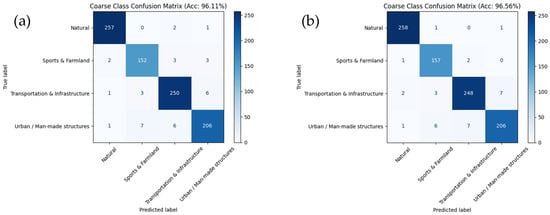

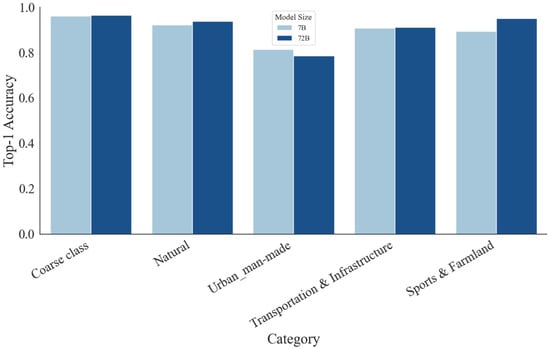

To assess parameter scaling under the same training budget, we compare Qwen2.5VL-7B and Qwen2.5VL-72B, both fine-tuned with LoRA and evaluated under identical settings and hierarchical prompts. Results are shown in Table 6, Figure 7 and Figure 8. Despite the >10× gap in parameter count, the coarse-level Top-1 accuracy improves only marginally, from 96.11% (7B) to 96.56% (72B). Category-wise changes are mixed: Natural rises from 92.31% to 93.85% and Transportation and Infrastructure from 90.77% to 91.15%, Sports and Farmland shows the largest gain (89.38% to 95.00%), whereas Urban/Man-made decreases from 81.36% to 78.64%. The coarse confusion matrices show clean diagonals for both models, with the 72B variant exhibiting only slightly sharper separation; overall, the differences do not scale proportionally with model size. Taken together, these results indicate that, once hierarchical prompting provides a strong inductive bias, smaller VLMs such as Qwen2.5VL-7B deliver competitive accuracy with a far better cost-efficiency profile, while scaling to 72B yields only incremental gains in this setting.

Table 6.

Top-1 accuracy details of 7B and 72B models based on Qwen2.5 architecture.

Figure 7.

Coarse-level confusion matrix of 7B and 72B models based on Qwen2.5 architecture. (a) Coarse-level confusion matrix of the 7B model. (b) Coarse-level confusion matrix of the 72B model.

Figure 8.

Top-1 accuracy comparison between 7B and 72B models based on Qwen2.5 architecture.
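The category-wise comparison can be made explicit by recomputing percentage-point differences from the values quoted in the text above (the full Table 6 is not reproduced here). The `param_ratio` line uses the nominal 72B and 7B sizes only.

```python
# Top-1 accuracy (%) for the 7B and 72B models, as quoted in the text.
acc_7b = {
    "Coarse Top-1": 96.11,
    "Natural": 92.31,
    "Transportation and Infrastructure": 90.77,
    "Sports and Farmland": 89.38,
    "Urban/Man-made": 81.36,
}
acc_72b = {
    "Coarse Top-1": 96.56,
    "Natural": 93.85,
    "Transportation and Infrastructure": 91.15,
    "Sports and Farmland": 95.00,
    "Urban/Man-made": 78.64,
}

# Change in percentage points when moving from 7B to 72B.
deltas = {name: round(acc_72b[name] - acc_7b[name], 2) for name in acc_7b}
for name, delta in sorted(deltas.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<35} {delta:+.2f} pp")

# Nominal parameter ratio between the two checkpoints.
param_ratio = 72 / 7
print(f"parameter ratio ~ {param_ratio:.1f}x")
```

The output ranks Sports and Farmland first (+5.62 pp) and Urban/Man-made last (−2.72 pp), against a coarse-level gain of only +0.45 pp for a roughly 10.3× increase in parameters.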

3.2.2. Data Version Impact

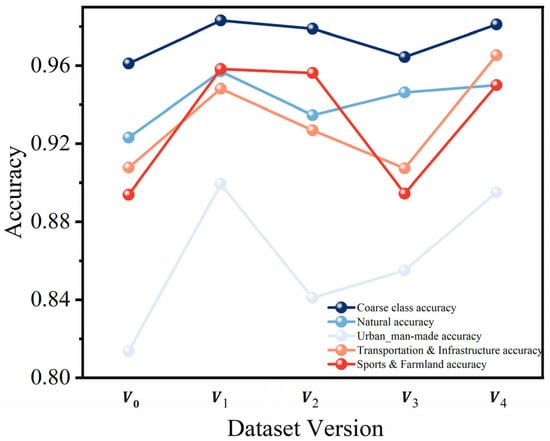

As shown in Figure 9, we assess the influence of dataset versions on classification performance across five variants (V0–V4), involving progressive data augmentation, class merging, and dataset cleaning strategies. Overall, versions and achieve the best results, highlighting the importance of dataset construction for model generalization.

Figure 9.

Accuracy Trends Across Dataset Versions.

Specifically, , with extensive augmentation techniques such as rotation and flipping, boosts the recognition of minority classes, achieving a coarse classification accuracy of 98.31% and notable gains in Natural (95.71%), Urban/Man-made (89.92%), Transportation and Infrastructure (94.81%), and Sports and Farmland (95.83%). , integrating both category refinement and data augmentation, maintains competitive performance with 98.11% coarse accuracy and particularly excels in the Transportation and Infrastructure class (96.53%).

In contrast, and exhibit slight degradations, especially in the Urban category, suggesting that aggressive restructuring without adequate data diversity may impair fine-grained discrimination.

These results demonstrate that data augmentation and semantic refinement are crucial for enhancing model robustness, while indiscriminate dataset restructuring may have adverse effects, particularly for complex scene classification.
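Variants that add rotations and flips differ mainly in when augmentation happens. The sketch below illustrates the split-before-augment rule in NumPy: the split is drawn first, and only training images are expanded into rotated and flipped copies. It assumes the eight-element set of 0/90/180/270° rotations with and without a horizontal flip; whether the released pipeline uses all eight views, as well as the helper names and the 20% validation fraction, are assumptions for illustration.

```python
import numpy as np

def dihedral_views(img: np.ndarray) -> list:
    """Return 8 variants: rotations by 0/90/180/270 deg, each with and without a left-right flip."""
    views = []
    for k in range(4):
        rotated = np.rot90(img, k)
        views.append(rotated)
        views.append(np.fliplr(rotated))
    return views

def split_then_augment(images, labels, val_frac=0.2, seed=0):
    """Split first, then augment only the training portion (leakage-safe)."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(images))
    n_val = int(round(val_frac * len(images)))
    val_idx, train_idx = idx[:n_val], idx[n_val:]

    train_x, train_y = [], []
    for i in train_idx:
        for view in dihedral_views(images[i]):
            train_x.append(view)
            train_y.append(labels[i])
    # Evaluation images are copied as-is: no augmented view of them can reach training.
    val_x = [images[i] for i in val_idx]
    val_y = [labels[i] for i in val_idx]
    return train_x, train_y, val_x, val_y

# Toy data: 10 random 4x4 "images" with dummy labels.
rng = np.random.default_rng(1)
imgs = [rng.integers(0, 255, size=(4, 4)) for _ in range(10)]
labs = [i % 2 for i in range(10)]
tx, ty, vx, vy = split_then_augment(imgs, labs)
print(len(tx), len(vx))  # 64 2
```

Augmenting before splitting would instead let a rotated copy of a test image land in the training set, which is the leakage the protocol is designed to rule out.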

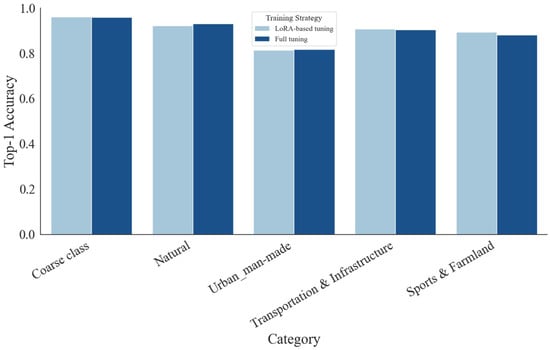

3.2.3. Training Strategy Comparison

We compare LoRA-based tuning with full fine-tuning on Qwen2.5VL-7B under identical data, prompts, and optimization settings; results are shown in Table 7, Figure 10 and Figure 11. At the coarse level, the two strategies are essentially indistinguishable: 96.11% for LoRA versus 96.00% for full tuning. Class-wise differences are small and mixed: Natural and Urban/Man-made are marginally higher with full tuning (93.08% vs. 92.31%, 81.82% vs. 81.36%), whereas Transportation and Infrastructure and Sports and Farmland favor LoRA (90.77% vs. 90.38%, 89.38% vs. 88.12%). The coarse confusion matrices show similarly clean diagonals for both strategies, and the bar plots confirm that variations remain within about one percentage point across categories. Taken together, these results indicate that LoRA matches the accuracy of full tuning while adapting only a small fraction of parameters, leading to substantially lower memory and training cost. For remote-sensing deployments where compute and energy are constrained, LoRA offers a more practical path without sacrificing classification performance.

Table 7.

Top-1 accuracy details of LoRA-based tuning and full tuning.

Figure 10.

Coarse-level confusion matrix of different training strategies. (a) Coarse-level confusion matrix of the LoRA-based tuning strategy. (b) Coarse-level confusion matrix of the full-tuning strategy (Qwen2.5VL-7B).

Figure 11.

Top-1 accuracy comparison between LoRA-based tuning and full tuning.
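To make the parameter savings concrete, the sketch below implements the standard LoRA reparameterization for a single linear layer, y = xWᵀ + (α/r)·x(BA)ᵀ, with W frozen and only the low-rank factors A and B trainable. The rank r = 8, α = 16 and the 1024×1024 layer size are hypothetical values, and this is a plain NumPy illustration rather than the API of any fine-tuning library.

```python
import numpy as np

class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, weight: np.ndarray, r: int = 8, alpha: float = 16.0, seed: int = 0):
        d_out, d_in = weight.shape
        rng = np.random.default_rng(seed)
        self.weight = weight                              # frozen pretrained weight (d_out, d_in)
        self.A = rng.normal(scale=0.01, size=(r, d_in))   # trainable, small random init
        self.B = np.zeros((d_out, r))                     # trainable, zero init
        self.scale = alpha / r

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # x: (batch, d_in). Base path plus scaled low-rank path x A^T B^T.
        return x @ self.weight.T + self.scale * (x @ self.A.T) @ self.B.T

    def trainable_params(self) -> int:
        return self.A.size + self.B.size

d_in = d_out = 1024  # hypothetical projection size
layer = LoRALinear(np.zeros((d_out, d_in)), r=8)
print(layer.trainable_params(), d_in * d_out)  # 16384 1048576
```

Because B starts at zero, the adapted layer initially reproduces the frozen layer exactly; here the adapter trains 16,384 parameters against roughly one million in the frozen weight (about 1.6%).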

3.2.4. Comparison with Classical CNNs

Setup. Table 8 compares three ImageNet-pretrained CNNs (ResNet-50 [41], MobileNetV2 [42], EfficientNet-B0 [43]) with our VLM (Qwen2.5-VL-7B) fine-tuned via LoRA under the proposed hierarchical prompting. All methods use the same AID- split and the same augmentations to ensure fairness. We report fine class Top-1 accuracy, macro-F1, the number of trainable parameters, and peak GPU memory.

Table 8.

CNN vs. VLM w/hierarchical prompting on AID- (same split/augment).

Results and analysis. The hierarchical-prompted VLM achieves the best accuracy: 91.33% Top-1 and 91.32% macro-F1, surpassing ResNet-50 by +0.66/+0.86 points, MobileNetV2 by +1.55/+1.51 points, and EfficientNet-B0 by +0.22/+0.23 points, respectively. The macro-F1 margins are similar to or slightly larger than the Top-1 margins for ResNet-50 and EfficientNet-B0 (and marginally smaller for MobileNetV2), suggesting that the gains are spread across classes rather than concentrated in a few categories. In terms of tunable parameters, LoRA updates 8.39 M parameters, only about 35.6% of ResNet-50’s 23.60 M trainable parameters (and far below full 7B fine-tuning), whereas the CNNs require updating all model weights end-to-end. As expected, the VLM incurs higher peak memory (14.00 GB) than the CNNs (2.63–3.14 GB), but it remains feasible on a single modern GPU and substantially reduces the number of parameters that must be optimized.

Takeaway. Under matched data and augmentations, hierarchical prompting on a VLM delivers consistent, if modest, accuracy gains over strong CNN baselines while requiring fewer trainable parameters than end-to-end CNN training. The trade-off is higher VRAM usage, which is acceptable for workstation-class GPUs. These results demonstrate tangible benefits beyond classical CNNs, supporting the practicality of the proposed approach when memory resources are available.

3.2.5. Data Efficiency Under Limited Labels

Setup. To evaluate label efficiency, we randomly subsample 1%/5%/10%/25% of the training labels on AID- while keeping the validation sets unchanged. We compare three ImageNet-pretrained CNNs (ResNet-50, MobileNetV2, EfficientNet-B0) with our Qwen2.5-VL-7B + LoRA under hierarchical prompting. All other settings (split, augmentations, optimizer) follow Section 3.1; LoRA uses early stopping. Results (Top-1/macro-F1, %) are averaged over the same set of random seeds for every method and reported in Table 9.

Table 9.

Accuracy (Top-1/macro-F1) (%) vs. labeled fraction on AID-.

Results and analysis. At 1% labels, the hierarchical-prompted VLM reaches 81.44/82.63, outperforming the best CNN (MobileNetV2, 53.44/53.46) by +28.00/+29.17 points, and exceeding ResNet-50 by +53.55/+56.38. At 5% labels, our method attains 87.22/87.90, ahead of MobileNetV2 (67.00/65.94) by +20.22/+21.96; notably, it already surpasses EfficientNet-B0 at 25% (86.22/86.13) and ResNet-50 at 25% (84.33/84.22). At 10% labels, the VLM obtains 85.22/84.92, slightly below its 5% result but still better than ResNet-50 (69.44/69.44) and EfficientNet-B0 (75.00/74.51), and +4.11/+3.94 points above MobileNetV2 at the same fraction (81.11/80.98). At 25% labels, MobileNetV2 (90.00/89.94) becomes competitive and surpasses the VLM (87.11/87.04; −2.89/−2.90), consistent with CNNs benefiting more from abundant labels. Across all fractions, however, the VLM stays within a narrow band (81.44–87.22% Top-1) and needs far fewer annotated samples to reach high accuracy.

Label-efficiency takeaway. The hierarchical-prompted VLM exhibits strong data efficiency: with only 1% labels it matches/exceeds MobileNetV2 trained on 10% labels (≈10× fewer labels), and with 5% labels it already outperforms ResNet-50/EfficientNet-B0 trained on 25% labels (≈5× fewer labels). These results indicate that hierarchical prompting leverages VLM priors to deliver high accuracy under scarce supervision, while CNNs need substantially more labels to reach comparable performance.
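One way to implement the label-fraction protocol described in the Setup paragraph of this subsection is sketched below. Beyond what the text states, it assumes that the subsets are nested (a single seeded shuffle followed by prefixes, so the 1% subset is contained in the 5% subset, and so on), and it uses a hypothetical training-set size of 2,000; the text specifies only random subsampling with the same seeds across methods.

```python
import random

def nested_label_subsets(sample_ids, fractions, seed):
    """Shuffle once with a fixed seed and take prefixes, so every smaller
    labelled subset is contained in every larger one (e.g., 1% within 5%)."""
    ids = list(sample_ids)
    random.Random(seed).shuffle(ids)
    return {f: ids[: max(1, round(f * len(ids)))] for f in fractions}

fractions = [0.01, 0.05, 0.10, 0.25]
train_ids = list(range(2000))   # hypothetical training-set size
for seed in (0, 1, 2):          # hypothetical seeds, reused identically for every method
    subsets = nested_label_subsets(train_ids, fractions, seed)
    print(seed, {f: len(s) for f, s in subsets.items()})
```

Nesting makes the fractions directly comparable, since each larger budget only adds labels to the smaller one instead of drawing a fresh sample.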

3.2.6. Cross-Domain Generalization

To assess whether the model trained on AID generalizes beyond its source domain, we evaluate on three small but widely used remote-sensing datasets—UC Merced (UCM), WHU-RS19, and RSSCN7—without changing the prompting pipeline. Because the fine-classification taxonomies of these datasets differ from AID (e.g., heterogeneous splits of Residential/Urban subclasses), we report coarse-level metrics only (Top-1 accuracy and macro-F1) for fair, cross-dataset comparison. The class-name mapping for our four coarse groups is provided in the Supplementary Materials. Training details (optimizer, early stopping, label smoothing) follow Section 3.1.

We consider two protocols: Zero-shot, where the model trained on AID-V2 is evaluated directly on the target dataset; and Few-shot LoRA, where we adapt the model with K = 16 labeled samples per class on the target dataset for ≤5 epochs using LoRA. Table 10 summarizes the results. Zero-shot generalization is already strong on UCM (87.20% Top-1/87.04% F1) and WHU-RS19 (93.90%/91.98%), while RSSCN7 is more challenging due to class imbalance and texture shifts (78.27%/71.83%). With Few-shot LoRA, performance improves consistently across all targets: UCM gains +8.50 Top-1 and +8.51 F1 (to 95.70%/95.55%), WHU-RS19 gains +2.91/+3.94 (to 96.81%/95.92%), and RSSCN7 gains +5.29/+9.40 (to 83.56%/81.23%). These results indicate that (i) the hierarchical prompting framework transfers well in a Zero-shot manner, and (ii) minimal labeled data and lightweight LoRA adaptation suffice to bridge the remaining domain gaps efficiently.

Table 10.

Few-shot LoRA vs. Zero-shot in cross-domain.
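The Few-shot protocol draws K = 16 labeled images per class from each target dataset. A minimal seeded sampler is sketched below; treating all non-selected images as the evaluation pool is an assumption for illustration, as is the toy label list.

```python
import random
from collections import defaultdict

def k_shot_split(labels, k=16, seed=0):
    """Pick k indices per class for adaptation; the remaining indices form the evaluation pool."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    support = []
    for label in sorted(by_class):           # fixed class order keeps the draw reproducible
        idxs = by_class[label]
        support.extend(rng.sample(idxs, min(k, len(idxs))))
    support_set = set(support)
    query = [i for i in range(len(labels)) if i not in support_set]
    return sorted(support), query

# Toy target dataset: 7 classes with 50 images each.
labels = [c for c in range(7) for _ in range(50)]
support, query = k_shot_split(labels, k=16, seed=0)
print(len(support), len(query))  # 112 238
```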

As shown in Table 11, on RSSCN7, the Zero-shot model already achieves a reasonable coarse-level scene classification accuracy (Top-1 = 0.78), indicating that the model can transfer high-level semantics across domains. However, its macro-F1 at the same coarse level is lower (0.72). The main source of this gap is a systematic failure on a single composite category, “Sports and Farmland”: in the Zero-shot case the model tends to misclassify such regions as either “Natural” (open terrain, vegetation) or “Transportation and Infrastructure” (runways, fields with regular markings), yielding only 0.34 recall and 0.42 F1 for that class. Because macro-F1 averages F1 equally across all coarse classes, this underperformance of one class pulls down the macro average even though other classes, such as “Natural” and “Urban/Man-made”, remain strong (F1 ≈ 0.84). After a small amount of LoRA-based Few-shot adaptation on the target domain, the weakest class improves substantially (its F1 rises from 0.42 to 0.68), and macro-F1 rises to 0.81, close to the Top-1 accuracy (0.84). This shows that a handful of in-domain examples is sufficient to correct a systematic domain-shift error rather than merely boosting all classes uniformly.

Table 11.

Cross-domain performance on RSSCN7 (coarse-level). Per-class Precision, Recall, F1-score, and Support. Each cell reports Zero-shot/Few-shot results, showing that Few-shot adaptation mainly improves the most challenging class (“Sports and Farmland”).
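The gap between Top-1 accuracy and macro-F1 discussed above follows directly from how the two metrics average. The sketch below computes per-class precision, recall, F1 and support (the columns of Table 11) together with both summary metrics on a made-up three-class example, not on the RSSCN7 data: one minority class with poor recall barely moves accuracy but pulls macro-F1 down sharply.

```python
from collections import Counter

def per_class_report(y_true, y_pred):
    """Per-class (precision, recall, F1, support), plus Top-1 accuracy and macro-F1."""
    classes = sorted(set(y_true) | set(y_pred))
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    pred_count, true_count = Counter(y_pred), Counter(y_true)
    report = {}
    for c in classes:
        precision = tp[c] / pred_count[c] if pred_count[c] else 0.0
        recall = tp[c] / true_count[c] if true_count[c] else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[c] = (precision, recall, f1, true_count[c])
    accuracy = sum(tp.values()) / len(y_true)
    macro_f1 = sum(r[2] for r in report.values()) / len(classes)  # every class weighs equally
    return report, accuracy, macro_f1

# Toy data: the minority class is mostly mistaken for "Natural".
y_true = ["Natural"] * 40 + ["Urban/Man-made"] * 40 + ["Sports and Farmland"] * 10
y_pred = ["Natural"] * 40 + ["Urban/Man-made"] * 40 + ["Sports and Farmland"] * 2 + ["Natural"] * 8
report, acc, macro = per_class_report(y_true, y_pred)
print(f"accuracy={acc:.2f} macro_f1={macro:.2f}")  # accuracy=0.91 macro_f1=0.75
```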

4. Discussion

4.1. Effectiveness of Hierarchical Prompting

Hierarchical prompting consistently strengthens classification by decomposing decisions into coarse then fine stages, which reduces the search space, curbs semantic confusion, and aligns with expert practice (broad context before detail). The fine stage also acts as an explicit self-check constrained by the selected coarse category, yielding more deliberate and interpretable outputs. Beyond in-domain gains, Zero-shot tests on UCM/WHU-RS19/RSSCN7 (Section 3.2.6) show that hierarchical prompting retains its advantages under domain shift when evaluated with a consistent label hierarchy (coarse metrics for all classes; fine metrics on class intersections with AID). Moreover, the label-efficiency experiments (Section 3.2.5) and Few-shot LoRA adaptation (Section 3.2.6) show that target-domain performance can be boosted with only 16 labeled samples per class and ≤5 training epochs, indicating strong data efficiency and practical deployability.

4.2. Trade-Offs Between Model Size and Accuracy

Larger models (e.g., Qwen2.5VL-72B) offer higher peak accuracy in visually subtle cases, but improvements diminish with scale, while the extra cost in memory, wall-time, and deployment complexity grows steeply. Mid-sized models (e.g., Qwen2.5VL-7B) combined with LoRA/QLoRA strike a favorable balance, delivering accuracy close to full fine-tuning with a small fraction of trainable parameters. In addition, comparisons against classical CNN baselines suggest that the gains stem from the coarse → fine reasoning rather than from sheer model size alone: the hierarchy adds robustness to acquisition changes and class imbalance while keeping the number of trainable parameters modest. Finally, Few-shot LoRA on target datasets provides a lightweight path to close most of the remaining domain gap without retraining the full model.

4.3. Limitations and Potential Failure Cases

Several limitations remain. Coarse-stage errors propagate to the fine stage, so early mistakes can bias final decisions; confidence-aware repair or joint decoding is a promising remedy. Fine-classification errors persist for visually overlapping classes (e.g., texture-centric categories) and for datasets whose taxonomies do not perfectly align with AID; some external classes are generic (e.g., Buildings, Agriculture) and are therefore evaluated at the coarse level only. Results can be sensitive to prompt phrasing in mid-sized models; prompt calibration or instruction distillation may reduce variance. While 4-bit quantization and LoRA lower resource demands, real-time onboard inference under strict size, weight, and power (SWaP) constraints and very-large-model scaling still require further engineering.

The limitations of specific fine-grained classification cases are provided in Appendix D.

4.4. Future Work

Our framework naturally extends to hierarchical multi-label prediction using node-wise probabilities, consistency-aware losses, and top-down decoding; we will conduct a dedicated evaluation on multi-label remote-sensing corpora, including threshold calibration and imbalance-aware training. The detailed implementation can be found in Appendix E.

Future directions also include: (i) prompt-robust or prompt-free variants via distillation; (ii) broader cross-sensor generalization (e.g., SAR/multispectral) and temporal reasoning; (iii) tighter domain-gap diagnostics with feature-space metrics; and (iv) compression/acceleration tailored to onboard UAV deployment.

5. Conclusions

This work investigated large vision–language models (VLMs) for remote sensing scene understanding under realistic data and compute constraints. We introduced a hierarchical prompting framework that decomposes recognition into a coarse-to-fine decision process and enforces structured, machine-readable outputs. We further combined this prompting scheme with lightweight parameter-efficient adaptation (LoRA/QLoRA), enabling competitive performance without full model fine-tuning.

To make evaluation reproducible and stress-test robustness, we constructed five protocol variants of the AID that systematically vary label granularity, consolidation, and augmentation strategy while enforcing strict split-before-augment rules and explicit leakage control. Using these variants, we showed that hierarchical prompting consistently improves fine-grained classification accuracy and interpretability compared with non-hierarchical prompting, while approaching or surpassing fully fine-tuned baselines at a fraction of the training cost. The framework also scales across model sizes and maintains strong data efficiency in low-label regimes. In addition, it generalizes across domains, transferring to external remote sensing benchmarks without retraining.

Overall, the proposed hierarchical prompting plus parameter-efficient adaptation provides a practical and transparent recipe for deploying VLMs in remote sensing. It offers (i) improved accuracy on fine-grained categories, (ii) robustness across dataset variants and unseen domains, (iii) reduced annotation and compute burden, and (iv) a reproducible evaluation pipeline. We expect this paradigm to serve as a strong and accessible baseline for future research on multimodal remote sensing scene understanding.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/rs17223727/s1.

Author Contributions

Conceptualization, T.C. and J.A.; methodology, T.C.; software, T.C.; validation, T.C.; formal analysis, T.C.; investigation, T.C.; resources, J.A.; data curation, T.C.; writing—original draft preparation, T.C.; writing—review and editing, T.C. and J.A.; visualization, T.C.; supervision, J.A.; project administration, J.A.; funding acquisition, J.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The remote-sensing datasets used in this study are based on publicly available benchmarks (AID, UCMerced_LandUse, WHU-RS19, and RSSCN7). The five AID protocol variants (V0–V4) introduced in this work—including the cleaned label sets, split-before-augment train/validation/test partitions, hierarchical category mappings, and the hierarchical prompt templates—are provided as Supplementary Materials and are also openly available in our public repository [https://github.com/qlj215/HPE-RS] (accessed on 30 October 2025). No proprietary or personally identifiable data were used in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Prompt Templates

To ensure reproducibility and transparency of our prompt design, we provide the complete templates used in different dataset versions below.

Appendix A.1. V0 Prompt: Direct Category Selection

<image>

You must choose **only one** category name from the following list that best matches the image. Do not explain. Just return the category name exactly as it appears.

Categories: {category_str}
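The `{category_str}` placeholder suggests Python-style string formatting. A sketch of filling the V0 template and validating the model's reply is shown below; the `", "` separator, the newline layout, and the tolerance for surrounding whitespace or a trailing period are assumptions, not part of the released scripts.

```python
V0_TEMPLATE = (
    "<image>\n"
    "You must choose **only one** category name from the following list that best "
    "matches the image. Do not explain. Just return the category name exactly as it appears.\n"
    "Categories: {category_str}"
)

def build_v0_prompt(categories):
    # Hypothetical choice: join the category names with ", " to form {category_str}.
    return V0_TEMPLATE.format(category_str=", ".join(categories))

def parse_v0_answer(answer, categories):
    """Return the category if the reply names exactly one listed category, else None."""
    cleaned = answer.strip().strip(".")
    return cleaned if cleaned in set(categories) else None

cats = ["lake", "forest", "airport"]
print(build_v0_prompt(cats).splitlines()[-1])  # Categories: lake, forest, airport
print(parse_v0_answer(" forest\n", cats))      # forest
```

Replies that do not match a listed name exactly (e.g., "a forest") return `None` and can be counted as errors rather than silently coerced.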

Appendix A.2. V1 Prompt: Coarse-To-Fine Step-By-Step Classification

<image>

You are an expert in remote sensing scene classification. Your task is to classify the given image step-by-step. Follow this two-step reasoning process:

### Step 1: Coarse classification

Determine the high-level category of the image based on the following four options:

1. Natural:

- mountain, lake, forest, beach, cloud, desert, island, river, meadow, snowberg, sea_ice, chaparral, wetland

2. Urban/Man-made:

- dense_residential, medium_residential, sparse_residential, mobile_home_park, industrial_area, commercial_area, church, palace, storage_tank, terrace, thermal_power_station

3. Transportation and Infrastructure:

- airport, airplane, freeway, bridge, railway, railway_station, harbor, intersection, overpass, roundabout, runway, parking_lot, ship

4. Sports and Farmland:

- baseball_diamond, basketball_court, tennis_court, golf_course, ground_track_field, stadium, circular_farmland, rectangular_farmland

Explain your reasoning and select one coarse class.

### Step 2: Fine classification

Within the selected coarse class, choose the most appropriate fine-grained category and justify your decision.

Expected output format:

|<Coarse class>|XXX|<Fine class>|XXX|<Reasoning>|XXX
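Because the V1 and V2 templates request a fixed pipe-delimited format, the reply can be parsed mechanically and checked against the hierarchy listed in Step 1. The sketch below is one hypothetical way to do this with a regular expression; the function and field names are illustrative, and the consistency flag simply tests whether the predicted fine class belongs to the chosen coarse class.

```python
import re

# Coarse -> fine hierarchy, transcribed from Step 1 of the V1 prompt.
HIERARCHY = {
    "Natural": ["mountain", "lake", "forest", "beach", "cloud", "desert", "island", "river",
                "meadow", "snowberg", "sea_ice", "chaparral", "wetland"],
    "Urban/Man-made": ["dense_residential", "medium_residential", "sparse_residential",
                       "mobile_home_park", "industrial_area", "commercial_area", "church",
                       "palace", "storage_tank", "terrace", "thermal_power_station"],
    "Transportation and Infrastructure": ["airport", "airplane", "freeway", "bridge", "railway",
                                          "railway_station", "harbor", "intersection",
                                          "overpass", "roundabout", "runway", "parking_lot",
                                          "ship"],
    "Sports and Farmland": ["baseball_diamond", "basketball_court", "tennis_court",
                            "golf_course", "ground_track_field", "stadium",
                            "circular_farmland", "rectangular_farmland"],
}

# The reasoning field takes everything after its tag, so a "|" inside it is harmless.
PATTERN = re.compile(
    r"\|<Coarse class>\|(?P<coarse>[^|]*)\|<Fine class>\|(?P<fine>[^|]*)\|<Reasoning>\|(?P<reasoning>.*)",
    re.DOTALL,
)

def parse_structured_output(text):
    """Return {'coarse', 'fine', 'reasoning', 'consistent'} or None if the format is not found."""
    match = PATTERN.search(text)
    if match is None:
        return None
    out = {key: value.strip() for key, value in match.groupdict().items()}
    out["consistent"] = out["fine"] in HIERARCHY.get(out["coarse"], [])
    return out

reply = "|<Coarse class>|Natural|<Fine class>|lake|<Reasoning>|Open water | shoreline"
print(parse_structured_output(reply))
```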

Appendix A.3. V2 Prompt: Streamlined Coarse-to-Fine Reasoning

<image>

You are an expert in remote sensing scene classification. Your task is to classify the given image step-by-step.

Step 1: Coarse classification

Determine the high-level category of the image.

Step 2: Fine classification

Within the selected coarse class, choose the most appropriate fine-grained category and justify your decision.

Expected output format:

|<Coarse class>|XXX|<Fine class>|XXX|<Reasoning>|XXX

Appendix B. Dataset Variant Design and Prompt–Variant Alignment

Appendix B.1. Goals

We define five protocol variants to stress different robustness axes and to keep prompt design purposeful rather than ad hoc: baseline comparability, orientation/flip invariance, leakage-safe augmentation, semantic cleaning, and the combined setting.

Appendix B.2. Variant Construction and Prompt Rationale

Table A1.

Construction rules and why each variant uses a specific prompt style.

| Variant | Design Goal (Robustness Axis) | Construction Rule (Leakage Control) | Prompt Alignment (Why This Prompt) | Artifacts |
|---|---|---|---|---|
| V0 | Baseline comparability | Standard random split; no augmentation | Generic flat prompt, used as the neutral reference | Split index (train/val/test) |
| V1 | Orientation/flip invariance stress | Global rotations (0/90/180/270) and H/V flips applied naïvely | Add orientation-invariant wording and spatial cues | Augmentation list |
| V2 | Leakage-safe augmentation | Split-before-augment: apply all augmentations only to training; validation/test untouched | Keep wording to isolate protocol effect (naïve vs. leakage-safe) | Train-only aug manifests |
| V3 | Semantic disambiguation/label noise | Merge near-synonymous/ambiguous classes; remove borderline samples; run near-duplicate audit across splits | Emphasize structural/semantic descriptors in a coarse → fine template | Cleaned indices + audit logs |
| V4 | Clean + invariance (combined) | Apply V2’s leakage-safe augmentation after cleaning | Streamlined hierarchical prompt (coarse → fine) for efficiency and clarity | All manifests and scripts |

Variant V3 was created to reduce semantic redundancy and label noise in AID before downstream training/evaluation. We applied two steps: (i) manual class consolidation for visually overlapping categories, and (ii) removal of borderline/low-confidence samples.

(i) Class consolidation.

We consolidated six groups of near-synonymous or visually confusable categories (five merges and one reassignment) as follows:

- church and palace → edifice
- medium_residential, dense_residential, and mobile_home_park → dense_residential
- terrace → reassigned to the agricultural/land-use category farmland/terraced farmland
- chaparral and desert → xeric
- lake and wetland → aquatic
- ground_track_field and stadium → arena

After consolidation, the number of scene categories was reduced from 45 to 39.

(ii) Ambiguous-sample removal.

During manual inspection, we identified images that multiple reviewers could not label consistently (e.g., due to low resolution, mixed land-use boundaries, or strong occlusion). We removed 17 such borderline samples.

This cleaned label space (V3) is then used as the basis for Variant V4, which applies augmentation on top of the merged-and-cleaned categories.
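The consolidation rules in (i) can be checked mechanically. The sketch below applies them to the 45 fine classes listed in the V1 prompt (Appendix A.2) and confirms the reduction to 39 categories; `terraced_farmland` is a hypothetical identifier for the reassigned terrace class, since the text names it only as farmland/terraced farmland.

```python
# The 45 fine classes listed in the V1 prompt (Appendix A.2), grouped by coarse class.
AID_FINE_CLASSES = [
    # Natural
    "mountain", "lake", "forest", "beach", "cloud", "desert", "island", "river",
    "meadow", "snowberg", "sea_ice", "chaparral", "wetland",
    # Urban/Man-made
    "dense_residential", "medium_residential", "sparse_residential", "mobile_home_park",
    "industrial_area", "commercial_area", "church", "palace", "storage_tank", "terrace",
    "thermal_power_station",
    # Transportation and Infrastructure
    "airport", "airplane", "freeway", "bridge", "railway", "railway_station", "harbor",
    "intersection", "overpass", "roundabout", "runway", "parking_lot", "ship",
    # Sports and Farmland
    "baseball_diamond", "basketball_court", "tennis_court", "golf_course",
    "ground_track_field", "stadium", "circular_farmland", "rectangular_farmland",
]

# Consolidation rules from (i); "terraced_farmland" is a hypothetical identifier.
CONSOLIDATION = {
    "church": "edifice", "palace": "edifice",
    "medium_residential": "dense_residential", "mobile_home_park": "dense_residential",
    "terrace": "terraced_farmland",
    "chaparral": "xeric", "desert": "xeric",
    "lake": "aquatic", "wetland": "aquatic",
    "ground_track_field": "arena", "stadium": "arena",
}

def consolidate(label: str) -> str:
    """Map an original fine label to its consolidated label (unchanged if not listed)."""
    return CONSOLIDATION.get(label, label)

merged = sorted({consolidate(c) for c in AID_FINE_CLASSES})
print(len(AID_FINE_CLASSES), "->", len(merged))  # 45 -> 39
```

The count works out because terrace is renamed rather than merged, so only the five true merges remove categories (1 + 2 + 1 + 1 + 1 = 6).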

Appendix B.3. Leakage Prevention and Duplicate Audit

Adopt split-before-augment: generate the split first, then apply augmentations only to the training subset.

Screen near-duplicates across splits via perceptual image hashing; compute pairwise Hamming distances and remove pairs below a fixed threshold τ (chosen from the distance histogram; the exact τ and logs are released with the scripts).

Manually spot-check borderline cases to avoid class drift.

Release indices, augmentation manifests, and audit logs for exact reproducibility.
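A rotation/flip-invariant near-duplicate check can be sketched as follows. This example swaps in a simple average hash (aHash) on grayscale NumPy arrays as a stand-in for whichever perceptual hash the released scripts use, and makes it invariant by taking the minimum Hamming distance over the eight rotated/flipped views of one image. The helper names and the value passed as `tau` are hypothetical; the text states that the actual τ is chosen from the distance histogram.

```python
import numpy as np

def average_hash(gray: np.ndarray, size: int = 8) -> np.ndarray:
    """aHash: average over a size x size grid of blocks, then threshold at the grid mean."""
    h, w = gray.shape
    # Crop so the image splits evenly into size x size blocks (dependency-free downsampling).
    g = gray[: h - h % size, : w - w % size].astype(float)
    grid = g.reshape(size, g.shape[0] // size, size, g.shape[1] // size).mean(axis=(1, 3))
    return (grid > grid.mean()).ravel()

def dihedral_views(img: np.ndarray):
    """Yield the 8 rotations (0/90/180/270 deg) of img, each with and without a left-right flip."""
    for k in range(4):
        rotated = np.rot90(img, k)
        yield rotated
        yield np.fliplr(rotated)

def invariant_distance(img_a: np.ndarray, img_b: np.ndarray) -> int:
    """Smallest Hamming distance between hash(a) and the hashes of all 8 views of b."""
    ha = average_hash(img_a)
    return min(int(np.count_nonzero(ha != average_hash(v))) for v in dihedral_views(img_b))

def cross_split_duplicates(train_imgs, test_imgs, tau: int):
    """List (train_index, test_index) pairs whose invariant distance falls below tau."""
    return [
        (i, j)
        for i, a in enumerate(train_imgs)
        for j, b in enumerate(test_imgs)
        if invariant_distance(a, b) < tau
    ]

rng = np.random.default_rng(0)
a, b = rng.random((64, 64)), rng.random((64, 64))
print(invariant_distance(a, np.rot90(a)))                        # 0: a rotated copy is caught
print(cross_split_duplicates([a, b], [np.rot90(b, 2)], tau=5))  # [(1, 0)]
```

A plain (non-invariant) hash would miss the rotated copy, which is exactly the kind of duplicate that naive rotation/flip augmentation can create across splits.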

Appendix C. Label Mapping for External Datasets

Appendix C.1. Mapping Policy and Notation

To ensure a consistent evaluation hierarchy across datasets, each external class is mapped to one of our four coarse groups—Natural, Urban/Man-made, Transportation and Infrastructure, Sports and Farmland—and, when there is an exact or one-to-one synonym match, to an AID fine class. If no exact fine-level counterpart exists, the class contributes coarse metrics only (“—” in the AID Fine Class column). Class names are matched case-insensitively with minor normalization (hyphen/underscore/space). Two authors independently verified all mappings; disagreements were resolved by discussion.
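The case-insensitive matching rule can be sketched as below, using the RSSCN7 rows of Table A4. Treating hyphens, underscores and runs of whitespace as a single space is one reading of "minor normalization" and is an assumption.

```python
import re

# RSSCN7 class -> coarse group, transcribed from Table A4.
RSSCN7_TO_COARSE = {
    "Grass": "Natural", "River": "Natural", "Forest": "Natural",
    "Industrial": "Urban/Man-made", "Residential": "Urban/Man-made",
    "Field": "Sports and Farmland",
    "Parking": "Transportation and Infrastructure",
}

def normalize(name: str) -> str:
    """Lower-case and collapse hyphens, underscores and whitespace runs into single spaces."""
    return re.sub(r"[-_\s]+", " ", name).strip().lower()

_LOOKUP = {normalize(k): v for k, v in RSSCN7_TO_COARSE.items()}

def to_coarse(name: str):
    """Return the coarse group for a class name, or None if the class is unmapped."""
    return _LOOKUP.get(normalize(name))

print(to_coarse("parking"), "|", to_coarse("  FOREST "))
```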

Appendix C.2. UC Merced (UCM) → Our Hierarchy/AID Fine Classes

Notes (no one-to-one AID fine match):

(1) Agriculture: generic farmland (AID distinguishes circular vs. rectangular farmland).

(2) Buildings: generic built-up category (AID uses more specific Urban subclasses).

Table A2.

UC Merced (UCM, 21 classes) → Coarse groups.

| UCM Class | Coarse Class | UCM Class | Coarse Class | UCM Class | Coarse Class |
|---|---|---|---|---|---|
| Agriculture | Sports and Farmland | Forest | Natural | Overpass | Transportation and Infrastructure |
| Airplane | Transportation and Infrastructure | Freeway | Transportation and Infrastructure | Parking Lot | Transportation and Infrastructure |
| Baseball Diamond | Sports and Farmland | Golf Course | Sports and Farmland | River | Natural |
| Beach | Natural | Harbor | Transportation and Infrastructure | Runway | Transportation and Infrastructure |
| Buildings | Urban/Man-made | Intersection | Transportation and Infrastructure | Sparse Residential | Urban/Man-made |
| Chaparral | Natural | Medium Residential | Urban/Man-made | Storage Tanks | Urban/Man-made |
| Dense Residential | Urban/Man-made | Mobile Home Park | Urban/Man-made | Tennis Court | Sports and Farmland |

Appendix C.3. WHU-RS19 → Our Hierarchy/AID Fine Classes

Notes (no one-to-one AID fine match):

- (1)

- Farmland—generic farmland (AID splits by geometry).

- (2)

- Park—Urban green/parkland (no exact AID fine class).

- (3)

- Residential area—density not specified (AID separates dense/medium/sparse).

- (4)

- (Ambiguity handled at fine level but irrelevant here) Football field—could appear with or without athletics track; we evaluate at the coarse level.

Table A3.

WHU-RS19 (19 classes) → Coarse groups.

| WHU Class | Coarse Class | WHU Class | Coarse Class | WHU Class | Coarse Class |
|---|---|---|---|---|---|
| Airport | Transportation and Infrastructure | Football field | Sports and Farmland | Parking lot | Transportation and Infrastructure |
| Beach | Natural | Forest | Natural | Pond | Natural |
| Bridge | Transportation and Infrastructure | Industrial area | Urban/Man-made | Port | Transportation and Infrastructure |
| Commercial area | Urban/Man-made | Meadow | Natural | Railway station | Transportation and Infrastructure |
| Desert | Natural | Mountain | Natural | Residential area | Urban/Man-made |
| Farmland | Sports and Farmland | Park | Urban/Man-made | River | Natural |
| - | - | Viaduct | Transportation and Infrastructure | - | - |

Appendix C.4. RSSCN7 → Our Hierarchy/AID Fine Classes

Notes (no one-to-one AID fine match):

(1) Field: generic farmland (AID splits circular/rectangular).

(2) Residential: density not specified (AID distinguishes dense/medium/sparse).

Table A4.

RSSCN7 (7 classes) → Coarse groups.

| RSSCN7 Class | Coarse Class | RSSCN7 Class | Coarse Class | RSSCN7 Class | Coarse Class |
|---|---|---|---|---|---|
| Grass | Natural | Industrial | Urban/Man-made | Residential | Urban/Man-made |
| River | Natural | Field | Sports and Farmland | Parking | Transportation and Infrastructure |
| - | - | Forest | Natural | - | - |

Appendix D. Fine-Grained Classification Limitations

The analysis below is based on a Qwen2.5-VL model fine-tuned with LoRA. Both the dataset and the hierarchical prompts follow the version.

In several fine-grained scene categories that are visually very similar, the model still exhibits notable confusion. For example, the accuracy for dense_residential is only around 55–65%, with roughly 15–20% of its samples predicted as medium_residential and another 10–15% predicted as mobile_home_park. Similarly, commercial_area is sometimes confused with church/palace, and palace itself reaches only about 75–80% accuracy, while church is around 75–85%. A comparable pattern appears in terrace (≈75–80%, often misclassified as rectangular_farmland), industrial_area (≈70–75%, sometimes predicted as railway_station), and thermal_power_station (which is occasionally misidentified as stadium, runway, etc.). These errors are largely driven by ambiguous visual boundaries: in overhead imagery, these categories often differ only in subtle cues such as roof density, roofing material, rooftop color, or functional context that may not be fully visible in a single cropped patch. A practical mitigation is to reorganize such categories under a coarser parent class first (e.g., treating dense_residential/medium_residential/mobile_home_park collectively as “built-up residential area,” and only subdividing when necessary), or to reassign terrace as a subtype within agricultural land-use rather than as an isolated top-level class. This “semantic regrouping + hierarchical decision” strategy is exactly what we pursue in later dataset iterations: in updated versions (e.g., from V1 to the cleaned/redefined V3), we explicitly merge near-indistinguishable classes and remove ambiguous samples, which stabilizes performance on these difficult regions.

A second source of error is concentrated in Transportation and Infrastructure categories. freeway (≈80–85%) is frequently confused with intersection/overpass, parking_lot (≈80%) is sometimes labeled as railway_station or industrial_area, and railway and railway_station are also misclassified into each other. The main reason is that remote-sensing crops often contain only a local texture patch (asphalt, rails, paved areas) without enough global spatial context, and many samples actually contain multiple co-occurring structures (e.g., both elevated ramps and main highway, or both tracks and station buildings), even though the dataset assigns only a single label. Beyond supplying more contextual cues in the hierarchical prompt (for example, first asking the model to identify a coarse “transport corridor vs. transport hub,” and then refine to freeway vs. intersection vs. railway_station), the more robust fix is dataset hygiene: removing or relabeling patches that include multiple transportation elements with no clearly dominant semantic role. Our dataset cleaning and class-boundary revision during later dataset versions is specifically aimed at reducing these “multi-instance but single-label” ambiguities.

A third recurring issue arises in categories defined by relatively small objects. tennis_court achieves only about 70% accuracy and is often predicted as medium_residential, freeway, or basketball_court. Likewise, ground_track_field (≈80–85%) and stadium (≈85–90%) can be confused with each other. In these cases the target structure may occupy only a small fraction of the tile, or may even lie at the edge of the crop, so the model tends to classify based on the surrounding residential blocks, roads, parking lots, or spectator stands instead of the intended object. Two mitigation strategies are effective here: (i) dataset-level filtering to discard severely cropped or extremely low-salience samples, ensuring that tennis courts/running tracks are actually the dominant visible object in the patch; and (ii) prompting the model to reason about functional attributes (“does the area include a standard oval running track and spectator stands?”) rather than relying purely on local color/texture.

Finally, confusion also occurs among the Natural land-cover classes. The wetland class reaches only about 70–85% accuracy, with 10–20% of its samples misclassified as lake and additional leakage into forest or island. This reflects the fact that transitional environments such as shallow flooded plains, vegetated marshes, or seasonal inundation zones sit between “open water” and “vegetated land” and look very different across regions and seasons. A more robust strategy is to introduce a hierarchical decision process for water-related scenes: first decide whether the region is primarily open water or vegetation-dominated water/shoreline, and only then distinguish among lake, river, and wetland. At the dataset level, manual screening is also needed to remove wetland-labeled samples that visually resemble ordinary shoreline or generic vegetated terrain.
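The coarse-to-fine decision for water-related scenes can be organized as two constrained questions. In the sketch below, `ask` is a hypothetical wrapper around the VLM that returns one of the offered options, and the grouping in `WATER_HIERARCHY` is an illustrative assumption rather than the exact hierarchy used in our prompts.

```python
from typing import Callable, Dict, List

# Hypothetical wrapper around the VLM: (question, options) -> chosen option.
Ask = Callable[[str, List[str]], str]

# Illustrative coarse-to-fine grouping for water-related scenes.
WATER_HIERARCHY: Dict[str, List[str]] = {
    "open_water": ["lake", "river"],
    "vegetation_dominated": ["wetland"],
}


def classify_water_scene(ask: Ask) -> str:
    coarse = ask("Is this region primarily open water, or vegetation-dominated "
                 "water/shoreline?", list(WATER_HIERARCHY))
    if coarse not in WATER_HIERARCHY:
        raise ValueError(f"unexpected coarse answer: {coarse!r}")
    candidates = WATER_HIERARCHY[coarse]
    if len(candidates) == 1:
        # Only one fine class in this branch, so no second query is needed.
        return candidates[0]
    fine = ask("Which of these scene types best describes the image?", candidates)
    if fine not in candidates:
        raise ValueError(f"fine answer {fine!r} lies outside the {coarse!r} branch")
    return fine
```

Restricting the second question to the selected branch means that, once the coarse step has ruled out open water, a vegetated marsh can no longer drift into the lake class.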

Taken together, these observations suggest that most residual errors do not simply reflect a lack of representational capacity in the large vision–language model. Instead, they stem from (1) intrinsically ambiguous or overly fine-grained class boundaries, (2) mixed or low-quality labels where multiple scene types co-occur in a single tile, and (3) objects that occupy only a very small fraction of the field of view. By hierarchically regrouping semantically similar subclasses, injecting contextual reasoning into the prompts, and iteratively cleaning and redefining categories across successive dataset versions (V0–V4), we can substantially improve model stability on these difficult categories without full-scale retraining from scratch.

Appendix E. Hierarchical Multi-Label Extension

Remote-sensing scenes frequently contain multiple coexisting semantics (e.g., forest + river, residential + road). Our framework naturally extends to hierarchical multi-label classification by predicting a probability for each node in the label tree and enforcing parent–child consistency.

Label tree and outputs. Let $\mathcal{T}$ denote the label hierarchy (coarse → fine). For every node $v \in \mathcal{T}$, the model outputs a logit $z_v$ and a probability $p_v = \sigma(z_v)$. Ground-truth labels are multi-hot vectors $\mathbf{y} \in \{0,1\}^{|\mathcal{T}|}$ that can activate multiple leaves together with their ancestors.
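A minimal sketch of how multi-hot targets with implied ancestors can be built, using the forest + river and residential + road examples above. The tiny child-to-parent map is hypothetical (not our taxonomy), and the root is left implicit.

```python
from typing import Dict, Iterable, List, Optional

# Hypothetical child -> parent map; None marks a coarse node under the implicit root.
PARENT: Dict[str, Optional[str]] = {
    "natural": None, "built_up": None,
    "forest": "natural", "river": "natural",
    "residential": "built_up", "road": "built_up",
}
NODES: List[str] = list(PARENT)  # fixed node order of the multi-hot vector


def ancestors(node: str) -> List[str]:
    chain: List[str] = []
    parent = PARENT[node]
    while parent is not None:
        chain.append(parent)
        parent = PARENT[parent]
    return chain


def multi_hot(leaves: Iterable[str]) -> List[int]:
    """Activate each given leaf together with all of its ancestors."""
    active = set()
    for leaf in leaves:
        active.add(leaf)
        active.update(ancestors(leaf))
    return [1 if node in active else 0 for node in NODES]


print(multi_hot(["forest", "river"]))  # -> [1, 0, 1, 1, 0, 0]
```

For a single-label dataset, the same function is simply called with a one-element list.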

Loss. We optimize a hierarchical binary cross-entropy, summed over all nodes of the label tree, together with a consistency regularizer that discourages a child from being more probable than its parent and thereby promotes coherent top-down predictions. This loss is model-agnostic and plugs into our LoRA/QLoRA heads without changing the VLM backbone.
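The sketch below shows one common way to realize such a loss in plain Python. The hinge form of the consistency term, `max(0, p_child - p_parent)`, and its weight `lam` are our assumptions for illustration; in training, the same expression would be written with the framework's autodiff operations (e.g., PyTorch's `binary_cross_entropy_with_logits` and `relu`).

```python
import math
from typing import Dict, Optional


def hierarchical_loss(probs: Dict[str, float], targets: Dict[str, int],
                      parent: Dict[str, Optional[str]], lam: float = 1.0,
                      eps: float = 1e-7) -> float:
    """Summed node-wise BCE plus lam * sum of max(0, p_child - p_parent)."""
    bce = 0.0
    for node, p in probs.items():
        p = min(max(p, eps), 1.0 - eps)  # clamp to keep log() finite
        y = targets[node]
        bce -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    # Penalize every child that is predicted as more probable than its parent.
    consistency = sum(max(0.0, probs[child] - probs[par])
                      for child, par in parent.items() if par is not None)
    return bce + lam * consistency


tree = {"natural": None, "forest": "natural"}
labels = {"natural": 1, "forest": 1}
print(hierarchical_loss({"natural": 0.9, "forest": 0.8}, labels, tree))  # consistent
print(hierarchical_loss({"natural": 0.5, "forest": 0.8}, labels, tree))  # child > parent
```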

Inference (top-down decoding).

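- (i) Start from the root and apply a calibrated threshold $\tau_v$ at each node $v$.

- (ii) If the node's probability satisfies $p_v \ge \tau_v$, expand $v$ to its children; otherwise prune the subtree rooted at $v$.

- (iii) Return all activated leaves and (optionally) their ancestors. A simple repair step then adjusts the outputs to satisfy ancestry, so that every activated node has all of its ancestors activated.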
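A minimal sketch of these decoding steps, assuming the tree is given as a children map with the root's children stored under the key `None`. Because a node is visited only after its parent has been activated, every returned node already has its ancestors activated in this particular sketch, so no separate repair is needed.

```python
from collections import deque
from typing import Dict, List, Optional


def top_down_decode(probs: Dict[str, float], thresholds: Dict[str, float],
                    children: Dict[Optional[str], List[str]]) -> List[str]:
    """Breadth-first decoding: expand a node only if p_v >= tau_v, else prune its subtree."""
    active: List[str] = []
    queue = deque(children.get(None, []))  # children of the implicit root
    while queue:
        node = queue.popleft()
        if probs[node] >= thresholds[node]:
            active.append(node)
            queue.extend(children.get(node, []))
        # Otherwise nothing below `node` is ever visited (subtree pruned).
    return active


def leaves_only(active: List[str], children: Dict[Optional[str], List[str]]) -> List[str]:
    return [n for n in active if not children.get(n)]


children = {None: ["natural", "built_up"],
            "natural": ["forest", "river"], "built_up": ["residential", "road"]}
probs = {"natural": 0.9, "built_up": 0.2, "forest": 0.7,
         "river": 0.6, "residential": 0.95, "road": 0.1}
tau = {node: 0.5 for node in probs}
active = top_down_decode(probs, tau, children)
print(active, leaves_only(active, children))
```

Note that residential is never reported even though its own score is 0.95, because its parent built_up falls below threshold.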

Concretely, each node $v$ in the hierarchy outputs a sigmoid score $p_v \in [0, 1]$, and the node is activated if $p_v \ge \tau_v$. The per-node threshold $\tau_v$ is calibrated on the validation split: for each node, we sweep $\tau_v$ over a grid with a step of 0.05 and pick the value that maximizes that node’s F1 while respecting the top-down decoding rule. The resulting thresholds are then frozen and used for all test-time decoding.
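The per-node calibration can be sketched as a grid search. Only the 0.05 step comes from the text; the 0.05 to 0.95 grid range, the tie-breaking rule (keep the smallest threshold among equally good ones), and the `parent_active` flags used to encode the top-down rule are assumptions of this sketch.

```python
from typing import List, Sequence


def f1_score_binary(preds: Sequence[int], labels: Sequence[int]) -> float:
    tp = sum(1 for p, y in zip(preds, labels) if p and y)
    fp = sum(1 for p, y in zip(preds, labels) if p and not y)
    fn = sum(1 for p, y in zip(preds, labels) if not p and y)
    return 0.0 if tp == 0 else 2 * tp / (2 * tp + fp + fn)


def calibrate_threshold(scores: Sequence[float], labels: Sequence[int],
                        parent_active: Sequence[bool], step: float = 0.05) -> float:
    """Pick tau_v maximizing validation F1 for one node.

    A sample can only be predicted positive if its parent was activated
    (top-down rule); otherwise it counts as negative at every threshold.
    """
    grid: List[float] = [round(k * step, 10) for k in range(1, int(round(1 / step)))]
    best_tau, best_f1 = grid[0], -1.0
    for tau in grid:
        preds = [int(ok and s >= tau) for s, ok in zip(scores, parent_active)]
        f1 = f1_score_binary(preds, labels)
        if f1 > best_f1:  # strict '>' keeps the smallest threshold on ties
            best_tau, best_f1 = tau, f1
    return best_tau


print(calibrate_threshold([0.9, 0.8, 0.3, 0.2], [1, 1, 0, 0], [True] * 4))
```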

Prompting. The hierarchical prompts remain unchanged; we only instruct the model to “list all applicable fine-classes” within the selected coarse branch and to justify each label briefly. This keeps the coarse → fine reasoning intact while allowing multiple fine labels.

Evaluation. We recommend reporting mAP, micro/macro-F1, and hierarchical-F1 (counting a leaf as correct only if its ancestors are also correct). These metrics complement the Top-1 accuracy used in the single-label setting.
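Hierarchical F1 has several variants in the literature. The sketch below implements the one described above, micro-averaged over samples: a predicted leaf counts as a true positive only if it is a true leaf and all of its ancestors are both predicted and true. The child-to-parent map is hypothetical, and the node sets are assumed to include ancestors (as produced by top-down decoding). For mAP and macro-F1, standard implementations such as scikit-learn's `average_precision_score` and `f1_score(average="macro")` can be used.

```python
from typing import Dict, List, Optional, Set, Tuple


def _ancestors(node: str, parent: Dict[str, Optional[str]]) -> List[str]:
    chain: List[str] = []
    p = parent[node]
    while p is not None:
        chain.append(p)
        p = parent[p]
    return chain


def hierarchical_f1(samples: List[Tuple[Set[str], Set[str]]],
                    parent: Dict[str, Optional[str]]) -> float:
    """Micro hierarchical F1 over (predicted_nodes, true_nodes) pairs."""
    internal = {p for p in parent.values() if p is not None}
    tp = fp = fn = 0
    for pred, true in samples:
        pred_leaves = {n for n in pred if n not in internal}
        true_leaves = {n for n in true if n not in internal}
        # A leaf is correct only if its whole ancestor chain is predicted and true.
        correct = {
            leaf for leaf in pred_leaves & true_leaves
            if all(a in pred and a in true for a in _ancestors(leaf, parent))
        }
        tp += len(correct)
        fp += len(pred_leaves) - len(correct)
        fn += len(true_leaves) - len(correct)
    return 0.0 if tp == 0 else 2 * tp / (2 * tp + fp + fn)


parent = {"natural": None, "built_up": None, "forest": "natural",
          "river": "natural", "residential": "built_up"}
samples = [
    ({"natural", "forest"}, {"natural", "forest", "river"}),         # one leaf missed
    ({"built_up", "forest", "residential"}, {"natural", "forest"}),  # forest lacks its ancestor
]
print(hierarchical_f1(samples, parent))
```

In the second sample, forest is a true leaf but is not credited, because its ancestor natural was not predicted.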

Practical note. When the training set is single-label (e.g., AID), the formulation above applies as-is: the single label is treated as the only active leaf, and its ancestors are activated implicitly. True multi-label behavior can only be fully validated on public multi-label remote-sensing datasets, which we leave as future work.
