Bridging Vision Foundation and Vision–Language Models for Open-Vocabulary Semantic Segmentation of UAV Imagery

Highlights

- We propose HOSU, a hybrid OVSS framework that integrates distribution-aware fine-tuning, text-guided multi-level regularization, and masked feature consistency to inject DINOv2’s fine-grained spatial priors into CLIP, thereby enhancing segmentation of complex scene distributions and small-scale targets in UAV imagery, while maintaining CLIP-only inference.

- Extensive experiments across four training settings and six UAV benchmarks show that our method achieves state-of-the-art performance, outperforming the six compared open-vocabulary segmentation methods on every benchmark in every setting, and ablation studies confirm that each module contributes to the overall performance gain.

- This work offers a new perspective on leveraging the complementary strengths of heterogeneous foundation models, presenting a generalizable framework that enhances the fine-grained spatial perception of vision–language models in dense prediction tasks.

- Our method unlocks open-vocabulary segmentation for UAV imagery, filling an important research gap and delivering robust generalization to unseen categories and diverse aerial scenes, with substantial practical value for real-world UAV applications such as urban planning, disaster assessment, and environmental monitoring.

Abstract

1. Introduction

- We propose a novel hybrid framework for open-vocabulary semantic segmentation of UAV imagery, named HOSU, which leverages the priors of vision foundation models to unlock the capability of vision–language models in scene distribution perception and fine-grained feature representation.

- We propose a text-guided multi-level regularization method that leverages the CLIP text embeddings to regularize visual features, preventing their drift from the original semantic space during fine-tuning and preserving coherent correspondence between visual and textual semantics.

- We propose a mask-based feature consistency strategy to address the prevalent occlusion in UAV imagery, enabling the model to learn stable feature representations that remain robust against missing or partially visible regions.

- Extensive experiments conducted across multiple UAV benchmarks validate the effectiveness of our method, consistently demonstrating state-of-the-art performance and clear improvements over existing open-vocabulary segmentation approaches.

2. Related Work

2.1. Semantic Segmentation

2.2. Open-Vocabulary Semantic Segmentation

2.3. Vision Foundation Models

3. Methods

3.1. Distribution-Aware Fine-Tuning Method

3.1.1. Construction of Region-Level Prototypes
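As a rough illustration of what a region-level prototype can look like, the sketch below averages dense features over each region (masked average pooling). This is an assumption made for illustration only: how regions are obtained and how prototypes are actually built in HOSU is not specified here, and the names `region_prototypes`, `features`, and `region_ids` are hypothetical.

```python
import numpy as np


def region_prototypes(features, region_ids, num_regions):
    """Average the feature vectors that fall in each region.

    features:   (N, D) array, one D-dimensional feature per patch/pixel.
    region_ids: (N,) integer array assigning each feature to a region.
    Returns a (num_regions, D) array; empty regions get a zero prototype.
    """
    features = np.asarray(features, dtype=float)
    region_ids = np.asarray(region_ids)
    sums = np.zeros((num_regions, features.shape[1]))
    # Unbuffered scatter-add: rows sharing a region id accumulate correctly.
    np.add.at(sums, region_ids, features)
    counts = np.bincount(region_ids, minlength=num_regions).astype(float)
    # Guard against division by zero for regions with no members.
    return sums / np.maximum(counts, 1.0)[:, None]
```

Prototypes of this kind could then be compared across regions (inter-region) or against the individual features inside a region (intra-region); which distance or loss is used for that comparison is a separate design choice not covered by this sketch.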

3.1.2. Enhancing Spatial Perception via Inter-Region Alignment

3.1.3. Fine-Grained Representation Through Intra-Region Alignment

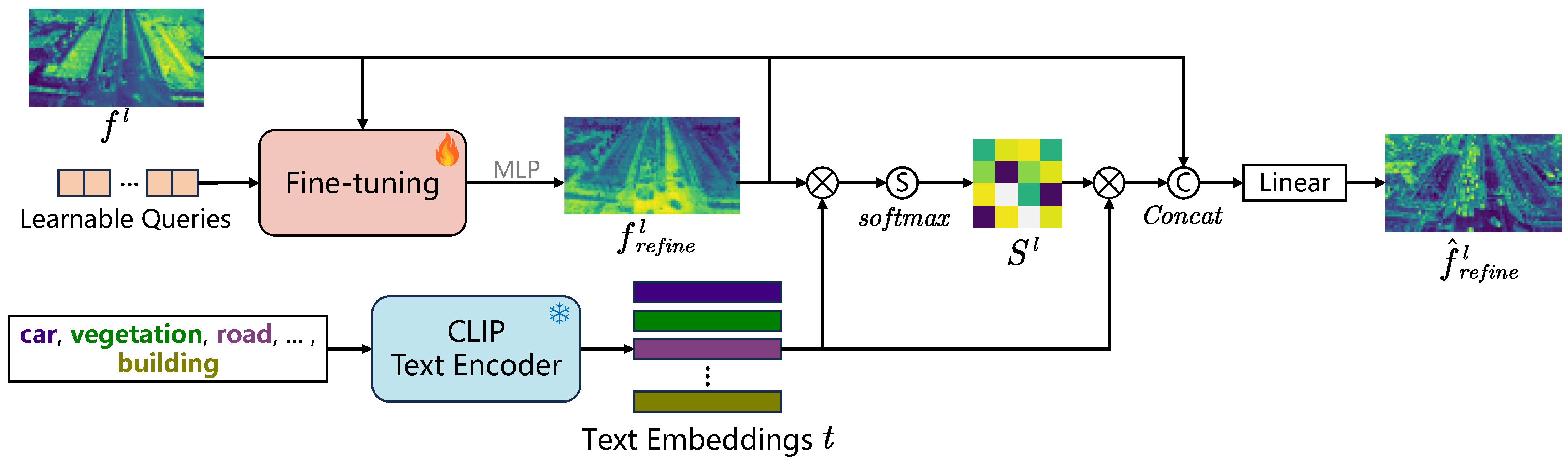

3.2. Text-Guided Multi-Level Regularization
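The introduction describes this component as using CLIP text embeddings to keep visual features from drifting away from the original semantic space. A minimal sketch of one way to express that idea follows, assuming a cross-entropy over cosine similarities between visual features and class text embeddings, averaged over several feature levels. The loss form, the temperature value, and all function names are assumptions for illustration, not the formulation used in the paper.

```python
import numpy as np


def l2_normalize(x, eps=1e-8):
    """Scale each row to (approximately) unit length."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def text_guided_loss(visual, text, labels, temperature=0.07):
    """Cross-entropy over cosine similarities to class text embeddings.

    visual: (N, D) visual features, text: (C, D) text embeddings,
    labels: (N,) integer class index of each visual feature.
    """
    logits = l2_normalize(visual) @ l2_normalize(text).T / temperature
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-log_prob[np.arange(len(labels)), labels].mean())


def multi_level_loss(levels, text, labels):
    """Average the text-guided loss over features from several levels."""
    return float(np.mean([text_guided_loss(v, text, labels) for v in levels]))
```

The loss is small when each visual feature points in the same direction as the text embedding of its class and grows as features drift toward other classes, which is the behaviour a regularizer of this kind is meant to penalize.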

3.3. Masked Feature Consistency for Occlusion Robustness
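The ablation on patch size and mask ratio suggests that masking operates on square patches, each hidden with some probability. The sketch below shows one plausible reading: draw a random patch mask, encode the full and the masked image, and penalize the cosine distance between the two feature sets. The independent per-patch sampling, the cosine-distance loss, and the `toy_encoder` stand-in are all assumptions for illustration; the actual encoder and loss in HOSU may differ.

```python
import numpy as np


def random_patch_mask(height, width, patch_size, mask_ratio, rng):
    """Boolean (height, width) mask; False marks hidden pixels.

    Each patch_size x patch_size patch is hidden independently with
    probability mask_ratio.
    """
    grid_h = -(-height // patch_size)  # ceiling division
    grid_w = -(-width // patch_size)
    keep = rng.random((grid_h, grid_w)) >= mask_ratio
    mask = np.repeat(np.repeat(keep, patch_size, axis=0), patch_size, axis=1)
    return mask[:height, :width]


def consistency_loss(feat_full, feat_masked, eps=1e-8):
    """Mean cosine distance between matching rows of two (N, D) arrays."""
    a = feat_full / (np.linalg.norm(feat_full, axis=-1, keepdims=True) + eps)
    b = feat_masked / (np.linalg.norm(feat_masked, axis=-1, keepdims=True) + eps)
    return float(np.mean(1.0 - np.sum(a * b, axis=-1)))


def toy_encoder(image, patch_size=16):
    """Hypothetical stand-in encoder: mean colour of each patch."""
    h, w, c = image.shape
    gh, gw = h // patch_size, w // patch_size
    patches = image[: gh * patch_size, : gw * patch_size]
    patches = patches.reshape(gh, patch_size, gw, patch_size, c)
    return patches.mean(axis=(1, 3)).reshape(gh * gw, c)


rng = np.random.default_rng(0)
image = rng.random((64, 64, 3))
mask = random_patch_mask(64, 64, patch_size=16, mask_ratio=0.3, rng=rng)
masked_image = image * mask[..., None]  # hidden pixels are set to zero
loss = consistency_loss(toy_encoder(image), toy_encoder(masked_image))
```

With a real encoder, hidden patches still receive context from their neighbours, so minimizing this loss encourages features that stay stable when parts of the scene are missing.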

3.4. Overall Training Objective and Inference Pipeline

4. Results

4.1. Experimental Setup

4.1.1. Datasets

4.1.2. Implementation Details

4.2. Main Results

4.2.1. Quantitative Comparisons with Previous Methods

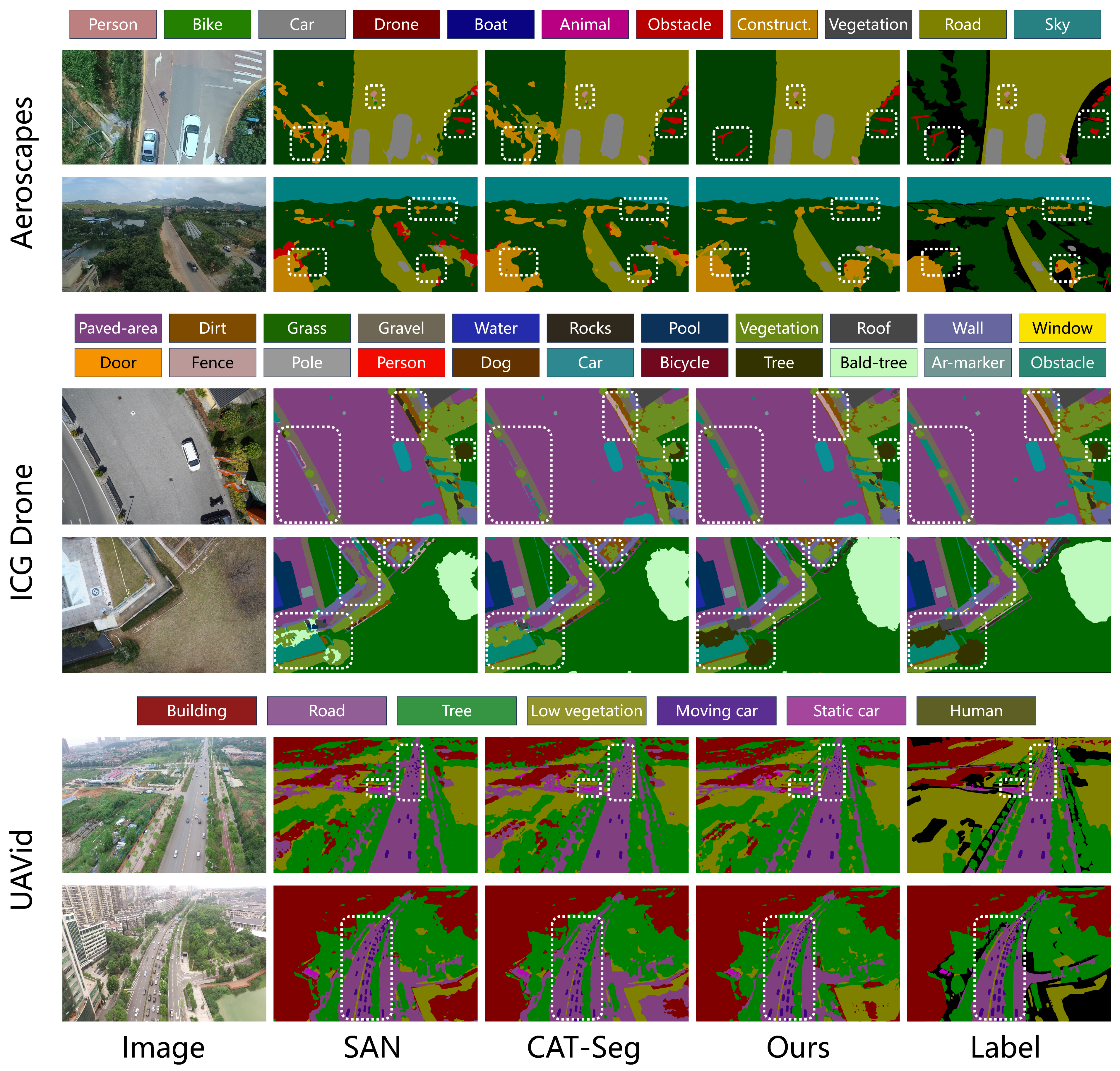

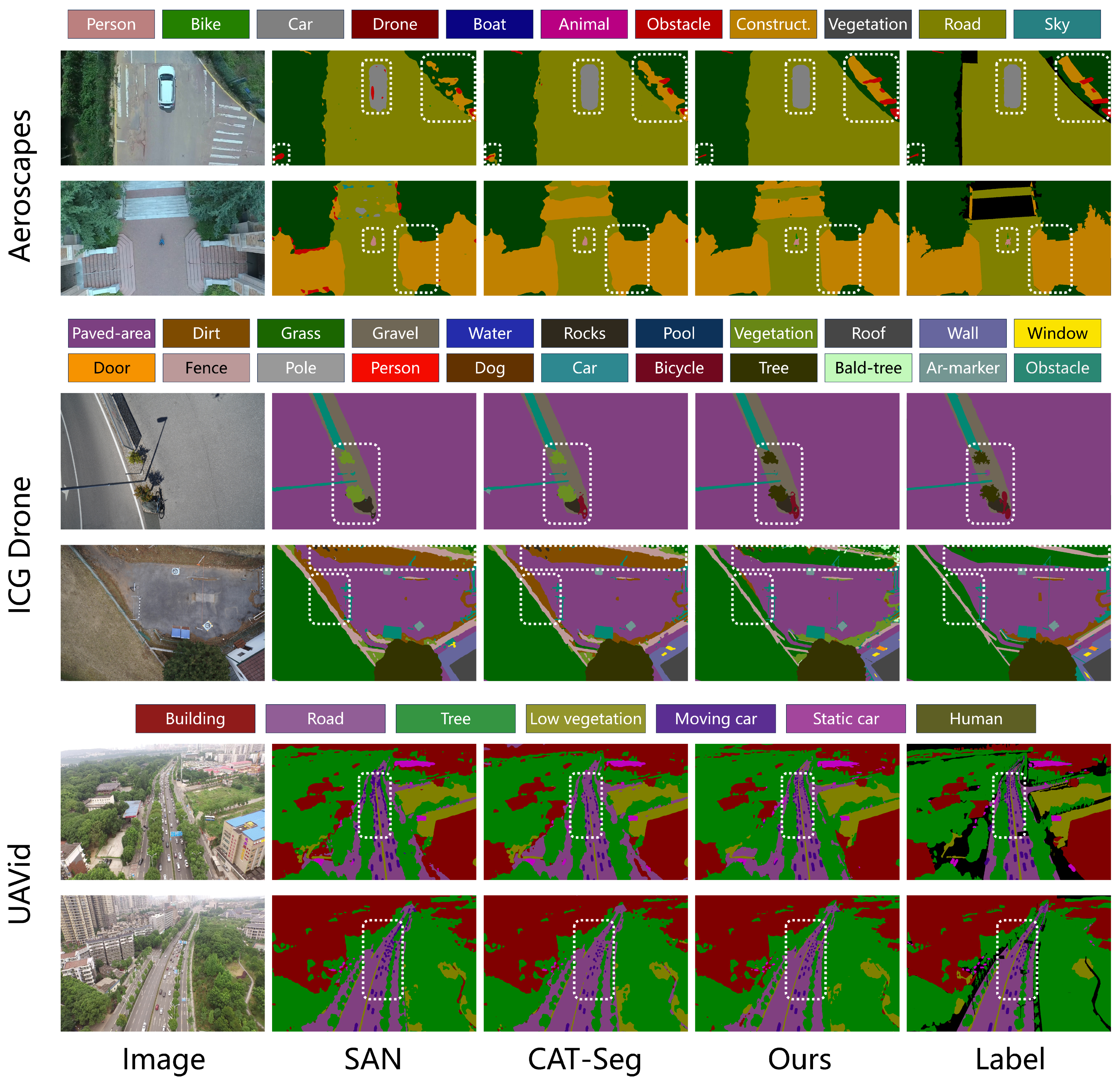

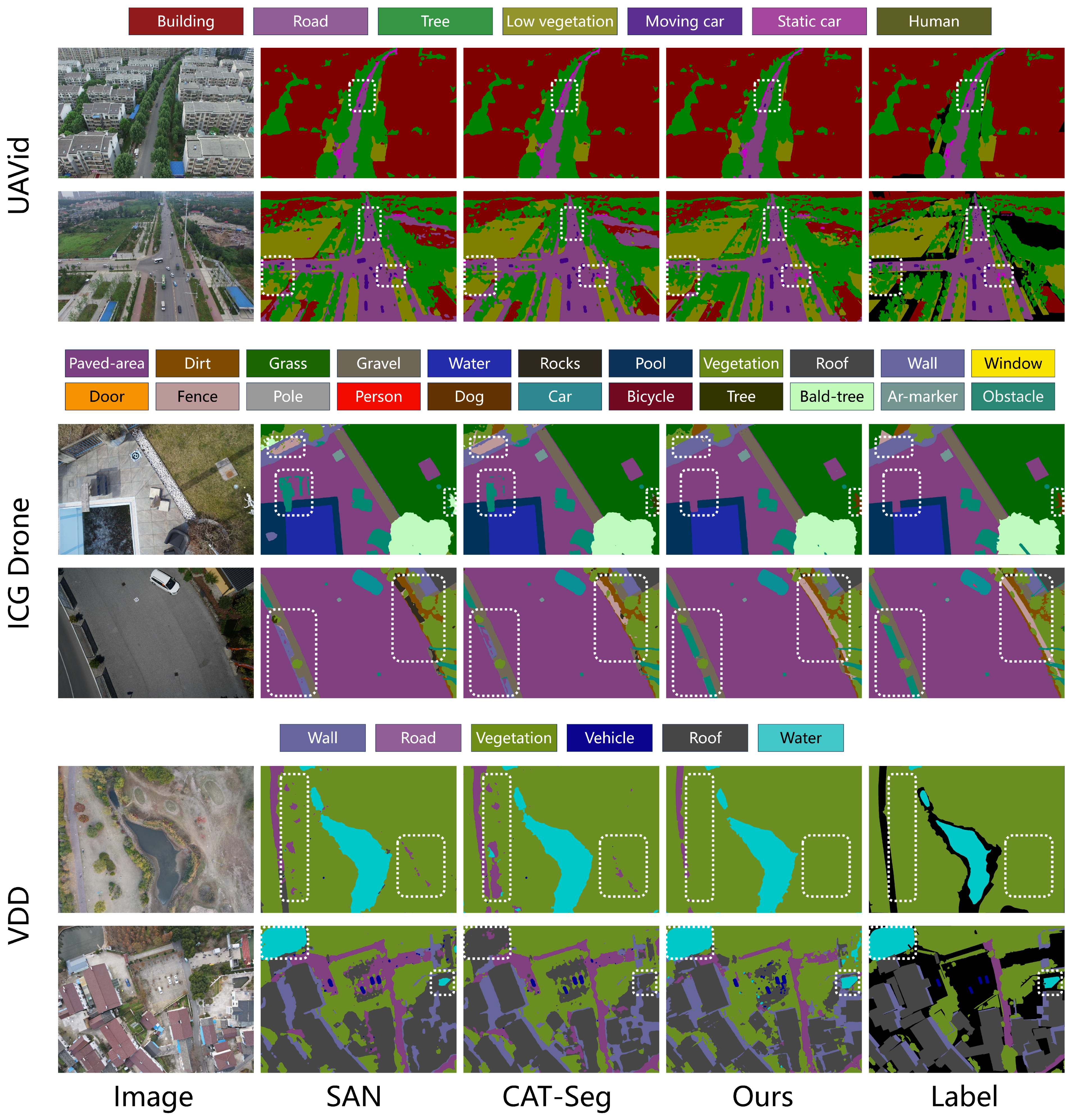

4.2.2. Qualitative Comparisons with Previous Methods

4.3. Ablation Studies and Further Analysis

4.3.1. Ablation Study on Primary Components

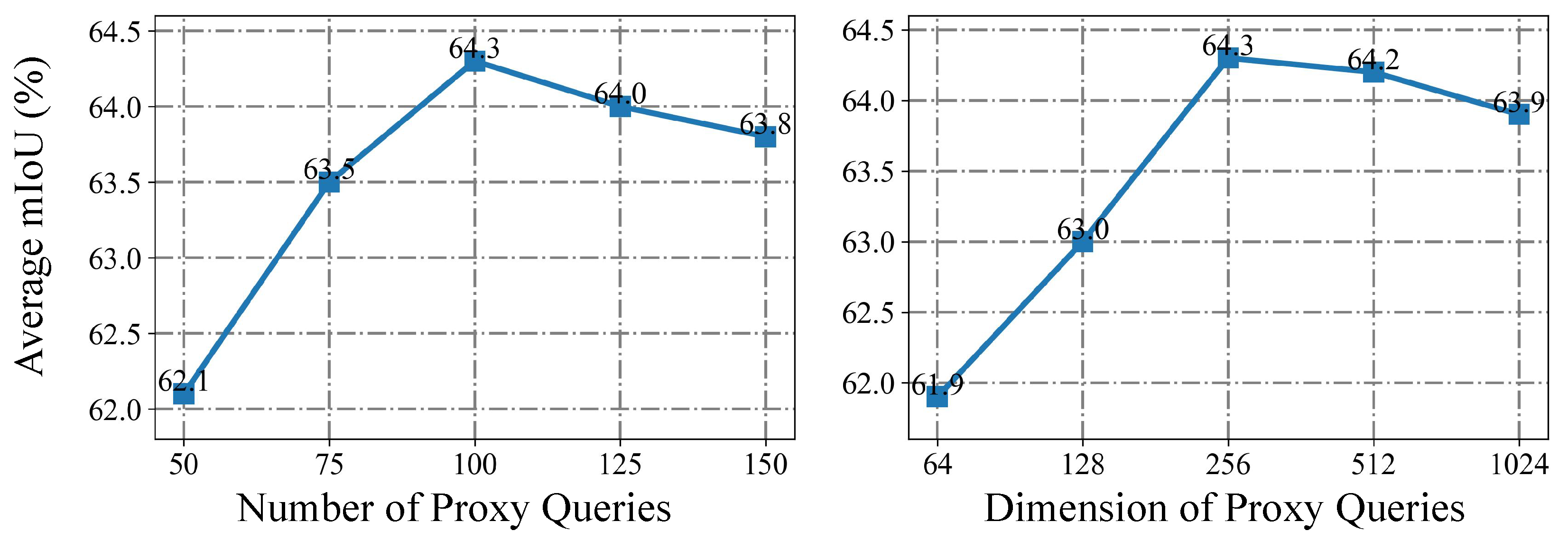

4.3.2. Effect of Proxy Query Length and Dimension

4.3.3. Effect of Loss Weights

4.3.4. Ablation Study on Patch Size and Mask Ratio

4.3.5. Ablation Study on Different Decoder

4.3.6. Inference Efficiency Comparison

4.3.7. Training Cost Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

2. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A. Semantic image segmentation with deep convolutional nets and fully connected CRFs. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

3. Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

4. Strudel, R.; Garcia, R.; Laptev, I.; Schmid, C. Segmenter: Transformer for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 7262–7272.

5. Cheng, B.; Misra, I.; Schwing, A.G.; Kirillov, A.; Girdhar, R. Masked-attention mask transformer for universal image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 1290–1299.

6. Mou, L.; Hua, Y.; Zhu, X.X. Relation matters: Relational context-aware fully convolutional network for semantic segmentation of high-resolution aerial images. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7557–7569.

7. Li, Z.; Chen, X.; Jiang, J.; Han, Z.; Li, Z.; Fang, T.; Huo, H.; Li, Q.; Liu, M. Cascaded multiscale structure with self-smoothing atrous convolution for semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5605713.

8. He, X.; Zhou, Y.; Zhao, J.; Zhang, D.; Yao, R.; Xue, Y. Swin transformer embedding UNet for remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4408715.

9. Zhang, C.; Jiang, W.; Zhang, Y.; Wang, W.; Zhao, Q.; Wang, C. Transformer and CNN hybrid deep neural network for semantic segmentation of very-high-resolution remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4408820.

10. Wang, L.; Li, R.; Zhang, C.; Fang, S.; Duan, C.; Meng, X.; Atkinson, P.M. UNetFormer: A UNet-like transformer for efficient semantic segmentation of remote sensing urban scene imagery. ISPRS J. Photogramm. Remote Sens. 2022, 190, 196–214.

11. Li, X.; Cheng, Y.; Fang, Y.; Liang, H.; Xu, S. 2DSegFormer: 2-D Transformer Model for Semantic Segmentation on Aerial Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4709413.

12. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, Vienna, Austria, 18–24 July 2021; pp. 8748–8763.

13. Jia, C.; Yang, Y.; Xia, Y.; Chen, Y.T.; Parekh, Z.; Pham, H.; Le, Q.; Sung, Y.H.; Li, Z.; Duerig, T. Scaling up visual and vision-language representation learning with noisy text supervision. In Proceedings of the International Conference on Machine Learning, Vienna, Austria, 18–24 July 2021; pp. 4904–4916.

14. Xu, M.; Zhang, Z.; Wei, F.; Lin, Y.; Cao, Y.; Hu, H.; Bai, X. A simple baseline for open-vocabulary semantic segmentation with pre-trained vision-language model. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 736–753.

15. Zhou, Z.; Lei, Y.; Zhang, B.; Liu, L.; Liu, Y. ZegCLIP: Towards adapting CLIP for zero-shot semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 11175–11185.

16. Cho, S.; Shin, H.; Hong, S.; Arnab, A.; Seo, P.H.; Kim, S. CAT-Seg: Cost aggregation for open-vocabulary semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 4113–4123.

17. Xu, M.; Zhang, Z.; Wei, F.; Hu, H.; Bai, X. Side adapter network for open-vocabulary semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 2945–2954.

18. Xie, B.; Cao, J.; Xie, J.; Khan, F.S.; Pang, Y. SED: A simple encoder-decoder for open-vocabulary semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 3426–3436.

19. Han, C.; Zhong, Y.; Li, D.; Han, K.; Ma, L. Open-vocabulary semantic segmentation with decoupled one-pass network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 1086–1096.

20. Yi, M.; Cui, Q.; Wu, H.; Yang, C.; Yoshie, O.; Lu, H. A simple framework for text-supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 7071–7080.

21. Wang, Y.; Sun, R.; Luo, N.; Pan, Y.; Zhang, T. Image-to-image matching via foundation models: A new perspective for open-vocabulary semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 3952–3963.

22. Oquab, M.; Darcet, T.; Moutakanni, T.; Vo, H.; Szafraniec, M.; Khalidov, V.; Fernandez, P.; Haziza, D.; Massa, F.; El-Nouby, A.; et al. DINOv2: Learning robust visual features without supervision. arXiv 2023, arXiv:2304.07193.

23. Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 4015–4026.

24. Li, F.; Zhang, H.; Sun, P.; Zou, X.; Liu, S.; Li, C.; Yang, J.; Zhang, L.; Gao, J. Segment and recognize anything at any granularity. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 467–484.

25. Wei, Z.; Chen, L.; Jin, Y.; Ma, X.; Liu, T.; Ling, P.; Wang, B.; Chen, H.; Zheng, J. Stronger Fewer & Superior: Harnessing Vision Foundation Models for Domain Generalized Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 28619–28630.

26. Li, F.; Zhang, H.; Xu, H.; Liu, S.; Zhang, L.; Ni, L.M.; Shum, H.Y. Mask DINO: Towards a unified transformer-based framework for object detection and segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 3041–3050.

27. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

28. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

29. Zhao, H.; Zhang, Y.; Liu, S.; Shi, J.; Loy, C.C.; Lin, D.; Jia, J. PSANet: Point-wise spatial attention network for scene parsing. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 267–283.

30. Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–21 June 2019; pp. 3146–3154.

31. Huang, Z.; Wang, X.; Huang, L.; Huang, C.; Wei, Y.; Liu, W. CCNet: Criss-cross attention for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 603–612.

32. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III; pp. 234–241.

33. Sun, K.; Zhao, Y.; Jiang, B.; Cheng, T.; Xiao, B.; Liu, D.; Mu, Y.; Wang, X.; Liu, W.; Wang, J. High-resolution representations for labeling pixels and regions. arXiv 2019, arXiv:1904.04514.

34. Zhang, B.; Xiao, J.; Qin, T. Self-guided and cross-guided learning for few-shot segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtually, 19–25 June 2021; pp. 8312–8321.

35. Deng, J.; Lu, J.; Zhang, T. Diff3DETR: Agent-based diffusion model for semi-supervised 3D object detection. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 57–73.

36. Tang, F.; Xu, Z.; Qu, Z.; Feng, W.; Jiang, X.; Ge, Z. Hunting attributes: Context prototype-aware learning for weakly supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 3324–3334.

37. Zhao, W.; Du, S. Learning multiscale and deep representations for classifying remotely sensed imagery. ISPRS J. Photogramm. Remote Sens. 2016, 113, 155–165.

38. He, D.; Shi, Q.; Liu, X.; Zhong, Y.; Zhang, L. Generating 2m fine-scale urban tree cover product over 34 metropolises in China based on deep context-aware sub-pixel mapping network. Int. J. Appl. Earth Obs. Geoinf. 2022, 106, 102667.

39. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

40. Wang, L.; Li, R.; Duan, C.; Zhang, C.; Meng, X.; Fang, S. A novel transformer based semantic segmentation scheme for fine-resolution remote sensing images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6506105.

41. Wang, L.; Li, R.; Wang, D.; Duan, C.; Wang, T.; Meng, X. Transformer meets convolution: A bilateral awareness network for semantic segmentation of very fine resolution urban scene images. Remote Sens. 2021, 13, 3065.

42. Ding, J.; Xue, N.; Xia, G.S.; Dai, D. Decoupling zero-shot semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 11583–11592.

43. Liang, F.; Wu, B.; Dai, X.; Li, K.; Zhao, Y.; Zhang, H.; Zhang, P.; Vajda, P.; Marculescu, D. Open-vocabulary semantic segmentation with mask-adapted CLIP. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 7061–7070.

44. Ghiasi, G.; Gu, X.; Cui, Y.; Lin, T.Y. Scaling open-vocabulary image segmentation with image-level labels. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 540–557.

45. Zhou, C.; Loy, C.C.; Dai, B. Extract free dense labels from CLIP. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 696–712.

46. Yu, Q.; He, J.; Deng, X.; Shen, X.; Chen, L.C. Convolutions Die Hard: Open-Vocabulary Segmentation with Single Frozen Convolutional CLIP. arXiv 2023, arXiv:2308.02487.

47. Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

48. Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.

49. Rizzoli, G.; Barbato, F.; Caligiuri, M.; Zanuttigh, P. SynDrone: Multi-modal UAV dataset for urban scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 2210–2220.

50. Nigam, I.; Huang, C.; Ramanan, D. Ensemble knowledge transfer for semantic segmentation. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, CA, USA, 12–15 March 2018; pp. 1499–1508.

51. Cai, W.; Jin, K.; Hou, J.; Guo, C.; Wu, L.; Yang, W. VDD: Varied drone dataset for semantic segmentation. J. Vis. Commun. Image Represent. 2025, 109, 104429.

52. Chen, Y.; Wang, Y.; Lu, P.; Chen, Y.; Wang, G. Large-scale structure from motion with semantic constraints of aerial images. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Guangzhou, China, 23–26 November 2018; pp. 347–359.

53. Lyu, Y.; Vosselman, G.; Xia, G.S.; Yilmaz, A.; Yang, M.Y. UAVid: A semantic segmentation dataset for UAV imagery. ISPRS J. Photogramm. Remote Sens. 2020, 165, 108–119.

54. Graz University of Technology. ICG Drone Dataset. 2023. Available online: https://fmi-data-index.github.io/semantic_drone.html (accessed on 7 June 2023).

55. Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

56. Tranheden, W.; Olsson, V.; Pinto, J.; Svensson, L. DACS: Domain adaptation via cross-domain mixed sampling. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 6–8 January 2021; pp. 1379–1389.

57. Zhang, H.; Li, F.; Zou, X.; Liu, S.; Li, C.; Yang, J.; Zhang, L. A simple framework for open-vocabulary segmentation and detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 1020–1031.

| Dataset | Link | Number of Categories | Classes |

|---|---|---|---|

| SynDrone [49] | https://github.com/LTTM/Syndrone?tab=readme-ov-file (accessed on 9 November 2025) | 27 | [Road; Sidewalk; Ground; Rail Track; Road Line; Terrain; Vegetation; Person; Rider; Train; Car; Truck; Bus; Bicycle; Motorcycle; Building; Wall; Fence; Bridge; Pole; Static; Guard Rail; Dynamic; Traffic Signs; Traffic Light; Water; Sky] |

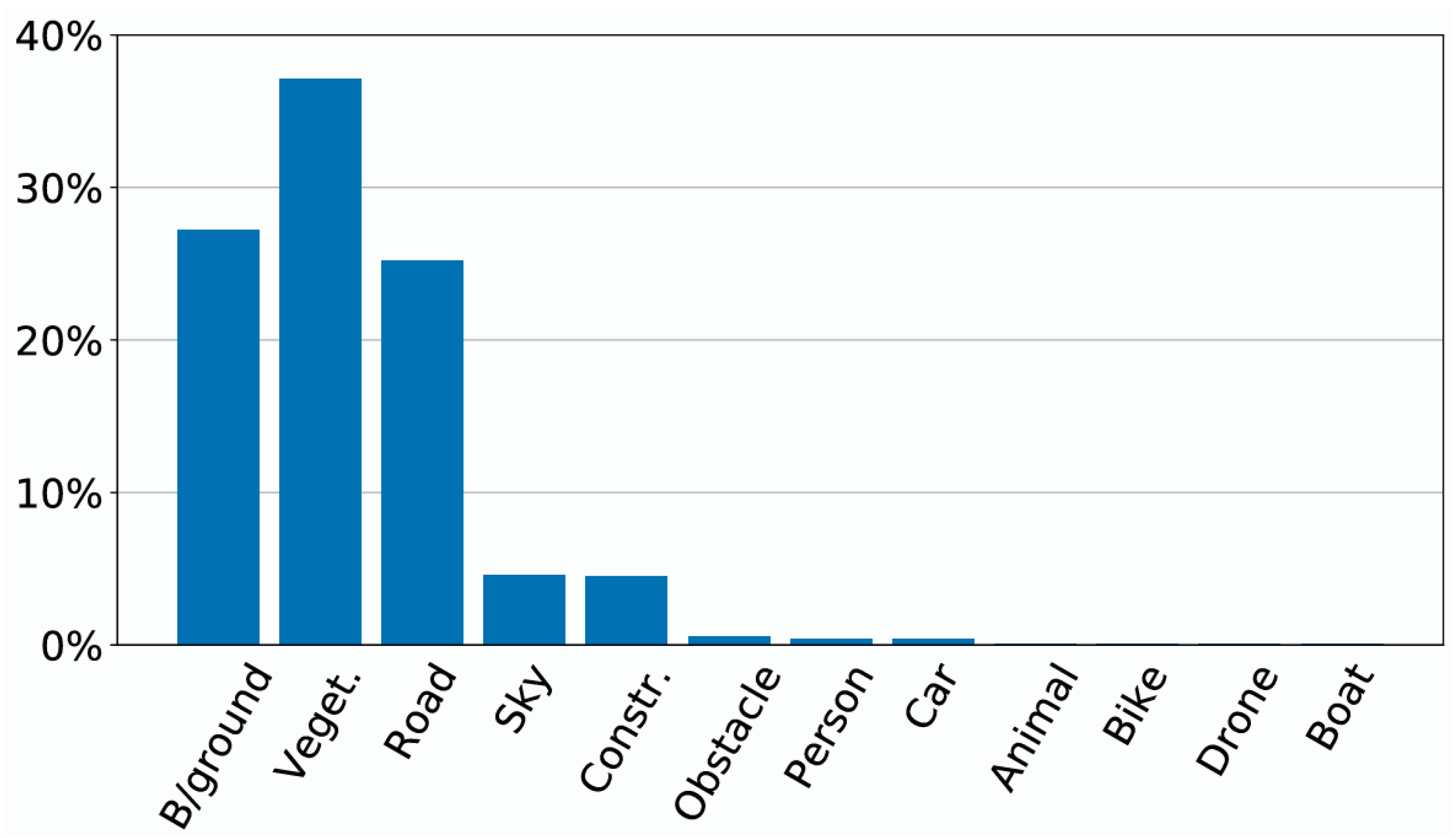

| Aeroscapes [50] | https://github.com/ishann/aeroscapes (accessed on 9 November 2025) | 11 | [Person; Bike; Car; Drone; Boat; Animal; Obstacle; Construction; Vegetation; Road; Sky] |

| VDD [51] | https://github.com/RussRobin/VDD (accessed on 9 November 2025) | 6 | [Wall; Road; Vegetation; Vehicle; Roof; Water] |

| UDD5 [52] | https://github.com/MarcWong/UDD/tree/master (accessed on 9 November 2025) | 4 | [Vegetation; Building; Road; Vehicle] |

| UDD6 [52] | https://github.com/MarcWong/UDD/tree/master (accessed on 9 November 2025) | 5 | [Facade; Road; Vegetation; Vehicle; Roof] |

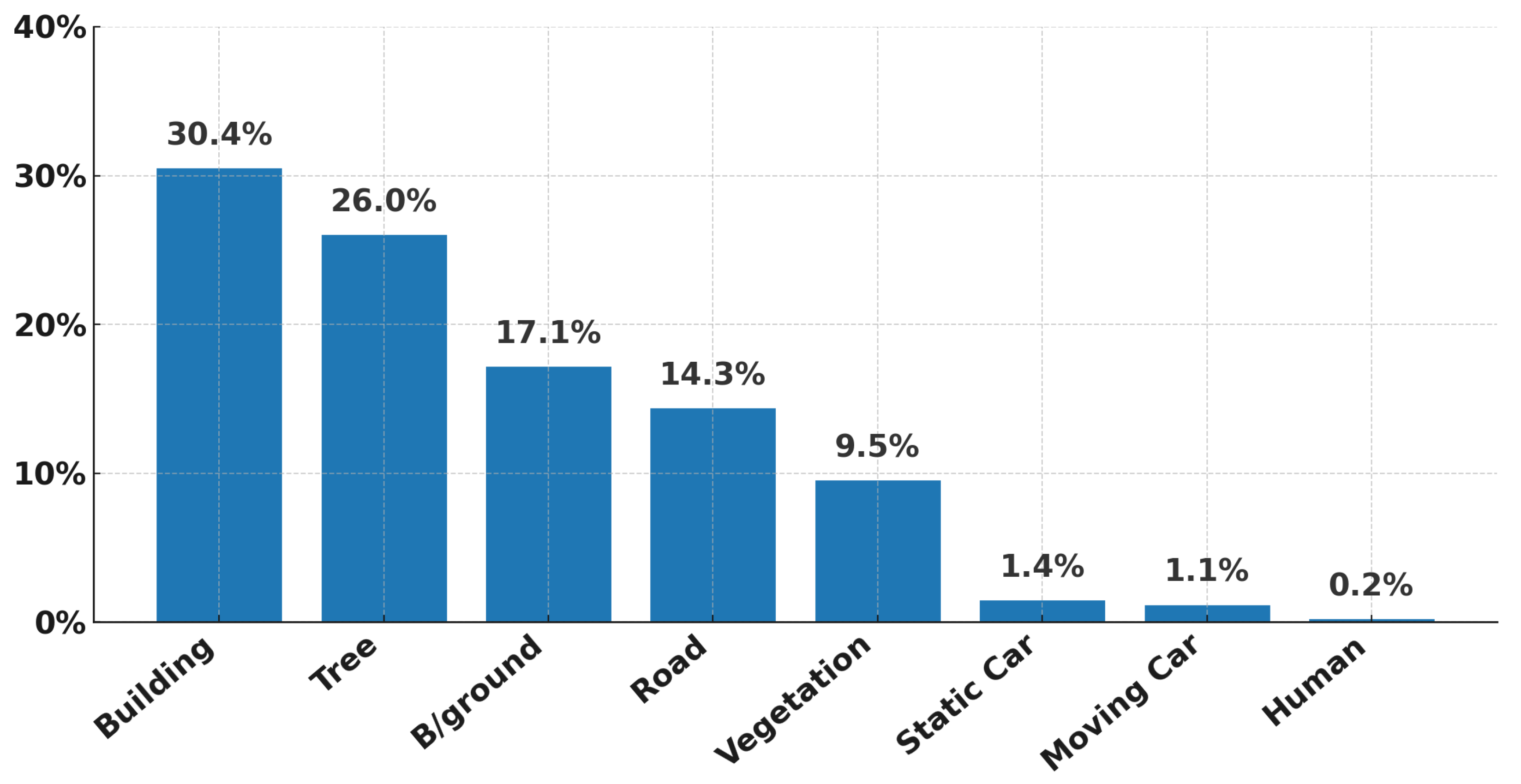

| UAVid [53] | https://research.utwente.nl/en/datasets/uavid-dataset/ (accessed on 9 November 2025) | 7 | [Building; Road; Tree; Low vegetation; Moving car; Static car; Human] |

| ICG Drone [54] | https://fmi-data-index.github.io/semantic_drone.html (accessed on 9 November 2025) | 22 | [Paved-area; Dirt; Grass; Gravel; Water; Rocks; Pool; Vegetation; Roof; Wall; Window; Door; Fence; Fence-pole; Person; Dog; Car; Bicycle; Tree; Bald-tree; Ar-marker; Obstacle] |

mIoU (%) on each test dataset

| Training Dataset | Model | VDD | UDD5 | UDD6 | Aeroscapes | UAVid | ICG Drone |
|---|---|---|---|---|---|---|---|
| SynDrone | ZegFormer [42] | 64.3 | 67.9 | 66.0 | 58.9 | 61.5 | 48.6 |
| SynDrone | OpenSeeD [57] | 65.9 | 68.5 | 67.2 | 60.2 | 62.7 | 49.3 |
| SynDrone | SED [18] | 66.7 | 68.7 | 66.5 | 61.3 | 62.3 | 51.2 |
| SynDrone | OVSeg [43] | 66.5 | 69.5 | 67.4 | 61.0 | 62.5 | 50.5 |
| SynDrone | SAN [17] | 67.3 | 69.8 | 68.3 | 61.5 | 63.9 | 51.6 |
| SynDrone | CAT-Seg [16] | 67.8 | 70.7 | 68.2 | 62.3 | 63.7 | 52.3 |
| SynDrone | Ours | 70.9 | 72.1 | 70.6 | 64.9 | 65.4 | 55.7 |
| UDD5 | ZegFormer [42] | 66.8 | 88.3 | 76.1 | 58.1 | 63.5 | 40.4 |
| UDD5 | OpenSeeD [57] | 67.0 | 88.5 | 77.5 | 59.8 | 64.2 | 41.2 |
| UDD5 | SED [18] | 67.6 | 89.2 | 78.7 | 60.2 | 64.0 | 42.5 |
| UDD5 | OVSeg [43] | 68.4 | 89.1 | 78.3 | 59.5 | 64.2 | 41.6 |
| UDD5 | SAN [17] | 68.7 | 89.0 | 79.1 | 60.4 | 64.7 | 42.3 |
| UDD5 | CAT-Seg [16] | 69.5 | 89.3 | 79.6 | 61.1 | 65.3 | 43.1 |
| UDD5 | Ours | 72.1 | 89.6 | 81.2 | 64.3 | 67.2 | 45.4 |
| VDD | ZegFormer [42] | 86.1 | 67.5 | 67.2 | 62.6 | 65.2 | 40.5 |
| VDD | OpenSeeD [57] | 86.9 | 68.1 | 68.4 | 63.5 | 65.7 | 40.8 |
| VDD | SED [18] | 87.1 | 68.6 | 69.0 | 62.7 | 66.8 | 41.2 |
| VDD | OVSeg [43] | 86.5 | 69.4 | 68.5 | 63.9 | 66.0 | 41.9 |
| VDD | SAN [17] | 87.4 | 69.9 | 69.1 | 64.3 | 66.3 | 42.0 |
| VDD | CAT-Seg [16] | 87.9 | 70.3 | 69.8 | 65.0 | 67.1 | 43.3 |
| VDD | Ours | 89.1 | 72.6 | 71.3 | 67.4 | 69.7 | 45.8 |
| Aeroscapes | ZegFormer [42] | 69.8 | 68.6 | 67.3 | 87.0 | 66.9 | 41.9 |
| Aeroscapes | OpenSeeD [57] | 70.1 | 69.3 | 68.0 | 87.2 | 67.8 | 43.1 |
| Aeroscapes | SED [18] | 70.7 | 69.8 | 68.7 | 87.5 | 68.3 | 44.2 |
| Aeroscapes | OVSeg [43] | 71.0 | 70.6 | 68.2 | 88.7 | 67.7 | 44.7 |
| Aeroscapes | SAN [17] | 71.6 | 71.5 | 69.5 | 88.3 | 68.8 | 44.3 |
| Aeroscapes | CAT-Seg [16] | 72.3 | 71.7 | 69.8 | 88.9 | 69.1 | 45.4 |
| Aeroscapes | Ours | 75.8 | 74.7 | 72.7 | 89.7 | 71.8 | 48.1 |
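All results are reported as mIoU (%). For readers who want to reproduce the metric, the following is a minimal sketch of the standard computation from a confusion matrix. The `ignore_index` value of 255 and the choice to skip classes absent from both prediction and ground truth are common conventions assumed here, not details confirmed by the paper.

```python
import numpy as np


def confusion_matrix(pred, target, num_classes, ignore_index=255):
    """Count (target, prediction) pairs; rows are ground-truth classes."""
    pred = np.asarray(pred).ravel()
    target = np.asarray(target).ravel()
    keep = target != ignore_index  # drop unlabeled pixels
    index = target[keep] * num_classes + pred[keep]
    counts = np.bincount(index, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def mean_iou(conf):
    """Mean intersection-over-union in percent.

    Classes that appear in neither prediction nor ground truth are skipped.
    """
    true_pos = np.diag(conf).astype(float)
    union = conf.sum(axis=0) + conf.sum(axis=1) - true_pos
    valid = union > 0
    return float(100.0 * (true_pos[valid] / union[valid]).mean())
```

In practice the confusion matrix is accumulated over the whole test set before `mean_iou` is called once, rather than averaging per-image scores.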

| Model | Pers. | Bike | Car | Drone | Boat | Anim. | Obst. | Const. | Veg. | Road | Sky |
|---|---|---|---|---|---|---|---|---|---|---|---|
| CAT-Seg | 81.8 | 63.8 | 39.6 | 64.6 | 21.0 | 56.5 | 43.9 | 47.2 | 76.6 | 86.1 | 89.7 |
| Ours | 82.4 | 63.1 | 41.0 | 74.4 | 24.2 | 63.0 | 51.9 | 50.0 | 81.8 | 87.1 | 90.1 |

| Model | Build. | Road | Tree | Low Veg. | Mov. Car | Sta. Car | Human |
|---|---|---|---|---|---|---|---|
| CAT-Seg | 63.8 | 91.2 | 76.3 | 73.1 | 63.7 | 49.3 | 52.1 |
| Ours | 66.1 | 91.7 | 77.2 | 77.2 | 68.2 | 52.5 | 56.0 |

mIoU (%)

| No. | Components enabled (incl. TG) | VDD | UDD6 | Aeroscapes | UAVid | ICG Drone |
|---|---|---|---|---|---|---|
| 1 | | 68.7 | 78.3 | 60.2 | 64.1 | 42.0 |
| 2 | ✓ | 70.4 | 79.1 | 61.3 | 65.0 | 43.1 |
| 3 | ✓ | 70.2 | 79.3 | 61.6 | 64.9 | 42.7 |
| 4 | ✓ ✓ | 71.0 | 80.2 | 62.1 | 65.7 | 43.5 |
| 5 | ✓ ✓ ✓ | 71.7 | 80.9 | 63.4 | 66.6 | 44.6 |
| 6 | ✓ ✓ ✓ ✓ | 72.1 | 81.2 | 64.3 | 67.2 | 45.4 |

mIoU (%)

| Loss weight | VDD | UDD6 | Aeroscapes | UAVid | ICG Drone |
|---|---|---|---|---|---|
| 0.5 | 71.6 | 80.6 | 63.1 | 66.5 | 43.9 |
| 1 | 72.1 | 81.2 | 64.3 | 67.2 | 45.4 |
| 1.5 | 71.8 | 80.4 | 62.9 | 66.3 | 44.3 |
| 2 | 70.9 | 80.2 | 61.8 | 65.7 | 42.9 |

mIoU (%)

| Loss weight | VDD | UDD6 | Aeroscapes | UAVid | ICG Drone |
|---|---|---|---|---|---|
| 0.5 | 71.3 | 80.9 | 63.5 | 66.2 | 44.2 |
| 1 | 72.1 | 81.2 | 64.3 | 67.2 | 45.4 |
| 1.5 | 71.9 | 80.5 | 62.7 | 66.8 | 44.6 |
| 2 | 71.2 | 80.0 | 62.1 | 66.3 | 43.7 |

mIoU (%)

| Loss weight | VDD | UDD6 | Aeroscapes | UAVid | ICG Drone |
|---|---|---|---|---|---|
| 0.5 | 71.8 | 80.9 | 63.7 | 66.9 | 44.8 |
| 1 | 72.1 | 81.2 | 64.3 | 67.2 | 45.4 |
| 1.5 | 72.0 | 80.9 | 63.9 | 66.7 | 45.1 |
| 2 | 71.4 | 80.2 | 63.3 | 66.1 | 44.3 |

| Patch Size | Mask Ratio 0.2 | Mask Ratio 0.3 | Mask Ratio 0.4 | Mask Ratio 0.5 |
|---|---|---|---|---|
| 4 | 63.2 | 63.8 | 63.6 | 63.5 |
| 8 | 63.6 | 64.1 | 64.2 | 63.6 |
| 16 | 64.0 | 64.3 | 63.4 | 63.2 |
| 32 | 63.4 | 63.6 | 63.0 | 62.4 |
| 64 | 63.1 | 62.7 | 62.1 | 61.6 |

mIoU (%)

| Decoder | VDD | UDD6 | Aeroscapes | UAVid | ICG Drone |
|---|---|---|---|---|---|
| Segmenter | 69.5 | 77.3 | 62.5 | 63.1 | 41.8 |
| Segformer | 68.5 | 79.8 | 63.1 | 64.3 | 41.3 |
| Mask2former | 70.2 | 79.4 | 62.1 | 66.7 | 43.6 |
| Ours | 72.1 | 81.2 | 64.3 | 67.2 | 45.4 |

| Method | Params. (M) | FPS | GFLOPs |
|---|---|---|---|
| OVSeg | 532.61 | 0.80 | 3216.70 |
| SAN | 447.31 | 8.55 | 1319.64 |
| CAT-Seg | 433.74 | 2.70 | 2000.57 |
| Ours | 431.15 | 9.33 | 1012.91 |
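The FPS column depends on how throughput is timed. A minimal sketch of a typical measurement loop follows, assuming a hypothetical `infer` callable that runs one forward pass; the warm-up and repeat counts are illustrative defaults, and the protocol actually used for the table is not stated here.

```python
import time


def measure_fps(infer, inputs, warmup=2, repeats=5):
    """Return processed inputs per second for the callable `infer`.

    A few warm-up calls are made first so that one-time setup costs
    (caching, lazy initialization) do not distort the measurement.
    """
    for sample in inputs[:warmup]:
        infer(sample)
    processed = 0
    start = time.perf_counter()
    for _ in range(repeats):
        for sample in inputs:
            infer(sample)
            processed += 1
    elapsed = time.perf_counter() - start
    # Guard against a zero reading on very coarse clocks.
    return processed / max(elapsed, 1e-12)
```

When the model runs on a GPU, the device must be synchronized before each clock reading, because kernel launches are asynchronous and the loop would otherwise time only the launch overhead.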

| Method | Trainable Params. (M) | Training Time (h) | GPU Memory (GB) |
|---|---|---|---|
| OVSeg | 147.23 | 17.6 | 88.5 |
| SAN | 23.67 | 18.0 | 102.7 |
| CAT-Seg | 70.27 | 12.6 | 86.2 |
| Ours | 14.39 | 10.3 | 83.1 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, F.; Zhang, Z.; Wang, X.; Wang, X.; Xu, Y. Bridging Vision Foundation and Vision–Language Models for Open-Vocabulary Semantic Segmentation of UAV Imagery. Remote Sens. 2025, 17, 3704. https://doi.org/10.3390/rs17223704
