Highlights

What are the main findings?

- A domain-unified adaptive target detection framework (DUA-TDF) for small vehicle targets in monostatic/bistatic SAR images is proposed.

- The multi-scale detail-aware CycleGAN (MSDA-CycleGAN) and the cross-window axial self-attention target detection network (CWASA-Net) are proposed to enable effective and robust detection of small vehicle targets under both within-domain and cross-domain testing conditions.

What are the implications of the main findings?

- The proposed MSDA-CycleGAN achieves unpaired image style transfer while emphasizing both global structure and local details of the generated images, significantly enhancing the generalization capability of downstream target detection models.

- The proposed CWASA-Net improves the detection performance for small targets in complex backgrounds by jointly optimizing feature extraction and feature fusion.

Abstract

Benefiting from the advantages of unmanned aerial vehicle (UAV) platforms such as low cost, rapid deployment capability, and miniaturization, the application of UAV-borne synthetic aperture radar (SAR) has developed rapidly. Utilizing a self-developed monostatic Miniaturized SAR (MiniSAR) system and a bistatic MiniSAR system, our team conducted multiple imaging missions over the same vehicle equipment display area at different times. However, system disparities and time-varying factors lead to a mismatch between the distributions of the training and test data. Additionally, small ground vehicle targets under complex background clutter exhibit limited size and weak scattering characteristics. These two issues pose significant challenges to the precise detection of small ground vehicle targets. To address these issues, this article proposes a domain-unified adaptive target detection framework (DUA-TDF). The approach consists of two stages: image-to-image translation, followed by feature extraction and target detection. In the first stage, a multi-scale detail-aware CycleGAN (MSDA-CycleGAN) is proposed to align the source and target domains at the image level by achieving unpaired image style transfer while emphasizing both global structure and local details of the generated images. In the second stage, a cross-window axial self-attention target detection network (CWASA-Net) is proposed. This network employs a hybrid backbone centered on the cross-window axial self-attention mechanism to enhance feature representation, coupled with a convolution-based stacked cross-scale feature fusion network to strengthen multi-scale feature interaction. To validate the effectiveness and generalization capability of the proposed algorithm, comprehensive experiments are conducted on both the self-developed monostatic/bistatic SAR datasets and a public dataset. Experimental results show that our method achieves an mAP50 exceeding 90% in within-domain tests and maintains over 80% in cross-domain scenarios, indicating robust detection performance and cross-domain adaptability.

1. Introduction

Synthetic aperture radar (SAR) [1], leveraging its all-day, all-weather, and high-resolution observation capabilities, plays an irreplaceable role in both military and civilian domains such as battlefield target recognition, disaster response, and maritime surveillance. Traditional spaceborne and airborne SAR systems are constrained by platform dependency and rigid observation modes, making it difficult to achieve highly flexible and refined target monitoring. Benefiting from the low cost, rapid deployment capability, and miniaturization of unmanned aerial vehicle (UAV) platforms, the application of UAV-borne SAR [2] has developed rapidly. UAV-borne SAR now plays an increasingly vital role in applications such as reconnaissance and mapping. Our research team has independently developed both monostatic and bistatic Miniaturized SAR (MiniSAR) systems, which combine advantages such as low cost, lightweight design, high resolution, and real-time processing. The bistatic system, in particular, employs a transmitter–receiver separation architecture that enables multi-angle observation capabilities. This allows the acquisition of asymmetric scattering characteristics of targets, thereby enhancing information completeness and anti-jamming performance. These features provide multi-dimensional support for the application of MiniSAR in detecting small ground vehicle targets. However, there are system disparities and time-varying factors between the monostatic and bistatic systems, including disparities in viewing angle, resolution, and scattering characteristics caused by different imaging geometries, as well as variations in background environment and radar system parameters due to temporal gaps in data acquisition. These discrepancies result in a mismatch between the distributions of the training and test data, exhibiting significant domain shift [3]. 
Furthermore, small vehicle targets under complex background clutter exhibit limited size and weak scattering characteristics. Consequently, developing an effective and robust method for detecting small targets in complex backgrounds under various conditions remains a substantial challenge.

Traditional SAR target detection methods, such as those based on constant false alarm rate (CFAR) algorithms [4], suffer from limitations including limited feature representation capacity, poor environmental adaptability, and low real-time processing efficiency. These shortcomings make it difficult for them to meet the requirements of modern applications in complex scenarios. In recent decades, data-driven deep learning methods have gradually emerged as the mainstream technical approach, owing to their powerful capability for automatic feature extraction and end-to-end optimization. Deep learning-based target detection methods are primarily categorized into two-stage methods, one-stage methods, and transformer-based methods. Two-stage detection methods involve two distinct phases: initially generating region proposals that may contain targets, followed by performing classification and precise bounding box regression on these proposed regions. Representative two-stage detectors include the Region-based Convolutional Neural Network (R-CNN) series [5,6,7]. Due to the secondary refinement of candidate regions in the second stage, two-stage detectors generally achieve higher detection accuracy and more precise bounding box regression. However, they suffer from high computational costs and often fail to achieve real-time detection. One-stage detection methods eliminate the step of generating region proposals and instead perform dense sampling on the image, directly predicting both target categories and bounding boxes in a single pass. Representative one-stage detectors include the You Only Look Once (YOLO) series [8,9,10], Single Shot MultiBox Detector (SSD) [11], and RetinaNet [12]. One-stage detectors benefit from their simple design and fast detection speed, but traditionally exhibit slightly lower accuracy and relatively weaker performance in detecting small targets. Transformer-based detection methods formulate target detection as a set prediction problem. 
Utilizing an encoder–decoder architecture with learnable query vectors, they are optimized end-to-end via bipartite matching loss, thereby discarding anchor boxes and non-maximum suppression from traditional approaches. Representative transformer-based detectors include Detection Transformer (DETR) [13] and Deformable DETR [14]. Transformer-based detectors simplify the detection pipeline and enable truly end-to-end detection, demonstrating strong performance in complex scenes. Nevertheless, they suffer from high computational complexity, heavy reliance on large-scale datasets, and slow training convergence.

In traditional deep learning-based [15] target detection, both training and test data are required to follow the same probability distribution. Models are trained in a fully supervised manner using labeled datasets, under which condition satisfactory test results can be achieved. However, in real-world detection tasks, training and test data may be acquired by different systems. Disparities in imaging geometries, radar system parameters, and data acquisition timelines between monostatic and bistatic systems can lead to a distribution mismatch between training and test data, consequently resulting in significant degradation of detection performance. Domain adaptation methods offer an effective solution to the aforementioned problem by transferring knowledge from the training data to the test data, thereby effectively mitigating the distribution discrepancy across domains. Facilitated by domain adaptation, target detection models originally developed on homogeneous datasets can be effectively applied to detection tasks on heterogeneous datasets. This approach significantly enhances the model’s detection performance and generalization capability in cross-domain scenarios without incurring additional annotation costs.

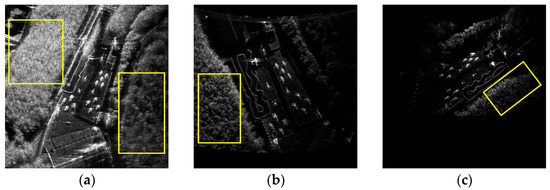

In current research, the definition of small targets lacks a unified standard. The existing definitions are generally categorized into relative-scale and absolute-scale criteria. Relative-scale definitions are based on the size ratio of the target to the entire image. For instance, Chen et al. [16] consider targets with a median ratio of bounding box area to image area between 0.08% and 0.58% as small targets. However, relative scale is susceptible to variations in image dimensions and preprocessing operations, making it difficult to objectively evaluate a model’s cross-scale performance. Therefore, this article adopts the widely accepted absolute-scale definition, following the standard established in the MS COCO dataset, wherein targets with pixel dimensions smaller than 32 × 32 are classified as small targets. As shown in Figure 1, in the monostatic/bistatic SAR datasets used in this article, all images are uniformly sized at 640 × 640 pixels, and all targets range in size from 12 × 12 to 28 × 28 pixels. According to the absolute criterion mentioned above, all targets in the datasets fall within the small target category.
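The two criteria discussed above can be illustrated with a short sketch. It assumes the MS COCO convention that a target is small when its bounding-box area is below 32 × 32 pixels; the function names are hypothetical and chosen only for illustration.

```python
def bbox_area_ratio(box_w, box_h, img_w, img_h):
    """Relative scale: bounding-box area as a fraction of the image area."""
    return (box_w * box_h) / (img_w * img_h)


def is_small_absolute(box_w, box_h, limit=32):
    """Absolute scale (MS COCO convention): area below limit x limit pixels."""
    return box_w * box_h < limit * limit


if __name__ == "__main__":
    image_side = 640  # all images in the datasets are 640 x 640 pixels
    for side in (12, 28):  # smallest and largest target sizes reported above
        ratio = bbox_area_ratio(side, side, image_side, image_side)
        print(f"{side} x {side}: small={is_small_absolute(side, side)}, "
              f"area ratio={100 * ratio:.3f}%")
```

Under this convention both extremes of the reported size range (12 × 12 and 28 × 28 pixels) are classified as small, whereas the relative ratio would change if the same scene were cropped or resized to a different image size.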

Figure 1. Panoramic images of the monostatic/bistatic SAR datasets: (a) Monostatic March dataset. (b) Monostatic July dataset. (c) Bistatic dataset. The yellow rectangles indicate extensive and complex background clutter.

Panoramic images in Figure 1 compare the monostatic March dataset, the monostatic July dataset, and the bistatic dataset. It is clearly evident that the monostatic July dataset exhibits the highest image quality, with clearly outlined small ground vehicle targets and a higher signal-to-noise ratio compared to the monostatic March dataset. This improvement can be primarily attributed to the increased power amplifier gain of the monostatic MiniSAR system’s sensor during the July data acquisition campaign. In contrast, the bistatic MiniSAR system, influenced by the bistatic angle resulting from its spatially separated transmitter and receiver architecture, undergoes alterations in scattering mechanisms. This leads to phenomena such as abrupt intensity variations or even disappearance of targets in the bistatic images, resulting in uneven brightness distribution throughout the bistatic dataset. Although the regions of interest in these three datasets correspond to the same vehicle equipment display area, system disparities and variations in the background environment caused by temporal gaps in data acquisition lead to a shift in data distribution. Consequently, when both training and test data in the MiniSAR target detection task originate from the same dataset, referred to as within-domain testing, the model achieves acceptable detection performance. However, when training and test data are drawn from different datasets, known as cross-domain testing, the model’s detection accuracy declines significantly. Furthermore, the small ground vehicle targets, which occupy very few pixels, are embedded in extensive and complex background clutter as indicated by the yellow rectangles. This makes it challenging to distinguish foreground from background and to classify target categories, thereby imposing additional demands on the model’s detection performance. 
In summary, developing a robust detection method for small vehicle targets that remains effective under both within-domain and cross-domain testing conditions while resisting complex background clutter represents a meaningful and highly challenging research task.

To address the aforementioned challenges, this article proposes a domain-unified adaptive SAR target detection framework (DUA-TDF) based on pixel-level domain adaptation and a cross-window axial self-attention mechanism. The proposed method consists of two stages: image-to-image translation, followed by feature extraction and target detection. In the image-to-image translation stage, a style generation network named multi-scale detail-aware CycleGAN (MSDA-CycleGAN) is proposed to transform image characteristics across domains. By emphasizing both global structure and local details in the generated images, it achieves unpaired image style transfer. After image translation, the problem of detecting targets in heterogeneous data is transformed into a task within a homogeneous domain, thereby allowing subsequent research to focus primarily on small target detection in complex backgrounds. In the feature extraction and target detection stage, a cross-window axial self-attention target detection network (CWASA-Net) is proposed. First, the network employs the cross-window axial self-attention mechanism to process high-level features, enabling refined learning of both local details and global dependencies. By decomposing the conventional two-dimensional global attention computation into separate one-dimensional row-wise and column-wise axial operations, it significantly reduces the computational overhead required for global context modeling. Second, a convolution-based stacked cross-scale feature fusion network is designed to integrate and enhance the multi-scale features extracted by the backbone. The convolution-based stacked fusion modules, combined with bidirectional fusion paths, strengthen the interaction between shallow localization information and deep semantic information. The contributions of this article can be summarized as follows.

- (1) To address the significant performance degradation caused by a mismatch between the distributions of the training and test data, we propose a style generation network named MSDA-CycleGAN to align the source and target domains at the image level, thereby achieving unpaired image style transfer. This network incorporates two key designs to achieve precise and detail-preserving alignment: first, a multilayer guided filtering (MGF) module is embedded at the end of the generator to adaptively enhance high-frequency details in the generated images; second, a structural similarity constraint is introduced into the cycle-consistency loss, forcing the model to preserve critical geometric structures and spatial contextual information during style transfer. These mechanisms collectively ensure that the aligned images are more conducive to the generalization of downstream detection models.

- (2) To tackle the challenge of detecting small targets under complex background clutter, we propose a cross-window axial self-attention target detection network (CWASA-Net). This network jointly optimizes two key aspects: feature extraction and feature fusion. On the one hand, the proposed cross-window axial self-attention mechanism is employed in the deeper layers of the backbone network. It efficiently refines high-level features by capturing both local details and global dependencies, thereby compensating for the information scarcity of small targets. On the other hand, a convolution-based stacked cross-scale feature fusion network is designed as the neck network. The convolution-based stacked fusion modules, combined with bidirectional fusion paths, integrate and enhance the multi-scale features extracted by the backbone, thereby strengthening the interaction between shallow localization information and deep semantic information. These two components work synergistically to produce fused features that are rich in both contextual information and fine local details, significantly enhancing the discriminative representation capability for multi-scale features.

- (3) To validate the effectiveness of the proposed algorithm, we conduct comprehensive experiments on the self-developed monostatic/bistatic SAR datasets under multiple within-domain and cross-domain testing conditions. The superiority and generalization capability of our method are verified through comparative experiments with other state-of-the-art (SOTA) target detection algorithms, ablation experiments, and generalization experiments on a public dataset.

The rest of this article is organized as follows. Section 2 reviews related work. Section 3 introduces the self-developed monostatic/bistatic SAR datasets. Section 4 provides a detailed description of the proposed method. Section 5 presents experimental results and corresponding analyses based on both the monostatic/bistatic SAR datasets and a public dataset. Finally, Section 6 concludes the article.

2. Related Work

SAR target detection is closely related to the problem of small target detection in general scenarios, and the rapid progress in small target detection has significantly contributed to enhancing the performance of SAR target detection. Meanwhile, domain adaptation methods effectively mitigate the distribution discrepancy between training and test data in SAR images, thereby improving the model’s generalization capability in cross-domain scenarios. The following briefly reviews recent research advances in deep learning-based SAR target detection, small target detection, and domain adaptation.

2.1. SAR Target Detection

In recent years, deep learning-based methods have achieved remarkable progress in the field of SAR target detection. Zhao et al. [17] introduced an auxiliary segmentation task to encourage the network to focus on learning precise shapes and characteristics of targets, thereby enhancing the performance of the main detection task. Wan et al. [18] abandoned the conventional anchor mechanism and constructed a network architecture dedicated to multi-scale enhanced representation learning to address the issues of multi-scale targets and complex backgrounds in SAR images. Chen et al. [19] incorporated graph convolutional networks to explore and leverage global spatial and semantic relationships among targets, thereby refining and enhancing candidate features initially generated by YOLO. Du et al. [20] proposed an adaptive rotating anchor generation mechanism, coupled with a convolutional module featuring rotational constraints and rectangular receptive fields, to collectively improve the detection of rotated vehicles. Lv et al. [21] first utilized inherent characteristics of SAR targets for coarse localization to suppress strong background clutter and then significantly improved detection efficiency through a specially designed lightweight detection model. Zhou et al. [22] introduced a novel lightweight feature aggregation module capable of efficiently extracting and integrating discriminative fine-grained features specific to SAR targets, achieving a balance between detection accuracy and efficiency. Subsequently, Zhou et al. [23] proposed a SAR-specific feature aggregation module tailored to the unique scattering properties of vehicle targets in SAR images, and incorporated Swin Transformer into the detection head to capture deeper semantic information. Ye et al. [24] proposed an adaptive aggregation-refinement feature pyramid network to generate enhanced multi-scale features. 
By incorporating a key adaptive spatial aggregation module and a global context module, their approach strengthens the model’s capability in detecting small vehicle targets. While the above methods have demonstrated promising results in SAR target detection, their performance advantages heavily rely on the assumption that training and test data follow identical probability distributions. This limitation poses significant challenges to their generalization capability in real-world scenarios involving complex cross-domain conditions.

2.2. Small Target Detection

Small target detection aims to address core challenges such as low pixel coverage and weak feature representation. Technical approaches in this field can be categorized into four main types: multi-scale feature learning, contextual information utilization, data-oriented enhancement, and innovative optimization strategies. Multi-scale feature learning focuses on constructing feature representations that preserve both high-resolution details and high-level semantic information. Feature pyramid networks (such as Feature Pyramid Network (FPN), Path Aggregation Network (PANet), and Bidirectional FPN (BiFPN)) integrate features from different scales through unidirectional or bidirectional paths to improve the perception of multi-scale targets, especially small targets. Transformer-based methods (such as Vision Transformer (ViT) and Swin Transformer) leverage their inherent global modeling capacity and adaptive attention mechanisms to capture dependencies across varying scales effectively. Contextual information utilization addresses the difficulty of limited information in small targets by incorporating surrounding context to aid detection. Dilated convolutions expand the receptive field to integrate broader contextual information within a single feature computation. Self-attention mechanisms efficiently model long-range dependencies between pixels, capturing globally relevant contextual cues across the image. Data-oriented enhancement increases the number of small target samples directly at the data level. Oversampling increases the number of training iterations for images containing small targets by repeatedly sampling them during training. Generative adversarial networks (GANs) synthesize high-quality samples of small targets to alleviate extreme data imbalance. Innovative optimization strategies refine the training and inference processes to tackle difficulties in small target localization and the scarcity of positive samples. 
Enhanced anchor design strategies (such as dense anchors and anchor-free methods) improve the recall of small targets and prevent mismatch between anchor sizes and small targets. Improvements in loss function design (such as focal loss and its variants) reduce the contribution of easy-to-classify negative samples in the loss calculation, thereby directing more focus to small targets during learning.
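To make the effect of focal loss concrete, the following minimal sketch compares it with plain cross-entropy for a single binary prediction. The defaults alpha = 0.25 and gamma = 2 are the commonly used values from the original focal loss formulation and are assumed here for illustration; they are not settings reported in this article.

```python
import math


def cross_entropy(p, y):
    """Binary cross-entropy for one prediction; p is P(positive), y is 0 or 1."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(p_t)


def focal_loss(p, y, alpha=0.25, gamma=2.0):
    """Binary focal loss: -alpha_t * (1 - p_t)^gamma * log(p_t)."""
    p_t = p if y == 1 else 1.0 - p              # probability of the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


if __name__ == "__main__":
    easy_negative = (0.05, 0)  # background confidently predicted as background
    hard_positive = (0.10, 1)  # target that the model almost misses
    for name, (p, y) in (("easy negative", easy_negative),
                         ("hard positive", hard_positive)):
        print(f"{name}: CE={cross_entropy(p, y):.4f}, FL={focal_loss(p, y):.6f}")
```

The modulating factor (1 - p_t)^gamma shrinks the loss of the easy negative by several orders of magnitude while leaving the hard positive largely intact, which is the mechanism that shifts the training signal toward scarce small-target samples.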

2.3. Domain Adaptation for Target Detection

Domain adaptation is widely applied in target detection and can be broadly categorized into three types: image translation, feature alignment, and self-training. Image translation-based domain adaptation directly transforms the style of source domain images to that of the target domain at the pixel level, preserving the structural content and annotations of the source while reducing visual discrepancies. Image translation typically relies on image generation techniques [25,26,27]. CycleGAN [26] is an unsupervised framework based on cycle-consistency constraints, which enables unpaired image style transfer through bidirectional generative mappings. Inoue et al. [28] proposed a progressive domain adaptation framework that first transitions from fully supervised to weakly supervised learning within the source domain and then achieves weakly supervised feature alignment from the source to the target domain. This method effectively enables cross-domain target detection using only image-level labels. Feature alignment-based domain adaptation aims to minimize the discrepancy between feature distributions of the source and target domains in the latent space, guiding the model to learn domain-invariant features. Sun et al. [29] proposed minimizing the difference between the covariance matrices of source and target domain features to achieve alignment of second-order statistics, thereby accomplishing unsupervised domain adaptation. Chen et al. [3] first introduced adversarial learning to cross-domain target detection, aligning feature distributions between the source and target domains at both image and instance levels. Saito et al. [30] proposed a dual strong-weak alignment strategy that combines category-aware local features with global features to achieve comprehensive feature distribution alignment. 
Self-training-based domain adaptation leverages the model’s own high-confidence predictions on the target domain as pseudo-labels, iteratively guiding the learning process on target data. Cao et al. [31] integrated contrastive learning with a mean-teacher framework, enhancing intra-class feature compactness and inter-class separation to achieve more robust domain-adaptive target detection under the guidance of pseudo-labels. Li et al. [32] proposed a dual-stage target detection architecture based on an adaptive teacher model, which improves generalization and robustness through a dynamically optimized pseudo-label generation process and a feature alignment strategy. Although the aforementioned domain adaptation methods have demonstrated remarkable success in natural images, their designs often implicitly leverage semantic information from color channels. However, SAR images are single-channel grayscale data, and domain discrepancies in SAR images primarily manifest in aspects such as texture, structure, and scattering characteristics. Therefore, directly applying these methods to SAR images often fails to achieve satisfactory alignment of discriminative features, leading to limited performance improvement in cross-domain SAR target detection.
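As an illustration of feature alignment through second-order statistics, the covariance-matching idea attributed to Sun et al. [29] above can be sketched as follows. The loss is taken as the squared Frobenius distance between the source and target covariance matrices, scaled by 1/(4d²) for d-dimensional features; the function names and toy feature vectors are hypothetical.

```python
def covariance(features):
    """Unbiased covariance matrix of a list of d-dimensional feature vectors."""
    n, d = len(features), len(features[0])
    mean = [sum(f[j] for f in features) / n for j in range(d)]
    return [[sum((f[i] - mean[i]) * (f[j] - mean[j]) for f in features) / (n - 1)
             for j in range(d)]
            for i in range(d)]


def coral_loss(source, target):
    """Squared Frobenius distance between covariances, scaled by 1 / (4 d^2)."""
    d = len(source[0])
    cov_s, cov_t = covariance(source), covariance(target)
    squared = sum((cov_s[i][j] - cov_t[i][j]) ** 2
                  for i in range(d) for j in range(d))
    return squared / (4 * d * d)


if __name__ == "__main__":
    source = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
    shifted = [[5.0, 5.0], [6.0, 6.0], [7.0, 7.0]]  # same spread, new mean
    scaled = [[0.0, 0.0], [2.0, 2.0], [4.0, 4.0]]   # doubled spread
    print(coral_loss(source, shifted), coral_loss(source, scaled))
```

A pure mean shift leaves the loss at zero because only covariances are compared, while a change in spread is penalized. This also hints at why such statistics alone may not capture the texture and scattering differences that dominate SAR domain shift.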

3. Dataset Description

3.1. Monostatic and Bistatic MiniSAR Systems

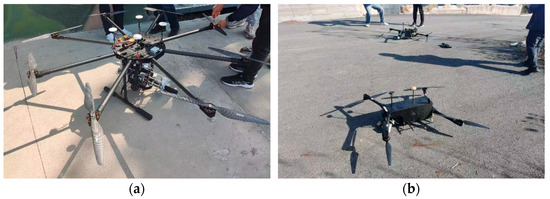

The integration of MiniSAR with UAV platforms combines advantages such as low cost, lightweight design, high resolution, and real-time processing capabilities. Our team has independently developed both a monostatic MiniSAR system and a bistatic MiniSAR system. The physical photographs of the systems are shown in Figure 2. As all vehicle targets are centrally parked within the vehicle equipment display area of the theme park, the MiniSAR systems are operated in spotlight mode. The schematic diagrams of the imaging geometry are provided in Figure 3.

Figure 2. Physical photographs of MiniSAR systems: (a) Monostatic MiniSAR system. (b) Bistatic MiniSAR system.

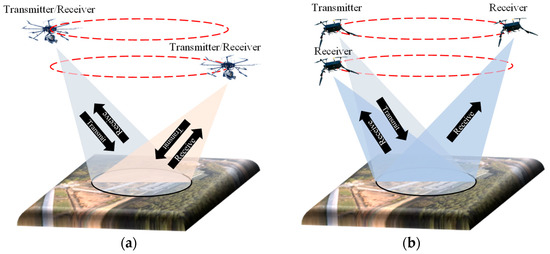

Figure 3. Schematic diagrams of the imaging geometry: (a) Monostatic MiniSAR system. (b) Bistatic MiniSAR system. The red dashed circles indicate the flight paths.

The monostatic MiniSAR system is illustrated in Figure 3a. The transmitter and receiver of this system are deployed on the same UAV. Main system parameters are summarized in Table 1. Specifically, the system operates in the X-band with a center frequency of 9.7 GHz and a bandwidth of 1800 MHz. The platform followed a circular flight path with a radius of 350 (±5) m around the vehicle equipment display area at a velocity of 5–7 m/s. By adjusting flight altitude and operating distance, the system captured target information at multiple depression angles. Each flight had a duration of approximately 360 s. With an azimuthal sample pulse count of 4096, sub-aperture echo data was acquired every 1.2 s. Each flight yielded 300 panoramic images after processing, achieving an imaging resolution of 0.1 m × 0.1 m. Using the monostatic MiniSAR system, our team conducted two imaging campaigns in March and July 2022. The March campaign consisted of three flights with depression angles of 15°, 31°, and 45°, respectively, while the July campaign included four flights at depression angles of 26°, 31°, 37°, and 45°. Additionally, the power amplifier gain of the sensor was increased during the July data acquisition, resulting in higher image quality in the July dataset compared to the March dataset.
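The acquisition figures quoted above can be cross-checked with simple arithmetic. The sketch below assumes the textbook slant-range resolution c/(2B) for a monostatic radar and, purely as an illustrative assumption, that one flight corresponds to roughly one full circle; neither the processing window nor the number of circles per flight is stated in this section.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

# Values quoted in the text for the monostatic MiniSAR system.
flight_duration_s = 360.0
subaperture_interval_s = 1.2
bandwidth_hz = 1800e6
flight_radius_m = 350.0

# One panoramic image per sub-aperture interval.
images_per_flight = round(flight_duration_s / subaperture_interval_s)

# Theoretical slant-range resolution before any windowing.
range_resolution_m = SPEED_OF_LIGHT / (2.0 * bandwidth_hz)

# Mean speed needed to complete one full circle within one flight (assumption).
circumference_m = 2.0 * math.pi * flight_radius_m
speed_for_one_circle = circumference_m / flight_duration_s

if __name__ == "__main__":
    print(images_per_flight)
    print(f"{range_resolution_m:.4f} m")
    print(f"{speed_for_one_circle:.2f} m/s")
```

The image count matches the 300 panoramic images per flight, and the implied mean speed falls inside the stated 5–7 m/s range. The theoretical range resolution of about 0.083 m is finer than the reported 0.1 m; one plausible explanation, not confirmed by the source, is the broadening introduced by sidelobe-suppression windows during imaging.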

Table 1. Main Parameters of the Monostatic MiniSAR System.

The bistatic MiniSAR system is illustrated in Figure 3b. The transmitter and receiver of this system are deployed on separate UAVs. Main system parameters are summarized in Table 2. In contrast to the monostatic system, the bistatic configuration employs a pulse width of 1.9012 ms, a pulse repetition frequency (PRF) of 525 Hz, and achieves an imaging resolution of 0.16 m × 0.26 m. Using the bistatic MiniSAR system, our team conducted the third imaging campaign in December 2023. The system executed a total of nine flights with varied azimuth and depression angle combinations to capture rich multi-angle scattering characteristics of the targets. The specific angular configurations of the transmitter and receiver for each flight are detailed in Table 3.

Table 2. Main Parameters of the Bistatic MiniSAR System.

Table 3. Angular Configurations of the Transmitter and Receiver for Nine Flights.

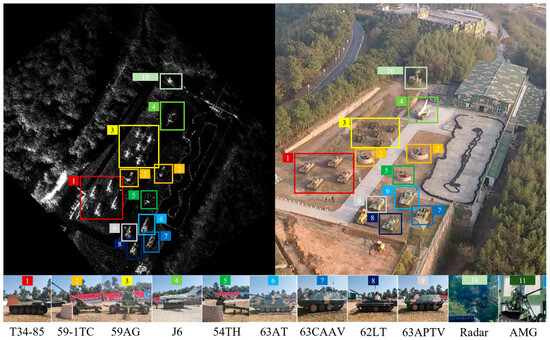

3.2. Monostatic/Bistatic SAR Datasets

Utilizing the MiniSAR systems, we conducted multiple imaging missions over the same vehicle equipment display area at different times, constructing the monostatic/bistatic SAR datasets named FAST-Vehicle. Figure 4 shows a comparative overview between the SAR panoramic imaging result and the corresponding optical image of the area. As detailed in Table 4, the FAST-Vehicle dataset comprises 11 categories of targets. The monostatic dataset includes the first 10 target types listed in Table 4, while the bistatic dataset supplements these with an additional category AMG. Side-view optical images and detailed information for all targets are provided in Figure 4 and Table 4, respectively.

Figure 4. SAR panoramic image and corresponding optical image of the vehicle equipment display area, and side-view optical images of the 11 target categories listed below.

Table 4. Full Name and Corresponding Abbreviation of Target Types.

4. Methodology

4.1. Framework Overview

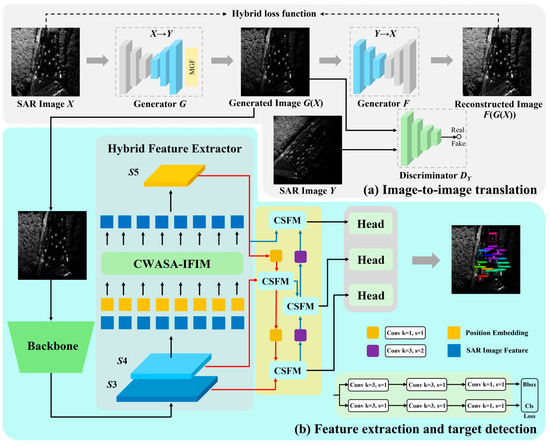

This article proposes the DUA-TDF based on pixel-level domain adaptation and a cross-window axial self-attention mechanism, aiming to achieve robust detection of small ground vehicle targets under complex background clutter in both within-domain and cross-domain scenarios. As illustrated in Figure 5, the proposed method follows a two-stage architecture: an image-to-image translation stage followed by a feature extraction and target detection stage. In the image-to-image translation stage, MSDA-CycleGAN is employed to align the source and target domains at the image level, enabling unpaired image style transfer. It is important to note that this stage is specifically designed for cross-domain detection tasks. Under within-domain testing conditions, the system automatically skips this stage and proceeds directly to the next stage. In the feature extraction and target detection stage, the anchor-free CWASA-Net is constructed. First, the backbone network combines the local feature extraction capability of the first four layers (S1–S4) of YOLOv8’s backbone with the intra-scale global context modeling ability of the cross-window axial self-attention mechanism, forming a hybrid feature extractor. Second, a convolution-based stacked cross-scale feature fusion network is designed as the neck network to integrate and enhance the multi-scale features extracted by the backbone. Finally, the decoupled detection head of YOLOv8 is adopted to perform target classification and bounding box regression. The following provides a detailed description of the aforementioned two stages.

Figure 5. Overall structure of DUA-TDF.
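The axial decomposition underlying the backbone's attention mechanism can be sketched in a few lines. This is a simplified illustration, not the CWASA module itself: it omits the cross-window design and all learned projections (queries, keys, and values are taken to be the input features), and the 20 × 20 map size in the usage example is an assumed stride-32 feature map for a 640 × 640 input.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend_1d(seq):
    """Self-attention over a 1D sequence of C-dim vectors (Q = K = V = seq)."""
    channels = len(seq[0])
    out = []
    for query in seq:
        weights = softmax([sum(a * b for a, b in zip(query, key)) / math.sqrt(channels)
                           for key in seq])
        out.append([sum(w * value[c] for w, value in zip(weights, seq))
                    for c in range(channels)])
    return out


def axial_attention(fmap):
    """fmap is H x W x C nested lists: row-wise pass, then column-wise pass."""
    height, width = len(fmap), len(fmap[0])
    rows = [attend_1d(row) for row in fmap]                       # along width
    cols = [attend_1d([rows[i][j] for i in range(height)])        # along height
            for j in range(width)]
    return [[cols[j][i] for j in range(width)] for i in range(height)]


def score_counts(height, width):
    """Pairwise attention scores: full 2D attention versus axial attention."""
    tokens = height * width
    return tokens ** 2, tokens * (height + width)


if __name__ == "__main__":
    print(score_counts(20, 20))  # (160000, 16000)
```

After the two passes, every position has received information from every other position through its row and column, yet the number of pairwise scores grows as HW(H + W) rather than (HW)², a tenfold reduction for the 20 × 20 example.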

4.2. Image-to-Image Translation

The core objective of this stage is to address the domain shift in SAR images caused by system disparities and time-varying factors. It is noteworthy that the three subsets of the FAST-Vehicle dataset (the monostatic March dataset, monostatic July dataset, and bistatic dataset) capture identical physical scenes and targets of interest. This implies that the domain discrepancy does not originate from variations in semantic content or target categories but rather manifests as differences in underlying textures and scattering intensities caused by variations in backscattering characteristics and imaging geometry. The essence of this discrepancy lies in pixel-level stylistic variations. Consequently, compared to more complex feature-level domain adaptation methods designed to address semantic discrepancies, a pixel-level domain adaptation approach capable of directly aligning image styles and enhancing discriminative details is sufficient to effectively bridge the aforementioned distribution gaps and improve the generalization capability of downstream target detection models.

Although CycleGAN is a classical method for unpaired image translation, its direct application to SAR images exhibits significant limitations. The standard CycleGAN and its loss function are designed for natural images. However, SAR images are single-channel grayscale data lacking color information, and their discriminative features rely entirely on structural and textural details. The original cycle-consistency loss (L1 loss) tends to produce blurred edges and loss of fine details during translation. To address these issues, this article proposes MSDA-CycleGAN, an enhanced generation network based on CycleGAN, which incorporates two key improvements: (1) A multilayer guided filtering (MGF) module is embedded at the end of the generator to adaptively enhance high-frequency details in the generated images. (2) A structural similarity constraint is incorporated into the cycle-consistency loss, forming a hybrid loss function that forces the model to preserve critical geometric structures and spatial contextual information during style transfer. The structure of MSDA-CycleGAN is illustrated in Figure 5a. The MGF module and the optimized loss function are described in detail in the following sections.

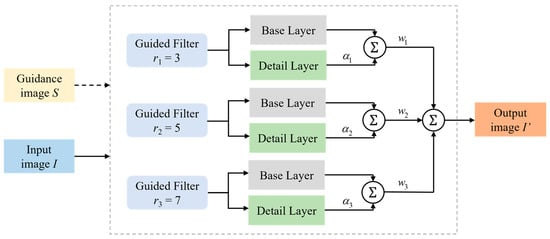

4.2.1. Multilayer Guided Filtering Module

To overcome the limitation of the standard CycleGAN generator in preserving fine details in SAR images, particularly the blurred terrain edges and vehicle targets caused by the L1-based cycle-consistency constraint, we design the MGF module as illustrated in Figure 6. The core idea of this module is to explicitly enhance high-frequency components of the output image through multi-scale decomposition, detail enhancement, and adaptive fusion.

Figure 6.

Structure of the MGF module. The input image I and the guidance image S serve as inputs to the MGF module. The module utilizes the structural information from S to guide the filtering and enhancement of I, ultimately producing the detail-enhanced result I′.

As shown in Figure 6, the input image $I$ is first decomposed at three different scales. For each scale $k$, a guided filter $\mathrm{GF}_k$ is applied to process the image:

$$B_k = \mathrm{GF}_k(I, S; r_k), \quad k = 1, 2, 3$$

where $B_k$ represents the base layer obtained from filtering, which contains low-frequency contour information of the image; $S$ denotes the guidance image; and $r_k$ is the window radius of the $k$th filter, determining the size of the receptive field. The small window applies mild smoothing and focuses on capturing the finest high-frequency components (such as edges and textures), while the large windows perform strong smoothing to extract macroscopic low-frequency contours, with their corresponding detail layers containing more prominent and coarse-grained structural information. We adopt an increasing radius setting with $r_1 = 3$, $r_2 = 5$, and $r_3 = 7$ to capture features at three distinct scales, ranging from fine to coarse. Subsequently, the detail layer is obtained by subtracting the base layer:

$$D_k = I - B_k$$

where $D_k$ denotes the detail layer, capturing the high-frequency information (such as edges and textures) removed from the input image by filtering at scale $k$.

Then, to adaptively enhance beneficial high-frequency details, we apply scaling to the detail layers across the three scales:

$$\hat{D}_k = \alpha_k D_k$$

where $\alpha_k$ denotes a learnable coefficient that is optimized during training via gradient descent and backpropagation.

Finally, the enhanced detail layers from the three scales are fused with the base layer through weighted summation to produce the detail-enhanced output image:

$$I' = B_3 + \sum_{k=1}^{3} w_k \hat{D}_k$$

where $\hat{D}_k$ is the enhanced detail layer at scale $k$, $B_3$ is the large-scale base layer, and $w_k$ represents the fusion weights for each scale. To ensure fusion stability, the weights are normalized using a softmax function:

$$w_k = \frac{\exp(\beta_k)}{\sum_{j=1}^{3} \exp(\beta_j)}$$

where $\beta_k$ also denotes a learnable coefficient. This design enables the network to flexibly integrate information from all three scales. The large-scale base layer provides the global structure, while the small-scale detail layer contributes fine-grained textures.

As illustrated in Figure 5a, the MGF module is embedded in a plug-and-play manner at the end of the forward generator G to enhance the detail representation of its output images, while the backward generator F retains its original structure. This asymmetric design is motivated by the fact that the task requires only high-quality unidirectional translation. The backward path in cycle-consistent training serves merely as a regularization constraint, and the detail fidelity of its output is not a primary concern. Preserving F in its original form avoids unnecessary computational overhead, improving model efficiency. The inputs to the MGF module are the preliminary output X’ from generator G and the source domain image X, which serves as the guidance image. This design is justified by the observation that, although X and X’ exhibit different styles, they share identical scene structures and target spatial layouts. Hence, the source domain image provides the most reliable spatial structural prior for the filtering process, guiding the module to operate at accurate spatial locations.
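The decomposition, enhancement, and fusion steps of the MGF module can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the guided filter follows the standard box-filter formulation, the regularization `eps`, the function names, and the choice of the largest-radius base layer as the fusion base are assumptions, and the coefficients `alpha` and `beta` are plain arrays here rather than parameters trained by backpropagation.

```python
import numpy as np

def box_mean(img, r):
    """Mean over a (2r+1)x(2r+1) window, using edge padding and an integral image."""
    k = 2 * r + 1
    p = np.pad(img, r, mode="edge")
    c = np.pad(p, ((1, 0), (1, 0))).cumsum(0).cumsum(1)  # leading zero row/column
    s = c[k:, k:] - c[:-k, k:] - c[k:, :-k] + c[:-k, :-k]
    return s / (k * k)

def guided_filter(I, S, r, eps=1e-3):
    """Standard guided filter: smooth I while following the structure of S."""
    mS, mI = box_mean(S, r), box_mean(I, r)
    cov = box_mean(S * I, r) - mS * mI
    var = box_mean(S * S, r) - mS * mS
    a = cov / (var + eps)          # eps is an assumed regularization value
    b = mI - a * mS
    return box_mean(a, r) * S + box_mean(b, r)

def mgf(I, S, alpha, beta, radii=(3, 5, 7)):
    """Multi-scale decomposition, detail scaling, and softmax-weighted fusion."""
    bases = [guided_filter(I, S, r) for r in radii]      # base layers B_k
    details = [I - B for B in bases]                     # detail layers D_k
    enhanced = [a * D for a, D in zip(alpha, details)]   # scaled detail layers
    w = np.exp(beta - np.max(beta))
    w = w / w.sum()                                      # softmax fusion weights
    # Assumption: the coarsest base layer carries the global structure.
    out = bases[-1] + sum(wk * Dk for wk, Dk in zip(w, enhanced))
    return out, w

# Usage: X plays the role of the guidance image, X_prime the generator output.
rng = np.random.default_rng(0)
X = rng.random((32, 32))
X_prime = X + 0.05 * rng.standard_normal((32, 32))
enhanced_img, weights = mgf(X_prime, X, alpha=np.ones(3), beta=np.zeros(3))
```

With all scaling coefficients equal to one and equal fusion weights, the output is the coarse base layer plus the average of the three detail layers. Raising a given coefficient amplifies the corresponding band of detail.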

4.2.2. Optimization of Loss Function

Although the MGF module effectively enhances the details of generated images, the original loss function of CycleGAN remains insufficient for strictly preserving structural consistency between the generated and source images. The standard cycle-consistency loss employs the L1 norm, which constrains image reconstruction by minimizing pixel-wise absolute errors. However, this approach inevitably results in blurred edges and structural distortions, which is a critical drawback for SAR target detection where structural integrity is essential.

To enforce the preservation of critical structural information during style transfer, we incorporate the structural similarity index measure (SSIM) as a key constraint into the cycle-consistency loss, forming a hybrid cycle-consistency loss. SSIM comprehensively evaluates image similarity from three dimensions: luminance, contrast, and structure. For two images $a$ and $b$, it is calculated as follows:

$$\mathrm{SSIM}(a, b) = \frac{(2\mu_a \mu_b + C_1)(2\sigma_{ab} + C_2)}{(\mu_a^2 + \mu_b^2 + C_1)(\sigma_a^2 + \sigma_b^2 + C_2)}$$

where $\mu_a$ and $\mu_b$ represent the mean values of images $a$ and $b$, respectively; $\sigma_a$ and $\sigma_b$ denote their standard deviations; $\sigma_{ab}$ denotes the covariance between images $a$ and $b$; and $C_1$ and $C_2$ are small constants added to prevent division by zero.

Based on SSIM, the structural similarity loss is defined as:

$$\mathcal{L}_{\mathrm{SSIM}}(a, b) = 1 - \mathrm{SSIM}(a, b)$$

where $\mathcal{L}_{\mathrm{SSIM}}$ is employed to enforce structural consistency between the original and reconstructed images, preventing adversarial training from distorting critical features.

Given that the task only requires high-quality unidirectional translation, we introduce an asymmetric improvement to the cycle-consistency loss. All enhancement constraints are applied solely to the forward cycle, while the backward cycle, which serves only as an auxiliary training mechanism, retains the original L1 constraint. Specifically, the structural similarity loss $\mathcal{L}_{\mathrm{SSIM}}$ for the forward cycle reconstruction is defined as:

$$\mathcal{L}_{\mathrm{SSIM}}(G, F) = \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\left[1 - \mathrm{SSIM}\big(x, F(G(x))\big)\right]$$

where $x$ denotes a sample from the dataset in image domain $X$; $p_{\mathrm{data}}(x)$ represents the data distribution of image domain $X$; and $F(G(x))$ represents the reconstructed image.

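Therefore, the improved asymmetric cycle-consistency loss is defined as:

$$\mathcal{L}_{\mathrm{cyc}}(G, F) = \lambda_1 \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\left[\lVert F(G(x)) - x \rVert_1\right] + \lambda_2 \mathcal{L}_{\mathrm{SSIM}}(G, F) + \mathbb{E}_{y \sim p_{\mathrm{data}}(y)}\left[\lVert G(F(y)) - y \rVert_1\right]$$

where $\lambda_1$ and $\lambda_2$ denote balancing weight coefficients; $\lVert \cdot \rVert_1$ denotes the L1 norm function; $\mathcal{L}_{\mathrm{SSIM}}(G, F)$ is the structural similarity loss between $x$ and its forward-cycle reconstruction $F(G(x))$; $p_{\mathrm{data}}(y)$ represents the data distribution of image domain $Y$; $y$ denotes a sample from the dataset in image domain $Y$; and $G(F(y))$ represents the reconstructed image.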
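The asymmetric cycle-consistency idea (L1 plus a structural similarity term on the forward cycle, L1 only on the backward cycle) can be sketched as follows. This is an illustration under stated assumptions, not the paper's training code: SSIM is computed from whole-image statistics rather than local windows, the L1 term is averaged per pixel, the constants assume intensities in [0, 1], and the weights `lam1` and `lam2` and their placement are hypothetical.

```python
import numpy as np

def ssim_global(a, b, c1=1e-4, c2=9e-4):
    """SSIM from whole-image means, variances, and covariance (no sliding window)."""
    mu_a, mu_b = a.mean(), b.mean()
    var_a, var_b = a.var(), b.var()
    cov = ((a - mu_a) * (b - mu_b)).mean()
    num = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    den = (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    return num / den

def asymmetric_cycle_loss(x, x_rec, y, y_rec, lam1=10.0, lam2=1.0):
    """Forward cycle: weighted L1 + (1 - SSIM). Backward cycle: plain L1."""
    forward = lam1 * np.abs(x_rec - x).mean() + lam2 * (1.0 - ssim_global(x, x_rec))
    backward = np.abs(y_rec - y).mean()
    return forward + backward

# Usage with stand-in reconstructions: x_rec plays F(G(x)), y_rec plays G(F(y)).
rng = np.random.default_rng(0)
x = rng.random((64, 64))
y = rng.random((64, 64))
perfect = asymmetric_cycle_loss(x, x.copy(), y, y.copy())
degraded = asymmetric_cycle_loss(x, 0.5 * x + 0.25, y, y.copy())
```

A contrast-compressed reconstruction such as `0.5 * x + 0.25` is penalized by both the L1 term and the SSIM term, because its variance drops even though its structure is perfectly correlated with the original.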

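The adversarial loss for the mapping $X \rightarrow Y$ and its corresponding discriminator $D_Y$ is defined as:

$$\mathcal{L}_{\mathrm{GAN}}(G, D_Y, X, Y) = \mathbb{E}_{y \sim p_{\mathrm{data}}(y)}\left[\log D_Y(y)\right] + \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\left[\log\big(1 - D_Y(G(x))\big)\right]$$

where $D_Y$ represents a binary classifier that distinguishes between generated images and real images from domain $Y$. $G$ aims to minimize this loss function, while $D_Y$ attempts to maximize it.

The adversarial loss for the mapping $Y \rightarrow X$ and its corresponding discriminator $D_X$ is defined as:

$$\mathcal{L}_{\mathrm{GAN}}(F, D_X, Y, X) = \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\left[\log D_X(x)\right] + \mathbb{E}_{y \sim p_{\mathrm{data}}(y)}\left[\log\big(1 - D_X(F(y))\big)\right]$$

where $D_X$ represents a binary classifier that distinguishes between generated images and real images from domain $X$. $F$ aims to minimize this loss function, while $D_X$ attempts to maximize it.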

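In summary, the total loss function of MSDA-CycleGAN is defined as:

$$\mathcal{L}(G, F, D_X, D_Y) = \mathcal{L}_{\mathrm{GAN}}(G, D_Y, X, Y) + \mathcal{L}_{\mathrm{GAN}}(F, D_X, Y, X) + \mathcal{L}_{\mathrm{cyc}}(G, F)$$

The training of MSDA-CycleGAN aims to solve the following min-max optimization problem:

$$G^{*}, F^{*} = \arg\min_{G, F} \max_{D_X, D_Y} \mathcal{L}(G, F, D_X, D_Y)$$

where the generators are trained to minimize the total loss function, while the discriminators are trained to maximize it.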

4.3. Feature Extraction and Target Detection

In the image-to-image translation stage, MSDA-CycleGAN is employed to achieve distribution alignment between training and test data at the image level, thereby transforming the cross-domain target detection task into a within-domain detection problem. It is important to emphasize that this stage is specifically designed for cross-domain testing scenarios. Under within-domain conditions, no data transformation is required, and the model proceeds directly to the feature extraction and target detection stage. The feature extraction and target detection stage primarily focuses on small target detection in complex backgrounds. We construct the anchor-free CWASA-Net, whose overall architecture is illustrated in Figure 5b. First, the backbone network integrates the local feature extraction capability of the first four layers (S1–S4) of YOLOv8’s backbone with the intra-scale global context modeling ability of the cross-window axial self-attention mechanism, forming a hybrid feature extractor. This design enables efficient collaboration and complementarity between local details and global dependencies, compensating for the information scarcity of small targets. Second, the convolution-based stacked cross-scale feature fusion network is designed as the neck to integrate and enhance the multi-scale features extracted by the backbone, strengthening the interaction between shallow localization information and deep semantic information. Finally, the decoupled detection head of YOLOv8 is adopted to perform target classification and bounding box regression. Compared to YOLOv8, which is also anchor-free and excels in both detection accuracy and inference speed, CWASA-Net offers two main advantages: (1) A cross-window axial self-attention mechanism is proposed to process high-level features, enabling refined learning of both local details and global dependencies in an efficient manner to enhance the feature representation of small targets. 
This effectively alleviates the issues of detail loss and insufficient semantic information caused by small target sizes. (2) A convolution-based stacked cross-scale feature fusion network is designed to integrate and enhance multi-scale features extracted by the backbone. The convolution-based stacked fusion module, combined with bidirectional fusion paths, strengthens the interaction between shallow localization information and deep semantic information. The following sections detail the cross-window axial self-attention-based intra-scale feature interaction module and the convolution-based stacked cross-scale feature fusion network.

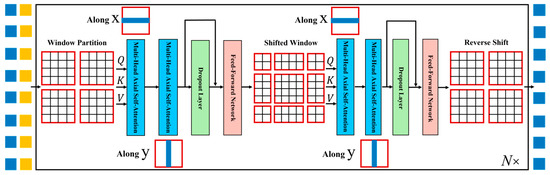

4.3.1. Cross-Window Axial Self-Attention Based Intra-Scale Feature Interaction Module

Due to the absence of color information and complex backgrounds in SAR images, small targets exhibit weak detailed feature representation and are susceptible to interference from background clutter resembling the targets. Directly applying the self-attention mechanism can capture global context to compensate for the lack of local information in small targets. However, the large size of panoramic input images leads to high computational complexity. To collaboratively address the dual challenges of weak detail representation and high computational complexity, we propose the cross-window axial self-attention mechanism and apply it to high-level features rich in semantic information. This mechanism integrates cross-window information interaction with axial self-attention, effectively enhancing the detail features of small targets and modeling their global relationships with the background while significantly reducing computational cost. By leveraging the factorization strategy of axial self-attention, the cross-window axial self-attention mechanism decomposes the two-dimensional global attention into sequential one-dimensional row-wise and column-wise axial operations, thereby reducing the computational complexity from quadratic to linear and significantly improving computational efficiency. Meanwhile, the axial operations retain the capability of adaptively weighting features at different positions, enabling effective focus on critical details to enhance the feature representation of small targets. Furthermore, through an alternating design of standard windows and shifted windows, the mechanism not only captures fine-grained features within local windows but also establishes global contextual dependencies across windows. Based on the above design, we construct the cross-window axial self-attention-based intra-scale feature interaction module (CWASA-IFIM). 
As illustrated in Figure 7, the CWASA-IFIM consists of N cross-window axial self-attention submodules stacked together, with each submodule featuring a cascaded combination of standard window axial self-attention and shifted window axial self-attention. The working principle of CWASA-IFIM is described in detail below.

Figure 7.

Structure of the CWASA-IFIM.
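The efficiency argument can be made concrete with a short count of pairwise attention scores. The derivation below is an illustrative sketch: $N$ denotes the number of patch embeddings on the feature map and $M \times M$ the window size in patches, and the numerical values are hypothetical rather than the paper's settings.

$$
\begin{aligned}
\text{global 2D attention:} \quad & N^2 \\
\text{full attention inside windows:} \quad & \frac{N}{M^2} \cdot (M^2)^2 = N M^2 \\
\text{row-wise plus column-wise attention inside windows:} \quad & \frac{N}{M^2} \cdot \left(M \cdot M^2 + M \cdot M^2\right) = 2 M N
\end{aligned}
$$

For a fixed window size, the axial cost grows linearly with $N$. As an example, with $N = 64 \times 64 = 4096$ patch embeddings and $M = 8$, global attention evaluates $4096^2 = 16{,}777{,}216$ scores, full window attention evaluates $4096 \times 64 = 262{,}144$, and windowed axial attention evaluates $2 \times 8 \times 4096 = 65{,}536$.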

Standard Window Axial Self-Attention Mechanism

The self-attention learning mechanism is applied to the S4 layer features of the backbone network, which possess richer semantic information. This design is primarily motivated by two considerations: First, the S4 layer features play a critical role in detecting small targets, as they contain sufficient contextual semantic information while preserving target location and detailed features without excessive loss, making them an ideal level for intra-scale context modeling. Second, since lower-level features contain limited semantic information, conducting intra-scale interactions at this level is both redundant and inefficient, and may even introduce noise that interferes with the semantic representation of high-level features. The enhanced representation of small targets lays a solid foundation for their subsequent accurate localization and classification. Specifically, the S4 layer feature map with dimensions H × W × C is first partitioned into H/P × W/P non-overlapping patches of size P × P × C. Each patch is then flattened and transformed into an embedding vector via a linear projection layer, while positional encodings are incorporated into each patch embedding. These patch embeddings are subsequently grouped into multiple non-overlapping standard windows, each containing M × M patches. Within each window, all patch embeddings are fed into three separate shared linear projection layers to generate the corresponding query (Q), key (K), and value (V) matrices. Thereafter, a row-column axial factorization strategy is employed within each standard window. First, axial multi-head self-attention is computed along the row direction (X-axis), where the attention weights are only aggregated among all patch embeddings within the same row. The formulation is as follows:

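$$\mathrm{head}_i^{r} = \mathrm{softmax}\left(\frac{Q_i^{r} (K_i^{r})^{T}}{\sqrt{d_k}}\right) V_i^{r}, \qquad Q_i^{r} = Z W_i^{Q,r}, \quad K_i^{r} = Z W_i^{K,r}, \quad V_i^{r} = Z W_i^{V,r}$$

$$\mathrm{MSA}^{r}(Z) = \mathrm{Concat}\left(\mathrm{head}_1^{r}, \ldots, \mathrm{head}_h^{r}\right) W^{O,r}$$

where $Z$ denotes the patch embeddings of one row within a standard window; $Q_i^{r}$, $K_i^{r}$, and $V_i^{r}$ represent the query, key, and value matrices for row-wise attention within a standard window; $\mathrm{head}_i^{r}$ denotes the $i$th row attention head; $W_i^{Q,r}$, $W_i^{K,r}$, and $W_i^{V,r}$ denote the learnable parameter matrices for generating $Q_i^{r}$, $K_i^{r}$, and $V_i^{r}$, respectively, and $W^{O,r}$ denotes the learnable output projection matrix; $\mathrm{Concat}(\cdot)$ represents the concatenation operation; $d_k$ represents the dimension of the query and key matrices; and $T$ denotes the transpose operation. Subsequently, a symmetric computation is performed along the column direction (Y-axis) to build the complete 2D contextual representation. Specifically, column-wise axial multi-head self-attention is applied, which aggregates attention weights exclusively from patch embeddings within the same column. The formulation is as follows: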

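$$\mathrm{head}_i^{c} = \mathrm{softmax}\left(\frac{Q_i^{c} (K_i^{c})^{T}}{\sqrt{d_k}}\right) V_i^{c}, \qquad \mathrm{MSA}^{c}(Z) = \mathrm{Concat}\left(\mathrm{head}_1^{c}, \ldots, \mathrm{head}_h^{c}\right) W^{O,c}$$

where $Q_i^{c}$, $K_i^{c}$, and $V_i^{c}$ represent the query, key, and value matrices for column-wise attention within a standard window, generated from the patch embeddings $Z$ of one column by the learnable parameter matrices $W_i^{Q,c}$, $W_i^{K,c}$, and $W_i^{V,c}$, respectively; $\mathrm{head}_i^{c}$ denotes the $i$th column attention head; $W^{O,c}$ denotes the learnable output projection matrix; and $d_k$ represents the dimension of the query and key matrices. The SAR image features enhanced by axial self-attention are fed into a dropout layer for regularization to mitigate overfitting risks. This process is formulated as follows: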

The features are then passed into a feed-forward network for non-linear transformation and feature enhancement. This process is formulated as follows:

where GELU denotes the activation function.

Shifted Window Axial Self-Attention Mechanism

While the standard window axial self-attention mechanism achieves efficient computation, its receptive field remains confined to individual windows, limiting its ability to model global context. To address this limitation, we introduce a shifted window strategy to establish cross-window connections. By cyclically shifting the feature maps and re-partitioning the windows, the computational windows incorporate patch embeddings from different regions of the original feature maps. Within these newly formed windows after shifting, axial multi-head self-attention is first computed along the row direction (X-axis), which exclusively aggregates attention weights among all patch embeddings within the same row. The formulation is as follows:

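$$\widetilde{\mathrm{head}}_i^{r} = \mathrm{softmax}\left(\frac{\tilde{Q}_i^{r} (\tilde{K}_i^{r})^{T}}{\sqrt{d_k}}\right) \tilde{V}_i^{r}, \qquad \widetilde{\mathrm{MSA}}^{r}(\tilde{Z}) = \mathrm{Concat}\left(\widetilde{\mathrm{head}}_1^{r}, \ldots, \widetilde{\mathrm{head}}_h^{r}\right) \tilde{W}^{O,r}$$

where $\tilde{Q}_i^{r}$, $\tilde{K}_i^{r}$, and $\tilde{V}_i^{r}$ represent the query, key, and value matrices for row-wise attention within a shifted window, generated from the patch embeddings $\tilde{Z}$ of one row by the learnable parameter matrices $\tilde{W}_i^{Q,r}$, $\tilde{W}_i^{K,r}$, and $\tilde{W}_i^{V,r}$, respectively; $\widetilde{\mathrm{head}}_i^{r}$ denotes the $i$th row attention head; $\tilde{W}^{O,r}$ denotes the learnable output projection matrix; and $d_k$ represents the dimension of the query and key matrices. Subsequently, a symmetric computation is performed along the column direction (Y-axis) to build the complete 2D contextual representation. Specifically, column-wise axial multi-head self-attention is applied, which aggregates attention weights exclusively from patch embeddings within the same column. The formulation is as follows: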

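$$\widetilde{\mathrm{head}}_i^{c} = \mathrm{softmax}\left(\frac{\tilde{Q}_i^{c} (\tilde{K}_i^{c})^{T}}{\sqrt{d_k}}\right) \tilde{V}_i^{c}, \qquad \widetilde{\mathrm{MSA}}^{c}(\tilde{Z}) = \mathrm{Concat}\left(\widetilde{\mathrm{head}}_1^{c}, \ldots, \widetilde{\mathrm{head}}_h^{c}\right) \tilde{W}^{O,c}$$

where $\tilde{Q}_i^{c}$, $\tilde{K}_i^{c}$, and $\tilde{V}_i^{c}$ represent the query, key, and value matrices for column-wise attention within a shifted window, generated from the patch embeddings $\tilde{Z}$ of one column by the learnable parameter matrices $\tilde{W}_i^{Q,c}$, $\tilde{W}_i^{K,c}$, and $\tilde{W}_i^{V,c}$, respectively; $\widetilde{\mathrm{head}}_i^{c}$ denotes the $i$th column attention head; $\tilde{W}^{O,c}$ denotes the learnable output projection matrix; and $d_k$ represents the dimension of the query and key matrices. The SAR image features enhanced by axial self-attention are fed into a dropout layer for regularization to mitigate overfitting risks. This process is formulated as follows: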

The features are then passed into a feed-forward network for non-linear transformation and feature enhancement. This process is formulated as follows:

Finally, after completing the self-attention computation within the shifted windows, a reverse cyclic shifting operation is applied to the output feature map to restore its original spatial layout. This step ensures structural consistency between the output and input feature maps, thereby facilitating subsequent processing.
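The window partitioning, row-then-column axial attention, cyclic shift, and reverse shift described above can be sketched in NumPy as follows. The sketch makes several simplifying assumptions that are not taken from the paper: a single attention head, no positional encoding, no dropout, no feed-forward network, no residual connections, and no attention masking for shifted windows. The function names and the shift size are hypothetical.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def partition(x, M):
    """(H, W, C) -> (num_windows, M, M, C) non-overlapping windows."""
    H, W, C = x.shape
    x = x.reshape(H // M, M, W // M, M, C).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, M, M, C)

def merge(win, H, W):
    """Inverse of partition."""
    M, C = win.shape[1], win.shape[-1]
    x = win.reshape(H // M, W // M, M, M, C).transpose(0, 2, 1, 3, 4)
    return x.reshape(H, W, C)

def axial_attention(win, Wq, Wk, Wv, along_rows):
    """Attend among tokens sharing a row (along_rows=True) or a column."""
    if not along_rows:
        win = win.swapaxes(1, 2)              # treat columns as rows
    q, k, v = win @ Wq, win @ Wk, win @ Wv
    scores = q @ k.swapaxes(-1, -2) / np.sqrt(q.shape[-1])
    out = softmax(scores) @ v
    return out if along_rows else out.swapaxes(1, 2)

def cwasa_block(x, M, params, shift=0):
    """Row-wise then column-wise attention inside (optionally shifted) windows."""
    H, W, _ = x.shape
    if shift:
        x = np.roll(x, (-shift, -shift), axis=(0, 1))   # cyclic shift
    win = partition(x, M)
    win = axial_attention(win, *params["row"], along_rows=True)
    win = axial_attention(win, *params["col"], along_rows=False)
    x = merge(win, H, W)
    if shift:
        x = np.roll(x, (shift, shift), axis=(0, 1))     # restore the layout
    return x

# Usage: one standard-window pass followed by one shifted-window pass.
rng = np.random.default_rng(0)
C, M = 4, 4
params = {name: (0.1 * rng.standard_normal((C, C)),
                 0.1 * rng.standard_normal((C, C)),
                 np.eye(C)) for name in ("row", "col")}
feat = rng.standard_normal((8, 8, C))
out = cwasa_block(cwasa_block(feat, M, params), M, params, shift=M // 2)
```

In this sketch, a token in the top-left window cannot influence the bottom-right corner during the standard pass, but it can once the windows are shifted. This is the cross-window connection the alternating design is meant to provide.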

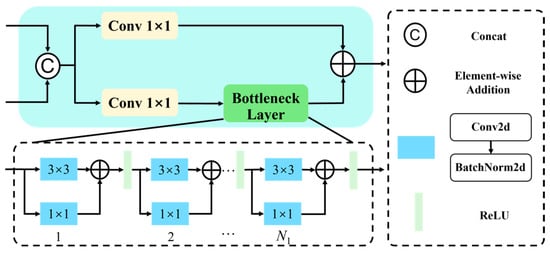

4.3.2. Convolution-Based Stacked Cross-Scale Feature Fusion Network

To effectively integrate and enhance the multi-scale features extracted by the backbone network, while addressing the challenge of balancing semantic information and detailed features in small target detection, we design a convolution-based stacked cross-scale feature fusion network to serve as the neck network. Building upon the PANet, the proposed network introduces optimizations in two key aspects: the convolutional operation strategy and the feature aggregation module. On one hand, a differentiated convolutional kernel strategy is applied along the feature propagation paths. The top-down path uses 1 × 1 convolutions to efficiently transmit high-level semantic information, while the bottom-up path employs 3 × 3 convolutions to enhance local detail features. On the other hand, a novel convolution-based stacked fusion module (CSFM) is proposed. Through a dual-branch architecture and structural reparameterization mechanism, this module achieves richer feature representation and higher inference efficiency, thereby significantly improving the representational capacity of multi-scale features. The following introduces the differentiated convolutional kernel configuration strategy and the CSFM, respectively.

Differentiated Convolutional Kernel Configuration Strategy

As shown in Figure 5b, significant differences exist in semantic expression and computational requirements between the features propagated along the top-down and bottom-up paths during cross-scale feature fusion. In the top-down path, high-level features possess strong semantic information but at lower spatial resolution. 1 × 1 convolutions are employed for channel adjustment and feature compression, enabling efficient information transmission without disrupting spatial structures while reducing computational overhead. In the bottom-up path, to fully leverage the detailed and positional information in shallow features, 3 × 3 convolutions are employed for downsampling and feature enhancement, thereby improving the network’s perception of edges and textures of small targets. This strategy achieves a rational allocation of computational resources while ensuring effective feature fusion.

Convolution-Based Stacked Fusion Module

The CSFM serves as the core component of the convolution-based stacked cross-scale feature fusion network, with its detailed structure illustrated in Figure 8. CSFM adopts a dual-branch design: one branch consists of a 1 × 1 convolution followed by a bottleneck layer composed of multiple sequentially stacked RepVGG blocks. This configuration leverages structural re-parameterization to achieve strong deep feature extraction capabilities during training; the other branch employs a 1 × 1 convolution to adjust channel dimensionality, preserving original feature information while providing a shortcut for gradient flow. The outputs of both branches are finally fused through element-wise addition. This design enhances gradient flow and feature diversity during training through its multi-branch architecture, while being converted into a single-branch structure via structural reparameterization during inference, thereby balancing training effectiveness and inference efficiency. The CSFM significantly improves multi-scale feature fusion performance, particularly for characterizing small targets in complex backgrounds.

Figure 8.

Structure of the CSFM.
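The structural reparameterization used by RepVGG-style blocks can be illustrated by folding a 3 × 3 branch, a 1 × 1 branch, and an identity shortcut into a single 3 × 3 kernel. The sketch below is a simplified illustration and not the CSFM itself: batch normalization folding, biases, and activations are omitted, equal input and output channel counts are assumed, and the helper names are hypothetical.

```python
import numpy as np

def conv2d_same(x, k):
    """Naive stride-1 'same' cross-correlation.
    x: (C_in, H, W); k: (C_out, C_in, kh, kw) with odd kh and kw."""
    c_out, _, kh, kw = k.shape
    ph, pw = kh // 2, kw // 2
    xp = np.pad(x, ((0, 0), (ph, ph), (pw, pw)))
    H, W = x.shape[1:]
    y = np.zeros((c_out, H, W))
    for i in range(kh):
        for j in range(kw):
            # Contract input channels for kernel tap (i, j).
            y += np.einsum("oc,chw->ohw", k[:, :, i, j], xp[:, i:i + H, j:j + W])
    return y

def merge_branches(k3, k1):
    """Fold a 1x1 kernel and an identity shortcut into one 3x3 kernel."""
    c = k3.shape[0]
    merged = k3.copy()
    merged[:, :, 1, 1] += k1[:, :, 0, 0]               # 1x1 kernel sits at the centre tap
    merged[np.arange(c), np.arange(c), 1, 1] += 1.0    # identity is a centred per-channel delta
    return merged

# Usage: the training-time multi-branch output equals the merged single conv.
rng = np.random.default_rng(0)
x = rng.standard_normal((3, 6, 6))
k3 = rng.standard_normal((3, 3, 3, 3))
k1 = rng.standard_normal((3, 3, 1, 1))
multi_branch = conv2d_same(x, k3) + conv2d_same(x, k1) + x
single_branch = conv2d_same(x, merge_branches(k3, k1))
```

Because convolution is linear, the two outputs agree up to floating-point error. This is why the multi-branch structure can be collapsed for inference without changing the result.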

Taking the feature maps from the S4 and S5 layers extracted by the backbone network as inputs, the processing procedure of the CSFM is formulated as follows:

where upsample denotes the upsampling operation, and ReLU represents the activation function.

5. Experiments

This section begins by introducing the datasets, implementation details, and evaluation metrics. Then, comprehensive experiments and analyses are conducted for the proposed DUA-TDF under various cross-domain and within-domain testing conditions configured on the FAST-Vehicle dataset. Finally, the superiority and generalization capability of our method are verified through comparative experiments with other state-of-the-art (SOTA) target detection algorithms, ablation experiments, and generalization experiments on a public dataset.

5.1. Introduction of Datasets

The FAST-Vehicle dataset, which was briefly introduced in Section 3, has its detailed composition summarized in Table 5. To mitigate potential overfitting caused by fixed target positions, we dynamically adjusted the locations of targets within the panoramic images. The Mix-MSTAR dataset, proposed by Liu et al. [33], is a synthetic dataset constructed by combining MSTAR target slices with backgrounds. The dataset encompasses diverse scenarios, including forests, grasslands, lakes, and urban areas. The Mix-MSTAR dataset consists of 100 images, each with a resolution of 1478 × 1784 pixels, and contains 5392 vehicle targets distributed across 20 categories. These include multiple variants of the T72 series as well as other non-T72 MSTAR target categories. In our experiments, the Mix-MSTAR images are randomly cropped into 640 × 640 pixel patches, with each patch guaranteed to contain at least two targets. These image patches are subsequently resized to 416 × 416 pixels when used as inputs to all detectors.

Table 5.

Detailed Composition of the FAST-Vehicle Dataset.
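The Mix-MSTAR cropping step described in Section 5.1 (random 640 × 640 patches, each containing at least two targets, later resized to 416 × 416) can be sketched with rejection sampling. The sketch rests on assumptions not stated in the paper: a target counts only if its box lies fully inside the crop, boxes are axis-aligned `(x1, y1, x2, y2)` tuples, and the function names, retry limit, and example boxes are hypothetical.

```python
import random

def crop_with_targets(img_w, img_h, boxes, size=640, min_targets=2,
                      max_tries=1000, rng=None):
    """Sample a size x size crop containing at least min_targets whole boxes.
    Returns ((x0, y0), boxes_in_crop_coordinates) or None if no crop is found."""
    rng = rng or random.Random()
    for _ in range(max_tries):
        x0 = rng.randint(0, img_w - size)
        y0 = rng.randint(0, img_h - size)
        inside = [(x1 - x0, y1 - y0, x2 - x0, y2 - y0)
                  for (x1, y1, x2, y2) in boxes
                  if x1 >= x0 and y1 >= y0 and x2 <= x0 + size and y2 <= y0 + size]
        if len(inside) >= min_targets:
            return (x0, y0), inside
    return None

def rescale_boxes(boxes, src=640, dst=416):
    """Scale box coordinates to match a crop resized from src to dst pixels."""
    s = dst / src
    return [tuple(v * s for v in box) for box in boxes]

# Usage with made-up target boxes on a 1478 x 1784 image.
boxes = [(700, 800, 730, 830), (760, 860, 790, 890), (100, 100, 130, 130)]
result = crop_with_targets(1478, 1784, boxes, rng=random.Random(0))
if result is not None:
    origin, crop_boxes = result
    resized_boxes = rescale_boxes(crop_boxes)
```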

5.2. Implementation Details

All experiments are implemented in Python 3.11 with PyTorch 2.0.0 and CUDA 11.8, on a machine with an AMD EPYC 9554 CPU @ 2.45 GHz and an NVIDIA GeForce RTX 4090D GPU. The Adam optimizer is used to train the network for 200 epochs with a batch size of 8. The initial learning rate is set to 0.001, and the momentum and weight decay are set to 0.937 and 0.0005, respectively.

To comprehensively evaluate the performance of DUA-TDF, this article constructs various cross-domain and within-domain testing conditions based on the three subsets of the FAST-Vehicle dataset. Detailed experimental configurations are provided in Table 6.

Table 6.

Detailed Experimental Configurations.

EXP1 employs monostatic datasets from different months as training and test sets, respectively, to preliminarily validate the detection performance of the proposed algorithm under cross-domain testing conditions. Although both the training and test data originate from the same monostatic system, domain distribution shifts arise due to the enhanced sensor amplifier during the July data acquisition and the four-month temporal gap between them. This configuration constitutes a moderately challenging cross-domain detection task.

EXP2 utilizes the complete bistatic dataset and monostatic datasets from different months as the training and test sets, respectively, to further evaluate the detection performance of the proposed algorithm in more complex cross-domain scenarios. Since the training and test data are acquired from different imaging systems and separated by a temporal gap of approximately 1.5 years, the domain distribution shifts become more pronounced, resulting in a highly challenging cross-domain detection task.

EXP3 employs monostatic data with different depression angles from the same month as the training and test sets, respectively, to evaluate the detection performance of the proposed algorithm under within-domain testing conditions of the monostatic system.

EXP4 utilizes bistatic data from different flights as the training and test sets, respectively, aiming to validate the detection performance of the proposed algorithm under within-domain testing conditions of the bistatic system.

5.3. Evaluation Metrics

In our experiments, we adopted four evaluation metrics widely used in target detection, as introduced in [24]: Precision, Recall, F1-score, and mean Average Precision (mAP). When mAP is computed at an Intersection over Union (IoU) threshold of 0.5, we denote it as mAP50.
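As a reminder of how these metrics relate, the following sketch computes IoU, precision, recall, and F1-score for a single image and a single class at an IoU threshold of 0.5. It is a simplified illustration rather than the evaluation code used in the paper: matching is greedy by descending confidence, class labels are ignored, and mAP (which additionally averages precision over recall levels and classes) is not computed.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def precision_recall_f1(preds, gts, thr=0.5):
    """preds: list of (score, box); gts: list of boxes.
    Each ground-truth box can be matched by at most one prediction."""
    matched, tp = set(), 0
    for _, box in sorted(preds, key=lambda p: -p[0]):
        best, best_j = 0.0, None
        for j, g in enumerate(gts):
            if j in matched:
                continue
            v = iou(box, g)
            if v > best:
                best, best_j = v, j
        if best_j is not None and best >= thr:
            matched.add(best_j)
            tp += 1
    fp, fn = len(preds) - tp, len(gts) - tp
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if gts else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall > 0 else 0.0
    return precision, recall, f1

# Usage: one correct detection, one false alarm, one missed target.
gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (100, 100, 110, 110))]
p, r, f1 = precision_recall_f1(preds, gts)
```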

5.4. Comprehensive Experiments Under Various Testing Conditions

Following the experimental configurations specified in Table 6, we perform a series of experiments under various cross-domain and within-domain testing conditions on the FAST-Vehicle dataset to evaluate the performance of the proposed DUA-TDF. The detection results of DUA-TDF under four different experimental configurations are presented in Table 7 and Figure 9. A detailed quantitative and qualitative analysis of each experiment is provided below.

Table 7.

Detection Results of EXP 1–EXP 4.

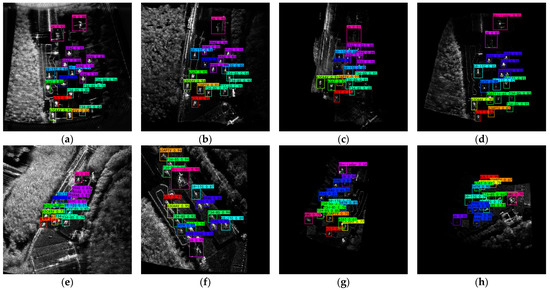

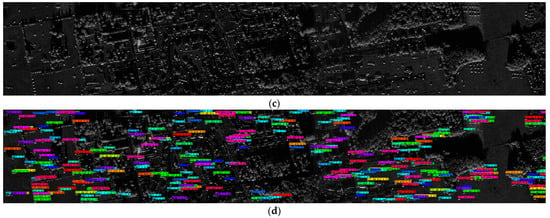

Figure 9.

Partial visual detection results of EXP 1–EXP 4: (a) EXP 1-1; (b) EXP 1-2; (c) EXP 2-1; (d) EXP 2-2; (e) EXP 3-1; (f) EXP 3-2; (g) EXP 4-1; (h) EXP 4-2.

EXP 1-1 uses the monostatic March dataset as the training set and the monostatic July dataset as the test set, while EXP 1-2 adopts the reverse configuration. Due to the relatively short time span between the two datasets and the fact that systemic differences primarily stem from sensor power amplification adjustments, the pixel-level style discrepancy between them is relatively limited. After performing image-level domain alignment via MSDA-CycleGAN, Figure 9a,b present the style transfer results from July to March and March to July, respectively. This process effectively mitigates the significant performance degradation caused by distribution mismatch between training and test data for target detectors developed on homogeneous datasets. Quantitative results indicate that DUA-TDF achieves mAP50 and F1-score of 86.5% and 84.7% in EXP 1-1, while its overall performance in EXP 1-2 surpasses that of EXP 1-1, with precision, recall, F1-score, and mAP50 all reaching approximately 90%. This performance discrepancy primarily stems from the advantages of the July dataset in both sample quantity and quality, which provides more sufficient supervisory information for model training. A more intuitive qualitative evaluation based on partial visual detection results in Figure 9 reveals that Figure 9a contains one false detection where a radar target is misidentified as a J6 target. This error occurs because the March dataset does not include the radar category, leading to the model’s limited discriminative capability for unknown classes. In contrast, Figure 9b demonstrates correct detection of all targets with no missed or false detections. In summary, DUA-TDF effectively handles moderate domain shifts within the monostatic system and achieves cross-domain target detection by building upon image alignment.

EXP 2-1 and EXP 2-2 both utilize all bistatic data as the training set, with the monostatic March data and monostatic July data serving as the test sets, respectively. Due to the distinct imaging characteristics between bistatic and monostatic systems combined with the long time span of data collection, significant pixel-level style discrepancies exist between training and test data, resulting in more substantial domain shifts than those observed in EXP 1. Although MSDA-CycleGAN successfully transfers the style of the monostatic test data to resemble the bistatic training data, as shown in Figure 9c,d, perfect pixel-level alignment remains unattained due to inherent imaging disparities between the heterogeneous systems. Consequently, all detection metrics of DUA-TDF in EXP 2-1 and EXP 2-2 show a decline compared to those in EXP 1. Nevertheless, under these more challenging cross-domain conditions, DUA-TDF maintains mAP50 above 80% in both EXP 2-1 and EXP 2-2. Apart from one missed detection in Figure 9c, no false or missed detections are observed in Figure 9d. The slightly superior performance in EXP 2-2 can still be attributed to the advantages in sample quantity and quality of the July dataset. Experimental results demonstrate that DUA-TDF retains effective detection performance even in complex scenarios with system heterogeneity and significant domain divergence.

EXP 3-1 and EXP 3-2 represent within-domain testing experiments conducted within the monostatic March and July datasets, respectively. Both configurations selected data at 45° depression angles as test sets, while using data from other depression angles within the same month for training. Since the training and test data follow identical distributions, the test data requires no domain alignment through MSDA-CycleGAN processing. Quantitative results demonstrate that DUA-TDF achieves its highest mAP50 in EXP 3-1 and EXP 3-2 among all experimental configurations, reaching 90.7% and 90.9% respectively, with all other metrics remaining closely aligned and exceeding 88%. Visual detection results in Figure 9e,f clearly show that all targets are correctly detected without any false or missed detections. In summary, under within-domain testing conditions without domain shift in the monostatic system, DUA-TDF achieves precise detection of small vehicle targets.

EXP 4-1 and EXP 4-2 are partitioned from the nine flights of the bistatic dataset. By constructing complementary training sets and a unified test set, these experiments validate the model’s adaptability to different flights within the same system. Since the data from all flights were continuously collected by the same bistatic system within a short timeframe, they can be considered as following an identical data distribution. Consequently, the test data requires no domain alignment through MSDA-CycleGAN processing. Quantitative results indicate that DUA-TDF delivers outstanding overall performance in EXP 4-1 and EXP 4-2, achieving mAP50 of 90.2% and 90.1%, respectively. Although slightly inferior to the optimal within-domain results in EXP 3, these values remain consistently above 90%. Notably, the recall rates in EXP 4-1 and EXP 4-2 remain relatively low at approximately 82%. This limitation primarily stems from the inherent bistatic scattering characteristics of the MiniSAR system, where the transmitter–receiver separation introduces bistatic angle variations that can cause abrupt target brightness changes or even partial disappearance, thereby increasing the risk of missed detections. The visual detection results in Figure 9g,h corroborate this analysis. Specifically, Figure 9g contains two missed detections while Figure 9h exhibits one, with all remaining targets being correctly identified. These experimental results demonstrate that DUA-TDF maintains excellent detection performance and robustness in within-domain testing on the bistatic system.

The four experimental groups collectively demonstrate that the proposed method achieves excellent and robust detection performance in both monostatic and bistatic within-domain testing, with mAP50 consistently exceeding 90%, thereby validating the effectiveness of DUA-TDF under ideal data distribution conditions. Furthermore, in cross-domain testing scenarios involving various domain shifts such as sensor parameter variations, temporal spans, and system heterogeneity, DUA-TDF maintains reliable generalization capability and effectively addresses the challenge of detecting small vehicle targets in complex backgrounds.

5.5. Comparative Experiments

We select EXP 1-1, a representative cross-domain testing condition, to conduct a quantitative comparison of detection performance between DUA-TDF and other detectors. The results are summarized in Table 8. The compared methods include both classical detectors and SOTA algorithms. As shown in Table 8, DUA-TDF achieves the highest mAP50 and F1-score, reaching 86.5% and 84.7% respectively, demonstrating significant superiority over other comparative methods. The proposed method introduces additional parameters and computational overhead during training, which stems from integrating the MSDA-CycleGAN style generation network in the first stage and adopting the re-parameterized design in the neck of the detection network. However, the re-parameterized structure can be equivalently transformed into an efficient single-branch architecture during inference. Consequently, DUA-TDF attains an inference speed of 67.8 FPS, with a computational burden substantially lower than that of the two-stage detector Faster R-CNN. While SSD exhibits the fewest parameters and relatively fast inference speed, it yields the lowest mAP50 and F1-score, indicating its limitations in cross-domain SAR small target detection tasks. The performance of other classical detectors remains relatively comparable, with none achieving breakthroughs in detection accuracy. Among them, YOLOv7 demonstrates a relatively balanced accuracy-efficiency trade-off through its anchor-based optimization design, while YOLOv8 adopts an anchor-free paradigm to streamline the training pipeline, attaining its performance advantages at the cost of an acceptable increase in model complexity. Compared to SOTA methods, DUA-TDF achieves improvements of over 7.6% in mAP50 and 9.9% in F1-score. This advantage primarily stems from the strong dependency of LTY-Network and ARF-YOLO on the identical distribution between training and test data, leading to severe performance degradation in cross-domain scenarios.
Experimental results demonstrate that the proposed method achieves effective image-level domain alignment through MSDA-CycleGAN, significantly mitigating performance degradation caused by distribution shifts. At the cost of an acceptable increase in model complexity, it attains the best detection performance among the compared methods for cross-domain small vehicle target detection in SAR images.

Table 8.

Quantitative Comparison of Different Methods in EXP 1-1.

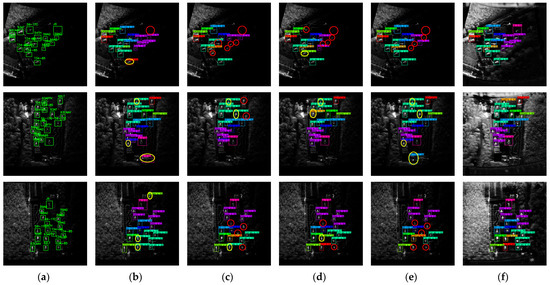

To visually compare the detection performance across different methods, we present visual detection results of DUA-TDF and several high-performing methods mentioned above in EXP 1-1. Partial detection results are presented in Figure 10. Figure 10a shows the original image with ground truth annotations. In EXP 1-1, where monostatic March data serves as the training set and monostatic July data as the test set, substantial pixel-level style differences between the training and test data result in numerous false and missed detections across all comparative methods. Specifically, Faster R-CNN exhibits seven false detections and one missed detection, demonstrating the most prominent false detection issues. CenterNet suffers from twelve missed detections and three false detections, representing the most severe missed detection problem. YOLOv7 yields four false detections and seven missed detections, while YOLOv8 produces four false detections and nine missed detections. The overall performance of these comparative methods is significantly affected by the domain shift. In contrast, the visual detection results in Figure 10f demonstrate that our method successfully transfers the style from the monostatic March training data to the monostatic July test data, effectively overcoming the impact of cross-domain distribution mismatch. All small vehicle targets are correctly detected across the three images without any false or missed detections. These visual detection results fully validate that the MSDA-CycleGAN style generation network in DUA-TDF effectively achieves image-level domain alignment, significantly mitigating model performance degradation caused by the distribution mismatch between training and test data. Consequently, DUA-TDF achieves optimal performance in cross-domain detection tasks for small vehicle targets in SAR images.

Figure 10.

Partial visual detection results of different methods in EXP 1-1: (a) Ground truth; (b) Faster R-CNN; (c) CenterNet; (d) YOLOv7; (e) YOLOv8; (f) our method. The yellow ellipses indicate false detections, while the red circles denote missed detections.
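The counts of false and missed detections discussed above can be related to precision, recall, and F1-score with a short sketch. It assumes axis-aligned boxes in `(x1, y1, x2, y2)` form, greedy one-to-one matching, and an IoU threshold of 0.5 (in line with mAP50); the function names and the toy boxes are hypothetical and are not taken from the paper's evaluation code.

```python
def iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def count_errors(gt_boxes, pred_boxes, thr=0.5):
    """Greedy one-to-one matching (predictions assumed sorted by confidence).

    Returns (true positives, false detections, missed detections).
    """
    unmatched = list(range(len(gt_boxes)))
    tp = 0
    for p in pred_boxes:
        best, best_iou = None, thr
        for g in unmatched:
            v = iou(p, gt_boxes[g])
            if v >= best_iou:
                best, best_iou = g, v
        if best is not None:
            unmatched.remove(best)  # each ground-truth box is matched once
            tp += 1
    fp = len(pred_boxes) - tp       # predictions without a matching target
    fn = len(unmatched)             # targets without a matching prediction
    return tp, fp, fn


def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and their harmonic mean (F1-score)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Toy example (hypothetical boxes): two hits, one false and one missed detection.
gt = [(0, 0, 10, 10), (20, 20, 30, 30), (40, 40, 50, 50)]
preds = [(1, 1, 10, 10), (21, 20, 31, 30), (60, 60, 70, 70)]
tp, fp, fn = count_errors(gt, preds)
precision, recall, f1 = precision_recall_f1(tp, fp, fn)
print(tp, fp, fn, round(f1, 3))
```

In this reading, each false detection lowers precision and each missed detection lowers recall, so both error types reduce the F1-score.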

5.6. Ablation Experiments

To validate the effectiveness of each core component in DUA-TDF, we similarly selected EXP 1-1 to conduct ablation experiments on MSDA-CycleGAN, CWASA-IFIM, and CSFM sequentially. The hyperparameters remain consistent across all ablation experiments to ensure fairness, and the results are summarized in Table 9.

Table 9.

Ablation Experiments of DUA-TDF in EXP 1-1.

Effect of MSDA-CycleGAN: As shown in Table 9, the baseline network achieves mAP50 and F1-score of 75.6% and 74.8%, with 43.632 M parameters and 165.412 GFLOPs. The core of this research is to address the domain shift problem in SAR images caused by system disparities and time-varying factors. This domain discrepancy essentially manifests as pixel-level style variations. MSDA-CycleGAN aligns the training and test data at the image level by achieving unpaired image style transfer. After processing with MSDA-CycleGAN, the test data undergoes successful image style transfer, achieving mAP50 and F1-score of 83.4% and 83.5%, respectively. This represents a significant improvement of 7.8% in mAP50 and 8.7% in F1-score compared to the baseline. When MSDA-CycleGAN is combined with either CWASA-IFIM or CSFM, mAP50 is further increased to approximately 85.5%. Although MSDA-CycleGAN introduces additional costs of 28.283 M parameters and 56.364 GFLOPs, it achieves a substantial improvement in detection performance.
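The reported gains are absolute differences in percentage points, which the following sketch makes explicit using only the values quoted above for EXP 1-1. The helper names are hypothetical, and the relative gain is shown purely for contrast; it is not a figure reported in Table 9.

```python
# Values quoted in the text for EXP 1-1 (Table 9), in percent.
baseline = {"mAP50": 75.6, "F1": 74.8}
with_msda = {"mAP50": 83.4, "F1": 83.5}


def gain_in_points(before, after):
    """Absolute gain in percentage points for each metric."""
    return {k: round(after[k] - before[k], 1) for k in before}


def relative_gain(before, after):
    """Gain expressed as a percentage of the baseline value."""
    return {k: round(100.0 * (after[k] - before[k]) / before[k], 1)
            for k in before}


msda_points = gain_in_points(baseline, with_msda)
msda_relative = relative_gain(baseline, with_msda)
print(msda_points)    # absolute gains, matching the 7.8 and 8.7 in the text
print(msda_relative)  # relative gains, larger than the absolute ones
```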

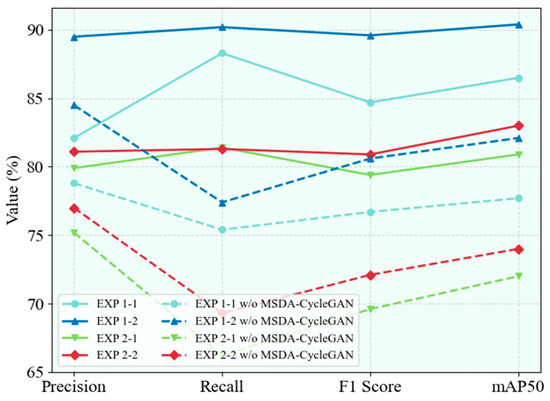

We further investigate the impact of MSDA-CycleGAN on detection performance under all cross-domain testing conditions listed in Table 6 (EXP 1 and EXP 2), with experimental results presented in Figure 11. In the experiments, “w/o MSDA-CycleGAN” indicates that the test data proceeds directly to feature extraction and target detection without MSDA-CycleGAN processing. As clearly shown in Figure 11, under cross-domain testing conditions, the absence of image style transfer causes severe performance degradation across all metrics, with mAP50 and F1-score decreasing by at least 8.3% and 8.0%, respectively. The comparative results further validate the effectiveness and robustness of MSDA-CycleGAN for cross-domain SAR target detection, demonstrating that it significantly enhances the generalization capability of the downstream detection model.

Figure 11.

The impact of MSDA-CycleGAN on detection performance under cross-domain testing conditions.

Effect of CWASA-IFIM: CWASA-IFIM efficiently refines high-level features by capturing both local details and global dependencies, thereby compensating for the information scarcity of small targets. As indicated in Table 9, CWASA-IFIM consistently enhances model performance regardless of MSDA-CycleGAN processing, with mAP50 and F1-score increasing by at least 1.3% and 0.8%, respectively. When CWASA-IFIM is further combined with CSFM, mAP50 and F1-score gain additional improvements of 0.8% and 1.1%. The effectiveness of CWASA-IFIM primarily stems from its appropriate application to the S4-layer features, which retain both rich contextual semantic information and preserved spatial details. Furthermore, CWASA-IFIM efficiently models global context through a factorized computation strategy, reducing computational complexity while introducing only 2.898 M parameters and 4.015 GFLOPs. Experimental results demonstrate that CWASA-IFIM, as an efficient global context modeling module, enhances the model’s representational capacity and detection performance for small vehicle targets in complex backgrounds at the cost of a minimal increase in model complexity.
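The saving from a factorized attention computation can be illustrated by counting pairwise attention scores. The sketch below compares full self-attention over an H × W feature map with a generic axial factorization (attention along rows, then along columns). It ignores channel dimensions and the cross-window partitioning of CWASA-IFIM, and the 20 × 20 map size is a hypothetical choice, so it indicates only the order of magnitude of the reduction.

```python
def full_attention_pairs(h, w):
    """Scores computed when each of the h*w tokens attends to all tokens."""
    n = h * w
    return n * n


def axial_attention_pairs(h, w):
    """Scores computed when each token attends along its row (w scores)
    and then along its column (h scores)."""
    return h * w * (h + w)


h, w = 20, 20  # hypothetical high-level feature map size
full = full_attention_pairs(h, w)
axial = axial_attention_pairs(h, w)
print(full, axial, full / axial)
```

For a square map of side H, the ratio is H²·H² / (H²·2H) = H/2, so the relative saving grows with the spatial resolution of the feature map.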

Effect of CSFM: The CSFM works in conjunction with bidirectional fusion paths to integrate and enhance multi-scale features extracted by the backbone network, strengthening the interaction between shallow localization information and deep semantic representations. As shown in Table 9, replacing the original PANet neck in the baseline with CSFM improves mAP50 and F1-score by 1.2% and 0.6%, respectively. Although CSFM introduces additional costs of 13.581 M parameters and 44.149 GFLOPs during training due to its re-parameterized convolutional structure, it transforms into an efficient single-branch architecture through structural re-parameterization during inference, achieving an effective balance between training performance and inference efficiency. Furthermore, when CSFM is integrated with MSDA-CycleGAN and CWASA-IFIM to form the complete model, mAP50 and F1-score reach 86.5% and 84.7%, respectively. This represents an improvement of approximately 10% over the baseline and achieves the best detection performance among all ablation combinations. These results validate that the multi-scale feature interaction paradigm constructed by CSFM effectively enhances the discrimination and localization capabilities for small vehicle targets.
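The equivalence between the training-time multi-branch form and the inference-time single-branch form can be checked numerically. The sketch below uses a generic RepVGG-style block as a stand-in: a 3 × 3 branch, a 1 × 1 branch, and an identity branch on a single channel, without batch normalization. The actual branch layout of CSFM is not specified in this section, so the structure, kernel values, and function names are assumptions.

```python
def conv2d_same(image, kernel):
    """Single-channel 2D cross-correlation with zero padding ('same' size)."""
    k = len(kernel)
    pad = k // 2
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            acc = 0.0
            for i in range(k):
                for j in range(k):
                    rr, cc = r + i - pad, c + j - pad
                    if 0 <= rr < rows and 0 <= cc < cols:
                        acc += kernel[i][j] * image[rr][cc]
            out[r][c] = acc
    return out


def merge_branches(k3, k1):
    """Fold the 1x1 branch and the identity branch into the 3x3 kernel centre."""
    merged = [row[:] for row in k3]
    merged[1][1] += k1[0][0] + 1.0  # 1x1 weight plus identity (weight 1)
    return merged


# Hypothetical input and kernels.
image = [[1.0, 2.0, 0.0], [0.0, 3.0, 1.0], [2.0, 1.0, 4.0]]
k3 = [[0.5, -1.0, 0.0], [1.0, 2.0, -0.5], [0.0, 0.25, 1.0]]
k1 = [[0.75]]

# Training-time form: three parallel branches summed element-wise.
y3 = conv2d_same(image, k3)
y1 = conv2d_same(image, k1)
multi_branch = [[y3[r][c] + y1[r][c] + image[r][c] for c in range(3)]
                for r in range(3)]

# Inference-time form: one 3x3 convolution with the merged kernel.
single_branch = conv2d_same(image, merge_branches(k3, k1))

max_diff = max(abs(multi_branch[r][c] - single_branch[r][c])
               for r in range(3) for c in range(3))
print(max_diff)
```

Because convolution is linear, the branch outputs can be summed at the kernel level, which is why the extra training-time parameters do not have to be carried into inference.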

5.7. Generalization Experiments

Section 5.4 evaluated DUA-TDF under various cross-domain and within-domain testing conditions and demonstrated its generalization capability. To further validate the generalization performance of DUA-TDF on a public dataset, we conduct additional experiments on the Mix-MSTAR dataset. It is worth noting that, since the training and test data in the Mix-MSTAR dataset follow the same probability distribution, the image translation stage (MSDA-CycleGAN) is automatically skipped in this experiment. The process proceeds directly to the feature extraction and target detection stage, performed by the CWASA-Net, to complete the detection task.
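The two-stage flow with an optional translation stage can be sketched as follows. This is only an illustration of the control flow: the function name, the `cross_domain` flag, and the stand-in callables are hypothetical, and how the framework actually decides to skip the first stage is not described in this section.

```python
def run_two_stage(image, detector, translator=None, cross_domain=False):
    """Stage 1 (optional): translate the test image to the training style.
    Stage 2: run the detector on the (possibly translated) image."""
    if cross_domain and translator is not None:
        image = translator(image)
    return detector(image)


# Stand-ins for MSDA-CycleGAN and CWASA-Net, used only to show the flow.
def fake_translator(img):
    return img + "_translated"


def fake_detector(img):
    return ["detections on " + img]


within_domain = run_two_stage("mix_mstar_img", fake_detector)
cross_domain = run_two_stage("july_img", fake_detector,
                             translator=fake_translator, cross_domain=True)
print(within_domain)
print(cross_domain)
```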

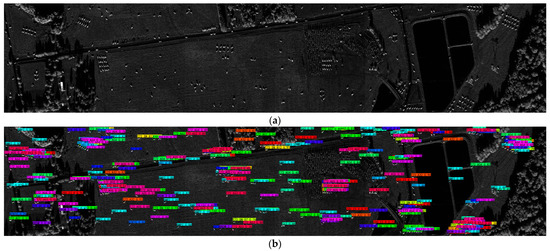

The detection results of CWASA-Net and other compared methods on the Mix-MSTAR dataset are summarized in Table 10. Our method achieves the highest mAP50 among all approaches, reaching 82.5%. Figure 12 presents visual detection results of CWASA-Net on the Mix-MSTAR dataset, demonstrating that the proposed method also delivers satisfactory performance in detecting small vehicle targets across diverse scenes. These results fully validate that DUA-TDF possesses stable detection performance and strong generalization capability.

Table 10.

Detection Results of Different Methods on the Mix-MSTAR Dataset.

Figure 12.

Visual detection results on the Mix-MSTAR dataset: (a) Test image 1. (b) Detection result of test image 1. (c) Test image 2. (d) Detection result of test image 2.

6. Conclusions

To address the challenge of robustly detecting small ground vehicle targets in SAR images under complex background clutter and across both within-domain and cross-domain scenarios, this article proposes the DUA-TDF based on pixel-level domain adaptation and cross-window axial self-attention mechanisms. The method consists of two stages: image-to-image translation, followed by feature extraction and target detection. In the first stage, MSDA-CycleGAN is utilized to achieve image-level alignment between source and target domains, effectively accomplishing unpaired image style transfer. In the second stage, CWASA-Net is employed to perform small target detection in complex backgrounds. To validate the effectiveness and generalization capability of the proposed algorithm, multiple within-domain and cross-domain detection experiments are conducted on both the self-developed FAST-Vehicle dataset and a public dataset. Experimental results demonstrate that DUA-TDF achieves outstanding performance in both monostatic and bistatic within-domain testing, with mAP50 consistently exceeding 90%. Under cross-domain scenarios involving various domain shifts such as sensor parameter variations, temporal spans, and system heterogeneity, it maintains mAP50 no lower than 80%, exhibiting excellent cross-domain adaptability. In summary, DUA-TDF delivers robust detection performance across diverse testing conditions and effectively addresses complex background interference and cross-domain distribution shifts, giving it practical application value.