Highlights

What are the main findings?

- A novel model (IDBT) that integrates semantic and boundary information for precise agricultural parcel delineation.

- IDBT achieves state-of-the-art accuracy and boundary precision on two large-scale datasets.

What is the implication of the main finding?

- Provides a practical, high-resolution mapping tool for vegetation analysis and agricultural monitoring.

- Robust transferability across diverse agricultural landscapes and unseen regions.

Abstract

Accurate agricultural parcel (AP) delineation from remote sensing imagery is critical for precision agriculture and effective land management. However, parcel boundaries are typically ambiguous, irregular, and challenging to segment precisely. Existing methods often struggle to adequately integrate high-level semantic information with fine-grained boundary details, leading to incomplete or blurred parcel outlines. To address these limitations, we introduce the Interactive Dual-Branch Transformer (IDBT), a novel segmentation framework designed to significantly enhance boundary localization. Our approach incorporates three key innovations: (1) a dual-branch architecture enabling bidirectional interactive information flow between semantic segmentation and boundary detection branches; (2) attention-enhanced Transformer backbones that facilitate optimized multi-scale feature fusion; and (3) a tailored supervision strategy that combines boundary-aware losses with point-wise refinement to sharpen ambiguous parcel edges. Extensive evaluations conducted on two public datasets demonstrate that IDBT outperforms current state-of-the-art methods, achieving high segmentation accuracy and boundary precision. Additionally, our approach exhibits robust transferability in cross-area experiments. Code will be made publicly available at the repository listed in the Data Availability Statement.

1. Introduction

With advancements in remote sensing technologies, large-scale Earth observation data has become increasingly available across various domains. Among these, agriculture has particularly benefited from such data-rich environments. In agricultural monitoring, agricultural parcel (AP) delineation is a fundamental and long-standing task that aims to identify cultivated fields [1]. Since agricultural parcels serve as the basic operational units in farming, accurate delineation is essential for a range of downstream applications, including yield estimation, resource allocation, precision agriculture, and sustainable agricultural planning [2].

Traditionally, mapping APs relies on field surveys and manual digitization, which are both time-consuming and resource-intensive. Given the need to scale mapping efforts across vast and frequently updated regions, automated solutions have become increasingly desirable. The rapid progress of artificial intelligence (AI) technologies has brought new momentum to AP delineation research. Owing to their strong capabilities in processing, optimizing, and learning from large-scale and high-dimensional data, AI-based methods naturally align with the characteristics of modern remote sensing data [3,4,5]. Consequently, a growing body of work has explored the use of machine learning algorithms, and more recently, deep neural networks, for automatic field delineation from satellite imagery and other geospatial sources [6,7].

In high-resolution satellite or aerial imagery, field boundaries are often subtle, irregular, or visually ambiguous. Adjacent parcels may have similar spectral or textural signatures, especially when crop types are homogeneous or when fields are in early growth stages. Furthermore, boundary cues are frequently degraded by natural occlusions (e.g., shadows, clouds) or imaging artifacts, making accurate delineation inherently difficult [8]. These challenges demand models that can jointly capture the global semantic context of agricultural scenes and local fine-grained boundary details.

Semantic segmentation has advanced considerably with the emergence of CNN-based models such as FCN and DeepLab, which demonstrate strong pixel-level performance [9,10]. However, these models often yield imprecise boundaries as fine structural details are lost in deep, down-sampled features, and interpolation-based up-sampling further smooths the reconstructed edges [11]. To mitigate this limitation, many boundary-aware CNNs have been proposed. For example, multi-task frameworks have been developed to couple semantic segmentation and edge detection via shared encoders and boundary-consistency losses [12]. BiSeNet [13] proposes a two-path architecture to enhance spatial detail. BASS [14] applies the boundary attention mechanism and an additional boundary-aware loss term to guide the learning process. Despite these advances, CNN-based networks remain constrained by limited receptive fields, making it difficult to maintain both global contextual understanding and fine boundary localization. The emergence of Transformer architectures has further advanced semantic segmentation by enabling global feature reasoning and long-range dependency modeling. Recent works such as BoundaryFormer [15] and EdgeFormer [16] have successfully integrated attention mechanisms with edge supervision, leading to improved contour delineation in general object segmentation tasks. The Segment Anything Model (SAM), as a strong general-purpose baseline, has demonstrated outstanding zero-shot and prompt-based segmentation performance across diverse imagery [17]. However, such general-purpose segmentation frameworks are often optimized for well-defined object categories and regular shapes. Their direct application to large-scale agricultural parcel delineation remains suboptimal.

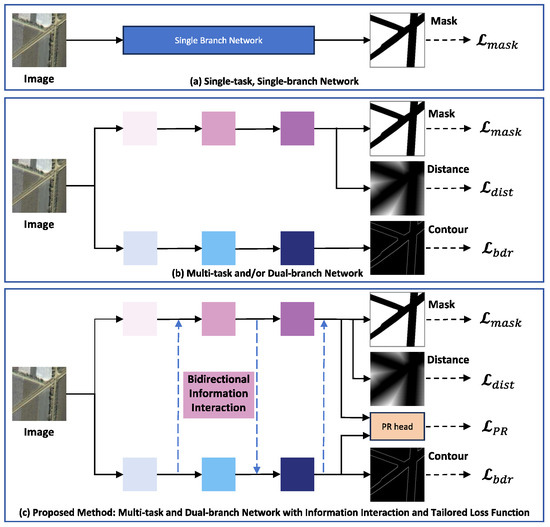

Agricultural parcels exhibit several domain-specific challenges that make their delineation uniquely difficult. Unlike urban or man-made objects in general scenes (e.g., roads, buildings), agricultural parcels often feature subtle, irregular, or ambiguous divisions, marked by narrow ridges, natural growth patterns, or unstructured boundaries. These characteristics, combined with intra-field heterogeneity, crop phenology, and temporal inconsistency in remote sensing imagery, pose unique challenges for boundary localization and semantic discrimination. To address these issues, several networks have been specifically developed for agricultural parcel delineation. Single-branch frameworks focus on improving general segmentation quality while indirectly enhancing boundary precision, as shown in Figure 1a. For instance, ref. [18] develops a generalized CNN framework for large-area cropland mapping from very-high-resolution (VHR) imagery, demonstrating strong regional scalability but limited sensitivity to fine parcel boundaries. Similarly, ref. [19] has employed a DeepLabV3-based model to map smallholder plots from WorldView-2 imagery, achieving high overall accuracy yet relying on standard semantic segmentation pipelines without explicit edge modeling. Such single-branch architectures excel at capturing large-scale land-cover semantics but often produce blurred or incomplete parcel edges. In contrast, multi-branch or multi-task frameworks explicitly model both parcel interiors and boundary structures, allowing edge features to guide semantic reasoning [20,21,22], as shown in Figure 1b. BsiNet [23] improves geometric precision in hilly terrains through a multi-task design. HBGNet [24] and BFINet [25] leverage dual-branch or hierarchical mechanisms to jointly learn semantic-body and edge information. DSTFNet [26] extends this idea by integrating spatiotemporal cues from VHR imagery to enhance robustness under seasonal variations. 
These studies collectively confirm that explicit boundary modeling and cross-scale feature fusion are crucial for improving delineation accuracy and spatial consistency in agricultural landscapes.

Figure 1.

Overview of existing agricultural parcel delineation methods and our proposed IDBT model. Our approach explicitly models the interaction between semantic and boundary information with a specialized loss function, achieving refined segmentation accuracy.

Nevertheless, despite these notable advances, most existing dual-branch frameworks still rely on unidirectional or loosely coupled interactions between semantic and boundary representations. While such designs have steadily improved boundary fidelity, they primarily emphasize feature-level concatenation or shallow fusion, without deeper iterative communication between high-level semantic representations and low-level spatial cues. There remains room for further progress in achieving deeper, bidirectional feature exchange and more consistent integration between semantic context and edge precision. Motivated by this observation, we propose the Interactive Dual-Branch Transformer (IDBT). IDBT is a framework specifically designed for precise agricultural parcel delineation from VHR imagery. It couples a Transformer-based semantic branch for global contextual understanding with a boundary branch for localized edge learning. We introduce two dedicated modules, the Boundary-Information Tokenization Module (BITM) and the Boundary-Information Feedback Module (BIFM). BITM transforms fine-grained edge features into compact boundary tokens and integrates them into the Transformer sequence, allowing global semantic reasoning to be guided by localized spatial cues. Complementarily, BIFM leverages high-level semantics to adaptively refine boundary predictions, enabling a bidirectional flow of information between the two branches. Feature fusion has been widely recognized as an effective strategy for improving spatial coherence and multi-scale consistency in RS vision models. Many prior works have explored attention-based feature interaction to strengthen global–local alignment [27,28]. Building upon this insight, we propose the Multi-level Feature Fusion Module (MFFM), which further unifies hierarchical representations across Transformer stages, ensuring structural completeness and spatial coherence. Additionally, we incorporate a Dual-PointRend supervision strategy. 
This design refines predictions at both coarse and boundary levels. A composite loss is devised to jointly supervise the segmentation and point-wise predictions, thus promoting both semantic coherence and edge sharpness. To validate the effectiveness of our approach, we conduct extensive experiments on two large-scale benchmark datasets for AP segmentation. Our proposed model consistently outperforms state-of-the-art (SOTA) methods across all evaluation metrics. IDBT also shows stronger transferability in cross-dataset experiments. These results highlight the robustness and practical applicability of our framework. The main contributions of this study can be summarized as follows.

- We design a boundary-aware, bidirectional dual-branch network that explicitly models semantic and boundary cues for precise agricultural parcel delineation;

- We introduce a set of modules to enhance multi-level feature exchange between branches, improving spatial detail retention and semantic understanding;

- We propose a Dual-PointRend supervision strategy to enable more effective boundary learning by selectively refining uncertain or complex regions during training;

- We conduct comprehensive experiments on two large-scale datasets, achieving superior performance and demonstrating strong generalization across diverse agricultural landscapes.

2. Materials and Methods

2.1. Overview of the IDBT Framework

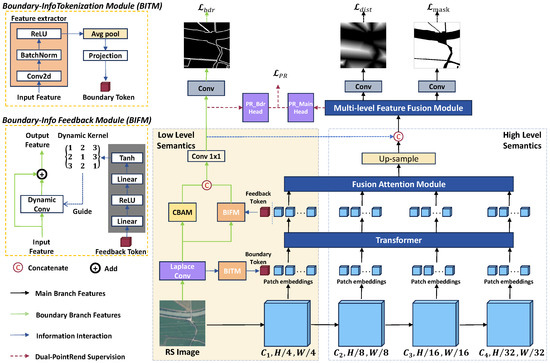

We propose IDBT, an interactive dual-branch Transformer architecture for accurate agricultural parcel (AP) delineation from high-resolution remote sensing imagery. As illustrated in Figure 2, the model consists of two closely integrated branches. The main (semantic) branch employs a hierarchical Transformer backbone to extract multi-scale contextual features, which are progressively refined by downstream fusion modules. In parallel, the boundary branch captures fine-grained structural cues via Laplacian convolution and a Convolutional Block Attention Module (CBAM) [29]. To enable effective interaction between the two streams, we design two lightweight yet effective components: the Boundary-Information Tokenization Module (BITM) and the Boundary-Information Feedback Module (BIFM). Together, they enable efficient message passing between the two streams, helping the main branch gain structural awareness while guiding the boundary branch with high-level semantics. To further enhance feature quality and mask completeness, the main branch is equipped with a Fusion Attention Module (FAM) for refining multi-scale Transformer outputs. Furthermore, a Multi-level Feature Fusion Module (MFFM) is designed to integrate these high-level representations with spatially detailed boundary features, as shown in Figure 3. These modules jointly promote coherence in both the interior and boundary of the segmented parcels.

Figure 2.

The architecture of the IDBT model and the composition of two sub-modules BITM and BIFM.

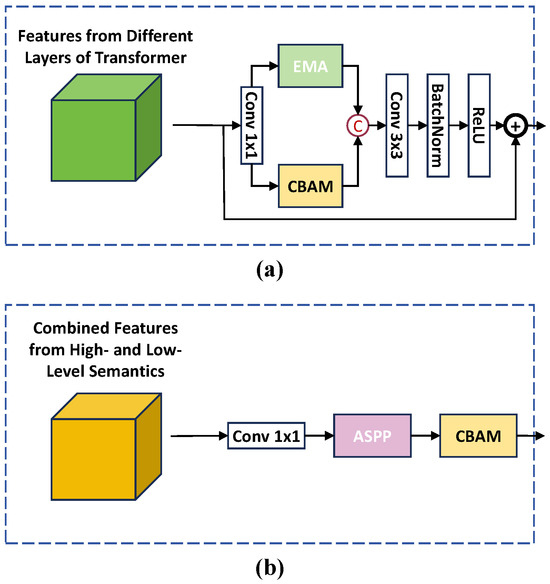

Figure 3.

Structures of the designed modules Fusion Attention Module (FAM) and Multi-level Feature Fusion Module (MFFM). (a) FAM refines multi-scale features output from the Transformer. (b) MFFM integrates high-level semantic and low-level boundary features.

In addition to the primary task of mask prediction, IDBT includes two auxiliary prediction heads to enhance spatial precision: boundary maps and distance maps. The boundary map branch focuses on capturing fine-grained edge structures and guiding the model toward clearer and more consistent field delineations. The distance map is generated through a Euclidean distance transform that encodes the proximity of each internal pixel to the nearest boundary. This distance map enhances geometric accuracy by promoting topological connectivity and reducing isolated segmentation errors [30]. Moreover, we adopt a Dual-PointRend supervision strategy that selectively refines uncertain regions on both segmentation and boundary predictions. This strategy significantly enhances boundary precision. The entire framework is trained with a tailored composite loss that combines segmentation, boundary, distance regression, and point-wise refinement supervision.
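As an illustration of the distance-map target described above, the following minimal sketch computes, for every parcel pixel, the Euclidean distance to the nearest non-parcel pixel. It is a brute-force NumPy version meant for small arrays only. The function name `distance_map` and the convention that non-parcel pixels act as the boundary are assumptions made for this sketch, not details taken from the IDBT implementation.

```python
import numpy as np

def distance_map(mask: np.ndarray) -> np.ndarray:
    """Distance from each parcel pixel (mask == 1) to the nearest non-parcel pixel.

    Brute-force sketch for small arrays; non-parcel pixels keep the value 0.
    """
    mask = mask.astype(bool)
    dist = np.zeros(mask.shape, dtype=np.float64)
    background = np.argwhere(~mask)           # (M, 2) row/col coordinates
    if background.size == 0 or not mask.any():
        return dist                           # nothing to measure against
    foreground = np.argwhere(mask)            # (N, 2) row/col coordinates
    diff = foreground[:, None, :] - background[None, :, :]
    nearest = np.sqrt((diff ** 2).sum(axis=-1)).min(axis=1)
    dist[mask] = nearest                      # same row-major order as argwhere
    return dist

# Usage: a 3x3 parcel inside a 5x5 tile.
tile = np.zeros((5, 5), dtype=np.uint8)
tile[1:4, 1:4] = 1
print(distance_map(tile))
```

For full-size patches, `scipy.ndimage.distance_transform_edt` computes the same quantity far more efficiently. Any normalization of the target (for example, dividing by the per-parcel maximum) is left out here because the text does not specify it.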

2.2. Boundary-Information Tokenization Module and Boundary-Information Feedback Module

We introduce two modules to enable low-level information exchange between the semantic and boundary branches. The Boundary-Information Tokenization Module (BITM) encodes low-level boundary features into a compact token and concatenates it with the Transformer input sequence. Given the initial boundary feature map $F_b$, BITM generates the boundary token $t_b$.

This token is then concatenated with the image patch embeddings at the corresponding scale, which allows the Transformer in the main branch to incorporate boundary cues at an early stage of feature learning. After the Transformer stage, the Boundary-Information Feedback Module (BIFM) injects global semantic context back into the boundary branch. Specifically, the updated boundary token $\hat{t}_b$ is extracted and passed to a kernel generator (inspired by [31,32]) to produce a content-adaptive dynamic convolution kernel $W$. The generator is implemented as a small fully connected network that maps the feedback token to a flattened vector of convolution weights $w$, which is then reshaped and applied to the boundary feature map $F_b$:

$$w = \mathrm{FC}(\hat{t}_b), \qquad W = \mathrm{Reshape}(w),$$

where $W$ has spatial size $K \times K$ and $K$ denotes the kernel size. Each spatial location thus receives a convolution operation modulated by the semantic feedback, allowing the boundary branch to adaptively emphasize or suppress edge responses according to the high-level context. The output feature $F_b'$ is computed as:

$$F_b' = F_b + W * F_b,$$

where ∗ represents the convolution operation and the residual connection preserves stability. BITM enhances the boundary sensitivity of the main branch, while BIFM improves the spatial fidelity of boundary predictions by infusing global semantic context. Together, they facilitate bidirectional information flow between the two branches with minimal overhead.
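The feedback step can be sketched in NumPy as follows: a token is mapped by a small fully connected network to a flat weight vector, reshaped into a kernel, applied to the boundary features, and added back through a residual connection. The two-layer generator, the depthwise (one kernel per channel) layout, the odd kernel size, and all names (`generate_kernel`, `depthwise_conv_same`, `boundary_feedback`) are assumptions made for illustration; the source does not specify the kernel shape or the generator depth.

```python
import numpy as np

def generate_kernel(token, w1, b1, w2, b2, channels, k):
    """Hypothetical two-layer FC generator: token -> flat weights -> (C, k, k)."""
    hidden = np.maximum(token @ w1 + b1, 0.0)        # ReLU
    flat = hidden @ w2 + b2                          # (channels * k * k,)
    return flat.reshape(channels, k, k)

def depthwise_conv_same(feat, kernel):
    """Zero-padded depthwise cross-correlation; feat is (C, H, W), k is odd."""
    _, h, w = feat.shape
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(feat, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros(feat.shape, dtype=np.float64)
    for i in range(k):
        for j in range(k):
            out += kernel[:, i, j][:, None, None] * padded[:, i:i + h, j:j + w]
    return out

def boundary_feedback(feat, token, params, k=3):
    """Residual update: boundary features plus their dynamically filtered version."""
    kernel = generate_kernel(token, *params, channels=feat.shape[0], k=k)
    return feat + depthwise_conv_same(feat, kernel)

# Usage with random, untrained parameters (shapes are illustrative only).
rng = np.random.default_rng(0)
feat = rng.normal(size=(4, 6, 6))                    # (C, H, W) boundary features
token = rng.normal(size=8)                           # feedback token
params = (rng.normal(size=(8, 16)), np.zeros(16),
          rng.normal(size=(16, 4 * 3 * 3)) * 0.01, np.zeros(4 * 3 * 3))
print(boundary_feedback(feat, token, params).shape)  # (4, 6, 6)
```

Because the kernel is produced per input rather than stored as a fixed parameter, the same boundary features can be filtered differently depending on the semantic context carried by the token.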

2.3. Fusion Attention Module and Multi-Level Feature Fusion Module

To enhance and consolidate multi-scale semantic features from different Transformer depths, we design the Fusion Attention Module (FAM) and place it after the Transformer backbone. FAM processes features in parallel through the Efficient Multi-Scale Attention (EMA) [33] and the CBAM. EMA leverages multi-group channel-wise attention to capture long-range dependencies and contextual relationships. Simultaneously, CBAM focuses on refining local spatial and channel-level information, enhancing discrimination in regions with subtle spectral or textural variation. The outputs of EMA and CBAM are then concatenated, upsampled, and aggregated with features from other scales. These refined, aligned feature maps are further up-sampled to the original resolution and concatenated with boundary-enhanced features from the boundary branch—facilitating another round of cross-branch information exchange.

The fused multi-level representation is then processed by the Multi-level Feature Fusion Module (MFFM), which performs deeper integration and refinement. MFFM consists of three sequential components: (1) a convolution to unify channel dimensions and reduce redundancy; (2) an Atrous Spatial Pyramid Pooling (ASPP) module [10] that applies parallel dilated convolutions with different dilation rates to capture object information at multiple receptive fields; and (3) a CBAM module that further refines the fused features by emphasizing informative channels and spatial regions. ASPP is well suited to this stage because the fused features contain diverse semantics from multiple layers and from both branches, and its multi-scale receptive fields can integrate these heterogeneous features, which vary significantly in spatial detail and contextual scope.

The output of MFFM serves as a robust final representation for both mask prediction and distance map generation.
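To make the "multiple receptive fields" of ASPP concrete, the short sketch below computes the effective spatial extent of a dilated kernel, which is `k + (k - 1)(d - 1)` for kernel size `k` and dilation `d`. The dilation rates used here (1, 6, 12, 18) are the values commonly associated with DeepLab-style ASPP and are an assumption; the rates used in MFFM are not stated in the text.

```python
def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Spatial extent covered by one dilated convolution kernel."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)

# Assumed ASPP rates (not specified for MFFM in the text).
assumed_rates = [1, 6, 12, 18]
extents = {rate: effective_kernel_size(3, rate) for rate in assumed_rates}
print(extents)  # {1: 3, 6: 13, 12: 25, 18: 37}
```

With parallel branches of this kind, a single layer sees both narrow ridges (small extent) and whole-field context (large extent) without further down-sampling.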

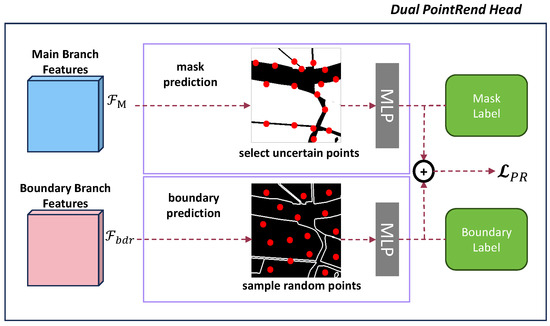

2.4. Dual-PointRend Strategy

Up-sampling operations in deep segmentation networks often lead to blurry or imprecise boundaries, particularly in regions with high spatial ambiguity. To address this, we propose a Dual-PointRend strategy, which extends the PointRend concept [34] to our dual-branch network for both mask and boundary refinement. Figure 4 illustrates the strategy of the Dual-PointRend process.

Figure 4.

Illustration of the proposed Dual-PointRend supervision strategy. In the main branch, uncertain points are selected from the coarse segmentation map and refined through a PointRend head. In the boundary branch, randomly sampled points are similarly refined to enhance edge continuity. Both refined predictions are updated back to their respective outputs.

In the main branch, the fused feature map passes through a coarse prediction head to generate initial mask logits. Points with the highest uncertainty (i.e., probabilities closest to 0.5) are then selected. For each selected point $p_i$, its feature $f_m(p_i)$ is refined by a lightweight MLP to produce sharper logits:

$$\hat{y}_m(p_i) = \mathcal{R}_m\big(f_m(p_i)\big),$$

where $\mathcal{R}_m$ denotes the PointRend refinement in the main branch. These refined logits are written back into the mask prediction at the corresponding positions to improve the quality of ambiguous regions.

In the boundary branch, where the ground truth is a sparse contour map, uncertainty-based sampling is less effective. Instead, we randomly sample points $q_j$ from the boundary prediction map and refine them using a boundary PointRend head:

$$\hat{y}_b(q_j) = \mathcal{R}_b\big(f_b(q_j)\big),$$

where $\mathcal{R}_b$ denotes the PointRend refinement in the boundary branch. These refined logits are also written back into the boundary map to enhance edge continuity and localization.

By applying independent PointRend refinement to both branches and updating predictions at selected points, the Dual-PointRend strategy simultaneously improves mask completeness and boundary sharpness. Point-level losses are applied on the refined predictions in each branch to complement standard supervision.
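The two sampling rules and the write-back step can be sketched as below. This is a simplified illustration: points are restricted to integer pixel locations, the point head is an arbitrary callable, and the names `select_uncertain_points`, `select_random_points`, and `refine_points` are hypothetical. The original PointRend method additionally uses oversampling and bilinear feature sampling, which are omitted here.

```python
import numpy as np

def select_uncertain_points(logits, num_points):
    """Main branch: pick the pixels whose probability is closest to 0.5."""
    prob = 1.0 / (1.0 + np.exp(-logits))
    uncertainty = -np.abs(prob - 0.5)                 # larger = more uncertain
    flat = np.argsort(uncertainty.ravel())[::-1][:num_points]
    return np.stack(np.unravel_index(flat, logits.shape), axis=1)

def select_random_points(shape, num_points, rng):
    """Boundary branch: uniform random pixels without replacement."""
    flat = rng.choice(shape[0] * shape[1], size=num_points, replace=False)
    return np.stack(np.unravel_index(flat, shape), axis=1)

def refine_points(logits, features, points, point_head):
    """Re-predict the logits at the selected points and write them back."""
    refined = logits.astype(np.float64).copy()
    rows, cols = points[:, 0], points[:, 1]
    point_feats = features[:, rows, cols].T           # (N, C) per-point features
    refined[rows, cols] = point_head(point_feats)     # (N,) refined logits
    return refined

# Usage with a toy 2x2 logit map and a stand-in point head.
logits = np.array([[-4.0, 0.1], [3.0, -0.2]])
features = np.ones((3, 2, 2))
points = select_uncertain_points(logits, 2)
print(points)                                         # rows/cols of the 2 most uncertain pixels
print(refine_points(logits, features, points, lambda f: f.sum(axis=1)))
```

Only the selected positions change, so the cost of refinement scales with the number of sampled points rather than with the image size.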

2.5. Loss Functions

To supervise model training, we design a composite loss function consisting of two components: the Task Loss $\mathcal{L}_{task}$ and the PointRend Loss $\mathcal{L}_{pr}$.

The Task Loss supervises the three prediction tasks of our network: AP mask segmentation, AP boundary detection, and AP distance map regression. Specifically, the binary cross-entropy loss $\mathcal{L}_{mask}$ is applied to supervise the predicted mask for accurate pixel-level classification. The negative log-likelihood loss $\mathcal{L}_{bd}$ is used to optimize the boundary predictions based on the binary contour ground truth [35]. This choice offers stable gradients under severe class imbalance and is less sensitive to sparse positive pixels than BCE or Focal alternatives. The mean square error loss $\mathcal{L}_{dist}$ is used to minimize the difference between the predicted distance map and the ground-truth distance map. The Task Loss is thus formulated as:

$$\mathcal{L}_{task} = \lambda_1 \mathcal{L}_{mask} + \lambda_2 \mathcal{L}_{bd} + \lambda_3 \mathcal{L}_{dist},$$

where $\lambda_1$, $\lambda_2$, and $\lambda_3$ are balancing hyper-parameters.

To complement the Task Loss and enhance boundary precision, we incorporate the PointRend Loss, derived from the Dual-PointRend strategy. In the main branch, points with the highest uncertainty are sampled ($\mathcal{P}_m$), while in the boundary branch, points are randomly sampled from the contour prediction map ($\mathcal{P}_b$). At these sampled points, a point-level loss (BCE) is applied separately for each branch with balancing weights $\lambda_4$ and $\lambda_5$. The overall PointRend Loss is thus defined as:

$$\mathcal{L}_{pr} = \frac{\lambda_4}{N} \sum_{p \in \mathcal{P}_m} \mathrm{BCE}\big(\hat{y}_m(p), y_m(p)\big) + \frac{\lambda_5}{N} \sum_{q \in \mathcal{P}_b} \mathrm{BCE}\big(\hat{y}_b(q), y_b(q)\big),$$

where $N$ is the number of sampled points in each branch, $\hat{y}$ denotes the refined point prediction, and $y$ the corresponding ground-truth value. The total loss is defined as the sum of the Task Loss and the PointRend Loss:

$$\mathcal{L}_{total} = \mathcal{L}_{task} + \mathcal{L}_{pr}.$$
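A minimal NumPy sketch of how the five loss terms might be combined is given below. The dictionary keys, the two-channel log-probability layout for the boundary head, and the unit weights in the usage example are assumptions made for illustration. The sketch does not assume any particular mapping between the reported balancing weights and the individual terms.

```python
import numpy as np

EPS = 1e-7

def bce(prob, target):
    """Mean binary cross-entropy on probabilities."""
    prob = np.clip(prob, EPS, 1.0 - EPS)
    return float(np.mean(-(target * np.log(prob) + (1 - target) * np.log(1 - prob))))

def nll(log_prob, target):
    """Mean negative log-likelihood; log_prob is (2, H, W), target is (H, W) in {0, 1}."""
    picked = np.take_along_axis(log_prob, target[None].astype(int), axis=0)[0]
    return float(-picked.mean())

def mse(pred, target):
    """Mean squared error for the distance-map regression."""
    return float(np.mean((pred - target) ** 2))

def total_loss(pred, gt, weights):
    """Task loss (mask, boundary, distance) plus the two point-level BCE terms."""
    task = (weights["mask"] * bce(pred["mask"], gt["mask"])
            + weights["boundary"] * nll(pred["boundary_logp"], gt["boundary"])
            + weights["distance"] * mse(pred["distance"], gt["distance"]))
    point = (weights["point_mask"] * bce(pred["point_mask"], gt["point_mask"])
             + weights["point_boundary"] * bce(pred["point_boundary"], gt["point_boundary"]))
    return task + point

# Usage with placeholder unit weights and maximally uncertain predictions.
h = w = 4
gt = {"mask": np.ones((h, w)), "boundary": np.zeros((h, w), dtype=int),
      "distance": np.zeros((h, w)), "point_mask": np.ones(8), "point_boundary": np.zeros(8)}
pred = {"mask": np.full((h, w), 0.5), "boundary_logp": np.full((2, h, w), np.log(0.5)),
        "distance": np.ones((h, w)), "point_mask": np.full(8, 0.5),
        "point_boundary": np.full(8, 0.5)}
weights = dict.fromkeys(["mask", "boundary", "distance", "point_mask", "point_boundary"], 1.0)
print(total_loss(pred, gt, weights))  # four ln(2) terms plus an MSE of 1
```

Averaging the point-level terms over the sampled points keeps their magnitude comparable to the dense terms regardless of how many points are drawn.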

3. Experiments

3.1. Experimental Setup

3.1.1. Datasets

We evaluate our model on two large-scale datasets designed for agricultural parcel segmentation from very high-resolution (VHR) remote sensing imagery. FHAPD [24] is a VHR (1 m) remote sensing dataset covering seven regions in China with diverse agricultural landscapes. Images are cropped into pixel patches and annotated with binary annotations (parcel vs. background). We select three representative subsets, FHAPD-JS, FHAPD-HB, and FHAPD-XJ. We randomly divide each regional subset (JS, HB, and XJ) into training, validation, and test sets using approximately a 70:10:20 ratio, ensuring spatial independence between samples.

The AI4Boundaries dataset [36] consists of orthophoto patches at 1 m resolution, with parcel masks derived from public cadastral data across several European countries. We adopt the official train/validation/test partitions and further divide each patch into non-overlapping pixel sub-patches. After filtering out patches without agricultural content, the final counts are (9751/1084/2388) for train/validation/test, respectively. This division corresponds to a ratio close to 70:10:20, consistent with FHAPD subsets.
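The sub-patch preparation described above (non-overlapping tiling followed by removal of tiles without agricultural content) can be sketched as follows. The tile size is passed in as the parameter `patch` because the value is not recoverable from the text, and the `min_fraction` threshold is a hypothetical knob; with its default of 0, a tile is kept as soon as it contains any parcel pixel.

```python
import numpy as np

def tile_non_overlapping(image, mask, patch):
    """Cut an image/mask pair into non-overlapping patch x patch tiles."""
    h, w = mask.shape
    tiles = []
    for r in range(0, h - patch + 1, patch):
        for c in range(0, w - patch + 1, patch):
            tiles.append((image[r:r + patch, c:c + patch],
                          mask[r:r + patch, c:c + patch]))
    return tiles

def keep_agricultural(tiles, min_fraction=0.0):
    """Drop tiles whose parcel-pixel fraction does not exceed min_fraction."""
    return [(img, m) for img, m in tiles if m.mean() > min_fraction]

# Usage: a 4x4 toy scene where only the top-left quadrant holds a parcel.
image = np.arange(16, dtype=np.float32).reshape(4, 4)
mask = np.zeros((4, 4), dtype=np.uint8)
mask[:2, :2] = 1
tiles = tile_non_overlapping(image, mask, patch=2)
print(len(tiles), len(keep_agricultural(tiles)))  # 4 tiles, 1 kept
```

Rows and columns that do not fill a complete tile are discarded in this sketch, which is one of several reasonable border policies.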

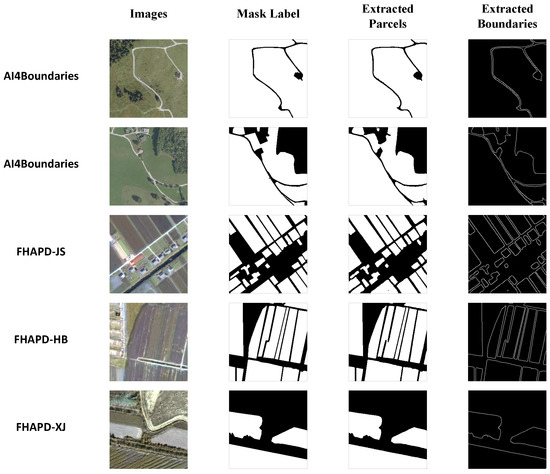

For both datasets, three types of ground-truth annotations are available: parcel masks, boundary maps, and distance maps. The distance maps are computed using the Euclidean distance transform from each interior pixel to the nearest boundary, serving as auxiliary supervision for geometric regularity. AI4Boundaries is characterized by large, homogeneous fields in relatively flat terrain, while FHAPD contains parcels with varied sizes, shapes, and topography. Figure 5 presents representative examples from AI4Boundaries and the three FHAPD subsets. These samples visually highlight differences in field morphology and imaging characteristics, allowing a more intuitive understanding of the segmentation challenges and generalization potential.

Figure 5.

Visual examples from the evaluated datasets and predictions of the proposed model. The left two columns display sample images and corresponding ground-truth parcel masks. The right two columns show the extracted parcel masks and extracted boundaries generated by IDBT.

3.1.2. Comparative Models

To thoroughly evaluate the effectiveness of the proposed IDBT model, we compare it with a set of representative methods used in AP delineation and RS image segmentation. The comparative baseline models include ResUNet [37], REAUNET [38], BsiNet [23], SEANet [39], BFINet [25], HBGNet [24], GPWFormer [40], RSMamba-ss [41], and BPT [42].

3.1.3. Evaluation Metrics

In the experiments, we adopt a set of widely used metrics that collectively assess both semantic segmentation quality and boundary delineation accuracy following common practices in similar tasks [11,24,43]. The primary metric is the Intersection-over-Union (IoU), which measures the degree of overlap between the predicted segmentation and the ground truth. It can be computed as:

$$\mathrm{IoU} = \frac{|P_i \cap G_i|}{|P_i \cup G_i|},$$

where $P_i$ and $G_i$ refer to the predicted and true pixels of a sample for the category $i$, respectively. In addition to IoU, we report the F1 score and pixel accuracy (Acc), which further reflect classification precision and overall correctness. The F1 score is computed as the harmonic mean of precision and recall:

$$\mathrm{F1} = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}},$$

where

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}.$$

Pixel accuracy calculates the proportion of correctly classified pixels:

$$\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN},$$

where TP stands for true positives (correct classification of pixels as positive predictions), while FP stands for false positives (negative pixels erroneously classified as positive). TN is true negatives (accurate identification of the background area as background), and FN is false negatives (positive pixels mistakenly categorized as negatives).
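The pixel-level metrics above follow directly from the four confusion counts, as the following sketch shows for a binary parcel mask. The function names are illustrative, and returning 0 when a denominator is empty is a convention chosen for this sketch.

```python
import numpy as np

def confusion_counts(pred, gt):
    """Return (TP, FP, FN, TN) for two binary masks of equal shape."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    tp = int(np.sum(pred & gt))
    fp = int(np.sum(pred & ~gt))
    fn = int(np.sum(~pred & gt))
    tn = int(np.sum(~pred & ~gt))
    return tp, fp, fn, tn

def segmentation_metrics(pred, gt):
    """IoU, F1, and pixel accuracy for the foreground (parcel) class."""
    tp, fp, fn, tn = confusion_counts(pred, gt)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0   # |P ∩ G| / |P ∪ G|
    acc = (tp + tn) / (tp + fp + fn + tn)
    return {"iou": iou, "f1": f1, "acc": acc, "precision": precision, "recall": recall}

# Usage: one pixel in each confusion category.
pred = np.array([[1, 1], [0, 0]])
gt = np.array([[1, 0], [1, 0]])
print(segmentation_metrics(pred, gt))
```

Note that for a single foreground class the set-based IoU equals TP / (TP + FP + FN), so all three metrics can share one pass over the masks.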

To better assess boundary delineation quality, we further report the boundary F1 score ($F_{bdy}$) with a narrow buffer region (3 m). $F_{bdy}$ is the harmonic mean of completeness (Com) and correctness (Corr) [44,45,46]:

$$F_{bdy} = \frac{2 \cdot \mathrm{Com} \cdot \mathrm{Corr}}{\mathrm{Com} + \mathrm{Corr}}.$$

Completeness is the fraction of the reference boundary that is correctly detected, and correctness is the fraction of the extracted boundary that agrees with the reference. With matching evaluated within the buffer, Com and Corr can be defined as:

$$\mathrm{Com} = \frac{|B_e \cap B_r|}{|B_r|}, \qquad \mathrm{Corr} = \frac{|B_e \cap B_r|}{|B_e|},$$

where $B_e$ denotes the extracted boundary and $B_r$ represents the reference boundary. In addition, we employ the Average Surface Distance (ASD) metric to complement $F_{bdy}$. ASD quantifies the mean Euclidean distance between corresponding points on the predicted and reference boundaries. It thereby captures the average geometric deviation between the two contours. Unlike overlap-based metrics, ASD directly measures spatial consistency and smoothness along boundaries, offering an intuitive and stable evaluation of delineation accuracy, especially for complex, fragmented agricultural parcels where small pixel shifts can accumulate into significant shape differences.
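The buffered boundary metrics can be sketched with nearest-neighbour distances between the two sets of boundary pixels. In this sketch a boundary pixel counts as matched when the other boundary lies within `buffer_px` pixels (at 1 m resolution, a 3 m buffer corresponds to 3 pixels), and ASD is taken as the symmetric mean of nearest-neighbour distances. Both choices, as well as the function names, are assumptions about one common way to implement these metrics; the code also assumes that both boundary maps are non-empty.

```python
import numpy as np

def nearest_distances(src, dst):
    """Distance from every boundary pixel in src to the closest one in dst."""
    a = np.argwhere(src.astype(bool)).astype(np.float64)
    b = np.argwhere(dst.astype(bool)).astype(np.float64)
    diff = a[:, None, :] - b[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1)).min(axis=1)

def boundary_f1(pred_b, ref_b, buffer_px):
    """Completeness, correctness, and their harmonic mean under a pixel buffer."""
    corr = float(np.mean(nearest_distances(pred_b, ref_b) <= buffer_px))
    com = float(np.mean(nearest_distances(ref_b, pred_b) <= buffer_px))
    f = 2 * com * corr / (com + corr) if com + corr else 0.0
    return com, corr, f

def average_surface_distance(pred_b, ref_b):
    """Symmetric mean nearest-neighbour distance between the two boundaries."""
    d_pred = nearest_distances(pred_b, ref_b)
    d_ref = nearest_distances(ref_b, pred_b)
    return float((d_pred.sum() + d_ref.sum()) / (len(d_pred) + len(d_ref)))

# Usage: a predicted boundary shifted by one pixel from the reference.
ref = np.zeros((5, 5), dtype=np.uint8)
ref[:, 2] = 1
pred = np.zeros((5, 5), dtype=np.uint8)
pred[:, 3] = 1
print(boundary_f1(pred, ref, buffer_px=1), average_surface_distance(pred, ref))
```

The brute-force pairwise distances are quadratic in the number of boundary pixels, so a distance transform of each boundary map is the usual choice for full-size patches.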

Additionally, we report FLOPs (Floating Point Operations) to quantify the computational cost of different models. This metric captures the total number of arithmetic operations required during inference, providing an estimate of model complexity and runtime overhead.

3.1.4. Implementation Details

All models are trained using the Adam optimizer with an initial learning rate of , a batch size of 8, and 100 training epochs. The five balancing weights of the individual loss components are set to [0.5, 0.3, 1, 1, 0.5]. For the Dual-PointRend strategy, 1024 points are sampled for each branch at each iteration. All experiments are conducted on a server equipped with an NVIDIA GeForce RTX 3090 GPU. To ensure robustness and reliability, all reported performance metrics are averaged over at least three independent runs using different random seeds.

3.2. Results

3.2.1. FHAPD

The quantitative results on the three FHAPD regional subsets (JS, HB, and XJ) are summarized in Table 1. Our proposed IDBT model achieves the best overall performance across the three regional subsets. On the JS subset, IDBT achieves an IoU of 84.4%, F1 score of 91.6%, and pixel accuracy of 90.1%. The boundary F1 score also reaches a leading 80.7%, surpassing the second-best model (HBGNet, 79.3%) by 1.4 percentage points. Similarly, IDBT attains the best IoU (86.9%), F1 (93.0%), and accuracy (91.6%) on the HB subset. For the XJ subset, IDBT again delivers the highest scores among all baselines, with IoU 95.1%, F1 97.4%, and accuracy 96.2%. In terms of the distance-based boundary metric, IDBT also achieves the lowest ASD across all subsets, with values of 3.88, 2.77, and 4.12 pixels on JS, HB, and XJ, respectively. The ASD values indicate that the predicted parcel boundaries are only a few pixels away from the ground truth contours on average. They further confirm that IDBT achieves precise geometric alignment and smooth boundary transitions.

Table 1.

Comparison of experimental results on the FHAPD dataset.

Regarding model efficiency, we compare the computational complexity using FLOPs. IDBT achieves the best performance at a moderate computational cost of 40.3 GFLOPs, which lies in the middle of the competing methods and is lower than that of the second-best-performing model, HBGNet (57.8 GFLOPs). We further assess practical deployment efficiency by reporting single-image inference latency and peak inference memory under a batch size of 1 (evaluated on FHAPD-JS). The evaluation results for competing models are provided in Appendix A, Table A1. IDBT demonstrates favorable runtime efficiency, achieving relatively low inference overhead while delivering the best segmentation accuracy.

These results demonstrate that IDBT is highly effective in accurately delineating agricultural parcels and preserving boundary details across diverse agricultural landscapes. Moreover, IDBT is also computationally efficient.

3.2.2. AI4Boundaries

The experimental results on the entire AI4Boundaries dataset are presented in Table 2. Since the network architecture and input dimensions remain consistent across datasets, the FLOP values for all models are identical to those reported in Table 1. For completeness, FLOPs for all models are included in Table 2 to indicate model efficiency. The proposed IDBT model achieves the highest scores across all evaluation metrics, including IoU (83.7%), F1 (91.1%), Acc (87.4%), and boundary F1 (51.3%). Compared to other strong baselines such as SEANet and HBGNet, which both attain a high F1 score of 90.5–90.6% and accuracy of 86.7%, IDBT still leads with a +0.5–0.6% improvement in F1 and +0.7% in accuracy. Notably, in terms of boundary delineation, IDBT outperforms all methods, surpassing SEANet and HBGNet by 1.0 percentage points in boundary F1. IDBT also yields the lowest ASD of 7.43 pixels, indicating that its predicted parcel boundaries are spatially closer and more geometrically aligned with the ground truth compared to competing methods. The results further confirm the generalization capability of IDBT when applied to large, homogeneous fields typical of the AI4Boundaries dataset. To verify that the filtering of non-agricultural patches does not bias the evaluation, we have conducted additional experiments using the unfiltered dataset. The results show that the filtering step affects the IoU by less than 0.5% and does not change the relative performance ranking among models.

Table 2.

Comparison of experimental results on the AI4Boundaries dataset.

In addition, although AI4Boundaries is primarily designed for semantic parcel segmentation, many of its regions contain relatively large and topologically complete agricultural fields compared with the small and densely tessellated parcels in FHAPD. This characteristic enables a scoped evaluation of instance-level delineation performance on a subset where parcel individuality is visually unambiguous. To provide complementary validation from this perspective, we construct a mini test set of 178 AI4Boundaries images that exhibit clear and non-intersecting field boundaries. On this mini set, we compute instance Precision and instance F1 scores using an IoU ≥ 0.5 matching criterion. The results are reported in Appendix A, Table A2. IDBT achieves competitive instance Precision (ranking 3rd among tested models) while maintaining the highest instance F1 score. The results show IDBT's consistency in parcel completeness and topology preservation under this evaluation setting. It should be noted that the official AI4Boundaries dataset only provides semantic parcel masks, and instance masks are derived from them through connected-component extraction. Therefore, while this assessment offers helpful complementary insights, its conclusions should be interpreted with appropriate caution given the inherent characteristics of agricultural parcel tessellation.
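The instance-level protocol described above (connected-component extraction followed by IoU ≥ 0.5 matching) can be sketched as follows. The 4-connectivity, the greedy one-to-one matching, and the function names are assumptions made for this sketch; the exact matching procedure used for Table A2 is not specified in the text.

```python
from collections import deque
import numpy as np

def connected_components(mask):
    """Label 4-connected foreground regions; returns (labels, count)."""
    mask = mask.astype(bool)
    h, w = mask.shape
    labels = np.zeros((h, w), dtype=np.int32)
    count = 0
    for r in range(h):
        for c in range(w):
            if mask[r, c] and labels[r, c] == 0:
                count += 1
                labels[r, c] = count
                queue = deque([(r, c)])
                while queue:                       # breadth-first flood fill
                    y, x = queue.popleft()
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and labels[ny, nx] == 0:
                            labels[ny, nx] = count
                            queue.append((ny, nx))
    return labels, count

def instance_scores(pred_mask, gt_mask, iou_thr=0.5):
    """Instance precision, recall, and F1 with greedy IoU >= iou_thr matching."""
    pred_lab, n_pred = connected_components(pred_mask)
    gt_lab, n_gt = connected_components(gt_mask)
    matched_gt, tp = set(), 0
    for i in range(1, n_pred + 1):
        p = pred_lab == i
        for j in np.unique(gt_lab[p]):             # only overlapping GT parcels
            j = int(j)
            if j == 0 or j in matched_gt:
                continue
            g = gt_lab == j
            if np.sum(p & g) / np.sum(p | g) >= iou_thr:
                matched_gt.add(j)
                tp += 1
                break
    precision = tp / n_pred if n_pred else 0.0
    recall = tp / n_gt if n_gt else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Usage: two reference parcels; one predicted exactly, one reduced to a fragment.
gt = np.ones((4, 7), dtype=np.uint8)
gt[:, 3] = 0                                        # boundary column between parcels
pred = np.zeros((4, 7), dtype=np.uint8)
pred[:, 0:3] = 1
pred[0, 6] = 1
print(instance_scores(pred, gt))
```

Because instances come from connected components, a single missed boundary pixel can merge two parcels into one, which is one reason the text advises interpreting these scores with caution.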

3.3. Qualitative Visualizations

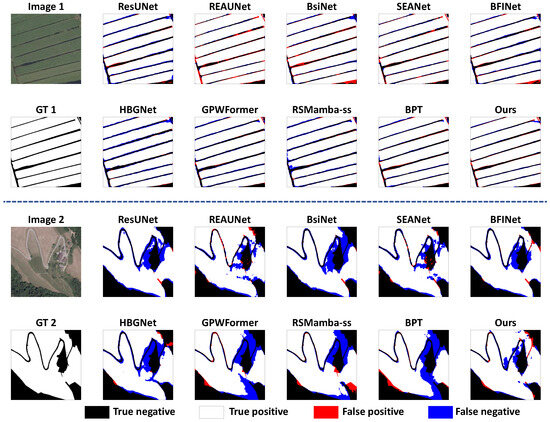

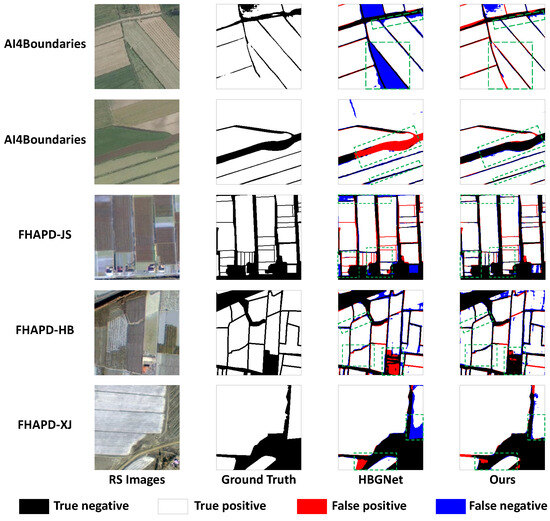

To provide a more intuitive understanding of model behavior across diverse agricultural scenarios, we present qualitative visualizations in Figure 5, Figure 6 and Figure 7.

Figure 6.

Visual comparison of parcel delineation performance across all competing models on the AI4Boundaries dataset. The error maps illustrate true negatives (black part), true positives (white part), false positives (red part), and false negatives (blue part) in predicted parcel masks.

Figure 7.

Visual comparison of two top-performing models, HBGNet and IDBT. From left to right: RS images, ground truth, error maps of HBGNet, and error maps of IDBT. The error maps illustrate true negatives (black part), true positives (white part), false positives (red part), and false negatives (blue part) in predicted parcel masks. Examples are shown from the AI4Boundaries dataset (top two rows) and the three FHAPD subsets (bottom rows). The green squares indicate regions with visually significant differences between models.

Figure 5 showcases representative samples from the AI4Boundaries dataset and the three FHAPD subsets (JS, HB, and XJ). For each example, we display the input image, ground-truth mask label, the parcel delineation result predicted by our IDBT model, and the corresponding extracted boundary map. This visualization highlights the varied farming characteristics across datasets. Despite these variations, IDBT consistently generates complete and accurate parcel masks, maintaining boundary integrity even in the presence of noisy backgrounds, irregular field shapes, or densely clustered plots. The extracted boundaries are continuous and well-aligned with the ground truth. These segmentation results demonstrate strong geometric precision and topological coherence.

Figure 6 provides a broader comparison across all competing models. We selectively show two samples from two distinct geographic regions within AI4Boundaries. Here we visualize error maps relative to the ground truth for all models, where true negatives appear in black, true positives in white, false positives in red, and false negatives in blue. These error maps facilitate a detailed visual assessment of both interior classification accuracy and boundary delineation performance. The comparison reveals that IDBT produces fewer boundary-related misclassifications and maintains higher foreground completeness than other methods. Notably, the reductions in red/blue pixels along narrow ridges and field edges indicate better localization and topological consistency of IDBT.
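The colour coding of the error maps can be reproduced with a few lines of array logic. The sketch below assumes binary prediction and ground-truth masks and uses the colour scheme stated in the figure captions; the function name `error_map` is illustrative and does not refer to the authors' plotting code.

```python
import numpy as np

# Colour scheme from the figure captions (RGB, 8-bit).
COLORS = {
    "TP": (255, 255, 255),  # white
    "FP": (255, 0, 0),      # red
    "FN": (0, 0, 255),      # blue
}


def error_map(pred, gt):
    """Return an H x W x 3 uint8 image encoding TN/TP/FP/FN per pixel."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    rgb = np.zeros(pred.shape + (3,), dtype=np.uint8)  # true negatives stay black
    rgb[pred & gt] = COLORS["TP"]
    rgb[pred & ~gt] = COLORS["FP"]
    rgb[~pred & gt] = COLORS["FN"]
    return rgb


# Hypothetical 2x2 example covering all four outcomes.
em = error_map([[1, 1], [0, 0]], [[1, 0], [1, 0]])
```

The resulting array can be passed directly to an image viewer or saved with any imaging library.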

To further highlight the difference, Figure 7 presents side-by-side visualizations of IDBT and the second-best-performing model (HBGNet). The figure includes two examples from AI4Boundaries and one example from each FHAPD subset, together with the error maps of the two compared methods. As seen in Figure 7, IDBT produces more complete and precise parcel delineations. Most regions in the IDBT outputs exhibit fewer errors and clearer boundaries. Moreover, we use green squares to highlight regions with visually significant improvements, where IDBT demonstrates sharper parcel boundaries and reduced structural distortions, particularly in irregular and densely interacting field regions. These visual advantages align with the quantitative improvements reported earlier.

3.4. Transfer Testing

Following [24], we first conduct additional cross-subset transfer experiments within the FHAPD dataset. In these experiments, each model is trained on the training set of one regional subset (source domain) and directly tested on the test set of another (target domain) without any fine-tuning. This setup allows us to assess the transferability of our model across regions with diverse agricultural characteristics. The same training configuration as in the main FHAPD experiments is adopted. Three representative transfer configurations are designed: JS→XJ, HB→JS, and XJ→HB. For example, JS→XJ denotes that models are trained on the FHAPD-JS subset and evaluated on the FHAPD-XJ subset. These scenarios assess the ability of each model to transfer beyond the spatial and phenological patterns of its training domain. Table 3 summarizes the results. The IDBT model achieves the best performance across all transfer scenarios in terms of both IoU and F1 score. Specifically, IDBT attains 66.5% IoU and 79.9% F1 in JS→XJ, outperforming the second-best model (BPT) by a large margin of +6.4% IoU and +4.2% F1.

Table 3.

Results of transfer testing within FHAPD subsets and cross-dataset transfer JS→AI4B.

To further examine cross-domain generalization ability beyond dataset-internal shifts, we additionally evaluate a more challenging setting: training on FHAPD-JS and testing on the AI4Boundaries dataset (JS→AI4B), which introduces substantial domain discrepancies in parcel scale, topography, and geographic context. The results are also provided in Table 3. Although IDBT continues to outperform other methods in both IoU and F1 under this configuration, the performance of all deep models decreases significantly (IoU values for some baselines drop below 40%), indicating that a direct zero-shot transfer across such heterogeneous datasets remains difficult.

This performance gap reflects widely recognized challenges in agricultural remote sensing: strong geographic domain shifts, sensor differences, and landscape-pattern discrepancies can severely affect cross-dataset transferability [47,48,49]. Taken together, the results demonstrate that IDBT transfers robustly across subsets within FHAPD where data sources and cultivation patterns are more consistent, while much larger shifts such as FHAPD→AI4B remain challenging for all deep models. These findings suggest that IDBT already provides strong transferability in realistic operational scenarios where imagery is collected under similar sensing conditions, and they also highlight an important direction for future research toward more domain-adaptive agricultural parcel delineation.

3.5. Ablation Study

We conduct the ablation study in two scenarios to evaluate the contribution of each major component of IDBT: BITM&BIFM, FAM, MFFM, and the Dual-PointRend strategy.

The first scenario is in-domain testing on the FHAPD-JS subset, whose results are reported in Table 4. Starting from the baseline (Exp ), the introduction of BITM&BIFM alone brings a clear improvement in both IoU and F1 ( and , Exp ). Adding FAM (Exp ) or MFFM (Exp ) further enhances the performance. When FAM and MFFM are used together, the model achieves large gains compared to the baseline (Exp vs. ) and compared to BITM&BIFM alone (Exp vs. ). Combining BITM&BIFM, FAM, and MFFM (Exp ) yields a significant overall improvement of in IoU and in F1 over the baseline. The application of Dual-PointRend strategy does not lead to a notable improvement in this setting ( in IoU and in F1, Exp ).

Table 4.

Ablation experiments on FHAPD-JS subset.

The second scenario evaluates the transferability of each component in the JS-XJ cross-subset transfer test. The results are shown in Table 5. Similar to the first scenario, introducing any single module or the combination of two modules leads to a clear improvement over the baseline. Notably, the performance gains are even more pronounced in this transfer test. For example, using FAM and MFFM together yields a improvement in IoU over the baseline. In addition, comparing the Exp with , the Dual-PointRend strategy brings a more significant improvement, suggesting that its point-level refinement plays a larger role when the model is tested on unseen regions.

Table 5.

Ablation experiments on the JS→XJ transfer test.

3.6. Discussion

Overall, the experimental results across both the FHAPD and AI4Boundaries datasets demonstrate that IDBT consistently outperforms state-of-the-art baselines in terms of segmentation accuracy and boundary delineation quality. The strong performance holds across diverse agricultural landscapes, indicating the model’s robustness under varying geographic and topographic conditions.

From the transfer testing experiments, it is evident that IDBT generalizes well to unseen regional distributions. Even without fine-tuning, it maintains a clear lead in all cross-subset evaluations within FHAPD. This result highlights the strong transferability of IDBT within domains where sensing modality and field morphology remain comparable, which is an essential property for real-world deployment where labeled data may be limited or unavailable for certain regions.

The qualitative visualizations provide strong intuitive support for the quantitative results. The visualization results show how IDBT effectively handles diverse agricultural patterns. In addition, the error maps clearly illustrate IDBT’s superior ability in achieving both mask completeness and boundary precision compared to the competing models.

Moreover, the ablation study clarifies the contribution of each component. BITM&BIFM establish a strong foundation by explicitly encoding edge cues early in the network. The FAM and MFFM modules further enhance representation capacity by integrating multi-level features with spatial and semantic focus. Regarding the Dual-PointRend supervision, its improvement in the in-domain setting appears marginal because the base model already achieves high accuracy (F1 above 90%). Given that Dual-PointRend selectively refines a limited number of points, most of which are already correctly predicted in such cases, the refinement gain becomes numerically small. However, under domain-shift scenarios where the base segmentation is less accurate, the refinement-oriented learning of Dual-PointRend strengthens the model’s boundary sensitivity and generalization ability, leading to more evident performance gains in transfer tests.
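The argument about the limited in-domain gain can be made concrete with a small sketch of uncertainty-based point selection. It assumes that Dual-PointRend inherits the inference-time rule of PointRend [34], which refines the points whose foreground probability lies closest to 0.5; whether IDBT uses exactly this rule is an assumption, and the function name `most_uncertain_points` and the toy probability map are hypothetical.

```python
import numpy as np


def most_uncertain_points(prob: np.ndarray, k: int) -> np.ndarray:
    """Return (row, col) indices of the k pixels with probability closest to 0.5."""
    distance = np.abs(prob - 0.5).ravel()
    flat = np.argsort(distance)[:k]  # smallest distance = most uncertain
    rows, cols = np.unravel_index(flat, prob.shape)
    return np.stack([rows, cols], axis=1)


# Hypothetical coarse foreground probabilities and ground truth.
prob = np.array([[0.95, 0.90, 0.55],
                 [0.92, 0.48, 0.10],
                 [0.60, 0.05, 0.02]])
gt = np.array([[1, 1, 1],
               [1, 0, 0],
               [1, 0, 0]], dtype=bool)

points = most_uncertain_points(prob, k=3)
coarse = prob >= 0.5  # coarse prediction before any refinement
rows, cols = points[:, 0], points[:, 1]
already_correct = int(np.sum(coarse[rows, cols] == gt[rows, cols]))
```

In this toy example all three selected points are already classified correctly by the coarse prediction, so refining them cannot change the score. This mirrors the in-domain case described above; under domain shift, more of the selected points would be misclassified and the refinement has more room to help.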

Finally, it is worth noting that IDBT maintains strong accuracy while being relatively lightweight. As shown in the FLOPs comparison, our model achieves better or comparable results with lower computational cost than many complex baselines.

Although the current design emphasizes efficiency, future work may explore lightweight distillation or dynamic inference strategies to further reduce computational cost for edge deployment scenarios. In addition, the cross-dataset transfer observations (FHAPD-JS→AI4Boundaries) motivate further exploration of domain-adaptive and multi-sensor parcel delineation frameworks to enhance robustness across heterogeneous data sources. Moreover, the proposed framework demonstrates strong potential for broader geospatial applications. Its boundary-faithful and instance-consistent delineation capability provides a solid foundation for tasks that require legally traceable and high-confidence parcel geometries. These characteristics suggest that IDBT could be further extended toward compliance-oriented mapping and other practical geospatial analysis scenarios.

4. Conclusions

In this work, we presented IDBT, a dual-branch Transformer-based framework for precise agricultural parcel delineation from very-high-resolution remote sensing imagery. By introducing the Boundary-Information Tokenization and Feedback Modules, the Fusion Attention Module, the Multi-level Feature Fusion Module, and a Dual-PointRend strategy, IDBT effectively integrates semantic and boundary information to produce more complete parcel masks and more precisely delineated boundaries. Extensive experiments on the FHAPD and AI4Boundaries datasets demonstrate that IDBT consistently achieves state-of-the-art performance and exhibits strong transferability. These results highlight the potential of IDBT as a practical and reliable solution for large-scale agricultural parcel mapping.

Author Contributions

Conceptualization, Y.W. and R.W.; methodology, Y.W.; software, Y.W., Z.P. and J.J.; validation, Y.W. and H.S.; formal analysis, Y.W.; investigation, R.W.; resources, Z.P. and R.W.; data curation, Y.W. and J.J.; writing—original draft preparation, Y.W.; writing—review and editing, Y.W. and H.S.; visualization, Y.W., Z.P. and J.J.; supervision, R.W.; project administration, R.W.; funding acquisition, R.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Key Research and Development Program of China (No. 2023YFD1702105).

Data Availability Statement

The code and data will be made publicly available at https://github.com/yaw6622/IDBT (accessed on 5 November 2025).

Acknowledgments

We thank the Agricultural Sensors and Intelligent Perception Technology Innovation Center of Anhui Province, Zhongke Hefei Institutes of Collaborative Research and Innovation for Intelligent Agriculture for providing hardware support for some of the experiments in the research process.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1.

Inference latency and peak inference memory comparison among competing models (batch size = 1). Latency is reported as milliseconds per image (ms/img), and memory refers to maximum GPU allocation during inference.

| Method | ResUNet | REAUNET | BsiNet | SEANet | BFINet | HBGNet | GPWFormer | RSMamba-ss | BPT | IDBT (ours) |
|---|---|---|---|---|---|---|---|---|---|---|
| Inference Latency (ms/img) | 29.17 | 36.66 | 15.75 | 32.29 | 16.32 | 22.48 | 23.18 | 20.55 | 25.60 | 19.87 |
| Peak Memory (GB) | 1.56 | 2.18 | 0.60 | 1.97 | 0.66 | 1.32 | 1.37 | 0.86 | 1.58 | 1.31 |

Table A2.

Instance-level evaluation results on a mini test set of the AI4Boundaries dataset (IoU ≥ 0.5 matching). We report Instance Precision and Instance F1 in %.

| Method | ResUNet | REAUNET | BsiNet | SEANet | BFINet | HBGNet | GPWFormer | RSMamba-ss | BPT | IDBT (ours) |
|---|---|---|---|---|---|---|---|---|---|---|
| Instance Precision (%) | 48.6 | 50.3 | 51.8 | 57.1 | 53.5 | 56.6 | 49.7 | 53.2 | 51.0 | 54.8 |
| Instance F1 (%) | 45.8 | 48.7 | 59.9 | 65.7 | 57.9 | 65.1 | 52.2 | 51.4 | 55.1 | 67.7 |

References

1. Graesser, J.; Ramankutty, N. Detection of cropland field parcels from Landsat imagery. Remote Sens. Environ. 2017, 201, 165–180.

2. Sishodia, R.P.; Ray, R.L.; Singh, S.K. Applications of remote sensing in precision agriculture: A review. Remote Sens. 2020, 12, 3136.

3. Thangi, D.S. Artificial Intelligence Models for Remote Sensing Applications. In Artificial Intelligence Techniques for Sustainable Development; CRC Press: Boca Raton, FL, USA, 2024; pp. 201–217.

4. Kussul, N.; Lavreniuk, M.; Skakun, S.; Shelestov, A. Deep learning classification of land cover and crop types using remote sensing data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 778–782.

5. Pelletier, C.; Webb, G.I.; Petitjean, F. Temporal convolutional neural network for the classification of satellite image time series. Remote Sens. 2019, 11, 523.

6. Kovačević, V.; Pejak, B.; Marko, O. Enhancing machine learning crop classification models through sam-based field delineation based on satellite imagery. In Proceedings of the 2024 12th International Conference on Agro-Geoinformatics (Agro-Geoinformatics), Novi Sad, Serbia, 15–18 July 2024; pp. 1–4.

7. Zhu, Y.; Pan, Y.; Zhang, D.; Wu, H.; Zhao, C. A deep learning method for cultivated land parcels (CLPs) delineation from high-resolution remote sensing images with high-generalization capability. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4410525.

8. Zhang, X.; Huang, J.; Ning, T. Progress and prospect of cultivated land extraction from high-resolution remote sensing images. Geomat. Inf. Sci. Wuhan Univ. 2023, 48, 1582–1590.

9. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

10. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

11. Marmanis, D.; Schindler, K.; Wegner, J.D.; Galliani, S.; Datcu, M.; Stilla, U. Classification with an edge: Improving semantic image segmentation with boundary detection. ISPRS J. Photogramm. Remote Sens. 2018, 135, 158–172.

12. Zhen, M.; Wang, J.; Zhou, L.; Li, S.; Shen, T.; Shang, J.; Fang, T.; Quan, L. Joint semantic segmentation and boundary detection using iterative pyramid contexts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 13666–13675.

13. Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. Bisenet: Bilateral segmentation network for real-time semantic segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 325–341.

14. Liu, Y.; Shi, H.; Shen, H.; Si, Y.; Wang, X.; Mei, T. A new dataset and boundary-attention semantic segmentation for face parsing. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 11637–11644.

15. Lazarow, J.; Xu, W.; Tu, Z. Instance segmentation with mask-supervised polygonal boundary transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4382–4391.

16. Jin, B.; Zhang, Y.; Meng, Y.; Han, J. Edgeformers: Graph-empowered transformers for representation learning on textual-edge networks. arXiv 2023, arXiv:2302.11050.

17. Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 4015–4026.

18. Zhang, D.; Pan, Y.; Zhang, J.; Hu, T.; Zhao, J.; Li, N.; Chen, Q. A generalized approach based on convolutional neural networks for large area cropland mapping at very high resolution. Remote Sens. Environ. 2020, 247, 111912.

19. Du, Z.; Yang, J.; Ou, C.; Zhang, T. Smallholder crop area mapped with a semantic segmentation deep learning method. Remote Sens. 2019, 11, 888.

20. Luo, W.; Zhang, C.; Li, Y.; Yan, Y. Mlgnet: Multi-task learning network with attention-guided mechanism for segmenting agricultural fields. Remote Sens. 2023, 15, 3934.

21. Bergamasco, L.; Bovolo, F.; Bruzzone, L. A dual-branch deep learning architecture for multisensor and multitemporal remote sensing semantic segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 2147–2162.

22. Ma, H.R.; Shen, X.C.; Luo, Z.Q.; Chen, P.T.; Guan, B.; Zheng, M.X.; Yu, W.S. Extracting Agricultural Parcel Boundaries From High Spatial Resolution Remote Sensing Images Based on a Multi-Task Deep Learning Network with Boundary Enhancement Mechanism. IEEE Access 2024, 12, 112038–112052.

23. Long, J.; Li, M.; Wang, X.; Stein, A. Delineation of agricultural fields using multi-task BsiNet from high-resolution satellite images. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102871.

24. Zhao, H.; Wu, B.; Zhang, M.; Long, J.; Tian, F.; Xie, Y.; Zeng, H.; Zheng, Z.; Ma, Z.; Wang, M.; et al. A large-scale VHR parcel dataset and a novel hierarchical semantic boundary-guided network for agricultural parcel delineation. ISPRS J. Photogramm. Remote Sens. 2025, 221, 1–19.

25. Zhao, H.; Long, J.; Zhang, M.; Wu, B.; Xu, C.; Tian, F.; Ma, Z. Irregular agricultural field delineation using a dual-branch architecture from high-resolution remote sensing images. IEEE Geosci. Remote Sens. Lett. 2024, 21, 6015105.

26. Cai, Z.; Hu, Q.; Zhang, X.; Yang, J.; Wei, H.; Wang, J.; Zeng, Y.; Yin, G.; Li, W.; You, L.; et al. Improving agricultural field parcel delineation with a dual branch spatiotemporal fusion network by integrating multimodal satellite data. ISPRS J. Photogramm. Remote Sens. 2023, 205, 34–49.

27. Huo, Y.; Gang, S.; Guan, C. FCIHMRT: Feature cross-layer interaction hybrid method based on Res2Net and transformer for remote sensing scene classification. Electronics 2023, 12, 4362.

28. Osco, L.P.; Wu, Q.; De Lemos, E.L.; Gonçalves, W.N.; Ramos, A.P.M.; Li, J.; Junior, J.M. The segment anything model (sam) for remote sensing applications: From zero to one shot. Int. J. Appl. Earth Obs. Geoinf. 2023, 124, 103540.

29. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

30. Diakogiannis, F.I.; Waldner, F.; Caccetta, P.; Wu, C. ResUNet-a: A deep learning framework for semantic segmentation of remotely sensed data. ISPRS J. Photogramm. Remote Sens. 2020, 162, 94–114.

31. Jia, X.; De Brabandere, B.; Tuytelaars, T.; Gool, L.V. Dynamic filter networks. In Proceedings of the Advances in Neural Information Processing Systems Conference 29, Barcelona, Spain, 5–10 December 2016.

32. Yang, B.; Bender, G.; Le, Q.V.; Ngiam, J. Condconv: Conditionally parameterized convolutions for efficient inference. In Proceedings of the Advances in Neural Information Processing Systems Conference 32, NeurIPS 2019, Vancouver, BC, Canada, 8–14 December 2019.

33. Ouyang, D.; He, S.; Zhang, G.; Luo, M.; Guo, H.; Zhan, J.; Huang, Z. Efficient multi-scale attention module with cross-spatial learning. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

34. Kirillov, A.; Wu, Y.; He, K.; Girshick, R. Pointrend: Image segmentation as rendering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9799–9808.

35. Murugesan, B.; Sarveswaran, K.; Shankaranarayana, S.M.; Ram, K.; Joseph, J.; Sivaprakasam, M. Psi-Net: Shape and boundary aware joint multi-task deep network for medical image segmentation. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 7223–7226.

36. d’Andrimont, R.; Claverie, M.; Kempeneers, P.; Muraro, D.; Yordanov, M.; Peressutti, D.; Batič, M.; Waldner, F. AI4Boundaries: An open AI-ready dataset to map field boundaries with Sentinel-2 and aerial photography. Earth Syst. Sci. Data 2023, 15, 317–329.

37. Zhang, Z.; Liu, Q.; Wang, Y. Road extraction by deep residual u-net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753.

38. Lu, R.; Zhang, Y.; Huang, Q.; Zeng, P.; Shi, Z.; Ye, S. A refined edge-aware convolutional neural networks for agricultural parcel delineation. Int. J. Appl. Earth Obs. Geoinf. 2024, 133, 104084.

39. Li, M.; Long, J.; Stein, A.; Wang, X. Using a semantic edge-aware multi-task neural network to delineate agricultural parcels from remote sensing images. ISPRS J. Photogramm. Remote Sens. 2023, 200, 24–40.

40. Ji, D.; Zhao, F.; Lu, H. Guided patch-grouping wavelet transformer with spatial congruence for ultra-high resolution segmentation. arXiv 2023, arXiv:2307.00711.

41. Chen, K.; Chen, B.; Liu, C.; Li, W.; Zou, Z.; Shi, Z. Rsmamba: Remote sensing image classification with state space model. IEEE Geosci. Remote Sens. Lett. 2024, 21, 1–5.

42. Sun, H.; Zhang, Y.; Xu, L.; Jin, S.; Chen, Y. Ultra-high resolution segmentation via boundary-enhanced patch-merging transformer. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 20 February–4 March 2025; pp. 7087–7095.

43. Ji, D.; Zhao, F.; Lu, H.; Tao, M.; Ye, J. Ultra-high resolution segmentation with ultra-rich context: A novel benchmark. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 23621–23630.

44. Marshall, M.; Crommelinck, S.; Kohli, D.; Perger, C.; Yang, M.Y.; Ghosh, A.; Fritz, S.; Bie, K.d.; Nelson, A. Crowd-driven and automated mapping of field boundaries in highly fragmented agricultural landscapes of Ethiopia with very high spatial resolution imagery. Remote Sens. 2019, 11, 2082.

45. Persello, C.; Tolpekin, V.A.; Bergado, J.R.; De By, R.A. Delineation of agricultural fields in smallholder farms from satellite images using fully convolutional networks and combinatorial grouping. Remote Sens. Environ. 2019, 231, 111253.

46. Xu, L.; Ming, D.; Du, T.; Chen, Y.; Dong, D.; Zhou, C. Delineation of cultivated land parcels based on deep convolutional networks and geographical thematic scene division of remotely sensed images. Comput. Electron. Agric. 2022, 192, 106611.

47. Li, J.; Huang, X.; Gong, J. Deep neural network for remote-sensing image interpretation: Status and perspectives. Natl. Sci. Rev. 2019, 6, 1082–1086.

48. Sun, E.; Cui, Y.; Liu, P.; Yan, J. A decade of deep learning for remote sensing spatiotemporal fusion: Advances, challenges, and opportunities. arXiv 2025, arXiv:2504.00901.

49. Halstead, M.; Zimmer, P.; McCool, C. A cross-domain challenge with panoptic segmentation in agriculture. Int. J. Robot. Res. 2024, 43, 1151–1174.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).