1. Introduction

The complex demands of terrestrial monitoring applications frequently exceed the capabilities of single-sensor systems, driving the need for multisensor integration to achieve comprehensive Earth observation [1]. Multimodal remote sensing image registration, a prerequisite for effectively utilizing complementary information from these diverse sources [2], aligns images of the same geographic area acquired by different sensors. Registering optical and SAR images, however, is particularly challenging. Their fundamentally different imaging mechanisms lead to significant nonlinear radiometric differences (NRD) and complex geometric distortions, resulting in difficulties for feature extraction and representation during cross-modal image registration.

Traditional image registration methods can be categorized into two types: area-based methods and feature-based methods [3]. The core of area-based methods lies in optimizing a similarity metric to estimate geometric transformation parameters, effectively driving the alignment process through a template matching strategy [4]. Feature-based matching methods typically comprise three key steps: feature detection, feature description, and feature matching. Here, feature detection is crucial in robustly extracting distinctive and repeatable keypoints. A core limitation of these feature-based methods lies in their feature point detection stage, where they heavily rely on image intensity or gradient information. Due to the significant nonlinear radiometric distortions between optical images and SAR images, the intensity distribution patterns and gradient structures of identical objects exhibit drastic changes, or even inversions, between these two modalities. This radiometric discrepancy can be abstracted as an unknown, complex nonlinear function $\mathcal{F}$ mapping the intensity values between modalities:

$$I_{S}(\mathbf{p}_s) = \mathcal{F}\big(I_{O}(\mathbf{p}_o),\, n\big), \qquad \mathbf{p}_s = T(\mathbf{p}_o),$$

where $I_{O}$ and $I_{S}$ are the optical and SAR image intensities, $\mathbf{p}_o$ and $\mathbf{p}_s$ are corresponding points related by a geometric transformation $T$, and $n$ represents SAR speckle noise. This discrepancy severely undermines the repeatability and localization accuracy of feature points extracted by traditional methods, leading to missed and false detections.

In recent years, deep learning-based remote sensing image registration methods have demonstrated considerable potential in multimodal remote sensing image matching. These methods employ neural networks to extract image feature points, generate feature descriptors, or compute image transformation matrices. Because remote sensing images are large, keypoint-based registration methods are markedly more computationally efficient than dense registration approaches. However, current deep learning-based feature point detection methods face significant challenges. Crucially, the lack of large-scale, high-quality annotated datasets containing corresponding optical–SAR keypoints hinders supervised learning approaches. Furthermore, existing methods usually depend on image intensity extreme responses [5] or network regression without keypoint supervision [6, 7] for feature point extraction. Methods relying on image intensity extreme responses are prone to feature instability due to imaging differences. Methods based on network regression without keypoint supervision, on the other hand, lack intermediate supervision, which can lead to spatially inconsistent predictions and increased susceptibility to occlusion and ambiguous patterns. Both limitations adversely impact the performance of subsequent feature descriptor construction and matching.

To learn how to extract stable and repeatable keypoints across optical and SAR images, we build upon the concept of self-supervised keypoint generation [8] and propose a novel pseudo-label generation method. This concept was successfully applied to single-modal optical image registration, leveraging synthetic data to explicitly generate stable keypoints without manual annotation. This strategy shares high-level capabilities with other self-supervised methods that create training data through synthetic transformations, such as geometric matching networks [9] and deep image homography estimation [10] for estimating global transformations. However, a limitation of these methods is that they lack interest points and point correspondences, which are crucial for registration. Furthermore, in the realm of unsupervised image registration, another common approach is to directly predict a dense deformation field to warp the moving image towards the fixed image, optimizing similarity metrics without manual annotations. The pivotal distinction of our work lies in its objective: instead of estimating global transformations or dense deformation fields, our method is specifically designed to self-supervise the generation of keypoint location labels. However, directly applying this concept to optical–SAR cross-modal scenarios presents obstacles [11], as it is challenging to generate high-quality virtual synthetic image pairs that accurately reflect genuine cross-modal keypoint characteristics. In the absence of such highly representative virtual training data, it is difficult to train a keypoint detection network capable of co-annotating corresponding feature points across both modalities under reliable cross-modal supervision. To address this, this article proposes a pseudo-label generation method that can simultaneously generate reliable feature point labels for both optical and SAR image pairs.

In deep learning-based remote sensing image registration, early research primarily utilized Siamese networks to simultaneously detect and describe features from cross-modal image pairs [12, 13, 14]. However, extensive experiments reveal that Siamese networks underperform in heterogeneous image pairs like optical–SAR due to the inadequate modeling of intermodal distinctions [5]. This limitation motivated a shift toward pseudo-Siamese network architectures [5, 12, 15, 16], whose core advantage lies in independently learning modality-specific features. Nevertheless, effectively fusing this modality-specific information with modality-shared information for robust registration remains a critical challenge. The registration objective can be formulated as finding the optimal transformation parameters $\theta^{*}$ that align the two images:

$$\theta^{*} = \arg\min_{\theta}\, \mathcal{L}_{\mathrm{sim}}\big(f_{O}(I_{O}),\, f_{S}(T_{\theta}(I_{S}))\big),$$

where $f_{O}$ and $f_{S}$ are feature extractors for optical and SAR images, $T_{\theta}$ is the geometric transformation, and $\mathcal{L}_{\mathrm{sim}}$ is a feature similarity loss. To address this, we propose the pseudo-twin interaction network (PTIF) as a unified feature detection and description backbone.

Based on the analysis above, the main contributions of this paper are summarized as follows:

- (1) We propose an automated pseudo-labeling module (APLM) to address the lack of annotated corresponding keypoints. The APLM generates stable keypoint pseudo-labels simultaneously for optical–SAR pairs without manual annotation, enabling effective SAR keypoint extraction through transfer learning from synthetic optical data while avoiding the difficulty of generating high-quality optical–SAR synthetic pairs.

- (2) We design and implement the PTIF using a pseudo-twin architecture integrated with a cross-modal interactive attention (CIA) module. This module captures spatial correspondences and learns cross-modal shared features, thereby effectively fusing modality-specific and modality-shared information.

- (3) We introduce PLISA, an end-to-end registration method built upon the APLM and PTIF. Evaluations on the SEN1-2 dataset and OSdataset demonstrate PLISA’s superior accuracy and robustness against challenges including large perspective rotations, scale variations, and inherent SAR speckle noise.

The remainder of this article is structured as follows. Section 2 gives a brief overview of methods in the field of image registration. Section 3 explains the proposed method in detail. Section 4 discusses the experimental results under various conditions. Finally, Section 5 concludes this article.

3. Method

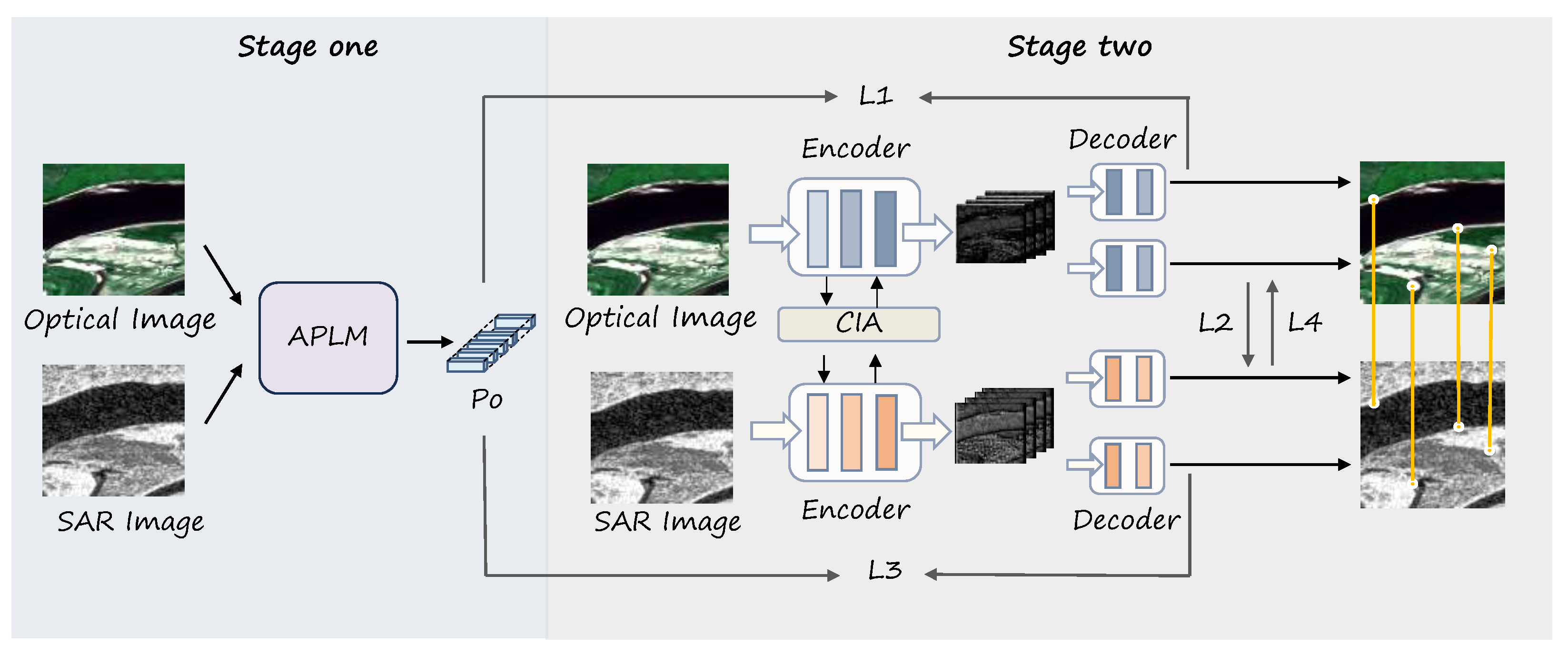

This article proposes a novel framework termed PLISA for optical and SAR image registration, as shown in Figure 1. PLISA comprises two sequential stages. In the first stage, the APLM generates pseudo-labels Po representing corresponding points in the optical and SAR image pair. In the second stage, these pseudo-labels Po are leveraged as supervision to train the PTIF registration network. The PTIF is optimized via a composite loss function including feature point localization losses for both the optical (L1) and SAR (L3) images and bidirectional cross-modal descriptor matching losses (L2: optical to transformed SAR; L4: SAR to transformed optical). This design enables the framework to optimize feature localization and robust cross-modal descriptor matching, thereby enhancing the registration accuracy.

3.1. Automated Pseudo-Labeling Module

The APLM is designed to provide stable and repeatable feature points for both optical and SAR images. We adopt the core concept of feature point annotation from SuperPoint [

8].

Nevertheless, Superpoint [

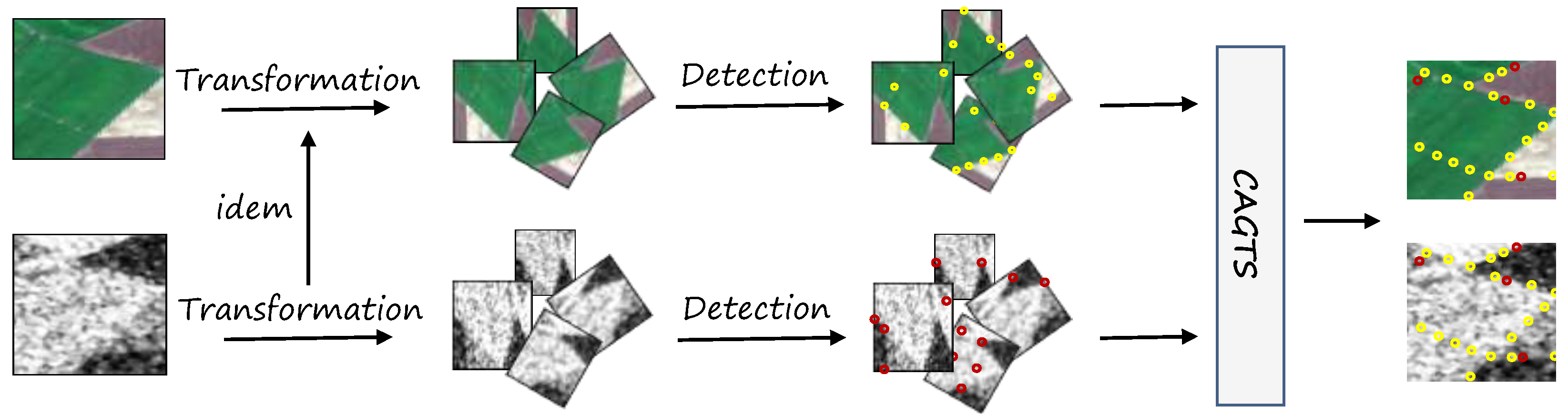

8] is primarily designed for the optical spectrum and cannot be directly applied to annotate feature points in SAR images. To overcome this limitation, we propose the APLM, which directly extends its underlying principles to cross-modal scenarios. Given the challenges in generating synthetic SAR datasets, we adhered to SuperPoint’s paradigm by training MagicPoint exclusively on synthetic optical data, subsequently deploying it for feature point detection in real-world optical and SAR imagery. Experimental results indicate that, while MagicPoint accurately labels feature points in optical images, it produces sparser detections in SAR images. This suggests that a method is required to increase the consistency of feature point detection across cross-modal image pairs. The implementation details for the application of MagicPoint to real optical and SAR images are shown in

Figure 2.

In response, we propose the Consistency-Aware Pseudo-Ground-Truth Selector (CAGTS) for the pseudo-ground-truth labeling of both optical and SAR images, using only optical synthetic data. This method is inspired by the homographic adaptation strategy [8]. The CAGTS introduces soft labels: SAR candidates are scored by their consistency with nearby optical points rather than accepted or rejected by distance alone. This scheme maintains the precise localization of feature points in optical images while effectively preserving feature points characterized by SAR-specific imaging properties.

We initially annotated feature points in optical and SAR images with MagicPoint. This process generates initial sets of candidate feature point locations, denoted as $\{p_i^{o}\}$ for the optical image and $\{p_j^{s}\}$ for the corresponding SAR image. The final pseudo-ground-truth points are selected from the complete initial set $\{p_i^{o}\}$ of optical images and the filtered points from the initial SAR image set $\{p_j^{s}\}$.

The filtering begins with a distance-based hard constraint. For each candidate SAR point $p_j^{s}$ in the initial set $\{p_j^{s}\}$, its nearest neighbor optical point $p_{\mathrm{nn}}^{o}$ within the set $\{p_i^{o}\}$ is identified. The Euclidean distance $d_j = \lVert p_j^{s} - p_{\mathrm{nn}}^{o} \rVert_2$ is calculated. A predefined distance threshold T is applied: if $d_j > T$, $p_j^{s}$ is discarded due to being too isolated from any optical point to be considered consistent; if $d_j \le T$, $p_j^{s}$ is retained as a provisionally consistent candidate, forming a subset $S_c$. Next, a soft confidence score $c_j$ is calculated for each provisionally consistent SAR point $p_j^{s}$ in $S_c$. A search radius T is defined, centered at $p_j^{s}$. All optical points $p_i^{o}$ falling within this radius T are identified, and N denotes the number of such points. The confidence score is computed as the average of the inverse distances to all these neighboring optical points within T:

$$c_j = \frac{1}{N} \sum_{i=1}^{N} \frac{1}{d(p_j^{s}, p_i^{o})},$$

where $p_i^{o}$ denotes the i-th keypoint in the optical image within the search radius, and $d(p_j^{s}, p_i^{o})$ represents the Euclidean distance between $p_j^{s}$ and $p_i^{o}$. N refers to the number of optical keypoints within the search radius. It is crucial to emphasize that this strategy must be applied to a pair of already co-registered optical and SAR images for annotation. A confidence threshold $\tau$ is then applied to $c_j$ for final selection. This threshold critically controls the strictness of SAR point selection, directly influencing the quantity and quality of the pseudo-ground truth. If $c_j > \tau$, $p_j^{s}$ is accepted as a final pseudo-ground-truth point for the SAR image; if $c_j \le \tau$, $p_j^{s}$ is rejected due to lacking sufficient consistent local evidence despite passing the initial distance filter. The optimal value of $\tau$ was determined through extensive empirical analysis (see Section 4.9 for details), balancing the inclusion of sufficient correspondence points against the exclusion of noisy outliers. Our experiments indicate that $\tau$ = 0.15 yields the best overall performance. Consequently, the final pseudo-ground-truth point sets are defined as follows: the optical image pseudo-ground-truth points remain the initial set $\{p_i^{o}\}$ generated by the homographic adaptation strategy [8]; the SAR image pseudo-ground-truth points comprise the subset of the initial $\{p_j^{s}\}$ points that survived both the hard distance filter and the soft confidence threshold.

The pseudo-ground-truth point set obtained through the above process not only reflects the correspondence between the two image types but also enhances the repeatability of the feature points. The CAGTS helps to filter out inaccurate or isolated feature points that result from structural differences between images from different sources, thereby providing high-quality feature point labels for subsequent image registration.
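The two-stage selection described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function name `cagts_select`, the small constant `eps` that guards against division by zero for coincident points, and the sample coordinates are all assumptions; only the hard distance filter, the averaged inverse-distance score, and the 0.15 confidence threshold come from the text.

```python
import math

def cagts_select(optical_pts, sar_pts, dist_thresh, conf_thresh=0.15, eps=1e-6):
    """Filter SAR candidates against optical candidates of a co-registered pair.

    Returns all optical points unchanged and the SAR points (with scores)
    that pass both the hard distance filter and the soft confidence test.
    `eps` is an assumption added to avoid dividing by a zero distance.
    """
    selected = []
    for s in sar_pts:
        dists = [math.dist(s, o) for o in optical_pts]
        # Hard constraint: drop SAR points with no optical point within T.
        if not dists or min(dists) > dist_thresh:
            continue
        # Soft score: mean inverse distance to optical points inside radius T.
        neighbours = [d for d in dists if d <= dist_thresh]
        score = sum(1.0 / (d + eps) for d in neighbours) / len(neighbours)
        if score > conf_thresh:
            selected.append((s, score))
    return list(optical_pts), selected

optical = [(10, 10), (50, 50)]
sar = [(12, 10), (30, 30), (50, 58)]
opt_labels, sar_labels = cagts_select(optical, sar, dist_thresh=10.0)
print(sar_labels)
```

In this toy case, (30, 30) fails the hard filter, (50, 58) passes it but scores 1/8 = 0.125 and is rejected by the confidence threshold, and only (12, 10) is kept.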

3.2. Pseudo-Twin Interaction Network

Siamese networks, sharing parameters, perform exceptionally well in feature extraction for homogeneous modalities. However, when applied to cross-modal feature extraction involving optical and SAR remote sensing images, the dual branches can interfere with each other. This not only compromises the quality of feature extraction but also yields suboptimal results. Therefore, in multisource image registration tasks, researchers tend to adopt pseudo-Siamese network architectures to extract features from images of different modalities.

Accordingly, we design a PTIF for the extraction of feature points and descriptors. The architecture of the PTIF mainly consists of two parallel branches, with each branch dedicated to processing input images from different modalities.

3.2.1. Encoder

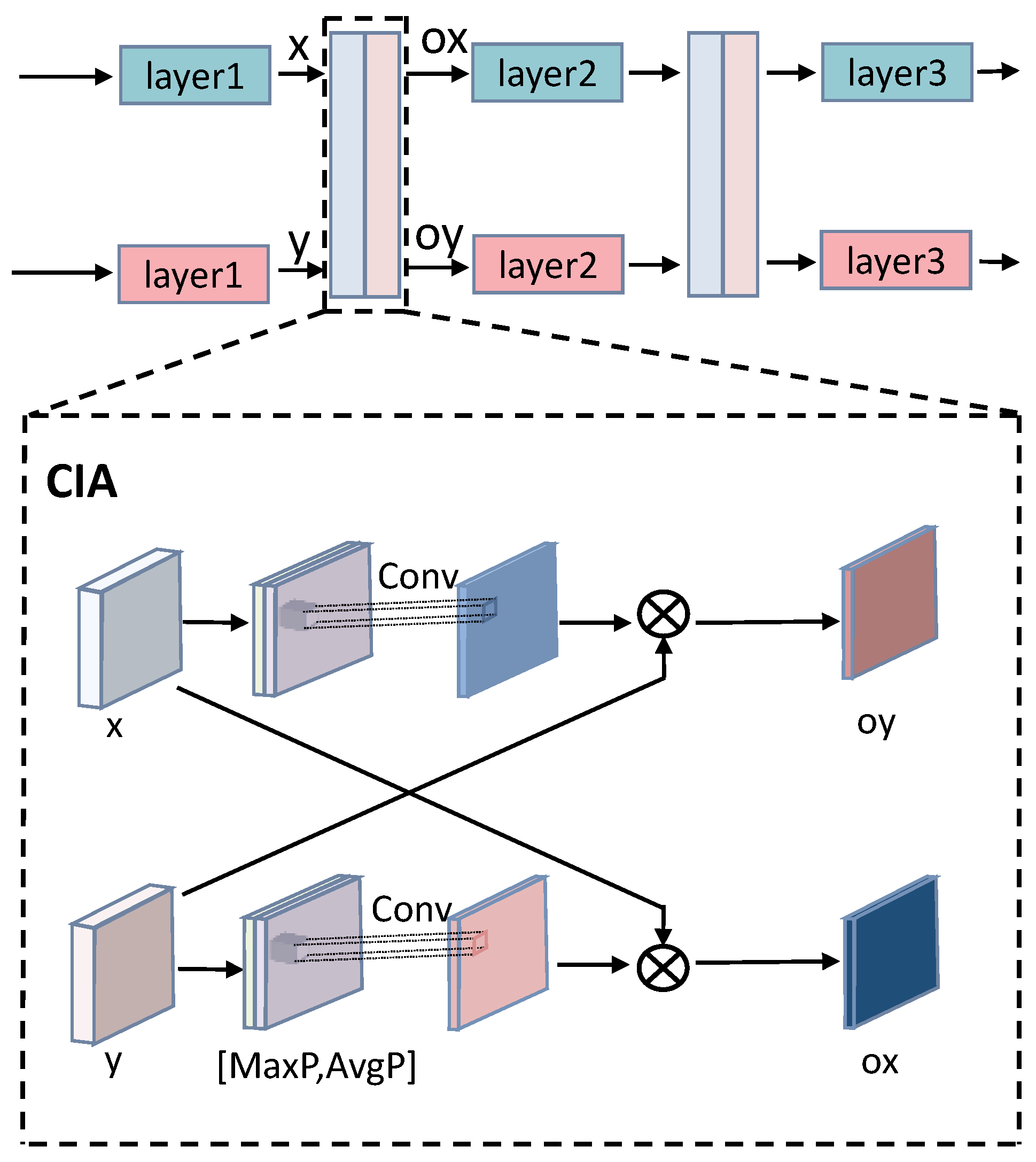

In the encoder section, the network utilizes a VGG-like backbone, which is configured with six 3 × 3 convolutional layers. After every two convolutional layers, a 2 × 2 max pooling layer is applied for spatial downsampling. Notably, within the first four convolutional layers, a CIA module linking the two branches is inserted after every two convolution operations.

3.2.2. Decoder

Within the decoder, there are three 3 × 3 convolutional layers followed by a 1 × 1 convolutional layer, outputting a 65-channel feature point tensor and a 256-channel descriptor tensor.

Regarding the feature point tensor, it represents the probability of each position being a feature point, where the 65 channels include 64 positions from an 8 × 8 pixel grid and one “garbage bin” category [8]. The “garbage bin” category is used to indicate the absence of significant feature points within this 8 × 8 pixel region. During training, the ground truth label $y$ for each $8 \times 8$ region is determined based on the presence of annotated feature points. When the region contains at least one feature point, $y$ is set to the grid position index corresponding to the feature point closest to the region center; when the region contains no feature points, $y$ is set to 65.
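The label assignment just described can be illustrated with a short sketch. It is hypothetical in its details: the function name `cell_labels`, the row-major ordering of the 64 positions, and the 0-based class indices (so the garbage bin is class 64 here, corresponding to label 65 in the 1-based numbering of the text) are assumptions made for the example.

```python
def cell_labels(keypoints, height, width, cell=8):
    """Build a grid of per-cell class labels from (row, col) keypoints.

    Classes 0..63 index positions inside a cell in row-major order (assumed);
    class 64 is the garbage bin used for cells without any keypoint.
    """
    hc, wc = height // cell, width // cell
    dustbin = cell * cell
    labels = [[dustbin] * wc for _ in range(hc)]
    best = {}                      # smallest squared distance to centre per cell
    centre = (cell - 1) / 2.0
    for r, c in keypoints:
        i, j = r // cell, c // cell
        if not (0 <= i < hc and 0 <= j < wc):
            continue               # ignore points outside the image grid
        dr, dc = r % cell, c % cell
        d2 = (dr - centre) ** 2 + (dc - centre) ** 2
        # Keep only the keypoint closest to the cell centre.
        if (i, j) not in best or d2 < best[(i, j)]:
            best[(i, j)] = d2
            labels[i][j] = dr * cell + dc
    return labels

print(cell_labels([(0, 0), (3, 4), (12, 9)], height=16, width=16))
```

For the 16 × 16 toy image, the top-left cell holds two keypoints and takes the label of (3, 4), which lies nearer its centre; the two empty cells receive the garbage-bin class.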

As for the descriptor tensor, it has 256 channels, and both its width and height are 1/8 of the original image size as a result of the three pooling stages. To reduce memory and processing time, this coarse tensor is bilinearly interpolated only at the locations of the feature points, yielding 256-dimensional descriptors at original-image coordinates.

3.2.3. Cross-Modal Interactive Attention

Relying solely on the pseudo-Siamese network structure can only ensure the independence of feature extraction across different modalities, without achieving effective interaction and knowledge sharing between the two modalities. Thus, we introduce the CIA module in the network to enhance the learning capabilities across different modalities. The CIA module is designed to highlight salient structural regions in images. Through cross-modal learning, it adjusts the weight and attention distributions across locations in one modality based on information from the other. This not only helps the model to better capture the spatial structure in the input data but also improves the quality of feature representation.

As shown in Figure 3, the CIA module performs max pooling and average pooling operations on the feature map of one modality to capture the global contextual information of this modality. Then, the results of these two pooling operations are concatenated and processed through a 1 × 1 convolutional layer to generate a set of spatial attention weights. These spatial attention weights are subsequently multiplied with the feature map of the other modality, emphasizing the salient parts that both modalities focus on while suppressing irrelevant or interfering regions. The fused feature map integrates the key information from both modalities, enhancing the consistency and complementarity of the cross-modal features.
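A rough NumPy sketch of this cross-modal attention exchange is given below. Several details are assumptions not stated in the text: pooling is taken along the channel axis, a sigmoid squashes the 1 × 1 convolution output into (0, 1), the exchange is applied in both directions, and the two-element weight vectors stand in for learned 1 × 1 convolution kernels. The function names are illustrative only.

```python
import numpy as np

def spatial_attention(feat, weight, bias=0.0):
    """Turn a (C, H, W) feature map into (H, W) attention weights.

    Channel-wise max and mean maps are stacked and mixed by a 1x1
    convolution (two inputs, one output), then passed through a sigmoid
    (the sigmoid is an assumption of this sketch).
    """
    pooled = np.stack([feat.max(axis=0), feat.mean(axis=0)])        # (2, H, W)
    logits = np.tensordot(weight, pooled, axes=([0], [0])) + bias   # (H, W)
    return 1.0 / (1.0 + np.exp(-logits))

def cross_modal_interaction(feat_opt, feat_sar, w_opt, w_sar):
    """Reweight each modality with attention computed from the other one."""
    att_from_opt = spatial_attention(feat_opt, w_opt)
    att_from_sar = spatial_attention(feat_sar, w_sar)
    return feat_opt * att_from_sar, feat_sar * att_from_opt

rng = np.random.default_rng(0)
f_opt = rng.normal(size=(4, 6, 6))
f_sar = rng.normal(size=(4, 6, 6))
w = np.array([0.5, 0.5])           # placeholder for learned 1x1 conv weights
out_opt, out_sar = cross_modal_interaction(f_opt, f_sar, w, w)
print(out_opt.shape, out_sar.shape)
```

Because the attention map has a single channel, it broadcasts over all channels of the other branch, so the output shapes match the inputs and the module can be dropped between encoder stages.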

3.3. Loss Function

The final loss of our network comprises four parts: L1 for optical image feature point localization, L2 for descriptor matching between optical and transformed SAR images, L3 for SAR image feature point localization, and L4 for descriptor matching between SAR and transformed optical images. The formula is as follows:

$$L = L_1 + L_3 + \lambda\,(L_2 + L_4),$$

where $\lambda$ is a pivotal hyperparameter that balances the contributions between the task of keypoint detection and the task of descriptor learning. A higher $\lambda$ value encourages the network to focus on learning discriminative descriptors for matching, while a lower $\lambda$ prioritizes the accurate localization of feature points. The optimal value of $\lambda$ is determined through ablation studies, as detailed in Section 4.9. The feature point loss is defined as

$$L_p = \frac{1}{H_c W_c} \sum_{h=1}^{H_c} \sum_{w=1}^{W_c} -\log\!\left( \frac{\exp(x_{hwy})}{\sum_{k=1}^{65} \exp(x_{hwk})} \right).$$

The shape of the feature map of the feature points output by the network is $H_c \times W_c \times 65$, where $y$ represents the ground truth label of cell $(h, w)$, $x_{hwy}$ is the predicted value for the class $y$, and $x_{hwk}$ ($k \neq y$) denotes the predicted values for other classes. This cross-entropy loss function treats all categories equally. When $y = 65$, the network is forced to learn high-probability predictions for the garbage bin category; when $y$ is a position index, the network is forced to learn probability predictions for the corresponding feature point location. The descriptor loss is defined as

$$L_d = \frac{1}{(H_c W_c)^2} \sum_{h=1}^{H_c} \sum_{w=1}^{W_c} \sum_{h'=1}^{H_c} \sum_{w'=1}^{W_c} l_d\big(d_{hw}, d'_{h'w'}; s_{hwh'w'}\big),$$

$$l_d(d, d'; s) = \lambda_d \, s \, \max(0,\, m_p - d^{\top} d') + (1 - s)\, \max(0,\, d^{\top} d' - m_n).$$

In computing the descriptor loss, a function $s_{hwh'w'}$ is defined as

$$s_{hwh'w'} = \begin{cases} 1, & \lVert \mathcal{H} p_{hw} - p'_{h'w'} \rVert \le 8, \\ 0, & \text{otherwise}. \end{cases}$$

Here, $p_{hw}$ and $p'_{h'w'}$ represent the keypoints at the center positions of the $8 \times 8$ cells in the two images, $\mathcal{H}$ is the geometric transformation relating the two images, while $d$ and $d'$ denote the descriptor vectors corresponding to each cell in the two images. The hyperparameters within the descriptor loss function, namely the weighting term $\lambda_d$ and the margins $m_p$ and $m_n$, are adopted directly from the SuperPoint design [8], as they effectively govern the internal balance of positive and negative pairs and have been proven to work well in practice. The weighting term $\lambda_d$ addresses the fact that negative correspondences vastly outnumber positive correspondences.
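To make the two loss terms concrete, the following NumPy sketch computes a 65-way cross-entropy keypoint loss and a hinge-style descriptor loss, and then combines four such terms. It is an illustration under stated assumptions: labels are 0-based (class 64 is the garbage bin), the weighting value 250 is the one commonly quoted for SuperPoint and is not confirmed by this article, the margins 1 and 0.2 follow Section 4.3, and the way `total_loss` combines L1 to L4 with a single balancing factor `lam` is an assumed form.

```python
import numpy as np

def keypoint_loss(logits, labels):
    """Mean cross-entropy over cells; logits (Hc, Wc, 65), labels (Hc, Wc) ints."""
    shifted = logits - logits.max(axis=-1, keepdims=True)   # numerical stability
    log_prob = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    picked = np.take_along_axis(log_prob, labels[..., None], axis=-1)
    return float(-picked.mean())

def descriptor_loss(desc_a, desc_b, corr, lambda_d=250.0, m_pos=1.0, m_neg=0.2):
    """Hinge loss over all cell pairs; desc_* are (N, D), corr is (N, N) in {0, 1}."""
    dot = desc_a @ desc_b.T
    pos = lambda_d * corr * np.maximum(0.0, m_pos - dot)    # pull matches together
    neg = (1.0 - corr) * np.maximum(0.0, dot - m_neg)       # push non-matches apart
    return float((pos + neg).mean())

def total_loss(l1, l2, l3, l4, lam):
    """Assumed combination: localization terms plus weighted descriptor terms."""
    return l1 + l3 + lam * (l2 + l4)

logits = np.zeros((2, 2, 65))
labels = np.full((2, 2), 64)        # every cell is a garbage-bin cell
print(keypoint_loss(logits, labels))
print(descriptor_loss(np.eye(2), np.eye(2), np.eye(2)))
```

With uniform logits the keypoint loss equals log 65, and perfectly matched orthonormal descriptors give a descriptor loss of zero, which are convenient sanity checks before training.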

4. Results

In this section, we first present an overview of the primary metrics, relevant datasets, and the detailed experimental setup. Then, we compare the performance of the proposed method with that of multiple existing baseline methods. We then assess the impact of varying levels of image noise on registration accuracy and analyze the performance of two feature point and descriptor decoupling strategies. Furthermore, we systematically evaluate the effects of two distinct optimizers on the training stability and final performance of PLISA. Finally, comprehensive ablation studies and parameter analysis are conducted.

4.1. Evaluation Metrics

We evaluate the image registration performance using four metrics: the root mean square error (RMSE), matching success rate (SR), number of correctly matched points (NCM), and repeatability [3]. Among them, the repeatability metric is specifically designed to validate the quality of the extracted feature points. All metric values reported in the following represent averages computed over the complete test dataset.

4.1.1. RMSE

The RMSE is used to quantify the registration accuracy between the reference image and the target image after the predicted transformation, with smaller values indicating higher registration accuracy [68]. The RMSE is calculated as follows:

$$\mathrm{RMSE} = \sqrt{\frac{1}{m} \sum_{i=1}^{m} \left[ (\hat{x}_i - x_i')^2 + (\hat{y}_i - y_i')^2 \right]},$$

where $(x_i, y_i)$ and $(x_i', y_i')$ are the coordinates of the i-th correctly matched feature point pair in the reference image and the target image, respectively, after removing mismatched points using the fast sample consensus (FSC) algorithm, and $(\hat{x}_i, \hat{y}_i)$ are the coordinates obtained by applying the predicted transformation to $(x_i, y_i)$. The variable m represents the number of correct matching point pairs remaining after the FSC-based mismatch removal process.

The point from the reference image is transformed to obtain its predicted coordinates in the target image. The RMSE is computed based on the differences between these predicted coordinates and the actual corresponding point coordinates in the target image, providing an objective measure of the registration transformation accuracy.
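The computation just described can be sketched as follows. The helper names `apply_homography` and `registration_rmse` are illustrative, and the example assumes the predicted transformation is available as a 3 × 3 matrix and that mismatch removal has already been performed.

```python
import numpy as np

def apply_homography(H, pts):
    """Map (N, 2) points through a 3x3 transformation matrix."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ np.asarray(H, dtype=float).T
    return homog[:, :2] / homog[:, 2:3]

def registration_rmse(H_pred, ref_pts, tgt_pts):
    """RMSE between transformed reference points and their target matches."""
    residual = apply_homography(H_pred, ref_pts) - np.asarray(tgt_pts, dtype=float)
    return float(np.sqrt((residual ** 2).sum(axis=1).mean()))

# Predicted transformation: pure translation by 2 pixels along x.
H_pred = [[1, 0, 2], [0, 1, 0], [0, 0, 1]]
ref = [(0, 0), (1, 1)]
tgt = [(2, 0), (3, 4)]
print(registration_rmse(H_pred, ref, tgt))
```

Here the first pair is mapped exactly and the second is off by 3 pixels, so the result is the square root of (0 + 9) / 2.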

4.1.2. NCM

NCM denotes the number of correct matches. This metric provides a direct measure of the number of feature points that are accurately matched between the reference and target images. The definition of a correct match is as follows [8]:

$$\lVert \hat{x}_i - x_i \rVert_2 \le \varepsilon, \qquad x_i = H p_i.$$

In this case, $\hat{x}_i$ represents the feature point location of the target image after the predicted transformation, $x_i$ denotes the corresponding feature point location on the target image after the actual transformation, $H$ refers to the actual transformation matrix, $p_i$ is the feature point location before transformation, and $\varepsilon$ is the pixel distance threshold.
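A small sketch of this counting rule is shown below. It assumes both the predicted and the actual transformations are given as 3 × 3 matrices; the function names and the tolerance of 3 pixels used as the default are hypothetical choices for the example, not values stated for NCM in the text.

```python
import math

def project(H, pt):
    """Apply a 3x3 transformation (nested lists) to a 2D point."""
    x, y = pt
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)

def count_correct_matches(points, H_pred, H_true, eps=3.0):
    """Count points whose predicted and actual projections agree within eps."""
    return sum(
        1 for p in points
        if math.dist(project(H_pred, p), project(H_true, p)) <= eps
    )

identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
scaled = [[1.1, 0, 0], [0, 1.1, 0], [0, 0, 1]]   # predicted transform, 10% too large
pts = [(0, 0), (10, 0), (50, 50)]
print(count_correct_matches(pts, scaled, identity))
```

The point far from the origin drifts by about 7 pixels under the mis-scaled prediction and is not counted, which shows how NCM penalizes transformation errors that grow with distance.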

4.1.3. SR

A match is considered successful when the RMSE for an image pair is below 3 pixels. The SR is calculated as the proportion of successfully matched pairs relative to the total number of pairs [44].
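As a brief illustration, the SR can be computed from a list of per-pair RMSE values. The function name and the convention of recording a failed registration as an infinite RMSE are assumptions of this sketch.

```python
def success_rate(rmse_per_pair, threshold=3.0):
    """Fraction of image pairs whose RMSE is strictly below the threshold."""
    if not rmse_per_pair:
        return 0.0
    successes = sum(1 for r in rmse_per_pair if r < threshold)
    return successes / len(rmse_per_pair)

# A pair with no estimable transformation is stored as infinity (assumed convention).
print(success_rate([0.8, 2.9, 3.0, float("inf")]))
```

Note that a pair with an RMSE of exactly 3 pixels does not count as a success under the "below 3 pixels" criterion.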

4.1.4. Repeatability (REP)

Repeatability simply measures the probability that a point is detected in the second image. We compute the repeatability by measuring the distances between the extracted 2D point centers [8]. More specifically, let us assume that we have $N_1$ points in the first image and $N_2$ points in the second image. Correctness in repeatability experiments is defined as follows: a point is considered correctly repeated if the distance from its corresponding point in the other image is below a threshold d. Empirically, d is set to 3 pixels [31, 69, 70]; hence, this metric is commonly referred to as the 3-pixel repeatability. In this work, we also adopt d = 3 as the threshold.
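One way to compute such a score is sketched below. The symmetric form, in which repeated points from both images are summed and divided by the total number of points, follows the convention commonly used with SuperPoint and is an assumption here, as is the requirement that both point sets have already been brought into a common coordinate frame.

```python
import math

def repeatability(pts_a, pts_b, d=3.0):
    """Symmetric d-pixel repeatability for two point sets in a common frame."""
    if not pts_a or not pts_b:
        return 0.0

    def repeated(src, dst):
        # A point is repeated if its nearest neighbour in the other set is closer than d.
        return sum(1 for p in src if min(math.dist(p, q) for q in dst) < d)

    return (repeated(pts_a, pts_b) + repeated(pts_b, pts_a)) / (len(pts_a) + len(pts_b))

a = [(0, 0), (10, 10), (40, 40)]
b = [(1, 1), (10, 12), (90, 90)]
print(repeatability(a, b))
```

In this example two of the three points in each set have a counterpart within 3 pixels, so four of the six points are counted as repeated.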

4.2. Dataset

The experiment uses two public multimodal remote sensing image matching and registration datasets, SEN1-2 [71] and OSdataset [72]. The model’s performance is evaluated on the test splits of both the SEN1-2 dataset and OSdataset, with the latter used exclusively to further assess the generalizability to unseen data.

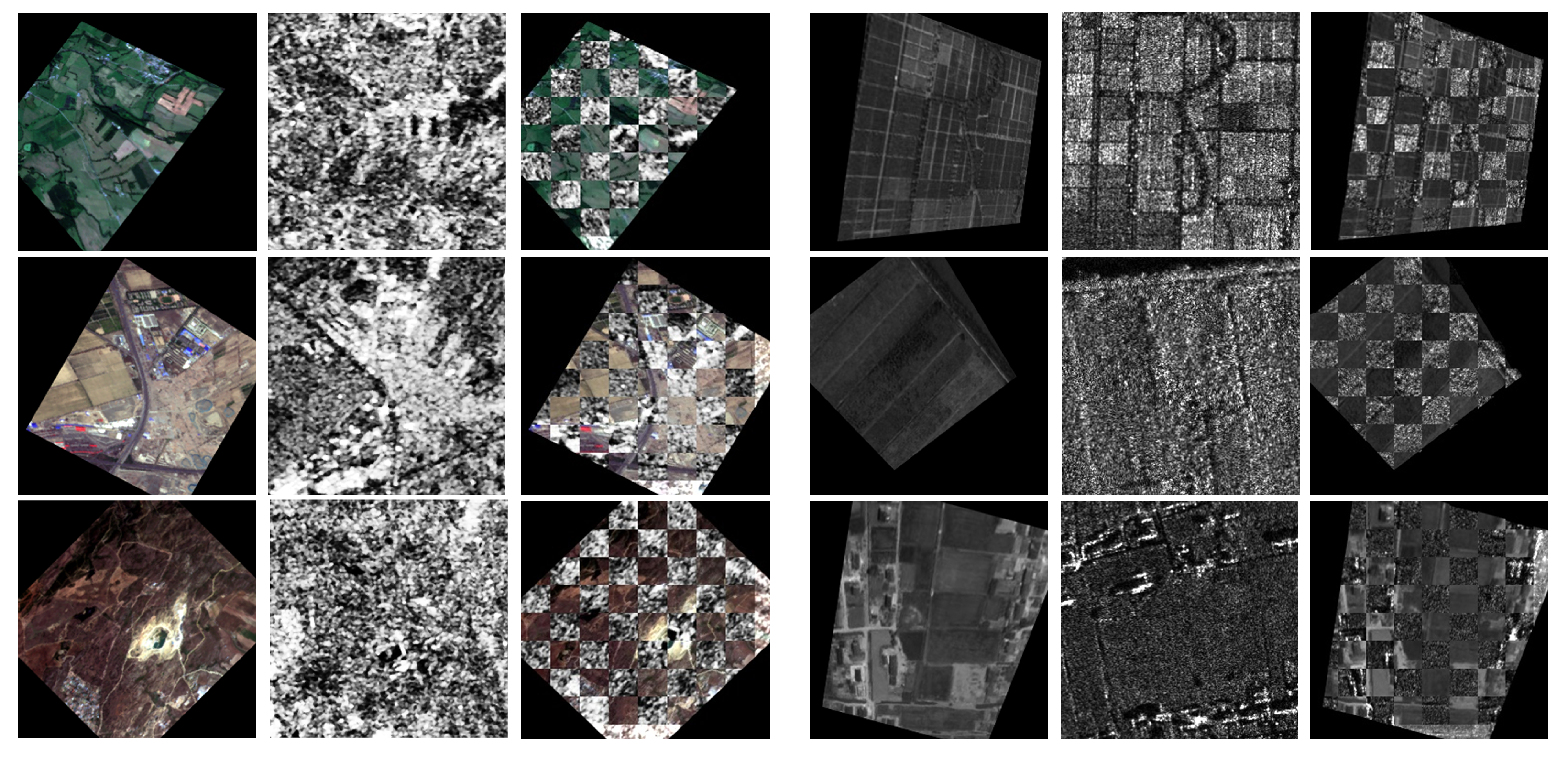

Figure 4 shows three groups of multimodal image samples from OSdataset and the SEN1-2 dataset, where significant nonlinear radiometric differences between the SAR images [3] and the corresponding visible images can be clearly observed, posing an additional challenge for cross-modal image processing. The specifics of each dataset are as follows.

The SEN1-2 dataset [71] consists of SAR and optical image patches, with SAR images provided by the Sentinel-1 satellite and the corresponding optical images provided by Sentinel-2. All image patches are standardized to a size of 256 × 256 pixels, with a spatial resolution of 10 m. The training set consists of 9036 pairs and the test set consists of 1260 pairs.

OSdataset [72] contains co-registered SAR and optical image pairs in both 256 × 256 and 512 × 512 pixel sizes. In this study, we utilize the 256 × 256 version, which provides 10,692 image pairs with a spatial resolution of 1 m. From this collection, 1696 pairs of images were randomly chosen as the test set.

M4-SAR [73] is a multiresolution, multipolarization, multiscenario, and multisource dataset designed for object detection based on the fusion of optical and SAR images. It was jointly developed by the PCA Lab of Nanjing University of Science and Technology, the Key Laboratory of Intelligent Computing and Signal Processing of the Ministry of Education at Anhui University, and the College of Computer Science at Nankai University. The dataset contains 112,184 precisely aligned image pairs and nearly one million annotated instances. For the evaluation of the model’s generalization capabilities, we randomly selected 406 optical and SAR image pairs with a spatial resolution of 60 m as the test set.

The SEN12MS-CR [74] dataset comprises 122,218 patch triplets, each containing a Sentinel-1 SAR image, a Sentinel-2 optical image, and a cloud-covered Sentinel-2 optical image. From this collection, we randomly selected 383 cloud-covered optical and SAR image pairs to constitute the test set.

To address the challenge of partial overlap registration, we created the OSD-PO dataset, which is derived from the original OSdataset and consists of 1000 image pairs. These were constructed by first extracting 512 × 512 image patches from OSdataset, which were then cropped with controlled overlap ratios ranging from 50% to 80%. To simulate realistic imaging perturbations, we applied a series of geometric transformations: 70% of the pairs underwent slight translation, 20% were subjected to scale transformation, and the remaining 10% received combined rotation and scale transformations.

4.3. Experimental Details

All experiments were conducted using an NVIDIA RTX 3090 GPU. In the training process, we adopted Adam as an optimizer. Hyperparameters were set to and . The positive margin for the descriptor hinge loss was set to 1, and the negative margin was set to 0.2. Prior to the commencement of the experiments, necessary data preprocessing steps were applied to the SAR images to enhance the image quality and improve the subsequent processing results, including histogram equalization and Lee filtering.

4.4. Comparative Experiments

We evaluated seven state-of-the-art methods alongside our proposed approach across three different scenarios: slight translation, scale transformation, and rotation and scale transformation. Here, slight translation refers to minimal global image displacement, typically constrained to a range of ±5 pixels, without involving scale, rotation, or perspective alterations. The scale transformation is applied by sampling random scale factors from a truncated normal distribution centered at 1.0 with a ±10% amplitude. The rotation and scale transformation simultaneously applies a random rotation across a continuous range of −90° to +90° and scaling within the range of 0.9–1.1. Additionally, all methods were tested on the partially overlapping OSD-PO dataset. The seven methods are RIFT (2019) [35], SuperPoint (2017) [8], CMM-Net (2021) [5], ReDFeat (2022) [6], ADRNet (2024) [57], MINIMA-LG (2024) [75], and MINIMA-RoMa (2024) [75], as shown in Table 1.
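The three test scenarios can be reproduced approximately with a sampler such as the one below. Only the ranges (±5 pixels, a truncated normal centered at 1.0 within ±10%, rotations from −90° to +90°, scales from 0.9 to 1.1) come from the text. The uniform sampling of translations, angles, and the combined-scenario scale, the standard deviation `scale_std`, the choice to rotate and scale about the image center, and the function names are assumptions of this sketch.

```python
import math
import random

def truncated_normal(rng, mean, std, low, high):
    """Rejection-sample a normal variate restricted to [low, high]."""
    while True:
        value = rng.gauss(mean, std)
        if low <= value <= high:
            return value

def sample_transform(scenario, rng, size=256, scale_std=0.05):
    """Return a 3x3 similarity transform (nested lists) about the image center."""
    tx = ty = angle = 0.0
    scale = 1.0
    if scenario == "translation":
        tx, ty = rng.uniform(-5, 5), rng.uniform(-5, 5)
    elif scenario == "scale":
        scale = truncated_normal(rng, 1.0, scale_std, 0.9, 1.1)
    elif scenario == "rotation_scale":
        angle = math.radians(rng.uniform(-90, 90))
        scale = rng.uniform(0.9, 1.1)
    else:
        raise ValueError(f"unknown scenario: {scenario}")
    c, s = scale * math.cos(angle), scale * math.sin(angle)
    cx = cy = (size - 1) / 2.0
    # p' = s * R * (p - center) + center + t, written as a single matrix.
    return [[c, -s, cx - c * cx + s * cy + tx],
            [s, c, cy - s * cx - c * cy + ty],
            [0.0, 0.0, 1.0]]

rng = random.Random(0)
for name in ("translation", "scale", "rotation_scale"):
    print(name, sample_transform(name, rng)[0])
```

Anchoring the rotation and scaling at the image center keeps most of the warped content inside the frame, which is one reasonable design choice when the source and warped patches must retain a large overlap.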

4.4.1. Slight Translation Scenario

Evaluations of RIFT, SuperPoint, CMM-Net, ReDFeat, ADRNet, MINIMA-LG, MINIMA-RoMa, and the proposed method on the SEN1-2 dataset and OSdataset under slight translation transformations reveal varying performance levels. The corresponding quantitative evaluation results are provided in Table 2.

On the SEN1-2 dataset, the proposed method achieves an SR of 100%, matching ReDFeat and ADRNet while significantly outperforming RIFT (72.619%), SuperPoint (0%), and CMM-Net (4.360%). In terms of overall performance, MINIMA-RoMa also demonstrates impressive results across multiple metrics, particularly achieving an exceptionally high NCM, which is attributed to its detector-free matching paradigm that involves dense pixel-level correspondence. Our method attains a competitively high NCM while maintaining computational efficiency. Most notably, our approach achieves the lowest RMSE and the highest repeatability, demonstrating superior matching accuracy and robustness.

Similar trends are observed on OSdataset, where our method again achieves a near-perfect SR (99.7%), exceeding ReDFeat (65.5%), ADRNet (77.83%), and RIFT (72.465%). Its NCM is significantly lower than that of MINIMA-RoMa, but it achieves the lowest RMSE and the highest repeatability. SuperPoint and CMM-Net exhibit notably poor performance across all metrics. These results underscore our method’s effectiveness under slight translational deformations.

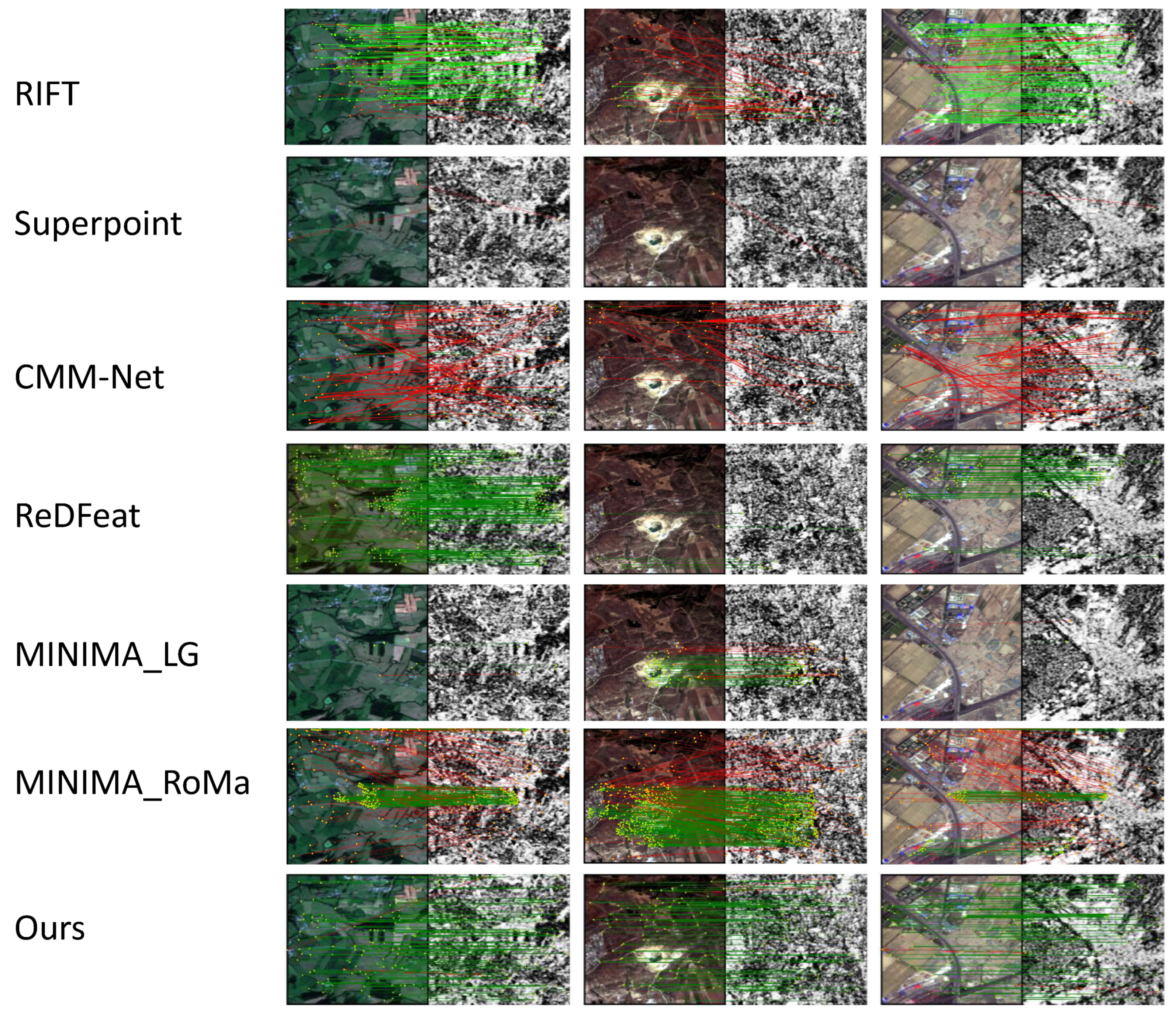

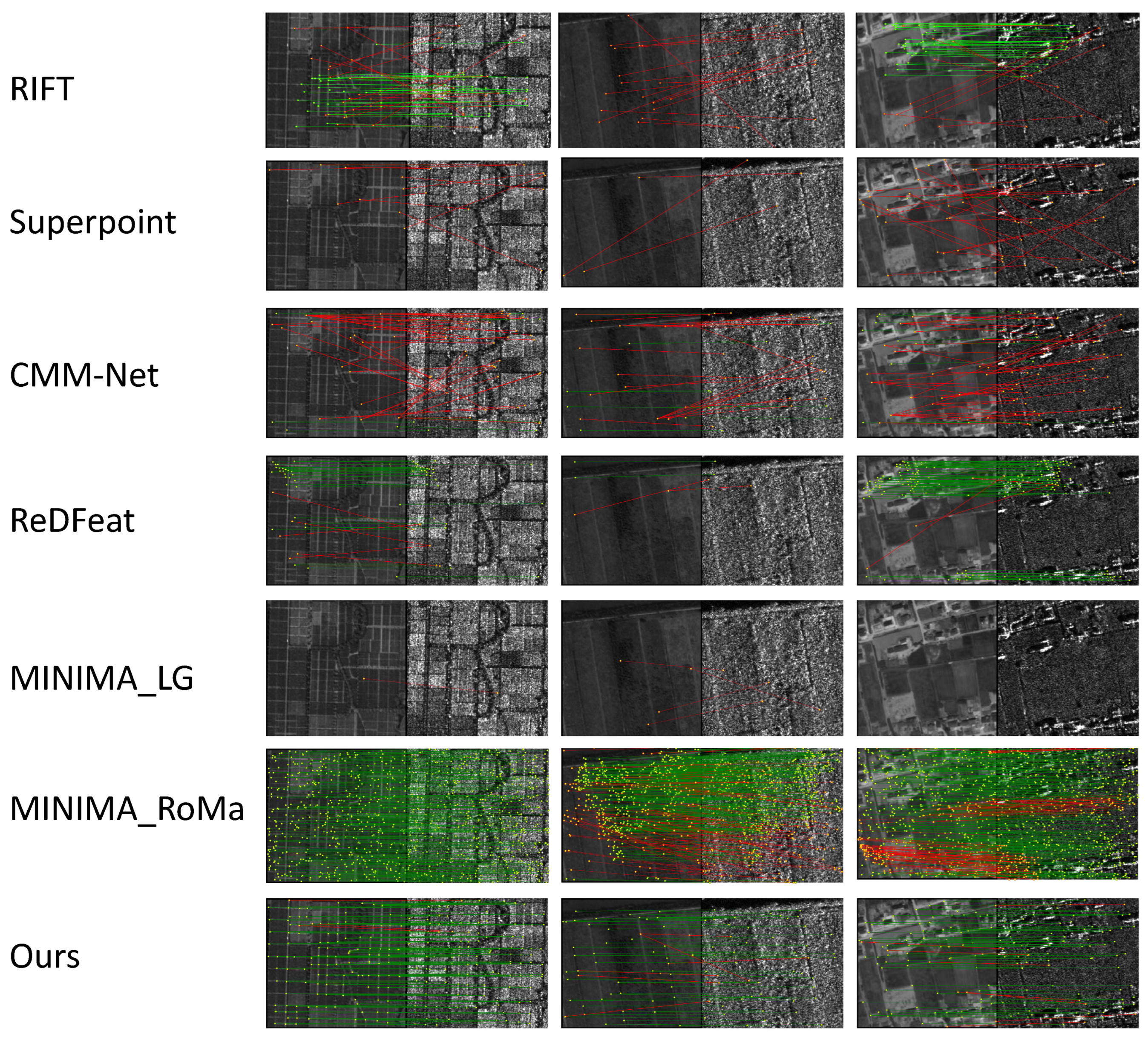

The qualitative results in Figure 5 and Figure 6 visually corroborate the quantitative findings. Among all competitors, MINIMA-RoMa also produces commendable registration results, followed by ReDFeat, which shows relatively good performance. Nevertheless, the proposed method consistently yields the most reliable matches.

In summary, the proposed method demonstrates consistently superior performance across both datasets, proving highly robust and accurate under translation transformations.

4.4.2. Scale Transformation Scenario

Scale transformations presented significant challenges for most evaluated methods, as clearly evidenced by the quantitative results in Table 3. While the majority of the approaches suffered from substantial performance degradations, MINIMA-RoMa maintained relatively strong performance across key metrics, demonstrating notable resilience to scale variations. The proposed method also achieved consistently superior results despite the challenging conditions.

On the SEN1-2 dataset, our method achieves a perfect SR of 100%, demonstrating clear superiority over other approaches. While MINIMA-RoMa also maintains strong performance, with a 99.8% SR and exceptionally high NCM value, our method achieves the lowest RMSE and highest repeatability. The performance gap becomes particularly evident when comparing it with other methods: RIFT achieves only a 37.063% SR, while ADRNet fails functionally under scale changes, with only a 1.667% SR.

On OSdataset, our method maintains outstanding performance, with a 98.99% SR, closely matching MINIMA-RoMa (99.8% SR) while achieving superior repeatability. MINIMA-LG shows inconsistent performance across datasets, achieving moderate results on SEN1-2 (15.1% SR) but better performance on OSdataset (55.1% SR), albeit still substantially below the leading methods.

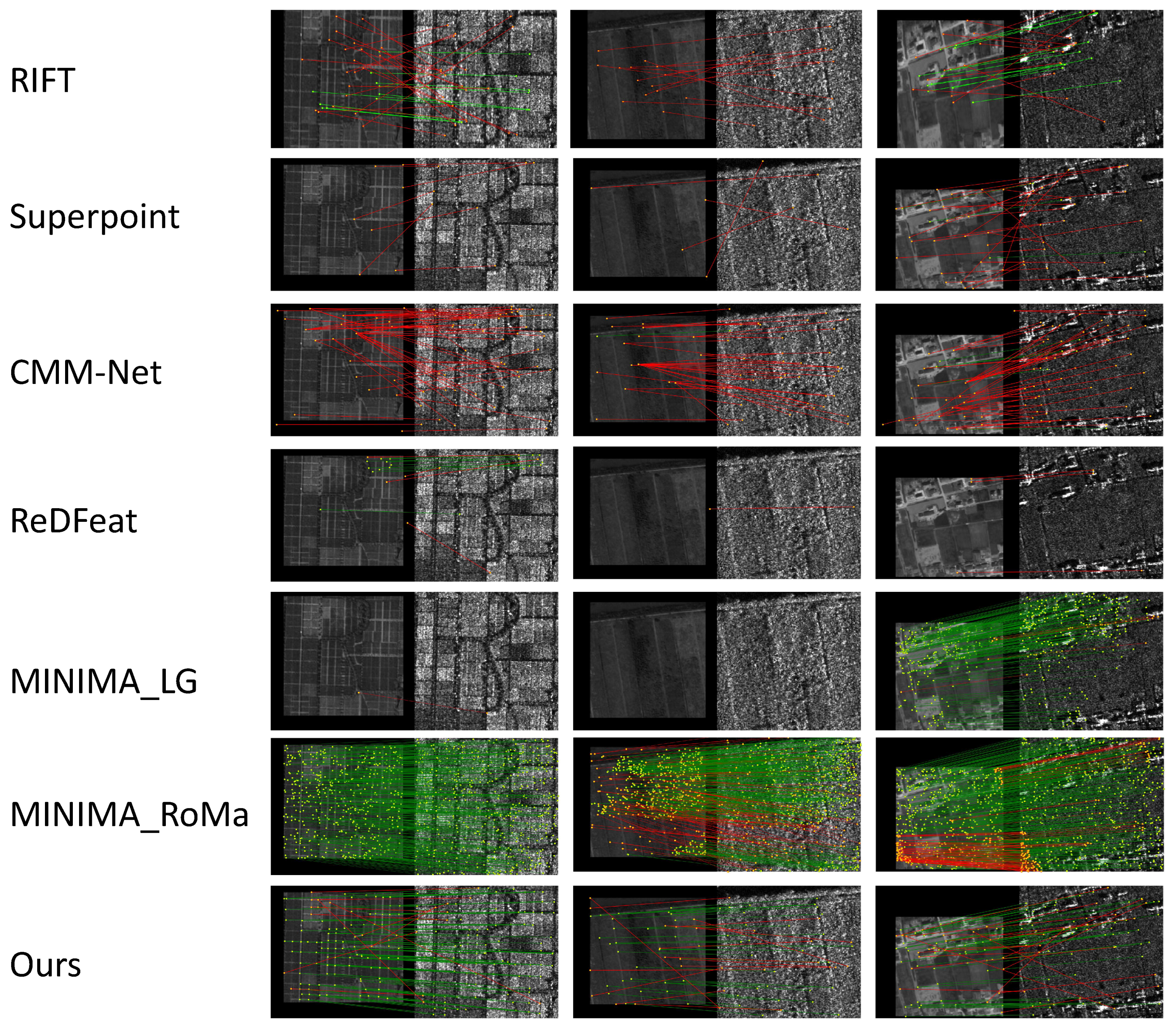

The qualitative results in Figure 7 and Figure 8 corroborate these findings. MINIMA-RoMa produces commendable matching results across all test pairs. MINIMA-LG shows acceptable performance on the three image pairs from the SEN1-2 dataset but exhibits noticeable degradation on the OSdataset examples. The proposed method sustains reliable matches across all test pairs, maintaining robustness where other methods experience significant performance deterioration.

In summary, while MINIMA-RoMa maintains competitive performance in scale transformation scenarios, our method also demonstrates exceptional scale invariance, achieving a perfect or near-perfect SR while maintaining superior matching precision and repeatability across diverse datasets.

4.4.3. Rotation and Scale Transformation Scenario

Large-angle rotation and scale transformations combined represent the most challenging scenario. The quantitative results in Table 4 reveal a stark performance gap: the proposed method delivers near-flawless accuracy, while most competing methods exhibit functional breakdown.

On the SEN1-2 dataset, our approach achieves a perfect 100% SR, demonstrating remarkable robustness to rotation and scale transformations. MINIMA-RoMa emerges as the second-best performer with an 83.4% SR, although its performance shows noticeable degradation compared to scale transformation scenarios. The gap becomes particularly evident when considering other methods: ReDFeat achieves only a 7.38% SR, while RIFT and ADRNet fall below a 3% SR. Our method also dominates in matching quality, achieving the lowest RMSE and highest repeatability.

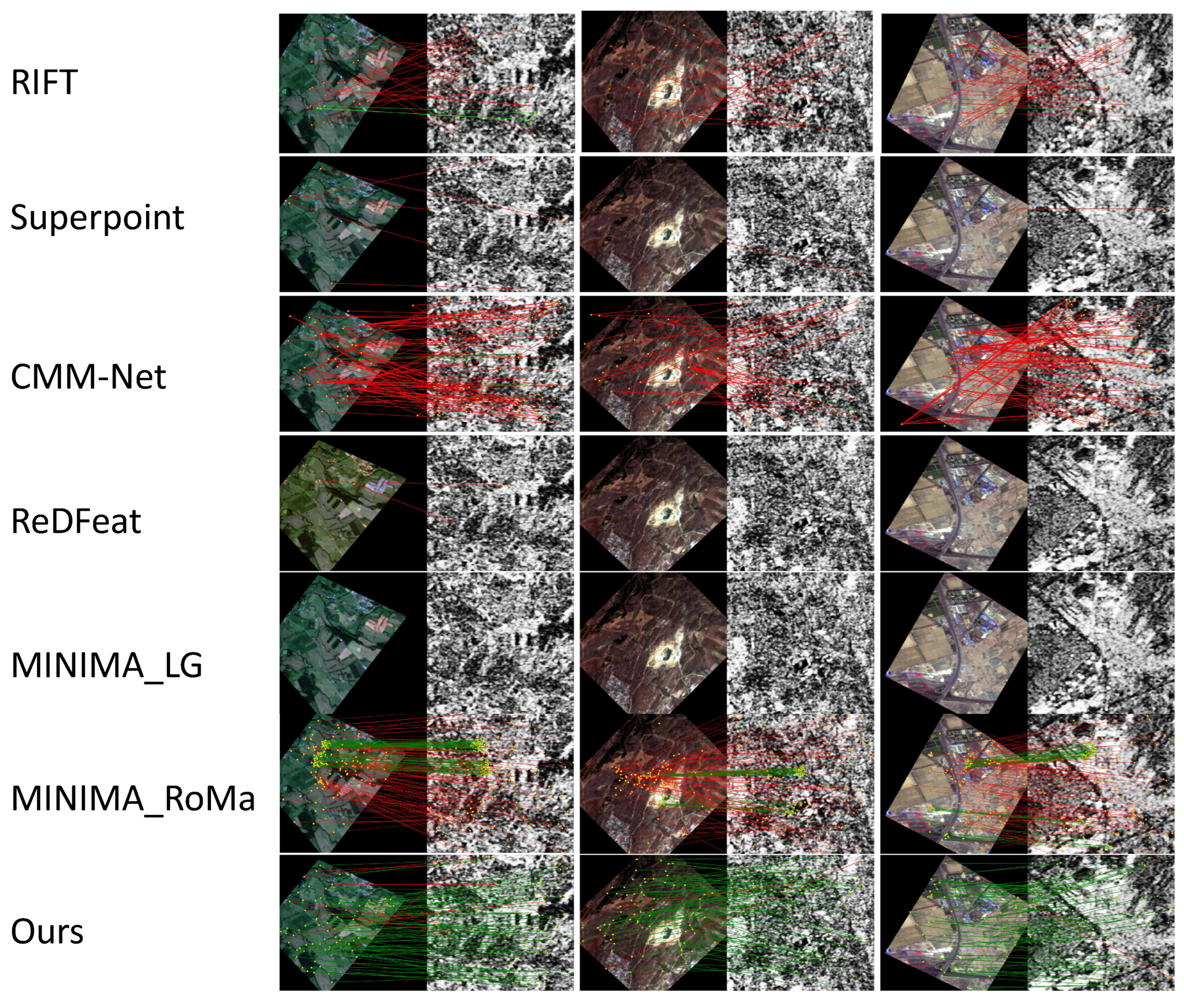

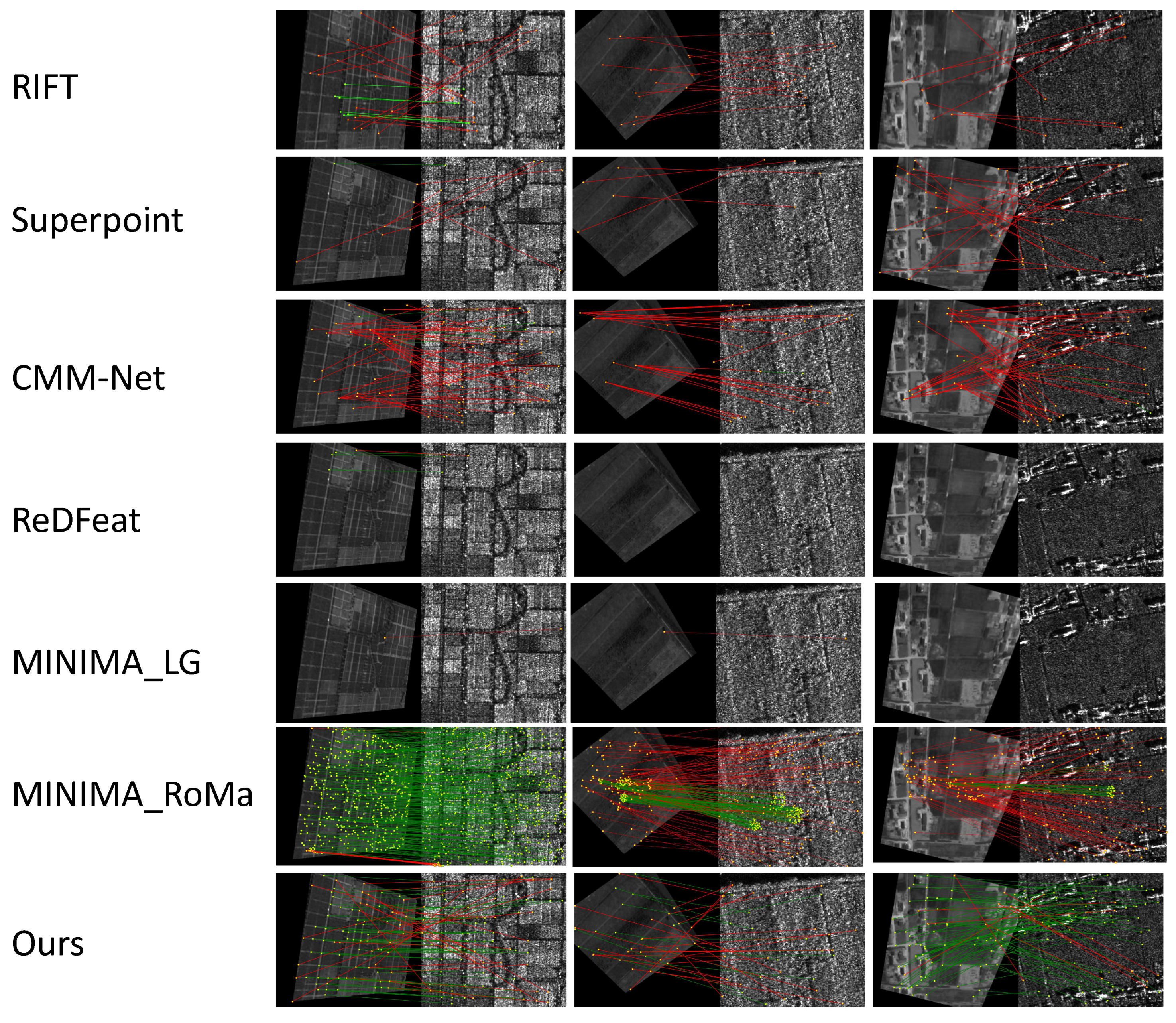

The qualitative results in Figure 9 and Figure 10 visually demonstrate the performance hierarchy under these conditions. The proposed method clearly produces the most reliable correspondences, maintaining robust matching quality despite the combined large-angle rotation and scale transformations. MINIMA-RoMa emerges as the second-best performer, generating substantially better matches than other approaches. Other competing methods, including RIFT and CMM-Net, exhibit near-complete failure and lack practical utility for registration tasks.

In particular, under rotation and scale transformation conditions, we focus on demonstrating the advantages of the proposed method compared to other state-of-the-art methods. The checkerboard visualization results of six pairs of high-difficulty images are shown in Figure 11, clearly illustrating the superior performance of our method in multimodal image registration tasks.
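For readers unfamiliar with checkerboard visualization, the following is a minimal sketch of how such a mosaic can be built from a reference image and a registered image of the same size. The function name `checkerboard_mosaic`, the tile size, and the synthetic inputs are illustrative assumptions and are not taken from the implementation used to produce Figure 11.

```python
import numpy as np

def checkerboard_mosaic(reference, warped, tile=32):
    """Interleave square tiles from two aligned images of identical shape."""
    if reference.shape != warped.shape:
        raise ValueError("images must have the same shape")
    rows, cols = np.indices(reference.shape[:2])
    # True where (tile row index + tile column index) is even.
    take_reference = ((rows // tile) + (cols // tile)) % 2 == 0
    if reference.ndim == 3:
        # Broadcast the mask over the channel axis for multi-band images.
        take_reference = take_reference[..., None]
    return np.where(take_reference, reference, warped)

# Synthetic stand-ins: a black "optical" image and a white "registered SAR" image.
optical = np.zeros((64, 64), dtype=np.uint8)
sar_registered = np.full((64, 64), 255, dtype=np.uint8)
mosaic = checkerboard_mosaic(optical, sar_registered, tile=32)
```

With well-registered inputs, linear structures such as roads should continue unbroken across tile boundaries, which is what makes the mosaic a convenient visual check.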

4.4.4. Evaluation on Partially Overlapping Dataset

The partially overlapping scenario represents an extremely challenging condition for image registration, as clearly evidenced by the quantitative results in Table 5. The overall performance across all evaluated methods remains limited, highlighting the difficulty of this task. Among the compared approaches, MINIMA-RoMa achieves the highest SR at 20.1%, along with significantly superior NCM values, although its matching accuracy, as reflected in the RMSE, still requires substantial improvement. Our method attains a 5.56% SR, comparable to ReDFeat and MINIMA-LG, while achieving the best repeatability among all methods. Notably, RIFT, SuperPoint, CMM-Net, and ADRNet completely fail to produce any successful registrations under these demanding conditions.

We acknowledge the current limitations of our approach in handling severe partial overlap scenarios, particularly in achieving a higher SR. Performance is constrained for all methods, including the relatively high-performing MINIMA-RoMa, and we attribute this to the absence of non-overlapping image pairs in the training data. This limitation highlights an important direction for future research, where incorporating explicitly non-overlapping training samples could potentially enhance the method’s capabilities in handling extreme partial overlap scenarios.

4.4.5. Computational Efficiency Discussion

We evaluate the inference speed and GPU memory consumption of our method alongside other approaches, with all experiments conducted under the same environment on an NVIDIA RTX 3090 GPU. As shown in Table 6, our method achieves an inference time of 0.126 s per image pair and memory usage of 1680 MiB. While ReDFeat and ADRNet exhibit faster inference speeds, and ReDFeat also consumes significantly less memory, our approach still maintains competitive efficiency. Notably, MINIMA-RoMa, which delivers superior performance compared to ReDFeat and ADRNet and is among the best performers aside from our method, incurs significantly higher computational costs due to its detector-free design. Although our method is not the absolute best in terms of computational efficiency, it strikes a favorable balance between performance and resource demands, making it a practically viable and efficient solution for real-world applications.

4.4.6. Discussion of Generalization Capabilities

This study intentionally employs the SEN1-2 dataset, OSdataset, and the M4-SAR dataset, with significantly different spatial resolutions, to rigorously evaluate the generalization capabilities of the proposed method. As shown in Table 7, the consistently high performance across these datasets demonstrates notable robustness to significant variations in the ground sampling distance. Although a performance decrease is observed on the M4-SAR dataset, the overall results remain satisfactory.

Additionally, the SEN12MS-CR dataset is included to assess the method’s performance under cloud cover conditions. The empirical results confirm that our approach maintains reliable feature matching capabilities despite atmospheric disturbances.

We attribute this robustness to the characteristics of the feature points that our method leverages. The detected feature points are predominantly high-quality corners. Such features maintain their distinctive characteristics across resolution variations because their salience originates from the local geometric structure rather than intensity variations, which tend to be highly sensitive to resolution changes. As a result, identical physical structures, such as building corners or road intersections, produce consistent feature representations in 60 m and 1 m imagery.

The empirical evidence indicates that the method successfully bridges substantial resolution gaps and maintains functionality under challenging conditions like cloud cover, making it highly suitable for practical applications involving multisource remote sensing data.

4.5. Discussion of the Effectiveness of the APLM

The proposed APLM serves as the cornerstone for generating supervisory signals in our self-supervised framework. To comprehensively validate its efficacy, this section provides a detailed analysis from two critical perspectives: the quality of the generated pseudo-labels and the robustness of the model to potential inaccuracies within them.

First, we validated the quality of the generated pseudo-labels, since high-quality pseudo-labels for training the feature matcher are a prerequisite for the success of our method. To assess this quantitatively, we manually verified a randomly selected set of 600 image pairs from the training corpus. The statistical results confirm the reliability of our APLM, yielding an average pseudo-label accuracy of 93.9%. This high precision ensures that the supervisory signal provided to the matcher is overwhelmingly correct, thereby effectively guiding the learning process.

Second, we designed an experiment to investigate the model’s robustness to noise. Specifically, we systematically introduced errors into the training data. For each image pair, 10% of the pseudo-label point correspondences were corrupted by shifting the coordinates of the SAR image points by 3 pixels in a random direction, while the corresponding optical image points remained unaltered.
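The corruption procedure described above can be sketched as follows. This is an illustrative reconstruction rather than the code used in the experiment: the function name `corrupt_sar_points`, the interpretation of the 3-pixel shift as a Euclidean displacement along a uniformly random angle, and the synthetic point set are all assumptions.

```python
import numpy as np

def corrupt_sar_points(sar_points, fraction=0.10, shift=3.0, rng=None):
    """Shift a random subset of SAR keypoints by `shift` pixels in a random direction.

    The paired optical points are left untouched by the caller, so each shifted
    SAR point becomes an erroneous pseudo-label correspondence.
    """
    rng = np.random.default_rng() if rng is None else rng
    points = np.asarray(sar_points, dtype=float)
    corrupted = points.copy()
    n_corrupt = int(round(fraction * len(points)))
    # Pick distinct correspondences to corrupt.
    idx = rng.choice(len(points), size=n_corrupt, replace=False)
    # One uniformly random direction per corrupted point.
    angles = rng.uniform(0.0, 2.0 * np.pi, size=n_corrupt)
    offsets = shift * np.stack([np.cos(angles), np.sin(angles)], axis=1)
    corrupted[idx] += offsets
    return corrupted, idx

rng = np.random.default_rng(0)
sar_pts = rng.uniform(0, 256, size=(200, 2))   # hypothetical pseudo-label SAR points
noisy_pts, moved = corrupt_sar_points(sar_pts, rng=rng)
```

Whether shifted points near the image border are clipped or discarded is not stated in the text, so the sketch leaves them as they are.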

The model was subsequently trained and evaluated on this perturbed dataset. The impact on the matching performance across key datasets is summarized in Table 8. On the SEN1-2 dataset, the performance remained highly stable, with the SR and repeatability showing negligible changes, while the RMSE saw a minor increase of 0.03, and the NCM decreased by 30. A contained effect was observed on OSdataset, where the repeatability decreased by 1%, the SR decreased by 1%, the RMSE increased by 0.3, and the NCM was reduced by 10. Crucially, the training process remained stable and converged normally under both conditions.

These results robustly demonstrate that our framework is not critically sensitive to a small proportion of erroneous pseudo-labels, confirming that the APLM provides a sufficiently clean and robust supervisory signal for effective model convergence.

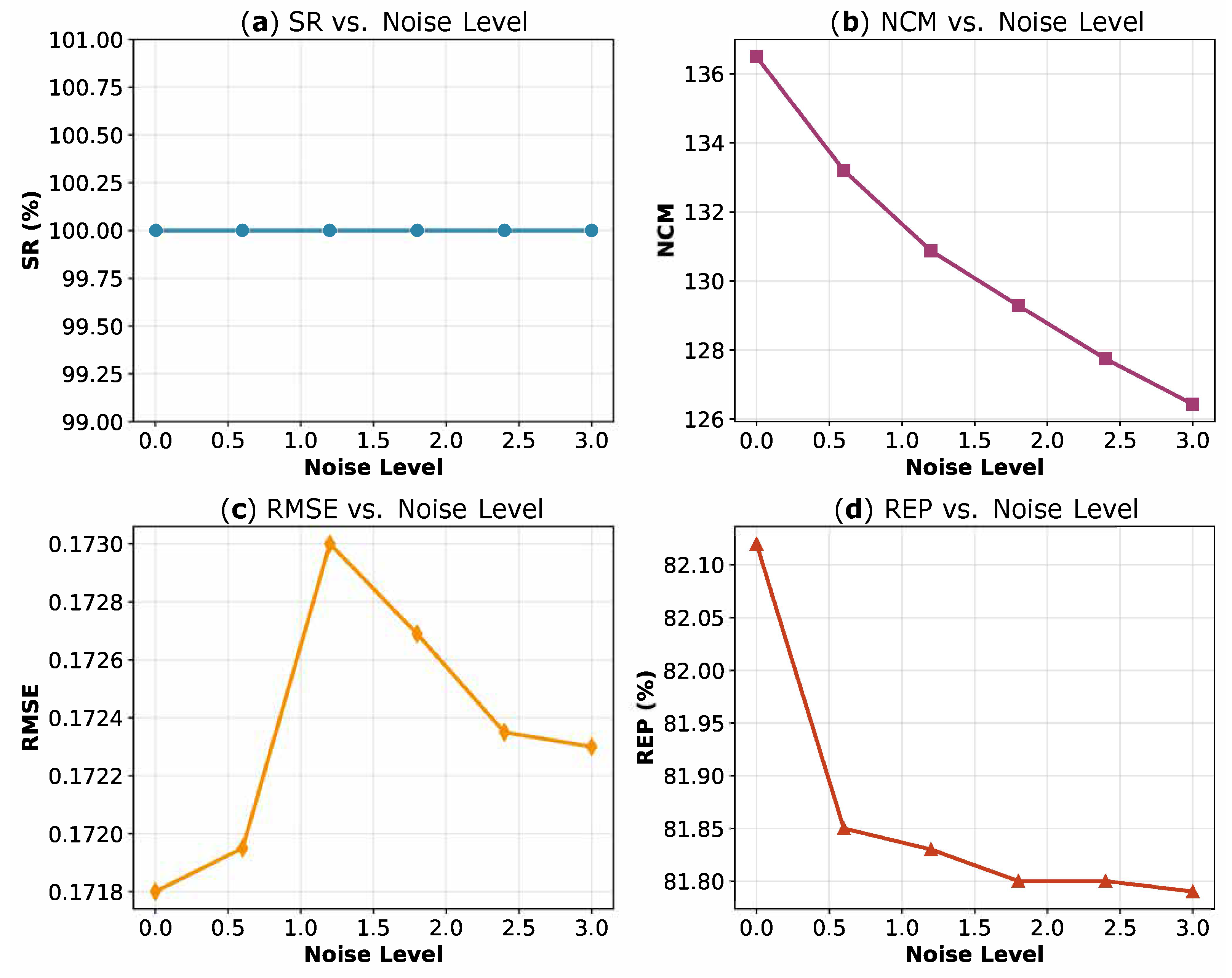

4.6. Impact of Noise on Cross-Modal Registration Accuracy

This section analyzes the effect of synthetic noise on the performance of PLISA. To simulate realistic noise while maintaining experimental control, we introduced two types of synthetic noise into pre-denoised SAR images. The first type was based on the original SAR sensor noise that had been initially removed from the SEN1-2 dataset using a Lee filter. We scaled this noise with coefficients of 0.6, 1.2, 1.8, 2.4, and 3.0 and injected it back into the pre-denoised images. A coefficient of 1.0 corresponds exactly to the inherent noise level of the original SAR images, providing a realistic baseline for comparison. The second type was Gaussian noise, which was added at three intensity levels, with sigma values of 30, 50, and 70, to the pre-denoised SAR images.
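The two noise models can be sketched as below. This is a minimal illustration under stated assumptions: the removed sensor noise is taken to be the additive residual between the original and Lee-filtered images, intensities are assumed to lie in an 8-bit range, and the function names and the constant-valued stand-in for the Lee-filtered image are hypothetical.

```python
import numpy as np

def reinject_sensor_noise(original, denoised, coefficient):
    """Add back the residual removed by denoising, scaled by `coefficient`."""
    residual = original.astype(float) - denoised.astype(float)
    return denoised.astype(float) + coefficient * residual

def add_gaussian_noise(denoised, sigma, rng):
    """Add zero-mean Gaussian noise and clip to the assumed 8-bit range."""
    noisy = denoised.astype(float) + rng.normal(0.0, sigma, size=denoised.shape)
    return np.clip(noisy, 0.0, 255.0)

rng = np.random.default_rng(0)
original = rng.uniform(0, 255, size=(64, 64))          # stand-in for a raw SAR patch
denoised = np.full_like(original, original.mean())     # placeholder, NOT a real Lee filter

noisy_by_coefficient = {
    k: reinject_sensor_noise(original, denoised, k) for k in (0.6, 1.2, 1.8, 2.4, 3.0)
}
noisy_by_sigma = {s: add_gaussian_noise(denoised, s, rng) for s in (30, 50, 70)}
```

Under this additive model, a coefficient of 1.0 reproduces the original image exactly, which matches the baseline described in the text.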

As illustrated in Figure 12 for the first type of noise and Table 9 for the second type, all metrics exhibit consistent trends with increasing noise: the SR and NCM show a steady decline, while the RMSE rises slightly and the repeatability decreases modestly. Despite these variations, PLISA maintains robust performance across all noise levels, demonstrating its practical utility in real-world scenarios, where the image quality is often compromised.

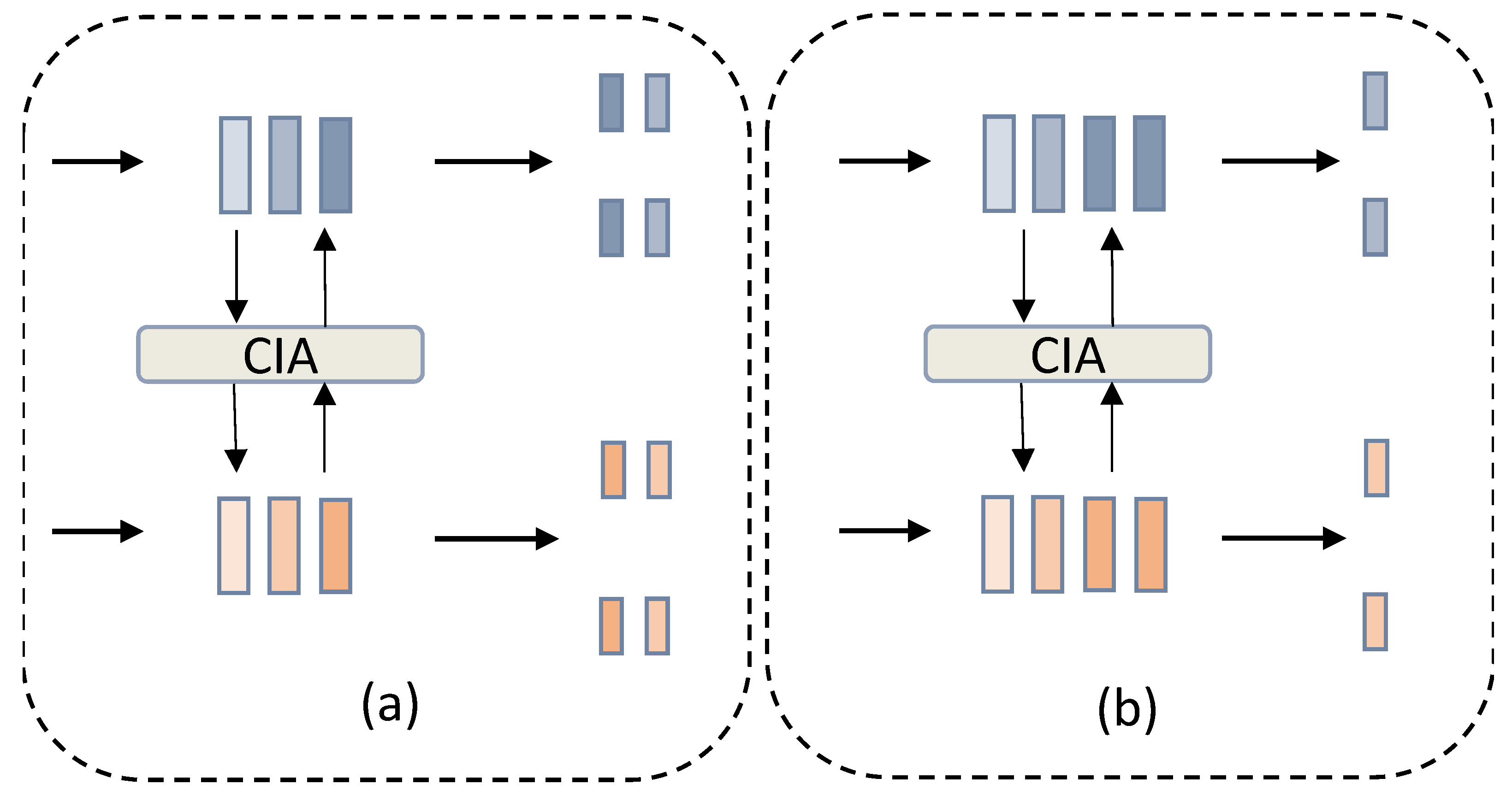

4.7. Influence of Feature Point and Descriptor Decoupling Strategy

To evaluate the impact of the feature point and descriptor decoupling strategy on the experimental results, we conduct a comparative analysis of two different decoupling schemes and perform an in-depth study of their effects.

Figure 13 illustrates the network architectures under these two decoupling strategies, with the key difference lying in the depth of the network at which the decoupling occurs: the first strategy, termed the early-stage decoupling strategy (EDS), separates the feature point detection and descriptor generation tasks at an earlier stage, while the second strategy, referred to as the deep-layer decoupling strategy (DDS), performs this separation at a deeper layer of the network.

Experimental results reflecting the changes in the metrics when applying the two decoupling strategies are shown in Table 10. On the SEN1-2 dataset, EDS achieved a lower RMSE of 0.171, compared to 0.177 under DDS, along with a higher NCM value of 136.496 versus 129.160. The improvement is even more pronounced on the more challenging OSdataset, where EDS attained an RMSE of 0.896 (a reduction of 0.082 compared to DDS) and improved both the NCM and SR substantially.

The observed performance disparities are predominantly attributable to the distinct decoupling positions within the network architecture. Specifically, when decoupling is implemented at deeper layers of the network, the interplay between the feature point detection network and the descriptor generation network is intensified. This heightened interaction, while beneficial in certain contexts, may introduce complexities that impede the independent optimization of their respective tasks.

Conversely, adopting an early decoupling strategy enables each subnetwork to focus more singularly on its designated task. By minimizing the mutual interference between the two networks, early decoupling facilitates a more focused optimization process. This, in turn, enhances the overall performance of the system by allowing each subnetwork to operate more efficiently within its specialized domain.

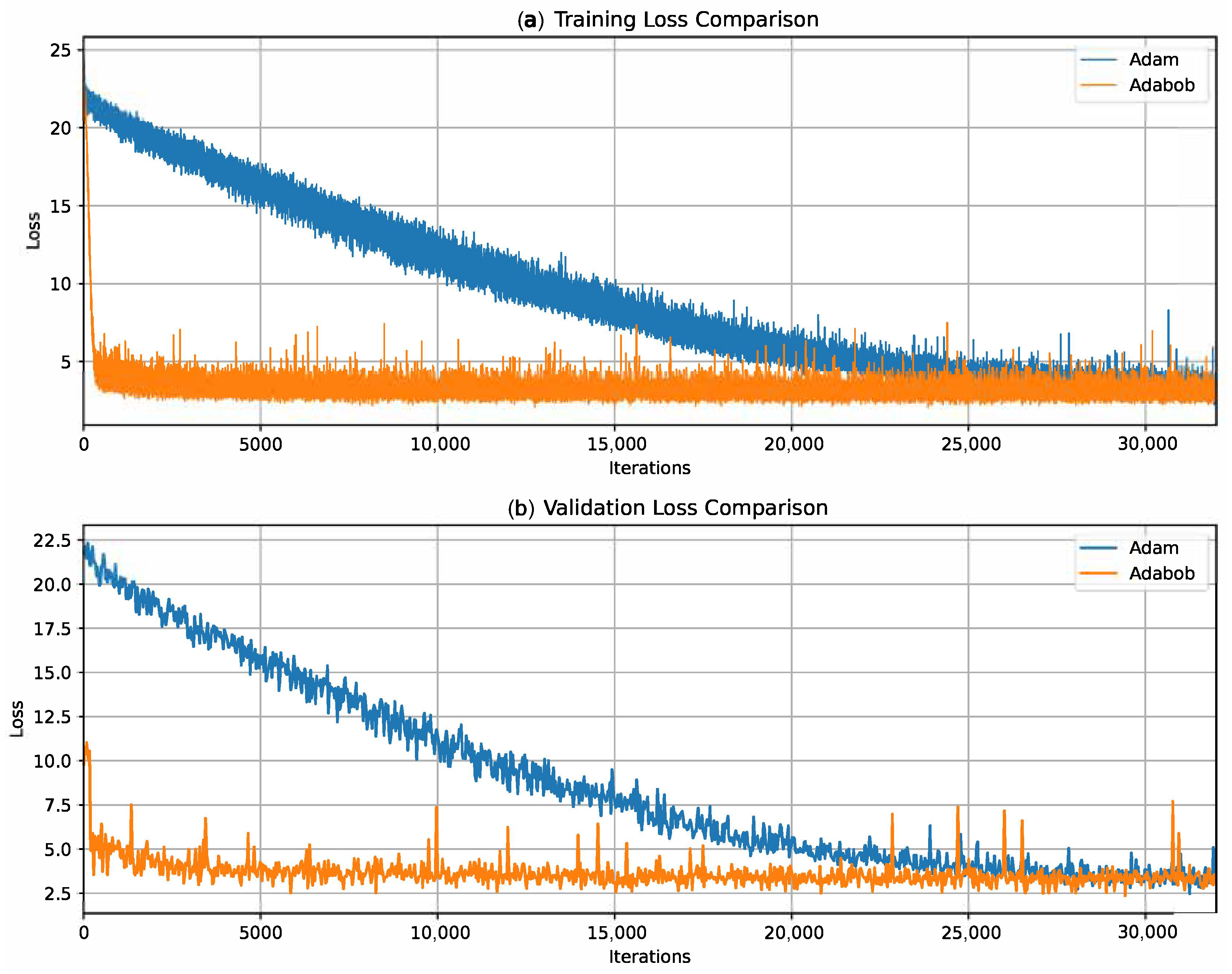

4.8. Experiments with Adam and AdaBoB Optimizers

To evaluate the robustness and consistency of the proposed PLISA framework under different optimizers, we conducted comparative experiments using both the Adam and AdaBoB [76] optimizers. Adam is a classic and widely adopted optimization algorithm, well validated across numerous tasks. In contrast, AdaBoB [76] is a more recent method that integrates the gradient confidence mechanism from AdaBelief and the dynamic learning rate bounds from AdaBound. This combination ensures stable convergence, theoretical guarantees, and low computational complexity. The experiments were designed to compare the performance of PLISA under both optimizers. Default parameters were used for AdaBoB [76] throughout the experiments.

The quantitative results are summarized in Table 11. PLISA exhibits minimal performance variation between Adam and AdaBoB [76], with all metrics remaining highly consistent. This indicates that the method is robust to the choice of optimizer.

The training and validation loss curves are shown in Figure 14. Both optimizers effectively reduce the loss, with the training loss dropping rapidly from an initial value of 20–25 and eventually converging close to zero. AdaBoB, however, demonstrates smoother loss curves in the mid-to-late training phase, with almost no visible fluctuations. This aligns with its design goal of suppressing gradient noise and enforcing stable convergence through dynamic learning rate constraints.

In summary, PLISA maintains strong and consistent performance across both optimizers. These results confirm the optimization stability and reliability of the proposed framework.

4.9. Ablation Study

To validate the effectiveness of the components in our framework, we conducted an ablation study using the SEN1-2 dataset and OSdataset, with a particular focus on evaluating the contributions of the proposed CIA module and APLM.

The results, presented in Table 12, clearly show that removing either the CIA module or the APLM causes a substantial drop in performance across all evaluation metrics on both datasets. Specifically, the absence of the APLM leads to a complete failure in feature matching, underscoring its essential role in generating reliable pseudo-labels for supervisory signals. Conversely, removing the CIA module severely weakens cross-modal feature interaction, resulting in a sharp decline in matching accuracy. These findings strongly affirm that both components are critical for learning robust feature representations and achieving the accurate registration of optical–SAR image pairs.

Building upon the foundational ablation study, we further validate the efficacy of the proposed CIA module and justify its specific design choice by comparing its performance against two other representative interactive attention mechanisms: interactive channel attention (ICA) and interactive convolutional block attention (ICBA). The quantitative results are presented in Table 13. It is essential to first elucidate the conceptual differences between these three interaction paradigms to provide context for their performance disparities.

The ICA mechanism is rooted in the channel attention paradigm. Its core operation focuses on modeling interdependencies between channels across modalities, generating channel-wise weights to recalibrate the feature importance. However, this interaction occurs on spatially compressed descriptors, which risks diluting the critical spatial information that is paramount for geometric registration tasks. In contrast, the ICBA module employs a cascaded structure, typically processing channel attention first and then spatial attention in sequence. A critical drawback of this design is the inherent risk of error propagation, where suboptimal interactions in the initial channel stage are passed into and amplified by the subsequent spatial stage.

Our CIA module is designed with a fundamentally different philosophy, prioritizing direct spatial interaction. It bypasses intricate channel recalibration and instead generates spatial attention maps from one modality to directly guide the feature selection of the other. This design is intrinsically aligned with the core objective of image registration—establishing precise spatial correspondences. It explicitly forces both modalities to focus on spatially co-occurring salient regions, thereby preserving the structural integrity and enhancing the geometric consistency.
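To make the idea of direct spatial interaction concrete, the following is a deliberately simplified, parameter-free sketch in which each modality's spatial attention map reweights the other modality's features. It is not the CIA module itself: the pooling scheme, the sigmoid gating, the absence of learned convolutions, and the names `spatial_attention` and `cross_spatial_interaction` are all assumptions made for illustration.

```python
import numpy as np

def spatial_attention(features):
    """Collapse a (C, H, W) feature tensor into one H x W map with values in (0, 1).

    Channel-wise mean and max pooling followed by a sigmoid stand in for the
    learned layers of a real spatial attention block.
    """
    pooled = features.mean(axis=0) + features.max(axis=0)
    return 1.0 / (1.0 + np.exp(-pooled))

def cross_spatial_interaction(optical_feat, sar_feat):
    """Let each modality's attention map guide the other's features."""
    att_from_optical = spatial_attention(optical_feat)
    att_from_sar = spatial_attention(sar_feat)
    # The spatial layout is preserved: every channel is reweighted per pixel.
    optical_out = optical_feat * att_from_sar[None]
    sar_out = sar_feat * att_from_optical[None]
    return optical_out, sar_out

rng = np.random.default_rng(0)
opt = rng.normal(size=(8, 16, 16))   # hypothetical optical feature map (C, H, W)
sar = rng.normal(size=(8, 16, 16))   # hypothetical SAR feature map (C, H, W)
opt_out, sar_out = cross_spatial_interaction(opt, sar)
```

In contrast to a channel-attention interaction, which would first reduce each feature map to a length-C descriptor, the attention here keeps its full H x W resolution, which is the property the paragraph above argues is important for registration.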

As unequivocally evidenced in Table 13, the performance of both ICA and ICBA is significantly inferior to that of our proposed CIA module. This performance disparity is a direct consequence of the misalignment between their core operational principles and the intrinsic requirements of cross-modal registration. The limitation of ICA originates from its channel-centric optimization. In cross-modal scenarios, where feature channels can embody vastly different physical interpretations, forcibly performing interchannel interaction often disrupts modality-specific information and fails to establish meaningful correlations for geometric matching. ICBA’s failure, conversely, exposes the structural vulnerability of its cascaded pipeline. The sequential process propagates distortions from the first interaction stage directly into the second, causing the spatial attention weights to be computed from already corrupted representations, which in turn amplifies errors and leads to poor overall performance.

The superior efficacy of our CIA module is therefore attributed to its dedicated spatial interaction strategy. By enabling a mutual focus on spatially co-occurring regions, it directly augments the geometric consistency between modalities. This approach effectively preserves the unique characteristics of each modality while selectively suppressing irrelevant interference, making it uniquely powerful for building robust spatial correspondences between optical and SAR images.

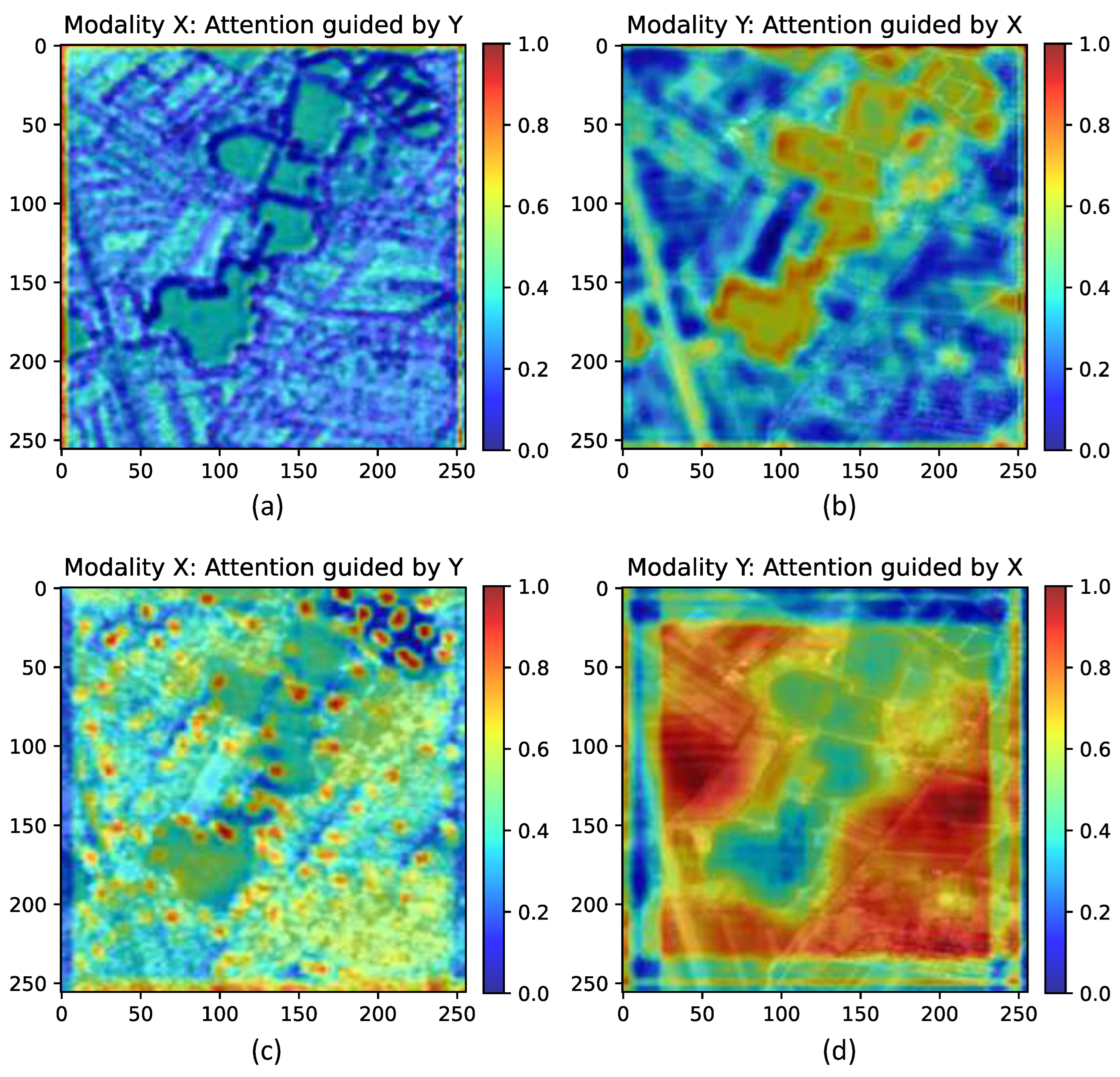

To validate the effectiveness of the CIA module, we conducted a visual analysis of the network outputs.

Figure 15 illustrates the changes in the attention maps of optical and SAR images before and after one CIA module operation.

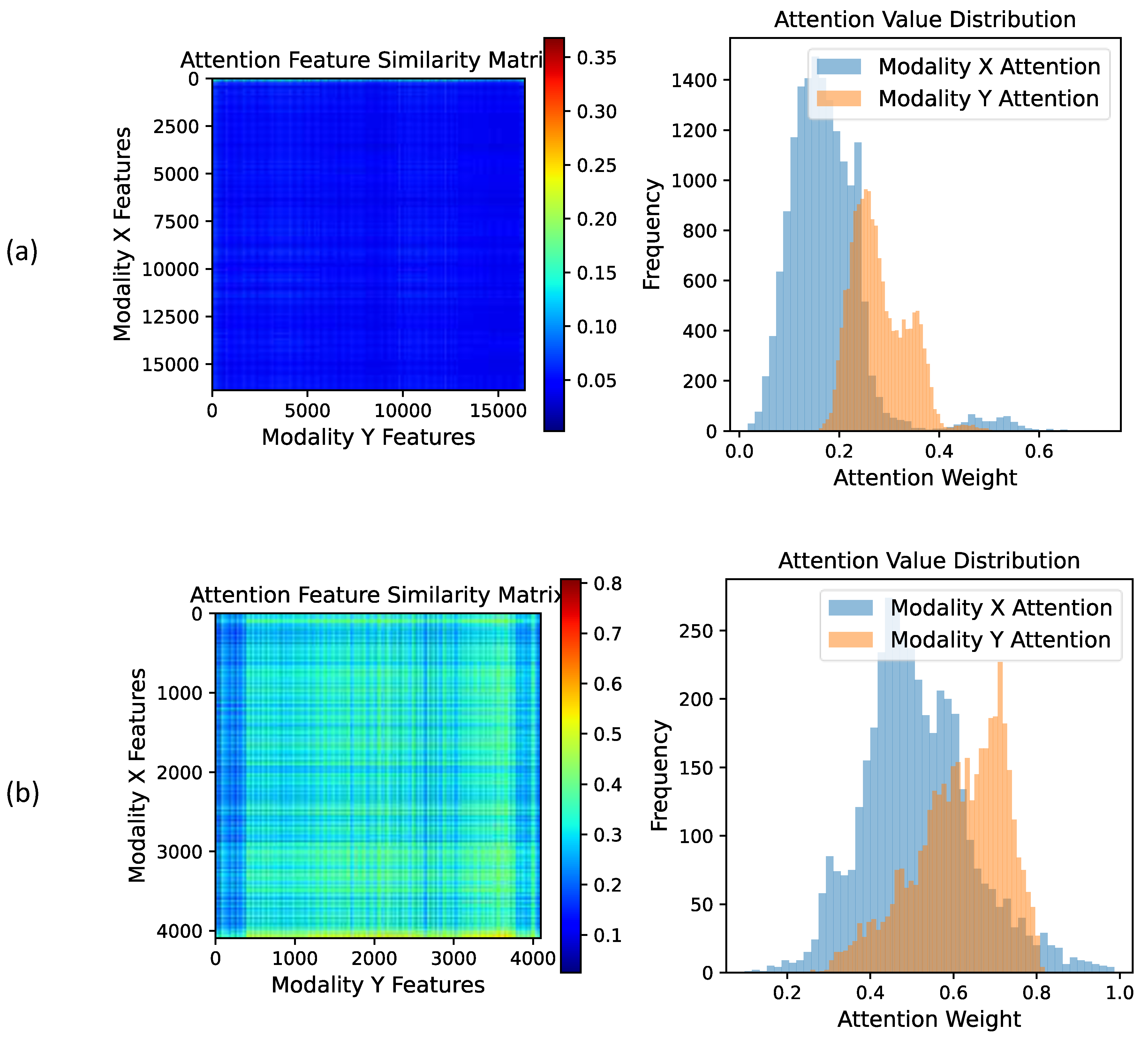

The results demonstrate that, without CIA, the attention maps of both modalities primarily focus on salient regions within their respective images, yet these regions exhibit significant discrepancies. For instance, salient regions in optical images focus on texture-rich areas, whereas SAR images emphasize regions with strong reflectivity. After CIA processing, however, the attention map of the optical image begins to converge towards salient regions of the SAR image and vice versa. This indicates that the CIA module enables mutual guidance between modalities, allowing the network to learn complementary salient information and extract more consistent representations. Furthermore, Figure 16 presents the post-CIA attention value distribution and feature similarity matrix. The distribution of the attention weights reveals that, following the application of the CIA module, the alignment between modalities is significantly enhanced, as the distribution of the attention weights across different modalities becomes increasingly similar. By directing each modality to focus on the same salient regions as the other modality, the CIA module facilitates the extraction of more repeatable feature points and the generation of more similar feature vectors for the same point, which is crucial for multimodal image registration. The similarity matrix indicates a significant increase in cross-modal feature similarity after CIA processing, thereby demonstrating the module’s ability to extract more consistent features and, consequently, improving the accuracy of multimodal image registration.
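A cross-modal feature similarity matrix of the kind discussed above can be computed as in the following sketch. Cosine similarity is assumed here as the similarity measure, and the descriptors are synthetic; neither the function name `cosine_similarity_matrix` nor the noise level is taken from the paper.

```python
import numpy as np

def cosine_similarity_matrix(desc_optical, desc_sar, eps=1e-12):
    """Return the N x M matrix of cosine similarities between two descriptor sets."""
    a = desc_optical / (np.linalg.norm(desc_optical, axis=1, keepdims=True) + eps)
    b = desc_sar / (np.linalg.norm(desc_sar, axis=1, keepdims=True) + eps)
    return a @ b.T

rng = np.random.default_rng(0)
# Five hypothetical 256-dimensional optical descriptors and their SAR counterparts,
# modelled as slightly perturbed copies to mimic consistent cross-modal features.
desc_opt = rng.normal(size=(5, 256))
desc_sar = desc_opt + 0.1 * rng.normal(size=(5, 256))
similarity = cosine_similarity_matrix(desc_opt, desc_sar)
```

When the descriptors of corresponding points are consistent across modalities, the diagonal of the matrix dominates each row, which is the pattern a more effective interaction module is expected to strengthen.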

Based on these experimental analyses, we conclude that the CIA module demonstrates significant effectiveness in optical–SAR image registration. By enabling mutual guidance between modalities, it facilitates the extraction of highly consistent cross-modal features, substantially enhancing the registration accuracy.

4.10. Parameter Analysis

As introduced in Section 3.2 and Section 3.3, the proposed method relies on several key hyperparameters, which can be categorized into two groups: an architectural parameter and functional parameters. The architectural parameter is the descriptor dimensionality, and the functional parameters are the weight of the descriptor loss in the total loss and the parameter that governs how many feature points are selected from the SAR image.

For the architectural parameter, we observed that the model performance remains robust across a certain range. The analysis of the descriptor dimensionality, as detailed in Table 14, indicates a consistent trend. While the 128-dimensional configuration serves as a computationally efficient baseline, increasing the dimensionality to 256 brings consistent performance gains on both datasets. Specifically, on the SEN1-2 dataset, the 256-dimensional descriptor achieves the highest NCM and repeatability, showing a noticeable improvement over the 128-dimensional version. This trend is further supported on OSdataset, where the 256-dimensional setup also attains the highest NCM and the lowest RMSE. In contrast, the 512-dimensional descriptor introduces higher computational costs without outperforming the 256-dimensional configuration and even leads to a performance degradation regarding certain metrics. Thus, we conclude that the 256-dimensional descriptor offers the best balance, enhancing the representational capacity and matching accuracy while avoiding the unnecessary overhead of higher dimensions.

Regarding the functional parameters, the first controls the weight of the descriptor loss in the loss function; a higher value increases the proportion of the descriptor loss in the total loss, potentially enhancing the network’s focus on the descriptor generation task. The second determines the number of feature points selected from the SAR image; a larger value means that fewer SAR image feature points are used, reducing the amount of SAR information introduced, while a smaller value may result in the inclusion of excessive noise points, thereby interfering with the network’s learning process.
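The roles of the two functional parameters can be illustrated with the short sketch below. It assumes that the total loss is a weighted sum of a detector loss and a descriptor loss, and that SAR feature points are selected by thresholding a detection score map, which is consistent with a larger value yielding fewer points. Both assumptions, and the names `descriptor_weight` and `threshold`, are hypothetical and may differ from the formulation in Section 3.

```python
import numpy as np

def total_loss(detector_loss, descriptor_loss, descriptor_weight):
    """Weighted sum: a larger weight shifts the emphasis towards the descriptors."""
    return detector_loss + descriptor_weight * descriptor_loss

def select_sar_points(score_map, threshold):
    """Keep the (row, col) locations whose detection score exceeds the threshold."""
    rows, cols = np.nonzero(score_map > threshold)
    return np.stack([rows, cols], axis=1)

rng = np.random.default_rng(0)
score_map = rng.uniform(size=(32, 32))   # stand-in for a SAR keypoint score map
# A higher threshold keeps fewer candidates; a lower one admits more, noisier points.
counts = [len(select_sar_points(score_map, t)) for t in (0.2, 0.5, 0.8)]
```

The sketch makes the trade-off explicit: the weight balances the two subtasks inside a single objective, while the selection parameter trades the amount of SAR information against the risk of admitting noisy points.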

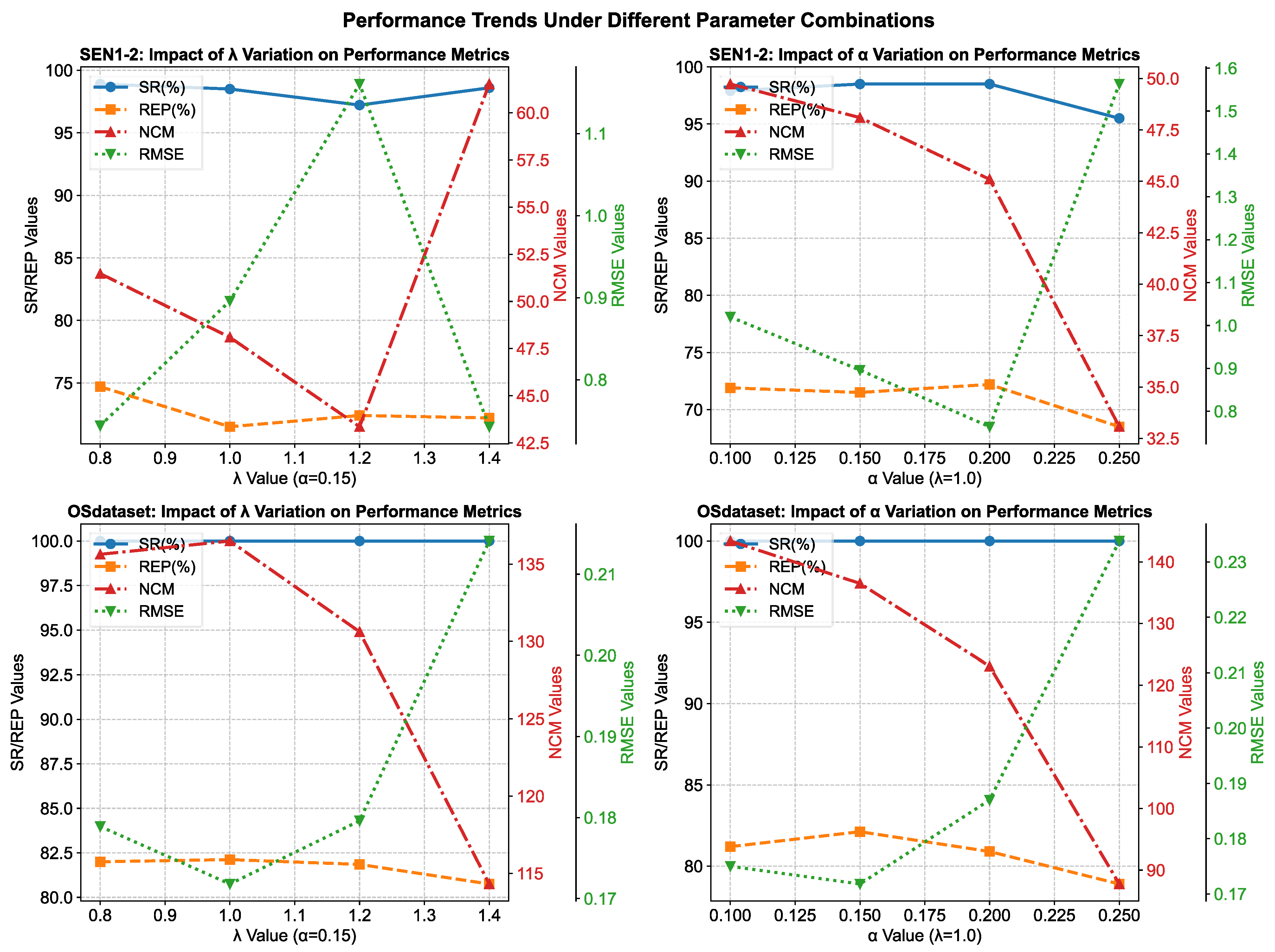

Through systematic ablation studies on the SEN1-2 dataset, we investigated the influence of the two functional parameters on model performance (Figure 17). The experimental results demonstrate that both parameters impact the matching accuracy, yet they exhibit distinct effects across different metrics.

For the descriptor loss weight, we observe a clear performance peak. When the weight is too low, the model underfits the descriptor learning task, leading to a noticeable drop in matching accuracy. Conversely, an excessively high value causes the network to overprioritize descriptor optimization at the expense of feature detection. This indicates that the weight is crucial for balancing the two core subtasks. Similarly, the SAR feature point selection parameter exhibits a pronounced optimal range. A smaller value introduces excessive feature points, including many low-quality and noisy candidates, which disrupts the matching process. A larger value overfilters the features, resulting in insufficient information for robust matching. This demonstrates that the parameter effectively acts as a noise filter and information regulator.

Notably, on OSdataset, which was used exclusively to test generalization, the model maintained excellent performance across all parameter configurations. While the parameter settings yielding the lowest RMSE and the highest NCM on OSdataset reflect dataset-specific characteristics, we fixed both parameters, based on the comprehensive validation on SEN1-2, at the values selected on that dataset for the final evaluation. The model achieved remarkably strong performance on OSdataset even with these fixed parameters, demonstrating robust generalization capabilities.

These findings confirm that, while the optimal parameters may vary across datasets, the selected configuration provides an excellent balance between feature detection and descriptor learning, enabling robust performance across diverse scenarios.