Evaluation of CMORPH V1.0, IMERG V07A and MSWEP V2.8 Satellite Precipitation Products over Peninsular Spain and the Balearic Islands

Highlights

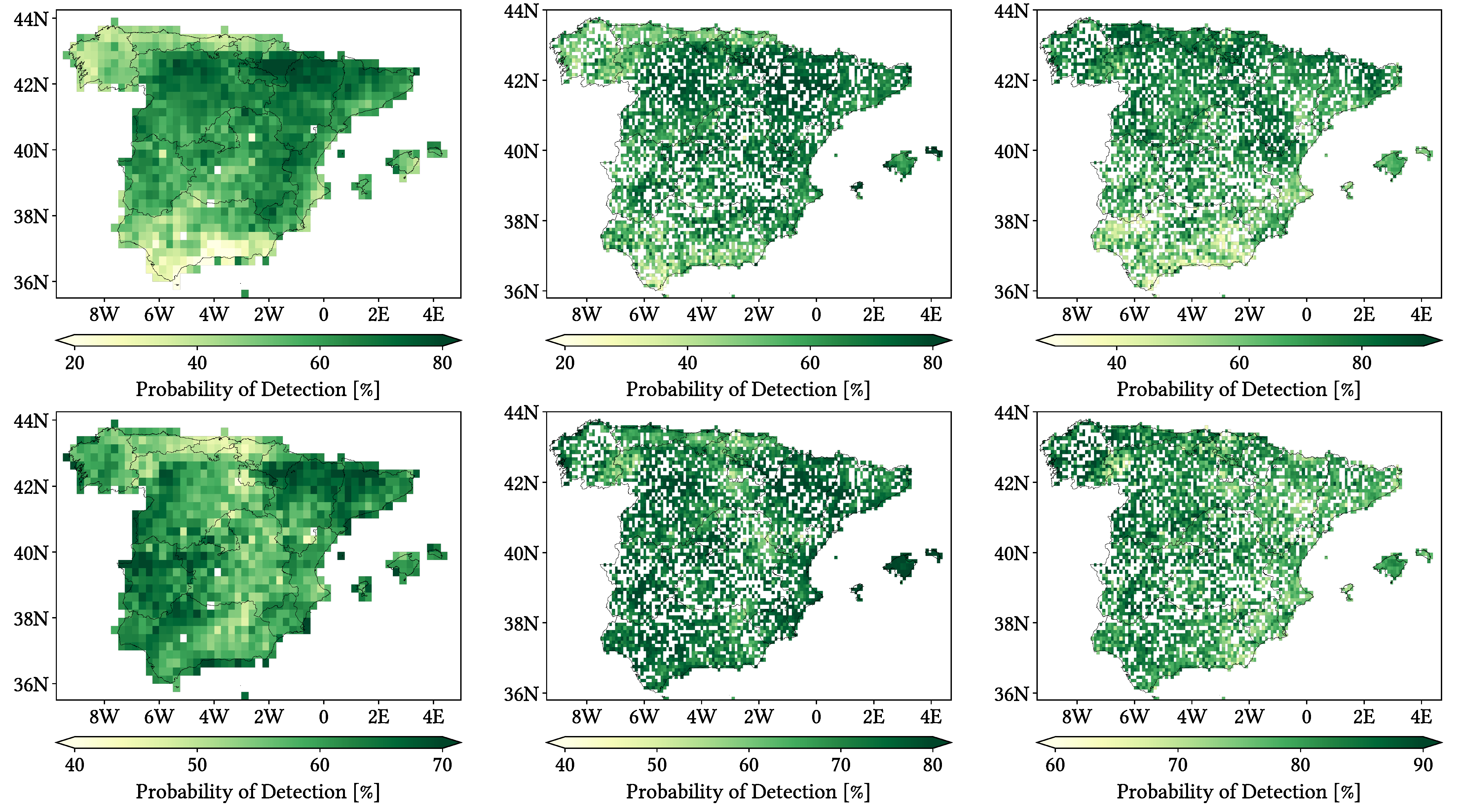

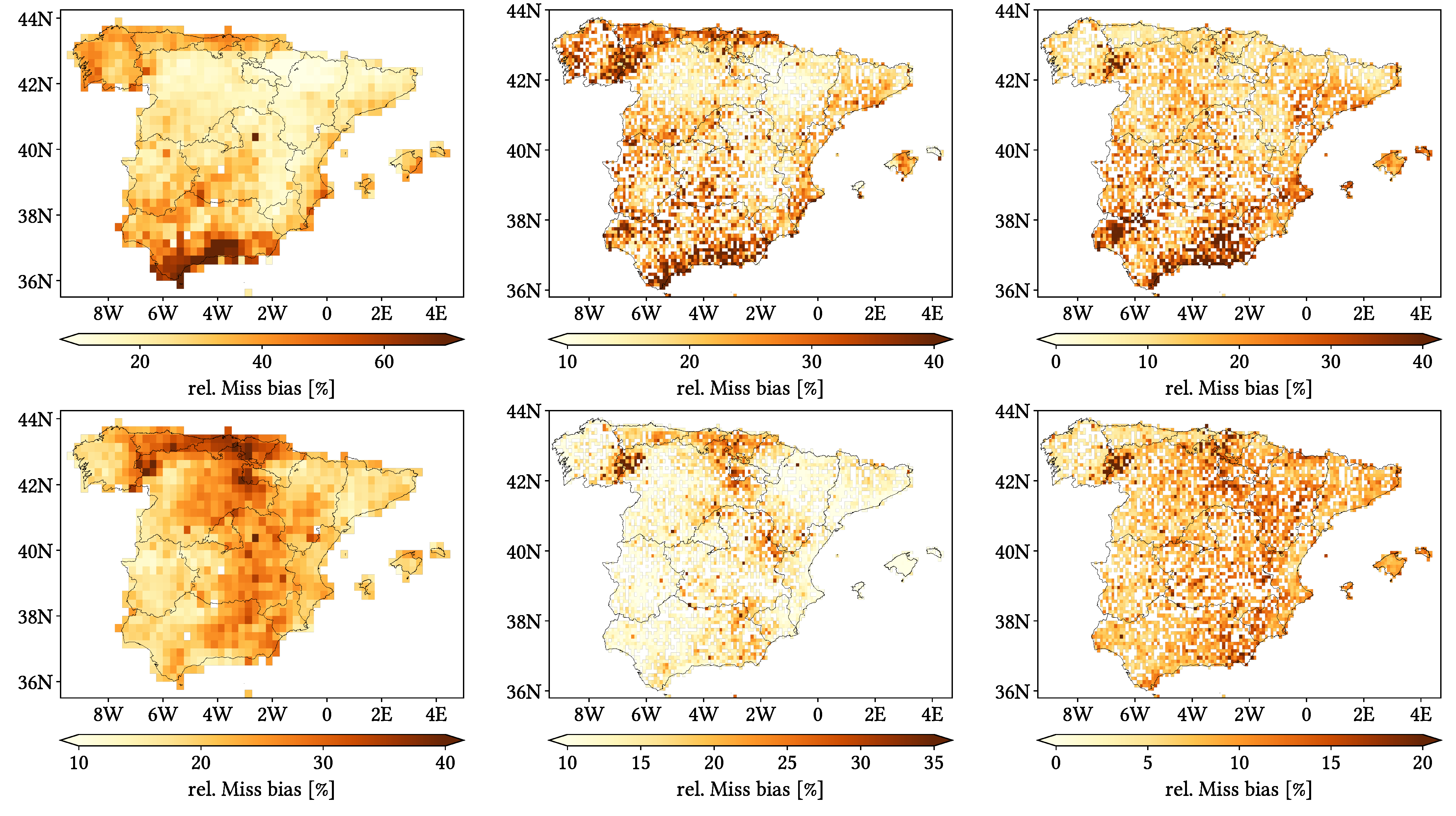

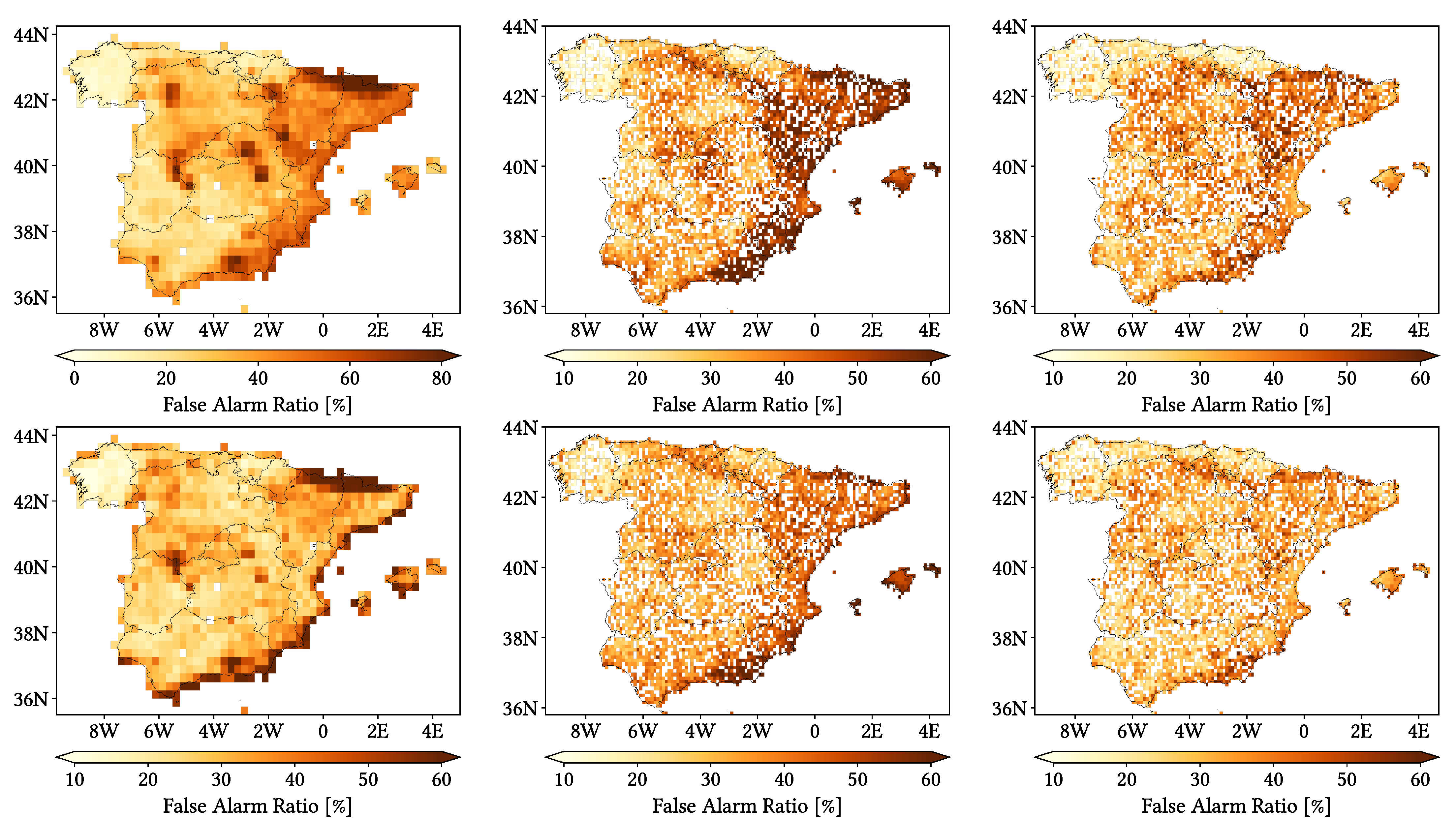

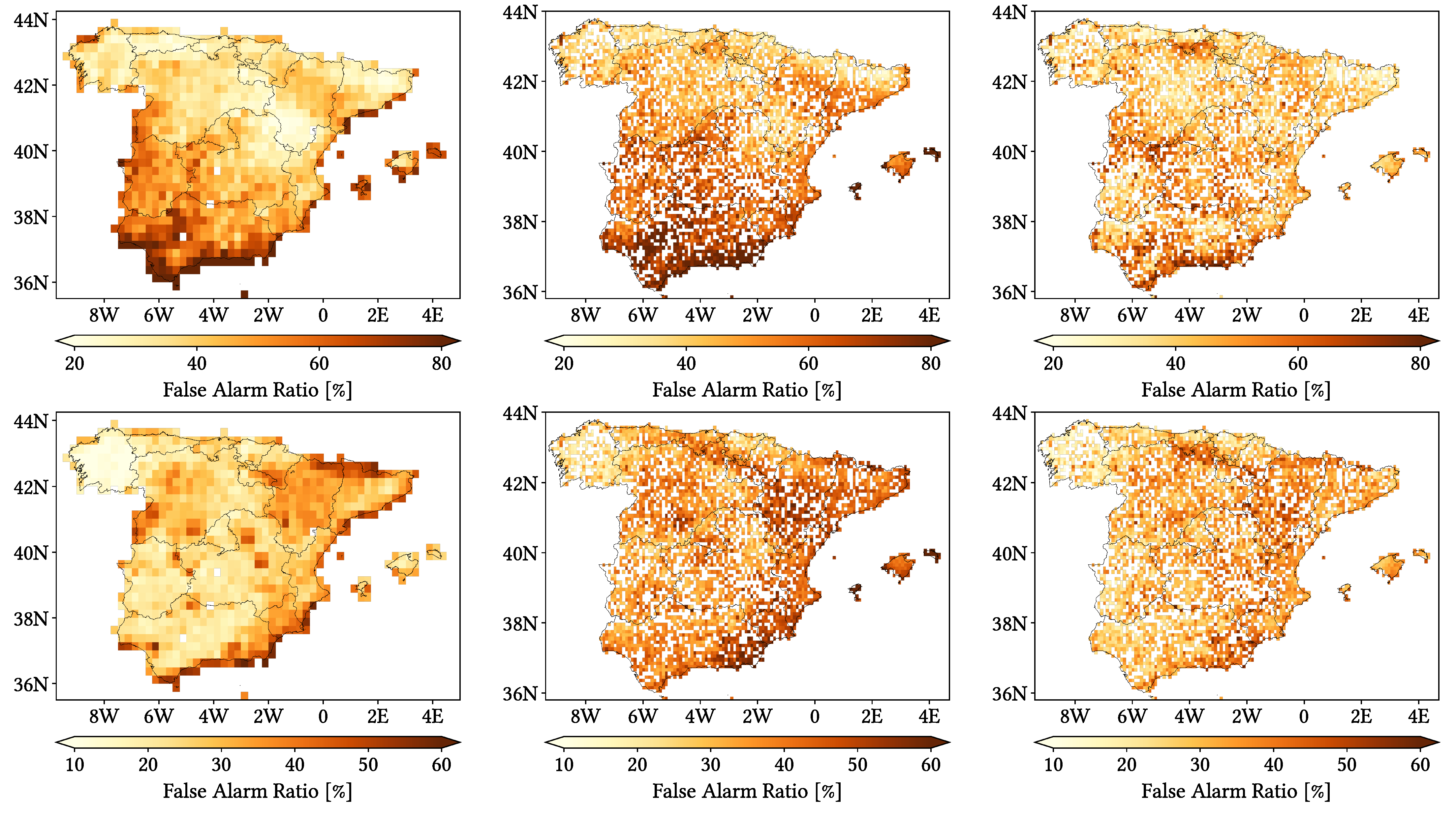

- Over our study area, CMORPH is not recommended and MSWEP is preferable to IMERG; the latter two mainly show CC and POD > 67% but FAR > 30%.

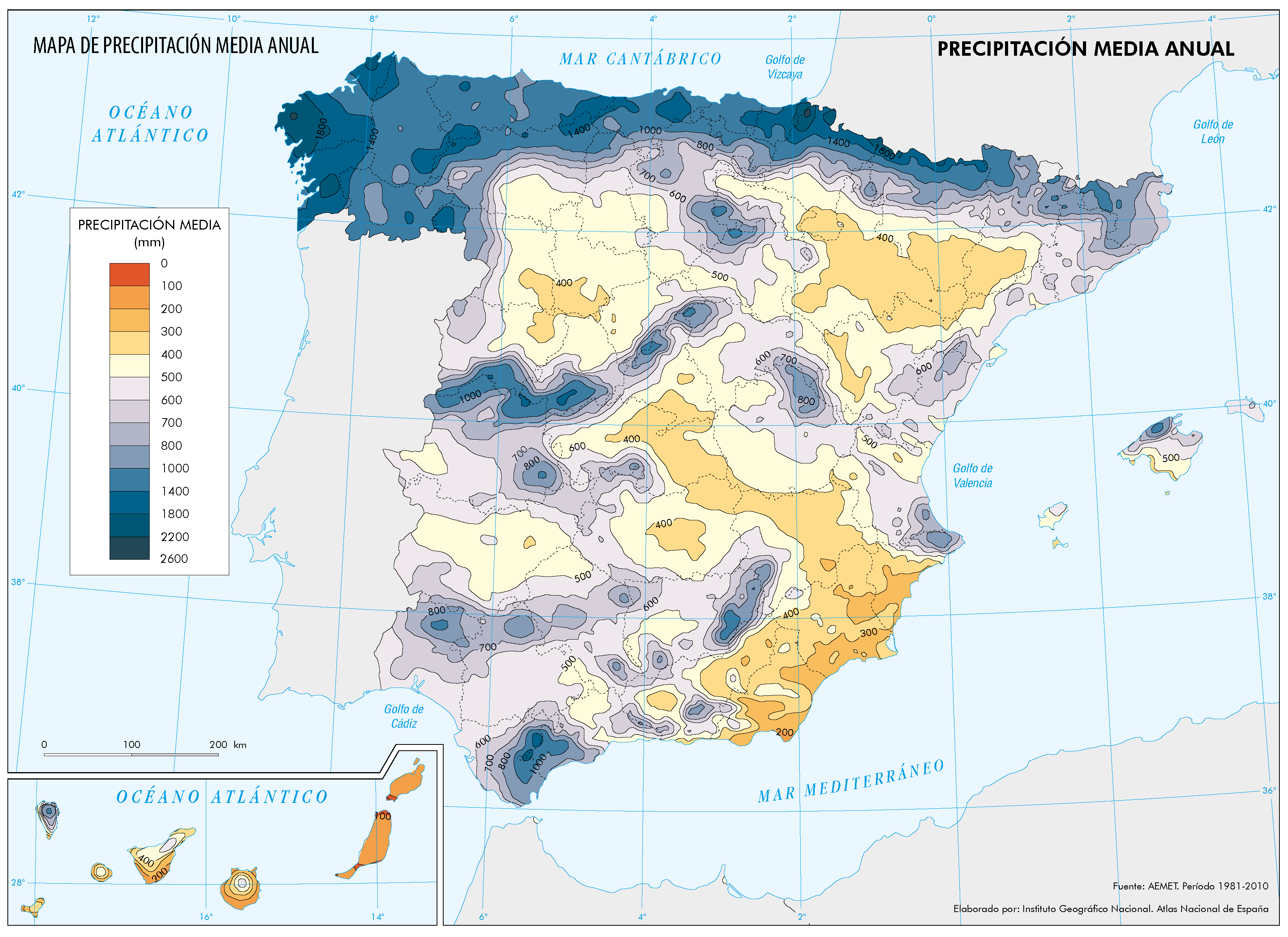

- The worst performance occurs in regions that simultaneously show high orographic complexity, annual precipitation and altitude. Heavier intensities are easily detected but notably underestimated. Performance is more predictable in spring and autumn.

- These SPPs should be used with caution.

- We recommend first analysing their performance on the specific application of interest.

Abstract

1. Introduction

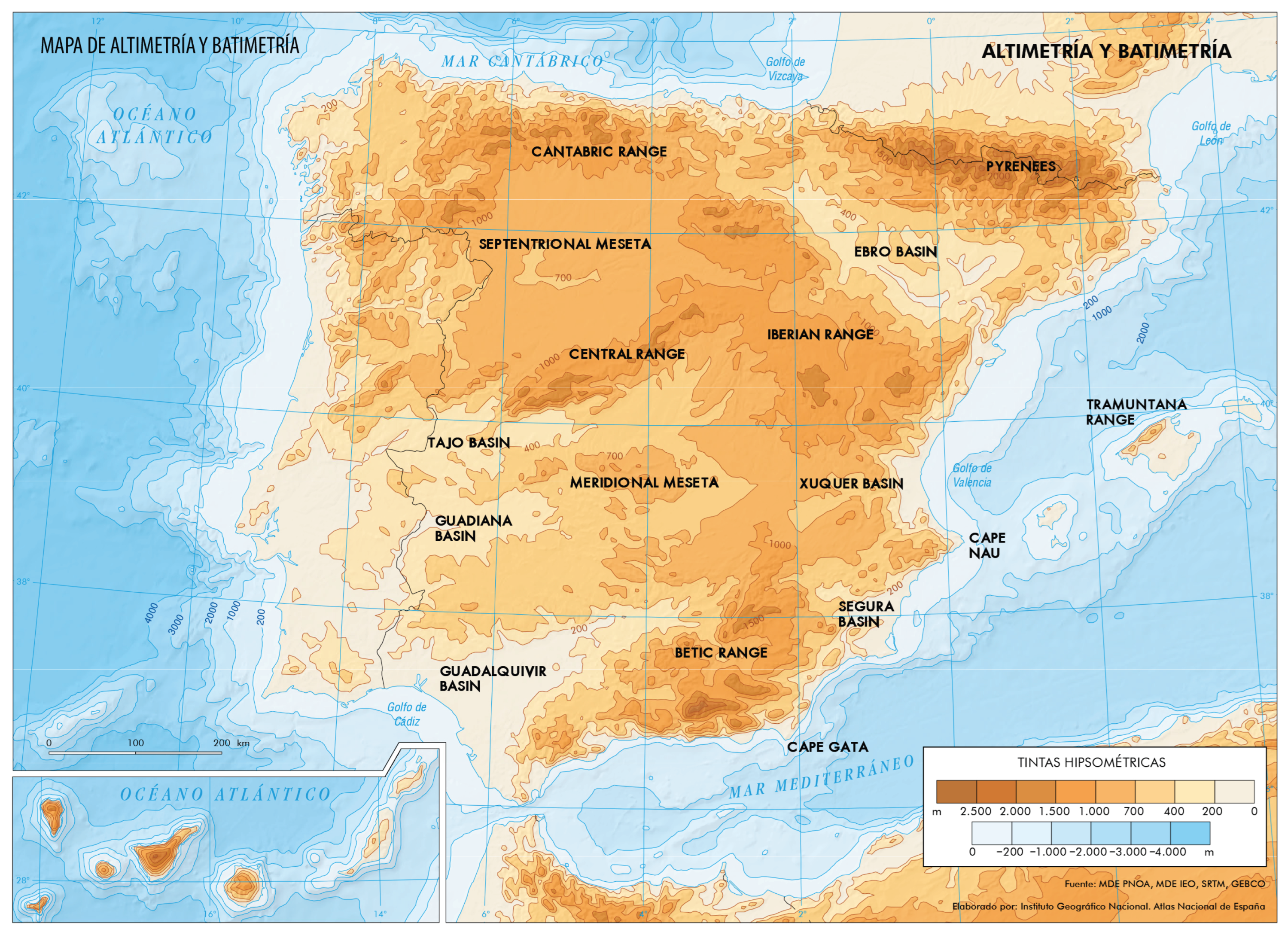

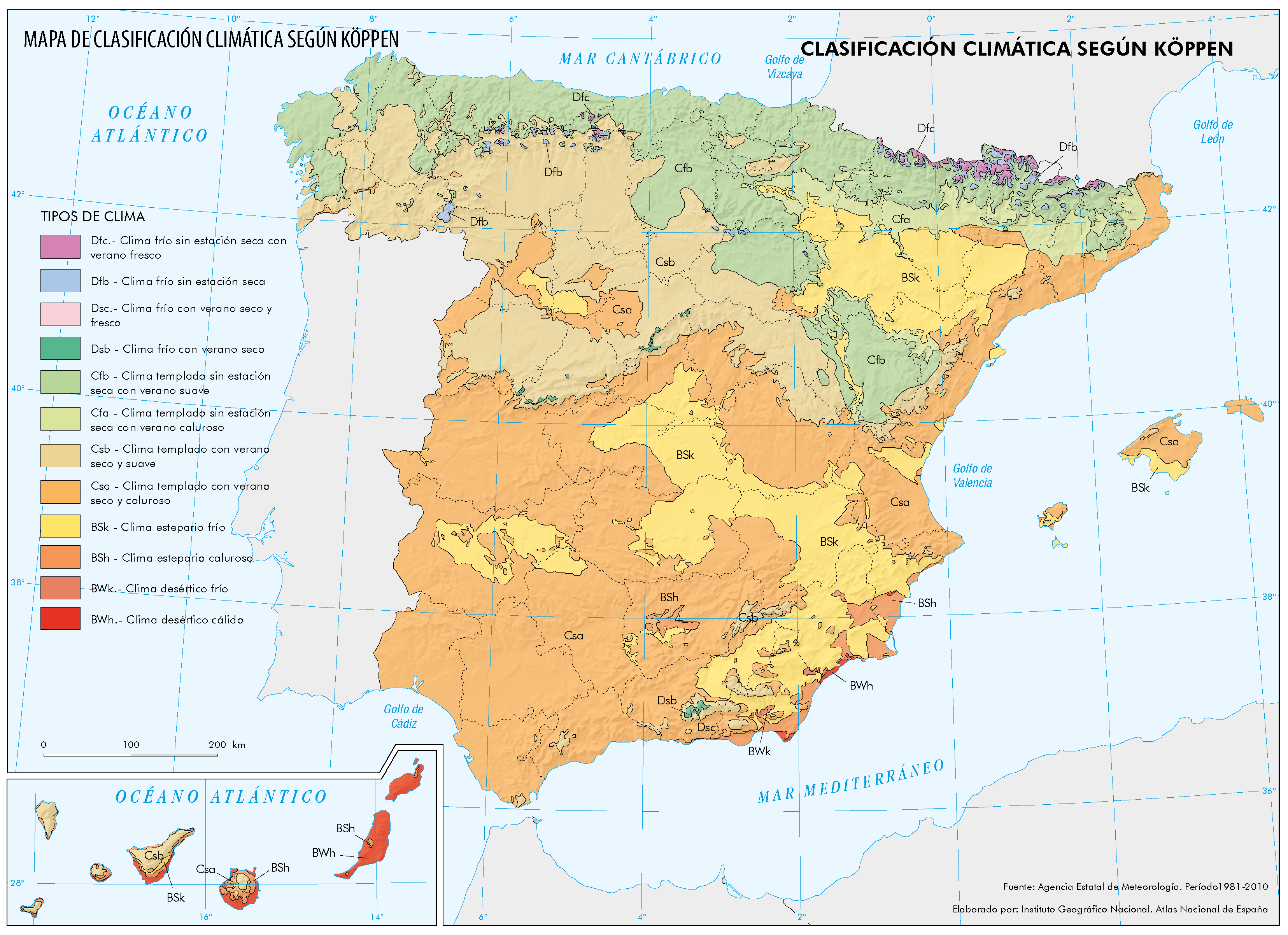

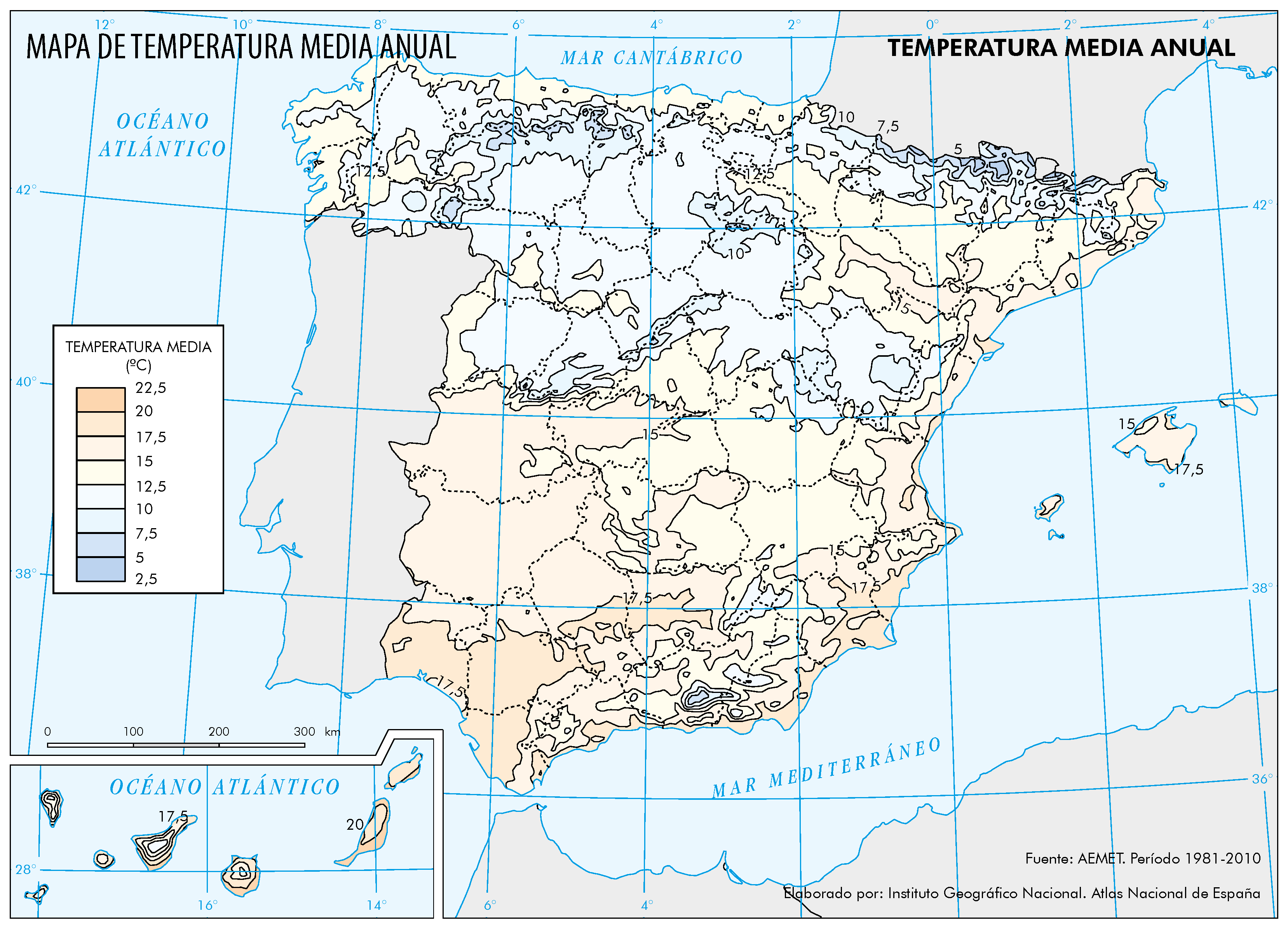

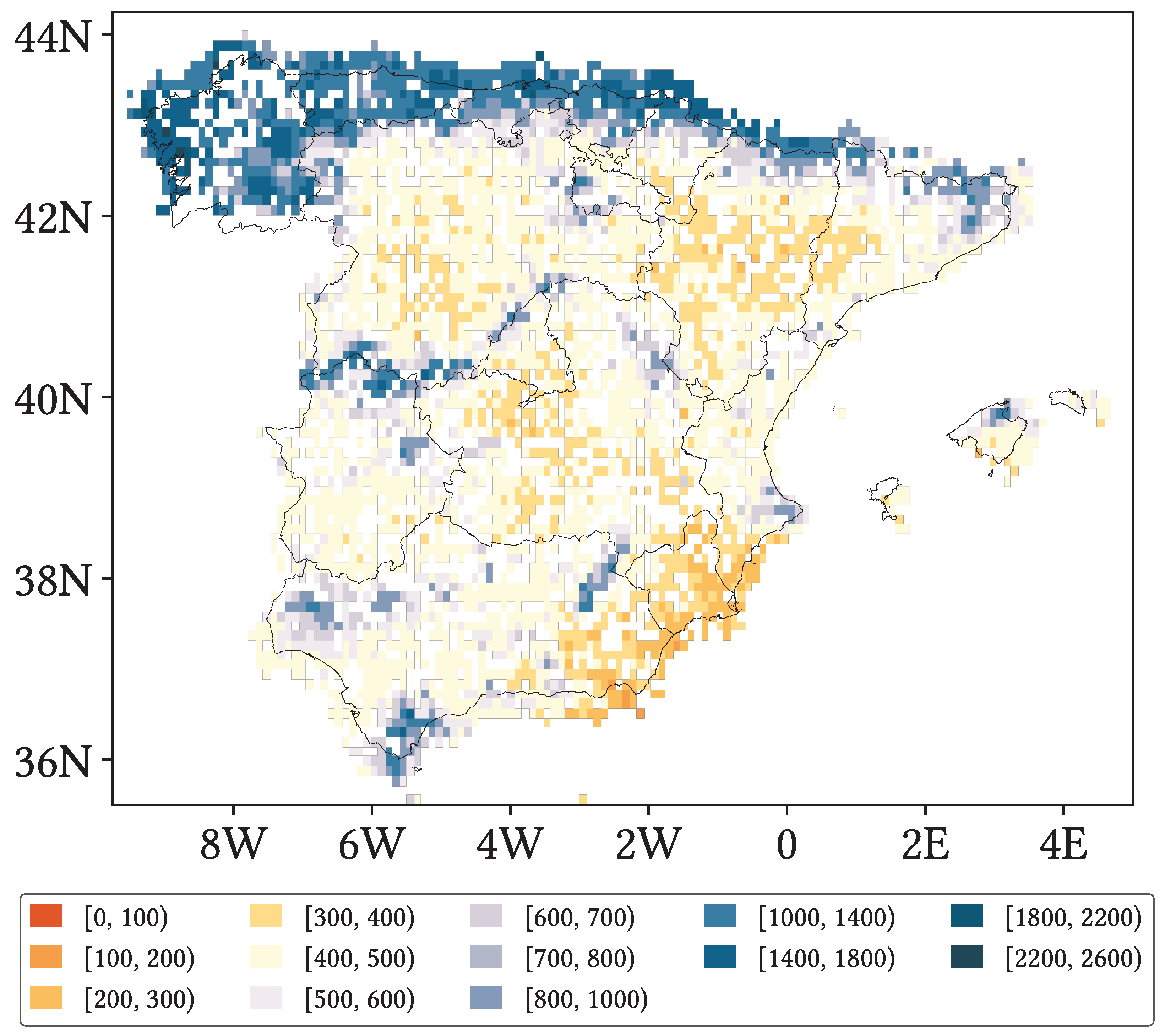

2. Study Area

3. Materials

3.1. Agencia Estatal de Meteorología (AEMET) Ground Gauge Data

3.2. NOAA CPC Climate Morphing Technique (CMORPH) Climate Data Record (CDR)

3.3. NASA Integrated Multi-SatellitE Retrievals for GPM (IMERG)

3.4. GloH2O Multi-Source Weighted-Ensemble Precipitation (MSWEP)

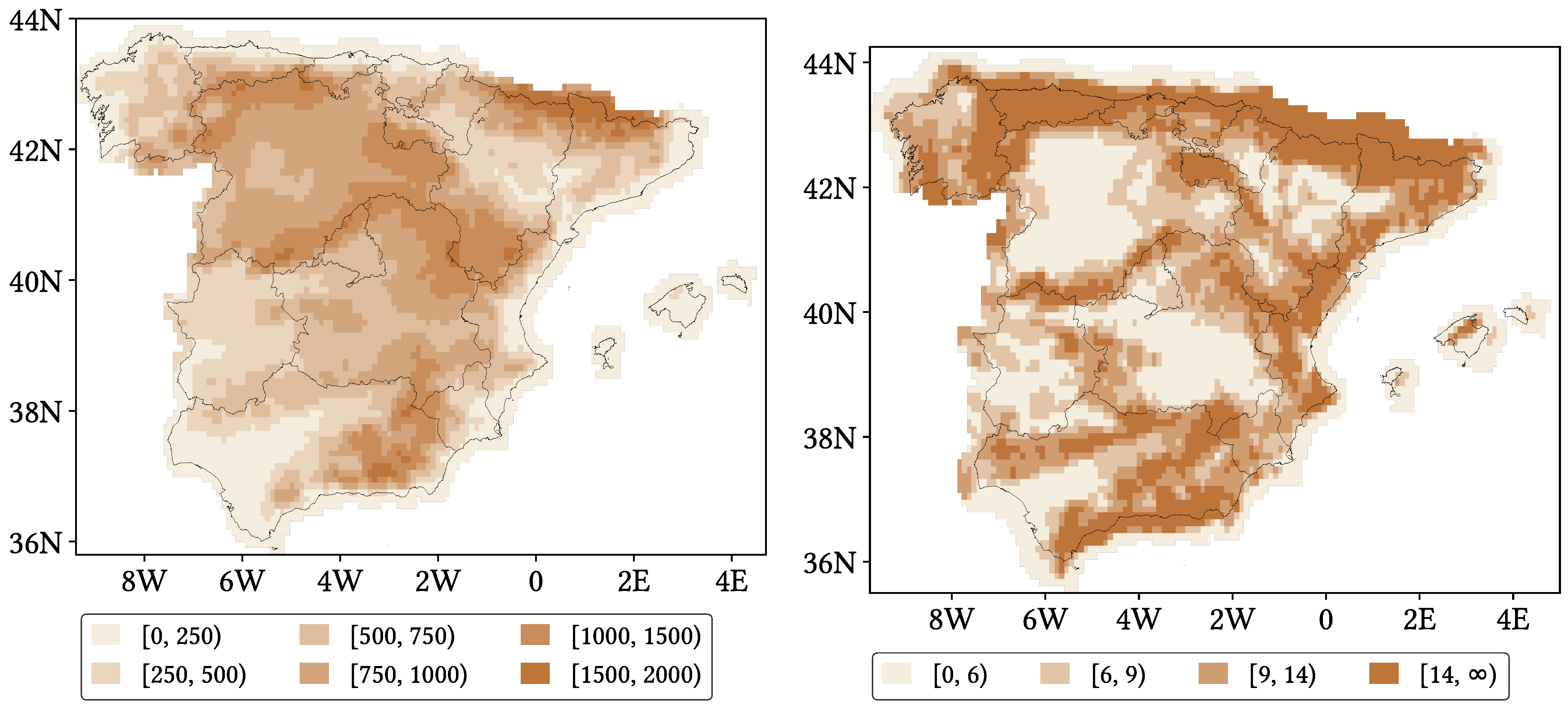

3.5. NASA Shuttle Radar Topography Mission (SRTM)

4. Method

4.1. Categorical and Statistical Metrics

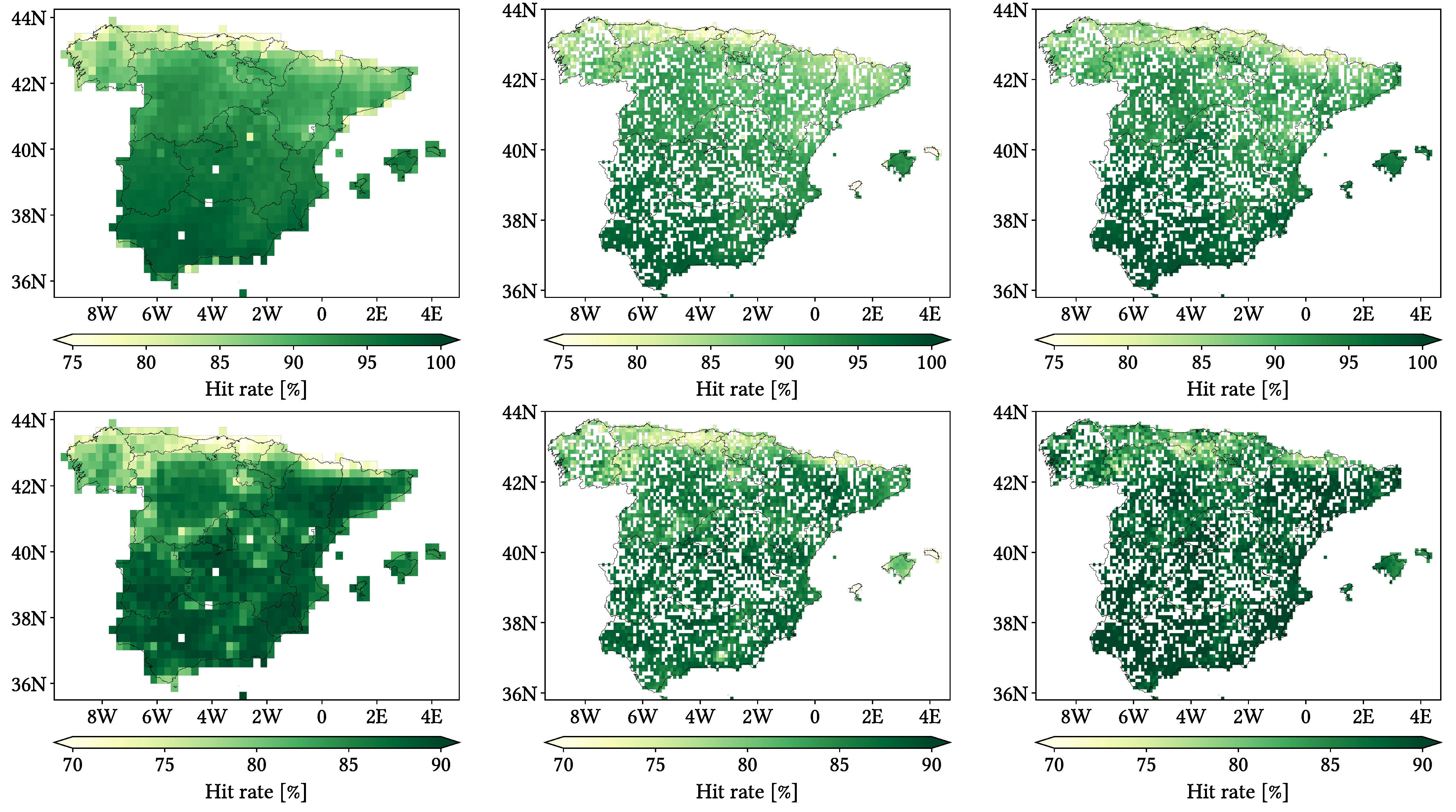

- Hit rate (HtR): overall ability of the SPP to correctly report both wet and dry events.

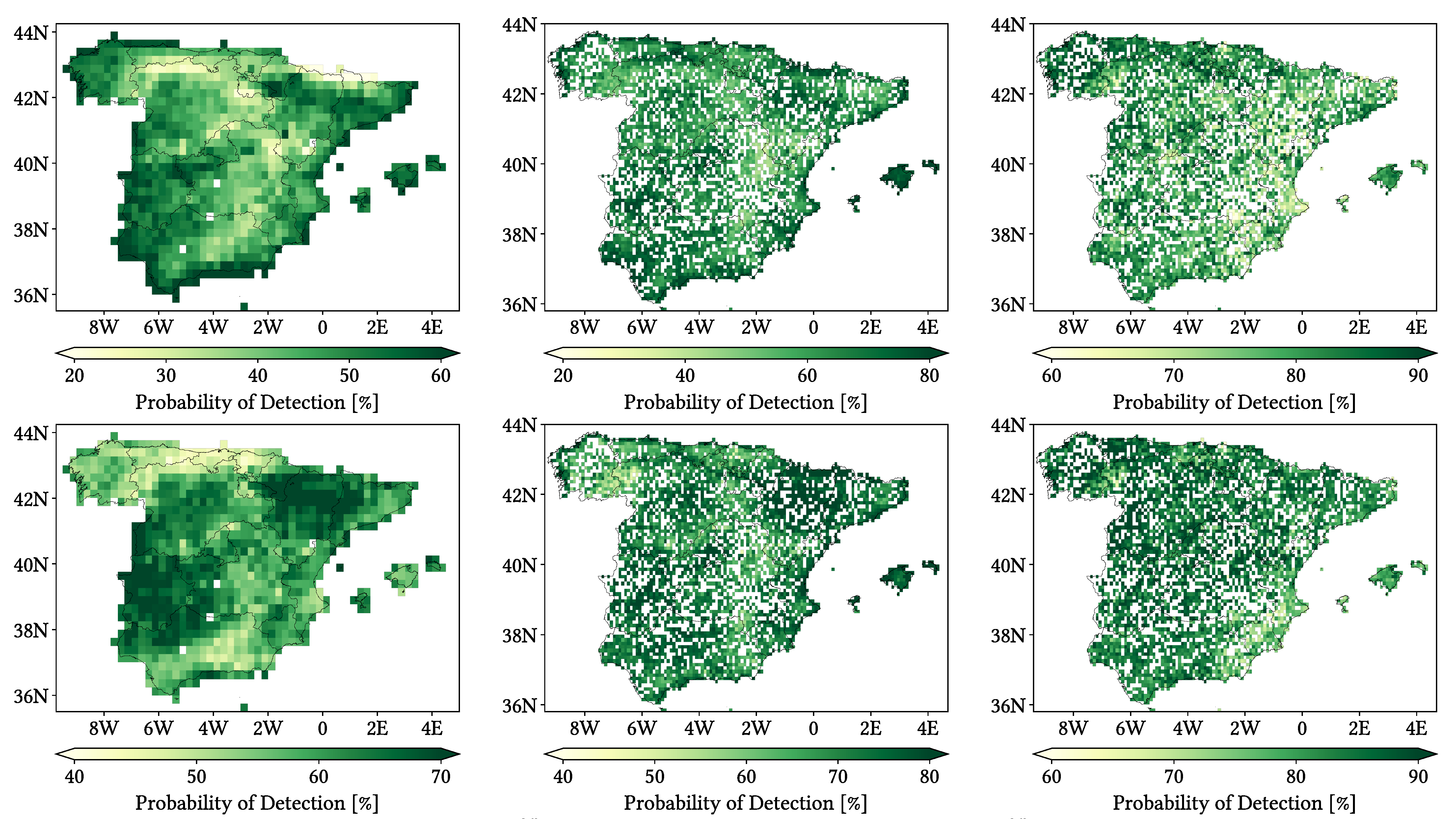

- Probability of Detection (POD): ability of the SPP to correctly detect wet events.

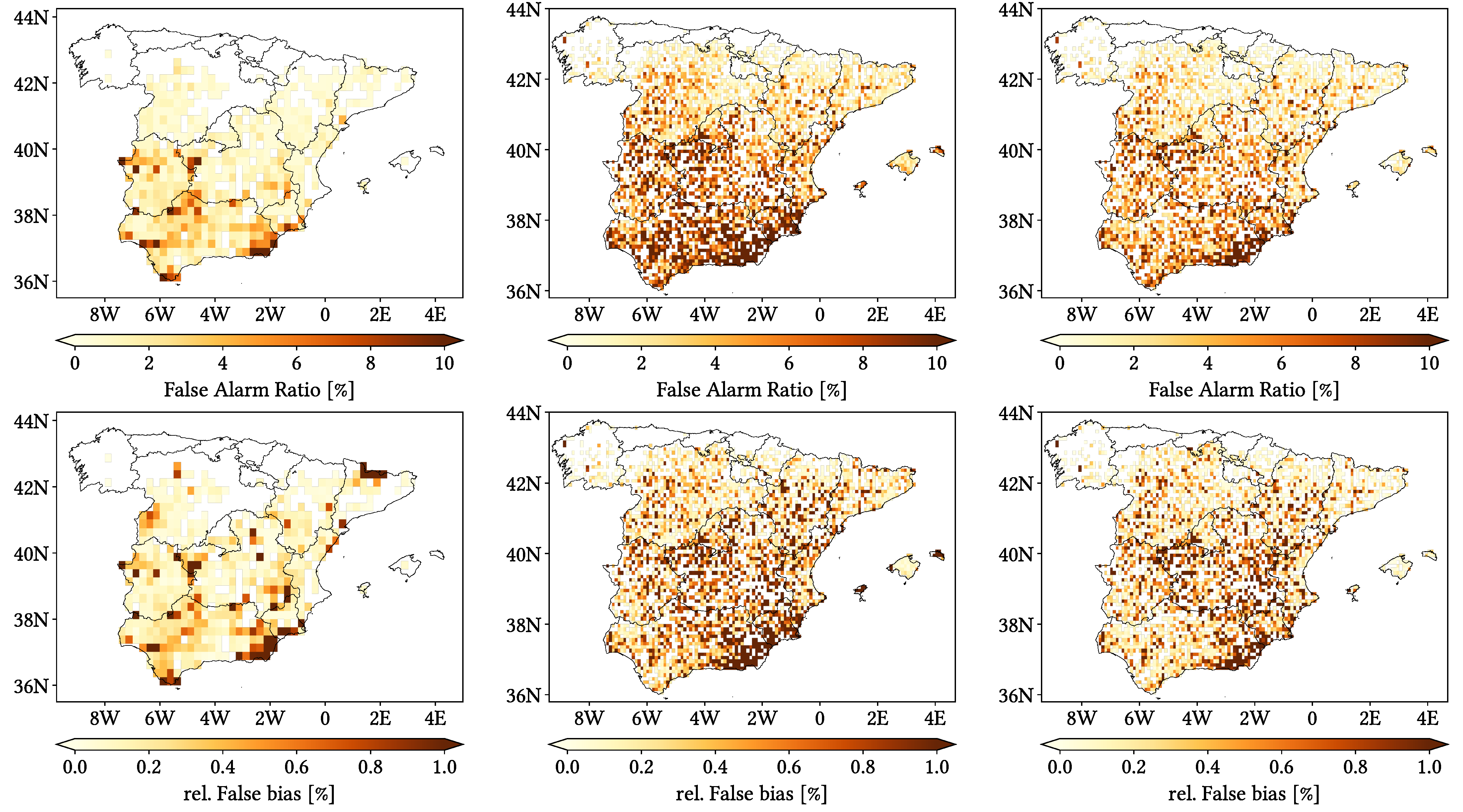

- False Alarm Ratio (FAR): fraction of the wet events reported by the SPP that are false.

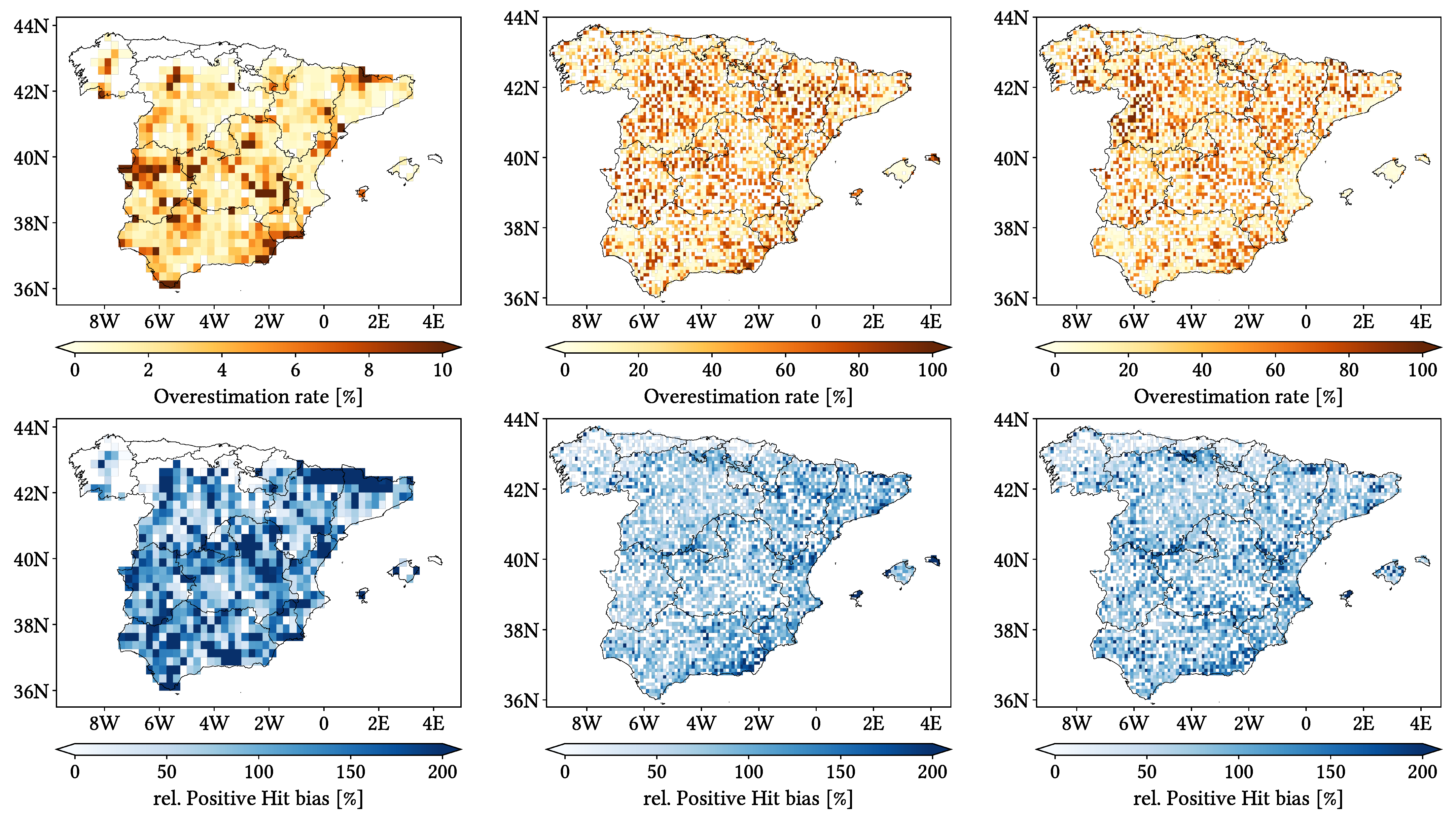

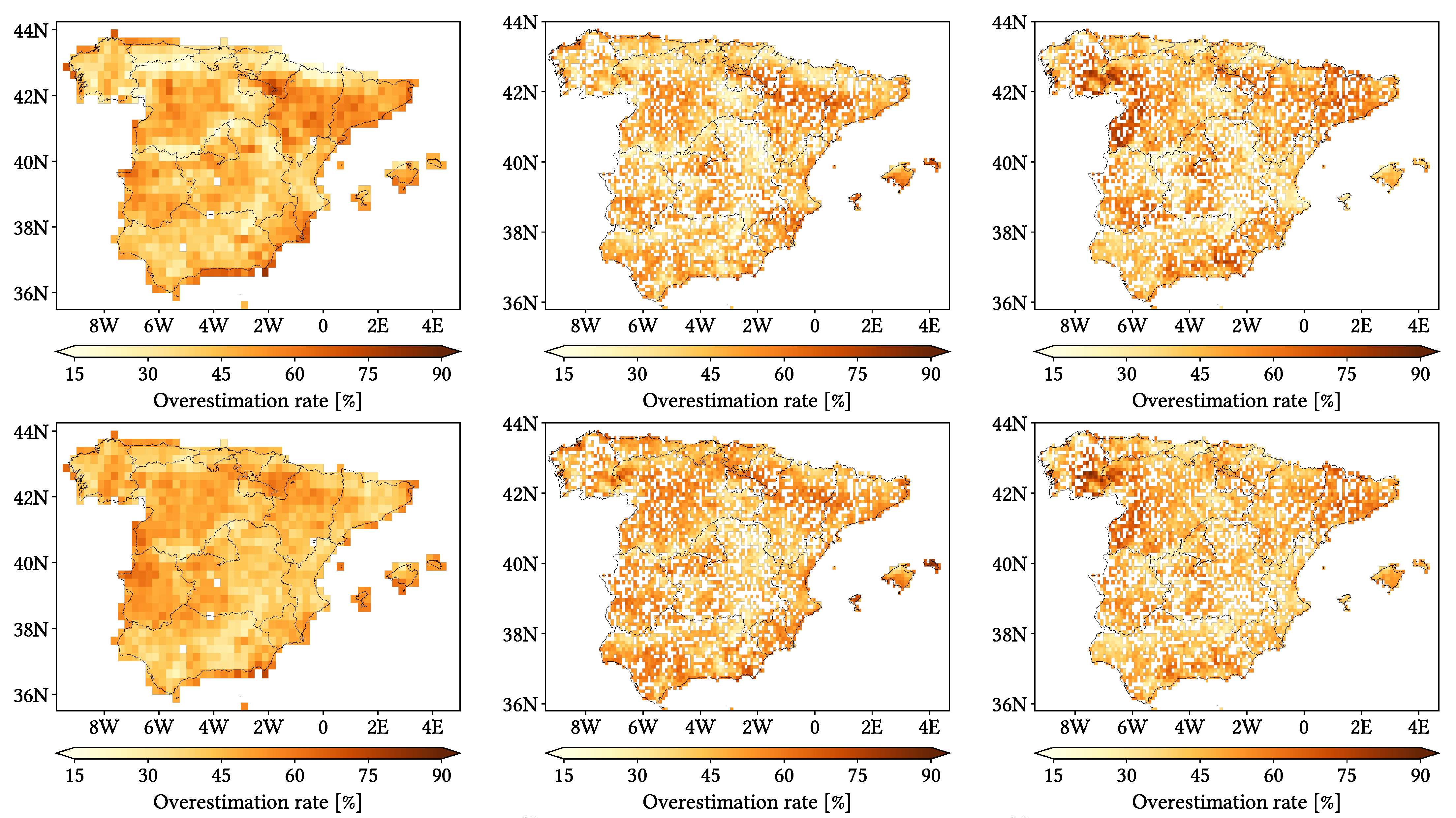

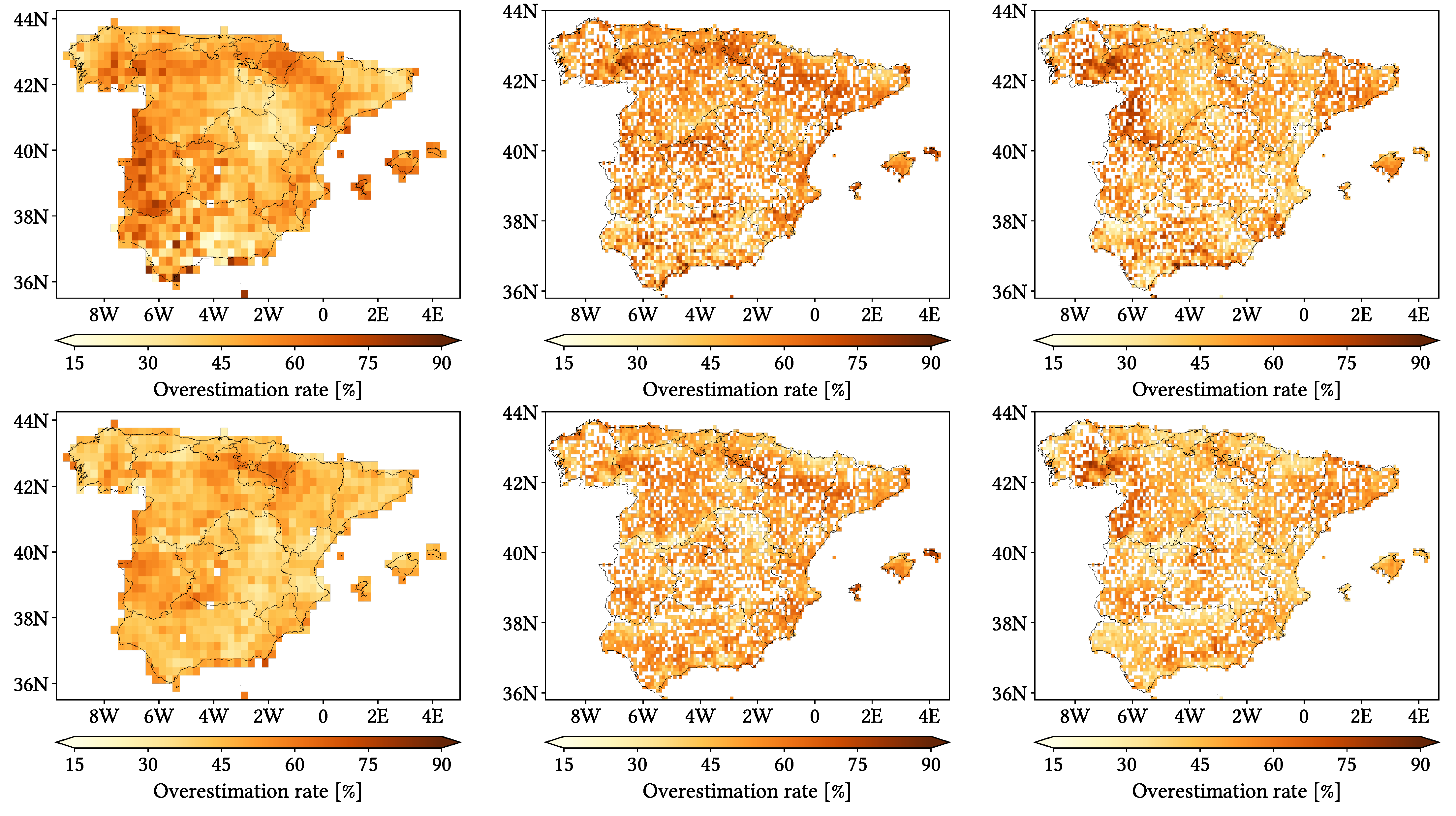

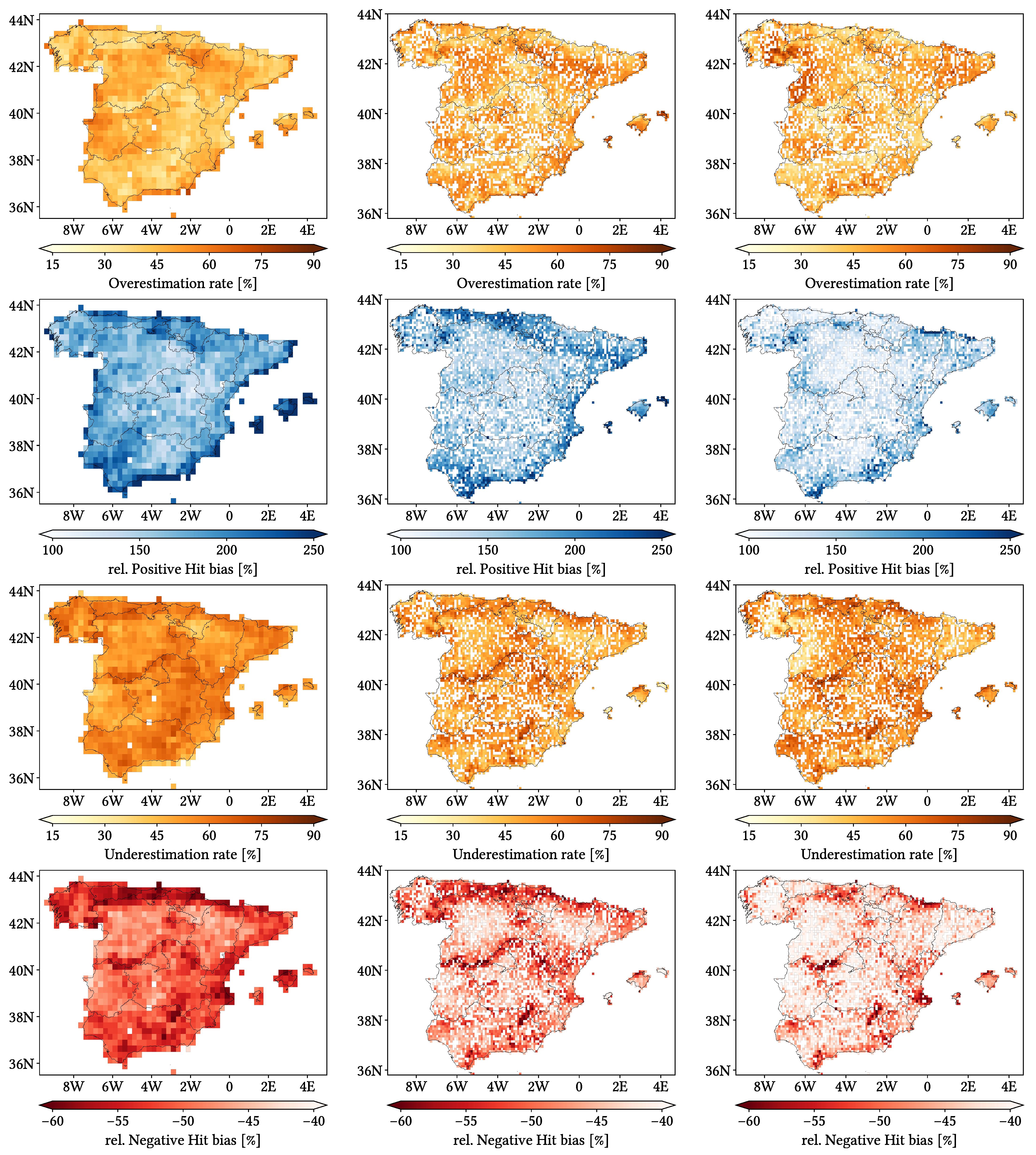

- Overestimation Rate (OvR): fraction of hit wet events that are overestimated.

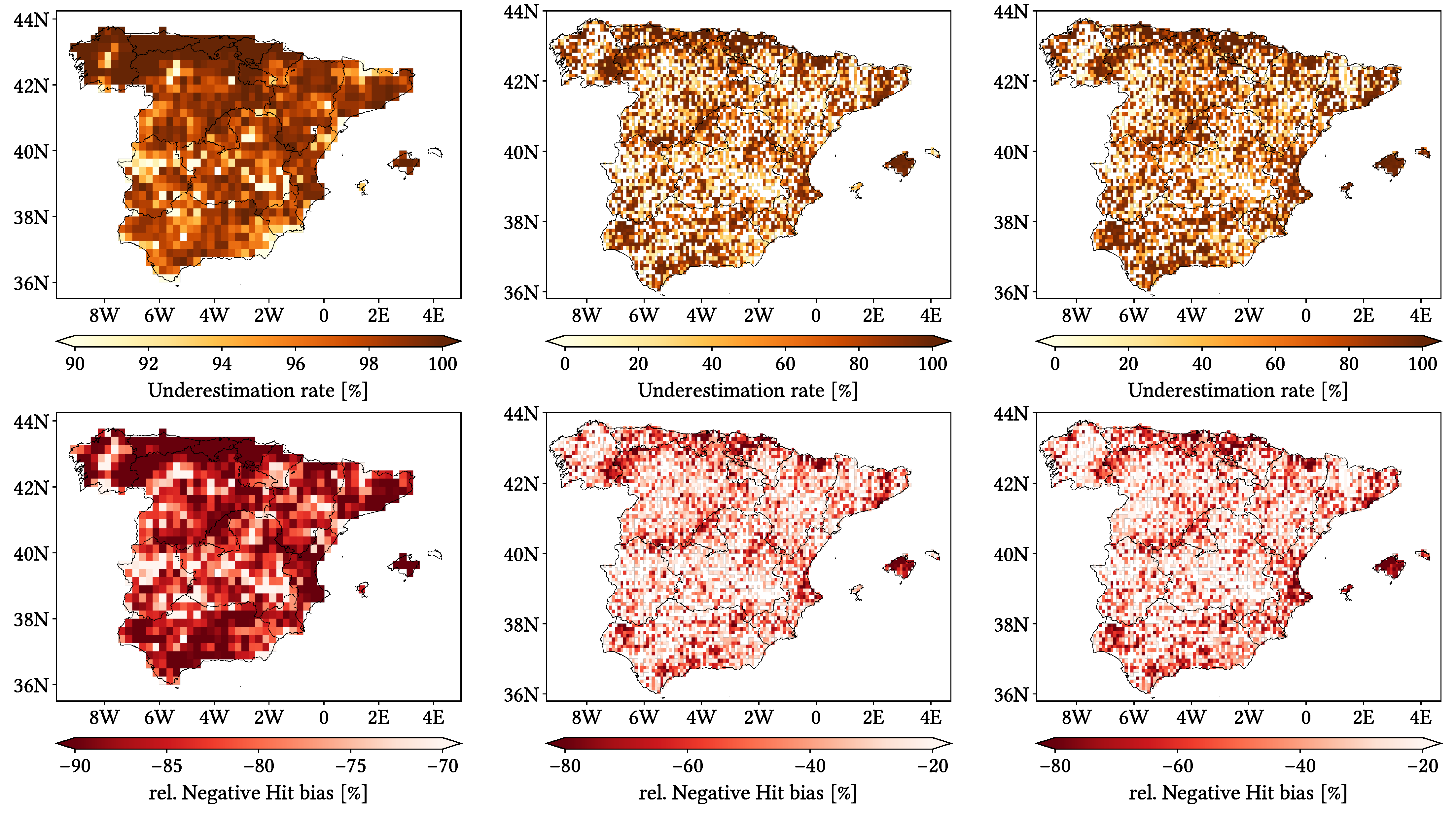

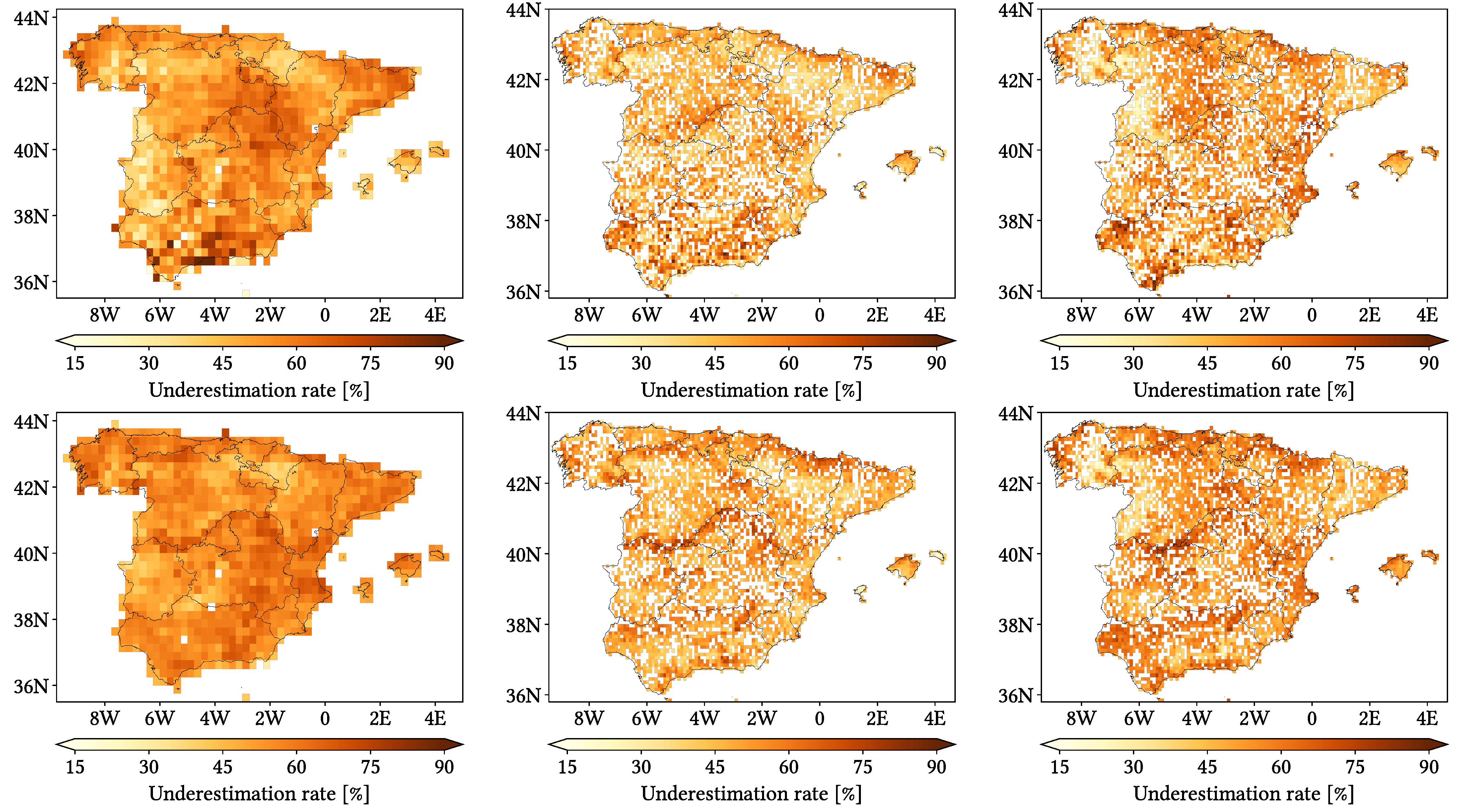

- Underestimation Rate (UdR): fraction of hit wet events that are underestimated.
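The categorical metrics listed above can be illustrated with a minimal sketch. It assumes the conventional contingency-table forms (hits, misses, false alarms and correct dry days), which may differ in detail from the formulas used in this work. The function name `categorical_metrics` and the `wet_threshold` parameter (set here to 1 mm/day) are hypothetical choices made only for illustration.

```python
import numpy as np

def categorical_metrics(ref, sat, wet_threshold=1.0):
    """Categorical metrics for one pixel from daily reference and SPP series.

    wet_threshold is an assumed wet-day threshold (mm/day), not a value
    taken from the article.
    """
    ref = np.asarray(ref, dtype=float)
    sat = np.asarray(sat, dtype=float)

    ref_wet = ref >= wet_threshold
    sat_wet = sat >= wet_threshold

    hits = ref_wet & sat_wet            # wet in both datasets
    misses = ref_wet & ~sat_wet         # wet in reference only
    false_alarms = ~ref_wet & sat_wet   # wet in SPP only
    correct_dry = ~ref_wet & ~sat_wet   # dry in both datasets

    def ratio(num, den):
        # Return NaN instead of dividing by zero when a category is empty.
        return float(num) / float(den) if den > 0 else float("nan")

    n_hit = hits.sum()
    return {
        "HtR": ratio(n_hit + correct_dry.sum(), ref.size),
        "POD": ratio(n_hit, n_hit + misses.sum()),
        "FAR": ratio(false_alarms.sum(), n_hit + false_alarms.sum()),
        "OvR": ratio((sat[hits] > ref[hits]).sum(), n_hit),
        "UdR": ratio((sat[hits] < ref[hits]).sum(), n_hit),
    }

# Toy daily series (mm/day) for a single pixel.
ref = [0, 5, 10, 0, 2, 0, 8, 0]
sat = [0, 7, 4, 3, 0, 0, 8, 0]
metrics = categorical_metrics(ref, sat)
print(metrics)
```

In this toy series there are three hits, one miss, one false alarm and three correct dry days, so HtR and POD are 0.75 and FAR is 0.25. Note that OvR and UdR need not sum to one, because a hit day can match the reference exactly.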

4.2. Error Components

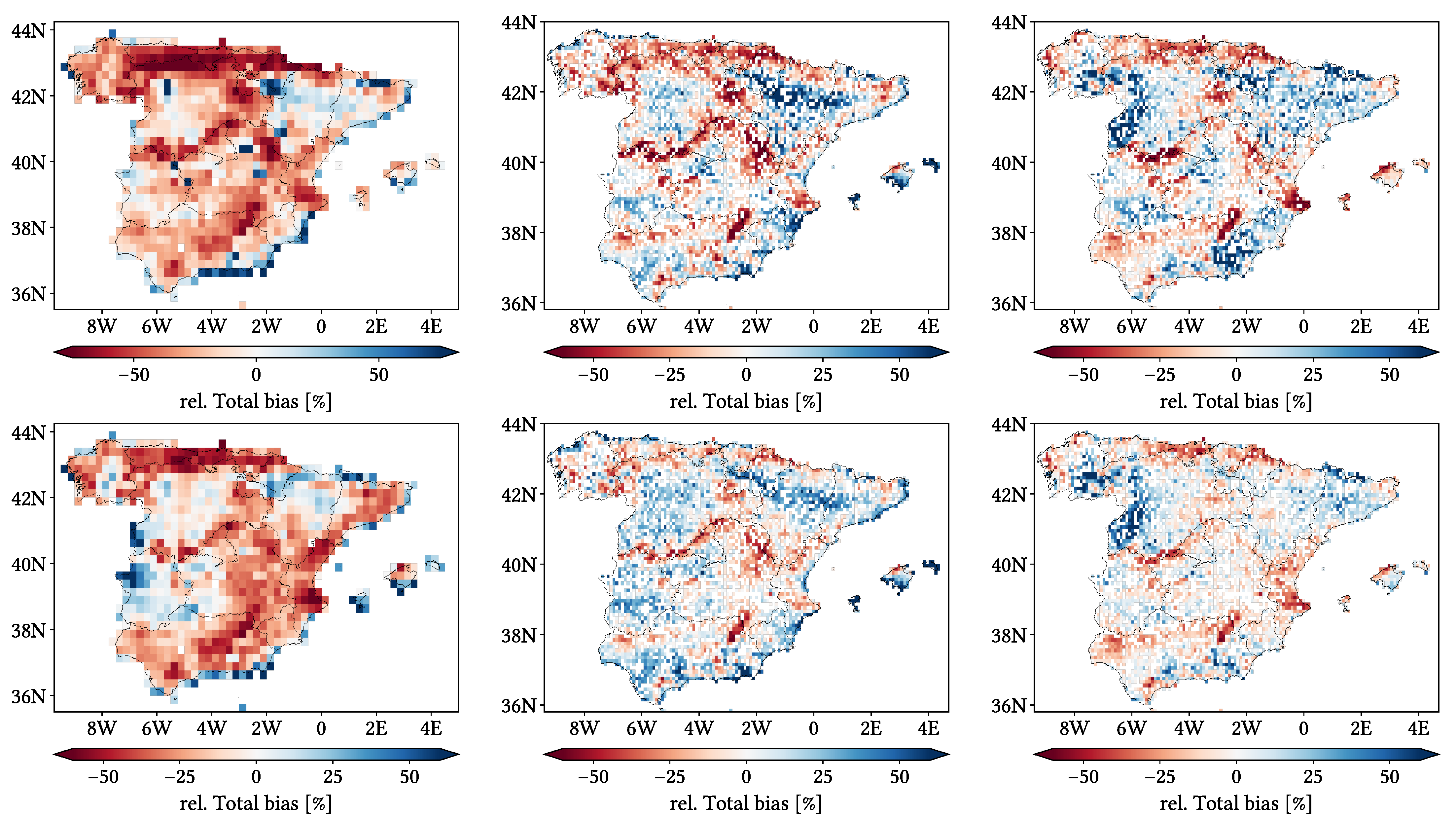

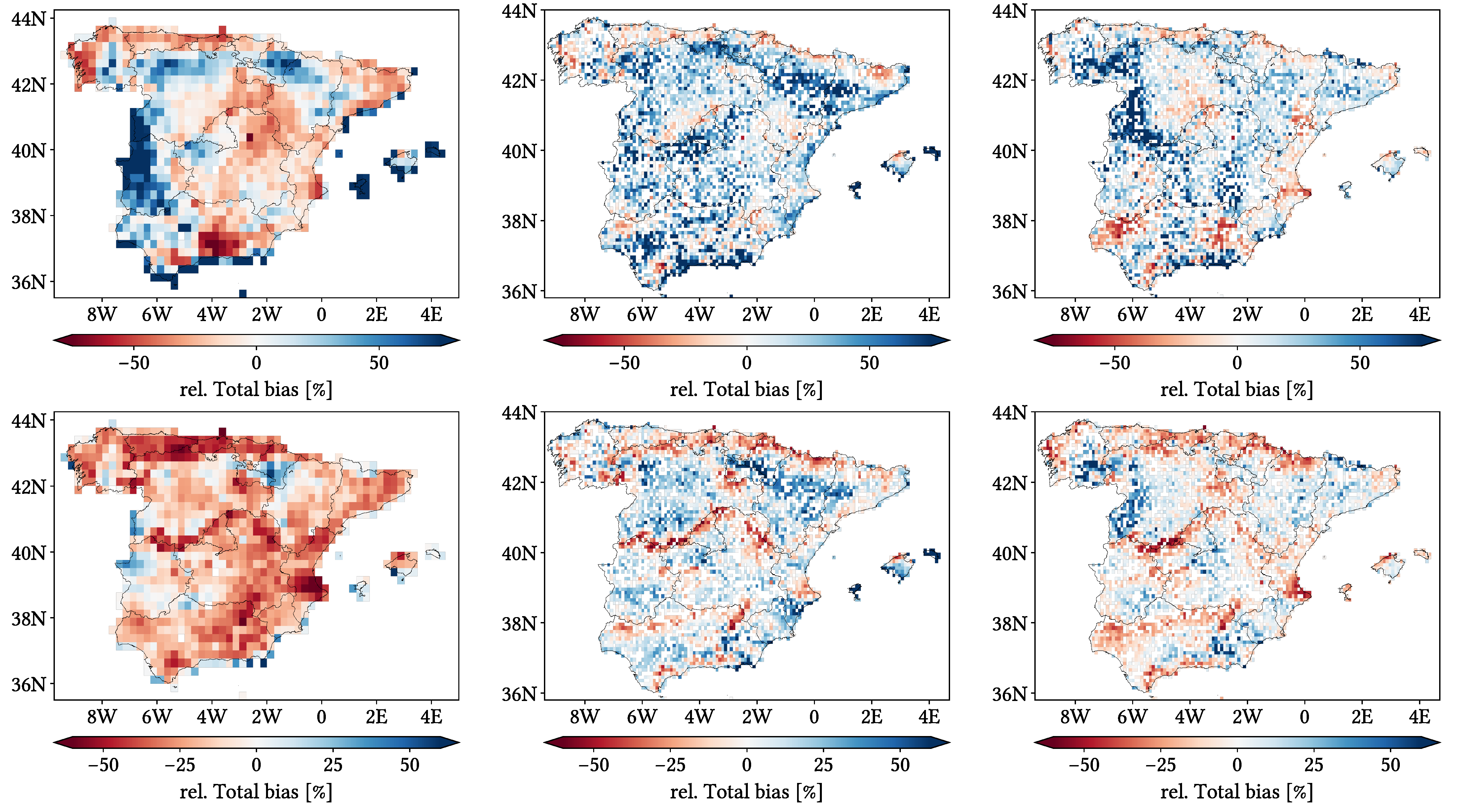

- Relative Total bias: accumulated Total bias divided by accumulated reference precipitation. It represents how large the accumulated error is compared to the local amount of precipitation. We would have used the usual mean relative error (error divided by reference value), but it is undefined on days when the reference precipitation is zero.

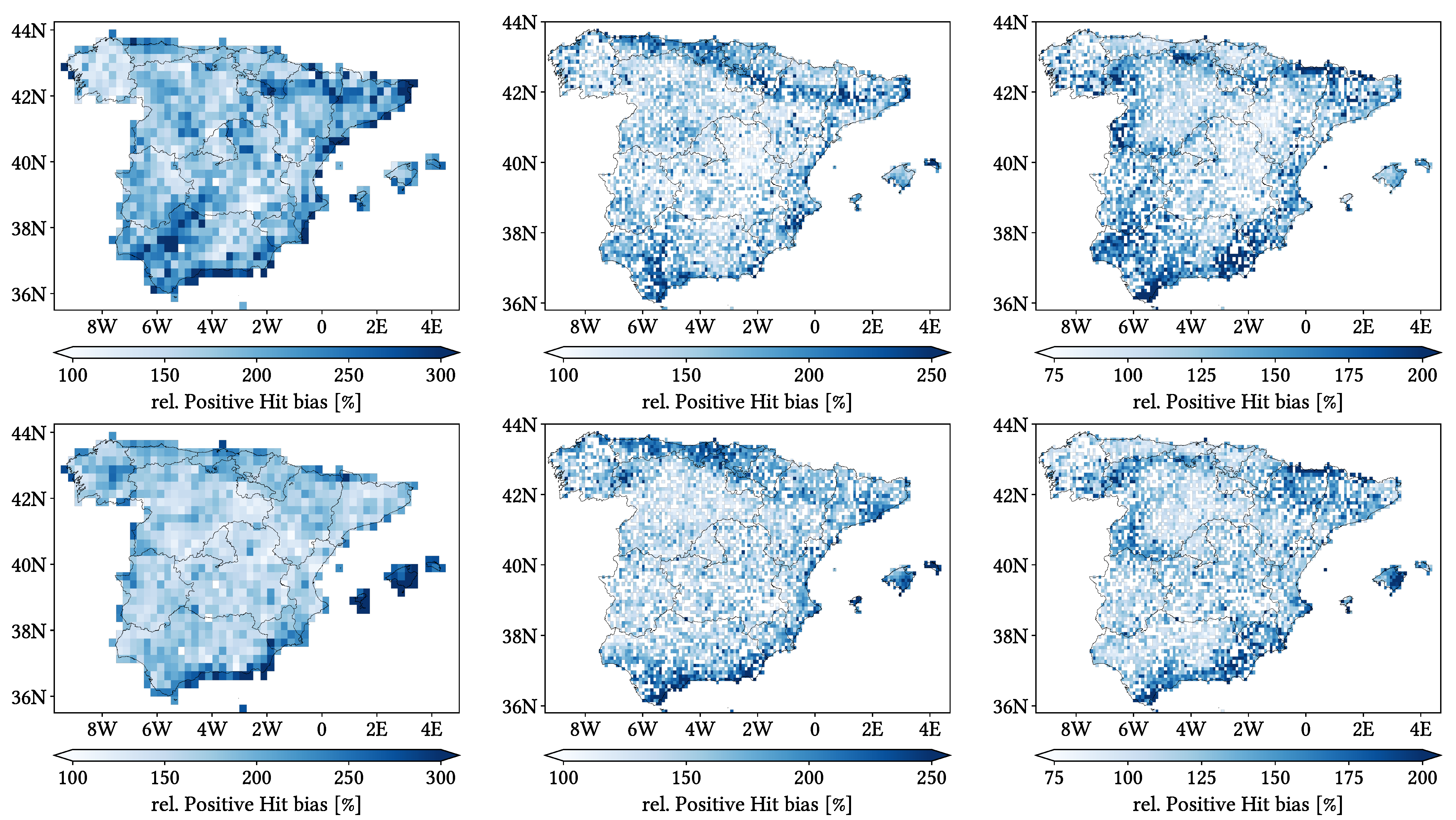

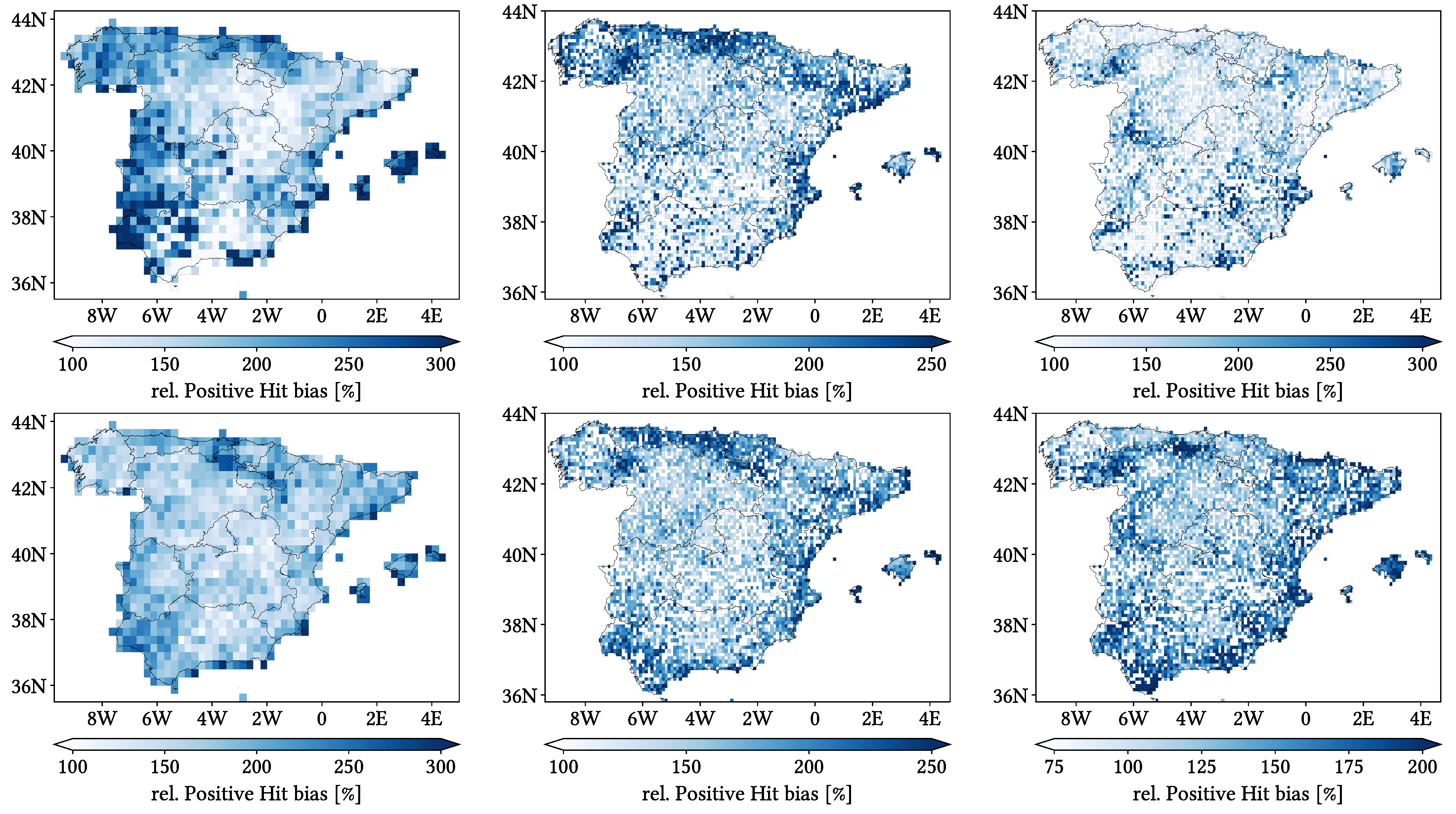

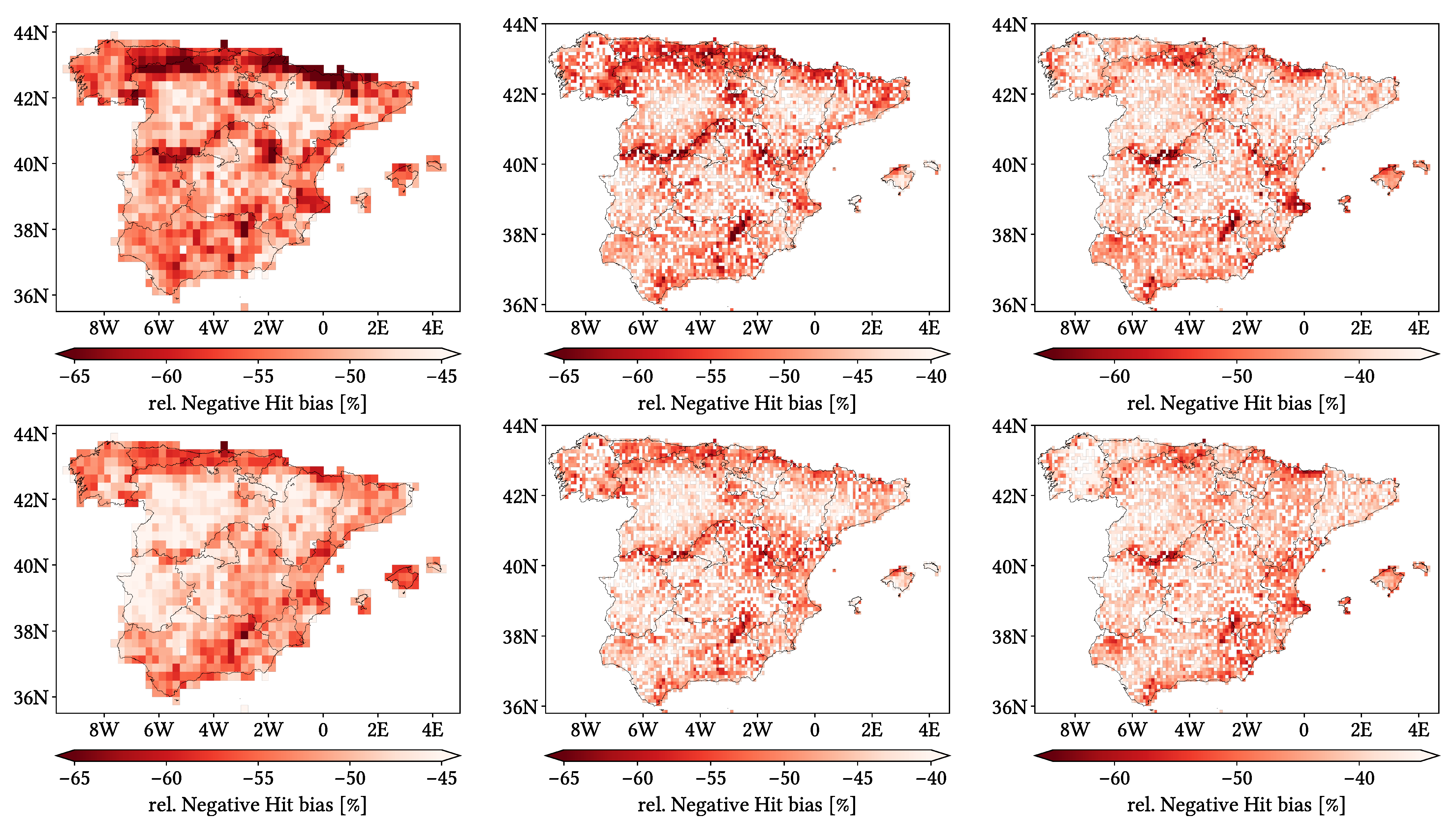

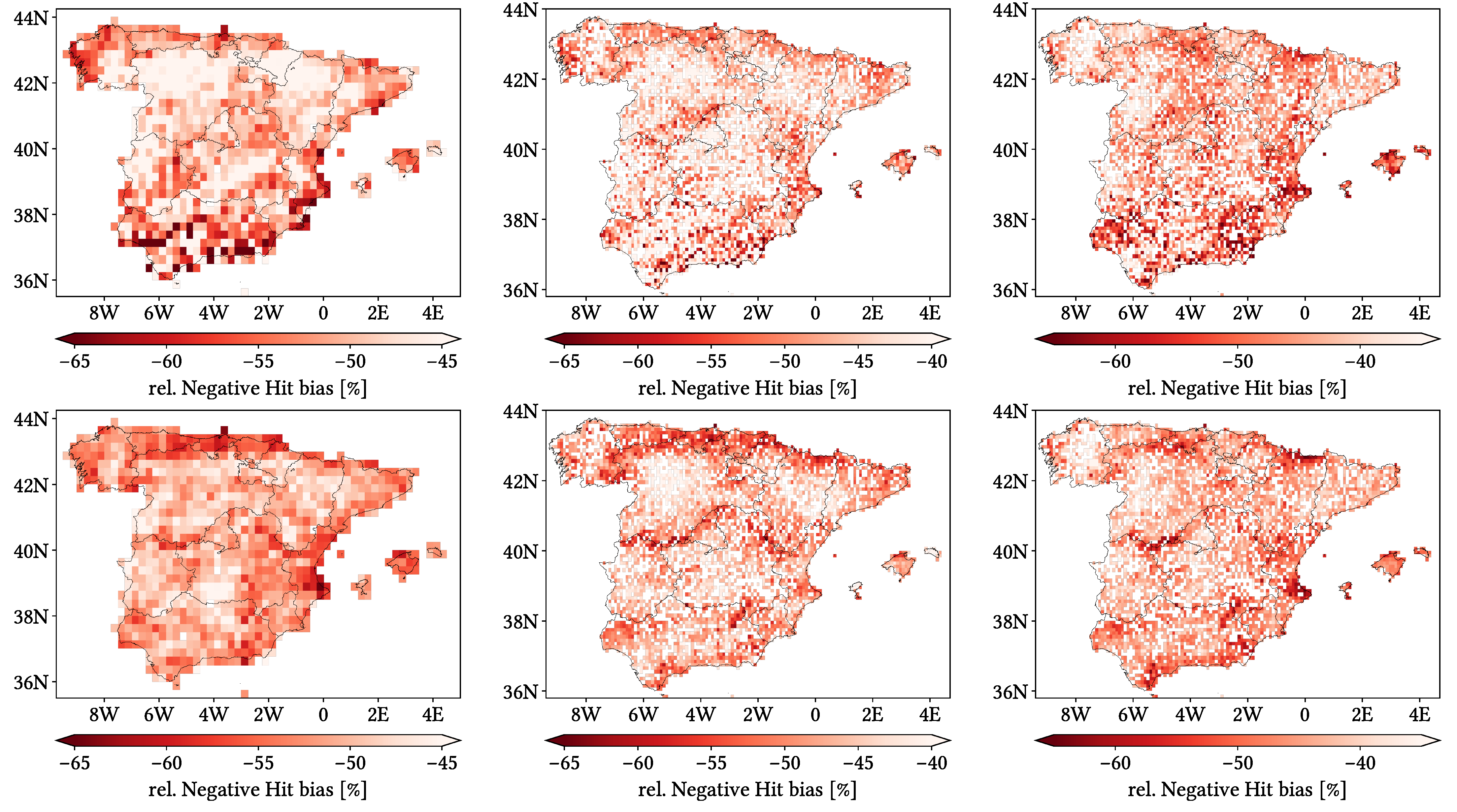

- Relative Positive (Negative) Hit bias: average relative error on days where precipitation was correctly detected but overestimated (underestimated). Because these days have a non-zero reference value, the relative error can be computed day by day. We chose not to accumulate the bias and divide it by accumulated precipitation, to avoid smoothing it.

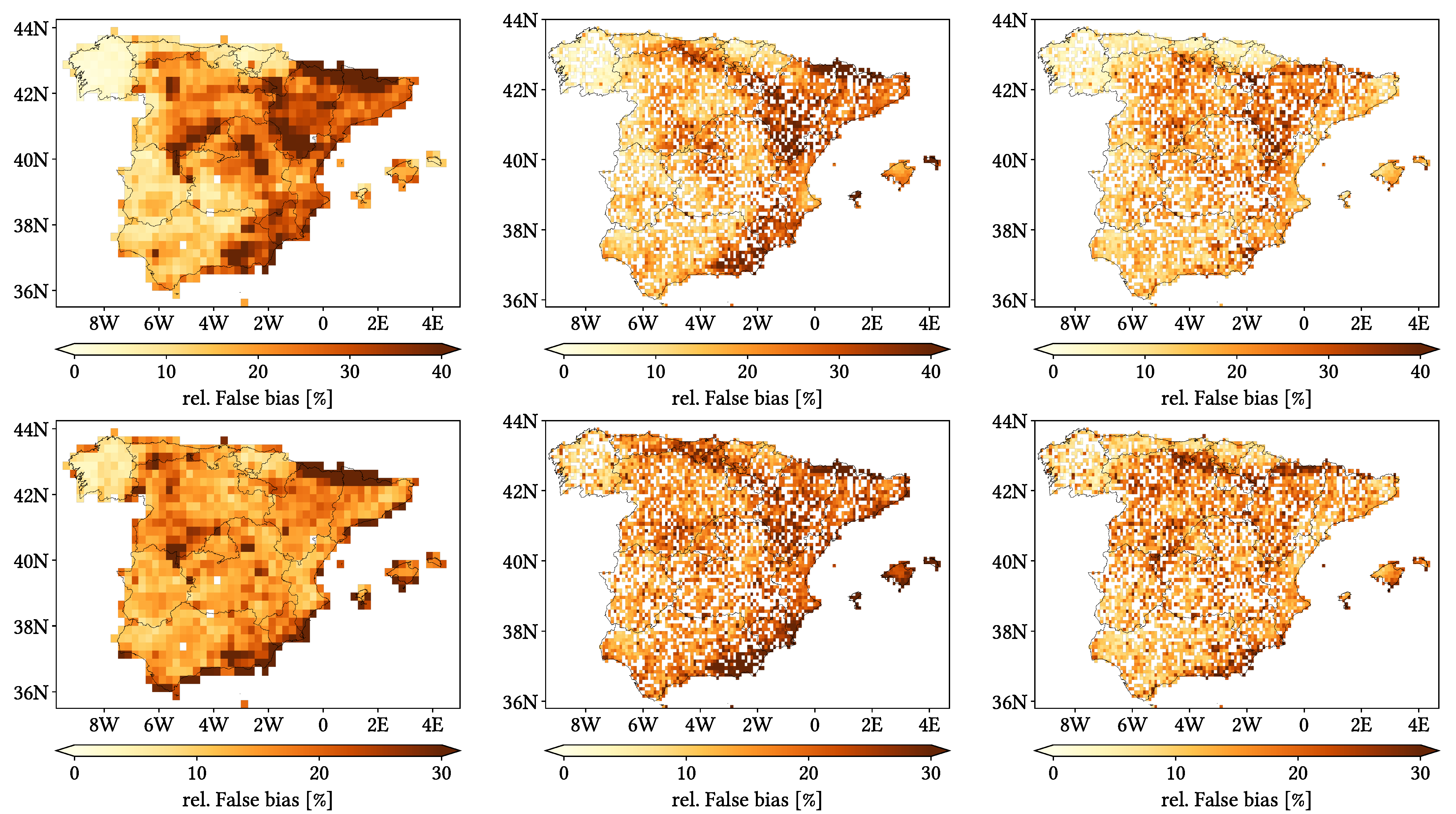

- Relative False bias: accumulated False bias divided by accumulated satellite precipitation. It represents how much of the satellite-estimated precipitation is false.

- Relative Miss bias: accumulated Miss bias divided by accumulated reference precipitation. It represents how much of the gauge-detected precipitation has been missed.
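As a hedged illustration of the relative error components described above, the sketch below computes them for a single pixel. Several choices are assumptions rather than the article's definitions: the function name `relative_error_components`, the `wet_threshold` of 1 mm/day, taking the False bias as the satellite precipitation on false-alarm days, and reporting the Miss bias as a positive fraction.

```python
import numpy as np

def relative_error_components(ref, sat, wet_threshold=1.0):
    """Relative error components for one pixel (daily series, mm/day).

    wet_threshold is an assumed wet-day threshold, not a value from the article.
    """
    ref = np.asarray(ref, dtype=float)
    sat = np.asarray(sat, dtype=float)

    ref_wet = ref >= wet_threshold
    sat_wet = sat >= wet_threshold
    hits = ref_wet & sat_wet
    misses = ref_wet & ~sat_wet
    false_alarms = ~ref_wet & sat_wet

    over = hits & (sat > ref)    # hit days with overestimation
    under = hits & (sat < ref)   # hit days with underestimation

    def ratio(num, den):
        return float(num) / float(den) if den > 0 else float("nan")

    def mean_relative_error(mask):
        # Day-by-day relative error, averaged (not accumulated) over the mask.
        if not mask.any():
            return float("nan")
        return float(np.mean((sat[mask] - ref[mask]) / ref[mask]))

    return {
        "rel_total_bias": ratio(np.sum(sat - ref), np.sum(ref)),
        "rel_pos_hit_bias": mean_relative_error(over),
        "rel_neg_hit_bias": mean_relative_error(under),
        # Assumption: False bias = satellite precipitation on false-alarm days.
        "rel_false_bias": ratio(np.sum(sat[false_alarms]), np.sum(sat)),
        # Assumption: Miss bias reported as a positive fraction of the reference.
        "rel_miss_bias": ratio(np.sum(ref[misses]), np.sum(ref)),
    }

# Toy daily series (mm/day) for a single pixel.
ref = [0, 5, 10, 0, 2, 0, 8, 0]
sat = [0, 7, 4, 3, 0, 0, 8, 0]
components = relative_error_components(ref, sat)
print(components)
```

For this toy series the accumulated reference is 25 mm and the accumulated satellite estimate is 22 mm, so the relative total bias is -0.12, while the single overestimated hit gives a relative positive hit bias of 0.4 and the single underestimated hit gives -0.6. Averaging the day-by-day relative errors keeps a large error on a light-rain day from being diluted by heavy-rain days.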

4.3. Data Grouping

- No data grouping: overall comparison through the whole temporal record. We performed this comparison both at daily and monthly resolution. For this last one, we created monthly datasets by accumulating the daily ones through each month, and as there is no common definition for a wet month, we also used the threshold of for defining a wet month and for the computation of rMAE.

- Grouping by season of year, for all years at once (i.e., without focusing on every year individually). We applied the usual climatological grouping of months.

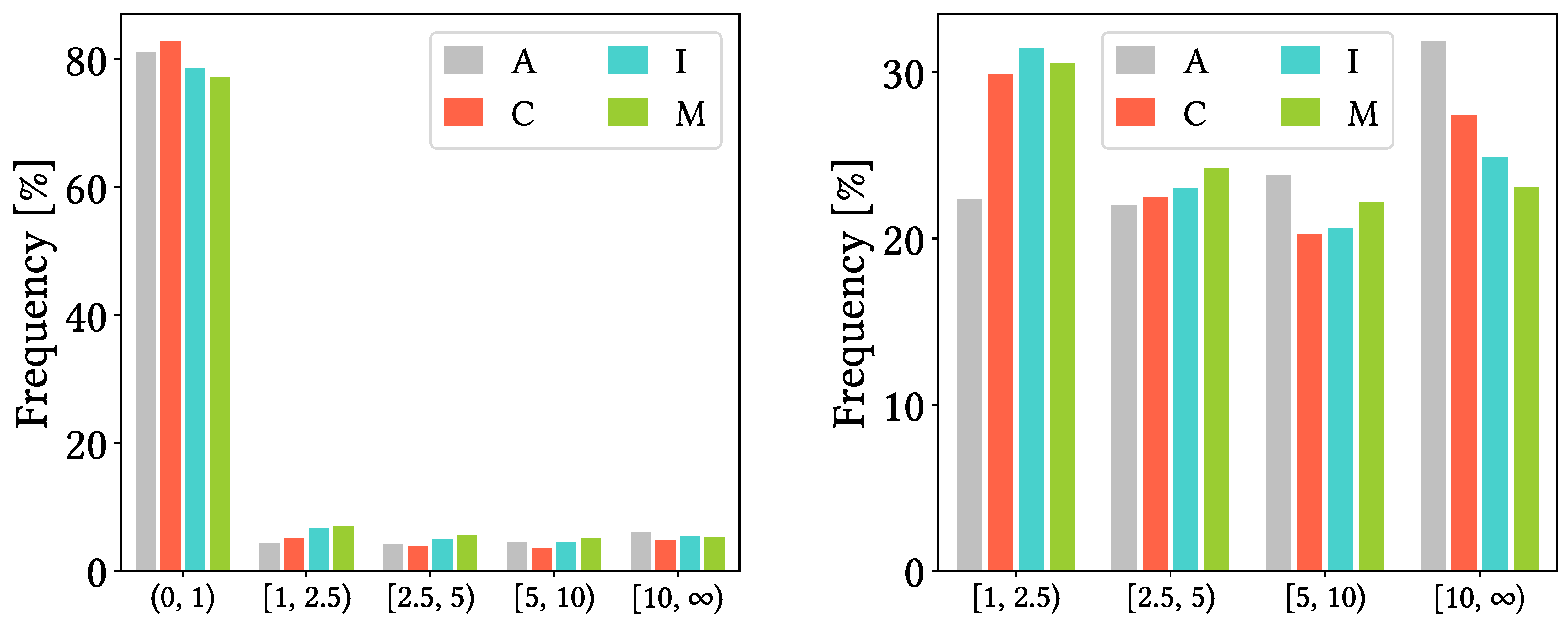

- Grouping by intensity intervals. The chosen thresholds are the pixel-scale quartiles of the reference precipitation (which differ slightly from pixel to pixel), computed after excluding dry days.

- Grouping by mean altitude intervals, obtained from the SRTM DEM resampled to both 0.25° and 0.1° lat-lon resolutions. The thresholds have been determined following the work of Navarro et al. [44].

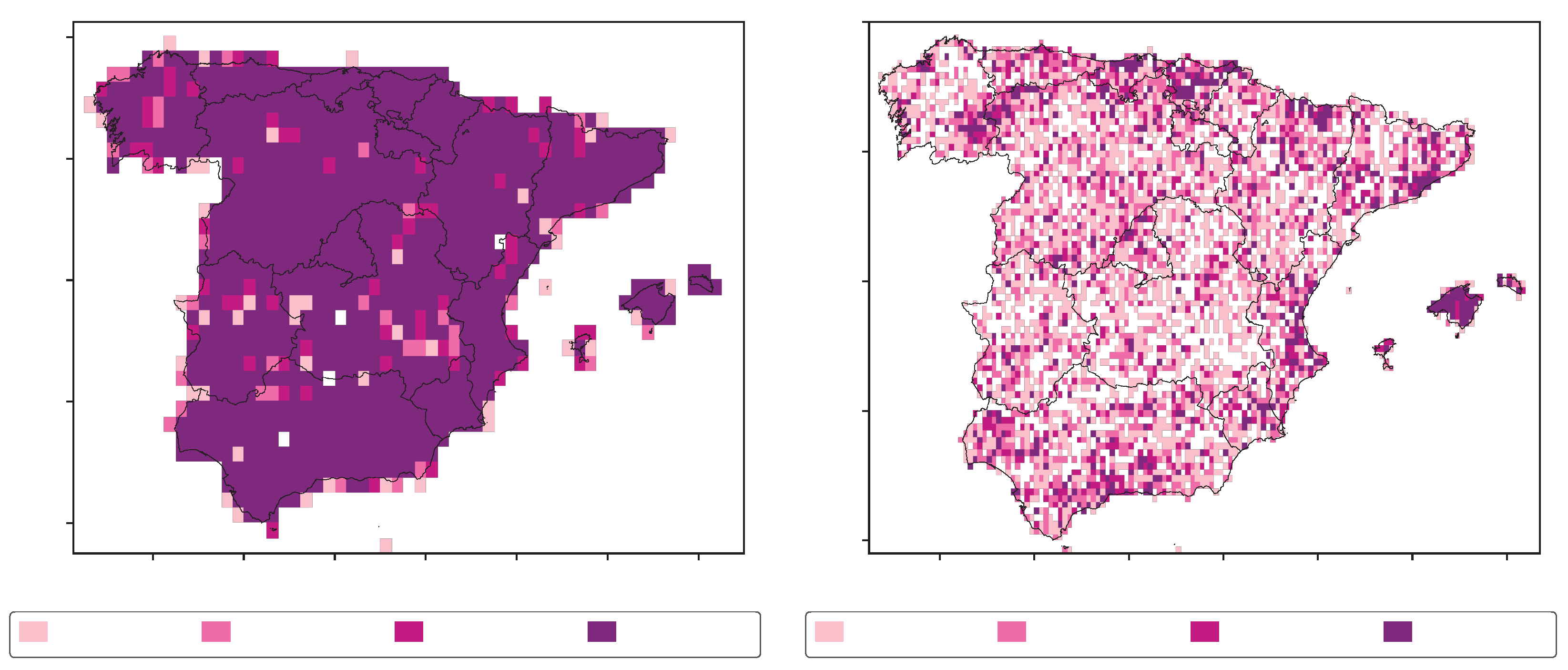

- Grouping by orographic complexity intervals, represented by the TRI calculated from the SRTM DEM, also at both resolutions. The thresholds have been established according to pixel-scale quartiles. These quartiles were similar for the three SPPs, so we chose the same representative values for all of them.

- Grouping by density of gauges per pixel. The chosen thresholds are 1, 2, 3 and more than 3 gauges per pixel, established both from the quartiles at 0.1° lat-lon resolution and because of our interest in performance variability at lower gauge densities.
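Two of the groupings above, by season and by pixel-scale intensity quartile, can be sketched as follows. This is a minimal illustration under stated assumptions: the helper names `season_labels` and `intensity_quartile_classes` are hypothetical, the `wet_threshold` of 1 mm/day is an assumed wet-day threshold, and the quartiles use NumPy's default linear interpolation, which may not match the method used in this work.

```python
import numpy as np

# Climatological seasons: DJF, MAM, JJA and SON.
SEASON_BY_MONTH = {
    12: "winter", 1: "winter", 2: "winter",
    3: "spring", 4: "spring", 5: "spring",
    6: "summer", 7: "summer", 8: "summer",
    9: "autumn", 10: "autumn", 11: "autumn",
}

def season_labels(months):
    """Map month numbers (1-12) to season names, pooling all years together."""
    return np.array([SEASON_BY_MONTH[int(m)] for m in months])

def intensity_quartile_classes(ref, wet_threshold=1.0):
    """Classify each day of one pixel by reference-intensity quartile.

    Returns 0 for dry days and 1-4 for wet days, with thresholds taken from
    the wet days of this pixel only (so they differ from pixel to pixel).
    """
    ref = np.asarray(ref, dtype=float)
    wet = ref >= wet_threshold
    classes = np.zeros(ref.shape, dtype=int)
    if wet.any():
        quartiles = np.quantile(ref[wet], [0.25, 0.5, 0.75])
        # side="left": values equal to a threshold fall in the lower class.
        classes[wet] = 1 + np.searchsorted(quartiles, ref[wet], side="left")
    return classes

# Toy reference series (mm/day) for a single pixel.
ref = [0, 1, 2, 3, 4, 0, 5, 6, 7, 8]
classes = intensity_quartile_classes(ref)
seasons = season_labels([1, 4, 7, 10, 12])
print(classes.tolist())
print(seasons.tolist())
```

Metrics can then be computed separately on the days selected by each class or season label, for example by boolean masking of the daily series before calling a metric function.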

5. Results

5.1. Probability Density Functions of Precipitation According to Reference and to Each SPP

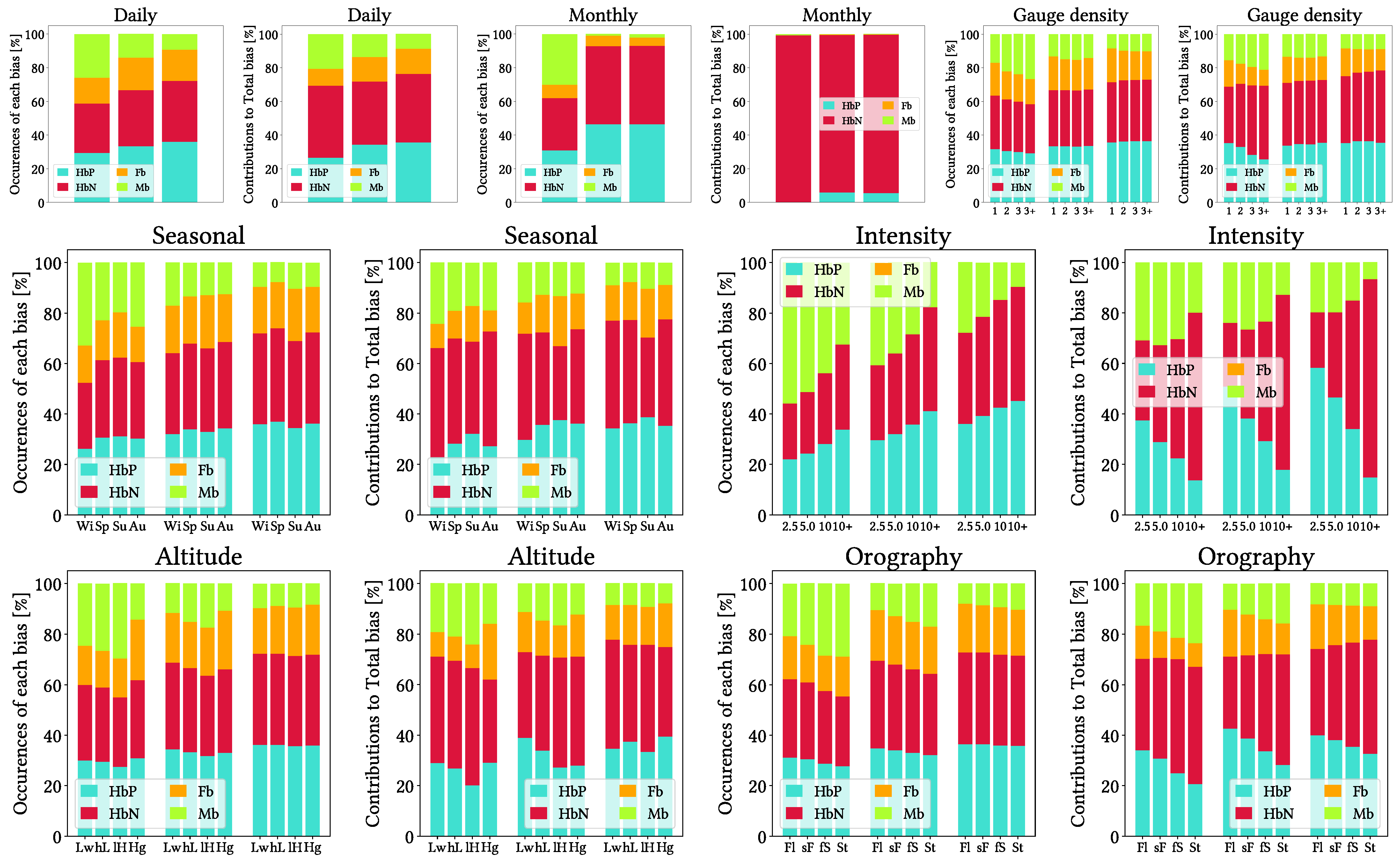

5.2. Contributions and Occurrences of Each Type of Bias

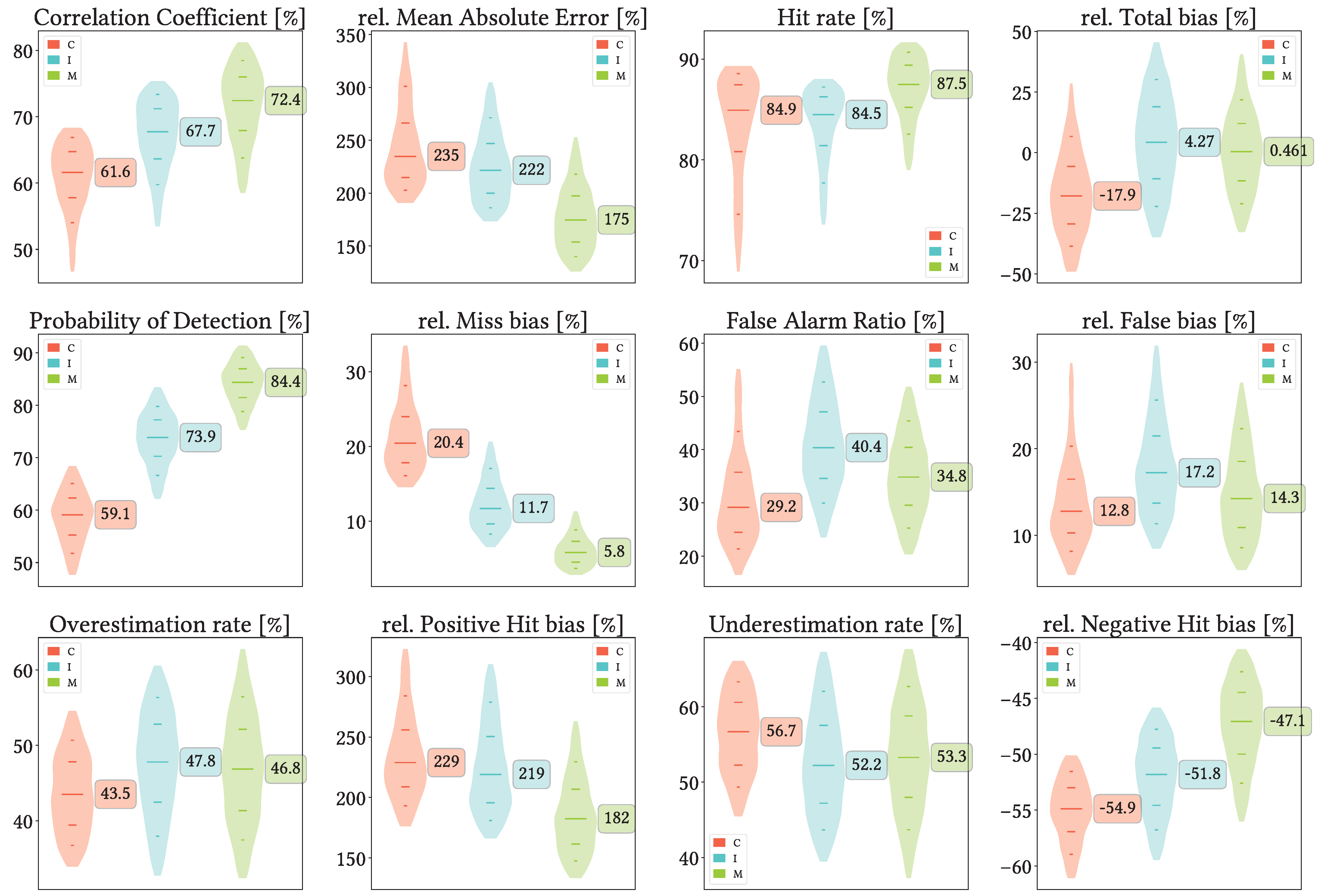

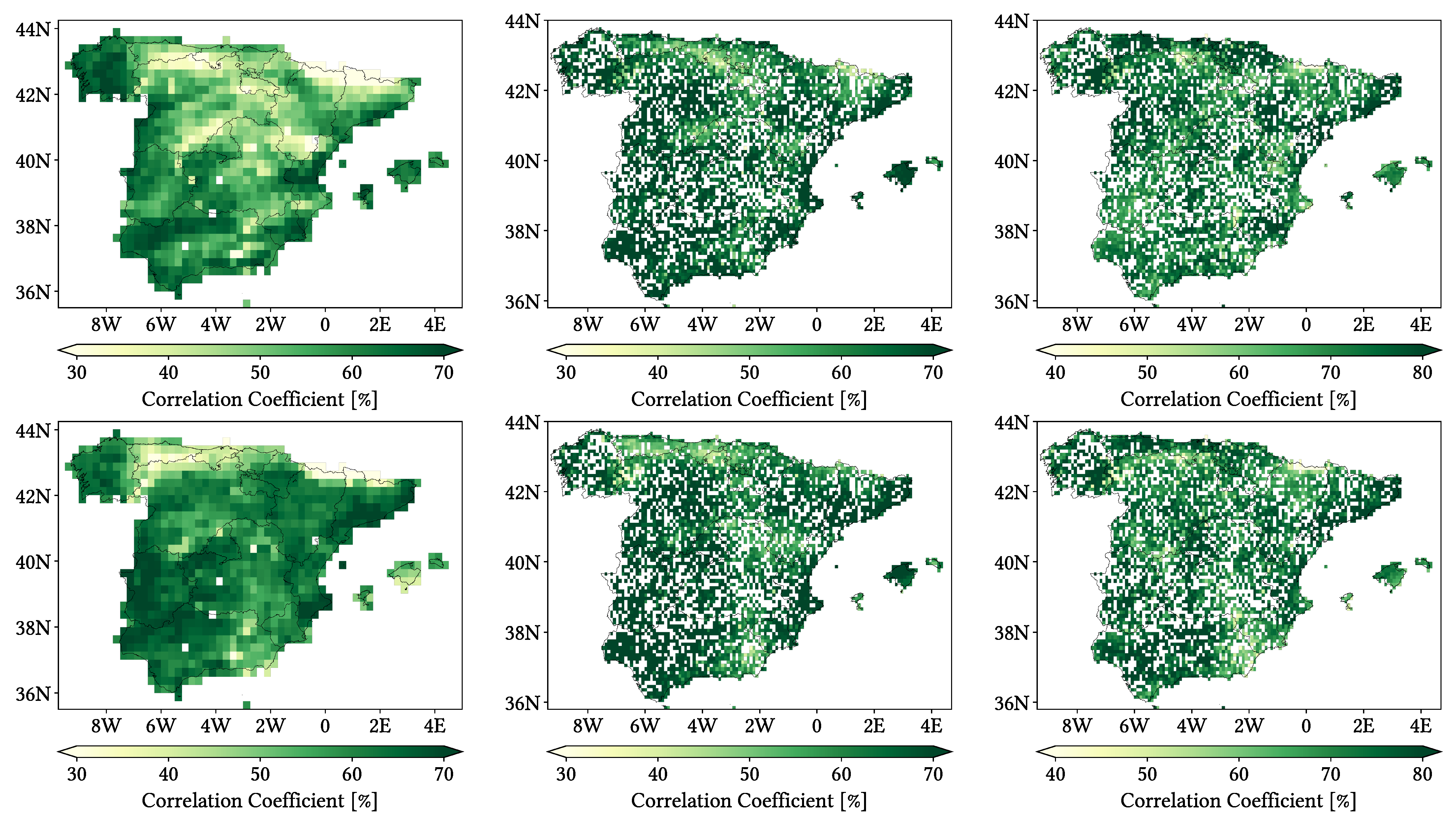

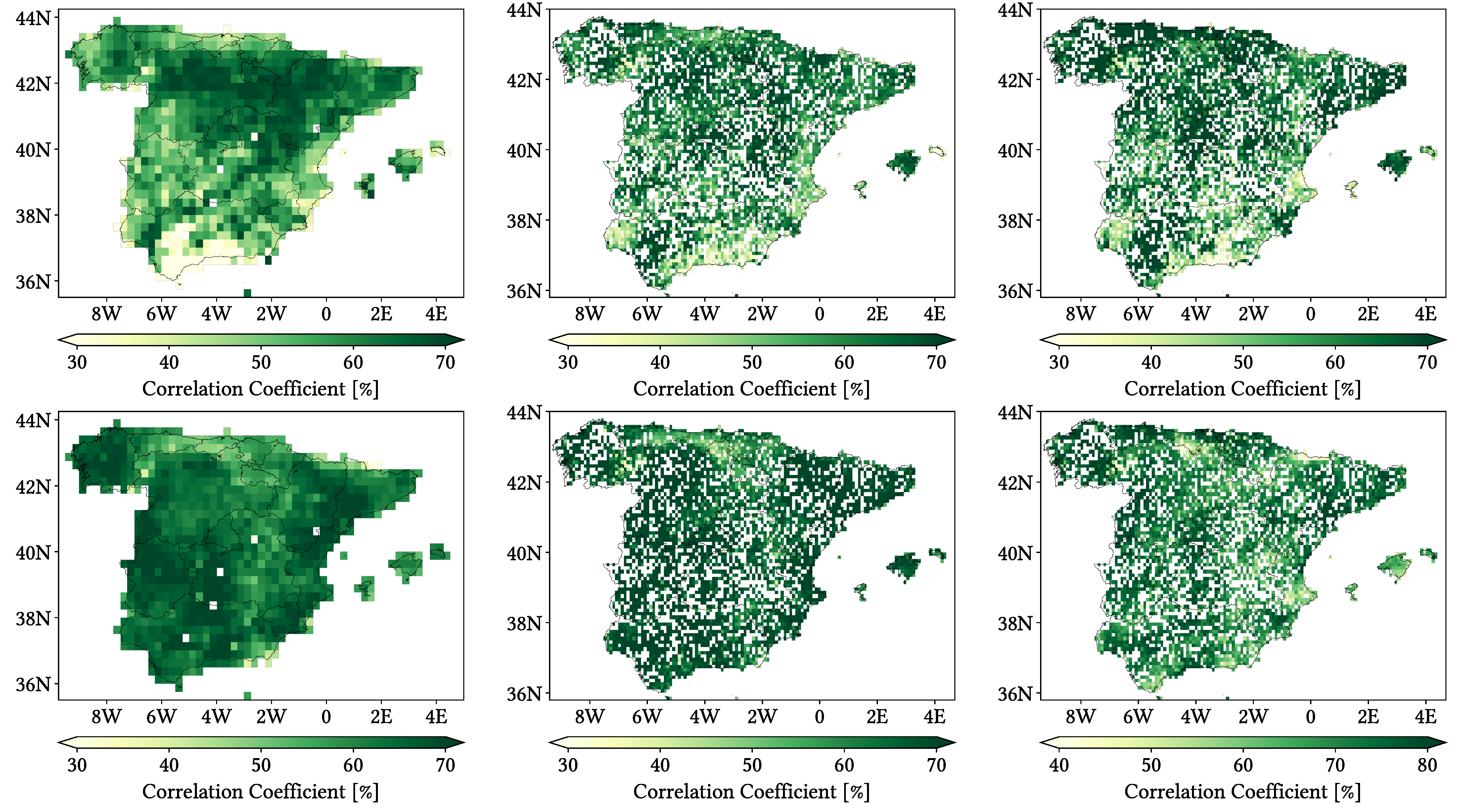

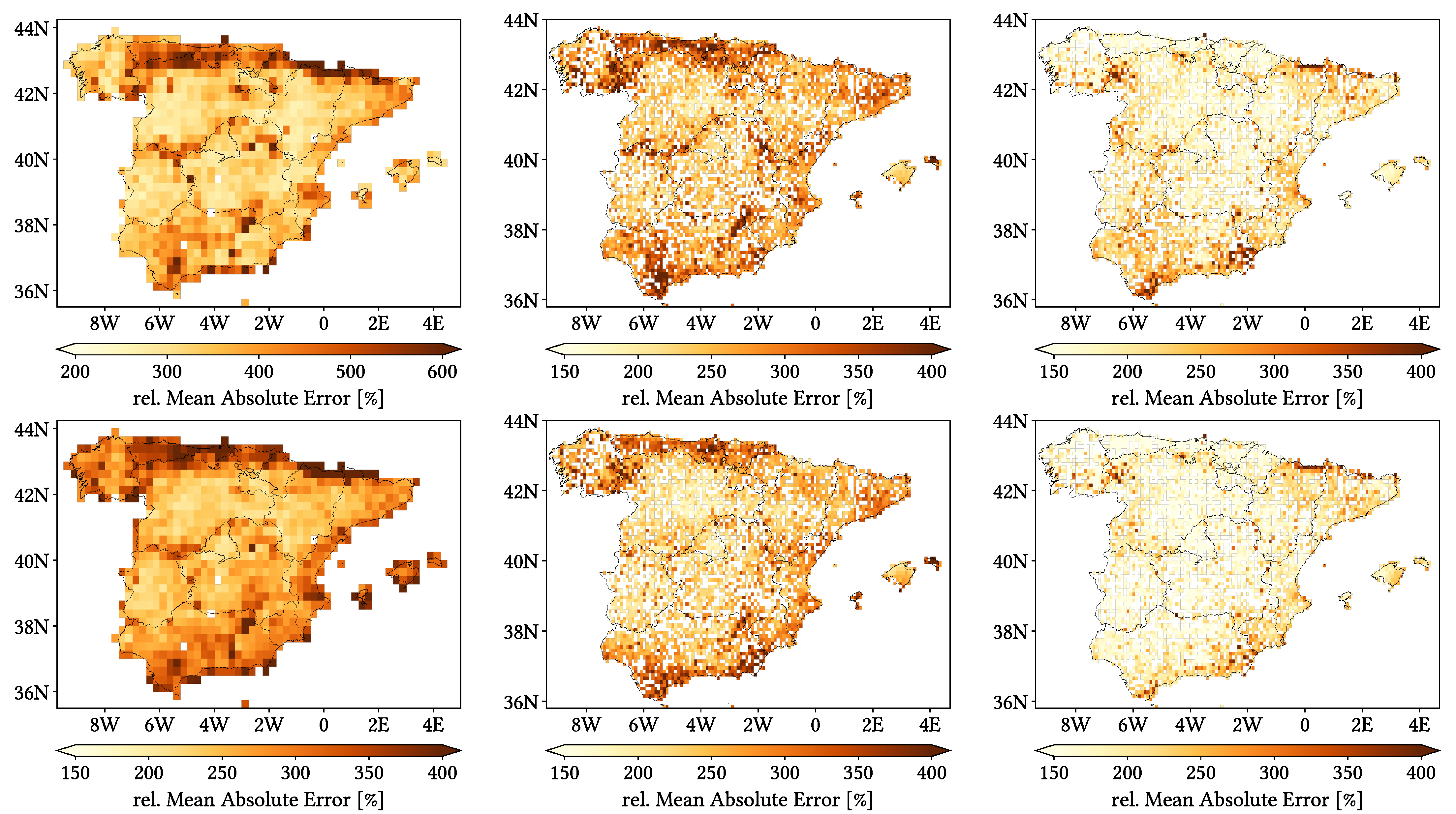

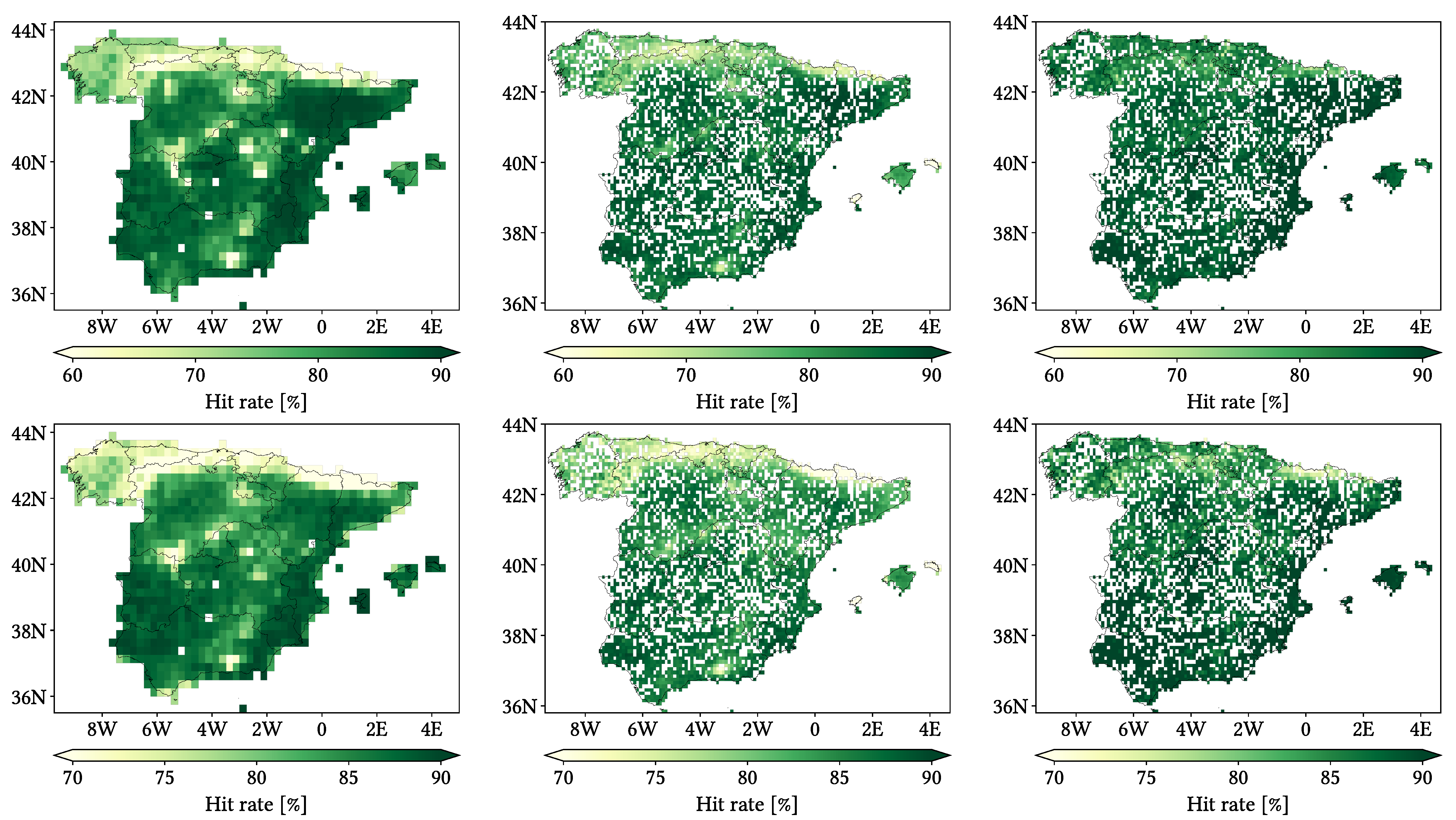

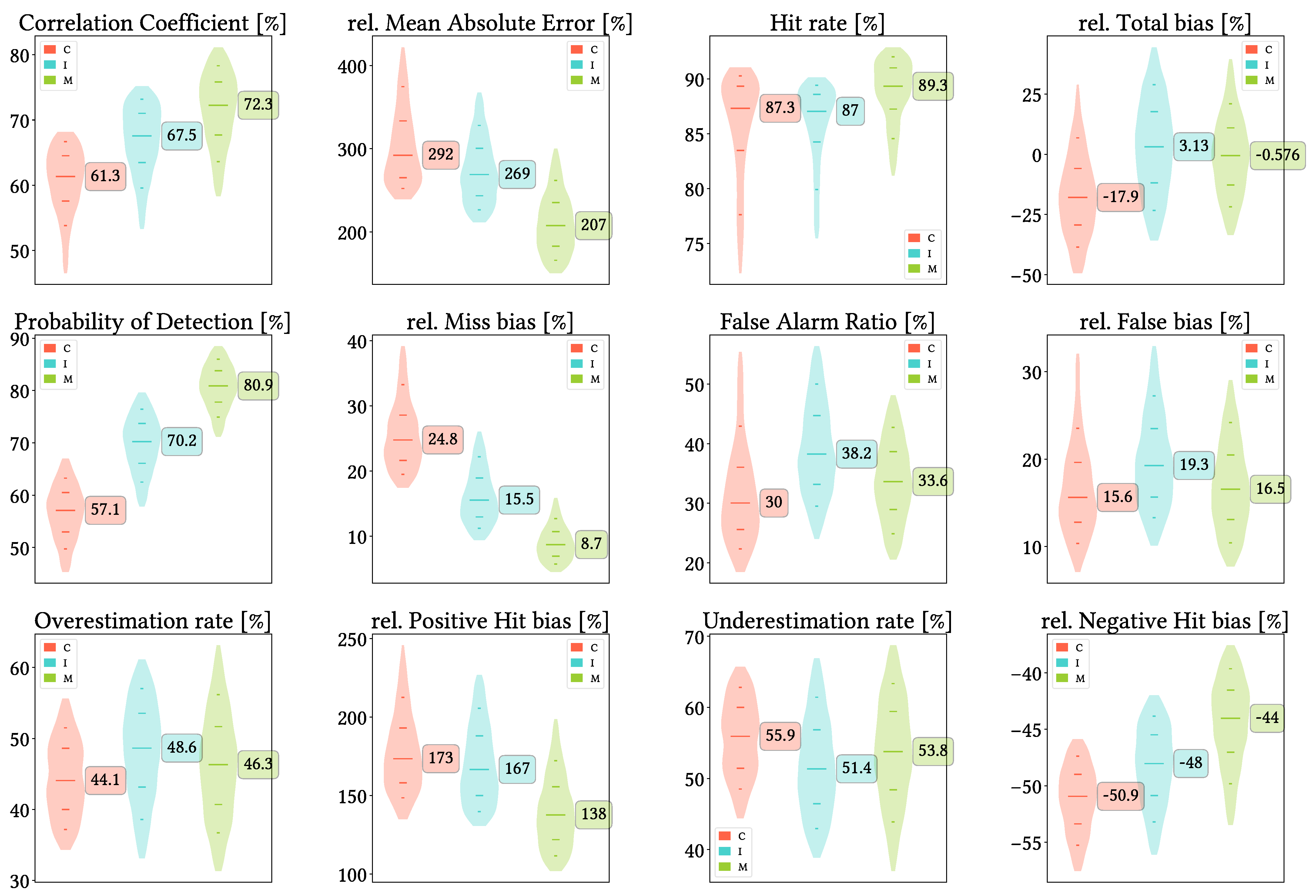

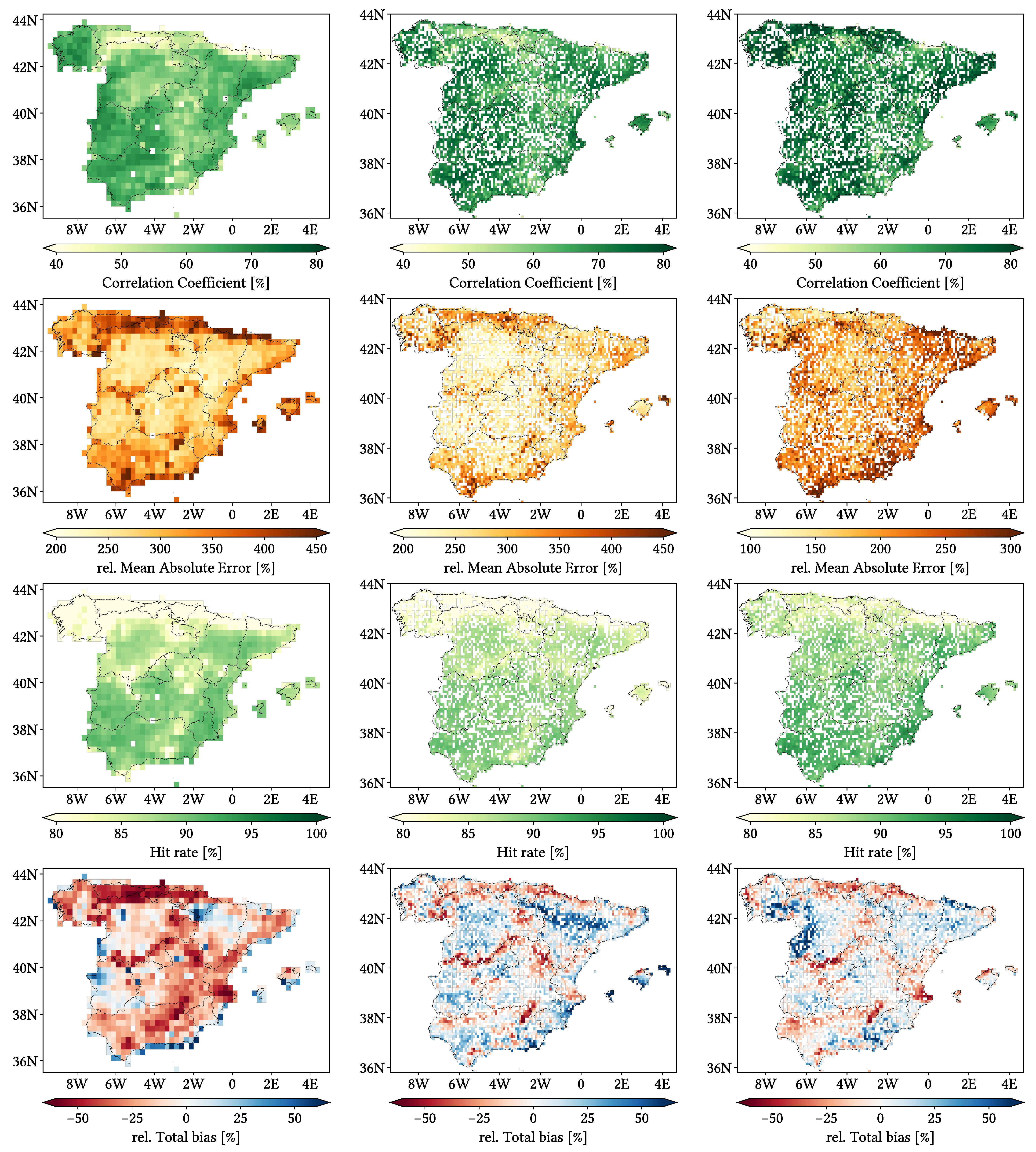

5.3. Overall Daily Analysis

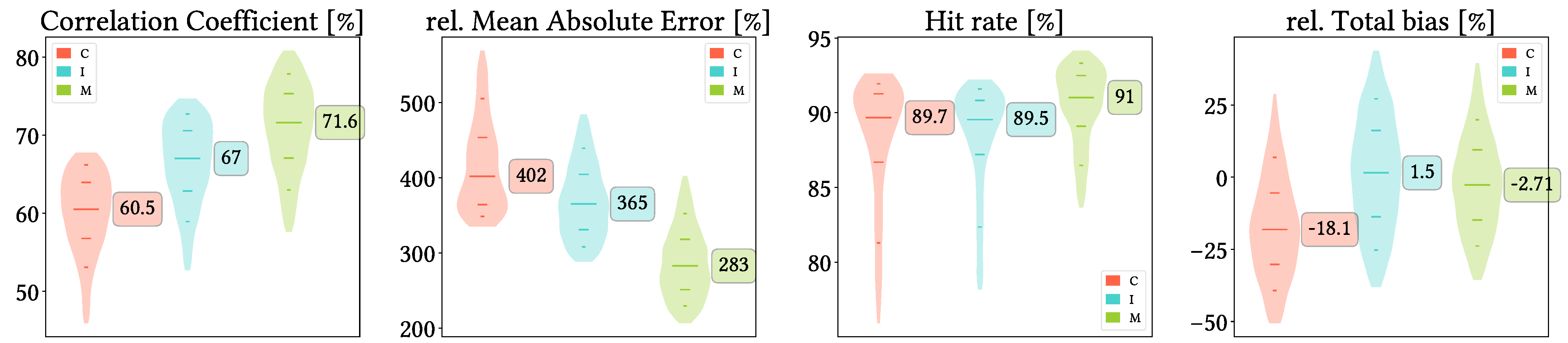

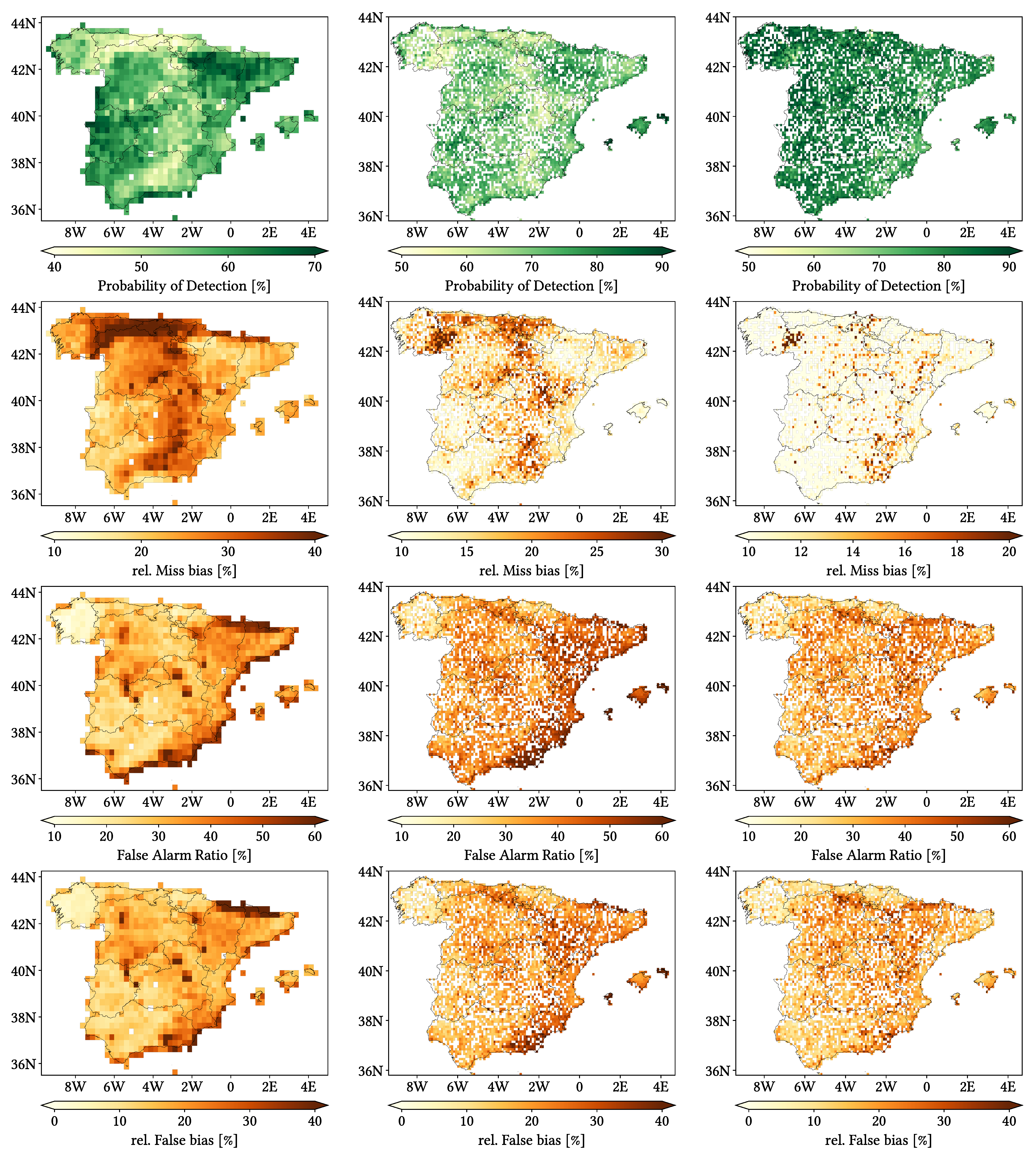

5.4. Overall Monthly Analysis

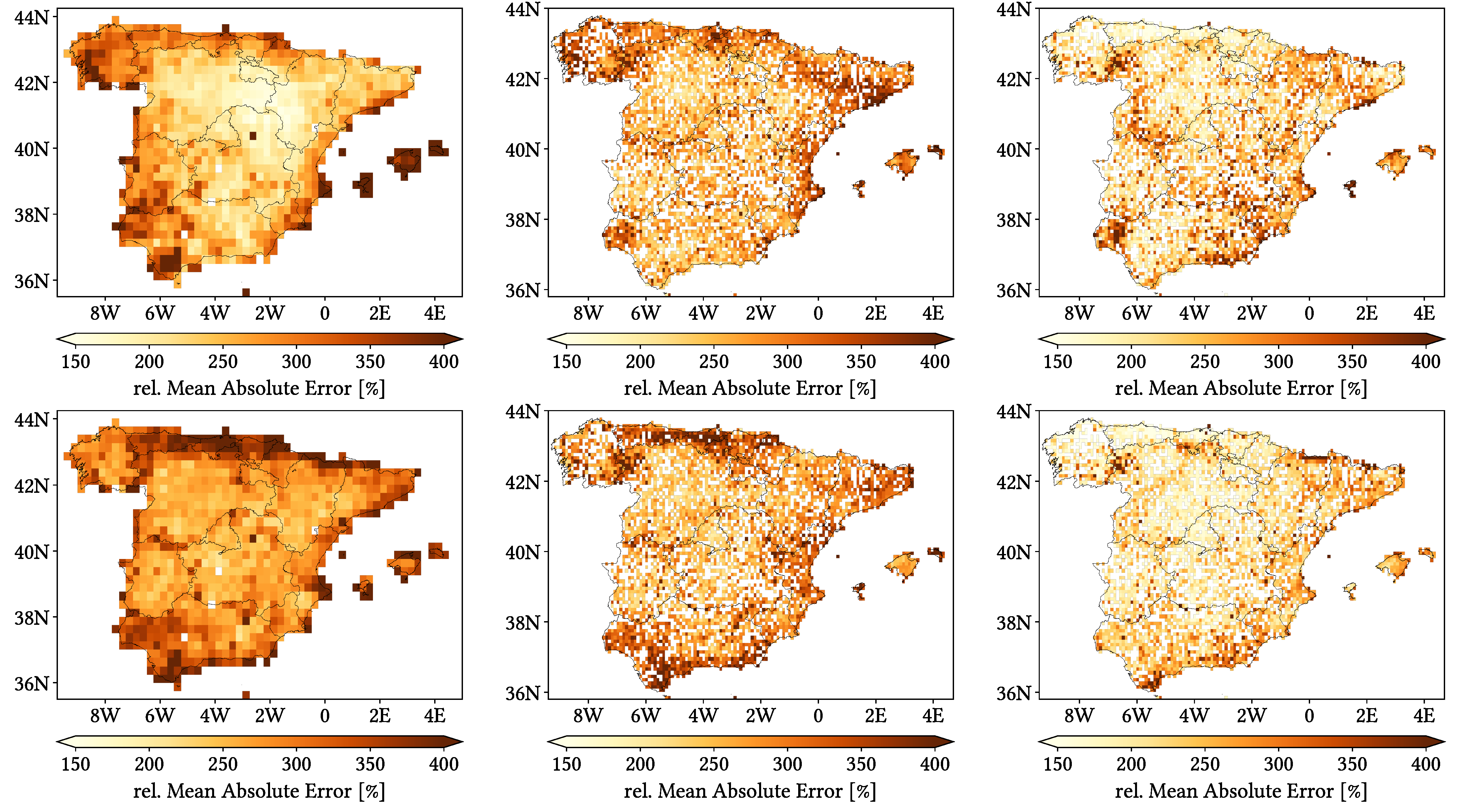

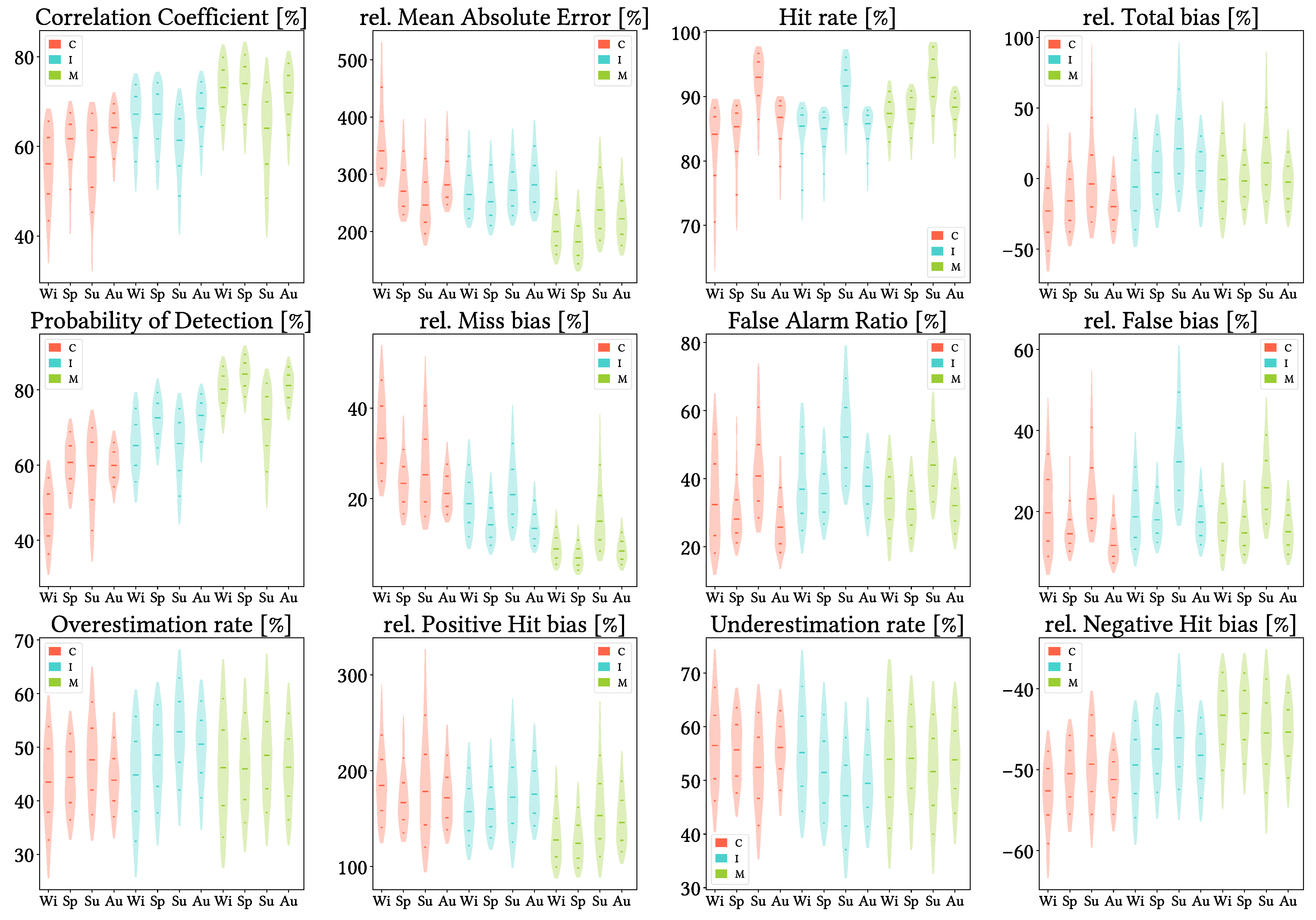

5.5. Analysis Regarding Season of Year

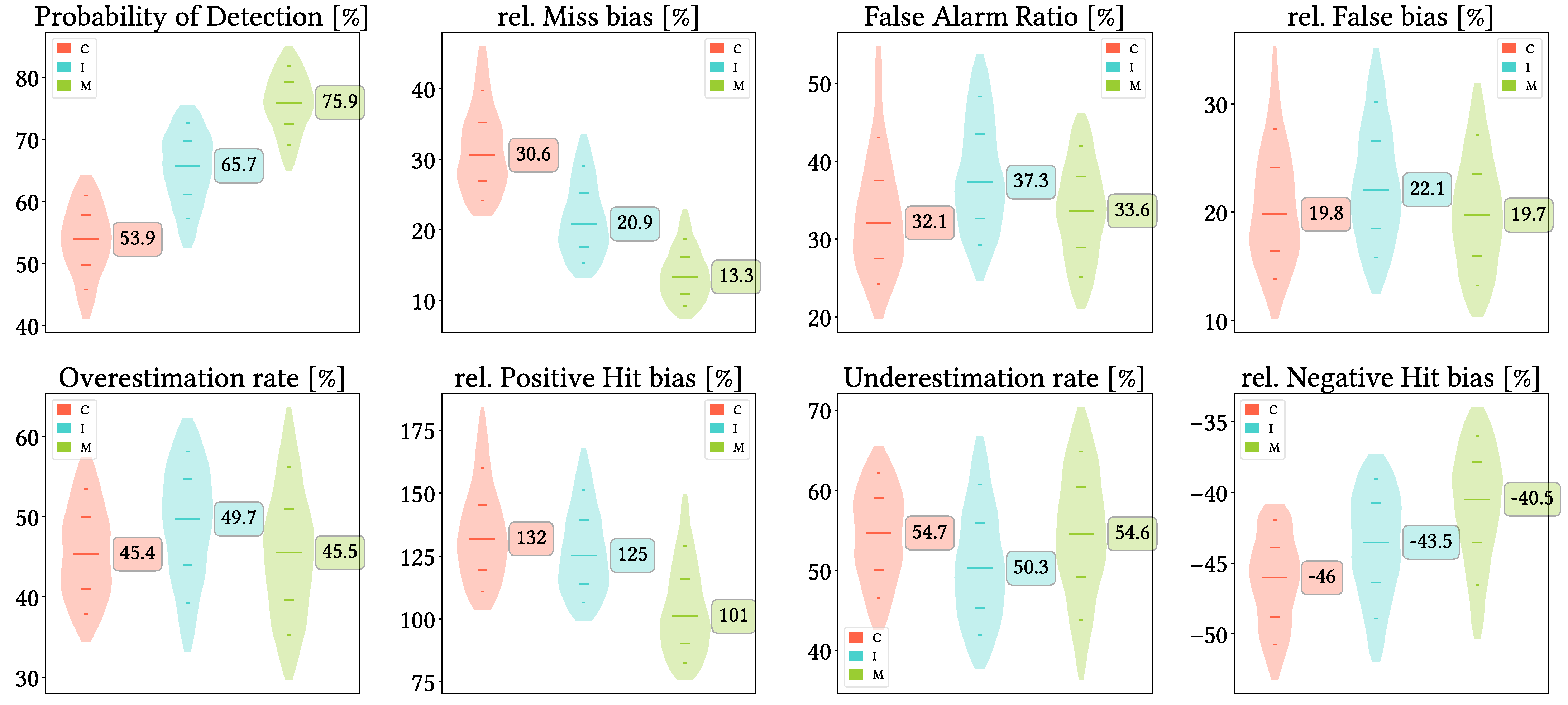

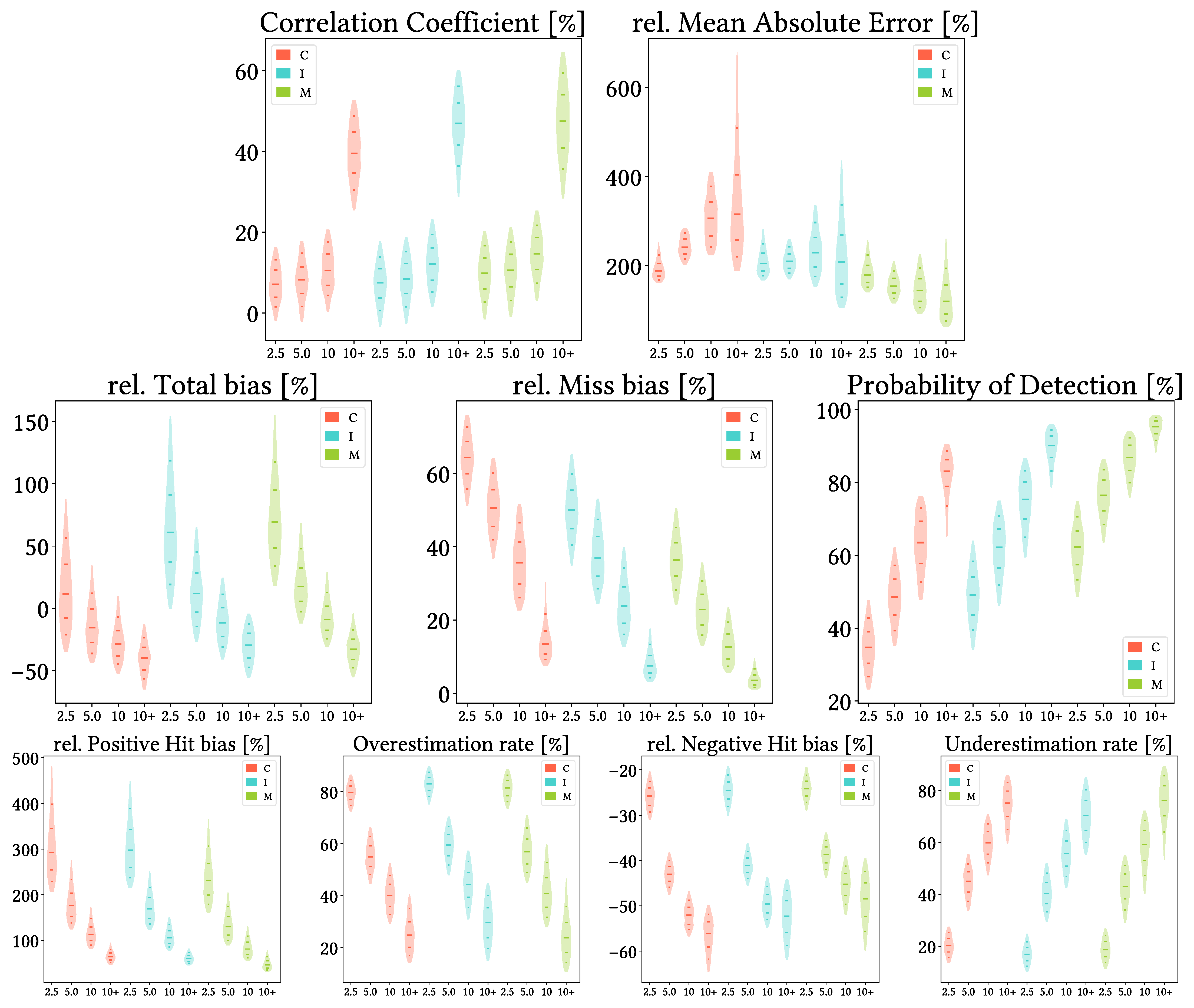

5.6. Analysis Regarding Reference Precipitation Intensity

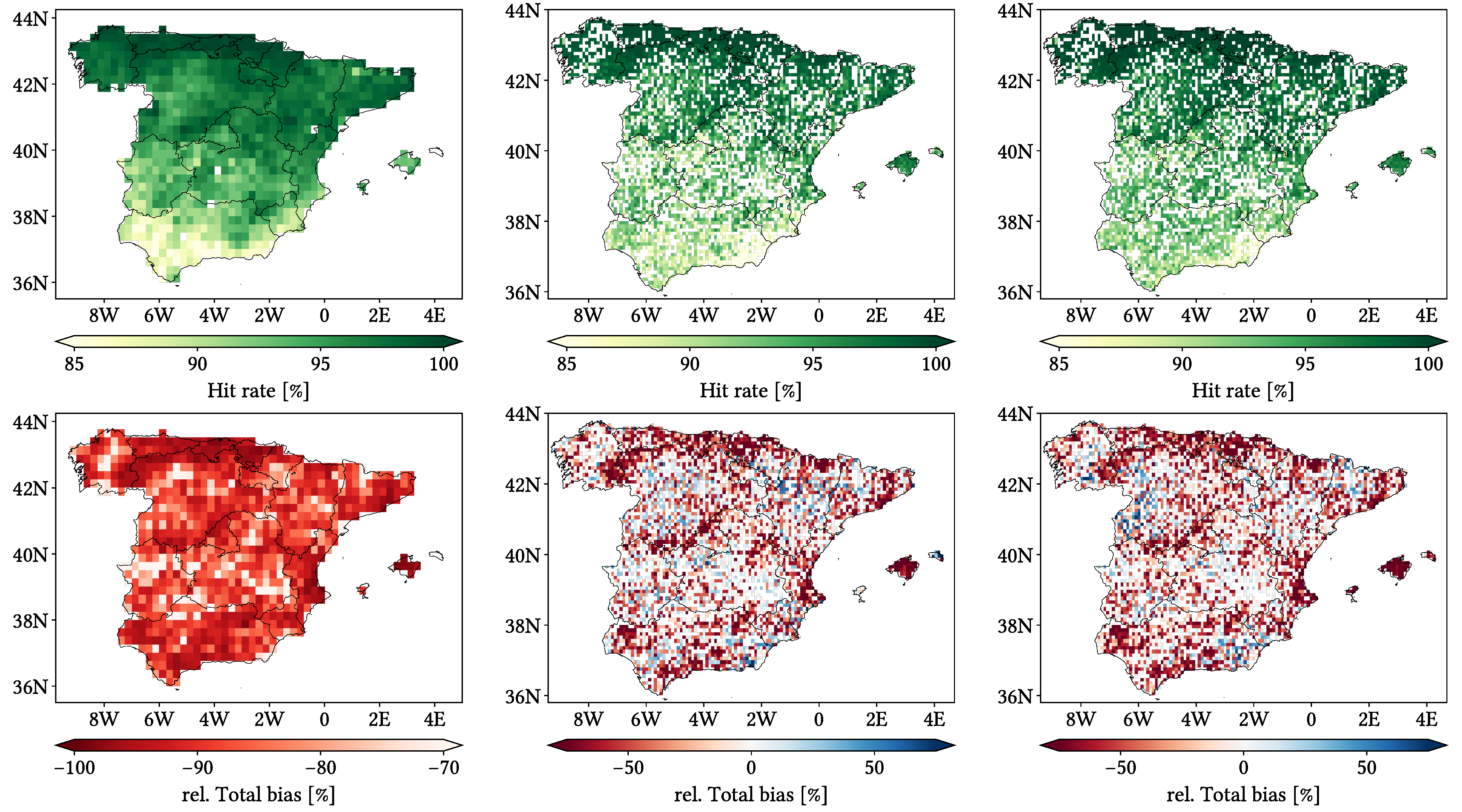

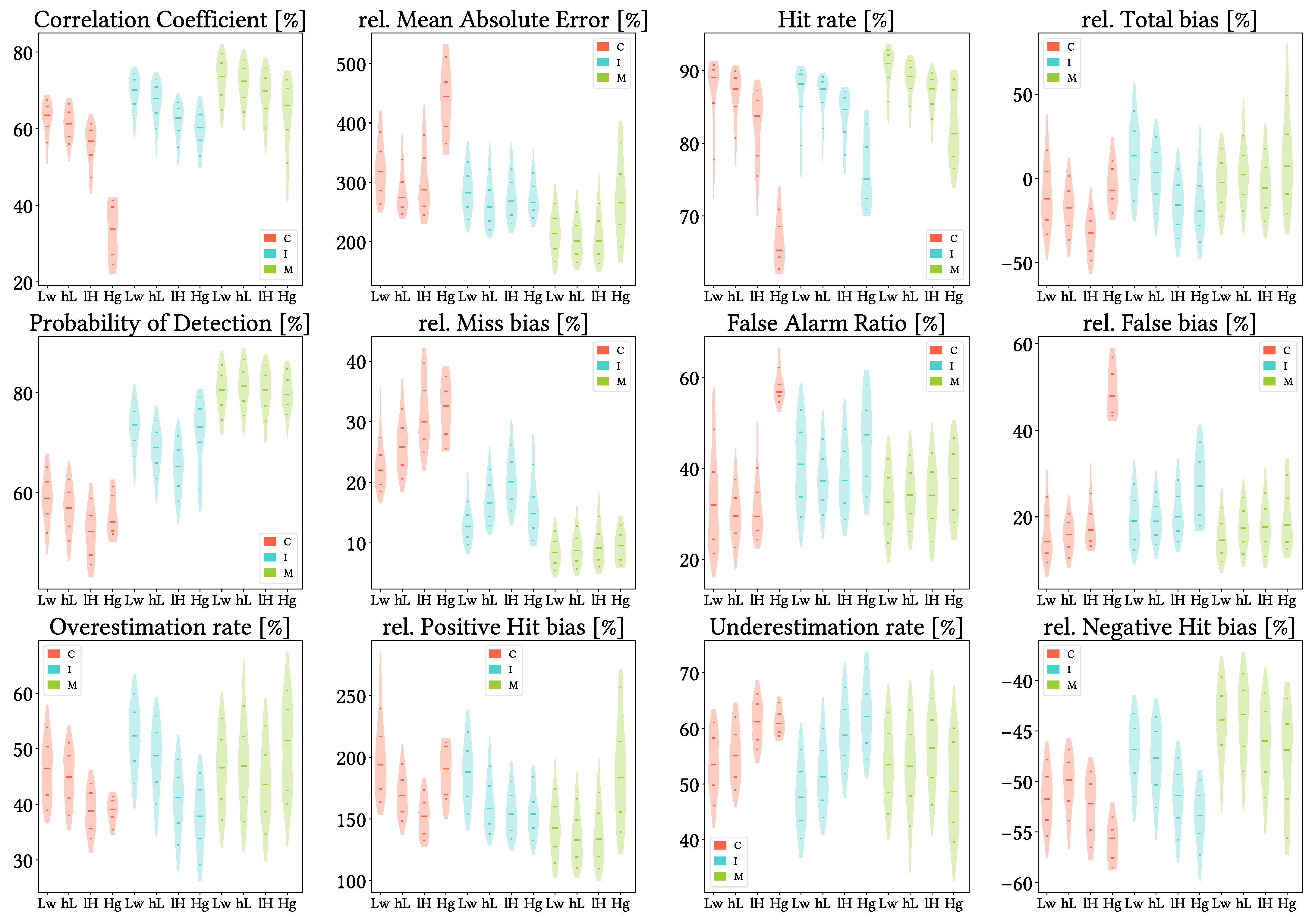

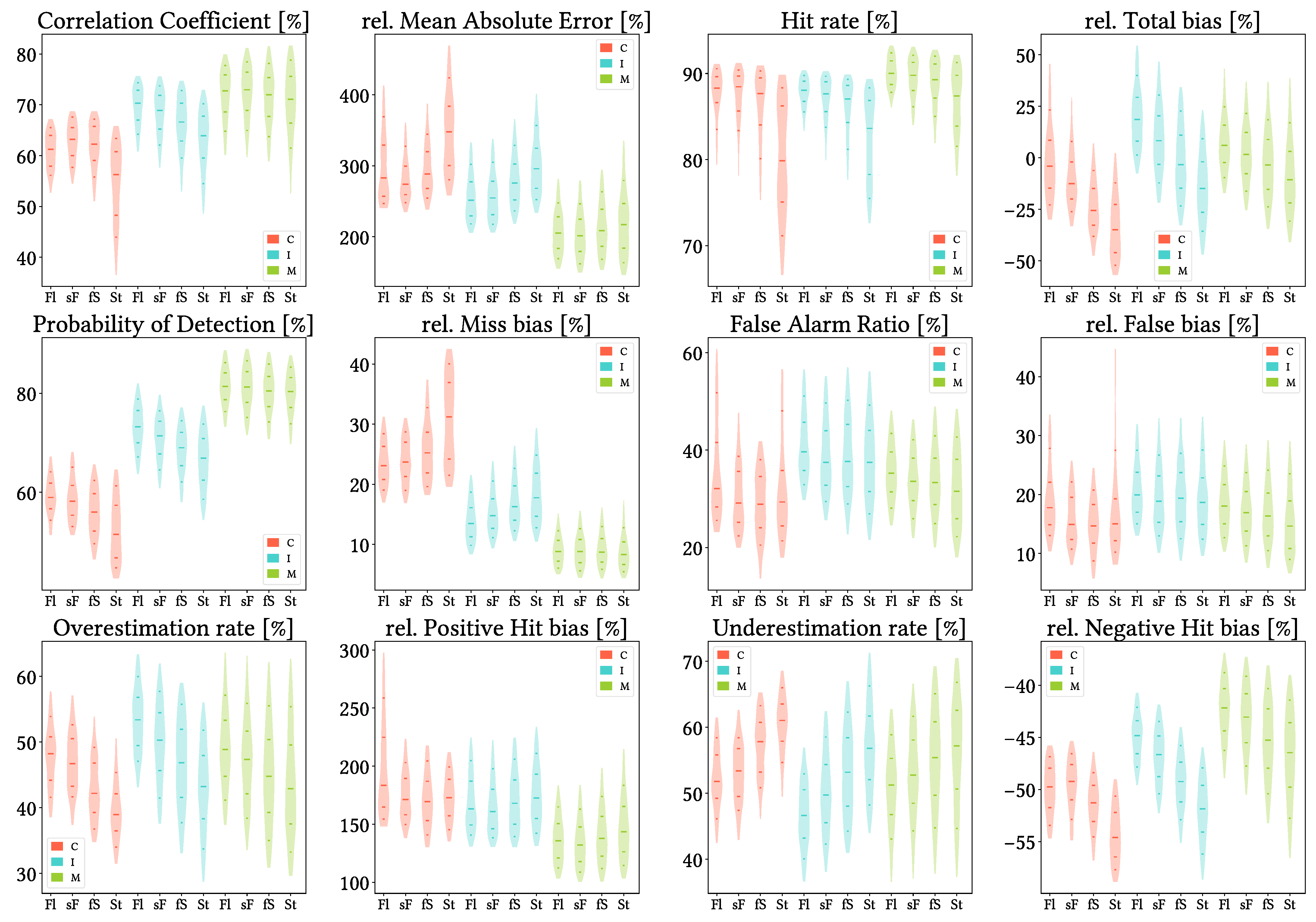

5.7. Analysis Regarding Altitude and Orographic Complexity

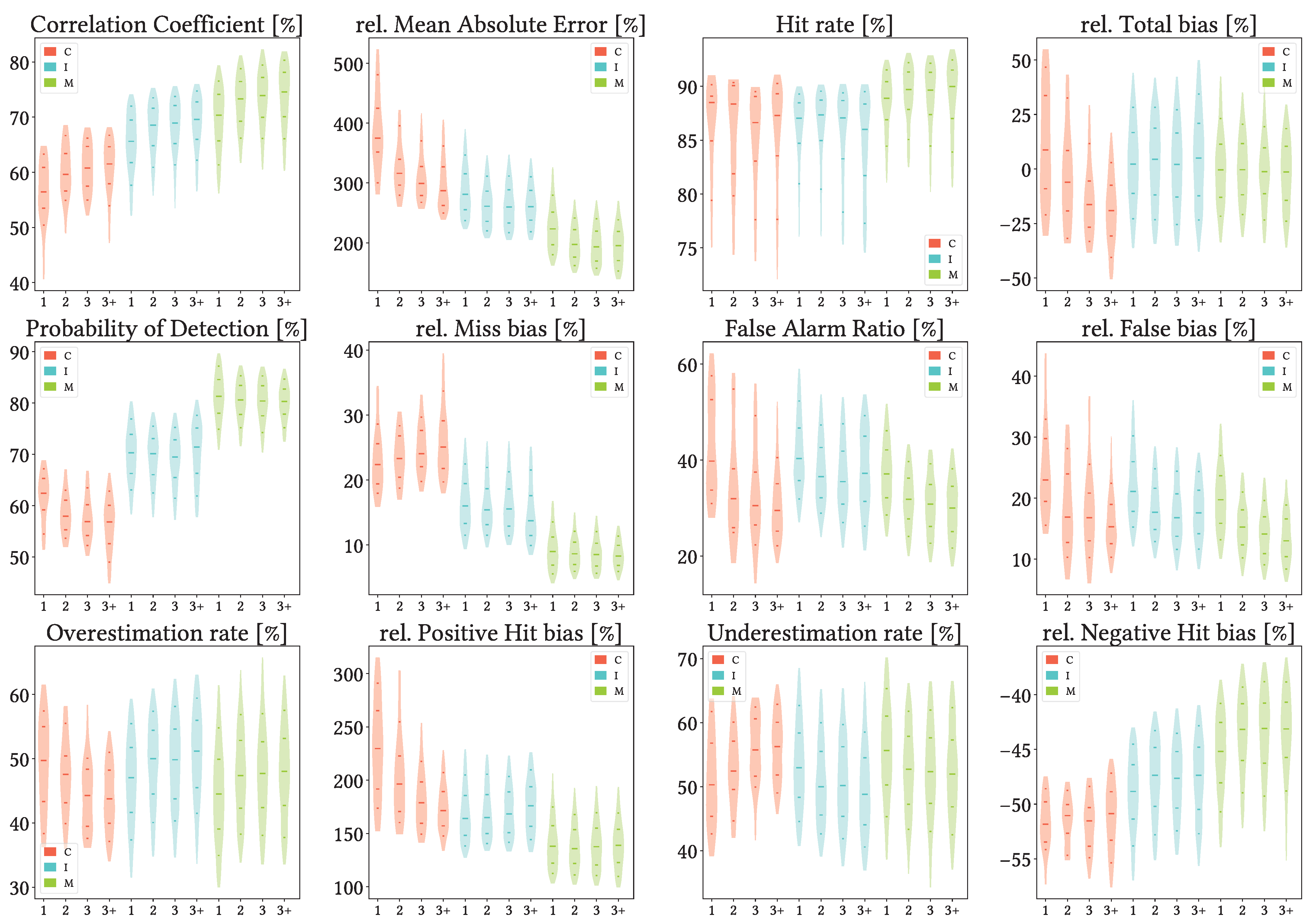

5.8. Analysis Regarding Gauge Density by Pixel

6. Discussion

7. Conclusions

- MSWEP is preferred, CMORPH is not recommended, and performance is generally better at monthly than at daily resolution. CMORPH exhibits much worse detection capabilities and underestimates by a greater magnitude. IMERG shows general overestimation (due to higher False bias and a greater tendency to overestimate, both in frequency and magnitude), while MSWEP is balanced in that regard. Once precipitation is correctly detected, all products underestimate more frequently than they overestimate, but the overestimation is always greater in magnitude. In fact, when overestimating, the SPP reports are always at least twice as large as the reference. False reports make up about one third of the total across all products. Nonetheless, the products detect wet and dry days with good accuracy, as dry days are the majority in most of the study region. Monthly performance is generally better for all products, although they become more prone to underestimation and show substantially increased negative bias.

- Performance for autumn and spring is similar, while it diverges in different ways for summer and winter. Performance for spring and autumn is similar to that of the global analysis, whereas it differs for winter and especially for summer. SPPs show worse detection capabilities and a greater number of false events in these two seasons (which also implies greater missed and falsely reported precipitation quantities), and summer also presents worse correlation values. Interestingly, this does not necessarily make winter and summer more problematic overall, as overestimation and underestimation of correctly detected precipitation have a notable influence. Indeed, winter is most problematic for CMORPH and summer for MSWEP, whereas IMERG is similarly problematic across all seasons.

- Correlation and detection capabilities increase with reference intensity, but so do underestimation magnitude and frequency. Correlations are still very low across all products and intensities (mainly below 60%), and the detection capabilities of CMORPH for its first two quartiles and those of IMERG for its first quartiles are low, as most of their pixels miss more than half the events. Nonetheless, CMORPH is more problematic for heavier intensities, IMERG for its third quartiles (not by much) and MSWEP for lighter intensities.

- The worst performance occurs in regions that combine the greatest orographic complexity with high altitudes, and which also tend to be considerably humid. These regions are the surroundings of the Septentrional Meseta, the Iberian and Betic ranges and the Pyrenees. The main problems are reduced detection capabilities and increased underestimation and, to a lesser extent, increased falsely reported precipitation. Other problematic regions are the northern littoral (reduced detection capabilities and increased underestimation) and the Iberian Mediterranean Basin (increased false precipitation).

- The density of reference gauges per pixel has no noticeable influence on the performance of any of the products. This supports their use, but it also implies that the sources of discrepancy must lie elsewhere.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| ATBD | Algorithm Theoretical Basis Document |
| CPC | Climate Prediction Center |
| GPCC | Global Precipitation Climatology Centre |
| GPCP | Global Precipitation Climatology Project |
| GPM | Global Precipitation Measurement Mission |
| IMS | Ice Mapping System |
| IPCC | Intergovernmental Panel on Climate Change |
| IR | Infrared |
| PMW | Passive Microwave |

Appendix A

Appendix B

Appendix C

References

1. IPCC. Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change; Stocker, T.F., Qin, D., Plattner, G.-K., Tignor, M., Allen, S.K., Boschung, J., Nauels, A., Xia, Y., Bex, V., Midgley, P.M., Eds.; Cambridge University Press: Cambridge, UK; New York, NY, USA, 2013; p. 1535.

2. Graff, L.S.; Lacasce, J. Changes in Cyclone Characteristics in Response to Modified SSTs. J. Clim. 2014, 27, 4273–4295.

3. del Río, S.; Cano-Ortiz, A.; Herrero, L.; Penas, A. Recent trends in mean maximum and minimum air temperatures over Spain (1961–2006). Theor. Appl. Climatol. 2012, 109, 605–626.

4. Olcina-Cantos, J.; Notivoli, R.S.; Miró, J.; Meseguer-Ruiz, O. Tropical nights on the Spanish Mediterranean coast, 1950–2014. Clim. Res. 2019, 78, 225–236.

5. Estrela, M.J.; Miró, J.; Pastor, F.; Millán, M. Frontal Atlantic Rainfall Component in the Western Mediterranean Basin. Variability and Spatial Distribution. ESF-MedCLIVAR Workshop on “Hydrological, Socioeconomic and Ecological Impacts of the North Atlantic Oscillation in the Mediterranean Region”. 2010, Volume 036931. Available online: https://www.researchgate.net/publication/235901141_FRONTAL_ATLANTIC_RAINFALL_COMPONENT_IN_THE_WESTERN_MEDITERRANEAN_BASIN_VARIABILITY_AND_SPATIAL_DISTRIBUTION (accessed on 24 October 2025).

6. Gonzalez-Hidalgo, J.C.; Lopez-Bustins, J.; Štepánek, P.; Martin-Vide, J.; de Luis, M. Monthly precipitation trends on the Mediterranean fringe of the Iberian Peninsula during the second-half of the twentieth century (1951–2000). Int. J. Climatol. 2009, 29, 1415–1429.

7. Miró, J.J.; Estrela, M.J.; Caselles, V.; Gómez, I. Spatial and temporal rainfall changes in the Júcar and Segura basins (1955–2016): Fine-scale trends. Int. J. Climatol. 2018, 38, 4699–4722.

8. Estrela, M.J.; Corell, D.; Valiente, J.A.; Azorin-Molina, C.; Chen, D. Spatio-temporal variability of fog-water collection in the eastern Iberian Peninsula: 2003–2012. Atmos. Res. 2019, 226, 87–101.

9. Miró, J.J.; Estrela, M.J.; Olcina-Cantos, J. Statistical downscaling and attribution of air temperature change patterns in the Valencia region (1948–2011). Atmos. Res. 2015, 156, 189–212.

10. Islam, M.A.; Yu, B.; Cartwright, N. Assessment and comparison of five satellite precipitation products in Australia. J. Hydrol. 2020, 590, 125474.

11. Michaelides, S.; Levizzani, V.; Anagnostou, E.; Bauer, P.; Kasparis, T.; Lane, J. Precipitation: Measurement, remote sensing, climatology and modeling. Atmos. Res. 2009, 94, 512–533.

12. Wu, X.; Zhao, N. Evaluation and Comparison of Six High-Resolution Daily Precipitation Products in Mainland China. Remote Sens. 2023, 15, 223.

13. Sharifi, E.; Eitzinger, J.; Dorigo, W. Performance of the state-of-the-art gridded precipitation products over mountainous terrain: A regional study over Austria. Remote Sens. 2019, 11, 2018.

14. Alsumaiti, T.S.; Hussein, K.; Ghebreyesus, D.T.; Sharif, H.O. Performance of the CMORPH and GPM IMERG products over the United Arab Emirates. Remote Sens. 2020, 12, 1426.

15. Maggioni, V.; Meyers, P.C.; Robinson, M.D. A Review of Merged High-Resolution Satellite Precipitation Product Accuracy during the Tropical Rainfall Measuring Mission (TRMM) Era. J. Hydrometeorol. 2016, 17, 1101–1117.

16. Mei, Y.; Anagnostou, E.N.; Nikolopoulos, E.I.; Borga, M. Error Analysis of Satellite Precipitation Products in Mountainous Basins. J. Hydrometeorol. 2014, 15, 1778–1793.

17. Derin, Y.; Anagnostou, E.; Berne, A.; Borga, M.; Boudevillain, B.; Buytaert, W.; Che-Hao, C.; Chen, H.; Delrieu, G.; Hsu, Y.C.; et al. Evaluation of GPM-era Global Satellite Precipitation Products over Multiple Complex Terrain Regions. Remote Sens. 2019, 11, 2936.

18. Ma, Z.; Xu, J.; Zhu, S.; Yang, J.; Tang, G.; Yang, Y.; Shi, Z.; Hong, Y. AIMERG: A new Asian precipitation dataset (0.1°/half-hourly, 2000–2015) by calibrating the GPM-era IMERG at a daily scale using APHRODITE. Earth Syst. Sci. Data 2020, 12, 1525–1544.

19. López-Davalillo Larrea, J. Geografía Regional de España; Colección Grado, UNED (Universidad Nacional de Educación a Distancia): Madrid, Spain, 2014.

20. IGN. Atlas Nacional de España (Instituto Geográfico Nacional); IGN: Madrid, Spain, 2019.

21. AEMET; IMP. Atlas Climático Ibérico (Agencia Estatal de Meteorología e Instituto de Meteorologia de Portugal); AEMET: Madrid, Spain; IMP: Lisboa, Portugal, 2011.

22. Martín Vide, J.; Olcina Cantos, J. Climas y Tiempos de España; Alianza: Madrid, Spain, 2001.

23. Domonkos, P. Homogenization of precipitation time series with ACMANT. Theor. Appl. Climatol. 2015, 122, 303–314.

24. Miró, J.J.; Caselles, V.; Estrela, M.J. Multiple imputation of rainfall missing data in the Iberian Mediterranean context. Atmos. Res. 2017, 197, 313–330.

25. Miró, J.J.; Lemus-Canovas, M.; Serrano-Notivoli, R.; Olcina Cantos, J.; Estrela, M.; Martin-Vide, J.; Sarricolea, P.; Meseguer-Ruiz, O. A component-based approximation for trend detection of intense rainfall in the Spanish Mediterranean coast. Weather Clim. Extrem. 2022, 38, 100513.

26. Joyce, R.J.; Janowiak, J.E.; Arkin, P.A.; Xie, P. CMORPH: A Method that Produces Global Precipitation Estimates from Passive Microwave and Infrared Data at High Spatial and Temporal Resolution. J. Hydrometeorol. 2004, 5, 487–503.

27. Xie, P.; Joyce, R.; Wu, S. Bias-Corrected CMORPH - Climate Algorithm Theoretical Basis Document, NOAA Climate Data Record Program (CDRP-ATBD-0812 by CDRP Document Manager), Rev. 0 (2018). 2018. Available online: https://www.ncei.noaa.gov/products/climate-data-records/precipitation-cmorph (accessed on 24 October 2025).

28. Stampoulis, D.; Anagnostou, E.N. Evaluation of Global Satellite Rainfall Products over Continental Europe. J. Hydrometeorol. 2012, 13, 588–603.

29. Zambrano-Bigiarini, M.; Nauditt, A.; Birkel, C.; Verbist, K.; Ribbe, L. Temporal and spatial evaluation of satellite-based rainfall estimates across the complex topographical and climatic gradients of Chile. Hydrol. Earth Syst. Sci. 2017, 21, 1295–1320.

30. Alijanian, M.; Rakhshandehroo, G.R.; Mishra, A.K.; Dehghani, M. Evaluation of satellite rainfall climatology using CMORPH, PERSIANN-CDR, PERSIANN, TRMM, MSWEP over Iran. Int. J. Climatol. 2017, 37, 4896–4914.

31. Huang, A.; Zhao, Y.; Zhou, Y.; Yang, B.; Zhang, L.; Dong, X.; Fang, D.; Wu, Y. Evaluation of multisatellite precipitation products by use of ground-based data over China. J. Geophys. Res. Atmos. 2016, 121, 10,654–10,675.

32. Serrat-Capdevila, A.; Merino, M.; Valdes, J.B.; Durcik, M. Evaluation of the Performance of Three Satellite Precipitation Products over Africa. Remote Sens. 2016, 8, 836.

33. Huffman, G.J.; Bolvin, D.T.; Joyce, R.; Nelkin, E.J.; Tan, J.; Braithwaite, D.; Hsua, K.; Kelley, O.A.; Nguyen, P.; Sorooshian, S.; et al. NASA Global Precipitation Measurement (GPM) Integrated Multi-satellitE Retrievals for GPM (IMERG): Algorithm Theoretical Basis Document (ATBD) Version 07. 2023. Available online: https://gpm.nasa.gov/resources/documents/imerg-v07-atbd (accessed on 24 October 2025).

34. Satgé, F.; Xavier, A.; Pillco Zolá, R.; Hussain, Y.; Timouk, F.; Garnier, J.; Bonnet, M.P. Comparative Assessments of the Latest GPM Mission’s Spatially Enhanced Satellite Rainfall Products over the Main Bolivian Watersheds. Remote Sens. 2017, 9, 369.

35. Peinó, E.; Bech, J.; Udina, M. Performance Assessment of GPM IMERG Products at Different Time Resolutions, Climatic Areas and Topographic Conditions in Catalonia. Remote Sens. 2022, 14, 5085.

36. Ge, Z.; Yu, R.; Zhu, P.; Hao, Y.; Li, Y.; Liu, X.; Zhang, Z.; Ren, X. Applicability evaluation and error analysis of TMPA and IMERG in Inner Mongolia Autonomous Region, China. Theor. Appl. Climatol. 2023, 151, 1449–1467.

37. Nan, L.; Yang, M.; Wang, H.; Xiang, Z.; Hao, S. Comprehensive Evaluation of Global Precipitation Measurement Mission (GPM) IMERG Precipitation Products over Mainland China. Water 2021, 13, 3381.

38. Beck, H.E.; Wood, E.F.; Pan, M.; Fisher, C.K.; Miralles, D.G.; van Dijk, A.I.J.M.; McVicar, T.R.; Adler, R.F. MSWEP V2 Global 3-Hourly 0.1° Precipitation: Methodology and Quantitative Assessment. Bull. Am. Meteorol. Soc. 2019, 100, 473–500.

39. Shaowei, N.; Jie, W.; Juliang, J.; Xiaoyan, X.; Yuliang, Z.; Fan, S.; Linlin, Z. Comprehensive evaluation of satellite-derived precipitation products considering spatial distribution difference of daily precipitation over eastern China. J. Hydrol. Reg. Stud. 2022, 44, 101242.

40. NASA. Shuttle Radar Topography Mission (SRTM) Global; Distributed by OpenTopography; NASA: Washington, DC, USA, 2013.

41. Riley, S.; DeGloria, S.; Elliot, S. A Terrain Ruggedness Index that Quantifies Topographic Heterogeneity. Intermt. J. Sci. 1999, 5, 23–27.

42. Zhang, X.; Alexander, L.; Hegerl, G.C.; Jones, P.; Tank, A.K.; Peterson, T.C.; Trewin, B.; Zwiers, F.W. Indices for monitoring changes in extremes based on daily temperature and precipitation data. WIREs Clim. Chang. 2011, 2, 851–870.

43. Tian, Y.; Peters-Lidard, C.D.; Eylander, J.B.; Joyce, R.J.; Huffman, G.J.; Adler, R.F.; Hsu, K.l.; Turk, F.J.; Garcia, M.; Zeng, J. Component analysis of errors in satellite-based precipitation estimates. J. Geophys. Res. 2009, 114.

44. Navarro, A.; García-Ortega, E.; Merino, A.; Sánchez, J.L.; Tapiador, F.J. Orographic biases in IMERG precipitation estimates in the Ebro River basin (Spain): The effects of rain gauge density and altitude. Atmos. Res. 2020, 244, 105068.

45. Li, J.; Lu, C.; Chen, J.; Zhou, X.; Yang, K.; Xu, X.; Wu, X.; Zhu, L.; He, X.; Wu, S.; et al. The combined effects of convective entrainment and orographic drag on precipitation over the Tibetan Plateau. Sci. China Earth Sci. 2025, 68, 2615–2630.

46. Qin, Y.; Chen, Z.; Shen, Y.; Zhang, S.; Shi, R. Evaluation of Satellite Rainfall Estimates over the Chinese Mainland. Remote Sens. 2014, 6, 11649–11672.

47. Roushdi, M. Spatio-Temporal Assessment of Satellite Estimates and Gauge-Based Rainfall Products in Northern Part of Egypt. Climate 2022, 10, 134.

48. Awange, J.; Ferreira, V.; Forootan, E.; Khandu; Andam-Akorful, S.; Agutu, N.; He, X. Uncertainties in remotely sensed precipitation data over Africa. Int. J. Climatol. 2016, 36, 303–323.

49. Zhou, Z.; Guo, B.; Xing, W.; Zhou, J.; Xu, F.; Xu, Y. Comprehensive evaluation of latest GPM era IMERG and GSMaP precipitation products over mainland China. Atmos. Res. 2020, 246, 105132.

50. Bogerd, L.; Overeem, A.; Leijnse, H.; Uijlenhoet, R. A Comprehensive Five-Year Evaluation of IMERG Late Run Precipitation Estimates over the Netherlands. J. Hydrometeorol. 2021, 22, 1855–1868.

51. Su, J.; Lü, H.; Ryu, D.; Zhu, Y. The Assessment and Comparison of TMPA and IMERG Products over the Major Basins of Mainland China. Earth Space Sci. 2019, 6, 2461–2479.

52. Zhu, S.; Shen, Y.; Ma, Z. A New Perspective for Charactering the Spatio-temporal Patterns of the Error in GPM IMERG over Mainland China. Earth Space Sci. 2021, 8, 1.

53. Ramsauer, T.; Weiß, T.; Marzahn, P. Comparison of the GPM IMERG Final Precipitation Product to RADOLAN Weather Radar Data over the Topographically and Climatically Diverse Germany. Remote Sens. 2018, 10, 2029.

54. Senent-Aparicio, J.; López-Ballesteros, A.; Pérez-Sánchez, J.; Segura-Méndez, F.J.; Pulido-Velazquez, D. Using Multiple Monthly Water Balance Models to Evaluate Gridded Precipitation Products over Peninsular Spain. Remote Sens. 2018, 10, 922.

55. Moazami, S.; Najafi, M. A comprehensive evaluation of GPM-IMERG V06 and MRMS with hourly ground-based precipitation observations across Canada. J. Hydrol. 2021, 594, 125929.

56. Zhang, J.; Lin, L.F.; Bras, R.L. Evaluation of the Quality of Precipitation Products: A Case Study Using WRF and IMERG Data over the Central United States. J. Hydrometeorol. 2018, 19, 2007–2020.

57. Sun, W.; Sun, Y.; Li, X.; Wang, T.; Wang, Y.; Qiu, Q.; Deng, Z. Evaluation and Correction of GPM IMERG Precipitation Products over the Capital Circle in Northeast China at Multiple Spatiotemporal Scales. Adv. Meteorol. 2018, 2018, 4714173.

58. Xin, Y.; Yang, Y.; Chen, X.; Yue, X.; Liu, Y.; Yin, C. Evaluation of IMERG and ERA5 precipitation products over the Mongolian Plateau. Sci. Rep. 2022, 12, 21776.

59. Wang, H.; Yong, B. Quasi-Global Evaluation of IMERG and GSMaP Precipitation Products over Land Using Gauge Observations. Water 2020, 12, 243.

60. Yu, L.; Leng, G.; Python, A.; Peng, J. A Comprehensive Evaluation of Latest GPM IMERG V06 Early, Late and Final Precipitation Products across China. Remote Sens. 2021, 13, 1208.

61. Tapiador, F.J.; Navarro, A.; García-Ortega, E.; Merino, A.; Sánchez, J.L.; Marcos, C.; Kummerow, C. The contribution of rain gauges in the calibration of the IMERG product: Results from the first validation over Spain. J. Hydrometeorol. 2020, 21, 161–182.

62. Tang, G.; Clark, M.P.; Papalexiou, S.M.; Ma, Z.; Hong, Y. Have satellite precipitation products improved over last two decades? A comprehensive comparison of GPM IMERG with nine satellite and reanalysis datasets. Remote Sens. Environ. 2020, 240, 111697.

63. Kidd, C.; Bauer, P.; Turk, J.; Huffman, G.J.; Joyce, R.; Hsu, K.L.; Braithwaite, D. Intercomparison of High-Resolution Precipitation Products over Northwest Europe. J. Hydrometeorol. 2012, 13, 67–83.

64. Chua, Z.W.; Kuleshov, Y.; Watkins, A. Evaluation of Satellite Precipitation Estimates over Australia. Remote Sens. 2020, 12, 678.

65. Lockhoff, M.; Zolina, O.; Simmer, C.; Schulz, J. Representation of Precipitation Characteristics and Extremes in Regional Reanalyses and Satellite- and Gauge-Based Estimates over Western and Central Europe. J. Hydrometeorol. 2019, 20, 1123–1145.

66. Lo Conti, F.; Hsu, K.L.; Noto, L.V.; Sorooshian, S. Evaluation and comparison of satellite precipitation estimates with reference to a local area in the Mediterranean Sea. Atmos. Res. 2014, 138, 189–204.

67. Salio, P.; Hobouchian, M.P.; García Skabar, Y.; Vila, D. Evaluation of high-resolution satellite precipitation estimates over southern South America using a dense rain gauge network. Atmos. Res. 2015, 163, 146–161.

68. Wang, Q.; Zeng, Y.; Mannaerts, C.; Golroudbary, V.R. Determining Relative Errors of Satellite Precipitation Data over The Netherlands. In Proceedings of the 2nd International Electronic Conference on Remote Sensing, Online, 22 March–5 April 2018; Volume 22.

69. Song, L.; Xu, C.; Long, Y.; Lei, X.; Suo, N.; Cao, L. Performance of Seven Gridded Precipitation Products over Arid Central Asia and Subregions. Remote Sens. 2022, 14, 6039.

70. Beck, H.E.; Vergopolan, N.; Pan, M.; Levizzani, V.; van Dijk, A.I.J.M.; Weedon, G.P.; Brocca, L.; Pappenberger, F.; Huffman, G.J.; Wood, E.F. Global-scale evaluation of 22 precipitation datasets using gauge observations and hydrological modeling. Hydrol. Earth Syst. Sci. 2017, 21, 6201–6217.

71. El Kenawy, A.M.; McCabe, M.F.; Lopez-Moreno, J.I.; Hathal, Y.; Robaa, S.M.; Al Budeiri, A.L.; Jadoon, K.Z.; Abouelmagd, A.; Eddenjal, A.; Domínguez-Castro, F.; et al. Spatial assessment of the performance of multiple high-resolution satellite-based precipitation data sets over the Middle East. Int. J. Climatol. 2019, 39, 2522–2543.

72. Gao, Y.C.; Liu, M.F. Evaluation of high-resolution satellite precipitation products using rain gauge observations over the Tibetan Plateau. Hydrol. Earth Syst. Sci. 2013, 17, 837–849.

73. Camici, S.; Ciabatta, L.; Massari, C.; Brocca, L. How reliable are satellite precipitation estimates for driving hydrological models: A verification study over the Mediterranean area. J. Hydrol. 2018, 563, 950–961.

74. Navarro, A.; García-Ortega, E.; Merino, A.; Sánchez, J.L.; Kummerow, C.; Tapiador, F.J. Assessment of IMERG precipitation estimates over Europe. Remote Sens. 2019, 11, 2470.

| Name | Abbreviation | Definition | Best Value |
|---|---|---|---|
| Hit Rate | HtR | | 1 |
| Probability of Detection | POD | | 1 |
| False Alarm Ratio | FAR | | 0 |
| Overestimation Rate | OvR | | 0 |
| Underestimation Rate | UdR | | 0 |
| Correlation Coefficient | CC | | 1 |
| Relative Mean Absolute Error | rMAE | | 0 |

| Seasonal | Altitude | Orography |
|---|---|---|
| Winter: December, January and February | Low altitude = | Flat terrain: |
| Spring: March, April and May | higher-Low altitude = | steeper-Flat terrain: |
| Summer: June, July and August | lower-High altitude = | flatter-Steep terrain: |
| Autumn: September, October and November | High altitude = | Steep terrain: |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

García-Ten, A.; Niclòs, R.; Valor, E.; Caselles, V.; Estrela, M.J.; Miró, J.J.; Luna, Y.; Belda, F. Evaluation of CMORPH V1.0, IMERG V07A and MSWEP V2.8 Satellite Precipitation Products over Peninsular Spain and the Balearic Islands. Remote Sens. 2025, 17, 3562. https://doi.org/10.3390/rs17213562
