Accurate Digital Reconstruction of High-Steep Rock Slope via Transformer-Based Multi-Sensor Data Fusion

Highlights

- Partial overlap, large outliers, and density heterogeneity in TLS–UAV data were revealed.

- A Transformer-based fusion method was introduced for high-steep slope reconstruction.

- Accurate digital modeling of complex mountainous terrains was enabled.

- Reliable data support for disaster warning and risk mitigation was provided.

Abstract

1. Introduction

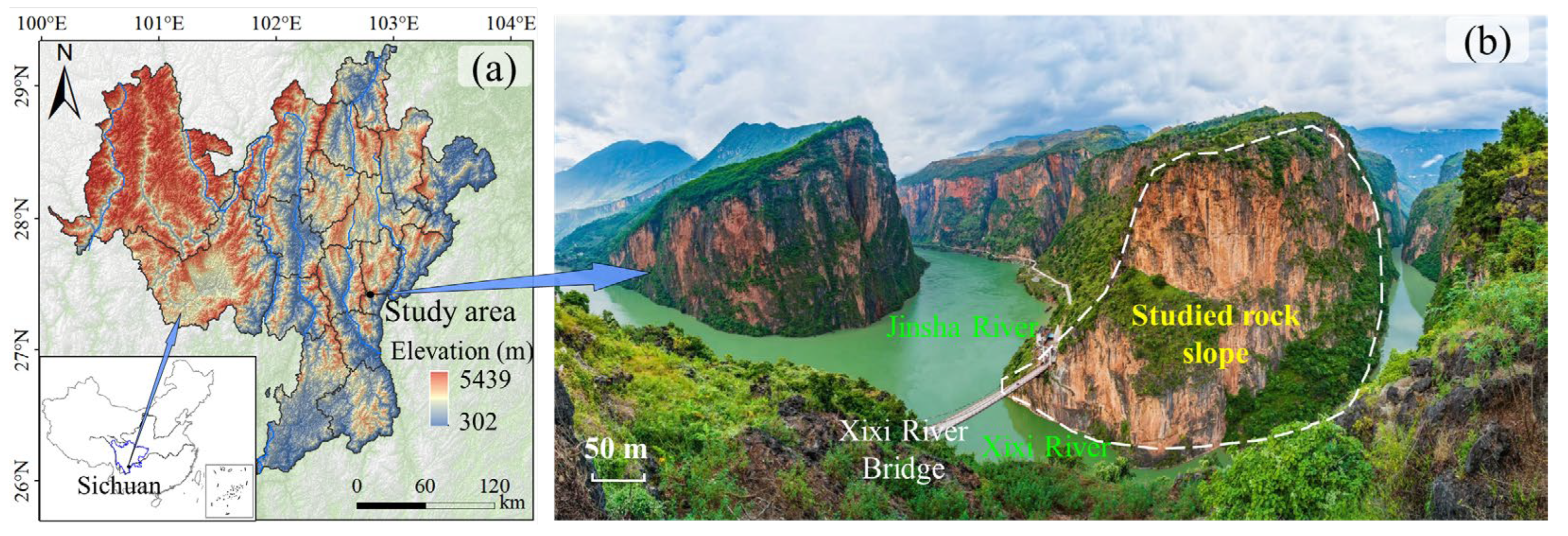

2. Study Sites

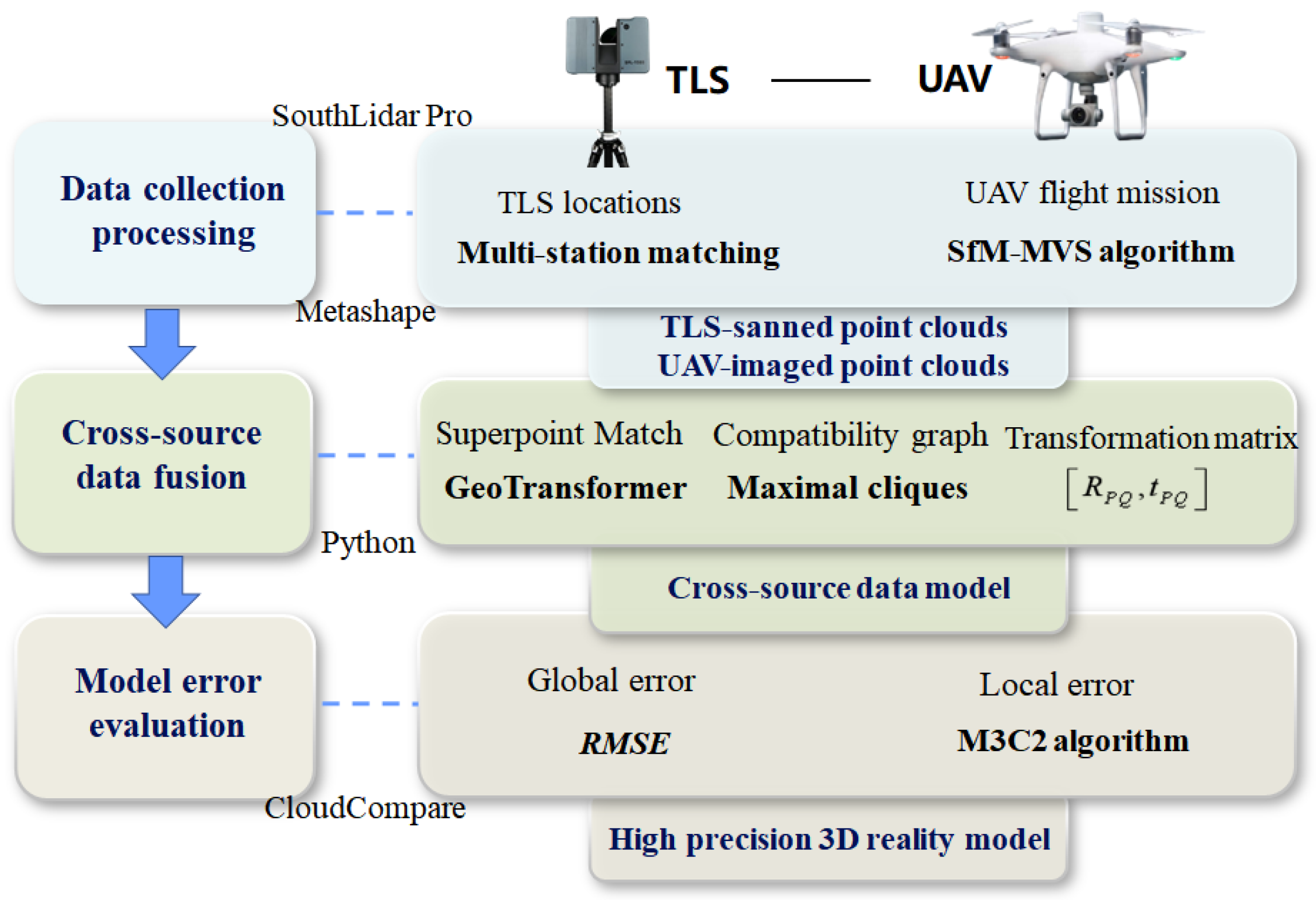

3. Materials and Methods

3.1. Data Collection and Processing

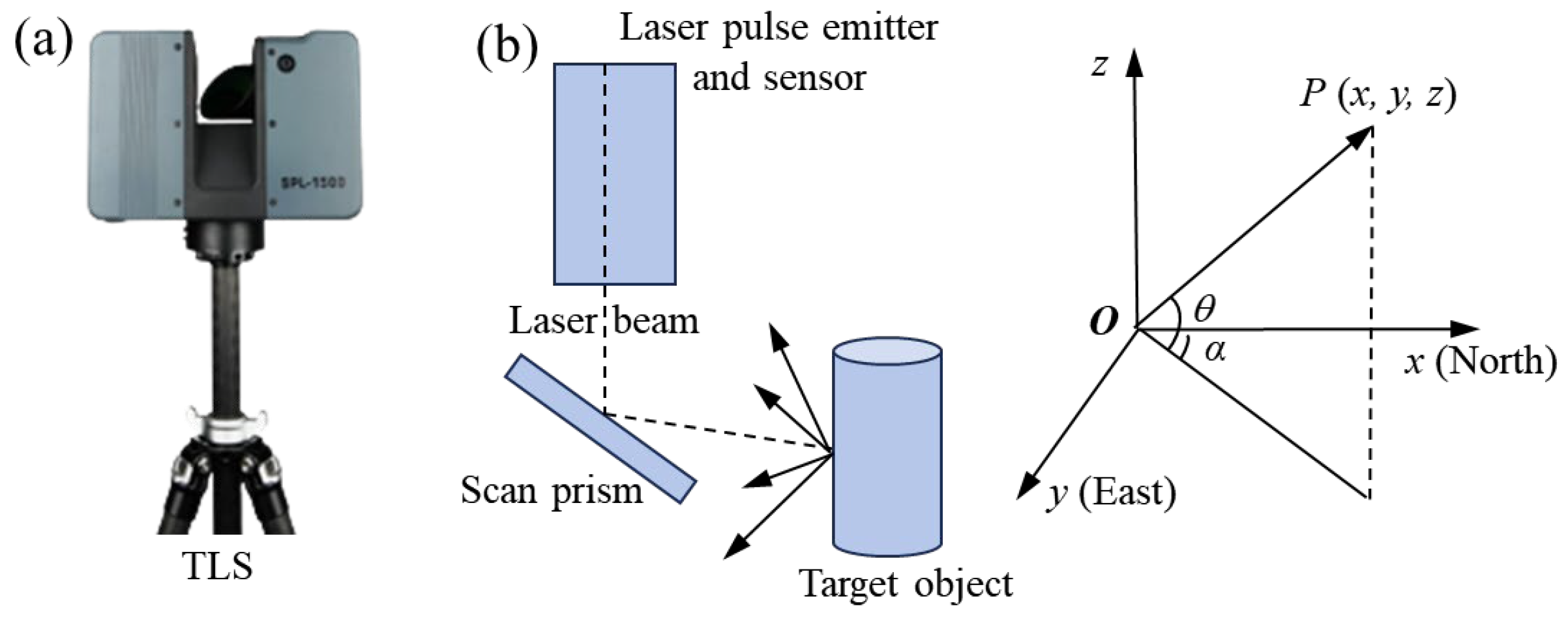

3.1.1. Terrestrial Laser Scanning

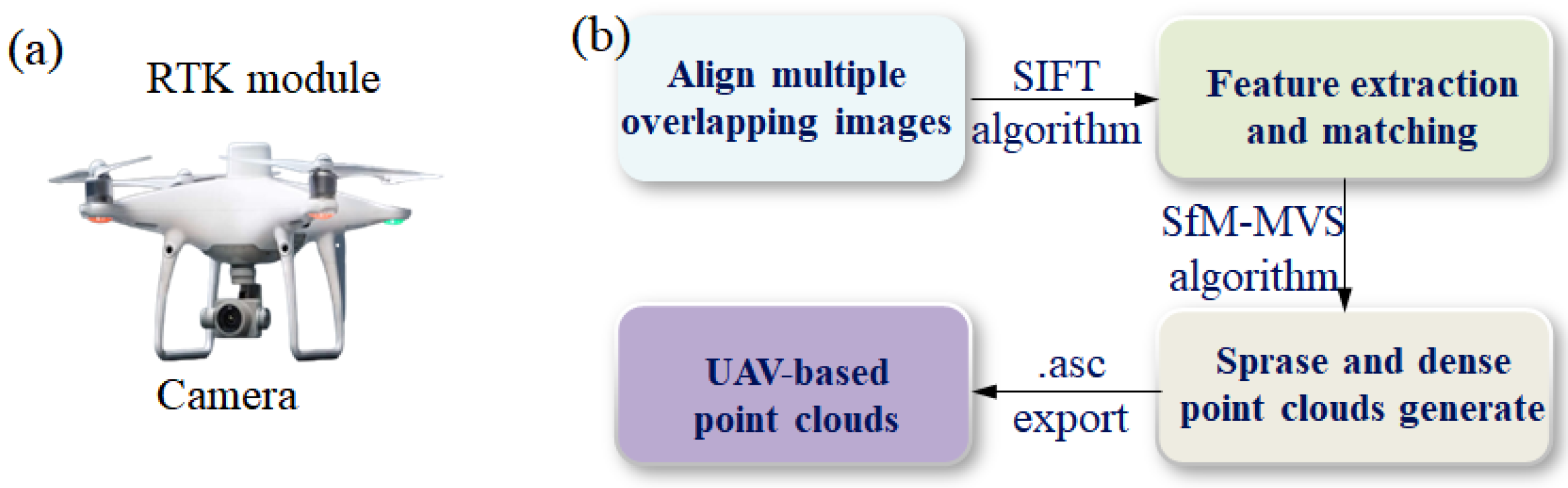

3.1.2. Unmanned Aerial Vehicle Photogrammetry

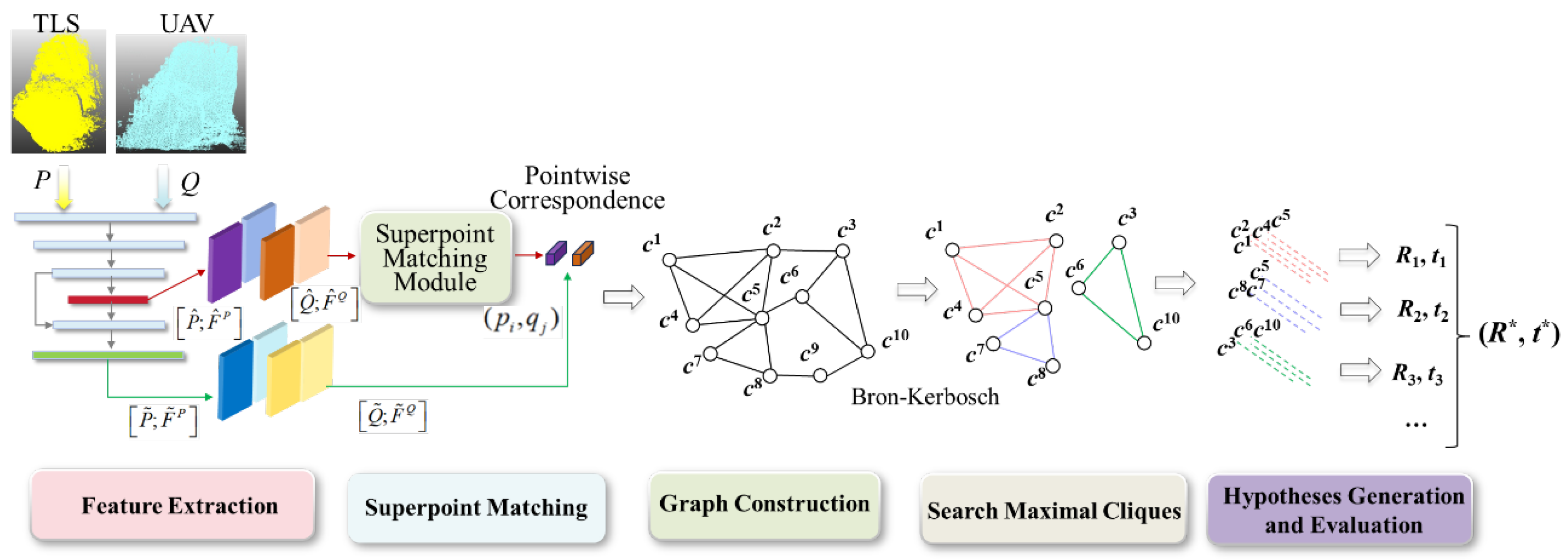

3.2. Multi-Sensor Data Fusion Method

3.2.1. Problems and Objectives of Multi-Sensor Data Registration

3.2.2. Transformer-Based Method of CSPC Registration

3.3. Accuracy Analysis Method for Multi-Sensor Data Model

- (1)

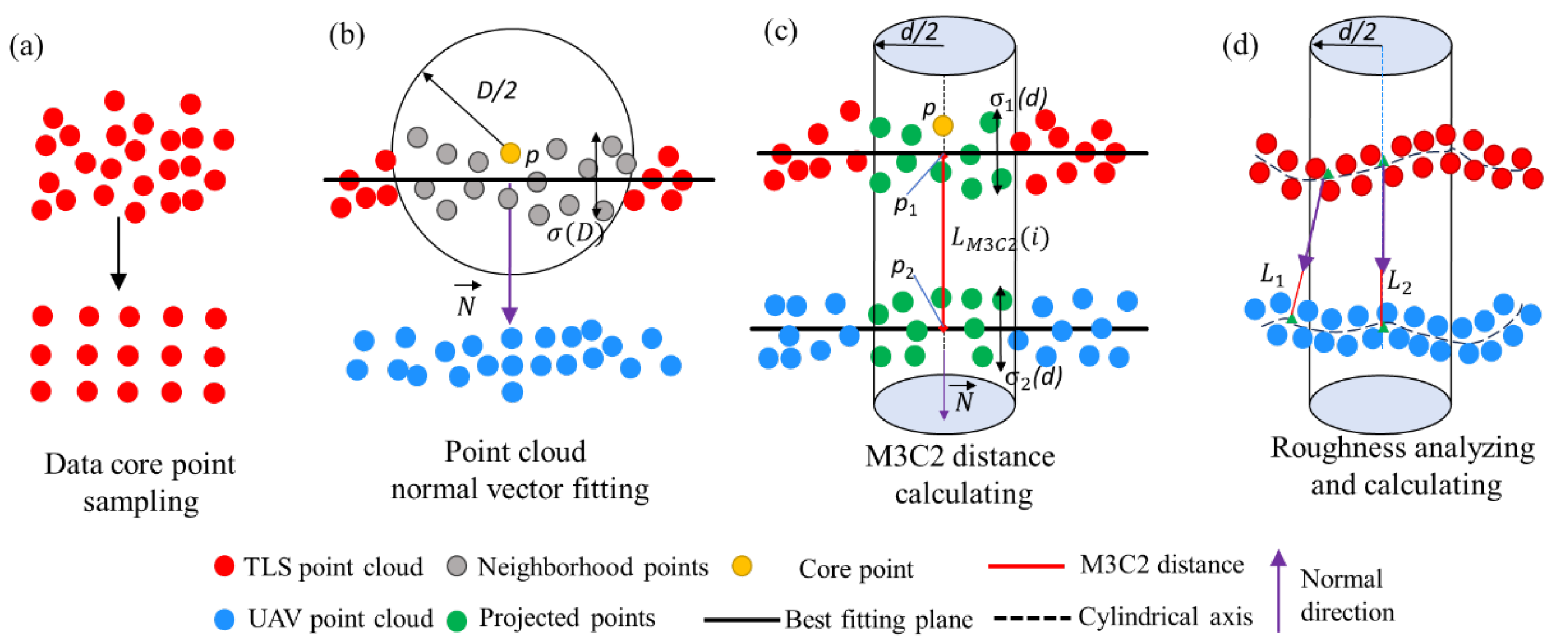

- Data core point sampling: A uniformly distributed and low-density core point set, which is a subset of the original point cloud data, is obtained through downsampling, as illustrated in Figure 6a. This process effectively reduces the complexity of data processing and enhances the computational efficiency of subsequent operations.

- (2)

- Point cloud normal vector fitting: For each core point p in Figure 6a, a neighborhood dataset is constructed from the original point cloud within a specified radius D/2. As shown in Figure 6b, a local normal vector N is derived by fitting a plane to the neighborhood data using the least squares method. The direction of N determines the reference for distance calculation. Additionally, the standard deviation of the neighborhood points from the fitted plane is defined as the roughness σ(D) (Equation (11)), which characterizes the local surface properties.where ai denotes the distance from the i-th point to the fitted plane within a radius of D/2; represents the average distance from all points to the fitted plane within the same radius D/2; N is the total number of points located within the radius D/2.

- (3)

- M3C2 distance Calculating: Along the direction of the normal vector N of the core point p, a cylinder with a radius of d/2 is constructed centered at p. This cylinder intersects the CSPC, forming two sets of intersection points, n1 and n2 (represented by green points in Figure 6c). The points in each intersection set are projected onto the cylinder axis, and the mean positions of the projected points, p1 and p2, are calculated. The Euclidean distance LM3C2 between p1 and p2 represents the variation distance of the CSPC at the core point p. By iterating through all core points, the distribution of point cloud variation distances across the entire target region can be obtained, which is then used to evaluate registration errors.

- (4)

- Roughness analyzing and calculating: To mitigate the influence of random errors, a confidence interval is established to determine the Least Significant Change Distance (LoD). Assuming that the errors follow an independent Gaussian distribution, the following formula can be applied for testing when the number of intersection points n1 and n2 is greater than or equal to 30 (Equation (12)):where LOD (d) represents the least significant change distance under the projection radius d/2; σ1 and σ2 denote the roughness of the TLS and UAV point clouds, respectively, under the projection radius d/2; n1 and n2 are the numbers of core points in the respective point clouds; reg represents the error of point cloud registration.
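The four steps above can be sketched in a few lines of NumPy. This is an illustrative sketch only, not the authors' implementation. Equations (11) and (12) are not reproduced in this text, so the code assumes the usual M3C2 forms: roughness as the sample standard deviation of point-to-plane distances, and LoD(d) = 1.96 × (sqrt(σ1²/n1 + σ2²/n2) + reg) at the 95% confidence level. The function names, the voxel-based core point sampling, the SVD plane fit, and the `half_length` cap on the cylinder are all assumptions introduced for illustration.

```python
import numpy as np

def core_points(cloud, voxel):
    """Step 1: keep the first original point in each voxel (a subset of the cloud)."""
    keys = np.floor(cloud / voxel).astype(np.int64)
    _, first = np.unique(keys, axis=0, return_index=True)
    return cloud[np.sort(first)]

def fit_normal(neighbors):
    """Step 2: plane fit by SVD (orthogonal least squares); returns normal, roughness."""
    centered = neighbors - neighbors.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    normal = vt[-1]                    # direction of least variance
    a = centered @ normal              # signed point-to-plane distances a_i
    return normal, a.std(ddof=1)       # assumed form of the roughness sigma(D)

def cylinder_stats(cloud, p, normal, d, half_length):
    """Step 3: mean, spread and count of points projected onto the cylinder axis."""
    rel = cloud - p
    axial = rel @ normal
    radial = np.linalg.norm(rel - np.outer(axial, normal), axis=1)
    a = axial[(radial <= d / 2) & (np.abs(axial) <= half_length)]
    if a.size < 2:
        return None
    return a.mean(), a.std(ddof=1), a.size

def m3c2(core, tls, uav, D, d, half_length, reg=0.0, z=1.96):
    """Return one (distance, LoD) row per usable core point."""
    rows = []
    for p in core:
        nb = tls[np.linalg.norm(tls - p, axis=1) <= D / 2]
        if len(nb) < 3:                # a plane needs at least three points
            continue
        normal, _ = fit_normal(nb)
        s1 = cylinder_stats(tls, p, normal, d, half_length)
        s2 = cylinder_stats(uav, p, normal, d, half_length)
        if s1 is None or s2 is None:
            continue
        (m1, sd1, n1), (m2, sd2, n2) = s1, s2
        dist = abs(m2 - m1)            # unsigned distance between p1 and p2
        lod = np.nan                   # Step 4: only defined for n1, n2 >= 30
        if n1 >= 30 and n2 >= 30:
            lod = z * (np.sqrt(sd1**2 / n1 + sd2**2 / n2) + reg)
        rows.append((dist, lod))
    return np.array(rows)

# Synthetic check: two noisy horizontal planes offset by 0.10 m (made-up data).
rng = np.random.default_rng(0)
x, y = np.meshgrid(np.linspace(0, 1, 21), np.linspace(0, 1, 21))
xy = np.column_stack([x.ravel(), y.ravel()])
tls = np.column_stack([xy, 0.1 + rng.normal(0, 0.005, len(xy))])
uav = np.column_stack([xy, 0.2 + rng.normal(0, 0.005, len(xy))])
res = m3c2(core_points(tls, 0.25), tls, uav, D=0.4, d=0.4, half_length=1.0)
print(res[:, 0].mean())
```

On the synthetic planes the mean distance should come out close to the imposed 0.10 m offset. Core points near the edge of the patch have fewer than 30 points in the cylinder, so their LoD is left undefined rather than computed from an unreliable sample.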

4. Results and Analysis

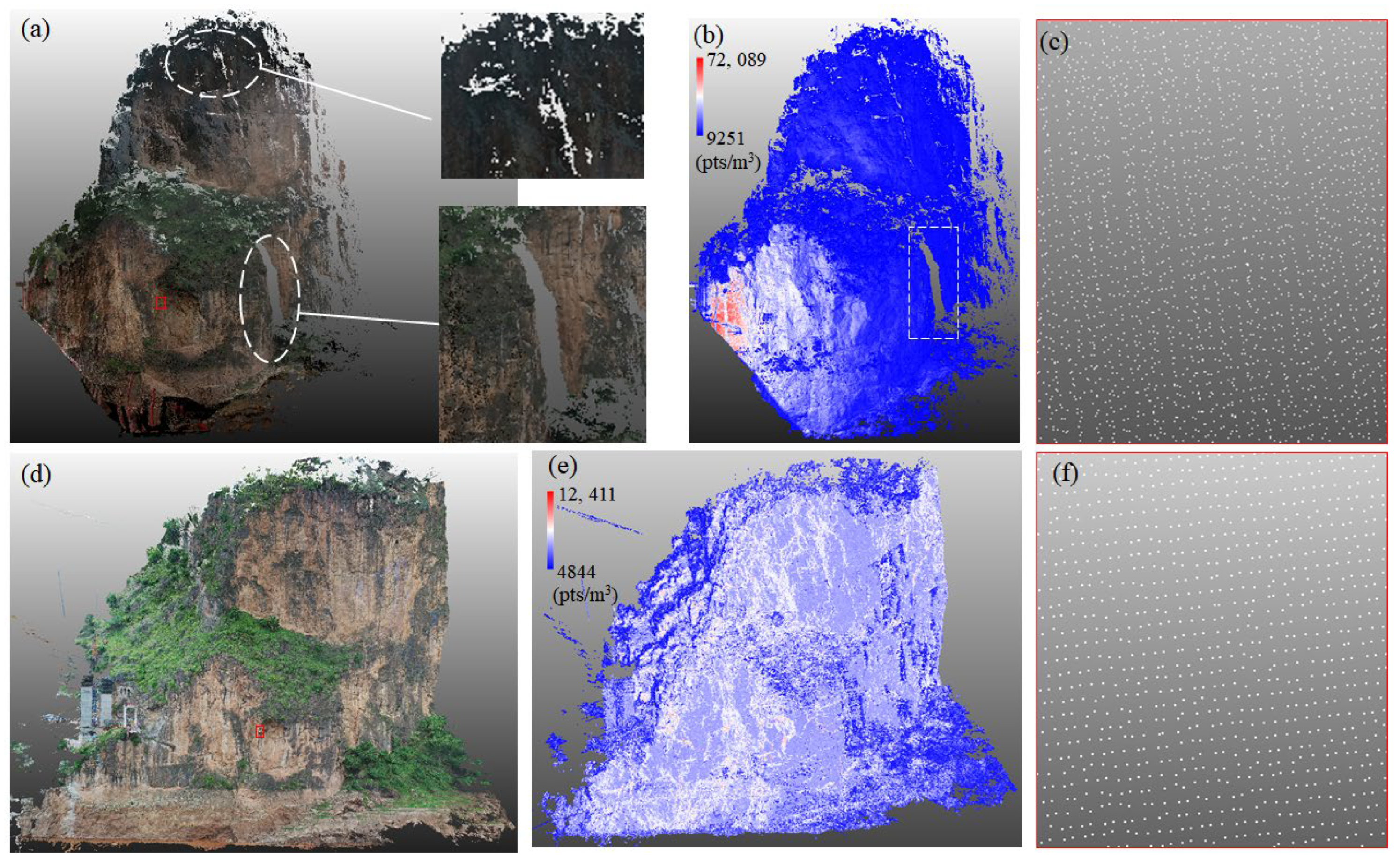

4.1. Analysis on TLS-UAV Heterogeneous Data

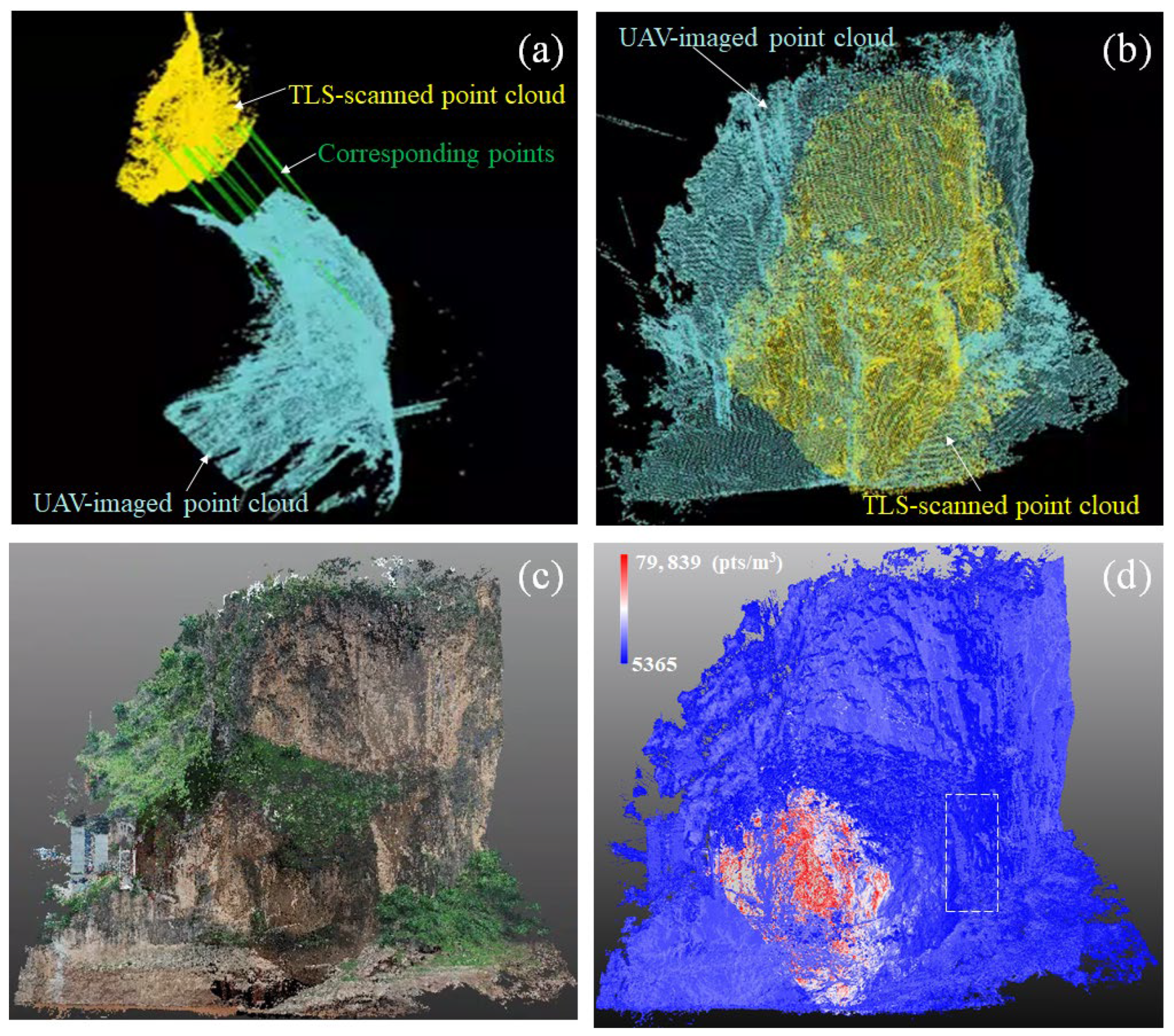

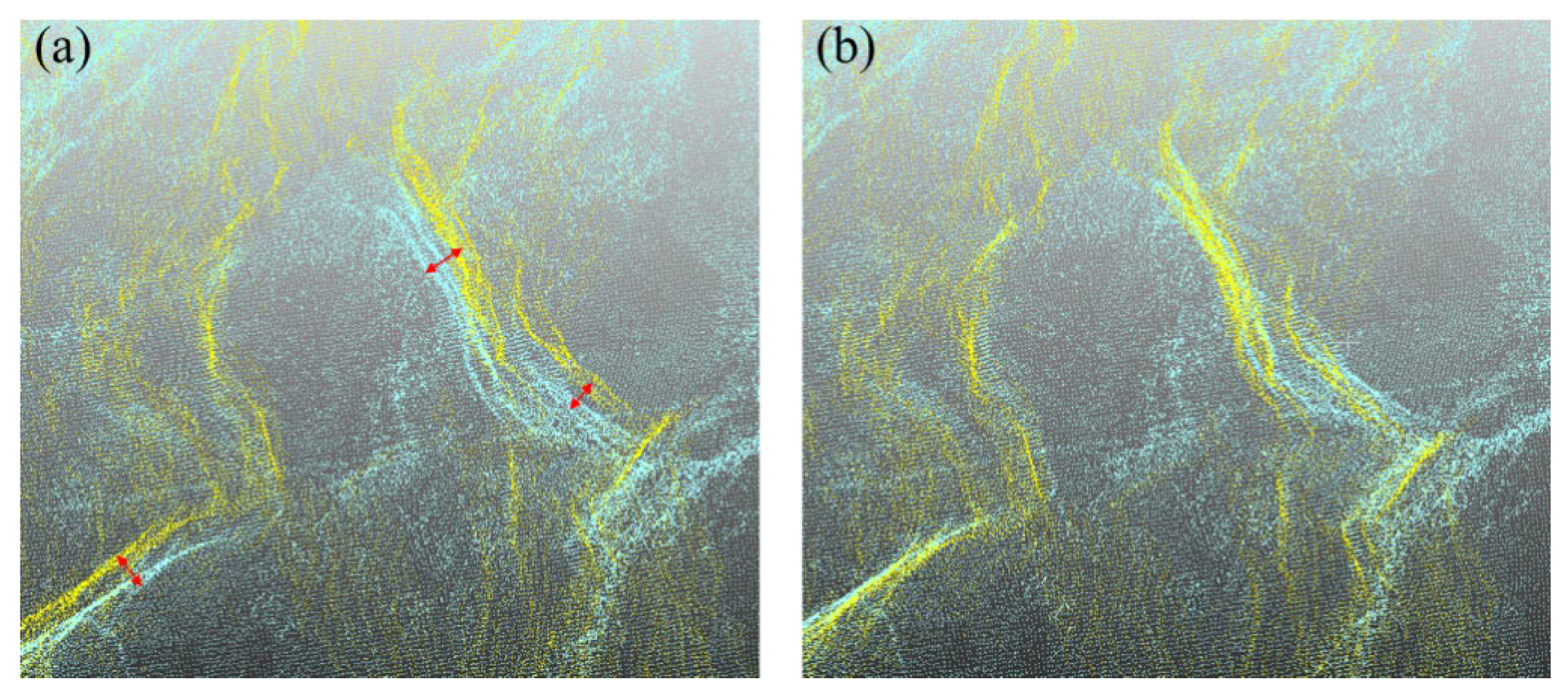

4.2. Fusion Effect of Multi-Sensor Data Model

4.3. Accuracy Evaluation of Multi-Sensor Data Model

5. Discussion

5.1. Advantages and Limitations of Methodology

5.2. Potential of High-Precision Digital Model

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Parameter | Value |
|---|---|
| Weight | 1391 g |
| Maximum flight height | 500 m |
| Maximum flight time | 30 min |
| GNSS mode | GPS/BDS/Galileo |
| Image dimensions | 5472 × 3648 pixels |
| Focal length | 8.8–24 mm |
| Post-processing | Agisoft Metashape V2.0.2 |

| Critical Parameter | Value |
|---|---|
| Maximum service time | 4 h |
| Ranging method | Pulse-type |
| Maximum scanning distance | 1500 m |
| Distance resolution | 3 mm @ 100 m |
| Maximum field of view | Horizontal 360° / vertical 300° |
| Angular resolution | 0.001° |
| Post-processing | SouthLidar Pro2.0 |

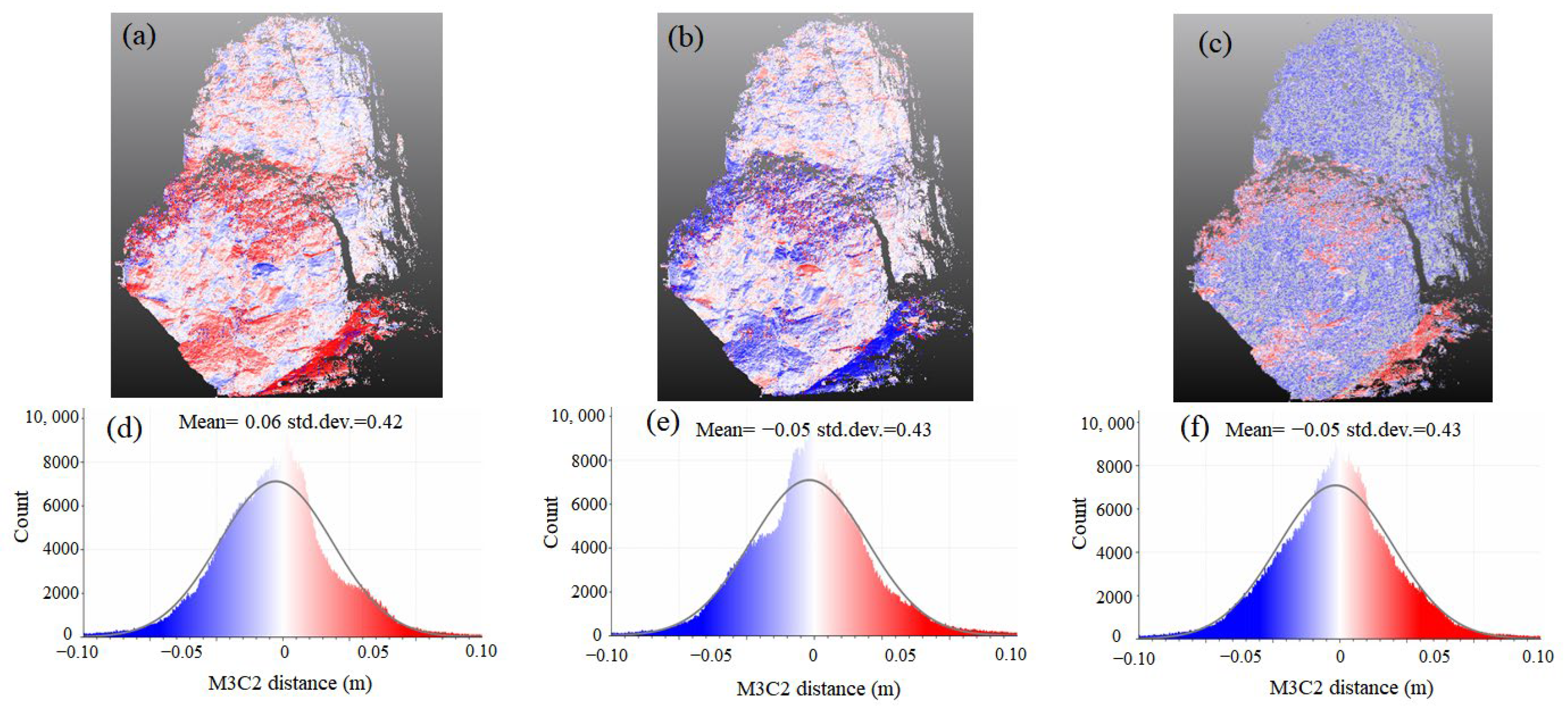

| Method | Global Error RMSE (m) | Local Error M3C2 (m) | Time (s) |
|---|---|---|---|
| ICP-based method | 0.23 | 0.19 | 154 |
| Transformer-based method | 0.08 | 0.06 | 68 |
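The relative improvement implied by the comparison table above can be worked out directly from its values. The short sketch below is only arithmetic on the tabulated numbers; the dictionary keys and the `reduction` helper are names introduced here for illustration.

```python
# Values copied from the comparison table above.
icp = {"rmse_m": 0.23, "m3c2_m": 0.19, "time_s": 154}
transformer = {"rmse_m": 0.08, "m3c2_m": 0.06, "time_s": 68}

def reduction(baseline, value):
    """Percentage reduction of `value` relative to `baseline`."""
    return 100.0 * (baseline - value) / baseline

gains = {key: round(reduction(icp[key], transformer[key]), 1) for key in icp}
print(gains)  # {'rmse_m': 65.2, 'm3c2_m': 68.4, 'time_s': 55.8}
```

On these figures, the Transformer-based method reduces the global RMSE by about 65%, the local M3C2 error by about 68%, and the run time by about 56% relative to the ICP-based method.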


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, C.; Bao, H.; Zhang, J.; Lan, H.; Adriano, B.; Koshimura, S.; Yuan, W. Accurate Digital Reconstruction of High-Steep Rock Slope via Transformer-Based Multi-Sensor Data Fusion. Remote Sens. 2025, 17, 3555. https://doi.org/10.3390/rs17213555
