Advances on Multimodal Remote Sensing Foundation Models for Earth Observation Downstream Tasks: A Survey

Highlights

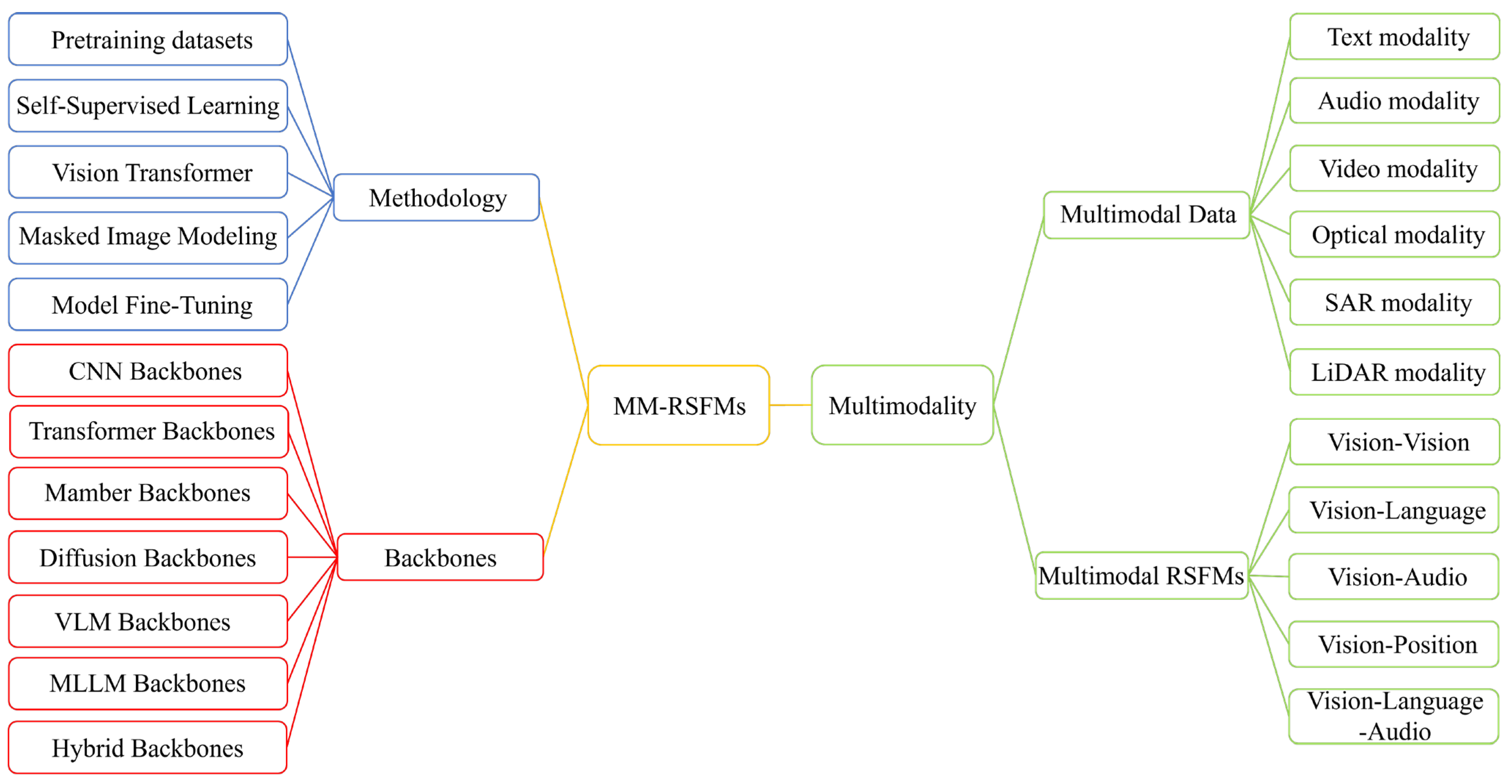

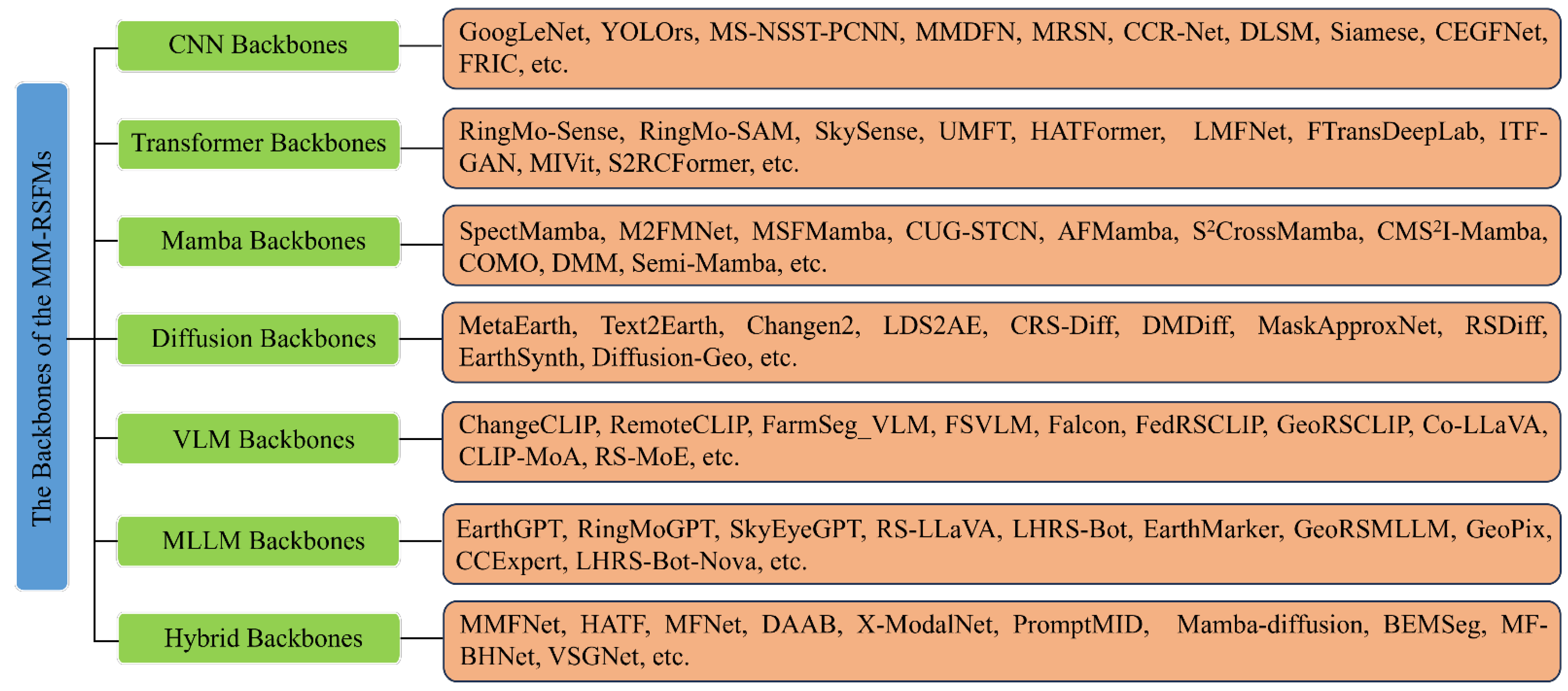

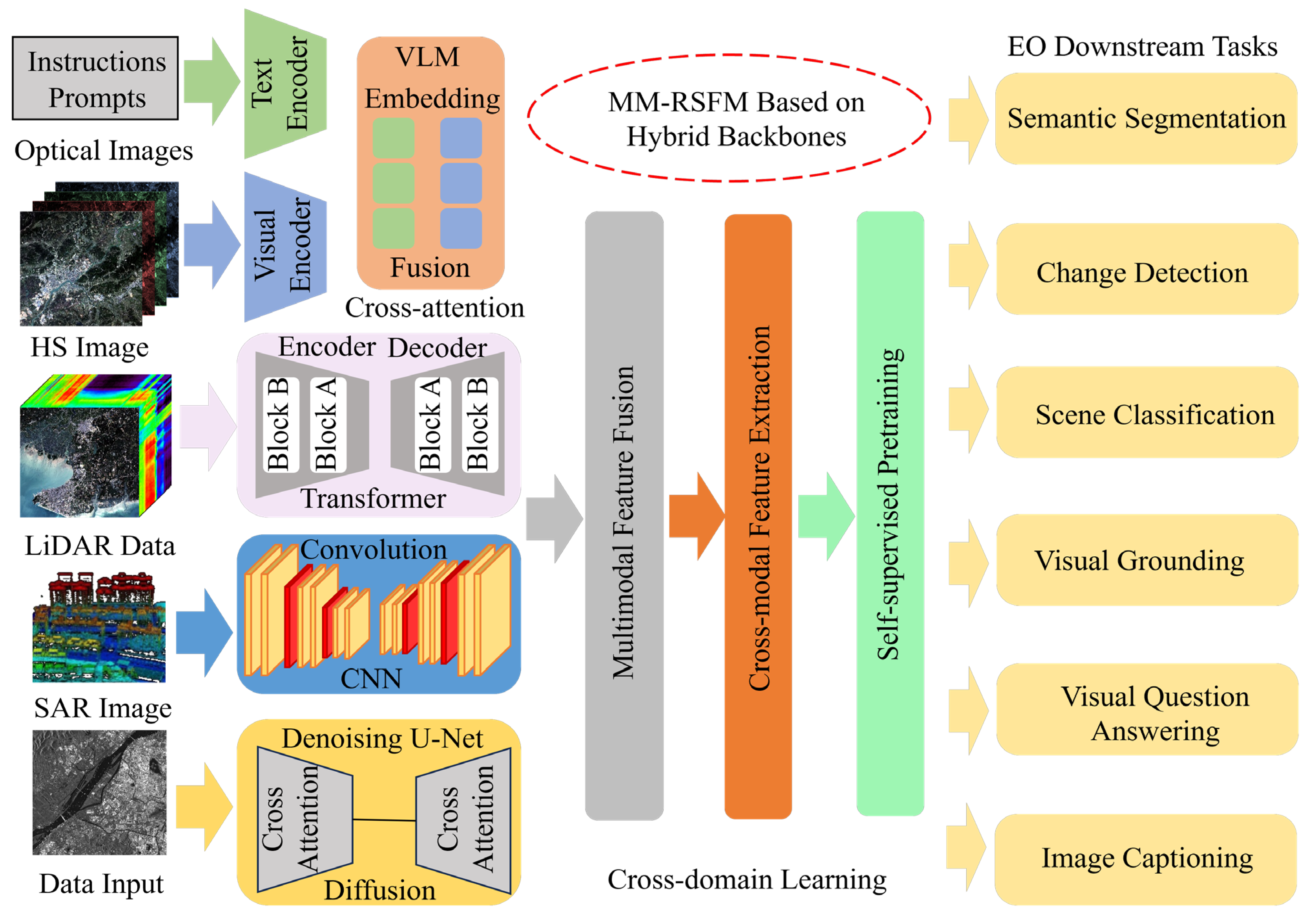

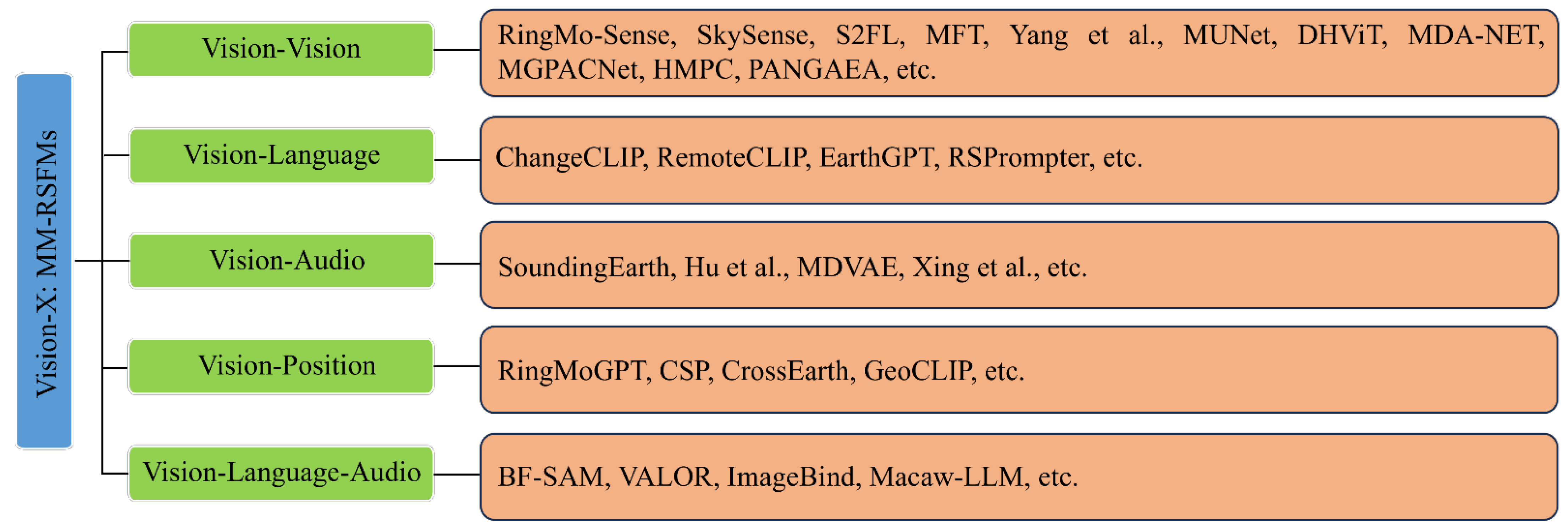

- This review develops an innovative taxonomy for vision–X (including vision, language, audio, and position) multimodal remote sensing foundation models (MM-RSFMs) according to their backbones, encompassing CNN, Transformer, Mamba, Diffusion, vision–language model (VLM), multimodal large language model (MLLM), and hybrid backbones.

- A thorough analysis of the problems and challenges confronting MM-RSFMs reveals a scarcity of high-quality multimodal datasets, limited capability for multimodal feature extraction, weak cross-task generalization, absence of unified evaluation criteria, and insufficient security measures.

- The taxonomy assists readers in developing a systematic understanding of the intrinsic characteristics and interrelationships between cross-modal alignment and multimodal fusion in MM-RSFMs from a technical perspective.

- By analyzing key issues and challenges, targeted improvements can be implemented to improve the generalization, interpretability, and security of MM-RSFMs, thereby advancing their research progress and innovative applications.

Abstract

1. Introduction

- (1)

- Comprehensive Survey: This article provides the first comprehensive survey of vision–X (including vision, language, audio, and position) MM-RSFMs specifically designed for EO downstream tasks. It systematically reviews research progress, technological innovation, model architecture, key issues, and development trends of MM-RSFMs across five dimensions: pre-training data, key technologies, backbones, cross-modal interactions, and problems and prospects.

- (2)

- Innovative Taxonomy: This article develops an innovative taxonomy framework for MM-RSFMs and reviews the development of them according to multimodal backbones, such as CNN backbones, Transformer backbones, Mamba backbones, Diffusion backbones, vision–language model (VLM) backbones, multimodal large language model (MLLM) backbones, and hybrid backbones. This taxonomy aids readers in developing a systematic understanding of the intrinsic characteristics and interrelationships among cross-modal alignment and multimodal fusion in MM-RSFMs.

- (3)

- Thorough Analysis: This work conducts a thorough analysis of the problems and challenges confronting MM-RSFMs and predicts future directions. It analyzes the key issues of MM-RSFMs from five aspects: scarcity of high-quality multimodal datasets, limited capability for multimodal feature extraction, weak cross-task generalization, absence of unified evaluation criteria, and insufficient security measures. These insights are intended to facilitate further research progress and innovative applications in the field.

2. Multimodal RS Pre-Training Data

3. Key Technologies for MM-RSFMs: From Self-Supervised Learning to Model Fine-Tuning

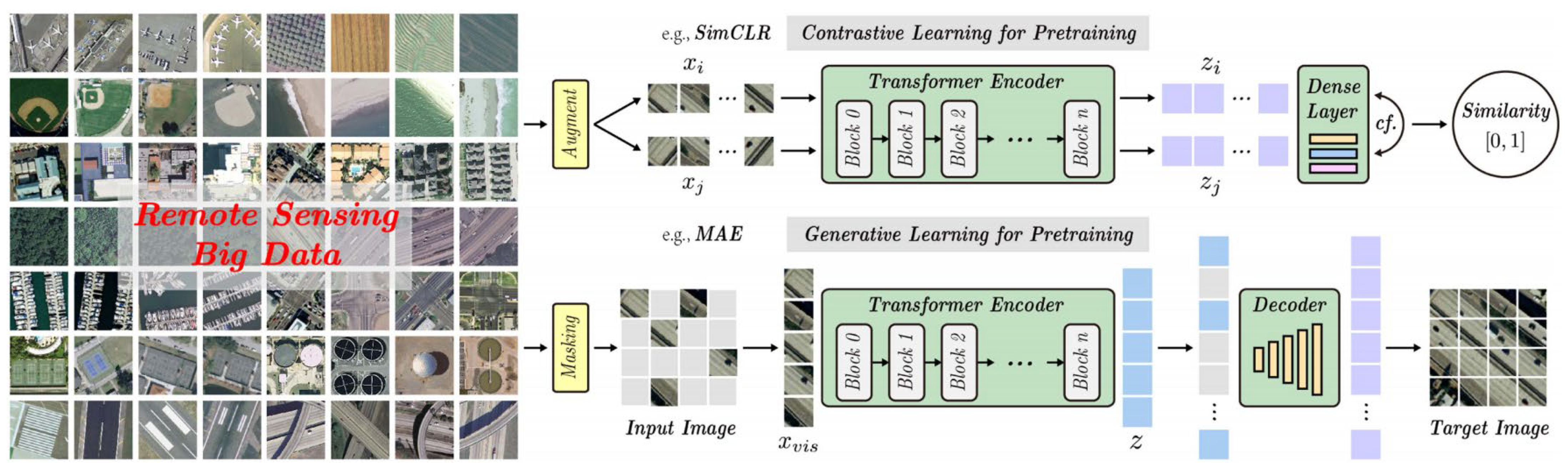

3.1. Self-Supervised Learning

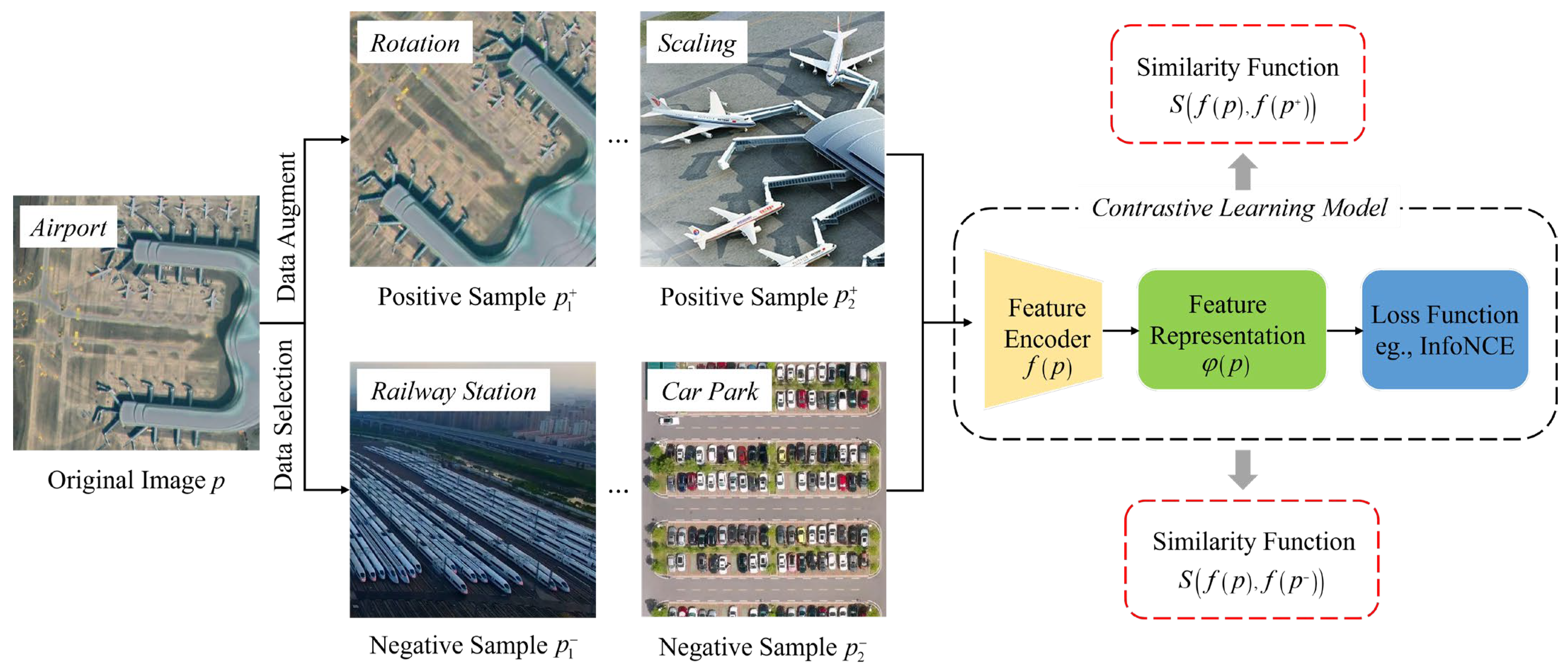

3.1.1. Contrastive Learning

3.1.2. Generative Learning

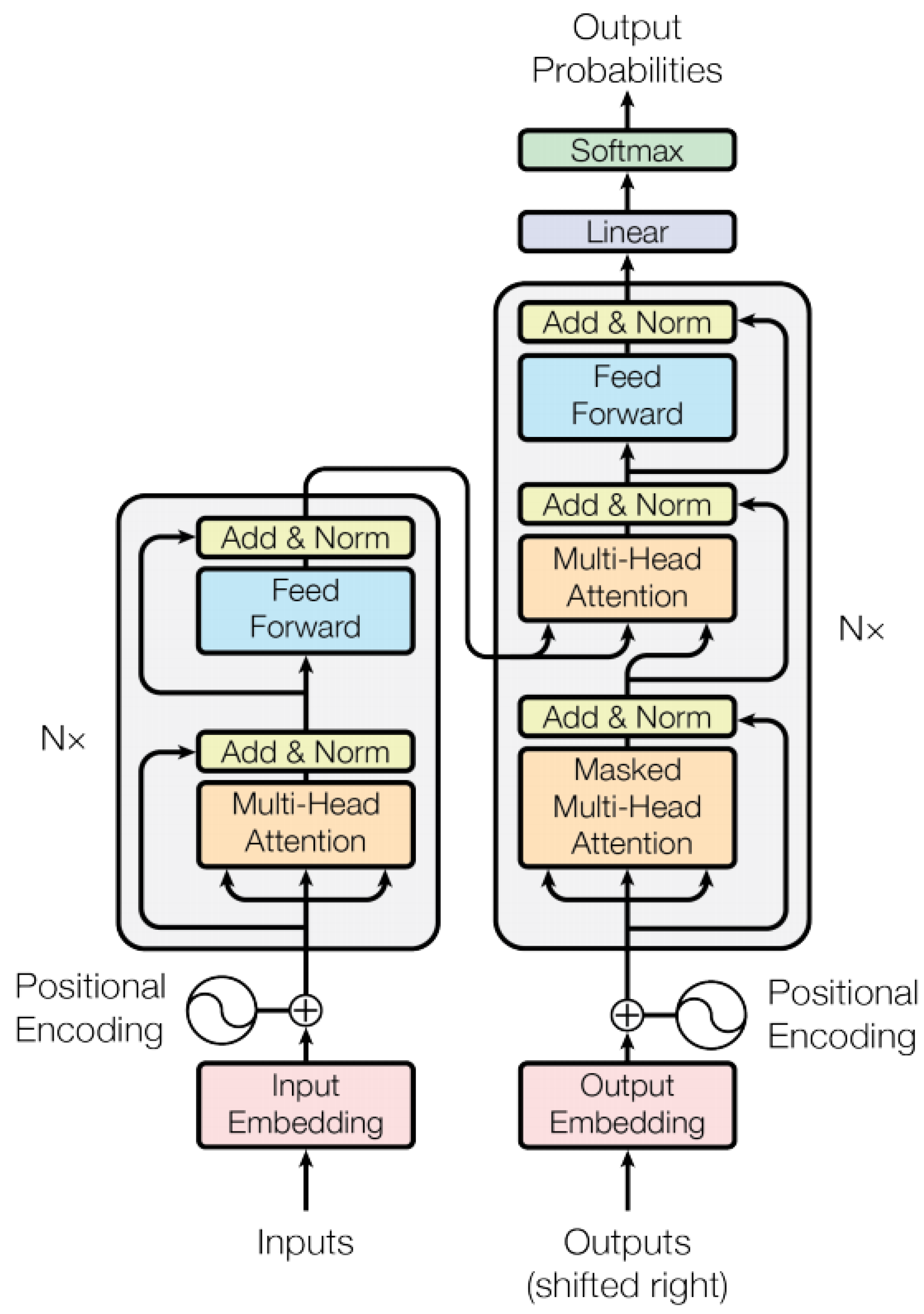

3.2. Vision Transformer

3.3. Masked Image Modeling

3.4. Model Fine-Tuning

4. The Backbones of MM-RSFMs

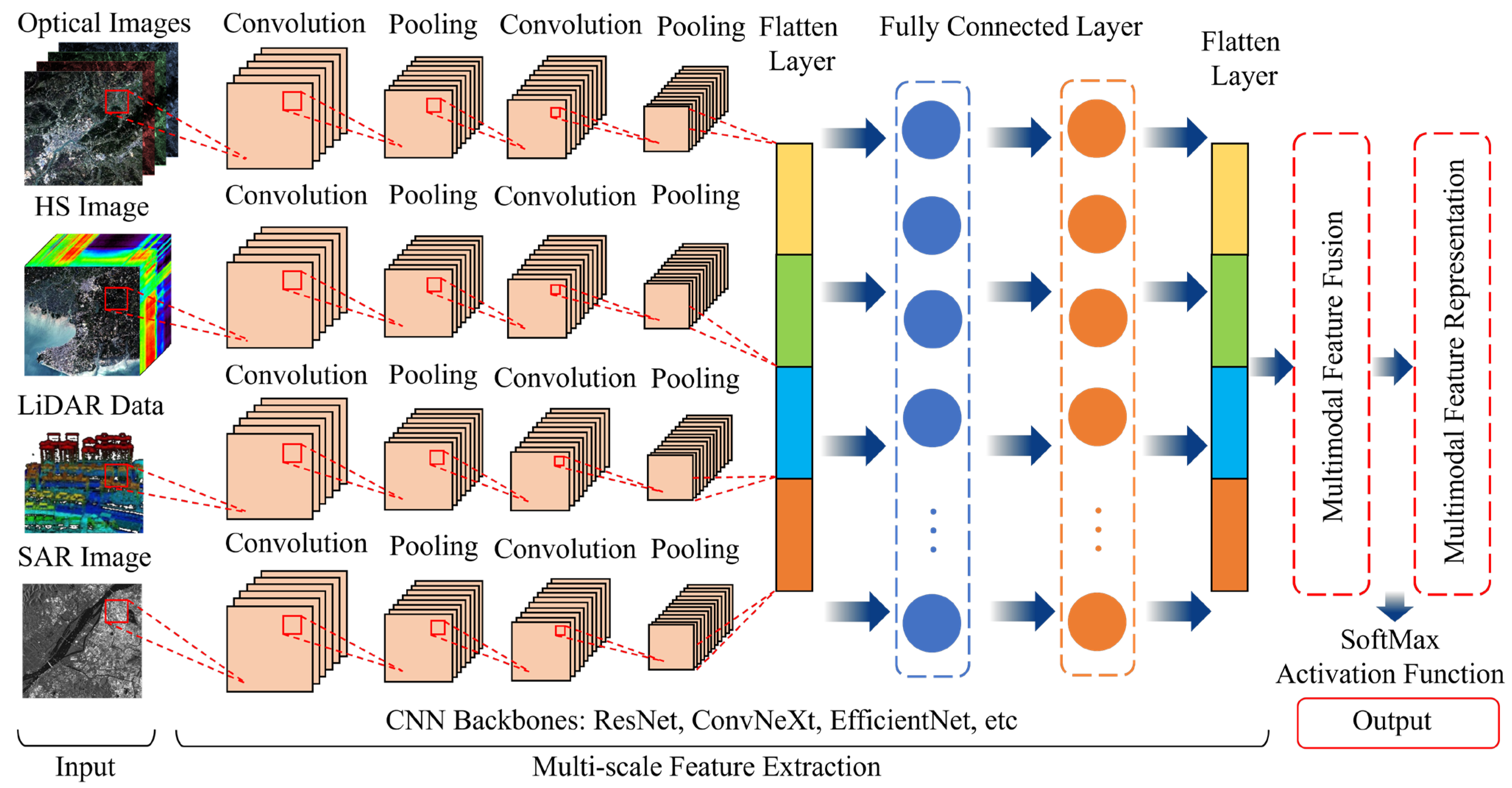

4.1. CNN Backbones

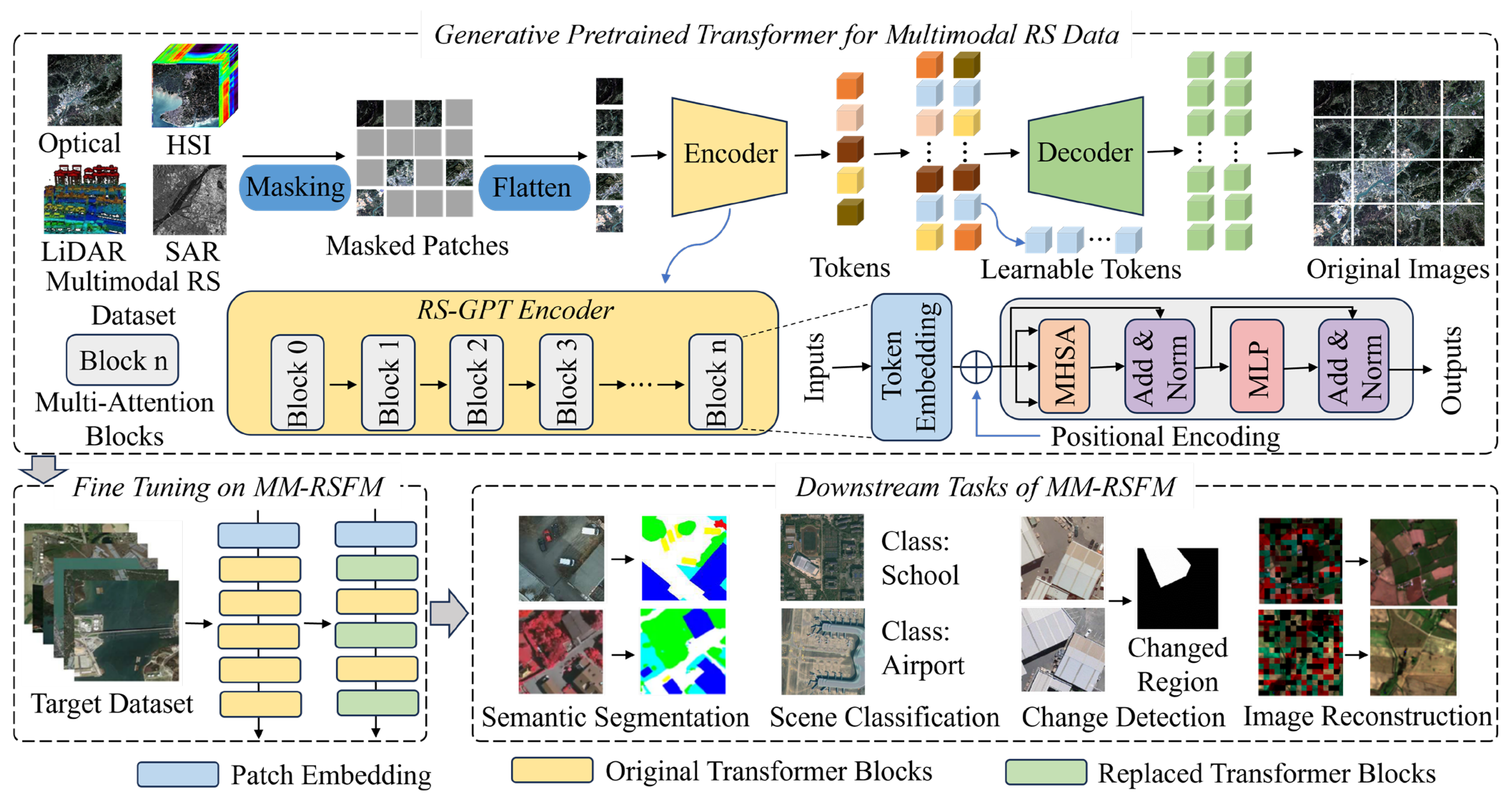

4.2. Transformer Backbones

4.3. Mamba Backbones

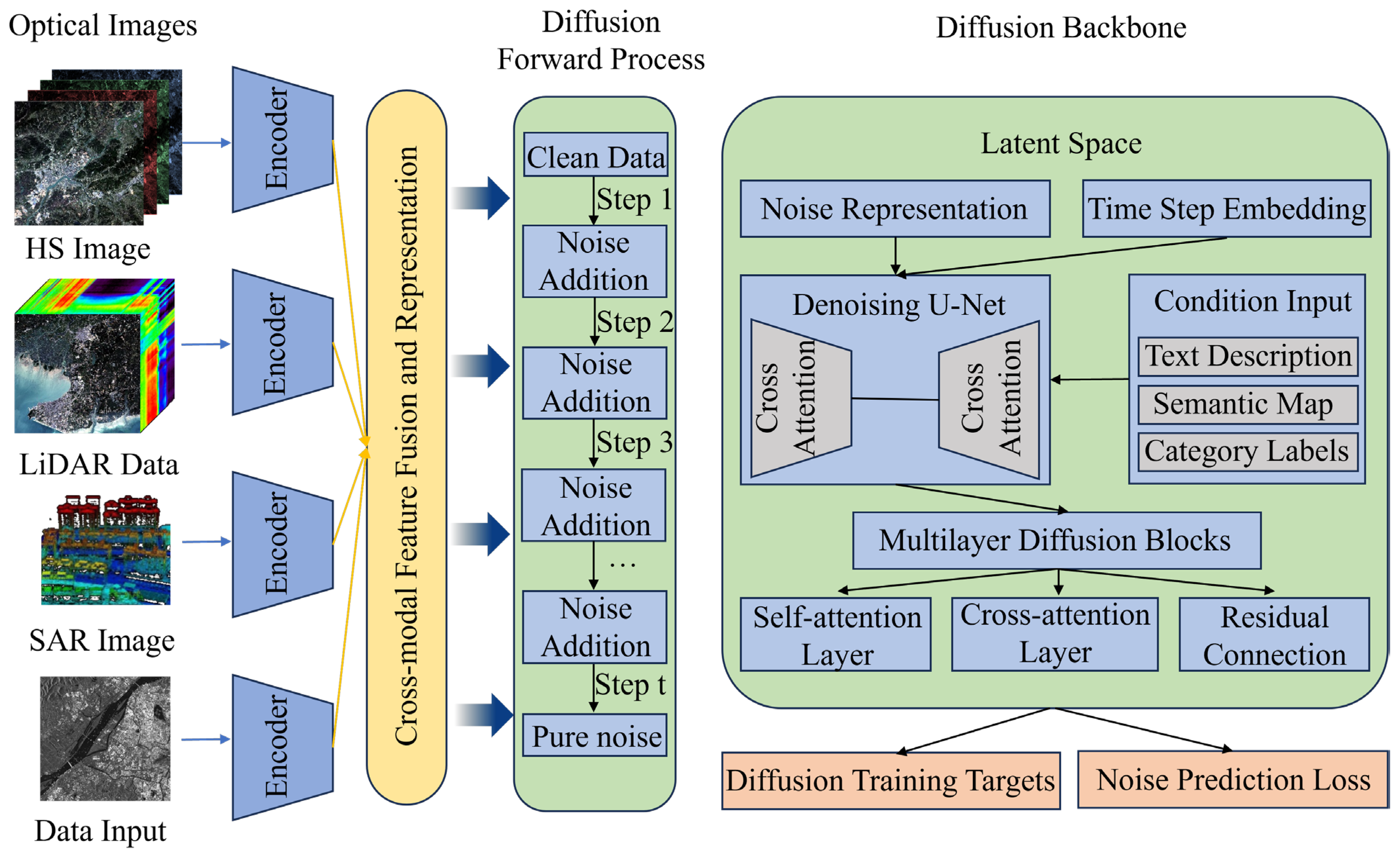

4.4. Diffusion Backbones

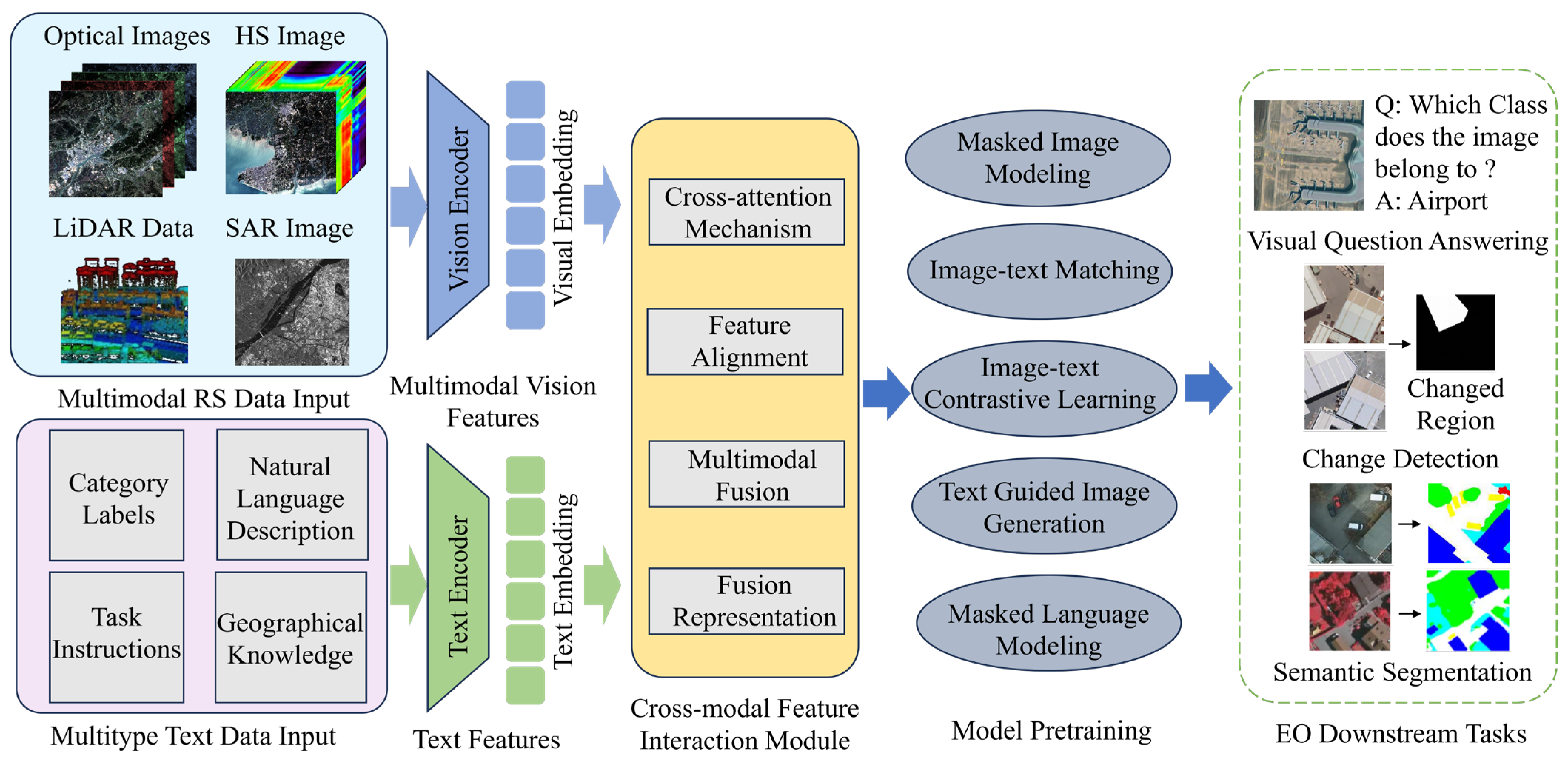

4.5. VLM Backbones

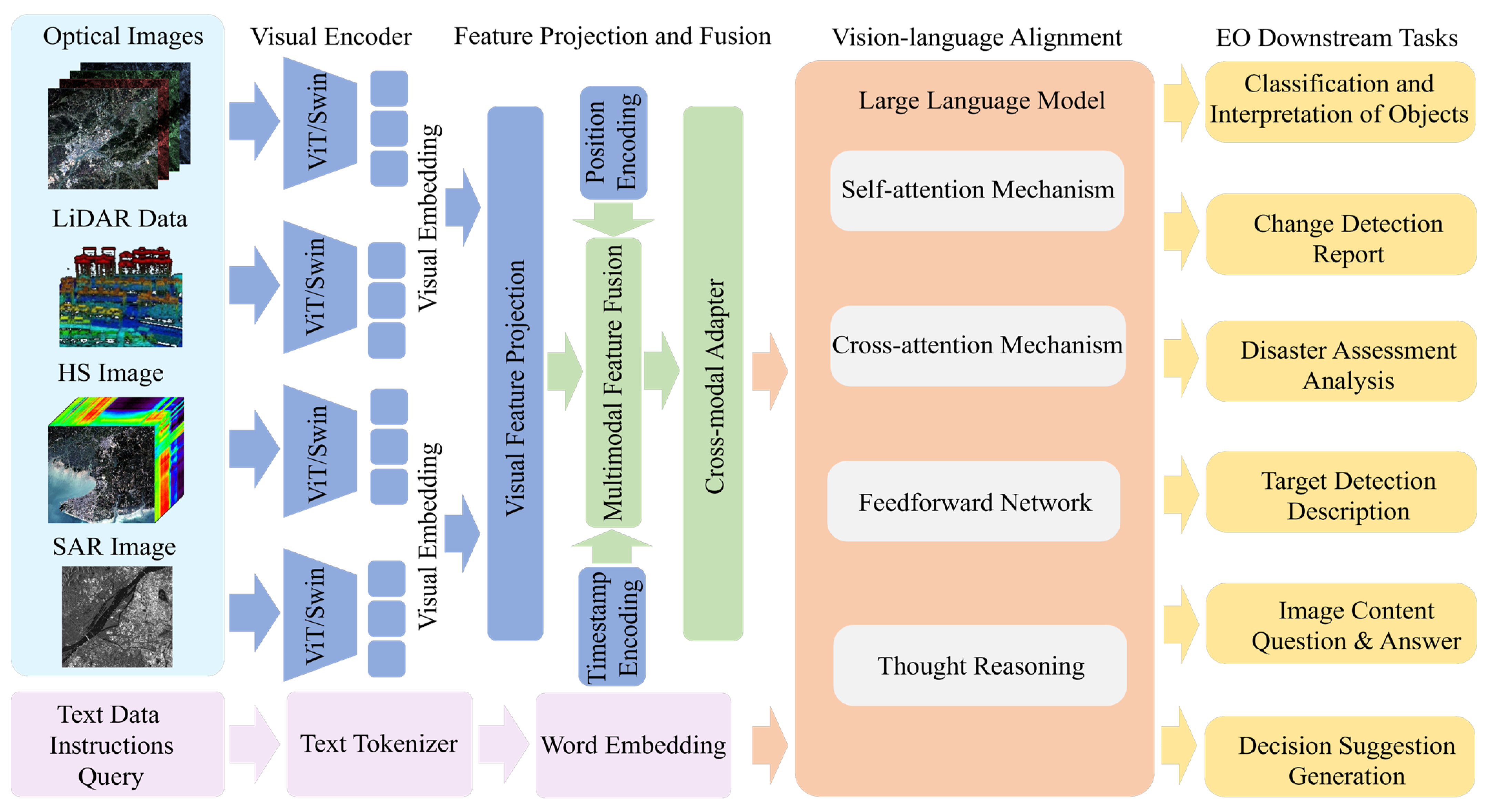

4.6. MLLM Backbones

4.7. Hybrid Backbones

5. Vision–X: MM-RSFMs

5.1. Vision–Vision RSFMs

5.1.1. Optical–SAR RSFMs

5.1.2. Optical–LiDAR RSFMs

5.1.3. Optical–SAR–LiDAR RSFMs

5.2. Vision–Language RSFMs

5.3. Vision–Audio RSFMs

5.4. Vision–Position RSFMs

5.5. Vision–Language–Audio RSFMs

6. Challenges and Perspectives

6.1. A Scarcity of High-Quality Multimodal RS Datasets

6.2. Limited Multimodal Feature Extraction Capability

6.3. Weak Cross-Task Generalization Ability

6.4. The Absence of Unified Evaluation Criteria

6.5. Insufficient Security Measures of Foundation Models

7. Conclusions and Outlook

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Zhou, G.; Wang, X.; Liu, S.; Wang, Y.; Gao, E.; Wu, J.; Lu, Y.; Yu, L.; Wang, W.; Li, K. MSS-Net: A lightweight network incorporating shifted large kernel and multi-path attention for ship detection in remote sensing images. Int. J. Appl. Earth Obs. Geoinf. 2025, 143, 104805. [Google Scholar] [CrossRef]

- Hong, D.; Zhang, B.; Li, H.; Li, Y.; Yao, J.; Li, C.; Werner, M.; Chanussot, J.; Zipf, A.; Zhu, X.X. Cross-city matters: A multimodal remote sensing benchmark dataset for cross-city semantic segmentation using high-resolution domain adaptation networks. Remote Sens. Environ. 2023, 299, 113856. [Google Scholar] [CrossRef]

- Li, X.; Sun, Y.; Peng, X. TA-MSA: A fine-tuning framework for few-shot remote sensing scene classification. Remote Sens. 2025, 17, 1395. [Google Scholar] [CrossRef]

- Zhou, G.; Zhang, Z.; Wang, F.; Zhu, Q.; Wang, Y.; Gao, E.; Cai, Y.; Zhou, X.; Li, C. A multi-scale enhanced feature fusion model for aircraft detection from SAR images. Int. J. Digit. Earth 2025, 18, 2507842. [Google Scholar] [CrossRef]

- Wu, K.; Zhang, Y.; Ru, L.; Dang, B.; Lao, J.; Yu, L.; Luo, J.; Zhu, Z.; Sun, Y.; Zhang, J.; et al. A semantic-enhanced multi-modal remote sensing foundation model for Earth observation. Nat. Mach. Intell. 2025, 7, 1235–1249. [Google Scholar] [CrossRef]

- Yao, D.; Zhi-Li, Z.; Xiao-Feng, Z.; Wei, C.; Fang, H.; Yao-Ming, C.; Cai, W.-W. Deep hybrid: Multi-graph neural network collaboration for hyperspectral image classification. Def. Technol. 2023, 23, 164–176. [Google Scholar] [CrossRef]

- Zhou, G.; Zhi, H.; Gao, E.; Lu, Y.; Chen, J.; Bai, Y.; Zhou, X. DeepU-Net: A parallel dual-branch model for deeply fusing multi-scale features for road extraction from high-resolution remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 9448–9463. [Google Scholar] [CrossRef]

- Wang, J.; Guo, S.; Huang, R.; Li, L.; Zhang, X.; Jiao, L. Dual-channel capsule generation adversarial network for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5501016. [Google Scholar] [CrossRef]

- Touvron, H.; Bojanowski, P.; Caron, M.; Cord, M.; El-Nouby, A.; Grave, E.; Izacard, G.; Joulin, A.; Synnaeve, G.; Verbeek, J.; et al. ResMLP: Feedforward networks for image classification with data-efficient training. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 5314–5321. [Google Scholar] [CrossRef]

- Lu, H.; Wei, Z.; Wang, X.; Zhang, K.; Liu, H. GraphGPT: A graph enhanced generative pretrained transformer for conditioned molecular generation. Int. J. Mol. Sci. 2023, 24, 16761. [Google Scholar] [CrossRef]

- Duan, Z.; Lu, M.; Ma, J.; Huang, Y.; Ma, Z.; Zhu, F. QARV: Quantization-aware ResNet VAE for lossy image compression. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 46, 436–450. [Google Scholar] [CrossRef]

- Sun, X.; Wang, P.; Lu, W.; Zhu, Z.; Lu, X.; He, Q.; Li, J.; Rong, X.; Yang, Z.; Chang, H.; et al. RingMo: A remote sensing foundation model with masked image modeling. IEEE Trans. Geosci. Remote Sens. 2022, 61, 5608420. [Google Scholar] [CrossRef]

- Yao, F.; Lu, W.; Yang, H.; Xu, L.; Liu, C.; Hu, L.; Yu, H.; Liu, N.; Deng, C.; Tang, D.; et al. RingMo-Sense: Remote sensing foundation model for spatiotemporal prediction via spatiotemporal evolution disentangling. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5620821. [Google Scholar] [CrossRef]

- Yan, Z.; Li, J.; Li, X.; Zhou, R.; Zhang, W.; Feng, Y.; Diao, W.; Fu, K.; Sun, X. RingMo-SAM: A foundation model for segment anything in multimodal remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5625716. [Google Scholar] [CrossRef]

- Guo, X.; Lao, J.; Dang, B.; Zhang, Y.; Yu, L.; Ru, L.; Zhong, L.; Huang, Z.; Wu, K.; Hu, D.; et al. SkySense: A multi-modal remote sensing foundation model towards universal interpretation for earth observation imagery. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2024, Seattle, WA, USA, 16–22 June 2024; pp. 27672–27683. [Google Scholar]

- Dong, S.; Wang, L.; Du, B.; Meng, X. ChangeCLIP: Remote sensing change detection with multimodal vision-language representation learning. ISPRS J. Photogramm. Remote Sens. 2024, 208, 53–69. [Google Scholar] [CrossRef]

- Liu, F.; Chen, D.; Guan, Z.; Zhou, X.; Zhu, J.; Ye, Q.; Fu, L.; Zhou, J. RemoteCLIP: A vision language foundation model for remote sensing. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5622216. [Google Scholar] [CrossRef]

- Yu, Z.; Liu, C.; Liu, L.; Shi, Z.; Zou, Z. MetaEarth: A generative foundation model for global-scale remote sensing image generation. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 47, 1764–1781. [Google Scholar] [CrossRef]

- Hong, D.; Zhang, B.; Li, X.; Li, Y.; Li, C.; Yao, J.; Yokoya, N.; Li, H.; Ghamisi, P.; Jia, X.; et al. Spectral remote sensing foundation model. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 5227–5244. [Google Scholar] [CrossRef]

- Wang, D.; Zhang, Q.; Xu, Y.; Zhang, J.; Du, B.; Tao, D.; Zhang, L. Advancing plain vision transformer toward remote sensing foundation model. IEEE Trans. Geosci. Remote Sens. 2022, 61, 5607315. [Google Scholar] [CrossRef]

- Cha, K.; Seo, J.; Lee, T. A billion-scale foundation model for remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 1–17. [Google Scholar] [CrossRef]

- Hong, D.; Hu, J.; Yao, J.; Chanussot, J.; Zhu, X.X. Multimodal remote sensing benchmark datasets for land cover classification with a shared and specific feature learning model. ISPRS J. Photogramm. Remote Sens. 2021, 178, 68–80. [Google Scholar] [CrossRef] [PubMed]

- Zhou, G.; Qi, H.; Shi, S.; Sifu, B.; Tang, X.; Gong, W. Spatial-spectral feature fusion and spectral reconstruction of multispectral LiDAR point clouds by attention mechanism. Remote Sens. 2025, 17, 2411. [Google Scholar] [CrossRef]

- Sun, X.; Tian, Y.; Lu, W.; Wang, P.; Niu, R.; Yu, H.; Fu, K. From single- to multi-modal remote sensing imagery interpretation: A survey and taxonomy. Sci. China Inf. Sci. 2023, 66, 140301. [Google Scholar] [CrossRef]

- Li, J.; Hong, D.; Gao, L.; Yao, J.; Zheng, K.; Zhang, B.; Chanussot, J. Deep learning in multimodal remote sensing data fusion: A comprehensive review. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102926. [Google Scholar] [CrossRef]

- Li, Y.; Zhou, Y.; Zhang, Y.; Zhong, L.; Wang, J.; Chen, J. DKDFN: Domain knowledge-guided deep collaborative fusion network for multimodal unitemporal remote sensing land cover classification. ISPRS J. Photogramm. Remote Sens. 2022, 186, 170–189. [Google Scholar] [CrossRef]

- Zhang, Y.; Yin, M.; Wang, H.; Hua, C. Cross-level multi-modal features learning with transformer for RGB-D object recognition. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 7121–7130. [Google Scholar] [CrossRef]

- Cai, S.; Wakaki, R.; Nobuhara, S.; Nishino, K. RGB road scene material segmentation. Image Vis. Comput. 2024, 145, 104970. [Google Scholar] [CrossRef]

- Hu, Z.; Xiao, J.; Li, L.; Liu, C.; Ji, G. Human-centric multimodal fusion network for robust action recognition. Expert Syst. Appl. 2023, 239, 122314. [Google Scholar] [CrossRef]

- Li, G.; Lin, Y.; Ouyang, D.; Li, S.; Luo, X.; Qu, X.; Pi, D.; Li, S.E. A RGB-thermal image segmentation method based on parameter sharing and attention fusion for safe autonomous driving. IEEE Trans. Intell. Transp. Syst. 2023, 25, 5122–5137. [Google Scholar] [CrossRef]

- Qin, J.; Li, M.; Zhao, J.; Li, D.; Zhang, H.; Zhong, J. Advancing sun glint correction in high-resolution marine UAV RGB imagery for coral reef monitoring. ISPRS J. Photogramm. Remote Sens. 2024, 207, 298–311. [Google Scholar] [CrossRef]

- Mei, S.; Song, C.; Ma, M.; Xu, F. Hyperspectral image classification using group-aware hierarchical transformer. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5539014. [Google Scholar] [CrossRef]

- Gong, Z.; Zhou, X.; Yao, W.; Zheng, X.; Zhong, P. HyperDID: Hyperspectral intrinsic image decomposition with deep feature embedding. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5506714. [Google Scholar] [CrossRef]

- Zhang, Y.; Zhang, M.; Li, W.; Wang, S.; Tao, R. Language-aware domain generalization network for cross-scene hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5501312. [Google Scholar] [CrossRef]

- Zhou, M.; Huang, J.; Hong, D.; Zhao, F.; Li, C.; Chanussot, J. Rethinking pan-sharpening in closed-loop regularization. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 14544–14558. [Google Scholar] [CrossRef] [PubMed]

- Zhou, G.; Yang, F.; Xiao, J. Study on pixel entanglement theory for imagery classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5409518. [Google Scholar] [CrossRef]

- Zheng, H.; Li, D.; Zhang, M.; Gong, M.; Qin, A.K.; Liu, T.; Jiang, F. Spectral knowledge transfer for remote sensing change detection. IEEE Trans. Geosci. Remote Sens. 2023, 62, 4501316. [Google Scholar] [CrossRef]

- Zheng, J.; Yang, S.; Wang, X.; Xiao, Y.; Li, T. Background noise filtering and clustering with 3d lidar deployed in roadside of urban environments. IEEE Sens. J. 2021, 21, 20629–20639. [Google Scholar] [CrossRef]

- Farmonov, N.; Esmaeili, M.; Abbasi-Moghadam, D.; Sharifi, A.; Amankulova, K.; Mucsi, L. HypsLiDNet: 3-D–2-D CNN model and spatial–spectral morphological attention for crop classification with DESIS and LIDAR data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 11969–11996. [Google Scholar] [CrossRef]

- Ma, C.; Shi, X.; Wang, Y.; Song, S.; Pan, Z.; Hu, J. MosViT: Towards vision transformers for moving object segmentation based on Lidar point cloud. Meas. Sci. Technol. 2024, 35, 116302. [Google Scholar] [CrossRef]

- Zhao, S.; Luo, Y.; Zhang, T.; Guo, W.; Zhang, Z. A domain specific knowledge extraction transformer method for multisource satellite-borne SAR images ship detection. ISPRS J. Photogramm. Remote Sens. 2023, 198, 16–29. [Google Scholar] [CrossRef]

- Yasir, M.; Liu, S.; Mingming, X.; Wan, J.; Pirasteh, S.; Dang, K.B. ShipGeoNet: SAR image-based geometric feature extraction of ships using convolutional neural networks. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5202613. [Google Scholar] [CrossRef]

- Wang, L.; Yang, X.; Tan, H.; Bai, X.; Zhou, F. Few-shot class-incremental sar target recognition based on hierarchical embedding and incremental evolutionary network. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5204111. [Google Scholar] [CrossRef]

- Roy, S.K.; Deria, A.; Hong, D.; Rasti, B.; Plaza, A.; Chanussot, J. Multimodal fusion transformer for remote sensing image classification. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5515620. [Google Scholar] [CrossRef]

- Sun, Y.; Lei, L.; Guan, D.; Kuang, G.; Li, Z.; Liu, L. Locality preservation for unsupervised multimodal change detection in remote sensing imagery. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 6955–6969. [Google Scholar] [CrossRef] [PubMed]

- Huang, Z.; Yan, H.; Zhan, Q.; Yang, S.; Zhang, M.; Zhang, C.; Lei, Y.; Liu, Z.; Liu, Q.; Wang, Y. A Survey on remote sensing foundation models: From vision to multimodality. arXiv 2025, arXiv:2503.22081. [Google Scholar] [CrossRef]

- Zhang, Y.; Li, Y.; Dang, B.; Wu, K.; Guo, X.; Wang, J.; Chen, J.; Yang, M. Multi-modal remote sensing large foundation models: Current research status and future prospect. Acta Geod. Cartogr. Sin. 2024, 53, 1942–1954. [Google Scholar] [CrossRef]

- Fu, K.; Lu, W.; Liu, X.; Deng, C.; Yu, H.; Sun, X. A comprehensive survey and assumption of remote sensing foundation modal. J. Remote Sens. 2023, 28, 1667–1680. [Google Scholar] [CrossRef]

- Zhang, H.; Xu, J.-J.; Cui, H.-W.; Li, L.; Yang, Y.; Tang, C.-S.; Boers, N. When Geoscience meets foundation models: Toward a general geoscience artificial intelligence system. IEEE Geosci. Remote Sens. Mag. 2024, 2–41. [Google Scholar] [CrossRef]

- Yan, Q.; Gu, H.; Yang, Y.; Li, H.; Shen, H.; Liu, S. Research progress and trend of intelligent remote sensing large model. Acta Geod. Cartogr. Sin. 2024, 53, 1967–1980. [Google Scholar] [CrossRef]

- Li, X.; Wen, C.; Hu, Y.; Yuan, Z.; Zhu, X.X. Vision-language models in remote sensing: Current progress and future trends. IEEE Geosci. Remote Sens. Mag. 2024, 12, 32–66. [Google Scholar] [CrossRef]

- Bao, M.; Lyu, S.; Xu, Z.; Zhou, H.; Ren, J.; Xiang, S.; Li, X.; Cheng, G. Vision Mamba in remote sensing: A comprehensive survey of techniques, applications and outlook. arXiv 2025, arXiv:2505.00630. [Google Scholar] [CrossRef]

- Yang, X.; Li, S.; Chen, Z.; Chanussot, J.; Jia, X.; Zhang, B.; Li, B.; Chen, P. An attention-fused network for semantic segmentation of very-high-resolution remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2021, 177, 238–262. [Google Scholar] [CrossRef]

- Hang, R.; Li, Z.; Ghamisi, P.; Hong, D.; Xia, G.; Liu, Q. Classification of hyperspectral and lidar data using coupled CNNs. IEEE Trans. Geosci. Sens. 2020, 58, 4939–4950. [Google Scholar] [CrossRef]

- Zamir, S.; Arora, A.; Gupta, A.; Khan, S.; Sun, G.; Khan, F.S.; Zhu, F.; Shao, L.; Xia, G.-S.; Bai, X. iSAID: A large-scale dataset for instance segmentation in aerial images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2019, Long Beach, CA, USA, 15–20 June 2019; pp. 28–37. [Google Scholar]

- Hu, Y.; Yuan, J.; Wen, C.; Lu, X.; Liu, Y.; Li, X. RSGPT: A remote sensing vision language model and benchmark. ISPRS J. Photogramm. Remote Sens. 2025, 224, 272–286. [Google Scholar] [CrossRef]

- Wang, Z.; Prabha, R.; Huang, T.; Wu, J.; Rajagopal, R. SkyScript: A large and semantically diverse vision-language dataset for remote sensing. Proc. AAAI Conf. Artif. Intell. 2024, 38, 5805–5813. [Google Scholar] [CrossRef]

- Chen, H.; Shi, Z. A spatial-temporal attention-based method and a new dataset for remote sensing image change detection. Remote Sens. 2020, 12, 1662. [Google Scholar] [CrossRef]

- Zhang, W.; Cai, M.; Zhang, T.; Zhuang, Y.; Mao, X. EarthGPT: A universal multimodal large language model for multisensor image comprehension in remote sensing domain. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5917820. [Google Scholar] [CrossRef]

- Heidler, K.; Mou, L.; Hu, D.; Jin, P.; Li, G.; Gan, C.; Wen, J.-R.; Zhu, X.X. Self-supervised audiovisual representation learning for remote sensing data. Int. J. Appl. Earth Obs. Geoinf. 2022, 116, 103130. [Google Scholar] [CrossRef]

- Hu, D.; Li, X.; Mou, L.; Jin, P.; Chen, D.; Jing, L.; Zhu, X.; Dou, D. Cross-task transfer for geotagged audiovisual aerial scene recognition. arXiv 2020, arXiv:2005.08449. [Google Scholar] [CrossRef]

- Wang, Y.; Sun, P.; Zhou, D.; Li, G.; Zhang, H.; Hu, D. Ref-Avs: Refer and segment objects in audio-visual scenes. In Proceedings of the IEEE European Conference on Computer Vision (ECCV) 2024, Milan, Italy, 29 September–4 October 2024. [Google Scholar]

- Wang, P.; Hu, H.; Tong, B.; Zhang, Z.; Yao, F.; Feng, Y.; Zhu, Z.; Chang, H.; Diao, W.; Ye, Q.; et al. RingMoGPT: A unified remote sensing foundation model for vision, language, and grounded tasks. IEEE Trans. Geosci. Remote Sens. 2024, 63, 5611320. [Google Scholar] [CrossRef]

- Horn, G.; Aodha, O.; Song, Y.; Cui, Y.; Sun, C.; Shepard, A. The iNaturalist species classification and detection dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2018, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8769–8778. [Google Scholar]

- Christie, G.; Fendley, N.; Wilson, J.; Mukherjee, R. Functional Map of the World. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 6172–6180. [Google Scholar]

- Larson, M.; Soleymani, M.; Gravier, G.; Ionescu, B.; Jones, G.J. The benchmarking initiative for multimedia evaluation: MediaEval 2016. IEEE Multimed. 2017, 24, 93–96. [Google Scholar] [CrossRef]

- Li, Y.; Wang, L.; Wang, T.; Yang, X.; Luo, J.; Wang, Q.; Deng, Y.; Wang, W.; Sun, X.; Li, H.; et al. STAR: A first-ever dataset and a large-scale benchmark for scene graph generation in large-size satellite imagery. arXiv 2024, arXiv:2406.09410. [Google Scholar] [CrossRef] [PubMed]

- Chen, H.; Yokoya, N.; Chini, M. Fourier domain structural relationship analysis for unsupervised multimodal change detection. ISPRS J. Photogramm. Remote Sens. 2023, 198, 99–114. [Google Scholar] [CrossRef]

- Zhu, B.; Yang, C.; Dai, J.; Fan, J.; Qin, Y.; Ye, Y. R2FD2: Fast and Robust matching of multimodal remote sensing images via repeatable feature detector and rotation-invariant feature descriptor. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5606115. [Google Scholar] [CrossRef]

- Li, J.; Zheng, K.; Li, Z.; Gao, L.; Jia, X. X-shaped interactive autoencoders with cross-modality mutual learning for unsupervised hyperspectral image super-resolution. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5518317. [Google Scholar] [CrossRef]

- Wang, X.; Qi, G.-J. Contrastive learning with stronger augmentations. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 5549–5560. [Google Scholar] [CrossRef] [PubMed]

- Yang, Y.; Miao, R.; Wang, Y.; Wang, X. Contrastive graph convolutional networks with adaptive augmentation for text classification. Inf. Process. Manag. 2022, 59, 102946. [Google Scholar] [CrossRef]

- Ding, L.; Liu, L.; Huang, Y.; Li, C.; Zhang, C.; Wang, W.; Wang, L. Text-to-image vehicle re-identification: Multi-scale multi-view cross-modal alignment network and a unified benchmark. IEEE Trans. Intell. Transp. Syst. 2024, 25, 7673–7686. [Google Scholar] [CrossRef]

- Ma, H.; Lin, X.; Yu, Y. I2F: A unified image-to-feature approach for domain adaptive semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 46, 1695–1710. [Google Scholar] [CrossRef]

- Li, S.; Liu, Z.; Zang, Z.; Wu, D.; Chen, Z.; Li, S.Z. GenURL: A general framework for unsupervised representation learning. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 286–298. [Google Scholar] [CrossRef]

- Han, D.; Cheng, X.; Guo, N.; Ye, X.; Rainer, B.; Priller, P. Momentum cross-modal contrastive learning for video moment retrieval. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 5977–5994. [Google Scholar] [CrossRef]

- Zhang, W.; Li, Z.; Li, G.; Zhuang, P.; Hou, G.; Zhang, Q.; Li, C. GACNet: Generate adversarial-driven cross-aware network for hyperspectral wheat variety identification. IEEE Trans. Geosci. Remote Sens. 2023, 62, 5503314. [Google Scholar] [CrossRef]

- Ma, X.; Liu, C.; Xie, C.; Ye, L.; Deng, Y.; Ji, X. Disjoint masking with joint distillation for efficient masked image modeling. IEEE Trans. Multimed. 2023, 26, 3077–3087. [Google Scholar] [CrossRef]

- Mantripragada, K.; Qureshi, F.Z. Hyperspectral pixel unmixing with latent dirichlet variational autoencoder. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5507112. [Google Scholar] [CrossRef]

- De Santis, E.; Martino, A.; Rizzi, A. Human versus machine intelligence: Assessing natural language generation models through complex systems theory. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 4812–4829. [Google Scholar] [CrossRef]

- Si, L.; Dong, H.; Qiang, W.; Song, Z.; Du, B.; Yu, J.; Sun, F. A Trusted generative-discriminative joint feature learning framework for remote sensing image classification. IEEE Trans. Geosci. Remote Sens. 2023, 62, 5601814. [Google Scholar] [CrossRef]

- Huang, Y.; Zheng, H.; Li, Y.; Zheng, F.; Zhen, X.; Qi, G.; Shao, L.; Zheng, Y. Multi-constraint transferable generative adversarial networks for cross-modal brain image synthesis. Int. J. Comput. Vis. 2024, 132, 4937–4953. [Google Scholar] [CrossRef]

- Xu, P.; Zhu, X.; Clifton, D.A. Multimodal learning with transformers: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 12113–12132. [Google Scholar] [CrossRef]

- Jiang, M.; Su, Y.; Gao, L.; Plaza, A.; Zhao, X.-L.; Sun, X.; Liu, G. GraphGST: Graph generative structure-aware transformer for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5504016. [Google Scholar] [CrossRef]

- Zhang, Q.; Xu, Y.; Zhang, J.; Tao, D. ViTAEv2: Vision transformer advanced by exploring inductive bias for image recognition and beyond. Int. J. Comput. Vis. 2023, 131, 1141–1162. [Google Scholar] [CrossRef]

- Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in vision: A survey. ACM Comput. Surv. 2022, 54, 200. [Google Scholar] [CrossRef]

- Li, K.; Wang, Y.; Zhang, J.; Gao, P.; Song, G.; Liu, Y.; Li, H.; Qiao, Y. UniFormer: Unifying convolution and self-attention for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 12581–12600. [Google Scholar] [CrossRef] [PubMed]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010. [Google Scholar] [CrossRef]

- Xiao, Y.; Yuan, Q.; Jiang, K.; He, J.; Lin, C.-W.; Zhang, L. TTST: A top-k token selective transformer for remote sensing image super-resolution. IEEE Trans. Image Process. 2024, 33, 738–752. [Google Scholar] [CrossRef] [PubMed]

- Tu, L.; Li, J.; Huang, X.; Gong, J.; Xie, X.; Wang, L. S2HM2: A spectral–spatial hierarchical masked modeling framework for self-supervised feature learning and classification of large-scale hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5517019. [Google Scholar] [CrossRef]

- Huang, Z.; Jin, X.; Lu, C.; Hou, Q.; Cheng, M.-M.; Fu, D.; Shen, X.; Feng, J. Contrastive masked autoencoders are stronger vision learners. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 2506–2517. [Google Scholar] [CrossRef]

- Qian, Y.; Wang, Y.; Zou, J.; Lin, J.; Pan, Y.; Yao, T.; Sun, Q.; Mei, T. Kernel masked image modeling through the lens of theoretical understanding. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 13512–13526. [Google Scholar] [CrossRef]

- Hou, Z.; Sun, F.; Chen, Y.; Xie, Y.; Kung, S.-Y. MILAN: Masked image pretraining on language assisted representation. arXiv 2022, arXiv:2208.06049. [Google Scholar] [CrossRef]

- Zhou, K.; Yang, J.; Loy, C.C.; Liu, Z. Learning to prompt for vision-language models. Int. J. Comput. Vis. 2022, 130, 2337–2348. [Google Scholar] [CrossRef]

- He, Q.; Sun, X.; Yan, Z.; Wang, B.; Zhu, Z.; Diao, W.; Yang, M.Y. AST: Adaptive self-supervised transformer for optical remote sensing representation. ISPRS J. Photogramm. Remote Sens. 2023, 200, 41–54. [Google Scholar] [CrossRef]

- Chen, X.; Liu, C.; Hu, P.; Lin, J.; Gong, Y.; Chen, Y.; Peng, D.; Geng, X. Adaptive masked autoencoder transformer for image classification. Appl. Soft Comput. 2024, 164, 111958. [Google Scholar] [CrossRef]

- Ding, N.; Qin, Y.; Yang, G.; Wei, F.; Yang, Z.; Su, Y.; Hu, S.; Chen, Y.; Chan, C.-M.; Chen, W.; et al. Parameter-efficient fine-tuning of large-scale pre-trained language models. Nat. Mach. Intell. 2023, 5, 220–235. [Google Scholar] [CrossRef]

- Radenovic, F.; Tolias, G.; Chum, O. Fine-tuning CNN image retrieval with no human annotation. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 1655–1668. [Google Scholar] [CrossRef]

- Dong, Z.; Gu, Y.; Liu, T. UPetu: A unified parameter-efficient fine-tuning framework for remote sensing foundation model. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5616613. [Google Scholar] [CrossRef]

- Song, B.; Yang, H.; Wu, Y.; Zhang, P.; Wang, B.; Han, G. A multispectral remote sensing crop segmentation method based on segment anything model using multistage adaptation fine-tuning. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4408818. [Google Scholar] [CrossRef]

- Wang, H.; Li, J.; Wu, H.; Hovy, E.; Sun, Y. Pretrained language models and their applications. Engineering 2023, 25, 51–65. [Google Scholar] [CrossRef]

- Zhu, J.; Li, Y.; Yang, K.; Guan, N.; Fan, Z.; Qiu, C.; Yi, X. MVP: Meta visual prompt tuning for few-shot remote sensing image scene classification. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5610413. [Google Scholar] [CrossRef]

- Guo, M.-H.; Zhang, Y.; Mu, T.-J.; Huang, S.X.; Hu, S.-M. Tuning vision-language models with multiple prototypes clustering. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 11186–11199. [Google Scholar] [CrossRef] [PubMed]

- Zhou, G.; Qian, L.; Gamba, P. A novel iterative self-organizing pixel matrix entanglement classifier for remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5407121. [Google Scholar] [CrossRef]

- Thirunavukarasu, A.J.; Ting, D.S.J.; Elangovan, K.; Gutierrez, L.; Tan, T.F.; Ting, D.S.W. Large language models in medicine. Nat. Med. 2023, 29, 1930–1940. [Google Scholar] [CrossRef] [PubMed]

- Zhou, G.; Liu, W.; Zhu, Q.; Lu, Y.; Liu, Y. ECA-MobileNetV3(Large)+SegNet model for binary sugarcane classification of remotely sensed images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4414915. [Google Scholar] [CrossRef]

- Zhang, C.; Pan, X.; Li, H.; Gardiner, A.; Sargent, I.; Hare, J.; Atkinson, P.M. A hybrid MLP-CNN classifier for very fine resolution remotely sensed image classification. ISPRS J. Photogramm. Remote Sens. 2018, 140, 133–144. [Google Scholar] [CrossRef]

- An, X.; He, W.; Zou, J.; Yang, G.; Zhang, H. Pretrain a remote sensing foundation model by promoting intra-instance similarity. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5643015. [Google Scholar] [CrossRef]

- Baltrusaitis, T.; Ahuja, C.; Morency, L.-P. Multimodal machine learning: A survey and taxonomy. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 423–443. [Google Scholar] [CrossRef] [PubMed]

- Rajpurkar, P.; Lungren, M.P. The Current and future state of ai interpretation of medical images. N. Engl. J. Med. 2023, 388, 1981–1990. [Google Scholar] [CrossRef] [PubMed]

- Jin, L.; Li, Z.; Tang, J. Deep semantic multimodal hashing network for scalable image-text and video-text retrievals. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 1838–1851. [Google Scholar] [CrossRef] [PubMed]

- Lin, R.; Hu, H. Adapt and explore: Multimodal mixup for representation learning. Inf. Fusion 2023, 105, 102216. [Google Scholar] [CrossRef]

- Li, D.; Xie, W.; Li, Y.; Fang, L. FedFusion: Manifold-driven federated learning for multi-satellite and multi-modality fusion. IEEE Trans. Geosci. Remote Sens. 2023, 62, 5500813. [Google Scholar] [CrossRef]

- Khan, M.; Gueaieb, W.; El Saddik, A.; Kwon, S. MSER: Multimodal speech emotion recognition using cross-attention with deep fusion. Expert Syst. Appl. 2024, 245, 122946. [Google Scholar] [CrossRef]

- Wu, Y.; Liu, J.; Gong, M.; Gong, P.; Fan, X.; Qin, A.K.; Miao, Q.; Ma, W. Self-supervised intra-modal and cross-modal contrastive learning for point cloud understanding. IEEE Trans. Multimed. 2023, 26, 1626–1638. [Google Scholar] [CrossRef]

- Krishna, R.; Wang, J.; Ahern, W.; Sturmfels, P.; Venkatesh, P.; Kalvet, I.; Lee, G.R.; Morey-Burrows, F.S.; Anishchenko, I.; Humphreys, I.R.; et al. Generalized biomolecular modeling and design with RoseTTAFold All-Atom. Science 2024, 384, eadl2528. [Google Scholar] [CrossRef]

- He, X.; Chen, Y.; Huang, L.; Hong, D.; Du, Q. Foundation model-based multimodal remote sensing data classification. IEEE Trans. Geosci. Remote Sens. 2023, 62, 5502117. [Google Scholar] [CrossRef]

- Min, B.; Ross, H.; Sulem, E.; Ben Veyseh, A.P.; Nguyen, T.H.; Sainz, O.; Agirre, E.; Heintz, I.; Roth, D. Recent advances in natural language processing via large pre-trained language models: A survey. ACM Comput. Surv. 2023, 56, 30. [Google Scholar] [CrossRef]

- Cui, H.; Wang, C.; Maan, H.; Pang, K.; Luo, F.; Duan, N.; Wang, B. scGPT: Toward building a foundation model for single-cell multi-omics using generative AI. Nat. Methods 2024, 21, 1470–1480. [Google Scholar] [CrossRef]

- Saka, A.; Taiwo, R.; Saka, N.; Salami, B.A.; Ajayi, S.; Akande, K.; Kazemi, H. GPT models in construction industry: Opportunities, limitations, and a use case validation. Dev. Built Environ. 2024, 17, 100300. [Google Scholar] [CrossRef]

- Zhao, B.; Jin, W.; Del Ser, J.; Yang, G. ChatAgri: Exploring potentials of ChatGPT on cross-linguistic agricultural text classification. Neurocomputing 2023, 557, 126708. [Google Scholar] [CrossRef]

- Bi, K.; Xie, L.; Zhang, H.; Chen, X.; Gu, X.; Tian, Q. Accurate medium-range global weather forecasting with 3D neural networks. Nature 2023, 619, 533–538. [Google Scholar] [CrossRef]

- Zhang, Y.; Wei, C.; He, Z.; Yu, W. GeoGPT: An assistant for understanding and processing geospatial tasks. Int. J. Appl. Earth Obs. Geoinf. 2024, 131, 103976. [Google Scholar] [CrossRef]

- Huang, A.H.; Wang, H.; Yang, Y. FinBERT: A large language model for extracting information from financial text. Contemp. Account. Res. 2023, 40, 806–841. [Google Scholar] [CrossRef]

- Omiye, J.; Gui, H.; Rezaei, S.; Zou, J.; Daneshjou, R. Large language models in medicine: The potentials and pitfalls: A narrative review. Ann. Intern. Med. 2024, 177, 210–220. [Google Scholar] [CrossRef] [PubMed]

- Mann, S.; Earp, B.; Moller, N.; Vynn, S.; Savulescu, J. AUTOGEN: A personalized large language model for academic enhancement-ethics and proof of principle. Am. J. Bioeth. 2023, 23, 28–41. [Google Scholar] [CrossRef] [PubMed]

- Gong, S.; Luo, X. DGGCCM: A hybrid neural model for legal event detection. Artif. Intell. Law 2024, 1–41. [Google Scholar] [CrossRef]

- Zhang, Y.; Fu, K.; Sun, H.; Sun, X.; Zheng, X.; Wang, H. A multi-model ensemble method based on convolutional neural networks for aircraft detection in large remote sensing images. Remote Sens. Lett. 2018, 9, 11–20. [Google Scholar] [CrossRef]

- Sharma, M.; Dhanaraj, M.; Karnam, S.; Chachlakis, D.G.; Ptucha, R.; Markopoulos, P.P. YOLOrs: Object detection in multimodal remote sensing imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 1497–1508. [Google Scholar] [CrossRef]

- Li, W.; Wu, J.; Liu, Q.; Zhang, Y.; Cui, B.; Jia, Y. An effective multimodel fusion method for SAR and optical remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 5881–5892. [Google Scholar] [CrossRef]

- Ma, W.; Guo, Q.; Wu, Y.; Zhao, W.; Zhang, X.; Jiao, L. A novel multi-model decision fusion network for object detection in remote sensing images. Remote Sens. 2019, 11, 737. [Google Scholar] [CrossRef]

- Zhao, H.; Chen, C.; Xia, C. Multimodal remote sensing network. In Proceedings of the 2023 13th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Athens, Greece, 31 October–2 November 2023; pp. 1–4. [Google Scholar]

- Wu, X.; Hong, D.; Chanussot, J. Convolutional neural networks for multimodal remote sensing data classification. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5517010. [Google Scholar] [CrossRef]

- Uss, M.; Vozel, B.; Lukin, V.; Chehdi, K. Efficient Discrimination and localization of multimodal remote sensing images using CNN-based prediction of localization uncertainty. Remote Sens. 2020, 12, 703. [Google Scholar] [CrossRef]

- Zhang, H.; Ni, W.; Yan, W.; Xiang, D.; Wu, J.; Yang, X.; Bian, H. Registration of multimodal remote sensing image based on deep fully convolutional neural network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 3028–3042. [Google Scholar] [CrossRef]

- Zhou, W.; Jin, J.; Lei, J.; Hwang, J.-N. CEGFNet: Common extraction and gate fusion network for scene parsing of remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5405110. [Google Scholar] [CrossRef]

- Zhou, H.; Xia, L.; Du, X.; Li, S. FRIC: A framework for few-shot remote sensing image captioning. Int. J. Digit. Earth 2024, 17, 2337240. [Google Scholar] [CrossRef]

- Azeem, A.; Li, Z.; Siddique, A.; Zhang, Y.; Zhou, S. Unified multimodal fusion transformer for few shot object detection for remote sensing images. Inf. Fusion 2024, 111, 102508. [Google Scholar] [CrossRef]

- Liu, B.; Huang, Z.; Li, Y.; Gao, R.; Chen, H.-X.; Xiang, T.-Z. HATFormer: Height-aware transformer for multimodal 3D change detection. ISPRS J. Photogramm. Remote Sens. 2025, 228, 340–355. [Google Scholar] [CrossRef]

- Wang, T.; Chen, G.; Zhang, X.; Liu, C.; Wang, J.; Tan, X.; Zhou, W.; He, C. LMFNet: Lightweight multimodal fusion network for high-resolution remote sensing image segmentation. Pattern Recognit. 2025, 164, 111579. [Google Scholar] [CrossRef]

- Feng, H.; Hu, Q.; Zhao, P.; Wang, S.; Ai, M.; Zheng, D.; Liu, T. FTransDeepLab: Multimodal fusion Transformer-based deeplabv3+ for remote sensing semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4406618. [Google Scholar] [CrossRef]

- Zhu, C.; Zhang, T.; Wu, Q.; Li, Y.; Zhong, Q. An implicit Transformer-based fusion method for hyperspectral and multispectral remote sensing image. Int. J. Appl. Earth Obs. Geoinf. 2024, 131, 103955. [Google Scholar] [CrossRef]

- Zhang, J.; Lei, J.; Xie, W.; Yang, G.; Li, D.; Li, Y. Multimodal informative ViT: Information aggregation and distribution for hyperspectral and LiDAR classification. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 7643–7656. [Google Scholar] [CrossRef]

- Xu, Y.; Cao, L.; Li, J.; Li, W.; Li, Y.; Zong, Y.; Wang, A.; Rao, Y.; Deng, S. S2RCFormer: Spatial-spectral residual cross-attention Transformer for multimodal remote sensing data classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 16176–16193. [Google Scholar] [CrossRef]

- Dong, Z.; Cheng, D.; Li, J. SpectMamba: Remote sensing change detection network integrating frequency and visual state space model. Expert Syst. Appl. 2025, 287, 127902. [Google Scholar] [CrossRef]

- Pan, H.; Zhao, R.; Ge, H.; Liu, M.; Zhang, Q. Multimodal fusion Mamba network for joint land cover classification using hyperspectral and Lidar data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 17328–17345. [Google Scholar] [CrossRef]

- Gao, F.; Jin, X.; Zhou, X.; Dong, J.; Du, Q. MSFMamba: Multiscale feature fusion State Space Model for multisource remote sensing image classification. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5504116. [Google Scholar] [CrossRef]

- Wang, H.; Chen, W.; Li, X.; Liang, Q.; Qin, X.; Li, J. CUG-STCN: A seabed topography classification framework based on knowledge graph-guided vision mamba network. Int. J. Appl. Earth Obs. Geoinf. 2025, 136, 104383. [Google Scholar] [CrossRef]

- Weng, Q.; Chen, G.; Pan, Z.; Lin, J.; Zheng, X. AFMamba: Adaptive fusion network for hyperspectral and LiDAR data collaborative classification base on mamba. J. Remote Sens. 2025, 135, 1–15. [Google Scholar] [CrossRef]

- Zhang, G.; Zhang, Z.; Deng, J.; Bian, L.; Yang, C. S2CrossMamba: Spatial–spectral cross-Mamba for multimodal remote sensing image classification. IEEE Geosci. Remote Sens. Lett. 2024, 21, 5510705. [Google Scholar] [CrossRef]

- Ma, M.; Zhao, J.; Ma, W.; Jiao, L.; Li, L.; Liu, X.; Liu, F.; Yang, S. A Mamba-aware spatial–spectral cross-modal network for remote sensing classification. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4402515. [Google Scholar] [CrossRef]

- Liu, C.; Ma, X.; Yang, X.; Zhang, Y.; Dong, Y. COMO: Cross-mamba interaction and offset-guided fusion for multimodal object detection. Inf. Fusion 2025, 125, 103414. [Google Scholar] [CrossRef]

- Zhou, M.; Li, T.; Qiao, C.; Xie, D.; Wang, G.; Ruan, N.; Mei, L.; Yang, Y.; Shen, H.T. DMM: Disparity-guided multispectral Mamba for oriented object detection in remote sensing. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5404913. [Google Scholar] [CrossRef]

- Li, Y.; Li, D.; Xie, W.; Ma, J.; He, S.; Fang, L. Semi-mamba: Mamba-driven semi-supervised multimodal remote sensing feature classification. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 9837–9849. [Google Scholar] [CrossRef]

- Liu, C.; Chen, K.; Zhao, R.; Zou, Z.; Shi, Z. Text2Earth: Unlocking text-driven remote sensing image generation with a global-scale dataset and a foundation model. IEEE Geosci. Remote Sens. Mag. 2025, 13, 238–259. [Google Scholar] [CrossRef]

- Zheng, Z.; Ermon, S.; Kim, D.; Zhang, L.; Zhong, Y. Changen2: Multi-temporal remote sensing generative change foundation model. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 47, 725–741. [Google Scholar] [CrossRef]

- Qu, J.; Yang, Y.; Dong, W.; Yang, Y. LDS2AE: Local diffusion shared-specific autoencoder for multimodal remote sensing image classification with arbitrary missing modalities. In Proceedings of the 38th AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38. [Google Scholar] [CrossRef]

- Tang, D.; Cao, X.; Hou, X.; Jiang, Z.; Liu, J.; Meng, D. CRS-Diff: Controllable remote sensing image generation with Diffusion model. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5638714. [Google Scholar] [CrossRef]

- Zhang, W.; Mei, J.; Wang, Y. DMDiff: A dual-branch multimodal conditional guided Diffusion model for cloud removal through sar-optical data fusion. Remote Sens. 2025, 17, 965. [Google Scholar] [CrossRef]

- Sun, D.; Yao, J.; Xue, W.; Zhou, C.; Ghamisi, P.; Cao, X. Mask approximation net: A novel Diffusion model approach for remote sensing change captioning. IEEE Trans. Geosci. Remote Sens. 2025, 1. [Google Scholar] [CrossRef]

- Sebaq, A.; ElHelw, M. RSDiff: Remote sensing image generation from text using Diffusion model. Neural Comput. Appl. 2024, 36, 23103–23111. [Google Scholar] [CrossRef]

- Pan, J.; Lei, S.; Fu, Y.; Li, J.; Liu, Y.; Sun, Y.; He, X.; Peng, L.; Huang, X.; Zhao, B. EarthSynth: Generating informative earth observation with Diffusion models. arXiv 2025, arXiv:2505.12108. [Google Scholar] [CrossRef]

- Cai, M.; Zhang, W.; Zhang, T.; Zhuang, Y.; Chen, H.; Chen, L.; Li, C. Diffusion-Geo: A two-stage controllable text-to-image generative model for remote sensing scenarios. In Proceedings of the IGARSS 2024—2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 7–12 July 2024; pp. 7003–7006. [Google Scholar]

- Wu, H.; Mu, W.; Zhong, D.; Du, Z.; Li, H.; Tao, C. FarmSeg_VLM: A farmland remote sensing image segmentation method considering vision-language alignment. ISPRS J. Photogramm. Remote Sens. 2025, 225, 423–439. [Google Scholar] [CrossRef]

- Wu, H.; Du, Z.; Zhong, D.; Wang, Y.; Tao, C. FSVLM: A vision-language model for remote sensing farmland segmentation. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4402813. [Google Scholar] [CrossRef]

- Yao, K.; Xu, N.; Yang, R.; Xu, Y.; Gao, Z.; Kitrungrotsakul, T.; Ren, Y.; Zhang, P.; Wang, J.; Wei, N.; et al. Falcon: A remote sensing vision-language foundation model. arXiv 2025, arXiv:2503.11070. [Google Scholar] [CrossRef]

- Lin, H.; Zhang, C.; Hong, D.; Dong, K.; Wen, C. FedRSCLIP: Federated learning for remote sensing scene classification using vision-language models. IEEE Geosci. Remote Sens. Mag. 2025, 13, 260–275. [Google Scholar] [CrossRef]

- Zhang, Z.; Zhao, T.; Guo, Y.; Yin, J. RS5M and GeoRSCLIP: A large-scale vision- language dataset and a large vision-language model for remote sensing. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5642123. [Google Scholar] [CrossRef]

- Liu, F.; Dai, W.; Zhang, C.; Zhu, J.; Yao, L.; Li, X. Co-LLaVA: Efficient remote sensing visual question answering via model collaboration. Remote Sens. 2025, 17, 466. [Google Scholar] [CrossRef]

- Fu, Z.; Yan, H.; Ding, K. CLIP-MoA: Visual-language models with mixture of adapters for multitask remote sensing image classification. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4703817. [Google Scholar] [CrossRef]

- Lin, H.; Hong, D.; Ge, S.; Luo, C.; Jiang, K.; Jin, H.; Wen, C. RS-MoE: A vision–language model with mixture of experts for remote sensing image captioning and visual question answering. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5614918. [Google Scholar] [CrossRef]

- Zhan, Y.; Xiong, Z.; Yuan, Y. SkyEyeGPT: Unifying remote sensing vision-language tasks via instruction tuning with large language model. ISPRS J. Photogramm. Remote Sens. 2025, 221, 64–77. [Google Scholar] [CrossRef]

- Bazi, Y.; Bashmal, L.; Al Rahhal, M.M.; Ricci, R.; Melgani, F. RS-LLaVA: A large vision-language model for joint captioning and question answering in remote sensing imagery. Remote Sens. 2024, 16, 1477. [Google Scholar] [CrossRef]

- Muhtar, D.; Li, Z.; Gu, F.; Zhang, X.; Xiao, P. LHRS-Bot: Empowering remote sensing with VGI-enhanced large multimodal language model. Comput. Vis. ECCV 2024, 15132, 440–457. [Google Scholar] [CrossRef]

- Zhang, W.; Cai, M.; Zhang, T.; Zhuang, Y.; Li, J.; Mao, X. EarthMarker: A visual prompting multimodal large language model for remote sensing. IEEE Trans. Geosci. Remote Sens. 2024, 63, 5604219. [Google Scholar] [CrossRef]

- Zhang, Z.; Shen, H.; Zhao, T.; Chen, B.; Guan, Z.; Wang, Y.; Jia, X.; Cai, Y.; Shang, Y.; Yin, J. GeoRSMLLM: A multimodal large language model for vision-language tasks in geoscience and remote sensing. arXiv 2025, arXiv:2503.12490. [Google Scholar]

- Ou, R.; Hu, Y.; Zhang, F.; Chen, J.; Liu, Y. GeoPix: A multimodal large language model for pixel-level image understanding in remote sensing. IEEE Geosci. Remote Sens. Mag. 2025, 13, 324–337. [Google Scholar] [CrossRef]

- Wang, Z.; Wang, M.; Xu, S.; Li, Y.; Zhang, B. CCExpert: Advancing MLLM capability in remote sensing change captioning with difference-aware integration and a foundational dataset. arXiv 2024, arXiv:2411.11360. [Google Scholar] [CrossRef]

- Li, Z.; Muhtar, D.; Gu, F.; He, Y.; Zhang, X.; Xiao, P.; He, G.; Zhu, X. LHRS-Bot-Nova: Improved multimodal large language model for remote sensing vision-language interpretation. ISPRS J. Photogramm. Remote Sens. 2025, 227, 539–550. [Google Scholar] [CrossRef]

- Liu, P. A multimodal fusion framework for semantic segmentation of remote sensing based on multilevel feature fusion learning. Neurocomputing 2025, 653, 131233. [Google Scholar] [CrossRef]

- Liu, X.; Wang, Z.; Gao, H.; Li, X.; Wang, L.; Miao, Q. HATF: Multi-modal feature learning for infrared and visible image fusion via hybrid attention Transformer. Remote Sens. 2024, 16, 803. [Google Scholar] [CrossRef]

- Ma, X.; Zhang, X.; Pun, M.-O.; Huang, B. A unified framework with multimodal fine-tuning for remote sensing semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5405015. [Google Scholar] [CrossRef]

- Wang, J.; Su, N.; Zhao, C.; Yan, Y.; Feng, S. Multi-modal object detection method based on dual-branch asymmetric attention backbone and feature fusion pyramid network. Remote Sens. 2024, 16, 3904. [Google Scholar] [CrossRef]

- Hong, D.; Yokoya, N.; Xia, G.-S.; Chanussot, J.; Zhu, X.X. X-ModalNet: A semi-supervised deep cross-modal network for classification of remote sensing data. ISPRS J. Photogramm. Remote Sens. 2020, 167, 12–23. [Google Scholar] [CrossRef]

- Nie, H.; Luo, B.; Liu, J.; Fu, Z.; Zhou, H.; Zhang, S.; Liu, W. PromptMID: Modal invariant descriptors based on Diffusion and vision foundation models for optical-SAR image matching. arXiv 2025, arXiv:2502.18104. [Google Scholar] [CrossRef]

- Du, W.-L.; Gu, Y.; Zhao, J.; Zhu, H.; Yao, R.; Zhou, Y. A Mamba-Diffusion framework for multimodal remote sensing image semantic segmentation. IEEE Geosci. Remote Sens. Lett. 2024, 21, 6016905. [Google Scholar] [CrossRef]

- Liu, Q.; Wang, X. Bidirectional feature fusion and enhanced alignment based multimodal semantic segmentation for remote sensing images. Remote Sens. 2024, 16, 2289. [Google Scholar] [CrossRef]

- Wang, S.; Cai, B.; Hou, D.; Ding, Q.; Wang, J.; Shao, Z. MF-BHNet: A hybrid multimodal fusion network for building height estimation using sentinel-1 and sentinel-2 imagery. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4512419. [Google Scholar] [CrossRef]

- Pan, C.; Fan, X.; Tjahjadi, T.; Guan, H.; Fu, L.; Ye, Q.; Wang, R. Vision foundation model guided multimodal fusion network for remote sensing semantic segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 9409–9431. [Google Scholar] [CrossRef]

- Liu, F.; Zhang, T.; Dai, W.; Zhang, C.; Cai, W.; Zhou, X.; Chen, D. Few-shot adaptation of multi-modal foundation models: A survey. Artif. Intell. Rev. 2024, 57, 268. [Google Scholar] [CrossRef]

- Zhang, M.; Yang, B.; Hu, X.; Gong, J.; Zhang, Z. Foundation model for generalist remote sensing intelligence: Potentials and prospects. Sci. Bull. 2024, 69, 3652–3656. [Google Scholar] [CrossRef] [PubMed]

- Yang, B.; Wang, X.; Xing, Y.; Cheng, C.; Jiang, W.; Feng, Q. Modality fusion vision transformer for hyperspectral and LiDAR data collaborative classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 17052–17065. [Google Scholar] [CrossRef]

- Han, Z.; Hong, D.; Gao, L.; Yao, J.; Zhang, B.; Chanussot, J. Multimodal hyperspectral unmixing: Insights from attention networks. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5524913. [Google Scholar] [CrossRef]

- Xue, Z.; Tan, X.; Yu, X.; Liu, B.; Yu, A.; Zhang, P. Deep hierarchical vision transformer for hyperspectral and LIDAR data classification. IEEE Trans. Image Process. 2022, 31, 3095–3110. [Google Scholar] [CrossRef]

- Zhang, M.; Zhao, X.; Li, W.; Zhang, Y.; Tao, R.; Du, Q. Cross-scene joint classification of multisource data with multilevel domain adaption network. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 11514–11526. [Google Scholar] [CrossRef]

- Song, X.; Jiao, L.; Li, L.; Liu, F.; Liu, X.; Yang, S.; Hou, B. MGPACNet: A multiscale geometric prior aware cross-modal network for images fusion classification. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4412815. [Google Scholar] [CrossRef]

- Wu, Q.; Li, Z.; Zhu, S.; Xu, P.P.; Yan, T.T.; Wang, J. Nonlinear intensity measurement for multi-source images based on structural similarity. Measurement 2021, 179, 109474. [Google Scholar] [CrossRef]

- Marsocci, V.; Jia, Y.; Bellier, G.; Kerekes, D.; Zeng, L.; Hafner, S.; Gerard, S.; Brune, E.; Yadav, R.; Shibli, A.; et al. PANGAEA: A global and inclusive benchmark for geospatial foundation models. arXiv 2024, arXiv:2412.04204. [Google Scholar] [CrossRef]

- Chen, K.; Liu, C.; Chen, H.; Zhang, H.; Li, W.; Zou, Z.; Shi, Z. RSPrompter: Learning to prompt for remote sensing instance segmentation based on visual foundation model. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4701117. [Google Scholar] [CrossRef]

- Hu, D.; Wei, Y.; Qian, R.; Lin, W.; Song, R.; Wen, J.-R. Class-aware sounding objects Localization via audiovisual correspondence. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 9844–9859. [Google Scholar] [CrossRef] [PubMed]

- Sadok, S.; Leglaive, S.; Girin, L.; Alameda-Pineda, X.; Séguier, R. A multimodal dynamical variational autoencoder for audiovisual speech representation learning. Neural Netw. 2024, 172, 106120. [Google Scholar] [CrossRef] [PubMed]

- Xing, Y.; He, Y.; Tian, Z.; Wang, X.; Chen, Q. Seeing and hearing: Open-domain visual-audio generation with diffusion latent aligners. arXiv 2024, arXiv:2402.17723. [Google Scholar]

- Mai, G.; Lao, N.; He, Y.; Song, J.; Ermon, S. CSP: Self-supervised contrastive spatial pre-training for geospatial-visual representations. arXiv 2023, arXiv:2305.01118. [Google Scholar]

- Gong, Z.; Wei, Z.; Wang, D.; Hu, X.; Ma, X.; Chen, H.; Jia, Y.; Deng, Y.; Ji, Z.; Zhu, X.; et al. CrossEarth: Geospatial vision foundation model for domain generalizable remote sensing semantic segmentation. arXiv 2024, arXiv:2410.22629. [Google Scholar] [CrossRef]

- Cepeda, V.; Nayak, G.; Shah, M. GeoCLIP: Clip-inspired alignment between locations and images for effective worldwide geo-localization. arXiv 2023, arXiv:2309.16020. [Google Scholar]

- Gong, Z.; Li, B.; Wang, C.; Chen, J.; Zhao, P. BF-SAM: Enhancing SAM through multi-modal fusion for fine-grained building function identification. Int. J. Geogr. Inf. Sci. 2024, 39, 2069–2095. [Google Scholar] [CrossRef]

- Liu, J.; Chen, S.; He, X.; Guo, L.; Zhu, X.; Wang, W.; Tang, J. VALOR: Vision-audio-language omni-perception pretraining model and dataset. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 47, 708–724. [Google Scholar] [CrossRef]

- Girdhar, R.; El-Nouby, A.; Liu, Z.; Singh, M.; Alwala, K.V.; Joulin, A.; Misra, I. ImageBind One Embedding Space to Bind Them All. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 15180–15190. [Google Scholar]

- Lyu, C.; Wu, M.; Wang, L.; Huang, X.; Liu, B.; Du, Z.; Shi, S.; Tu, Z. Macaw-LLM: Multi-modal language modeling with image, audio, video, and text integration. arXiv 2023, arXiv:2306.09093. [Google Scholar]

| Modality | Dataset | Year | Type | Size | Feature Description |

|---|---|---|---|---|---|

| Vision + Vision | Houston 2013 [22] | 2021 | HS, MS | 15,029 | The multimodal RS benchmark dataset obtained by reducing the spatial and spectral resolution of the original HSIs covers 20 types of land features such as roads, buildings, and vegetation, and is used for land-cover classification. |

| Augsburg [22] | 2021 | HS, SAR, DSM | 78,294 | This multimodal RS benchmark dataset consists of spaceborne HSIs, Sentinel-1 dual-polarization (VV-VH) PolSAR images, and digital surface model (DSM) images, and is used for land-cover classification. | |

| DKDFN [26] | 2022 | MS, SAR, DEM | 450 | A multimodal dataset composed of Sentinel-1 bipolar SAR images, Sentinel-2 MSIs, and SRTM digital elevation model data is provided, with a resolution of 10 m, and is used for land-cover classification. | |

| Potsdam [53] | 2021 | DSM, IRRG, RGB, RGBIR | 38 | An RS benchmark dataset for urban semantic segmentation, in which Potsdam city, covered by airborne orthophoto images, features large building complexes, narrow streets, and dense settlement structures. | |

| Trento [54] | 2020 | HS, LiDAR | 30,414 | A multimodal dataset for RS land classification, derived from airborne HSIs and LiDAR data from the Trento rural area, with a spatial resolution of 1 m. | |

| Vision + Language | RemoteCLIP [17] | 2024 | RSI, Text | 828,725 | An RS image–text dataset specifically established for the pre-training task of vision–language models, featuring a wide variety of scene types, image captions, semantics, and alignment features. |

| iSAID [55] | 2019 | RSI, Text | 2806 | The first RS benchmark dataset composed of high-resolution aerial images and their annotations, featuring large-scale and multi-scale characteristics, is used for instance segmentation and object detection. | |

| RSICap [56] | 2023 | RSI, Text | 2585 | The RS image–text pairs containing image types, land feature attributes, and scene descriptions can be used for the pre-training of MM-RSFMs and for its downstream tasks. | |

| SkyScript [57] | 2023 | RSI, Text | 2.6 M | A dataset of image–text pairs with a scale of millions, among which the RSIs are obtained from the Google Earth Engine (GEE) platform, and the corresponding semantic labels are obtained from the Open Street Map (OSM). | |

| LEVIR-CD [58] | 2020 | RSI, Text | 637 | The benchmark dataset for change detection, which includes a total of 31,333 individual buildings with changes, involves many variations such as sensor characteristics, atmospheric conditions, and lighting conditions. | |

| MMRS-1M [59] | 2024 | RSI, Text | 1 M | A large-scale multi-sensor and multimodal RS instruction tracking dataset with image–text pairs, where the visual modality includes optical, SAR, and infrared, etc., and covers classification, image captions, and visual question answering, etc. | |

| Vision + Audio | SoundingEarth [60] | 2023 | Image– Audio | 50,545 | An image–audio pair consisting of aerial images from 136 countries and corresponding crowdsourced audio from scenes, without the need for manual geographic annotation, can be used for geospatial perception and audio–visual learning tasks in RS. |

| ADVANCE [61] | 2020 | Image– Audio | 5075 | A multimodal dataset for audio–visual aviation scene recognition tasks, consisting of audio data geotagged from FreeSound and high-resolution images from Google Earth. | |

| Ref-AVS [62] | 2024 | Video, Audio | 40,020 | A multimodal benchmark dataset rich in audio and visual descriptions, which can provide pixel-level annotations and multimodal cues for dynamic visual and auditory object segmentation tasks, covering a wide range of object categories. | |

| Vision + Position | RingMoGPT [63] | 2024 | Image, Text | 522,769 | A unified RS basic model pre-training dataset for visual, language, and grounding tasks, containing over 500,000 pairs of high-quality image–text pairs, generated through a low-cost and efficient data generation paradigm. |

| iNaturalist 2018 [64] | 2018 | Image, Text | 859,000 | The dataset used for object detection and classification consists of over 800,000 images from more than 5000 object categories, and each image has a ground-truth label. | |

| fMoW [65] | 2018 | Image, Metadata | 1 M | The dataset used for identifying buildings and land-use tasks can infer object features such as location, time, solar angle, and physical size from satellite MSIs and the corresponding metadata of each image. | |

| MP-16 [66] | 2017 | Geotagged Images | 4.72 M | This dataset consists of over 4 million geotagged images, enabling alignment between the images and their corresponding GPS locations. It can be used to develop global geographic positioning models for image-to-GPS retrieval methods. | |

| STAR [67] | 2024 | <Subject, Relationship, and Object> | 400,000 | The first benchmark dataset for satellite image scene-graph generation tasks, including complex scenarios such as airports, ports, and overpasses, covers 210,000 geographical entities and 400,000 target relation triples. |

| RSFM | Year | Data | Backbone | Parameter | Size | EO Downstream Tasks |

|---|---|---|---|---|---|---|

| RingMo-Sense [13] | TGRS 2023 | videos, images | Transformer | - | 1 M | VP, CF, REE, ODSV, MTSV, RSTIS |

| RingMo-SAM [14] | TGRS 2023 | optical, SAR data | Transformer | - | 1 M | SS, OD |

| SkySense [15] | CVPR 2023 | optical, SAR data | Transformer | 10 B | 21.5 M | SS, OD, CD, SC |

| ChangeCLIP [16] | ISPRS 2024 | image, text | VLM | - | 0.06 M | CD |

| RemoteCLIP [17] | TGRS 2024 | image, text | VLM | 304 M | 0.16 M | ITR, IP, KC, FSC, ZSIC, OCRSI |

| MetaEarth [18] | TPAMI 2024 | optical image, GI | Diffusion | 600 M | 3.1 M | IG, RSIC |

| EarthGPT [59] | TGRS 2024 | image–text pairs | MLLM | 400 M | 1 M | IC, RLC, VQA, SC, VG, OD |

| RingMoGPT [63] | TGRS 2024 | image–text pairs | MLLM | - | 0.52 M | SC, OD, VQA, IC, GIC, CC |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, G.; Qian, L.; Gamba, P. Advances on Multimodal Remote Sensing Foundation Models for Earth Observation Downstream Tasks: A Survey. Remote Sens. 2025, 17, 3532. https://doi.org/10.3390/rs17213532

Zhou G, Qian L, Gamba P. Advances on Multimodal Remote Sensing Foundation Models for Earth Observation Downstream Tasks: A Survey. Remote Sensing. 2025; 17(21):3532. https://doi.org/10.3390/rs17213532

Chicago/Turabian StyleZhou, Guoqing, Lihuang Qian, and Paolo Gamba. 2025. "Advances on Multimodal Remote Sensing Foundation Models for Earth Observation Downstream Tasks: A Survey" Remote Sensing 17, no. 21: 3532. https://doi.org/10.3390/rs17213532

APA StyleZhou, G., Qian, L., & Gamba, P. (2025). Advances on Multimodal Remote Sensing Foundation Models for Earth Observation Downstream Tasks: A Survey. Remote Sensing, 17(21), 3532. https://doi.org/10.3390/rs17213532