1. Introduction

Heterogeneous remote sensing image matching has emerged as a critical research frontier and serves as the fundamental process for geospatial positioning, navigation, and large-scale image stitching. With the rapid diversification of remote sensing platforms, robust cross-modal feature association among heterogeneous data (e.g., optical, SAR, and infrared) has become imperative. However, three inherent challenges persist: (1) nonlinear radiometric distortions caused by disparate imaging mechanisms, (2) divergent textural characteristics across modalities, and (3) nonlinear geometric deformations resulting from coupled sensor–environment interactions. These factors collectively violate the feature-consistency assumption of conventional matchers, especially under complex environmental disturbances and cross-sensor conditions [1, 2].

Hand-crafted methods achieve local matching by constructing scale-invariant descriptors, but they rely on the gradient-coherence assumption. Methods based on regional mutual information can mitigate differences in radiometric distributions. However, they are sensitive to noise and local deformation, and their performance degrades markedly in low-overlap scenarios. In recent years, deep learning has partially bridged the modal gap through end-to-end feature embedding. Effective feature extraction networks are computationally complex, however, and struggle to meet real-time constraints when processing large-format images [3].

To address these difficulties in heterogeneous image matching, we use a road vector image as the base map. This choice offers the following advantages:
(1) The vector image is a binary image rendered from vector data, so it is unaffected by sensor type and imaging mechanism.
(2) Vector data can be transformed without loss, so differences in viewpoint and changes in the scene between the vector image and the real-time map can be eliminated.
(3) Vector data have no inherent resolution, so the vector image can be rendered at the same resolution as the real-time map.
(4) For the same search range, the vector image needs less storage and less matching computation, so matching is faster.
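Advantage (3) can be made concrete with a short sketch. Because a road is stored as coordinates rather than pixels, it can be rendered at whatever ground sampling distance the SAR real-time map uses. The sketch makes several simplifying assumptions that are ours rather than the authors':
- coordinates are in metres in a projected reference system;
- the centreline is drawn one pixel wide;
- each segment is sampled by linear interpolation.

`rasterize_polyline` is a hypothetical helper. The authors rendered their vector images with QGIS.

```python
import math

def rasterize_polyline(points, origin, gsd, width, height):
    """Render a road centreline as a one-pixel-wide binary image (list of rows).

    points: (x, y) map coordinates in metres in a projected CRS (assumption).
    origin: (x0, y0) map coordinate of the upper-left image corner.
    gsd:    ground sampling distance in metres per pixel, chosen to match the SAR image.
    """
    img = [[0] * width for _ in range(height)]
    x0, y0 = origin
    for (xa, ya), (xb, yb) in zip(points, points[1:]):
        # Map coordinates -> fractional pixel coordinates (rows grow southwards).
        ca, ra = (xa - x0) / gsd, (y0 - ya) / gsd
        cb, rb = (xb - x0) / gsd, (y0 - yb) / gsd
        # Sample each segment at least once per pixel so no pixel is skipped.
        steps = max(1, math.ceil(max(abs(cb - ca), abs(rb - ra))))
        for s in range(steps + 1):
            t = s / steps
            col = round(ca + t * (cb - ca))
            row = round(ra + t * (rb - ra))
            if 0 <= row < height and 0 <= col < width:
                img[row][col] = 1
    return img

# The same 30 m road segment rendered at 3 m and at 6 m per pixel.
road = [(0.0, 0.0), (30.0, 0.0)]
for gsd in (3.0, 6.0):
    img = rasterize_polyline(road, (0.0, 0.0), gsd, 16, 4)
    print(gsd, sum(map(sum, img)))   # 11 road pixels at 3 m, 6 road pixels at 6 m
```

No resampling of an existing raster is involved: changing `gsd` simply re-renders the same geometry.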

For the real-time map, SAR images are well suited to matching navigation because they can be acquired day and night and in all weather. Owing to their imaging mechanism, SAR images record backscattering. Roads backscatter so weakly that they appear as dark, elongated strips in high-resolution SAR images [4], which makes them easy to identify and match.

Therefore, we propose matching SAR images with road vector images for navigation. This task still poses several difficulties:
(1) Deep learning requires large amounts of training data, but SAR and vector image datasets are currently scarce.
(2) SAR images contain rich texture information and speckle noise, whereas the vector image contains only sparse road information, which makes matching difficult.
(3) The road vector image represents only the road centerline, so the road width cannot be recovered. Matching therefore depends on road direction and length and is insensitive to road width.
(4) Some unnamed roads may be missing from the road-network vector data, so the vector image cannot fully correspond to the real-time image.

Deep learning methods require large amounts of training data. Most SAR images come from spaceborne and airborne radars, so they cost considerably more to acquire than optical images, and publicly available SAR datasets are relatively limited. The SEN1-2 dataset [5] published paired SAR and optical images. After its release, deep learning research on SAR image registration [6], matching [7], data fusion [8], and detection and recognition [9] developed rapidly. Deep learning algorithms for ship detection in SAR images likewise advanced after the release of SSDD [10], OpenSARShip [11], the SAR Dataset of Ship Detection [12], and AIR-SARShip-1.0 [13]. However, only two datasets currently pair SAR images with corresponding road vectors, SARroad and sar_dataset, and their size is still far from meeting the needs of deep learning.

In order to solve the above problems, we constructed a SAR-VEC dataset and proposed a Siamese U-Net dual-task supervised network. The main contributions of this paper are as follows:

From the perspective of image source selection, the use of SAR images and road vector images for matching navigation tasks is proposed.

A dual-task supervised network that combines a matching loss and a segmentation loss is proposed to match SAR images with road vector images for navigation. This suggests that jointly supervising different tasks that extract similar features can effectively improve accuracy.

A SAR-VEC dataset containing SAR images, road vector images and related labels is constructed for experiments.

2. Related Work

2.1. SAR Image Matching

The matching of SAR images with optical images has been widely studied in heterogeneous image matching. SAR images generated in real time by the flight platform are usually matched with optical satellite reference images.

Ma et al. [14] proposed a two-step alignment method based on deep learning features and local features. It first approximates the spatial relationship using a deep network built on the feature layers of the image (e.g., VGG-16). It then applies a matching strategy that takes these spatial relationships into account to a local SIFT-feature-based method, which improves alignment accuracy.

Hughes et al. [15] proposed a pseudo-Siamese network for high-resolution images. It partly addresses the matching difficulty caused by the inversion of the tops and bottoms of high-rise buildings (layover) in SAR images.

Hoffmann et al. [16] used a fully convolutional network (FCN) to measure image similarity. Because the network input is not restricted in size, it can be applied to images of various sizes without rescaling during preprocessing.

Hughes et al. [17] also designed a network that covers matching-region selection, template matching, and elimination of mismatched points in a unified end-to-end matching framework.

Feature matching remains difficult in heterogeneous image alignment because of geometric deformations (e.g., asymmetric distortions) and texture anisotropy, and these problems still need to be addressed [18].

2.2. Vector Image Matching

Traditional matching algorithms for vector images usually extract road intersections, road skeletons, or edges as features. They describe these features by geometric attributes such as points and lines to complete the matching. Costea [19] detected road intersections in optical images and matched optical remote sensing images with vector images through the corresponding intersections in the vector images. Li et al. [20, 21, 22] proposed using road intersections and their tangents as global invariant features, together with projection-invariant edge features, to align road network maps with aerial data.

Deep learning has also been applied to vector image matching.

Shi [23] proposed an image transformation method based on a generative adversarial network. Its loss function, based on the edge distribution, makes the edges of the real-time image and the vector reference image stylistically consistent. The real-time image is then converted into the style of the vector reference image, which reduces the difference between the two before matching.

Wei [24] proposed an end-to-end Siamese U-Net template matching method (Siamese U-Net, Siam-U). It uses U-Net skip connections between the encoder and decoder to preserve the resolution and location information lost in the multiple pooling layers of a CNN. This provides suitable convolutional features for directly matching geographic vector images with optical images.

2.3. SAR Image Road Extraction

There are many traditional methods for SAR image road segmentation, the most important and commonly used of which are edge detection [25] and region segmentation [26]. The former focuses on the linear features of road edges, and the latter on the regional features of the road surface.

Deep learning has also been applied to SAR image road extraction. Li et al. [27] used a CNN model for feature extraction and matching. They post-processed the extracted road candidate regions with an MRF and an improved Radon algorithm.

The MSPP [28] model proposes a Global-Attention Fusion (GAF) module with two branches:
- The first branch applies global average pooling to the high-level feature maps to produce an attention map.
- The second branch first passes the low-level feature maps through a bottleneck architecture to obtain shallow features.

The attention map is then fused with the shallow feature maps to obtain weighted feature maps.
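The two-branch GAF description can be illustrated with a simplified NumPy sketch. This is our reading of the description above, not the MSPP implementation. The following choices are all assumptions:
- a sigmoid applied to the pooled vector;
- a 1 × 1 bottleneck followed by ReLU;
- channel-wise multiplication as the fusion step;
- both branches producing the same number of output channels.

`gaf_sketch`, `w_att`, and `w_bottleneck` are hypothetical names.

```python
import numpy as np

def gaf_sketch(high, low, w_att, w_bottleneck):
    """Simplified two-branch global-attention fusion (illustrative only).

    high:         (C_h, h, w) high-level feature maps
    low:          (C_l, H, W) low-level feature maps
    w_att:        (C_o, C_h) weights mapping pooled features to attention (hypothetical)
    w_bottleneck: (C_o, C_l) 1x1 bottleneck weights (hypothetical)
    """
    # Branch 1: global average pooling of the high-level maps -> one weight per channel.
    pooled = high.mean(axis=(1, 2))                                      # (C_h,)
    attention = 1.0 / (1.0 + np.exp(-(w_att @ pooled)))                  # (C_o,), in (0, 1)
    # Branch 2: 1x1 bottleneck + ReLU on the low-level maps -> shallow features.
    shallow = np.maximum(np.tensordot(w_bottleneck, low, axes=1), 0.0)   # (C_o, H, W)
    # Fusion: re-weight each shallow channel by its attention value.
    return attention[:, None, None] * shallow

rng = np.random.default_rng(0)
out = gaf_sketch(rng.standard_normal((8, 4, 4)), rng.standard_normal((4, 16, 16)),
                 rng.standard_normal((6, 8)), rng.standard_normal((6, 4)))
print(out.shape)   # (6, 16, 16)
```

The pooled branch contributes only a per-channel scale. The spatial detail of the output therefore comes entirely from the low-level branch, which is the point of fusing the two.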

3. Dataset

Developing deep learning methods for fusing SAR and geographic vector data requires large datasets of precisely aligned images or image patches. However, collecting large volumes of accurately aligned image pairs remains challenging, especially since SAR imagery is less accessible and more costly to obtain than optical data. Public datasets contain almost no SAR data for road targets. To promote research on heterogeneous matching between SAR images and geographic vector images, this paper constructs a public sample dataset named SAR-VEC. It pairs Gaofen-3 SAR images with geographic vector images of the corresponding roads.

3.1. Data Sources

The SAR strip (GF3_SAY_UFS_000605) was acquired on 20 September 2016 in Ultra-Fine Strip mode, C-band (5.4 GHz, λ ≈ 5.6 cm), HH-polarisation, 3 m ground-range resolution, incidence angle ≈25°, centred at 114.5°E, 30.5°N (Hubei Province).

The road geo-vector data come from OpenStreetMap (OSM) and the WeMap road network. The two sources are cross-checked and complement each other, which compensates for gaps in either road network and ensures completeness.

3.2. Data Processing

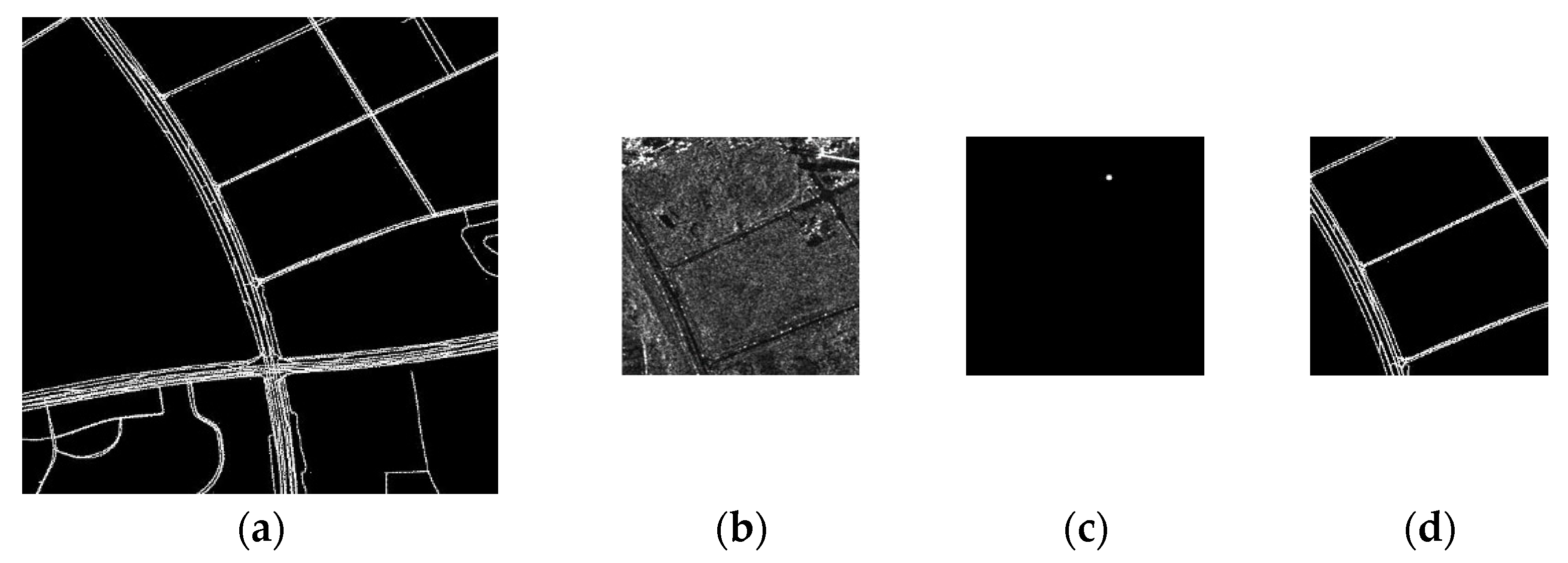

SAR images were preprocessed with PIE-SAR7.0 software, including SLC-to-intensity conversion, speckle filtering, geometric correction, and geocoding. The geographic vector data were vectorized, geocoded, and rendered into vector images with QGIS. The geocoded SAR image was first coarsely aligned to the vector image and then precisely aligned by hand. The aligned SAR and vector images were then sliced into data pairs, each containing:
(1) a 512 × 512 geographic vector image of the road network, used as the base map;
(2) a 256 × 256 SAR image at 3 m resolution, used as the real-time map;
(3) the coordinates of the upper-left corner of the SAR image within the vector image, used to generate a position label image in which positions within a Euclidean distance of three pixels of the true matching location are set to 1 and all others to 0;
(4) a 256 × 256 geographic vector image of the road network corresponding to the SAR image, used as the road supervision label.
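The position label in item (3) can be sketched as follows. The sketch rests on two assumptions of ours:
- the label map has one cell per candidate upper-left position of the 256 × 256 SAR patch inside the 512 × 512 vector image, giving a 257 × 257 grid;
- "within three pixels" is inclusive.

`position_label` is a hypothetical helper.

```python
import numpy as np

def position_label(true_row, true_col, ref_size=512, tpl_size=256, radius=3.0):
    """Binary matching label for one SAR/vector data pair (hypothetical helper).

    One cell per candidate upper-left position of the SAR patch inside the vector
    image, i.e. a (ref_size - tpl_size + 1) x (ref_size - tpl_size + 1) map.
    Cells within `radius` pixels (Euclidean, inclusive) of the true position are 1.
    """
    n = ref_size - tpl_size + 1
    rows, cols = np.mgrid[0:n, 0:n]
    dist = np.hypot(rows - true_row, cols - true_col)
    return (dist <= radius).astype(np.uint8)

label = position_label(100, 120)
print(label.shape, int(label.sum()))   # (257, 257) 29
```

Away from the border, a radius of three pixels marks 29 cells as positive out of 257² = 66,049. This extreme imbalance is why a class-balanced loss is used for matching.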

The preliminary set of data pairs may have the following problems:
(i) SAR images are prone to severe speckle noise, and some images are also defocused;
(ii) roads in SAR images are easily disturbed by roadside trees, buildings, green belts, and other objects, so some roads appear severely broken, occluded, or blended with the background;
(iii) some roads are missing from the vector image, which therefore does not fully match the SAR image.

After these three types of bad data pairs were removed, the final SAR-VEC dataset contains 2503 data pairs. Examples of data pairs are shown in Figure 1. See Appendix A.1 for details of dataset preparation.

4. Methods

In this paper, a Siamese U-Net dual-task supervised network (SUDS) is proposed, as shown in Figure 2. The network consists of three parts: feature extraction, matching supervision, and road extraction supervision.

4.1. Feature Extraction

Road targets exhibit regular geometric properties: edges, orientation, shape, and network topology. Owing to their imaging mechanism, SAR images record backscattering, whose magnitude depends on the complex dielectric constant, surface roughness, and other target parameters. Roads backscatter very weakly and therefore appear as dark, elongated strips in high-resolution SAR images [4]. Water bodies such as rivers often appear as similar dark strips and must be distinguished from roads. In addition, under complex road conditions, moving ground targets appear blurred and displaced in the image. They form bright interference that covers part of the road and makes it appear broken, as shown in Figure 3. Meanwhile, the vector image is a simple binary image that contains only sparse road-network information. The key to the problem is therefore extracting the features common to the two images.

Heterogeneous image matching is difficult because the images differ greatly and homogeneous features are hard to extract. Some studies [23, 29] convert one type of image into the other by learning the pixel distribution relationship between heterogeneous images. Once both images share the same style, a homogeneous image matching algorithm completes the matching. Following this idea, SAR images could be converted into vector-style binary images and then matched with vector images, which is closely related to the road extraction task. In our setting, however, road extraction is only an intermediate means of completing the match. We do not pursue the accuracy of the extracted roads; what matters is whether they improve the matching result. Therefore, we add road segmentation to the network as an additional supervision.

Previous research has found that a Siamese network can better extract the common features of heterogeneous images. The encoder-decoder structure of U-Net can also better extract the road-network features of SAR images [30]. We therefore propose the Siamese U-Net dual-task supervised network. The SAR real-time image and the vector reference image are fed into the two branches of the Siamese network separately, so as to extract similar structural features. Although the feature extraction network has two branches, their weights are shared. Weight sharing forces both branches to learn common spatial descriptors. This reduces the risk of overfitting to SAR-specific speckle patterns without requiring additional regularization terms.

The feature extraction network consists of nine modules: a convolutional input module, four downsampling modules, and four upsampling modules. Each upsampling module receives the output of the preceding layer together with the output of the corresponding downsampling module through a skip connection. These skip connections give the deep features both high-level and low-level information. The segmentation head reuses the same feature maps, so at inference it adds only the parameters of a single small convolutional layer.
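The spatial sizes through the nine modules can be traced with a few lines of Python. The trace assumes:
- "same"-padded convolutions;
- one 2× downsampling per downsampling module;
- one 2× upsampling per upsampling module.

The actual layer parameters are those in Appendix A.2, and `unet_shapes` is a hypothetical helper. Under these assumptions, both branches return feature maps at input resolution. Correlating the 256 × 256 SAR features over the 512 × 512 vector features therefore yields 257 × 257 candidate positions.

```python
def unet_shapes(size, depth=4):
    """Spatial size after each encoder and decoder module of a U-Net.

    Assumes 'same' padding, 2x downsampling per down module, 2x upsampling per up module.
    """
    if size % (2 ** depth):
        raise ValueError("input size must be divisible by 2**depth so skip connections align")
    encoder = [size]                         # output of the convolutional input module
    for _ in range(depth):                   # four downsampling modules
        encoder.append(encoder[-1] // 2)
    decoder = [encoder[-1]]
    for skip in reversed(encoder[:-1]):      # four upsampling modules
        up = decoder[-1] * 2
        if up != skip:                       # concatenation with the skip needs equal sizes
            raise ValueError("skip connection size mismatch")
        decoder.append(up)
    return encoder, decoder

sar_enc, sar_dec = unet_shapes(256)
vec_enc, vec_dec = unet_shapes(512)
print(sar_enc)                          # [256, 128, 64, 32, 16]
print(vec_dec)                          # [32, 64, 128, 256, 512]
print(vec_dec[-1] - sar_dec[-1] + 1)    # 257 candidate positions per axis
```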

The specific network layer parameters are given in Appendix A.2.

4.2. Dual-Task Supervised Loss Function

In the network output stage, we divide the final output into two parts, representing two different tasks that supervise network feature extraction.

The first part is the matching output. A 1 × 1 convolutional layer is applied to obtain the SAR feature map and the vector feature map, respectively. The two are then convolved to obtain a correlation heat map, whose peak is taken as the matching result. For the matching task, we use the class-balanced cross-entropy loss function $L_{match}$. The symbols are defined as follows:
- $Y$ denotes the binary map of matching labels for the image pair. All positions within a Euclidean distance of three pixels of the true matching position are set to 1, and the rest to 0.
- $W_v$, $H_v$, $W_s$, and $H_s$ denote the width and height of the vector image and the SAR image, respectively.
- $Y_+$ and $Y_-$ denote the correct-match and wrong-match position labels, respectively.
- $\hat{Y}$ represents the predicted heat map output by the network, and $\hat{y}_{i,j}$ denotes its value at $(i, j)$.
- $y_{i,j}$ denotes the label value at the same position.
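The matching branch can be sketched in NumPy as follows. Several parts of the sketch are our assumptions:
- `shared_encoder` is a hypothetical stand-in for the weight-shared U-Net: a 1 × 1 projection whose single weight array is applied to both images, which is what weight sharing means.
- The correlation is a naive valid cross-correlation.
- The loss uses the common HED-style class-balanced form, weighting positives by β = |Y−|/|Y| and negatives by 1 − β, averaged over cells. The exact weighting used by SUDS is not reproduced in this section, so this form is an assumption.

```python
import numpy as np

def shared_encoder(img, weights):
    """Stand-in for the weight-shared U-Net: a 1x1 projection from one channel to C.
    The SAME `weights` array is used for the SAR image and the vector image."""
    return weights[:, None, None] * img[None, :, :]                 # (C, H, W)

def correlation_heatmap(ref_feat, sar_feat):
    """Valid cross-correlation of SAR features (C, Hs, Ws) over vector features (C, Hv, Wv)."""
    _, hs, ws = sar_feat.shape
    _, hv, wv = ref_feat.shape
    heat = np.empty((hv - hs + 1, wv - ws + 1))
    for i in range(heat.shape[0]):                                  # naive loop for clarity;
        for j in range(heat.shape[1]):                              # a conv2d computes the same
            heat[i, j] = np.sum(ref_feat[:, i:i + hs, j:j + ws] * sar_feat)
    return heat

def peak_position(heat):
    """Matching result = (row, col) of the maximum of the heat map."""
    return tuple(int(k) for k in np.unravel_index(np.argmax(heat), heat.shape))

def class_balanced_bce(logits, labels, eps=1e-7):
    """HED-style class-balanced binary cross-entropy (assumed form, averaged over cells)."""
    p = np.clip(1.0 / (1.0 + np.exp(-logits)), eps, 1.0 - eps)
    pos = labels.astype(bool)
    beta = np.mean(~pos)                                            # share of negatives, close to 1
    loss = -beta * np.sum(np.log(p[pos])) - (1.0 - beta) * np.sum(np.log(1.0 - p[~pos]))
    return loss / labels.size

ref = np.zeros((32, 32)); ref[12, 10:20] = 1; ref[8:18, 14] = 1    # toy road crossing
sar = ref[5:21, 9:25].copy()                                        # 16x16 "real-time" patch
w = np.array([0.5, -1.0, 2.0])                                      # one weight set, both branches
heat = correlation_heatmap(shared_encoder(ref, w), shared_encoder(sar, w))
print(heat.shape, peak_position(heat))   # (17, 17) (5, 9)
```

Without the β weighting, a network could drive the loss down simply by predicting "no match" everywhere, because positive cells are a tiny fraction of the heat map.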

The second part is the segmentation output. A 3 × 3 convolutional layer followed by a sigmoid activation produces the road segmentation map of the SAR image, which is taken as the road segmentation result. The loss function $L_{seg}$ is the sum of the binary cross-entropy loss $L_{BCE}$ and the Dice coefficient loss $L_{Dice}$. The model is optimized with the Adam optimizer.

$$L_{seg} = L_{BCE} + L_{Dice}$$

$$L_{BCE} = -\frac{1}{n}\sum_{i=1}^{n}\left[y_i \log p_i + (1 - y_i)\log(1 - p_i)\right]$$

$$L_{Dice} = 1 - \frac{2|A \cap B|}{|A| + |B|}$$

Here $n$ denotes the total number of pixels, $y_i$ the label of pixel $i$, and $p_i$ its predicted road probability, whose logarithm enters the loss. $|A|$ and $|B|$ denote the number of road pixels in the binary prediction map $A$ and the label map $B$, respectively. $|A \cap B|$ denotes the number of pixels in their intersection.
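The segmentation loss can be sketched directly from the definitions above. Two details are our own:
- The Dice term is written with sums. On binary maps it reproduces the pixel-count definition; on sigmoid probabilities it becomes the differentiable "soft Dice" variant. We assume this variant is what is trained.
- The small `eps` guards and the function names are additions for numerical safety and illustration.

```python
import numpy as np

def bce_loss(prob, target, eps=1e-7):
    """Mean binary cross-entropy over all n pixels; prob is the sigmoid output."""
    p = np.clip(prob, eps, 1.0 - eps)
    return float(-np.mean(target * np.log(p) + (1.0 - target) * np.log(1.0 - p)))

def dice_loss(pred, target, eps=1e-7):
    """1 - 2|A∩B| / (|A| + |B|), with counts written as sums (soft Dice on probabilities)."""
    intersection = np.sum(pred * target)
    return float(1.0 - 2.0 * intersection / (np.sum(pred) + np.sum(target) + eps))

def seg_loss(prob, target):
    """L_seg = L_BCE + L_Dice, both evaluated on the predicted road probabilities."""
    return bce_loss(prob, target) + dice_loss(prob, target)

label = np.array([[1.0, 0.0], [0.0, 0.0]])
prob = np.array([[0.9, 0.2], [0.1, 0.1]])
print(round(seg_loss(prob, label), 4))   # 0.3522
```

BCE treats every pixel independently, while the Dice term measures overlap of the road class as a whole. This makes the Dice term less sensitive to the small fraction of road pixels in each image.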

The final loss function is the weighted sum of the matching loss $L_{match}$ and the segmentation loss $L_{seg}$:

$$L = b\,L_{match} + (1 - b)\,L_{seg}$$

where $b$ is a weighting factor: $b = 0$ keeps only the segmentation loss, and $b = 1$ keeps only the matching loss. Unlike a single-task loss, the joint formulation allows gradients from segmentation to regularize the matching features, eliminating the need for extra post-processing filters. The value of $b$ determines the proportion of matching loss to segmentation loss in network training; we discuss its choice in Section 6.

5. Experiments and Results

In this section, we design experiments to verify that the Siamese U-Net dual-task supervised network (SUDS) can solve the problem of matching SAR images with vector images. SUDS is evaluated against existing matching methods: normalized cross-correlation (NCC), MatchNet [31], Unet++ [32], and Siam-U [7].

5.1. Experimental Settings and Evaluation Metrics

All training and testing use an Intel Core i9-13900K CPU and an NVIDIA RTX 4090 GPU. The code is written in Python 3.7, and the network is built with PyTorch 1.13.0+cu116. The network is optimized with the Adaptive Moment Estimation (Adam) optimizer, with an initial learning rate of 0.0001, a weight decay coefficient of 0.00005, and 50 training epochs.

We randomly divide the 2503 image pairs of the SAR-VEC dataset described in Section 3 into a training set of 2003 pairs and a test set of 500 pairs. In the training set:
- the 256 × 256 SAR images serve as real-time images;
- the 512 × 512 vector images serve as reference images;
- positions within a Euclidean distance of three pixels of the true matching location are labeled 1 and all others 0, forming the binary matching label map;
- the 256 × 256 vector images serve as road segmentation labels.

We use three metrics to evaluate the matching performance of the model:
- the root-mean-square error of the samples, $RMSE$;
- the correct matching rate within an error threshold $T$, $P_T$;
- the standard deviation of the matching accuracy, $\sigma$.

$$RMSE = \sqrt{\frac{1}{N}\sum_{k=1}^{N}\left\lVert \hat{\mathbf{p}}_k - \mathbf{p}_k \right\rVert^2}$$

$$P_T = \frac{N_T}{N}$$

Here $N$ is the total number of test samples. $\hat{\mathbf{p}}_k$ denotes the matching coordinates predicted by the network for the $k$-th test pair, and $\mathbf{p}_k$ denotes the true coordinates of the $k$-th pair. $N_T$ is the number of pairs, among the $N$ test samples, whose matching error is less than the threshold $T$.
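The three metrics can be computed as in the following sketch. Two details are our assumptions rather than statements from the text:
- The threshold is treated as inclusive, so an error of exactly $T$ counts as correct. This follows the "no greater than" wording used with Figure 6.
- $\sigma$ is taken as the population standard deviation of the per-sample Euclidean errors.

`matching_metrics` is a hypothetical helper.

```python
import math

def matching_metrics(pred, truth, thresholds=(5, 10)):
    """RMSE, correct-matching rate P_T per threshold, and std of the errors.

    pred, truth: sequences of (x, y) pixel positions for the N test samples.
    """
    errors = [math.dist(p, t) for p, t in zip(pred, truth)]       # Euclidean error per sample
    n = len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    rates = {T: sum(e <= T for e in errors) / n for T in thresholds}  # inclusive threshold (assumed)
    mean = sum(errors) / n
    std = math.sqrt(sum((e - mean) ** 2 for e in errors) / n)     # population std (assumed)
    return rmse, rates, std

rmse, rates, std = matching_metrics([(0, 0), (3, 4), (0, 12)], [(0, 0), (0, 0), (0, 0)])
print(rates)   # {5: 0.6666666666666666, 10: 0.6666666666666666}
```

RMSE squares each error, so a few gross mismatches raise it sharply even when $P_T$ is high. This is why both are reported.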

5.2. Matching Comparison

To compare different methods and demonstrate the advantages of our method (SUDS), we compare it with four related matching methods: NCC, MatchNet, Unet++, and Siam-U. To keep the comparison objective and impartial, we retrained the Siam-U network under the same conditions as SUDS.

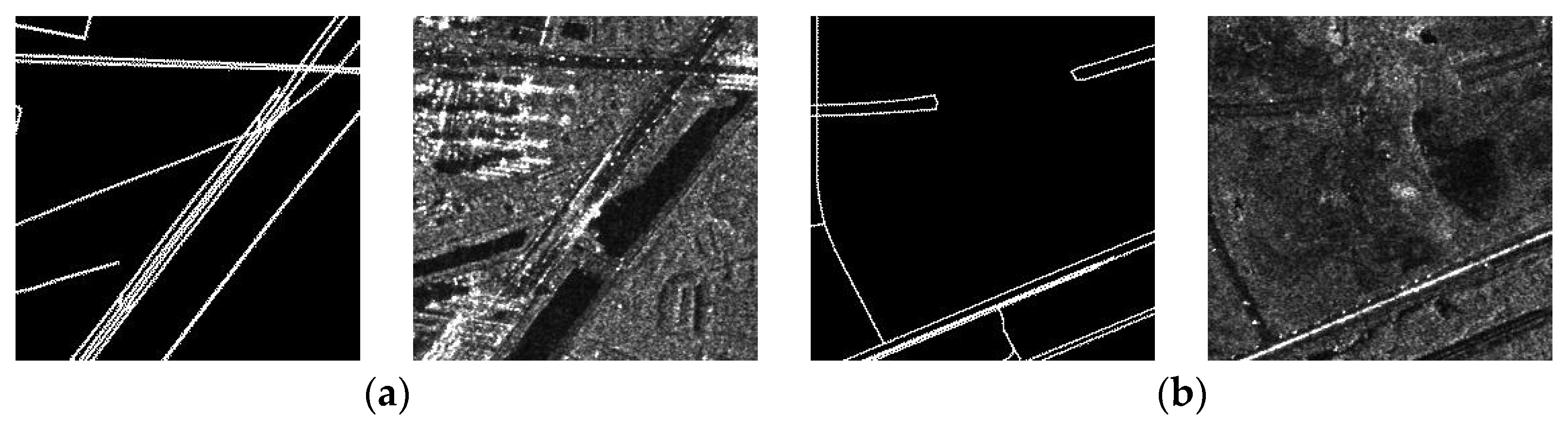

An example of a successfully matched heat map is shown in Figure 4. In this example, the SAR image has clear road features and the vector image has distinctive road structures. False matches are therefore unlikely, and the pair is an ideal matching input. In the NCC heat map, high values appear as regions. In the MatchNet heat map, they appear as lines without an obvious peak. By contrast, the Unet++, Siam-U, and SUDS heat maps have distinct point-like peaks, which shows that the U-Net-based networks perform better on this task. The SUDS heat map has the most concentrated peak and the most prominent peak contrast, indicating better matching performance.

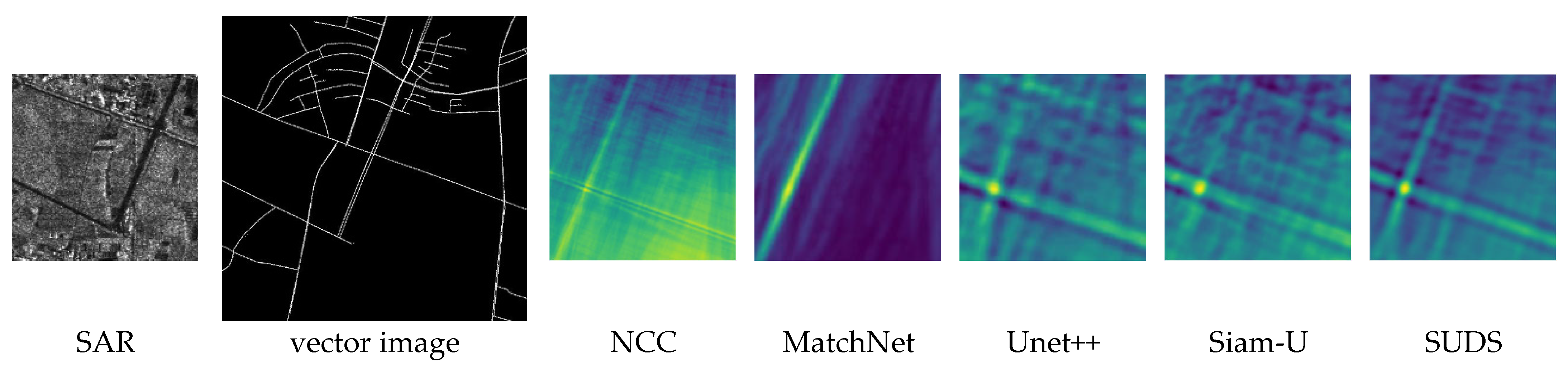

Figure 5 shows the matching results for three sets of typical images with interference. NCC fails to produce correct matches for all three sets. The MatchNet heat maps show obvious line features and too low a peak ratio. Their results are therefore easily disturbed, and their matching error is significantly higher than that of the U-Net-based methods. We therefore focus on the differences among Unet++, Siam-U, and SUDS.

In group 1, the vector image contains straight roads with multiple similar intersections, which easily produce false matches. Both Unet++ and Siam-U produce multiple local maxima, whereas SUDS produces a single peak.

In group 2, the SAR image contains similar-looking roads and water bodies that cause interference. The Unet++ result has multiple local maxima, and the Siam-U result has high local heat values caused by the water bodies. The SUDS result has a prominent peak and a clean heat map.

In group 3, there is bright interference on the right side of the road in the SAR image, and similar bright buildings appear at the top of the image. Unet++ has high local heat values and a weak peak. Siam-U is disturbed by the interference, producing two similar local maxima and an inaccurate match. SUDS suppresses the interference well, with a clear single peak and an accurate match.
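The difference between a single sharp peak and several competing local maxima can be quantified with a peak ratio. The text does not define this quantity. The sketch therefore uses one common choice, which is our assumption: the global maximum divided by the highest value outside a small window around it. `peak_ratio` and its `exclude` parameter are hypothetical.

```python
import numpy as np

def peak_ratio(heat, exclude=3):
    """Global maximum divided by the highest value outside a (2*exclude+1)^2 window around it.

    Values near 1 indicate competing local maxima (an ambiguous match); large values
    indicate a single sharp peak. Assumes a non-negative heat map (e.g. after a sigmoid).
    """
    r, c = np.unravel_index(np.argmax(heat), heat.shape)
    masked = heat.astype(float).copy()
    masked[max(r - exclude, 0):r + exclude + 1, max(c - exclude, 0):c + exclude + 1] = -np.inf
    return float(heat[r, c] / masked.max())

single = np.full((21, 21), 0.1); single[10, 10] = 0.9     # one clear peak
double = single.copy(); double[2, 18] = 0.85              # a competing local maximum
print(peak_ratio(single) > peak_ratio(double))            # True
```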

These results show that the proposed dual-task supervision helps the network focus on road information through the segmentation branch. When the image contains interference, the network extracts the key features more accurately. Because the Siamese branches are connected through shared weights, the matching module benefits from the features learned under segmentation supervision, which improves its performance. SUDS can therefore:
- overcome the water-body and bright-target interference caused by the SAR imaging mechanism;
- correctly extract road information;
- distinguish between similar roads.

This yields more accurate and more robust matching results.

The test results of all methods are listed in Table 1. The SUDS network can match SAR images with vector images at a high correct matching rate. SUDS outperforms the other two U-Net-based methods (Unet++ and Siam-U) in both root-mean-square error and correct matching rate. It reaches a correct matching rate of 80.2% within 5 pixels and 91.0% within 10 pixels.

In terms of matching time, NCC and MatchNet are faster, but their matching errors are larger and their correct matching rates lower. While maintaining accuracy, SUDS is faster than Unet++ and Siam-U and better meets the real-time matching requirements of navigation.

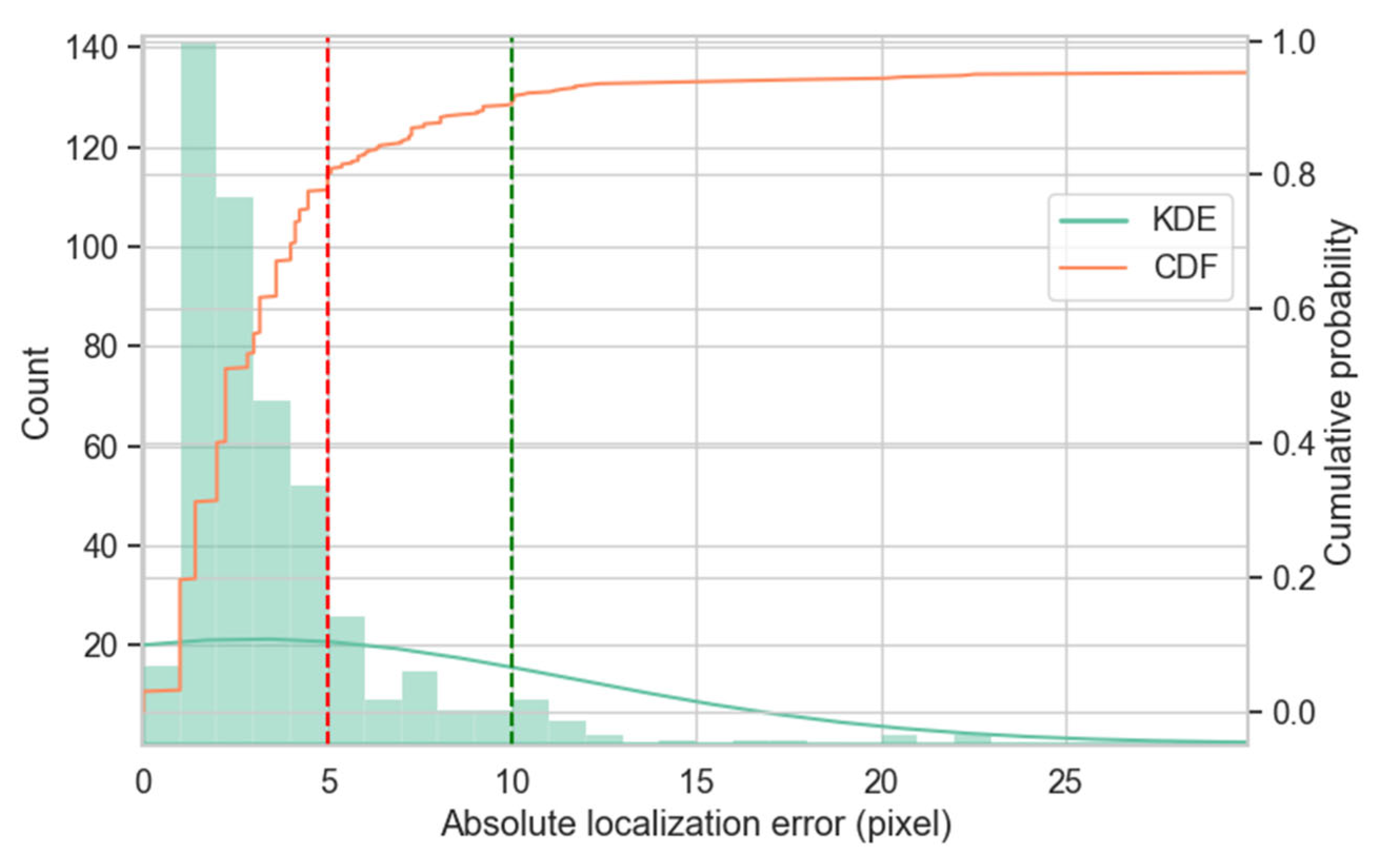

Figure 6 presents the distribution of localization errors, which is consistent with the conclusions above. The histogram shows that most samples have errors between 0 and 5 pixels. The largest share, exceeding 30%, falls between 0 and 1 pixel. This indicates that the model localizes most samples with small errors.

The CDF curve shows that 80.2% of samples have errors no greater than 5 pixels, and 91.0% have errors no greater than 10 pixels. This further substantiates the model’s capability to achieve high-precision localization in most cases.

The KDE curve smoothly outlines the shape of the error distribution, further emphasizing the concentration of errors in a smaller range and the pronounced decrease in sample counts with increasing error.

Limitations of the current study and representative failure cases are summarised in Appendix A.3.

6. Discussion

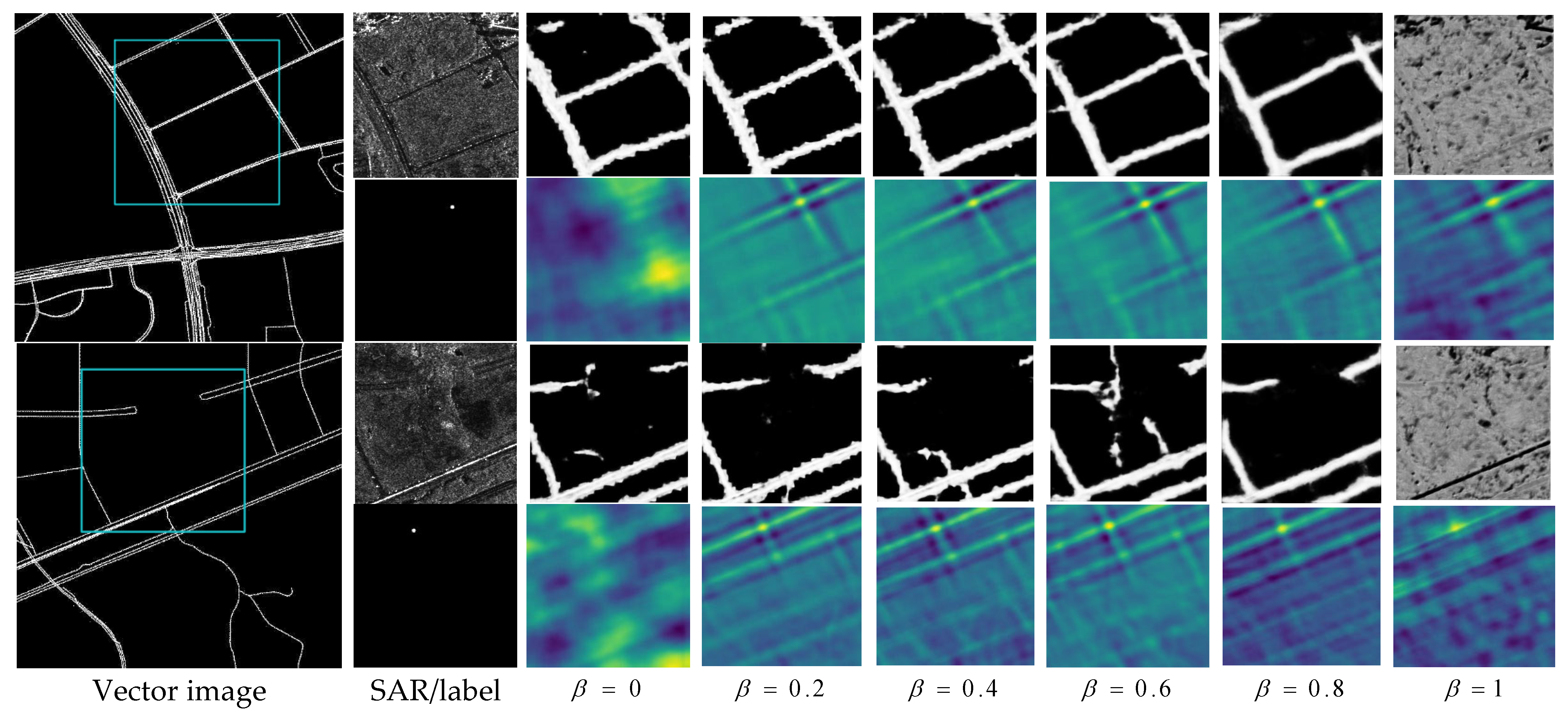

In this section, we will discuss how the weighting parameter

works for network training and how to choose an appropriate value. The networks were trained and tested with the same settings as in the previous chapter, with weighting parameter

of 0, 0.2, 0.4, 0.6, 0.8, and 1, respectively. Two examples of network outputs are shown in

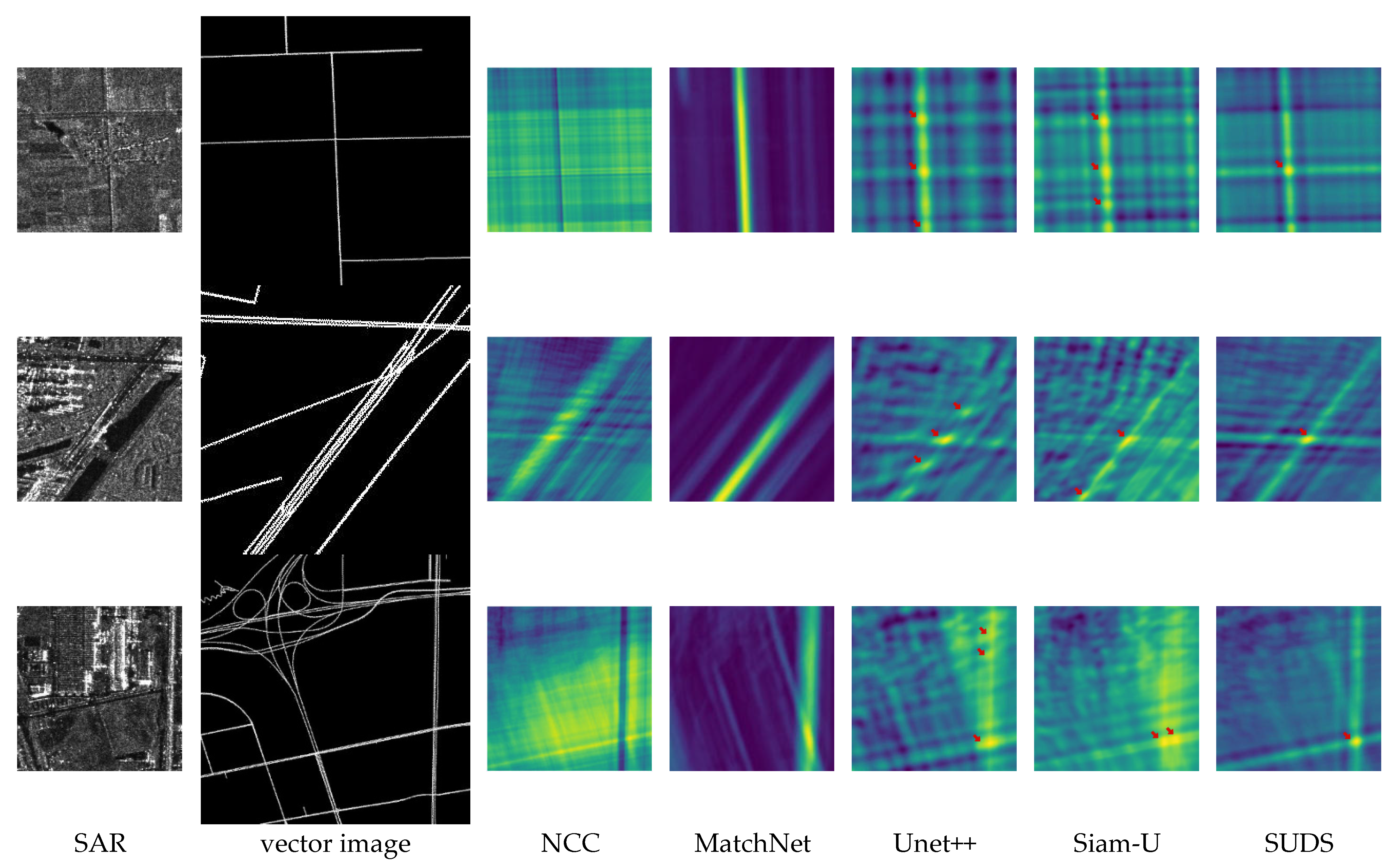

Figure 7.

In terms of road segmentation, when b = 1 the network degrades to a U-Net matching network and can hardly segment the road. For b < 1, as b increases the weight of the matching loss grows. The jagged artifacts in the segmentation map gradually decrease, and the segmented image becomes smoother. This indicates that increasing the weight of the matching loss benefits the road segmentation task.

In terms of matching, when b = 0 the network degrades to a U-Net segmentation network and can hardly produce a correlation heat map with a clear peak. When b = 1, the network degrades to a U-Net matching network, which produces relatively accurate correlation heat maps. When 0 < b < 1, the added segmentation loss enhances the boundary alignment of the network, yielding more prominent heat map peaks and less background noise. This suggests that the segmentation loss benefits the matching task.

For the navigation task, the matching performance of the model matters most. The detailed matching statistics are given in Table 2.

As Table 2 shows, when b = 0 the network contains only the road segmentation loss and is reduced to a U-Net segmentation network. In this case the root-mean-square error is the largest and the correct matching rate is close to 0, so the network can barely perform the matching task.

When b = 1, the network contains only the matching loss and is reduced to a U-Net matching network. Its correct matching rate is 69.2% within 5 pixels and 86.6% within 10 pixels.

When 0 < b < 1, the network contains both the road segmentation loss and the matching loss. In this case the correct matching rate within 5 pixels is higher than in both the b = 0 and b = 1 cases. This shows that adding segmentation supervision to the matching network can effectively improve the correct matching rate.

For two of the intermediate weights, the correct matching rate within 5 pixels is higher, but the root-mean-square error is larger than for the better-performing settings. This may be related to how the two losses behave. The matching loss adjusts the gradient ratio of positive and negative samples through a fixed weight parameter. Positive samples contribute the larger share of the gradient, which accelerates the model's learning of the minority class. When the proportion of matching loss is small, the network may fall into a local optimum and fail to match some difficult samples. For the remaining two intermediate weights, both the correct matching rate and the root-mean-square error are better.

The two losses also differ in gradient propagation and convergence speed. The gradient of the matching loss is linearly related to the error and has a clear direction, so its optimization is more stable. The gradient of the segmentation loss is inversely proportional to the prediction error, which may cause instability early in training and slower convergence. The convergence curves of the two losses over the training epochs are shown in Figure 8.
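The contrast between the two gradients can be checked with a short derivation. It assumes both losses act on sigmoid outputs $p = \sigma(z)$ of the network logits $z$, which is our assumption. For a cross-entropy term with class weight $\beta$, as in a class-balanced cross-entropy, the gradient with respect to the logit is the prediction error $\sigma(z) - y$ scaled by the class weight. For the Dice term, the gradient depends on the global sums $I$ and $S$, which are introduced here for the derivation, and is scaled by $1/S^2$. Its magnitude therefore changes with the overall prediction, which is one way less stable early-training behavior can arise.

```latex
\begin{aligned}
\ell(z) &= -\beta\, y \log \sigma(z) - (1-\beta)(1-y)\log\bigl(1-\sigma(z)\bigr),
  \qquad \sigma'(z) = \sigma(z)\bigl(1-\sigma(z)\bigr) \\
\frac{\partial \ell}{\partial z} &= -\beta\, y\bigl(1-\sigma(z)\bigr) + (1-\beta)(1-y)\,\sigma(z)
  = \begin{cases} \beta\,\bigl(\sigma(z)-1\bigr), & y = 1 \\ (1-\beta)\,\sigma(z), & y = 0 \end{cases} \\[6pt]
L_{Dice} &= 1 - \frac{2I}{S}, \qquad I = \sum_k p_k y_k, \quad S = \sum_k p_k + \sum_k y_k \\
\frac{\partial L_{Dice}}{\partial p_i} &= -\frac{2\,(y_i S - I)}{S^{2}}
\end{aligned}
```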

In our experiments, the best matching result is obtained at one of the intermediate values of b (see Table 2). For other tasks and loss functions, b can be adjusted according to the ratio of the initial-epoch losses.
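The text only states that b can be adjusted according to the ratio of the initial-epoch losses. One simple reading is to pick b so that both weighted terms contribute equally at the first epoch: $b\,L^{0}_{match} = (1-b)\,L^{0}_{seg}$, i.e. $b = L^{0}_{seg} / (L^{0}_{match} + L^{0}_{seg})$. This rule is our assumption rather than the authors' rule. The function names and the example losses below are hypothetical.

```python
def weight_from_initial_losses(match_loss_0, seg_loss_0):
    """Choose b so that b*L_match and (1-b)*L_seg are equal at the first epoch (assumed rule)."""
    if match_loss_0 <= 0 or seg_loss_0 <= 0:
        raise ValueError("initial losses must be positive")
    return seg_loss_0 / (match_loss_0 + seg_loss_0)

def total_loss(match_loss, seg_loss, b):
    """L = b*L_match + (1-b)*L_seg: b = 0 keeps only segmentation, b = 1 only matching."""
    return b * match_loss + (1.0 - b) * seg_loss

# Hypothetical first-epoch losses: a small matching loss and a larger segmentation loss.
b = weight_from_initial_losses(0.2, 0.8)
print(b)   # 0.8
```

Under this rule, the loss that starts smaller receives the larger weight, so neither task dominates the early gradients. The value it gives is only a starting point to be refined with a sweep like the one in Table 2.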

7. Conclusions

In this paper, we propose using SAR images and road vector images for the matching task, construct the SAR-VEC dataset, and propose a Siamese U-Net dual-task supervised network for this task. We add the segmentation loss to the matching task and use a weight-sharing U-Net to extract the road information common to SAR and vector images. The weighted sum of the segmentation loss and the matching loss serves as the network loss. This joint supervision improves the feature extraction capability of the network and thus the matching accuracy.

Compared with NCC, MatchNet, Unet++, and Siam-U, SUDS better suppresses interference in SAR images, distinguishes between similar roads, and obtains more accurate and more robust matching results. Experiments show that SUDS outperforms the other two U-Net-based methods (Unet++ and Siam-U) in root-mean-square error and correct matching rate. Its correct matching rate is 80.2% within 5 pixels and 91.0% within 10 pixels of matching error.

By exploring the value of the weighting factor b, we find that dual-task loss supervision benefits not only the matching task but also the segmentation task. The best matching results are obtained at an intermediate value of b. For different tasks and loss functions, b can be adjusted according to the ratio of the initial-epoch losses. This suggests a direction for future research: jointly supervising different tasks that extract similar features can effectively improve accuracy.

The proposed SUDS approach requires further refinement to cope with more complex scenarios. Matching becomes more challenging when roads in SAR images are occluded or deformed, and the matching algorithm can subsequently be optimized for such tasks. In addition, in this paper we render vector data into vector images before matching. A matching network for heterogeneous data that directly matches vector data with raster images may achieve better results.