HybriDet: A Hybrid Neural Network Combining CNN and Transformer for Wildfire Detection in Remote Sensing Imagery

Highlights

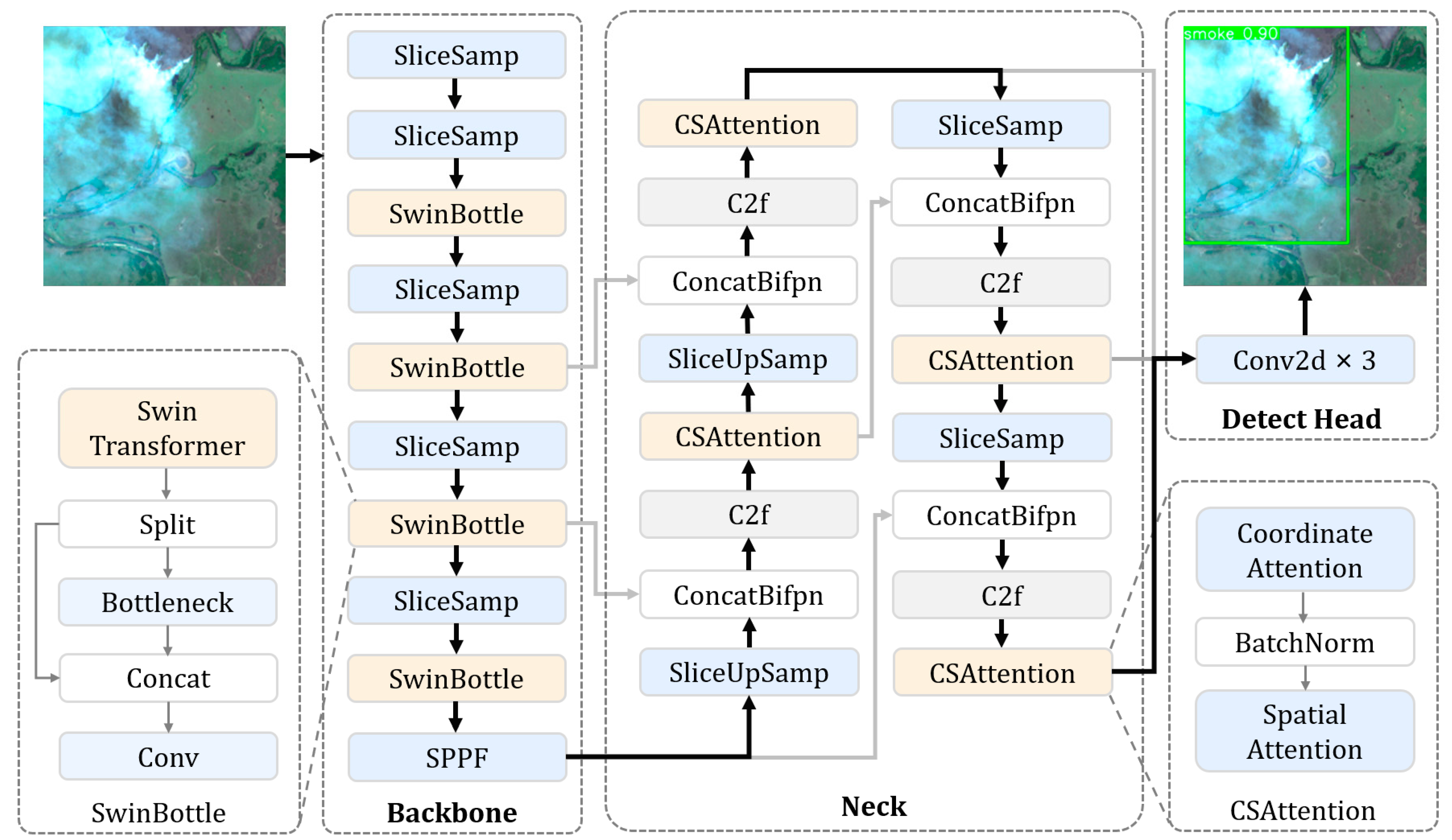

- A novel hybrid neural network architecture named HybriDet is proposed, which effectively integrates the local feature extraction capability of CNNs and the global contextual modeling strength of Transformers. The innovative SwinBottle module and Coordinate-Spatial (CS) dual attention mechanism significantly improve the detection accuracy for wildfires and smoke in complex remote sensing imagery.

- HybriDet achieves a superior balance between accuracy and efficiency. After structured pruning, the lightweight model contains only 6.45 M parameters and outperforms YOLOv8 by 6.4 percentage points in mAP50 on the FASDD-RS test set (68.1 vs. 61.7), while maintaining real-time inference speed suitable for edge device deployment.

- HybriDet provides an efficient and reliable fire detection solution for resource-constrained edge computing environments (e.g., satellites, UAVs). Model compression and optimization techniques enable the practical deployment of high-performance deep learning models on low-power devices, directly contributing to early wildfire warning and emergency response.

- The proposed method demonstrates strong generalization capabilities and broad application prospects. Its superior performance across multiple public datasets (FASDD-UAV, FASDD-RS, VOC) indicates its effectiveness in handling highly heterogeneous remote sensing imagery, providing crucial technical support for intelligent remote sensing monitoring in ecological conservation and socioeconomic security.

Abstract

1. Introduction

- We combined a CNN and a Transformer to design a new wildfire detection model that uses the windowed attention of Swin Transformer to facilitate information exchange between image contexts. In addition, incorporating bottleneck residual convolution helps address the deficiency in global perception at a lower parameter cost, effectively enhancing fire detection accuracy.

- We designed a dual attention mechanism called Coordinate-Spatial (CS) attention, which integrates Coordinate Attention and Spatial Attention to enhance feature discrimination. It captures long-range channel dependencies through direction-aware modeling while emphasizing salient spatial regions, enabling comprehensive feature understanding for irregular flame and smoke objects.

- Our comprehensive experiments on the FASDD-UAV, FASDD-RS, and Pascal Visual Object Classes (VOC) datasets demonstrate that HybriDet achieves superior detection performance compared with advanced models while maintaining a similar level of model complexity. Additionally, ablation studies confirm the effectiveness of each proposed module, and edge deployment experiments validate the model’s real-time inference capability on embedded devices.
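The windowed attention mentioned in the first contribution can be illustrated with a minimal sketch. It only shows how a token grid is split into non-overlapping windows and how many query-key pairs result, compared with global attention. The function names (`window_partition`, `attention_pairs`) and the 56 x 56 grid with 7 x 7 windows are illustrative assumptions, not values taken from HybriDet, and the shifted-window step that Swin Transformer uses to pass information across window borders is omitted.

```python
from typing import List, Tuple


def window_partition(height: int, width: int, window: int) -> List[List[Tuple[int, int]]]:
    """Split an H x W token grid into non-overlapping window x window groups.

    Each group lists the (row, col) positions that attend to each other;
    tokens in different groups do not interact in this step.
    """
    if height % window or width % window:
        raise ValueError("height and width must be multiples of the window size")
    groups = []
    for top in range(0, height, window):
        for left in range(0, width, window):
            groups.append([
                (r, c)
                for r in range(top, top + window)
                for c in range(left, left + window)
            ])
    return groups


def attention_pairs(height: int, width: int, window: int) -> Tuple[int, int]:
    """Return (global_pairs, windowed_pairs) of query-key token pairs."""
    tokens = height * width
    global_pairs = tokens * tokens
    windowed_pairs = sum(len(g) ** 2 for g in window_partition(height, width, window))
    return global_pairs, windowed_pairs


# Hypothetical sizes chosen only for illustration.
global_pairs, windowed_pairs = attention_pairs(56, 56, 7)
print(global_pairs, windowed_pairs, global_pairs // windowed_pairs)
```

For these sizes the pair count drops from 9,834,496 to 153,664, a factor of 64, which is why window-restricted attention scales linearly rather than quadratically with the number of tokens.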

2. Related Works

2.1. Fire Detection Methods Based on Deep Learning

2.2. Model Pruning

3. Methodology

3.1. Overall Architecture

3.2. SwinBottle Component

3.3. Coordinate-Spatial (CS) Attention Mechanism

4. Experiment

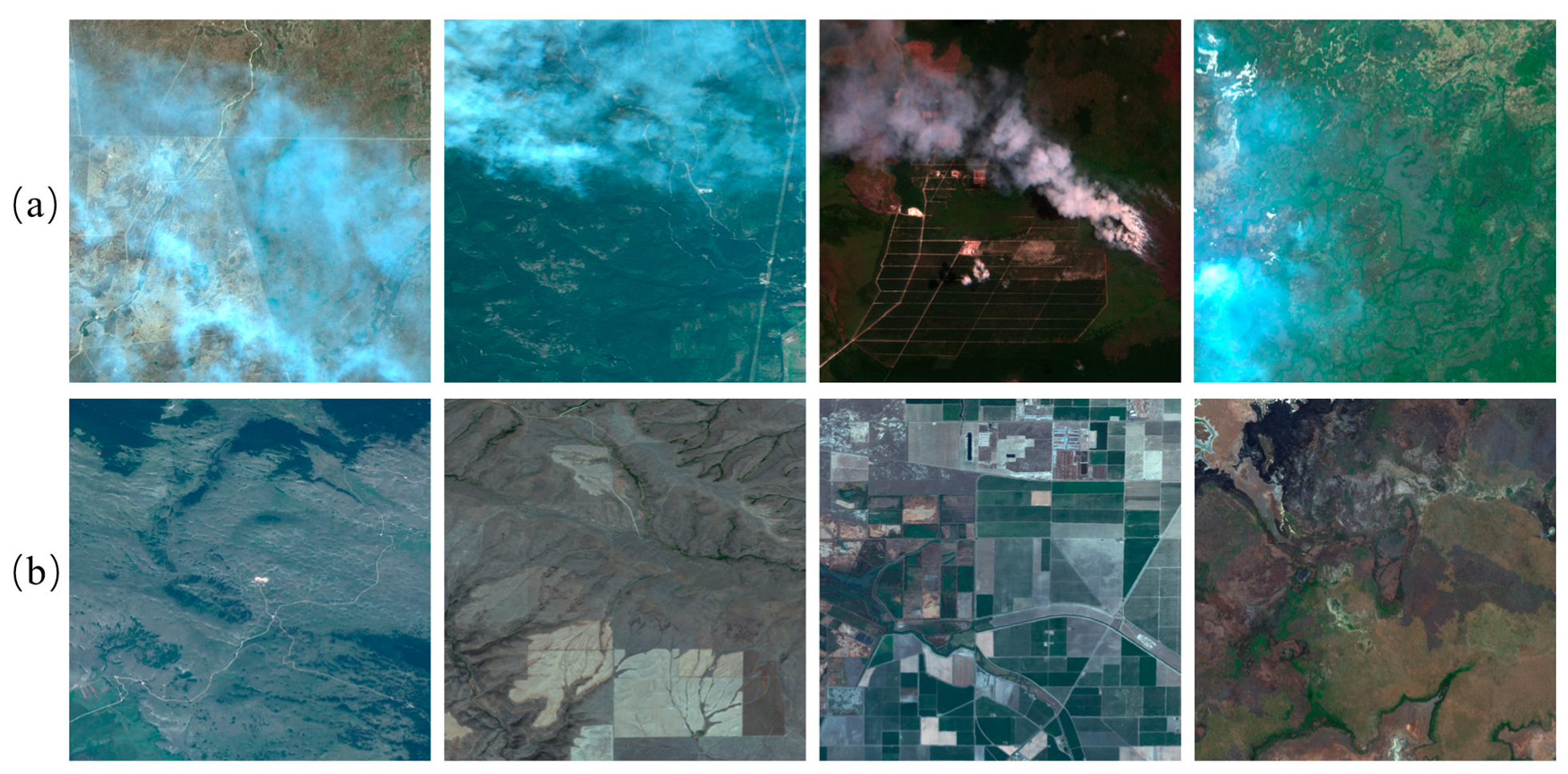

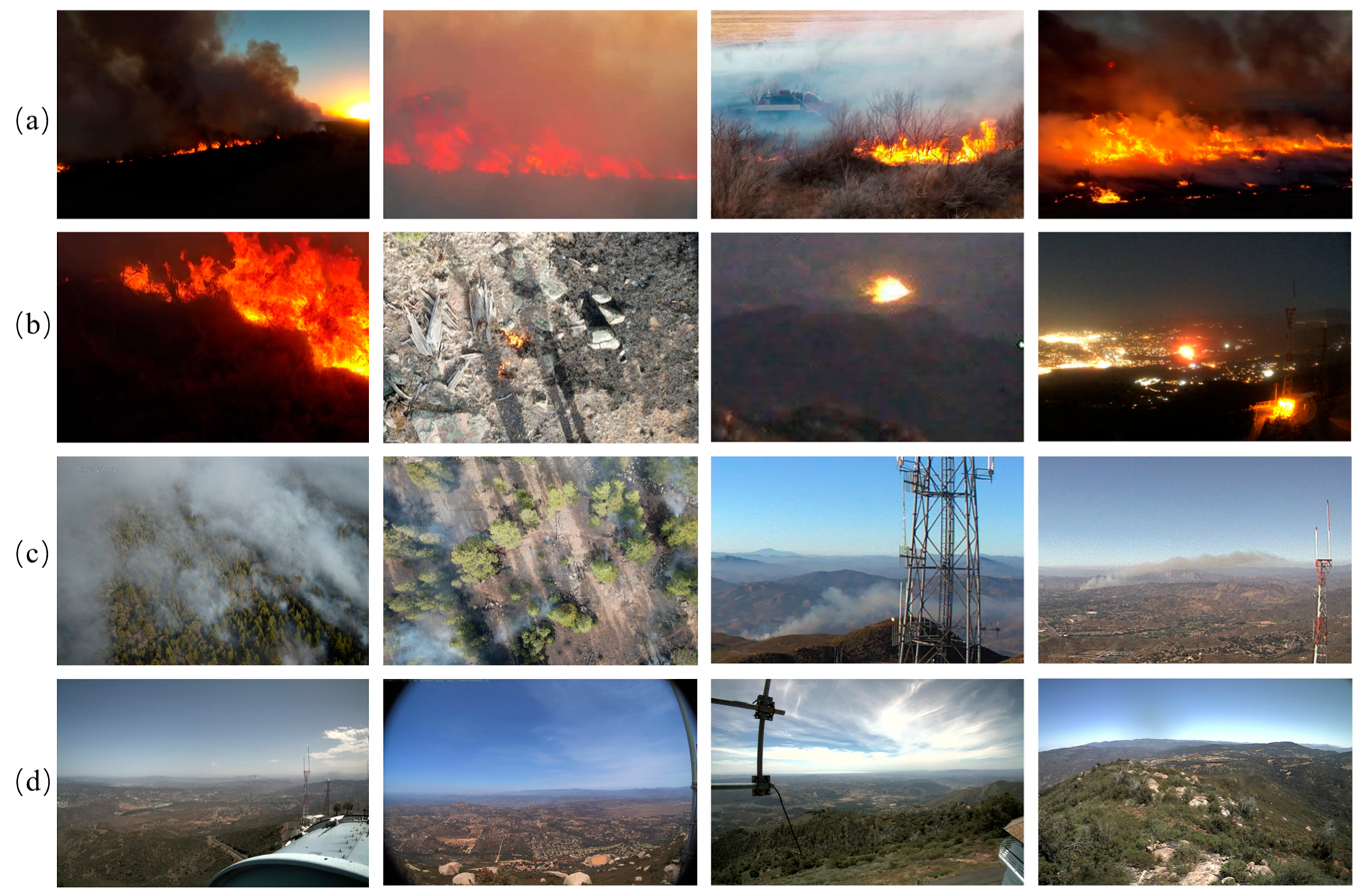

4.1. Dataset

4.2. Experimental Settings

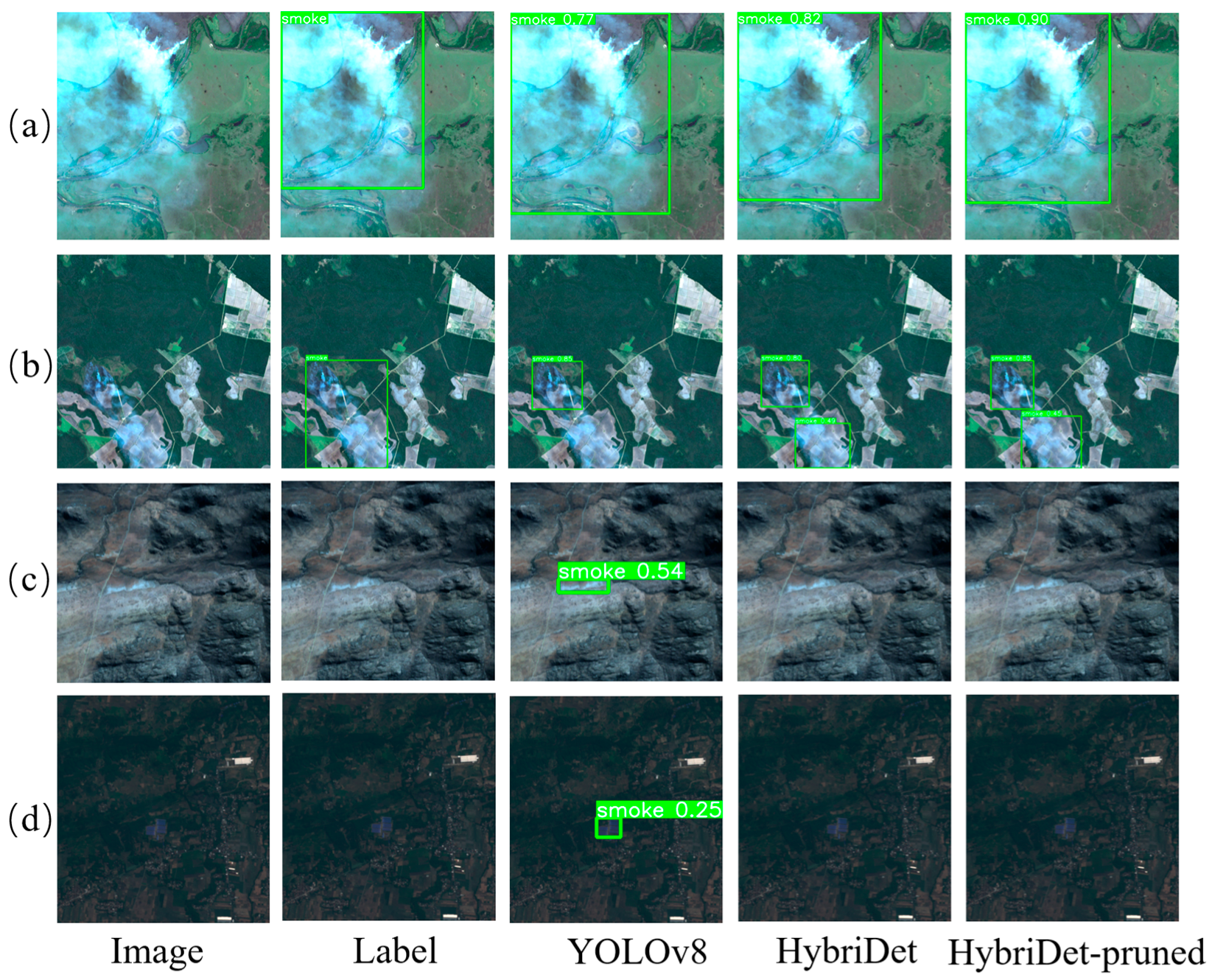

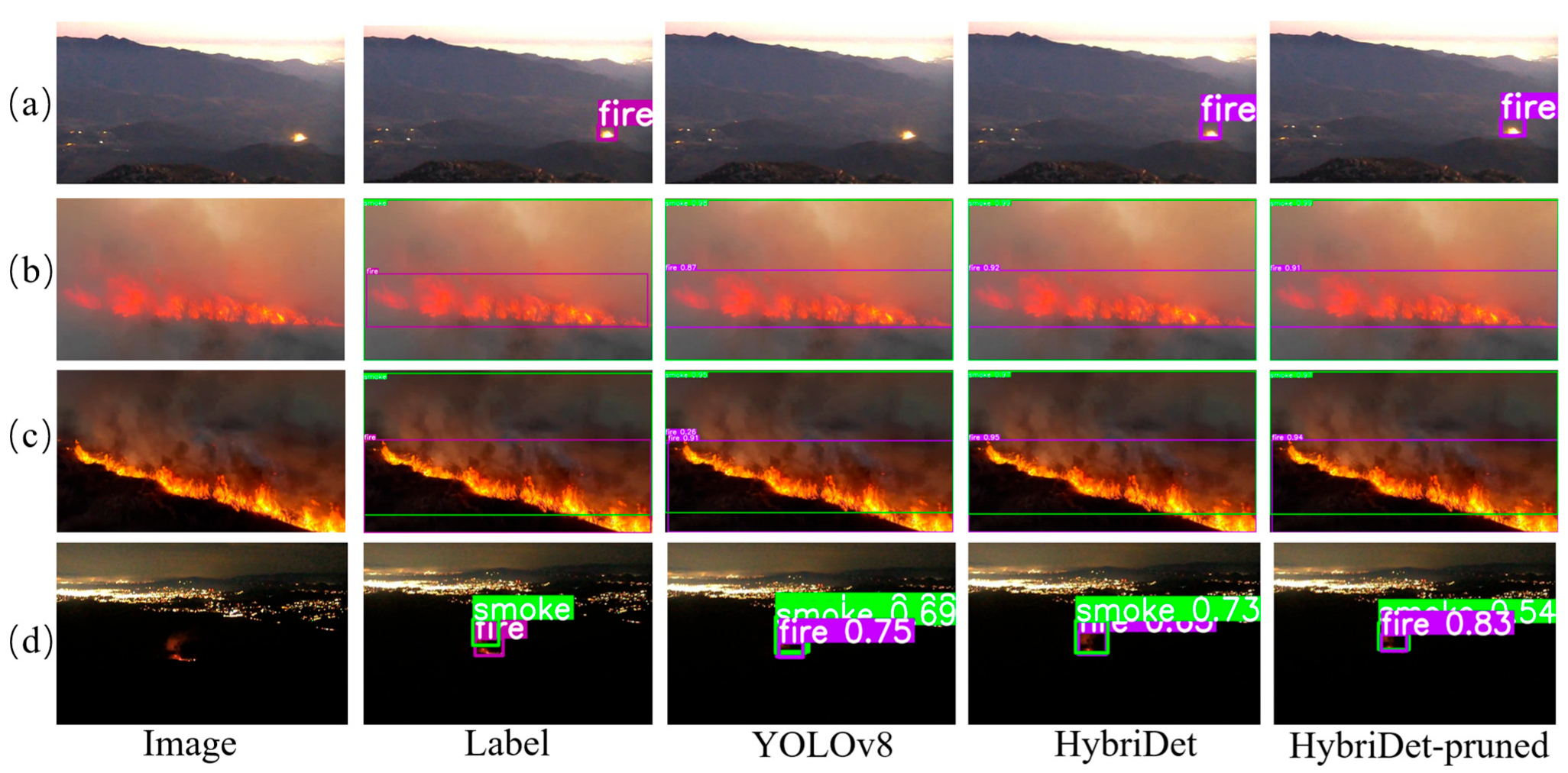

4.3. Comparative Experiment

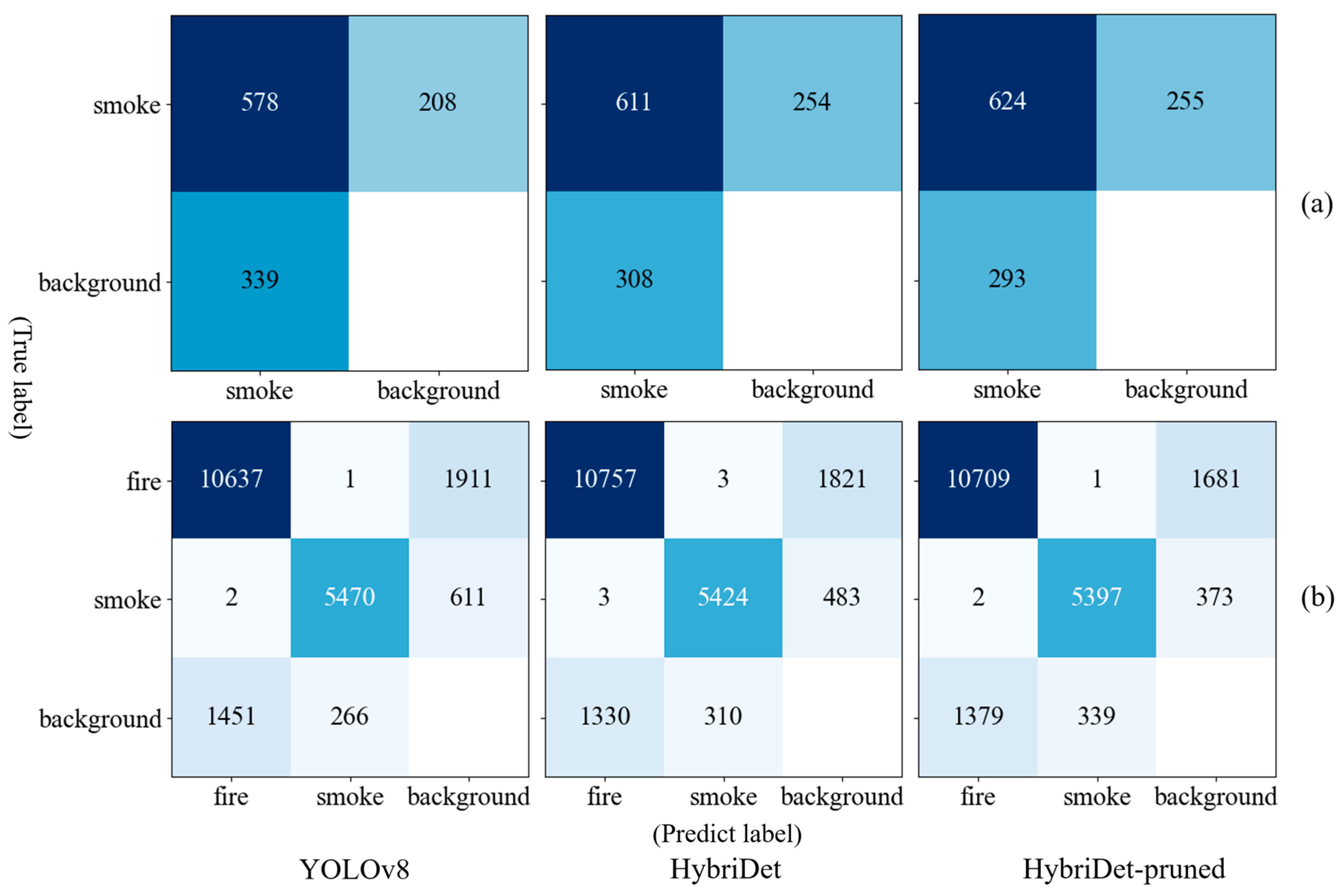

4.4. Performance Evaluation

4.5. Edge Deployment Optimization

4.6. Ablation Study

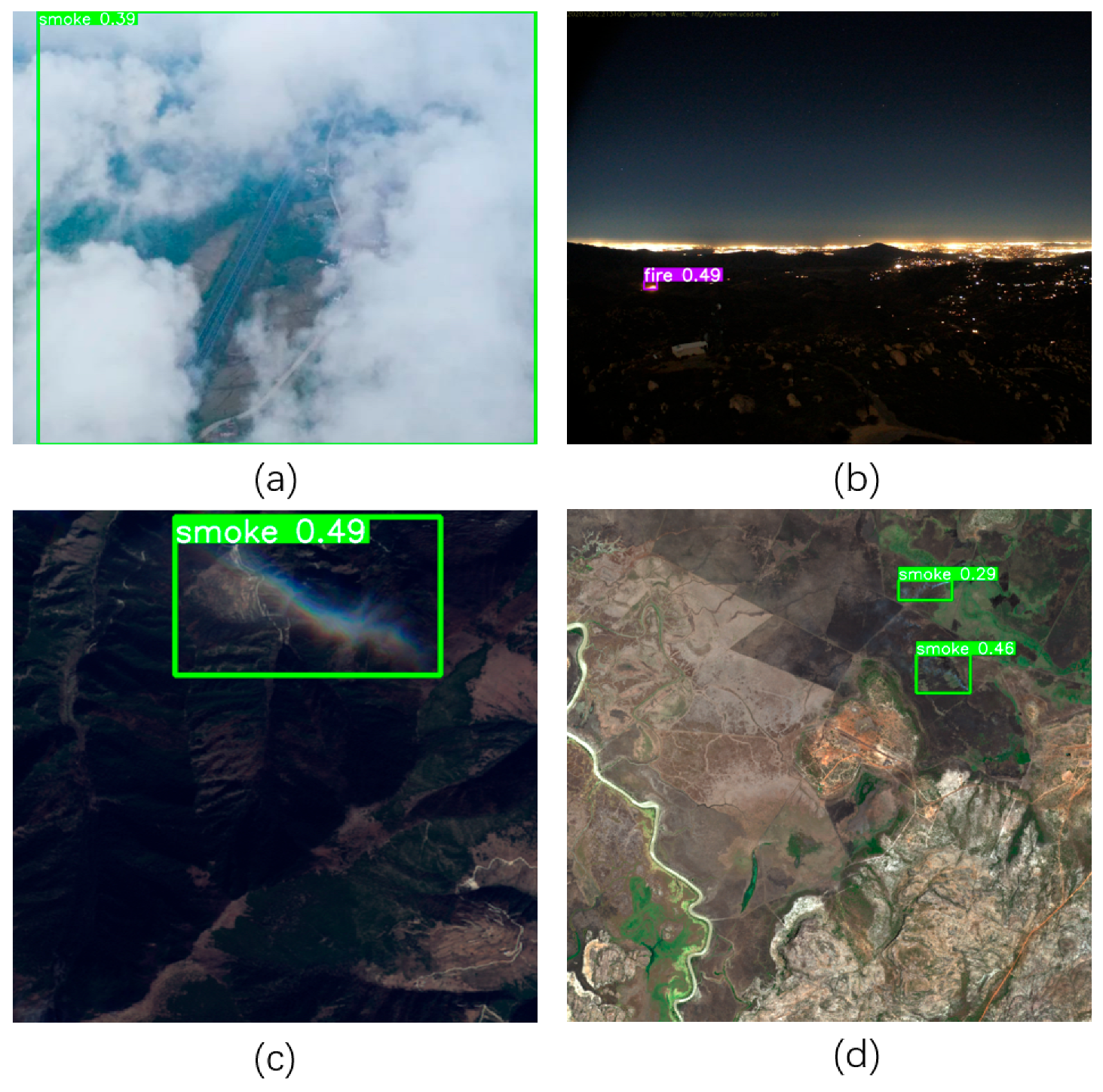

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Layer | Conv: Modules | Conv: Params | SliceSamp: Modules | SliceSamp: Params | C2f: Modules | C2f: Params | SwinBottle: Modules | SwinBottle: Params |
|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 464 | 1 | 356 | 1 | 7360 | 1 | 19,426 |
| 2 | 1 | 4672 | 1 | 2816 | 2 | 49,664 | 2 | 147,208 |
| 3 | 1 | 18,560 | 1 | 9728 | 2 | 197,632 | 2 | 581,136 |
| 4 | 1 | 73,984 | 1 | 35,840 | 1 | 460,288 | 1 | 1,187,600 |
| 5 | 1 | 295,424 | 1 | 137,216 | - | - | - | - |
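The Conv Module parameter counts in this table can be reproduced with a short calculation. The sketch below is a sanity check under stated assumptions, not the authors' code: it assumes each Conv module is a bias-free 3 x 3 convolution followed by BatchNorm, and that the channel widths run 3, 16, 32, 64, 128, 256. Neither assumption is stated in the table; they were chosen because they match the reported numbers. The helper name `conv_bn_params` is hypothetical.

```python
def conv_bn_params(c_in: int, c_out: int, kernel: int = 3) -> int:
    """Parameters of a bias-free k x k convolution followed by BatchNorm.

    The convolution holds kernel*kernel*c_in*c_out weights; BatchNorm adds
    one learnable scale and one learnable shift per output channel.
    """
    return kernel * kernel * c_in * c_out + 2 * c_out


# Assumed channel widths (not given in the table): RGB input, then 16..256.
channels = [3, 16, 32, 64, 128, 256]
# Conv Module "Params" column, layers 1 to 5.
reported = [464, 4672, 18560, 73984, 295424]

computed = [conv_bn_params(a, b) for a, b in zip(channels, channels[1:])]
for layer, (got, want) in enumerate(zip(computed, reported), start=1):
    print(f"layer {layer}: computed {got}, reported {want}")
```

Under these assumptions all five layers match the table, so the SliceSamp column can be read as the parameter cost of replacing the same downsampling step.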

| Dataset | Images | Train | Val | Test | Fire | Smoke | Both | Neither |
|---|---|---|---|---|---|---|---|---|
| FASDD-UAV | 25,097 | 12,551 | 8365 | 4181 | 210 | 5080 | 7821 | 11,986 |
| FASDD-RS | 2223 | 1112 | 741 | 370 | - | 1335 | - | 888 |

| Model | Dataset | Val Precision | Val Recall | Val mAP50 | Test Precision | Test Recall | Test mAP50 |
|---|---|---|---|---|---|---|---|
| YOLOv7 | FASDD-RS | 66.5 | 63.8 | 66.6 | 60.2 | 68.7 | 65.2 |
| YOLOv8 | FASDD-RS | 65.7 | 60.1 | 65.0 | 64.4 | 60.7 | 61.7 |
| YOLOv12 | FASDD-RS | 72.6 | 62.8 | 68.1 | 71.2 | 63.9 | 65.6 |
| Swin Transformer | FASDD-RS | 26.3 | 87.2 | 68.6 | 29.4 | 87.5 | 69.9 |
| RT-DETR | FASDD-RS | 69.4 | 61.1 | 60.8 | 68.3 | 60.2 | 60.3 |
| HybriDet | FASDD-RS | 72.3 | 63.7 | 69.2 | 72.2 | 62.6 | 66.6 |
| YOLOv8 | VOC | 77.1 | 68.1 | 75.5 | - | - | - |
| HybriDet | VOC | 79.7 | 71.6 | 79.1 | - | - | - |

| Dataset | Model | Size | Val Precision | Val Recall | Val mAP50 | Test Precision | Test Recall | Test mAP50 |
|---|---|---|---|---|---|---|---|---|
| FASDD-RS | YOLOv8 | 5.98 M | 65.7 | 60.1 | 65.0 | 64.4 | 60.7 | 61.7 |
| FASDD-RS | HybriDet (original) | 8.21 M | 72.3 | 63.7 | 69.2 (+4.2) | 72.2 | 62.6 | 66.6 (+4.9) |
| FASDD-RS | HybriDet (pruned) | 6.45 M | 66.1 | 65.1 | 66.4 (+1.4) | 72.1 | 59.4 | 68.1 (+6.4) |
| FASDD-UAV | YOLOv8 | 5.99 M | 88.8 | 87.9 | 92.2 | 89.4 | 87.4 | 92.3 |
| FASDD-UAV | HybriDet (original) | 8.31 M | 90.3 | 88.3 | 92.6 (+0.4) | 89.8 | 87.9 | 92.3 |
| FASDD-UAV | HybriDet (pruned) | 6.26 M | 90.4 | 88.0 | 92.4 (+0.2) | 90.3 | 88.0 | 92.5 (+0.2) |
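The effect of pruning in this table can be summarized with two derived quantities: the share of parameters removed and the test mAP50 gain of the pruned model over YOLOv8. The sketch below simply recomputes them from the table's own numbers; the dictionary layout and the function name `pruning_summary` are illustrative choices, and the percentages are derived here rather than reported in the source.

```python
from typing import Dict, Tuple

# (size in millions of parameters, test mAP50 in %) copied from the table.
RESULTS: Dict[str, Dict[str, Tuple[float, float]]] = {
    "FASDD-RS": {
        "YOLOv8": (5.98, 61.7),
        "HybriDet (original)": (8.21, 66.6),
        "HybriDet (pruned)": (6.45, 68.1),
    },
    "FASDD-UAV": {
        "YOLOv8": (5.99, 92.3),
        "HybriDet (original)": (8.31, 92.3),
        "HybriDet (pruned)": (6.26, 92.5),
    },
}


def pruning_summary(dataset: str) -> Tuple[float, float]:
    """Return (parameter reduction in %, pruned-vs-YOLOv8 test mAP50 gain in points)."""
    rows = RESULTS[dataset]
    size_orig, _ = rows["HybriDet (original)"]
    size_pruned, map_pruned = rows["HybriDet (pruned)"]
    _, map_base = rows["YOLOv8"]
    reduction = 100.0 * (size_orig - size_pruned) / size_orig
    gain = map_pruned - map_base
    return round(reduction, 1), round(gain, 1)


for name in RESULTS:
    reduction, gain = pruning_summary(name)
    print(f"{name}: {reduction}% fewer parameters, {gain:+.1f} mAP50 points vs. YOLOv8")
```

On FASDD-RS this gives a 21.4% parameter reduction with a +6.4 point gain, and on FASDD-UAV a 24.7% reduction with a +0.2 point gain, matching the deltas shown in parentheses in the table.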

| Device | Metric | YOLOv8, FASDD-RS (RGB) | YOLOv8, FASDD-RS (SWIR) | HybriDet (Original), FASDD-RS (RGB) | HybriDet (Original), FASDD-RS (SWIR) | HybriDet (Pruned), FASDD-RS (RGB) | HybriDet (Pruned), FASDD-RS (SWIR) |
|---|---|---|---|---|---|---|---|
| - | Parameters (MB) | 3.07 | 3.09 | 4.14 | 4.17 | 3.23 | 3.24 |
| NVIDIA GeForce RTX 3090 | mAP50 (%) | 61.6 | 63.8 | 66.5 | 68.3 | 68.0 | 69.7 |
| NVIDIA GeForce RTX 3090 | Latency (ms) | 2.1 | 2.4 | 3.4 | 3.6 | 3.2 | 3.4 |
| NVIDIA GeForce RTX 3090 | Power consumption (W) | 320–350 | 320–350 | 320–350 | 320–350 | 320–350 | 320–350 |
| Raspberry Pi 4B | mAP50 (%) | 60.3 | 62.8 | 65.3 | 67.4 | 66.9 | 68.8 |
| Raspberry Pi 4B | Latency (ms) | 29.8 | 33.6 | 38.9 | 40.7 | 37.4 | 39.2 |
| Raspberry Pi 4B | Power consumption (W) | 7–10 | 7–10 | 7–10 | 7–10 | 7–10 | 7–10 |
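To relate the latency rows to the real-time claim, latency can be converted into an approximate frame rate. This is a rough sketch that assumes the reported latency is the per-image inference time at batch size 1 and ignores pre- and post-processing, which the table does not specify. The names `LATENCY_MS` and `frames_per_second` are hypothetical; the values are the FASDD-RS (RGB) latencies from the table.

```python
from typing import Dict

# FASDD-RS (RGB) latencies in milliseconds, copied from the table.
LATENCY_MS: Dict[str, Dict[str, float]] = {
    "NVIDIA GeForce RTX 3090": {
        "YOLOv8": 2.1,
        "HybriDet (Original)": 3.4,
        "HybriDet (Pruned)": 3.2,
    },
    "Raspberry Pi 4B": {
        "YOLOv8": 29.8,
        "HybriDet (Original)": 38.9,
        "HybriDet (Pruned)": 37.4,
    },
}


def frames_per_second(latency_ms: float) -> float:
    """Convert a per-image latency in milliseconds to frames per second."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


for device, models in LATENCY_MS.items():
    for model, latency in models.items():
        print(f"{device} | {model}: {frames_per_second(latency):.1f} FPS")
```

Under this assumption, the pruned model's 37.4 ms on the Raspberry Pi 4B corresponds to roughly 26.7 frames per second.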

| Modules enabled (of SliceSamp, CS Attention, SwinBottle, ConcatBifpn) | Val Precision | Val Recall | Val mAP50 | Test Precision | Test Recall | Test mAP50 |
|---|---|---|---|---|---|---|
| 0 | 65.7 | 60.1 | 65.0 | 64.4 | 60.7 | 61.7 |
| 1 | 63.6 | 63.2 | 63.4 | 69.4 | 61.5 | 65.3 |
| 2 | 65.6 | 61.5 | 64.2 | 66.3 | 59.0 | 64.6 |
| 2 | 67.5 | 60.4 | 65.1 | 67.8 | 64.5 | 66.3 |
| 3 | 67.9 | 62.5 | 68.3 | 68.1 | 62.4 | 65.9 |
| 4 | 72.3 | 63.7 | 69.2 | 72.2 | 62.6 | 66.6 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dong, F.; Wang, M. HybriDet: A Hybrid Neural Network Combining CNN and Transformer for Wildfire Detection in Remote Sensing Imagery. Remote Sens. 2025, 17, 3497. https://doi.org/10.3390/rs17203497
