FPGA-Based Real-Time Deblurring and Enhancement for UAV-Captured Infrared Imagery

Abstract

Highlights

- A novel deep learning network is proposed for simultaneous blind deblurring and enhancement of UAV infrared images, integrating feature extraction, fusion, and simulated diffusion modules, along with a region-specific pixel loss and progressive training strategy.

- The method achieves significant performance improvements, including a 10.7% increase in PSNR, 25.6% reduction in edge inference time, and 18.4% decrease in parameter count.

- The method offers an efficient, lightweight solution for real-time infrared image restoration on mobile platforms such as UAVs.

- It improves both image quality and inference speed, providing a reliable foundation for downstream high-level vision tasks.

1. Introduction

- A novel network architecture is proposed for single-image blind deblurring and enhancement of UAV IR imagery, addressing the unique challenges posed by uncooled sensors and platform motion.

- A region-specific pixel loss is proposed together with a progressive training strategy, aiming to improve local feature preservation and enhance the model’s overall performance.

- Extensive evaluations on public IR datasets confirm that the method not only achieves superior performance to existing approaches but also does so with fewer parameters and faster inference speeds.
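
The region-specific pixel loss is named in the contributions above but not defined in this text. As a minimal sketch of one way such a loss could be formed, assume a per-pixel L1 error weighted by a binary region mask. The mask, the weights `w_region` and `w_background`, and the function name are illustrative assumptions, not the authors' formulation.

```python
from typing import Sequence

Image = Sequence[Sequence[float]]


def region_weighted_l1(pred: Image, target: Image, mask: Image,
                       w_region: float = 2.0,
                       w_background: float = 1.0) -> float:
    """Weighted mean absolute error; a truthy mask value marks the emphasized region.

    Hypothetical helper: the weighting scheme is an assumption for illustration.
    """
    total = 0.0
    weight_sum = 0.0
    for p_row, t_row, m_row in zip(pred, target, mask):
        for p, t, m in zip(p_row, t_row, m_row):
            w = w_region if m else w_background
            total += w * abs(p - t)
            weight_sum += w
    # Normalize by the total weight so the loss stays on the pixel-value scale.
    return total / weight_sum if weight_sum else 0.0


# Toy 2x2 example: only the top-left pixel belongs to the emphasized region.
pred = [[10.0, 20.0], [30.0, 40.0]]
target = [[12.0, 20.0], [30.0, 44.0]]
mask = [[1, 0], [0, 0]]
loss = region_weighted_l1(pred, target, mask)
```

With these toy values, the error in the masked pixel counts twice as much as the same error would in the background, which is the general idea behind emphasizing selected regions.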

2. Related Work

2.1. Infrared Image Enhancement

2.2. Deep Learning-Based Image Restoration

2.3. Infrared Image Deblurring

2.4. UAV Image Rapid Processing Technologies and Applications

2.5. Summary

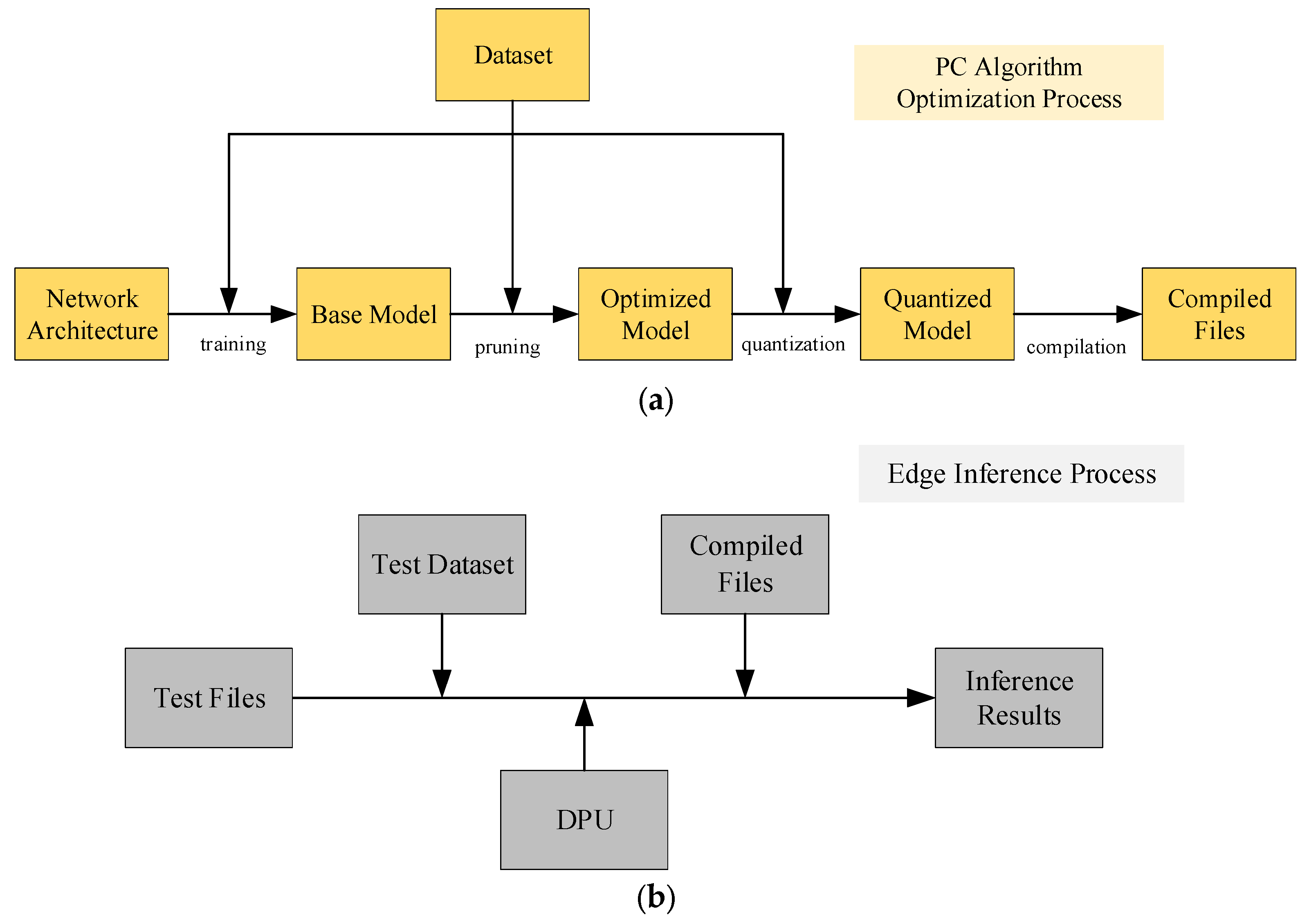

3. Method

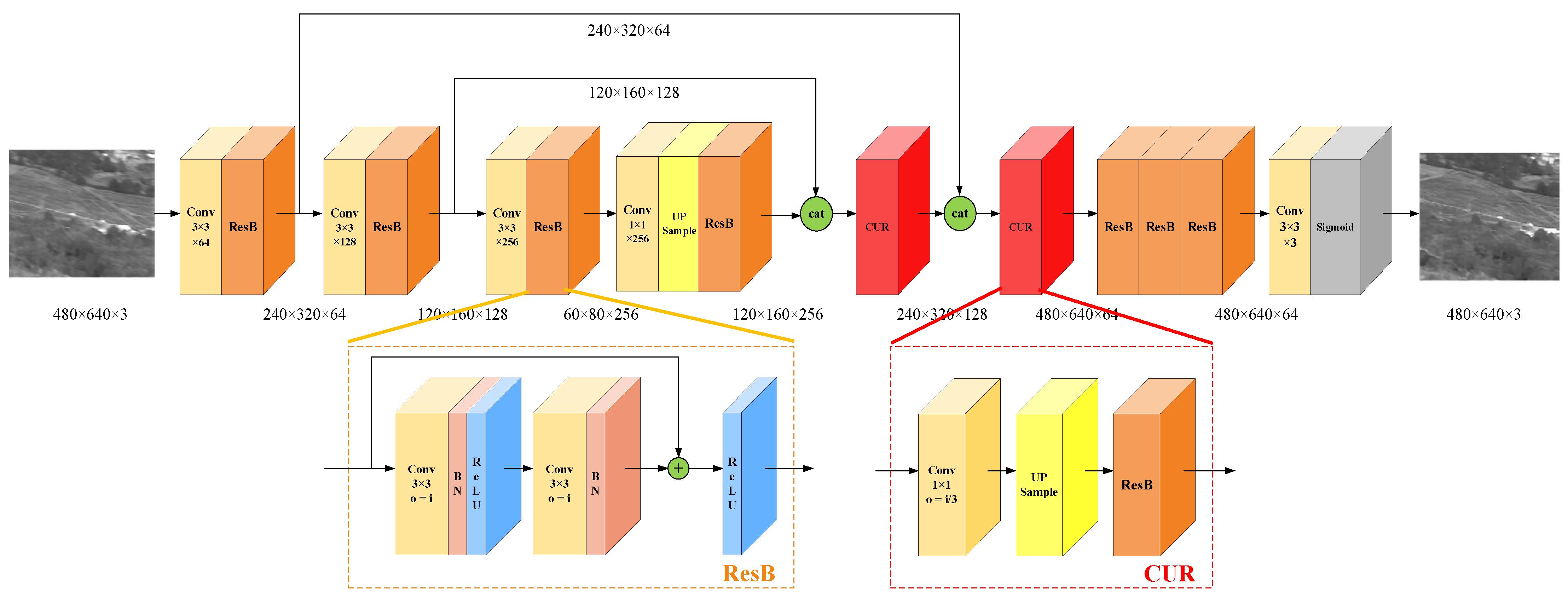

3.1. Network Architecture

3.2. Dataset Construction

3.3. Loss Function and Training Strategy

3.3.1. Regional Pixel Loss

3.3.2. Deep Feature Loss

3.3.3. Gradient Mixed Loss

3.3.4. Progressive Training Strategy

4. Experiments

4.1. Experiment Environment

4.2. Assessment Indicators

4.3. Ablation Experiments

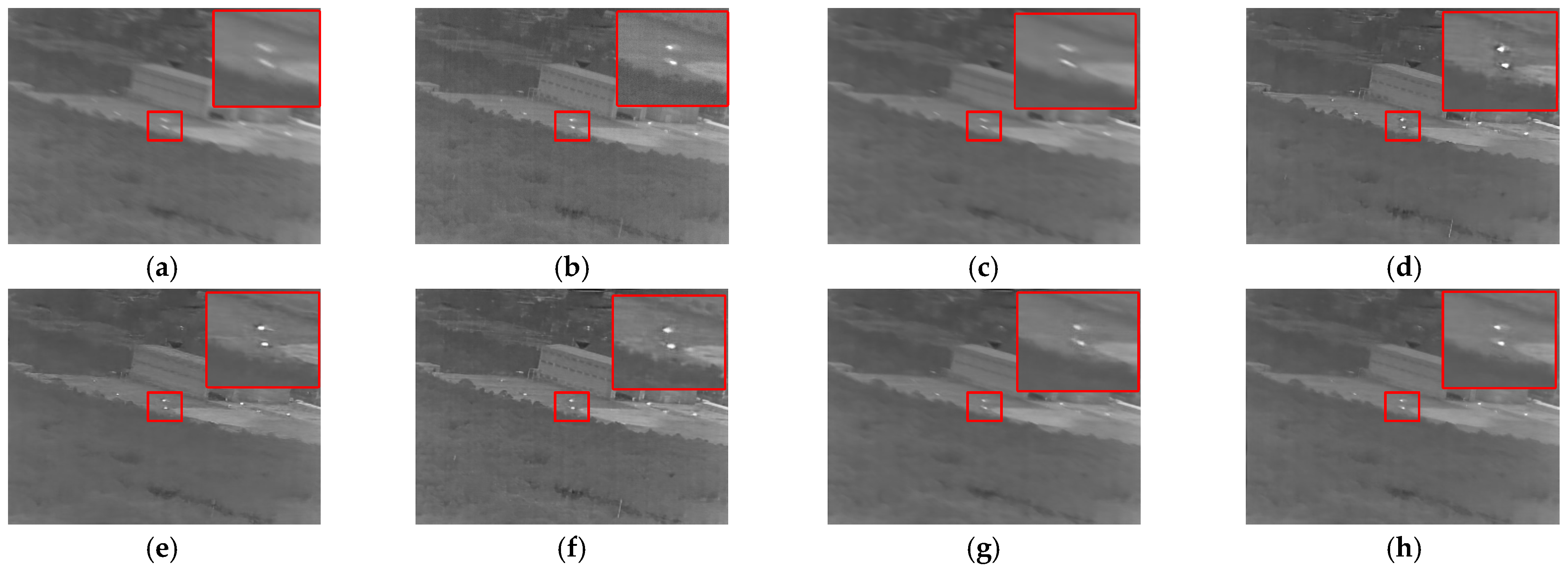

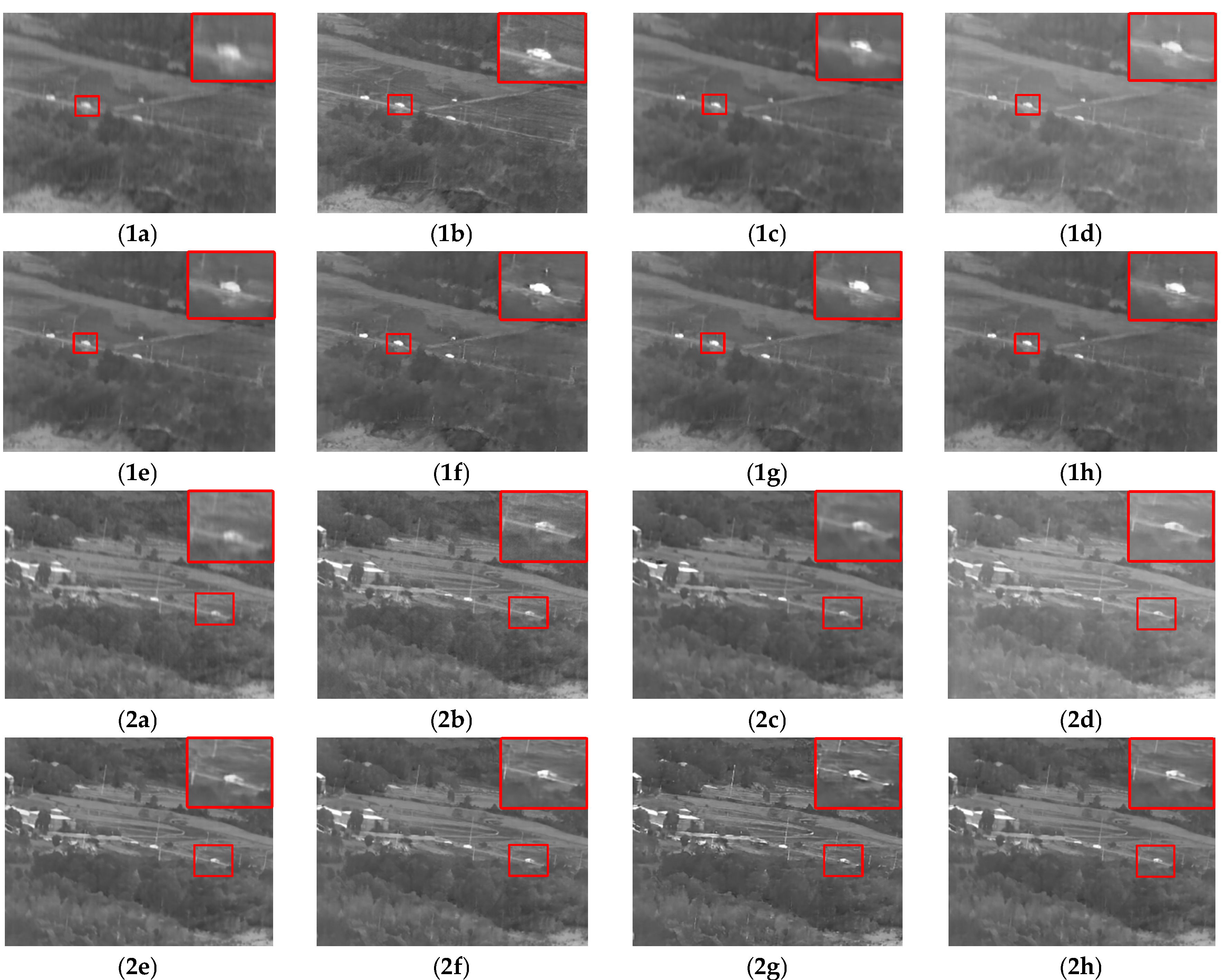

4.4. Comparison with State-of-the-Art Methods

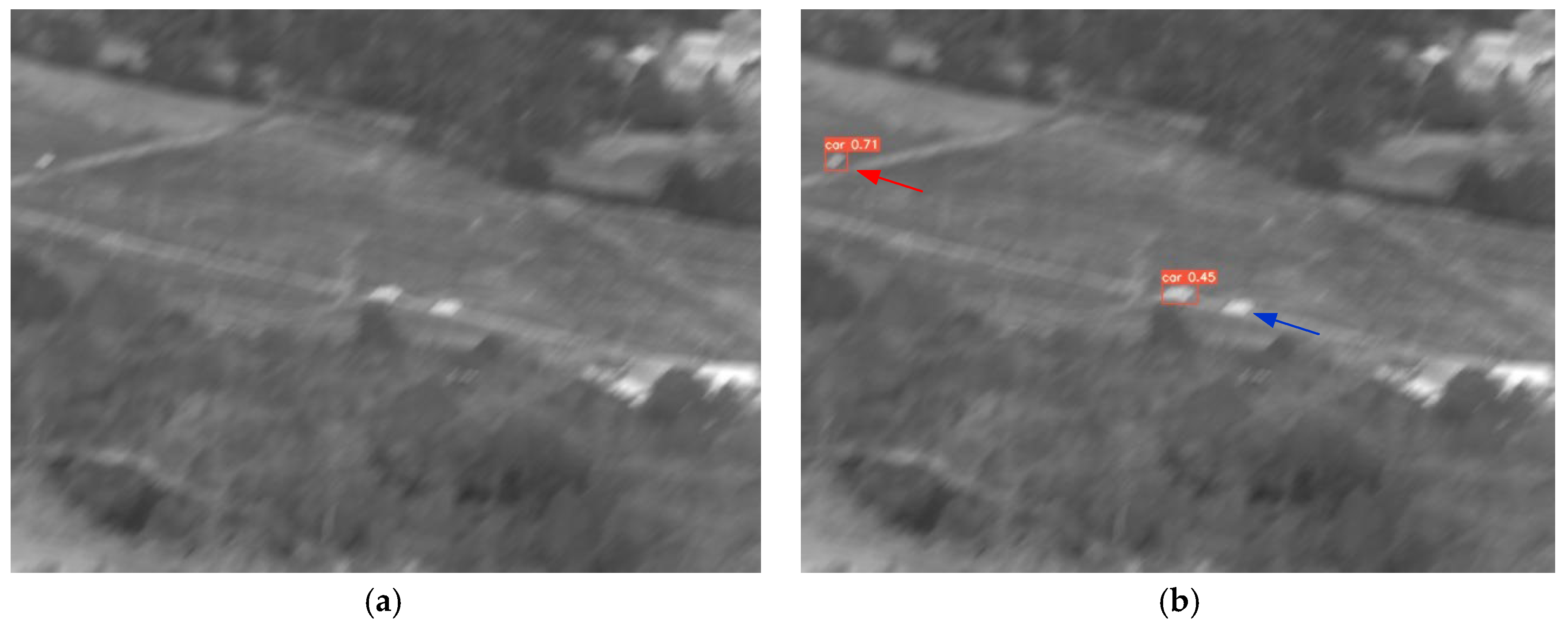

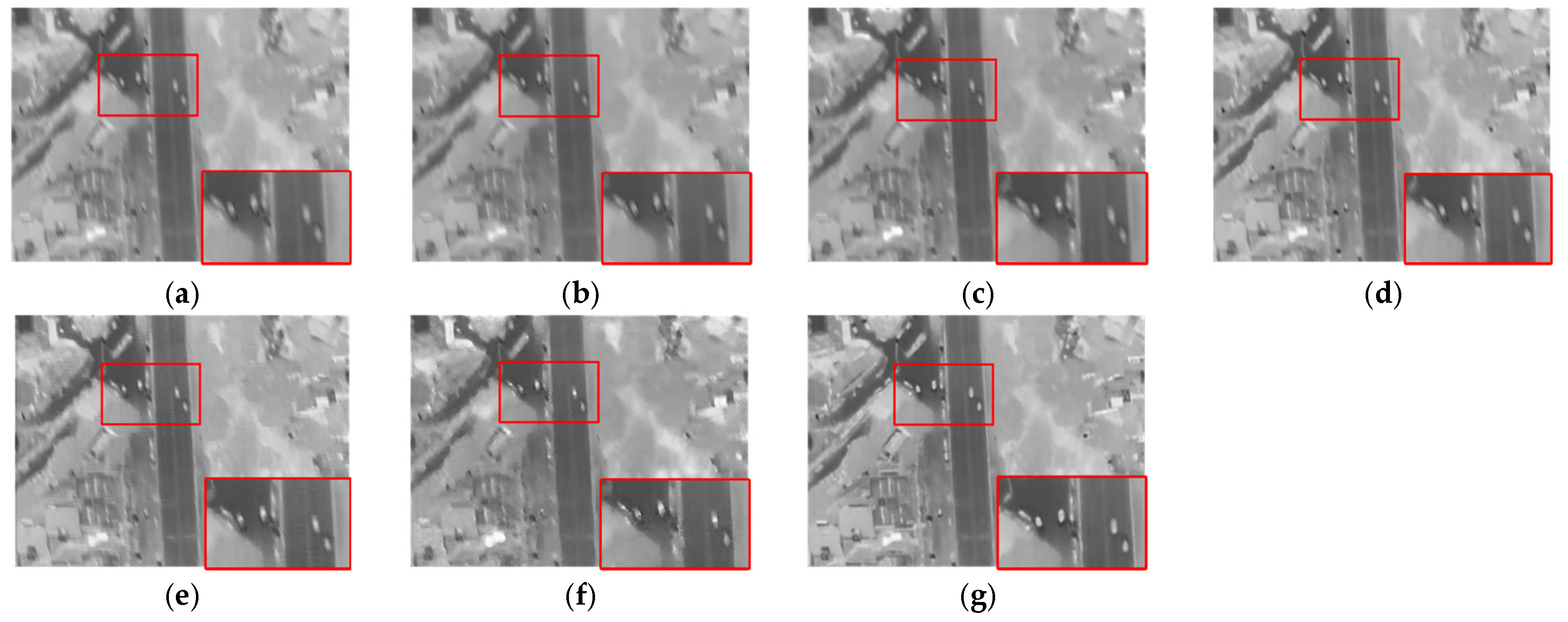

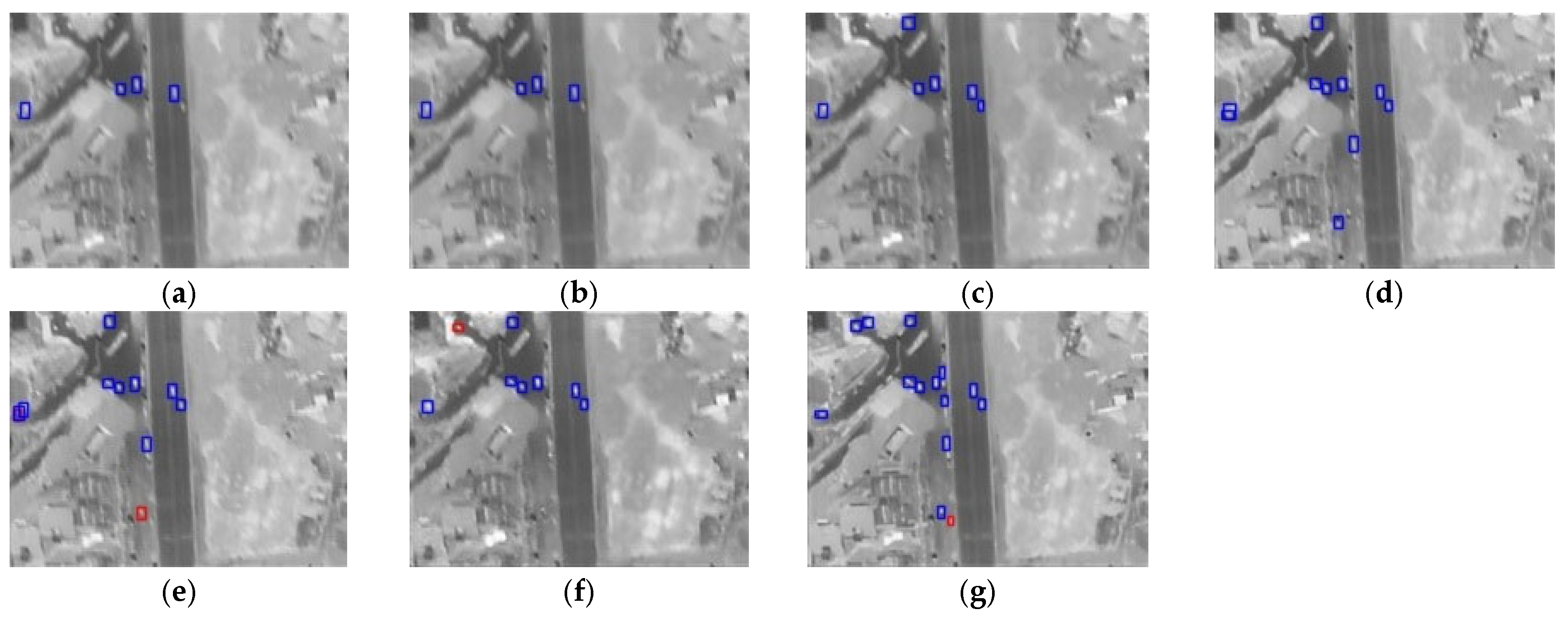

4.5. Real-World Scenario Evaluation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Shu, S.; Fu, Y.; Liu, S.; Zhang, Y.; Zhang, T.; Wu, T.; Gao, X. A correction method for radial distortion and nonlinear response of infrared cameras. Rev. Sci. Instrum. 2024, 95, 034901.

2. Tsagkatakis, G.; Aidini, A.; Fotiadou, K.; Giannopoulos, M.; Pentari, A.; Tsakalides, P. Survey of deep-learning approaches for remote sensing observation enhancement. Sensors 2019, 19, 3929.

3. Zhang, Z.; Zhu, L. A review on unmanned aerial vehicle remote sensing: Platforms, sensors, data processing methods, and applications. Drones 2023, 7, 398.

4. Dhal, K.G.; Das, A.; Ray, S.; Gálvez, J.; Das, S. Histogram equalization variants as optimization problems: A review. Arch. Comput. Methods Eng. 2021, 28, 1471–1496.

5. Ma, S.; Yang, C.; Bao, S. Contrast enhancement method based on multi-scale retinex and adaptive gamma correction. J. Adv. Comput. Intell. Intell. Inform. 2022, 26, 875–883.

6. Ren, K.; Gao, Y.; Wan, M.; Gu, G.; Chen, Q. Infrared small target detection via region super resolution generative adversarial network. Appl. Intell. 2022, 52, 11725–11737.

7. Fan, S.; Liang, W.; Ding, D.; Yu, H. LACN: A lightweight attention-guided convnext network for low-light image enhancement. Eng. Appl. Artif. Intell. 2023, 117, 105632.

8. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015; Navab, N., Hornegger, J., Wells, W., Frangi, A., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2015; Volume 9351, pp. 234–241.

9. Kupyn, O.; Martyniuk, T.; Wu, J.; Wang, Z. DeblurGAN-v2: Deblurring (orders-of-magnitude) faster and better. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8878–8887.

10. Liu, J.; Chandrasiri, N.P. CA-ESRGAN: Super-resolution image synthesis using channel attention-based ESRGAN. IEEE Access 2024, 12, 25740–25748.

11. Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Van Gool, L.; Timofte, R. SwinIR: Image restoration using swin transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 1833–1844.

12. Fei, S.; Ye, T.; Wang, L.; Zhu, L. LucidFlux: Caption-free universal image restoration via a large-scale diffusion transformer. arXiv 2025, arXiv:2509.22414.

13. Zhang, H.; Zhang, X.; Cai, N.; Di, J.; Zhang, Y. Joint multi-dimensional dynamic attention and transformer for general image restoration. Comput. Struct. Biotechnol. J. 2025, 25, 102162.

14. Ye, Y.; Wang, T.; Fang, F.; Zhang, G. MSCSCformer: Multi-scale convolutional sparse coding-based transformer for pansharpening. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5405112.

15. Chobola, T.; Müller, G.; Dausmann, V.; Theileis, A.; Taucher, J.; Huisken, J.; Peng, T. LUCYD: A feature-driven Richardson-Lucy deconvolution network. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Vancouver, BC, Canada, 8–12 October 2023; Springer Nature: Cham, Switzerland, 2023; pp. 656–665.

16. Zhao, Y.; Fu, G.; Wang, H.; Zhang, S.; Yue, M. Infrared image deblurring based on generative adversarial networks. Int. J. Opt. 2021, 2021, 9946809.

17. Wu, W.B.; Pan, Y.; Su, N.; Wang, J.; Wu, S.; Xu, Z.; Yu, Y.; Liu, Y. Multi-scale network for single image deblurring based on ensemble learning module. Multimed. Tools Appl. 2025, 84, 9045–9064.

18. Xiao, X.; Qu, W.; Xia, G.S.; Xu, M.; Shao, Z.; Gong, J.; Li, D. A novel real-time matching and pose reconstruction method for low-overlap agricultural UAV images with repetitive textures. ISPRS J. Photogramm. Remote Sens. 2025, 226, 54–75.

19. Li, Y.; Chen, S.; Hwang, K.; Ji, X.; Lei, Z.; Zhu, Y.; Ye, F.; Liu, M. Spatio-temporal data fusion techniques for modeling digital twin City. Geo-Spat. Inf. Sci. 2024, 28, 541–564.

20. Xiao, X.; Guo, B.; Shi, Y.; Gong, W.; Li, J.; Zhang, C. Robust and rapid matching of oblique UAV images of urban area. In MIPPR 2013: Pattern Recognition and Computer Vision; SPIE: Bellingham, WA, USA, 2013; Volume 8919, pp. 223–230.

21. Zhao, M.; Ling, Q. PWStableNet: Learning pixel-wise warping maps for video stabilization. IEEE Trans. Image Process. 2020, 29, 3582–3595.

22. Ahmed, Z.; Tanim, S.A.; Prity, F.S.; Rahman, H.; Maisha, T.B.M. Improving biomedical image segmentation: An extensive analysis of U-Net for enhanced performance. In Proceedings of the International Conference on Emerging Trends in Information Technology and Engineering (ICETITE), Amaravati, India, 22–23 February 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

23. Wang, Z.; Cun, X.; Bao, J.; Zhou, W.; Liu, J.; Li, H. Uformer: A general u-shaped transformer for image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17683–17693.

24. Luo, X.; Qu, Y.; Xie, Y.; Zhang, Y.; Li, C.; Fu, Y. Lattice network for lightweight image restoration. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 4826–4842.

25. Qi, J.; Abera, D.E.; Fanose, M.N.; Wang, L.; Cheng, J. A deep learning and image enhancement based pipeline for infrared and visible image fusion. Neurocomputing 2024, 578, 127353.

26. Huang, W.; Xue, Y.; Hu, L.; Liuli, H. S-EEGNet: Electroencephalogram signal classification based on a separable convolution neural network with bilinear interpolation. IEEE Access 2020, 8, 131636–131646.

27. Pan, L.; Mo, C.; Li, J.; Wu, Z.; Liu, T.; Cheng, J. Design of lightweight infrared image enhancement network based on adversarial generation. In Proceedings of the International Academic Exchange Conference on Science and Technology Innovation (IAECST), Guangzhou, China, 9–11 December 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 995–998.

28. Yang, L.; Zhang, Z.; Song, Y.; Hong, S.; Xu, R.; Zhao, Y.; Zhang, W.; Cui, B.; Yang, M.-H. Diffusion models: A comprehensive survey of methods and applications. ACM Comput. Surv. 2023, 56, 1–39.

29. Wu, S.; Gao, X.; Wang, F.; Hu, X. Variation-guided condition generation for diffusion inversion in few-shot image classification. In Proceedings of the International Conference on New Trends in Computational Intelligence (NTCI), Qingdao, China, 3–5 November 2023; IEEE: Piscataway, NJ, USA, 2023; Volume 1, pp. 318–323.

30. Yi, S.; Li, L.; Liu, X.; Li, J.; Chen, L. HCTIRdeblur: A hybrid convolution-transformer network for single infrared image deblurring. Infrared Phys. Technol. 2023, 131, 104640.

31. Cao, S.; He, N.; Zhao, S.; Lu, K.; Zhou, X. Single image motion deblurring with reduced ringing effects using variational Bayesian estimation. Signal Process. 2018, 148, 260–271.

32. Li, M.; Nong, S.; Nie, T.; Han, C.; Huang, L.; Qu, L. A novel stripe noise removal model for infrared images. Sensors 2022, 22, 2971.

33. Cheng, J.H.; Pan, L.H.; Liu, T.; Cheng, J. Lightweight infrared image enhancement network based on adversarial generation. J. Signal Process. 2024, 40, 484–491.

34. Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

| Component | Configuration |
|---|---|
| GPU | NVIDIA GeForce RTX 3090 |
| CPU | Intel(R) Core™ i9-13900K |
| Operating system | Ubuntu 22.04 |
| Deep learning framework | PyTorch 2.1.1 + CUDA 12.1 |

| Enabled components (of Diffusion Module, Regional Pixel Loss, Progressive Training) | PSNR/dB | SSIM | RMSE | EME | SNRME |
|---|---|---|---|---|---|
| None | 31.35 | 0.8678 | 7.16 | 1.32 | 3.38 |
| One (√) | 34.38 | 0.8721 | 5.87 | 1.45 | 3.39 |
| Two (√ √) | 35.56 | 0.8715 | 4.33 | 1.53 | 3.42 |
| Two (√ √) | 36.44 | 0.8919 | 3.89 | 1.59 | 3.44 |
| Two (√ √) | 36.93 | 0.9006 | 3.68 | 1.64 | 3.47 |
| All three (√ √ √) | 36.95 | 0.9012 | 3.67 | 1.66 | 3.49 |

The flattened table does not preserve which component each √ belongs to in the one- and two-component rows.

| Model | Parameters | PSNR/dB | SSIM | RMSE |
|---|---|---|---|---|
| IREGAN [33] | 1.05 M | 31.87 | 0.8619 | 7.16 |
| PWStableNet [21] | 7.91 M | 30.89 | 0.8542 | 7.50 |
| Improved U-Net [22] | 7.29 M | 31.85 | 0.9065 | 6.89 |
| Uformer-T [23] | 5.23 M | 34.33 | 0.9144 | 6.78 |
| HCTIRdeblur [30] | 4.77 M | 33.38 | 0.9367 | 5.43 |
| Ours | 3.89 M | 36.95 | 0.9012 | 3.67 |
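
PSNR and RMSE in the table above are closely related pixel-error metrics. The sketch below shows their standard definitions for a single image pair, assuming 8-bit data with a peak value of 255; the peak value and the toy pixel values are assumptions. The averaging protocol behind the tabulated numbers is not stated here, so the table entries need not satisfy the single-image formula exactly.

```python
import math
from typing import Sequence


def rmse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Root-mean-square error between two equally sized pixel sequences."""
    if len(pred) != len(target) or not pred:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((p - t) ** 2 for p, t in zip(pred, target))
    return math.sqrt(squared / len(pred))


def psnr_from_rmse(rmse_value: float, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 20 * log10(peak / RMSE)."""
    if rmse_value == 0.0:
        return math.inf  # identical images
    return 20.0 * math.log10(peak / rmse_value)


# Toy flattened 2x2 patches (assumed 8-bit intensities).
reference = [52.0, 60.0, 61.0, 70.0]
restored = [50.0, 63.0, 61.0, 66.0]
err = rmse(restored, reference)
score = psnr_from_rmse(err)
```

Because PSNR is a logarithmic function of RMSE, halving the RMSE raises PSNR by about 6 dB regardless of the starting point.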

| Model | EME | SNRME | Time/ms | Power/W | mAP/% |
|---|---|---|---|---|---|
| IREGAN [33] | 1.25 | 3.45 | 5.6 | 1.36 | 83.3 |
| PWStableNet [21] | 1.40 | 3.52 | 17.3 | 4.50 | 85.0 |
| Improved U-Net [22] | 1.51 | 3.50 | 16.8 | 4.24 | 85.9 |
| Uformer-T [23] | 1.54 | 3.52 | 9.2 | 2.58 | 86.8 |
| HCTIRdeblur [30] | 1.63 | 3.47 | 8.2 | 2.16 | 86.5 |
| Ours | 1.66 | 3.49 | 6.1 | 1.95 | 87.2 |
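
The highlights quote a 10.7% PSNR increase, a 25.6% reduction in edge inference time, and an 18.4% decrease in parameter count without naming the baseline. The short check below suggests these figures match the two comparison tables if HCTIRdeblur [30] is taken as the baseline; that choice of baseline is an inference from the tabulated values, not something the text states.

```python
def relative_change(new: float, baseline: float) -> float:
    """Signed percentage change of `new` relative to `baseline`."""
    return (new - baseline) / baseline * 100.0


# Values copied from the comparison tables: "Ours" versus HCTIRdeblur [30].
psnr_change = relative_change(36.95, 33.38)    # PSNR in dB
time_change = relative_change(6.1, 8.2)        # inference time in ms
param_change = relative_change(3.89, 4.77)     # parameters in millions

summary = {
    "PSNR": round(psnr_change, 1),
    "time": round(time_change, 1),
    "parameters": round(param_change, 1),
}
```

Negative values indicate a reduction. Against other rows the picture differs: IREGAN [33], for instance, has fewer parameters and a shorter inference time, though lower PSNR.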

| Stage | Processing Unit | Key Operations | Time Consumption (%) |
|---|---|---|---|
| Pre-processing | ARM | Image Decoding, Resizing | 64 |
| Network Inference | DPU Kernel | Convolutional Operations | 15 |
| Post-processing | ARM | Image Display | 21 |
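
The stage breakdown above implies that the ARM-side stages account for 85% of the end-to-end time. As a rough illustration of what that means for further optimization, the sketch below applies Amdahl's law to the tabulated shares. The 2x stage speedups are hypothetical, and the model assumes the stages run sequentially, which the table does not confirm.

```python
def amdahl_speedup(fraction: float, stage_speedup: float) -> float:
    """Overall speedup when a stage taking `fraction` of total time runs `stage_speedup` times faster."""
    if not 0.0 <= fraction <= 1.0 or stage_speedup <= 0.0:
        raise ValueError("fraction must be in [0, 1] and stage_speedup positive")
    return 1.0 / ((1.0 - fraction) + fraction / stage_speedup)


# Time shares from the stage table.
shares = {"pre_processing": 0.64, "inference": 0.15, "post_processing": 0.21}

# Hypothetical scenarios: make exactly one stage twice as fast.
faster_inference = amdahl_speedup(shares["inference"], 2.0)
faster_preprocessing = amdahl_speedup(shares["pre_processing"], 2.0)
```

Under these assumptions, doubling the speed of pre-processing gives roughly a 1.47x end-to-end gain, whereas doubling the speed of DPU inference gives only about 1.08x.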

| Resource | LUTs | FFs | BRAM |
|---|---|---|---|
| Total Available | 117,120 | 234,240 | 144 |
| Amount Utilized | 64,416 | 77,299 | 32 |
| Utilization Ratio | 55% | 32.9% | 22.2% |
| Primary Function | Compute, Control Logic | Pipeline Registers | Feature Map, Weight Storage |
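
The utilization ratios in the table above follow directly from the used and available counts. The snippet below recomputes them as a sanity check; the dictionary layout and function name are illustrative only.

```python
# (used, available) counts copied from the resource table.
resources = {
    "LUTs": (64_416, 117_120),
    "FFs": (77_299, 234_240),
    "BRAM": (32, 144),
}


def utilization_percent(used: int, available: int) -> float:
    """Share of an FPGA resource in use, as a percentage."""
    if available <= 0:
        raise ValueError("available must be positive")
    return used / available * 100.0


ratios = {name: utilization_percent(used, total)
          for name, (used, total) in resources.items()}
```

The recomputed flip-flop ratio comes out just under 33%, which the table lists as 32.9%; the LUT and BRAM ratios agree with the tabulated 55% and 22.2%.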

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cheng, J.; Pan, L.; Liu, T.; Cheng, B.; Cai, Y. FPGA-Based Real-Time Deblurring and Enhancement for UAV-Captured Infrared Imagery. Remote Sens. 2025, 17, 3446. https://doi.org/10.3390/rs17203446