Highlights

What are the main findings?

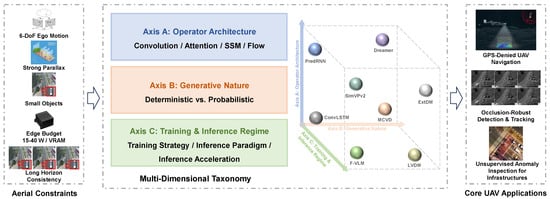

- This review introduces a novel three-axis taxonomy (operator architecture, generative nature, and training regime) that systematically clarifies the complex design space of video prediction models for resource-constrained UAVs.

- Our analysis of the recent literature (2022–2025) reveals a clear technological shift towards efficient, long-range temporal operators, such as state-space models (SSMs), which are uniquely suited to the computational and memory constraints of aerial platforms.

What is the implication of the main finding?

- The proposed framework serves as a practical design guide, enabling researchers and engineers to strategically navigate the trade-offs between model performance and onboard deployment limitations for specific UAV remote sensing applications.

- By identifying key research gaps, engineering best practices, and future directions, this survey provides a roadmap to accelerate the development of robust, scalable world models, moving the field closer to true predictive autonomy.

Abstract

The analysis of dynamic remote sensing scenes from unmanned aerial vehicles (UAVs) is shifting from reactive processing to proactive, predictive intelligence. Central to this evolution is video prediction—forecasting future imagery from past observations—which enables critical remote sensing applications like persistent environmental monitoring, occlusion-robust object tracking, and infrastructure anomaly detection under challenging aerial conditions. Yet, a systematic review of video prediction models tailored for the unique constraints of aerial remote sensing has been lacking. Existing taxonomies often obscure key design choices, especially for emerging operators like state-space models (SSMs). We address this gap by proposing a unified, multi-dimensional taxonomy with three orthogonal axes: (i) operator architecture; (ii) generative nature; and (iii) training/inference regime. Through this lens, we analyze recent methods, clarifying their trade-offs for deployment on UAV platforms that demand processing of high-resolution, long-horizon video streams under tight resource constraints. Our review assesses the utility of these models for key applications like proactive infrastructure inspection and wildlife tracking. We then identify open problems—from the scarcity of annotated aerial video data to evaluation beyond pixel-level metrics—and chart future directions. We highlight a convergence toward scalable dynamic world models for geospatial intelligence, which leverage physics-informed learning, multimodal fusion, and action-conditioning, powered by efficient operators like SSMs.

1. Introduction

1.1. The Emergence of Embodied Intelligence in UAVs

Embodied unmanned aerial vehicles (UAVs) are transitioning from teleoperated platforms to intelligent agents that perceive, decide, and act autonomously within complex, three-dimensional environments. This paradigm shift from passive remote sensing to active, embodied perception is unlocking new frontiers across precision agriculture [1], disaster response [2], infrastructure inspection [3], and environmental monitoring [4,5,6]. Unlike ground robots, which operate on a 2D or 2.5D manifold, UAVs contend with full six-degree-of-freedom (6-DoF) dynamics, making their interaction with the world inherently more complex [7]. The principles of Embodied AI, which emphasize the tight coupling between an agent’s body, its sensors, and its environment, are central to enabling this new generation of intelligent aerial systems [8]. True autonomy in these dynamic, partially observable, and often GPS-challenged or GPS-denied settings cannot rely on reactive control alone. Onboard intelligence must build and maintain an internal model of the world to anticipate likely futures [9]. In practice, this means predicting future sensory observations to reduce decision latency, hedge against uncertainty, and enable safer, more reliable, and proactive behaviors.

1.2. Video Prediction: The Core of Proactive Autonomy

Video prediction—the task of forecasting future frames from a sequence of past observations—is central to this vision of predictive autonomy. It serves a dual purpose. Beyond its explicit goal of generation, it functions as a powerful mechanism for self-supervised representation learning [10]. To minimize future prediction error, a model must implicitly encode the underlying regularities of the world: object permanence, scene geometry, appearance constancy, motion dynamics, and even rudimentary causal structures [11,12,13]. For embodied UAVs, which constantly face the challenges of a moving camera, strong parallax effects, and the need to discern small, distant targets, such foresight provides tangible benefits: (i) Anticipatory Planning: enabling navigation through cluttered spaces by predicting the outcomes of potential control actions in a model-based planning framework [14,15]. (ii) Robust State Estimation: maintaining target tracks through temporary occlusions or sensor dropouts by “imagining” the missing frames [16]. (iii) Unsupervised Anomaly Detection: flagging unexpected deviations from normal dynamics—such as cracks in a bridge or unauthorized activity—as high-prediction-error events [17,18]. Consequently, prediction is not merely a generative task but a cognitive prerequisite for proactive agency in aerial remote sensing.

1.3. The Gap: Limitations of Existing Taxonomies in a Rapidly Evolving Field

The landscape of video prediction models is heterogeneous and evolving at a breakneck pace. While several excellent surveys exist, they often categorize models along a single axis, such as “RNN-based vs. CNN-based” or “deterministic vs. generative” [19,20,21]. Furthermore, broader reviews on video generation often focus on creative applications rather than robotic control [22]. Such taxonomies, while historically useful, struggle to properly position the latest hybrid architectures and, more critically, fail to provide a clear framework for understanding the role of emerging temporal operators. In particular, state-space models (SSMs) defy simple classification: their inference process is recurrent, yet their training can be parallelized like a convolution, and they capture long-range dependencies akin to transformers [23,24]. Similarly, the proliferation of attention variants (e.g., sparse, low-rank, local) has blurred the lines, offering different inductive biases and scalability trade-offs compared to traditional convolutional recurrence [25,26]. For a practitioner aiming to deploy a model on a resource-constrained UAV, a taxonomy that explicitly disentangles the backbone topology from the choice of temporal operator is essential for navigating these design choices and their performance implications. This review aims to fill this gap.

1.4. Design Space for UAV Video Prediction: Constraints and Desiderata

Deploying predictive models on UAVs imposes a unique and stringent set of constraints that sharpen the design desiderata. These are not just theoretical preferences but hard engineering requirements [27]: (i) Throughput and Latency: Models must process frames and generate predictions at a rate that supports real-time decision-making (e.g., >15 FPS) at relevant resolutions (e.g., 512 × 512 to 1024 × 1024), all within a strict end-to-end latency budget. (ii) Compute and Memory Footprint: Onboard processors (e.g., NVIDIA Jetson series, NVIDIA, Santa Clara, CA, USA) have limited peak memory (VRAM) and power budgets (typically 15–40 W), precluding the direct deployment of massive foundation models [28]. (iii) Robustness to Ego-Motion: Models must be robust to fast 6-DoF ego-motion, which induces large optical flow, motion blur, and rolling shutter artifacts. (iv) Small-Object Sensitivity: In many remote sensing tasks, the regions of interest (e.g., a person, a vehicle, a crop disease spot) are very small, demanding high fidelity in specific parts of the frame. (v) Long-Horizon Consistency: For meaningful planning, predictions must remain coherent and avoid catastrophic error accumulation over long time horizons (e.g., >1 s or >20 frames). These factors create a strong selective pressure for architectures and temporal operators that balance global dependency modeling with linear-time (or near-linear) complexity and stable autoregressive rollouts [13,23,24].

1.5. Contributions, Research Questions, and a New Taxonomy

To address the aforementioned gap, we propose and utilize a unified, multi-dimensional taxonomy built upon three orthogonal axes. This framework forms the backbone of our survey:

- Axis A: Operator Architecture: The model’s overall structure (CNN, Transformer, or hybrid) paired with the core mechanism for temporal modeling (convolutional recurrence, attention, SSM, or explicit warping/flow).

- Axis B: Generative Nature: The fundamental approach to prediction, ranging from deterministic regression to probabilistic models that capture uncertainty (VAEs, GANs, diffusion models).

- Axis C: Training and Inference Regime: The strategies used for training (e.g., teacher-forcing, curricula) and generation (e.g., autoregressive vs. one-shot), including techniques for edge deployment (distillation, quantization).

A conceptual illustration of this taxonomy is shown in Figure 1. Using this lens, we synthesize advances from 2022–2025, analyze their trade-offs, and assess their utility for core UAV tasks.

Figure 1. A conceptual illustration of our proposed three-axis taxonomy for video prediction models. This framework allows for a nuanced classification of modern architectures by decoupling backbone design, temporal mechanism, generative properties, and deployment strategies. Representative models are positioned within this 3D space based on their core characteristics.

This review is guided by three primary research questions:

- Q1: How should different temporal operators (attention, SSM, conv-recurrence) be selected and configured based on the target resolution, prediction horizon, and specific motion characteristics of UAV applications?

- Q2: Under what specific UAV task conditions (e.g., navigating through dense occlusions, tracking erratically moving targets) do probabilistic generators (VAEs, diffusion models) justify their significant computational overhead compared to deterministic models?

- Q3: Which training regimes and model compression techniques (e.g., distillation, quantization) are most effective in bridging the gap between high-accuracy models and the stringent deployment constraints of edge platforms?

Our synthesis reveals a clear trajectory towards scalable world models [29,30], powered by efficient temporal operators like SSMs and enriched by multimodal fusion (RGB, IMU, event cameras) and action-conditioning.

- Scope, Inclusion/Exclusion Criteria, and Terminology

This review focuses on video prediction under UAV constraints. Downstream tasks (detection, segmentation, tracking) are discussed only insofar as they inform prediction design and evaluation; exhaustive coverage is beyond scope. We include methods that explicitly predict pixels or latent representations over time; we exclude works limited to single-frame enhancement or purely static remote-sensing analysis. For clarity, we classify these approaches based on their fundamental generative mechanism, as summarized in Table 1. This classification distinguishes temporal operators, sequential autoregressive (AR) rollouts, and parallel one-shot or blockwise predictions.

Table 1. A comprehensive comparison of time-series forecasting mechanisms.

2. Fundamentals of Video Prediction

2.1. Nomenclature

To facilitate a clear and rigorous discussion, Table 2 establishes a common terminology for the core concepts, model architectures, and evaluation metrics central to this field.

Table 2. Nomenclature of key abbreviations and terms.

2.2. Formal Problem Definition

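Let $X_{1:T} = (x_1, \dots, x_T)$ denote a sequence of $T$ observed context frames, and $Y_{1:K} = (x_{T+1}, \dots, x_{T+K})$ represent the $K$ ground-truth future frames. Each frame $x_t \in \mathbb{R}^{C \times H \times W}$ is a tensor with $C$ channels and spatial dimensions $H \times W$. The goal of video prediction is to generate a sequence $\hat{Y}_{1:K}$ that is as close as possible to $Y_{1:K}$.

Deterministic predictors aim to learn a direct mapping $f_\theta : X_{1:T} \mapsto \hat{Y}_{1:K}$ by minimizing a reconstruction loss $\mathcal{L}_{rec}$, implicitly modeling the conditional mean of the future distribution (exactly so for an L2 loss):

$$\theta^{*} = \arg\min_{\theta} \; \mathbb{E}_{(X_{1:T},\, Y_{1:K})} \left[ \mathcal{L}_{rec}\left( f_\theta(X_{1:T}),\, Y_{1:K} \right) \right]$$

Probabilistic predictors learn a conditional distribution $p_\theta(Y_{1:K} \mid X_{1:T})$ and generate diverse futures by sampling from latent variables or via iterative denoising. The objective is often to maximize the log-likelihood:

$$\theta^{*} = \arg\max_{\theta} \; \mathbb{E}_{(X_{1:T},\, Y_{1:K})} \left[ \log p_\theta\left( Y_{1:K} \mid X_{1:T} \right) \right]$$

Action-conditional predictors, crucial for embodied agents like UAVs, extend this to $p_\theta(Y_{1:K} \mid X_{1:T}, A_{1:K})$, where $A_{1:K} = (a_{T+1}, \dots, a_{T+K})$ is a sequence of future actions. For a UAV, an action could be a high-level command (e.g., target velocity and yaw rate, $a_t = (v_t, \dot{\psi}_t)$) or low-level motor inputs. This conditioning, a cornerstone of model-based reinforcement learning [31], allows the model to “imagine” the consequences of different plans, forming the basis of model-based control [30].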

- Core Challenges

The task is notoriously difficult due to the following: (i) High Dimensionality: Direct pixel-space generation is computationally expensive. This motivates latent-space prediction, where a compact representation is predicted first and then decoded to pixels, often leading to better efficiency and coherence. (ii) Inherent Stochasticity: The future is uncertain; a single past can lead to multiple plausible futures. Deterministic models often average these possibilities, resulting in blurry predictions. (iii) Complex Spatio-Temporal Dependencies: Models must capture both local motion and long-range interactions across space and time. (iv) Error Accumulation: In autoregressive generation, small errors in early frames can compound catastrophically over long horizons.
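Error accumulation in challenge (iv) can be made concrete with a toy rollout. The sketch below is illustrative only: `rollout`, `true_step`, and `biased_step` are hypothetical names, the "scene" is a frame whose brightness grows by one unit per step, and the 2% per-step bias is an assumed stand-in for a learned predictor's error.

```python
import numpy as np

def rollout(step_fn, context, horizon):
    """Autoregressive rollout: each predicted frame is fed back as input."""
    frames = list(context)
    window = len(context)
    for _ in range(horizon):
        next_frame = step_fn(np.stack(frames[-window:]))
        frames.append(next_frame)
    return np.stack(frames[window:])

def true_step(window):
    # Toy ground-truth dynamics: brightness increases by 1.0 per frame.
    return window[-1] + 1.0

def biased_step(window):
    # Hypothetical learned predictor with a small (2%) multiplicative bias.
    return (window[-1] + 1.0) * 1.02

# Three 4x4 context frames with brightness 0, 1, 2.
context = np.stack([np.full((4, 4), float(t)) for t in range(3)])
truth = rollout(true_step, context, horizon=20)
pred = rollout(biased_step, context, horizon=20)

# Mean absolute error per predicted step; it grows with the horizon
# because every step inherits the error of the previous prediction.
errors = np.abs(pred - truth).mean(axis=(1, 2))
print(errors[0], errors[-1])
```

Because each step consumes its own previous output, the per-step error grows monotonically here, which is the behavior that long-horizon training regimes and latent-space rollouts try to contain.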

2.3. Training Objectives and Loss Functions

The choice of loss function profoundly impacts the quality of generated videos. Modern approaches typically use a weighted combination of several losses to balance fidelity, perceptual quality, and realism.

- Pixel-Level Fidelity Losses

The most direct objectives are pixel-wise losses, which are computationally cheap and provide a stable training signal.

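- $\mathcal{L}_2$ loss (Mean Squared Error, MSE) is widely used but tends to produce overly smooth and blurry results: it penalizes large errors quadratically, and its minimizer is the mean over plausible futures.

- $\mathcal{L}_1$ loss (Mean Absolute Error, MAE) tends to produce sharper images than L2 because it is less sensitive to outliers, and it is often preferred in modern architectures.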
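The contrast between the two pixel losses can be checked numerically. The following sketch assumes a toy 1-D "frame" with three equally likely futures (an object at position 2 in two of them and at position 5 in one); the helper names are hypothetical. It compares the pixel-wise mean prediction (the L2 optimum) with the pixel-wise median (the L1 optimum).

```python
import numpy as np

def l2_loss(pred, target):
    return np.mean((pred - target) ** 2)

def l1_loss(pred, target):
    return np.mean(np.abs(pred - target))

# Three plausible futures of an 8-pixel frame (assumed toy data).
futures = np.zeros((3, 8))
futures[0, 2] = 1.0
futures[1, 2] = 1.0
futures[2, 5] = 1.0

mean_pred = futures.mean(axis=0)          # blurry: 2/3 at pixel 2, 1/3 at pixel 5
median_pred = np.median(futures, axis=0)  # sharp: 1 at pixel 2, 0 elsewhere

def expected_loss(loss_fn, pred):
    """Average loss of one fixed prediction over all plausible futures."""
    return np.mean([loss_fn(pred, f) for f in futures])

print("L2:", expected_loss(l2_loss, mean_pred), expected_loss(l2_loss, median_pred))
print("L1:", expected_loss(l1_loss, mean_pred), expected_loss(l1_loss, median_pred))
```

Under L2 the blurred mean scores better, while under L1 the sharp median scores better, which matches the qualitative behavior described above.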

- Perceptual and Feature-Based Losses

To better align with human perception, losses can be computed in a learned feature space rather than pixel space.

- Perceptual loss ($\mathcal{L}_{perc}$) [32]: Uses features from a pre-trained network (e.g., VGG-19 [33]) to enforce perceptual similarity. It computes the distance between feature maps of the generated and real frames, capturing texture and structural information that pixel losses miss.

- Style loss ($\mathcal{L}_{style}$) [34]: A related loss that encourages the generated frames to match the style (correlations between feature channels) of the real frames, further improving textural realism.
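A minimal sketch of the two feature-space losses follows. It assumes the feature maps have already been extracted; random arrays stand in for the activations of a pre-trained network, and the function names and the Gram-matrix normalization are illustrative choices rather than a reference implementation.

```python
import numpy as np

def gram_matrix(feat):
    """Channel-by-channel correlations of a (C, H, W) feature map."""
    c, h, w = feat.shape
    flat = feat.reshape(c, h * w)
    return flat @ flat.T / (c * h * w)

def perceptual_loss(feat_a, feat_b):
    # Distance between feature maps, position by position.
    return np.mean((feat_a - feat_b) ** 2)

def style_loss(feat_a, feat_b):
    # Distance between feature correlations, ignoring spatial layout.
    return np.mean((gram_matrix(feat_a) - gram_matrix(feat_b)) ** 2)

rng = np.random.default_rng(0)
feat_real = rng.normal(size=(8, 16, 16))            # stand-in for network features
feat_shifted = np.roll(feat_real, shift=3, axis=2)  # same texture, displaced

print("perceptual:", perceptual_loss(feat_real, feat_shifted))
print("style:", style_loss(feat_real, feat_shifted))
```

The shifted features keep the same channel correlations, so the style term is essentially zero while the perceptual term is not. This is why the two losses are usually combined rather than substituted for each other.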

- Adversarial Loss

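To produce crisp and realistic images, Generative Adversarial Networks (GANs) [35] introduce a discriminator network $D$ that is trained to distinguish real frames from generated ones. The generator $G$ (the prediction model) is then trained to fool the discriminator, giving the minimax objective:

$$\min_{G} \max_{D} \; \mathbb{E}_{Y_{1:K}} \left[ \log D(Y_{1:K}) \right] + \mathbb{E}_{X_{1:T}} \left[ \log\left( 1 - D(G(X_{1:T})) \right) \right]$$

where $X_{1:T}$ are the context frames and $Y_{1:K}$ are the real future frames.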

This adversarial objective pushes the generator to produce samples that lie on the manifold of real data, effectively acting as a learned loss function for realism.
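As a rough sketch, the discriminator and generator terms can be written directly on discriminator logits. The code assumes $D$ outputs a logit that is passed through a sigmoid, and it uses the non-saturating generator loss, a common practical variant of the minimax game. The function names are hypothetical.

```python
import numpy as np

def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return np.logaddexp(0.0, x)

def discriminator_loss(real_logits, fake_logits):
    # -log D(real) - log(1 - D(fake)), with D = sigmoid(logit).
    return np.mean(softplus(-real_logits)) + np.mean(softplus(fake_logits))

def generator_loss(fake_logits):
    # Non-saturating variant: -log D(fake).
    return np.mean(softplus(-fake_logits))

undecided = np.zeros(4)           # D outputs 0.5 everywhere
confident_real = np.full(4, 10.0)
confident_fake = np.full(4, -10.0)

print(discriminator_loss(undecided, undecided))            # 2 * ln 2
print(discriminator_loss(confident_real, confident_fake))  # close to 0
print(generator_loss(confident_fake))                      # large: G is being caught
```

When the discriminator separates real from generated frames confidently, its own loss approaches zero and the generator loss becomes large, which is the gradient signal that pushes predictions toward the real-data manifold.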

- Temporal Consistency Losses

To ensure smooth motion, specific losses can be added to regularize the temporal dimension.

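- Warping loss ($\mathcal{L}_{warp}$): An optical flow-based warping loss, as described in [36], encourages the model to learn physically plausible motion by explicitly penalizing inconsistencies between a warped previous frame and the current predicted frame.

- Flow loss ($\mathcal{L}_{flow}$): Some models directly predict optical flow and are supervised with a ground-truth flow (if available), using losses like the Average End-Point-Error (EPE): $\mathrm{EPE} = \frac{1}{N} \sum_{i=1}^{N} \lVert \hat{f}_i - f_i \rVert_2$, where $\hat{f}_i$ and $f_i$ are the predicted and ground-truth flow vectors at pixel $i$.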
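The Average End-Point-Error is simple enough to state in a few lines. This sketch assumes flow fields stored as `(H, W, 2)` arrays holding horizontal and vertical displacement in pixels; the function name is illustrative.

```python
import numpy as np

def average_epe(flow_pred, flow_gt):
    """Mean Euclidean distance between predicted and true flow vectors."""
    return float(np.mean(np.linalg.norm(flow_pred - flow_gt, axis=-1)))

flow_gt = np.zeros((4, 4, 2))
flow_pred = np.zeros((4, 4, 2))
flow_pred[..., 0] = 3.0   # 3 px horizontal error everywhere
flow_pred[..., 1] = 4.0   # 4 px vertical error everywhere

print(average_epe(flow_pred, flow_gt))  # 5.0 (a 3-4-5 triangle at every pixel)
```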

2.4. Evaluation Metrics: A UAV-Centric View

Evaluating video prediction requires a multi-faceted approach. A robust evaluation protocol should include metrics from each of the following categories.

- Pixel Fidelity.

Mean Squared Error (MSE), Peak Signal-to-Noise Ratio (PSNR), and Structural Similarity Index (SSIM) [37] are standard. While fast to compute, they correlate poorly with human perception of quality and often penalize plausible but slightly displaced predictions, a common issue in UAV footage with high parallax.
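The displacement sensitivity of pixel metrics can be illustrated with PSNR on a toy frame. In this sketch (assumed data, hypothetical names), a sharp prediction that misplaces a small target by four pixels is compared with a flat prediction that contains no target at all.

```python
import numpy as np

def psnr(pred, target, max_val=1.0):
    mse = np.mean((pred - target) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / mse))

# Ground truth: a 4x4 bright target on a dark 32x32 background.
target = np.zeros((32, 32))
target[10:14, 10:14] = 1.0

shifted = np.roll(target, 4, axis=1)           # sharp, but displaced by 4 px
flat = np.full_like(target, target.mean())     # no target at all, just the mean

print("shifted:", psnr(shifted, target))
print("flat:", psnr(flat, target))
```

The featureless prediction receives the higher PSNR even though it is useless for tracking, which is one reason pixel metrics should be paired with perceptual and task-aware measures.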

- Perceptual and Distributional Similarity

- LPIPS [38]: Learned Perceptual Image Patch Similarity computes the distance between deep features, offering a more robust measure of perceptual quality. It has become a de facto standard for evaluating generative models.

- FVD [39]: Fréchet Video Distance is the standard for evaluating the distribution of generated videos. It measures the Fréchet distance between Gaussian distributions fitted to features of real and generated video clips extracted from a pre-trained video classifier, capturing both frame-level quality and temporal coherence.

- KVD [40]: Kernel Video Distance is an alternative to FVD that uses the Maximum Mean Discrepancy (MMD) with a polynomial kernel, proposed as a more stable metric for high-dimensional feature spaces.
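The Fréchet distance underlying FVD can be sketched on pre-extracted features. The code below is not the official FVD pipeline: random vectors stand in for the video-classifier features, and the function name is hypothetical. It uses the fact that, for positive semi-definite covariances, the trace of the matrix square root of their product equals the sum of the square roots of that product's eigenvalues.

```python
import numpy as np

def frechet_distance(feats_a, feats_b):
    """Frechet distance between Gaussians fitted to two feature sets (N, D)."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    # Tr((cov_a cov_b)^(1/2)) via eigenvalues; clip tiny negatives from round-off.
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    trace_sqrt = np.sum(np.sqrt(np.clip(eigvals.real, 0.0, None)))
    mean_term = np.sum((mu_a - mu_b) ** 2)
    return float(mean_term + np.trace(cov_a) + np.trace(cov_b) - 2.0 * trace_sqrt)

rng = np.random.default_rng(0)
real_feats = rng.normal(size=(500, 4))   # stand-in for real-clip features
shifted_feats = real_feats + 1.0         # same covariance, mean shifted by 1 per dim

print(frechet_distance(real_feats, real_feats))     # approximately 0
print(frechet_distance(real_feats, shifted_feats))  # approximately 4 (squared mean shift)
```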

- Motion and Temporal Consistency

Metrics that directly assess motion are critical for UAVs. These include End-Point-Error (EPE) of predicted optical flow, trajectory Mean Absolute Error (MAE) on tracked keypoints, and temporal consistency scores like temporal LPIPS (tLP) which measures perceptual similarity between consecutive frames. A comprehensive review of video generation metrics can be found in [41].

- UAV-Specific and Deployability

For UAV applications, metrics must be task-aware. ROI-aware metrics (e.g., ROI-PSNR) compute scores only on masked regions of interest (e.g., small moving targets), which is crucial for tracking applications. Deployability metrics are non-negotiable: latency (ms/frame), throughput (FPS), peak VRAM usage (GB), and energy efficiency (Joules/frame or Frames/Joule) must be reported on specified edge hardware (e.g., NVIDIA Jetson AGX Orin) and precision (e.g., FP16/FP8).
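A minimal sketch of an ROI-aware score and a latency measurement is given below. The names `roi_psnr` and `measure_latency` are illustrative, the mask is assumed to be provided by an upstream detector or annotation, and the timing loop is CPU-only; on a GPU the device would need to be synchronized before each clock read.

```python
import time
import numpy as np

def roi_psnr(pred, target, mask, max_val=1.0):
    """PSNR computed only over pixels where mask is True."""
    mask = mask.astype(bool)
    mse = np.mean((pred[mask] - target[mask]) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / mse))

def measure_latency(fn, frame, warmup=3, runs=20):
    """Return (milliseconds per frame, frames per second) for fn(frame)."""
    for _ in range(warmup):
        fn(frame)
    start = time.perf_counter()
    for _ in range(runs):
        fn(frame)
    elapsed = time.perf_counter() - start
    ms_per_frame = 1000.0 * elapsed / runs
    fps = runs / elapsed if elapsed > 0 else float("inf")
    return ms_per_frame, fps

# A 4x4 target in a 64x64 frame; the prediction misses it entirely.
target = np.zeros((64, 64))
target[30:34, 30:34] = 1.0
pred = np.zeros_like(target)
roi = target > 0

full_mask = np.ones_like(target, dtype=bool)
print("full-frame PSNR:", roi_psnr(pred, target, full_mask))
print("ROI PSNR:", roi_psnr(pred, target, roi))
print("latency (ms), FPS:", measure_latency(lambda f: f * 2.0, target))
```

The full-frame score looks acceptable because the background dominates, while the ROI score exposes the missed target.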

2.5. Traditional Approaches to Video Prediction

Before the dominance of deep learning, several traditional approaches were widely studied for video prediction. While less powerful in complex UAV scenarios, they remain important for historical context and lightweight baselines.

- Optical Flow Extrapolation.

Methods in this line estimate motion fields between consecutive frames (e.g., Horn–Schunck, Lucas–Kanade) and extrapolate them to generate future frames. While computationally efficient, flow extrapolation struggles with occlusions, non-rigid motion, and long-horizon stability.

- Dynamic Textures and Autoregressive Models.

Linear dynamical systems and autoregressive models (e.g., Dynamic Textures, Kalman filtering) capture pixel intensity evolution over time. These methods are suitable for short clips and simple textures but cannot generalize to high-resolution UAV videos with complex dynamics.

- Handcrafted Features and Shallow Models.

Earlier work also exploited handcrafted features such as SIFT, HOG, or sparse coding, combined with regression models to predict motion trajectories. Although interpretable and lightweight, such approaches lack the representation capacity required for high-dimensional UAV scenes.

Compared with modern deep models, traditional approaches offer advantages in efficiency and interpretability but fall short in scalability and fidelity. As such, they are rarely competitive in current UAV prediction tasks, yet they provide useful baselines and motivate hybrid methods that incorporate physics priors.

2.6. Quantitative Summary of Current SOTA in UAV Predictive Autonomy

To help practitioners quickly track the latest capabilities, Table 3 consolidates representative accuracy and efficiency metrics across major model families used for UAV video prediction. Accuracy metrics (PSNR/SSIM/FVD) are drawn from reported results in recent literature, while efficiency (FPS/VRAM) reflects typical ranges observed on edge (Jetson Orin) and workstation (RTX 4090) deployments, consistent with Sections 3.1–3.3.

Table 3. Unified SOTA summary across the three axes (operator, generative, regime). Accuracy ranges are representative values from prior work; efficiency reflects typical deployment ranges on Jetson Orin (edge) and RTX 4090 (workstation).

3. A Multi-Dimensional Taxonomy of Video Prediction Models

To navigate the complex design space of modern video prediction, we propose a three-axis taxonomy that disentangles architectural choices, generative principles, and training strategies. This framework provides a structured lens to analyze and compare models, especially for deployment on resource-constrained platforms like UAVs.

3.1. Axis A: Operator Architecture

This axis separates the model’s spatial feature extractor (the backbone) from its temporal evolution mechanism (the temporal operator).

3.1.1. Type I: Convolutional Recurrence

This family of models, built upon the Convolutional LSTM (ConvLSTM) [11], pioneered deep learning for video prediction. ConvLSTM replaces the matrix multiplications in a traditional LSTM [55] with convolutional operations, allowing it to maintain a 2D spatial structure in its hidden states. This makes it a natural fit for modeling pixel-space dynamics. A summary of key models in this category is presented in Table 4.

Table 4. Summary of key models based on convolutional recurrence.

- Key Models and Evolution

- ConvLSTM [11]: The foundational work that introduced the convolutional recurrent cell for precipitation nowcasting. Its strength lies in its strong spatio-temporal locality bias.

- PredRNN [12] and PredRNN++ [42]: This series of works identified key limitations of deep ConvLSTM stacks. PredRNN introduced a spatio-temporal LSTM (ST-LSTM) unit to better model spatial correlations, while PredRNN++ added a Gradient Highway Unit (GHU) to route gradient information through time, alleviating the vanishing gradient problem in very deep recurrent models.

- E3D-LSTM [56]: Proposed an Eidetic 3D LSTM that uses a self-attention mechanism to recall detailed state representations from the past, allowing the model to better handle long-term dependencies with transient details.

- MIM (Memory In Memory) [57]: Addressed non-stationary dynamics by replacing the forget gate of the ST-LSTM with cascaded memory modules that operate on differences between adjacent hidden states, modeling the stationary and non-stationary components of the scene separately.

- PhyDNet [43]: Explicitly disentangled physical dynamics (e.g., predictable motion) from residual uncertainties. It pairs a recurrent cell constrained by partial differential equations (PhyCell), which models the physical part, with a ConvLSTM that models the residual part, making it well-suited for scenes with predictable fluid-like motion.

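- TAU (Temporal Attention Unit) [58]: A recent work that replaces the recurrent unit with a parallelizable temporal attention module, decomposed into intra-frame static attention and inter-frame dynamic attention. Its competitive forecasting results show that attention can stand in for recurrence inside a convolutional predictor, and that combining the two families remains an active research direction.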

- UAV-Centric Analysis

- Strengths: Convolutional recurrence has a strong inductive bias for local motion and translation equivariance, making it data-efficient for simple dynamics. Its recurrent nature allows for rollout to arbitrary lengths.

- Weaknesses: The strictly sequential nature of recurrence (one step at a time) leads to high inference latency and makes training parallelization difficult. More importantly, they are prone to error accumulation and often struggle to model complex, non-local interactions (e.g., a UAV turning rapidly, causing the entire scene to rotate).

3.1.2. Type II: Attention and Transformers

Inspired by their success in NLP [59] and image recognition [60], Transformers have been adapted for video by treating a video as a sequence of spatio-temporal tokens. Their core component, self-attention, allows every token to directly attend to every other token, making it exceptionally powerful for modeling long-range dependencies. Table 5 summarizes key developments.

Table 5. Summary of key models based on Attention and Transformers.

- Key Models and Evolution

- Factorized Transformers: Early works adapted vision transformers by factorizing spatial and temporal attention to manage the quadratic cost. TimeSformer [61] explored different space-time attention schemes, while ViViT [62] proposed efficient tokenization strategies like “tubelet” embedding.

- Local/Windowed Attention: To improve scalability, models like the Video Swin Transformer [26] compute attention within local, non-overlapping windows that are shifted across layers, achieving linear complexity with respect to the number of tokens.

- Generative Video Transformers: More recent works have focused on large-scale generation. Phenaki [63] can generate minute-long videos from a sequence of text prompts by pairing a causal video tokenizer, which compresses video into discrete tokens, with a bidirectional masked transformer that generates those tokens from text. MagVIT-v2 [48] is a state-of-the-art model that uses a multi-stage transformer to perform masked token prediction, achieving high-quality results. The architecture of Sora [64] also builds on the transformer paradigm, using a diffusion transformer to process “spacetime patches” for large-scale, high-fidelity video generation.

- UAV-Centric Analysis

- Strengths: Unparalleled ability to model global, long-range spatio-temporal dependencies. This is crucial for UAVs performing complex maneuvers where the entire scene transforms globally. Their parallelizable nature is a significant advantage for training.

- Weaknesses: The quadratic complexity of full self-attention ($O(L^2)$ for sequence length $L$) is prohibitive for high-resolution, long-horizon videos. While variants like Swin reduce this, memory usage remains a major bottleneck for edge deployment on UAVs. They also lack a strong temporal inductive bias, often requiring massive datasets to learn motion priors effectively.
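A back-of-the-envelope calculation shows why full self-attention is hard to fit on an edge device. The sketch assumes 16 frames, non-overlapping 16 × 16 patches, one attention head, and FP16 storage of a fully materialized attention map. All of these are illustrative assumptions rather than figures from a specific model.

```python
def attention_cost(frames, height, width, patch=16, bytes_per_elem=2):
    """Token count and size in bytes of one L x L attention map."""
    tokens = frames * (height // patch) * (width // patch)
    matrix_bytes = tokens * tokens * bytes_per_elem
    return tokens, matrix_bytes

for side in (512, 1024):
    tokens, nbytes = attention_cost(frames=16, height=side, width=side)
    print(f"{side}x{side}: L = {tokens} tokens, "
          f"attention map = {nbytes / 1024**3:.1f} GiB per head")
```

Doubling the spatial resolution quadruples the token count and multiplies the attention map by sixteen, which is the scaling that windowed attention and linear-time operators aim to avoid.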

3.1.3. Type III: State-Space Models (SSMs)

SSMs are a recent and highly promising class of temporal operators that blend the strengths of RNNs and Transformers. Inspired by classical state-space models from control theory, modern deep learning versions like S4 and Mamba learn these state-space parameters from data. Key SSM-based models are listed in Table 6.

Table 6. Summary of key models based on state-space models (SSMs).

- Key Models and Evolution

- S4 [23] and S5 [65]: The breakthrough works that stabilized SSMs for deep learning. S4 used a specific parameterization (diagonal plus low-rank), while S5 further simplified the formulation, making it highly effective for long-sequence modeling.

- Mamba [24]: Improved upon prior SSMs by making the parameters input-dependent, allowing the model to selectively remember or forget information based on the content. This “selective” mechanism gives it capabilities rivaling attention but with linear-time complexity.

- Vision Mamba (Vim) and VMamba [50,66]: Adapted the Mamba architecture for vision tasks by “flattening” image patches into a sequence and applying the Mamba block. These models have shown competitive performance to Vision Transformers with better computational scaling.

- SimVPv2 [45]: A video prediction model that incorporates an “Invariant-Memory State-Space” (IM-SSM) block, demonstrating the direct applicability and benefits of SSMs for spatio-temporal forecasting.

- ss-Mamba [67]: A recent variant of the Mamba family, ss-Mamba integrates semantic-aware embeddings and spline-based temporal encodings within a selective state-space modeling framework to enhance forecasting accuracy, robustness, and interpretability while reducing computational overhead in complex sequence modeling tasks.

- UAV-Centric Analysis

- Strengths: SSMs offer the “best of both worlds”: linear-time complexity ($O(L)$) and constant memory during autoregressive inference like an RNN, combined with parallelizable training and the ability to capture very-long-range dependencies like a Transformer. This makes them exceptionally well-suited for the long-horizon, high-resolution, and resource-constrained nature of UAV deployment.

- Weaknesses: As a very new architecture class, best practices for implementation and hyperparameter tuning are still emerging. Their inductive bias for visual tasks is also less understood compared to CNNs or Transformers.
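The dual recurrent and convolutional view of a linear SSM can be verified on a toy example. The sketch below assumes a time-invariant, diagonal, single-input single-output discrete SSM, $h_t = a \odot h_{t-1} + b\,u_t$, $y_t = c^\top h_t$, with hypothetical parameter names. Selective models such as Mamba make the parameters input-dependent, so they rely on a parallel scan rather than this fixed convolution kernel.

```python
import numpy as np

def ssm_recurrent(a, b, c, u):
    """Step-by-step inference: O(L) time, state size independent of L."""
    h = np.zeros_like(a)
    outputs = []
    for u_t in u:
        h = a * h + b * u_t
        outputs.append(np.dot(c, h))
    return np.array(outputs)

def ssm_convolutional(a, b, c, u):
    """Parallel (training-time) view: one causal convolution with a fixed kernel."""
    length = len(u)
    powers = a[None, :] ** np.arange(length)[:, None]  # (L, N): a_n ** k
    kernel = powers @ (b * c)                          # K[k] = sum_n c_n a_n^k b_n
    return np.convolve(u, kernel)[:length]

rng = np.random.default_rng(0)
state_dim, seq_len = 8, 64
a = rng.uniform(0.5, 0.99, size=state_dim)  # stable diagonal transition
b = rng.normal(size=state_dim)
c = rng.normal(size=state_dim)
u = rng.normal(size=seq_len)

y_rec = ssm_recurrent(a, b, c, u)
y_conv = ssm_convolutional(a, b, c, u)
print(np.max(np.abs(y_rec - y_conv)))  # agreement up to floating-point error
```

The recurrent form keeps only `state_dim` numbers between steps, which is the constant-memory property that matters for onboard rollouts.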

3.1.4. Type IV: Explicit Warping and Flow

This approach is founded on a strong physical prior: most changes in a video are due to motion. These models first explicitly estimate motion, typically as a dense optical flow field using networks like RAFT [68], and then use this field to warp the previous frame into the future. A separate, often smaller, neural network then predicts a residual to handle disocclusions, new content, and non-rigid changes. A summary is provided in Table 7.

Table 7. Summary of approaches based on explicit warping and flow.

- Key Models and Evolution

- DVF (Deep Voxel Flow) [36]: Learned a voxel flow representation to warp past frames into the future and then used a GAN to synthesize a sharp result.

- SAVP (Stochastic Adversarial Video Prediction) [44]: Combines a variational latent with an adversarial loss. Its generator predicts pixel-motion transformations of previous frames and blends the warped results using learned compositing masks to generate predictions.

- Layered Representations: Some models assume that scenes are composed of multiple layers, each with its own motion. World of Bits [69] and later works model a scene as a set of sprites or objects on a background, predicting the motion of each independently.

- Neural Radiance Fields (NeRF) based prediction: A new frontier involves using NeRF [71] for prediction. Models like DyNeRF [70] learn a dynamic scene representation that can be rendered from any viewpoint at any time, implicitly performing prediction by modeling the underlying 3D scene dynamics.

- UAV-Centric Analysis

- Strengths: Incorporating a strong geometric prior makes these models highly data-efficient and capable of producing sharp predictions for scenes dominated by camera motion, which is very common for UAVs.

- Weaknesses: They are brittle. If the underlying flow estimation fails (e.g., in scenes with low texture, fast motion, or severe occlusions), the entire prediction quality collapses. The two-stage process (flow estimation then residual prediction) can also be less efficient than end-to-end models.
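The warp-then-refine recipe can be sketched with a nearest-neighbour backward warp. In this illustration the flow field is given rather than estimated and the residual is a zero array standing in for a refinement network's output. The function names and the `(H, W, 2)` flow layout (x displacement first) are assumptions.

```python
import numpy as np

def backward_warp(frame, flow):
    """out[y, x] = frame[y - v, x - u], with flow[..., 0] = u and flow[..., 1] = v."""
    h, w = frame.shape
    ys, xs = np.mgrid[0:h, 0:w]
    src_x = np.clip(np.rint(xs - flow[..., 0]).astype(int), 0, w - 1)
    src_y = np.clip(np.rint(ys - flow[..., 1]).astype(int), 0, h - 1)
    return frame[src_y, src_x]

def predict_next(frame, flow, residual):
    # Motion explains most of the change; the residual covers
    # disocclusions, new content, and non-rigid effects.
    return backward_warp(frame, flow) + residual

frame = np.zeros((16, 16))
frame[5:8, 5:8] = 1.0                  # a small object

flow = np.zeros((16, 16, 2))
flow[..., 0] = 2.0                     # everything moves 2 px to the right

residual = np.zeros_like(frame)        # stand-in for a learned residual
next_frame = predict_next(frame, flow, residual)
print(np.argwhere(next_frame > 0)[:, 1].min())  # leftmost object column is now 7
```

If the flow is wrong, the warp places content in the wrong location and the residual network has to repair the whole frame, which is the brittleness noted above.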

3.1.5. Comparative Analysis of Operator Architectures

While Sections 3.1.1–3.1.4 describe each operator family qualitatively, UAV practitioners often require a side-by-side comparison to evaluate deployment feasibility. Table 8 summarizes the key quantitative trade-offs across convolutional recurrent networks, Transformers, state-space models, and flow/warping-based methods.

Table 8. Quantitative comparison of operator architectures for UAV video prediction. Estimates assume a 1B-parameter FP16 model processing video (batch size 1). FPS and VRAM are projected on Jetson Orin (edge) and RTX 4090 (workstation).

From this comparison, SSMs provide the best balance between long-horizon modeling and efficiency, while Transformers achieve strong accuracy at the cost of memory and latency. ConvLSTM remains competitive on resource-limited UAVs, whereas flow-based methods excel when explicit motion priors dominate but are less flexible in unconstrained scenes.

3.2. Axis B: Generative Nature (Deterministic vs. Probabilistic)

This axis classifies models based on whether they produce a single, best-guess future (deterministic) or a distribution of possible futures (probabilistic). This choice is fundamental as it relates directly to handling the inherent uncertainty of the real world.

3.2.1. Deterministic Models

Deterministic models learn a one-to-one mapping from past frames to future frames, typically by minimizing a pixel-wise reconstruction loss like L1 or L2. They predict the conditional mean of the future distribution. Table 9 lists key examples.

Table 9.

Summary of key deterministic models.

- Representative Models:

Many early ConvLSTM-based models fall into this category. A prime modern example is SimVP [13], which uses a simple, purely convolutional architecture to achieve state-of-the-art deterministic predictions. Its success demonstrates that a well-designed backbone can implicitly learn complex dynamics without explicit probabilistic modeling.

- UAV-Centric Analysis:

- Strengths: Deterministic models are computationally efficient, straightforward to train, and produce stable, repeatable predictions. This makes them suitable for UAV tasks where a single, plausible forecast is sufficient, such as short-term obstacle avoidance.

- Weaknesses: They famously suffer from the “blurry prediction” problem. When faced with multiple possible futures (e.g., a vehicle at an intersection could turn left or right), the model averages these outcomes, resulting in a fuzzy, unrealistic image. This limits their use in long-horizon planning or scenarios requiring risk assessment based on multiple outcomes.
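The averaging effect behind the “blurry prediction” problem can be checked with a small numerical sketch. The 4-pixel frames and the 50/50 split between futures are illustrative assumptions, not data from any cited model; the point is only that the blurred mean frame achieves a lower expected L2 loss than either sharp outcome.

```python
from typing import List, Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equally sized 1-D frames."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def expected_mse(pred: Sequence[float],
                 futures: Sequence[Sequence[float]],
                 probs: Sequence[float]) -> float:
    """Expected L2 loss of one prediction over a discrete set of futures."""
    return sum(w * mse(pred, f) for f, w in zip(futures, probs))


# Hypothetical 4-pixel frames: the bright vehicle pixel ends up left or right.
turn_left = [1.0, 0.0, 0.0, 0.0]
turn_right = [0.0, 0.0, 0.0, 1.0]
futures = [turn_left, turn_right]
probs = [0.5, 0.5]

# Pixel-wise conditional mean: the minimiser of the expected L2 loss.
mean_frame: List[float] = [
    sum(w * f[i] for f, w in zip(futures, probs)) for i in range(len(turn_left))
]

loss_mean = expected_mse(mean_frame, futures, probs)  # blurry average of both futures
loss_left = expected_mse(turn_left, futures, probs)   # sharp guess committed to one future
```

Here `mean_frame` contains two half-intensity vehicles, which is exactly the kind of fuzzy output a purely L2-trained predictor is rewarded for.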

3.2.2. Probabilistic Models

Probabilistic models aim to capture the full conditional distribution of possible futures given the observed past frames. This allows them to generate diverse and realistic samples, which is crucial for robust decision-making under uncertainty.

- Type I: Variational Autoencoders (VAEs)

VAEs [72] learn a compressed latent distribution of the future. By sampling a latent vector from this distribution and feeding it to a decoder, they can generate a variety of future sequences. This provides a principled and efficient way to model uncertainty. Key VAE-based models are summarized in Table 10.

Table 10.

Summary of key VAE-based probabilistic models.

- VRNN (Variational RNN) [73]: One of the earliest works to combine RNNs with variational inference, allowing the model to handle high-dimensional sequential data like speech and video.

- SVG (Stochastic Video Generation) [74]: A seminal VAE-based model for video prediction. It uses a learned prior and posterior distribution over a latent variable that is sampled for each predicted frame, enabling diverse outputs.

- SLT (Stochastic Latent Transformer) [75]: This model represents a modern hybrid approach. It first uses a VAE to encode frames into a discrete latent space and then applies a Transformer to model the temporal dynamics of these latent representations. This leverages the probabilistic nature of VAEs and the long-range modeling capabilities of Transformers.

- GH-VAE (Generative Hierarchical VAE) [76]: Employs a hierarchical latent space to model video from a global level down to fine-grained details, improving the quality and coherence of long-term generation.

- VAE-SD (Supervised Disentanglement VAE) [77]: A more recent work that combines disentangled representation learning with VAEs to improve the diversity and quality of video generation.

- TD-VAE (Temporally-Disentangled VAE) [78]: This work focuses on learning more structured representations by explicitly disentangling time-invariant factors (e.g., an object’s identity) from time-varying factors (e.g., its position and pose) within the latent space, which is crucial for better understanding and control.

- Dreamer Series as World Models [30]: While complex systems, the Dreamer models are fundamentally built on VAE principles. They learn a compact, latent world model (often called a Recurrent State-Space Model with a variational component) where an agent can efficiently plan future actions through “imagination.” This represents a highly successful application of VAEs for decision-making and control, directly relevant to predictive autonomy.

- UAV-Centric Analysis

- Strengths: VAEs provide a principled way to model uncertainty and generate diverse futures. For a UAV, this means it could anticipate multiple possible trajectories for a pedestrian, allowing the planner to choose a path that is safe under all likely scenarios. The trend towards structured, disentangled latent spaces (e.g., TD-VAE) and their successful application in world models (Dreamer) makes them highly compelling for learning controllable and interpretable environment models.

- Weaknesses: The diversity often comes at the cost of sharpness, as VAEs can also suffer from a form of blurring or mode-averaging within the decoder, though less severely than deterministic models. The quality of generation is highly dependent on the expressiveness of the latent space.
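As a minimal sketch of the two ingredients shared by the VAE-based predictors above, the snippet below computes the closed-form KL divergence between a diagonal-Gaussian posterior and a diagonal-Gaussian (learned) prior, and draws reparameterised latent samples. The function names and the two-dimensional latent are assumptions made for illustration; real models produce the means and log-variances with neural networks.

```python
import math
import random
from typing import List, Sequence


def kl_diag_gauss(mu_q: Sequence[float], logvar_q: Sequence[float],
                  mu_p: Sequence[float], logvar_p: Sequence[float]) -> float:
    """KL(q || p) for diagonal Gaussians, summed over latent dimensions."""
    total = 0.0
    for mq, lq, mp, lp in zip(mu_q, logvar_q, mu_p, logvar_p):
        total += 0.5 * (lp - lq + (math.exp(lq) + (mq - mp) ** 2) / math.exp(lp) - 1.0)
    return total


def sample_latent(mu: Sequence[float], logvar: Sequence[float],
                  rng: random.Random) -> List[float]:
    """Reparameterised sample z = mu + sigma * eps with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


# Hypothetical prior parameters for one time step (would come from a prior network).
rng = random.Random(0)
prior_mu, prior_logvar = [0.0, 0.0], [0.0, 0.0]

# Each latent sample would be decoded into a different plausible future frame.
future_latents = [sample_latent(prior_mu, prior_logvar, rng) for _ in range(3)]
```

During training the KL term regularises the posterior toward the prior; at inference only the prior is sampled, once per predicted frame, which is what yields diverse rollouts.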

- Type II: Generative Adversarial Networks (GANs)

GANs [35] use a minimax game between a generator and a discriminator to produce highly realistic outputs. The generator predicts future frames, while the discriminator is trained to distinguish between real and generated sequences. This adversarial pressure forces the generator to produce sharp, perceptually convincing frames that lie on the manifold of natural images. Key developments are summarized in Table 11.

Table 11.

Summary of key GAN-based probabilistic models.

- vid2vid (Video-to-Video Synthesis) [79]: A foundational work in conditional video generation. While not strictly a prediction model, it demonstrates how GANs can translate a sequence of abstract inputs (like semantic segmentation maps) into photorealistic video. This capability is crucial for generating realistic simulation data from abstract planner outputs.

- MoCoGAN [80]: Decomposes motion and content into separate latent spaces, allowing for better control and structured generation of video content.

- TGANv2 (Temporal GAN v2) [81]: This work directly addresses the challenge of temporal consistency. It pairs a recurrent generator with a temporal discriminator that evaluates sequences of frames, encouraging the model to learn smoother and more plausible long-term motion dynamics.

- DVD-GAN (Dual Video Discriminator GAN) [82]: Utilizes two discriminators—a spatial discriminator that judges frame quality and a temporal discriminator that judges motion realism across frames—to generate high-quality, coherent videos.

- StyleGAN-V and StyleVideoGAN [83,84]: These state-of-the-art models build upon the powerful StyleGAN architecture. They leverage style-based synthesis and disentangled latent spaces to generate high-resolution, continuous videos with a high degree of control over appearance and motion, demonstrating the continued power of GANs for high-fidelity applications.

- Video-GPT [47]: While primarily using a VQ-VAE and Transformer, it crucially employs an adversarial objective on top of its decoder to enhance the realism and sharpness of the final pixel-space output, showcasing the effectiveness of hybrid approaches.

- UAV-Centric Analysis

- Strengths: Unmatched ability to produce crisp, high-fidelity images. For applications like sim-to-real, data augmentation, or generating realistic training environments for other perception modules on a UAV, GANs are highly effective. The ability to perform conditional synthesis (vid2vid) is particularly valuable for creating realistic sensor data from simulated planner outputs.

- Weaknesses: GANs are notoriously difficult and unstable to train. They can also suffer from “mode collapse,” where the generator learns to produce only a limited variety of outputs, defeating the purpose of probabilistic modeling. Ensuring long-term temporal coherence remains a significant challenge, although models like TGANv2 have made progress.

- Type III: Denoising Diffusion Models

Diffusion models [85,86] are the current state-of-the-art in generative modeling. They work by progressively adding noise to data in a “forward process” and then training a neural network to reverse this process, starting from pure noise and iteratively denoising it to generate a clean sample. Key models are listed in Table 12.

Table 12.

Summary of key diffusion-based probabilistic models.

- MCVD (Masked Conditional Video Diffusion) [17]: A leading diffusion-based model for video prediction. It takes past frames as a condition and uses a masked denoising process to generate future frames, demonstrating remarkable quality and diversity.

- LVDM (Latent Video Diffusion Models) [51]: To manage the immense computational cost of running diffusion in pixel space, LVDM first uses an autoencoder to compress the video into a much lower-dimensional latent space. The diffusion process then occurs entirely in this latent space, followed by a final decoding step. This approach is now standard for large-scale video models.

- LFDM (Latent Flow Diffusion Model) [87]: This work directly tackles the slow sampling speed of diffusion models. By leveraging Rectified Flow, LFDM learns a straighter trajectory between noise and data in the latent space, enabling it to produce high-quality video in as few as 4–8 inference steps—a significant move towards real-time feasibility.

- W.A.L.T/Sora [64,88]: These models represent the state-of-the-art in generative fidelity by pairing the diffusion framework with a powerful Transformer backbone (often termed a Diffusion Transformer). They process video as a sequence of “spacetime patches,” enabling remarkable temporal consistency and high-resolution output.

- MotionCtrl [89]: This model enhances the controllability of video generation by allowing explicit inputs for camera motion (e.g., pan, tilt, zoom) and object trajectories. This is a crucial step towards creating world models that can be precisely controlled for planning and simulation in robotics.

- ExtDM (Extrapolative Diffusion Models) [90]: To address long-horizon generation, ExtDM introduces an autoregressive mechanism within the latent diffusion process. It predicts a short chunk of future latent codes, appends them to the context, and then predicts the next chunk, enabling the generation of much longer and more coherent video sequences than one-shot models.

- UAV-Centric Analysis

- Strengths: Produce the highest quality and most diverse samples among all generative models. Their ability to generate highly realistic future scenarios could be transformative for high-stakes UAV mission planning and simulation. Models like MotionCtrl show a clear path toward action-conditional world models with fine-grained control.

- Weaknesses: Their primary drawback is the slow inference speed. However, recent advancements like LFDM are rapidly closing this gap by drastically reducing the required number of sampling steps. While still challenging for onboard deployment, these efficiency improvements suggest that real-time diffusion models may become feasible in the near future, especially when combined with hardware acceleration and distillation techniques.
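The forward noising process that diffusion models learn to invert can be sketched in a few lines. The linear schedule (1e-4 to 0.02 over 1000 steps) follows common DDPM defaults and is an assumption here, as are the function names; the models listed above use their own schedules and operate on video tensors or latents rather than short lists.

```python
import math
import random
from typing import List, Sequence


def linear_betas(num_steps: int, beta_start: float = 1e-4,
                 beta_end: float = 0.02) -> List[float]:
    """Linearly spaced noise variances, one per forward step."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alpha_bars(betas: Sequence[float]) -> List[float]:
    """Cumulative products of (1 - beta_t): the fraction of signal variance left."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def q_sample(x0: Sequence[float], t: int, abar: Sequence[float],
             rng: random.Random) -> List[float]:
    """Closed-form forward sample: sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise."""
    signal, noise = math.sqrt(abar[t]), math.sqrt(1.0 - abar[t])
    return [signal * x + noise * rng.gauss(0.0, 1.0) for x in x0]


betas = linear_betas(1000)
abar = alpha_bars(betas)
x_noisy = q_sample([1.0, -1.0], t=999, abar=abar, rng=random.Random(0))
```

At the final step almost no signal remains, so generation has to start from near-pure noise and denoise iteratively, which is the source of the inference cost discussed above.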

3.2.3. Comparative Analysis of Generative Paradigms

While Section 3.2.1 and Section 3.2.2 outlined the strengths of deterministic, variational, adversarial, and diffusion-based models, UAV deployment requires a side-by-side evaluation of their trade-offs. Table 13 provides a structured summary, including predictive fidelity, diversity, inference efficiency, and lightweight strategies.

Table 13.

Comparison of generative paradigms for UAV video prediction. Metrics are representative values drawn from recent literature. FPS measured on Jetson Orin (edge) and RTX 4090 (workstation).

From this comparison, diffusion models clearly lead in fidelity and diversity but suffer from high latency. Recent studies show that reducing the number of diffusion steps from 250 to 20 improves throughput from ~2 to ~15 FPS on edge hardware, with only minor quality degradation (e.g., PSNR 28.2 → 28.0 dB, SSIM unchanged at 0.91) [91,92]. Latent compression (e.g., spatial downsampling combined with 8× temporal downsampling) further boosts speed but reduces PSNR by ~5 dB [91,93]. Knowledge distillation can reduce diffusion sampling to 4–8 steps, retaining over 95% of performance while reaching 10+ FPS on mobile GPUs [91,94,95]. For UAV deployment, this suggests that diffusion is viable only with aggressive acceleration, while VAEs and GANs offer better real-time trade-offs.
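A quick back-of-the-envelope check helps interpret the step-count figures quoted above. If latency scaled purely with the number of denoising steps, going from 250 to 20 steps would give a 12.5× speed-up (about 25 FPS from 2 FPS), not the reported ~15 FPS. The sketch below therefore assumes an affine latency model, per-frame latency = fixed overhead + per-step cost × steps, and fits it to the two approximate data points. Both the model and the function names are illustrative assumptions, not measurements.

```python
from typing import Tuple


def fit_affine_latency(steps_a: int, fps_a: float,
                       steps_b: int, fps_b: float) -> Tuple[float, float]:
    """Solve latency = overhead + per_step * steps from two (steps, FPS) points."""
    latency_a, latency_b = 1.0 / fps_a, 1.0 / fps_b
    per_step = (latency_a - latency_b) / (steps_a - steps_b)
    overhead = latency_a - per_step * steps_a
    return overhead, per_step


def predicted_fps(steps: int, overhead: float, per_step: float) -> float:
    """Throughput implied by the affine latency model for a given step count."""
    return 1.0 / (overhead + per_step * steps)


# Approximate figures quoted in the text: ~2 FPS at 250 steps, ~15 FPS at 20 steps.
overhead_s, per_step_s = fit_affine_latency(250, 2.0, 20, 15.0)
fps_at_8_steps = predicted_fps(8, overhead_s, per_step_s)
fps_at_4_steps = predicted_fps(4, overhead_s, per_step_s)
```

Under these assumptions the fit gives roughly 29 ms of step-independent overhead (encoding, decoding, data movement) and about 1.9 ms per step, so further step reduction yields diminishing returns once the fixed cost dominates.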

3.3. Axis C: Training and Inference Regime

This axis describes the strategic choices in how models are trained, how they generate predictions, and how they are optimized for real-world deployment on edge devices. These choices often have as much impact on final performance as the model architecture itself.

3.3.1. Advanced Training Paradigms

Beyond the basic training loop, several advanced paradigms are used to improve model performance, data efficiency, and task alignment, as summarized in Table 14.

Table 14.

Summary of advanced training paradigms.

- Curriculum and Multi-Stage Training

Instead of training on the full complexity of the task from the start, curriculum learning introduces concepts gradually.

- Loss Scheduling: A common curriculum is to start by training with a simple L1/L2 loss to establish a stable baseline. Perceptual and adversarial losses are then gradually introduced or their weights increased, guiding the model toward finer details and realism without causing early training instability.

- Multi-Stage Architecture Training: This is central to latent space models. First, a convolutional autoencoder (often a VQ-VAE) is trained on a massive image or video dataset to learn a robust and compressed latent space. In the second stage, this encoder/decoder is frozen, and the temporal model (Transformer, SSM, or diffusion model) is trained exclusively in the much lower-dimensional latent space. This approach, used by Video-GPT [47] and latent diffusion models, is far more computationally efficient than training the temporal model directly in pixel space.
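The loss-scheduling curriculum described above can be sketched as a simple weight schedule. The warm-up length, ramp length, and maximum weights below are hypothetical values chosen for illustration; suitable settings depend on the model and dataset.

```python
from typing import Dict


def ramp(step: int, start: int, end: int) -> float:
    """Linear ramp from 0 to 1 between `start` and `end` training steps."""
    if step <= start:
        return 0.0
    if step >= end:
        return 1.0
    return (step - start) / (end - start)


def loss_weights(step: int, warmup_steps: int = 10_000, ramp_steps: int = 20_000,
                 max_perceptual: float = 0.1,
                 max_adversarial: float = 0.01) -> Dict[str, float]:
    """Pixel loss from the start; perceptual and adversarial terms phased in later."""
    r = ramp(step, warmup_steps, warmup_steps + ramp_steps)
    return {"l1": 1.0,
            "perceptual": max_perceptual * r,
            "adversarial": max_adversarial * r}


def total_loss(losses: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of the individual loss terms."""
    return sum(weights[name] * losses[name] for name in weights)


# Example: halfway through the ramp, the auxiliary losses are at half weight.
mid_weights = loss_weights(20_000)
```

Keeping the schedule as a pure function of the step count makes it easy to log and to reproduce across training runs.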

- Self-Supervised Pre-Training and Fine-Tuning

Inspired by the success of models like BERT and GPT, pre-training on large, unlabeled datasets followed by fine-tuning on a specific task has become a dominant paradigm.

- Masked Autoencoding (MAE) [96] is a powerful self-supervised pre-training strategy. Models like MaskViT [97] are trained to reconstruct heavily masked (e.g., 75%) patches of a video. To succeed, the model must learn rich internal representations of motion and appearance.

- Transfer Learning: A model pre-trained on a large-scale general video dataset (e.g., Kinetics-400 for human actions or web-crawled videos) can then be fine-tuned on a smaller, domain-specific UAV dataset. This transfers general knowledge about visual dynamics, significantly improving performance and reducing the amount of specialized data needed. This is a crucial strategy to overcome the data scarcity challenge in the UAV domain.
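The masking step at the heart of masked autoencoding pre-training can be sketched as follows. The clip size (8 frames of 14 × 14 patches), the uniform random masking, and the per-patch scalar loss are simplifying assumptions for illustration; specific models may use other masking patterns.

```python
import random
from typing import List, Optional, Sequence


def random_patch_mask(num_patches: int, mask_ratio: float = 0.75,
                      rng: Optional[random.Random] = None) -> List[bool]:
    """Return a boolean list in which True marks a patch hidden from the encoder."""
    rng = rng or random.Random()
    num_masked = int(round(num_patches * mask_ratio))
    masked = set(rng.sample(range(num_patches), num_masked))
    return [i in masked for i in range(num_patches)]


def masked_mse(pred: Sequence[float], target: Sequence[float],
               mask: Sequence[bool]) -> float:
    """Reconstruction loss averaged over masked patches only (one scalar per patch)."""
    errors = [(p - t) ** 2 for p, t, m in zip(pred, target, mask) if m]
    return sum(errors) / len(errors) if errors else 0.0


# Hypothetical clip tokenised into 8 frames x 14 x 14 spatial patches.
num_patches = 8 * 14 * 14
mask = random_patch_mask(num_patches, 0.75, random.Random(0))
num_visible = num_patches - sum(mask)
```

With a 75% ratio the encoder only sees a quarter of the patches, which is also why this style of pre-training is comparatively cheap per sample.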

- Fine-tuning with Reinforcement Learning (RL)

For control tasks, aligning the predictive model with the ultimate goal (e.g., reaching a waypoint without crashing) is more important than achieving perfect pixel accuracy.

- World Models [29,30] fully embrace this concept. The predictive model is trained to forecast future latent states. A separate policy network is then trained via RL *entirely within the latent space of the world model*. The gradients from the RL reward signal can be used to fine-tune the predictive model itself, ensuring that what it predicts is not just visually plausible but also useful for making good decisions. This tight coupling of prediction and control is the essence of advanced predictive autonomy.

3.3.2. Generation and Inference Strategies

This defines the core mechanism by which a model produces its output sequence. Table 15 compares the two main approaches.

Table 15.

Comparison of generation and inference strategies.

- Autoregressive (AR) Models

Autoregressive (AR) models generate the future one frame at a time: the prediction for each future frame is conditioned on the observed context and on all previously generated frames. Most ConvLSTM- and SSM-based predictors adopt this scheme due to their recurrent inference path.

A well-known pitfall of AR decoding is exposure bias. During training, the model typically consumes ground-truth frames (teacher forcing), which is efficient but induces a train–test mismatch: at inference it must condition on its own, potentially imperfect, rollouts. The resulting feedback can degrade quality rapidly over long horizons. Scheduled Sampling [98] mitigates this by gradually replacing ground-truth inputs with model predictions during training, thereby acclimating the model to its own errors.
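The following sketch illustrates scheduled sampling on a toy scalar sequence. It uses the inverse-sigmoid decay schedule for the teacher-forcing probability; the scalar “frames”, the deliberately biased predictor, and the function names are assumptions made purely for illustration.

```python
import math
import random
from typing import Callable, List, Sequence


def teacher_forcing_prob(iteration: int, k: float = 1000.0) -> float:
    """Inverse-sigmoid decay: probability of feeding the ground-truth frame."""
    exponent = min(iteration / k, 700.0)  # clamp to avoid overflow in exp
    return k / (k + math.exp(exponent))


def training_rollout(ground_truth: Sequence[float],
                     predict_next: Callable[[float], float],
                     iteration: int, rng: random.Random,
                     k: float = 1000.0) -> List[float]:
    """Roll out predictions, mixing ground-truth and self-generated inputs."""
    eps = teacher_forcing_prob(iteration, k)
    prev = ground_truth[0]
    outputs = []
    for t in range(1, len(ground_truth)):
        pred = predict_next(prev)
        outputs.append(pred)
        # With probability eps use the true frame, otherwise the model's own output.
        prev = ground_truth[t] if rng.random() < eps else pred
    return outputs


def biased_step(x: float) -> float:
    """Hypothetical imperfect predictor that overshoots by 0.1 per step."""
    return x + 1.1


frames = [0.0, 1.0, 2.0, 3.0]
early = training_rollout(frames, biased_step, iteration=0, rng=random.Random(0))
late = training_rollout(frames, biased_step, iteration=10**7, rng=random.Random(0))
```

Early in training the rollout is almost fully teacher-forced, so the per-step error stays at 0.1; late in training the model consumes its own outputs and the error compounds to 0.1, 0.2, 0.3, which is the regime it will face at inference.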

- Non-Autoregressive/One-Shot Models

These models generate the entire sequence of future frames (or large blocks of them) in a single forward pass, breaking the sequential dependency. This is typical for Transformer-based models and some diffusion models.

- MagViT [48] is a prime example of a non-autoregressive transformer that predicts all future frames’ tokens in parallel, leading to a significant speed-up. Similarly, latent diffusion models like LVDM [51] can generate a sequence of future latents in a single diffusion process, which are then decoded in parallel.

- UAV-Centric Trade-off: AR models offer flexibility for variable-length prediction but can be slow and suffer from error accumulation. Non-AR models are much faster for fixed-length prediction but may lack the fine-grained temporal consistency of their AR counterparts. For UAVs, a fast non-AR model might be ideal for short-term reactive planning, while a more deliberate AR model could be used for longer-term mission planning offline.

3.3.3. Deployment and Acceleration Strategies

Bridging the lab-to-field gap requires aggressive optimization to fit powerful models onto resource-constrained UAV hardware. Key techniques are outlined in Table 16.

Table 16.

Summary of deployment and acceleration strategies.

- Knowledge Distillation

This involves training a small, fast “student” model to mimic a large “teacher.”

- Output-Based Distillation: The student is trained to match the final predictions of the teacher. For a diffusion model, this means training a single-step student network to replicate the output of the multi-step teacher, a technique explored in works like [95].

- Feature-Based Distillation: A more powerful technique where the student is also trained to match the intermediate feature maps of the teacher. This provides a richer training signal. A recent framework, F-VLM [99], shows how pre-trained vision-language models can be distilled into much smaller, efficient students for deployment. While not specific to prediction, the principles are directly applicable.
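A combined output-based and feature-based distillation objective can be sketched as below. The equal treatment of layers, the `feat_weight` value, and the assumption that student features have already been projected to the teacher's dimensionality are illustrative choices, not the recipe of any cited work.

```python
from typing import Sequence


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equally sized vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def distillation_loss(student_out: Sequence[float],
                      teacher_out: Sequence[float],
                      student_feats: Sequence[Sequence[float]],
                      teacher_feats: Sequence[Sequence[float]],
                      feat_weight: float = 0.5) -> float:
    """Output matching plus the average feature-matching error over chosen layers."""
    output_term = mse(student_out, teacher_out)
    feature_term = sum(
        mse(s, t) for s, t in zip(student_feats, teacher_feats)
    ) / len(teacher_feats)
    return output_term + feat_weight * feature_term


# Toy example: one output vector and one intermediate feature map per model.
example_loss = distillation_loss(
    student_out=[1.0, 2.0], teacher_out=[1.0, 4.0],
    student_feats=[[0.0, 0.0]], teacher_feats=[[2.0, 0.0]],
)
```

In practice the teacher is frozen and only the student receives gradients; the feature term supplies a training signal even where the final outputs already agree.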

- Quantization and Pruning

These techniques reduce the model’s size and computational demands at the hardware level.

- Quantization: Reduces the numerical precision of weights and activations (e.g., from FP32 or FP16 to INT8 or FP8), a crucial step for deployment on platforms with specialized hardware like NVIDIA’s Tensor Cores or Qualcomm’s AI Engines. Toolsets like NVIDIA’s PTQ Toolkit provide methods for calibrating and converting models to FP8 with minimal accuracy loss. As demonstrated in works like ZeroQuant [100], sophisticated quantization techniques can be applied even to massive transformer models.

- Pruning: While classic unstructured pruning creates sparse weight matrices that are hard to accelerate on GPUs, structured pruning removes entire filters or attention heads, yielding smaller dense models that run faster on standard hardware. Recent methods like LLM-Pruner [101] have developed effective strategies for pruning large transformer models, which can be adapted for vision transformers used in video prediction.
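To make the two techniques concrete, the sketch below shows symmetric per-tensor INT8 quantization and L1-norm filter pruning on plain Python lists. Note that this swaps in INT8 as a simpler stand-in for the FP8 conversion mentioned above, and that the function names, the max-abs calibration, and the L1 ranking criterion are illustrative assumptions rather than the behaviour of any particular toolkit.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map [-max_abs, max_abs] onto [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Recover approximate floating-point weights from integer codes."""
    return [v * scale for v in q]


def prune_filters_l1(filters: Sequence[Sequence[float]],
                     keep_ratio: float) -> Tuple[List[Sequence[float]], List[int]]:
    """Structured pruning: keep the filters with the largest L1 norm."""
    n_keep = max(1, int(round(len(filters) * keep_ratio)))
    ranked = sorted(range(len(filters)),
                    key=lambda i: sum(abs(w) for w in filters[i]),
                    reverse=True)
    kept = sorted(ranked[:n_keep])  # preserve the original filter order
    return [filters[i] for i in kept], kept


weights = [0.5, -1.27, 0.003, 1.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

filters = [[0.1, 0.1], [1.0, -1.0], [0.0, 0.01], [0.5, 0.5]]
pruned, kept_indices = prune_filters_l1(filters, keep_ratio=0.5)
```

The rounding error of each weight is bounded by half the scale, and the pruned model remains dense, which is what makes structured pruning straightforward to accelerate.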

- Hardware–Software Co-Design

This emerging paradigm involves designing the neural network architecture with the target hardware’s capabilities in mind from the very beginning.

- Neural Architecture Search (NAS) can be used to automatically discover efficient model architectures. For instance, Once-for-All (OFA) [102] trains a single, large “over-parameterized” network from which specialized sub-networks can be quickly extracted to meet diverse hardware constraints without retraining. This allows for deploying tailored models for different UAV platforms (e.g., a small model for a nano drone, a larger one for a heavy-lift hexacopter) from a single trained asset.

3.3.4. Comparative Analysis of Training and Inference Regimes

While Section 3.3.1, Section 3.3.2 and Section 3.3.3 introduced autoregressive, non-autoregressive, and acceleration strategies individually, it is essential to evaluate their relative trade-offs for UAV deployment. Table 17 summarizes these regimes across multiple dimensions.

Table 17.

Comparison of training and inference regimes for UAV video prediction.

In summary, AR regimes excel at modeling long temporal dependencies but are limited by latency and error accumulation. Non-AR approaches deliver high efficiency, making them ideal for real-time UAV tasks, yet their fixed horizon constrains applicability. Hybrid approaches, though more complex, offer a promising compromise by mitigating error accumulation while retaining partial flexibility. This comparative view highlights that different UAV applications (e.g., long-term navigation vs. short-term obstacle avoidance) naturally map to distinct inference regimes.

4. Key Applications and Challenges for Embodied UAVs

The theoretical advancements in video prediction are compelling, but their true value is realized when grounded in real-world applications. For embodied UAVs, predictive models are not merely academic exercises; they are enabling technologies for a new level of autonomy. This section explores three core applications—navigation, tracking, and anomaly detection—analyzing the specific demands each places on model design through the lens of our three-axis taxonomy.

4.1. Predictive Control for Autonomous Navigation in GPS-Denied Environments

In environments like urban canyons, dense forests, or indoor spaces, GPS signals are unreliable or unavailable. Here, vision becomes the primary sense for navigation. Predictive control, particularly Model Predictive Control (MPC) [103], leverages a forward model of the world to plan actions. Video prediction models serve as powerful, learned forward models.

- Problem Setting and Approach

Given the UAV’s past observations and a candidate sequence of future control actions, an action-conditional video prediction model forecasts the resulting future states or observations. A planner then optimizes over the space of possible action sequences by minimizing a cost function evaluated on the imagined futures. This “imagination-based planning” is the core idea of seminal works like Dreamer [15] and its successor DreamerV3 [30]. In the context of robotics, this approach is often referred to as Visual MPC, where sampling-based optimizers like MPPI [104] provide efficient algorithms for searching the action space. The goal is to navigate complex environments by “thinking ahead.”

- Model Design Requirements (Mapping to Taxonomy)

- Axis A (Operator): Long planning horizons are essential for non-myopic behavior. This makes SSMs and efficient Transformers preferable to ConvLSTMs, as they are less prone to vanishing gradients and better at capturing long-range dependencies with favorable scaling properties. Prediction is often performed in a compact latent space rather than pixel space to save computation.

- Axis B (Generative Nature): Navigation in dynamic environments is fraught with uncertainty. A probabilistic model (e.g., VAE-based like SVG) is highly desirable as it can generate multiple possible futures for other agents (e.g., pedestrians, vehicles), enabling the planner to find a robust policy that is safe across many outcomes.

- Axis C (Training/Inference): The model must be action-conditional. Training data must contain tightly synchronized `(observation, action, next_observation)` triplets. At inference, the model is used in an autoregressive fashion to roll out long trajectories.
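The imagination-based planning loop described in this subsection can be sketched with an MPPI-style sampler on a toy problem. Everything concrete here is an assumption for illustration: the 1-D state, the `toy_model` stand-in for a learned action-conditional predictor, the quadratic goal cost, and the simplifications relative to full MPPI (no warm-started nominal sequence and no control-cost term).

```python
import math
import random
from typing import Callable, List, Optional, Sequence


def rollout_cost(model: Callable[[float, float], float], state: float,
                 actions: Sequence[float], goal: float) -> float:
    """Autoregressively imagine the trajectory and accumulate distance-to-goal cost."""
    cost = 0.0
    for action in actions:
        state = model(state, action)
        cost += (state - goal) ** 2
    return cost


def mppi_plan(model: Callable[[float, float], float], state: float, goal: float,
              horizon: int = 5, num_samples: int = 256, sigma: float = 1.0,
              temperature: float = 1.0,
              rng: Optional[random.Random] = None) -> List[float]:
    """Sample action sequences, score them in imagination, and average by weight."""
    rng = rng or random.Random()
    samples = [[max(-1.0, min(1.0, rng.gauss(0.0, sigma))) for _ in range(horizon)]
               for _ in range(num_samples)]
    costs = [rollout_cost(model, state, seq, goal) for seq in samples]
    best = min(costs)
    # Exponentiated negative cost: low-cost rollouts dominate the average.
    weights = [math.exp(-(c - best) / temperature) for c in costs]
    total = sum(weights)
    return [sum(w * seq[t] for w, seq in zip(weights, samples)) / total
            for t in range(horizon)]


def toy_model(state: float, action: float) -> float:
    """Hypothetical stand-in for a learned latent dynamics model."""
    return state + action


plan = mppi_plan(toy_model, state=0.0, goal=5.0, rng=random.Random(0))
first_action = plan[0]  # in receding-horizon control only this action is executed
```

In a real system the rollout would run in the latent space of the video prediction model, and the cost would be computed from predicted latents or decoded observations rather than a scalar position.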

- UAV-Specific Challenges

Unlike ground robots, UAVs operate in full 3D space with 6-DoF dynamics. This creates an enormous action space for the planner to search. The visual scene is dominated by ego-motion, and the lack of a ground-plane constraint means that small prediction errors in pitch or roll can lead to catastrophically divergent future trajectories. While early works demonstrated visual MPC on UAVs for tasks like trail following [105], adapting these models to the extreme dynamics and safety requirements of complex urban environments remains a major challenge. Recent work such as Unified World Models (UWM) [106] integrates **video diffusion** and **action diffusion** within a single transformer architecture to jointly model dynamics, inverse/forward mappings, and video generation across multiple robotic domains. By training on both robot trajectories and unlabeled video data, UWM aims to generalize across diverse robots and environments with a single model.

4.2. Proactive Target Tracking Through Occlusion and Abrupt Motion

Visual object tracking from a UAV is a core capability for applications ranging from surveillance to search and rescue. A key failure mode for trackers is when the target is temporarily occluded or undergoes abrupt motion. Video prediction offers a robust solution by providing a learned motion prior.

- Problem Setting and Approach

Most modern trackers follow a tracking-by-detection paradigm, which re-localizes the target in each frame. However, when the detector fails, the track is lost. By incorporating a predictive module, the tracker can “coast” through such failures. The system maintains a target state (e.g., a Kalman filter state) which is propagated forward in time using both a classical motion model and a deep predictive model. The deep model can forecast the target’s appearance and location, enabling rapid re-detection after occlusion. This principle is explored in works like P-Tracker [107] and has roots in earlier ideas of using generative models to handle occlusion in tracking [108]. Recent Siamese network-based trackers like Siam R-CNN [109] can also be augmented with temporal prediction modules to improve long-term robustness.
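The “coasting” behaviour can be illustrated with the classical half of such a system: a 1-D constant-velocity Kalman filter that keeps predicting when no detection arrives. The noise values and class name are illustrative assumptions; in a full tracker, a learned predictor's location forecast could additionally be fed in as a pseudo-measurement, which is not shown here.

```python
from typing import List, Optional, Tuple


class ConstantVelocityKF:
    """1-D constant-velocity Kalman filter over the state [position, velocity]."""

    def __init__(self, pos: float, vel: float, dt: float = 1.0,
                 process_var: float = 0.01, meas_var: float = 1.0) -> None:
        self.x: List[float] = [pos, vel]
        self.P: List[List[float]] = [[1.0, 0.0], [0.0, 1.0]]
        self.dt, self.q, self.r = dt, process_var, meas_var

    def predict(self) -> None:
        """Propagate state and covariance: x = F x, P = F P F^T + Q."""
        dt, P = self.dt, self.P
        self.x = [self.x[0] + dt * self.x[1], self.x[1]]
        p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1] + self.q
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1] + self.q
        self.P = [[p00, p01], [p10, p11]]

    def update(self, z: float) -> None:
        """Correct the state with a position measurement z."""
        P = self.P
        s = P[0][0] + self.r                 # innovation variance
        k0, k1 = P[0][0] / s, P[1][0] / s    # Kalman gain
        y = z - self.x[0]                    # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        self.P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]

    def step(self, detection: Optional[float]) -> Tuple[float, float]:
        """Predict, then update only if the detector returned a measurement."""
        self.predict()
        if detection is not None:
            self.update(detection)
        return self.x[0], self.P[0][0]


# Target occluded for three frames (no detections), then re-detected at 6.5.
tracker = ConstantVelocityKF(pos=0.0, vel=2.0)
occluded = [tracker.step(None) for _ in range(3)]
redetected = tracker.step(6.5)
```

The position variance grows while the target is occluded, which can be used to widen the re-detection search window, and it shrinks again once a detection is fused.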

- Model Design Requirements (Mapping to Taxonomy)

- Axis A (Operator): Since tracking is a real-time task, low latency is critical. Lightweight CNNs (e.g., SimVP-style) or shallow ConvLSTMs are often preferred over heavy Transformers or SSMs for this application. The prediction can be done on a small, cropped region of interest (ROI) around the target to save computation.

- Axis B (Generative Nature): For most tracking scenarios, a fast deterministic prediction is sufficient. The primary goal is to get a good estimate of the target’s next location, not to generate photorealistic crops. However, for highly erratic targets, a probabilistic model could forecast a distribution of possible next locations, guiding a more robust search strategy.

- Axis C (Training/Inference): Training with ROI-aware losses is crucial. The loss should be computed only on or near the target’s bounding box to ensure the model focuses on learning the target’s specific dynamics, not the background.
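An ROI-aware loss of the kind described under Axis C can be sketched as a masked L1 error. The box convention (`x0, y0, x1, y1` with exclusive upper bounds), the optional margin, and the nested-list frame representation are assumptions for illustration.

```python
from typing import Sequence, Tuple

Frame = Sequence[Sequence[float]]


def roi_l1_loss(pred: Frame, target: Frame,
                box: Tuple[int, int, int, int], margin: int = 0) -> float:
    """Mean absolute error restricted to the target box, expanded by `margin` pixels."""
    height, width = len(target), len(target[0])
    x0, y0, x1, y1 = box
    x0, y0 = max(0, x0 - margin), max(0, y0 - margin)
    x1, y1 = min(width, x1 + margin), min(height, y1 + margin)
    total, count = 0.0, 0
    for y in range(y0, y1):
        for x in range(x0, x1):
            total += abs(pred[y][x] - target[y][x])
            count += 1
    return total / count if count else 0.0


# 4 x 4 toy frames with the target occupying the central 2 x 2 region.
target = [[0.0] * 4 for _ in range(4)]
background_error = [row[:] for row in target]
background_error[0][0] = 1.0   # mistake outside the ROI
target_error = [row[:] for row in target]
target_error[1][1] = 1.0       # mistake inside the ROI
box = (1, 1, 3, 3)
```

Errors outside the box contribute nothing, so model capacity is not spent on the background; a small margin keeps some context around very small targets.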

- UAV-Specific Challenges

Targets viewed from UAVs are often very small, occupy only a few pixels, and undergo dramatic scale and appearance changes. The VisDrone [110] and UAVDT [111] datasets are filled with such challenging scenarios. The high motion of both the camera and the target means that simple motion models (like constant velocity) are often insufficient, making learned, content-aware predictors particularly valuable.

4.3. Unsupervised Anomaly Detection for Infrastructure Inspection

UAVs are increasingly used for the automated inspection of critical infrastructure. Unsupervised anomaly detection aims to identify novel or unexpected phenomena—such as a new crack, corrosion, or vegetation overgrowth—without prior training on examples of these faults.

- Problem Setting and Approach

The “prediction-based” paradigm is standard for this task. A model is trained exclusively on large amounts of data depicting “normal” conditions. At test time, the model predicts the next frame(s), and an anomaly score is computed based on the prediction error (PE) between the predicted frame and the actual observed frame. This idea has been extensively explored in video anomaly detection (VAD), with models like AnoPred [112] and methods that combine reconstruction and prediction losses [18]. The core assumption is that the model, having only seen normal data, will fail to accurately predict unseen anomalous events. This approach is explored in aerial contexts in datasets like Drone-Anomaly [113], and a comprehensive survey of VAD techniques can be found in [114].
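One common way to turn prediction error into an anomaly score is to compute the PSNR of each predicted frame and min-max normalise it within a video, so that the worst-predicted frame scores 1. The sketch below follows that idea on flattened toy frames; the clamping constant and function names are assumptions for illustration.

```python
import math
from typing import List, Sequence


def psnr(pred: Sequence[float], actual: Sequence[float], max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB between a predicted and an observed frame."""
    mse = sum((p - a) ** 2 for p, a in zip(pred, actual)) / len(pred)
    mse = max(mse, 1e-10)  # clamp so that perfect predictions stay finite
    return 10.0 * math.log10(max_val ** 2 / mse)


def anomaly_scores(psnrs: Sequence[float]) -> List[float]:
    """Min-max normalise PSNR within one video; a low PSNR gives a high score."""
    lo, hi = min(psnrs), max(psnrs)
    if hi == lo:
        return [0.0] * len(psnrs)
    return [1.0 - (p - lo) / (hi - lo) for p in psnrs]


# Flattened toy frames: the second prediction is far from what was observed.
actual = [0.5, 0.5, 0.5, 0.5]
good_prediction = [0.5, 0.5, 0.5, 0.6]
poor_prediction = [0.0, 1.0, 0.0, 1.0]
scores = anomaly_scores([psnr(good_prediction, actual), psnr(poor_prediction, actual)])
```

Because the normalisation is per video, a threshold on the score flags frames that are unusual relative to the rest of the same flight, which partly absorbs global changes such as illumination.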

- Model Design Requirements (Mapping to Taxonomy)

- Axis A (Operator): High fidelity is key. The model must be powerful enough to accurately reconstruct fine details and textures of normal data. Deep CNN backbones are common. To handle the strong geometric structure, models incorporating explicit warping/flow can be very effective, as they can separate appearance changes from simple viewpoint shifts.

- Axis B (Generative Nature): A high-capacity deterministic model is often the best choice, as the goal is to learn a single, precise model of the normal data distribution. Some works explore using the discriminator of a GAN to identify out-of-distribution (anomalous) samples.

- Axis C (Training/Inference): A critical preprocessing step is ego-motion compensation. By using IMU data or estimating optical flow, the input frames can be warped to a reference frame, ensuring the model doesn’t flag changes due to UAV movement as anomalies.

- UAV-Specific Challenges

The definition of “normal” can be highly variable due to changing illumination, weather, and seasons. A model trained only on sunny-day data may falsely flag shadows on a cloudy day as anomalies. Developing models that are robust to these environmental variations while remaining sensitive to subtle structural faults is a major open research problem. Furthermore, camera jitter and ego-motion can easily be mistaken for anomalies, making stabilization (as discussed in Section 5.1) a critical prerequisite.

4.4. UAV-Centric Datasets and Their Limitations

The performance of any predictive model is fundamentally tied to the quality and characteristics of the training data. While several UAV-centric datasets exist, many were not designed with video prediction in mind. We supplement our earlier table with datasets such as UAV-ARG [115], which provides high-quality data for gesture recognition, and Blackbird [116], a large-scale dataset specifically for aggressive, vision-based drone racing.

- Critical Gaps in Existing Datasets

A major bottleneck for advancing UAV video prediction, especially for world models, is the lack of large-scale datasets that provide long, continuous video sequences tightly synchronized with high-frequency 6-DoF ego-motion data (IMU) and control inputs. Datasets from the autonomous driving domain, like Argoverse 2 [117] and the Waymo Open Dataset [118], provide a blueprint for what is needed: diverse scenarios with rich, multi-modal, synchronized sensor data. The remote sensing community needs a similar benchmark to truly push the boundaries of predictive autonomy for UAVs.

4.5. Toward Standardized Evaluation Protocols for UAV Settings

Meaningful progress requires consistent and comprehensive evaluation. We advocate for a standardized protocol that moves beyond simple PSNR/SSIM.

- Stratified Reporting

Performance should not be a single number. Results must be stratified by key factors affecting difficulty: scene type (e.g., urban vs. rural), ego-motion dynamics (e.g., hover vs. aggressive flight, measured by average optical flow magnitude), and environmental conditions (day/night, clear/rainy).

- Task-Oriented Metrics

Evaluation should be tied to the downstream task. For navigation, as demonstrated in benchmarks like the CARLA challenge [119], metrics should include route completion rates and infractions (collisions). For tracking, the primary metric is tracking accuracy (e.g., AUC, precision) during occlusion, directly measuring the benefit of the predictive module.

- Efficiency and Power Disclosure

A model’s utility for UAVs is meaningless without a clear report of its performance on relevant edge hardware. We propose a standard reporting format: `FPS|VRAM (GB)|Power (W) @ Resolution on [Hardware] with [Precision]`. For instance: `32 FPS|3.1 GB|18 W @ 512 × 512 on Jetson AGX Orin with FP16`. This transparency is essential for reproducibility and fair comparison.
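The proposed reporting format is simple enough to generate programmatically, which helps keep results tables consistent. The dataclass and its field names below are hypothetical; only the output string layout follows the format given above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EfficiencyReport:
    """One efficiency measurement of a model on a specific hardware target."""
    fps: float
    vram_gb: float
    power_w: float
    width: int
    height: int
    hardware: str
    precision: str

    def format(self) -> str:
        """Render as 'FPS|VRAM (GB)|Power (W) @ Resolution on [Hardware] with [Precision]'."""
        return (f"{self.fps:g} FPS|{self.vram_gb:g} GB|{self.power_w:g} W "
                f"@ {self.width} × {self.height} on {self.hardware} with {self.precision}")


report = EfficiencyReport(fps=32, vram_gb=3.1, power_w=18,
                          width=512, height=512,
                          hardware="Jetson AGX Orin", precision="FP16")
line = report.format()
```

Storing the raw fields rather than only the formatted string also makes it straightforward to stratify or sort results by hardware and precision later.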

5. Engineering Considerations and Deployment

A performant video prediction model in a Jupyter notebook is merely a prototype. Transforming it into a reliable component on an autonomous UAV requires navigating a series of significant engineering challenges, from system architecture to real-time acceleration and safety validation.

5.1. System Architecture on Board a UAV

An effective onboard system is not a monolithic program but a collection of asynchronous, communicating modules. Modern robotics frameworks like ROS 2 (Robot Operating System 2) [120] provide a robust, real-time-capable foundation for such architectures.

- A Decoupled, Multi-Module Pipeline

A typical pipeline for predictive autonomy can be structured as follows:

- Sensor Abstraction Layer: Nodes that publish synchronized sensor data (camera frames, IMU readings, GPS if available) with consistent timestamps.

- State Estimation Node: A Visual-Inertial Odometry (VIO) or SLAM module (e.g., VINS-Fusion [121]) that provides a high-frequency estimate of the UAV’s 6-DoF pose. This is the bedrock of the system.

- Prediction Node: This core module subscribes to sensor data and pose estimates. It first performs ego-motion stabilization by warping incoming frames to a common reference frame based on the VIO data. This crucial step offloads the modeling of background motion from the neural network, allowing it to focus its capacity on learning object dynamics and scene changes. The stabilized frames are then fed into the video prediction model.

- Planning and Decision Node: Subscribes to the predicted futures (e.g., latent states or semantic maps) from the Prediction Node. It performs trajectory optimization or task planning (e.g., using MPC) and publishes control commands.

- Control and Actuation Node: A low-level controller (e.g., a PX4 flight controller [122]) that translates high-level commands into motor signals.

This decoupled design allows for modular development, testing, and multi-rate execution, which is essential in a complex real-time system.
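To make the ego-motion stabilization step in the Prediction Node concrete, the following sketch maps a pixel from the current frame into a reference frame under the simplifying assumption of pure camera rotation (or a distant scene), where the warp reduces to the homography H = K R K^-1. The intrinsics, the rotation convention (R rotates ray directions from the current camera frame into the reference camera frame), and all function names are assumptions for illustration. A full system would also handle translation and scene depth.

```python
import math

def matmul3(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def rotation_homography(fx, fy, cx, cy, R):
    """Homography H = K R K^-1 induced by a pure camera rotation R."""
    K = [[fx, 0.0, cx], [0.0, fy, cy], [0.0, 0.0, 1.0]]
    K_inv = [[1.0 / fx, 0.0, -cx / fx],
             [0.0, 1.0 / fy, -cy / fy],
             [0.0, 0.0, 1.0]]
    return matmul3(matmul3(K, R), K_inv)

def warp_pixel(H, u, v):
    """Apply homography H to pixel (u, v) and dehomogenize."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    return x / w, y / w

def roll_matrix(angle_rad):
    """Rotation about the optical (z) axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

# Hypothetical intrinsics and a 90-degree roll reported by the state estimator.
H = rotation_homography(500.0, 500.0, 320.0, 240.0, roll_matrix(math.pi / 2))
stabilized = warp_pixel(H, 330.0, 240.0)
```

Applying the same homography to every pixel (with interpolation) yields the stabilized frame that is passed to the prediction model.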

5.2. Compression and Acceleration for Real-Time Inference

To meet the stringent latency and power budgets of a UAV, models must be aggressively optimized.

- Inference Acceleration with Specialized Engines

Running a PyTorch v2.8.0 or TensorFlow v2.16.1 model directly in its native framework runtime on an edge device is typically inefficient. Hardware vendors provide specialized inference engines like NVIDIA’s TensorRT [123], which take a trained model and perform several key optimizations:

- Graph Optimization: Fusing multiple layers (e.g., Conv -> BatchNorm -> ReLU) into a single computational kernel to reduce overhead.

- Precision Calibration: Intelligently converting model weights to lower-precision formats like FP16 or FP8 (quantization) to leverage specialized hardware units (e.g., Tensor Cores) for massive speedups.

- Kernel Auto-Tuning: Selecting the fastest available implementation for each layer from a library of hardware-specific kernels.
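The layer-fusion idea can be illustrated with the standard algebra for folding an inference-time batch normalization into the preceding linear (or 1 × 1 convolutional) layer. This is a sketch of the general technique in plain Python, not of TensorRT's internal implementation; all function names and numeric values are hypothetical.

```python
import math

def linear(weights, bias, x):
    """y = W x + b for a weight matrix given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def batchnorm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-time batch normalization with fixed running statistics."""
    return [g * (yi - m) / math.sqrt(v + eps) + b
            for yi, g, b, m, v in zip(y, gamma, beta, mean, var)]

def relu(y):
    return [max(0.0, yi) for yi in y]

def fold_batchnorm(weights, bias, gamma, beta, mean, var, eps=1e-5):
    """Return fused (W', b') such that W' x + b' == BN(W x + b)."""
    fused_w, fused_b = [], []
    for row, b, g, bt, m, v in zip(weights, bias, gamma, beta, mean, var):
        scale = g / math.sqrt(v + eps)
        fused_w.append([w * scale for w in row])
        fused_b.append((b - m) * scale + bt)
    return fused_w, fused_b

# Illustrative parameters for a layer with two inputs and two outputs.
W = [[1.0, -2.0], [0.5, 0.25]]
b = [0.1, -0.3]
gamma, beta = [1.5, 0.8], [0.2, -0.1]
mean, var = [0.4, 0.0], [2.0, 0.5]
x = [3.0, -1.0]

reference = relu(batchnorm(linear(W, b, x), gamma, beta, mean, var))
W_f, b_f = fold_batchnorm(W, b, gamma, beta, mean, var)
fused = relu(linear(W_f, b_f, x))
```

After folding, three operations collapse into one multiply-add followed by a ReLU, which is what allows an engine to execute them as a single kernel.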

- Advanced Compression Techniques

Beyond standard optimization, advanced techniques are needed:

- Quantization-Aware Training (QAT): While Post-Training Quantization (PTQ) is fast, it can sometimes lead to accuracy degradation. QAT simulates the effects of quantization during the training process itself, allowing the model to adapt its weights to the lower precision, often resulting in higher accuracy for FP8 models [124]. This is particularly important for sensitive temporal models.

- Structured Pruning and Distillation: Instead of just removing individual weights (unstructured pruning), which leads to sparse matrices that are inefficient on GPUs, structured pruning removes entire channels or blocks. This can be combined with knowledge distillation, where a smaller “student” model is trained not only on the data but also to mimic the feature maps or motion field predictions of a larger “teacher” model, preserving performance in a much smaller package.
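The core mechanism of QAT is a quantize-dequantize ("fake quantization") step inserted into the forward pass, so that the network is trained against the rounding error it will see at deployment. The sketch below assumes symmetric, per-tensor quantization on a uniform 8-bit integer grid as a stand-in. This may differ from the low-precision format used on a given platform, and the function name is hypothetical. In actual training, gradients are usually passed through the rounding operation unchanged (the straight-through estimator), which is not shown here.

```python
def fake_quantize(values, num_bits=8):
    """Quantize to a symmetric integer grid, then map back to floats.

    Returns the dequantized values and the scale that was used.
    """
    qmax = 2 ** (num_bits - 1) - 1          # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    dequantized = []
    for v in values:
        q = round(v / scale)                 # nearest grid point
        q = max(-qmax, min(qmax, q))         # clamp to the representable range
        dequantized.append(q * scale)
    return dequantized, scale

weights = [-1.0, -0.37, 0.0, 0.123, 1.0]
quantized, scale = fake_quantize(weights)
max_error = max(abs(w - q) for w, q in zip(weights, quantized))
```

The rounding error is bounded by half the grid step, so `max_error` stays below `scale / 2` (up to floating-point tolerance); QAT lets the weights adapt to this perturbation during training.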

5.3. Safety, Fault Tolerance, and Robust Testing

An autonomous system is only as good as its worst-case performance. Safety cannot be an afterthought.

- Uncertainty-Aware Safety Layers

The system must be able to recognize when its predictive model is failing or uncertain. This requires the model to not only make a prediction but also to quantify its own confidence. A comprehensive survey of uncertainty quantification techniques in deep learning can be found in [125]. Probabilistic models (from Axis B) are a natural fit here.

- By generating multiple future samples or estimating the parameters of a predictive distribution, the system can calculate the variance or divergence. A high divergence (the model is “unsure”) can serve as a trigger for a safety protocol, a core principle of safe learning-based control [126,127]. The UAV could then revert to a conservative behavior, such as hovering or increasing its safety margins.
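A minimal sketch of such a trigger follows, assuming the probabilistic model returns several sampled futures as flat lists of values (for example, flattened latent states). The divergence measure (mean per-element variance across samples), the threshold, and the behavior labels are hypothetical choices for illustration; a deployed system would calibrate them against validation data.

```python
from statistics import pvariance

def sample_divergence(samples):
    """Mean per-element variance across a set of sampled futures."""
    n_elems = len(samples[0])
    per_elem = [pvariance([s[i] for s in samples]) for i in range(n_elems)]
    return sum(per_elem) / n_elems

def select_behavior(samples, threshold):
    """Fall back to a conservative behavior when the samples disagree."""
    if sample_divergence(samples) > threshold:
        return "hover"
    return "continue"

# Hypothetical sampled futures: one confident case and one uncertain case.
confident = [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]]
uncertain = [[0.0, 0.0], [2.0, 2.0]]
decision_a = select_behavior(confident, threshold=0.5)
decision_b = select_behavior(uncertain, threshold=0.5)
```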

- From Software-in-the-Loop to Hardware-in-the-Loop

Real-world flight testing is expensive and risky. A rigorous, multi-stage testing protocol is essential, leveraging high-fidelity simulators like AirSim [128] or NVIDIA Isaac Sim [129].

- Software-in-the-Loop (SIL): The entire software stack is tested in a purely virtual environment.

- Hardware-in-the-Loop (HIL): The onboard computer (e.g., a Jetson) runs the complete flight software, but its sensor inputs come from a simulator, and its control outputs are sent back to the simulator. The importance and methodology of HIL for UAV validation are well-documented in works like [130]. This tests the real-time performance and computational load of the actual flight hardware without physical risk.

- Real-World Flight Tests: Only after passing extensive HIL testing should the system be deployed on a physical UAV, initially in a controlled environment.

5.4. Datasets, Benchmarks, and Challenges

A significant challenge in UAV video prediction is the profound scarcity of datasets and benchmarks designed specifically for this task. Consequently, the field currently relies heavily on repurposing video data from benchmarks established for other UAV-centric computer vision tasks, such as object tracking, detection, and semantic segmentation. The real-world datasets presented in Table 18, with the exception of the AirSim simulator, are all prominent examples of this practice; none were originally created to evaluate generative video models.

Table 18. Summary of datasets for UAV video prediction research.

This pragmatic “makeshift” approach, while necessary, introduces a series of interconnected challenges. A core problem is a severe misalignment in evaluation metrics: the official benchmarks for these datasets use metrics (e.g., MOTA for tracking or mIoU for segmentation) that are irrelevant for assessing the quality of predicted frames. This forces researchers to fall back on generic, and often perceptually flawed, image-quality metrics such as PSNR, SSIM, and LPIPS. These metrics still fail to evaluate what truly matters: they primarily assess single-frame perceptual quality and cannot directly measure the temporal coherence of motion or the task-relevant utility of a prediction.

Compounding this issue is the lack of corresponding dense ground truth. Most of these datasets provide only sparse labels (e.g., bounding boxes), which precludes the evaluation of richer, more useful predictions such as future depth, optical flow, or scene geometry. Furthermore, the datasets themselves can contain task-specific biases; for instance, camera motions in tracking datasets are often designed to keep a target centered, which may not reflect the full spectrum of dynamics encountered in autonomous navigation.

This reliance on repurposed data results in several critical gaps for predictive autonomy, including the insufficient temporal continuity of short video clips and a general lack of synchronized multi-modal data, such as IMU readings and control inputs, which are essential for training world models. Collectively, these challenges not only complicate the fair and comprehensive evaluation of predictive models but also hinder progress towards architectures truly optimized for the prediction task itself.

6. Future Directions

While significant progress has been made, the journey towards truly predictive autonomy for UAVs is far from over. We highlight five key directions that will shape the future of this field.

6.1. Beyond Pixels: Long-Horizon and Semantic Forecasting

For high-level decision-making, raw pixel predictions are often inefficient and brittle. The future lies in predicting more abstract, task-relevant representations.

- Semantic and Instance Forecasting: Instead of predicting future RGB values, models can predict future semantic segmentation maps, allowing a UAV to reason about interactions between object categories.

- Bird’s-Eye-View (BEV) Prediction: Popularized in autonomous driving, BEV prediction projects sensor data onto a top-down grid representation. Predicting future BEV occupancy grids is more compact and directly useful for planning than predicting from a perspective view [131]. Recent works like BEVerse [132] are pushing the state-of-the-art in this area, and adapting these powerful representations to the full 3D world of UAVs is a key research challenge.
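To illustrate the BEV representation itself, the sketch below rasterizes a set of 3D points into a binary top-down occupancy grid, which is the kind of target a BEV forecasting model would predict for future time steps. The grid extent, cell size, axis convention (rows index y, columns index x), and function name are assumptions for this example. Height is discarded here, which is exactly the simplification that becomes problematic for UAVs operating in full 3D.

```python
import math

def bev_occupancy(points, x_range, y_range, cell_size):
    """Rasterize (x, y, z) points into a binary top-down grid (rows = y, cols = x)."""
    n_cols = int(round((x_range[1] - x_range[0]) / cell_size))
    n_rows = int(round((y_range[1] - y_range[0]) / cell_size))
    grid = [[0] * n_cols for _ in range(n_rows)]
    for x, y, _z in points:                      # height is ignored in this sketch
        col = math.floor((x - x_range[0]) / cell_size)
        row = math.floor((y - y_range[0]) / cell_size)
        if 0 <= row < n_rows and 0 <= col < n_cols:
            grid[row][col] = 1                   # mark the cell as occupied
    return grid

# Hypothetical points in metres; the last one falls outside the grid.
points = [(0.5, -1.5, 3.0), (-2.0, 1.9, 0.0), (5.0, 0.0, 1.0)]
grid = bev_occupancy(points, x_range=(-2.0, 2.0), y_range=(-2.0, 2.0), cell_size=1.0)
```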

6.2. Injecting Priors: Physics-Informed and Differentiable Models

Purely data-driven models can sometimes produce physically implausible futures. Injecting physical priors can regularize the learning process and improve generalization.

- Physics-Informed Neural Networks (PINNs): While traditionally used for solving PDEs, the core idea of designing loss functions that penalize violations of physical laws can be integrated into predictive models [133]. For UAVs, this could mean ensuring predictions respect gravity or basic aerodynamic constraints.

- Differentiable Simulation: The rise of differentiable simulators [134] allows gradients to be passed through the simulation process itself. This enables models to be trained via “analysis-by-synthesis,” where a model’s parameters are optimized to produce a simulation that matches reality, a powerful paradigm for learning dynamics.
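As a toy illustration of a physics-informed loss, consider a model that predicts the altitude of a free-falling object over time. The sketch below adds a penalty on the finite-difference residual of the constraint z'' = -g to an ordinary data-fitting term. The free-fall scenario, the weighting factor, and the function names are assumptions chosen for simplicity; realistic UAV dynamics would require a richer model that includes thrust and drag.

```python
def gravity_residuals(z, dt, g=9.81):
    """Residual of z'' + g = 0 using a central second difference (z points up)."""
    return [(z[t + 1] - 2.0 * z[t] + z[t - 1]) / dt ** 2 + g
            for t in range(1, len(z) - 1)]

def physics_informed_loss(z_pred, z_true, dt, weight=0.1, g=9.81):
    """Mean squared data error plus a weighted mean squared physics residual."""
    data_term = sum((p - q) ** 2 for p, q in zip(z_pred, z_true)) / len(z_pred)
    residuals = gravity_residuals(z_pred, dt, g)
    physics_term = sum(r * r for r in residuals) / len(residuals)
    return data_term + weight * physics_term

dt = 0.1
# A ballistic trajectory satisfies the constraint; a constant altitude does not.
ballistic = [100.0 + 2.0 * (k * dt) - 0.5 * 9.81 * (k * dt) ** 2 for k in range(6)]
constant = [100.0] * 6
loss_ballistic = physics_informed_loss(ballistic, ballistic, dt)
loss_constant = physics_informed_loss(constant, constant, dt)
```

Both predictions match their targets exactly, yet only the constant-altitude one is penalized, which shows how the physics term can reject futures that fit the data but violate the prior.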

6.3. The Grand Goal: Video Prediction as General World Modeling

The ultimate goal of video prediction is not just forecasting pixels, but learning a comprehensive, reusable internal model of the world.

- Reinforcement Learning in Latent Imagination: As demonstrated by the Dreamer family [30], a world model allows an agent to learn complex behaviors entirely by “dreaming” in its compact latent space. A thorough review of world models can be found in [9].

- Language-Conditioned Goals: The next frontier is to combine these world models with the reasoning capabilities of Large Language Models (LLMs). This would allow a user to specify high-level, semantic goals (e.g., “inspect the windows on the north face of that building”). Works like VoxPoser [135] demonstrate how LLMs can parse such commands and, combined with visual models, generate robotic plans, paving the way for more intuitive human–robot interaction with UAVs.

6.4. Fusing Senses: End-to-End Multimodal Systems

Vision is powerful but has limitations. True robustness will come from intelligently fusing multiple sensor modalities.

- Event Cameras: Fusing event streams with standard frames can dramatically improve motion estimation and prediction during fast UAV maneuvers [136].

- LiDAR, Thermal, and Radar: Fusing sparse but geometrically accurate LiDAR data can anchor visual predictions in metric 3D space [137]. Thermal cameras can provide crucial information for search-and-rescue. Millimeter-wave radar, which is robust to adverse weather, is also becoming a viable sensor for UAVs [138]. The key challenge is designing temporal operators (e.g., multimodal SSMs) that can naturally ingest and align these asynchronous, heterogeneous data streams.
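Before any learned operator can fuse these streams, their samples must be associated in time. The sketch below shows a simple nearest-timestamp association between camera frames and a second sensor, discarding pairs whose gap exceeds a tolerance. It is a preprocessing baseline under assumed inputs (sorted timestamps in seconds), not the learned multimodal operator discussed above, and the function names are hypothetical.

```python
from bisect import bisect_left

def nearest_index(timestamps, t):
    """Index of the entry in a sorted timestamp list that is closest to t."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    before, after = timestamps[i - 1], timestamps[i]
    return i if (after - t) < (t - before) else i - 1

def align_streams(frame_times, sensor_times, max_gap):
    """Pair each frame with its nearest sensor sample if it is close enough."""
    pairs = []
    for frame_idx, t in enumerate(frame_times):
        sensor_idx = nearest_index(sensor_times, t)
        if abs(sensor_times[sensor_idx] - t) <= max_gap:
            pairs.append((frame_idx, sensor_idx))
    return pairs

# Hypothetical timestamps in seconds; the last frame has no nearby sensor sample.
frame_times = [0.0, 1.0, 2.0]
sensor_times = [0.1, 0.9, 1.4, 5.0]
pairs = align_streams(frame_times, sensor_times, max_gap=0.25)
```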

6.5. Building the Future: Better Benchmarks and Responsible Deployment

Progress requires the right tools and a commitment to responsible innovation.

- The Need for a “Flying-Argoverse”: As highlighted in Section 4.4, the community urgently needs a large-scale, multi-modal benchmark dataset for UAV predictive autonomy.

- Explainable and Trustworthy AI (XAI): For safety-critical systems, black-box models are unacceptable. Future research must focus on making predictive models more transparent and explainable. A survey of XAI for robotics can be found in [139]. We need models that can not only predict the future but also articulate their uncertainty and the reasoning behind their forecasts, building trust with operators and regulators.

7. Conclusions

The paradigm of unmanned aerial remote sensing is shifting from passive data collection to active, predictive autonomy. In this survey, we have charted the rapidly evolving landscape of video prediction, a cornerstone technology for this transition. We addressed the limitations of existing single-axis taxonomies by proposing a novel, three-axis framework that disentangles backbone architecture and temporal operator, generative nature, and training/inference regime. This framework provides a structured lens to analyze the complex trade-offs involved in designing models for the unique constraints of UAVs.