FANT-Det: Flow-Aligned Nested Transformer for SAR Small Ship Detection

Abstract

Highlights

- FANT-Det achieves state-of-the-art performance for small ship detection in SAR imagery, outperforming existing methods on SSDD, HRSID, and LS-SSDD-v1.0.

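- The architecture integrates a two-level nested transformer block, flow-aligned multi-scale fusion, and adaptive contrastive denoising; in the ablation study these components together raise AP from 65.7% to 75.5% and APsmall from 63.3% to 74.5% over the Swin-T + DINO baseline, improving the detection of small ships under heavy noise and clutter.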

- It enables reliable detection in congested ports and low-visibility conditions, thereby improving situational awareness for civilian and military applications.

- It provides a practical design recipe for SAR small ship detection, with potential for transfer to other remote sensing tasks.
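The highlights describe a two-level nested transformer block, but the nesting itself is not specified in this excerpt. The pure-Python sketch below therefore rests on our own assumption, not the paper's published design: local self-attention is applied first inside small windows and then inside larger windows that contain them, in the spirit of Swin-style window attention. Learned Q/K/V projections, multiple heads, MLPs and shifted windows are omitted, and `nested_block`, `inner` and `outer` are hypothetical names.

```python
import math


def self_attention(vectors):
    """Single-head scaled dot-product self-attention with identity Q/K/V projections."""
    d = len(vectors[0])
    out = []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        peak = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        out.append([sum(wt * v[i] for wt, v in zip(weights, vectors)) / total
                    for i in range(d)])
    return out


def windowed_attention(feat, win):
    """Attend only within non-overlapping win x win windows, plus a residual connection.

    feat is an H x W grid (list of rows) of equal-length feature vectors.
    """
    h, w = len(feat), len(feat[0])
    assert h % win == 0 and w % win == 0, "grid size must be divisible by the window size"
    out = [[list(vec) for vec in row] for row in feat]
    for r0 in range(0, h, win):
        for c0 in range(0, w, win):
            coords = [(r, c) for r in range(r0, r0 + win) for c in range(c0, c0 + win)]
            attended = self_attention([feat[r][c] for r, c in coords])
            for (r, c), vec in zip(coords, attended):
                out[r][c] = [x + y for x, y in zip(feat[r][c], vec)]
    return out


def nested_block(feat, inner=2, outer=4):
    """Hypothetical two-level nesting: fine windows first, then the enclosing coarse windows."""
    assert outer % inner == 0, "each outer window must tile exactly into inner windows"
    return windowed_attention(windowed_attention(feat, inner), outer)
```

Because every inner window lies inside exactly one outer window, no information crosses an outer-window boundary within one such block; in Swin-style designs that exchange is handled by shifted windows in the following block.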


1. Introduction

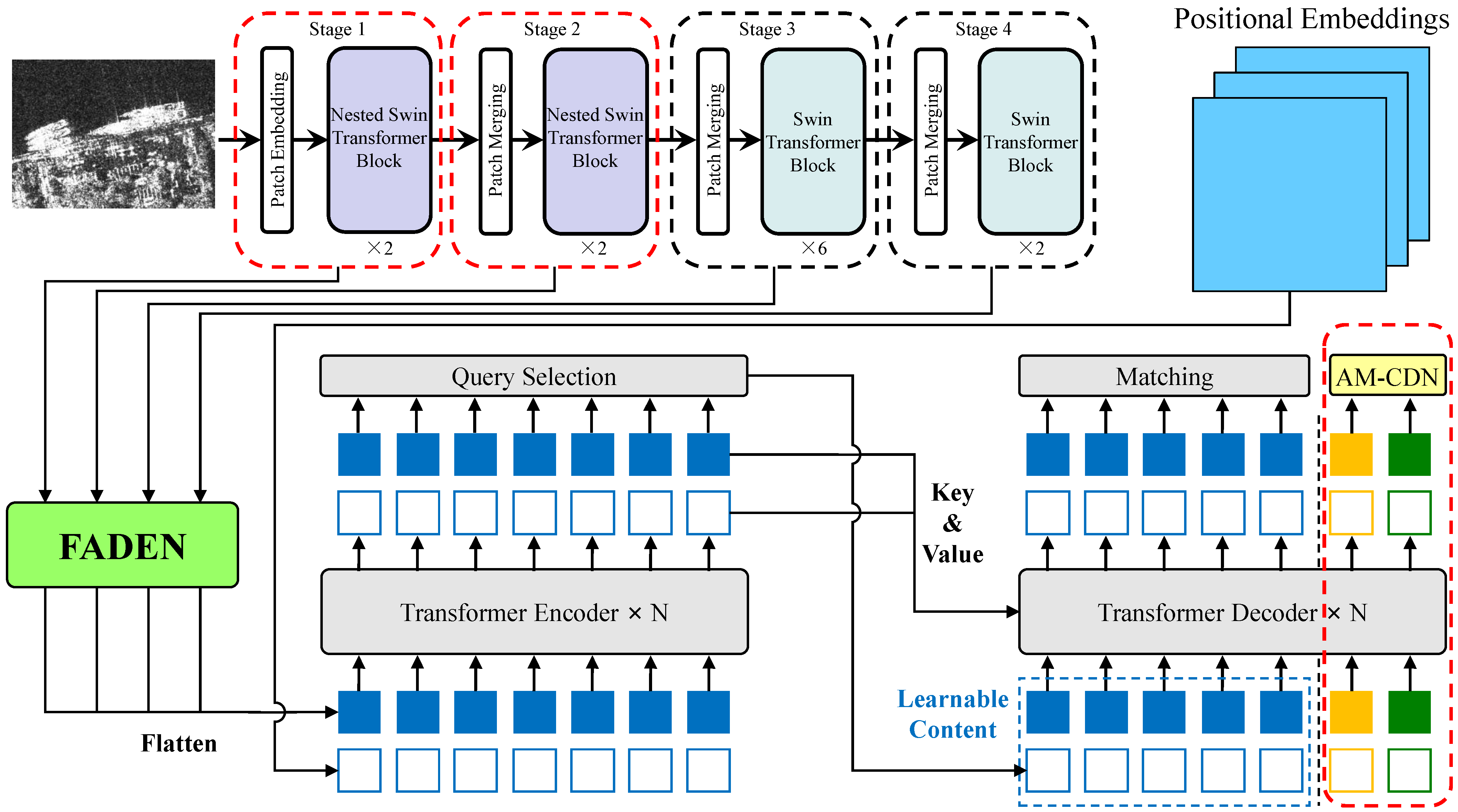

- We propose FANT-Det, a novel SAR ship detection architecture tailored to small ships in complex scenes, which achieves state-of-the-art (SOTA) performance on three public benchmark datasets: SSDD, HRSID, and LS-SSDD-v1.0.

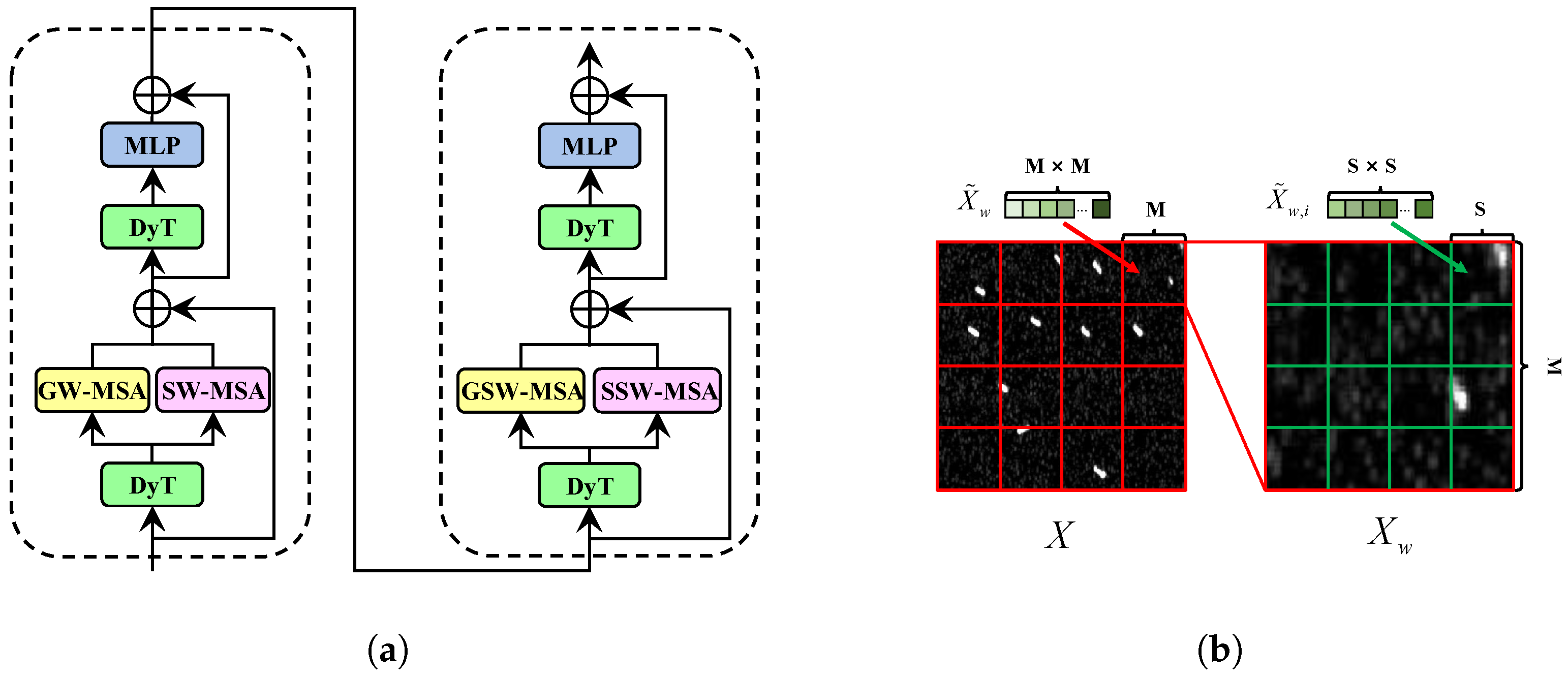

- We develop the Nested Swin Transformer Block (NSTB), which adopts a nested local self-attention architecture to capture and reinforce small-ship features comprehensively, improving the extraction of small-target information.

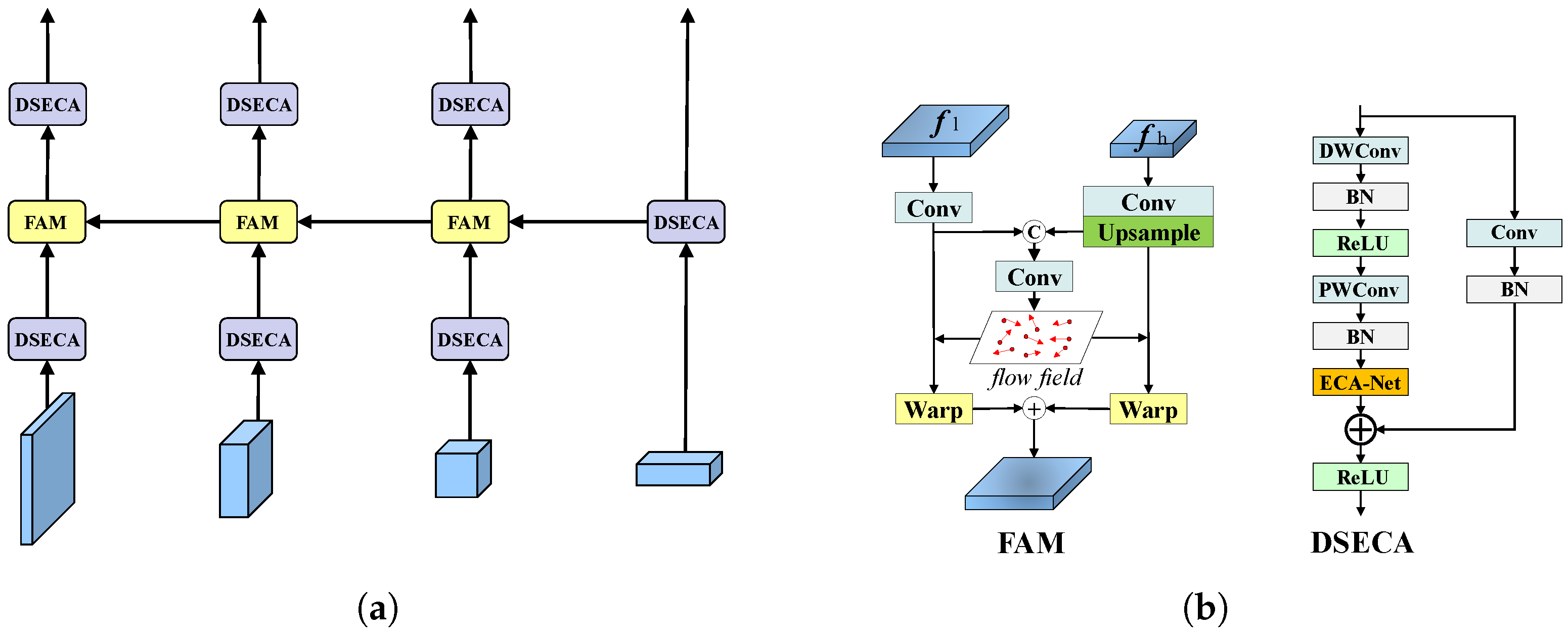

- We design the Flow-Aligned Depthwise Efficient Channel Attention Network (FADEN) to improve the quality of multi-scale feature fusion: semantic flow alignment achieves fine-grained feature matching across scales, and a lightweight attention mechanism adaptively filters background clutter.

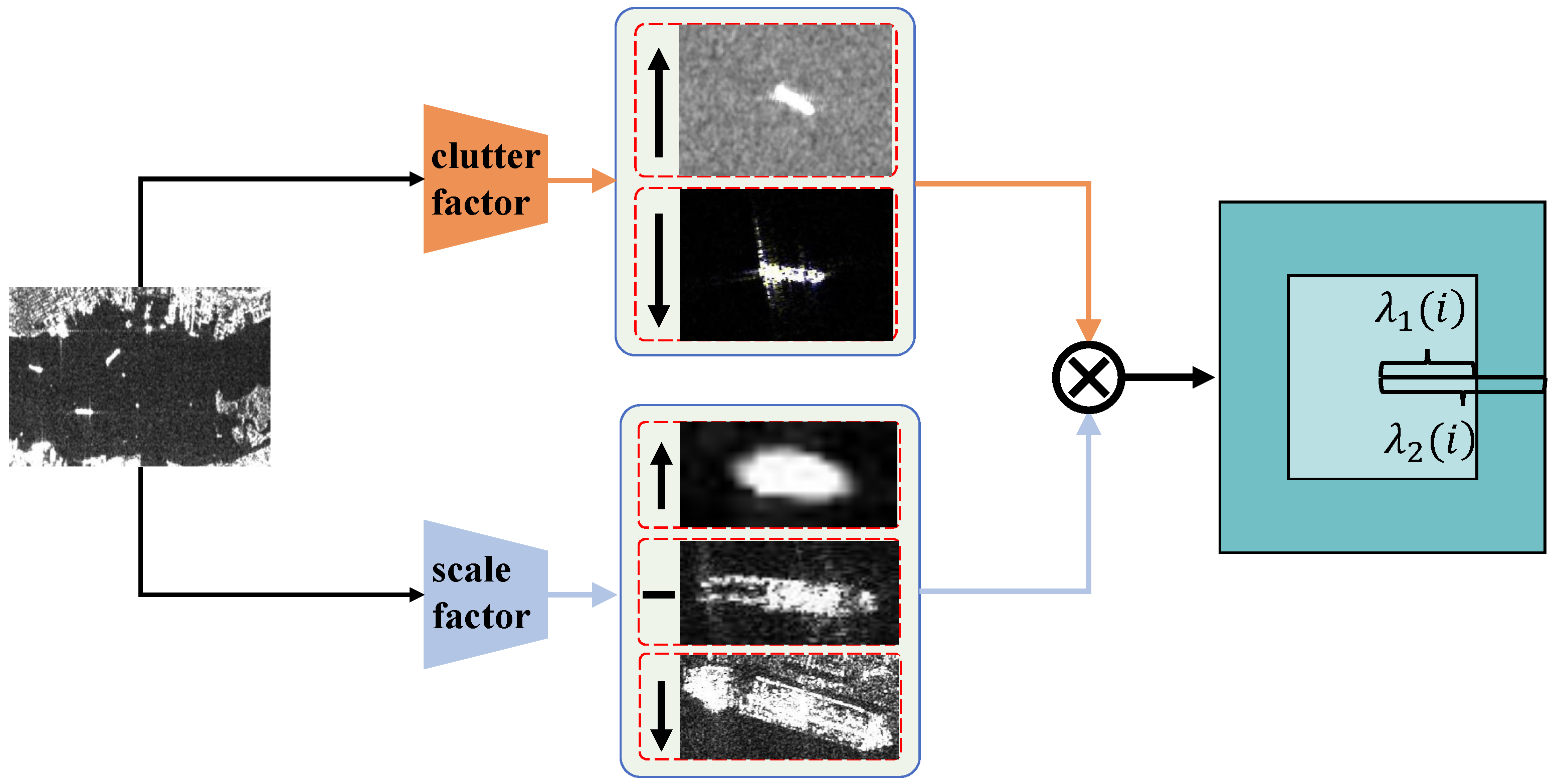

- We introduce the Adaptive Multi-Scale Contrastive DeNoising (AM-CDN) training paradigm, which improves the detector's robustness on SAR ship targets by adaptively adjusting the positive and negative sample thresholds in contrastive denoising according to the target scale coefficient and the local clutter intensity.
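AM-CDN builds on the contrastive denoising (CDN) of DINO, which uses two hyperparameters λ1 < λ2: positive queries are ground-truth boxes perturbed by noise smaller than λ1, negative queries by noise between λ1 and λ2. This excerpt does not give AM-CDN's adaptation rule, so the sketch below invents one purely for illustration. It defines a hypothetical scale coefficient as the square root of the box-to-image area ratio, takes a clutter intensity already normalised to [0, 1], and shrinks both thresholds by one shared factor for small boxes and heavy clutter. `adaptive_thresholds`, `make_cdn_pair`, `s_ref`, `f_min` and `gamma` are our own names and defaults; only the corner-jitter scheme follows DINO's public implementation in spirit.

```python
import math
import random


def adaptive_thresholds(box, img_size, clutter, lam1=1.0, lam2=2.0,
                        s_ref=0.1, f_min=0.5, gamma=0.3):
    """Hypothetical AM-CDN-style rule that rescales DINO's CDN thresholds (lam1 < lam2).

    box is (cx, cy, w, h) in pixels; clutter is a local clutter intensity in [0, 1].
    Both thresholds share one factor, so lam1 < lam2 always holds.
    """
    _, _, w, h = box
    img_w, img_h = img_size
    scale = math.sqrt((w * h) / (img_w * img_h))  # hypothetical target scale coefficient
    clutter = min(max(clutter, 0.0), 1.0)
    factor = min(max(scale / s_ref, f_min), 1.0) * (1.0 - gamma * clutter)
    return lam1 * factor, lam2 * factor


def make_cdn_pair(box, lam1, lam2, rng):
    """Build one positive and one negative noised query from a ground-truth box.

    Each corner moves by (noise * half the box size) in a random direction, with
    noise up to lam1 for the positive and between lam1 and lam2 for the negative.
    Boxes are returned as (x1, y1, x2, y2).
    """
    cx, cy, w, h = box
    corners = (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)
    half = (w / 2, h / 2, w / 2, h / 2)

    def jitter(low, high):
        return tuple(c + rng.choice((-1.0, 1.0)) * rng.uniform(low, high) * hs
                     for c, hs in zip(corners, half))

    return jitter(0.0, lam1), jitter(lam1, lam2)


# Usage: an 8 x 6 px ship in an 800 x 800 image with heavy local clutter
rng = random.Random(0)
small_ship = (120.0, 80.0, 8.0, 6.0)
lam1, lam2 = adaptive_thresholds(small_ship, (800, 800), clutter=0.8)
# factor = 0.5 * (1 - 0.3 * 0.8) = 0.38, so lam1 is about 0.38 and lam2 about 0.76
pos, neg = make_cdn_pair(small_ship, lam1, lam2, rng)
```

Scaling both thresholds by one factor is the simplest way to keep the positive band strictly inside the negative band; any real rule would need to preserve the same ordering.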

2. Related Works

2.1. Traditional Methods and CNN-Based Methods for SAR Ship Detection

2.2. Transformer-Based Methods for Ship Detection

3. Proposed Method

3.1. Method Overview

3.2. Nested Swin Transformer Block

3.3. Flow-Aligned Depthwise Efficient Channel Attention Network

3.4. Adaptive Multi-Scale Contrastive DeNoising

4. Experiments and Discussion

4.1. Datasets

4.2. Implementation Details and Evaluation Metrics

4.3. Ablation Experiments

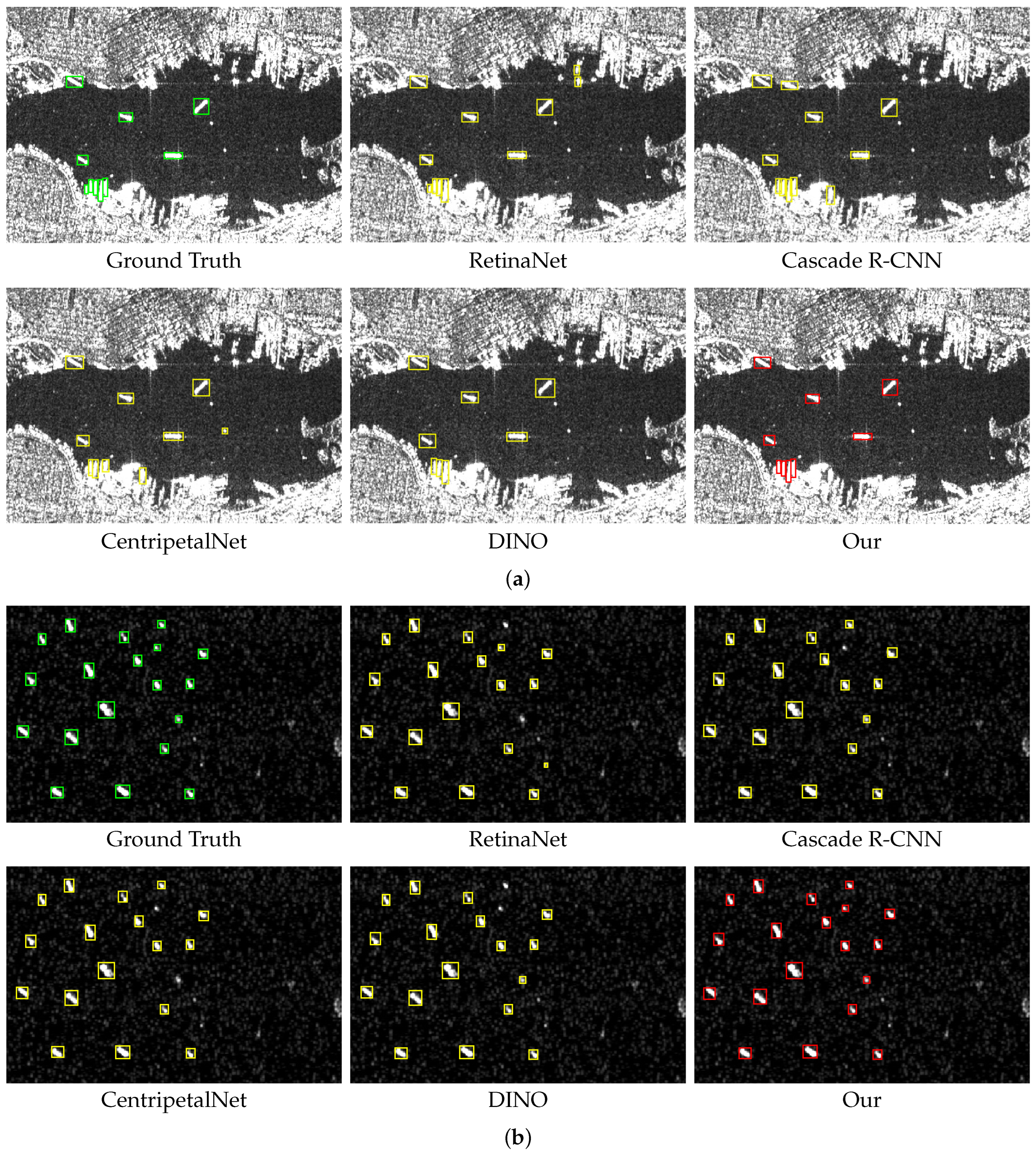

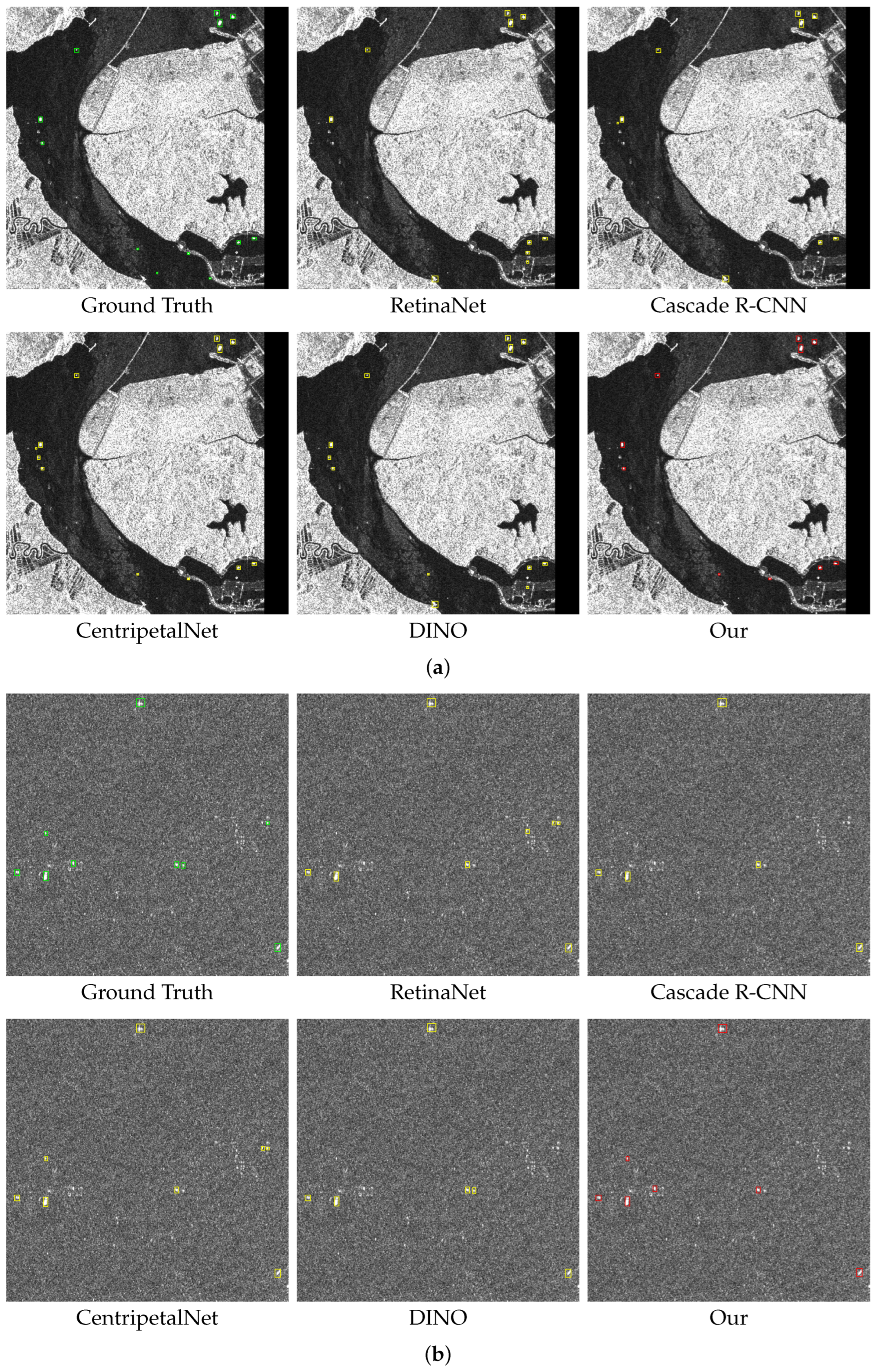

4.4. Algorithm Performance Comparison

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Li, J.; Xu, C.; Su, H.; Gao, L.; Wang, T. Deep learning for SAR ship detection: Past, present and future. Remote Sens. 2022, 14, 2712.

2. Li, J.; Chen, J.; Cheng, P.; Yu, Z.; Yu, L.; Chi, C. A survey on deep-learning-based real-time SAR ship detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 3218–3247.

3. Sun, Z.; Leng, X.; Zhang, X.; Zhou, Z.; Xiong, B.; Ji, K.; Kuang, G. Arbitrary-direction SAR ship detection method for multi-scale imbalance. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5208921.

4. Zhang, H.; Wang, W.; Deng, J.; Guo, Y.; Liu, S.; Zhang, J. MASFF-Net: Multi-azimuth scattering feature fusion network for SAR target recognition. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 19425–19440.

5. Galdorisi, G.; Goshorn, R. Bridging the policy and technology gap: A process to instantiate maritime domain awareness. In Proceedings of the OCEANS 2005 MTS/IEEE, Washington, DC, USA, 17–23 September 2005; IEEE: Piscataway, NJ, USA, 2005; pp. 1–8.

6. Deng, J.; Wang, W.; Zhang, H.; Zhang, T.; Zhang, J. PolSAR ship detection based on superpixel-level contrast enhancement. IEEE Geosci. Remote Sens. Lett. 2024, 21, 4008805.

7. Sun, Z.; Leng, X.; Lei, Y.; Xiong, B.; Ji, K.; Kuang, G. BiFA-YOLO: A novel YOLO-based method for arbitrary-oriented ship detection in high-resolution SAR images. Remote Sens. 2021, 13, 4209.

8. Yu, J.; Yu, Z.; Li, C. GEO SAR imaging of maneuvering ships based on time–frequency features extraction. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5226321.

9. Ma, Y.; Guan, D.; Deng, Y.; Yuan, W.; Wei, M. 3SD-Net: SAR Small Ship Detection Neural Network. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5221613.

10. Zhu, H.; Yu, Z.; Yu, J. Sea clutter suppression based on complex-valued neural networks optimized by PSD. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 9821–9828.

11. Chen, Z.; Liu, C.; Filaretov, V.F.; Yukhimets, D.A. Multi-scale ship detection algorithm based on YOLOv7 for complex scene SAR images. Remote Sens. 2023, 15, 2071.

12. Gierull, C.H.; Sikaneta, I. A compound-plus-noise model for improved vessel detection in non-Gaussian SAR imagery. IEEE Trans. Geosci. Remote Sens. 2017, 56, 1444–1453.

13. Wang, C.; Jiang, S.; Zhang, H.; Wu, F.; Zhang, B. Ship detection for high-resolution SAR images based on feature analysis. IEEE Geosci. Remote Sens. Lett. 2013, 11, 119–123.

14. Shi, T.; Gong, J.; Hu, J.; Sun, Y.; Bao, G.; Zhang, P.; Wang, J.; Zhi, X.; Zhang, W. Progressive class-aware instance enhancement for aircraft detection in remote sensing imagery. Pattern Recognit. 2025, 164, 111503.

15. Hu, J.; Li, Y.; Zhi, X.; Shi, T.; Zhang, W. Complementarity-aware Feature Fusion for Aircraft Detection via Unpaired Opt2SAR Image Translation. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5628019.

16. Yu, J.; Pan, B.; Yu, Z.; Li, C.; Wu, X. Collaborative optimization for SAR image despeckling with structure preservation. IEEE Trans. Geosci. Remote Sens. 2024, 63, 5201712.

17. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

18. Li, J.; Qu, C.; Shao, J. Ship detection in SAR images based on an improved Faster R-CNN. In Proceedings of the 2017 SAR in Big Data Era: Models, Methods and Applications (BIGSARDATA), Beijing, China, 13–14 November 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6.

19. Kang, M.; Ji, K.; Leng, X.; Lin, Z. Contextual region-based convolutional neural network with multilayer fusion for SAR ship detection. Remote Sens. 2017, 9, 860.

20. Kang, M.; Leng, X.; Lin, Z.; Ji, K. A modified Faster R-CNN based on CFAR algorithm for SAR ship detection. In Proceedings of the 2017 International Workshop on Remote Sensing with Intelligent Processing (RSIP), Shanghai, China, 18–21 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–4.

21. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

22. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

23. Zhang, L.; Liu, Y.; Zhao, W.; Wang, X.; Li, G.; He, Y. Frequency-adaptive learning for SAR ship detection in clutter scenes. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5215514.

24. Zhong, Y.; Wang, J.; Peng, J.; Zhang, L. Anchor box optimization for object detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CA, USA, 1–5 March 2020; pp. 1286–1294.

25. Bodla, N.; Singh, B.; Chellappa, R.; Davis, L.S. Soft-NMS: Improving object detection with one line of code. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5561–5569.

26. Feng, Y.; You, Y.; Tian, J.; Meng, G. OEGR-DETR: A novel detection transformer based on orientation enhancement and group relations for SAR object detection. Remote Sens. 2023, 16, 106.

27. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 213–229.

28. Dai, H.; Du, L.; Wang, Y.; Wang, Z. A modified CFAR algorithm based on object proposals for ship target detection in SAR images. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1925–1929.

29. Gao, G.; Ouyang, K.; Luo, Y.; Liang, S.; Zhou, S. Scheme of parameter estimation for generalized gamma distribution and its application to ship detection in SAR images. IEEE Trans. Geosci. Remote Sens. 2016, 55, 1812–1832.

30. Pappas, O.; Achim, A.; Bull, D. Superpixel-level CFAR detectors for ship detection in SAR imagery. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1397–1401.

31. Miao, T.; Zeng, H.; Yang, W.; Chu, B.; Zou, F.; Ren, W.; Chen, J. An Improved Lightweight RetinaNet for Ship Detection in SAR Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4667–4679.

32. Sun, K.; Liang, Y.; Ma, X.; Huai, Y.; Xing, M. DSDet: A lightweight densely connected sparsely activated detector for ship target detection in high-resolution SAR images. Remote Sens. 2021, 13, 2743.

33. Bai, L.; Yao, C.; Ye, Z.; Xue, D.; Lin, X.; Hui, M. A novel anchor-free detector using global context-guide feature balance pyramid and united attention for SAR ship detection. IEEE Geosci. Remote Sens. Lett. 2023, 20, 4003005.

34. Shen, J.; Bai, L.; Zhang, Y.; Momi, M.C.; Quan, S.; Ye, Z. ELLK-Net: An efficient lightweight large kernel network for SAR ship detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5221514.

35. Wang, S.; Gao, S.; Zhou, L.; Liu, R.; Zhang, H.; Liu, J.; Jia, Y.; Qian, J. YOLO-SD: Small ship detection in SAR images by multi-scale convolution and feature transformer module. Remote Sens. 2022, 14, 5268.

36. Li, K.; Zhang, M.; Xu, M.; Tang, R.; Wang, L.; Wang, H. Ship detection in SAR images based on feature enhancement Swin transformer and adjacent feature fusion. Remote Sens. 2022, 14, 3186.

37. Hu, B.; Miao, H. An improved deep neural network for small-ship detection in SAR imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 17, 2596–2609.

38. Zong, Z.; Song, G.; Liu, Y. DETRs with Collaborative Hybrid Assignments Training. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–3 October 2023; pp. 6748–6758.

39. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.

40. Li, F.; Zhang, H.; Liu, S.; Guo, J.; Ni, L.M.; Zhang, L. DN-DETR: Accelerate DETR training by introducing query denoising. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13619–13627.

41. Zhang, H.; Li, F.; Liu, S.; Zhang, L.; Su, H.; Zhu, J.; Ni, L.; Shum, H.Y. DINO: DETR with Improved DeNoising Anchor Boxes for End-to-End Object Detection. In Proceedings of the Eleventh International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023.

42. Yu, C.; Shin, Y. SMEP-DETR: Transformer-Based Ship Detection for SAR Imagery with Multi-Edge Enhancement and Parallel Dilated Convolutions. Remote Sens. 2025, 17, 953.

43. Liu, W.; Zhou, L. Multi-level Denoising for High Quality SAR Object Detection in Complex Scenes. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5226813.

44. Zhang, T.; Zhang, X.; Li, J.; Xu, X.; Wang, B.; Zhan, X.; Xu, Y.; Ke, X.; Zeng, T.; Su, H.; et al. SAR ship detection dataset (SSDD): Official release and comprehensive data analysis. Remote Sens. 2021, 13, 3690.

45. Wei, S.; Zeng, X.; Qu, Q.; Wang, M.; Su, H.; Shi, J. HRSID: A high-resolution SAR images dataset for ship detection and instance segmentation. IEEE Access 2020, 8, 120234–120254.

46. Zhang, T.; Zhang, X.; Ke, X.; Zhan, X.; Shi, J.; Wei, S.; Pan, D.; Li, J.; Su, H.; Zhou, Y.; et al. LS-SSDD-v1.0: A deep learning dataset dedicated to small ship detection from large-scale Sentinel-1 SAR images. Remote Sens. 2020, 12, 2997.

47. Ghiasi, G.; Lin, T.Y.; Le, Q.V. NAS-FPN: Learning scalable feature pyramid architecture for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7036–7045.

48. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

49. Liu, S.; Huang, D.; Wang, Y. Learning spatial fusion for single-shot object detection. arXiv 2019, arXiv:1911.09516.

50. Sohan, M.; Sai Ram, T.; Rami Reddy, C.V. A review on YOLOv8 and its advancements. In Proceedings of the International Conference on Data Intelligence and Cognitive Informatics, Tirunelveli, India, 18–20 November 2024; Springer: Singapore, 2024; pp. 529–545.

51. Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162.

52. Liangjun, Z.; Feng, N.; Yubin, X.; Gang, L.; Zhongliang, H.; Yuanyang, Z. MSFA-YOLO: A multi-scale SAR ship detection algorithm based on fused attention. IEEE Access 2024, 12, 24554–24568.

53. Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO series in 2021. arXiv 2021, arXiv:2107.08430.

54. Wang, N.; Gao, Y.; Chen, H.; Wang, P.; Tian, Z.; Shen, C.; Zhang, Y. NAS-FCOS: Fast neural architecture search for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11943–11951.

55. Dong, Z.; Li, G.; Liao, Y.; Wang, F.; Ren, P.; Qian, C. CentripetalNet: Pursuing high-quality keypoint pairs for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10519–10528.

56. Liu, Y.; Yan, G.; Ma, F.; Zhou, Y.; Zhang, F. SAR ship detection based on explainable evidence learning under intraclass imbalance. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5207715.

| Configuration | P (%) | R (%) | F1 (%) | AP (%) | APsmall (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|
| Baseline (Swin-T + DINO) | 80.2 | 59.4 | 68.3 | 65.7 | 63.3 | 48.5 | 280.1 |
| +NSTB | 80.4 | 70.1 | 74.9 | 71.4 | 70.1 | 51.6 | 294.2 |
| +FADEN | 83.1 | 65.2 | 73.1 | 70.2 | 68.6 | 50.4 | 290.0 |
| +AM-CDN | 85.0 | 61.1 | 71.0 | 68.2 | 65.5 | 48.5 | 280.1 |
| +NSTB + FADEN | 82.7 | 73.5 | 77.8 | 74.0 | 72.9 | 53.5 | 304.2 |
| +NSTB + AM-CDN | 83.8 | 71.0 | 76.9 | 72.8 | 71.1 | 51.6 | 294.2 |
| +FADEN + AM-CDN | 85.5 | 65.4 | 74.1 | 71.8 | 70.2 | 50.4 | 290.0 |
| +NSTB + FADEN + AM-CDN | 87.0 | 75.5 | 80.8 | 75.5 | 74.5 | 53.5 | 304.2 |
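As a quick consistency check on the ablation table, F1 is the harmonic mean of precision and recall, F1 = 2PR/(P + R). The short script below, whose rows are copied from the table, recomputes F1 for every configuration (the reported values agree to within 0.1 points, as expected when P and R are themselves rounded) and measures the parameter and FLOP overhead of the full model relative to the Swin-T + DINO baseline.

```python
def f1_score(p, r):
    """Harmonic mean of precision and recall (percent in, percent out)."""
    return 2 * p * r / (p + r) if p + r > 0 else 0.0


# (configuration, P, R, reported F1, Params in M, FLOPs in G), copied from the ablation table
ABLATION = [
    ("Baseline (Swin-T + DINO)", 80.2, 59.4, 68.3, 48.5, 280.1),
    ("+NSTB", 80.4, 70.1, 74.9, 51.6, 294.2),
    ("+FADEN", 83.1, 65.2, 73.1, 50.4, 290.0),
    ("+AM-CDN", 85.0, 61.1, 71.0, 48.5, 280.1),
    ("+NSTB + FADEN", 82.7, 73.5, 77.8, 53.5, 304.2),
    ("+NSTB + AM-CDN", 83.8, 71.0, 76.9, 51.6, 294.2),
    ("+FADEN + AM-CDN", 85.5, 65.4, 74.1, 50.4, 290.0),
    ("+NSTB + FADEN + AM-CDN", 87.0, 75.5, 80.8, 53.5, 304.2),
]

for name, p, r, f1_reported, _, _ in ABLATION:
    print(f"{name:<24} recomputed F1 = {f1_score(p, r):5.2f}  reported = {f1_reported}")

base, full = ABLATION[0], ABLATION[-1]
extra_params = full[4] - base[4]
extra_flops = full[5] - base[5]
print(f"Full model overhead: +{extra_params:.1f} M params ({100 * extra_params / base[4]:.1f}%), "
      f"+{extra_flops:.1f} GFLOPs ({100 * extra_flops / base[5]:.1f}%)")
```

The full model costs 5.0 M extra parameters (about 10.3%) and 24.1 extra GFLOPs (about 8.6%), while AM-CDN alone adds nothing at inference time because it only changes training.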

| Dataset | Methods | P | R | F1 | AP | APsmall |
|---|---|---|---|---|---|---|
| SSDD | NAS-FPN [47] | 76.5 | 61.5 | 68.2 | 67.2 | 64.1 |
| | BiFPN [48] | 81.1 | 62.5 | 70.6 | 68.8 | 65.2 |
| | ASFF [49] | 79.2 | 63.5 | 70.5 | 68.0 | 66.5 |
| | FADEN | 83.1 | 65.2 | 73.1 | 70.2 | 68.6 |
| HRSID | NAS-FPN | 72.1 | 58.4 | 64.5 | 63.5 | 62.7 |
| | BiFPN | 80.5 | 58.6 | 67.8 | 66.1 | 64.9 |
| | ASFF | 76.6 | 60.7 | 67.7 | 64.8 | 66.2 |
| | FADEN | 82.5 | 62.7 | 70.6 | 67.3 | 68.4 |
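The table above compares FADEN with NAS-FPN, BiFPN and ASFF as a multi-scale fusion module. Its name and the Introduction point to two ingredients: semantic flow alignment, which resamples an upsampled coarse feature map along a per-pixel offset field before fusion (the idea popularised by SFNet), and efficient channel attention (ECA), which gates channels with a 1D convolution over globally pooled descriptors. The pure-Python sketch below shows both operations in isolation under stated assumptions: the flow field is given rather than predicted by a learned convolution, the ECA kernel weights are uniform placeholders for learned ones, and the depthwise convolutions implied by the module's name are omitted. It is not the authors' implementation.

```python
import math


def bilinear(feat, y, x):
    """Sample a 2D map (list of rows) at fractional (y, x), clamping to the border."""
    h, w = len(feat), len(feat[0])
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    wy, wx = y - y0, x - x0
    top = feat[y0][x0] * (1 - wx) + feat[y0][x1] * wx
    bottom = feat[y1][x0] * (1 - wx) + feat[y1][x1] * wx
    return top * (1 - wy) + bottom * wy


def flow_warp(feat, flow):
    """Flow alignment: resample feat at every pixel shifted by its (dy, dx) offset."""
    return [[bilinear(feat, r + flow[r][c][0], c + flow[r][c][1])
             for c in range(len(feat[0]))]
            for r in range(len(feat))]


def eca_kernel_size(channels, gamma=2, b=1):
    """ECA-Net kernel size: int(|(log2 C + b) / gamma|), bumped to the next odd number if even."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca(channels):
    """Efficient channel attention over a list of C maps, each H x W.

    Global average pooling yields one descriptor per channel; a zero-padded 1D
    convolution across neighbouring channels (uniform weights standing in for
    learned ones) and a sigmoid turn the descriptors into per-channel gates.
    """
    c = len(channels)
    k = eca_kernel_size(c)
    pooled = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in channels]
    padded = [0.0] * (k // 2) + pooled + [0.0] * (k // 2)
    gates = [1.0 / (1.0 + math.exp(-sum(padded[i:i + k]) / k)) for i in range(c)]
    rescaled = [[[v * g for v in row] for row in ch] for ch, g in zip(channels, gates)]
    return rescaled, gates
```

In a FADEN-like neck one would, under the same assumptions, upsample the coarse map, warp it with the predicted flow, add it to the fine map and then apply the channel gate; that wiring is our guess rather than a detail given in this excerpt.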

| Category | Model | Entire AP | Entire AP0.5 | Entire AP0.75 | Entire APsmall | Inshore AP | Inshore AP0.5 | Inshore AP0.75 |
|---|---|---|---|---|---|---|---|---|
| Anchor-based | RetinaNet [31] | 45.3 | 79.1 | 48.7 | 45.8 | 26.3 | 53.1 | 24.1 |
| | YOLOv8 [50] | 61.3 | 95.7 | 70.1 | 58.8 | 43.5 | 84.2 | 46.7 |
| | Cascade RCNN [51] | 66.1 | 93.2 | 76.9 | 65.3 | 50.9 | 80.1 | 56.8 |
| | HRSDNet [32] | 67.5 | 92.6 | 79.8 | 66.6 | 52.8 | 79.9 | 58.2 |
| | MSFA-YOLO [52] | 66.2 | 98.7 | 76.9 | 56.6 | 57.1 | 95.9 | 60.6 |
| Anchor-free | YOLOX [53] | 54.9 | 88.3 | 60.2 | 52.1 | 38.1 | 71.4 | 39.4 |
| | NAS-FCOS [54] | 59.1 | 90.7 | 67.3 | 59.4 | 39.8 | 73.6 | 37.2 |
| | CentripetalNet [55] | 60.8 | 90.9 | 68.8 | 61.3 | 40.5 | 73.0 | 41.2 |
| | FBUA-Net [33] | 61.1 | 96.2 | 77.6 | 59.9 | – | – | – |
| | ELLK-Net [34] | 63.9 | 95.6 | 74.6 | 57.2 | – | – | – |
| Transformer-based | Deformable DETR [39] | 59.2 | 90.8 | 71.5 | 58.7 | 44.1 | 69.3 | 48.4 |
| | CO-DETR [38] | 60.8 | 91.3 | 73.1 | 60.1 | 45.7 | 70.2 | 49.5 |
| | DINO [41] | 65.7 | 91.2 | 78.3 | 63.3 | 50.5 | 76.1 | 53.4 |
| | OEGR-DETR [26] | – | 93.9 | 84.0 | – | – | – | – |
| | RT-DINO [42] | 68.3 | 97.2 | – | – | 59.8 | 92.6 | – |
| | FANT-Det (Ours) | 75.5 | 98.9 | 91.0 | 74.5 | 69.5 | 96.8 | 84.0 |
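The column names AP, AP0.5, AP0.75 and APsmall follow the COCO evaluation convention. The excerpt does not state the protocol, so the sketch below assumes the standard COCO definitions: AP averages precision over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05, AP0.5 and AP0.75 each use a single threshold, and APsmall restricts evaluation to objects below 32 × 32 pixels in area. It also illustrates why the stricter thresholds are hard for small ships: the same 2-pixel localisation error costs a 10 × 10 box far more IoU than a 100 × 100 box. `coco_style_ap` is a hypothetical helper, not the pycocotools API.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2) in pixels."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


# COCO-style IoU thresholds 0.50, 0.55, ..., 0.95 used for the primary AP
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def size_bucket(area):
    """COCO area ranges: small below 32^2, medium below 96^2, large otherwise."""
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"


def coco_style_ap(ap_at_threshold):
    """Primary AP: mean of the per-threshold AP values over IOU_THRESHOLDS."""
    return sum(ap_at_threshold(t) for t in IOU_THRESHOLDS) / len(IOU_THRESHOLDS)


# The same 2-pixel horizontal offset applied to a small and a large ship
small_iou = iou((0, 0, 10, 10), (2, 0, 12, 10))      # 80 / 120, about 0.667
large_iou = iou((0, 0, 100, 100), (2, 0, 102, 100))  # 9800 / 10200, about 0.961
passed_small = [t for t in IOU_THRESHOLDS if small_iou >= t]
```

The shifted small box still counts as a true positive at only four of the ten thresholds (0.50 to 0.65) and already fails at 0.75, whereas the large box passes all ten, which is one reason AP0.75 and APsmall separate small-ship detectors more sharply than AP0.5.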

| Category | Model | Entire AP | Entire AP0.5 | Entire AP0.75 | Entire APsmall | Inshore AP | Inshore AP0.5 | Inshore AP0.75 |
|---|---|---|---|---|---|---|---|---|
| Anchor-based | RetinaNet [31] | 55.2 | 80.5 | 60.3 | 56.4 | 35.3 | 61.7 | 36.1 |
| | YOLOv8 [50] | 63.2 | 90.4 | 72.5 | 62.0 | 56.1 | 83.6 | 57.9 |
| | Cascade RCNN [51] | 63.9 | 82.8 | 73.1 | 64.8 | 56.0 | 74.2 | 62.7 |
| | HRSDNet [32] | 65.2 | 83.0 | 74.5 | 65.6 | 55.8 | 73.6 | 63.1 |
| | MSFA-YOLO [52] | 67.1 | 92.7 | 76.7 | 53.7 | – | – | – |
| Anchor-free | YOLOX [53] | 54.9 | 88.3 | 60.2 | 52.1 | 38.1 | 71.4 | 39.4 |
| | NAS-FCOS [54] | 57.2 | 83.8 | 64.0 | 58.1 | 50.6 | 75.3 | 53.4 |
| | CentripetalNet [55] | 61.2 | 85.1 | 64.9 | 62.4 | 45.8 | 74.3 | 48.0 |
| | FBUA-Net [33] | 69.1 | 90.3 | 79.6 | 69.6 | – | – | – |
| | ELLK-Net [34] | 66.8 | 90.6 | 76.0 | 68.9 | – | – | – |
| Transformer-based | Deformable DETR [39] | 55.2 | 81.9 | 64.1 | 58.9 | 47.6 | 64.0 | 50.8 |
| | CO-DETR [38] | 56.5 | 83.2 | 66.4 | 60.8 | 48.8 | 65.3 | 51.1 |
| | DINO [41] | 64.1 | 87.2 | 73.2 | 64.8 | 48.4 | 75.6 | 52.9 |
| | OEGR-DETR [26] | 64.9 | 90.5 | 74.4 | 66.7 | – | – | – |
| | RT-DINO [42] | – | 92.2 | – | – | – | 81.3 | – |
| | FANT-Det (Ours) | 72.1 | 93.5 | 82.2 | 74.6 | 70.3 | 91.5 | 80.1 |

| Category | Methods | P | R | F1 | AP0.5 |
|---|---|---|---|---|---|
| CNN-based | RetinaNet [31] | 71.5 | 69.3 | 70.4 | 64.1 |
| | YOLOv8 [50] | 83.5 | 68.1 | 75.0 | 75.7 |
| | Cascade RCNN [51] | 84.7 | 72.1 | 77.9 | 80.5 |
| | EDHC [56] | 85.8 | 72.9 | 78.8 | 80.9 |
| | YOLOX [53] | 83.9 | 73.8 | 78.5 | 79.3 |
| | NAS-FCOS [54] | 80.2 | 73.6 | 76.8 | 75.7 |
| | CentripetalNet [55] | 81.6 | 75.1 | 78.2 | 78.9 |
| Transformer-based | Deformable DETR [39] | 80.7 | 66.5 | 72.9 | 72.0 |
| | CO-DETR [38] | 84.1 | 70.2 | 76.5 | 76.3 |
| | DINO [41] | 76.2 | 62.9 | 68.4 | 67.3 |
| | RT-DETR [42] | 85.3 | 73.0 | 78.7 | 79.2 |
| | FANT-Det (Ours) | 86.7 | 75.6 | 80.8 | 82.8 |
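This last comparison reports P, R and F1 at an operating point together with AP0.5. The excerpt does not give the exact protocol (score threshold, interpolation scheme), so the sketch below assumes the common all-point interpolated AP computed over detections that have already been matched to ground truth at IoU ≥ 0.5, and evaluates P, R and F1 at a hypothetical score threshold. `average_precision` and `precision_recall_f1` are illustrative helpers, not functions from the paper or from a specific library.

```python
def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP from detections already matched to ground truth at IoU >= 0.5."""
    if num_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum rectangle areas wherever recall increases
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def precision_recall_f1(scores, is_tp, num_gt, threshold):
    """P, R and F1 for detections scoring at or above a (hypothetical) operating threshold."""
    kept = [t for s, t in zip(scores, is_tp) if s >= threshold]
    tp = sum(kept)
    p = tp / len(kept) if kept else 0.0
    r = tp / num_gt if num_gt else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


# Toy example: four detections, three ground-truth ships
scores = [0.9, 0.8, 0.7, 0.6]
is_tp = [True, False, True, False]
print(average_precision(scores, is_tp, num_gt=3))            # 5/9, about 0.556
print(precision_recall_f1(scores, is_tp, 3, threshold=0.75))  # P = 0.5, R about 0.333, F1 about 0.4
```

Because P, R and F1 depend on the chosen threshold while AP0.5 summarises the whole ranking, a detector can lead on one and trail on the other; comparing both, as the table does, guards against tuning to a single operating point.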

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, H.; Wang, D.; Hu, J.; Zhi, X.; Yang, D. FANT-Det: Flow-Aligned Nested Transformer for SAR Small Ship Detection. Remote Sens. 2025, 17, 3416. https://doi.org/10.3390/rs17203416

Li H, Wang D, Hu J, Zhi X, Yang D. FANT-Det: Flow-Aligned Nested Transformer for SAR Small Ship Detection. Remote Sensing. 2025; 17(20):3416. https://doi.org/10.3390/rs17203416

Chicago/Turabian Style: Li, Hanfu, Dawei Wang, Jianming Hu, Xiyang Zhi, and Dong Yang. 2025. "FANT-Det: Flow-Aligned Nested Transformer for SAR Small Ship Detection" Remote Sensing 17, no. 20: 3416. https://doi.org/10.3390/rs17203416

APA Style: Li, H., Wang, D., Hu, J., Zhi, X., & Yang, D. (2025). FANT-Det: Flow-Aligned Nested Transformer for SAR Small Ship Detection. Remote Sensing, 17(20), 3416. https://doi.org/10.3390/rs17203416