Spatiotemporal Analysis of Vineyard Dynamics: UAS-Based Monitoring at the Individual Vine Scale

Abstract

Highlights

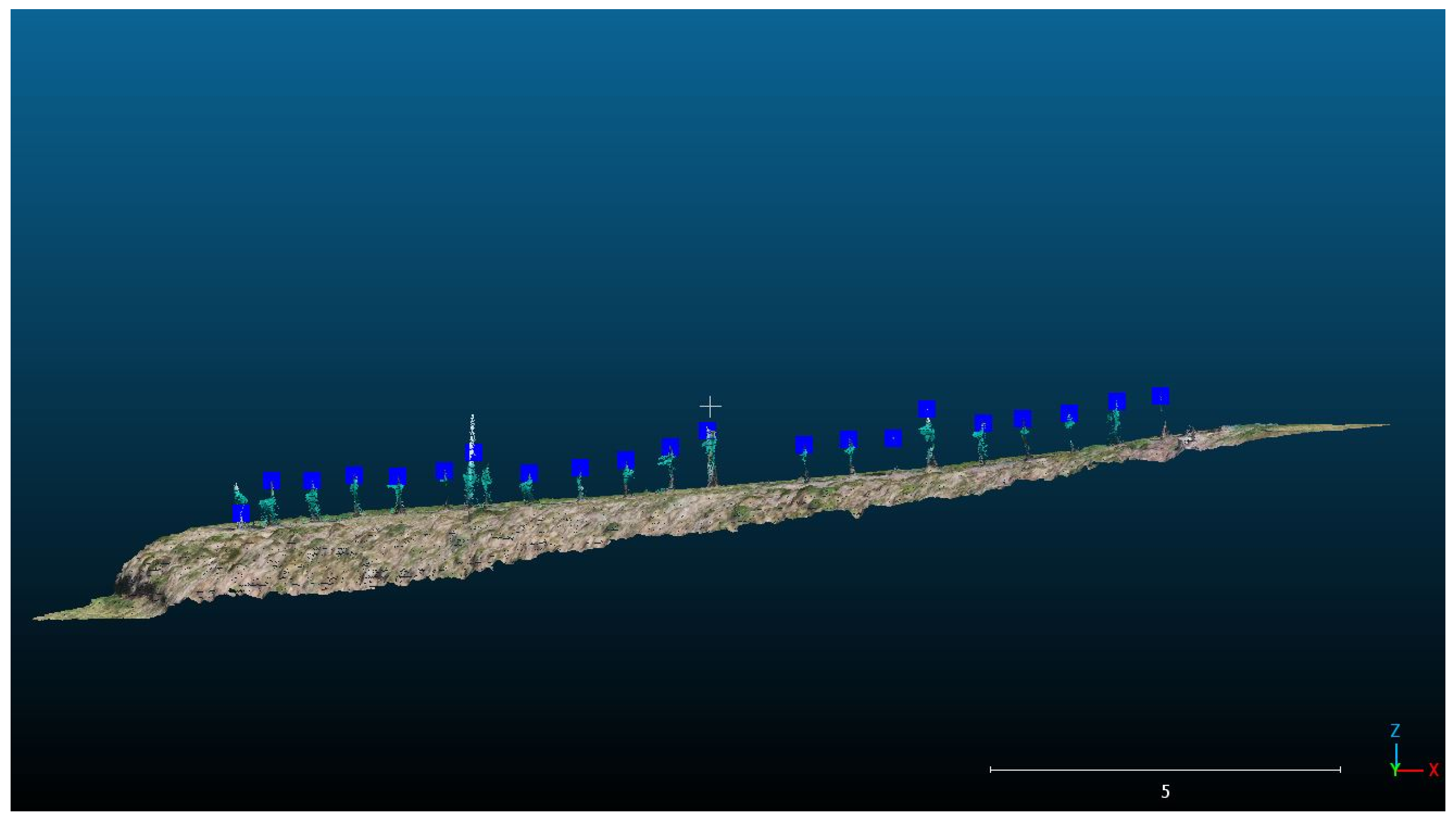

- Individual vines were detected from UAS-based 3D point clouds with a mean Euclidean distance of 10.7 cm to reference measurements; object-based image analysis (OBIA) provided canopy masks that yield unbiased NDRE values for individual vines.

- Canopy-only, per-vine NDRE values across key phenological stages showed strong spatial autocorrelation; local indicators of spatial association (LISA) revealed stable zones of high and low vigour as well as outliers.

- Managers can target interventions at the plant scale, identifying early stress hotspots and isolated problem vines before problems spread.

- The workflow is transferable and operational for routine vine monitoring, supporting site-specific decisions and yield/resource optimization.
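The highlights describe per-vine NDRE values computed from canopy pixels only. NDRE is the normalized difference of the near-infrared (NIR) and red-edge (RE) bands, (NIR − RE)/(NIR + RE). The minimal Python/NumPy sketch below illustrates why masking matters by averaging NDRE per vine with and without a canopy mask. It assumes co-registered NIR and red-edge reflectance rasters, a hypothetical integer raster `vine_ids` that assigns pixels to vines, and a boolean `canopy_mask` such as an OBIA canopy classification might provide; the toy reflectance values are invented, and this is not the authors' implementation.

```python
import numpy as np


def ndre(nir, red_edge):
    """NDRE = (NIR - RedEdge) / (NIR + RedEdge); NaN where the denominator is 0."""
    nir = np.asarray(nir, dtype=float)
    red_edge = np.asarray(red_edge, dtype=float)
    denom = nir + red_edge
    out = np.full(denom.shape, np.nan)
    np.divide(nir - red_edge, denom, out=out, where=denom != 0)
    return out


def per_vine_ndre(nir, red_edge, vine_ids, canopy_mask=None):
    """Mean NDRE per vine ID; if canopy_mask is given, only canopy pixels count.

    vine_ids: integer raster, 0 = background, k > 0 = pixel assigned to vine k
    canopy_mask: boolean raster, True where the pixel was classified as canopy
    """
    index = ndre(nir, red_edge)
    vine_ids = np.asarray(vine_ids)
    valid = (vine_ids > 0) & np.isfinite(index)
    if canopy_mask is not None:
        valid &= np.asarray(canopy_mask, dtype=bool)
    return {int(v): float(index[valid & (vine_ids == v)].mean())
            for v in np.unique(vine_ids[valid])}


# Toy 2 x 4 raster: vine 1 in columns 0-1, vine 2 in columns 2-3;
# the top row is canopy, the bottom row is inter-row soil/cover in each vine's zone.
nir = np.array([[0.50, 0.50, 0.40, 0.40],
                [0.30, 0.30, 0.30, 0.30]])
red_edge = np.array([[0.30, 0.30, 0.30, 0.30],
                     [0.25, 0.25, 0.25, 0.25]])
vine_ids = np.array([[1, 1, 2, 2],
                     [1, 1, 2, 2]])
canopy = np.array([[True, True, True, True],
                   [False, False, False, False]])

canopy_only = per_vine_ndre(nir, red_edge, vine_ids, canopy)
unmasked = per_vine_ndre(nir, red_edge, vine_ids)
print(canopy_only)  # vine 1 about 0.25, vine 2 about 0.143
print(unmasked)     # lower values: soil pixels pull both means down
```

In the toy raster, including the bottom-row soil pixels lowers both vine means, which is the bias a canopy mask is meant to remove.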


1. Introduction

2. Materials and Methods

2.1. Study Site

2.2. UAS Image Acquisition

2.3. Image Processing

2.4. Image- and Point-Cloud Analysis Techniques

2.4.1. Object-Based Image Analysis (OBIA)

2.4.2. Single Plant Segmentation
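The highlights report a 10.7 cm mean Euclidean distance between detected vines and reference measurements. A minimal sketch of such an accuracy metric follows. It assumes vine positions are available as planar (x, y) coordinates in metres and that each reference vine is matched to its nearest detection; the 0.5 m matching threshold, the function name and all coordinates are hypothetical, since the study's actual matching rule is not described in this section.

```python
import numpy as np


def mean_euclidean_error(detected, reference, max_dist=0.5):
    """Mean distance (m) from each reference vine to its nearest detection.

    References whose nearest detection is farther than max_dist (hypothetical
    threshold) are counted as missed and excluded from the mean. Nearest-neighbour
    matching can assign one detection to several references; a one-to-one
    assignment would be stricter.
    """
    det = np.asarray(detected, dtype=float)
    ref = np.asarray(reference, dtype=float)
    # Pairwise distances, shape (n_reference, n_detected).
    dist = np.linalg.norm(ref[:, None, :] - det[None, :, :], axis=-1)
    nearest = dist.min(axis=1)
    matched = nearest <= max_dist
    mean = float(nearest[matched].mean()) if matched.any() else float("nan")
    return mean, int(matched.sum()), int((~matched).sum())


reference = [(0.0, 0.0), (1.2, 0.0), (2.4, 0.0)]  # hypothetical surveyed positions (m)
detected = [(0.1, 0.0), (1.2, 0.05), (3.5, 0.0)]  # hypothetical point-cloud detections (m)
mean_err, n_matched, n_missed = mean_euclidean_error(detected, reference)
print(mean_err, n_matched, n_missed)  # about 0.075 m, 2 matched, 1 missed
```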

2.4.3. Vine Performance Analysis

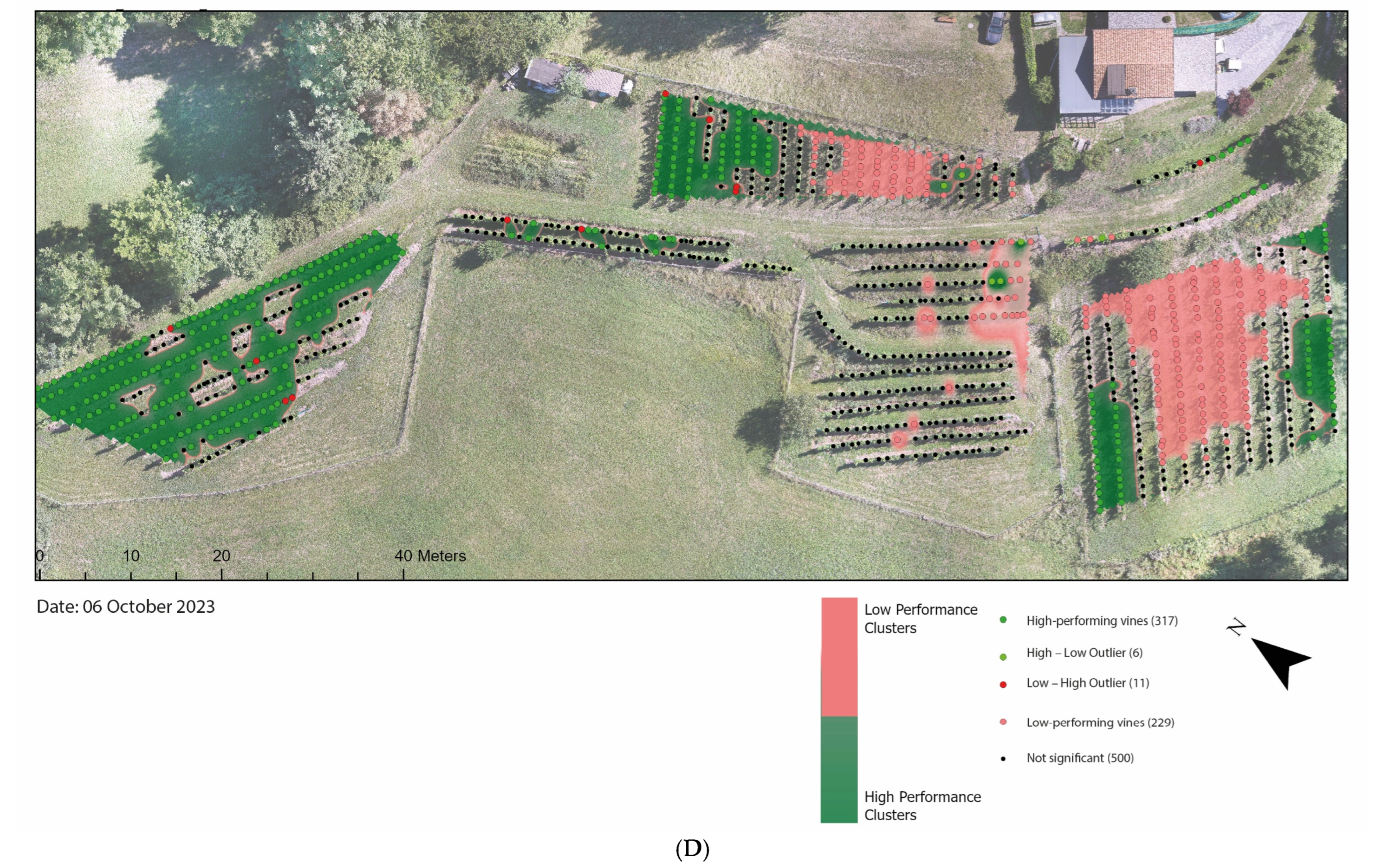

2.4.4. Spatial Patterns and Cluster Analysis of NDRE Values

- High performing vines (HP): vines with high NDRE values whose neighbors also have high values (high–high cluster)

- High–low outliers (HL): vines with high NDRE values surrounded by neighbors with low values

- Low–high outliers (LH): vines with low NDRE values surrounded by neighbors with high values

- Low performing vines (LP): vines with low NDRE values whose neighbors also have low values (low–low cluster)

- Not significant (NS): vines whose local spatial association is not statistically significant, i.e., their NDRE values are consistent with a random spatial arrangement
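The five classes above correspond to the quadrants of Anselin's local Moran's I combined with a significance test. The sketch below shows one way to compute them under assumptions this section does not state: k-nearest-neighbour weights with k = 8 (row-standardised), 999 conditional permutations for pseudo p-values, and a 0.05 significance level. The function name, the grid and the NDRE values are hypothetical.

```python
import numpy as np


def lisa_classes(coords, values, k=8, permutations=999, alpha=0.05, seed=0):
    """Local Moran's I with k-nearest-neighbour weights and permutation p-values.

    Returns the local statistics, pseudo p-values and one label per vine:
    HP (high-high), LP (low-low), HL, LH or NS (not significant).
    """
    coords = np.asarray(coords, dtype=float)
    x = np.asarray(values, dtype=float)
    n = x.size
    z = x - x.mean()
    m2 = (z ** 2).sum() / n

    # k nearest neighbours per vine, each with row-standardised weight 1/k.
    dist = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)
    nbrs = np.argsort(dist, axis=1, kind="stable")[:, :k]
    lag = z[nbrs].mean(axis=1)  # spatial lag of the deviations
    local_i = z / m2 * lag

    # Conditional permutation: keep z_i fixed, draw k other vines at random.
    rng = np.random.default_rng(seed)
    p = np.empty(n)
    for i in range(n):
        others = np.delete(z, i)
        picks = np.argsort(rng.random((permutations, n - 1)), axis=1)[:, :k]
        i_perm = z[i] / m2 * others[picks].mean(axis=1)
        n_extreme = min((i_perm >= local_i[i]).sum(), (i_perm <= local_i[i]).sum())
        p[i] = (n_extreme + 1) / (permutations + 1)

    sig = p < alpha
    labels = np.full(n, "NS", dtype=object)
    labels[sig & (z > 0) & (lag > 0)] = "HP"
    labels[sig & (z < 0) & (lag < 0)] = "LP"
    labels[sig & (z > 0) & (lag < 0)] = "HL"
    labels[sig & (z < 0) & (lag > 0)] = "LH"
    return local_i, p, labels


# Hypothetical 6 x 6 vine grid (1 m spacing): vigorous left half, weak right half,
# plus one vigorous vine inside the weak half.
rows, cols = 6, 6
coords = np.array([(c, r) for r in range(rows) for c in range(cols)], dtype=float)
ndre_values = np.where(coords[:, 0] < 3, 0.40, 0.20)
outlier = 3 * cols + 4
ndre_values[outlier] = 0.40
local_i, p_values, classes = lisa_classes(coords, ndre_values)
print(classes.reshape(rows, cols))
```

With these toy values, vines inside the vigorous block are labelled HP and the isolated vigorous vine in the weak block is labelled HL. The choice of k (and of neighbour-count versus distance-band weights) changes which vines are flagged, so it should follow the planting geometry.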

3. Results of Vineyard Dynamics Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References


| Date | Phenological Stage | GCP Error |
|---|---|---|
| 23 September 2022 | Harvest | 1.5 cm |
| 8 April 2023 | Budburst | 2.4 cm |
| 14 July 2023 | Flowering | 3.0 cm |
| 16 August 2023 | Veraison | 1.8 cm |
| 6 October 2023 | Harvest | 1.6 cm |

| Parameter | Value |
|---|---|
| Altitude (AGL) | 80 m |
| Speed | 2 m/s |
| Capture interval | 1 image every 2 s |
| Sensor orientation | Nadir |
| Forward overlap | 80% |
| Side overlap | 80% |
| Ground sampling distance (RGB) | 1 cm |
| Ground sampling distance (multispectral) | 7 cm |
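The flight parameters are linked by simple geometry: the along-track distance between exposures is speed × capture interval, and the forward overlap is 1 − spacing / along-track footprint. The sketch below uses only the speed, interval, overlap and RGB GSD from the table to compute the along-track footprint that an 80% forward overlap would require. The camera's actual image dimensions are not given here, so the resulting pixel count is only the value implied by these numbers, not a sensor specification; the function names are hypothetical.

```python
def exposure_spacing(speed_m_s: float, interval_s: float) -> float:
    """Along-track distance flown between two consecutive images (m)."""
    return speed_m_s * interval_s


def forward_overlap(spacing_m: float, footprint_along_m: float) -> float:
    """Fraction of the along-track footprint shared by consecutive images."""
    return 1.0 - spacing_m / footprint_along_m


def footprint_for_overlap(spacing_m: float, overlap: float) -> float:
    """Along-track footprint needed to reach a given forward overlap (m)."""
    return spacing_m / (1.0 - overlap)


spacing = exposure_spacing(2.0, 2.0)              # 2 m/s x 2 s = 4 m
footprint = footprint_for_overlap(spacing, 0.80)  # about 20 m along track
pixels_at_1cm = footprint / 0.01                  # about 2000 px at 1 cm GSD
print(spacing, round(footprint, 2), round(pixels_at_1cm))
```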

| Date | Phenological Stage | Moran’s I | p-Value | Z-Score |
|---|---|---|---|---|
| 23 September 2022 | Harvest | 0.52 | < 0.01 | 35.44 |
| 14 July 2023 | Flowering | 0.69 | < 0.01 | 46.78 |
| 16 August 2023 | Veraison | 0.70 | < 0.01 | 47.49 |
| 6 October 2023 | Harvest | 0.73 | < 0.01 | 48.93 |
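The table reports global Moran's I with its z-score and p-value for each date. A minimal NumPy sketch of the statistic follows: I = (n / S0) · (zᵀWz) / (zᵀz), where z are deviations from the mean NDRE, W is the spatial weights matrix and S0 is the sum of all weights, with a z-score computed under the normality assumption. The weights used in the study are not given in this section, so the matrix below is a hypothetical example and the output is not expected to reproduce the table; other software may use the randomization assumption, which gives a slightly different variance.

```python
import numpy as np


def global_morans_i(values, weights):
    """Global Moran's I with a z-score under the normality assumption.

    values: 1-D array of per-vine NDRE values
    weights: n x n spatial weights matrix with a zero diagonal
    """
    x = np.asarray(values, dtype=float)
    w = np.asarray(weights, dtype=float)
    n = x.size
    z = x - x.mean()
    s0 = w.sum()
    i_stat = (n / s0) * (z @ w @ z) / (z @ z)
    expected = -1.0 / (n - 1)
    s1 = 0.5 * ((w + w.T) ** 2).sum()
    s2 = ((w.sum(axis=1) + w.sum(axis=0)) ** 2).sum()
    variance = ((n**2 * s1 - n * s2 + 3 * s0**2)
                / ((n**2 - 1) * s0**2)) - expected**2
    z_score = (i_stat - expected) / np.sqrt(variance)
    return float(i_stat), float(z_score)


# Hypothetical example: four vines in a row, each linked to its immediate neighbour(s).
w = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
i_stat, z_score = global_morans_i([1.0, 2.0, 3.0, 4.0], w)
print(round(i_stat, 4), round(z_score, 4))  # 0.3333 1.7321
```

For four values increasing along a row, the positive I (1/3, above the expectation of −1/3) indicates that similar values sit next to each other.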

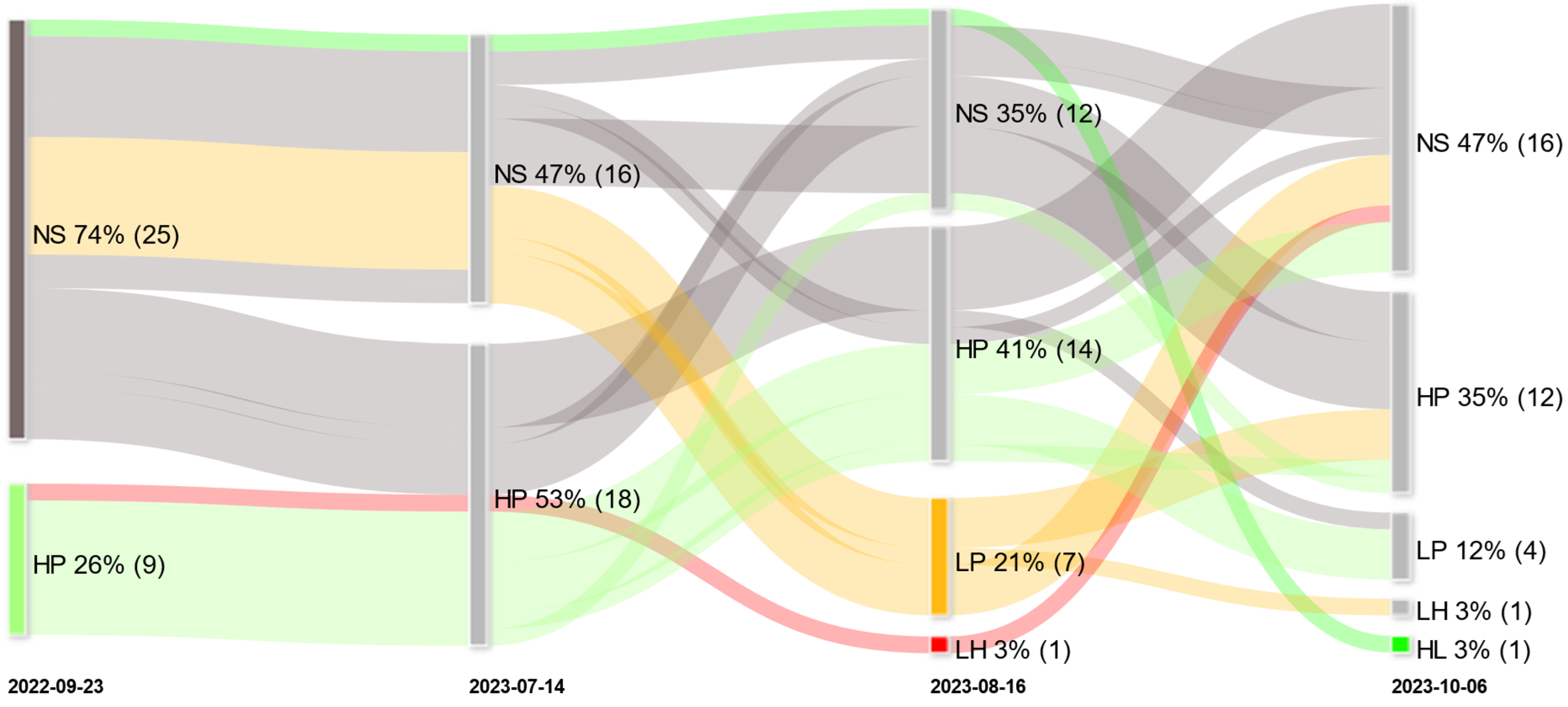

| Class / Variable | Phase 1 | Phase 2 |
|---|---|---|
| HP | −91 | +129 |
| LP | −2 | +4 |
| NS | −78 | +117 |
| HL | +16 | −19 |
| LH | −1 | +3 |
| Mean temp max (°C) | 24.98 | 24.32 |
| Mean of max temp (°C) | 24.47 | 20.27 |
| Cum. ET0 (mm) | 201.88 | 121.33 |
| Cum. Rain (mm) | 215.00 | 400.20 |
| Days > 30 °C | 6.00 | 0.00 |
| Cum. Sun (h) | 535.06 | 397.28 |
| Soil temp (10 cm) mean (°C) | 14.53 | 12.86 |
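Assuming the signed HP, LP, NS, HL and LH entries in this table are changes in the number of vines per LISA class over each phase, such figures can be derived from two per-vine classifications. The sketch below is hypothetical: it assumes both label lists are ordered by the same vine IDs, and it also counts class-to-class transitions, which identify the individual vines that changed.

```python
from collections import Counter

LISA_CLASSES = ("HP", "LP", "NS", "HL", "LH")


def class_count_change(labels_before, labels_after):
    """Signed change in the number of vines per LISA class between two dates."""
    before, after = Counter(labels_before), Counter(labels_after)
    return {c: after[c] - before[c] for c in LISA_CLASSES}


def class_transitions(labels_before, labels_after):
    """Number of vines moving from each class to each other class (same vine order)."""
    return Counter(zip(labels_before, labels_after))


# Hypothetical labels for five vines, listed in the same vine-ID order on both dates.
date_a = ["HP", "HP", "NS", "HL", "LP"]
date_b = ["NS", "HP", "NS", "NS", "LP"]
print(class_count_change(date_a, date_b))
print(class_transitions(date_a, date_b))
```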

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ruess, S.; Paulus, G.; Lang, S. Spatiotemporal Analysis of Vineyard Dynamics: UAS-Based Monitoring at the Individual Vine Scale. Remote Sens. 2025, 17, 3354. https://doi.org/10.3390/rs17193354
