DWTF-DETR: A DETR-Based Model for Inshore Ship Detection in SAR Imagery via Dynamically Weighted Joint Time–Frequency Feature Fusion

Abstract

Highlights

- A Dual-Domain Feature Fusion Module (DDFM) is proposed to jointly extract spatial and frequency-domain features, enhancing sensitivity to ship backscatter in cluttered inshore environments.

- A Dual-Path Attention Fusion Module (DPAFM) combines shallow detail and deep semantic features via attention-based reweighting, improving robustness against blurred boundaries.

- Together, the dual-domain and dual-path fusion strategies show that combining time–frequency information with attention-guided enhancement is effective for SAR ship detection.

- The findings provide insights for transformer-based detection models, with potential applications in real-time monitoring of harbors and nearshore maritime surveillance.

1. Introduction

- Building upon the DETR framework, the proposed DWTF-DETR enhances the backscatter feature representation of ship targets by incorporating a Dual-Domain Feature Fusion Module (DDFM), which implements a joint time–frequency domain feature extraction scheme. Unlike the baseline RT-DETR, which primarily relies on spatial-domain features, DDFM explicitly integrates both spatial and spectral information, thereby improving the model’s ability to capture and utilize high- and low-frequency characteristics specific to SAR imagery.

- To address the challenge of blurred or incomplete ship boundaries in nearshore scenes, DWTF-DETR introduces a Dual-Path Attention Fusion Module (DPAFM). This attention-guided feature reorganization strategy goes beyond the standard feature aggregation in existing DETR-based models by dynamically weighting and combining shallow detail features with deep semantic representations, thus enhancing sensitivity to structural characteristics of ships under complex backgrounds.

- Extensive experiments on a self-constructed inshore SAR ship detection dataset and the public HRSID dataset show that the proposed method detects ships in port scenarios more accurately than the baseline RT-DETR and other mainstream deep learning-based approaches. Relative to RT-DETR, mAP@50 rises from 82.34% to 83.94% on the self-constructed dataset and from 84.88% to 85.59% on HRSID.

2. Related Methods

2.1. Origin and Principles of RT-DETR

2.1.1. Origin of RT-DETR

2.1.2. Application of RT-DETR in SAR Ship Detection

- Introducing feature enhancement modules to improve feature expressiveness;

- Optimizing feature interaction mechanisms to strengthen long-range information propagation;

- Designing multi-scale modeling strategies to better adapt to variations in target sizes.

2.2. Spatial-Domain and Frequency-Domain Feature Extraction

2.3. Attention-Guided Feature Reorganization

3. Proposed Method

3.1. Overview of the Network Architecture

3.2. Dual-Domain Feature Fusion Module (DDFM)

3.2.1. Spatial-Domain Branch

3.2.2. Frequency-Domain Branch
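
The Introduction states that DDFM integrates spectral information so that the model can use high- and low-frequency characteristics of SAR imagery. As a generic illustration of that idea only, and not the authors' branch, the sketch below splits a 1-D signal into low- and high-frequency parts with a naive discrete Fourier transform. The function names, the `cutoff` parameter, and the toy signal are all assumptions made for this example.

```python
import cmath

def dft(x):
    """Naive O(n^2) discrete Fourier transform of a sequence."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(X):
    """Inverse transform matching dft() above."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]

def split_bands(x, cutoff):
    """Split x into low- and high-frequency parts (hypothetical helper).

    Bins whose frequency index, counted from either end, is <= cutoff are
    treated as low frequency; all remaining bins are high frequency.
    """
    n = len(x)
    X = dft(x)
    low_bins = [X[k] if min(k, n - k) <= cutoff else 0 for k in range(n)]
    high_bins = [X[k] - low_bins[k] for k in range(n)]
    # The masks are symmetric, so the imaginary parts are numerical noise.
    low = [v.real for v in idft(low_bins)]
    high = [v.real for v in idft(high_bins)]
    return low, high

# Toy input: flat background with one bright sample, loosely like a strong scatterer.
signal = [1.0, 1.0, 1.0, 9.0, 1.0, 1.0, 1.0, 1.0]
low, high = split_bands(signal, cutoff=1)
```

In this toy case the low band carries the background level and a smoothed bump, while the high band peaks at the bright sample. The two bands sum back to the input, so no information is lost by the split.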

3.2.3. Dual-Domain Fusion Component

3.3. Dual-Path Attention Fusion Module (DPAFM)
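
The Introduction describes DPAFM as dynamically weighting and combining shallow detail features with deep semantic representations. A minimal sketch of such a two-path weighting is given below. It assumes per-channel global average pooling followed by a softmax over the two paths. A trained module would normally compute the weights with learned layers, so `dual_path_fuse` and its gating rule are illustrative assumptions rather than the published design.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a short list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dual_path_fuse(shallow, deep):
    """Fuse two equally shaped feature lists with per-channel path weights.

    shallow, deep: list of channels, each channel a flat list of activations.
    Returns the fused channels and the (shallow, deep) weight pair per channel.
    """
    fused, weights = [], []
    for s_ch, d_ch in zip(shallow, deep):
        # Global average pooling: one descriptor per path and channel.
        s_desc = sum(s_ch) / len(s_ch)
        d_desc = sum(d_ch) / len(d_ch)
        # Assumption: the pooled descriptors are used directly as logits.
        w_s, w_d = softmax([s_desc, d_desc])
        weights.append((w_s, w_d))
        fused.append([w_s * s + w_d * d for s, d in zip(s_ch, d_ch)])
    return fused, weights

shallow = [[0.2, 0.8, 0.2], [1.0, 1.0, 1.0]]
deep = [[0.5, 0.5, 0.5], [3.0, 3.0, 3.0]]
fused, weights = dual_path_fuse(shallow, deep)
```

Because the two weights of each channel sum to one, every fused activation is a convex combination of the shallow and deep activations at that position. The path with the stronger pooled response gets the larger share.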

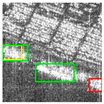

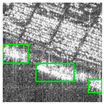

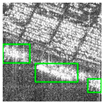

4. Materials

4.1. Test Datasets

4.2. Experimental Details

4.2.1. Experimental Environment

4.2.2. Experimental Design

- Operator Comparison Experiment:

- Ablation Experiment:

- Comparative Experiment:

4.2.3. Comparison Metrics
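
The result tables report Precision, Recall, F1 Score, and mAP@50. The sketch below uses the standard definitions of the first three, assuming the paper follows them. The helper names are hypothetical, and mAP@50 is omitted because it requires per-detection confidence scores. The last line recomputes the F1 score of the DWTF-DETR row from its reported precision and recall.

```python
def precision_recall_f1(tp, fp, fn):
    """Standard detection metrics from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

def f1_from_percent(precision_pct, recall_pct):
    """Harmonic mean of precision and recall given as percentages."""
    return 2 * precision_pct * recall_pct / (precision_pct + recall_pct)

# Reported DWTF-DETR values on the self-constructed dataset: P = 88.46, R = 77.73.
dwtf_f1 = round(f1_from_percent(88.46, 77.73), 2)
print(dwtf_f1)  # 82.75, matching the reported F1 Score
```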

5. Results

5.1. Operator Experiment

5.2. Ablation Experiment

5.3. Comparative Experiment

- Methods utilizing frequency-domain features, including FFCM, SFHF, and FocalNet [61].

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Zhang, M.; Zhu, Y.; Li, L.; Guo, J.; Liu, Z.; Li, Y. S4Det: Breadth and Accurate Sine Single-Stage Ship Detection for Remote Sense SAR Imagery. Remote Sens. 2025, 17, 900.

- Li, D.; Liang, Q.; Liu, H.; Liu, Q.; Liu, H.; Liao, G. A Novel Multidimensional Domain Deep Learning Network for SAR Ship Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

- Nie, X.; Yang, M.; Liu, R.W. Deep Neural Network-Based Robust Ship Detection Under Different Weather Conditions. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 47–52.

- Zhou, X.; Chang, N.-B.; Li, S. Applications of SAR Interferometry in Earth and Environmental Science Research. Sensors 2009, 9, 1876–1912.

- Li, J.; Xu, C.; Su, H.; Gao, L.; Wang, T. Deep Learning for SAR Ship Detection: Past, Present and Future. Remote Sens. 2022, 14, 2712.

- Dong, T.; Wang, T.; Li, X.; Hong, J.; Jing, M.; Wei, T. A Large Ship Detection Method Based on Component Model in SAR Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 4108–4123.

- Zhang, T.; Zhang, X.; Li, J.; Xu, X.; Wang, B.; Zhan, X.; Xu, Y.; Ke, X.; Zeng, T.; Su, H.; et al. SAR Ship Detection Dataset (SSDD): Official Release and Comprehensive Data Analysis. Remote Sens. 2021, 13, 3690.

- Wei, S.; Zeng, X.; Qu, Q.; Wang, M.; Su, H.; Shi, J. HRSID: A High-Resolution SAR Images Dataset for Ship Detection and Instance Segmentation. IEEE Access 2020, 8, 120234–120254.

- Yasir, M.; Jianhua, W.; Mingming, X.; Hui, S.; Zhe, Z.; Shanwei, L.; Colak, A.T.I.; Hossain, M.S. Ship Detection Based on Deep Learning Using SAR Imagery: A Systematic Literature Review. Soft Comput. 2023, 27, 63–84.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Ke, X.; Zhang, X.; Zhang, T.; Shi, J.; Wei, S. SAR Ship Detection Based on an Improved Faster R-CNN Using Deformable Convolution. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 3565–3568.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2016, arXiv:1506.01497.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Computer Vision—ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 213–229.

- Feng, Y.; You, Y.; Tian, J.; Meng, G. OEGR-DETR: A Novel Detection Transformer Based on Orientation Enhancement and Group Relations for SAR Object Detection. Remote Sens. 2024, 16, 106.

- Lin, H.; Liu, J.; Li, X.; Wei, L.; Liu, Y.; Han, B.; Wu, Z. DCEA: DETR With Concentrated Deformable Attention for End-to-End Ship Detection in SAR Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 17292–17307.

- Lei, S.; Lu, D.; Qiu, X.; Ding, C. SRSDD-v1.0: A High-Resolution SAR Rotation Ship Detection Dataset. Remote Sens. 2021, 13, 5104.

- Liu, X.; Liu, M.; Yin, Y. Infrared Ship Detection in Complex Nearshore Scenes Based on Improved YOLOv5s. Sensors 2025, 25, 3979.

- Zhou, Y.; Zhang, F.; Yin, Q.; Ma, F.; Zhang, F. Inshore Dense Ship Detection in SAR Images Based on Edge Semantic Decoupling and Transformer. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 4882–4890.

- Cheng, J.; Xiang, D.; Tang, J.; Zheng, Y.; Guan, D.; Du, B. Inshore Ship Detection in Large-Scale SAR Images Based on Saliency Enhancement and Bhattacharyya-like Distance. Remote Sens. 2022, 14, 2832.

- Chen, Z.; Ding, Z.; Zhang, X.; Wang, X.; Zhou, Y. Inshore Ship Detection Based on Multi-Modality Saliency for Synthetic Aperture Radar Images. Remote Sens. 2023, 15, 3868.

- Fu, X.; Wang, Z. Fast Ship Detection Method for SAR Images in the Inshore Region. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 3569–3572.

- Li, C.; Yue, C.; Li, H.; Wang, Z. Context-Aware SAR Image Ship Detection and Recognition Network. Front. Neurorobot. 2024, 18, 1293992.

- Bai, L.; Yao, C.; Ye, Z.; Xue, D.; Lin, X.; Hui, M. A Novel Anchor-Free Detector Using Global Context-Guide Feature Balance Pyramid and United Attention for SAR Ship Detection. IEEE Geosci. Remote Sens. Lett. 2023, 20, 4003005.

- Fu, J.; Sun, X.; Wang, Z.; Fu, K. An Anchor-Free Method Based on Feature Balancing and Refinement Network for Multiscale Ship Detection in SAR Images. IEEE Trans. Geosci. Remote Sens. 2021, 59, 1331–1344.

- Tang, G.; Zhao, H.; Claramunt, C.; Zhu, W.; Wang, S.; Wang, Y.; Ding, Y. PPA-Net: Pyramid Pooling Attention Network for Multi-Scale Ship Detection in SAR Images. Remote Sens. 2023, 15, 2855.

- Hu, Q.; Hu, S.; Liu, S. BANet: A Balance Attention Network for Anchor-Free Ship Detection in SAR Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5222212.

- Wang, P.; Chen, Y.; Yang, Y.; Chen, P.; Zhang, G.; Zhu, D.; Jie, Y.; Jiang, C.; Leung, H. A General Multiscale Pyramid Attention Module for Ship Detection in SAR Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 2815–2827.

- Zhang, T.; Zhang, X.; Ke, X. Quad-FPN: A Novel Quad Feature Pyramid Network for SAR Ship Detection. Remote Sens. 2021, 13, 2771.

- Xu, S.; Fan, J.; Jia, X.; Chang, J. Edge-Constrained Guided Feature Perception Network for Ship Detection in SAR Images. IEEE Sens. J. 2023, 23, 26828–26838.

- Feng, Y.; Zhang, Y.; Zhang, X.; Wang, Y.; Mei, S. Large Convolution Kernel Network with Edge Self-Attention for Oriented SAR Ship Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 2867–2879.

- Wu, G.; Wang, S.L.; Liu, Y.; Wang, P.; Li, Y. Ship Contour Extraction from Polarimetric SAR Images Based on Polarization Modulation. Remote Sens. 2024, 16, 3669.

- Hu, C.; Chen, H.; Sun, X.; Ma, F. Polarimetric SAR Ship Detection Using Context Aggregation Network Enhanced by Local and Edge Component Characteristics. Remote Sens. 2025, 17, 568.

- Efficient Frequency-Domain Image Deraining with Contrastive Regularization. Available online: https://link.springer.com/chapter/10.1007/978-3-031-72940-9_14 (accessed on 15 July 2025).

- Gong, M.; Wang, D.; Zhao, X.; Guo, H.; Luo, D.; Song, M. A Review of Non-Maximum Suppression Algorithms for Deep Learning Target Detection. In Proceedings of the Seventh Symposium on Novel Photoelectronic Detection Technology and Applications, Kunming, China, 5–7 November 2020; Volume 11763, pp. 821–828.

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-Time Object Detection. arXiv 2024, arXiv:2304.08069.

- Liu, C.; Zhang, Y.; Shen, J.; Liu, F. Improved RT-DETR for Infrared Ship Detection Based on Multi-Attention and Feature Fusion. J. Mar. Sci. Eng. 2024, 12, 2130.

- Wang, Y.; Li, X. Ship-DETR: A Transformer-Based Model for Efficient Ship Detection in Complex Maritime Environments. IEEE Access 2025, 13, 66031–66039.

- Yu, C.; Shin, Y. SAR Ship Detection Based on Improved YOLOv5 and BiFPN. ICT Express 2024, 10, 28–33.

- Guo, Y.; Zhang, T.; Chen, S.; Zhan, R.; Li, L.; Zhang, J. YO-DETR: A Lightweight End-to-End SAR Ship Detector Using Decoder Head without NMS. In Proceedings of the IGARSS 2024—2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 7–12 July 2024; pp. 9408–9411.

- Cao, J.; Han, P.; Liang, H.; Niu, Y. SFRT-DETR: A SAR Ship Detection Algorithm Based on Feature Selection and Multi-Scale Feature Focus. Signal Image Video Process. 2025, 19, 115.

- Xing, Z.; Ren, J.; Fan, X.; Zhang, Y. S-DETR: A Transformer Model for Real-Time Detection of Marine Ships. J. Mar. Sci. Eng. 2023, 11, 696.

- Zhang, L.; Liu, Y.; Zhao, W.; Wang, X.; Li, G.; He, Y. Frequency-Adaptive Learning for SAR Ship Detection in Clutter Scenes. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–14.

- Xu, K.; Qin, M.; Sun, F.; Wang, Y.; Chen, Y.-K.; Ren, F. Learning in the Frequency Domain. arXiv 2020, arXiv:2002.12416.

- Zhou, Z.; Zhao, L.; Ji, K.; Kuang, G. A Domain-Adaptive Few-Shot SAR Ship Detection Algorithm Driven by the Latent Similarity Between Optical and SAR Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–18.

- Liu, C.; Sun, Y.; Zhang, X.; Xu, Y.; Lei, L.; Kuang, G. OSHFNet: A Heterogeneous Dual-Branch Dynamic Fusion Network of Optical and SAR Images for Land Use Classification. Int. J. Appl. Earth Obs. Geoinf. 2025, 141, 104609.

- Wang, Y.; Li, J.; Guo, J.; Liu, R.; Cao, Q.; Li, D.; Wang, L. SFIDM: Few-Shot Object Detection in Remote Sensing Images with Spatial-Frequency Interaction and Distribution Matching. Remote Sens. 2025, 17, 972.

- When Fast Fourier Transform Meets Transformer for Image Restoration. Available online: https://link.springer.com/chapter/10.1007/978-3-031-72995-9_22 (accessed on 15 July 2025).

- Liu, C.; Sun, Y.; Xu, Y.; Sun, Z.; Zhang, X.; Lei, L.; Kuang, G. A Review of Optical and SAR Image Deep Feature Fusion in Semantic Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 12910–12930.

- Chen, L.; Li, J.; Zhong, H.; Shi, H.; Yang, Z.; Li, W. PGMNet: A Prototype-Guided Multimodal Network for Ship Recognition in SAR Images. IEEE Trans. Geosci. Remote Sens. 2025, 63, 1–17.

- Scharr, H. Optimal Operators in Digital Image Processing. 2000. Available online: https://www.researchgate.net/publication/36148383_Optimal_operators_in_digital_image_processing_Elektronische_Ressource (accessed on 18 September 2025).

- Liu, J.; Li, Z.; Zhang, X.; Li, P.; Xu, Y. Region of Interest Detection on the Complex Sea Scenes Based on Visual Saliency. In Proceedings of the Second Target Recognition and Artificial Intelligence Summit Forum, Changchun, China, 28–30 August 2019; Volume 11427, pp. 1057–1066.

- Meester, M.J.; Baslamisli, A.S. SAR Image Edge Detection: Review and Benchmark Experiments. Int. J. Remote Sens. 2022, 43, 5372–5438.

- Chen, J.; Hu, Z.; Zhao, Y.; Wu, W.; Chen, L.; Hu, Y.; Huang, B. Discrete Edge Feature Guided Rotation Detection Method for Remote Sensing Ship Wake. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 21275–21288.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. arXiv 2020, arXiv:1911.09070.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. arXiv 2019, arXiv:1709.01507.

- Ouyang, H. DEYO: DETR with YOLO for End-to-End Object Detection. arXiv 2024, arXiv:2402.16370.

- Xie, Y.; Ma, X.; Zhao, Q. Research on Target Detection Network Based on Improved Swin-DETR. In Proceedings of the 2023 4th International Conference on Big Data, Artificial Intelligence and Internet of Things Engineering (ICBAIE), Hangzhou, China, 25–27 August 2023; pp. 324–328.

- Roh, B.; Shin, J.; Shin, W.; Kim, S. Sparse DETR: Efficient End-to-End Object Detection with Learnable Sparsity. arXiv 2022, arXiv:2111.14330.

- Han, Q.; Han, X.; Niu, L.; Fan, Y. Light-YOLOv7: Lightweight Ship Object Detection Algorithm Based on CA and EMA. In Proceedings of the 2024 9th International Conference on Automation, Control and Robotics Engineering (CACRE), Jeju Island, Republic of Korea, 18–20 July 2024; pp. 231–236.

- Yang, J.; Li, C.; Dai, X.; Yuan, L.; Gao, J. Focal Modulation Networks. arXiv 2022, arXiv:2203.11926.

| Datasets | Method | Precision (%) | Recall (%) | F1 Score (%) | mAP@50 (%) |
|---|---|---|---|---|---|
| Self-constructed dataset | Sobel | 88.21 | 77.50 | 82.51 | 81.58 |
| Self-constructed dataset | Prewitt | 88.24 | 76.11 | 81.73 | 82.07 |
| Self-constructed dataset | Laplacian | 87.53 | 77.51 | 82.22 | 83.28 |
| Self-constructed dataset | Scharr | 88.56 | 77.22 | 82.50 | 83.39 |
| HRSID | Sobel | 90.64 | 76.48 | 82.96 | 84.33 |
| HRSID | Prewitt | 87.54 | 76.58 | 81.70 | 84.18 |
| HRSID | Laplacian | 87.94 | 79.25 | 83.37 | 84.88 |
| HRSID | Scharr | 89.12 | 78.58 | 83.52 | 84.95 |
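
The operator experiment compares Sobel, Prewitt, Laplacian, and Scharr. For orientation, the sketch below applies the common 3×3 textbook forms of these kernels (horizontal-gradient variants for the first three) to a small step edge. The kernel scaling and the way the operators are embedded in the network are not given in this excerpt, so the unnormalized integer kernels and the helper `correlate_valid` are assumptions.

```python
# Textbook 3x3 kernels; the first three respond to horizontal intensity changes.
KERNELS = {
    "Sobel":     [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]],
    "Prewitt":   [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]],
    "Scharr":    [[-3, 0, 3], [-10, 0, 10], [-3, 0, 3]],
    "Laplacian": [[0, 1, 0], [1, -4, 1], [0, 1, 0]],
}

def correlate_valid(image, kernel):
    """Cross-correlate a 2-D list with a kernel, keeping only fully covered positions."""
    kh, kw = len(kernel), len(kernel[0])
    rows = len(image) - kh + 1
    cols = len(image[0]) - kw + 1
    return [[sum(kernel[i][j] * image[r + i][c + j]
                 for i in range(kh) for j in range(kw))
             for c in range(cols)]
            for r in range(rows)]

# 4x4 toy image with a vertical step edge between columns 1 and 2.
image = [[0, 0, 10, 10] for _ in range(4)]
responses = {name: correlate_valid(image, k) for name, k in KERNELS.items()}
```

On this edge the three gradient kernels give a constant response of 40 (Sobel), 30 (Prewitt), and 160 (Scharr), which reflects only their different weightings. The Laplacian changes sign across the edge (10 on the dark side, −10 on the bright side).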

| Datasets | Method | A1 | A2 | Precision (%) | Recall (%) | F1 Score (%) | mAP@50 (%) |
|---|---|---|---|---|---|---|---|
| Self-constructed dataset | M1 | × | × | 87.86 | 77.18 | 82.17 | 82.34 |
| Self-constructed dataset | M2 | ✓ | × | 88.56 (+0.70) | 77.22 (+0.04) | 82.50 (+0.33) | 83.39 (+1.05) |
| Self-constructed dataset | M3 | × | ✓ | 89.71 (+1.85) | 76.57 (−0.61) | 82.62 (+0.45) | 82.88 (+0.54) |
| Self-constructed dataset | M4 | ✓ | ✓ | 88.46 (+0.60) | 77.73 (+0.55) | 82.75 (+0.58) | 83.94 (+1.60) |
| HRSID | M1 | × | × | 89.05 | 78.13 | 83.23 | 84.88 |
| HRSID | M2 | ✓ | × | 89.12 (+0.07) | 78.58 (+0.45) | 83.52 (+0.28) | 84.95 (+0.08) |
| HRSID | M3 | × | ✓ | 89.45 (+0.40) | 79.22 (+1.09) | 84.02 (+0.79) | 85.28 (+0.40) |
| HRSID | M4 | ✓ | ✓ | 91.37 (+2.31) | 78.83 (+0.70) | 84.64 (+1.40) | 85.59 (+0.72) |
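
The values in parentheses in the ablation table are gains over the M1 baseline on the same dataset. The short sketch below recomputes them for the self-constructed dataset from the rounded table entries. The dictionary layout and the helper `gains` are assumptions for illustration.

```python
# Rounded ablation results on the self-constructed dataset, copied from the table.
BASELINE = {"precision": 87.86, "recall": 77.18, "f1": 82.17, "map50": 82.34}  # M1
VARIANTS = {
    "M2": {"precision": 88.56, "recall": 77.22, "f1": 82.50, "map50": 83.39},
    "M3": {"precision": 89.71, "recall": 76.57, "f1": 82.62, "map50": 82.88},
    "M4": {"precision": 88.46, "recall": 77.73, "f1": 82.75, "map50": 83.94},
}

def gains(row, base):
    """Difference of each metric to the baseline, in percentage points."""
    return {key: round(row[key] - base[key], 2) for key in base}

table_gains = {name: gains(row, BASELINE) for name, row in VARIANTS.items()}
for name, g in table_gains.items():
    print(name, g)
```

For this dataset the recomputed gains agree with the printed ones. Applying the same subtraction to the HRSID rows gives values that differ from some printed gains by 0.01 (for example 91.37 − 89.05 = 2.32 against the printed +2.31), which would be consistent with the printed gains having been computed before rounding.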

[Figure: detection results and heatmaps for methods M1, M2, M3, and M4 in two scene types. Ships in Complex Land–Object Environments: Result1, Hotmap1. Moored and Near-Shore Ships: Result2, Hotmap2.]

| Category | Method | Precision (%) | Recall (%) | F1 Score (%) | mAP@50 (%) |
|---|---|---|---|---|---|
| General Detector | YOLOv8 | 85.75 | 68.30 | 76.04 | 77.15 |
| General Detector | YOLOv11 | 83.76 | 69.63 | 76.04 | 76.49 |
| General Detector | YOLOv12 | 85.98 | 68.27 | 76.11 | 75.97 |
| General Detector | YOLO-DETR | 87.24 | 73.49 | 79.78 | 79.56 |
| General Detector | SWIN-DETR | 86.64 | 76.88 | 81.47 | 82.16 |
| General Detector | Sparse-DETR | 87.31 | 76.85 | 81.74 | 82.68 |
| General Detector | RT-DETR | 87.86 | 77.18 | 82.17 | 82.34 |
| Methods based on feature enhancement | YOLO-BiFPN | 89.51 | 75.75 | 82.06 | 82.92 |
| Methods based on feature enhancement | RT-DETR-BiFPN | 87.73 | 77.62 | 82.36 | 83.06 |
| Methods based on feature enhancement | SEAttention | 87.54 | 77.18 | 82.03 | 82.90 |
| Methods based on feature enhancement | CBAM | 88.63 | 76.41 | 82.07 | 82.94 |
| Methods based on feature enhancement | EMA | 88.16 | 77.16 | 82.29 | 82.60 |
| Methods utilizing multi-domain fusion | FFCM | 88.05 | 77.16 | 82.24 | 82.72 |
| Methods utilizing multi-domain fusion | SFHF | 87.29 | 78.12 | 82.45 | 83.31 |
| Methods utilizing multi-domain fusion | FocalNet | 86.97 | 76.41 | 81.34 | 82.27 |
| Our method | DWTF-DETR | 88.46 | 77.73 | 82.75 | 83.94 |

| Category | Method | Layers | Parameters (M) | GFLOPs |
|---|---|---|---|---|
| General Detector | YOLOv8 | 225 | 3.01 | 8.2 |
| General Detector | YOLOv11 | 319 | 2.59 | 6.4 |
| General Detector | YOLOv12 | 465 | 2.57 | 6.5 |
| General Detector | YOLO-DETR | 228 | 6.19 | 12.0 |
| General Detector | SWIN-DETR | 402 | 36.41 | 97.3 |
| General Detector | Sparse-DETR | 473 | 19.73 | 54.0 |
| General Detector | RT-DETR | 295 | 19.97 | 57.3 |
| Methods based on feature enhancement | YOLO-BiFPN | 369 | 1.93 | 6.4 |
| Methods based on feature enhancement | RT-DETR-BiFPN | 315 | 20.40 | 64.6 |
| Methods based on feature enhancement | SEAttention | 323 | 20.06 | 57.3 |
| Methods based on feature enhancement | CBAM | 343 | 20.06 | 57.3 |
| Methods based on feature enhancement | EMA | 325 | 22.34 | 66.4 |
| Methods utilizing multi-domain fusion | FFCM | 445 | 16.66 | 50.9 |
| Methods utilizing multi-domain fusion | SFHF | 493 | 18.01 | 54.9 |
| Methods utilizing multi-domain fusion | FocalNet | 535 | 14.56 | 48.7 |
| Our method | DWTF-DETR | 331 | 25.09 | 66.7 |

[Figure: detection results of each compared method in columns E1, E2, and E3. Methods shown: YOLOv8, YOLOv11, YOLOv12, YOLO-DETR, SWIN-DETR, Sparse-DETR, RT-DETR, YOLO11-BiFPN, RT-DETR-BiFPN, SE Attention, CBAM, EMA, FFCM, SFHF, FocalNet, DWTF-DETR.]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dong, T.; Wang, T.; Han, Y.; Li, D.; Zhang, G.; Peng, Y. DWTF-DETR: A DETR-Based Model for Inshore Ship Detection in SAR Imagery via Dynamically Weighted Joint Time–Frequency Feature Fusion. Remote Sens. 2025, 17, 3301. https://doi.org/10.3390/rs17193301
