Convex-Decomposition-Based Evaluation of SAR Scene Deception Jamming Oriented to Detection

Abstract

1. Introduction

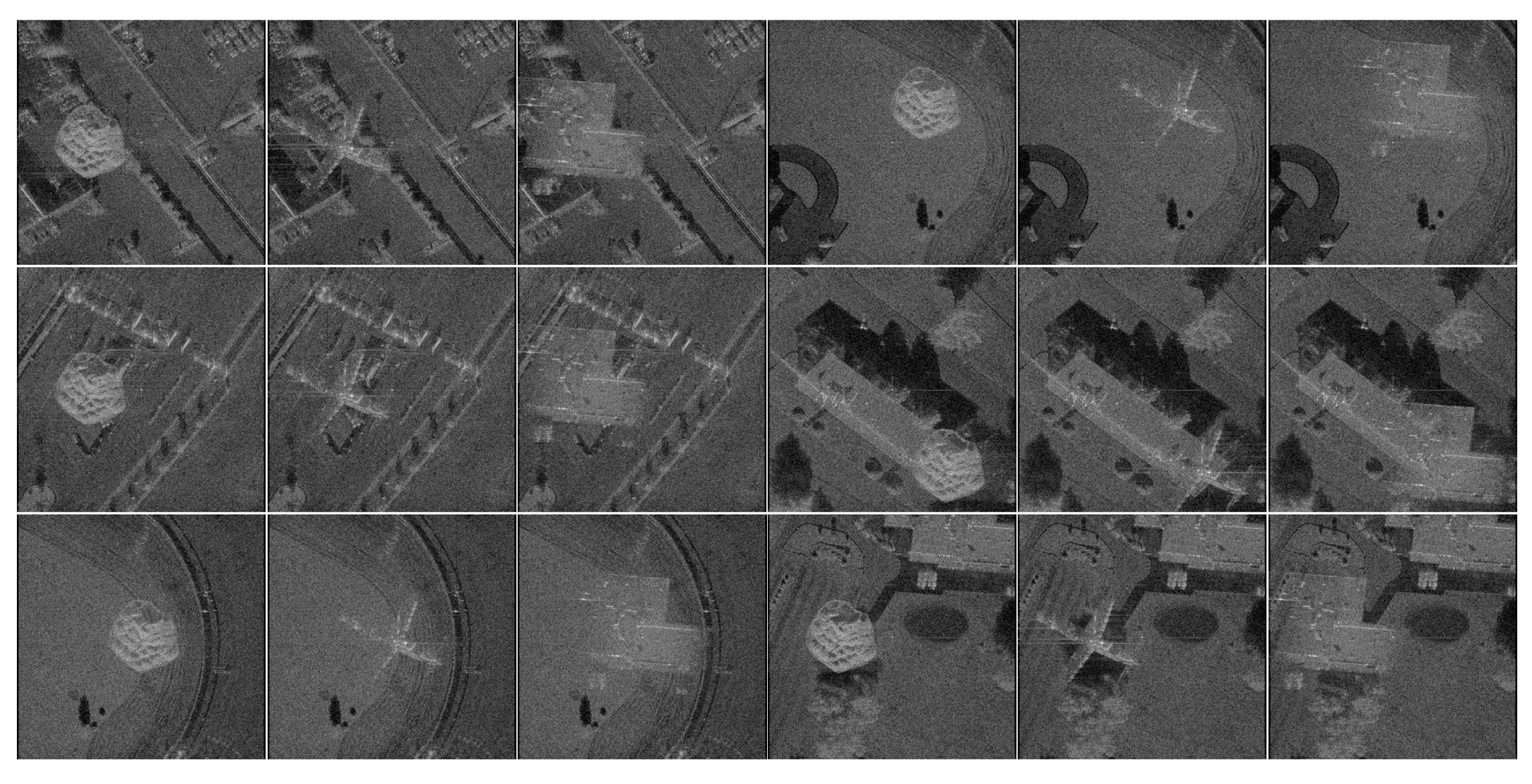

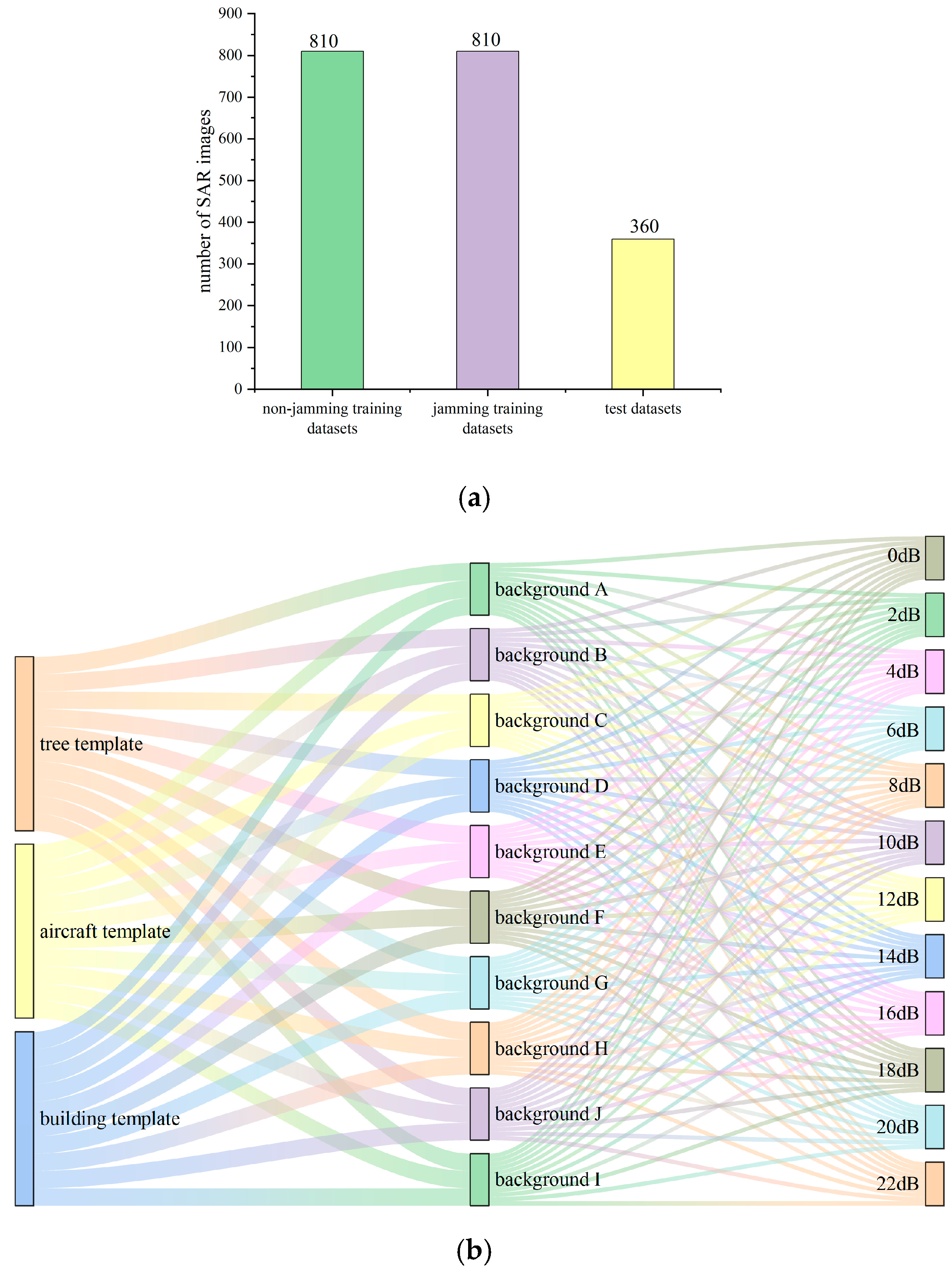

2. Generation of SAR Scene Deception Jamming Dataset

3. Visual and Non-Visual Feature Parameters

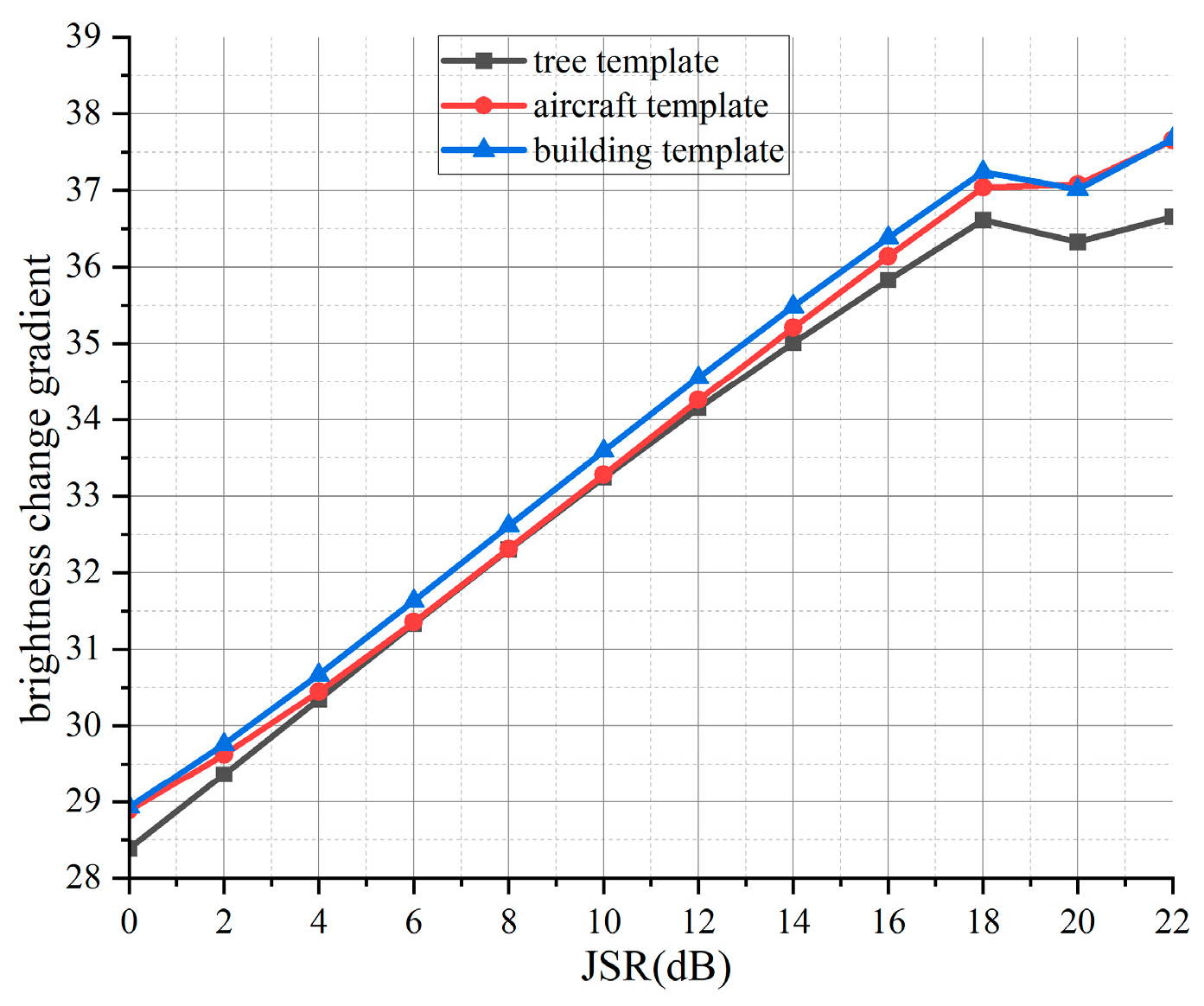

3.1. Brightness Change Gradient

3.2. Texture Direction Contrast Degree

3.3. Edge Matching Degree

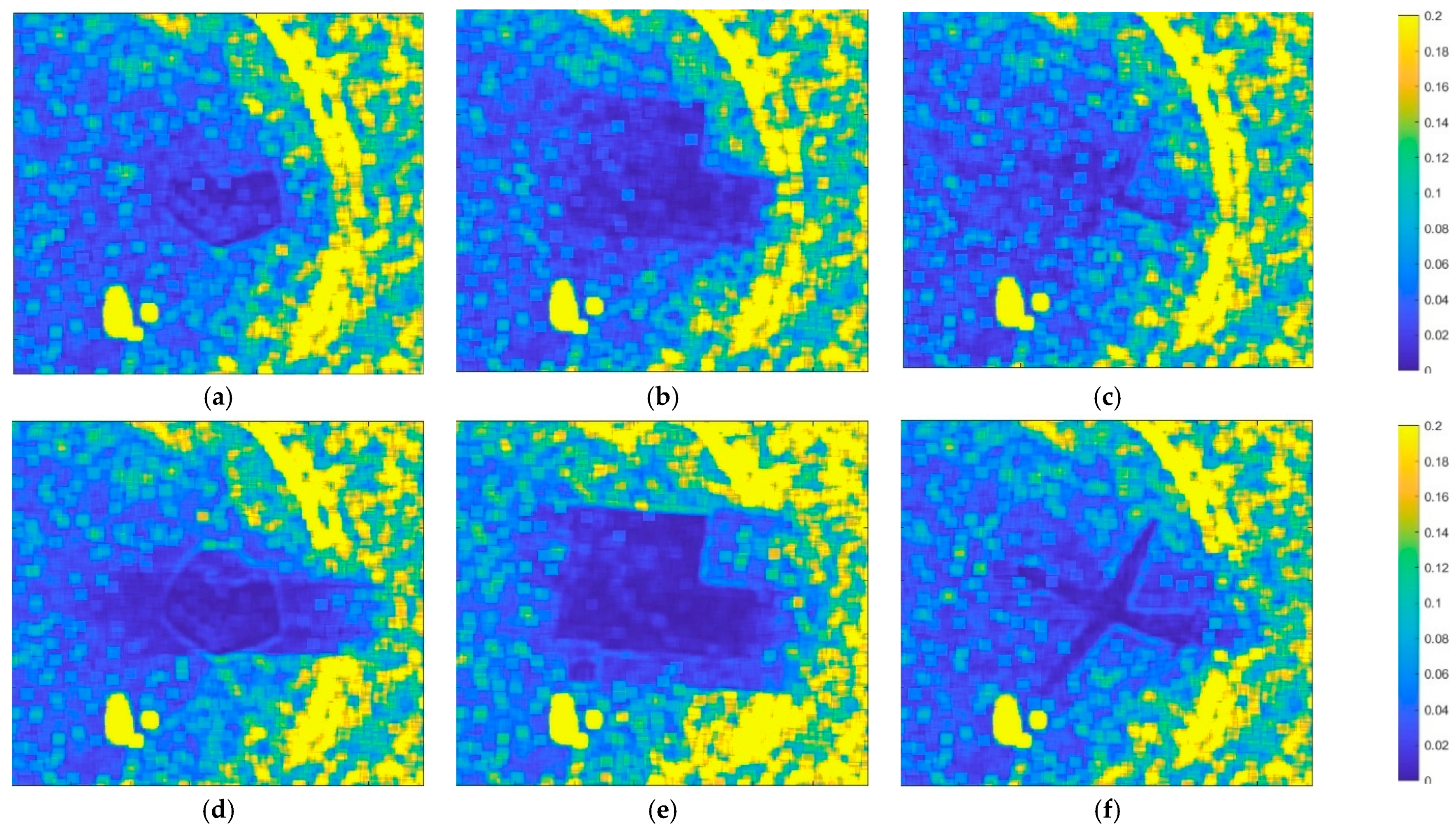

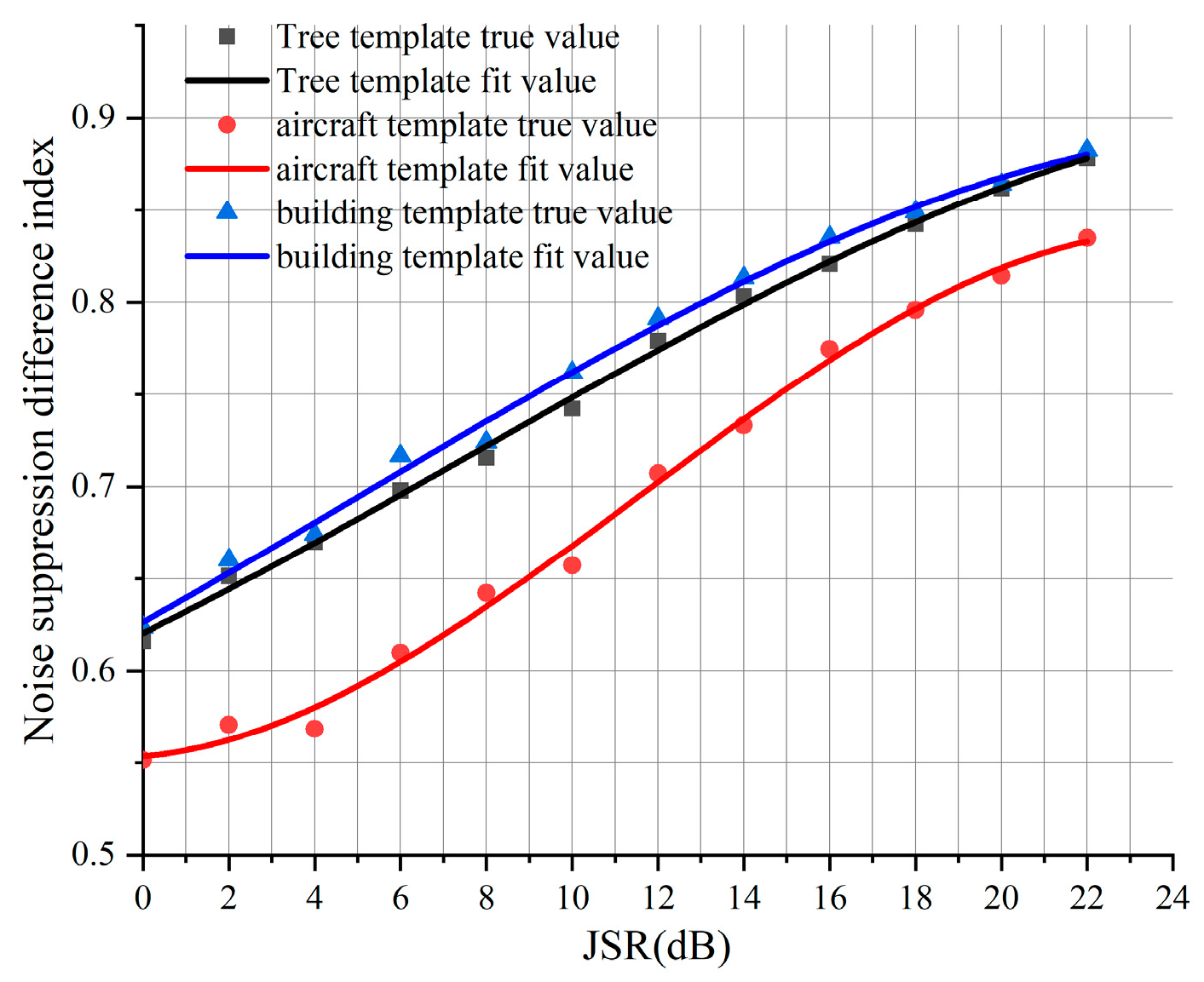

3.4. Noise Suppression Difference Index

4. Convex-Decomposition-Guided Evaluation Indicator for Deception Degree

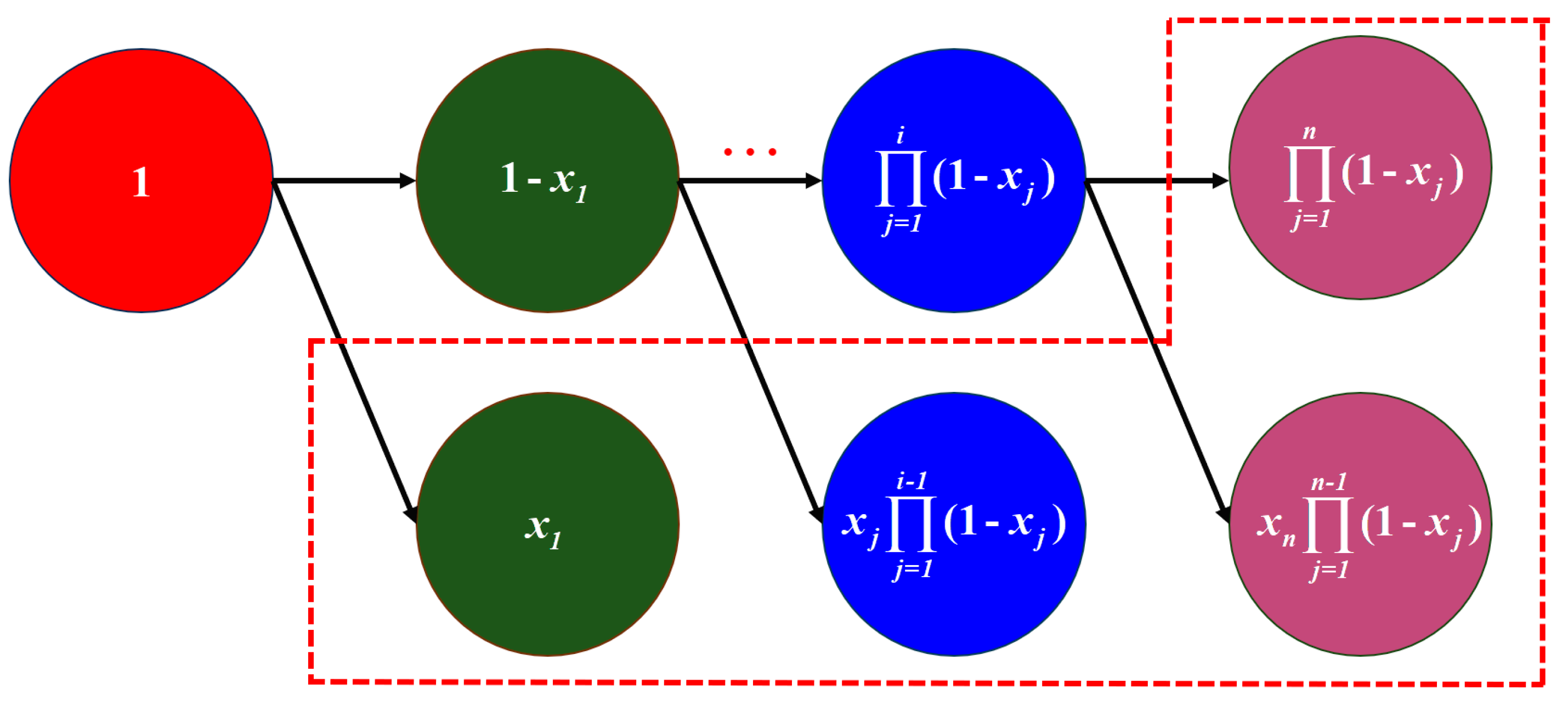

4.1. Convex-Decomposition Weighting

4.2. Convex-Decomposition-Based Scene Deception Degree

- (1) Constructing the weight optimization model

- (2) Convex-decomposition weight decomposition and subproblem construction

- (3) Iterative solving and convergence judgment
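The outline above names the steps but gives no formulas, so the following is only a minimal sketch of steps (1) and (3) under stated assumptions, not the authors' method. It assumes the scene deception degree is a weighted sum of the four feature parameters from Section 3, with non-negative weights that sum to one, and it fits those weights by projected gradient descent on a least-squares objective. The function names `project_to_simplex` and `fit_weights`, the feature matrix `F`, the target vector `y`, and the tolerance are all hypothetical. The subproblem decomposition of step (2) is not reproduced because the outline does not specify it.

```python
import numpy as np


def project_to_simplex(v):
    """Euclidean projection of v onto {w : w >= 0, sum(w) = 1}."""
    u = np.sort(v)[::-1]                      # sort descending
    css = np.cumsum(u) - 1.0                  # cumulative sums minus the target total
    k = np.arange(1, len(v) + 1)
    rho = np.nonzero(u - css / k > 0)[0][-1]  # last index that stays positive
    theta = css[rho] / (rho + 1)              # shift that enforces sum(w) = 1
    return np.maximum(v - theta, 0.0)


def fit_weights(F, y, tol=1e-8, max_iter=5000):
    """Assumed model: minimize 0.5 * ||F w - y||^2 over the probability simplex.

    F : (n_samples, n_features) feature values (hypothetical input)
    y : (n_samples,) target score per sample (hypothetical input)
    Returns the weights and the number of iterations used.
    """
    n_features = F.shape[1]
    w = np.full(n_features, 1.0 / n_features)   # start from equal weights
    step = 1.0 / (np.linalg.norm(F, 2) ** 2)    # 1 / Lipschitz constant of the gradient
    for it in range(max_iter):
        grad = F.T @ (F @ w - y)                # gradient of the least-squares objective
        w_new = project_to_simplex(w - step * grad)
        if np.linalg.norm(w_new - w) < tol:     # convergence judgment on the update size
            return w_new, it + 1
        w = w_new
    return w, max_iter
```

Calling `fit_weights(F, y)` with a feature matrix of shape `(n, 4)` returns four weights that can be combined with the feature values as `F @ w`. The simplex constraint keeps the feasible set convex, so the update-size test in the loop is a reasonable stopping rule for this assumed objective.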

5. Analysis of Experimental Results

5.1. Analysis of SAR Scene Deception Jamming Feature Parameters

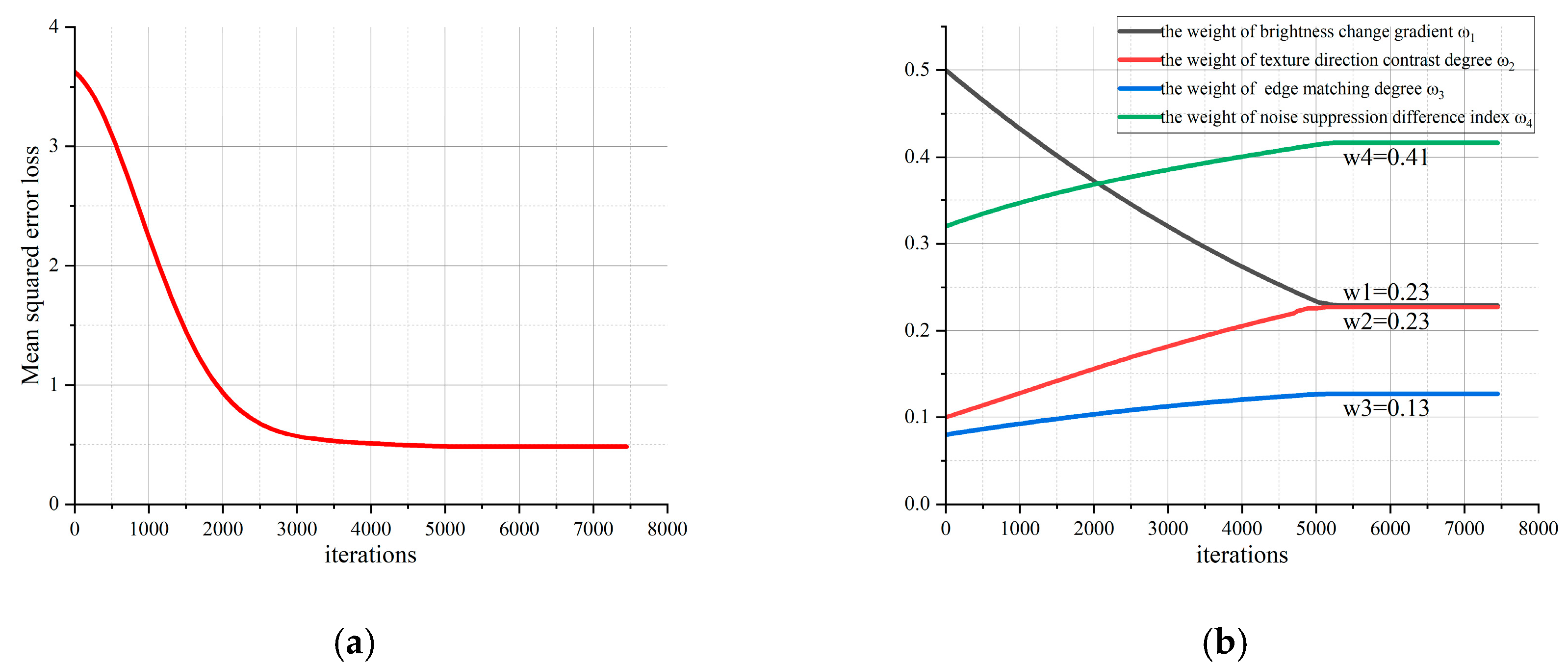

5.2. Iterative Optimization Process of Convex-Decomposition Weight Decomposition Methods

5.3. Analysis of the Relationship Between Scene Deception Degree and Jamming Detection Rate

6. Discussion

6.1. SAR Scene Deception Jamming Detection Results

6.2. Ablation Experiment

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| SDD Interval | (a) | (b) | (c) | (d) | (e) |
|---|---|---|---|---|---|
| 0.2–0.4 | 99.9% | 99.8% | 99.9% | 99.9% | 99.9% |
| 0.4–0.6 | 95.2% | 86.7% | 99.1% | 97.0% | 94.9% |
| 0.6–0.8 | 69.8% | 62.8% | 78.7% | 70.1% | 67.7% |
| 0.8–1.0 | 55.5% | 58.4% | 60.5% | 59.6% | 60.0% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, H.; Quan, S.; Xing, S.; Zhang, H. Convex-Decomposition-Based Evaluation of SAR Scene Deception Jamming Oriented to Detection. Remote Sens. 2025, 17, 3178. https://doi.org/10.3390/rs17183178
