1. Introduction

Computer-aided maritime surveillance has gained attention with the evolution of computer vision (CV) methods for classifying, detecting, and tracking ships in different contexts. Images and video help companies identify illegal activities and monitor the behavior of ships along coastlines [1]. Traditional Automatic Identification System (AIS) data, used to control maritime traffic and improve navigational security, suffers from several problems, primarily caused by human manipulation and incorrect data sent by ships to the control station [2]. Although AIS is mandatory for some classes of ships, vessels engaged in illegal activities may turn off their AIS transponders. To minimize these impacts, some works have proposed fusing AIS data with satellite data [3,4,5] and others with radar images [6], such as Synthetic Aperture Radar (SAR) images. Associating the two data types enables the identification of collaborative and non-collaborative ships.

Satellite-based remote sensing technologies make SAR data an important source for maritime monitoring [7]. The main advantage of SAR is its all-day, all-weather data collection capability. In [8,9], surveys on SAR ship detection are presented, ranging from traditional detection methods using sea–land segmentation and constant false alarm rate (CFAR)-based models to recent deep learning (DL) CV models. Although SAR is an important resource for maritime imaging, many problems remain. First, land–ocean segmentation may be necessary in some SAR image scenarios. Second, when SAR image data are limited, one alternative is to fine-tune models pre-trained on standard datasets (e.g., COCO or ImageNet); however, this approach may lead to suboptimal detection performance due to domain differences. Moreover, ships at sea or near land may appear against backgrounds containing similar patterns, making detection more challenging. Since the SAR Ship Dataset (SSD) [10] and the SAR Ship Detection Dataset (SSDD) [11] were released, numerous researchers have worked to enhance ship detection in complex environments with intricate backgrounds. These two datasets facilitated access to SAR images in the maritime context and contributed significantly to research, particularly in the application of DL. In [8], 177 papers were organized into the past, present, and future development of models applied to ship detection in SAR images. Convolutional Neural Network (CNN)-based models have been widely used in SAR ship detection, particularly the You Only Look Once (YOLO) model, in nearly all its versions, from YOLOv1 to YOLOv10. This work analyzes the use of attention modules in various YOLO versions, with the goal of improving performance on benchmark datasets and addressing challenges specific to SAR imagery, including small objects (e.g., small ships), complex backgrounds, and cluttered scenes (e.g., land objects that resemble ships at sea). While focused on ship detection, we also evaluate the generalizability of our approach to other object types and multi-class SAR detection tasks.

This study employs three recent YOLO architectures (v10, v11, and v12) as baselines to investigate whether strategically integrating attention blocks across distinct network layers can enhance detection performance in complex SAR environments. Building on the YOLO family’s ongoing evolution, which pursues the dual objectives of reduced computational complexity and higher accuracy, we leverage these advancements to optimize SAR ship detection.

To enhance small ship detection in SAR imagery, we integrate three attention mechanisms, Convolutional Block Attention Module (CBAM), Swin Transformer, and Bi-level Routing Attention (BRA), into YOLO versions 10, 11, and 12. These modules are strategically embedded at critical network stages: during downsampling, upsampling, or as a cross-stage feature fusion mechanism positioned between the downsampling and upsampling stages. This targeted placement aims to optimize multi-scale feature extraction, specifically addressing challenges posed by small vessels and complex maritime backgrounds in SAR data.

Our systematic integration of attention mechanisms across targeted YOLO architectures advances SAR ship detection in three key dimensions. First, to our knowledge, we establish the first YOLOv11 and YOLOv12 frameworks explicitly optimized for SAR object detection, demonstrating superior performance on ship, aircraft, and multi-class datasets. Regarding SAR ship detection, this work addresses a critical gap in the maritime surveillance literature. Second, by embedding CBAM, Swin, and BRA modules at strategically identified layers, we significantly enhance small target detection capabilities, overcoming persistent challenges posed by vessel compactness in radar imagery. Finally, rigorous validation across two benchmark datasets (SAR Ship and SSDD) demonstrates that optimizing attention allocation in layers sensitive to object scale and background complexity (Section 4.3.2) yields state-of-the-art performance. This approach not only resolves SAR-specific detection nuances but also provides a transferable foundation for scaling deep learning applications in remote sensing.

The remainder of this work is structured as follows. Section 2 reviews the SAR-specific object detection literature, with emphasis on YOLO architectural developments and the application of attention mechanisms. Section 3 outlines our experimental methodology, including the benchmark datasets (SAR Ship, SSDD, SADD, and MSAR), evaluation metrics, YOLO baseline architectures, and strategies for integrating attention modules. Section 4 presents a comprehensive evaluation: environmental setup, performance analysis, and ablation studies on the primary SAR Ship dataset, followed by rigorous cross-dataset validation on SSDD to assess generalizability. To further stress-test our approach, we extend the evaluation to non-ship SAR object detection on the SADD and MSAR datasets. Finally, Section 5 synthesizes key findings and proposes future research directions for maritime surveillance. All supplementary material is available at https://github.com/rlrocha90/Enhancing-YOLO-based-SAR-Ship-Detection-with-Attention-Mechanisms (accessed on 7 February 2025).

2. Related Works

Since the development of the original YOLO model [12], researchers across various fields have continuously proposed new iterations and enhancements to its architecture [13,14]. The evolution from the initial YOLO reflects a consistent effort to achieve two primary goals: reducing model complexity to enable faster image processing and improving detection performance. Remarkably, YOLOv10 has managed to achieve both objectives simultaneously, showcasing advancements in efficiency without compromising accuracy [15].

Detecting small objects is a particularly challenging yet crucial task, which YOLO models may help address [16]. The task becomes even more demanding in images captured by stationary cameras, drones, satellites, or other vision systems, where small objects are often indistinguishable from background noise or are represented by only a few pixels [17]. Despite the substantial progress enabled by the DL paradigm, traditional CNNs face inherent limitations in accurately identifying such small targets. Overcoming these challenges requires innovative modifications to the architecture and the development of specialized techniques to enhance detection capabilities for small objects. YOLO’s evolution underscores the importance of addressing these limitations while maintaining its hallmark speed and efficiency, solidifying its relevance in real-world applications.

SAR images are crucial in maritime object detection because they can be captured under various weather and lighting conditions [18]. However, these images often include complex backgrounds due to the unique acquisition process of SAR sensors. Additionally, detecting small objects such as ships relies heavily on precise chip cropping after image generation to focus on regions of interest: a large SAR image from the sensor is cropped into sub-images, from which ship chips (patches containing ships) are obtained and added to the dataset. These factors make ship detection in SAR data (i.e., SAR ship detection) particularly challenging.
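The cropping step described above can be sketched as a simple tiling of a large scene into fixed-size sub-images. This minimal NumPy sketch rests on our own assumptions: the scene is a 2D grayscale array, chips are 256 × 256 (the SSD chip size), tiles do not overlap, and border tiles are zero-padded. `tile_scene` is a hypothetical helper; actual dataset pipelines may use overlapping windows or keep only the chips that contain ships.

```python
import numpy as np


def tile_scene(scene: np.ndarray, chip: int = 256) -> list[tuple[int, int, np.ndarray]]:
    """Split a 2D grayscale scene into non-overlapping chip x chip tiles.

    Returns (row_offset, col_offset, tile) triples. Border tiles are
    zero-padded so that every tile has the same shape (an assumption of
    this sketch, not a property of any specific dataset).
    """
    h, w = scene.shape
    # Pad the scene up to the next multiple of the chip size.
    pad_h = (-h) % chip
    pad_w = (-w) % chip
    padded = np.pad(scene, ((0, pad_h), (0, pad_w)), mode="constant")
    tiles = []
    for r in range(0, padded.shape[0], chip):
        for c in range(0, padded.shape[1], chip):
            tiles.append((r, c, padded[r:r + chip, c:c + chip]))
    return tiles
```

For a 600 × 1000 scene, this yields 3 × 4 = 12 chips; the offsets can be kept to map detections back to scene coordinates.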

Numerous models have been proposed to address this issue, many using variations of the YOLO architecture as a baseline. Earlier versions, such as YOLOv3 [19] and YOLOv4 [20], laid the groundwork for applying YOLO-based methods to SAR ship detection. Subsequent advancements, including YOLOv5 [7,21], YOLOv7 [22,23], YOLOv8 [24,25,26], and YOLOX [27], introduced innovations to better handle the intricacies of SAR images and the challenges posed by small-object detection.

In addition to the YOLO family, other DL architectures have also been employed. These include Deep Convolutional Neural Networks (DCNNs) [28,29], the Detection Transformer (DETR) [30], and the CenterNet network framework [31], among others. Each approach aims to enhance the detection of small objects in SAR imagery, leveraging unique architectural features to improve accuracy and robustness.

In almost all the cited works, the authors leveraged the layered architecture of recent DL models to integrate various enhancement modules seamlessly. These modules make the models more efficient and accurate and include dilated [27], deformable, and depthwise separable convolutions, as well as ghost modules [32]. Among them, attention mechanisms are among the most effective, having transformed how models focus on relevant regions of an image while ignoring less important details [33]. By dynamically weighting features according to their relevance, attention mechanisms have been particularly effective in addressing challenges such as small-object detection [34], adverse weather conditions [35], and occlusion [36]. Since the introduction of attention mechanisms for image processing in [37], followed by advancements such as the Vision Transformer (ViT) [26] and DETR [38], attention-based methods have become integral to modern computer vision tasks.

One notable development is Bi-Level Routing Attention (BRA) [39], a dynamic, query-aware sparse attention mechanism. This innovation has inspired numerous applications across diverse scenarios. For instance, BRA has been used in power fitting detection tasks [40], medical imaging for chest X-ray analysis [41], and small-object detection in remote sensing images, often in combination with YOLO-based architectures (e.g., BRA+YOLO) [42,43].

Furthermore, BRA has been applied to enhance object detection in adverse weather using BRA+YOLOv9 [44] and to improve recognition accuracy for occluded, long-range, and diminutive targets, such as Unmanned Aerial Vehicles (UAVs) [45]. It has also been applied to recognizing classroom learning behavior with BRA-enhanced YOLOv8 models [46].

Another attention module with numerous applications is the Convolutional Block Attention Module (CBAM) [47], which processes the input feature map along two separate dimensions, channel and spatial, to capture image details. Among works using the CBAM, in [48], the authors combined YOLOv8 with the CBAM and Receptive Field Attention Convolution (RFAConv) to reduce erroneous detections in UAV scenarios, which are challenging due to small target sizes and complex backgrounds. The combined modules replaced the original C2f and Conv modules of YOLOv8. In [49], the authors used YOLOv5 with the CBAM to recognize traffic signs. After a pre-processing step, the CBAM was introduced into the model, allowing it to focus on the shape of the traffic signs and improving perception against complex backgrounds. In a more recent work [50], the authors used a YOLOv11 model to detect pests and diseases on maize leaves, a task made significantly harder by complex conditions. The CBAM was introduced between the SPPF and C2PSA modules to improve the model’s ability to identify and select essential features in both the channel and spatial dimensions.
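To make the channel-then-spatial ordering of the CBAM concrete, the following is a minimal, single-image NumPy sketch of a CBAM-style block in the spirit of [47]. The weights are random placeholders, biases are omitted, the reduction ratio of the shared MLP is set by the caller through the weight shapes, and the 7 × 7 spatial convolution is written naively for readability. All function names are our own; this is not the implementation used in [48,49,50] or in our experiments.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(f, w1, w2):
    """Channel map M_c of shape (C,) for a feature map f of shape (C, H, W).

    w1: (C // r, C) and w2: (C, C // r) form the shared two-layer MLP.
    """
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # ReLU between the two layers

    avg = f.mean(axis=(1, 2))  # global average pooling per channel
    mx = f.max(axis=(1, 2))    # global max pooling per channel
    return sigmoid(mlp(avg) + mlp(mx))


def spatial_attention(f, kernel):
    """Spatial map M_s of shape (H, W); kernel has shape (2, k, k)."""
    desc = np.stack([f.mean(axis=0), f.max(axis=0)])  # (2, H, W), pooled over channels
    k = kernel.shape[-1]
    p = k // 2
    padded = np.pad(desc, ((0, 0), (p, p), (p, p)))  # "same" zero padding
    h, w = f.shape[1:]
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)


def cbam(f, w1, w2, kernel):
    """Apply channel attention, then spatial attention (sequential CBAM order)."""
    f = f * channel_attention(f, w1, w2)[:, None, None]
    return f * spatial_attention(f, kernel)[None, :, :]
```

Because both attention maps lie in [0, 1], the block can only rescale features, which is how it suppresses less relevant channels and background locations.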

Attention mechanisms have been successfully employed in various object detection models, including Swin-YOLOv5 [34,51] and ViT-based approaches [26]. Swin [52] is a vision Transformer based on shifted windows: it computes self-attention efficiently within non-overlapping local windows while also allowing cross-window connections. In [53], the authors addressed the low detection accuracy and inaccurate localization of small objects in remote sensing images by combining the YOLOv5 model, with a modified CSPDarknet53 structure, and the Swin Transformer, which was added to retain context information and extract more discriminative features. In another recent work [54], the authors improved the Swin Transformer into a Neural Swin Transformer (NST) and integrated it with the YOLOv11 model. They applied the proposed model to ship detection in SAR images and compared it with ship detection in optical images. By incorporating neural elements, their approach eliminates the redundant information generated by the local-window self-attention module in Swin.

However, these works typically focus on general object detection tasks rather than the unique complexities of SAR imagery, such as the presence of complex backgrounds and the low resolution of small ships. To contextualize these advancements, Table 1 synthesizes key SAR ship detection methods. While YOLO-based models have dominated recent research (e.g., YOLOv3 and YOLOv8), only a few studies have integrated attention mechanisms. Notably, YOLOv8+SimAM [25] achieves 97.72% mAP@0.5 on SSD, and BRA has been applied in remote sensing [42]. However, these approaches lack optimization for SAR-specific challenges, such as small vessels (relative size < 0.2) or computational efficiency. Non-YOLO frameworks (e.g., DETR, CenterNet) exhibit high accuracy but incur higher computational costs, which limits their real-time deployment.

Building on this foundation, our work bridges the identified gaps through three key innovations: (i) we pioneer optimized YOLOv11 and YOLOv12 frameworks for SAR ship detection, addressing the absence of tailored adaptations for these state-of-the-art architectures, (ii) unlike ad hoc attention integration, we implement systematic layer replacement to target SAR-specific complexities like small objects and background clutter, and (iii) we validate robustness via cross-dataset evaluation (SAR Ship and SSDD), ensuring generalizability beyond single-benchmark tuning.

Crucially, while prior SAR-focused YOLO enhancements relied on convolutional modifications (e.g., dilated or deformable convolutions [27,32,55]), our approach replaces original layers with BRA, Swin, or CBAM modules, selectively amplifying discriminative features for small vessels while avoiding computational bloat.

Furthermore, compared with non-YOLO frameworks (e.g., DETR [30] and CenterNet [31]), our approach maintains the real-time efficiency of the YOLO family. As Table 1 confirms, our CBAM-enhanced YOLOv12n achieves superior accuracy (98.6% and 98.0% mAP@0.5 on SSDD and SSD, respectively) with fewer computations (5.9 GFLOPs vs. 6.5 GFLOPs for the baseline), enabling high-precision maritime surveillance without sacrificing deployability.

3. Materials and Methods

This section provides an overview of the datasets, metrics, architectural design, and methodologies used to enhance SAR ship detection performance. The primary dataset in this work is the SAR Ship Dataset (SSD), a highly challenging dataset characterized by diverse ship sizes, complex backgrounds, and small objects. The second, the SAR Ship Detection Dataset (SSDD), is smaller but poses the same challenges. Additionally, two other datasets, the SAR Aircraft Detection Dataset (SADD) and the Large-Scale Multi-Class SAR Image dataset (MSAR), are used to investigate the models’ generalization ability in different contexts.

For all four datasets, we compute the bounding box size proportion following the COCO metric to classify objects as small, medium, or large. The COCO evaluation treats objects with an area below $32^2$ pixels as small, those between $32^2$ and $96^2$ pixels as medium, and those above $96^2$ pixels as large, with these thresholds defined at the COCO image resolution. Since the datasets used here have different image sizes, we rescale these thresholds with a conversion factor that relates each dataset’s image size to the COCO image resolution, allowing a fair comparison. We refer to this as the COCO converted metric; the conversion factor is used to define the pixel areas for small, medium, and large bounding boxes.
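The COCO converted metric can be sketched as a rescaling of the COCO area thresholds. Because the exact conversion factor is not reproduced here, this sketch assumes, purely for illustration, that the factor is the ratio between a dataset’s image area and a reference COCO image area supplied by the caller; the function name and the reference size are our assumptions.

```python
def size_category(box_w, box_h, img_w, img_h, ref_w, ref_h):
    """Classify a box as 'small', 'medium', or 'large'.

    Uses the COCO area thresholds (32^2 and 96^2 pixels) rescaled by a
    conversion factor. Assumption (hypothetical): the factor is the ratio of
    the image area to a reference image area of ref_w x ref_h pixels.
    """
    factor = (img_w * img_h) / (ref_w * ref_h)
    small_max = 32 ** 2 * factor
    medium_max = 96 ** 2 * factor
    area = box_w * box_h
    if area < small_max:
        return "small"
    if area < medium_max:
        return "medium"
    return "large"
```

For example, with a hypothetical 640 × 640 reference and the 256 × 256 SSD chips, the factor is 0.16, so a box counts as small below about 164 pixels of area and as large from about 1475 pixels.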

This section also details the architecture of the three most recent YOLO models (YOLOv10, YOLOv11, and YOLOv12), which integrate state-of-the-art innovations, as well as the adopted attention mechanisms (BRA, Swin, and the CBAM). By addressing specific challenges in SAR imagery, such as the detection of small objects and complex contextual interference, the methodologies aim to optimize detection accuracy and efficiency. The architectural innovations and their implications for feature representation and multi-scale object detection are explored to highlight the advancements introduced by this work.

3.1. Datasets

3.1.1. SAR Ship Dataset—SSD

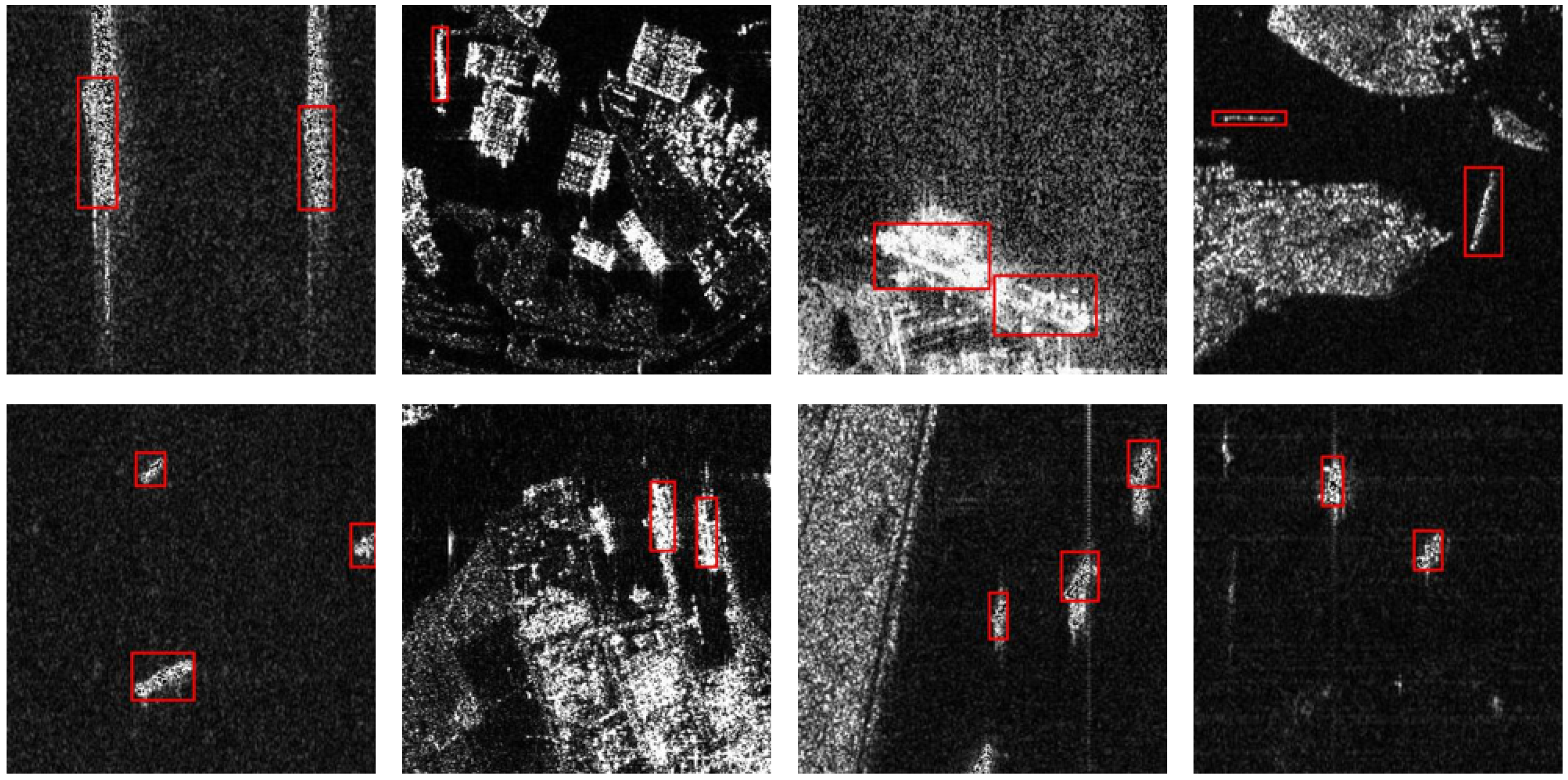

The SSD [10] was constructed using SAR images from two satellite sources: 102 images from Gaofen-3 and 108 images from Sentinel-1. The authors processed these SAR images by cropping subregions containing ships to generate a comprehensive collection of labeled samples. Each subregion was resized or standardized to 256 × 256 pixels, resulting in grayscale images suitable for SAR-specific detection tasks.

The dataset comprises 39,729 labeled ship chips (i.e., patches), each a grayscale image labeled for ship detection. These images capture several real-world challenges: ships are often very small and multiscale, occupying under 20% of a 256 × 256 patch and varying widely in size due to different sensors and incidence-angle distortions; complex backgrounds such as ports, islands, and calm or rough seas produce clutter and false alarms; and inherent SAR speckle noise further obscures ship features, worsening detection. Using the COCO converted metric, we categorize the objects in this dataset as small, medium, or large. Additionally, densely packed or overlapping vessels in coastal scenes increase the likelihood of missed detections, and the limited diversity of the dataset (in imaging modes, resolutions, and polarizations) creates imbalance and generalization issues for models trained on it. Representative examples from the dataset are presented in Figure 1.

The authors of the SSD identify ship size and background complexity as the most significant factors affecting performance in SAR ship detection tasks. Backgrounds in SAR images often include elements that can resemble ships, introducing challenges in distinguishing ships from non-ship objects. Additionally, ship sizes in the dataset can be very small, sometimes represented by only a few pixels (<0.2 of the chip), further complicating detection. These characteristics make the SSD a valuable benchmark for evaluating models designed for SAR-based ship detection.

One of the strengths of this dataset is the variety of ship sizes it offers. This variety arises from the diverse shapes of ships, differences in resolution due to imaging conditions, and the mechanisms used by satellites like Gaofen-3 and Sentinel-1 to capture SAR images. This diversity presents an excellent opportunity to test models for their robustness in detecting ships at multiple scales or to evaluate the performance of sub-models specialized in small, medium, or large ship sizes.

The SSD authors also analyze the ship size distribution through the size of each bounding box relative to the image size, computed from the bounding box dimensions ($w$ and $h$) and the image dimensions ($W$ and $H$). Their analysis reveals that the relative size of bounding boxes is predominantly less than 0.2, indicating that most ships in the dataset are small compared with the image dimensions. This characteristic highlights the importance of models that can effectively handle small-object detection.
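Since the exact relative-size formula is not reproduced here, the sketch below assumes one common definition, the square root of the box-to-image area ratio, $\sqrt{wh/(WH)}$; the SSD authors’ definition may differ, and the function names are hypothetical. It also shows how the fraction of boxes below the 0.2 threshold could be counted.

```python
import math


def relative_size(w, h, img_w, img_h):
    """Assumed definition: square root of the box-to-image area ratio."""
    return math.sqrt((w * h) / (img_w * img_h))


def fraction_below(boxes, img_w, img_h, threshold=0.2):
    """Fraction of (w, h) boxes whose relative size is below the threshold."""
    sizes = [relative_size(w, h, img_w, img_h) for w, h in boxes]
    return sum(s < threshold for s in sizes) / len(sizes)
```

Under this definition, a 64 × 64 box in a 256 × 256 chip has a relative size of 0.25, so a ship must span roughly 51 × 51 pixels or less to fall below 0.2.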

Another significant challenge posed by the SSD is its complex background. SAR images are generated based on the reflection of radar signals, which means that all elements within the scene contribute to the final scatter image, not just the ships. This results in a background that can include patterns resembling ships, such as ocean waves, islands, landmasses, and man-made structures like buildings. These elements can exhibit scattering characteristics similar to those of ships, making the dataset highly complex and demanding for ship detection tasks.

This complexity, while challenging, also offers opportunities for leveraging DCNNs in detection tasks. One of the primary difficulties lies in the small size of ships, which can become even harder to discern after subsampling operations in convolutional layers. Small objects risk being lost in deeper layers of the network due to resolution reduction. However, DCNNs excel at learning intricate patterns and can adapt to complex backgrounds by extracting meaningful features across multiple scales. With the right architectural design, such as incorporating feature pyramids or attention mechanisms, these networks might effectively address small-object detection and background complexity, demonstrating strong performance on SAR-based datasets.

3.1.2. SAR Ship Detection Dataset—SSDD

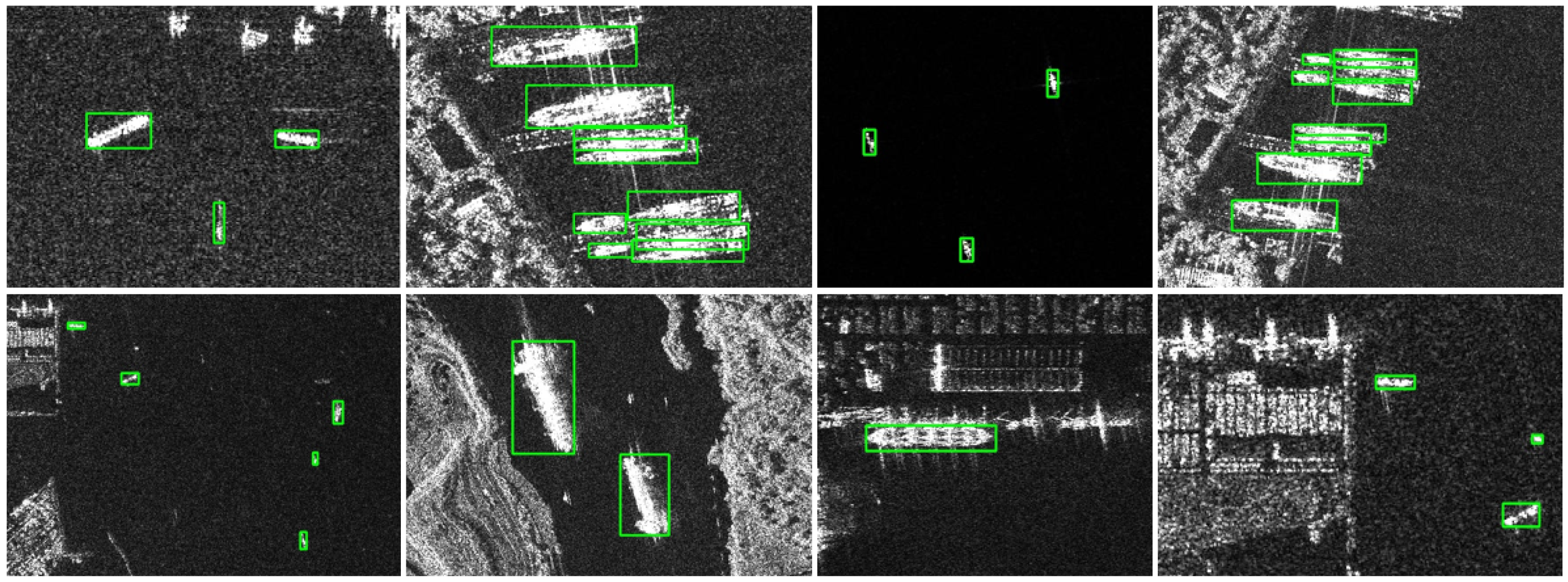

The SSDD [56] was the first SAR ship detection dataset released as a benchmark for researchers. Its 1160 images come from three SAR sensors, RadarSat-2, TerraSAR-X, and Sentinel-1, and feature ships of various sizes and materials. The authors collected images covering diverse sea conditions, in both inshore and offshore scenes.

The dataset comprises 1160 images and 2456 ships, with small ships occupying only a few pixels in each image. The images have different sizes, ranging from 214 × 214 to 668 × 668 pixels. The authors used a three-pixel threshold to decide whether to annotate an object as a ship. A comprehensive review of the SSDD, with details about formats and annotation versions, is presented in [11]. Challenges in SSDD images include small ships with inconspicuous features, densely parallel ships, ships with large scale differences, severe speckle noise, complex sea backgrounds, and various types of sea clutter. Using the COCO converted metric, we categorize the objects in this dataset as small, medium, or large.

Although the SSDD provides horizontal bounding boxes (HBBs), oriented bounding boxes (OBBs), and polygon-segmented (Pseg) objects, we use only the HBB annotations in this work so that the results can be fairly compared with those on the SSD, which has only HBB annotations. The original annotations are in the PASCAL VOC format, with the $x_{\min}$, $y_{\min}$, $x_{\max}$, and $y_{\max}$ corner coordinates of each bounding box, and a simple conversion was made to the YOLO annotation format, with the center coordinates $x_c$ and $y_c$ and the width $w$ and height $h$ of the bounding box, normalized by the image dimensions. Some examples of how ships appear in SSDD images can be found in Figure 2.
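The VOC-to-YOLO conversion mentioned above amounts to a change of box parameterization. A minimal sketch follows, assuming pixel corner coordinates and the YOLO convention of center coordinates and sizes normalized by the image width and height. The helper names and the class index 0 for “ship” are illustrative assumptions, and no 1-pixel offset correction for VOC’s 1-based coordinates is applied.

```python
def voc_to_yolo(xmin, ymin, xmax, ymax, img_w, img_h):
    """PASCAL VOC corners (pixels) -> YOLO (x_c, y_c, w, h), normalized to [0, 1]."""
    x_c = (xmin + xmax) / 2.0 / img_w
    y_c = (ymin + ymax) / 2.0 / img_h
    w = (xmax - xmin) / img_w
    h = (ymax - ymin) / img_h
    return x_c, y_c, w, h


def yolo_label_line(class_id, xmin, ymin, xmax, ymax, img_w, img_h):
    """One line of a YOLO label file: 'class x_c y_c w h'."""
    x_c, y_c, w, h = voc_to_yolo(xmin, ymin, xmax, ymax, img_w, img_h)
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"
```

Because the YOLO values are normalized, the same label remains valid if the image is later resized for training, which is convenient given the varying SSDD image sizes.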

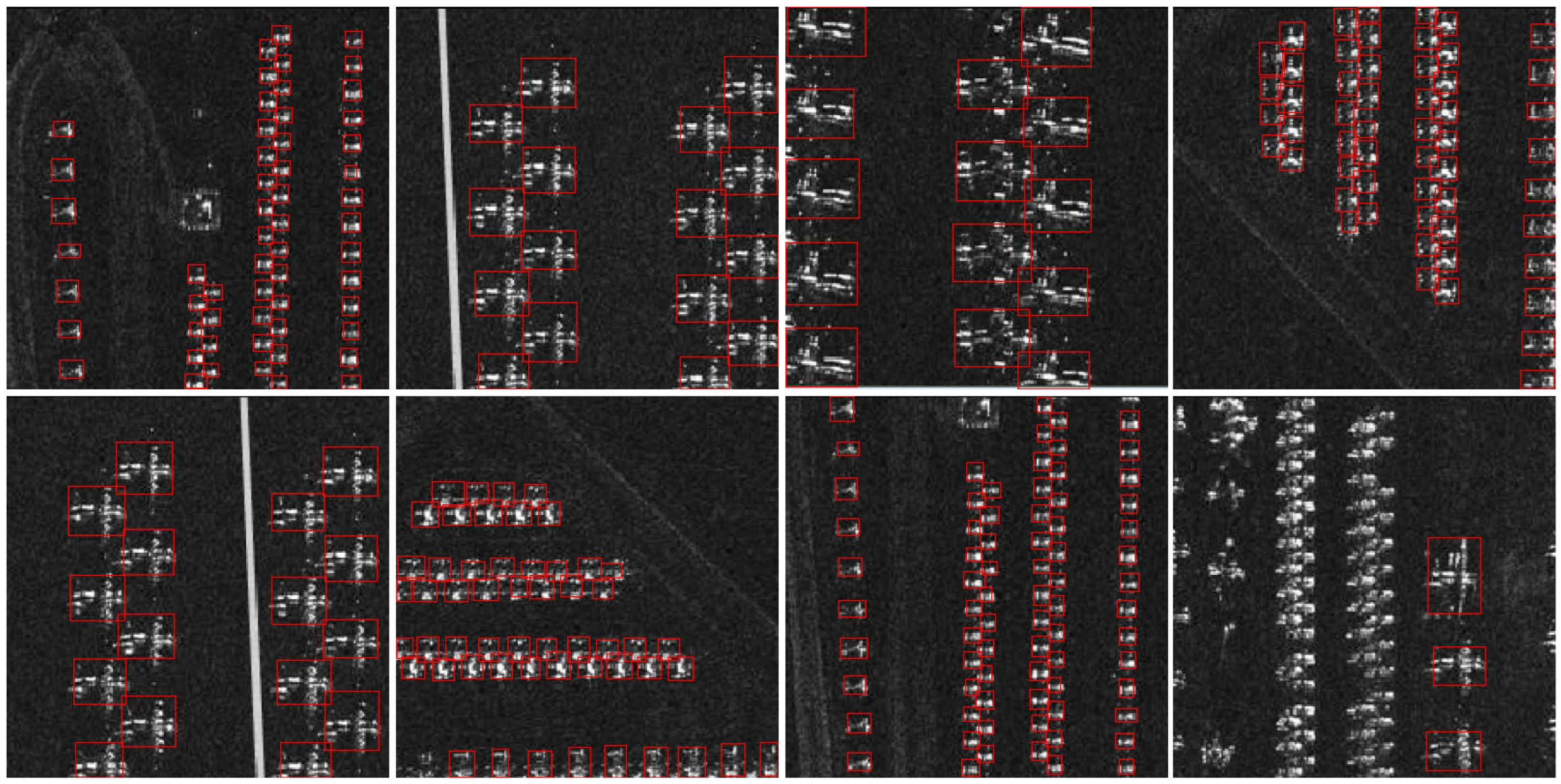

3.1.3. SAR Aircraft Detection Dataset—SADD

In [57], the authors collected and constructed a public SAR dataset of aircraft images. All images were collected from the German TerraSAR-X satellite, with HH polarization and resolutions ranging from 0.5 to 3 m. The dataset is composed of 884 images with one or more aircraft targets per image. The background is relatively complex, including airport runways, airport aprons, and civil aviation airports. The original dataset differs in image size from the version used here: we use a version of the dataset whose images have already been resized.

The authors demonstrated that the object sizes in the dataset are diverse. The original dataset used in [57] was divided into positive and negative targets, with positive targets representing aircraft and negative targets representing background components. Here, for the detection task, we consider only the positive targets (884 images and 7662 targets). Although the authors showed that small objects form the majority of the original dataset with both positive and negative targets, when only the positive targets are used for object detection, more than 60% of the targets are medium-sized. Using the COCO metric, we categorize the objects in this dataset as small, medium, or large. Some examples can be found in Figure 3.

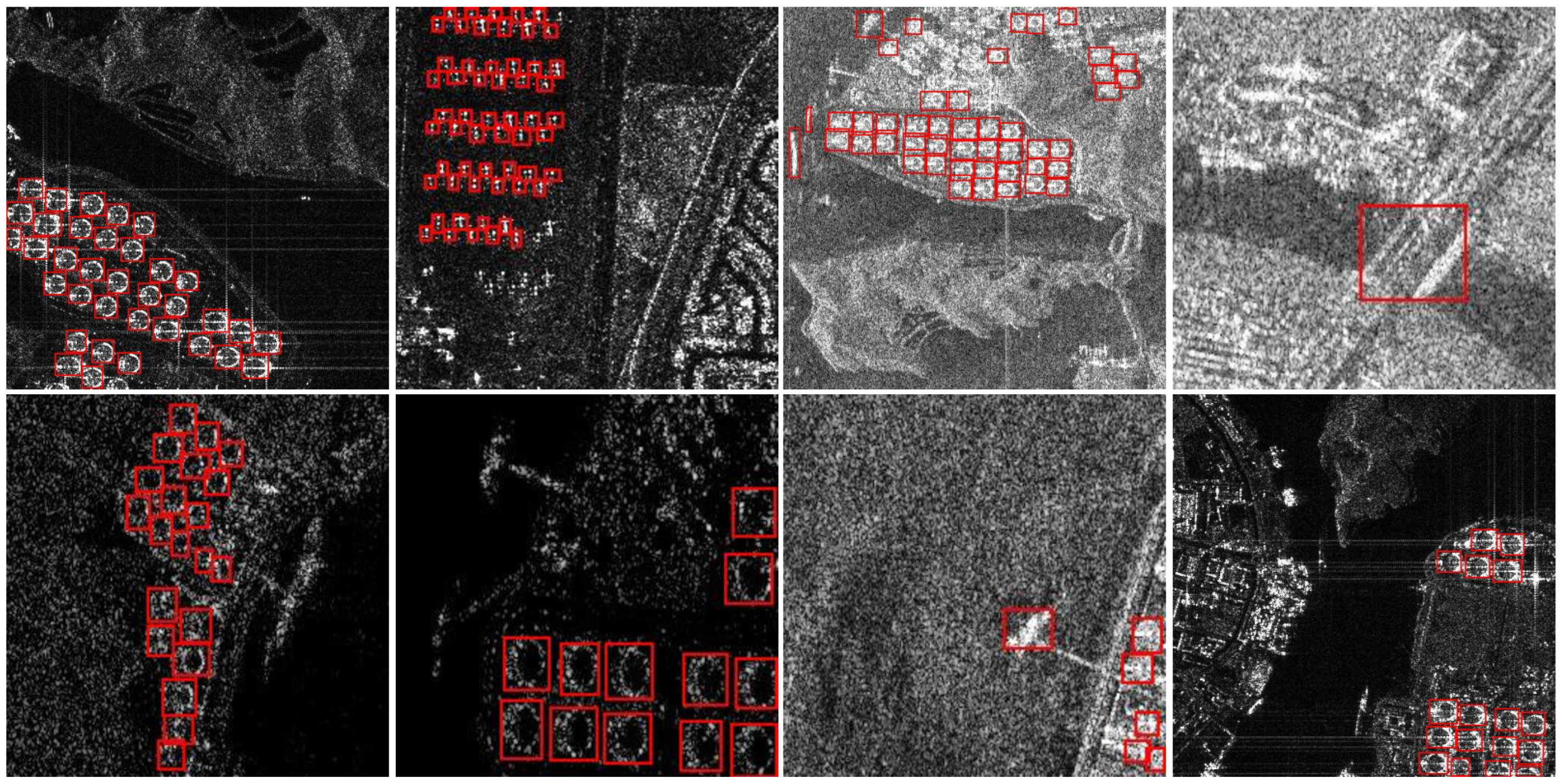

3.1.4. Large-Scale Multi-Class SAR Image—MSAR

In [58], a new SAR dataset is presented, featuring multi-class objects in various scenarios, including airports, ports, inshore areas, islands, offshore areas, and urban areas. The images were collected from the HISEA-1 and Gaofen-3 satellites, and the dataset includes all four polarization modes: HH, HV, VH, and VV.

The dataset consists of four classes, aircraft, oil tanks, bridges, and ships, with a total of 28,449 images. Some examples can be seen in Figure 4. Here, we also used the COCO converted metric to categorize the bounding boxes as small, medium, or large objects.

3.2. Performance Evaluation Metrics

In object detection, model performance is often evaluated using the mean average precision (mAP) metric, which summarizes how well a model detects objects across different categories and confidence thresholds [59,60]. It is a standard measure for benchmarking datasets and object detection models, enabling meaningful comparisons. For instance, the YOLO series uses mAP to track the performance progression of its models across versions, and the COCO benchmark uses mAP extensively in its challenges to rank and compare models. Two mAP variants commonly used in modern benchmarks are mAP@0.5 and mAP@0.5:0.95, which provide insights into the accuracy and robustness of a model’s predictions.

A foundational concept in computing mAP is the intersection over union (IoU), which measures the overlap between the predicted bounding box, $B_p$, and the ground truth bounding box, $B_{gt}$. The IoU is given by

$$\mathrm{IoU} = \frac{|B_p \cap B_{gt}|}{|B_p \cup B_{gt}|},$$

where $|B_p \cap B_{gt}|$ is the area of intersection between the predicted and ground truth boxes, and $|B_p \cup B_{gt}|$ is the area of their union.
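A direct implementation of this IoU definition for axis-aligned boxes is shown below as a minimal sketch; boxes are assumed to be given as (xmin, ymin, xmax, ymax) in pixels, and the function name is our own.

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (xmin, ymin, xmax, ymax)."""
    # Intersection rectangle; zero width/height if the boxes do not overlap.
    iw = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    ih = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = iw * ih
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter  # |A ∪ B| = |A| + |B| - |A ∩ B|
    return inter / union if union > 0 else 0.0
```

For two 10 × 10 boxes offset by 5 pixels in each direction, the intersection is 25 and the union 175, giving an IoU of 1/7, well below the 0.5 threshold used by mAP@0.5.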

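Precision (P) and recall (R) are key metrics used in object detection.

Precision measures the proportion of correctly predicted bounding boxes (true positives, $TP$) among all predicted boxes ($TP + FP$, where $FP$ denotes false positives):

$$P = \frac{TP}{TP + FP}.$$

Recall measures the proportion of correctly predicted bounding boxes ($TP$) among all ground truth boxes ($TP + FN$, where $FN$ denotes false negatives):

$$R = \frac{TP}{TP + FN}.$$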
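To connect these definitions to actual detections, the following sketch counts $TP$, $FP$, and $FN$ for a single image and class using a simplified greedy matching scheme: higher-scoring predictions claim ground truth boxes first, each ground truth can be matched only once, and a match requires IoU ≥ 0.5 by default. This matching rule is an illustrative assumption, not the exact procedure of any particular evaluation toolkit, and the function names are hypothetical.

```python
def iou(a, b):
    """IoU of boxes given as (xmin, ymin, xmax, ymax)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds, gts, iou_thr=0.5):
    """Greedy matching for one image and one class.

    preds: list of (score, box); gts: list of ground truth boxes.
    Returns (precision, recall).
    """
    matched = set()
    tp = 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if j not in matched and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:  # claimed an unmatched ground truth: true positive
            matched.add(best_j)
            tp += 1
    fp = len(preds) - tp  # predictions without a match
    fn = len(gts) - tp    # ground truths never matched
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if gts else 0.0
    return precision, recall
```

Note that a duplicate detection of an already matched ship counts as a false positive, which is why redundant boxes lower precision.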

For each class, a precision–recall (PR) curve is generated by varying the confidence threshold of predictions. The average precision (AP) for a class is the area under the PR curve:

$$AP = \int_0^1 P(R)\, dR.$$

This integral is often approximated numerically using a discrete set of points.
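One common discrete approximation of this integral is all-point interpolation, in which each precision value is replaced by the maximum precision at any equal or higher recall before summing over recall increments. The sketch below assumes that detections have already been labeled as TP or FP (e.g., by IoU matching); other toolkits approximate the integral differently, for example, the 101-point recall sampling used by COCO. The function name is our own.

```python
def average_precision(detections, num_gt):
    """All-point interpolated AP.

    detections: list of (score, is_true_positive) for one class over the dataset.
    num_gt: number of ground truth boxes of that class.
    """
    if num_gt == 0:
        return 0.0
    dets = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in dets:  # sweep the confidence threshold downward
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p  # rectangle under the interpolated PR curve
        prev_r = r
    return ap
```

For three detections labeled TP, FP, TP against two ground truths, the interpolated curve gives AP = 0.5 × 1 + 0.5 × 2/3 = 5/6.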

The mAP@0.5 metric computes the mean of the AP values across all classes, where a prediction is considered correct if its IoU with the ground truth is at least 0.5:

$$\mathrm{mAP@0.5} = \frac{1}{C} \sum_{c=1}^{C} AP_c^{0.5},$$

where $C$ is the total number of object classes.

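The mAP@0.5:0.95 metric provides a more comprehensive evaluation by averaging AP values over multiple IoU thresholds from 0.5 to 0.95, with a step size of 0.05:

$$\mathrm{mAP@0.5{:}0.95} = \frac{1}{T} \sum_{t} \mathrm{mAP@}t = \frac{1}{T} \sum_{t} \frac{1}{C} \sum_{c=1}^{C} AP_c^{t},$$

where $T$ is the number of IoU thresholds; typically, $T = 10$ for thresholds $t \in \{0.50, 0.55, \ldots, 0.95\}$.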
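The aggregation in these two formulas amounts to nested averaging: AP is averaged over the classes at each IoU threshold, and the resulting mAP values are averaged over the $T = 10$ thresholds. A minimal sketch follows; the per-class AP values are supplied by the caller (in practice, from an AP computation at each threshold), and the function names are our own.

```python
def iou_thresholds(start=0.5, stop=0.95, step=0.05):
    """IoU thresholds 0.50, 0.55, ..., 0.95 (T = 10 by default)."""
    count = int(round((stop - start) / step)) + 1
    # Round to two decimals so the thresholds can be used as dictionary keys.
    return [round(start + i * step, 2) for i in range(count)]


def mean_ap(ap_per_class):
    """mAP at one IoU threshold: (1/C) * sum of per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


def map_50_95(ap_table):
    """ap_table maps each IoU threshold to a list of per-class AP values."""
    thresholds = iou_thresholds()
    return sum(mean_ap(ap_table[t]) for t in thresholds) / len(thresholds)
```

With toy per-class values that are the same at every threshold, mAP@0.5:0.95 equals mAP@0.5; in practice, AP drops as the threshold tightens, so mAP@0.5:0.95 is lower.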

mAP@0.5 measures the mean of the average precision (AP) over all classes using a fixed IoU threshold of 0.5, meaning that any detection must overlap with the ground truth by at least 50% to be considered correct. Precision and recall are then calculated over varying confidence thresholds. In contrast, mAP@0.5:0.95 averages the AP across 10 IoU thresholds, ranging from 0.50 to 0.95 in increments of 0.05, providing a more comprehensive evaluation of both detection capability and localization accuracy. While mAP@0.5:0.95 demands tighter box alignment (performance at higher thresholds reflects more precise location matching), mAP@0.5 is lenient in terms of localization (i.e., minor misalignments between predicted and ground truth boxes are tolerated).

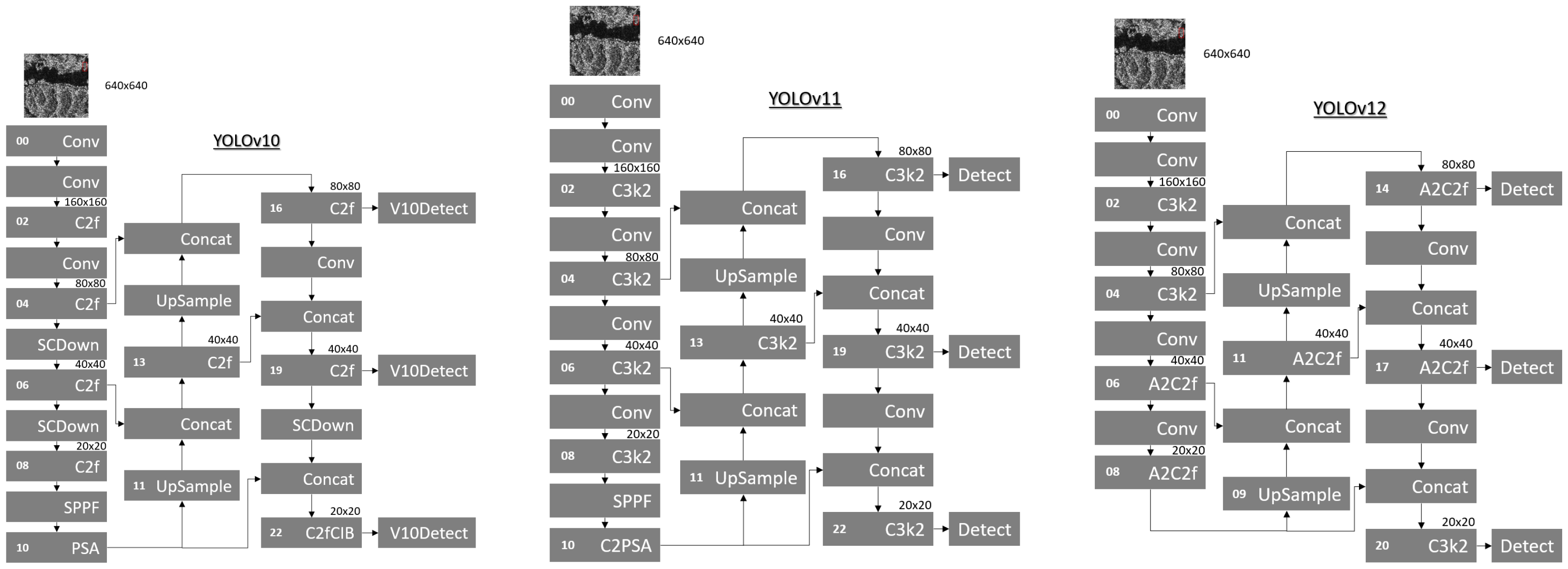

3.3. Architectures of the YOLO Models

This work proposes improving the performance of YOLO models by adding and/or modifying attention layers. At the time of writing, the three most recent YOLO models are YOLOv10 [15], YOLOv11 [61], and YOLOv12 [62]. YOLOv10 is an evolution of YOLOv8, proposed to optimize YOLO’s parameter utilization and efficiency by reducing computational redundancy in its architecture. Beyond its optimizations for efficiency and low-cost accuracy improvement, YOLOv10 also introduces NMS-free (non-maximum suppression-free) post-processing, which addresses the problem of redundant predictions. Version 11 introduces an improved backbone and neck architecture, enhancing feature extraction. In version 12, the authors proposed a new self-attention approach to process large receptive fields more efficiently, as well as a new aggregation module based on the earlier ELAN [63]. According to the performance comparison presented in [62], YOLOv12 outperforms both YOLOv10 and YOLOv11 in both mAP@0.5 and mAP@0.5:0.95. The architectures of the YOLO models employed in this work are shown in Figure 5.
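For context, the non-maximum suppression step that YOLOv10’s NMS-free design avoids is typically a greedy procedure. The following is a generic sketch of standard greedy NMS, not code from YOLOv10 or from any library; the 0.5 IoU threshold is an illustrative default, and the function names are our own.

```python
def iou(a, b):
    """IoU of boxes given as (xmin, ymin, xmax, ymax)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thr=0.5):
    """Greedy NMS: keep the best-scoring box, discard boxes overlapping it
    by more than iou_thr, and repeat. Returns kept indices by descending score."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thr]
    return keep
```

This sequential loop is data-dependent and adds latency after the network’s forward pass, which is part of the motivation for removing it.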

In this work, we specifically adopt the n (nano) versions of YOLOv10, YOLOv11, and YOLOv12 due to their favorable balance between accuracy and computational efficiency. These lightweight models are particularly well suited for SAR ship detection tasks, which require real-time processing on platforms with limited hardware resources, such as drones or embedded systems. This balance makes the YOLO n models an effective and deployable solution for maritime surveillance scenarios, especially where small-object detection and low-latency inference are critical.

In the next subsections, we present all attention mechanisms used to enhance the models’ performance.

3.3.1. Bi-Level Routing Attention—BRA

BRA was introduced as an attention module in the BiFormer model [39]. BRA is a dynamic, query-aware, sparse attention mechanism in which each query attends to a small subset of semantically relevant key–value pairs. This approach filters out irrelevant key–value pairs and applies fine-grained token-to-token attention only in the selected regions. The BRA process consists of three steps, which are described below.

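- Step 1: Region partition and input projection

The two-dimensional (2D) input feature map $X \in \mathbb{R}^{H \times W \times C}$ is divided into $S \times S$ non-overlapping regions, forming $X^r \in \mathbb{R}^{S^2 \times \frac{HW}{S^2} \times C}$. Query, key, and value tensors ($Q$, $K$, $V \in \mathbb{R}^{S^2 \times \frac{HW}{S^2} \times C}$) are derived via linear projections:

$$Q = X^r W^q, \quad K = X^r W^k, \quad V = X^r W^v,$$

where $W^q, W^k, W^v \in \mathbb{R}^{C \times C}$ are the projection weights for the query, key, and value, respectively.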

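- Step 2: Region-to-region routing with a directed graph

Region-level queries and keys ($Q^r, K^r \in \mathbb{R}^{S^2 \times C}$) are obtained by averaging $Q$ and $K$ per region. An adjacency matrix $A^r \in \mathbb{R}^{S^2 \times S^2}$ is computed as

$$A^r = Q^r (K^r)^{\top},$$

representing region-to-region affinity. The affinity graph is pruned by keeping only the top-$k$ connections per region using a routing index matrix, $I^r \in \mathbb{N}^{S^2 \times k}$:

$$I^r = \mathrm{topkIndex}(A^r),$$

where the $i$th row of $I^r$ contains the indices of the $k$ most relevant regions for the $i$th region.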

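- Step 3: Token-to-token attention

Key and value tensors are gathered using $I^r$,

$$K^g = \mathrm{gather}(K, I^r), \quad V^g = \mathrm{gather}(V, I^r),$$

and fine-grained attention is applied:

$$O = \mathrm{Attention}(Q, K^g, V^g) + \mathrm{LCE}(V),$$

where $K^g, V^g \in \mathbb{R}^{S^2 \times \frac{kHW}{S^2} \times C}$, and $\mathrm{LCE}(V)$ is a local context enhancement term.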
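The three steps above can be sketched in NumPy as follows. This is a simplified illustration under our own assumptions (a single head, $H$ and $W$ divisible by $S$, random projection weights, and the LCE term omitted); it is not the BiFormer reference implementation, and the function names are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def partition(x, s):
    """(H, W, C) -> (S^2, HW/S^2, C): split the map into S x S regions."""
    h, w, c = x.shape
    x = x.reshape(s, h // s, s, w // s, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(s * s, (h // s) * (w // s), c)


def merge(xr, s, h, w):
    """Inverse of partition: (S^2, HW/S^2, C) -> (H, W, C)."""
    c = xr.shape[-1]
    x = xr.reshape(s, s, h // s, w // s, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(h, w, c)


def bra(x, wq, wk, wv, s, k):
    """Single-head bi-level routing attention on x of shape (H, W, C).

    Simplifications (assumptions of this sketch): one head, H and W divisible
    by s, and the local context enhancement term LCE(V) omitted.
    """
    h, w, c = x.shape
    xr = partition(x, s)                        # Step 1: X^r
    q, key, v = xr @ wq, xr @ wk, xr @ wv       # Q, K, V
    qr, kr = q.mean(axis=1), key.mean(axis=1)   # Step 2: region-level Q^r, K^r
    ar = qr @ kr.T                              # adjacency A^r, (S^2, S^2)
    ir = np.argsort(-ar, axis=1)[:, :k]         # routing indices I^r, (S^2, k)
    n = xr.shape[1]
    kg = key[ir].reshape(s * s, k * n, c)       # Step 3: gather K^g ...
    vg = v[ir].reshape(s * s, k * n, c)         # ... and V^g
    scores = np.einsum("rqc,rtc->rqt", q, kg) / np.sqrt(c)
    out = np.einsum("rqt,rtc->rqc", softmax(scores), vg)
    return merge(out, s, h, w)
```

With $k = S^2$, every region is routed to every other region and the computation reduces to ordinary global self-attention; a smaller $k$ restricts each query to the tokens of its $k$ most affine regions, which is the source of BRA’s sparsity.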

BRA is effective for small targets and dense occlusion [46]. For SAR ship images, where small objects cluster in specific areas, BRA improves detection accuracy [43]. As shown in [39], BRA preserves fine-grained details crucial for small objects. BRA also mitigates the effects of complex backgrounds and noise in remote sensing images (see Figure 1 for examples), thereby enhancing the precision of small-object detection [42].

BRA’s placement in YOLO (e.g., during upsampling, before downsampling, or during feature fusion) affects small-object detection. Across different configurations, BRA enhances detection by combining global attention information, which captures the overall structure and global semantics, with local attention details [42]. Additionally, it improves computer vision tasks by capturing both structural and detailed features [64].

3.3.2. Swin Transformer—Swin

The Swin Transformer [52] introduces a hierarchical vision Transformer architecture using shifted windows. Its core innovation lies in computing self-attention within non-overlapping local windows that shift between layers, enabling efficient cross-window communication while keeping the computational complexity linear in image size. This design is particularly advantageous for SAR ship detection, where modeling multi-scale features and complex backgrounds is essential.

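The Swin Transformer block builds upon the standard Transformer block [33] but replaces the multi-head self-attention (MSA) module with two novel components:

- Window-based multi-head self-attention (W-MSA), which computes self-attention within non-overlapping local windows.
- Shifted-window multi-head self-attention (SW-MSA), which computes self-attention within windows shifted relative to those of the preceding block.

As shown in Figure 6, each block contains consecutive W-MSA and SW-MSA modules, with LayerNorm (LN) applied before each MSA and Multi-Layer Perceptron (MLP) operation.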

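The processing within two consecutive Swin blocks follows these steps:

$$\hat{\mathbf{z}}^{l} = \text{W-MSA}\left(\mathrm{LN}\left(\mathbf{z}^{l-1}\right)\right) + \mathbf{z}^{l-1},$$

$$\mathbf{z}^{l} = \mathrm{MLP}\left(\mathrm{LN}\left(\hat{\mathbf{z}}^{l}\right)\right) + \hat{\mathbf{z}}^{l},$$

$$\hat{\mathbf{z}}^{l+1} = \text{SW-MSA}\left(\mathrm{LN}\left(\mathbf{z}^{l}\right)\right) + \mathbf{z}^{l},$$

$$\mathbf{z}^{l+1} = \mathrm{MLP}\left(\mathrm{LN}\left(\hat{\mathbf{z}}^{l+1}\right)\right) + \hat{\mathbf{z}}^{l+1},$$

where $\hat{\mathbf{z}}^{l}$ and $\mathbf{z}^{l}$ denote the intermediate features after the (S)W-MSA and MLP operations at block $l$, respectively.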
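The residual structure of these four equations can be sketched as follows. This is an illustrative NumPy sketch under stated assumptions: `w_msa`, `sw_msa`, `mlp1`, and `mlp2` are hypothetical placeholder callables standing in for the learned sublayers, and the LayerNorm omits its learnable scale and shift.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    """LayerNorm over the channel (last) axis, without learnable affine parameters."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def swin_block_pair(z, w_msa, sw_msa, mlp1, mlp2):
    """Two consecutive pre-norm Swin blocks; z has shape (tokens, C)."""
    z_hat = w_msa(layer_norm(z)) + z        # \hat{z}^l
    z = mlp1(layer_norm(z_hat)) + z_hat     # z^l
    z_hat = sw_msa(layer_norm(z)) + z       # \hat{z}^{l+1}
    return mlp2(layer_norm(z_hat)) + z_hat  # z^{l+1}
```

Because every sublayer is wrapped in a residual connection, a block whose sublayers output zeros passes its input through unchanged.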

The key innovation involves alternating between regular and shifted window partitions:

- Regular partitioning: At layer $l$, an $8 \times 8$ feature map is divided into non-overlapping $M \times M$ windows (e.g., $M = 4$ gives $4 \times 4$ windows and thus four partitions).
- Shifted partitioning: At layer $l + 1$, the windows are displaced by $\left(\lfloor M/2 \rfloor, \lfloor M/2 \rfloor\right)$ pixels (e.g., $(2, 2)$ pixels when $M = 4$).

This shifting strategy creates new windows that straddle the window boundaries of the previous layer, enabling cross-window connections while keeping the computation within each layer non-overlapping. As demonstrated in [52], this approach efficiently models long-range dependencies without quadratic computational complexity.
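The regular and shifted partitions can be illustrated on the 8 × 8 example. The sketch below assumes an (H, W, C) NumPy array and follows the cyclic-shift strategy described in [52], realized here with `np.roll`; the attention mask that Swin applies to tokens wrapped around by the shift is omitted, and the function names are hypothetical.

```python
import numpy as np


def window_partition(x, m):
    """Split an (H, W, C) map into non-overlapping M x M windows -> (num_windows, M*M, C)."""
    h, w, c = x.shape
    assert h % m == 0 and w % m == 0, "H and W must be multiples of M"
    x = x.reshape(h // m, m, w // m, m, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, m * m, c)


def shifted_window_partition(x, m):
    """Cyclically shift the map by floor(M/2) toward the top-left, then partition."""
    shift = m // 2
    shifted = np.roll(x, shift=(-shift, -shift), axis=(0, 1))
    return window_partition(shifted, m)
```

With $M = 4$, the first shifted window starts at original pixel (2, 2), so it covers parts of all four regular windows.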

The architecture processes images through four stages, with progressive downsampling (resulting in a 2× reduction in resolution between stages). Each stage contains multiple Swin Transformer blocks that collectively extract local features within windows (W-MSA), model cross-window dependencies (SW-MSA), and maintain spatial hierarchy for multi-scale ship detection. This hierarchical design makes Swin particularly effective for SAR imagery, where ships appear at vastly different scales and require context integration across complex maritime backgrounds.

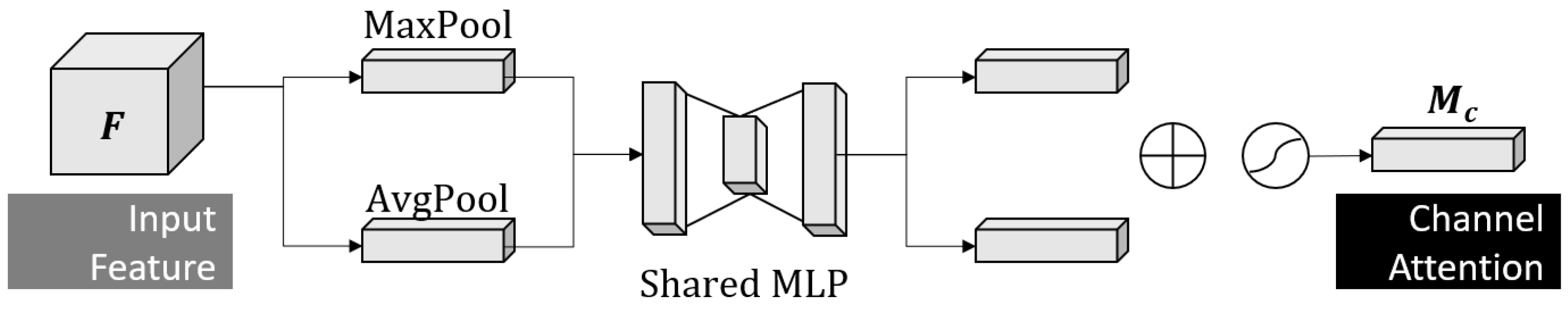

3.3.3. Convolutional Block Attention Module—CBAM

The Convolutional Block Attention Module (CBAM) [47] addresses key challenges in SAR ship detection by sequentially refining features along the channel and spatial dimensions. This lightweight module enhances discriminative feature learning while suppressing irrelevant background clutter, which is crucial for detecting small ships in complex maritime environments.

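The CBAM processes an input feature map $\mathbf{F} \in \mathbb{R}^{C \times H \times W}$ through two complementary attention submodules: (i) channel attention, which identifies what features are meaningful, and (ii) spatial attention, which locates where informative regions exist. The sequential refinement is expressed as

$$\mathbf{F}' = \mathbf{M}_c(\mathbf{F}) \otimes \mathbf{F}, \qquad \mathbf{F}'' = \mathbf{M}_s(\mathbf{F}') \otimes \mathbf{F}',$$

where ⊗ denotes element-wise multiplication. Channel attention values are broadcast along the spatial dimensions, while spatial attention values are broadcast across channels. This is represented in Figure 7.

The channel attention submodule emphasizes semantically important feature channels. As shown in Figure 8, it generates channel-wise descriptors through parallel average- and max-pooling operations:

$$\mathbf{M}_c(\mathbf{F}) = \sigma\left(\mathrm{MLP}\left(\mathrm{AvgPool}(\mathbf{F})\right) + \mathrm{MLP}\left(\mathrm{MaxPool}(\mathbf{F})\right)\right).$$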

Some key characteristics of this module are (i) shared weights: the same MLP (a single hidden layer with ReLU) processes both pooled features; (ii) sigmoid activation: $\sigma$ produces the channel weights $\mathbf{M}_c \in \mathbb{R}^{C \times 1 \times 1}$; and (iii) feature refinement: channels containing ship signatures are emphasized while noise is suppressed.
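The channel attention submodule can be written in a few lines of NumPy. This is a sketch assuming a single (C, H, W) feature map and a bias-free shared MLP with hypothetical weight matrices `w0` (shape C/r × C) and `w1` (shape C × C/r) for a reduction ratio r; it is not the reference CBAM code.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(f, w0, w1):
    """f: (C, H, W); w0: (C//r, C); w1: (C, C//r). Returns M_c with shape (C, 1, 1)."""
    avg = f.mean(axis=(1, 2))  # average-pooled descriptor, shape (C,)
    mx = f.max(axis=(1, 2))    # max-pooled descriptor, shape (C,)

    def shared_mlp(v):
        # The same weights process both descriptors; one ReLU hidden layer
        return w1 @ np.maximum(w0 @ v, 0.0)

    return sigmoid(shared_mlp(avg) + shared_mlp(mx)).reshape(-1, 1, 1)
```

Multiplying `channel_attention(f, w0, w1) * f` broadcasts one weight per channel across all spatial positions, which gives $\mathbf{F}'$.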

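The spatial attention submodule focuses on spatially significant regions, which is particularly important for small ships in SAR imagery. It combines channel-pooled features:

$$\mathbf{M}_s(\mathbf{F}') = \sigma\left(f^{7 \times 7}\left(\left[\mathrm{AvgPool}(\mathbf{F}'); \mathrm{MaxPool}(\mathbf{F}')\right]\right)\right),$$

where AvgPool and MaxPool pool along the channel dimension (each yielding a map in $\mathbb{R}^{1 \times H \times W}$), $[\cdot\,;\cdot]$ stacks the two maps along the channel dimension, and $f^{7 \times 7}$ is a convolutional filter integrating a spatial context of $7 \times 7$ size. This submodule is illustrated in Figure 9.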
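Similarly, a minimal NumPy sketch of the spatial submodule is given below, assuming a (C, H, W) input and a hypothetical (2, 7, 7) kernel. The convolution is written as an explicit zero-padded cross-correlation (as deep learning frameworks implement convolution) so that the output keeps the H × W size; bias terms are omitted.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def spatial_attention(f, kernel):
    """f: (C, H, W); kernel: (2, kh, kw), e.g. (2, 7, 7). Returns M_s with shape (1, H, W)."""
    pooled = np.stack([f.mean(axis=0), f.max(axis=0)])  # (2, H, W): [AvgPool; MaxPool]
    kh, kw = kernel.shape[1:]
    ph, pw = kh // 2, kw // 2
    padded = np.pad(pooled, ((0, 0), (ph, ph), (pw, pw)))  # zero padding keeps H x W
    h, w = f.shape[1:]
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            # Sum over both pooled maps within the kernel window
            out[i, j] = np.sum(padded[:, i:i + kh, j:j + kw] * kernel)
    return sigmoid(out)[None]
```

In the full module, the input here would be the channel-refined map $\mathbf{F}'$, and the result is broadcast across channels when computing $\mathbf{F}''$.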

As detailed in the original CBAM paper [47], the module processes input features through two complementary attention mechanisms: channel attention (focusing on “what” is salient) and spatial attention (focusing on “where” salient features reside). Through experimentation, the authors determined that arranging these modules sequentially, with channel attention followed by spatial attention, yielded better performance than both a parallel arrangement and the spatial-first ordering. Consequently, this work adopts the CBAM implementation exactly as originally proposed, with channel attention preceding spatial attention in the processing chain.

4. Results and Discussions

This section presents the results obtained by training the original YOLO models on the SAR image datasets presented in Section 3.1. We proposed modifications to the YOLOv11 and YOLOv12 architectures, including the addition of new attention blocks and the replacement of the original attention layers. The attention mechanisms BRA, Swin, and the CBAM, described in Section 3.3.1, Section 3.3.2, and Section 3.3.3, respectively, were evaluated in these configurations. Unless stated otherwise, all models presented here were trained from scratch on SAR images annotated with horizontal bounding boxes, without relying on any pre-trained weights.

4.1. Environmental Setup

All models tested and presented in this work were trained and analyzed on a machine running Ubuntu 22.04.2 LTS, equipped with an AMD Ryzen Threadripper 3960X 24-core processor at 3.8 GHz, 128 GB of RAM, 6 TB of storage, and an NVIDIA GeForce RTX 4090 GPU with 24 GB of graphics memory. For training, we used 500 epochs with an early-stopping patience of 100 epochs, a batch size of 16, and 2 workers. The input image size was set to the default 640 × 640 pixels. All datasets, including the sampled subsets, were split into training, validation, and test sets using a 7:2:1 ratio. The metrics used to compare the models are precision (P), recall (R), mAP@0.5, mAP@0.5-0.95, computational cost in floating-point operations (reported in GFLOPS), and frames per second (FPS). FPS is reported only when the full dataset is used. Python (version 3.11.11) was used for all tasks, including dataset manipulation, random image selection, splitting the dataset into training, validation, and test subsets, training the models, and making predictions.
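The exact procedure used for the random 7:2:1 split is not described, so the following is only a minimal sketch, assuming a list of image identifiers and a fixed seed (both hypothetical), of a reproducible shuffle-and-split in plain Python. Rounding choices change the exact subset sizes: for 4080 images this sketch yields 2856/816/408, whereas the sampled subset in Section 4.3.1 used 2856/814/410.

```python
import random


def split_dataset(items, ratios=(0.7, 0.2, 0.1), seed=0):
    """Shuffle sample IDs reproducibly and split them into train/val/test lists."""
    assert abs(sum(ratios) - 1.0) < 1e-9, "ratios must sum to 1"
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # local RNG: no global state touched
    n_train = round(len(shuffled) * ratios[0])
    n_val = round(len(shuffled) * ratios[1])
    # The test set takes the remainder so that no sample is dropped
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])
```

For example, `train_ids, val_ids, test_ids = split_dataset(image_ids)` returns disjoint lists that always cover every input ID.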

4.2. Performance Evaluation on the SAR Ship Dataset

Table 2 compares the baseline YOLOv10n, v11n, and v12n, all of which were trained on the SSD. We first conducted training on the original models to understand how they adapt and perform in complex SAR images. The results were used to determine which models would undergo the addition or replacement of layers with attention mechanisms to enhance their performance. The table illustrates the evolution in performance from YOLOv10 to YOLOv12, demonstrating consistency with the original publications and evaluations in benchmark datasets such as COCO.

The YOLOv11n model has fewer layers than the other two models (v11: 181, v10: 385, and v12: 465). Even so, it outperforms YOLOv10n with fewer than half as many layers. YOLOv12n outperforms both YOLOv10n and YOLOv11n despite having the most layers, yet its computational cost is almost equal to that of v11 (6.5 vs. 6.4 GFLOPS). This indicates that layer count alone does not determine a model's performance.

Table 3 summarizes the performance of various models on the SAR Ship dataset, as reported by multiple authors since the dataset’s release in 2019. The models listed in the table, ranging from earlier works to more recent methods up to 2022, were originally grouped in [8]. Because some works do not name their models, only the reference number is given in the Model column for those entries. In addition, four very recent models have been incorporated into the comparison to provide a fair assessment against the latest technologies and baseline methods.

The table includes key evaluation metrics such as precision, recall, mAP@0.5, and mAP@0.5:0.95, as well as each study’s data split (Train/Val/Test). Note that several earlier works did not report precision and recall, and many provided only mAP@0.5. The mAP@0.5:0.95 metric is available only for the models trained in our work and by [25]. Because the reported metrics are inconsistent across studies, mAP@0.5 is used as the primary standard for comparison.
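To make the difference between the two metrics concrete, the sketch below computes the intersection over union (IoU) of two horizontal boxes and lists the thresholds at which a prediction would count as a match. It assumes the COCO convention of ten IoU thresholds from 0.50 to 0.95 in steps of 0.05 for mAP@0.5:0.95, and the helper names are hypothetical. It does not compute average precision itself, which additionally requires ranking detections by confidence and integrating the precision–recall curve.

```python
def iou(box_a, box_b):
    """IoU of two horizontal boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# COCO-style thresholds averaged by mAP@0.5:0.95: 0.50, 0.55, ..., 0.95
THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def matched_thresholds(pred, gt):
    """Thresholds at which `pred` would be a true positive for `gt`."""
    overlap = iou(pred, gt)
    return [t for t in THRESHOLDS if overlap >= t]


ground_truth = (0, 0, 10, 10)
prediction = (0, 0, 10, 8)  # covers 80% of the ship's extent
print(iou(prediction, ground_truth), matched_thresholds(prediction, ground_truth))
```

Here the prediction counts as correct at 0.5 but at only seven of the ten thresholds, which is why mAP@0.5:0.95 is consistently lower than mAP@0.5.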

Table 3 indicates that model performance on the SSD has increased steadily since the dataset was introduced. Some models were originally trained using 70% of the dataset for training, whereas others used 80%. Models marked with an asterisk (*) in Table 3 did not specify their data division strategy, so we assume they followed the original division of the SSD.

Only one previously published model using a 7:2:1 split exceeded 97% mAP@0.5 [27]. The only other model above 97% mAP@0.5 used an 8:0:2 split (i.e., 80% of the dataset for training), so comparing against it is not fair. Notably, with a 7:2:1 split, all three original YOLO models surpassed the state of the art, with YOLOv12n performing best among them.

4.3. Ablation Study

This section presents in-depth ablation studies of the YOLO models, analyzing various configurations, their performance, and the corresponding trade-offs. The ablation studies provide insights into selecting the optimal model configuration and explore alternative setups that are effective for small-object detection.

The ablation tests focus on YOLOv11n and YOLOv12n because they are the most accurate and computationally efficient baselines and showed the most promise for improvement in initial experiments, in line with our goal of pioneering optimizations for the latest YOLO architectures in SAR detection. YOLOv10n’s lower performance and higher computational cost made it a less compelling candidate for the intensive ablation phase.

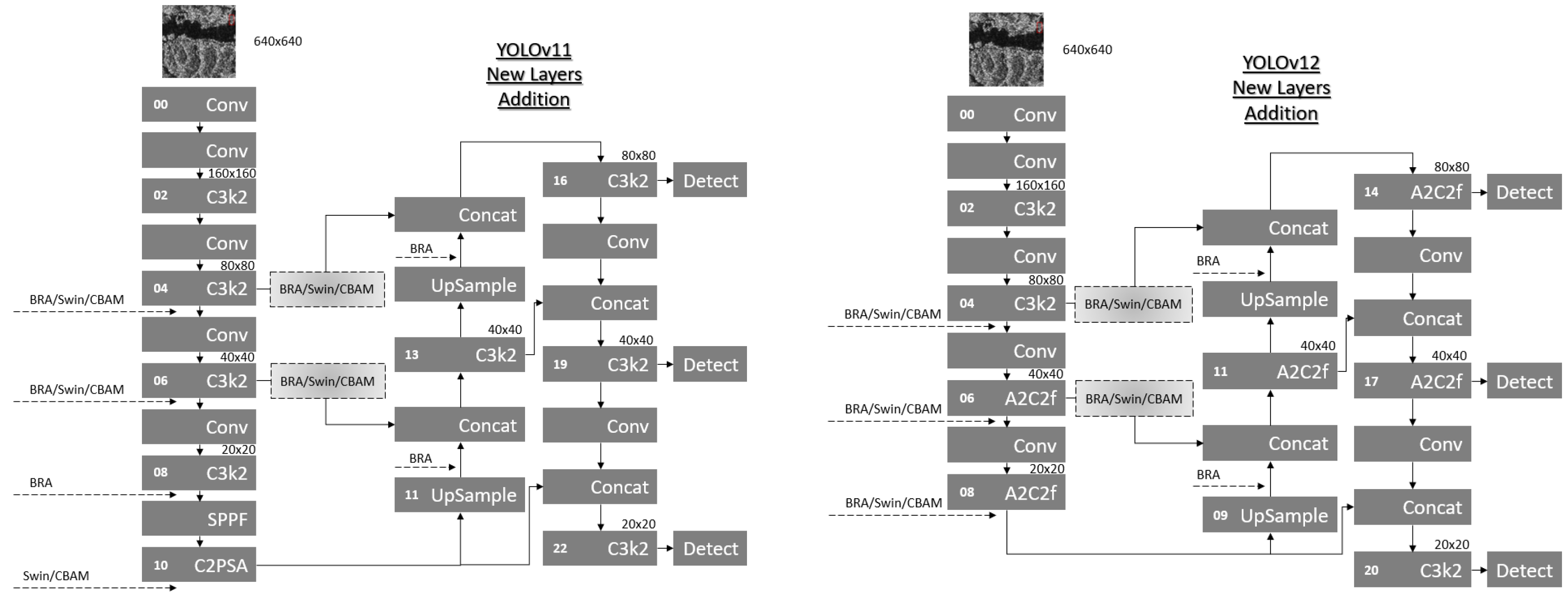

4.3.1. Ablation Study on Attention Layer Positioning in YOLO Architecture—Adding Layers

In this study, we systematically investigate different insertion points for BRA, Swin, and the CBAM within the YOLOv11n and YOLOv12n architectures. We first ran these experiments only on YOLOv12n, which performed best among the original architectures. For this task, we used a subset of the original SAR Ship dataset comprising 2856 images for training, 814 for validation, and 410 for testing. This reduced dataset accelerated experimentation while still providing sufficient data to evaluate the impact of different attention-module positioning strategies.

We added attention layers before selected Conv layers in the backbone and before and after the upsampling modules in the neck. Following the rationale presented in [46], we conducted the same exploration to determine the optimal position for the new attention modules. The best layer and position cannot be defined in advance: although attention at mid-stage feature maps helps capture broader context for object localization, the size of the objects in the images may make a different insertion layer more effective.

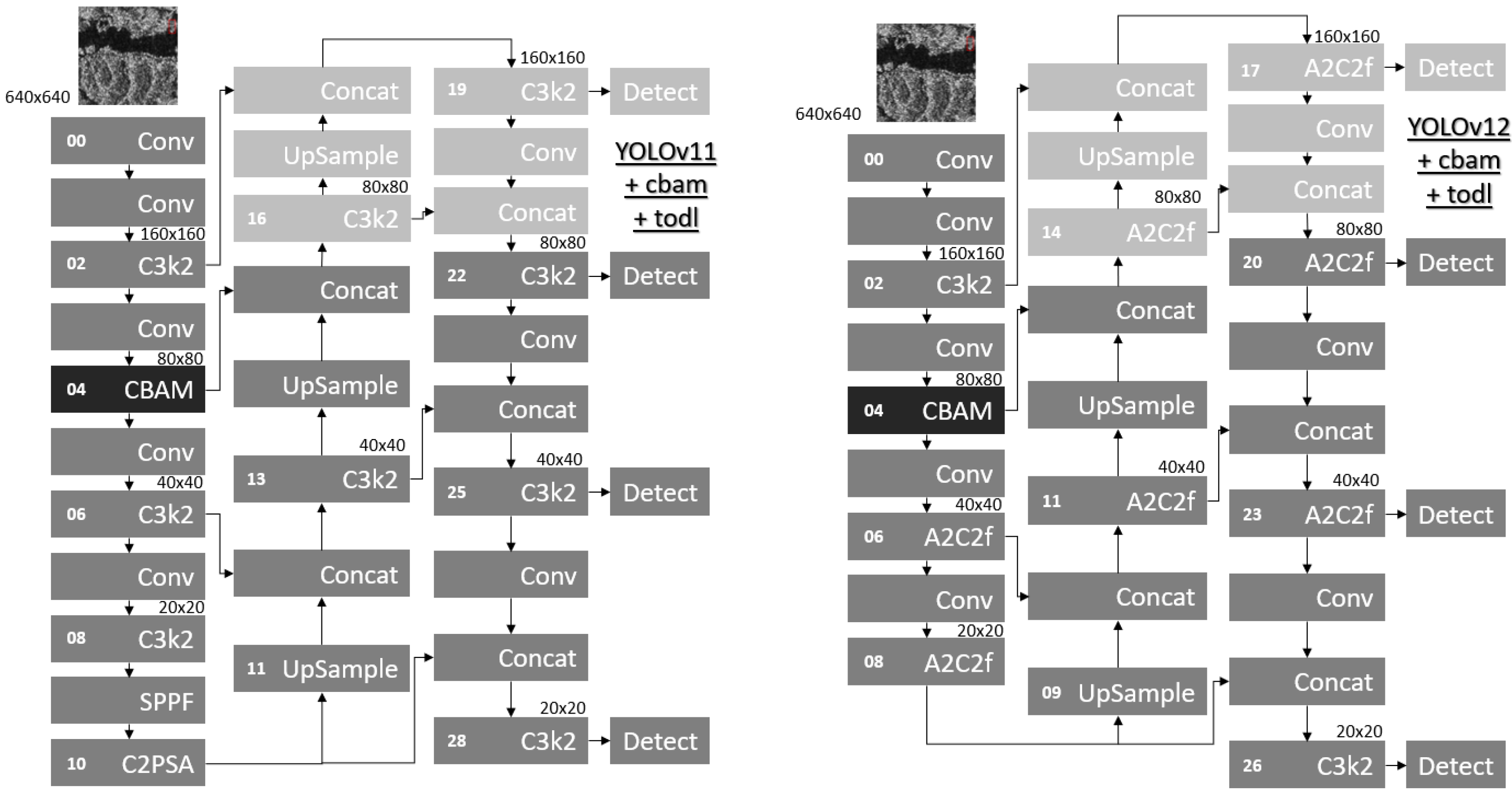

For YOLOv11n, as shown in Figure 10, attention modules were added before the following layers: (i) BRA modules before layers 5, 7, 9, 12, and 15, and (ii) CBAM/Swin modules before layers 5, 7, and 11. For YOLOv12n, (i) BRA modules were added before layers 5, 7, 9, 10, and 13, and (ii) CBAM/Swin modules before layers 5, 7, and 9.

We also evaluated a fusion path from the backbone to the neck. As discussed in [72], feature fusion in the neck can improve object detection performance across multiple scales. Because fused feature maps contain redundant information, the authors suggest incorporating attention modules into the fusion process.

In both models, as shown in Figure 10, features from layers 4 and 6 were fused into the concat layers in the neck (layers 12 and 15 in v11, and layers 10 and 13 in v12). Every insertion was evaluated individually: only one attention module was added at a time, and the resulting model was trained. Afterward, that module was removed and inserted at another position.
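The one-module-at-a-time protocol can be expressed as an enumeration over (module, position) pairs. In this plain-Python sketch, a model is represented only by a hypothetical list of layer names (`L0`, `L1`, and so on, placeholders rather than real YOLO modules), the insertion positions are those listed above for YOLOv11n and YOLOv12n, and the fusion-mode variants are not included.

```python
from typing import Dict, List, Tuple

# "Insert before layer i" positions as listed in the text
YOLOV11N_INSERTIONS = {"BRA": [5, 7, 9, 12, 15], "CBAM": [5, 7, 11], "Swin": [5, 7, 11]}
YOLOV12N_INSERTIONS = {"BRA": [5, 7, 9, 10, 13], "CBAM": [5, 7, 9], "Swin": [5, 7, 9]}


def insert_before(layers: List[str], index: int, module: str) -> List[str]:
    """Return a new layer list with `module` placed before position `index`."""
    return layers[:index] + [module] + layers[index:]


def single_insertion_variants(
    layers: List[str], positions: Dict[str, List[int]]
) -> Dict[Tuple[str, int], List[str]]:
    """One variant per (module, position); the base list is never modified."""
    return {
        (module, pos): insert_before(layers, pos, module)
        for module, pos_list in positions.items()
        for pos in pos_list
    }


# Hypothetical placeholder names standing in for the real YOLO layers
base_layers = [f"L{i}" for i in range(24)]
variants = single_insertion_variants(base_layers, YOLOV11N_INSERTIONS)
for (module, pos), layers in variants.items():
    print(f"{module} before layer {pos}: {len(layers)} layers")
```

Each variant would then be trained and evaluated separately, so the 11 insertion points per model correspond to 11 independent training runs.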

Table 4 presents only the best result for each attention module inserted into YOLOv12n (all results are available on the GitHub page; see the URL in Section 1).

Table 4 indicates that adding attention modules does not improve the model's performance. Among the modified models, the CBAM variant achieved the highest mAP@0.5; however, it only matched the baseline on this metric and performed worse on the others.

Additionally, we conducted the same performance test using YOLOv11n.

Table 5 presents only the best result for each attention module inserted into YOLOv11n (all results are available on the GitHub page; see the URL in Section 1).

For YOLOv11n, the modified models outperformed the baseline. The best BRA and CBAM variants achieved the same mAP@0.5, but the BRA variant achieved a better mAP@0.5-0.95 value (see Table 5). The model using Swin in fusion mode achieved the highest performance among all tested models in both versions, v11 and v12.

Because SAR images depict complex environments, the sampled dataset cannot replicate the full dataset's level of complexity. Therefore, we next tested the new configurations on the entire dataset, retraining only the models that achieved the best performance on the sampled dataset. Although more data generally leads to better results, the full dataset also introduces more complexity, and all models performed the same as or worse than the results presented in Table 4 and Table 5.

For YOLOv12n, using the full SSD, we did not find any combination of added layers that surpassed the original model’s mAP@0.5. For YOLOv11n, adding BRA in the fusion improved mAP@0.5 over the original YOLOv11n’s 0.976; even so, the original YOLOv12n still performed best, at 0.978. The inference speed of both models also decreased when layers were added. For YOLOv12n, the worst case was the addition of BRA, which reduced the frame rate from 112 to 96 FPS. For YOLOv11n, the largest reduction, from 164 to 116 FPS, also occurred when BRA was added. These results can be found in Table 6 and Table 7.

The integration of multiple distinct attention modules into a model, particularly through the sequential addition of layers, can result in a phenomenon known as the “non-focusing effect” [73]. This effect occurs when different attention mechanisms do not complement each other, potentially degrading rather than improving model performance. The results demonstrate that simply adding modules such as BRA, Swin, or the CBAM to the YOLOv11n and YOLOv12n models did not yield consistent performance gains and, in some cases, even reduced performance.

Because adding layers often failed to improve performance while inflating computational costs, we next tested both models with some of the original attention modules replaced by the three analyzed ones. We hypothesize that replacing layers can enhance performance by refining critical feature-extraction pathways without sacrificing efficiency, while avoiding the non-focusing effect caused by conflicting attention hierarchies. This approach may optimize architectures for domain-specific challenges, such as detecting small SAR ships.

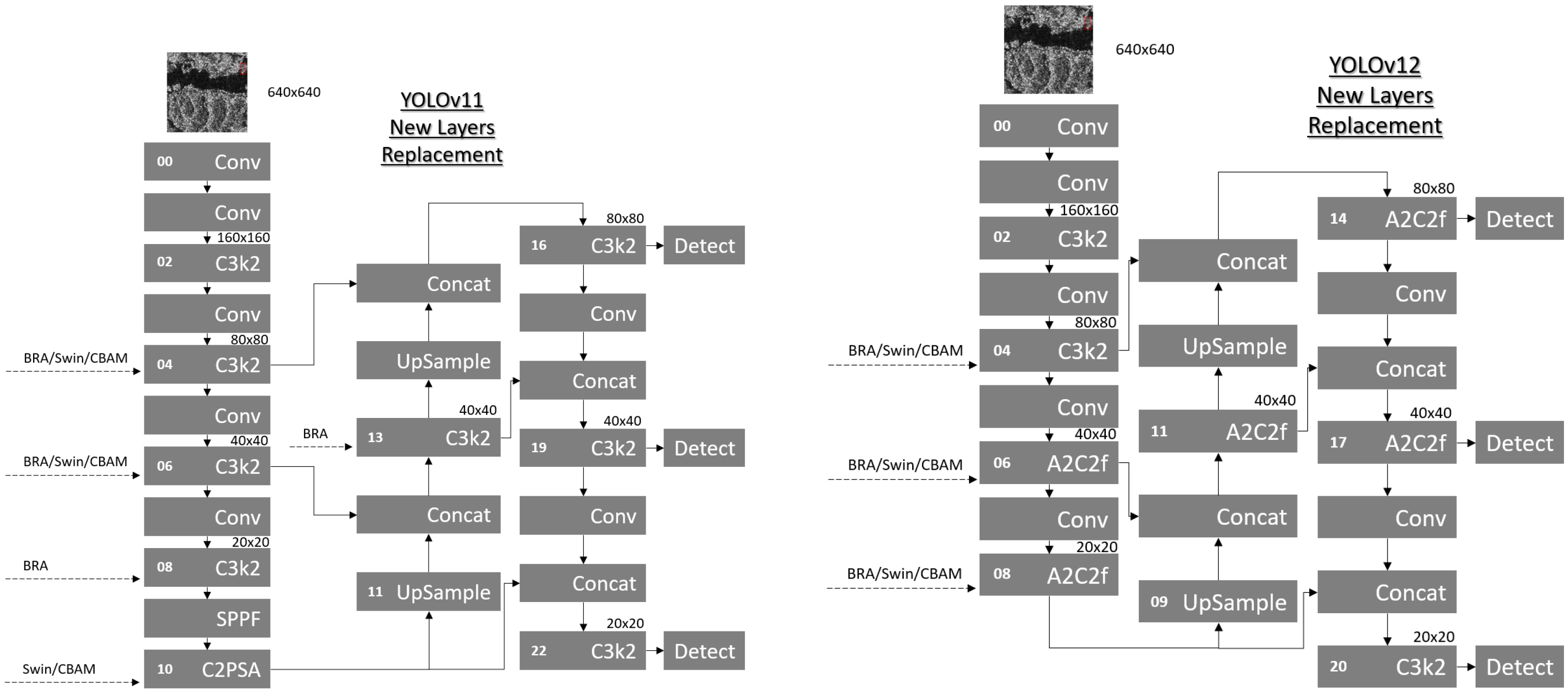

4.3.2. Ablation Study on Attention Layer Positioning in YOLO Architecture—Replacing Layers

Although YOLOv11n improved its performance with the addition of one BRA layer, it still performed worse than the original YOLOv12n. We thus decided to replace some layers instead of just adding them. In this section, we conducted all training with the full dataset.

For YOLOv11, the attention modules replaced the following layers: (i) BRA modules replaced layers 4, 6, 8, and 13, and (ii) the CBAM/Swin modules replaced layers 4, 6, and 10. For YOLOv12, the BRA, CBAM, and Swin attention modules replaced layers 4, 6, and 8.

Figure 11 shows all the combinations for both models. Only one module was replaced at a time: the original module at a given position was replaced with a new attention module (BRA, the CBAM, or Swin), and the resulting model was trained and tested. That module was then removed, and a new one was inserted to replace the original module at another position. This process was repeated for every attention module at every position.

The rationale for module placement given in Section 4.3.1 also applies here. The difference in layer numbers arises because existing layers are replaced rather than new ones added; a replacement leaves the indices of all subsequent layers unchanged.
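Replacement can be sketched in the same way. Again, the layer list is a hypothetical placeholder, the function names are our own, and the positions are those listed above for YOLOv11 and YOLOv12. Unlike insertion, a replacement leaves the length of the layer list, and therefore every subsequent index, unchanged.

```python
from typing import Dict, List, Tuple

# Replacement positions as listed in the text
YOLOV11N_REPLACEMENTS = {"BRA": [4, 6, 8, 13], "CBAM": [4, 6, 10], "Swin": [4, 6, 10]}
YOLOV12N_REPLACEMENTS = {"BRA": [4, 6, 8], "CBAM": [4, 6, 8], "Swin": [4, 6, 8]}


def replace_at(layers: List[str], index: int, module: str) -> List[str]:
    """Return a new layer list with the layer at `index` swapped for `module`."""
    if not 0 <= index < len(layers):
        raise IndexError(f"layer {index} does not exist")
    return layers[:index] + [module] + layers[index + 1:]


def single_replacement_variants(
    layers: List[str], positions: Dict[str, List[int]]
) -> Dict[Tuple[str, int], List[str]]:
    """One variant per (module, position); the original layer is restored between runs."""
    return {
        (module, pos): replace_at(layers, pos, module)
        for module, pos_list in positions.items()
        for pos in pos_list
    }


# Hypothetical placeholder names standing in for the real YOLO layers
base_layers = [f"L{i}" for i in range(24)]
v11_variants = single_replacement_variants(base_layers, YOLOV11N_REPLACEMENTS)
v12_variants = single_replacement_variants(base_layers, YOLOV12N_REPLACEMENTS)
```

This enumeration yields 10 replacement runs for YOLOv11 and 9 for YOLOv12.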

Table 8 shows the detailed results. We show only the best result for each replacement attention module (all results are available on the GitHub page; see the URL in Section 1).

Replacing the original attention layers yielded three significant outcomes. First, the CBAM-enhanced YOLOv12n achieved the highest performance for SAR ship detection, with mAP@0.5 increasing from 0.978 (baseline YOLOv12n) to 0.980. For YOLOv11n, mAP@0.5 improved from 0.976 to 0.978. Second, layer 4 (specifically the second C3k2 module) was identified as the optimal replacement location across attention mechanisms. While all attention modules (BRA, the CBAM, and Swin) improved performance at this layer, the CBAM delivered the highest gains. With a default input size of 640 × 640, this layer outputs an 80 × 80 feature map that feeds into the first detection head via concatenation with an UpSample module at layer 14 (forming layer 16), which is crucial for detecting small objects. Third, the CBAM proved most effective for SAR-specific challenges, outperforming both baseline models and other attention mechanisms. Replacing layer 4 with the CBAM achieved the peak mAP@0.5 (0.980 for v12n). Notably, the CBAM at layer 6 in YOLOv12n also yielded strong results (mAP@0.5: 0.979), although slightly lower than the results obtained with layer 4 replacement. Additionally, the CBAM-enhanced YOLOv12n reduced computational costs to 5.9 GFLOPS (compared to the baseline of 6.5 GFLOPS) while maintaining real-time FPS.

The FPS results in Table 8 confirm a pattern consistent with the earlier ablation studies: replacing the original attention layer with BRA substantially reduces inference speed, decreasing YOLOv12n’s FPS from 112 (baseline) to 93 (−17%). In contrast, the CBAM and Swin maintain near-baseline efficiency, with the CBAM achieving 110 FPS for YOLOv12n (baseline: 112 FPS) and 161 FPS for YOLOv11n (baseline: 164 FPS). Swin matches the baseline FPS exactly when deployed at layer 4, at 112 FPS for YOLOv12n and 164 FPS for YOLOv11n. This contrast reflects BRA’s higher computational cost, whereas the CBAM and Swin offer lightweight alternatives suitable for real-time SAR surveillance.
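The relative changes quoted above can be reproduced from the reported frame rates. The small helper below, a hypothetical illustration, also converts each FPS pair into added per-frame latency, assuming FPS is the reciprocal of the mean per-image processing time.

```python
def fps_change(baseline_fps: float, new_fps: float) -> tuple:
    """Return (relative FPS change in %, added per-frame latency in ms)."""
    rel_change = 100.0 * (new_fps - baseline_fps) / baseline_fps
    added_ms = 1000.0 / new_fps - 1000.0 / baseline_fps
    return rel_change, added_ms


# (baseline FPS, modified FPS) pairs as quoted in the text for Table 8
reported = {
    "YOLOv12n, BRA replacement": (112, 93),
    "YOLOv12n, CBAM replacement": (112, 110),
    "YOLOv11n, CBAM replacement": (164, 161),
}

for name, (base, new) in reported.items():
    rel, ms = fps_change(base, new)
    print(f"{name}: {rel:+.1f}% FPS, {ms:+.2f} ms per frame")
```

For the BRA case this gives roughly −17% FPS, matching the value quoted above, or about 1.8 ms of extra latency per frame; both CBAM replacements cost less than 2% of the baseline frame rate.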

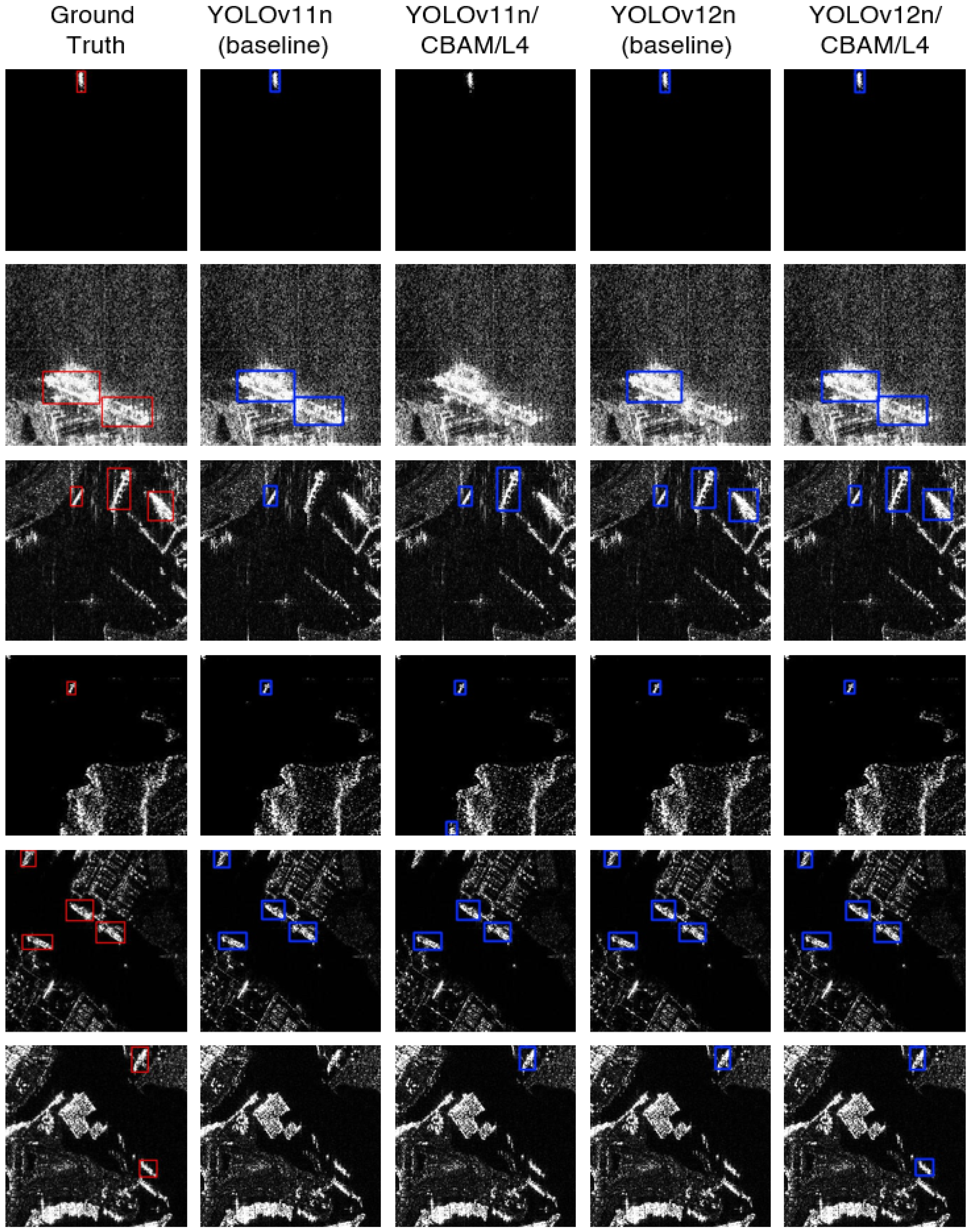

Figure 12 visually validates the performance gains from attention-layer replacement by comparing ground-truth annotations with bounding-box predictions from the top optimized models, YOLOv11n/CBAM/L4 and YOLOv12n/CBAM/L4. The samples demonstrate YOLOv12n-CBAM’s superior detection capability in complex SAR scenarios, notably its precision in identifying small ships amid background clutter, which aligns with its quantitative performance peak in Table 8 and illustrates how targeted attention replacement (particularly the CBAM at layer 4) enhances feature discrimination. Detection results for a given input vary across architectures: while the baseline YOLOv11n and YOLOv12n sometimes match the proposed model in detecting certain ships, only the optimized YOLOv12n with the CBAM at layer 4 consistently detects all vessels in the ground-truth annotations across the examples shown. Because such qualitative samples are necessarily selective, quantitative metrics such as mAP@0.5, which averages precision over the entire dataset, remain necessary to validate the performance gains.

4.3.3. An Ablation Study on the Addition of a Small-Object Detection Head

To address the challenges posed by small-object detection, several studies have incorporated additional detection heads into YOLO architectures, targeting objects such as fish, vehicles, and fruits in diverse contexts [74,75,76]. Building on this, ref. [46] introduced a fourth detection head, the Tiny Object Detection Layer (TODL), to improve small face detection in classrooms. These efforts primarily extend YOLOv5 and YOLOv8, which natively include three detection heads; our work pioneers TODL integration in YOLOv11n and YOLOv12n.

Specifically, we adapted TODL to address the SSD’s critical challenges: ships averaging less than 0.2 in relative size amid complex backgrounds such as islands and sea clutter. As shown in Figure 13, our implementation incorporates (i) an upsampling module that scales feature maps to a 160 × 160 resolution, (ii) a dedicated TODL detection head for high-resolution feature processing, and (iii) a convolutional refinement module that restores the 80 × 80 scale. In principle, this configuration could enhance small-ship discrimination by preserving fine-grained details before the first detection head. However, the quantitative results revealed limited practical gains.

The results in Table 9 show that integrating the CBAM at layer 4 together with a new TODL detection head operating on 160 × 160 feature maps improved performance only for YOLOv11, which aligns with the pattern observed when adding new attention modules to the baseline models. YOLOv12 showed no improvement in mAP@0.5, although it achieved gains in mAP@0.5-0.95. We hypothesize that YOLOv12’s limited gains stem from information degradation in earlier convolutional layers: repeated strided (downsampling) convolutions can suppress tiny objects whose ship features shrink below a single feature-map cell. If targets initially occupy too few pixels, subsequent upsampling cannot recover the information lost in preceding layers. Thus, while TODL benefits other domains, its efficacy for SAR ships remains constrained by fundamental resolution limits.
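A toy NumPy example illustrates this hypothesis. It assumes 4 × 4 average pooling as a stand-in for the network's learned strided convolutions and nearest-neighbor upsampling as a stand-in for the neck's upsampling modules; real feature maps behave differently, so this only shows the direction of the effect. For reference, at a 640 × 640 input, the stride-4 map used by TODL is 160 × 160, while strides 8, 16, and 32 give 80 × 80, 40 × 40, and 20 × 20.

```python
import numpy as np


def avg_downsample(x: np.ndarray, factor: int) -> np.ndarray:
    """Average-pool a 2D map by `factor` (stand-in for strided convolutions)."""
    h, w = x.shape
    return x.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))


def nearest_upsample(x: np.ndarray, factor: int) -> np.ndarray:
    """Nearest-neighbor upsampling (stand-in for the neck's upsample modules)."""
    return np.repeat(np.repeat(x, factor, axis=0), factor, axis=1)


# Feature-map side length at a 640 x 640 input for strides 4 (TODL), 8, 16, and 32
feature_sizes = {stride: 640 // stride for stride in (4, 8, 16, 32)}

image = np.zeros((16, 16))
image[5, 6] = 1.0  # a one-pixel "ship" on an empty background

restored = nearest_upsample(avg_downsample(image, 4), 4)
print(restored.max(), np.count_nonzero(restored))
```

After one downsample and upsample round trip, the one-pixel target is smeared into a 4 × 4 block at 1/16 of its original peak, and its exact position can no longer be recovered.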

4.3.4. Generalization Experiment: Performance Evaluation on SSDD

To rigorously validate model generalizability for real-world maritime surveillance, we conducted cross-dataset testing using the SSDD. Comprising 1160 images from RadarSat-2, TerraSAR-X, and Sentinel-1 sensors with horizontal bounding-box annotations, the SSDD presents distinct challenges, including higher ship density (2.1 ships/image vs. 1.3 for the SSD) and smaller vessel sizes [11].

We performed inference with both original models (YOLOv11n and YOLOv12n) and their CBAM-enhanced variants (with the original attention layer at position 4 replaced by the CBAM), using models trained exclusively on the SSD to predict on the SSDD. This cross-dataset evaluation, conducted without retraining, assesses generalization capability and dataset-specific bias. The results in Table 10 reveal that although all models performed suboptimally (mAP@0.5: 0.678–0.714), replacing the attention layer at position 4 with the CBAM consistently improved robustness. For YOLOv11n, mAP@0.5 increased from 0.693 to 0.714; for YOLOv12n, it rose from 0.678 to 0.693. This demonstrates the CBAM’s efficacy in enhancing feature discrimination for SAR-specific challenges, such as small ships and complex backgrounds, even in unseen data domains.

Next, we retrained the baseline YOLO models and the optimal CBAM-enhanced variants from scratch to check whether the performance patterns remained consistent. The results in Table 11 demonstrate that CBAM replacement at layer 4 improved performance on both datasets: YOLOv11n-CBAM reached 0.978 and 0.986 mAP@0.5 (vs. baseline 0.976 and 0.984), with improvements of 0.007 and 0.008 in mAP@0.5-0.95 (vs. baseline 0.703 and 0.715) on the SSD and SSDD, respectively. YOLOv12n-CBAM achieved 0.980 and 0.979 mAP@0.5 (vs. baseline 0.978 and 0.975), with gains of 0.012 and 0.011 in mAP@0.5-0.95 (vs. baseline 0.704 and 0.700) on the SSD and SSDD, respectively.

Crucially, no single model dominated both contexts: YOLOv12n-CBAM performed best on the SSD (0.980 mAP@0.5), whereas YOLOv11n-CBAM excelled on the SSDD (0.986 mAP@0.5), indicating that dataset-specific characteristics, such as target density and clutter complexity, favor different architectures. These findings confirm that while targeted attention-layer optimization enhances cross-dataset adaptability, optimal model selection requires considering operational constraints and calls for context-specific fine-tuning to address the inherent peculiarities of each dataset.

The decrease in FPS count on the SSDD stems from the inherent complexities of the dataset, which amplify computational demands. The SSDD exhibits higher ship density (2.1 ships/image vs. SSD’s 1.3). This increases computational load during detection, as more objects require processing per frame. Additionally, the SSDD’s variable image dimensions (214 × 214 to 668 × 668 pixels) versus the SSD’s uniform 256 × 256 chips introduce resizing overhead during preprocessing. Non-maximum suppression (NMS) operations further strain post-processing on denser detections. Potential channel mismatches (SSD: one channel; SSDD: three channels) may also contribute to inefficiencies, as channel conversion could introduce latency.
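The resizing difference can be illustrated with a letterbox-style computation, a common YOLO preprocessing scheme that scales the longer side to the target size and pads the remainder. The authors' exact preprocessing is not specified, so this is an assumption, and `letterbox_params` is a hypothetical helper. All 256 × 256 SSD chips share one scale factor, whereas SSDD images of varying size each need their own scale and padding.

```python
def letterbox_params(width: int, height: int, target: int = 640):
    """Scale and padding for an aspect-preserving resize into a target x target canvas."""
    scale = target / max(width, height)
    new_w, new_h = round(width * scale), round(height * scale)
    pad_w, pad_h = target - new_w, target - new_h  # total padding per axis
    return scale, (new_w, new_h), (pad_w, pad_h)


# Every 256 x 256 SSD chip uses the same parameters
print(letterbox_params(256, 256))
# A hypothetical 500 x 350 image (within the SSDD size range) needs its own
print(letterbox_params(500, 350))
```

The per-image computation itself is cheap; the point is that variable input sizes prevent reusing a single resize configuration across the dataset.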

Additionally, we fine-tuned the best YOLOv11 and YOLOv12 models with the CBAM at layer 4, pre-trained on the SSD, using them as base models for the SSDD. The objective was to analyze cross-dataset adaptability between a model pre-trained on the SSD (base dataset) and fine-tuned on the SSDD. As reported in Table 1, the DETR model achieved 0.991 mAP@0.5 using an 8:2 split ratio [30]. Note that DETR (14.34 million parameters, 44.4 GFLOPS) differs significantly in size from our nano-sized YOLO models, which have 2.6 million (YOLOv11n) and 2.5 million (YOLOv12n) parameters. Therefore, to enable a fairer comparison, we also tested an 8:2 split on the SSDD using the medium-sized (“m”) YOLO variants: YOLOv11m (20.1 million parameters, 68.0 GFLOPS) and YOLOv12m (19.6 million parameters, 59.8 GFLOPS). Table 12 is organized accordingly: for each of YOLOv11 and YOLOv12, it first reports the original baseline, the variant with layer 4 replaced by the CBAM trained from scratch, and the same variant fine-tuned on the SSDD, all using a 7:2:1 split; it then reports the same configurations with an 8:2 split and the “m” model size.

Table 12 shows that replacing layer 4 with the CBAM increased the performance of both YOLOv11 and YOLOv12 at both the “n” and “m” sizes. When training from scratch, the improvement from the CBAM at layer 4 was consistent across split ratios and model sizes.

Fine-tuning the CBAM-enhanced models (pre-trained on the SSD) on the SSDD yielded varied outcomes across architectures and sizes. As shown in Table 12, while performance remained competitive (mAP@0.5: 0.983–0.988), trends differed by model. YOLOv12n gained 0.009 mAP@0.5 when fine-tuned (0.988 vs. 0.979 from scratch with the 7:2:1 split), outperforming its baseline. Conversely, YOLOv11n declined by 0.003 mAP@0.5 (0.983 vs. 0.986 from scratch) under the same split. Compared with the non-CBAM baselines, most fine-tuned models improved (e.g., YOLOv12n: +0.013 over baseline), although none surpassed DETR’s 0.991 mAP@0.5 (Table 1). The highest SSDD performance was 0.989 mAP@0.5, achieved by YOLOv11m/CBAM/L4 trained from scratch with an 8:2 split.

As a final analysis, we tuned the hyperparameters of the best model, YOLOv11m/CBAM/L4 trained from scratch with an 8:2 split, which attained an mAP@0.5 of 0.989. For this, we used YOLO’s tuning mode, with the goal of exceeding the DETR model’s mAP. We ran 300 iterations of 30 epochs each, tuning all hyperparameters. The tuned YOLOv11m/CBAM/L4 (from scratch, 8:2 split) achieved an mAP@0.5 of 0.992 and an mAP@0.5-0.95 of 0.762 (+0.001 mAP@0.5 above DETR), making it the best YOLOv11 model evaluated at both the “n” and “m” sizes.

4.3.5. Generalization Experiment: Performance Evaluation on Non-Ship SAR Datasets

Although we have shown that replacing layer 4 of YOLOv11 with the CBAM can significantly improve performance on the SSD and SSDD datasets, both datasets share the same context (ship detection) and closely related images. Therefore, we conducted a simple but informative analysis on the SADD and MSAR datasets to confirm the effect of integrating the CBAM into the YOLO models.

The analysis followed the same setup described in Section 4.1. For the SADD, we increased the number of training epochs to 1000 and used the default 7:2:1 split; all other parameters remained the same. As presented in Section 3.1, these two datasets contain different object types, most of which have medium-sized bounding boxes. Table 13 presents the results for the SADD dataset.

As shown in Table 13, replacing YOLOv11n’s original layer 4 with the CBAM achieved the best mAP@0.5, exceeding the YOLOv11n baseline (+0.004), the YOLOv12n baseline (+0.002), and YOLOv12n+CBAM (+0.004). These results are consistent with the extensive initial analysis on the SSD and reinforce the CBAM’s contribution to small- and medium-object detection in SAR images.

The last dataset, MSAR, has four object classes, as described in Section 3.1, which introduces additional complexity and makes it a more challenging dataset. For MSAR, we trained for 500 epochs with a 7:2:1 split; all remaining parameters are as described in Section 4.1. Table 14 presents all results and compares them with the baselines.

Table 14, which evaluates model performance on the multi-class MSAR dataset, reveals several findings regarding the impact of integrating the CBAM attention mechanism. Overall, the CBAM-enhanced YOLOv12n achieved the highest mAP@0.5 (0.974) and mAP@0.5-0.95 (0.788), demonstrating superior overall detection and localization accuracy across the object classes. This suggests that combining YOLOv12’s architectural advancements with the CBAM’s feature refinement is particularly effective for handling the diversity and complexity inherent in multi-class SAR imagery.

A class-specific examination shows that the aircraft category, which posed the greatest challenge and had the lowest baseline performance, benefited most from the CBAM integration. YOLOv12n+CBAM/L4 achieved the best mAP@0.5 (0.937) and recall (0.863) for aircraft, a marked improvement in detecting these difficult targets. For the ship and oil tank classes, where baseline performance was already near-perfect, the CBAM provided more modest gains, mainly in recall and in the finer-grained localization accuracy reflected by the mAP@0.5-0.95 metric. Results for the bridge class varied across architectures: YOLOv12n+CBAM delivered the best result (0.978 mAP@0.5), whereas YOLOv11n+CBAM scored slightly below its baseline, showing that the effectiveness of attention mechanisms can depend on both the object class and the base architecture.

These findings support this work's core thesis that strategic attention-layer replacement, particularly in YOLOv12, yields a more robust and accurate detector for the complexities of multi-class SAR object detection, with the largest improvements in the most challenging detection scenarios.

5. Conclusions

This study advances SAR ship detection by strategically integrating attention mechanisms, specifically Bi-Level Routing Attention (BRA), the Swin Transformer, and the Convolutional Block Attention Module (CBAM), into state-of-the-art YOLO architectures (v10, v11, and v12). Crucially, we demonstrate that replacing the original module at a critical network position (layer 4, corresponding to the second C3k2 module) with an attention module yields larger performance gains than simply adding new attention modules. Among the mechanisms evaluated, the CBAM proved most effective when deployed at this position within YOLOv12n.

The optimized CBAM-enhanced YOLOv12n achieved an mAP@0.5 of 98.0% on the challenging SAR Ship Dataset (SSD), surpassing the baseline YOLOv12n (97.8%) and prior state-of-the-art methods. Beyond improved accuracy, this model is also more computationally efficient, reducing the cost to 5.9 GFLOPs (compared with 6.5 GFLOPs for the baseline) while using fewer layers (462 vs. 465). Cross-dataset validation on the SSDD dataset confirmed the robustness and generalizability of this approach, with CBAM replacement consistently improving performance over the baselines. Furthermore, evaluation on non-ship SAR datasets (the SADD for aircraft and MSAR for multi-class detection) showed that strategic CBAM integration can deliver performance gains across different object types and in complex multi-class scenarios, supporting its value as a general enhancement for SAR object detection architectures.
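The GFLOPs figures count floating-point operations (a total, not a rate). As a back-of-the-envelope sketch of why swapping a convolutional block for the CBAM can lower this count, the code below estimates the cost of a plain 3 × 3 convolution and of an original-formulation CBAM at the same feature-map size, using the common convention of 2 FLOPs per multiply-accumulate. The 80 × 80 × 64 shape, the reduction ratio r = 16, the 7 × 7 spatial kernel, and the helper names are assumptions for illustration. The C3k2 module actually replaced at layer 4 contains several convolutions, and the reported 6.5 and 5.9 GFLOPs come from profiling the full networks, so this sketch indicates orders of magnitude only.

```python
def conv2d_flops(h, w, c_in, c_out, k, stride=1):
    """FLOPs of a 2D convolution with 'same' padding, counting each multiply-add as 2 FLOPs."""
    h_out, w_out = -(-h // stride), -(-w // stride)  # ceil division
    macs = h_out * w_out * c_out * c_in * k * k
    return 2 * macs


def cbam_flops(h, w, c, r=16, k=7):
    """Approximate FLOPs of the original CBAM (bias, pooling, and element-wise ops ignored)."""
    hidden = max(c // r, 1)
    mlp = 2 * (c * hidden + hidden * c)     # shared MLP applied to one pooled vector
    channel = 2 * mlp                       # applied to both avg- and max-pooled vectors
    spatial = conv2d_flops(h, w, 2, 1, k)   # k x k conv over the 2-channel pooled map
    return channel + spatial


def relative_reduction(before, after):
    """Fractional reduction from `before` to `after`."""
    return (before - after) / before


if __name__ == "__main__":
    h = w = 80  # hypothetical stride-8 feature map for a 640 x 640 input
    c = 64      # hypothetical channel count
    conv = conv2d_flops(h, w, c, c, 3)
    att = cbam_flops(h, w, c)
    print(f"3x3 conv: {conv / 1e9:.3f} GFLOPs, CBAM: {att / 1e9:.4f} GFLOPs")
    print(f"Reported model-level reduction: {relative_reduction(6.5, 5.9):.1%}")  # 9.2%
```

Under these assumptions, the convolution costs about 0.47 GFLOPs while the CBAM costs about 0.0013 GFLOPs, because the CBAM's only spatial convolution has two input channels and one output channel. The reported drop from 6.5 to 5.9 GFLOPs corresponds to a reduction of roughly 9.2% for the whole model.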

This work establishes a robust framework for efficient, high-precision maritime surveillance using SAR imagery. Future research will explore attention mechanisms in other contexts (e.g., drone-based imagery), fine-tune their hyperparameters, incorporate oriented bounding boxes (OBBs) and slicing-aided hyper inference (SAHI) for enhanced precision, and further refine dynamic attention routing and small-object detection layers to build even more capable all-weather surveillance systems. We will also consider the DETR architecture in future work, given its strong performance on the SSDD dataset.